Abstract

For successful communication, we need to understand the external world in a manner consistent with others. This task requires sufficiently similar cognitive schemas or psychological perspectives that act as filters to guide the selection, interpretation and storage of sensory information, perceptual objects and events. Here we show that when individuals adopt a similar psychological perspective during natural viewing, their brain activity becomes synchronized in specific brain regions. We measured brain activity with functional magnetic resonance imaging (fMRI) from 33 healthy participants who viewed a 10-min movie twice, assuming once a ‘social’ (detective) and once a ‘non-social’ (interior decorator) perspective on the movie events. Pearson's correlation coefficient was used to derive multisubject voxelwise similarity measures (inter-subject correlations; ISCs) of the fMRI data. We used k-nearest-neighbor and support vector machine classifiers as well as a Mantel test on the ISC matrices to reveal brain areas wherein ISC predicted the participants' current perspective. ISC was stronger in several brain regions, most robustly in the parahippocampal gyrus, posterior parietal cortex and lateral occipital cortex, when the participants viewed the movie with similar rather than different perspectives. Synchronization was not explained by differences in visual sampling of the movies, as estimated by eye gaze. We propose that synchronous brain activity across individuals adopting similar psychological perspectives could be an important neural mechanism supporting shared understanding of the environment.

Keywords: Psychological perspective, Inter-subject correlation, Attention, fMRI

Introduction

Shared understanding between people requires a certain degree of similarity in how external events are perceived and interpreted, whether those events concern social situations or the physical environment. Top-down cognitive processing modes, or psychological perspectives, adopted toward external events greatly influence how we interpret the world (Moll and Meltzoff, 2011). For example, a referee calling a last-minute penalty kick in the Champions League final may be perceived as unfair and incompetent by supporters of the penalized team, while the supporters of the opposing team will praise the referee's accurate reading of the game (see, e.g., Hastorf and Cantril, 1954). In behavioral studies, sharing psychological perspectives enhances the similarity of interpretation of simple visual scenes (Kaakinen et al., 2011) and recall of expository text (Kaakinen et al., 2002, 2003) across individuals. However, the neural mechanisms supporting shared psychological perspectives across individuals have remained poorly specified.

Psychological perspective-taking involves building an internal model or schema, which helps to select task-relevant objects and events from the external world, thereby aiding the interpretation of the experienced events and the selection of appropriate actions. Accordingly, the interpretation of a scene and the corresponding brain activity go hand in hand. For example, activity in the fusiform face area is stronger when the ambiguous Rubin's vase–face illusion is perceived as two opposing faces rather than as a vase (Andrews et al., 2002; Hasson et al., 2001). Moreover, directing attention to specific objects in dynamic visual scenes shapes brain responses both to those objects and to semantically related categories (Çukur et al., 2013). Similar mechanisms may underlie the directing of attention to task-relevant sensory information during psychological perspective-taking. However, it remains unclear to what extent the shifts in sensitivity to various objects and features in the incoming sensory streams are shared among individuals during perception of naturalistic scenes.

During prolonged naturalistic stimulation, such as movie viewing, brain activity becomes synchronized across individuals on a time scale of a few seconds, both in early sensory cortices and in brain areas involved in higher-order vision and attention (Hasson et al., 2004; Jääskeläinen et al., 2008; Malinen et al., 2007; Wilson et al., 2008). Such region-specific inter-subject synchronization of hemodynamic activity could be an important neural mechanism supporting the sharing of psychological perspectives with others, as it may reflect the similarity of information processing across individuals. Recent evidence supports this notion: mental action simulation increases the across-participant synchrony of brain activity in the action–observation network (Nummenmaa et al., 2014).

In the present study, we directly tested the hypothesis that sharing a psychological perspective with others enhances synchronization of brain activity across subjects, and that the degree of brain synchronization between two individuals can be used to predict the perspective they are taking. Participants viewed a 10-min movie segment twice, adopting a different psychological perspective in each of the two runs. We replicated the results in two experiments using independent subject populations and different functional magnetic resonance imaging (fMRI) scanners. We show that inter-subject synchronization of brain activity, particularly in the lateral occipital cortex, parahippocampal gyrus and posterior parietal cortex, allows classifying whether two participants viewed the movie from the same or different perspectives. The results thus suggest that synchronous brain activation across individuals supports shared understanding of the environment.

Material and methods

Participants

The study protocol was approved by the Ethics Committee of the Hospital District of Helsinki–Uusimaa, and each subject signed an ethics-committee-approved informed consent form prior to participation. In Experiment 1, twenty healthy volunteers (13 males, 7 females; 3 left-handed; mean age 27 years, range 21–38) participated. One additional participant was scanned, but the data were removed from the analysis due to excessive head motion (relative displacement > voxel size) during fMRI scanning. In Experiment 1, eye movements were recorded from independent subjects in a separate session outside the scanner. To control for potential differences in viewing behavior inside vs. outside the fMRI scanner, and to be able to directly model the effects of subject-specific eye movements on brain activation, we ran Experiment 2 with 13 additional subjects (8 females; 1 left-handed; mean age 27 years, range 22–34) whose eye gaze was tracked during fMRI. One additional subject was scanned but was excluded from the analyses due to misunderstanding of the instructions. None of the participants reported a history of neurological or psychiatric disease, and none were currently taking medication affecting the central nervous system.

Experimental design

The participants watched the first 10 min of an episode of the television series Desperate Housewives (Season 1, Episode 15, Cherry Alley Productions, 2005; original English soundtrack with no subtitles) twice during fMRI. All participants in both experiments were fluent in English and understood the dialog without subtitles. In the fMRI experiment, the stimuli were delivered using the Presentation software (Neurobehavioral Systems Inc., Albany, California, USA). The video was back-projected onto a semitransparent screen using a 3-micromirror data projector (Christie X3, Christie Digital Systems Ltd., Mönchengladbach, Germany) and from there via a mirror to the subject. In Experiment 2, the setup was otherwise similar except that the projector was replaced by a Panasonic PT-DZ110X projector (Panasonic Corporation, Osaka, Japan). Auditory stimulation was delivered using the UNIDES ADU2a audio system (Unides Design, Helsinki, Finland) via plastic tubes through porous EAR-tip (Etymotic Research, ER3, IL, USA) earplugs. For the last 10 subjects of Experiment 2, the auditory stimuli were delivered through Sensimetrics S14 insert earphones (Sensimetrics Corporation, Malden, Massachusetts, USA) due to an equipment update at the imaging site. Sound intensity was adjusted individually to be comfortable but loud enough to be heard over the scanner noise.

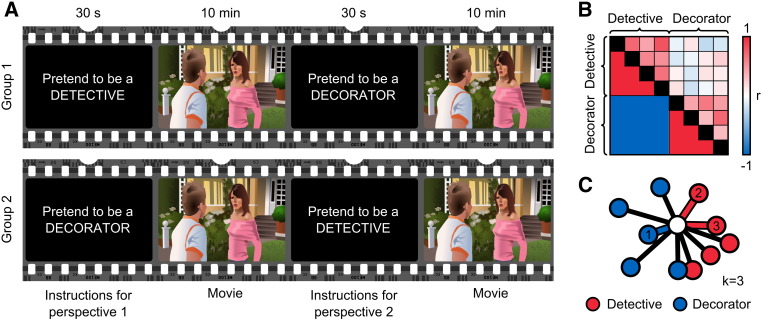

Prior to watching the episode, the participants were given written instructions regarding the psychological perspective they should adopt while watching the movie for the first time (see Fig. 1A). Half of the subjects were initially instructed to assume a ‘social’ perspective of a forensic detective whose task was to determine which of the persons appearing in the movie was the murderer. The other half were instructed to assume a ‘non-social’ perspective of an interior decorator whose task was to redesign the interiors and exteriors seen in the episode (see the Appendix for the full instructions). All participants were encouraged to view the movie according to their designated perspective, and the instructions emphasized that after the experiment the subjects would need to answer questions related to their task. The initial perspective-taking instructions were shown for 30 s at the beginning of the scanning session. After the episode ended, the participants were instructed to switch their perspective: those who initially assumed the role of a detective were now to assume the role of a decorator and vice versa. Finally, the participants watched the movie again from this new perspective. Thus, the total task lasted for 20 min. To disentangle the effects of the perspective-taking task from differences between subject groups, we collapsed the first and second viewings in the analysis. After the fMRI session, the participants completed a questionnaire assessing how successfully they had adopted each perspective and how they had behaved under each perspective.

Fig. 1.

Experimental design and analyses. A: Participants watched the same movie clip twice, once from the detective and once from the interior decorator perspective, with the starting perspective counterbalanced across participants. B: The Mantel test was used to compare the pairwise ISC values (upper-triangle entries) with a template matrix (lower-triangle entries) in which ISC is higher in same-perspective pairs (red) than in different-perspective pairs (blue). C: Subjects were classified according to the labels of the training subjects (detective, red; decorator, blue) with whom their ISC was highest. Proximity between two dots reflects the strength of the ISC between those subjects. The three nearest neighbors are indexed according to their proximity to the current subject, and the links are highlighted with the color corresponding to their class. For k = 3, the current subject (white dot) would be classified as a detective because two of the three nearest neighbors (neighbors 2 and 3) are detectives.

fMRI acquisition and preprocessing

MR imaging was performed at the Advanced Magnetic Resonance Imaging Centre at Aalto University. In Experiment 1, the data were acquired with a General Electric Signa 3-Tesla MRI scanner (GE Healthcare Ltd., Chalfont St Giles, UK) with Excite upgrade using a 16-channel receiving head coil (MR Instruments Inc., MN, USA). Anatomical images were acquired using a T1-weighted sequence (spoiled gradient echo pulse sequence, preparation time 300 ms, TR 10 ms, TE 4.6 ms, flip angle 15°, scan time 313 s, 1-mm³ resolution). Whole-brain functional data were acquired with a T2*-weighted echo-planar imaging (EPI) sequence sensitive to the blood-oxygen-level-dependent (BOLD) signal contrast (TR 2000 ms, TE 32 ms, flip angle 90°, FOV 220 mm, 64 × 64 matrix, 4.0-mm slice thickness with a 1-mm gap between slices, 29 interleaved oblique slices acquired in ascending order covering the whole brain).

In Experiment 2, the brain-imaging data were acquired with a 3 T Siemens MAGNETOM Skyra (Siemens Healthcare, Erlangen, Germany), using a standard 20-channel receiving head-neck coil. Anatomical images were acquired using a T1-weighted MPRAGE pulse sequence (TR 2530 ms, TE 3.3 ms, TI 1100 ms, flip angle 7°, 256 × 256 matrix, 176 sagittal slices, 1-mm³ resolution). Whole-brain functional data were acquired with a T2*-weighted EPI sequence sensitive to the BOLD contrast. Imaging parameters for the functional images were similar to those in Experiment 1, except that there were no gaps between the slices and that 32–36 slices were collected to ensure whole-brain coverage. A total of 660 images were acquired in both experiments. The first 13 volumes were discarded to allow for equilibration of the magnetization and to exclude brain activity recorded while the subjects were reading the instructions for the upcoming task.

Standard preprocessing steps were applied to the data using the FSL software (www.fmrib.ox.ac.uk). The EPI images were realigned to the middle scan by rigid-body transformations to correct for head movements. EPI and structural images were co-registered and normalized to the T1 standard template in Montreal Neurological Institute (MNI) space (International Consortium for Brain Mapping) using linear transformations with 9 degrees of freedom. The functional data were then high-pass filtered with a cut-off frequency of 0.01 Hz and spatially smoothed with a Gaussian kernel of 8-mm FWHM.
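The 8-mm FWHM kernel corresponds to a Gaussian standard deviation of FWHM/(2√(2 ln 2)) ≈ 3.40 mm. The sketch below shows that conversion and a separable isotropic smoothing of one volume in NumPy. It is an illustration, not FSL's implementation, and the 2-mm voxel size is an assumption (the voxel size after normalization is not stated here).

```python
import numpy as np

def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))

def gaussian_kernel_1d(sigma_vox, truncate=4.0):
    """Normalized 1-D Gaussian kernel; sigma is given in voxels."""
    radius = int(np.ceil(truncate * sigma_vox))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma_vox) ** 2)
    return kernel / kernel.sum()

def smooth_volume(vol, fwhm_mm=8.0, voxel_mm=2.0):
    """Separable isotropic Gaussian smoothing of a 3-D volume.

    voxel_mm=2.0 is an assumed isotropic voxel size. Each axis must be at
    least as long as the kernel, because np.convolve(mode="same") returns
    max(len(a), len(v)) samples.
    """
    kernel = gaussian_kernel_1d(fwhm_to_sigma(fwhm_mm) / voxel_mm)
    out = np.asarray(vol, dtype=float)
    for axis in range(out.ndim):
        # Convolve every 1-D line along this axis with the same kernel.
        out = np.apply_along_axis(np.convolve, axis, out, kernel, mode="same")
    return out
```

Because the Gaussian is separable, three 1-D passes give the same result as one 3-D convolution at a fraction of the cost.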

Self-reports

After scanning, the participants completed a questionnaire regarding their perspective-taking behavior. Using a 5-point Likert scale, they rated (i) how easy it was to adopt each perspective and (ii) how much they focused on task-relevant movie features (social perspective: faces, speech, body language, characters' intentions, characters' appearance; non-social perspective: furniture, furnishings, details of the rooms, condition of the houses, condition of the gardens). The subjects also wrote short freeform accounts of how they adopted each perspective. In Experiment 2, the subjects were additionally asked to describe which particular details they found important for each perspective.

Eye-gaze recording

In Experiment 1, eye gaze was recorded in a separate session from 31 independent subjects (all male, mean age 25 yrs, range 19–38). The design was identical to that of the fMRI experiment, with the following exceptions. Eye gaze was recorded with an EyeLink 1000 eye tracker (SR Research, Mississauga, Ontario, Canada; sampling rate 1000 Hz, spatial accuracy better than 0.5°, with a 0.01° resolution in the pupil-tracking mode). A nine-point calibration and validation were completed prior to the experiment. Saccades were detected using a velocity threshold of 30°/s and an acceleration threshold of 4000°/s². The movie was divided into nine segments, and eye-tracker drift was corrected at the beginning of each segment.
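To make the two thresholds concrete, a simplified threshold-based saccade classifier is sketched below. It is an assumption-laden illustration, not the EyeLink parser (which applies its own filtering and event logic): gaze positions are assumed to be in degrees of visual angle, and a sample is treated as saccadic if either the speed or the absolute acceleration threshold is exceeded.

```python
import numpy as np

def flag_saccade_samples(x_deg, y_deg, fs=1000.0, vel_thresh=30.0, acc_thresh=4000.0):
    """Boolean mask of samples whose gaze speed (deg/s) or absolute
    acceleration (deg/s^2) exceeds the given thresholds."""
    dt = 1.0 / fs
    # Central-difference velocity of each coordinate, combined into speed.
    speed = np.hypot(np.gradient(x_deg, dt), np.gradient(y_deg, dt))
    accel = np.abs(np.gradient(speed, dt))
    return (speed > vel_thresh) | (accel > acc_thresh)

def saccade_events(flags):
    """(onset, offset) index pairs of contiguous flagged runs; offsets are exclusive."""
    padded = np.concatenate(([0], np.asarray(flags, dtype=int), [0]))
    edges = np.diff(padded)
    return list(zip(np.flatnonzero(edges == 1), np.flatnonzero(edges == -1)))
```

On real recordings, blinks and tracking loss would have to be removed first, since they produce spurious velocity spikes.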

In Experiment 2, we gathered the eye-gaze data with similar equipment and parameters, but this time during fMRI scanning, so the 10-min video clip was shown in its entirety and the eye tracker was calibrated only once before the experiment. Due to technical difficulties, the eye-tracking data of two subjects were lost, so 11 subjects (6 females) were included in the eye-gaze analysis. Because the experiment was relatively long and no intermediate drift correction was performed, we retrospectively corrected the mean effect of the drift. We first calculated the mean of all fixation locations over the entire experiment (both perspectives) for each subject, and then rigidly shifted each subject's fixation distribution so that its mean fixation location coincided with the grand mean fixation location over all subjects.
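The retrospective drift correction amounts to one rigid translation per subject. In the sketch below, the grand mean is taken as the mean of the subject-wise means; whether it was instead computed over all pooled fixations is not stated, so this choice and the input format are assumptions.

```python
import numpy as np

def correct_mean_drift(fixations_by_subject):
    """Rigidly shift each subject's fixations so that their mean matches the grand mean.

    fixations_by_subject: list of (n_fixations, 2) arrays of x/y fixation
    coordinates, pooled over both perspectives (hypothetical layout).
    """
    subject_means = [f.mean(axis=0) for f in fixations_by_subject]
    grand_mean = np.mean(subject_means, axis=0)  # mean of subject means (assumption)
    # Subtract each subject's own mean and add the grand mean: a pure translation.
    return [f - m + grand_mean for f, m in zip(fixations_by_subject, subject_means)]
```

Because only a constant offset is removed, the spatial spread of each subject's fixations and any differences between that subject's two perspectives are preserved.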

fMRI data analysis

Inter-subject synchronization of brain activity

To analyze the similarity of brain activity across participants in each experimental condition, we computed group-mean ISC maps over all pairs of subjects when they (i) shared the decorator perspective, (ii) shared the detective perspective, and (iii) had mismatching perspectives (i.e., subject pairs in which one subject was viewing from the detective and the other from the decorator perspective). ISC matrices were obtained for each brain voxel by calculating all pairwise Pearson's correlation coefficients (r) of the voxel time courses across participants and conditions. This yielded 780 unique pairwise r-values per voxel in Experiment 1 (the 40 time courses of 20 subjects in two perspectives, each compared with all others) and 325 per voxel in Experiment 2. To test the statistical significance of the resulting ISC maps, we performed a non-parametric permutation test for the r statistic by randomly circularly shifting each subject's time course and recalculating the r statistic 1,000,000 times. For visualization, we selected the maximum observed value in the null distribution as the threshold of significant ISC. Finally, the ISCs were also calculated in 10-sample sliding windows.
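For one voxel, the ISC matrix is simply the matrix of Pearson correlations between all subject-by-perspective time courses, and its upper triangle holds the unique pairs (40 × 39 / 2 = 780 in Experiment 1 and 26 × 25 / 2 = 325 in Experiment 2). The sketch below also builds a circular-shift null distribution and takes its maximum as the visualization threshold. Using the mean pairwise r as the null statistic, and the small permutation count in the usage example, are assumptions made for illustration.

```python
import numpy as np

def isc_pairs(ts):
    """Unique pairwise Pearson r values (upper triangle) for one voxel.

    ts: (n_series, n_timepoints) array, one row per subject and perspective.
    """
    r = np.corrcoef(ts)
    iu = np.triu_indices(ts.shape[0], k=1)
    return r[iu]

def circular_shift_null(ts, n_perm, rng):
    """Null distribution of the mean pairwise ISC after independently
    circularly shifting every time course by a random offset."""
    n, T = ts.shape
    null = np.empty(n_perm)
    for p in range(n_perm):
        shifted = np.stack([np.roll(row, rng.integers(T)) for row in ts])
        null[p] = isc_pairs(shifted).mean()
    return null

def visualization_threshold(null):
    """Maximum of the null distribution, used as the ISC display threshold."""
    return float(null.max())
```

Circular shifting keeps each time course's autocorrelation intact while breaking the alignment between subjects, which is why it is preferred over shuffling individual samples for fMRI data.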

Testing for mean ISC differences across perspectives

Differences between the ISC maps in the detective and decorator conditions were tested using permutation testing based on the Pearson–Filon sum statistics on Fisher's Z-transformed correlation coefficients (ZPF statistics), as implemented in the ISC toolbox (Kauppi et al., 2014). The ZPF statistics were derived from the r-values by first applying Fisher's Z-transform and then calculating the transformed difference between the perspectives for each subject pair (Kauppi et al., 2010; Raghunathan et al., 1996). The group-level statistic was then estimated as the sum of the ZPF statistics over the subject pairs; it thus corresponds to the sum of the pairwise differences in ISC strength across perspectives. To estimate the statistical significance of the mean ISC difference across perspectives, the maximum and minimum sum statistics were sampled with 25,000 random permutations of the group labels of each pair. The maximum (minimum) statistics were sampled to estimate the largest differences that would be observed by chance over the entire brain between randomly shuffled groups, thus controlling the family-wise error (FWE) rate as implemented in the ISC toolbox.
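The logic of the sum statistic and its max/min permutation null can be sketched as follows. This is a simplification, not the ISC toolbox code: it uses plain Fisher Z differences and omits the Pearson–Filon variance term, and the (pairs × voxels) input layout is assumed. Swapping the perspective labels of a pair flips the sign of its difference, so each permutation draws one random sign per pair (shared across voxels) and records the whole-brain maximum and minimum of the sum, which yields FWE-corrected p-values.

```python
import numpy as np

def z_difference(r_det, r_dec):
    """Fisher Z difference per subject pair and voxel; inputs are (n_pairs, n_voxels)."""
    return np.arctanh(r_det) - np.arctanh(r_dec)

def maxmin_permutation_test(diff, n_perm, rng):
    """FWE-corrected p-values at each voxel for positive (detective > decorator)
    and negative (decorator > detective) sum statistics."""
    observed = diff.sum(axis=0)
    null_max = np.empty(n_perm)
    null_min = np.empty(n_perm)
    for p in range(n_perm):
        # Swapping a pair's labels flips the sign of its difference at every voxel.
        signs = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))
        s = (signs * diff).sum(axis=0)
        null_max[p], null_min[p] = s.max(), s.min()
    p_pos = (1 + (null_max[None, :] >= observed[:, None]).sum(axis=1)) / (n_perm + 1)
    p_neg = (1 + (null_min[None, :] <= observed[:, None]).sum(axis=1)) / (n_perm + 1)
    return observed, p_pos, p_neg
```

Comparing each voxel with the distribution of whole-brain extremes is what makes the correction family-wise: a voxel survives only if its statistic exceeds what the most extreme voxel typically reaches by chance.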

Classifying participants with same vs. different perspectives from the ISC matrices

To directly test our hypothesis that viewing the movie from the same perspective increases ISC compared with viewing it from different perspectives (i.e., that activity is consistent within a perspective but differs across perspectives), we contrasted within-perspective ISCs (pairs in which both subjects shared the same perspective) with across-perspective ISCs (pairs in which one subject assumed the decorator and the other the detective perspective). The contrast was computed with the Mantel test (Mantel, 1967), which correlates the upper-triangle entries of the ISC matrix with a template matrix (Fig. 1B). The statistical significance of the results was estimated by recalculating the correlations with 1,000,000 random permutations of the ISC matrix of randomly selected voxels.
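The within- vs. across-perspective contrast can be expressed as the correlation between the upper triangle of an ISC matrix and a template coding same-perspective pairs as +1 and different-perspective pairs as −1. The sketch below uses the classic Mantel null (simultaneous permutation of the rows and columns of the ISC matrix), which differs from the voxel-resampling scheme described above; the ±1 template coding is likewise an assumption.

```python
import numpy as np

def perspective_template(labels):
    """+1 for pairs sharing a perspective, -1 otherwise."""
    labels = np.asarray(labels)
    return np.where(labels[:, None] == labels[None, :], 1.0, -1.0)

def mantel_test(isc, template, n_perm, rng):
    """One-sided Mantel test of whether ISC is higher for same-perspective pairs."""
    n = isc.shape[0]
    iu = np.triu_indices(n, k=1)
    t = template[iu]
    observed = np.corrcoef(isc[iu], t)[0, 1]
    exceed = 0
    for _ in range(n_perm):
        perm = rng.permutation(n)
        permuted = isc[np.ix_(perm, perm)]  # permute rows and columns together
        if np.corrcoef(permuted[iu], t)[0, 1] >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)
```

Permuting rows and columns together keeps the dependence among entries that share a subject, which is why the Mantel test does not treat the pairwise values as independent observations.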

Finally, to reveal how well the two perspectives could be separated from each other based on the activity time courses of single voxels, we used (i) a k-nearest-neighbor (kNN) classifier (Fix and Hodges, 1951) based on the ISC matrices of individual voxels (Fig. 1C), with odd k-values from 1 to 31, and (ii) a linear support vector machine (SVM) classifier (Cortes and Vapnik, 1995) based on the Euclidean distance of the standardized BOLD time courses. Leave-one-out cross-validation was applied at each voxel to find brain areas that separated the perspectives significantly better than the mean of 1,000,000 random classifications.¹ Because kNN classifiers can be sensitive to noise, especially with low k-values (Mitchell et al., 2004), the mean classification accuracy over all k-values was used to reveal time points where the classification was successful yet unaffected by the chosen k-value. We further required that the areas show significantly above-chance classification with at least half of the selected k-values. To confirm the reliability of the results, the kNN classifier was also run separately on the data of the two experiments. To compare the results with the moment-to-moment classification accuracy of the eye-tracking data, the classifier was also trained and tested in sliding windows (10 samples long) to reveal which time windows showed the best classification accuracies.
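A minimal leave-one-out kNN classifier that operates directly on an ISC (similarity) matrix is sketched below: each left-out item receives the majority label of the k training items with which its ISC is highest, and accuracy is then averaged over odd values of k. The optional exclusion mask (for example, to keep a subject's other viewing out of its own neighbor set) is a hypothetical option, not something the text specifies.

```python
import numpy as np

def knn_loo_accuracy(isc, labels, k, exclude=None):
    """Leave-one-out kNN accuracy from an (n, n) ISC matrix.

    labels: (n,) integer array of 0/1 perspective labels.
    exclude: optional (n, n) boolean mask; exclude[i, j] = True keeps item j
    out of the neighbor set of item i (hypothetical option).
    """
    labels = np.asarray(labels)
    n = len(labels)
    correct = 0
    for i in range(n):
        sims = np.asarray(isc[i], dtype=float).copy()
        sims[i] = -np.inf  # never use the test item itself
        if exclude is not None:
            sims[exclude[i]] = -np.inf
        nearest = np.argsort(sims)[::-1][:k]  # k highest ISC values
        votes = np.bincount(labels[nearest], minlength=2)
        correct += int(np.argmax(votes) == labels[i])
    return correct / n

def mean_accuracy_over_k(isc, labels, ks=range(1, 32, 2), exclude=None):
    """Accuracy averaged over odd k; with two classes, odd k avoids tied votes."""
    return float(np.mean([knn_loo_accuracy(isc, labels, k, exclude) for k in ks]))
```

Averaging over k reduces the dependence of the map on a single, arbitrarily chosen neighborhood size, which matters most for small k, where single noisy neighbors decide the vote.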

Eye-gaze analysis

Subject-wise fixation heatmaps were generated by modeling each fixation as a Gaussian function centered on the fixation's Cartesian coordinates, with a standard deviation of approximately 1° (i.e., a full width at half maximum of approximately 2.35°), weighted by fixation duration. Subject-wise fixation distributions were compared across perspectives with two-sample t-tests. Mean saccade amplitudes and fixation durations were computed for each condition. Finally, we measured the degree of temporal inter-subject synchronization of eye movements by dividing the movie into 2-s time windows, corresponding to the TR used in fMRI. Because fixations were pooled within each 2-s window, participants could fixate the same spatial locations in a different order within a window without lowering their similarity. Next, a mean spatial inter-subject correlation of the fixation heatmaps (inter-subject correlation of eye gaze, eyeISC; Nummenmaa et al., 2014) was computed for each time window. To calculate the spatial correlation, the fixation heatmaps were reshaped into vectors, and Pearson's product–moment correlation coefficient was calculated between the vector pairs.
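The heatmap construction and the spatial eyeISC of one time window can be sketched as follows. The pixel grid, the pixels-per-degree value behind `sigma_px`, and the input format are assumptions. A subject without fixations in a window yields a constant heatmap, for which Pearson's r is undefined (NaN).

```python
import numpy as np

def fixation_heatmap(fix_xy, durations, shape, sigma_px):
    """Sum of duration-weighted 2-D Gaussians centered on the fixations.

    fix_xy: (n_fixations, 2) pixel coordinates (x, y); shape: (height, width);
    sigma_px: Gaussian SD in pixels, chosen to correspond to about 1 degree.
    """
    h, w = shape
    yy, xx = np.mgrid[0:h, 0:w]
    heat = np.zeros(shape)
    for (x, y), d in zip(fix_xy, durations):
        heat += d * np.exp(-((xx - x) ** 2 + (yy - y) ** 2) / (2.0 * sigma_px ** 2))
    return heat

def eye_isc_matrix(heatmaps):
    """Pairwise spatial Pearson correlations between vectorized heatmaps."""
    return np.corrcoef(np.stack([hm.ravel() for hm in heatmaps]))

def mean_eye_isc(heatmaps):
    """Mean of the unique pairwise eyeISC values for one time window."""
    r = eye_isc_matrix(heatmaps)
    iu = np.triu_indices(len(heatmaps), k=1)
    return float(r[iu].mean())
```

Since Pearson's r is scale-invariant, two subjects who look at the same places receive an eyeISC of 1 even if their total fixation time in the window differs.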

The average eyeISC for each individual was first estimated by calculating the mean eyeISC between that subject and all other individuals adopting the same perspective. We then performed t-tests for each time window to reveal the time bins in which eyeISC differed significantly between the groups assuming different perspectives. Furthermore, we tested whether the within-perspective eyeISC differed from the across-perspective eyeISC using the Mantel test (see the section Classifying participants with same vs. different perspectives from the ISC matrices), and whether the groups could be classified based only on the eyeISC matrices calculated over the whole experiment using a kNN classifier with odd values of k from 1 to 31. The classification accuracy was then compared with distributions of 100,000 random classifications for each k-value. Additionally, we used a linear SVM classifier based on the pairwise Euclidean distance of the subjects' standardized (Z-scored) fixation heatmaps to test whether the gaze distribution over the movie would predict the adopted perspective. Finally, a similar classification was performed in 2-s sliding windows with 0.1-s hops between windows to reveal the scenes that contained maximal gaze information regarding the participants' perspectives.
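The subject-level summary described above, each subject's mean eyeISC with all other subjects adopting the same perspective, can be computed from one window's eyeISC matrix as sketched below. The matrix layout and label coding are assumptions.

```python
import numpy as np

def within_perspective_mean(eye_isc, labels):
    """Mean eyeISC of each subject with all other same-perspective subjects.

    eye_isc: (n, n) symmetric matrix for one time window;
    labels: (n,) perspective codes (e.g., 0 = detective, 1 = decorator).
    """
    labels = np.asarray(labels)
    n = len(labels)
    out = np.empty(n)
    for i in range(n):
        # Peers share subject i's perspective; subject i itself is excluded.
        peers = (labels == labels[i]) & (np.arange(n) != i)
        out[i] = eye_isc[i, peers].mean()
    return out
```

The resulting per-subject values can then enter a per-window t-test comparing the two perspective groups.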

Predicting cerebral ISC with eyeISC

To quantify the relationship between inter-subject synchronization of eye gaze and brain activity, we computed moment-by-moment ISC of brain activity by calculating the average ISC for each acquired EPI volume using a 10-sample (20-s) sliding window. The eyeISC time courses were then aligned with the middle samples of the cerebral ISC windows, and the hemodynamic lag was accounted for with a single gamma function (delay 6 s). Finally, the correlations between the aligned eyeISC time courses and the voxel-wise ISC time courses were calculated.
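The sketch below computes a 10-sample sliding-window mean ISC and passes an eyeISC time course through a gamma kernel peaking at 6 s. Parameterizing the "single gamma function (delay 6 s)" as a gamma density with a 1-s scale and shape 7 (mode at 6 s), sampled at the 2-s TR, is an assumption; the exact kernel used is not specified.

```python
import numpy as np

def sliding_window_isc(ts, win=10):
    """Mean pairwise ISC in each window of `win` samples.

    ts: (n_subjects, n_timepoints). Window s covers samples s .. s + win - 1,
    so its middle lies at sample s + (win - 1) / 2.
    """
    n, T = ts.shape
    iu = np.triu_indices(n, k=1)
    return np.array([np.corrcoef(ts[:, s:s + win])[iu].mean() for s in range(T - win + 1)])

def gamma_kernel(tr=2.0, peak_s=6.0, length_s=30.0):
    """Gamma-shaped kernel (scale 1 s, shape peak_s + 1) sampled every TR."""
    t = np.arange(0.0, length_s, tr)
    h = t ** peak_s * np.exp(-t)  # t^(shape - 1) * e^(-t) peaks at t = peak_s
    return h / h.sum()

def apply_hemodynamic_lag(x, tr=2.0, peak_s=6.0):
    """Causal convolution of a time course with the gamma kernel, truncated to len(x)."""
    return np.convolve(x, gamma_kernel(tr, peak_s))[: len(x)]
```

The lagged eyeISC series and the sliding-window ISC series can then be correlated with `np.corrcoef` once both are trimmed to the same aligned samples.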

The null distribution for the comparison of eyeISC and ISC time courses was obtained by correlating the ISC time courses with surrogate eyeISC time courses generated by bootstrap resampling (circular shifting by at least five samples). Because circular shifting destroys the temporal alignment between the two time courses, any correlation observed in this null distribution reflects chance. We built the null distribution following the statistical testing procedure implemented in the ISC toolbox; the method has been shown to be appropriate for fMRI data (Pajula et al., 2012). We estimated the null distribution through 1,000,000 circular shifts and corrected the resulting p-values using the false discovery rate (FDR) correction with an independence or positive dependence assumption (q = 0.05). Finally, a similar analysis was performed on the classification-accuracy time courses to examine whether the eye-gaze classification accuracy was correlated with the brain-activity classification accuracy.
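A minimal version of this test for one voxel, followed by the Benjamini–Hochberg step-up procedure (the FDR correction valid under independence or positive dependence), might look like the sketch below. The two-sided p-value, the per-voxel null, and the shift range (every shift stays at least five samples away from the original alignment in either circular direction) are assumptions; the ISC toolbox's own resampling procedure may differ.

```python
import numpy as np

def circular_shift_pvalue(x, y, n_perm, rng, min_shift=5):
    """Two-sided p-value for corr(x, y) against circularly shifted copies of y."""
    T = len(x)
    observed = np.corrcoef(x, y)[0, 1]
    null = np.empty(n_perm)
    for p in range(n_perm):
        # Shifts in [min_shift, T - min_shift] avoid near-zero circular offsets.
        shift = rng.integers(min_shift, T - min_shift + 1)
        null[p] = np.corrcoef(x, np.roll(y, shift))[0, 1]
    p_value = (1 + np.sum(np.abs(null) >= abs(observed))) / (n_perm + 1)
    return observed, p_value

def fdr_bh(pvals, q=0.05):
    """Benjamini-Hochberg step-up procedure; returns a boolean rejection mask."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    below = p[order] <= q * np.arange(1, m + 1) / m
    reject = np.zeros(m, dtype=bool)
    if below.any():
        k = np.max(np.flatnonzero(below))  # largest rank meeting its threshold
        reject[order[: k + 1]] = True
    return reject
```

The step-up rule rejects every hypothesis ranked at or below the largest rank whose p-value meets its threshold, even if some smaller-ranked p-values individually miss their own thresholds.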

Results

Subjective ratings and eye-gaze recordings

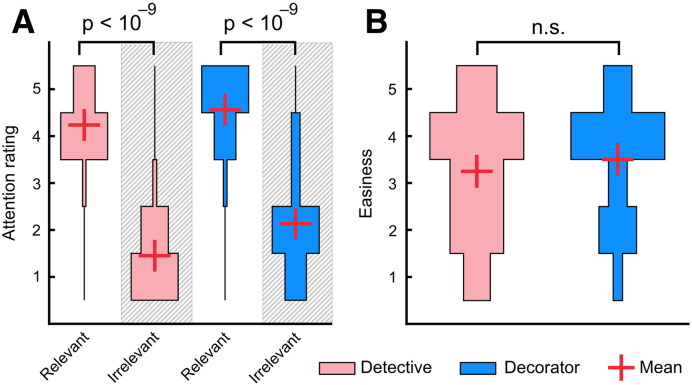

The subjects in Experiment 1 reported paying more attention to task-relevant than to task-irrelevant features under both perspectives (detective: t(19) = 19.58, p = 4.7 × 10⁻¹⁴, ηp² = 0.95; decorator: t(19) = 11.26, p = 7.5 × 10⁻¹⁰, ηp² = 0.87; Fig. 2A). The mean difference scores for attention to task-relevant vs. task-irrelevant features were 2.78 for the detective and 2.48 for the decorator perspective, which did not differ significantly (t(19) = 1.74, p = 0.10, ηp² = 0.14). Participants reported adopting both perspectives equally easily (t(19) = 0.93, p = 0.36; M(detective) = 3.25, M(decorator) = 3.50; Fig. 2B).
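For a t test, partial eta squared can be obtained from the t statistic and its degrees of freedom as ηp² = t²/(t² + df). The short check below reproduces the effect sizes reported above from their t values. This suggests, but does not confirm, that this conversion was used.

```python
def partial_eta_squared(t, df):
    """Partial eta squared from a t statistic with df degrees of freedom."""
    return t ** 2 / (t ** 2 + df)

# t values reported above, each with df = 19.
for t in (19.58, 11.26, 1.74):
    print(t, round(partial_eta_squared(t, 19), 2))
```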

Fig. 2.

Behavioral ratings. A: Distributions of subjects' ratings (on scale 1–5) for attending to task-relevant and task-irrelevant features in the movie during the detective (pink) and decorator (blue) perspectives. B: Distributions of subjects' ratings on how easy they found the two perspective-taking tasks (on scale 1–5). Red crosses indicate the mean rating over subjects.

In their freeform accounts of perspective-taking strategies, which we coded by counting the perspective-relevant and perspective-irrelevant details mentioned in the answers, the subjects reported behaving in a perspective-relevant manner (p = 3.43 × 10⁻¹⁵, chi-squared goodness-of-fit test against a uniform distribution). When assuming the detective perspective, the subjects most often mentioned trying to assess the characters' motives (8/20), analyzing what the characters said (6/20), and reading their facial expressions (6/20). Other strategies included looking for unusual behavior or other unusual things and assessing the characters' emotional states and personality traits (e.g., tension, nervousness, aggression, and impulse control).

In turn, while assuming the decorator perspective, the participants reported most often thinking of ways to improve the interiors/exteriors depicted in the clip (6/20), as well as focusing on the background (5/20) and colors of the houses and furnishings (5/20). Subjects also reported trying to obtain a general impression of the surroundings (e.g. general design, 3; general garden condition, 3; and style, 3) and focusing more on the details (e.g. details, 3; decorations, 3; and furniture, 2). Three subjects reported having actively tried to ignore people, conversations and/or the entire plot of the video during the decorator perspective.

The answers to the question about what specific details the subjects found important for each perspective in Experiment 2 yielded similar results although the examples given by the participants were more specific. For example, for the detective perspective, the subjects mentioned specific people and events in the movie (e.g. specific suspicious characters, police arresting a person, and discussion about a murder), while for the decorator perspective they reported details about the interiors and yards (e.g. condition of plants in the gardens and style of the furniture and decorations).

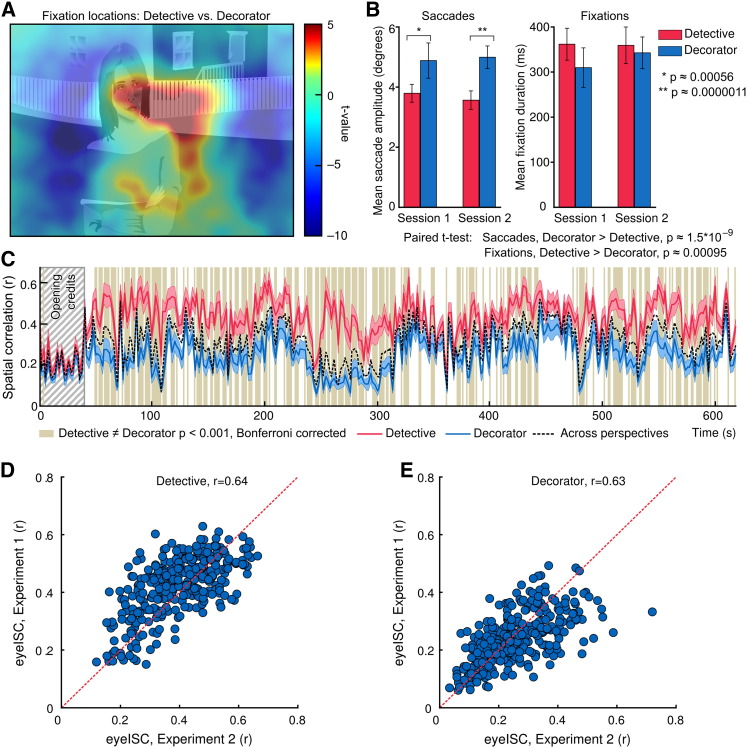

Eye movement analysis in Experiment 1 revealed that the detective (‘social’) perspective biased fixations toward the center of the screen, where the actors were typically shown, whereas the decorator (‘non-social’) perspective biased fixations toward the edges of the screen, where the interiors/exteriors were visible (Fig. 3A). Furthermore, participants made significantly shorter saccades and longer fixations during the detective than the decorator perspective (Fig. 3B). Inter-subject synchronization of eye movements (eyeISC) was significantly stronger during the detective than the decorator perspective (Fig. 3C). Crucially, the difference in eyeISC strength between the detective and decorator perspectives was significant only after the opening credits, which contained no task-relevant information (see the beginning of the time courses in Fig. 3C). Although the eye-movement parameters (i.e., saccade length and fixation duration) differed significantly between the perspectives, both classification approaches and the Mantel test based on the eyeISC matrices failed to separate the subjects according to their perspective in 97% of the time windows, as well as in the analysis based on the fixation heatmaps of the entire experiment. This suggests that the fixation locations were too similar across perspectives to allow successful classification.

Fig. 3.

Eye movement patterns. A: The subtraction heatmap (T-scores, unthresholded) shows regions receiving more fixations during the detective (yellow to red) and decorator (turquoise to blue) perspectives. Heatmaps were computed over the entire experiment and are overlaid on a sketch of a representative frame of the movie. B: Saccades were longer during the decorator perspective, and fixations were longer during the detective perspective. C: Time courses of inter-subject synchronization (± 95% confidence interval) of gaze position within perspectives (red and blue) and across perspectives (black dashed line). Vertical bars indicate time intervals with significantly different eyeISCs across conditions. Opening credits are indicated by a gray striped background. D and E: Scatter plots of eyeISC across time windows in Experiment 1 vs. Experiment 2 in the detective and decorator perspectives. The red dashed line indicates where eyeISC(Exp. 1) = eyeISC(Exp. 2).

The eye-gaze results of Experiment 2, in which eye gaze was recorded during fMRI scanning, were similar to those of Experiment 1, in which eye gaze was recorded outside the scanner. The contrast of fixation distributions across perspectives showed a similar pattern of increased visual sampling of the background in the decorator perspective and increased sampling of the middle area of the screen in the detective perspective. Furthermore, the time courses of eyeISC were highly correlated between the two datasets (r = 0.64 for detective, r = 0.63 for decorator; Figs. 3D and E).

Inter-subject synchronization of brain activity

During both perspective-taking conditions, brain activity was synchronized across participants in sensory and associative regions (Fig. 4). Synchrony was strongest in the visual and auditory projection cortices although reliable synchronization was also observed in the parietal and dorsolateral prefrontal cortices. These brain areas were also synchronized between individuals taking different perspectives (i.e., over pairs where one subject was assuming the detective and the other the decorator perspective). The results were replicable in Experiments 1 and 2 analyzed separately, and therefore data from the two experiments were pooled.

Fig. 4.

Mean ISC maps. Maps show the mean ISC across subject pairs in the detective (top) and decorator (middle) perspectives. The bottom row shows the mean ISC across conditions (i.e., ISC between subjects in the detective perspective and subjects in the decorator perspective). The results are based on combined data from Experiments 1 and 2. Abbreviations: AC — auditory cortex, dlPFC — dorsolateral prefrontal cortex, dmPFC — dorsomedial prefrontal cortex, PCC — posterior cingulate cortex, Pcu — precuneus, PPC — posterior parietal cortex, STS — superior temporal sulcus, VC — visual cortex, VTC — ventral temporal cortex.

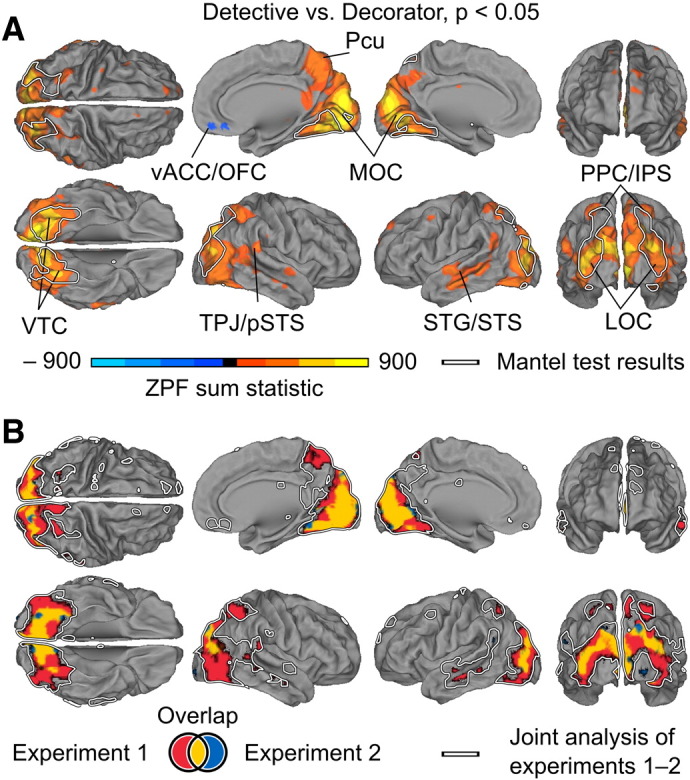

Taking the detective vs. decorator perspectives increased inter-subject synchronization in several brain areas, including the lateral and medial occipital, posterior parietal and ventral temporal regions, as well as in the superior temporal sulcus, parts of the superior temporal gyrus, and the temporoparietal junction (Fig. 5). Moreover, the lateral occipital, inferior temporal, and posterior parietal cortical regions showed significantly higher ISC in subject pairs assuming the same perspective than in pairs assuming different perspectives (Mantel test: q < 0.05, FDR corrected; Fig. 6). Accordingly, the perspective of the left-out subjects could be predicted from ISC with significantly above-chance accuracy in these higher-order areas (kNN: p < 0.001, uncorrected). The corresponding classification accuracies were 63.6–68.2% in the joint analysis of Experiments 1 and 2, 67.7–72.5% in Experiment 1, and 73.1–76.9% in Experiment 2; the exact accuracy depends on the selected value of k, and only areas where accuracy was significant with at least half of the values of k are presented. Table 1 lists the coordinates of the voxels showing the greatest difference between within- vs. across-perspective ISCs for each brain area.

Fig. 5.

Brain regions showing stronger ISC during the detective than the decorator perspective (orange to yellow) and vice versa (blue to turquoise). A) Results calculated over both experiments. Data are thresholded at p < 0.05 (FWE controlled). White outlines indicate areas exhibiting higher within- vs. across-perspective ISCs in Fig. 6. Abbreviations: LOC — lateral occipital cortex, MOC — medial occipital cortex, Pcu — precuneus, PPC/IPS — posterior parietal cortex/intra-parietal sulcus, STG/STS — superior temporal gyrus/superior temporal sulcus, TPJ/pSTS — temporoparietal junction/posterior superior temporal sulcus, vACC/OFC — ventral anterior cingulate cortex/orbitofrontal cortex, VTC — ventral temporal cortex. B) Areas showing higher ISC in Experiment 1 (red), Experiment 2 (blue) or in both experiments (yellow). White outlines indicate the results from panel A.

Fig. 6.

Brain areas showing higher ISC within vs. across perspectives. A: Brain regions where accuracy of classification based on pairwise ISC values was significantly above chance level (p < 0.001, uncorrected) with at least half of the values of k. The color coding (red–yellow) indicates the average accuracy over the classification results. White outlines indicate areas exhibiting higher within- vs. across-perspective ISCs. The results are calculated over both experiments. B: Areas where classification accuracy was significantly higher than chance in the Experiment 1 (red) or Experiment 2 (blue) and their overlap (yellow). C: Scatter plots show the subjects plotted on a 2D plane using multidimensional scaling where the proximity between two subjects corresponds to their ISC. We selected only the largest clusters for visualization by setting the cluster extent threshold to 27 voxels. Pale blue and red background colors indicate areas where subjects are classified as decorators and detectives, respectively, using a kNN classifier trained on the entire group (k = 33). These data are plotted for visualization only and were not subjected to secondary statistical testing. Abbreviations: LOC — lateral occipital cortex, PHG — parahippocampal gyrus, PPC — posterior parietal cortex. For the coordinates of the peak voxels, see Table 1.
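The multidimensional scaling in Fig. 6C places subjects so that their distances reflect their ISC. The caption does not specify the MDS variant or the distance transform. The following minimal sketch assumes classical (Torgerson) MDS with distance defined as 1 − ISC, and `classical_mds` is a hypothetical helper.

```python
import numpy as np


def classical_mds(isc, n_components=2):
    """Embed samples in n_components dimensions with classical (Torgerson) MDS.

    Distances are taken as 1 - ISC (an assumption), so pairs with high ISC
    end up close together.
    """
    d = 1.0 - np.asarray(isc, dtype=float)
    np.fill_diagonal(d, 0.0)  # a sample is at zero distance from itself
    n = d.shape[0]
    centring = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centring @ (d ** 2) @ centring  # double-centred squared distances
    eigvals, eigvecs = np.linalg.eigh(gram)  # eigenvalues in ascending order
    top = np.argsort(eigvals)[::-1][:n_components]
    return eigvecs[:, top] * np.sqrt(np.clip(eigvals[top], 0.0, None))
```

Applied to one voxel's ISC matrix, the two returned columns give x and y positions for a scatter plot of the kind shown in Fig. 6C.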

Table 1.

Voxel coordinates showing the greatest within- vs. across-perspective differences in ISC strength.

| Brain region | X | Y | Z |
|---|---|---|---|
| PHG R | 28 | −68 | −18 |
| PHG L | −32 | −46 | −20 |
| LOC R | 34 | −82 | 14 |
| LOC L | −34 | −86 | 12 |
| PPC L | −18 | −66 | 50 |
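The Mantel test used for the within- vs. across-perspective comparison (q < 0.05, FDR corrected) can be sketched as follows. The statistic is the Pearson correlation between the off-diagonal ISC values and a model matrix coding same-perspective pairs as 1 and different-perspective pairs as 0. Its null distribution is built by permuting the perspective labels. This minimal sketch for one voxel assumes a one-sided test and unrestricted label permutations; the study's own permutation scheme may respect the within-subjects design. `fdr_bh` assumes the Benjamini–Hochberg procedure is the FDR correction applied to the per-voxel p-values. Both function names are hypothetical.

```python
import numpy as np


def mantel_test(isc, labels, n_perm=5000, seed=0):
    """One-sided Mantel test: is ISC higher for same-perspective pairs?

    Returns the observed correlation and a permutation p-value.
    """
    labels = np.asarray(labels)
    rows, cols = np.triu_indices(len(labels), k=1)  # each pair once, no diagonal
    isc_values = isc[rows, cols]

    def statistic(lab):
        model = (lab[rows] == lab[cols]).astype(float)  # 1 = same perspective
        return np.corrcoef(isc_values, model)[0, 1]

    rng = np.random.default_rng(seed)
    observed = statistic(labels)
    null = np.array([statistic(rng.permutation(labels)) for _ in range(n_perm)])
    p_value = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return observed, p_value


def fdr_bh(p_values, q=0.05):
    """Benjamini-Hochberg step-up procedure; returns a boolean mask of discoveries."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    passes = p[order] <= q * np.arange(1, m + 1) / m
    significant = np.zeros(m, dtype=bool)
    if passes.any():
        largest = np.flatnonzero(passes).max()
        significant[order[: largest + 1]] = True
    return significant
```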

Analyses performed separately for Experiments 1 and 2 yielded concordant results. The differences in ISC between the detective and decorator perspectives were located in similar brain regions in both experiments. Owing to lower statistical power, however, the areas showing higher ISC in the detective than in the decorator condition were smaller than in the joint analysis (Fig. 5B). A small region at the junction of the ventral anterior cingulate and orbitofrontal cortex showed a small but statistically significant effect in the opposite direction, but this effect was not robust enough to appear in either experiment alone. The classification analysis revealed consistently overlapping regions of ventral and dorsal stream areas in both experiments (Fig. 6B).

Although both eye-gaze patterns and brain activity were significantly affected by the perspective-taking task, the eye-gaze patterns did not consistently predict either the ISC of brain activity or the classification accuracy based on the brain data.

Discussion

We show, for the first time, that the fMRI signals of different individuals become synchronized when they assume a similar psychological perspective while viewing a movie. We further demonstrate that such perspective taking is accompanied by changes in the selection of visual information, as indexed by eye-gaze patterns during movie viewing.

When the subjects assumed the same rather than different perspectives toward the movie, inter-subject synchronization of brain activity was stronger in LOC and VTC, which participate in higher-order visual processing, and in PPC, which plays an important role in the top-down allocation of attention (Corbetta and Shulman, 2002). Even though taking the detective vs. decorator perspective also increased synchronization in the early visual cortical regions, ISC in these regions did not predict which perspective the participants were taking. Rather, only activity beyond the early visual areas, in ventral and dorsal visual stream areas including the parahippocampal gyrus, lateral occipital cortex and posterior parietal cortex, contained sufficient information for accurate classification. Critically, we observed concordant results in Experiments 1 and 2, which used the same design but different subjects and scanners. Although the brain areas found to be sensitive to perspective taking in the two experiments did not overlap completely (likely due to different scanners and individual variability in the locations of functional brain regions), the overall effects were very similar (see Figs. 5 and 6). Thus, the effects were replicable and highly consistent across participants.

ISC reflects similarity of perspective beyond visual sampling

The increased within- vs. across-perspective ISCs in the ventral and dorsal streams are unlikely to be accounted for by eye-gaze patterns, for the following reasons. First, both eye gaze and early visual responses were consistently less similar during the decorator perspective than in both (i) pairs where both participants were taking the detective perspective and (ii) pairs taking dissimilar perspectives, whether similarity was calculated in sliding windows or over the entire experiment. Second, recent evidence indicates that neural responses in the ventral stream are largely invariant to eye movements (Nishimoto et al., 2013) and that activity in the posterior parietal cortex is poorly predicted by gaze during natural viewing (Salmi et al., 2014). Thus, the higher within- vs. across-perspective ISCs in the dorsal and ventral streams may constitute part of the neural substrate for psychological perspective taking, which guides task-specific perception of the movie scene independently of gaze location.
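Comparing similarity "in sliding windows and over the entire experiment" amounts to computing a Pearson correlation over short, successive segments of two time series as well as over the full series. The following minimal sketch applies to any pair of equally long signals, such as two subjects' BOLD time courses or gaze coordinates. `sliding_window_correlation` is a hypothetical helper, and `window` and `step` are hypothetical parameters because their values are not given in this section.

```python
import numpy as np


def sliding_window_correlation(x, y, window, step=1):
    """Pearson correlation between x and y in successive windows.

    Windows start at 0, step, 2*step, ... and only full windows are used.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape:
        raise ValueError("x and y must have the same length")
    starts = range(0, len(x) - window + 1, step)
    return np.array(
        [np.corrcoef(x[s:s + window], y[s:s + window])[0, 1] for s in starts]
    )
```

Setting `window` to the full series length returns a single value equal to the whole-run correlation. The same function therefore covers both comparisons.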

In addition to the higher-order visual areas, ISC was also increased during the detective vs. decorator perspective in the superior temporal sulcus and the adjoining temporoparietal junction, which play important roles in social cognition (Allison et al., 2000; Lahnakoski et al., 2012a; Nummenmaa and Calder, 2009) and in speech processing in particular (Boldt et al., 2013; Lahnakoski et al., 2012b). However, this effect was relatively small compared with the effects in the visual regions. This may be explained in part by the difficulty of ignoring task-irrelevant social information, particularly speech.

Receptive fields of sensory cortical neurons act as rapidly changing dynamic filters whose response gain and feature selectivity can be modified by attention to task-relevant features (Desimone and Duncan, 1995; Jääskeläinen et al., 2011). For example, attention to visual features such as motion and color modulates response amplitudes in several extrastriate visual areas (Chawla et al., 1999). Analogous perspective-driven filtering mechanisms could explain the modulations of higher-level brain responses in the present study. Here, however, the task-relevant features were not simple visual features. Rather, participants had to construct mental models to direct a perspective-dependent search and to monitor salient animate and inanimate objects. The brain regions revealed by our analyses may thus reflect the neural processes that enable these high-level models to guide attention and actions according to current goals. Furthermore, the cortical processes underlying these models operate in a synchronized manner across individuals who assume a shared psychological perspective toward the movie events. Our results thus provide experimental evidence for the proposal (Hasson et al., 2004; Nummenmaa et al., 2012) that inter-subject synchronization of brain activity reflects the similarity of mental states and high-level information processing across individuals, rather than mere similarity in stimulus-driven neural activity.

The link between synchronized neural activity and ‘mind sharing’ is supported by a range of recent studies. The synchrony of brain activity between a sender and a receiver has been shown to enhance communication between individuals (Schippers et al., 2010; Stephens et al., 2010). People with increased risk perception of a health crisis (the H1N1 pandemic) exhibit enhanced ISC in the anterior cingulate cortex while viewing TV reports on the topic (Schmälzle et al., 2013). The ISC of viewers' brain activity is increased during emotional episodes in a movie (Nummenmaa et al., 2012). Finally, activity in brain regions involved in language processing differs between individuals who listen to the same short stories with different attentional tasks (Cooper et al., 2011). These findings support the notion that synchrony in the involved brain regions reflects how complex stimuli are interpreted, rather than merely their physical characteristics.

Functions of brain areas participating in the tasks

Significant perspective-dependent ISC in the posterior parietal cortical regions accords well with prior studies showing that these regions are activated during maintenance of a cognitive task set (Corbetta and Shulman, 2002; Esterman et al., 2009; Wager et al., 2004). This interpretation is further supported by our behavioral data. These data indicate that subjects adopting similar rather than dissimilar perspectives attended to more similar objects and events in the movie, which likely increased the ISC in the PPC.

The ventral temporal areas modulated here by the perspective-taking task have been linked to the processing of objects and scenes (Haxby et al., 2001), but their activity is also modulated by task demands such as working-memory load (Rose et al., 2005). Furthermore, attention increases the activity evoked by the attended category of visual stimuli in the parahippocampal and fusiform gyri during perception of scenes and faces, respectively (Vuontela et al., 2013). Similar attentional modulations occur even when attention is directed not to the particular categories but to other features of the image (e.g., moving vs. stationary pictures of faces and houses; O'Craven et al., 1999). The parahippocampal gyrus also plays an important role in the associative processes that shape perceptual schema representations (Bar, 2009).

Our results reveal that higher-level perspective-taking tasks also synchronize activation time courses across participants in the dorsal and ventral visual streams. This suggests that naturalistic experimental paradigms provide a valid way to probe complex cognitive processes under real-life-like conditions.

To further clarify the brain basis of psychological perspective taking, future work should examine how the function of the brain regions revealed here differs between a ‘true’ psychological perspective-taking task and, for example, explicit attention to people vs. objects and places during naturalistic stimulation. It will also be of interest to characterize how the functional network structure between brain areas changes during different tasks.

Effects of perspective taking on eye gaze

Even though we could not classify the subjects' perspective based on the eye-movement data alone, eye-gaze patterns were more synchronous during the detective than during the decorator perspective. This result accords with prior behavioral work on perspective-driven text and scene processing, which has consistently found that some perspectives produce larger effects than others. These differences may arise from task constraints or from the number of items relevant to each perspective (Anderson and Pichert, 1978; Kaakinen and Hyönä, 2008). Subjects probably also share more prototypical knowledge about some perspectives than others (Kaakinen and Hyönä, 2008), which may increase the similarity of their interpretations of the scenes and of the associated brain activity. Additionally, the gaze differences across perspectives may be related to different processing modes of the visual scenes. Fixations were longer and saccades shorter during the detective than during the decorator perspective. This suggests that subjects adopted a focal processing mode during the detective perspective and an ambient processing mode during the decorator perspective (Pannasch and Velichkovsky, 2009).
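The focal vs. ambient distinction rests on two summary measures of a scanpath: fixation duration and the amplitude of the saccades between fixations. The following minimal sketch is not the study's analysis code, and `scanpath_summary` is a hypothetical helper. It assumes fixations are given in temporal order as (x, y, duration) rows, with positions in degrees of visual angle and durations in milliseconds. It also approximates saccade amplitude by the distance between successive fixation centres.

```python
import numpy as np


def scanpath_summary(fixations):
    """Mean fixation duration (ms) and mean saccade amplitude (deg).

    fixations: (n, 3) array-like of x, y, duration rows in temporal order, n >= 2.
    Saccade amplitude is approximated by the Euclidean distance between
    consecutive fixation centres.
    """
    fix = np.asarray(fixations, dtype=float)
    if fix.ndim != 2 or fix.shape[1] != 3 or len(fix) < 2:
        raise ValueError("need at least two (x, y, duration) fixations")
    mean_duration = fix[:, 2].mean()
    amplitudes = np.linalg.norm(np.diff(fix[:, :2], axis=0), axis=1)
    return float(mean_duration), float(amplitudes.mean())
```

A focal-like scanpath yields a higher first value and a lower second value than an ambient-like one. This is the pattern described above for the detective relative to the decorator perspective.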

Methodological remark

Viewing the same video naturally elicits robust inter-subject correlation in wide cortical regions, even when the subjects focus on different aspects of the video (see the bottom row of Fig. 4). To reveal genuine perspective-driven ISC modulations, we reduced between-subjects variance by using a fully counterbalanced within-subjects design. In general, the effects of the perspective-taking task were larger during the first viewing. However, some effects were reversed during the second viewing, suggesting that these effects were not related to perspective taking but instead reflected inherent group differences.

Conclusions

Our results, replicated in two experiments on independent subject groups, demonstrate that sharing a psychological perspective with others is reflected in increased inter-subject brain synchrony in the posterior parietal, lateral occipital, and ventral temporal regions. This perspective-driven enhancement of inter-subject synchronization of brain activity likely reflects the similarity of the mental states of individuals rather than the similarity of sensory input. Importantly, the synchrony in these brain regions could be an important mechanism supporting maintenance of similar psychological perspectives across individuals, and ultimately supporting mutual understanding of the shared environment.

Acknowledgments

The study was supported by the Academy of Finland (grants #121031, #138145, and #131483), the European Research Council (Advanced Grant #232946 to R.H., Starting Grant #313000 to L.N.), Aalto University (aivoAALTO and Aalto Science IT projects), and the Doctoral Program “Brain & Mind”. We thank Jukka-Pekka Kauppi for providing the code for the across-perspective ISC comparisons, Marita Kattelus and Heini Heikkilä for their help with data acquisition, as well as our participants for making this study possible.

Footnotes

Both classification approaches yielded similar results. The peak classification accuracies of the SVM classifier were slightly higher than the mean kNN accuracies over k values, consistent with prior literature (Mitchell et al., 2004). However, the null distribution of the SVM classifier had heavier tails than that of the kNN classifier, which made the thresholded results very similar. For conciseness, we report only the results of the kNN classifier (see the Results section).
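The footnote's point about tails can be made concrete. When significance is judged against an empirical null distribution of accuracies, the accuracy needed to reach p < 0.001 is the 99.9th percentile of that null. A classifier whose null has heavier tails therefore needs a higher observed accuracy to pass the same threshold. The following minimal sketch assumes null accuracies have already been obtained, for example by re-running a classifier on permuted labels. The function names and the simulated null distributions are hypothetical.

```python
import numpy as np


def empirical_p(observed, null):
    """One-sided permutation p-value with the +1 correction."""
    null = np.asarray(null, dtype=float)
    return (np.sum(null >= observed) + 1) / (null.size + 1)


def accuracy_threshold(null, alpha=0.001):
    """Accuracy at the (1 - alpha) quantile of the null distribution."""
    return float(np.quantile(np.asarray(null, dtype=float), 1.0 - alpha))


# Hypothetical nulls centred on chance (0.5) with the same scale parameter
# but different tails: Gaussian vs. Student's t with 3 degrees of freedom.
rng = np.random.default_rng(0)
light_tailed = 0.5 + 0.03 * rng.standard_normal(200_000)
heavy_tailed = 0.5 + 0.03 * rng.standard_t(df=3, size=200_000)
print(accuracy_threshold(light_tailed), accuracy_threshold(heavy_tailed))
```

The heavy-tailed null yields a clearly higher threshold. A higher peak accuracy can therefore still produce thresholded maps similar to those of a classifier with a lower peak accuracy but a lighter-tailed null.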

Appendix A.

Instructions for inducing the perspectives

Social perspective (forensic detective): “Next we will show you a short movie. One of the individuals depicted in the movie is guilty of homicide. While watching the movie, your task is to assume the role of a forensic detective. Your task is to evaluate which persons act suspiciously and could be potential suspects for murder. Be prepared to answer questions related to the movie after the experiment”.

Non-social perspective (interior decorator): “Next we will show you a short movie. The exteriors and interiors of the houses depicted in the movie need improvement. While watching the movie, your task is to assume the role of an interior and exterior decorator. Your task is to evaluate how you could improve the interiors and exteriors you see in order to make them more comfortable. Be prepared to answer questions related to the movie after the experiment”.

References

- Allison T., Puce A., McCarthy G. Social perception from visual cues: role of the STS region. Trends Cogn. Sci. 2000;4:267–278. doi: 10.1016/s1364-6613(00)01501-1.

- Anderson R.C., Pichert J.W. Recall of previously unrecallable information following a shift in perspective. J. Verbal Learn. Verbal Behav. 1978;17:1–12.

- Andrews T.J., Schluppeck D., Homfray D., Matthews P., Blakemore C. Activity in the fusiform gyrus predicts conscious perception of Rubin's vase–face illusion. NeuroImage. 2002;17:890–901.

- Bar M. The proactive brain: memory for predictions. Philos. Trans. R. Soc. B. 2009;364:1235–1243. doi: 10.1098/rstb.2008.0310.

- Boldt R., Malinen S., Seppä M., Tikka P., Savolainen P.I., Hari R., Carlson S. Listening to an audio drama activates two processing networks, one for all sounds, another exclusively for speech. PLoS ONE. 2013;8:e64489. doi: 10.1371/journal.pone.0064489.

- Chawla D., Rees G., Friston K.J. The physiological basis of attentional modulation in extrastriate visual areas. Nat. Neurosci. 1999;2:671–676. doi: 10.1038/10230.

- Cooper E.A., Hasson U., Small S.L. Interpretation-mediated changes in neural activity during language comprehension. NeuroImage. 2011;55:1314–1323. doi: 10.1016/j.neuroimage.2011.01.003.

- Corbetta M., Shulman G.L. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 2002;3:201–215. doi: 10.1038/nrn755.

- Cortes C., Vapnik V. Support-vector networks. Mach. Learn. 1995;20:273–297.

- Çukur T., Nishimoto S., Huth A.G., Gallant J.L. Attention during natural vision warps semantic representation across the human brain. Nat. Neurosci. 2013;16:763–770. doi: 10.1038/nn.3381.

- Desimone R., Duncan J. Neural mechanisms of selective visual attention. Annu. Rev. Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Esterman M., Chiu Y.-C., Tamber-Rosenau B.J., Yantis S. Decoding cognitive control in human parietal cortex. Proc. Natl. Acad. Sci. U. S. A. 2009;106:17974–17979. doi: 10.1073/pnas.0903593106.

- Fix E., Hodges J.L. Discriminatory analysis–nonparametric discrimination: consistency properties. Report Number 4, Project Number 21-49-004. USAF School of Aviation Medicine; Randolph Field, Texas: 1951. (Reprinted as International Statistical Review 57: 238–247.)

- Hasson U., Hendler T., Ben Bashat D., Malach R. Vase or face? A neural correlate of shape-selective grouping processes in the human brain. J. Cogn. Neurosci. 2001;13:744–753. doi: 10.1162/08989290152541412.

- Hasson U., Nir Y., Levy I., Fuhrmann G., Malach R. Intersubject synchronization of cortical activity during natural vision. Science. 2004;303:1634–1640. doi: 10.1126/science.1089506.

- Hastorf A.H., Cantril H. They saw a game: a case study. J. Abnorm. Psychol. 1954;49:129–134. doi: 10.1037/h0057880.

- Haxby J.V., Gobbini M.I., Furey M.L., Ishai A., Schouten J.L., Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Jääskeläinen I.P., Koskentalo K., Balk M.H., Autti T., Kauramäki J., Pomren C., Sams M. Inter-subject synchronization of prefrontal cortex hemodynamic activity during natural viewing. Open Neuroimaging J. 2008;2:14–19. doi: 10.2174/1874440000802010014.

- Jääskeläinen I.P., Ahveninen J., Andermann M.L., Belliveau J.W., Raij T., Sams M. Short term plasticity as a neural mechanism supporting memory and attentional functions. Brain Res. 2011;1422:66–81. doi: 10.1016/j.brainres.2011.09.031.

- Kaakinen J.K., Hyönä J. Perspective-driven text comprehension. Appl. Cogn. Psychol. 2008;22:319–334.

- Kaakinen J.K., Hyönä J., Keenan J.M. Perspective effects on online text processing. Discourse Process. 2002;33:159–173.

- Kaakinen J.K., Hyönä J., Keenan J.M. How prior knowledge, WMC, and relevance of information affect eye fixations in expository text. J. Exp. Psychol. Learn. Mem. Cogn. 2003;29:447–457. doi: 10.1037/0278-7393.29.3.447.

- Kaakinen J.K., Hyönä J., Viljanen M. Influence of a psychological perspective on scene viewing and memory for scenes. Q. J. Exp. Psychol. 2011;64:1372–1387. doi: 10.1080/17470218.2010.548872.

- Kauppi J.-P., Jääskeläinen I.P., Sams M., Tohka J. Inter-subject correlation of brain hemodynamic responses during watching a movie: localization in space and frequency. Front. Neuroinform. 2010;4:5. doi: 10.3389/fninf.2010.00005.

- Kauppi J.-P., Pajula J., Tohka J. A versatile software package for inter-subject correlation based analyses of fMRI. Front. Neuroinform. 2014;8:2. doi: 10.3389/fninf.2014.00002.

- Lahnakoski J.M., Glerean E., Salmi J., Jääskeläinen I.P., Sams M., Hari R., Nummenmaa L. Naturalistic fMRI mapping reveals superior temporal sulcus as the hub for the distributed brain network for social perception. Front. Hum. Neurosci. 2012a;6:233. doi: 10.3389/fnhum.2012.00233.

- Lahnakoski J.M., Salmi J., Jääskeläinen I.P., Lampinen J., Glerean E., Tikka P., Sams M. Stimulus-related independent component and voxel-wise analysis of human brain activity during free viewing of a feature film. PLoS ONE. 2012b;7:e35215. doi: 10.1371/journal.pone.0035215.

- Malinen S., Hlushchuk Y., Hari R. Towards natural stimulation in MRI — issues of data analysis. NeuroImage. 2007;35:131–139. doi: 10.1016/j.neuroimage.2006.11.015.

- Mantel N. The detection of disease clustering and a generalized regression approach. Cancer Res. 1967;27:209–220.

- Mitchell T.M., Hutchinson R., Niculescu R.S., Pereira F., Wang X., Just M., Newman S. Learning to decode cognitive states from brain images. Mach. Learn. 2004;57:145–175.

- Moll H., Meltzoff A.N. Perspective taking and its foundation in joint attention. In: Eilan N., Lerman H., Roessler J., editors. Perception, Causation, and Objectivity. Issues in Philosophy and Psychology. Oxford University Press; Oxford, England: 2011. pp. 286–304.

- Nishimoto S., Huth A., Bilenko N., Gallant J. Human visual areas invariant to eye movements during natural vision. J. Vis. 2013;13:1061.

- Nummenmaa L., Calder A.J. Neural mechanisms of social attention. Trends Cogn. Sci. 2009;13:135–143. doi: 10.1016/j.tics.2008.12.006.

- Nummenmaa L., Glerean E., Viinikainen M., Jääskeläinen I.P., Hari R., Sams M. Emotions promote social interaction by synchronizing brain activity across individuals. Proc. Natl. Acad. Sci. U. S. A. 2012;109:9599–9604. doi: 10.1073/pnas.1206095109.

- Nummenmaa L., Smirnov D., Lahnakoski J., Glerean E., Jääskeläinen I.P., Sams M., Hari R. Mental action simulation synchronizes action–observation circuits across individuals. J. Neurosci. 2014;34:748–757. doi: 10.1523/JNEUROSCI.0352-13.2014.

- O'Craven K.M., Downing P.E., Kanwisher N. fMRI evidence for objects as the units of attentional selection. Nature. 1999;401:584–587. doi: 10.1038/44134.

- Pajula J., Kauppi J.-P., Tohka J. Inter-subject correlation in fMRI: method validation against stimulus-model based analysis. PLoS ONE. 2012;7:e41196. doi: 10.1371/journal.pone.0041196.

- Pannasch S., Velichkovsky B.M. Distractor effect and saccade amplitudes: further evidence on different modes of processing in free exploration of visual images. Vis. Cogn. 2009;17:1109–1131.

- Raghunathan T.E., Rosenthal R., Rubin D. Comparing correlated but nonoverlapping correlations. Psychol. Methods. 1996;1:178–183.

- Rose M., Schmid C., Winzen A., Sommer T., Büchel C. The functional and temporal characteristics of top-down modulation in visual selection. Cereb. Cortex. 2005;15:1290–1298. doi: 10.1093/cercor/bhi012.

- Salmi J., Glerean E., Jääskeläinen I.P., Lahnakoski J.M., Kettunen J., Lampinen J., Tikka P., Sams M. Posterior parietal cortex activity reflects the significance of others' actions during natural viewing. Hum. Brain Mapp. 2014. doi: 10.1002/hbm.22510.

- Schippers M.B., Roebroeck A., Renken R., Nanetti L., Keysers C. Mapping the information flow from one brain to another during gestural communication. Proc. Natl. Acad. Sci. U. S. A. 2010;107:9388–9393. doi: 10.1073/pnas.1001791107.

- Schmälzle R., Häcker F., Renner B., Honey C.J., Schupp H.T. Neural correlates of risk perception during real-life risk communication. J. Neurosci. 2013;33:10340–10347. doi: 10.1523/JNEUROSCI.5323-12.2013.

- Stephens G.J., Silbert L.J., Hasson U. Speaker–listener neural coupling underlies successful communication. Proc. Natl. Acad. Sci. U. S. A. 2010;107:14425–14430. doi: 10.1073/pnas.1008662107.

- Vuontela V., Jiang P., Tokariev M., Savolainen P., Ma Y., Aronen E.T., Fontell T., Liiri T., Ahlström M., Salonen O., Carlson S. Regulation of brain activity in the fusiform face and parahippocampal place areas in 7–11-year-old children. Brain Cogn. 2013;81:203–214. doi: 10.1016/j.bandc.2012.11.003.

- Wager T.D., Jonides J., Reading S. Neuroimaging studies of shifting attention: a meta-analysis. NeuroImage. 2004;22:1679–1693. doi: 10.1016/j.neuroimage.2004.03.052.

- Wilson S.M., Molnar-Szakacs I., Iacoboni M. Beyond superior temporal cortex: intersubject correlations in narrative speech comprehension. Cereb. Cortex. 2008;18:230–242. doi: 10.1093/cercor/bhm049.