Abstract

A widely used approach to solving the inverse problem in electrocardiography involves computing potentials on the epicardium from measured electrocardiograms (ECGs) on the torso surface. The main challenge of solving this electrocardiographic imaging (ECGI) problem lies in its intrinsic ill-posedness. While many regularization techniques have been developed to control wild oscillations of the solution, the choice of proper regularization methods for obtaining clinically acceptable solutions is still a subject of ongoing research. However, there has been little rigorous comparison across methods proposed by different groups. This study systematically compared various regularization techniques for solving the ECGI problem under a unified simulation framework, consisting of both 1) progressively more complex idealized source models (from a single dipole to a triplet of dipoles), and 2) an electrolytic human torso tank containing a live canine heart, with the cardiac source being modeled by potentials measured on a cylindrical cage placed around the heart. We tested 13 different regularization techniques to solve the inverse problem of recovering epicardial potentials, and found that non-quadratic methods (total variation algorithms) and first-order and second-order Tikhonov regularizations outperformed other methodologies and resulted in similar average reconstruction errors.

Keywords: Electrocardiographic inverse problem, Tikhonov regularization, Truncated singular value decomposition, Conjugate gradient, ν-method, MINRES method, Total variation

Introduction

In clinical practice, physicians assess, from a limited number of electrocardiographic (ECG) signals, the complex electrical activity of the heart, which is often simplified in the form of a single dipole. Such an approach, despite being to a large extent qualitative, still represents the cornerstone of a day-to-day initial diagnosis in cardiology.

During the past 35 years, many research efforts have been devoted to exploring and validating the utility of inverse electrocardiography or electrocardiographic imaging (ECGI) (1), in which the potential distribution on the surface enveloping the heart (often called the epicardial surface), or other characterizations of cardiac electrical activity, are computed from a distributed set of body-surface ECGs. Motivation for ECGI in terms of epicardial potentials lies in the well-documented observations that epicardial potentials directly reflect the underlying cardiac activity and thus could provide a more effective means than, for example, an implicit single dipole model for localizing regional cardiac events (2-5).

ECGI can be essentially defined as a non-uniform, or filtered, “amplification” of body-surface ECG signals. However, such amplification is far from being straightforward since it requires that all of the following technological conditions are met:

1. Recording multiple ECGs, beyond the standard 12 leads both in their number (32, 64, or even 100 or more) and spatial distribution (generally covering both the anterior and posterior torso surface),

2. Defining a mathematical model that links potential distributions on the torso surface to those on the epicardial surface,

3. Constructing geometric models of both torso and epicardial surfaces and any intrathoracic organs deemed important, and approximating the volume conductor between torso and epicardial surfaces by a system of linear equations,

4. Regularizing this system of linear equations in order to reconstruct, from the potential distributions on the torso, reasonably physiologic distributions on the epicardial surface.

Throughout the years, conditions #1 through #3 have been progressively standardized (6-15). Condition #4, however, is still receiving considerable attention (16-19) and is related to the peculiar (and challenging) problem of the inherent ill-posedness of ECGI, which means that small errors in body-surface measurements may result in unbounded errors in the reconstruction of epicardial potentials. Given that the ECGI community has developed a plethora of regularization techniques to tackle the ill-posedness and to suppress the rapidly oscillating epicardial reconstructions that result from it, there is a growing need to compare, structure, and unify those diversified regularization methods. In particular, such a comparison requires the use of the same volume conductor and the same cardiac source models in order to eliminate other sources of variation in results. For example, one of the open questions in regularizing the ECGI problem remains whether to choose an L1-norm penalty function, which may be, as recently indicated (16), better suited for localizing epicardial pacing sites and reconstructing epicardial potentials, instead of the more standard L2-norm penalty function.

Accordingly, the purposes of this study were: 1) to systematically evaluate the performance of different regularization techniques using a realistic human torso model with both idealized and physiological cardiac source models; and 2) in particular, to test the hypothesis that non-quadratic regularizations are superior to Tikhonov regularizations.

Methods

Problem formulation

The electric potential field induced by cardiac activity can be modeled by a generalized Laplace's equation defined over the torso-shaped volume conductor subject to Cauchy boundary conditions (10). Assuming the human torso is homogeneous and isotropic, this boundary value problem can be solved by several numerical methods. One that has been widely used for ECGI is the boundary element method (BEM), which relates the potentials at the torso nodes (expressed as an m-dimensional vector ΦB) to the potentials at the epicardial nodes (expressed as an n-dimensional vector ΦE),

$$\Phi_B = A\,\Phi_E \qquad (1)$$

where A is the transfer coefficient matrix (m × n) and n < m. The transfer coefficient matrix depends entirely on geometric integrands (20, 21) and implies a piecewise-linear approximation of potentials over a given triangle.

In principle, the epicardial potential distribution could be simply approximated in the form of a pseudoinverse, $\Phi_E = (A^T A)^{-1} A^T \Phi_B$. However, as a consequence of the underlying ill-posedness, the matrix A is ill-conditioned, i.e., its singular values tend to zero with no particular gap of separation in the singular value spectrum, yielding highly unstable solutions (22). To obtain a stable solution, extra constraints must be imposed through some form of regularization; in one of its articulations, called Tikhonov regularization (22), the inverse problem is expressed as the weighted least-squares problem,

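$$\min_{\Phi_E} \left\{ \| A\,\Phi_E - \Phi_B \|_2^2 + \lambda^2 \| \Lambda\,\Phi_E \|_2^2 \right\} \qquad (2)$$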

where ∥ ∥2 denotes the L2 (Euclidean) norm of a vector, λ is the regularization parameter and the matrix Λ (n × n) determines the regularizing operator. In typical practical computations, the identity matrix (Λ = I), the discretized gradient, i.e., first-order operator (Λ = G), or the Laplacian, i.e., second-order operator (Λ = L), is used. We determined the regularization parameter λ for each data set and regularization method separately, since the actual value of λ strongly depends on both the data set and the regularization method. A reasonable value of λ can typically be determined by the L-curve method (23), e.g., using the function l_corner from the Regularization Tools 4.1 package (24).
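As an illustration of Eq. 2, the following is a minimal sketch and not the implementation used in this study. It assumes the objective ‖AΦE − ΦB‖² + λ²‖ΛΦE‖² (the λ² weighting is an assumption) and solves it as a stacked least-squares problem. The function names, the one-dimensional difference operators standing in for I, G and L, the toy smoothing matrix and the fixed value of λ are all hypothetical; a real application would use the BEM transfer matrix and surface operators, with λ chosen, for example, by the L-curve method.

```python
import numpy as np

def tikhonov_solve(A, phi_b, Lam, lam):
    """Minimize ||A x - phi_b||_2^2 + lam**2 * ||Lam x||_2^2 (assumed form of Eq. 2)."""
    # Stacking [A; lam*Lam] x ~ [phi_b; 0] has the same minimizer as the normal
    # equations (A^T A + lam^2 Lam^T Lam) x = A^T phi_b, without forming A^T A.
    K = np.vstack([A, lam * Lam])
    rhs = np.concatenate([phi_b, np.zeros(Lam.shape[0])])
    x, *_ = np.linalg.lstsq(K, rhs, rcond=None)
    return x

def difference_operator(n, order):
    """Hypothetical 1-D stand-ins: order 0 -> identity, 1 -> first difference, 2 -> second difference."""
    return np.diff(np.eye(n), n=order, axis=0)

def toy_problem(m=60, n=40, snr_db=40.0, seed=0):
    """Ill-conditioned smoothing operator with noisy data (illustrative only)."""
    rng = np.random.default_rng(seed)
    s = np.linspace(0.0, 1.0, m)[:, None]
    t = np.linspace(0.0, 1.0, n)[None, :]
    A = np.exp(-((s - t) ** 2) / (2 * 0.1 ** 2)) / n
    x_true = np.sin(2 * np.pi * t.ravel())
    b = A @ x_true
    sigma = np.sqrt(np.mean(b ** 2) / 10 ** (snr_db / 10))
    return A, b + rng.normal(0.0, sigma, m), x_true

A, phi_b, x_true = toy_problem()

def rel_err(x):
    return np.linalg.norm(x - x_true) / np.linalg.norm(x_true)

# Unregularized least squares versus zero-, first- and second-order penalties.
naive_error = rel_err(np.linalg.lstsq(A, phi_b, rcond=None)[0])
errors = {order: rel_err(tikhonov_solve(A, phi_b, difference_operator(A.shape[1], order), 1e-2))
          for order in (0, 1, 2)}
```

In this toy setting the unregularized solution is dominated by amplified noise, whereas each of the three penalties yields a stable reconstruction; which operator and which λ work best depends on the data, as noted above.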

Another possible approach to regularization is the use of truncated iterative methods, which are based on iteration schemes that access the transfer coefficient matrix A only via matrix-vector multiplications, and consequently produce a sequence of iteration vectors, ΦE(i), which converge toward the desired solution. When iterative algorithms are applied to discrete ill-posed problems, the iteration vector initially approaches the correct solution, but in later stages of the iterations it drifts toward some other, undesired vector; truncating the iterations before this happens therefore acts as regularization. From a computational cost perspective, iterative methods are also preferred when A is large, because explicit decomposition of A can require prohibitive amounts of computer memory. The conjugate gradient (CG) method (25) is a well-known technique for solving ill-posed problems, with matrices I, G and L, representing constraints on amplitude and first and second order spatial gradients, respectively, used as regularizing operators. The ν-method (23) is an iterative method derived from the classical Landweber method (26) and deployed when a non-negativity constraint is required. The CG method is actually one member of the family of Krylov subspace methods, which are mathematically largely equivalent in their expected solutions but differ in the computational details involved (27). Common members of the Krylov subspace family, in addition to CG, include the LSQR (28), GMRES (29), and MINRES (25) methods. Fast methods also exist for calculating solutions for multiple regularization parameters at little extra computational cost (30, 31).
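To make the idea of regularization by truncated iteration concrete, the sketch below applies the conjugate gradient method to the normal equations (often called CGLS). It is a generic textbook form under our own naming, not the code used in this study, and it covers only the zero-order case (Λ = I). The matrix is touched only through products with A and its transpose, and the number of iterations plays the role of the regularization parameter.

```python
import numpy as np

def cgls(A, b, n_iter):
    """Conjugate gradient on A^T A x = A^T b; returns the list of iterates x_1, x_2, ..."""
    x = np.zeros(A.shape[1])
    r = b.astype(float).copy()      # residual b - A x for the starting guess x = 0
    s = A.T @ r                     # gradient direction of the least-squares functional
    p = s.copy()
    gamma = s @ s
    iterates = []
    for _ in range(n_iter):
        if gamma == 0.0:            # already at the least-squares solution
            break
        q = A @ p
        alpha = gamma / (q @ q)
        x = x + alpha * p
        r = r - alpha * q
        s = A.T @ r
        gamma_new = s @ s
        p = s + (gamma_new / gamma) * p
        gamma = gamma_new
        iterates.append(x.copy())
    return iterates

# Small, well-conditioned example (hypothetical numbers) to check the recursion.
A = np.array([[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]])
b = np.array([1.0, 2.0, 3.0])
iterates = cgls(A, b, n_iter=2)
residuals = [np.linalg.norm(A @ x - b) for x in iterates]
```

For an ill-conditioned transfer matrix one would stop well before convergence, keeping the iterate selected by a stopping rule (for instance, an L-curve over the iteration index) instead of the last one.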

Another approach is the so-called non-quadratic regularization technique (16, 32), with the objective function defined as

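$$\min_{\Phi_E} \left\{ \| A\,\Phi_E - \Phi_B \|_2^2 + \lambda^2 \| \Lambda\,\Phi_E \|_1 \right\} \qquad (3)$$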

where ∥ ∥1 denotes the L1 norm (rather than the L2 norm) of the penalty term, in which the regularizing operator is either the first-order gradient (Λ = G) or the second-order, Laplacian operator (Λ = L). This approach is computationally more demanding than the previous ones because the problem is non-linear: the L1 norm of the penalty function is not differentiable where its argument is 0. The L1 norm can be shown to favor sparser solutions than the corresponding L2 norm. Thus, in the context of Eq. (3), it might be hypothesized that a non-quadratic regularization constraint better preserves sharp wavefronts in the reconstructed potentials, which would be smoothed out by the L2-norm penalty.
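One common way to handle the non-differentiable L1 penalty is iteratively reweighted least squares (IRLS), in which each iteration solves a quadratic problem whose weights come from the previous iterate. The sketch below uses that technique for illustration only; it is not necessarily the solver used in this study or in (16), and the objective form (with a λ² weight), the smoothing constant eps, the function names and the one-dimensional toy data with an identity forward operator are all assumptions.

```python
import numpy as np

def l1_objective(A, b, Lam, lam, x):
    """Assumed form of Eq. 3: ||A x - b||_2^2 + lam**2 * ||Lam x||_1."""
    return np.sum((A @ x - b) ** 2) + lam ** 2 * np.sum(np.abs(Lam @ x))

def irls_l1(A, b, Lam, lam, n_iter=50, eps=1e-6):
    """Approximate minimizer of the L1-penalized objective by reweighted quadratic solves."""
    AtA, Atb = A.T @ A, A.T @ b
    w = np.ones(Lam.shape[0])        # first pass is a plain quadratic (Tikhonov-like) solve
    for _ in range(n_iter):
        # Quadratic surrogate |z| <= z^2 / (2|z_old|) + |z_old| / 2; eps avoids division by zero.
        H = AtA + 0.5 * lam ** 2 * (Lam.T * w) @ Lam
        x = np.linalg.solve(H, Atb)
        w = 1.0 / (np.abs(Lam @ x) + eps)
    return x

# Toy 1-D example: a sharp step contaminated by a small alternating ripple.
n = 20
step = np.concatenate([np.zeros(n // 2), np.ones(n // 2)])
b = step + 0.03 * (-1.0) ** np.arange(n)
A = np.eye(n)                         # identity forward operator, for illustration only
G = np.diff(np.eye(n), axis=0)        # first-difference stand-in for the gradient operator
x_tv = irls_l1(A, b, G, lam=np.sqrt(0.1))

tv_of_data = np.sum(np.abs(G @ b))
tv_of_solution = np.sum(np.abs(G @ x_tv))
```

In this toy example the ripple is flattened while the step itself is retained, which is the edge-preserving behavior hypothesized above for wavefronts.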

In this work, we analyzed in a unified computational framework 13 regularization methods in total. For the sake of structure, we organized these regularization techniques into 3 groups corresponding to the discussion above:

1. Tikhonov regularizations: zero order (ZOT) (22, 33), first order (FOT) (6, 16), and second order (SOT) (33),

2. iterative techniques: truncated singular value decomposition (23) (zero order (ZTSVD), first order (FTSVD), and second order (STSVD)), conjugate gradient (25) (zero order (ZCG), first order (FCG), and second order (SCG)), ν-method (23), and MINRES method (25),

3. non-quadratic techniques (16, 32): total variation (FTV), and total variation with Laplacian (STV).

These regularization techniques cover well the spectrum of different regularization approaches used in ECGI.
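Of the methods listed above, truncated singular value decomposition is perhaps the simplest to state. The sketch below shows only the zero-order variant in a generic textbook form; the function name and the small test system are hypothetical, and the first- and second-order variants (FTSVD, STSVD), which involve the regularizing operator, are not covered here.

```python
import numpy as np

def tsvd_solve(A, b, k):
    """Zero-order truncated SVD solution keeping the k largest singular values."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    if not 1 <= k <= len(s):
        raise ValueError("k must be between 1 and the number of singular values")
    # Expand the data in the leading left singular vectors and divide by the
    # corresponding singular values; the discarded small ones would amplify noise.
    coeffs = (U[:, :k].T @ b) / s[:k]
    return Vt[:k].T @ coeffs

# Hypothetical 3 x 2 system: with k equal to the rank, TSVD reduces to least squares.
A = np.array([[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]])
b = np.array([1.0, 2.0, 3.0])
x_full = tsvd_solve(A, b, 2)
x_trunc = tsvd_solve(A, b, 1)
```

Here the truncation index k is the regularization parameter: lowering k gives a smaller-norm, smoother solution at the cost of a larger residual.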

Experimental Protocol I: Idealized source model

First, we assessed regularization techniques using progressively more complex idealized source models (34, 35). The reason for such an undertaking was twofold: 1) to test the hypothesis that reconstruction of epicardial potentials arising from complex (albeit idealized) sources would benefit more from the non-quadratic regularizations than from other regularizations, and 2) to test the hypothesis that non-quadratic regularizations are superior in reconstructing multiple ventricular events, for which we specifically used a two-dipole source model.

The protocol consisted of the following steps:

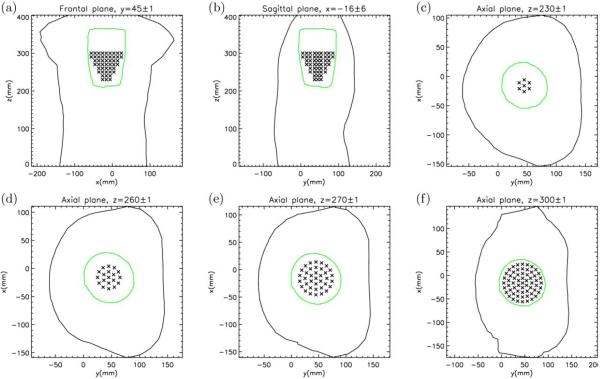

Step 1. We used a geometry based on the homogeneous torso model with the measurements taken on the torso-shaped outer boundary of an electrolytic tank (defined with 771 nodes) and an internal, barrel-shaped cage array of 602 electrodes, which surrounded all cardiac sources during the measurements, as described in Experimental Protocol II. In effect, the cage electrode surface was regarded as a surrogate for the epicardial surface. Inside the cage surface, we placed three different idealized source models: 1) single dipoles at 16 different locations and 3 different orientations (in total 48 combinations), 2) pairs of dipoles at 324 combinations, and 3) triplets of dipoles at 24 different combinations. Fig. 1 shows cross-sectional views of the tank-cage volume conductor model and dipole positions on a given plane; the selection of source locations is described in detail in the Appendix.

Step 2. We calculated torso potentials at 771 nodes and cage potentials at 602 nodes using the well-known discretized form of the fundamental integral equation for electric potential within a bounded homogeneous medium (36),

| (4) |

where Φ = (ΦB , ΦE) and Φ∞ = (ΦB∞ , ΦE∞) are (m + n)-dimensional vectors of the discretized node potentials of the torso and cage surfaces associated with the bounded homogeneous torso. The square matrix B contains geometrical integrands, already present in the matrix A of Eq. 1.

The torso and cylindrical-cage potentials in Eq. 4 were obtained by means of the non-iterative, fast-forward method (37); finally, measurement noise (at an SNR of 40 dB with respect to the body surface measurements) was added to the torso potentials to mimic experimental measurement conditions.

| (5) |

Step 3. The 602-node cylindrical-cage potentials were reconstructed by the 13 regularization techniques. To this end, we used the transfer matrix A of Eq. 1.

Step 4. The cylindrical-cage potentials, calculated using Eq. 4, served as the comparator to evaluate the accuracy of the inverse solution. As quantitative measures, we used the normalized root-mean-square (rms) error and the correlation coefficient between the directly computed cylindrical-cage potentials and the reconstructed potentials. We also compared qualitative features of both measured and inversely computed potential maps (e.g., areas of negative potentials and positions of extrema).
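The two quantitative measures of Step 4 can be sketched as follows. The definitions used here, the L2 norm of the difference divided by the L2 norm of the reference, and the Pearson correlation over nodes, are the forms commonly used in ECGI and are an assumption in this sketch; the function and variable names are hypothetical.

```python
import numpy as np

def relative_error(phi_ref, phi_rec):
    """Normalized rms error: ||phi_rec - phi_ref||_2 / ||phi_ref||_2 (assumed definition)."""
    return np.linalg.norm(phi_rec - phi_ref) / np.linalg.norm(phi_ref)

def correlation_coefficient(phi_ref, phi_rec):
    """Pearson correlation between reference and reconstructed node potentials."""
    a = phi_ref - phi_ref.mean()
    b = phi_rec - phi_rec.mean()
    return (a @ b) / (np.linalg.norm(a) * np.linalg.norm(b))

# Tiny made-up example with three nodes.
phi_ref = np.array([1.0, 2.0, 3.0])
phi_rec = np.array([1.0, 2.0, 4.0])
re = relative_error(phi_ref, phi_rec)
cc = correlation_coefficient(phi_ref, phi_rec)
```

Because the relative error is sensitive to amplitude while the correlation coefficient responds only to pattern, the two measures can rank reconstructions differently.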

Fig. 1.

Cross-sectional views of dipole positions in the (a) frontal, (b) sagittal, and (c through f) axial planes at different z levels. Tank and cage borders are displayed in black and green, respectively. On each plane, sources within the given x, y, or z level ± tolerance are displayed. The polar axis of the cylindrical cage is at x = -16 mm and y = 45 mm.

Experimental Protocol II: Canine heart model

A physiological source model offered an additional means to test our hypothesis of superiority of the non-quadratic regularization techniques. The protocol steps were as follows:

Step 1. We modeled the cardiac source using a live canine heart (3), which was retrogradely perfused via the aorta of a second, “support” dog. The perfused heart was suspended in roughly the correct anatomical position in an electrolytic tank modeled from an adolescent thorax; the geometries of the torso and the cage electrode array were the same as in the Experimental Protocol I. We recorded electric potentials (at 1 kHz sampling rate) from the 602-lead cage enveloping the suspended canine heart during sinus rhythm, in sample epochs of 4-7 seconds duration.

Step 2. We calculated the torso potentials at 771 nodes from the 602 cylindrical-cage potentials using Eq. 1. As before, the same measurement noise level (40 dB) was added to the torso potentials to mimic experimental measurement conditions.

Steps 3 and 4. As in the Experimental Protocol I, except the comparison is made to measured rather than simulated cylindrical-cage potentials.
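The noise step used in both protocols can be sketched as below. The sketch assumes zero-mean white Gaussian noise and defines the SNR as the ratio of mean signal power to mean noise power over all samples; the function names and the synthetic signal are hypothetical and stand in for the computed torso potentials.

```python
import numpy as np

def add_noise(signal, snr_db, rng):
    """Add white Gaussian noise so that the expected SNR equals snr_db."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / 10 ** (snr_db / 10)
    return signal + rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)

def achieved_snr_db(clean, noisy):
    """SNR actually realized by one noise draw."""
    return 10 * np.log10(np.mean(clean ** 2) / np.mean((noisy - clean) ** 2))

rng = np.random.default_rng(0)
clean = np.sin(np.linspace(0.0, 20.0 * np.pi, 100_000))   # synthetic stand-in signal
noisy = add_noise(clean, 40.0, rng)
snr = achieved_snr_db(clean, noisy)
```

At 40 dB the noise amplitude is 1% of the rms signal amplitude, which is small in the forward direction but is strongly amplified by an unregularized inverse.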

Results

Idealized source model

Table 1 summarizes average reconstruction results for 1-dipole, 2-dipole and 3-dipole models with 40-dB input noise. When using 2 dipoles instead of 1 dipole, error levels worsened for all methodologies; results were comparable for 2-dipole and 3-dipole models. It is evident that the differences among regularization methodologies were relatively small: for example, in a 1-dipole model, Tikhonov regularization FOT (average relative error of 0.28±0.16), iterative methodology FTSVD (0.29±0.17) and non-quadratic techniques FTV (0.29±0.16) and STV (0.28±0.15) produced virtually identical results, with other approaches (SOT, STSVD, FCG, SCG) not too inferior. These differences remained small even in the presence of complex sources, consisting of 3 dipoles: Tikhonov FOT (0.40±0.20), least-squares SCG (0.39±0.17), and non-quadratic FTV (0.40±0.18) and STV (0.39±0.18) performed, on average, equally well. The only outlier that on average consistently underperformed was the ν-method.

Table 1.

Average root-mean-square (rms) errors (±SD) for reconstruction results in single-dipole, 2-dipole, and 3-dipole models, with added 40-dB input noise

| Method | 1-dipole | 2-dipole | 3-dipole |
|---|---|---|---|
| ZOT | 0.42 ± 0.11 | 0.50 ± 0.13 | 0.49 ± 0.12 |
| FOT | 0.28 ± 0.16 | 0.43 ± 0.17 | 0.40 ± 0.20 |
| SOT | 0.33 ± 0.12 | 0.43 ± 0.14 | 0.42 ± 0.13 |
| ZTSVD | 0.44 ± 0.13 | 0.53 ± 0.15 | 0.51 ± 0.13 |
| FTSVD | 0.29 ± 0.17 | 0.46 ± 0.19 | 0.44 ± 0.22 |
| STSVD | 0.31 ± 0.20 | 0.48 ± 0.21 | 0.45 ± 0.23 |
| ZCG | 0.42 ± 0.12 | 0.51 ± 0.14 | 0.50 ± 0.12 |
| FCG | 0.31 ± 0.18 | 0.48 ± 0.19 | 0.42 ± 0.20 |
| SCG | 0.31 ± 0.18 | 0.44 ± 0.18 | 0.39 ± 0.17 |
| ν-method | 0.59 ± 0.15 | 0.65 ± 0.15 | 0.65 ± 0.17 |
| MINRES | 0.44 ± 0.14 | 0.56 ± 0.18 | 0.53 ± 0.12 |
| FTV | 0.29 ± 0.16 | 0.42 ± 0.17 | 0.40 ± 0.18 |
| STV | 0.28 ± 0.15 | 0.42 ± 0.17 | 0.39 ± 0.18 |

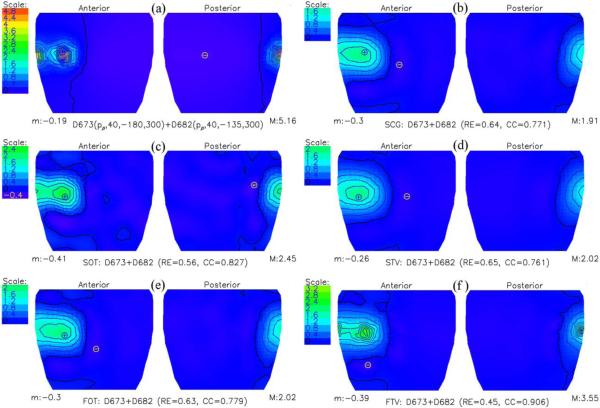

Simulations with pairs of parallel dipoles served as a surrogate for multiple ventricular events. Fig. 2 compares directly simulated cylindrical-cage potentials with inversely computed potentials using SCG, SOT, STV, FOT, and FTV, when the two dipoles were 30.6 mm apart. It is evident that two distinct extrema were reconstructed only when using SOT (relative error of 0.56) or FTV (0.44); it is interesting that for this specific example, the Laplacian was a more suitable operator than the gradient when applying Tikhonov regularization, while the opposite was true for the total variation technique. When taking averages, however, the difference among regularization techniques in the reconstruction of two-dipole potential distributions on the cylindrical-cage surface was small and results were nearly identical when using FOT (0.43±0.17), SOT (0.43±0.14), FTV (0.42±0.17), or STV (0.42±0.17). These observations point toward the often-neglected notion that qualitative features of reconstructed maps may lead to a different comparative assessment than do quantitative summary statistics (e.g., relative error), and that in some instances, smaller relative errors may not mean more potent qualitative discrimination of localized events.

Fig. 2.

Cylindrical-cage potential distribution due to a pair of parallel dipoles, 30.6 mm apart, positioned close to the surface of the cage. (a) Cylindrical cage potentials calculated directly. (b through f) Inversely computed cylindrical cage potentials using a second-order conjugate gradient iterative method (SCG), second-order Tikhonov regularization (SOT), total variation algorithm, whose gradient operator was replaced by a Laplacian operator (STV), first-order Tikhonov regularization (FOT), and first-order total variation technique (FTV), respectively. Two extrema were reconstructed clearly only by SOT and FTV. “M” denotes maximum potential and “m” denotes minimum potential.

Canine heart model

The upper part of Table 2 shows reconstruction results during the initial phase of the QRS complex, from the Q-onset to the peak of the Q-wave (at 22 ms after the Q-onset), in the presence of 40-dB input noise. During the period of low signal-to-noise ratio just after the onset, the normalized rms errors of the reconstructed cage potentials were relatively high, ranging from 0.22 to 0.36, and the correlation coefficients were in the range of 0.93-0.98, depending on the regularization technique used. It appears that the difference between non-quadratic methods and other regularization techniques became most pronounced at the peak of the Q wave.

Table 2.

Root-mean-square (rms) errors for reconstruction results during the initial phase of the QRS complex, from the Q-onset to the peak of the Q-wave, and for standard reference points of the sinus rhythm (peaks of P, R, S, and T waves) in the presence of a 40-dB noise. Q5 refers to the potential distributions at 5 ms after the Q-onset; the same applies to Q10 and Q15; Qpk refers to the distributions at the peak of the Q-wave (at 22 ms after the Q-onset)

| | ZOT | FOT | SOT | ZTSVD | FTSVD | STSVD | ZCG | FCG | SCG | ν | MINRES | FTV | STV |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Q5 | 0.32 | 0.22 | 0.22 | 0.33 | 0.22 | 0.23 | 0.32 | 0.25 | 0.25 | 0.32 | 0.42 | 0.23 | 0.22 |
| Q10 | 0.26 | 0.11 | 0.10 | 0.27 | 0.11 | 0.11 | 0.26 | 0.11 | 0.11 | 0.26 | 0.34 | 0.15 | 0.12 |
| Q15 | 0.30 | 0.18 | 0.16 | 0.27 | 0.15 | 0.14 | 0.26 | 0.19 | 0.15 | 0.27 | 0.38 | 0.14 | 0.13 |
| Qpk | 0.49 | 0.43 | 0.39 | 0.44 | 0.38 | 0.31 | 0.40 | 0.45 | 0.38 | 0.45 | 0.55 | 0.31 | 0.25 |
| P | 0.47 | 0.43 | 0.42 | 0.51 | 0.42 | 0.42 | 0.47 | 0.45 | 0.45 | 0.48 | 0.49 | 0.37 | 0.41 |
| R | 0.45 | 0.40 | 0.39 | 0.42 | 0.39 | 0.37 | 0.40 | 0.40 | 0.38 | 0.43 | 0.51 | 0.35 | 0.33 |
| S | 0.48 | 0.42 | 0.40 | 0.50 | 0.41 | 0.40 | 0.47 | 0.45 | 0.44 | 0.49 | 0.53 | 0.37 | 0.40 |
| T | 0.27 | 0.16 | 0.16 | 0.27 | 0.16 | 0.16 | 0.26 | 0.16 | 0.16 | 0.26 | 0.35 | 0.17 | 0.16 |

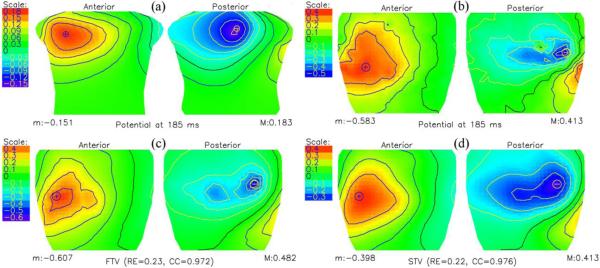

Fig. 3 depicts the body-surface potentials and the measured and calculated cage potentials at 5 ms after the onset of the Q wave. The simulation was carried out by adding 40-dB noise to the torso potentials calculated from the measured cage potentials, and the cage potentials were inversely computed by the FTV and STV, two methodologies recently deemed better suited for localizing cardiac events (16). The body-surface potentials exhibit initial anterior maxima, resulting from the septal activation of the left ventricle. Both FTV and STV capture well the qualitative features of the cage potentials, with STV providing a smoother solution.

Fig. 3.

Potential distributions at 5 ms after the onset of the Q wave. (a) Torso potentials computed from the measured cylindrical cage potentials using boundary element method (BEM). (b) Measured cylindrical cage potentials. (c and d) Inversely computed cylindrical cage potentials using the total variation method (FTV), and the total variation algorithm with the Laplacian instead of a gradient operator (STV). Although both approaches have similar relative errors, in qualitative terms, FTV better captures the measured potential distribution.

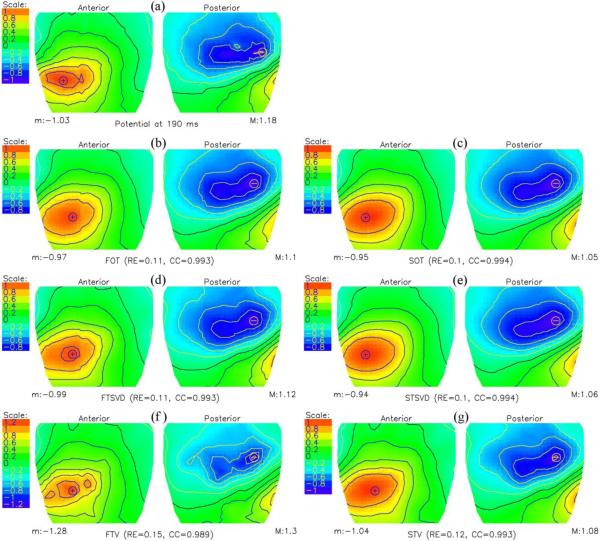

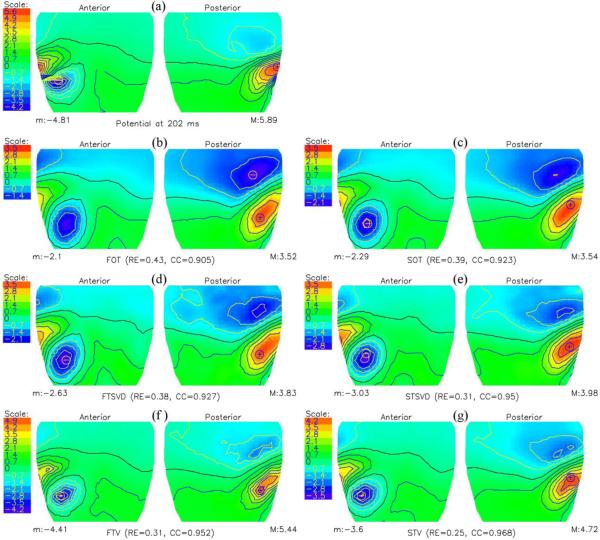

Figs. 4 and 5 show the measured and calculated cage potentials at 10 ms after the onset and at the peak of the Q wave. The cage potentials were inversely computed by the FOT, SOT, FTSVD, STSVD, FTV and STV, respectively. As before with the idealized source model, the reconstructed solutions from 3 of the methodologies were practically indistinguishable at 10 ms into the Q wave, in both quantitative and qualitative terms. At the peak of the Q wave, according to quantitative measures, STV (0.25) performed better than FTV (0.31), STSVD (0.31), FTSVD (0.38), SOT (0.39) and FOT (0.43), although the differences in qualitative features of the maps were not prominent.

Fig. 4.

Potential distributions at 10 ms after the onset of the Q wave. (a) Measured cylindrical cage potentials. (b, c) Inversely computed cylindrical cage potentials using first (FOT) and second (SOT) order Tikhonov regularizations, (d, e) first (FTSVD) and second (STSVD) order truncated singular value decomposition methods, and (f, g) total variation algorithms with a gradient (FTV) and a Laplacian operator (STV), respectively.

Fig. 5.

Potential distributions at the peak of the Q wave, i.e., at 22 ms from the onset of the Q wave. (a) Measured cylindrical cage potentials. (b, c) Inversely computed cylindrical cage potentials using a first (FOT) and second (SOT) order Tikhonov regularizations, (d, e) first (FTSVD) and second (STSVD) order truncated singular value decomposition methods, and (f, g) total variation algorithms with a gradient (FTV) and a Laplacian operator (STV), respectively.

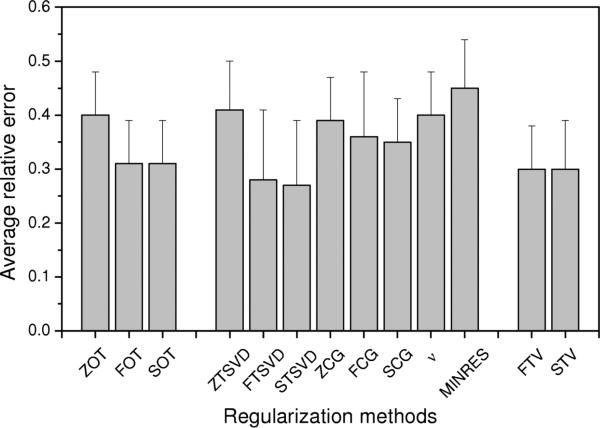

The lower part of Table 2 summarizes results for the standard fiducial points of the sinus rhythm (peaks of P, R, S, and T waves), again using 40-dB input noise. Similar to results during the initial phase of the QRS, both non-quadratic regularization techniques (FTV, STV) performed, at least at some time instants, somewhat better than the other 11 methodologies tested. On average, however, these differences were small and Tikhonov regularizations (FOT, SOT) and iterative regularizations were, for the most part, on a par with the non-quadratic techniques. Fig. 6 further emphasizes this point by comparing average reconstruction results during the entire sinus rhythm, with similar outcomes for FOT, SOT, FTSVD, STSVD, FTV, and STV methodologies.

Fig. 6.

Comparison of average relative errors (with SD represented as the bars above the columns) over the entire sinus rhythm (n = 484) for 13 regularization techniques. On average, FOT, SOT, FTSVD, STSVD, FTV, and STV perform equally well (p < 0.05).

Discussion

The motivation for this study was to compare the performance of various regularization techniques using a unified computational framework derived from a realistic torso model with both an idealized source model and a canine heart. Our main hypothesis that non-quadratic methods (FTV and STV) would be superior in regularization of ECGI was not confirmed. In fact, our results indicate that there is, on average over all source models, little difference among three main groups (i.e., Tikhonov, iterative, and non-quadratic) of regularization techniques. Somewhat disappointing was the performance of the ν-method, which is otherwise a potent tool in solving non-negativity constrained ill-posed problems in related fields due to straightforward implementation of the constraint (38).

We have observed some instances in which the non-quadratic regularization FTV (and sometimes STV) appeared to capture inverse solutions better than other methodologies, but not on the level reported by Ghosh and Rudy (16), who noted that the FTV method (also called L1 regularization) markedly and consistently better captured the spatial patterns of epicardial potentials. It appears that FTV may, in some specific instances, be more potent in capturing multiple sources that are not too far apart, although under such circumstances SOT also performs quite well. It seems that, for example, FTV tends to under-regularize the inverse solution and hence provide more detail, provided, of course, that the researcher has at least some a priori information about the nature of sources (e.g., the possible sites of early activation) and a systemized differentiation of quantitative and qualitative features of maps with respect to a given clinical application. Such under-regularization, which really refers to under-suppression of the type of error that the regularization penalizes in the resulting solution, can undermine the robustness of the solution and may well lead in some instances to ambiguity when distinguishing between the true source and noise. Our experience in the field of inverse reconstructions suggests that slight over-regularization (as provided by, e.g., SOT) is, at least for clinical applications given the current uncertainties of the field, better suited than any form of under-regularization.

Our study has some obvious limitations: the data from the canine heart were directly measured only on the cylindrical-cage surface, and this surface potential distribution was then used as the equivalent source for calculating the torso potentials. The cylindrical cage was some distance away from the canine heart, which at least to some extent smoothed out the potential distribution on the cage surface. To address these potential limitations, we placed the idealized dipolar sources close to the surface of the cylindrical cage (e.g., for the parallel-dipole examples shown in Fig. 2 the distance was 7.9±0.9 mm). It is worth noting that it is extremely difficult to record potentials on the epicardium while obtaining an accurate geometry of the epicardial surface.

We plan to extend our research in five directions: 1) using epicardial potentials instead of cage potentials, 2) testing the validity of the homogeneous torso assumption, 3) applying regularization to identify sites of early activation during pacing, 4) further assessing regularization techniques that impose multiple spatial and spatiotemporal constraints on the inverse solution (32, 33, 39, 40), and 5) using a simulation framework with models of ventricular fibrillation (41).

Acknowledgment

The authors wish to thank J Sinstra and D Wang for help in providing the data for this study.

Appendix

We created a cylindrical source space, consisting of 744 dipoles in 248 positions, which were arranged in 8 axial planes 10 mm apart along the polar z-axis. There were three perpendicular dipoles in each position: normal (radial, pρ) and tangential (along the polar angle, pφ) to the cage side surface, and alongside the polar axis (pz). In the first and second source (axial) planes there were 7 source positions (Fig. 1c), one in the center and 6 arranged along a concentric circle with a radius of 10 mm (nodes of a hexagon with a side of 10 mm). The center of the bottom plane was at (−16, 45, 230) mm in the Cartesian coordinate system of the torso-cage model. In the next two source planes (Fig. 1d), there were 19 positions on each plane, 7 as in the first and second planes and 12 arranged on an additional concentric circle with a radius of 20 mm (nodes of a dodecagon with a side of 10 mm). In the next two source planes, there were 37 positions on each plane (Fig. 1e), 19 as in the previous two planes, and 18 on an additional concentric circle with a radius of 30 mm (equivalent to the nodes of an octadecagon with a side length of 10 mm). In the last two source planes, there were 61 positions on each plane (Fig. 1f), 37 as in the previous two planes and 24 on an additional concentric circle with a radius of 40 mm (nodes of a tetracosagon with sides of 10 mm). Additional details are available in the Technical supplement at [http://fizika.imfm.si/ECGI/simulation.htm].
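The layout described above can be checked with a short sketch. It assumes that the first plane is the bottom plane at z = 230 mm with subsequent planes stacked at increasing z, and that each circle starts at a polar angle of zero; neither detail is stated in the text, and the function name is hypothetical.

```python
import math

def source_positions(center=(-16.0, 45.0), z0=230.0, dz=10.0):
    """Return (x, y, z) source positions in mm for the 8 axial planes."""
    circles_per_plane = [1, 1, 2, 2, 3, 3, 4, 4]    # concentric circles in each plane
    positions = []
    for k, n_circles in enumerate(circles_per_plane):
        z = z0 + k * dz                              # assumed stacking direction
        positions.append((center[0], center[1], z))  # central position on the polar axis
        for c in range(1, n_circles + 1):
            radius = 10.0 * c                        # 10, 20, 30, 40 mm
            n_points = 6 * c                         # 6, 12, 18, 24 positions
            for j in range(n_points):
                angle = 2.0 * math.pi * j / n_points # assumed starting angle of zero
                positions.append((center[0] + radius * math.cos(angle),
                                  center[1] + radius * math.sin(angle), z))
    return positions

positions = source_positions()
per_plane = [sum(1 for p in positions if p[2] == 230.0 + 10.0 * k) for k in range(8)]
n_positions = len(positions)
n_dipoles = 3 * n_positions                          # three orthogonal dipoles per position
```

With these assumptions the sketch reproduces the stated counts of 7, 19, 37 and 61 positions per plane, 248 positions and 744 dipoles in total. The spacing between neighboring positions on a circle is exactly 10 mm only on the innermost circle and roughly 10.4 mm on the outer three, so the quoted side length of 10 mm is approximate there.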


References

- 1. Lux RL. Electrocardiographic mapping. Noninvasive electrophysiological cardiac imaging. Circulation. 1993;87(3):1040–2. doi: 10.1161/01.cir.87.3.1040.

- 2. Barr RC, Spach MS. Inverse calculation of QRS-T epicardial potentials from body surface potential distributions for normal and ectopic beats in intact dog. Circ Res. 1978;42(5):661–75. doi: 10.1161/01.res.42.5.661.

- 3. Oster H, Taccardi B, Lux RL, Ershler PR, Rudy Y. Noninvasive electrocardiographic imaging: reconstruction of epicardial potentials, electrograms, and isochrones and localization of single and multiple cardiac events. Circulation. 1997;96(3):1012–24. doi: 10.1161/01.cir.96.3.1012.

- 4. Ramsey M, Barr RC, Spach MS. Comparison of measured torso potentials with those simulated from epicardial potentials for ventricular depolarization and repolarization in the intact dog. Circ Res. 1977;41(5):660–72. doi: 10.1161/01.res.41.5.660.

- 5. Taccardi B, Macchi E, Lux RL, Ershler PR, Spaggiari S, Baruffi S, Vyhmeister Y. Effect of myocardial fiber direction on epicardial potentials. Circulation. 1994;90(6):3076–90. doi: 10.1161/01.cir.90.6.3076.

- 6. Colli-Franzone P, Taccardi B, Viganotti C. An approach to inverse calculation of epicardial potentials from body surface maps. Adv Cardiol. 1978;21(1):50–4. doi: 10.1159/000400421.

- 7. Colli-Franzone P, Macchi E, Viganotti C. Oblique dipole layer potentials applied to electrocardiology. J Math Biol. 1983;17(1):93–124. doi: 10.1007/BF00276116.

- 8. Fischer G, Tilg B, Wach P, Modre R, Leder U, Nowak H. Application of high-order boundary elements to the electrocardiographic inverse problem. Comput Methods Programs Biomed. 1999;58(2):119–31. doi: 10.1016/s0169-2607(98)00076-5.

- 9. Horacek BM, Clements JC. The inverse problem of electrocardiography: a solution in terms of single- and double-layer sources of the epicardial surface. Math Biosci. 1997;144(2):119–54. doi: 10.1016/s0025-5564(97)00024-2.

- 10. Johnson CR. Computational and numerical methods for bioelectric field problems. CRC Crit Rev Biomed Eng. 1997;25(1):1–81. doi: 10.1615/critrevbiomedeng.v25.i1.10.

- 11. Lux RL. Electrocardiographic body surface potential mapping. CRC Crit Rev Biomed Eng. 1997;8(3):253–79.

- 12. MacLeod RS, Gardner MJ, Miller RM, Horacek BM. Application of an electrocardiographic inverse solution to localize ischemia during coronary angioplasty. J Cardiovasc Electrophysiol. 1995;6(1):2–18. doi: 10.1111/j.1540-8167.1995.tb00752.x.

- 13. MacLeod RS, Brooks DH. Recent progress in inverse problems of electrocardiography. IEEE Eng Med Biol. 1998;17(1):73–83. doi: 10.1109/51.646224.

- 14. Rudy Y, Oster H. The electrocardiographic inverse problem. CRC Crit Rev Biomed Eng. 1992;20(1-2):25–46.

- 15. Throne RD, Olson LG, Hrabik TJ. A comparison of higher-order generalized eigensystem techniques and Tikhonov regularization for the inverse problem of electrocardiography. Inverse Problems in Engineering. 1999;7(2):143–93.

- 16. Ghosh S, Rudy Y. Application of L1-norm regularization to epicardial potential solution of the inverse electrocardiography problem. Ann Biomed Eng. 2009;37(5):902–12. doi: 10.1007/s10439-009-9665-6.

- 17. Li G, He B. Non-invasive estimation of myocardial infarction by means of a heart-model-based imaging approach: a simulation study. Med Biol Eng Comput. 2004;42(1):128–36. doi: 10.1007/BF02351022.

- 18. Milanic M, Jazbinsek V, Wang D, Stinstra J, MacLeod RS, Brooks D, Hren R. Evaluation of approaches to solving electrocardiographic imaging problem. Comput Cardiol. 2009;36:177–80.

- 19. Shou G, Xia L, Liu F, Jiang M, Crozier S. On epicardial reconstruction using regularization schemes with the L1-norm data term. Phys Med Biol. 2011;56(1):57–72. doi: 10.1088/0031-9155/56/1/004.

- 20. De Munck JC. A linear discretization of the volume conductor boundary integral equation using analytically integrated elements. IEEE Trans Biomed Eng. 1992;39(9):986–90. doi: 10.1109/10.256433.

- 21. Ferguson AS, Zhang X, Stroink G. A complete linear discretization for calculating the magnetic field using the boundary element method. IEEE Trans Biomed Eng. 1994;41(5):455–9. doi: 10.1109/10.293220.

- 22. Tikhonov A, Arsenin V. Solutions of Ill-Posed Problems. Winston; Washington, DC: 1977.

- 23. Hansen PC. Rank-Deficient and Discrete Ill-Posed Problems. SIAM; Philadelphia, PA: 1998.

- 24. Hansen PC. Regularization Tools version 4.0 for Matlab 7.3. Numer Algo. 2007;46(2):189–194.

- 25. Hanke M. Conjugate Gradient Type Methods for Ill-Posed Problems. Longman Scientific & Technical; Harlow: 1995.

- 26. Baker CTH. The Numerical Treatment of Integral Equations. Clarendon Press; Oxford, UK: 1977.

- 27. Saad Y. Iterative Methods for Sparse Linear Systems. 2nd ed. SIAM Press; Philadelphia, PA: 2003.

- 28. Paige CC, Saunders MA. LSQR: An algorithm for sparse linear equations and sparse least squares. ACM Transactions on Mathematical Software. 1982;8(1):43–71.

- 29. Saad Y, Schultz MH. GMRES: A generalized minimal residual algorithm for solving nonsymmetric linear systems. SIAM J Sci Stat Comput. 1986;7(3):856–869.

- 30. Hoge WS, Kilmer ME, Haker SJ, Brooks DH, Kyriakos WE. An Iterative Method for Fast Regularized Parallel MRI Reconstruction. Proc. of ISMRM 14th Scientific Meeting; Seattle, WA. 2006.

- 31. Parks M, de Sturler E, Mackey G, Johnson DD, Maiti S. Recycling Krylov Subspaces for Sequences of Linear Systems. SIAM Journal on Scientific Computing. 2006;28(5):1651–74.

- 32. Brooks DH, Srinidhi KG, MacLeod RS, Kaeli DR. Multiply constrained cardiac electrical imaging methods. Subsurface Sensors and Applications. Proc SPIE. 1999;3752:62–71.

- 33. Brooks DH, Ahmad GF, MacLeod RS, Maratos GM. Inverse electrocardiography by simultaneous imposition of multiple constraints. IEEE Trans Biomed Eng. 1999;46(1):3–18. doi: 10.1109/10.736746.

- 34. Horacek BM. Numerical model of an inhomogeneous human torso. Adv Cardiol. 1974;10(3):51–7.

- 35. Hren R. Value of epicardial potential maps in localizing pre-excitation sites for radiofrequency ablation. A simulation study. Phys Med Biol. 1998;43(6):1449–68. doi: 10.1088/0031-9155/43/6/006.

- 36. Geselowitz DB. On bioelectric potentials in an inhomogeneous volume conductor. Biophys J. 1967;7(1):1–11. doi: 10.1016/S0006-3495(67)86571-8.

- 37. Jazbinsek V, Hren R, Stroink G, Horacek BM, Trontelj Z. Value and limitations of an inverse solution for two equivalent dipoles in localising dual accessory pathways. Med Biol Eng Comput. 2003;41(3):133–40. doi: 10.1007/BF02344880.

- 38. Milanic M, Sersa I, Majaron B. A spectrally composite reconstruction approach for improved resolution of pulsed photothermal temperature profiling in water-based samples. Phys Med Biol. 2009;54(9):2829–44. doi: 10.1088/0031-9155/54/9/016.

- 39. Ghodrati A, Brooks DH, Tadmor G, MacLeod RS. Wavefront-based models for inverse electrocardiography. IEEE Trans Biomed Eng. 2006;53(9):1821–31. doi: 10.1109/TBME.2006.878117.

- 40. White R. The Generalized Minimal Residual Method applied to the Inverse Problem of Electrocardiography. MSc Thesis, Dalhousie University. 2006.

- 41. Ten Tusscher KH, Hren R, Panfilov AV. Organization of ventricular fibrillation in the human heart. Circ Res. 2007;100(12):e87–e101. doi: 10.1161/CIRCRESAHA.107.150730.