Abstract

Wheelchair control requires multiple degrees of freedom and fast intention detection, which makes electroencephalography (EEG)-based wheelchair control a major challenge. In our previous study, we achieved direction (turning left and right) and speed (acceleration and deceleration) control of a wheelchair using a hybrid brain–computer interface (BCI) combining motor imagery and P300 potentials. In this paper, we propose a hybrid EEG-EOG BCI, which combines motor imagery, P300 potentials, and eye blinking to implement forward, backward, and stop control of a wheelchair. By performing the relevant activities, users (e.g., those with amyotrophic lateral sclerosis and locked-in syndrome) can navigate the wheelchair with seven steering behaviors. Experimental results on four healthy subjects not only demonstrate the efficiency and robustness of our brain-controlled wheelchair system but also indicate that all four subjects could control the wheelchair spontaneously and efficiently without any other assistance (e.g., an automatic navigation system).

Keywords: Brain-controlled wheelchair, Hybrid brain–computer interface, Asynchronous, Motor imagery, P300 potentials, Eye blinking

Introduction

Brain-computer interfaces (BCI) can facilitate direct communication between the human brain and external environment by translating human intentions into control signals, which provide a communication method to convey brain messages independently from the brain’s normal output pathway (Bin et al. 2009; Panicker et al. 2011). For people suffering from severe motor disabilities, such as amyotrophic lateral sclerosis (ALS) and locked-in syndrome, BCIs emerge as a feasible type of human-computer and human-machine interface that can allow these patients to interact with the world and help to improve their quality of life (Bromberg 2008; Williams et al. 2008). Brain activity can be monitored using different approaches, such as scalp-recording electroencephalograms (EEGs), magnetoencephalograms (MEGs), functional magnetic resonance imaging (fMRI), and electrocorticograms (ECoGs). However, an EEG-based BCI is considered to be a practical method in daily life because it is noninvasive and the acquisition system is portable. In this case, BCI systems are categorized based on the brain activity patterns, such as the P300 component of event-related potentials (ERPs), event-related desynchronization/synchronization (ERD/ERS), steady-state visual evoked potentials (SSVEPs), and slow cortical potentials (SCPs) (Sellers et al. 2006; Pfurtscheller et al. 2000; Williams et al. 2008; Birbaumer 1999).

The idea of moving robots by merely “thinking” has attracted the interest of researchers for the past 40 years. Only recently have experiments shown the feasibility of using BCIs to control simulated or real wheelchairs. One example is the wheelchair developed by Millán et al. (2009), which realized only three wheelchair steering behaviors: turning left, turning right, and moving forward. Another example is the wheelchair developed by Rebsamen et al. (2006), which used P300 potentials. P300 can provide multiple commands, but the information transfer rate is low because dozens of rounds are needed to improve the signal-to-noise ratio. Another 2-D virtual wheelchair was designed by Huang et al. (2012). This wheelchair is controlled by event-related desynchronization/synchronization, but its steering behavior is limited to go, stop, right turn, and left turn. Eye blinking causes larger electrooculogram (EOG) potentials than EEG and background noise, so it is not difficult to detect. In addition, EOG can be recorded using a small number of electrodes. For these reasons, EOG is attracting much attention and seems suitable for controlling wheelchairs. Nakanishi and Mitsukura (2013) proposed double eye blinking and left/right eye winks to obtain go, stop, right turn, and left turn commands for wheelchair control. Kim et al. (2001) proposed an eye blinking detection method and applied it to the control of robots. In addition, the control of mouse cursors (Borghetti et al. 2007), robotic vehicles (Lv et al. 2008), and virtual keyboards (Usakli et al. 2010) using eye blinking and eye movement has been proposed. However, spontaneous eye blinking is inevitable.

Each type of BCI has its limitations. A hybrid BCI combines different approaches to utilize the advantages of multiple modalities (Pfurtscheller et al. 2010; Allison et al. 2010). In a hybrid BCI, either two or more types of mental activity can be combined, or mental activity can be combined with non-brain-based signals, such as the electromyogram (EMG) or EOG. A hybrid BCI combining motor imagery and P300 was proposed in Li et al. (2010). It was further used to control the direction and speed of a wheelchair in Long et al. (2012). However, a fast and accurate design for the stop command and the forward and backward commands has not been obtained.

The challenging issue for a brain-controlled wheelchair (BCW) is how to extract multiple commands from EEGs and achieve fast intention detection. To address this issue, this paper presents a hybrid EEG-EOG (HEE)-BCI that combines motor imagery, P300 potentials, and eye blinking. Specifically, the forward and backward commands are produced by motor imagery (continuously imagining left/right hand movement for 4 s in our BCW system), and the stop command is produced by eye blinking. Additionally, the commands for turning left and right are produced by motor imagery, and the acceleration and deceleration are determined by a joint feature composed of P300 and motor imagery, as in Long et al. (2012). Therefore, the users can navigate the wheelchair spontaneously by using visual attention, motor imagery, and eye blinking. The effectiveness of the proposed BCW was confirmed through online experiments with four users in an indoor environment.

System paradigm of the BCW

As shown in Fig. 1, the BCW mainly consists of four components: an EEG acquisition system, a host computer, a communication module, and a robotic wheelchair. The EEG acquisition system includes a commercial Neuroscan EEG system and an EEG cap (LT37), with the channels referenced to the right ear, digitized at a sampling rate of 250 Hz and bandpass filtered between 0.5 and 30 Hz. One Intel(R) Core(TM)2 Duo 2.0 GHz host computer (Fujitsu) was installed onboard to run the HEE-BCI algorithm. The EEG acquisition system is connected to the host computer via a USB interface and a parallel port. The online HEE-BCI algorithm detects P300 potentials, motor imagery, and eye blinking, and then translates the detection result into a control command that is sent to the USB interface of the host computer. The wheelchair is based on the SHOPRIDER P-424L wheelchair made by Shoprider Mobility Products, with a PG joystick controller that can receive control commands from the host computer via the communication module.

Fig. 1.

Mechatronic design of our BCW

EEG data acquisition

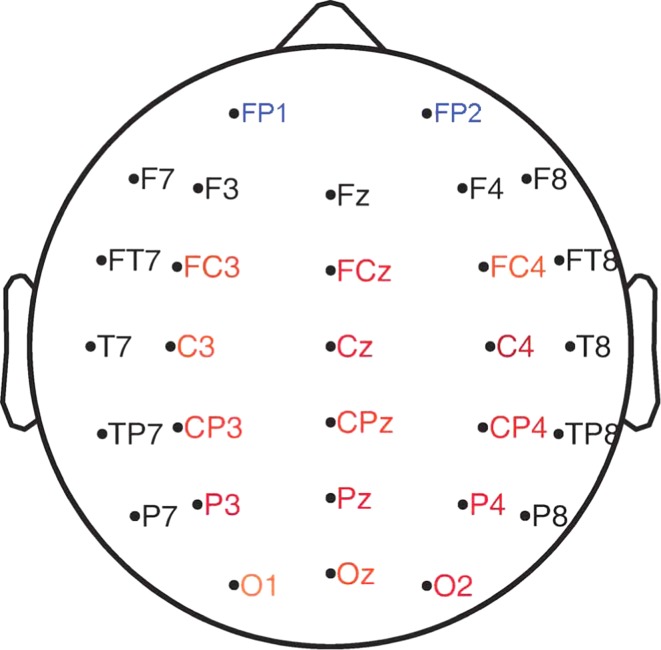

In the data acquisition system, a NuAmps device from NeuroScan is used to measure the scalp EEG signals. Each user wears an EEG cap, and signals are measured from the electrodes. The EEG signals are referenced to the right ear. As shown in Fig. 2, standard wet Ag/AgCl electrodes are employed, which are placed at electrode sites in the frontal, central, parietal, and occipital regions, including 30 channels. All impedances are kept below 5 kΩ. The EEG signals are amplified, sampled at a rate of 250 Hz, and band-pass filtered between 0.5 and 100 Hz. In EEG online processing for left- and right-hand motor imagery and P300 potentials detection, 15 channels are selected, including FC3, FCz, FC4, C3, Cz, C4, CP3, CPz, CP4, P3, Pz, P4, O1, Oz, and O2, whereas eye blinking detection uses two channels, FP1 and FP2.

Fig. 2.

Names and distribution of the EEG cap electrodes. The 15 red channels are used for motor imagery and P300 potentials detection, and the left two blue channels FP1 and FP2 are used for eye blinking detection

GUI

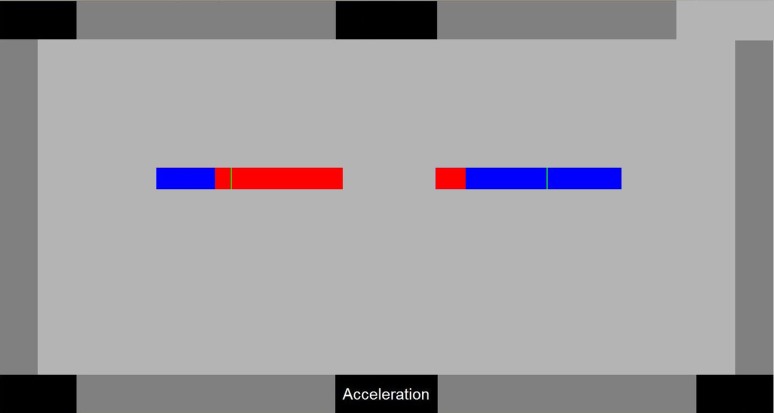

The GUI was developed using Microsoft C++, running at the user level of a Windows XP system with a real-time application interface (Matlab algorithm) for real-time capabilities. As shown in Fig. 3, the GUI for the BCW is displayed on the LCD screen of the host computer with two blue horizontal bars and six flashing buttons. The left and right bars display the feedback (SVM classification score) for the left- and right-hand motor imagery, respectively. The green line in each bar represents the threshold, which is adjustable to the specific subject. When in operation, the “Acceleration” button and the other five buttons are randomly intensified for 100 ms with white color, and the time interval between consecutive intensifications is 120 ms. The occurrence probabilities of the target and non-target buttons are 1/6 and 5/6, respectively, to evoke P300 potentials.

Fig. 3.

GUI for our BCW. Two bars are used to display the MI classification scores (red) as the feedback for the users. The green line in each bar represents the threshold, which is adjustable to the specific subject. The six buttons flash in a random sequence
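The flashing paradigm can be sketched as a simple schedule generator. This is only an illustration, assuming that the 120 ms interval is measured from flash onset to flash onset (which matches the 720 ms round reported in the calibration section) and that every button flashes once per round; the function names and the button indexing are hypothetical.

```python
import random

FLASH_MS = 100       # each button is intensified for 100 ms (from the text)
ONSET_GAP_MS = 120   # assumed onset-to-onset interval between flashes
N_BUTTONS = 6        # the "Acceleration" button plus five others


def flash_round(start_ms=0, rng=random):
    """Return [(onset_ms, offset_ms, button_index)] for one round.

    Every button flashes exactly once per round, in random order,
    so each button is the target with probability 1/6.
    """
    order = list(range(N_BUTTONS))
    rng.shuffle(order)
    return [
        (start_ms + k * ONSET_GAP_MS,
         start_ms + k * ONSET_GAP_MS + FLASH_MS,
         button)
        for k, button in enumerate(order)
    ]


def round_duration_ms():
    """Duration of one full round of six flashes."""
    return N_BUTTONS * ONSET_GAP_MS
```

Under these assumptions one round lasts 6 × 120 ms = 720 ms, with a 20 ms gap between the end of one flash and the start of the next.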

Communication module

The robotic wheelchair in our study is driven by commands from the host computer that are detected by the HEE-BCI. The original wheelchair was controlled by a joystick. We designed a communication module to replace the conventional joystick and achieve communication between the robotic wheelchair and the host computer. The hardware architecture of the communication module is mainly based on the STM32F103C6T6 microchip and the DAC7612, which can receive commands via a USB interface from the host computer and output two analog voltages as the joystick control signals.

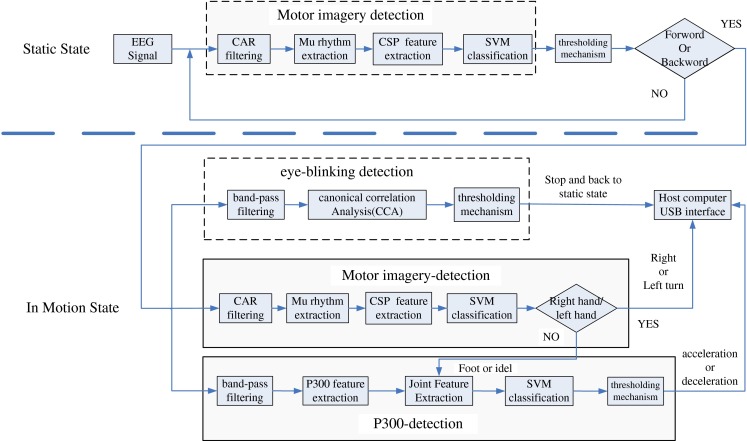

Hybrid EEG and EOG based BCI

The proposed HEE-BCI approach combines motor imagery, P300 potentials, and eye blinking to obtain seven control commands and a greater information transfer rate. As shown in Fig. 4, we consider two states during the wheelchair navigation. The wheelchair is initially in a static state. It can be started at a low speed of 0.1 m/s by a continuous 4 s of motor imagery from the user. Left- or right-hand motor imagery indicates forward or backward movement, respectively. Otherwise, the wheelchair will remain still. Once the wheelchair is started, it enters a motion state. In this state, the user can realize continuous navigation because of the asynchronous mechanism. The HEE-BCI algorithm detects five other activities, so the wheelchair can be navigated with the behaviors of turning left, turning right, acceleration, deceleration, and stopping. First, the motor imagery patterns are extracted to identify the control commands for turning left and right. If right- or left-hand motor imagery is detected, then it is interpreted as a right or left turn, respectively. Otherwise, acceleration or deceleration is extracted. These two commands are determined by a joint feature of motor imagery patterns (foot motor imagery and idle state) and P300 potentials. If the P300 potential of the “Acceleration” button together with the idle state of motor imagery is detected, then it is interpreted as an acceleration command, resulting in a high speed of 0.3 m/s; if foot motor imagery with an idle state for P300 is detected, then it is interpreted as a deceleration command, resulting in a low speed of 0.1 m/s. Whenever rapid eye blinking (triple) is detected, it is interpreted as a stop command, and the algorithm jumps back to detecting a continuous 4 s of motor imagery from the user. The seven steering behavior commands and relevant activities are shown in Table 1. The user is instructed to navigate the wheelchair by performing these activities.

Fig. 4.

Flow chart of the HEE-BCI algorithm

Table 1.

The control commands and their corresponding activities

| Control commands | Activities |
|---|---|
| Forward | Left hand MI for 4 s |
| Backward | Right hand MI for 4 s |
| Stop | Eye blinking |
| Turning left | Left hand MI |
| Turning right | Right hand MI |
| Acceleration | P300 and idle MI |
| Deceleration | Foot MI and idle P300 |
| No control command | Idle (remain in static state or motion as previous command) |
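The two-state logic described above and summarized in Table 1 can be sketched as a small state machine. This is a minimal illustration rather than the implementation used in the BCW: the event labels (`left_mi_4s`, `p300_accel`, and so on) are hypothetical names for the outputs of the detectors, and the sketch assumes that the stop check takes priority over all other detections in the motion state.

```python
from enum import Enum


class State(Enum):
    STATIC = "static"
    MOTION = "motion"


LOW_SPEED = 0.1   # m/s, from the text
HIGH_SPEED = 0.3  # m/s, from the text


def step(state, speed, event):
    """Advance the controller by one detected event.

    `event` is a hypothetical label produced by the detectors:
    'left_mi_4s', 'right_mi_4s', 'left_mi', 'right_mi',
    'p300_accel', 'foot_mi', 'triple_blink', or 'idle'.
    Returns (new_state, new_speed, command); command is None
    when no control command is issued.
    """
    if state is State.STATIC:
        # Only a sustained 4 s motor imagery can start the wheelchair.
        if event == "left_mi_4s":
            return State.MOTION, LOW_SPEED, "forward"
        if event == "right_mi_4s":
            return State.MOTION, LOW_SPEED, "backward"
        return State.STATIC, 0.0, None

    # Motion state: the stop command is checked first (assumed priority).
    if event == "triple_blink":
        return State.STATIC, 0.0, "stop"
    if event == "left_mi":
        return State.MOTION, speed, "turn_left"
    if event == "right_mi":
        return State.MOTION, speed, "turn_right"
    if event == "p300_accel":
        return State.MOTION, HIGH_SPEED, "accelerate"
    if event == "foot_mi":
        return State.MOTION, LOW_SPEED, "decelerate"
    return State.MOTION, speed, None  # idle: keep the previous motion
```

For example, `step(State.STATIC, 0.0, "left_mi_4s")` starts forward motion at 0.1 m/s, and a later `"triple_blink"` event returns the controller to the static state.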

Control of forward and backward movement based on MI

As introduced above, the control of forward and backward motion is achieved by producing 4 s of left- or right-hand motor imagery. First, the EEG signals are spatially filtered with a common average reference (CAR) and then bandpass filtered with a band of 8–32 Hz (Blanchard and Blankertz 2004). Second, we compute the spatial patterns through the method of one versus the rest common spatial patterns (OVR-CSP) proposed in Dornhege et al. (2004). Based on system calibration data sets collected before the online test, two CSP transformation matrices $W_1$ and $W_2$ for two classes are calculated by the well-known joint diagonalization method (Li and Guan 2008). We select the first three rows and the last three rows from each CSP transformation matrix $W_i$ for feature extraction. Third, a support vector machine (SVM) classifier is used for classification. The SVM scores are denoted as $f_1$ and $f_2$. Finally, we compare the SVM scores with the defined threshold values $\theta_1$ and $\theta_2$.

$$
\text{command} =
\begin{cases}
\text{forward}, & f_1 > \theta_1 \text{ for a duration of } T \\
\text{backward}, & f_2 > \theta_2 \text{ for a duration of } T \\
\text{idle}, & f_1 \le \theta_1 \text{ and } f_2 \le \theta_2
\end{cases}
\tag{1}
$$

In (1), if $f_1 > \theta_1$ for a duration of $T$ ($T = 4$ s in our system), then the algorithm determines that the user is imagining left-hand motor imagery for 4 s, and this case results in the forward command. Otherwise, if $f_2 > \theta_2$ for a duration of 4 s, then the algorithm determines that the user is imagining right-hand motor imagery for 4 s. This case results in the backward command. Otherwise, if $f_1 \le \theta_1$ and $f_2 \le \theta_2$, then the user is considered to be in an idle state. No command is given to the wheelchair, and the algorithm continues motor imagery detection. The variation of the SVM scores (red) is also displayed in the two bars in the GUI as feedback. $\theta_1$, $\theta_2$ and $T$ are adjustable according to different subjects.
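The sustained-threshold rule for the forward and backward commands can be sketched as follows. This is an illustrative sketch only: the function name, the argument names, and the 0.2 s score update period are assumptions (the text states a 200 ms update only for turn detection), and the score must stay above its threshold without interruption for the full hold time.

```python
def detect_start_command(f1, f2, theta1, theta2, period_s=0.2, hold_s=4.0):
    """Detect a sustained left- or right-hand motor imagery.

    f1, f2   : sequences of SVM scores for left- and right-hand MI,
               one pair per update (assumed every `period_s` seconds).
    theta1/2 : subject-specific thresholds for the two scores.
    Returns ('forward' | 'backward', index of the triggering update),
    or ('idle', None) if neither score stays above threshold for `hold_s`.
    """
    needed = int(round(hold_s / period_s))  # consecutive updates required
    run1 = run2 = 0
    for i, (a, b) in enumerate(zip(f1, f2)):
        # Reset the counter as soon as the score drops to or below threshold.
        run1 = run1 + 1 if a > theta1 else 0
        run2 = run2 + 1 if b > theta2 else 0
        if run1 >= needed:
            return "forward", i
        if run2 >= needed:
            return "backward", i
    return "idle", None
```

With these assumed settings, 20 consecutive above-threshold updates (4 s) are needed before a command is issued, so a single dip in the score restarts the count.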

Control of stopping by eye blinking

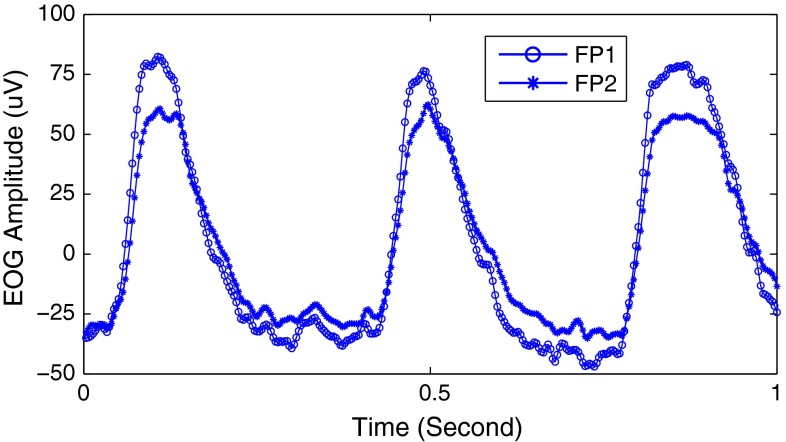

Stopping is controlled by eye blinking. To identify the user’s intention to stop, we adopted a rapid (triple) eye blinking method and used two channels, FP1 and FP2, in online processing. Eye blinking detection is performed every 1,000 ms. First, the EEG signals are filtered within the range of 0.1–15 Hz. Then, we extract the segment of EEG signals from the two channels (FP1 and FP2) in the 1 s period (250 data points) before the current time point, using a window length of 250 points and a sliding step of 25 points. In the calibration procedure, we capture five typical triple eye blinking templates with different phases and shapes from the offline blinking calibration data sets beforehand. Each template includes the two channels FP1 and FP2 with a window length of 250 points.

Second, we calculate the correlation coefficients between the extracted online EOG segment and each template signal using canonical correlation analysis (CCA) (Hardoon et al. 2004). Consider two multidimensional random variables $X$ and $Y$ and their linear combinations $x = X^{T} W_x$ and $y = Y^{T} W_y$, respectively. CCA finds the weight vectors $W_x$ and $W_y$ that maximize the correlation between $x$ and $y$ by solving the following problem:

$$
\max_{W_x, W_y} \rho(x, y) = \frac{E[x^{T} y]}{\sqrt{E[x^{T} x]\, E[y^{T} y]}} = \frac{E[W_x^{T} X Y^{T} W_y]}{\sqrt{E[W_x^{T} X X^{T} W_x]\, E[W_y^{T} Y Y^{T} W_y]}}
\tag{2}
$$

The maximum of $\rho$ with respect to $W_x$ and $W_y$ is the maximum canonical correlation. Projections onto $W_x$ and $W_y$, i.e., $x$ and $y$, are called canonical variates. The stop command $C_{\mathrm{stop}}$ is recognized as

$$
C_{\mathrm{stop}} = \max_{1 \le i \le 5} \rho_i
\tag{3}
$$

where $\rho_i$ ($i = 1, \dots, 5$) are the CCA coefficients obtained with the five template signals.

Finally, if one of the five scores is higher than the threshold $\theta_{\mathrm{stop}}$, a stop command is sent out. The threshold $\theta_{\mathrm{stop}}$ is a predefined positive constant, which can be chosen by receiver operating characteristics (ROC) curves obtained in the blinking calibration procedure.
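The template-matching step can be sketched with NumPy. This is a minimal illustration under stated assumptions, not the code used in the BCW: the largest canonical correlation is computed with a standard QR-plus-SVD formulation, the segment and the templates are assumed to be arrays of shape (250, 2) for FP1 and FP2 with full column rank, and the function names are hypothetical.

```python
import numpy as np


def max_canonical_corr(X, Y):
    """Largest canonical correlation between X and Y.

    X, Y: arrays of shape (n_samples, n_channels), e.g. (250, 2).
    Columns are mean-centred, orthonormalised by QR, and the singular
    values of Qx^T Qy are the canonical correlations.
    """
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    Qx, _ = np.linalg.qr(Xc)
    Qy, _ = np.linalg.qr(Yc)
    s = np.linalg.svd(Qx.T @ Qy, compute_uv=False)
    return float(np.clip(s[0], 0.0, 1.0))  # guard against rounding above 1


def is_stop(segment, templates, threshold):
    """Return (stop_detected, scores) for one online segment.

    A stop is flagged when the best template score exceeds `threshold`.
    """
    scores = [max_canonical_corr(segment, t) for t in templates]
    return max(scores) > threshold, scores
```

In use, `is_stop` would be called on each new 1 s window with the five calibration templates and the subject-specific threshold.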

Control of direction and speed

In our previous study Long et al. (2012), we have achieved wheelchair direction control by motor imagery. In this section, we adopt the same method. Once the BCW is started, the steering function of turning left or right is determined by left-/right-hand motor imagery, which is detected every 200 ms with the data window of 1 s. Each command implies a constant rotation degree of 7°.

The acceleration and deceleration controls are implemented by a joint feature combining two types of EEG patterns using the method proposed in Dornhege et al. (2004). The two types of EEG patterns involve motor imagery (foot motor imagery and an idle state of motor imagery) and P300 potentials. Specifically, foot motor imagery without P300 potentials is detected as deceleration, and the idle state of motor imagery with attention given to the P300 “Acceleration” button is detected as acceleration. So there are 4 classes in the motor imagery based BCI (left hand, right hand, foot motor imageries and idle state). We obtain 4 CSP transformation matrices $W_1$, $W_2$, $W_3$ and $W_4$. We select the first three rows and the last three rows from each CSP transformation matrix $W_i$ and obtain a transformation matrix with 24 rows for feature extraction (logarithm variances of the projections of the EEG signals based on the transformation matrix). More details can be found in Long et al. (2012).
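The log-variance feature extraction can be sketched as below. This is an illustration only, assuming that each CSP transformation matrix stores one spatial filter per row and that the input trial has already been re-referenced and band-pass filtered; the function and argument names are hypothetical.

```python
import numpy as np


def csp_features(trial, csp_matrices, n_rows=3):
    """Log-variance CSP features for one EEG trial.

    trial        : array (n_channels, n_samples), already band-pass filtered.
    csp_matrices : list of CSP matrices, each (n_channels, n_channels),
                   with one spatial filter per row (assumed layout).
    n_rows       : number of rows taken from the top and from the bottom
                   of each matrix (three in the text).
    """
    selected = [np.vstack([W[:n_rows], W[-n_rows:]]) for W in csp_matrices]
    W_sel = np.vstack(selected)      # 4 matrices x 6 rows = 24 rows
    projected = W_sel @ trial        # spatially filtered signals
    return np.log(np.var(projected, axis=1))
```

With four CSP matrices and 15 channels, the result is a 24-dimensional feature vector per trial, which would then be passed to the SVM classifier.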

Experiment and validation

To evaluate the performance of our BCW, four healthy subjects from South China University of Technology (four males aged between 24 and 32 with normal or corrected-to-normal vision) participated in two online experiments. All subjects had prior experience with wheelchair navigation during the system’s development. Before the online experiments, motor imagery data sets, P300 data sets and eye blinking data sets were collected for subject-specific system calibration. After system calibration, the users validated the proposed BCW in two online experiments.

System calibration

Motor imagery and P300 calibration data collection

The data collection for motor imagery and P300 calibration was carried out as follows. For the first 2.25 s, the screen remained blank. From 2.25 to 4 s, a cross appeared on the screen to attract the subject’s visual fixation. From 4 to 8 s, an arrow cue appeared. The subject was instructed to perform a mental task according to the cue: left/right arrow for left-/right-hand motor imagery, up arrow for foot motor imagery and idle P300, and down arrow for “Acceleration” button attention and idle motor imagery. When an arrow appeared on the screen, the six buttons began to flash alternately in random order. Each button was intensified for 100 ms, whereas the time interval between two consecutive button flashes was 120 ms. Thus, one round of button flashes took 720 ms, and there were four rounds (repeats) of button flashes in each trial. One data session containing three runs was collected for each user. In each run, 10 left arrows, 10 right arrows, 10 up arrows, and 10 down arrows were presented in random order. Thus, there were 120 trials in each session. More details of motor imagery and P300 calibration can be found in Long et al. (2012) and Li et al. (2010).
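The structure of a calibration session can be sketched as a cue-sequence generator. This is an illustrative sketch with hypothetical names; the counts and timings are taken from the description above, and the seeded random generator is only an assumption to make the sequence reproducible.

```python
import random

ARROWS = ("left", "right", "up", "down")  # the four arrow cues
ROUND_MS = 6 * 120                        # six flashes, 120 ms apart
ROUNDS_PER_TRIAL = 4                      # four repeats per trial
CUE_WINDOW_MS = (8 - 4) * 1000            # arrow cue shown from 4 to 8 s


def make_run(rng, per_arrow=10):
    """One run: `per_arrow` cues of each arrow type in random order."""
    cues = [arrow for arrow in ARROWS for _ in range(per_arrow)]
    rng.shuffle(cues)
    return cues


def make_session(seed=0, n_runs=3):
    """One session: `n_runs` independently shuffled runs."""
    rng = random.Random(seed)
    return [make_run(rng) for _ in range(n_runs)]
```

Each run contains 40 trials, so three runs give the 120 trials per session, and the four flash rounds (4 × 720 ms = 2.88 s) fit inside the 4 s cue window.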

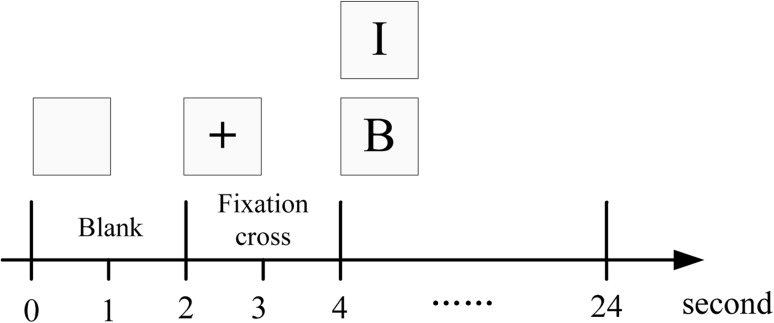

Blinking calibration

The aim of the blinking calibration for the stop command is to set the threshold according to the ROC curves and to build five templates using the offline blinking calibration data sets. Figure 5 illustrates the paradigm of a trial in calibration. At the beginning of each trial (0–2 s), the screen was blank. From 2 to 4 s, a cross was shown on the screen to capture the subject’s attention. From 4 to 24 s, a cue “B” or “I” appeared. “B” cued the subject to make a triple blink. “I” cued the subject to stay in the idle state, in which the subject was asked to rest for 20 s. The blinking and idle trials appeared alternately. The subject was asked to do 20 trials in a section. A complete blinking calibration procedure contains 5 sections. The blinking calibration also helps the user become familiar with the triple blinking operation. Hence, no extra training is needed for the subjects.

Fig. 5.

Paradigm of a trial in blinking calibration. At the beginning of each trial (0–2 s), the screen is blank. From 2 to 4 s, a cross is shown on the screen to capture the subject’s attention. From 4 to 24 s, a cue “B” or “I” appears. “B” cues the subject to make a triple blink. “I” cues the subject to stay in the idle state, in which the subject is asked to rest for 20 s. The blinking and idle trials appear alternately

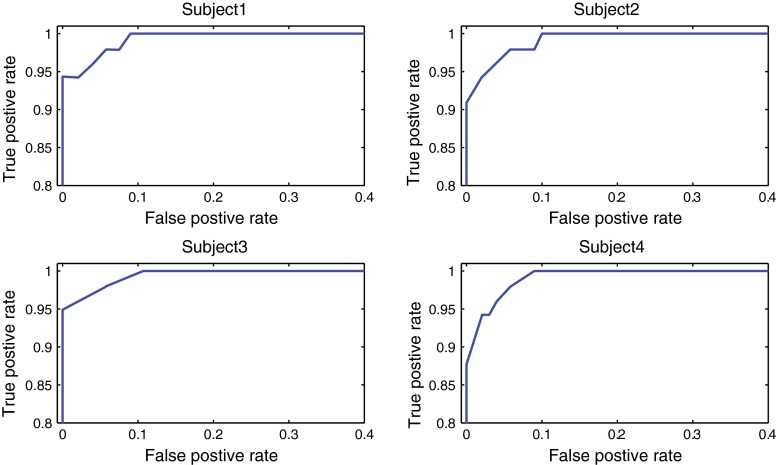

For a wheelchair control system, the true positive rate (TPR) and false positive rate (FPR) are appropriate measures to evaluate the performance of asynchronous control. In this paper, the correct recognition of a triple blink is counted as a true positive (TP), and the TPR indicates how many true positives the system is able to detect. Furthermore, misclassifying an idle state as a control state is a false positive (FP), and the FPR indicates how many false positives the wheelchair system will detect. The ROC curve depicts the relationship between the TPR and the FPR. We used these calibration data sets to calculate the ROC curve for various thresholds for the four subjects. The threshold was varied from 0.4 to 0.8 in steps of 0.01. Figure 6 shows the ROC curves for all four subjects.

Fig. 6.

ROC curves for all the four subjects in blink calibration experiment. The TPR is the rate of control events being detected during the control state, whereas the FPR is the rate of false control events detected during the idle state

Next, we used the blink detector calibration data sets collected as pilot data to define a threshold for the online experiments. The threshold should be chosen according to a criterion; e.g., the threshold nearest the top-left corner of the ROC curve achieves a satisfactory TPR while keeping the FPR below a given limit, and we refer to it as the optimal threshold. In an actual application, a second threshold is worth considering for wheelchair control. When navigating a wheelchair, we want to avoid false stop triggering in order to realize asynchronous control. The special threshold is a point on the ROC curve where TPR equals 1 and FPR equals 0.
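The threshold sweep can be sketched as follows. This is a minimal sketch under stated assumptions, not the procedure used in the study: each calibration trial is assumed to yield one CCA score, the optimal threshold is taken as the grid point closest to the top-left corner of the ROC plane, and the special threshold is taken as the lowest grid point at which the FPR is zero (which keeps the TPR as high as possible under that constraint). All function names are hypothetical.

```python
import numpy as np


def roc_points(blink_scores, idle_scores, thresholds):
    """TPR and FPR for each candidate threshold."""
    blink = np.asarray(blink_scores, dtype=float)
    idle = np.asarray(idle_scores, dtype=float)
    tpr = np.array([(blink > th).mean() for th in thresholds])
    fpr = np.array([(idle > th).mean() for th in thresholds])
    return tpr, fpr


def pick_thresholds(blink_scores, idle_scores):
    """Return (optimal, special) thresholds from calibration scores."""
    # Grid from 0.40 to 0.80 in steps of 0.01, as in the text.
    thresholds = np.round(np.linspace(0.40, 0.80, 41), 2)
    tpr, fpr = roc_points(blink_scores, idle_scores, thresholds)

    # Assumed criterion: smallest distance to the point (FPR=0, TPR=1).
    optimal = float(thresholds[np.argmin(fpr ** 2 + (1.0 - tpr) ** 2)])

    # Assumed criterion: lowest threshold with no false positives.
    zero_fpr = np.flatnonzero(fpr == 0)
    special = float(thresholds[zero_fpr[0]]) if zero_fpr.size else None
    return optimal, special
```

When blink and idle scores overlap, the special threshold is higher than the optimal one, trading a lower TPR (and hence slower stop detection) for the absence of false stops.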

Furthermore, we capture the typical triple eye blinking template in the off line EEG to build five templates from the two channels FP1 and FP2 with a window length of 1 s (250 points). One of the templates is shown in Fig. 7.

Fig. 7.

Template including triple eye blinking signals from FP1 and FP2, respectively

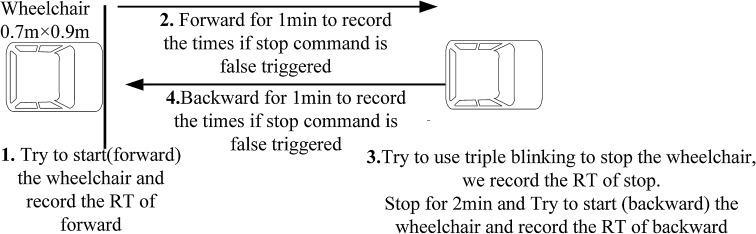

Evaluation for forward, stop and backward experiment

In this experiment, we defined the following parameters to test the forward, stop, and backward steering behaviors according to Rebsamen et al. (2010). In particular, we conducted the experiment twice, once with the optimal threshold and once with the special threshold. As shown in Fig. 8, the time sequence for a trial was carried out in four steps as follows:

(1) Step one: When the forward command was received, the user began trying to start the wheelchair at a constant speed of 0.1 m/s by imagining left hand movement for 4 s. We recorded the RT of forward.

(2) Step two: The user navigated the wheelchair forward for 1 min, and we recorded the number of falsely triggered stop commands.

(3) Step three: 1 min later, when the stop command was received, the user began trying to use triple blinking to stop the wheelchair (we recorded the RT of stop) and remained stopped for 2 min. After that, when the backward command was received, the user tried to start the wheelchair backward by imagining right hand movement for 4 s, and we recorded the RT of backward.

(4) Step four: The user navigated the wheelchair backward for 1 min, and we recorded the number of times the stop command was falsely triggered. 1 min later, when the stop command was received, the user began trying to use triple blinking to stop the wheelchair (we recorded the RT of stop). During the experiment, if the RT of forward/backward exceeded 5.5 s or the RT of stop exceeded 5 s, the trial was denoted as a task failure. Each subject carried out 40 trials in this experiment.

(5) Response Time: The RT is the interval from the time that the user initiates the control to the time the command is issued.

(6) False Activation Rate: The FA is the number of times per minute that a command is issued when the subject is not intending to activate the interface, that is, the rate of false triggering.

(7) Accuracy: Ratio of the task successes to the number of total task.

(8) Results: The results of this online evaluation experiment for forward, stop and backward are presented in Table 2. If the threshold is set to the special threshold, stopping with eye blinking is effective within 4.5 s on average, and the FA is 0/min. If the threshold is set to the optimal threshold, stopping with eye blinking is effective within 2.0 s on average, and the FA is 0.3/min. Because the forward and backward movements correspond to left- and right-hand motor imagery from the subject, the RTs of the forward and backward movements are similar: approximately 5 s on average. The FA rate of forward or backward is 0/min. Furthermore, the average accuracies of forward, backward, stop (special threshold) and stop (optimal threshold) are 91 %, 93 %, 89 % and 92 %, respectively. With the special threshold, no false stop was triggered, which contributes to the safety of the BCW.
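The three metrics defined above can be computed from per-trial logs as sketched below. This is only an illustration: the log format, the function name, and the choice to average the RT over successful trials only are assumptions, whereas the RT limits (5.5 s for forward/backward and 5 s for stop) come from the task-failure criterion in the text.

```python
from statistics import mean, stdev

# Task-failure limits from the text, in seconds.
RT_LIMIT_S = {"forward": 5.5, "backward": 5.5, "stop": 5.0}


def summarize(command, response_times_s, false_triggers, minutes_observed):
    """Summarise one command for one subject.

    response_times_s : RT of each trial in seconds (None if never issued).
    false_triggers   : number of unintended activations observed.
    minutes_observed : total observation time in minutes.
    Returns (mean RT, SD of RT, accuracy in %, FA per minute).
    """
    limit = RT_LIMIT_S[command]
    # A trial succeeds when the command is issued within the limit.
    ok = [rt for rt in response_times_s if rt is not None and rt <= limit]
    accuracy = 100.0 * len(ok) / len(response_times_s)
    fa_per_min = false_triggers / minutes_observed
    rt_sd = stdev(ok) if len(ok) > 1 else 0.0
    return mean(ok), rt_sd, accuracy, fa_per_min
```

For instance, three false stops observed over 10 min of navigation correspond to an FA of 0.3 per minute.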

Fig. 8.

Evaluation for forward, stop and backward. Task objective was to test the RT and FA of each steering behaviors

Table 2.

Metrics to evaluate response time and false activation rate for forward, backward and stop

| Commands | Subject | RT ± SD (s) | Accuracy (%) | FA ± SD (per minute) |
|---|---|---|---|---|
| Forward | Subject1 | 4.8 ± 0.5 | 90 | 0 ± 0 |
| | Subject2 | 5.2 ± 0.4 | 90 | 0 ± 0 |
| | Subject3 | 4.6 ± 0.5 | 95 | 0 ± 0 |
| | Subject4 | 5.4 ± 0.6 | 90 | 0 ± 0 |
| | Average | 5.0 ± 0.5 | 91 | 0 ± 0 |
| Backward | Subject1 | 4.9 ± 0.6 | 90 | 0 ± 0 |
| | Subject2 | 5.1 ± 0.5 | 90 | 0 ± 0 |
| | Subject3 | 4.7 ± 0.5 | 95 | 0 ± 0 |
| | Subject4 | 5.4 ± 0.8 | 95 | 0 ± 0 |
| | Average | 5.0 ± 0.6 | 93 | 0 ± 0 |
| Stop (special threshold) | Subject1 | 4.5 ± 0.6 | 90 | 0 ± 0 |
| | Subject2 | 4.7 ± 0.7 | 85 | 0 ± 0 |
| | Subject3 | 4.3 ± 0.6 | 90 | 0 ± 0 |
| | Subject4 | 4.6 ± 0.5 | 90 | 0 ± 0 |
| | Average | 4.5 ± 0.6 | 89 | 0 ± 0 |
| Stop (optimal threshold) | Subject1 | 1.9 ± 0.5 | 90 | 0.3 ± 0.1 |
| | Subject2 | 2.2 ± 0.4 | 90 | 0.2 ± 0.1 |
| | Subject3 | 1.8 ± 0.6 | 90 | 0.4 ± 0.1 |
| | Subject4 | 2.2 ± 0.5 | 95 | 0.2 ± 0.1 |
| | Average | 2.0 ± 0.5 | 92 | 0.3 ± 0.1 |

Overall evaluation of BCW navigation experiment

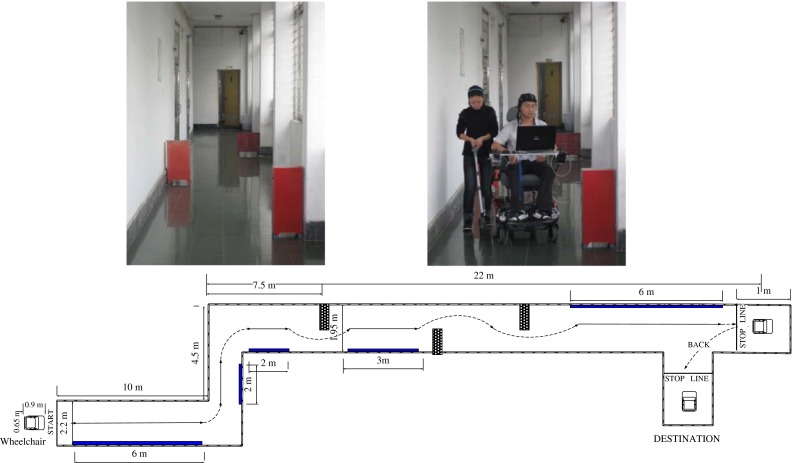

In this section, we designed an overall evaluation experiment for BCW navigation in an indoor setting. The objective of this experiment was to test the users’ ability to accomplish complex maneuverability tasks (moving forward, accelerating, decelerating, moving backward, avoiding obstacles, and navigating in indoor settings) by performing the relevant activities shown in Table 1. A trial of the experiment is shown in Fig. 9. Each subject performed 10 trials of this task. The metrics proposed in Iturrate et al. (2009) were followed to evaluate the performance of our BCW.

(1) Task success: accomplishment of the navigation task;

(2) Path length: distance in meters traveled to accomplish the task;

(3) Time: time taken in seconds to accomplish the task;

(4) Path length optimality ratio: ratio of the path length to the optimal path (the optimal path was approximately 39 m long);

(5) Time optimality ratio: ratio of the time taken to the optimal time. The optimal time was approximated assuming a low velocity of 0.1 m/s, a high velocity of 0.3 m/s, and a rotational velocity of 7°/command. The time consumed by the forward, backward, and stop commands was also considered, resulting in an optimal time of 282 s (5 + 19/0.3 + 20/0.1 + 4.5 + 5 + 4.5), where the guide lengths of the low-speed (0.1 m/s) and high-speed (0.3 m/s) segments are 20 and 19 m, respectively, each start takes 5 s, and each stop takes 4.5 s;

(6) Collisions: number of collisions. The user navigated the wheelchair from the start line to the destination, following the guide ideal trajectories.
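The optimal values used in metrics (4) and (5) can be checked with a few lines of arithmetic. The variable names are illustrative; the segment lengths, speeds, and start/stop times are those stated above, and the measured values used in the usage note are the averages reported in Table 3.

```python
START_S = 5.0       # time to issue a forward or backward command
STOP_S = 4.5        # time to issue a stop command
LOW_V = 0.1         # low speed, m/s
HIGH_V = 0.3        # high speed, m/s
LOW_LEN_M = 20.0    # guide length travelled at low speed
HIGH_LEN_M = 19.0   # guide length travelled at high speed

# Optimal path: the two guide segments together.
optimal_path_m = LOW_LEN_M + HIGH_LEN_M

# Optimal time: 5 + 19/0.3 + 20/0.1 + 4.5 + 5 + 4.5 seconds.
optimal_time_s = (START_S + HIGH_LEN_M / HIGH_V + LOW_LEN_M / LOW_V
                  + STOP_S + START_S + STOP_S)


def optimality_ratio(measured, optimal):
    """Ratio of a measured value to its optimal value (1.0 is ideal)."""
    return measured / optimal
```

The sum evaluates to about 282.3 s, which rounds to the 282 s optimal time. With the average measured time of 345 s and average path length of 43.9 m, the ratios are 345/282 ≈ 1.22 and 43.9/39 ≈ 1.13.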

Fig. 9.

Top view of the route used for the BCW in the overall evaluation experiment. The objective of this experiment was to navigate the wheelchair from the start line and stop at the destination line while avoiding three obstacles. During the navigation, the subject can obtain seven control commands (forward, backward, turning right, turning left, accelerating, decelerating, and stop) by performing the relevant activities shown in Table 1. The shaded objects represent static obstacles. In addition, we advised the user to navigate the BCW at high speed when moving in a straight line under safe road conditions, as marked with a black bar according to the guide ideal trajectories, whereas in the remainder of the experiment, we advised the user to use low speed

The results are summarized in Table 3. All four users completed the course successfully without collisions. The average time taken to accomplish the task was 345 ± 22 s. In particular, the path optimality ratio (1.13 on average) is satisfactory, and the time optimality ratio (1.22 on average) is notable, which we attribute to multi-command extraction and fast intention detection.

Table 3.

Metrics for evaluating our BCW

| | Subject 1 | Subject 2 | Subject 3 | Subject 4 | Average |
|---|---|---|---|---|---|
| Task success | yes | yes | yes | yes | yes |
| Path length ± SD (m) | 42.5 ± 0.7 | 43.1 ± 0.5 | 45.1 ± 0.5 | 44.8 ± 0.6 | 43.9 ± 0.6 |
| Path opt. ratio | 1.09 | 1.10 | 1.13 | 1.15 | 1.13 |
| Time ± SD (s) | 342 ± 23 | 351 ± 20 | 338 ± 24 | 346 ± 28 | 345 ± 22 |
| Time opt. ratio | 1.21 | 1.24 | 1.19 | 1.23 | 1.22 |
| Collisions | 0 | 0 | 0 | 0 | 0 |

Discussion

For our asynchronous BCW, we applied two thresholds (the special threshold and the optimal threshold) chosen from the ROC curve in the first online experiment. Notably, the average response times (RT) for stop by blinking are 2 s and 4.5 s with the optimal and special thresholds, respectively, and the corresponding false activation rates (FA) are 0.3 per minute and 0 per minute. We thus obtained a fast and accurate stop command by triple blinking. First, with the optimal threshold, we can achieve a satisfactory TPR while keeping the FPR below a given limit. Second, when navigating a wheelchair, we want to avoid false stop triggering in order to realize asynchronous control. With the special threshold, we can achieve a satisfactory TPR while keeping the FPR equal to 0. This means that false stop triggering is avoided, although correct detection of blinking may take longer. Therefore, two thresholds are available in our system.
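The two-threshold idea can be illustrated with a small ROC-based selection routine. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes the blink detector yields one scalar score per candidate event (higher meaning more blink-like), that labelled calibration events are available, and that a threshold is chosen by maximizing TPR subject to an FPR limit. The function names and the toy data are hypothetical.

```python
import numpy as np


def roc_points(scores, labels):
    """Return (thresholds, tpr, fpr) for the rule `score >= threshold`."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    thresholds = np.unique(scores)[::-1]  # candidate thresholds, descending
    detected = scores[None, :] >= thresholds[:, None]
    tpr = (detected & labels).sum(axis=1) / labels.sum()
    fpr = (detected & ~labels).sum(axis=1) / (~labels).sum()
    return thresholds, tpr, fpr


def pick_threshold(scores, labels, max_fpr):
    """Threshold with the highest TPR among those with FPR <= max_fpr.

    Ties in TPR resolve to the highest (most conservative) threshold.
    """
    thresholds, tpr, fpr = roc_points(scores, labels)
    allowed = fpr <= max_fpr
    if not allowed.any():
        raise ValueError("no threshold satisfies the FPR limit")
    best = int(np.argmax(np.where(allowed, tpr, -1.0)))
    return float(thresholds[best]), float(tpr[best]), float(fpr[best])


# Toy calibration data (hypothetical): 1 = intentional blink, 0 = other activity.
toy_scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
toy_labels = [1, 1, 1, 0, 1, 0, 0, 0]

# "Special" threshold: no false positives tolerated.
special = pick_threshold(toy_scores, toy_labels, max_fpr=0.0)
# "Optimal" threshold: FPR allowed up to an assumed limit of 0.25.
optimal = pick_threshold(toy_scores, toy_labels, max_fpr=0.25)
```

On the toy data the zero-FPR threshold is stricter and misses one blink, which mirrors the trade-off described above: false stops are avoided at the cost of slower or missed detections.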

As far as we know, only two existing studies have used a stop command in a BCW. Li et al. (2013) implemented a go/stop ('GS') command using P300 and SSVEP; the average response time (RT) and false activation rate (FA) for stop were 5.28 and 0.52, respectively. Rebsamen et al. (2010) implemented a stop command using either a fast P300 or the mu/beta rhythm. The RT and FA for stop were 5.9 ± 2.2 and 1.4 ± 0.8, respectively, with the fast P300, and 5.5 ± 3.0 and 0.0 ± 0.0, respectively, with the mu/beta rhythm. Table 4 compares these different stop methods.

Table 4.

Comparison of different methods of stop

Effective control of a BCI-based wheelchair is a big challenge. In our previous study, we achieved direction (turning left and right) and speed (acceleration and deceleration) control of a wheelchair using a hybrid BCI combining motor imagery and P300 potentials. In this paper, we proposed an asynchronous BCW with seven steering behaviors based on HEE-BCI. A summary of recent representative studies on BCWs is shown in Table 5. Compared with the existing systems, our BCW offers more degrees of freedom (seven commands) and fast intention detection.

Table 5.

Summary of relevant research works in the field of brain-actuated wheelchairs

| BCI approach | Asynch. control | Description | Subjects | Speed |
|---|---|---|---|---|
| Motor imagery (left and right hand), Huang et al. (2012) | Yes | Control of 2D simulated wheelchair (commands: go, stop, right, left) | 5 | |
| P300 visual paradigm combined with motor imagery, Rebsamen et al. (2010) | Yes | Control of real wheelchair (selection of high-level destination goals with P300 (e.g. kitchen) and stop detection with motor imagery) | 5 | Constant velocity is set to 0.5 m/s |
| P300 visual paradigm built in a virtual 3D reconstruction of environment, Iturrate et al. (2009) | No | Control of real wheelchair and simulated wheelchair in virtual environment (selection of local surrounding points) | 5 | Maximum translation velocity is set to 0.3 m/s |
| SSVEP visual paradigm with stimuli flickering at high frequency (37, 38, 39, 40 Hz), Rebsamen et al. (2010) | Yes | Control of real wheelchair from start zone to goal zone with SSVEP with four commands | 15 (13 healthy) | Constant velocity is set to 0.2 m/s |
| Our hybrid EEG-EOG BCI (combining P300 visual paradigm, motor imagery, and eye-blinking electrooculogram potential) | Yes | Control of real wheelchair with seven steering behavior commands: forward, backward, turning right, turning left, acceleration, deceleration, and stop | 4 | Low velocity is set to 0.1 m/s and high velocity is set to 0.3 m/s |

Conclusions

An asynchronous BCW with seven steering behaviors based on HEE-BCI combining motor imagery, P300 potentials, and eye blinking is proposed. In our BCW system, the forward and backward steering behaviors are determined by left-/right-hand motor imagery with a duration of 4 s, and the stop function is determined by eye blinking. Additionally, the direction is determined by left-/right-hand motor imagery, and acceleration/deceleration is determined by a joint feature combining motor imagery and P300 potentials. In this manner, seven commands are extracted effectively for continuous wheelchair navigation without any other assistance (e.g., an automatic navigation system). Evaluation experiments were conducted, and the results demonstrate not only the effectiveness of the HEE-BCI strategy but also the high robustness of the BCW system. The proposed BCW demonstrates its potential as a mobility aid for those suffering from severe neuromuscular disorders.

Acknowledgments

This research was supported by the National High-Tech R&D Program of China (863 Program) under Grant 2012AA011601, the National Natural Science Foundation of China under Grant 91120305, the University High Level Talent Program of Guangdong, China under Grant N9120140A, the Foundation for Distinguished Young Talents in Higher Education of Guangdong, China under Grant LYM11122, the Jiangmen R&D Program 2012(156), and the Science Foundation for Young Teachers of Wuyi University (No. 2013zk08).

References

- Allison B, Brunner C, Kaiser V, Müller-Putz G, Neuper C, Pfurtscheller G. Toward a hybrid brain-computer interface based on imagined movement and visual attention. J Neural Eng. 2010;7(2):1–9. doi: 10.1088/1741-2560/7/2/026007.

- Bin G, Gao X, Yan Z, Hong B, Gao S. An online multi-channel SSVEP-based brain-computer interface using a canonical correlation analysis method. J Neural Eng. 2009;6:1–6. doi: 10.1088/1741-2560/6/4/046002.

- Birbaumer N. Slow cortical potentials: plasticity, operant control, and behavioral effects. Neuroscientist. 1999;5(2):74–78. doi: 10.1177/107385849900500211.

- Blanchard G, Blankertz B. BCI competition 2003-data set IIa: spatial patterns of self-controlled brain rhythm modulations. IEEE Trans Biomed Eng. 2004;51(6):1062–1066. doi: 10.1109/TBME.2004.826691.

- Bromberg MB. Quality of life in amyotrophic lateral sclerosis. Phys Med Rehabil Clin N Am. 2008;19(3):591–605. doi: 10.1016/j.pmr.2008.02.005.

- Borghetti D, Bruni A, Fabbrini M, Murri L, Sartucci F. A low-cost interface for control of computer functions by means of eye movements. Comput Biol Med. 2007;37(12):1765–1770. doi: 10.1016/j.compbiomed.2007.05.003.

- Dornhege G, Blankertz B, Curio G, Müller KR. Boosting bit rates in noninvasive EEG single-trial classifications by feature combination and multiclass paradigms. IEEE Trans Biomed Eng. 2004;51(6):993–1002. doi: 10.1109/TBME.2004.827088.

- Hardoon D, Szedmak S, Shawe-Taylor J. Canonical correlation analysis: an overview with application to learning methods. Neural Comput. 2004;16(12):2639–2664. doi: 10.1162/0899766042321814.

- Huang D, Qian K, Fei D-Y, Jia W, Chen X, Bai O. Electroencephalography (EEG)-based brain-computer interface (BCI): a 2-D virtual wheelchair control based on event-related desynchronization/synchronization and state control. IEEE Trans Neural Syst Rehabil Eng. 2012;20(3):379–388. doi: 10.1109/TNSRE.2012.2190299.

- Iturrate I, Antelis JM, Kubler A, Minguez J. A noninvasive brain-actuated wheelchair based on a P300 neurophysiological protocol and automated navigation. IEEE Trans Robot. 2009;25(3):614–627. doi: 10.1109/TRO.2009.2020347.

- Kim Y, Doh N, Youm Y, Chung WK (2001) Development of human-mobile communication system using electrooculogram signals. In: Intelligent robots and systems, 2001. Proceedings of 2001 IEEE/RSJ international conference on, vol 4. IEEE, pp. 2160–2165

- Li Y, Long J, Yu T, Yu Z, Wang C, Zhang H, Guan C. An EEG-based BCI system for 2-D cursor control by combining Mu/Beta rhythm and P300 potential. IEEE Trans Biomed Eng. 2010;57(10):2495–2505. doi: 10.1109/TBME.2010.2055564.

- Li Y, Guan C. Joint feature re-extraction and classification using an iterative semi-supervised support vector machine algorithm. Mach Learn. 2008;71(1):33–53. doi: 10.1007/s10994-007-5039-1.

- Li Y, Pan J, Wang F, Yu Z. A hybrid BCI system combining P300 and SSVEP and its application to wheelchair control. IEEE Trans Biomed Eng. 2013;60(11):3156–3166. doi: 10.1109/TBME.2013.2270283.

- Long J, Li Y, Wang H, Yu T, Pan J, Li F. A hybrid brain computer interface to control the direction and speed of a simulated or real wheelchair. IEEE Trans Neural Syst Rehabil Eng. 2012;20(4):720–729. doi: 10.1109/TNSRE.2012.2197221.

- Lv Z, Wu X, Li M, Zhang C (2008) Implementation of the EOG-based human computer interface system. In: Bioinformatics and biomedical engineering, 2008. ICBBE 2008. The 2nd international conference on. IEEE, pp. 2188–2191

- Millán J, Galán F, Vanhooydonck D, Lew E, Philips J, Nuttin M (2009) Asynchronous non-invasive brain-actuated control of an intelligent wheelchair. In: Engineering in medicine and biology society, 2009. Annual international conference of the IEEE, pp. 3361–3364

- Nakanishi M, Mitsukura Y (2013) Wheelchair control system by using electrooculogram signal processing. In: Frontiers of Computer Vision, (FCV), IEEE joint workshop on 2013 19th Korea-Japan, pp. 137–142

- Panicker R, Puthusserypady S, Sun Y. An asynchronous P300 BCI with SSVEP-based control state detection. IEEE Trans Biomed Eng. 2011;99:1781–1788. doi: 10.1109/TBME.2011.2116018.

- Pfurtscheller G, Neuper C, Guger C, Harkam W, Ramoser H, Schlogl A, Obermaier B, Pregenzer M. Current trends in Graz brain-computer interface (BCI) research. IEEE Trans Neural Syst Rehabil Eng. 2000;8(2):216–219. doi: 10.1109/86.847821.

- Pfurtscheller G, Solis-Escalante T, Ortner R, Linortner P, Muller-Putz GR. Self-paced operation of an SSVEP-based orthosis with and without an imagery-based brain switch: a feasibility study towards a hybrid BCI. IEEE Trans Neural Syst Rehabil Eng. 2010;18(4):409–414. doi: 10.1109/TNSRE.2010.2040837.

- Rebsamen B, Guan C, Zhang H, Wang C, Teo C, Ang MH, Burdet E. A brain controlled wheelchair to navigate in familiar environments. IEEE Trans Neural Syst Rehabil Eng. 2010;18(6):590–598. doi: 10.1109/TNSRE.2010.2049862.

- Rebsamen B, Burdet E, Guan C, Zhang H, Teo C, Zeng Q, Ang M, Laugier C (2006) A brain-controlled wheelchair based on P300 and path guidance. In: Biomedical robotics and biomechatronics, 2006. BioRob 2006. The first IEEE/RAS-EMBS international conference on. IEEE, pp. 1101–1106

- Sellers E, Kubler A, Donchin E. Brain-computer interface research at the University of South Florida cognitive psychophysiology laboratory: the P300 speller. IEEE Trans Neural Syst Rehabil Eng. 2006;14(2):221–224. doi: 10.1109/TNSRE.2006.875580.

- Usakli AB, Gurkan S, Aloise F, Vecchiato G, Babiloni F (2010) On the use of electrooculogram for efficient human computer interfaces. Computational intelligence and neuroscience Vol. 2010, p. 1

- Williams MT, Donnelly JP, Holmlund T, Battaglia M. ALS: Family caregiver needs and quality of life. Amyotroph Lateral Scler. 2008;9(5):279–286. doi: 10.1080/17482960801934148.