Abstract

Microscopic diagnosis of malaria is a well-established and inexpensive technique that has the potential to provide accurate diagnosis of malaria infection. However, it requires both training and experience. Although it is considered the gold standard in research settings, the sensitivity and specificity of routine microscopy for clinical care in the primary care setting have been reported to be unacceptably low. We established a monthly external quality assurance program to monitor the performance of clinical microscopy in 17 rural health centers in western Kenya. The average sensitivity over the 12-month period was 96% and the average specificity was 88%. We identified specific contextual factors that contributed to inadequate performance. Maintaining high-quality malaria diagnosis in high-volume, resource-constrained health facilities is possible.

Background

Since Laveran's discovery in 1880 of the blood-stage infection of Plasmodium1,2 and the development of differential staining techniques by Romanowsky and others in the early 20th century, microscopy has been the gold standard for diagnosing blood-stage malaria infection. Under ideal conditions, microscopic examination of stained blood smears can detect infections of < 20 parasites per microliter of blood.3,4

High-quality diagnosis requires microscopes with high-powered lenses in good repair, trained personnel, and good laboratory practices to prepare clean, uncontaminated smears (e.g., Mbakilwa and others5). Clinical microscopy in health facilities in malaria-endemic countries often lacks these critical elements. As a result, low sensitivity and specificity of routine clinical microscopy have been documented in several studies.

Given the importance of parasitological confirmation of malaria infection and the central role of microscopy in the peripheral health system, we implemented an external quality assurance (EQA) program in 17 rural government health facilities in Kenya to monitor and improve diagnosis of malaria by microscopy. We measured the accuracy of routine clinical microscopy during a 1-year period and the relationship between accuracy, working conditions, and workload.

Methods

Study area.

The study was conducted in seven districts in five counties in the Western and Rift Valley Regions of Kenya. Bungoma and Busia counties have high malaria transmission. Uasin Gishu, Elgeyo Marakwet, and Baringo counties have low transmission and are prone to epidemics when climatic conditions are suitable.

Selection of health facilities.

Health centers within the five counties were eligible for the study if they were government owned and had capacity to diagnose malaria by microscopy at the time of the study. Eighteen health centers from seven districts were selected by simple random sampling from a list of 61 eligible facilities. One facility was later dropped 4 months into the study as a result of noncompliance with study protocol and absence of a laboratory technologist. Seventeen facilities completed the study.

Training.

All of the participating facilities were staffed by laboratory technologists with a diploma in laboratory sciences, with the exception of one laboratory technologist with a degree. One laboratory technologist from each facility attended a 2-week Malaria Microscopy Training Course at the Malaria Diagnostic Center, Kisumu, Kenya during June and July 2012. This training has been shown to improve sensitivity and specificity of microscopic diagnosis of malaria to > 85% and 90%, respectively.6 The two expert study microscopists also attended the 2-week Malaria Microscopy Training Course. They both hold degrees in medical laboratory science. They have been working in research settings for a minimum of 5 years and focused exclusively on malaria microscopy for the last 2 years.

External quality assurance.

The external quality assurance program was implemented as part of a larger study investigating the impact of incentives on facility performance. A full description of the study activities can be found in Menya and others.7

The EQA program consisted of four components: 1) re-reading of positive and negative smears collected from the facility for calculation of sensitivity and specificity, 2) feedback on the quality of slide preparation along with suggested corrective measures, 3) preparation of monthly performance reports for each facility indicating the sensitivity and specificity of their laboratory malaria diagnosis and 4) on-the-job mentorship of laboratory staff including rereading of all discrepant slides.

The facilities were supplied with only slides and slide boxes; the facilities continued to purchase their own laboratory reagents as they had before the program. Starting in October 2012, all malaria smears prepared and read in each facility were archived by the staff and collected each month by the field team. Each month, patients were selected from the laboratory register by systematic random sampling until at least 15 malaria-positive and 15 malaria-negative patients had been identified. Because of the importance of reducing false negatives in the clinical setting, we chose to read proportionately more negatives than the standard rechecking scheme (i.e., all positive slides and 5–10% of negative slides). Fifteen positive and 15 negative slides give a reasonable estimate of sensitivity and specificity each month. In facilities with very few positive patients, all of the positive patients were selected. Slides corresponding to the first 15 positive and first 15 negative patients from the sample were located in the archived patient smears and re-read by the expert study microscopist, who was blinded to the facility results. The blood slide results were reported by the expert microscopist as either positive or negative. Smears that were unreadable were not included in the re-checking scheme. Specificity and sensitivity were calculated by comparing the results recorded in the laboratory register with those of the expert microscopist. Discrepant slides were re-read by a second expert microscopist and taken back to the facility to be reviewed until both the expert microscopist and the facility laboratory technologist came to an agreement. The expert microscopist's results were never overturned during these reviews.
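The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's actual analysis code: the names `Accuracy` and `score_rechecked_slides` and the `"pos"`/`"neg"` labels are assumptions introduced here, and the expert reading is treated as the reference standard, as in the text. The usage example rebuilds the Angurai row of Table 1 from its reported counts.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass
class Accuracy:
    """Counts of facility readings scored against the expert reading."""
    true_pos: int
    false_neg: int
    true_neg: int
    false_pos: int

    @property
    def sensitivity(self) -> Optional[float]:
        # Share of expert-positive slides that the facility also called positive.
        positives = self.true_pos + self.false_neg
        return self.true_pos / positives if positives else None

    @property
    def specificity(self) -> Optional[float]:
        # Share of expert-negative slides that the facility also called negative.
        negatives = self.true_neg + self.false_pos
        return self.true_neg / negatives if negatives else None


def score_rechecked_slides(pairs: Iterable[Tuple[str, str]]) -> Accuracy:
    """Tally (facility_result, expert_result) pairs, each "pos" or "neg"."""
    tp = fn = tn = fp = 0
    for facility, expert in pairs:
        if expert == "pos":
            if facility == "pos":
                tp += 1
            else:
                fn += 1
        else:
            if facility == "neg":
                tn += 1
            else:
                fp += 1
    return Accuracy(tp, fn, tn, fp)


# Usage: the Angurai counts reported in Table 1.
angurai = (
    [("pos", "pos")] * 103    # true positives
    + [("neg", "pos")] * 22   # false negatives
    + [("neg", "neg")] * 165  # true negatives
    + [("pos", "neg")] * 5    # false positives
)
acc = score_rechecked_slides(angurai)
print(round(acc.sensitivity * 100), round(acc.specificity * 100))
```

Returning `None` when a month has no expert-positive (or expert-negative) slides mirrors the handling of low-transmission months, where sensitivity could not be calculated.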

Data analysis.

Mean sensitivity and specificity were weighted by the number of positive or negative slides read each month for each facility. In some months in the low transmission facilities, no positive slides were found and sensitivity could not be calculated; these months were treated as missing and excluded from the analysis. Linear regression was used to explore the relationship between sensitivity and specificity and other factors. Analytic weights (number of positive or negative slides read) were used and standard errors were adjusted for repeated measures within a facility.
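The weighting scheme can be illustrated with a short Python sketch. The function name `weighted_mean` and the three facility-months in the usage example are hypothetical and chosen only to show the arithmetic; they are not study data. Note that weighting each month's sensitivity by its number of positive slides is equivalent to pooling true positives over all positive slides.

```python
from typing import Iterable, Optional, Tuple


def weighted_mean(records: Iterable[Tuple[Optional[float], int]]) -> Optional[float]:
    """Weighted mean of (value, weight) pairs.

    A value of None (for example, sensitivity in a month with no positive
    slides) is treated as missing and skipped, as are zero weights.
    """
    numerator = 0.0
    denominator = 0
    for value, weight in records:
        if value is None or weight == 0:
            continue
        numerator += value * weight
        denominator += weight
    return numerator / denominator if denominator else None


# Hypothetical facility-months: (monthly sensitivity, positive slides re-read).
months = [
    (1.0, 15),   # 15 of 15 positives detected
    (0.8, 10),   # 8 of 10 positives detected
    (None, 0),   # no positive slides found: excluded as missing
]
print(weighted_mean(months))
```

In this illustration the weighted mean is 23/25 = 0.92, whereas the unweighted mean of the two non-missing months would be 0.90; the weighted figure gives more influence to months in which more slides were re-read.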

Ethical review.

Permission to conduct the study was granted by the Director of the Division of Malaria Control, the Provincial Director of Public Health and Sanitation (Western and Rift Valley), the District Medical Officer of Health from each district, and the Officer In-charge of each participating health facility. Ethical approval was granted by the Duke University Institutional Review Board (Pro00035154) and the Moi University Institutional Research and Ethics Committee (000804).

Results

Results of training.

Standardized slide reading tests were administered before and after the training course to evaluate performance and track improvement. The mean sensitivity and specificity of participants before the training were 50.2% and 72.1%, respectively. Following the training, sensitivity and specificity rose to 77.7% and 91.7%, respectively. Fifteen of the 18 trainees exceeded 90% specificity in the post-test but only 8 of the trainees exceeded 80% sensitivity.

Overall performance.

Seventeen facilities completed 12 months of external quality assurance (Table 1). The expert microscopist reviewed an average of 26 slides from each facility each month; 25.5% of slides were positive. The overall sensitivity and specificity for all 17 facilities over the 12-month program were 96% and 88%, respectively (range of sensitivity 82–100%, range of specificity 62–100%).

Table 1.

Sensitivity and specificity by facility

| Facility | Average slides read per month by laboratory | Total slides rechecked | True positives | True negatives | False positives | False negatives | Sensitivity (%) | Specificity (%) | Comments |
|---|---|---|---|---|---|---|---|---|---|
| High transmission | | | | | | | | | |
| Angurai | 405 | 295 | 103 | 165 | 5 | 22 | 82 | 97 | |
| Budalangi | 589 | 288 | 137 | 143 | 6 | 2 | 99 | 96 | |
| Bumala A | 520 | 296 | 101 | 165 | 20 | 10 | 91 | 89 | Laboratory tech. transferred |
| Bumala B | 778 | 354 | 166 | 183 | 2 | 3 | 98 | 99 | |
| Malaba | 961 | 336 | 118 | 172 | 35 | 11 | 91 | 83 | Temporary laboratory tech. (2 months) |
| Moding | 279 | 260 | 106 | 138 | 15 | 1 | 99 | 90 | |
| Mukhobola | 559 | 302 | 118 | 167 | 12 | 5 | 96 | 93 | Laboratory tech. transferred |
| Milo | 288 | 316 | 115 | 162 | 37 | 2 | 98 | 81 | Temporary laboratory tech. (2 months) |
| Sinoko | 245 | 188 | 23 | 103 | 62 | 0 | 100 | 62 | Broken microscope (5 months) |
| Low transmission | | | | | | | | | |
| Kapteren | 77 | 141 | 4 | 110 | 27 | 0 | 100 | 80 | Broken microscope (5 months) |
| Kiptagich | 30 | 242 | 2 | 240 | 0 | 0 | 100 | 100 | |
| Moi's Bridge | 1084 | 198 | 9 | 139 | 49 | 1 | 90 | 74 | |
| Msekekwa | 82 | 291 | 3 | 275 | 13 | 0 | 100 | 95 | Laboratory tech. transferred |
| Railways | 851 | 260 | 42 | 172 | 46 | 0 | 100 | 79 | |
| Sosiani | 248 | 244 | 10 | 187 | 46 | 1 | 91 | 80 | |
| Soy | 423 | 272 | 30 | 213 | 26 | 3 | 91 | 89 | |
| Tenges | 41 | 231 | 3 | 227 | 1 | 0 | 100 | 100 | |
| Average | | | | | | | 96 | 88 | |
| Range | | | | | | | 18 | 38 | |

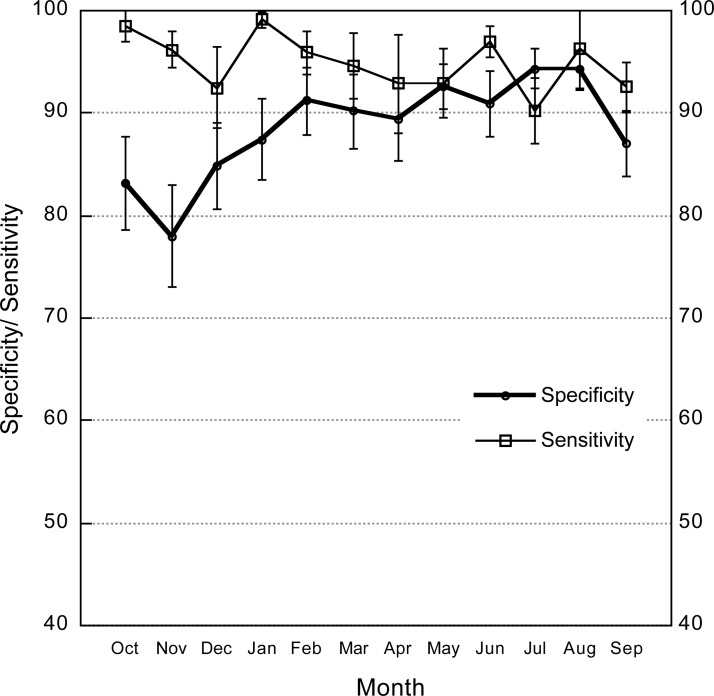

During the first 2 months of the program, slides from 10 of the 17 facilities had debris or other quality problems that were reported to the facilities. These issues may have contributed to lower specificity at the beginning of the program. The study team showed the laboratory technologist how to filter the stain and avoid getting the smear too hot during drying. By the third month, the expert microscopists had no further concerns about slide quality and smear preparation.

Sensitivity remained consistently high over the study period (Figure 1). Mean sensitivity dropped below 90% in only 2 months—December and July. Mean specificity increased slightly over the study period (P = 0.056).

Figure 1.

Sensitivity and specificity of study facilities by month (October 2012–December 2013). The 95% confidence intervals are shown.

Effect of laboratory conditions.

We calculated the sensitivity and specificity for facilities in high versus low transmission zones, facilities with suboptimal equipment, and facilities where the laboratory technologist changed during the study (Table 2). We saw no significant difference in quality of diagnosis between high and low transmission areas. The specificity for facilities and months where the microscope was in bad repair (two facilities, 5 months each) was significantly lower than the average, but there was no measurable effect on sensitivity. Three facilities had a permanent change in personnel and two facilities hired temporary staff to cover absences of their normal technologist. In facilities with a change in laboratory personnel, either temporary or permanent (five facilities, total of 16 months), specificity was not significantly different, but sensitivity was slightly lower. Laboratory technologists who came on in a temporary capacity to cover during the absence of a regular technologist had very poor performance (74% sensitivity and 58.5% specificity). Laboratory technologists who were transferred within the government system performed well, and after a few months in the program no difference could be measured between these individuals and those trained at the Malaria Diagnostic Center.

Table 2.

Sensitivity and specificity by facility characteristics

| Facility characteristics (n) | Sensitivity, % (95% CI) | Specificity, % (95% CI) |
|---|---|---|
| High transmission (9) | 94.7 (92.9–96.6) | 88.1 (85.1–91.2) |
| Low transmission (8) | 96.1 (91.2–100) | 88.3 (85.2–91.4) |
| Good equipment (15) | 94.8 (93.1–96.5) | 90.0 (88.0–92.0) |
| Poor equipment (2) | 100 (no variation) | 64.4 (54.4–74.3) |
| Laboratory tech. change (5) | 86.9 (78.5–95.3) | 84.8 (74.7–94.8) |
| No laboratory tech. change (12) | 95.8 (94.2–97.4) | 88.6 (86.4–90.8) |
| Slides read per month (number of facility-months*) | | |
| Less than 400 (49) | 95.3 (92.4–98.2) | 89.9 (86.8–93.1) |
| 400–800 (45) | 93.2 (90.1–96.3) | 89.4 (86.5–92.4) |
| More than 800 (39) | 96.3 (93.5–99.1) | 82.3 (77.4–87.2) |

*Slide volume was unknown for seven facility-months.

CI = confidence interval.

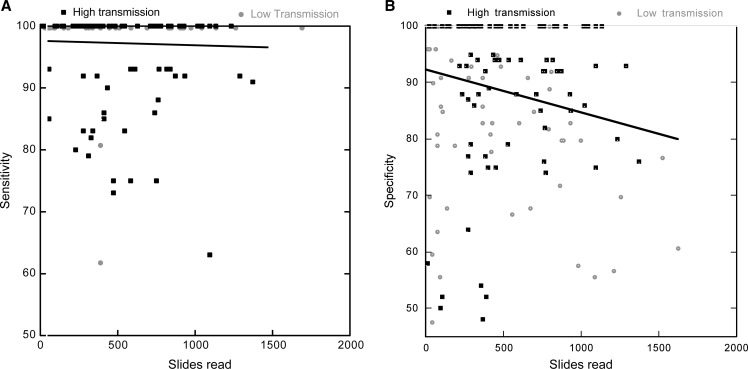

The number of slides read by the laboratory each month had a significant impact on specificity (P = 0.001), but not on sensitivity (P = 0.659, Figure 2A and B). Specificity declined by 1% for every additional 100 slides read in a month.
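The reported slope can be written as a simple linear relation. The following is a sketch rather than the fitted model itself: the intercept $\beta_0$ is not reported in the text and is left symbolic, $n$ denotes the number of slides read by a facility in a month, and "1%" is read here as one percentage point, which is an interpretation.

```latex
\[
\widehat{\mathrm{Spec}}(n) = \beta_0 + \beta_1 n,
\qquad
\beta_1 \approx -\frac{1}{100}\ \text{percentage points per slide}
\]
\[
\widehat{\mathrm{Spec}}(800) - \widehat{\mathrm{Spec}}(400)
= \beta_1 \,(800 - 400) \approx -4\ \text{percentage points}
\]
```

Under this reading, a facility reading 800 slides in a month would be expected to have specificity roughly 4 percentage points lower than one reading 400 slides, all else being equal.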

Figure 2.

(A) Sensitivity as a function of total number of slides read in the facility for each month and facility. (B) Specificity as a function of total number of slides read for each month and facility. Linear regression results are shown.

Discussion

Accurate diagnosis is critical in the management of malaria. Our results show that a simple performance monitoring program can reinforce training and help to maintain high-quality diagnosis of malaria by microscopy.

Our results differ from those of other studies, which estimated the accuracy of routine microscopy in health facilities to be unacceptably low8–11 and highly variable between facilities.12 For example, in Tanzania and Kenya, sensitivity of < 70% and specificity of 47–61% for clinical microscopy compared with expert readings have been observed.13,14 Similar to our results, Kiggundu and others15 reported sensitivity and specificity > 95% following a brief refresher training program. However, their evaluation only included slides prepared 1 month after training. Our study shows that high-quality diagnosis can be maintained for at least a year after training.

Specific conditions could be linked to months or facilities with suboptimal performance. Workload was a key determinant of accurate diagnosis. Facilities that read more than 800 slides in a month had significantly lower specificity. Because high-volume facilities were primarily located in high transmission zones, this result may reflect a tendency to overcall slides as positive, influenced by the expectation of high slide positivity rates. Facilities with inadequate microscopes and temporary laboratory technologists also contributed to poor specificity. Sensitivity declined only slightly in December when temporary technologists were hired to cover absences of government technologists and again in July when health facility fees were removed by a government order, resulting in a spike in patient volume.

The program described here employs routine patient specimens prepared in each laboratory for diagnostic purposes rather than a standardized set of specimens prepared externally. This approach allows evaluation of performance under actual working conditions, including stain preparation, patient volume, and equipment condition. Slide quality has been shown to be a key determinant in accurate reading5; therefore, standardized specimens may lead to artificially high performance results if smears are of higher quality than routine smears, or if personnel allocate more time to reading these smears than they normally would for patient smears. However, our approach does not permit comparison of sensitivity and specificity between facilities because of the inherent differences in working conditions and smear preparation.

On-the-job supervision and slide rechecking were important components of the program and continually reinforced the training. Two other programs have reported positive effects of on-site mentorship and slide rechecking.16,17 In our study, specificity improved over the first few months, probably reflecting the influence of the regular feedback and review of discrepant slides. Some participants were initially reluctant to review slides with the study microscopist and had to be reassured that there was no penalty for discrepant slides, only the opportunity for feedback and improvement. Slide review emphasized consensus rather than correction, allowed questions to be raised, and generated important discussion. These sessions often led to self-correction in the laboratory.

The training at the Malaria Diagnostic Center of Excellence dramatically improved performance, but there are three pieces of information internal to our study that support an effect of the EQA program over and above the training. First, results after 1 year of EQA were higher than post-training performance. Second, only one laboratory technician from each facility attended the training, and yet both trained and untrained technicians contributed to excellent performance. Finally, when new laboratory technicians replaced trained technicians (three facilities), their performance was initially lower, but after 1 or 2 months in the program, was equal to that of trained technicians.

Our study was conducted in rural, peripheral facilities with high patient volume and very basic infrastructure; therefore, we believe that this approach could be applied in other similar contexts. We did not provide any special equipment or reagents. Nonetheless, health care infrastructure in the malaria-endemic world varies widely and this type of program may not be practical in places with no electricity, no laboratory technologists, or other major infrastructure limitations. However, given the long delay in provision and extremely erratic supply of rapid diagnostic tests (RDTs) in health facilities in Kenya, this is a simple approach that could significantly improve quality of diagnosis and clinician confidence in diagnosis in the absence of RDTs. Supporting excellence in microscopy could also have spillover benefits for other microscopically diagnosed infections such as tuberculosis, helminth infections, and urinary tract infections, among others.

Several clear recommendations emerge from these results. First, the sourcing of temporary staff to stand in for regular technologists should be more stringently controlled. Only trained, credentialed personnel should be allowed to provide clinical laboratory services. Second, functioning equipment is essential. Using substandard equipment can severely compromise patient care. Finally, on-the-job supervision and establishment of performance metrics with regular feedback can ensure high quality diagnosis. The results of performance evaluation should also be made available to clinicians to increase their confidence in the results of malaria diagnosis in their facility and ultimately improve adherence to the results of laboratory testing.

The World Health Organization (WHO) now recommends that all suspected malaria cases be confirmed by parasitological diagnosis before treatment whenever possible.18 Point-of-care immunochromatographic diagnostic tests (RDTs) have been proposed as an alternative to microscopy to circumvent problems with poor performance of microscopic diagnosis. These tests suffer from inadequate supply chain management and erratic availability in many government health facilities. Transport and storage of RDTs require controlled temperatures not exceeding 40°C. In contrast, stains for malaria microscopy are readily available and affordable in the nearest commercial center and are far less sensitive to temperature changes.

Our study shows that maintaining high-quality malaria diagnosis in high-volume, resource-constrained health facilities is possible.

Footnotes

Authors' addresses: Rebecca Wafula, Edna Sang, and Olympia Cheruiyot, AMPATH, Research, Eldoret, Kenya, E-mails: rebeccahwafulha@gmail.com, edna_sang@yahoo.com, and olydip84@yahoo.com. Angeline Aboto, Ministry of Health, Uasin Gishu County, Eldoret, Kenya, E-mail: narooan@yahoo.com. Diana Menya, Moi University, School of Public Health, Eldoret, Kenya, E-mail: dianamenya@gmail.com. Wendy Prudhomme O'Meara, School of Public Health, Moi University College of Health Sciences, Eldoret, Kenya, Duke University School of Medicine, Durham, NC, Duke Global Health Institute, Durham, NC, E-mail: wendypomeara@gmail.com.

References

1. Laveran A. Deuxieme note relative a un nouveau parasite trouve dans le sang des malades atteints de la fievre paludisme. Bulletin de l'Academie medicale 2ieme serie. 1880:1346–1347.
2. Bruce-Chwatt LJ. Alphonse Laveran's discovery 100 years ago and today's global fight against malaria. J R Soc Med. 1981;74:531–536. doi: 10.1177/014107688107400715.
3. Bejon P, Andrews L, Hunt-Cooke A, Sanderson F, Gilbert S, Hill A. Thick blood film examination for Plasmodium falciparum malaria has reduced sensitivity and underestimates parasite density. Malar J. 2006;5:104. doi: 10.1186/1475-2875-5-104.
4. Payne D. Use and limitations of light microscopy for diagnosing malaria at the primary health care level. Bull World Health Organ. 1988;66:621–626.
5. Mbakilwa H, Manga C, Kibona S, Mtei F, Meta J, Shoo A, Amos B, Reyburn H. Quality of malaria microscopy in 12 district hospital laboratories in Tanzania. Pathog Glob Health. 106:330–334. doi: 10.1179/2047773212Y.0000000052.
6. Ohrt C, Obare P, Nanakorn A, Adhiambo C, Awuondo K, O'Meara WP, Remich S, Martin K, Cook E, Chretien JP, Lucas C, Osoga J, McEvoy P, Owaga ML, Odera JS, Ogutu B. Establishing a malaria diagnostics centre of excellence in Kisumu, Kenya. Malar J. 2007;6:79. doi: 10.1186/1475-2875-6-79.
7. Menya D, Logedi J, Manji I, Armstrong J, Neelon B, O'Meara WP. An innovative pay-for-performance (P4P) strategy for improving malaria management in rural Kenya: protocol for a cluster randomized controlled trial. Implement Sci. 2013;8:48. doi: 10.1186/1748-5908-8-48.
8. Endeshaw T, Graves PM, Ayele B, Mosher AW, Gebre T, Ayalew F, Genet A, Mesfin A, Shargie EB, Tadesse Z, Teferi T, Melak B, Richards FO, Emerson PM. Performance of local light microscopy and the ParaScreen Pan/Pf rapid diagnostic test to detect malaria in health centers in Northwest Ethiopia. PLoS ONE. 2012;7:e33014. doi: 10.1371/journal.pone.0033014.
9. Harchut K, Standley C, Dobson A, Klaassen B, Rambaud-Althaus C, Althaus F, Nowak K. Over-diagnosis of malaria by microscopy in the Kilombero Valley, southern Tanzania: an evaluation of the utility and cost-effectiveness of rapid diagnostic tests. Malar J. 2013;12:159. doi: 10.1186/1475-2875-12-159.
10. Mukadi P, Gillet P, Lukuka A, Atua B, Kahodi S, Lokombe J, Muyembe JJ, Jacobs J. External quality assessment of malaria microscopy in the Democratic Republic of the Congo. Malar J. 2011;10:308. doi: 10.1186/1475-2875-10-308.
11. Mukadi P, Gillet P, Lukuka A, Atua B, Sheshe N, Kanza A, Mayunda JB, Mongita B, Senga R, Ngoyi J, Muyembe JJ, Jacobs J, Lejon V. External quality assessment of Giemsa-stained blood film microscopy for the diagnosis of malaria and sleeping sickness in the Democratic Republic of the Congo. Bull World Health Organ. 2013;91:441–448. doi: 10.2471/BLT.12.112706.
12. Barat L, Chipipa J, Kolczak M, Sukwa T. Does the availability of blood slide microscopy for malaria at health centers improve the management of persons with fever in Zambia? Am J Trop Med Hyg. 1999;60:1024–1030. doi: 10.4269/ajtmh.1999.60.1024.
13. Zurovac D, Midia B, Ochola SA, English M, Snow RW. Microscopy and outpatient malaria case management among older children and adults in Kenya. Trop Med Int Health. 2006;11:432–440. doi: 10.1111/j.1365-3156.2006.01587.x.
14. Kahama-Maro J, D'Acremont V, Mtasiwa D, Genton B, Lengeler C. Low quality of routine microscopy for malaria at different levels of the health system in Dar es Salaam. Malar J. 2011;10:332. doi: 10.1186/1475-2875-10-332.
15. Kiggundu M, Nsobya SL, Kamya MR, Filler S, Nasr S, Dorsey G, Yeka A. Evaluation of a comprehensive refresher training program in malaria microscopy covering four districts of Uganda. Am J Trop Med Hyg. 2011;84:820–824. doi: 10.4269/ajtmh.2011.10-0597.
16. Khan MA, Walley JD, Munir MA, Khan MA, Khokar NG, Tahir Z, Nazir A, Shams N. District level external quality assurance (EQA) of malaria microscopy in Pakistan: pilot implementation and feasibility. Malar J. 2011;10:45. doi: 10.1186/1475-2875-10-45.
17. Hemme F, Gay F. Internal quality control of the malaria microscopy diagnosis for 10 laboratories on the Thai-Myanmar border. Southeast Asian J Trop Med Public Health. 1998;29:529–536.
18. World Health Organization. Guidelines for the Treatment of Malaria. Second edition. Geneva: WHO; 2010.