Abstract

Objective

To evaluate the influence of community-onset/healthcare facility-associated cases on Clostridium difficile infection (CDI) incidence and outbreak detection.

Design

Retrospective cohort.

Setting

Five acute-care healthcare facilities in the United States.

Methods

Positive stool C. difficile toxin assays from July 2000 through June 2006 and healthcare facility exposure information were collected. CDI cases were classified as hospital-onset (HO) if they were diagnosed > 48 hours after admission or community-onset/healthcare facility-associated if they were diagnosed ≤ 48 hours from admission and had recently been discharged from the healthcare facility. Four surveillance definitions were compared: HO cases only and HO plus community-onset/healthcare facility-associated cases diagnosed within 30 (HCFA-30), 60 (HCFA-60) and 90 (HCFA-90) days after discharge from the study hospital. Monthly CDI rates were compared. Control charts were used to identify potential CDI outbreaks.

Results

The HCFA-30 rate was significantly higher than the HO rate at two healthcare facilities (p<0.01). The HCFA-30 rate was not significantly different from the HCFA-60 or HCFA-90 rates at any healthcare facility. The correlations between each healthcare facility’s monthly rates of HO and HCFA-30 CDI were almost perfect (range, 0.94–0.99, p<0.001). Overall, 12 time points had a CDI rate >3 SD above the mean, including 11 by the HO definition and 9 by the HCFA-30 definition, with discordant results at 4 time points (κ = 0.794, p<0.001).

Conclusions

Tracking community-onset/healthcare facility-associated cases in addition to HO cases captures significantly more CDI cases, but surveillance of HO CDI alone is sufficient to detect an outbreak.

Keywords: Clostridium difficile, surveillance, healthcare epidemiology, hospital-associated infections

INTRODUCTION

Clostridium difficile is the most common cause of infectious diarrhea among hospitalized patients and causes significant morbidity, mortality, and increased healthcare costs.1;2 Inpatient stay in a healthcare facility (HCF) is a well-established risk factor for both C. difficile colonization and C. difficile infection (CDI).3;4 Although earlier studies suggest a relatively short incubation period (i.e., 3 to 7 days),4;5 patients often develop CDI after discharge from an HCF.6–9 More recent evidence indicates that CDI onset after discharge from an HCF may be increasing.10 Most patients with CDI onset after discharge from an HCF have symptom onset within 4 weeks of discharge,6;8 although symptom onset may occur as late as 2–3 months after discharge.7;9

Current surveillance definitions, which were developed to track disease trends, detect outbreaks, and facilitate comparison of CDI rates among similar institutions, incorporate previous HCF exposure information.10 However, whether an individual HCF reports community-onset, HCF-associated (CO/HCFA) cases in addition to HCF-onset, HCF-associated cases depends on its ability to collect HCF exposure information, categorize cases, and report rates accurately and efficiently. Given limited infection prevention and control resources, it is important to understand whether tracking CO/HCFA cases improves the ability of an HCF to detect an abnormal increase in CDI rates. A recent study limited to medical wards at a single institution reported that CDI rates that include CO/HCFA cases closely track rates based on HCF-onset, HCF-associated cases alone.7 The purpose of this study was to evaluate the influence of HCF exposure surveillance on CDI incidence and outbreak detection at five geographically diverse hospitals in the United States.

METHODS

This retrospective multicenter study included all adult patients (≥ 18 years of age) admitted to five academic medical center hospitals from July 1, 2000 through June 30, 2006. All study hospitals participated in the Centers for Disease Control and Prevention (CDC) Epicenters Program. Study hospitals were Barnes-Jewish Hospital (St. Louis, MO), Brigham and Women’s Hospital (Boston, MA), The Ohio State University Medical Center (Columbus, OH), Stroger Hospital of Cook County (Chicago, IL), and University of Utah Hospital (Salt Lake City, UT). Results of stool C. difficile toxin assays, patient-days, and dates of admission, discharge, and assays were collected from electronic hospital databases. Toxin assay results from one hospital were not available from July 1, 2000 through June 30, 2001. A CDI case was defined as any inpatient with a positive stool toxin assay for C. difficile. Chart review was performed for all patients with C. difficile toxin identified within 48 hours of admission to ascertain HCF exposures in the 90 days before hospital admission. Recurrent CDI cases, defined as repeated episodes within eight weeks of each other, were excluded from the analysis.10 Community-onset, community-associated CDI cases (CDI onset ≤ 48 hours from admission and no study hospital HCF exposures in the previous 90 days) and CO/HCFA CDI cases not attributed to a study hospital were also excluded.
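The recurrence exclusion can be sketched as a per-patient filter. This is a minimal illustration, not the study's code: it assumes that the eight-week window is measured from the date of the patient's most recent counted (incident) episode, and the function name `incident_episodes` and its input layout are hypothetical.

```python
from datetime import date, timedelta

# Assumption: the 8-week recurrence window is measured from the most recent
# episode that was kept as an incident case.
RECURRENCE_WINDOW = timedelta(weeks=8)


def incident_episodes(positive_dates):
    """Map patient ID -> sorted positive-assay dates kept as incident CDI episodes.

    `positive_dates` maps a patient ID to the dates of that patient's positive
    stool toxin assays (hypothetical input layout).
    """
    kept = {}
    for patient, dates in positive_dates.items():
        episodes = []
        for d in sorted(dates):
            # Skip positives that fall within 8 weeks of the last kept episode.
            if episodes and d - episodes[-1] <= RECURRENCE_WINDOW:
                continue
            episodes.append(d)
        kept[patient] = episodes
    return kept


example = {"pt1": [date(2003, 1, 1), date(2003, 2, 1), date(2003, 4, 1)]}
print(incident_episodes(example))
# {'pt1': [datetime.date(2003, 1, 1), datetime.date(2003, 4, 1)]}
```

Measuring the window from the last kept episode (rather than from the last positive assay) is one design choice; chaining from every positive assay would exclude more episodes in patients with frequent testing.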

CDI cases were classified as hospital-onset, HCF-associated (HO) or CO/HCFA, according to a modified version of published surveillance definitions.10 HO cases were defined as patients with positive toxin assays > 48 hours after hospital admission. CO/HCFA cases were defined as patients with positive toxin assays ≤ 48 hours after hospital admission, provided that diagnosis occurred within 90 days after the last discharge from the study hospital and there were no other HCF exposures prior to readmission. To evaluate the utility of incorporating recent HCF exposure information into CDI surveillance definitions, four different definitions of HCF-associated CDI were compared. Surveillance definitions included 1) HO cases; 2) HO and CO/HCFA cases diagnosed within 30 days after the last discharge from the study hospital (HCFA-30); 3) HO and CO/HCFA cases diagnosed within 60 days after the last discharge from the study hospital (HCFA-60); and 4) HO and CO/HCFA cases diagnosed within 90 days after the last discharge from the study hospital (HCFA-90) (Table 1).
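The case definitions above can be expressed as a small classification function. This is a sketch under stated assumptions: "within N days" is taken to mean at most N whole days from the last study-hospital discharge to the positive specimen, and the function and parameter names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Optional

HO_CUTOFF = timedelta(hours=48)  # > 48 h after admission => hospital onset
WINDOWS = (30, 60, 90)           # days after last study-hospital discharge


def definitions_for_case(admitted: datetime,
                         specimen: datetime,
                         last_study_discharge: Optional[datetime],
                         other_hcf_exposure: bool = False) -> List[str]:
    """Return the surveillance definitions under which one positive assay counts.

    An empty list means the case is excluded (e.g., community-onset,
    community-associated, or attributable to another facility).
    """
    if specimen - admitted > HO_CUTOFF:
        # HO cases are included in every definition.
        return ["HO"] + [f"HCFA-{w}" for w in WINDOWS]
    if last_study_discharge is None or other_hcf_exposure:
        return []
    # Assumption: whole days from discharge to specimen collection.
    days_since_discharge = (specimen - last_study_discharge).days
    # CO/HCFA: counted only by the HCFA-N definitions whose window covers it.
    return [f"HCFA-{w}" for w in WINDOWS if 0 <= days_since_discharge <= w]
```

Because every HO case also counts under HCFA-30, HCFA-60, and HCFA-90, each expanded definition's count can only equal or exceed the HO count within a hospital.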

Table 1.

Definitions of Healthcare Facility-Associated Clostridium difficile Infection According to Recent Healthcare Facility Exposures

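| Category | Definition |
|---|---|
| HO | Positive stool C. difficile toxin assay > 48 hours after hospital admission |
| HCFA-30 | HO cases plus CO/HCFA cases diagnosed within 30 days after the last discharge from the study hospital |
| HCFA-60 | HO cases plus CO/HCFA cases diagnosed within 60 days after the last discharge from the study hospital |
| HCFA-90 | HO cases plus CO/HCFA cases diagnosed within 90 days after the last discharge from the study hospital |

HO, hospital-onset, healthcare facility-associated; HCFA, healthcare facility-associated; CO/HCFA, community-onset, healthcare facility-associated (positive toxin assay ≤ 48 hours after admission, with no other healthcare facility exposure before readmission).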

Data Analysis

For all hospitals, monthly CDI rates per 10,000 patient-days were calculated for each CDI definition. HO CDI cases were attributed to the month of stool collection for the C. difficile toxin assay and CO/HCFA CDI cases were attributed to the month of discharge from the HCF before symptom onset. Rates were compared with χ2 summary tests with Bonferroni correction (p < 0.0125 considered significant). Cross correlation coefficients (ρ) were calculated to assess the correlation in rate variability over time (in months) between CDI definitions. The annual and overall hospital rankings by CDI rates were described. These analyses excluded the last three months of the study period because we did not assess whether patients discharged in the last three months of the study period presented to the study hospital with C. difficile after the study period.
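The rate and attribution rules in this paragraph can be sketched as follows. The function names and input layout are hypothetical. The correlation shown is the lag-0 cross-correlation (Pearson's r) between two monthly rate series, which is one common reading of "cross correlation coefficient" and may not match the exact SPSS procedure used. The Bonferroni threshold assumes four pairwise rate comparisons, which reproduces the 0.0125 cutoff stated above.

```python
from collections import Counter
from datetime import date
from math import sqrt
from typing import Dict, Iterable, List, Optional, Tuple

Month = Tuple[int, int]  # (year, month)

BONFERRONI_ALPHA = 0.05 / 4  # assumed 4 pairwise comparisons -> 0.0125


def attribution_month(onset: str, specimen: date, last_discharge: Optional[date]) -> Month:
    """HO cases -> month of stool collection; CO/HCFA cases -> month of prior discharge."""
    d = specimen if onset == "HO" else last_discharge
    return (d.year, d.month)


def monthly_rates(case_months: Iterable[Month],
                  patient_days: Dict[Month, float]) -> Dict[Month, float]:
    """Cases per 10,000 patient-days for every month that has a patient-day total."""
    counts = Counter(case_months)
    return {m: 10_000 * counts.get(m, 0) / pd for m, pd in patient_days.items()}


def lag0_correlation(x: List[float], y: List[float]) -> float:
    """Pearson correlation of two equal-length monthly series (cross-correlation at lag 0)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)
```

Because CO/HCFA cases are attributed to their discharge month, a case diagnosed after the study ends could still belong to an in-study month, which is why the final months were excluded from these analyses.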

Statistical control charts were constructed for the HO and HCFA-30 surveillance definitions by hospital to provide a standardized, objective method to compare CDI rate definitions and to monitor for abnormal increases in CDI rates. The primary comparison of interest was HO vs. HCFA-30, because CDI rates did not differ significantly between the HCFA-30 and HCFA-60 definitions or between the HCFA-60 and HCFA-90 definitions. This analysis excluded the last month of the study period because we did not assess whether patients discharged in that month presented to the study hospital with C. difficile after the study period.

Shewhart u control charts were used, as described by Benneyan11 and Sellick.12 Because the data were discrete counts assumed to follow a Poisson distribution, with monthly patient-day subgroups of unequal size, u control charts were the appropriate chart type. The primary indication of an abnormal CDI rate was a value > three standard deviations (SD) from the mean.11 In addition, a supplementary set of within-limit criteria described by Benneyan13 was used to identify non-random variation, such as trends, cycles, shifts above the mean, and other forms of non-random or low-probability behavior. For each hospital, incidence rates of HO and HCFA-30 CDI were plotted. Time points with abnormally high CDI rates were identified using the > three SD rule and the within-limit criteria; abnormal time points defined by the within-limit criteria were labeled at the first time point that met the rule. The kappa statistic (κ) was calculated to measure agreement between the HO and HCFA-30 definitions in classifying each month as having an abnormal CDI rate or not.
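A u chart with unequal subgroup sizes can be sketched as below, following the standard construction in the control-chart literature cited above: the center line is the pooled rate u-bar = (sum of monthly cases) / (sum of monthly exposures), and each month's limits are u-bar ± 3 × sqrt(u-bar / n_i), where n_i is that month's patient-days in units of 10,000. The run rule shown (eight consecutive months above the center line) is only one commonly used example of a within-limit criterion; it is an assumption here, not the study's exact rule set. Function names are hypothetical.

```python
from math import sqrt
from typing import List, NamedTuple


class ChartPoint(NamedTuple):
    rate: float  # observed cases per 10,000 patient-days
    ucl: float   # upper control limit (center + 3 SD)
    lcl: float   # lower control limit, floored at 0
    z: float     # standardized distance from the center line


def u_chart(counts: List[int], patient_days: List[float], unit: float = 10_000):
    """Return the center line and per-month points for a Shewhart u chart."""
    n = [pd / unit for pd in patient_days]  # area of opportunity per month
    center = sum(counts) / sum(n)           # pooled rate (cases per 10,000 patient-days)
    points = []
    for c, ni in zip(counts, n):
        sd = sqrt(center / ni)              # Poisson SD of a rate with exposure ni
        rate = c / ni
        points.append(ChartPoint(rate, center + 3 * sd,
                                 max(0.0, center - 3 * sd), (rate - center) / sd))
    return center, points


def above_3sd(points: List[ChartPoint]) -> List[int]:
    """Indices of months whose rate exceeds the upper 3-SD limit."""
    return [i for i, p in enumerate(points) if p.rate > p.ucl]


def run_above_center(points: List[ChartPoint], run: int = 8) -> List[int]:
    """Example within-limit rule (assumed): `run` consecutive months above the
    center line, labeled at the month in which the rule is first satisfied."""
    hits, streak = [], 0
    for i, p in enumerate(points):
        streak = streak + 1 if p.z > 0 else 0
        if streak == run:
            hits.append(i)
    return hits


# A single spike is caught by the 3-SD rule ...
_, spike = u_chart([10] * 11 + [25], [10_000] * 12)
# ... while a sustained shift stays inside the limits but trips the run rule.
_, shift = u_chart([5] * 6 + [15] * 8, [10_000] * 14)
print(above_3sd(spike), above_3sd(shift), run_above_center(shift))  # [11] [] [13]
```

The second series mimics a gradual or sustained increase that never crosses the 3-SD limit, the situation in which supplementary within-limit rules add sensitivity.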

All tests were two-tailed, and P < 0.05 was considered significant. Statistical analyses were performed with SPSS for Windows, version 14.0 (SPSS, Inc., Chicago, IL). Approval for this study was obtained from the human research protection offices of all participating centers.

RESULTS

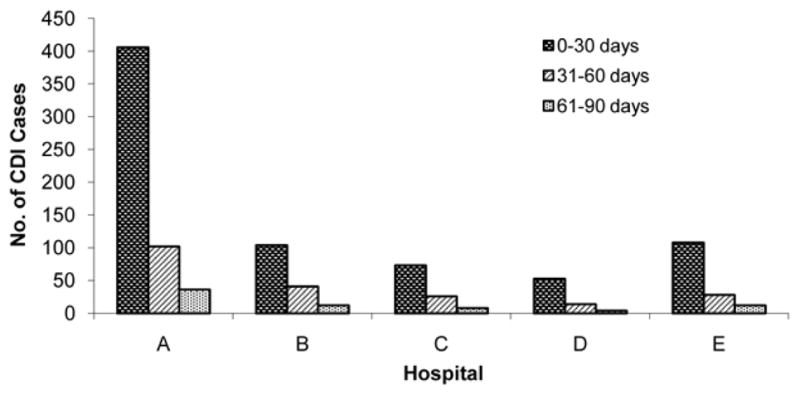

During the six-year study period, the participating hospitals identified 4,668 HO cases and 1,027 CO/HCFA cases whose most recent HCF discharge was from the study hospital within the previous 90 days. Of the 1,027 CO/HCFA cases, 744 (72%) were diagnosed within 30 days of the last hospital discharge, 211 (21%) within 31–60 days, and 72 (7%) within 61–90 days (Figure 1).
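The percentages above, and the 82% HO share of all HCF-associated cases, follow directly from the reported counts; a quick check using only values copied from the text:

```python
# Counts reported in the text.
ho_cases = 4668
co_hcfa_by_window = {"0-30 days": 744, "31-60 days": 211, "61-90 days": 72}

co_hcfa_total = sum(co_hcfa_by_window.values())
shares = {w: round(100 * n / co_hcfa_total) for w, n in co_hcfa_by_window.items()}
ho_share = round(100 * ho_cases / (ho_cases + co_hcfa_total))

print(co_hcfa_total, shares, ho_share)
# 1027 {'0-30 days': 72, '31-60 days': 21, '61-90 days': 7} 82
```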

Figure 1.

Time of onset of community-onset healthcare facility-associated cases of Clostridium difficile infection (CDI) after most recent discharge from hospital.

Incidence rates for the four HCF-associated CDI definitions by hospital are presented in Table 2. The overall HO CDI rate was significantly lower than the HCFA-30 rate (8.94 vs. 10.36 cases per 10,000 patient-days, p < 0.001). HO and HCFA-30 rates did not differ significantly at three hospitals (B, C, and D). Overall CDI incidence rates did not differ significantly between the HCFA-30 and HCFA-60 definitions (10.36 vs. 10.77 cases per 10,000 patient-days, p = 0.05, above the Bonferroni-corrected threshold of 0.0125) or between the HCFA-60 and HCFA-90 definitions (10.77 vs. 10.90 cases per 10,000 patient-days, p = 0.50). Overall incidence rates were significantly lower for the HCFA-30 than for the HCFA-90 definition (10.36 vs. 10.90 cases per 10,000 patient-days, p < 0.01), but this difference was not significant at any individual hospital.

Table 2.

Clostridium difficile Infection Incidence Rates Between July 2000 and June 2006 by Hospital

Rates are cases per 10,000 patient-days.

| Hospital | HO | HCFA-30 | HCFA-60 | HCFA-90 |
|---|---|---|---|---|
| A | 15.60† | 18.26 | 18.93 | 19.17 |
| B | 15.81 | 17.81 | 18.59 | 18.82 |
| C | 3.94 | 4.49 | 4.69 | 4.75 |
| D* | 6.23 | 7.05 | 7.27 | 7.33 |
| E | 4.49† | 5.39 | 5.62 | 5.72 |
| Total | 8.94† | 10.36‡ | 10.77 | 10.90 |

HO, hospital-onset, healthcare facility-associated; HCFA, healthcare facility-associated.

*The study period was restricted to September 2001–March 2006.

†HO CDI was significantly lower than HCFA-30 CDI (p < 0.01).

‡HCFA-30 CDI was significantly lower than HCFA-90 CDI (p < 0.01).

The rank-order of hospitals by CDI rates remained constant across all definitions within each study year. In addition, the hospital rankings for the entire study period remained constant across the different definitions: hospital B maintained the highest rate, followed by hospitals A, D, E, and C (Table 2).

The correlations between each hospital’s monthly rates of HO and HCFA-30 CDI were almost perfect (range, ρ = 0.94–0.99, p < 0.001). There were similar correlations between the rates of HCFA-30 and HCFA-60 CDI (range, ρ = 0.98–1.00, p ≤ 0.001) and HCFA-60 and HCFA-90 CDI (ρ = 1.00 for all, p < 0.05).

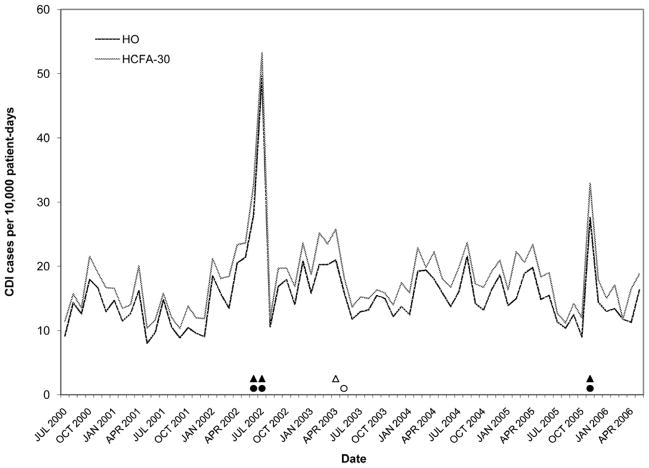

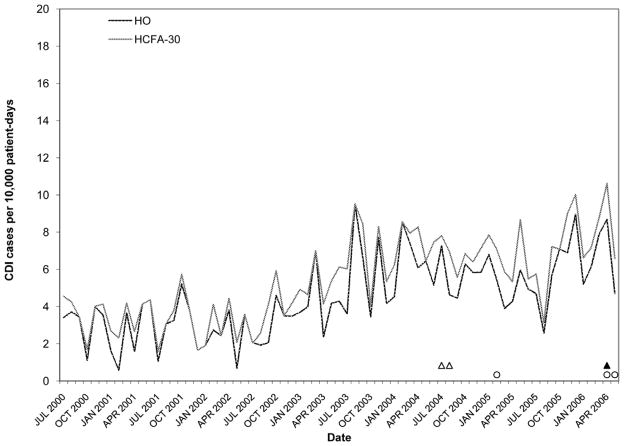

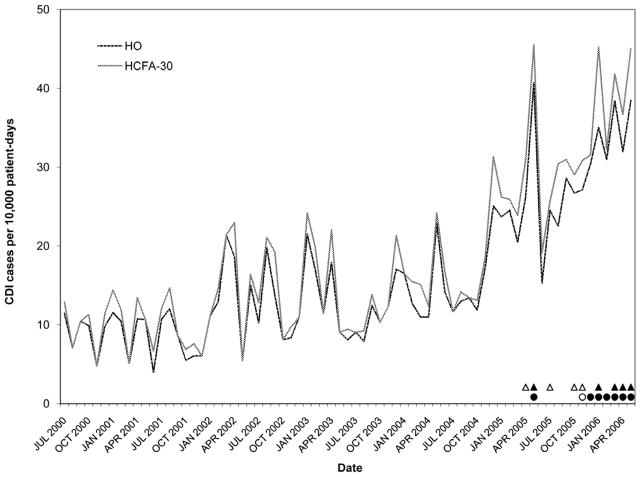

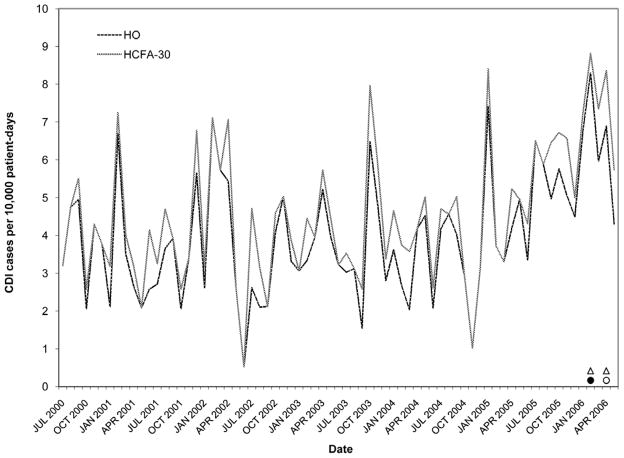

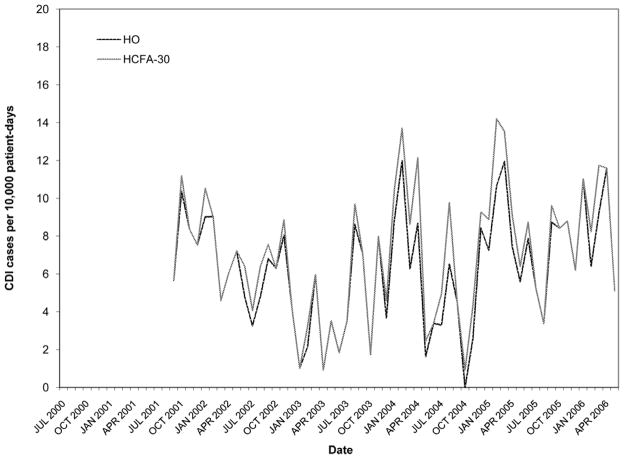

Figures 2–6 present HO and HCFA-30 CDI incidence rates and abnormal time points by hospital. During the study period, four hospitals (80%) had at least one time point at which the CDI rate was > three SD above the center line (Table 3), with one to seven abnormal time points per hospital. Overall, 12 time points had CDI rates > three SD above the center line: 11 identified by the HO definition and nine by the HCFA-30 definition, with discordant results at four time points (κ = 0.794, p < 0.001). Agreement between the HO and HCFA-30 definitions was perfect at two hospitals (hospitals A and D), which together had three time points with CDI rates > three SD above the center line. Agreement was almost perfect at the other three hospitals (hospitals B, C, and E). At hospital B, seven months had CDI rates > three SD above the center line: five were identified by both the HO and HCFA-30 definitions and two by the HO definition only. At hospital C, one month had an HO CDI rate > three SD above the center line, but the HCFA-30 definition identified no abnormally high monthly rates. At hospital E, one month had an HCFA-30 CDI rate > three SD above the center line, but the HO definition identified no abnormally high monthly rates.

In addition to the 11 time points with HO CDI rates > three SD above the center line, the more conservative supplementary within-limit criteria identified five more months with abnormally high HO CDI rates (one month each at hospitals A, B, and C; two months at hospital E). Similarly, in addition to the nine time points with HCFA-30 CDI rates > three SD above the center line, the within-limit criteria identified eight more months with abnormally high HCFA-30 CDI rates (one month each at hospitals A and C, two months at hospital E, and four months at hospital B). When abnormally high CDI rates detected by either the > three SD rule or the within-limit criteria were combined, the overall kappa statistic decreased to 0.669 (p < 0.001) (Table 3).
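Cohen's κ here compares the two definitions' month-by-month abnormal/normal classifications. The sketch below is illustrative and assumes 71 analyzable months per hospital (the 72-month study period minus the excluded final month), which the text does not state explicitly; the helper names are hypothetical. Under that assumption, the hospital B counts above (five months flagged by both definitions, two by HO only) give κ ≈ 0.818, and the pooled counts (eight concordant months, three HO-only, one HCFA-30-only) give κ ≈ 0.794, consistent with Table 3.

```python
from typing import List, Optional, Tuple


def cohen_kappa(a: List[bool], b: List[bool]) -> Optional[float]:
    """Cohen's kappa for two equal-length series of monthly flags (True = abnormal).
    Returns None when kappa is undefined (expected agreement equals 1)."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    pa, pb = sum(a) / n, sum(b) / n
    expected = pa * pb + (1 - pa) * (1 - pb)
    if expected >= 1:
        return None
    return (observed - expected) / (1 - expected)


def flags_from_counts(both: int, first_only: int, second_only: int,
                      months: int) -> Tuple[List[bool], List[bool]]:
    """Build two flag series with the given concordance counts
    (month order does not affect kappa)."""
    neither = months - both - first_only - second_only
    a = [True] * (both + first_only) + [False] * (second_only + neither)
    b = [True] * both + [False] * first_only + [True] * second_only + [False] * neither
    return a, b


hosp_b = cohen_kappa(*flags_from_counts(5, 2, 0, 71))
pooled = cohen_kappa(*flags_from_counts(8, 3, 1, 5 * 71))
print(round(hosp_b, 3), round(pooled, 3))  # 0.818 0.794
```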

Figure 2.

Rates of Clostridium difficile infection (CDI) by surveillance definition at hospital A. Solid black circles, abnormally high HO CDI incidence (> 3 SD); Outlined circles, abnormally high HO CDI incidence (within-limit); Solid black triangles, abnormally high HCFA-30 CDI incidence (> 3 SD); Outlined triangles, abnormally high HCFA-30 CDI incidence (within-limit) (for definitions, see Methods).

Figure 6.

Rates of Clostridium difficile infection (CDI) by surveillance definition at hospital E. Solid black circles, abnormally high HO CDI incidence (> 3 SD); Outlined circles, abnormally high HO CDI incidence (within-limit); Solid black triangles, abnormally high HCFA-30 CDI incidence (> 3 SD); Outlined triangles, abnormally high HCFA-30 CDI incidence (within-limit) (for definitions, see Methods).

Table 3.

Agreement of Abnormal Monthly Rates between HO and HCFA-30 Surveillance Definitions of Healthcare Facility-Associated Clostridium difficile Infection

HO and HCFA-30 columns give the number of abnormal monthly rates.

| Hospital | HO (> 3 SD) | HCFA-30 (> 3 SD) | κ† (> 3 SD) | HO (> 3 SD and within-limit criteria*) | HCFA-30 (> 3 SD and within-limit criteria*) | κ† (> 3 SD and within-limit criteria*) |
|---|---|---|---|---|---|---|
| A | 3 | 3 | 1.00 | 4 | 4 | .735 |
| B | 7 | 5 | .818 | 8 | 9 | .666 |
| C‡ | 1 | 0 | n/a‡ | 2 | 2 | 1.00 |
| D | 0 | 0 | 1.00 | 0 | 0 | 1.00 |
| E‡ | 0 | 1 | n/a‡ | 3 | 3 | .304 |
| Total | 11 | 9 | .794 | 17 | 18 | .669 |

SD, standard deviation; HO, hospital-onset, healthcare facility-associated; HCFA, healthcare facility-associated; κ, kappa statistic.

*Supplementary within-limit criteria considered in the absence of abnormal time points identified by the > 3 SD definition.

†p ≤ 0.01 for all comparisons.

‡Kappa statistic not calculated because values were constant for either the HO or HCFA-30 definition.

DISCUSSION

This is the first multicenter study comparing standardized CDI surveillance definitions across institutions to determine the influence that different definitions have on perceived CDI incidence and on detection of abnormal increases in CDI rates. Our results suggest that tracking CO/HCFA cases in addition to HO cases captures significantly more CDI cases but does not improve the ability of HCFs to detect abnormally high CDI rates. Compared with surveillance of HO cases alone, the expanded surveillance definitions that also track CO/HCFA cases had excellent correlation over time and substantial agreement in detecting abnormally high CDI rates. The rank-order of hospitals by CDI rates did not vary by surveillance definition; the rankings remained constant within each study year and over the six-year study period. From a public health perspective, the primary purposes of CDI surveillance are to guide the implementation of interventions to control CDI in HCFs and to monitor the impact of such interventions. Therefore, it is critical that surveillance definitions can accurately identify outbreaks. Our findings provide evidence that surveillance of HCF-onset, HCF-associated CDI alone is sufficient to detect an outbreak.

We found excellent correlation of rates over time between the HO definition and the expanded definitions that include CO/HCFA cases, which reflects the high proportion of CDI cases captured by the HO definition. In our study, HO cases made up 82% of all HCF-associated cases (4,668 of 5,695). This proportion is consistent with a study by Kutty et al., who reported that 77% of HCF-associated cases occurring within 90 days of hospital discharge were HO.8 Although uncertainty exists regarding the typical incubation period from exposure to infection,4;5 the high proportion of HO cases is not surprising, because inpatient stay at an HCF is a major risk factor for C. difficile colonization and infection. Proposed explanations for the increased risk of CDI at an HCF include exposure to concurrently admitted patients with CDI, antimicrobial use, and the advanced age and severity of illness of patients in HCFs.3;4;14

Current surveillance definitions classify CO/HCFA CDI cases by the timing of recent HCF exposures. Published studies report that most patients with delayed-onset CDI develop symptoms within four weeks of discharge from an HCF,6–8 with fewer patients developing symptoms as late as two to three months after discharge.7;9 For instance, Kutty et al. identified 70% of CO/HCFA CDI cases within the first 30 days after hospital discharge,8 and Chang et al. identified 85%.6 Unlike our study, these studies employed surveillance strategies that also captured cases managed in outpatient settings. Because the availability of outpatient toxin results varies across HCFs, we focused exclusively on hospital-based surveillance to increase the generalizability of our findings. Despite the different surveillance approaches, our study identified 72% of CO/HCFA CDI cases within the first 30 days after hospital discharge, an estimate between the Kutty et al. and Chang et al. estimates.

A unique strength of this study is the use of statistical control charts to evaluate the influence of CO/HCFA surveillance on outbreak detection. The HCFA-60 and HCFA-90 definitions did not identify significantly higher CDI rates than the HCFA-30 definition at any of the institutions, and monthly rates were almost perfectly correlated across these definitions (ρ = 0.98–1.00 for HCFA-30 vs. HCFA-60 and ρ = 1.00 for HCFA-60 vs. HCFA-90); therefore, we focused the control chart analysis on the HO and HCFA-30 definitions. Despite significantly higher HCFA-30 than HO CDI rates at two of five hospitals, the HO and HCFA-30 definitions detected similar totals of abnormally high time points, both with the > three SD criterion alone and with the addition of the more conservative within-limit criteria. The κ values for HO and HCFA-30 surveillance indicate substantial concordance between the two definitions in identifying abnormally high CDI rates. Many discordant time points identified by one definition were only one month apart from a time point identified by the other. The κ value improved to 0.899 when months with abnormally high HO CDI rates (defined by either the > 3 SD rule or the within-limit criteria) that occurred within one month of abnormally high HCFA-30 CDI rates, and vice versa, were considered concordant. From a clinical perspective, the almost perfect κ value in this latter analysis provides evidence that the simpler HO surveillance definition accurately identifies increases from endemic to epidemic CDI rates.
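The one-month tolerance described above can be illustrated with a simple pairing rule. This is a hypothetical sketch: the text does not specify how discordant months were paired, so the greedy rule here (pair each unmatched flag in one series with an unmatched flag in the other at most one month away, then recompute κ) is an assumption and is not guaranteed to reproduce the reported value of 0.899.

```python
from typing import List, Optional, Tuple


def cohen_kappa(a: List[bool], b: List[bool]) -> Optional[float]:
    """Cohen's kappa for two equal-length flag series; None if undefined."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    pa, pb = sum(a) / n, sum(b) / n
    expected = pa * pb + (1 - pa) * (1 - pb)
    return None if expected >= 1 else (observed - expected) / (1 - expected)


def align_within(a: List[bool], b: List[bool],
                 tol: int = 1) -> Tuple[List[bool], List[bool]]:
    """Hypothetical tolerance rule: move an unmatched flag in `b` onto a nearby
    unmatched flag in `a` (at most `tol` months apart). Each flag is paired once."""
    a, b = list(a), list(b)
    for i in range(len(a)):
        if a[i] and not b[i]:                    # unmatched flag in series a
            for j in range(max(0, i - tol), min(len(b), i + tol + 1)):
                if b[j] and not a[j]:            # unmatched flag in series b nearby
                    b[j], b[i] = False, True     # treat the pair as concordant
                    break
    return a, b


ho = [False, True, False, False, False, False]
hcfa = [False, False, True, False, False, False]
raw = cohen_kappa(ho, hcfa)
tolerant = cohen_kappa(*align_within(ho, hcfa))
print(round(raw, 2), round(tolerant, 2))  # -0.2 1.0
```

Because flags are moved rather than duplicated, each adjacent discordant pair becomes one concordant month, and the number of abnormal months per definition is unchanged.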

Statistical control charts provide a standardized, objective method to monitor CDI rates but do not eliminate the need for infection prevention and control practitioners to inspect CDI rates visually. Despite the gradual increase in CDI incidence over the study period at hospital E, the > three SD rule did not identify an abnormal time point until April 2006. Other limitations of this study include limited generalizability to smaller community hospitals. The large size of the academic medical centers in this study likely resulted in less rate variability than would occur at hospitals with fewer patient-days and fewer CDI cases. This may explain the consistent rank-order of hospitals by annual CDI rates across our surveillance definitions, as well as the excellent correlation between surveillance definitions. In addition, the definition of patient-days varied slightly across study hospitals. However, the expected impact on the results is minimal, because all comparisons of surveillance definitions were made within hospitals and used the same patient-day total for the CDI rate denominator.

Although HCF-onset, HCF-associated CDI is currently considered the minimum surveillance required for healthcare settings,10 there is a rationale for additional tracking of CO/HCFA cases. The transmission source for CO/HCFA CDI is currently poorly understood. Past studies indicate that patients with prior healthcare exposures are more likely to be colonized with C. difficile than patients without such exposures, suggesting acquisition in an HCF.4;5 However, patients with CO/HCFA CDI frequently present to the hospital with CDI symptoms more than seven days after hospital discharge, which is beyond the presumed incubation period for CDI.4;5 Furthermore, the C. difficile strains present at readmission may differ from those present at discharge.5 The potential for acquisition of C. difficile after hospital discharge has implications for HCFs, because such patients may introduce new strains into the healthcare setting and serve as a source of C. difficile transmission, contributing to HO CDI rates.5 In addition, studies indicate that the risk factors for community-onset, community-associated CDI may differ from those for HO CDI.15;16 Risk factors for CO/HCFA CDI may also differ from those for HO CDI.7 Therefore, HCFs may need to tailor CDI prevention efforts to target the more prevalent types of CDI in their institution. Future studies are needed to explain recent increases in the incidence of both HO and CO CDI and to characterize the transmission sources and risk factors for CO CDI.

This is the first study to compare different standardized CDI surveillance definitions across institutions to determine whether the definitions affect the perceived burden of CDI or the ability to detect a CDI outbreak. Our findings suggest that 30 days after hospital discharge is a reasonable time frame for surveillance of HCF-associated CDI, but that HCFs can accurately detect abnormal increases in CDI rates with a simpler HCF-onset, HCF-associated case definition. Given limited infection control resources, these findings could have important implications for surveillance methods in HCFs.

Figure 3.

Rates of Clostridium difficile infection (CDI) by surveillance definition at hospital B. Solid black circles, abnormally high HO CDI incidence (> 3 SD); Outlined circles, abnormally high HO CDI incidence (within-limit); Solid black triangles, abnormally high HCFA-30 CDI incidence (> 3 SD); Outlined triangles, abnormally high HCFA-30 CDI incidence (within-limit) (for definitions, see Methods).

Figure 4.

Rates of Clostridium difficile infection (CDI) by surveillance definition at hospital C. Solid black circles, abnormally high HO CDI incidence (> 3 SD); Outlined circles, abnormally high HO CDI incidence (within-limit); Solid black triangles, abnormally high HCFA-30 CDI incidence (> 3 SD); Outlined triangles, abnormally high HCFA-30 CDI incidence (within-limit) (for definitions, see Methods).

Figure 5.

Rates of Clostridium difficile infection (CDI) by surveillance definition at hospital D. Solid black circles, abnormally high HO CDI incidence (> 3 SD); Outlined circles, abnormally high HO CDI incidence (within-limit); Solid black triangles, abnormally high HCFA-30 CDI incidence (> 3 SD); Outlined triangles, abnormally high HCFA-30 CDI incidence (within-limit) (for definitions, see Methods).

Acknowledgments

This work was supported by grants from the Centers for Disease Control and Prevention (#UR8/CCU715087-06/1, #1U01C1000333-01) and the National Institutes of Health (K12RR02324901-01, K24AI06779401, K01AI065808-01). Findings and conclusions in this report are those of the authors and do not necessarily represent the views of the Centers for Disease Control and Prevention or the National Institutes of Health.

Footnotes

Preliminary data were presented in part at the 46th Annual Meeting of the Infectious Diseases Society of America, Washington, DC (October 25–28, 2008).

Potential conflicts of interest: E.R.D. has served as a consultant to Merck, Salix, and Becton-Dickinson and has received research funding from Viropharma. D.S.Y. has received research funding from Sage Products, Inc.

References

1. Johnson S, Gerding DN. Clostridium difficile--associated diarrhea. Clin Infect Dis. 26:1027–1034. doi: 10.1086/520276.
2. Dubberke ER, Reske KA, Olsen MA, McDonald LC, Fraser VJ. Short- and long-term attributable costs of Clostridium difficile-associated disease in nonsurgical inpatients. Clin Infect Dis. 46:497–504. doi: 10.1086/526530.
3. Dubberke ER, Reske KA, Olsen MA, et al. Evaluation of Clostridium difficile-associated disease pressure as a risk factor for C difficile-associated disease. Arch Intern Med. 167:1092–1097. doi: 10.1001/archinte.167.10.1092.
4. McFarland LV, Mulligan ME, Kwok RY, Stamm WE. Nosocomial acquisition of Clostridium difficile infection. N Engl J Med. 320:204–210. doi: 10.1056/NEJM198901263200402.
5. Clabots CR, Johnson S, Olson MM, Peterson LR, Gerding DN. Acquisition of Clostridium difficile by hospitalized patients: evidence for colonized new admissions as a source of infection. J Infect Dis. 166:561–567. doi: 10.1093/infdis/166.3.561.
6. Chang HT, Krezolek D, Johnson S, Parada JP, Evans CT, Gerding DN. Onset of symptoms and time to diagnosis of Clostridium difficile-associated disease following discharge from an acute care hospital. Infect Control Hosp Epidemiol. 28:926–931. doi: 10.1086/519178.
7. Dubberke ER, McMullen KM, Mayfield JL, et al. Hospital-associated Clostridium difficile infection: is it necessary to track community-onset disease? Infect Control Hosp Epidemiol. In press. doi: 10.1086/596604.
8. Kutty PK, Benoit SR, Woods CW, et al. Assessment of Clostridium difficile-associated disease surveillance definitions, North Carolina, 2005. Infect Control Hosp Epidemiol. 29:197–202. doi: 10.1086/528813.
9. Palmore TN, Sohn S, Malak SF, Eagan J, Sepkowitz KA. Risk factors for acquisition of Clostridium difficile-associated diarrhea among outpatients at a cancer hospital. Infect Control Hosp Epidemiol. 26:680–684. doi: 10.1086/502602.
10. McDonald LC, Coignard B, Dubberke E, Song X, Horan T, Kutty PK. Recommendations for surveillance of Clostridium difficile-associated disease. Infect Control Hosp Epidemiol. 28:140–145. doi: 10.1086/511798.
11. Benneyan JC. Statistical quality control methods in infection control and hospital epidemiology, part I: Introduction and basic theory. Infect Control Hosp Epidemiol. 19:194–214. doi: 10.1086/647795.
12. Sellick JA Jr. The use of statistical process control charts in hospital epidemiology. Infect Control Hosp Epidemiol. 14:649–656. doi: 10.1086/646659.
13. Benneyan JC. Statistical quality control methods in infection control and hospital epidemiology, part II: Chart use, statistical properties, and research issues. Infect Control Hosp Epidemiol. 19:265–283.
14. Dubberke ER, Reske KA, Yan Y, Olsen MA, McDonald LC, Fraser VJ. Clostridium difficile--associated disease in a setting of endemicity: identification of novel risk factors. Clin Infect Dis. 45:1543–1549. doi: 10.1086/523582.
15. Severe Clostridium difficile-associated disease in populations previously at low risk--four states. MMWR Morb Mortal Wkly Rep. 2005;54:1201–1205.
16. Surveillance for community-associated Clostridium difficile--Connecticut. MMWR Morb Mortal Wkly Rep. 2006;57:340–343.