Abstract

The release of the Institute of Medicine report “To Err is Human” (Kohn et al., 2000) brought attention to the problem of medical errors and led to a concerted effort to study and design medical error reporting systems that capture and analyze error data so that safety interventions can be designed. However, to make real gains in the efficacy of medical error or event reporting systems, it is necessary to begin developing a theory of reporting system adoption and use and to understand how existing theories may help explain adoption and use. This paper presents the results of a 9-month study exploring the barriers and facilitators for the design of a statewide medical error reporting system and discusses how several existing theories of technology acceptance, adoption and implementation fit with many of the results. In addition, we present an integrated theoretical model of medical error reporting system design and implementation.

Keywords: Patient safety, Medical error, Reporting systems

1. Introduction

The release of the Institute of Medicine (IOM) report “To Err is Human” (Kohn et al., 2000) brought attention to the problem of medical errors and sparked a debate about how best to reduce them (Layde, et al., 2002; McNutt, et al., 2002). This debate initially focused more on reactive methods, such as error reporting, than on proactive methods, such as good system design.

In health care, error or event reporting systems initially received the most attention and funding. Even the most recent IOM report on patient safety (Institute of Medicine, 2004) was heavily focused on reporting systems. Despite this attention, the study of medical error reporting systems has remained mostly atheoretical. The focus has been on the development of error reporting systems (Institute of Medicine, 2004; Pace, et al., 2003), applications in specific settings that quantify and classify the reported errors (Suresh, et al., 2004), and explanation of the characteristics of a successful reporting system (Johnson, 2002; Kaplan and Barach, 2002; Kaplan, 2003; Kaplan and Fastman, 2003; Leape, 2002).

Although such approaches are critical for organizations implementing or designing reporting systems, real gains in the efficacy of medical error or event reporting systems will come about through developing new theories of reporting systems adoption and use or applying existing ones. Theory provides a conceptualization of how and why different phenomena occur. It helps develop testable hypotheses that guide research, which in turn improves the theory's sensitivity and specificity. As the validity of the theory improves, practitioners have better information for their applications. Given the high cost of medical errors in terms of human suffering, loss of life, and dollars (Brennan, et al., 1991; Kohn et al., 2000; Leape, et al., 1991), applying error reporting research to develop a useful reporting system is important. That can be done best when patient safety scientists better understand the mechanisms by which people choose to adopt and use these systems.

There is some consensus that successful reporting systems are those whose data are analyzed by independent organizations composed of subject matter and safety experts; that provide timely feedback and suggest systems-oriented solutions to reported problems; whose participant organizations are responsive to suggested changes; and that are non-punitive and confidential (Leape, 2002). But each of those characteristics affords success for different reasons, and these reasons could be understood in terms of many different theories, especially those related to technology acceptance, adoption and implementation. A medical error reporting system is a technology, and, as with any technology, a health care organization must decide to adopt or engage in it; once it is implemented, individuals must decide whether or not to use it, even if the reporting system is supposedly mandatory. Thus, theories that explain why organizations choose to adopt technologies and theories that explain what motivates people to use or to decide to use technologies are relevant.

This study was initially developed to uncover barriers and facilitators for the design of a statewide medical error reporting system. As results were being analyzed, it became apparent that several existing theories of technology acceptance, adoption and implementation were compatible with many of the results obtained in this and other studies. To explore this compatibility, we purposefully selected theories related to three different levels of sociotechnical system fit to which we compare the study results: organizational adoption (Rogers, 1995), system implementation (Clegg, 2000), and end user acceptance (Davis, 1989). This paper presents the overall results of the study and demonstrates how a variety of theories fit them, in the hope that future research on medical errors will incorporate one or more of these theories to guide its efforts and so speed progress toward more effective systems.

2. Methods

2.1. Design

A focus group design comprising two separate focus groups (physician and clinical assistant) was used. There was a total of 16 focus group meetings (seven clinical assistant, nine physician). Topics covered fears and concerns about reporting medical errors, potential purposes of a medical error reporting system, barriers and motivators for reporting to a system given the identified purposes, what to report (e.g. chain of causality, mitigating factors, near misses), instructions for using the reporting system, mechanisms of and medium for reporting, uses of the reported data, and security and ethical issues; in addition, there was an end-of-study feedback meeting. These topical areas were based on investigator experience and previous literature on error reporting inside and outside of health care. Sessions were open to further exploration of other relevant issues that emerged during the discussions. Some topics were discussed over multiple sessions.

2.2. Subjects

The group termed “physician” (n = 8) comprised seven family physicians and one nurse practitioner. The group termed “clinical assistant” (n = 6) comprised medical assistants and nurses whose primary role was to assist physicians in their duties. Physician participants were identified from a list of family physicians who were members of the family physician academy of a Midwestern state. Clinical assistants were recruited from a list of those who had participated in a previous study (n = 248) of family practice quality of work life in that state (no comprehensive list of clinical assistants otherwise exists). Clinical assistants working with participating physicians were excluded from recruitment. Recruitment continued until a maximum of 8 individuals per group volunteered to participate. Participants in both groups practiced in urban and rural locations, had a range of work experience, and worked in different types of organizations. Specific demographic characteristics were not collected to protect participant confidentiality.

2.3. Procedures

The study was approved by the University of Wisconsin-Madison Institutional Review Board (IRB). Meetings took place from 7:00 am to 8:00 am over toll-free teleconference lines and occurred one to two times per month from October 2001 to June 2002 for the physicians and from February 2002 to July 2002 for the clinical assistants. Attendance was not taken to protect confidentiality. The sessions were co-moderated by the two study investigators: a human factors engineer (BK) and a family physician (JB). Participants were instructed not to use names, not to name organizations, and not to talk about actual errors or adverse events. Sessions were audio-taped, edited by one of the moderators to remove any accidental participant identification, and then transcribed.

2.4. Analysis

The focus group transcripts were subjected to an inductive content analysis by one of the researchers (KHE). QSR NVivo software was used to store the coding. Validity of the coding was ensured in two ways. First, two other research team members (BK and JB) reviewed and discussed the codes to make sure they had face validity. Second, the data and analytic categories with their respective quotes were provided to all study participants so they could confirm validity. All participants were given 1 month to review the information and send feedback. The three participants who provided feedback indicated that the results and interpretations were valid. No changes were recommended.

3. Results

The focus group meetings yielded over 300 pages of text, 86 major and minor themes, and over 1000 coded passages. The major themes are discussed individually in the following sections. Based on these results, key characteristics of an error reporting system were summarized in Table 1.

Table 1.

Key characteristics for error reporting system design

| Category | Key characteristics |
|---|---|
| Purpose | System improvement |
| | Organizational accountability |
| | Clinician education |
| | Patient education |
| | Administrative education |
| | Public assurance |
| | Process solution development |
| | External entity (e.g. drug companies) education |
| Content | Form: elements such as situational characteristics, contributing factors, tips for prevention, tools involved, mitigating factors |
| | Form: limited identification of both clinician and patient |
| | Format: blend of checkboxes and free-flow narrative sections |
| Design | Non-punitive |
| | Secured access |
| | Optional identification |
| | Flexibility (reporting medium, anonymity, etc.) |
| | Easy to use |
| | Pilot testing before implementation |
| | Tactics employed to ensure clinician buy-in |
| | Continuous feedback |
| | Partial protection from consequential events for reporting |
| | Solutions availability (e.g. for common errors) |
| | Professional analysis of the data |
| | Presence of an intermediary (e.g. editor, consultant) |
| | Positive terminology (e.g. “Care Improvement System” vs. “Error Reporting System”) |
| Processing entity | Separate from the state |
| | Diverse mix of professionals (clinical and non-clinical) |
| | No punitive power |
| Instructions | Information distributed on when to use the system, system goals, protections offered, and system limitations |
| | Training options: on-site expert, help desk, auto-tutorials, help icons |

3.1. System purpose (see Table 2)

Table 2.

Participant quotes about the purpose of the system

| Topic | Representative quotes |
|---|---|
| Purpose: system improvement | • “… the main goal should be to improve the way we take care of patients.” |
| | • “It would also help me I think, besides the personal gain of just looking at your own practice, to know how other people were doing. Was this a problem that a lot of people had or was I more of an isolated case in having that error?” |
| Purpose: public awareness | • “I think educationally it might be important for people to realize when you go to a medical facility or you seek healthcare… there is inherent risk just like flying.” |
| Purpose: drawback of public awareness | • “…it may even be counterproductive in the public eye if all they see is error after error…” |
| Purpose: not punitive | • “I think the accountability, however, should be on the side of what programs has your organization instituted to prevent the recognized error.” |
| Purpose: payor education | • “If a payor reimbursed for…health education services…it would be a case where they could help reimburse for what would amount to error reduction.” |

Participants agreed that the most important purpose of an error reporting system was overall system improvement. This included the potential for a system to guide the development of error prevention tactics and best practice recommendations. Similarly, participants envisioned a system that provided information to support the education of themselves, patients, administrators, and even entities external to health care. Reported data could be integrated into clinician training or curricula, used to educate patients about the risks of certain behaviors, used to help administrators build effective practice support systems, and used to give drug manufacturers solid information on weaknesses in their products. Information from error reports could also be communicated to patients to help them improve their own safety.

Participants also saw benefit in using the system for public assurance and awareness. Such use would acknowledge that errors occur in health care while presenting a balanced picture by informing the public of ongoing safety activities.

Of equal importance were the undesirable system purposes that participants identified. These included punishment, payors using the system (to manipulate participants), creation of performance standards (which encourage unfair comparisons), and establishment of accountability policies that could threaten clinicians' standing with licensing boards or other organizations.

3.2. Motivators (see Table 3)

Table 3.

Participant quotes about motivators related to a medical error reporting system

| Topic | Representative quotes |
|---|---|
| Motivators: feedback | • “I think there has to be… a high likelihood of getting some positive benefit out of it rather than just another place where forms are sent or reports are sent and then they just kind of vanish.” |
| | • “But I think the real plus to it would be if there would be some way to get instantaneous feedback on what you're reporting. Because I suspect that most errors that people would report have occurred before. And maybe somebody has the answer to that error problem and you could get it immediately while it's still in your head.” |
| Motivators: mandatory system | • “I almost think if you don't make it mandatory that you aren't going to get much information.” |
| | • “… are you going to take extra time out in an extremely busy day to say 'Oh, yeah, I get to go report this to a voluntary committee'? I don't think so.” |
| | • “There are too many things to do in a day that are…well, there are so many things to do in the day that some voluntary things, you'd almost have to have missionary zeal…” |
| Motivators: financial incentives | • “… I think it would taint it right away that we're not any longer doing this for professional pride or trying to fix a recognizable problem, that we have to be bought in order to do something, and so therefore we don't see the inherent value in it anyway.” |
| Motivators: other incentives | • “…perhaps by having reported an error early, you would get some sort of recompense or whatever from whatever malpractice action might occur or, you know, disciplinary action might occur within your facility.” |

Participants listed several system features that would motivate them to report to the system. One agreed-upon motivator was a feedback mechanism, particularly one that kept reporters informed of error occurrences at their facilities and of progress made. Participants suggested feedback in the form of quarterly or yearly summaries, perhaps highlighting the most frequently occurring types of errors. Other participants discussed the possibility of extracting process solutions from the system for addressing certain types of errors.

The clinical assistants felt that a mandatory system would provide motivation to participate, whereas voluntary reporting would take lower priority than other schedule demands. Additionally, participants raised the possibility that voluntary systems would lead to biased reporting (i.e. only those interested in change would report).

Financial incentives drew mixed reactions. The general sentiment was that error reporting was not an activity that one should have to be paid to do; in addition, financial incentives would make the system appear tainted, sending a negative message to the public. Moreover, participants felt financial incentives might bias the data, as they might encourage one set of participants while discouraging another, depending on the economic situation of the practice. Participants did, however, express a desire for benefits such as partial protection from quality assurance actions or malpractice suits.

3.3. Concerns and barriers (see Table 4)

Table 4.

Participant quotes about concerns or barriers related to a medical error reporting system

| Topic | Representative quotes |
|---|---|
| Concerns: ease of use | • “Ease of use so that we're not talking about adding 10 min more of paperwork per day to the schedule.” |
| Concerns: confidentiality | • “I don't trust confidentiality. It's breached too often.” |
| | • “I would have some concerns about its existence, meaning it was possibly up for grabs no matter what safeguards were conceived initially.” |
| Concerns: system abuse | • “I'm not sure how to present this in a way that it's not going to be used for legal reasons and that type of thing. And that, I think, is my biggest fear of all of this is that this would eventually come out and be able to be used against us.” |
| Barriers: length of report | • “Well, if you're going to have pages upon pages to fill out, you know, it's not going to get done.” |
| | • “My biggest concern would be that there seems to be more likely on the days when we're busiest, and I would be worried that any system that we'd come up with would be cumbersome and therefore just not used. You know, it would be fine to report on the slow day but they are less likely to occur on a slow day.” |
| Barriers: punishment | • “Why should they report anything, if there is going to be any retaliation or punitive things happening, I think you're going to have to lose that ability to do that if you want any incentive for people to report it. Because at this point, if there's anything punitive happening, why should anybody report it?” |
| Barriers: reporting near misses | • “Well, unless there was an adverse outcome, I don't think people are going to take the time to report.” |

Participants preferred a system that would fit well within their current work structure and would not require a lengthy reporting procedure. This was mainly because participants felt that errors were more likely to occur on busier days, making it critical that the time spent reporting errors be minimal. Participants were also looking for a “pluralistic” system in which they could choose the medium for submitting an error report (e.g. Internet, phone, paper). This preference reflected the diverse work environments of participants as well as their personal comfort with various reporting media. Whatever the medium, the key requirement was that it be consistently available, giving all individuals and organizations an equal opportunity to report. Beyond fit with individual work processes, there was also concern about fit with organizational processes. Participants noted that if an organization had to continuously devote resources to act upon the results of error reports, reporting could become burdensome and displace other quality improvement initiatives.

The degree of identification or confidentiality in the system was also a concern for participants. Uneasy about the potential for punishment, participants expressed interest in a “blinded” reporting system: one with optional identification fields, or one asking for generic reporter information that would not be easily identifiable. Closely related was the issue of system access; participants repeatedly asked, “Who would have access to (the information in) this system?” Concerns about access ranged from the medical licensing board (which could take punitive action) to the patients themselves.

Additionally, participants expressed concerns specific to reporting behavior. The issue of system integrity surfaced due to the possibility of different clinicians double or triple reporting the same event, or reporting unequally to the system. Participants were also concerned over having to report errors that resulted from external sources (e.g. patient behaviors).

The potential for the system to be abused also concerned participants. Beyond the possibility of the information being acquired for legal purposes, participants also feared the system would be abused by employees (co-worker retaliation, whistleblowers), employers (supervisors who did not like the employee), and/or the lay press. Lastly, participants (most notably the clinical assistants) listed organizational and institutional issues regarding reporting. These included the attitude of the administration (e.g. encouragement to report errors), administrative support following the report of an error, and management philosophy regarding error reporting. Clinical assistants mentioned the difficulty of reporting an error made by colleagues, especially physicians.

3.4. Content (see Table 5)

Table 5.

Participant quotes about the content of the medical error reporting system

| Topic | Representative quotes |
|---|---|
| Content: prevention factors | • “You know, as a practicing physician, to be given advice on how to avoid problems…that would be helpful to me. Or just say, ‘These are the things that led up to it or these are the things of how we averted it.’” |
| | • “… I wouldn't stifle somebody's suggestions including a fix or potential remedy or how the remedy did actually take place in that case.” |
| Content: patient and clinician identifiers | • “Well, my concern is that you could take that on a whole number of dimensions. You could take it to race and ethnicity, to age, to hair color practically… It's not that it's trivial but…my guess is that the number of times that would really help us understand what happened is relatively low.” |
| | • “Title and experience. I guess that would be the two biggest things in this area.” |
| | • “I think any identifying information would not be…appropriate. And you never know how somebody is going to figure out who somebody might be.” |
| Content: format of report form | • “There's an infinite number of events that could happen, but I like your idea of at least categorizing, sort of like a file folder…what happened…med error versus wrong side surgery…but then certainly leave plenty of space for those who are not either equipped for or…comfortable with the just check box, which…makes you feel like it's one size doesn't fit all.” |

Participants listed elements necessary for inclusion in the reporting medium and format preferences. Items in the reporting form included situational characteristics, such as time of day or on-call weekend (“I think the number of hours worked is very crucial…”), as well as specific analysis categories such as contributing factors, preventive factors, tools, mitigators, etc.

Much debate centered on the degree of identification in the system, particularly of the reporter or personnel involved. The primary concern was personal protection, but it was also noted that excessive information might lengthen the reporting procedure without adding anything to quality. The general (though not unanimous) agreement was that the situational context was the most important component of an error report, and that job title and experience level would be the most personally identifiable information that participants would feel comfortable reporting. Reportable patient information could include age and gender and, beyond that, only characteristics relevant to the case.

The preferred system format combined checkboxes and free-flow narrative sections. Checkboxes were suggested because they save time, and narrative sections were identified as appropriate for listing the facts of the occurrence (most likely as brief descriptions). Participants had several ideas for active reporting, including algorithms to guide users through the system and templates that would navigate the user through different error scenarios (e.g. medication error).

3.5. System design (see Table 6)

Table 6.

Participant quotes about system design issues related to a medical error reporting system

| Topic | Representative quotes |
|---|---|
| System design: implementation | • “I think I'd like to have a few of the bugs worked out of the system before we have them come on board with the data that could be used directly to affect contracting. If we had a few dry runs…” |
| | • “…is there a way that either a videotape presentation or even online, a little video clip type of thing… those kinds of little pre-presentations might set the stage for why this is important and kind of grab their attention to buy in.” |
| System design: identification issues | • “My sense is to make it more user friendly will mean less information and no chance of being identified would be about the first criteria to get people to even put their toe in the water.” |
| | • “I don't know if there would be different users groups…you'd say, ‘OK, here's the anonymous club and here's the confidential club and here's the, you know, daring de-identified group,’ you could literally migrate through different levels. Some people might feel they're totally comfortable with a de-identified approach and could sign on for that level of disclosure.” |
| System design: name of the system | • “If you use a word like occurrence, it doesn't denote I'm at fault. It's something that happened.” |
| System design: time to complete | • “… I think the longer the form, the more people get filtered out from doing lower level event reporting, which potentially might be the most helpful in terms of preventing the major events. 5 min was the number in my head as an absolute upper, and 2 would be the more desirable.” |
| System design: integration into existing systems | • “We already have a very elaborate incident report system that happens within my clinic system. And it would be nice if…the things that were reported there would automatically feed into your database.” |

Participants discussed issues such as the type of system, the processes involved, and implementation components. Discussions even included the suggestion of a database that would contain solutions to, or preventive measures for, occurrences rather than just the incidents or errors themselves.

The participants felt that an error reporting system would have to be introduced into the health care system gradually, with pilot tests to ensure its accuracy. Focus group discussions also proposed different means to ensure clinician buy-in, such as opportunities to communicate with current system users, endorsements (e.g. by professional organizations), or even a “salesman” approach (pens with the website URL, video clips).

Participants brought up the importance of an intermediary in the system. This might be “…somebody [who] should evaluate the situation besides yourself” (clinical assistants), an individual trained to conduct initial reviews of the data for comprehensiveness (physicians), an editor to filter out ineffective reports, or someone to fill out error reports on the reporter's behalf (e.g. from a verbal description left on a voice system).

Participants agreed that reporting time should not exceed 5 min and preferred around 2 min, which they felt was adequate to obtain pertinent information. Regarding when to report, some felt that reporting immediately after the occurrence would be most beneficial, while others were more comfortable reporting at their leisure. Lastly, linking the system to the internal reporting systems of clinicians' facilities would eliminate the time wasted reporting the same error to both.

3.6. Processing entity (see Table 7)

Table 7.

Participant quotes about the processing entity for the medical error reporting system

| Topic | Representative quotes |
|---|---|
| Processing entity: members | • “…once you get the state involved, then you start getting into politics.” |
| | • “I think it has to be peers. I mean if you're judging an LPN, there should be an LPN on there. If you're judging a social worker, there should be a social worker on there…You're going to have to have somebody that actually does the work and knows.” |
| Processing entity: authority | • “It seems like the only thing they should be empowered to do maybe is to make recommendations for improvement or training or…you know, not punishment…” |
| | • “…when you talk about enforcement issues…it gets difficult because I can think of incidents where it would have been nice if there were some agency that could have done something…” |

It was agreed that a non-governmental entity would be most desirable, due to the political and potentially punitive implications of involving the government. Diverse clinical representation was considered important in order to benefit from broad perspectives and because of the variety of clinicians involved in medical error. Additionally, participants felt that safety professionals would be necessary for data analysis and would foster credibility among the public. Another perceived benefit of outside representation was that it would eliminate the perception of internal error due to the similar thinking among clinicians.

Participants wanted this organization to collect information, make recommendations and/or improvements, or serve an advisory role. However, a group without the necessary power to act upon incidents might be futile.

3.7. Instructions (see Table 8)

Table 8.

Participant quotes about instructions for using the medical error reporting system

| Topic | Representative quotes |
|---|---|
| Instructions | • “I would think that we would say, this is for medical error reporting and even giving some case scenarios about what would be appropriate to report here versus what should really go to the licensing board or what should go to your…legal counsel within an HMO or a hospital or what the state medical society might need to hear about…” |
| | • “I think if I were going to introduce it to somebody else in my group, I would be looking at making it clear that they understood purpose, understood some fundamental definitions of errors and categorizations and had trust in the system in its, not only its value but its restrictions in use, the confidentiality issues, and that sort of thing.” |
| | • “We talked about the safety or the protections for the user, so you don't just walk into or feel like you're being led down the path where you're going to incriminate yourself.” |
| | • “… some of the limitations of it. And by acknowledging that up front at the time of making the report would make me more confident that as it's used, it's used in that light also.” |
| | • “It might be nice to have a variety of ways to offer it. You know, personally I like things I can do on my own in my own time. So, if I could pick something up and do something through the computer, maybe the first time I used it, to walk through some basic instructional things…” |

Participants felt that clear directives on when it was appropriate to use the system should be an integral part of training and instruction. For example, a “laundry list” of errors could clarify when reporting was necessary, though it would be difficult to develop. Participants also felt that instructions that explicitly state the goals, mechanics, limitations, and protections of the system would put users more at ease.

Proposals for mechanisms to facilitate use of the system included an expert on site for consultation, a component added to new employee orientation, help facilities or icons built into the actual system (if it were electronic), the availability of auto-tutorials, or perhaps even a toll-free phone number for a help desk.

4. Discussion

The purposes of this paper were to present barriers and motivators to the use of a statewide medical error reporting system and then to compare the results to existing theories. As Table 1 shows, the results provided ample information on barriers and motivators related to the purpose of the system, content, design, processing entity and instructions. The findings generally corroborate those of other studies (Barach and Small, 2000; Cohen, 2000; Crawford, et al., 2003; Johnson, 2002; Kaplan and Barach, 2002; Leape, 2002; Uribe, et al., 2002), though the current results provide more depth on each topic and uncovered differences between clinical assistants and physicians (for details, see Escoto, et al., 2004). Although this study and others have discussed the factors that encourage or discourage the use of error-reporting systems, these factors have never been framed in terms of the rich technology acceptance, adoption, and implementation literature that has developed over almost the past 30 years. This literature can provide a theoretical backbone for understanding the reasons behind the use, acceptance, and success of technologies such as medical error-reporting systems. Theory-driven discussion of error-reporting systems provides (1) a means of applying knowledge gained from prior work to guide and perhaps improve system design and implementation and (2) a principled way to combine technology design and implementation factors in predicting and modeling implementation outcomes.

Here, although we did not initially set out to frame our findings in such theory, we demonstrate how a theoretical framework could be built using three theories of technology acceptance, adoption, and implementation. We purposefully selected theories corresponding to three different levels of sociotechnical system fit and compared the study results with each. At the organizational adoption level we chose Innovation Diffusion Theory (IDT) (Moore and Benbasat, 1991; Rogers, 1995), at the system implementation level we chose Sociotechnical Systems Theory (STS) (Clegg, 2000; Hendrick and Kleiner, 2001; Hendrick and Kleiner, 2002; Pasmore, 1988), and at the end-user level we chose the Technology Acceptance Model (TAM) (Davis, 1989; Venkatesh and Davis, 2000). We acknowledge that numerous other technology change or acceptance theories could be used (for a review of such theories and how they relate to health care, see Karsh and Holden, 2006) and that theories of decision making or of human behavior and motivation may also be applicable. However, since a medical error reporting system must be adopted by organizations, implemented within organizations, and ultimately used by end users, we felt it would be most appropriate to target those three levels of sociotechnical fit. Tables 9–13 list selected categories of implementation and design factors and principles from the three theories, together with the corresponding focus group findings, showing how the study results fit the theories.

Table 9.

System usefulness: perceived usefulness (TAM); Relative advantage, results demonstrability (IDT); Information in the system and feedback should be useful (STS)

| Theory component | Study results |
|---|---|
| System purpose | System should improve patient care and provide education, information, and public awareness |
| Motivators | System should have feedback that facilitates patient care improvements |
| Barriers | System should not displace other quality improvement initiatives |
| Content | Content should have useful details, suggestions for improvement |
| System design | A moderator should extract useful reported information; option to report solutions may improve care |
| Processing entity | An experienced and diverse processing entity with (at least) the ability to make recommendations could lead to improvement |

Table 13.

Rewards and punishments: rewards should be aligned with policies and practices, costs and barriers should be minimized (STS)

| Theory component | Study results |
|---|---|
| System purpose | Purpose of system should not be punitive |
| Concerns | Identifying information (if any) should not facilitate punishment; system abuse should be prevented |
| Motivators | Incentives for reporting could motivate, but should not conflict with practice procedures or ethics |
| Barriers | System should not allow for retaliation or punitive consequences of reporting |
| System design | Preferred system would be anonymous or de-identified, to minimize possible punishment |
| Processing entity | Processing entity should not have any punitive power |

4.1. Technology Acceptance Model (TAM)

TAM (Davis, 1989; Davis, et al., 1989; Venkatesh and Davis, 2000) is concerned with individual users' acceptance of new technology, measured in terms of reported intention to use and subsequent technology usage. TAM offers parsimony and predictive power; the model's two technology-specific factors have repeatedly been shown to predict technology acceptance (see Venkatesh, 1999, for a review). The first, perceived usefulness, refers to the extent to which using a system is perceived to improve an individual's job performance. System usefulness was a critical matter for participants. Issues of usefulness centered on the fundamental purpose of the system, to improve patient care, and on the design and contents that could best achieve that purpose (Table 9). TAM's other predictor of technology acceptance is perceived ease of use, the extent to which the system is easy and effortless to operate. Participants repeatedly expressed the importance of a system that would be easy to use and, perhaps more importantly, one that would place minimal demands on time (Table 10). A time-efficiency component of ease of use may be particularly important in health care, where clinicians' time is at a premium (Temte and Beasley, 2001). Finally, an extended version of TAM (TAM2) adds subjective norms, the social pressure to use the system placed on potential users by important others such as co-workers and supervisors (Venkatesh and Davis, 2000). Fellow clinicians' opinions are of great importance (Walker and Lowe, 1998; Weingart, et al., 2001), and our results suggest that the opinions of colleagues, supervisors, the organization, and even those outside of health care may affect whether clinicians use an error-reporting system (Table 11).

Table 10.

Ease of use: perceived ease of use (TAM); Ease of use/complexity (IDT); System should be simple and easy to use (STS)

| Theory component | Study results |
|---|---|
| Concerns | System should be easy and quick to use, and should contribute minimally to extra workload |
| Barriers | Reporting process should not be lengthy, drawn-out, or burdensome for users or the organization |
| Content | Format should have facilitative, time-saving features (e.g., checkboxes, templates) |
| System design | Users should be able to report quickly, at their leisure, and through other, integrated systems |
| Instructions | Instructions should clarify system characteristics and how to use it |

Table 11.

Social factors: subjective norms (TAM2); Image, visibility, social factors (IDT); Social/cultural/political factors influence the system (STS)

| Theory component | Study results |
|---|---|
| Concerns | Error reporting is an ethical obligation and can serve as professional reprieve; trust in system is important |
| Barriers | Reporting may be contingent on the perceived importance of system or individuals' motivation |
| System design | Buy-in could be gained through endorsements from important professional organizations |

4.2. Innovation Diffusion Theory (IDT)

Whereas TAM applies to individual users' decisions to accept technology, IDT is more concerned with the aggregate process and time course of individual decisions that lead to organization-wide adoption of technological innovations (Moore and Benbasat, 1991; Rogers, 1995). Several components of IDT are similar or isomorphic to those of TAM: relative advantage refers to the extent to which the innovation improves upon the previous system, and results demonstrability refers to the observability of system benefits. Both are related to perceived usefulness in TAM (Table 9). Similarly, IDT, like TAM, includes an ease of use construct (Table 10), as well as constructs relating to social norms and pressures (Table 11): image refers to whether using the technology improves the user's image in the organization, and visibility refers to whether one sees others (e.g., co-workers) using the system. Social norms inherent to the organization's culture are also included in the model of innovation diffusion. Clinicians who report may encounter positive or negative social consequences. Participants suggested that reporting may reflect positively on one's ethics, or may be rewarding simply for the “value of confessions,” but that those who report often may paradoxically be thought of as unsafe.

Although it describes organization-wide diffusion of technology, IDT also considers the personalities and attitudes of individuals within the organization (Rogers, 1995).¹ This consideration is consistent with participants' comments about individual differences in motivation to report, and it is a useful reminder that the interaction between a technology and its user depends as much on the characteristics of that user as on the characteristics of the technology. An important determinant of technology diffusion in IDT is the degree of compatibility between the technology and the organization's practices, policies, and extant systems. We found that participants preferred a system that would fit their current practices (Table 12).

Table 12.

Flexible/compatible system design: compatibility (IDT); System should be flexible and compatible with policies, practices, other technology, etc. (STS)

| Theory component | Study results |
|---|---|
| System purpose | Reporting system could change current clinician–payor relationship |
| Concerns | System should not impose on busy schedules; multiple reporting mediums should be available |
| Motivators | Incentives for reporting could taint the professional pride associated with reporting |
| Barriers | System should not interfere with other (safety) initiatives |
| Content | Preferred format would be flexible (e.g., would allow narrative reporting) |
| System design | System terminology should be compatible with safety culture (i.e., would not encourage fault or blame); system should be linked with extant systems/databases |
| Instructions | Instructional information should be offered through a number of different media |

Finally, IDT includes two predictors of diffusion related to organizational structures and policies. Trialability, the degree to which potential users can freely try out various system functions, relates to the training policies and structures that the organization enacts. The second, the voluntariness of the system, was discussed by participants, who mentioned benefits and disadvantages of both voluntary and mandatory systems.

4.3. Sociotechnical Systems Theory (STS)

Rather than considering individual users, the organization, or the technology alone, STS takes a whole-systems approach to understanding technology design and implementation (Clegg, 2000; Hendrick and Kleiner, 2001; Pasmore, 1988). In other words, STS focuses on all system components and on how they interact with one another. Several STS principles apply to the present findings. In contrast to technology-driven design and implementation, STS advises careful consideration of the organization's social system, the culture inherent to this system, and the ways in which social factors influence user-technology interaction (Table 11). How the attitude of the administration affects one's comfort with reporting, the importance of communicating with colleagues before reporting, and clinical assistants' reluctance to report physicians are examples of social and cultural issues raised in the discussions. The design of the technology, however, is equally important in STS. Consistent with participants' opinions about the design of reporting technology, STS states that the system should include useful information and feedback (Table 9), should be simple and easy to use (Table 10), and should be flexible, adjustable, and compatible (Table 12). STS principles also concern the post-design stages of technology. One such principle is careful evaluation of the system through an iterative implementation process. As participants suggested, technology should be pilot tested, and any problems or bugs uncovered must be dealt with promptly. “A good system would have an ongoing collaborative and continual reevaluation redesign element to it,” offered one physician.

Again, STS warns against optimizing the social or technological subsystems alone. Instead, they must be jointly designed and optimized, and the resulting design should interact well with its environment. In the present case, the environment comprises patients and their families, payors, regulatory bodies, the general public, and the media. Participants addressed the environment in the form of payors' roles in the system, obligations to and concerns about regulatory agencies, and public and media awareness and attitudes. One physician stated that “…it may even be counterproductive in the public eye if all they see is error after error,” emphasizing both the importance of considering the environment in designing the system and the overlap between social, technological, and environmental factors.

Much of the discussion concerned potential aversive consequences of using an error reporting system, consistent with prior literature describing barriers to error reporting (Cullen, et al., 1995; Leape, 2002; Wakefield, et al., 1996; Walker and Lowe, 1998). An applicable STS principle is that barriers and costs to users must be reduced or removed. Clearly, participants were greatly concerned about the possibility of punitive actions (Table 13). Other discussion, however, concerned rewards and incentives. Although some rewards had appeal, participants disapproved of rewards that would challenge professional obligations or create uneven reporting. A relevant STS principle states that if rewards are used, they must be aligned with the goals and practices of the organization. Finally, given how eagerly participants took part in discussions of error-reporting system design and implementation processes, as well as their comments voicing a desire to participate in these processes, the STS principle of participatory design and implementation is highly applicable to the study's results.

5. Conclusions

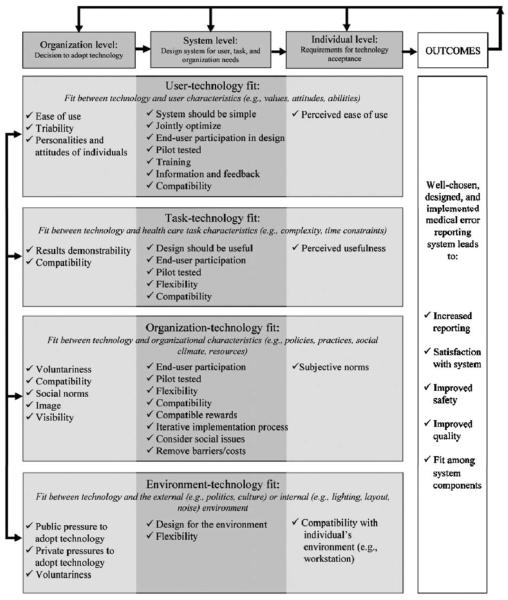

Not all of the results from the focus group discussions fit with factors or principles from the three theories (TAM, IDT, STS). Conversely, not all of the factors and principles themselves were represented in the discussions. None of the three theories alone could account for the many issues discussed. These observations may suggest that a more inclusive, detailed theory of technology implementation is needed. For example, only STS includes principles related to rewards and punishment, a recurring point of discussion; this might suggest that theories like IDT and TAM could benefit from the addition of factors related to the punishment of users. A more reasonable and practical conclusion, however, may be that multiple theories can and should be used to describe technology acceptance, adoption, and implementation. Our use of three theories yielded a putatively sound framework for the results of focus group discussions on error reporting system design and implementation. Notably, the three theories we used address three different levels of analysis: the individual user level (TAM), the organization level (IDT), and the whole-system level (STS). A multi-level approach may help create a more comprehensive theoretical framework for understanding findings related to technology design and implementation (see Fig. 1). However, a theoretical understanding does not require the use of such theories and may additionally (or alternatively) rely on theories of human behavior, motivation, and cognition.

Fig. 1.

Multilevel systems model of technology design and implementation.

Fig. 1 shows three levels of design goals across the top. At the organizational level, a technology such as a medical error reporting system (or, for that matter, any information technology) must be designed to fit within the parameters of the organization if the organization is to adopt it in the first place, as IDT suggests. At the system level, the technology must be designed to integrate with existing systems, as STS indicates. Finally, at the individual level, design considerations relate to facilitating end-user acceptance of the technology (based on TAM). At each of the three levels of design, four types of fit need to be addressed: user-technology fit, task-technology fit, organization-technology fit, and environment-technology fit. The text in each cell of the figure represents considerations from the three incorporated theories. There may well be many more considerations, but these are drawn directly from the theories of current interest.

At the far right of the figure are the outcomes that might result from good design at each level, namely information technology satisfaction, improved safety, and improved quality. In the case of a medical error reporting system, the outcomes would also include increased reporting and feedback. At the top of the figure, the arrows show that (a) decisions at each level and (b) outcome success or failure feed back to influence design choices at the organization, system, and individual levels. The figure intentionally excludes a time dimension. While organization-level decisions to adopt technology typically precede system- and individual-level concerns, this is not always the case (e.g., modifications made to existing technology to improve end-user acceptance in a particular unit may prompt the same modifications organization-wide). Also, many of the variables shown at only the organization, system, or individual level do, in fact, exist at multiple levels. For example, the extent to which a technology is voluntary is relevant at the organization level at the time of adoption, but is also a consideration for the individual user after the organization adopts it. However, our placement of the variables within specific levels is consistent with the way in which the theories are applied.

As previously stated, research addressing the advantages and disadvantages of error-reporting system factors has been mainly atheoretical. For the reasons given above, we submit that a more theoretical approach is preferable. Technology implementation theories, which have been only sparsely applied to health care technology implementation and design (e.g., Berg, 1999; Berg, et al., 1998; Chau and Hu, 2001; Gagnon, et al., 2003; Hu, et al., 1999; Hu, et al., 2002; Stricklin and Struk, 2003), can be used to frame empirical findings, as we have demonstrated. We recommend that future error-reporting system research, and health care technology research in general, use multiple theories to guide the formation of hypotheses and the interpretation of results.

Acknowledgments

This research was supported by a grant from the University of Wisconsin-Madison Industrial and Economic Development Research Fund (University-Industry Relations), with in-kind contributions from members of the Wisconsin Academy of Family Physicians and its research arm at that time, the Wisconsin Research Network, and from the clinical assistants of these physicians. The authors wish to thank Mary Stone for her valuable contributions toward convening focus group meetings and transcribing audiotaped sessions. We also thank Samuel Alper and Calvin Or for their insight into the model and editorial assistance.

Footnotes

¹ The original version of TAM also modeled user attitudes toward technology, though the most recent versions exclude this construct (Venkatesh et al., 2003).

References

- Barach P, Small SD. Reporting and preventing medical mishaps: lessons from non-medical near miss reporting systems. Br. Med. J. 2000;320:759–763. doi: 10.1136/bmj.320.7237.759.

- Berg M. Patient care information systems and health care work: a sociotechnical approach. Int. J. Med. Inf. 1999;55:87–101. doi: 10.1016/s1386-5056(99)00011-8.

- Berg M, Langenberg C, Berg Ignas v., Kwakkernaat J. Considerations for sociotechnical design: experiences with an electronic patient record in a clinical context. Int. J. Med. Inf. 1998;52:1–3. doi: 10.1016/s1386-5056(98)00143-9.

- Brennan TA, Leape LL, Laird NM, Hebert L, Localio AR, Lawthers AG, Newhouse JP, Weiler PC, Hiatt HH. Incidence of adverse events and negligence in hospitalized patients: results of the Harvard Medical Practice Study I. N. Engl. J. Med. 1991;324:370–376. doi: 10.1056/NEJM199102073240604.

- Chau PYK, Hu PJH. Information technology acceptance by individual professionals: a model comparison approach. Decision Sci. 2001;32:699–719.

- Clegg CW. Sociotechnical principles for system design. Appl. Ergon. 2000;31:463–477. doi: 10.1016/s0003-6870(00)00009-0.

- Cohen MR. Why error reporting systems should be voluntary. Br. Med. J. 2000;320:728–729. doi: 10.1136/bmj.320.7237.728.

- Crawford SY, Cohen MR, Tafesse E. Systems factors in the reporting of serious medication errors in hospitals. J. Med. Syst. 2003;27:543–551. doi: 10.1023/a:1025985832133.

- Cullen DJ, Bates DW, Small SD, Cooper JB, Nemeskal AR, Leape LL. The incident reporting system does not detect adverse drug events: a problem for quality improvement. Joint Comm. J. Qual. Improvement. 1995;21:541–548. doi: 10.1016/s1070-3241(16)30180-8.

- Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989;13:319–340.

- Davis FD, Bagozzi RP, Warshaw PR. User acceptance of computer technology: a comparison of two theoretical models. Manage. Sci. 1989;35:982–1003.

- Escoto K, Karsh B, Beasley J. Multiple user considerations and their implications in medical error reporting system design. Hum. Factors. 2004. doi: 10.1518/001872006776412207.

- Gagnon MP, Godin G, Gagne C, Fortin JP, Lamothe L, Reinharz D, Cloutier A. An adaptation of the theory of interpersonal behaviour to the study of telemedicine adoption by physicians. Int. J. Med. Inf. 2003;71:103–115. doi: 10.1016/s1386-5056(03)00094-7.

- Hendrick H, Kleiner B. Macroergonomics: An Introduction to Work System Design. Human Factors and Ergonomics Society; Santa Monica: 2001.

- Hendrick HW, Kleiner BM. Macroergonomics: Theory, Methods and Applications. Lawrence Erlbaum Associates; Mahwah, NJ: 2002.

- Hu PJ, Chau PYK, Sheng ORL, Tam KY. Examining the technology acceptance model using physician acceptance of telemedicine technology. J. Manage. Inf. Syst. 1999;16:91–112.

- Hu PJH, Chau PYK, Sheng ORL. Adoption of telemedicine technology by health care organizations: an exploratory study. J. Organ. Comput. Electron. Commerce. 2002;12:197–221.

- Institute of Medicine. Patient Safety: Achieving a New Standard for Care. National Academies Press; Washington DC: 2004.

- Johnson C. Reasons for the failure of incident reporting in the healthcare and rail industries. In: Redmill F, Anderson T, editors. Components of System Safety: Proceedings of the 10th Safety-Critical Systems Symposium. Springer, Berlin; 2002. pp. 31–60.

- Kaplan HS. Benefiting from the “gift of failure”: essentials for an event reporting system. J. Legal Med. 2003;24:29–35. doi: 10.1080/713832121.

- Kaplan H, Barach P. Incident reporting: science or proto-science? Ten years later. Qual. Safety Health Care. 2002;11:144–145. doi: 10.1136/qhc.11.2.144.

- Kaplan HS, Fastman BR. Organization of event reporting data for sense making and system improvement. Qual. Safety Health Care. 2003;12:ii68–ii72. doi: 10.1136/qhc.12.suppl_2.ii68.

- Karsh B, Holden RJ. New technology implementation in health care. In: Carayon P, editor. Handbook of Human Factors and Ergonomics in Patient Safety. 2006. In press.

- Kohn LT, Corrigan JM, Donaldson MS. To Err is Human: Building a Safer Health System. National Academy Press; Washington DC: 2000.

- Layde PM, Cortes LM, Teret SP, Brasel KJ, Kuhn EM, Mercy JA, Hargarten SW, Maas LA. Patient safety efforts should focus on medical injuries. J. Am. Med. Assoc. 2002;287:1993–1997. doi: 10.1001/jama.287.15.1993.

- Leape LL. Reporting of adverse events. N. Engl. J. Med. 2002;347:1633–1638. doi: 10.1056/NEJMNEJMhpr011493.

- Leape LL, Brennan TA, Laird N, Lawthers AG, Localio AR, Barnes BA, Hebert L, Newhouse JP, Weiler PC, Hiatt H. The nature of adverse events in hospitalized patients: results of the Harvard Medical Practice Study II. N. Engl. J. Med. 1991;324:377–384. doi: 10.1056/NEJM199102073240605.

- McNutt RA, Abrams R, Aron DC. Patient safety efforts should focus on medical errors. J. Am. Med. Assoc. 2002;287:1997–2001. doi: 10.1001/jama.287.15.1997.

- Moore GC, Benbasat I. Development of an instrument to measure the perceptions of adopting an information technology innovation. Inf. Syst. Res. 1991;2:192–222.

- Pace WD, Staton EW, Higgins GS, Main DS, West DR, Harris DM, ASIPS Collaborative. Database design to ensure anonymous study of medical errors: a report from the ASIPS Collaborative. J. Am. Med. Inf. Assoc. 2003;10:531–540. doi: 10.1197/jamia.M1339.

- Pasmore W. Designing Effective Organizations: The Socio-technical Systems Perspective. Wiley; New York: 1988.

- Rogers EM. Diffusion of Innovations. The Free Press; New York: 1995.

- Stricklin MLV, Struk CM. Point of care technology: a sociotechnical approach to home health implementation. Methods Inf. Med. 2003;42:463–470.

- Suresh G, Horbar JD, Plsek P, Gray J, Edwards WH, Shiono PH, Ursprung R, Nickerson J, Lucey JF, Goldmann D. Voluntary anonymous reporting of medical errors for neonatal intensive care. Pediatrics. 2004;113:1609–1618. doi: 10.1542/peds.113.6.1609.

- Temte JL, Beasley JW. Rate of case reporting, physician compliance, and practice volume in a practice-based research network study. J. Fam. Pract. 2001;50:977.

- Uribe CL, Schweikhart SB, Pathak DS, Dow M, Marsh GB. Perceived barriers to medical-error reporting: an exploratory investigation. J. Healthcare Manage. 2002;47:263–279.

- Venkatesh V. Creation of favorable user perceptions: exploring the role of intrinsic motivation. MIS Q. 1999;23:239–260.

- Venkatesh V, Davis FD. A theoretical extension of the technology acceptance model: four longitudinal field studies. Manage. Sci. 2000;46:186–204.

- Venkatesh V, Morris MG, Davis GB, Davis FD. User acceptance of information technology: toward a unified view. MIS Q. 2003;27:425–478.

- Wakefield DS, Wakefield BJ, Uden-Holman T, Blegen MA. Perceived barriers in reporting medication administration errors. Best Pract. Benchmarking Healthcare. 1996;1:191–197.

- Walker SB, Lowe MJ. Nurses' views on reporting medication incidents. Int. J. Nurs. Pract. 1998;4:97–102. doi: 10.1046/j.1440-172x.1998.00058.x.

- Weingart SN, Callanan LD, Ship AN, Aronson MD. A physician-based voluntary reporting system for adverse events and medical errors. J. Gen. Internal Med. 2001;16:809–814. doi: 10.1111/j.1525-1497.2001.10231.x.