Abstract

Functional magnetic resonance imaging (fMRI) studies have relied on multivariate analysis methods to decode visual motion direction from measurements of cortical activity. Above-chance decoding has been commonly used to infer the motion-selective response properties of the underlying neural populations. Moreover, patterns of reliable response biases across voxels that underlie decoding have been interpreted to reflect maps of functional architecture. Using fMRI, we identified a direction-selective response bias in human visual cortex that (1) predicted motion-decoding accuracy; (2) depended on the shape of the stimulus aperture rather than the absolute direction of motion, such that response amplitudes gradually decreased with distance from the stimulus aperture edge corresponding to motion origin; and (3) was present in V1, V2, and V3 but was not evident in MT+, explaining the higher motion-decoding accuracies reported previously in early visual cortex. These results demonstrate that fMRI-based motion decoding has little or no dependence on the underlying functional organization of motion selectivity.

Keywords: coarse-scale bias, direction preference, fMRI, motion decoding, multivariate classification, visual cortex

Introduction

Multivariate pattern analysis has been used to read out or “decode” the direction of motion of a visual stimulus from the spatially distributed pattern of voxel responses (Kamitani and Tong, 2006; Serences and Boynton, 2007; Apthorp et al., 2013; Beckett et al., 2012). One interpretation of these results is that the direction preferences in functional magnetic resonance imaging (fMRI) measurements arise from random spatial irregularities in the fine-scale columnar architecture (Boynton, 2005; Haynes and Rees, 2005; Kamitani and Tong, 2005; Op de Beeck et al., 2008). According to this account, fMRI motion decoding goes hand in hand with a direction-selective columnar organization.

It is widely agreed that human primary visual cortex (V1) is analogous to monkey V1 and that human visual cortical area MT+ is analogous to monkey areas MT and MST. Activity in human MT+, measured with fMRI, is greater for moving than static stimuli (Watson et al., 1993; Tootell et al., 1995; Huk et al., 2002). MT+ activity increases monotonically with motion coherence (Rees et al., 2000) and exhibits direction-selective adaptation (Huk et al., 2001), pattern-motion selectivity (Huk and Heeger, 2002), and motion opponency (Heeger et al., 1999). In contrast, human V1 shows weak direction-selective adaptation (Huk and Heeger, 2002; Huk et al., 2001) and little motion opponency (Heeger et al., 1999).

Motion-decoding accuracies have, however, been found to be higher and more robust in V1 than in MT+ (Kamitani and Tong, 2006; Serences and Boynton, 2007; Beckett et al., 2012), which is surprising given the physiology and functional organization of monkey MT and V1. Monkey MT contains a large proportion of direction-selective neurons (>∼85%; Maunsell and Van Essen, 1983; Albright et al., 1984) organized in columns (∼500 μm; Albright et al., 1984), whereas monkey V1 contains relatively few direction-selective neurons (∼20%–30%; De Valois et al., 1982; Orban et al., 1986) and no direction-selective columns (Lu et al., 2010).

Here, we report that motion decoding depends strongly on a systematic, coarse-scale organization of direction preferences in human visual cortex, in which adjacent voxels share similar direction preferences. We used fMRI to measure the spatial pattern of cortical activity evoked by coherently translating random-dot stimuli. We observed an “aperture-inward” response bias for motion that predicted motion-decoding accuracy. The response bias was evident in V1, V2, and V3 but not in MT+, and it depended on the stimulus aperture rather than on the absolute motion direction. Specifically, responses were largest at the edge of the stimulus aperture corresponding to motion origin. This pattern is consistent with previously reported response differences between the leading and trailing edges of a motion stimulus (Whitney et al., 2003; Liu et al., 2006), and it induces correlated direction preferences across large patches of cortex. We conclude that the aperture-inward representation of motion in early visual cortex and the accuracy of motion decoding have little or no dependence on the direction-selective columnar organization in cortex.

Materials and Methods

Observers.

Five observers (two females, age 24–32 years) with normal or corrected-to-normal vision participated in the study. One observer (O1) was an author. Observers provided written informed consent. The experimental protocol was in compliance with the safety guidelines for MRI research and was approved by the University Committee on Activities Involving Human Subjects at New York University.

Each observer participated in multiple scanning sessions for several experiments. We refer to the experiments by the shape of the stimulus aperture because manipulating aperture shape was critical for the interpretation of the results. Four observers (O1–O4) each participated in one session of the “large annulus” experiment. All five observers participated in two sessions for the “two circles” experiment. One observer (O5) participated in a third session of the two circles experiment. Three observers (O1–O3) participated in one session of the “two strips” experiment. In addition, all five observers participated in one session to obtain a set of high-resolution anatomical volumes and one session for retinotopic mapping.

Stimuli.

Stimuli were generated using MATLAB (MathWorks) and MGL (http://gru.brain.riken.jp/doku.php/mgl/overview) on a Macintosh computer. Stimuli were displayed via an LCD projector (LC-XG250, Eiki; resolution: 1024 × 768 pixels; refresh rate: 60 Hz) onto a back-projection screen in the bore of the magnet. Observers viewed the display through an angled mirror at a viewing distance of ∼58 cm (field of view: 31.6° × 23.7°). The display was calibrated and gamma corrected using a linearized lookup table.

Visual stimuli were moving random-dot patterns (luminance: 583 cd/m²; dot diameter: 0.1°; density: 3 dots/deg²; speed: 5°/s) presented on a uniform black background. Dots had a lifetime of 200 ms and were regenerated randomly on the screen at the end of their lifetime. Dots that moved outside of the stimulus aperture reappeared on the opposite side of the aperture.
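At the 60 Hz refresh rate, these parameters translate into per-frame quantities: a speed of 5°/s is a displacement of 5/60° per frame, and a 200 ms lifetime is 12 frames. The Python sketch below illustrates one frame update under stated simplifications: dots live in a hypothetical square field with toroidal wrapping (standing in for the actual aperture shapes), initial ages are staggered at random, and the function names are illustrative rather than taken from MGL.

```python
import numpy as np

# Parameters from the text; the square field is an assumption of this sketch.
REFRESH_HZ = 60
SPEED_DEG_PER_S = 5.0
LIFETIME_S = 0.2
DENSITY_PER_DEG2 = 3.0

STEP_DEG = SPEED_DEG_PER_S / REFRESH_HZ           # displacement per frame (deg)
LIFETIME_FRAMES = round(LIFETIME_S * REFRESH_HZ)  # 12 frames


def init_dots(field_deg, rng):
    """Place dots uniformly in a hypothetical square field [0, field_deg)^2."""
    n = int(round(DENSITY_PER_DEG2 * field_deg ** 2))
    xy = rng.uniform(0, field_deg, size=(n, 2))
    # Stagger ages so that dots do not all expire on the same frame.
    age = rng.integers(0, LIFETIME_FRAMES, size=n)
    return xy, age


def step_dots(xy, age, direction_rad, field_deg, rng):
    """Advance all dots by one frame of coherent motion."""
    xy = xy + STEP_DEG * np.array([np.cos(direction_rad), np.sin(direction_rad)])
    # Dots leaving the field reappear on the opposite side (toroidal wrap).
    xy = np.mod(xy, field_deg)
    age = age + 1
    # Dots at the end of their lifetime are regenerated at random positions.
    expired = age >= LIFETIME_FRAMES
    xy[expired] = rng.uniform(0, field_deg, size=(int(expired.sum()), 2))
    age[expired] = 0
    return xy, age
```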

Large annulus experiment.

Coherently translating dots were presented within a large annular aperture centered on fixation (inner radius: 2° eccentricity; outer radius: 11°; Fig. 1A). The inner and outer edges of the annulus were tapered with a 2° raised cosine transition. The cosine transitions were centered on the inner and outer edges of the annulus, such that dots near the inner edge were 0% contrast at 1° eccentricity and 100% contrast at 3°, and that dots near the outer edge were 100% contrast at 10° and 0% contrast at 12°. During each 3 s period, the dots moved in one of eight directions (evenly spaced between 0° and 360°). The direction of the dots changed by 45° every 3 s, either counterclockwise or clockwise, taking 24 s to complete a cycle. The stimulus completed 10.5 cycles in each run. O1 completed 18 runs; O2–O4 each completed 14 runs. The runs alternated between counterclockwise and clockwise cycling directions, each of which accounted for half of the runs.
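The tapered annulus can be written as a dot-contrast profile over eccentricity. A minimal sketch, assuming each 2° transition is the half-period raised cosine 0.5(1 − cos πt) centered on the edge; the helper names are hypothetical. With these settings, contrast is 0 at 1°, 0.5 at 2°, 1 from 3° to 10°, 0.5 at 11°, and 0 at 12°, matching the values given above.

```python
import numpy as np


def raised_cosine_ramp(x, start, stop):
    """0 at `start`, 1 at `stop`, raised cosine in between (start < stop)."""
    t = np.clip((np.asarray(x, dtype=float) - start) / (stop - start), 0.0, 1.0)
    return 0.5 * (1.0 - np.cos(np.pi * t))


def annulus_contrast(ecc_deg, inner=2.0, outer=11.0, width=2.0):
    """Dot contrast (0 to 1) for the large annulus as a function of eccentricity."""
    half = width / 2.0
    # Ramp up across the inner edge and down across the outer edge.
    rise = raised_cosine_ramp(ecc_deg, inner - half, inner + half)
    fall = 1.0 - raised_cosine_ramp(ecc_deg, outer - half, outer + half)
    return rise * fall
```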

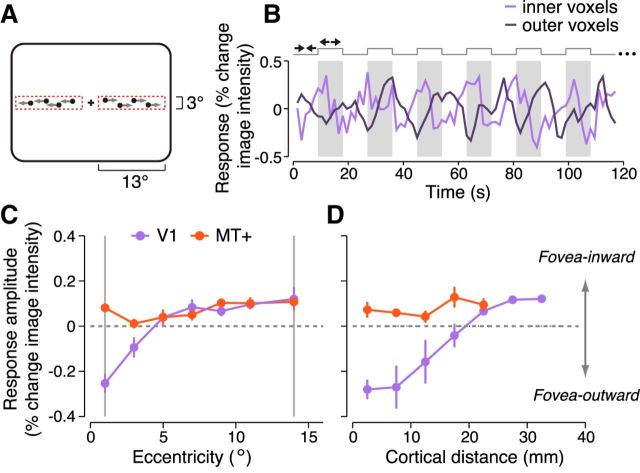

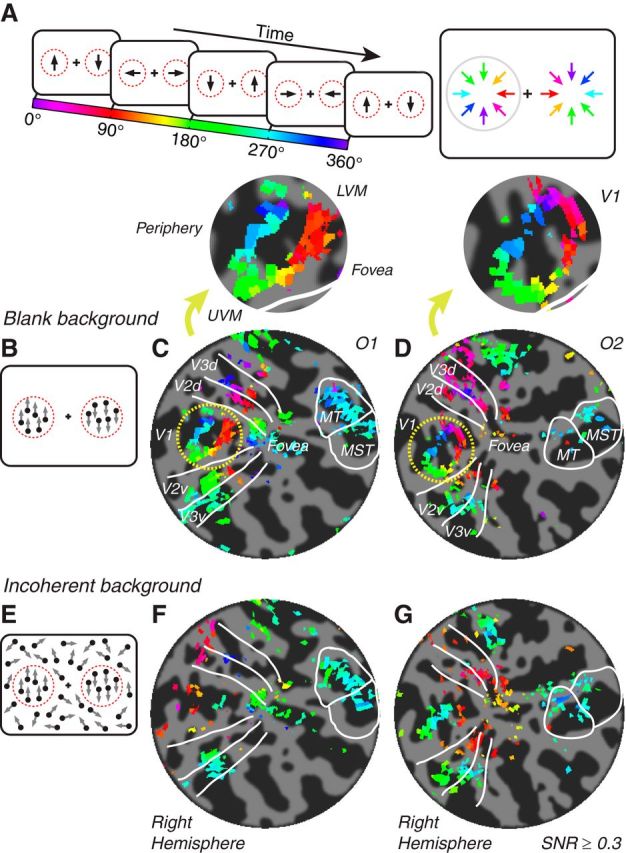

Figure 1.

Stimulus and direction preference maps for the large annulus experiment. A, The stimulus cycled through eight motion directions in 24 s (every other direction shown here). The actual stimulus contained coherent white dots on black background. Dotted red circles mark the aperture in which dots were presented; they did not appear in the actual stimulus. B, C, Maps of motion direction preference displayed on flattened representations of occipital lobes of two example observers (O1, B; O2, C). Dark gray indicates sulci; light gray, gyri. Flat patches have approximate radii of 65–85 mm. Color indicates the phase of each voxel's fMRI response time series, which corresponds to the voxel's motion direction preference (see color bar and color wheel in A). White contours indicate boundaries between visual cortical areas. Inset, Inflated hemisphere from one observer indicating the region of cortex shown in the flat map. D, E, Motion-decoding accuracy as a function of the number of voxels (randomly selected from each visual area; Observer O1, D; Observer O2, E). GM indicates gray matter. Error bars indicate 95% confidence intervals (see Materials and Methods, “Decoding motion direction”). Horizontal gray lines indicate theoretical chance performance (12.5%). F, Decoding accuracies averaged across observers (n = 4) shown separately for each visual area. Error bars indicate SEM across observers. Thick and thin horizontal gray lines indicate, respectively, the mean and the 95th percentile of the null distribution of decoding accuracies (Bonferroni corrected for five ROIs).

Two circles experiment.

The stimuli contained two coherently translating dot fields to the left and right of fixation (Fig. 2A). Each dot field was presented within a circular aperture centered at 8° eccentricity. The radial edge of each aperture was tapered with a 3° raised cosine transition (2.5–5.5° from the aperture centers). In the “blank background” condition, dots were presented against a black background, as in the large annulus experiment (Fig. 2B). At the edge of each circular aperture, the contrast of dots transitioned from 0% (black background) at 5.5° radius to 100% at 2.5° radius. In the “incoherent background” condition, coherent dots within the apertures were surrounded by a larger incoherent dot field that filled the rest of the screen (Fig. 2E). Motion coherence is the probability that a dot would be assigned as a “signal” dot, which moved in a designated direction. A dot that was not a signal dot was a “noise” dot, which moved in a random-walk manner; its location was displaced in a random direction at each screen update (60 Hz). At 100% motion coherence, all dots were signal dots that moved coherently; at <100% motion coherence, only a fraction of the dots moved coherently. Dots were reassigned as signal or noise randomly at each screen update. The edge of each circular aperture was tapered by varying motion coherence (rather than contrast) such that dots within each aperture moved with 100% motion coherence (all signal dots) and dots outside of the apertures moved with 0% motion coherence (all noise dots). At aperture edges (2.5–5.5° from the aperture centers), dots transitioned from 100% to 0% motion coherence.
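The per-frame signal/noise assignment with a coherence-tapered edge can be sketched as follows. This is an illustration under assumptions, not the stimulus code: coherence falls from 1 to 0 along a raised cosine between 2.5° and 5.5° from the aperture center, noise dots step the same distance as signal dots in a uniformly random direction, and positions are in degrees of visual angle.

```python
import numpy as np


def coherence_profile(r_deg, inner=2.5, outer=5.5):
    """Motion coherence vs. distance from the aperture center (1 inside, 0 outside)."""
    t = np.clip((np.asarray(r_deg, dtype=float) - inner) / (outer - inner), 0.0, 1.0)
    return 0.5 * (1.0 + np.cos(np.pi * t))


def frame_displacements(xy, center, direction_rad, step_deg, rng):
    """One screen update: each dot is independently re-drawn as signal or noise."""
    r = np.hypot(xy[:, 0] - center[0], xy[:, 1] - center[1])
    # Probability of being a signal dot equals the local motion coherence.
    is_signal = rng.random(len(xy)) < coherence_profile(r)
    # Signal dots move in the designated direction; noise dots in a random one.
    random_dirs = rng.uniform(0.0, 2.0 * np.pi, len(xy))
    angles = np.where(is_signal, direction_rad, random_dirs)
    disp = step_deg * np.column_stack([np.cos(angles), np.sin(angles)])
    return disp, is_signal
```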

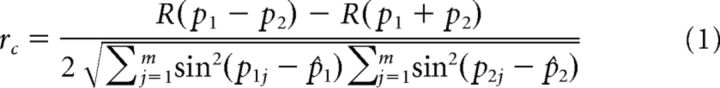

Figure 2.

Stimulus and direction preference maps for the two circles experiment. A, The stimulus cycled through eight evenly spaced motion directions in 24 s (every other direction shown here). Dots in the left and right apertures moved in opposite directions. B, Blank background stimulus. C, D, Maps of motion direction preference for the blank background stimulus for two example observers (O1, C; O2, D). Flat patches have approximate radii of 65–85 mm. Color indicates the phase of each voxel's fMRI response time series, which corresponds to the voxel's motion direction preference (see color bar and color wheel in A). Insets, Magnified view of V1 response phases. Adjacent text indicates the visual field orientation of the patch: UVM, upper vertical meridian; LVM, lower vertical meridian. Only right hemispheres are shown here, corresponding to the left visual hemifield (direction preferences in gray circle of A). Left hemispheres were similar. E, Incoherent background stimulus. F, G, Maps of motion direction preference for the incoherent background stimulus. Same format and same two observers as C and D.

During each 3 s period, dots within each aperture moved in one of eight directions (evenly spaced between 0° and 360°), but the directions in the left and right apertures were always opposite to one another. Out-of-phase motion on opposite sides of fixation helped to minimize the possibility that observers made systematic fixation errors (e.g., ocular tracking in the direction of motion; see “Eye movement measurements”). The directions in both apertures changed clockwise or counterclockwise, taking 24 s to complete a cycle. Each run contained 11 cycles. Runs alternated between counterclockwise and clockwise and between blank and incoherent backgrounds. O1–O4 completed two sessions, each consisting of 12 runs, yielding a total of 12 blank background runs and 12 incoherent background runs. O5 completed a third session consisting of eight runs, yielding a total of 16 runs per background condition.

Two strips experiment.

Coherently moving dots were presented within two sharp-edged rectangular apertures to the left and right of fixation (see Fig. 7A). Each rectangular aperture extended from 1° to 14° eccentricity along the horizontal meridian. Aperture height was 3°, from 1.5° below to 1.5° above the horizontal meridian.

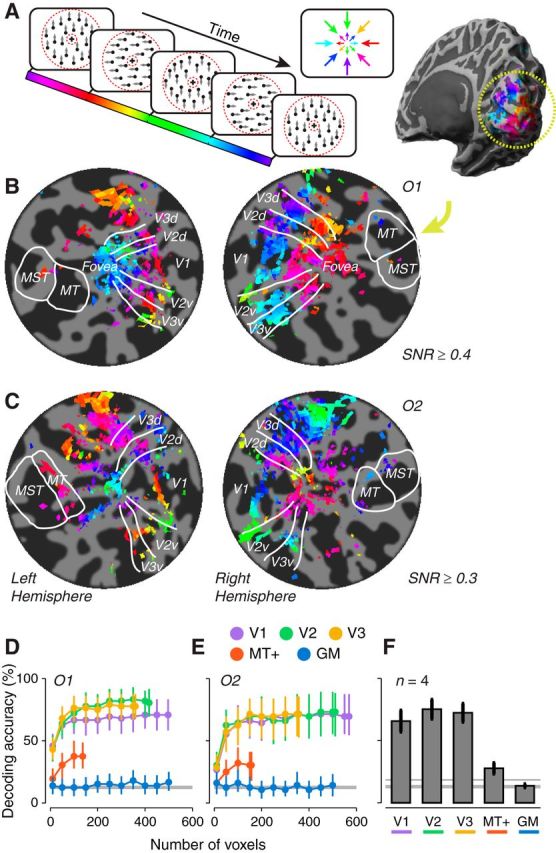

Figure 7.

Two strips experiment. A, Stimulus alternated between fovea-inward and fovea-outward motion directions (9 s each) in two narrow rectangular apertures to the left and right of fixation. B, Response time course in V1 for an example observer. Light purple curve is the mean response across voxels near the inner edge of the stimulus (1–2° eccentricity; “inner voxels”). Dark purple curve indicates voxels near the outer edge of the stimulus (10–14° eccentricity; “outer voxels”). The time course was averaged across runs for that observer and is shown for 6.5 cycles of stimulus alternation (half the duration of a run). C, Response amplitude as a function of horizontal eccentricity, computed by projecting the voxel time series onto a unit-norm sinusoid of a fixed phase (see Materials and Methods, “Response amplitude as a function of distance”). Positive values indicate a preference for fovea-inward motion. Negative values indicate a preference for fovea-outward motion. Purple curve is V1. Red curve is MT+. Circles and error bars indicate mean and SEM across observers, respectively (n = 3). Gray vertical lines indicate the inner and outer edges of the rectangular stimulus apertures along the horizontal meridian. D, Response amplitude as a function of cortical distance from the fovea. Same format as C.

Dots within each aperture translated either leftward or rightward, toward or away from fixation. Within each run, the motion alternated every 9 s (18 s cycles) between inward (dots in the right aperture moved leftward and dots in the left aperture moved rightward) and outward (the reverse). Each run contained 14 cycles. O1–O3 each completed one session consisting of 12 runs.

Behavioral task.

Throughout each run, observers continuously performed a demanding two-interval, forced-choice task to maintain a consistent behavioral state and stable fixation, and to divert attention from the main experimental stimuli. In each trial of the task, the fixation cross (a 0.4° crosshair) dimmed twice (for 400 ms at a time) and the observer indicated with a button press the interval (1 or 2) in which it was darker. The observer had 1 s to respond and received feedback through a change in the color of the fixation cross (correct = green; incorrect = red). Each contrast decrement and response period was preceded by an 800 ms interval such that each trial lasted 4.2 s. The fixation task was out of phase with the main experimental stimulus presentation. Contrast decrements were presented using an adaptive, 1-up-2-down staircase procedure (Levitt, 1971) to maintain performance at ∼70% correct.
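A 1-up-2-down rule makes the task harder after two consecutive correct responses and easier after any error, so it converges where p² = 0.5, that is, p ≈ 70.7% correct (Levitt, 1971). A minimal sketch, assuming a fixed additive step size and that the tracked level is the difference in dimming between the two intervals; neither detail is specified above, and the class name is hypothetical.

```python
class OneUpTwoDownStaircase:
    """Level goes down after 2 consecutive correct responses, up after 1 error."""

    def __init__(self, start, step, floor=0.0, ceiling=1.0):
        self.level = start        # e.g., dimming difference between the intervals
        self.step = step
        self.floor = floor
        self.ceiling = ceiling
        self._n_correct = 0       # consecutive correct responses at this level

    def update(self, correct):
        """Record one trial and return the level for the next trial."""
        if correct:
            self._n_correct += 1
            if self._n_correct == 2:      # two in a row: make the task harder
                self.level -= self.step
                self._n_correct = 0
        else:                             # any error: make the task easier
            self.level += self.step
            self._n_correct = 0
        self.level = min(max(self.level, self.floor), self.ceiling)
        return self.level
```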

Motion direction preferences.

Motion direction preferences were computed from the average response time series across runs (after time-reversing and shifting the clockwise runs to match the sequence of the counterclockwise runs; see “fMRI time series preprocessing”). The average time series of each voxel was fit with a sinusoid with period matched to the period of the stimulus cycle (24 s for the large annulus and two circles experiments; 18 s for the two strips experiment). The phase of the best-fitting sinusoid indicated the motion direction preference of the voxel. For each voxel, the signal-to-noise ratio (SNR) of the responses was quantified as the correlation between the best-fitting sinusoid and the time series (Engel et al., 1997). This quantity is typically referred to as “coherence,” but we designate it here as “SNR” to distinguish it from motion coherence.
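When a time series spans an integer number of stimulus cycles, the best-fitting sinusoid at the stimulus frequency is determined by a single Fourier coefficient: its angle gives the response phase, and the correlation between the reconstructed sinusoid and the time series gives the SNR (coherence). A sketch under the assumption that the time series has already been averaged and aligned; the mapping from phase to a particular motion direction depends on conventions not reproduced here, and the function name is illustrative.

```python
import numpy as np


def fit_sinusoid(ts, vols_per_cycle):
    """Phase and SNR (coherence) of the best-fitting sinusoid at the stimulus frequency.

    Assumes len(ts) is an integer multiple of vols_per_cycle.
    """
    ts = np.asarray(ts, dtype=float)
    ts = ts - ts.mean()
    n = len(ts)
    k_stim = n // vols_per_cycle               # Fourier bin of the stimulus frequency
    coef = np.fft.rfft(ts)[k_stim]
    phase = float(np.angle(coef))
    # Least-squares sinusoid at that frequency, rebuilt from the single coefficient.
    t = np.arange(n)
    fit = (2.0 / n) * np.abs(coef) * np.cos(2 * np.pi * k_stim * t / n + phase)
    # Correlation between the fitted sinusoid and the (mean-removed) time series.
    snr = float(np.dot(fit, ts) / (np.linalg.norm(fit) * np.linalg.norm(ts)))
    return phase, snr
```

For the 24 s cycle at TR = 1.5 s, `vols_per_cycle` would be 16; for the 18 s cycle, 12.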

Map similarity.

Circular correlation (Jammalamadaka and SenGupta, 2001) was used to quantify the similarity between two sets of direction preferences across voxels (e.g., measured across sessions or across experiments). The details are as documented in Freeman et al. (2011). Briefly, the circular correlation coefficient, rc, between two sets of phase values, p1 and p2, is defined as follows:

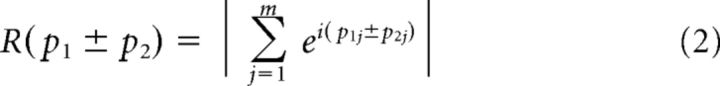

|

where j indexes voxels, m is the number of voxels, and (^) indicates the circular mean across voxels. R(p1 ± p2) gives the concentration of the angular sum (or difference) as follows:

$$R(p_1 \pm p_2) = \left|\frac{1}{m}\sum_{j=1}^{m} e^{\,i\left(p_{1j} \pm p_{2j}\right)}\right|$$

where |·| denotes the magnitude of a complex number. The value of rc ranges from −1 to 1; the closer it is to 1, the stronger the relationship between p1 and p2. Circular correlations between direction preferences across experiments were computed on voxels that fell within the intersection of the two regions of interest (ROIs) determined by the functional localizers of each experiment (see “Functional localizers”).
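The definitions above can be checked numerically. The Python sketch below computes rc in the concentration-based form (R of the angular difference minus R of the angular sum, normalized by the circular variances around each circular mean); this form is our reading of the definitions, not code from Freeman et al. (2011), and the function names are illustrative. For uniformly spread phases, identical maps give rc = 1 and mirror-reversed maps (p2 = −p1) give rc = −1.

```python
import numpy as np


def circular_mean(p):
    """Circular mean angle of a set of phases (the hat in the text)."""
    return np.angle(np.mean(np.exp(1j * p)))


def concentration(a):
    """R(a) = |(1/m) * sum_j exp(i * a_j)|, the mean resultant length."""
    return np.abs(np.mean(np.exp(1j * a)))


def circular_correlation(p1, p2):
    """Concentration-based circular correlation between two phase maps."""
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    num = concentration(p1 - p2) - concentration(p1 + p2)
    v1 = np.mean(np.sin(p1 - circular_mean(p1)) ** 2)
    v2 = np.mean(np.sin(p2 - circular_mean(p2)) ** 2)
    return num / (2.0 * np.sqrt(v1 * v2))
```

Using R rather than explicit mean directions in the numerator keeps the estimate stable when phases are nearly uniform across voxels, as direction preference maps spanning all directions tend to be.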

A randomization test was used to assess the statistical significance of the circular correlations. Phase values p1 and p2 were shuffled (i.e., randomly reassigned to different voxels), and the circular correlation was recomputed between the shuffled phase values. The process was repeated 1000 times to obtain a null distribution of circular correlations. A p-value was computed as the fraction of the null distribution that was greater than or equal to the observed circular correlation without randomization.

A bootstrap analysis was used to determine whether two circular correlations, rc1 and rc2, differed significantly. First, a bootstrapped distribution was obtained separately for rc1 and rc2. This was done by resampling voxels with replacement corresponding to pairs of phase values from p1 and p2, computing the circular correlation between the resampled phase values, and repeating the procedure 1000 times. Second, we computed the difference between pairs of bootstrapped values corresponding to rc1 and rc2. The p-value corresponded to the fraction of these differences that was <0. The circular correlations rc1 and rc2 were always computed on the same voxels to make them comparable; when these involved different experiments, the correlations were recomputed on the intersecting set of voxels.
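Both tests can be sketched in a few lines of NumPy. This is an illustration under stated assumptions: it uses the concentration-based form of rc, draws the two bootstrap distributions with independent resamples, treats the randomization p-value as the one-tailed fraction of shuffled correlations at least as large as the observed one, and uses hypothetical function names.

```python
import numpy as np


def circular_correlation(p1, p2):
    """Concentration-based circular correlation (assumed form of rc)."""
    def R(a):
        return np.abs(np.mean(np.exp(1j * a)))

    def cmean(a):
        return np.angle(np.mean(np.exp(1j * a)))

    v1 = np.mean(np.sin(p1 - cmean(p1)) ** 2)
    v2 = np.mean(np.sin(p2 - cmean(p2)) ** 2)
    return (R(p1 - p2) - R(p1 + p2)) / (2.0 * np.sqrt(v1 * v2))


def randomization_test(p1, p2, n_iter=1000, rng=None):
    """Observed rc and one-tailed p: fraction of shuffled rc >= observed."""
    rng = np.random.default_rng() if rng is None else rng
    observed = circular_correlation(p1, p2)
    null = np.array([
        circular_correlation(rng.permutation(p1), rng.permutation(p2))
        for _ in range(n_iter)
    ])
    return observed, float(np.mean(null >= observed))


def bootstrap_rc(p1, p2, n_iter, rng):
    """Bootstrap distribution of rc, resampling voxels (phase pairs) with replacement."""
    m = len(p1)
    out = np.empty(n_iter)
    for i in range(n_iter):
        idx = rng.integers(0, m, size=m)
        out[i] = circular_correlation(p1[idx], p2[idx])
    return out


def bootstrap_difference_test(pair1, pair2, n_iter=1000, rng=None):
    """p-value for rc1 > rc2: fraction of bootstrapped differences rc1 - rc2 below 0."""
    rng = np.random.default_rng() if rng is None else rng
    b1 = bootstrap_rc(*pair1, n_iter, rng)
    b2 = bootstrap_rc(*pair2, n_iter, rng)
    return float(np.mean(b1 - b2 < 0))
```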

Decoding motion direction.

In multivariate pattern analysis of fMRI data, the distributed spatial pattern of response amplitudes to a particular stimulus condition can be described as a point in a multidimensional space in which each dimension represents the response amplitude of a single voxel. Accurate decoding is possible when the responses corresponding to different conditions form distinct clusters within this high-dimensional space. We used a classifier to decode motion direction from the fMRI responses obtained from the large annulus and two circles experiments. The classification analyses were performed separately for each visual area ROI (see “Retinotopic maps” and “Functional localizers”).

fMRI response amplitudes were measured for each voxel, for each motion direction, and for each run of each experiment. The clockwise runs were time reversed to approximately match the sequence of the counterclockwise runs (see “fMRI time series preprocessing”). The response amplitudes were then estimated by averaging across the 10 cycles in the run and averaging across the two volumes (3 s) that were collected during the presentation of a particular motion direction. Therefore, each run yielded eight response amplitudes per voxel, corresponding to the eight motion directions. For each experiment, these response amplitudes were stacked across runs, forming an n × m matrix for each ROI, where m is the number of voxels in the ROI and n is the number of repeated measurements (large annulus experiment: n = 112 or 144, eight directions × 14 or 18 runs; two circles experiment: n = 96 or 128, eight directions × 12 or 16 runs).
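The averaging steps above amount to reshaping each run. A sketch for the 24 s cycle at TR = 1.5 s (16 volumes per cycle, 2 volumes per direction), assuming each run has already been preprocessed, lag-shifted, and (for clockwise runs) time reversed, and that direction k occupies volumes 2k and 2k + 1 of each cycle; the function names are hypothetical.

```python
import numpy as np

VOLS_PER_CYCLE = 16                      # 24 s cycle / 1.5 s TR
VOLS_PER_DIR = 2                         # 3 s per direction / 1.5 s TR
N_DIRS = VOLS_PER_CYCLE // VOLS_PER_DIR  # 8 motion directions


def run_amplitudes(ts):
    """ts: (n_volumes, m_voxels) preprocessed run -> (8, m) response amplitudes."""
    n_vols, m = ts.shape
    n_cycles = n_vols // VOLS_PER_CYCLE
    cycles = ts[: n_cycles * VOLS_PER_CYCLE].reshape(n_cycles, VOLS_PER_CYCLE, m)
    mean_cycle = cycles.mean(axis=0)                                  # (16, m)
    # Average the two volumes acquired during each motion direction.
    return mean_cycle.reshape(N_DIRS, VOLS_PER_DIR, m).mean(axis=1)  # (8, m)


def stack_runs(runs):
    """Stack runs into the n x m matrix with one direction label per row."""
    X = np.vstack([run_amplitudes(ts) for ts in runs])
    y = np.tile(np.arange(N_DIRS), len(runs))
    return X, y
```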

We performed decoding with a maximum likelihood classifier, using the “classify” function in MATLAB with the option “diagLinear.” This classifier optimally separates responses to each of the eight motion directions if the response variability in each voxel is normally distributed and statistically independent across voxels. Because the number of voxels, m, was large relative to the number of measurements, n, the full covariance matrix would have been a poor estimate of the true covariance, and the classifier, which relies on inverting this matrix, would have been unstable. We therefore ignored covariances between voxels and modeled the responses as statistically independent across voxels. Although noise in nearby voxels was likely correlated, the independence assumption was conservative; including accurate estimates of the covariances, if available, would have improved the decoding accuracies.
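The independence assumption corresponds to a linear discriminant with class-specific means and a single pooled, diagonal covariance. The NumPy sketch below implements that decision rule with equal class priors; it approximates, and is not a substitute for, MATLAB's `classify` with the diagonal-linear option, whose internals are not reproduced here. The class name is hypothetical.

```python
import numpy as np


class DiagLinearClassifier:
    """Class-specific means, one pooled diagonal covariance, equal priors."""

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        self.means_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        # Residuals from each measurement's own class mean.
        resid = X - self.means_[np.searchsorted(self.classes_, y)]
        # Pooled within-class variance per voxel; between-voxel covariances ignored.
        self.var_ = (resid ** 2).sum(axis=0) / (len(X) - len(self.classes_))
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        # With a shared diagonal covariance and equal priors, the maximum-likelihood
        # class minimizes the variance-weighted squared distance to the class mean.
        d2 = ((X[:, None, :] - self.means_[None, :, :]) ** 2 / self.var_).sum(axis=2)
        return self.classes_[np.argmin(d2, axis=1)]
```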

Decoding accuracy was assessed with a split-halves procedure. For each observer and each ROI, the n × m response matrix was randomly partitioned along the n dimension (repeated measurements) into training and test sets, each containing an equal number of runs. Data in the training and test sets were drawn from different runs in the same session and were thus statistically independent. The training data were used to estimate the parameters of the classifier. Decoding accuracy was determined as the proportion of correct predictions for the test data (of eight motion directions × number of test runs). This decoding procedure was repeated after repartitioning the data. When the number of runs was relatively small (e.g., 12 runs for each of O1–O4 in the two circles experiment), decoding accuracies were computed for all possible ways of partitioning the data into training and test halves (924 partitions for 12 runs). In the other cases, where the number of possible partitions exceeded 1000, decoding accuracies were computed for 1000 randomly selected partitions. The 2.5th and 97.5th percentiles across repeated cross-validations specified the 95% confidence interval of the decoding accuracy.
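With 12 runs there are C(12, 6) = 924 ways to choose the training half, which is why every partition could be used; with 14 runs the count is already C(14, 7) = 3432. A sketch of the partition generator and the percentile confidence interval, assuming that the 1000 randomly selected partitions are drawn without repetition (the text does not say); the function names are hypothetical.

```python
import itertools
import math

import numpy as np


def split_half_partitions(n_runs, max_partitions=1000, rng=None):
    """Return training-run index tuples; each test half is the complement."""
    half = n_runs // 2
    if math.comb(n_runs, half) <= max_partitions:
        # Few enough to enumerate every possible training half.
        return list(itertools.combinations(range(n_runs), half))
    rng = np.random.default_rng() if rng is None else rng
    chosen = set()
    while len(chosen) < max_partitions:
        train = rng.choice(n_runs, size=half, replace=False)
        chosen.add(tuple(sorted(train.tolist())))
    return sorted(chosen)


def held_out_runs(train, n_runs):
    """Runs not used for training form the test half."""
    return tuple(r for r in range(n_runs) if r not in train)


def confidence_interval(accuracies):
    """95% interval from the 2.5th and 97.5th percentiles across cross-validations."""
    return np.percentile(accuracies, [2.5, 97.5])
```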

Because decoding accuracies are not normally distributed (e.g., they are bounded between 0 and 100%), a nonparametric randomization test was used to determine the statistical significance of decoding accuracies. Specifically, we constructed a distribution of decoding accuracies expected under the null hypothesis that there was no relationship between the stimulus motion and the measured responses. To generate this null distribution, we computed a phase-randomized time series for each run and performed the same cross-validated decoding procedure on the phase-randomized data as we had done on the original data (Freeman et al., 2011). Phase randomization of a time series was computed by taking its Fourier transform, randomly permuting its Fourier phases without changing the amplitudes, and inverting the Fourier transform. Phase randomization preserved the temporal autocorrelation and power spectrum of the responses but randomized the relationship between time points and stimulus motion directions. Repeating phase randomization 100 times yielded a total of 92,400–100,000 null decoding accuracies per observer (100 repetitions × 924 or 1000 partitions). An average null distribution across observers was obtained by computing the null decoding accuracies for each observer and then averaging them across observers. Decoding accuracy for the original data was considered statistically significant if it was higher than the 95th percentile of the null distribution (p < 0.05, one-tailed randomization test). Null distributions computed by randomly shuffling motion direction labels and recomputing the decoding accuracies yielded nearly identical results. Where noted, Bonferroni correction was used to adjust for multiple comparisons across ROIs.
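A sketch of the phase randomization for a single real-valued time series. It permutes the Fourier phases of the interior frequency bins while keeping every amplitude; the DC term (and the Nyquist term for an even number of volumes) is left untouched so that the inverse transform is real. These handling details are assumptions, since the text does not specify them. The significance threshold would then be the 95th percentile of the resulting null accuracies, e.g., `np.percentile(null_accuracies, 95)`.

```python
import numpy as np


def phase_randomize(ts, rng):
    """Surrogate time series with the same Fourier amplitudes but permuted phases."""
    ts = np.asarray(ts, dtype=float)
    n = len(ts)
    spec = np.fft.rfft(ts)
    # The DC term (and the Nyquist term when n is even) must stay real for the
    # inverse transform to be a real signal, so only interior bins are permuted.
    stop = len(spec) - 1 if n % 2 == 0 else len(spec)
    interior = slice(1, stop)
    amp = np.abs(spec[interior])
    phase = rng.permutation(np.angle(spec[interior]))
    new_spec = spec.copy()
    new_spec[interior] = amp * np.exp(1j * phase)
    return np.fft.irfft(new_spec, n=n)
```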

Aperture-inward predictions.

Response phases were predicted for the two circles experiment, assuming a preference for motion direction pointing radially toward the center of each circular aperture (at 8° eccentricity). For each voxel, we analytically determined the predicted direction preference using each voxel's estimated retinotopic location measured in independent scanning sessions (see “Retinotopic maps”). Specifically, for a given visual field location x, y, the predicted motion direction preference was given by the following:

$$\theta_L = \arctan(-y,\; -8 - x), \qquad \theta_R = \arctan(-y,\; 8 - x)$$

where $\arctan(y, x)$ is the four-quadrant inverse tangent, and $\theta_{L,R}$ indicates the local direction in the left or right visual field. To convert from predicted direction preference to response phase and combine across hemispheres, we added π to $\theta_R$ (voxels in the left hemisphere) because the stimuli in the left and right apertures moved in opposite directions.
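A sketch of the aperture-inward prediction, assuming visual-field coordinates in degrees with x increasing rightward and y upward, directions measured counterclockwise from rightward, and aperture centers at (±8°, 0) on the horizontal meridian. Voxels at x = 0 are arbitrarily assigned to the right aperture, and the function names are hypothetical.

```python
import numpy as np

APERTURE_X = 8.0  # aperture centers assumed at (+/-8 deg, 0)


def inward_direction(x, y):
    """Direction (radians) pointing from (x, y) toward the center of its aperture."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    cx = np.where(x >= 0, APERTURE_X, -APERTURE_X)   # right or left aperture
    # Four-quadrant arctangent of the vector from the location to the center.
    return np.arctan2(-y, cx - x)


def predicted_phase(x, y):
    """Response phase in [0, 2*pi); pi is added in the right visual field."""
    x = np.asarray(x, dtype=float)
    theta = inward_direction(x, y)
    # Right visual field maps to the left hemisphere, where motion is reversed.
    theta = np.where(x >= 0, theta + np.pi, theta)
    return np.mod(theta, 2 * np.pi)
```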

Binning by aperture-inward predictions.

This analysis determined whether the aperture-inward direction preference was sufficient for motion decoding. For each voxel, we analytically computed the response phase corresponding to the aperture-inward prediction using each voxel's estimated retinotopic location. Each voxel was assigned to one of several bins, corresponding to a range of predicted response phases (“bin width”). The time series of voxels within each bin were averaged to yield a small number of “super voxels.” The classification analysis was then performed on the resulting averaged responses for the super voxels. Classification was also performed on voxels assigned to bins randomly rather than based on their predicted response phases. This entire procedure was repeated for a number of different bin widths.
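The binning step reduces m voxels to a few super voxels before classification. A sketch assuming bins of equal width tiling [0, 2π) and, for the control, a random reassignment that preserves the number of voxels per bin (the text does not say how random bins were sized); the function name is hypothetical.

```python
import numpy as np


def super_voxels(X, predicted_phase, bin_width, rng=None):
    """Average voxel responses within bins of predicted phase.

    X: (n_measurements, m_voxels); predicted_phase: (m,) in radians.
    If rng is given, voxels are assigned to bins at random instead (control).
    """
    X = np.asarray(X, dtype=float)
    phase = np.mod(np.asarray(predicted_phase, dtype=float), 2 * np.pi)
    n_bins = int(np.ceil(2 * np.pi / bin_width))
    bins = np.minimum((phase // bin_width).astype(int), n_bins - 1)
    if rng is not None:
        # Control: shuffle bin membership across voxels, keeping bin sizes fixed.
        bins = rng.permutation(bins)
    occupied = np.unique(bins)
    # One column per non-empty bin: the mean time course of its voxels.
    return np.column_stack([X[:, bins == b].mean(axis=1) for b in occupied])
```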

Response amplitude as a function of distance.

Measurements from the two strips experiment were used to characterize how response amplitudes changed as a function of distance from the aperture edge. Because the stimulus alternated between inward and outward motion relative to fixation, the response of each voxel reflected the modulation of activity evoked by the two types of stimuli. To measure the amplitude of this modulation, for each observer, the average time series (across runs) of each voxel was projected (by computing the dot product) onto a unit-norm sinusoid. The sinusoid had a period matched to the stimulus alternation (18 s) and a temporal phase of π/2 (4.5 s; to approximate the hemodynamic lag) such that the peak of the sinusoid corresponded in time to the fovea-inward motion. The projected amplitude (Heeger et al., 1999) provided a signed value isolating the component of the time series reflecting responses to fovea-inward (positive) and fovea-outward (negative) motion. If there was no modulation (equal response to both stimuli), the amplitude would have been zero. This analysis differed from the computation of direction preferences for eight motion stimuli: here, we took advantage of the fact that the responses could only occur at two phases separated by π, and therefore could be modeled as a single sinusoid with a fixed phase.
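The projection is a dot product with a unit-norm sinusoid, so a response equal to a times that sinusoid returns exactly a, and a response in antiphase returns a negative value. A sketch assuming TR = 1.5 s and the 18 s cycle; the `peak_time_s` argument stands in for the fixed phase described above (π/2, or 4.5 s), and where fovea-inward motion falls within each cycle is an assumption left to the caller. Function names are hypothetical.

```python
import numpy as np

TR_S = 1.5        # repetition time (s)
PERIOD_S = 18.0   # inward/outward alternation cycle (s)


def unit_sinusoid(n_vols, peak_time_s, tr=TR_S, period=PERIOD_S):
    """Unit-norm cosine of the stimulus period peaking at peak_time_s (mod period)."""
    t = np.arange(n_vols) * tr
    s = np.cos(2 * np.pi * (t - peak_time_s) / period)
    return s / np.linalg.norm(s)


def projected_amplitude(ts, peak_time_s):
    """Signed amplitude: positive when the response is in phase with the sinusoid."""
    ts = np.asarray(ts, dtype=float)
    return float(np.dot(ts, unit_sinusoid(len(ts), peak_time_s)))
```

Because a run spans an integer number of cycles, a constant offset in the time series projects to zero.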

Voxels were binned according to the eccentricity of their population receptive field (pRF) locations. Responses were then averaged across voxels in each bin and averaged across observers. Using different bin sizes yielded similar results. Voxels were also binned according to their cortical distance from the fovea, as follows. For each observer, we defined a small ROI (∼10–20 voxels) corresponding to the fovea, separately for V1 and MT+, based on the retinotopy measurements (see “Retinotopic maps”). Each voxel corresponded to a “vertex” on the cortical surface. For every voxel in each visual area ROI (V1 or MT+; see “Functional localizers”), we computed its shortest distance on the cortical surface to every voxel in the corresponding fovea ROI (Dijkstra, 1959) and averaged across the foveal voxels. For each visual area, the smallest distance in that area was then subtracted from each voxel's distance, such that a distance of 0 corresponded to the inner edge of the stimulus. Visual area voxels were then binned according to their distances, and the binned responses were averaged across observers.
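The cortical-distance measure combines single-source shortest paths over the surface mesh (Dijkstra, 1959) with an average over foveal vertices and a shift to zero. A sketch assuming the mesh is given as a symmetric adjacency list with edge lengths in millimeters and is connected; the implementation used in the study is not reproduced here, and the function names are hypothetical.

```python
import heapq

import numpy as np


def dijkstra(adjacency, source):
    """Shortest path lengths from `source`; adjacency: vertex -> [(neighbor, length)]."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, v = heapq.heappop(heap)
        if d > dist.get(v, np.inf):
            continue  # stale heap entry
        for w, length in adjacency[v]:
            nd = d + length
            if nd < dist.get(w, np.inf):
                dist[w] = nd
                heapq.heappush(heap, (nd, w))
    return dist


def distance_from_fovea(adjacency, roi_vertices, fovea_vertices):
    """Mean shortest-path distance to the foveal vertices, shifted to start at 0."""
    per_fovea = [dijkstra(adjacency, f) for f in fovea_vertices]
    mean_dist = np.array([np.mean([d[v] for d in per_fovea]) for v in roi_vertices])
    # The closest ROI vertex (inner stimulus edge) is placed at distance 0.
    return mean_dist - mean_dist.min()
```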

MRI acquisition.

MRI data were acquired on a Siemens 3T Allegra head-only scanner using a transmit head coil (NM-011, Nova Medical) and an eight-channel phased-array surface receive coil (NMSC-071, Nova Medical). For each observer, a high-resolution anatomy of the entire brain was acquired by coregistering and averaging three T1-weighted anatomical volumes (magnetization-prepared rapid-acquisition gradient echo, or MPRAGE; TR: 2500 ms; TE: 3.93 ms; FA: 8°; voxel size: 1 × 1 × 1 mm; grid size: 256 × 256 voxels). The averaged anatomical volume was used for coregistration across scanning sessions and for gray matter segmentation and cortical flattening. Functional scans were acquired using T2*-weighted, gradient recalled echoplanar imaging to measure blood oxygen level-dependent changes in image intensity (Ogawa et al., 1990). Functional imaging was conducted with 24 slices oriented perpendicular to the calcarine sulcus and positioned with the most posterior slice at the occipital pole (TR: 1500 ms; TE: 30 ms; FA: 72°; voxel size: 2 × 2 × 2.5 mm; grid size: 104 × 80 voxels). An MPRAGE anatomical volume with the same slice prescription as the functional images (“inplane”) was also acquired at the beginning of each scanning session (TR: 1530 ms; TE: 3.8 ms; FA: 8°; voxel size: 1 × 1 × 2 mm with 0.5 mm gap between slices; grid size: 256 × 160 voxels). The inplane anatomical was aligned to the high-resolution anatomical volume using a robust image registration algorithm (Nestares and Heeger, 2000).

fMRI time series preprocessing.

Data from the beginning of each functional run were discarded to minimize the effect of transient magnetic saturation and to allow the hemodynamic response to reach steady state: the first half cycle (large annulus, 8 volumes) or the first full cycle (two circles, 16 volumes; two strips and localizer runs, 12 volumes) of each run was discarded. Functional data were compensated for head movement within and across runs (Nestares and Heeger, 2000), linearly detrended, and high-pass filtered (cutoff: 0.01 Hz) to remove low-frequency noise and drift (Smith et al., 1999). The time series for each voxel was divided by its mean to convert from arbitrary intensity units to percent change in intensity. For the large annulus and two circles experiments, time series data from each run were shifted back in time by 3 volumes (4.5 s) to compensate approximately for hemodynamic lag. For those experiments, time series for the clockwise runs were time reversed to match the sequence of the counterclockwise runs.
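A sketch of the per-run steps, assuming a (volumes × voxels) array: it discards the initial volumes, removes a linear drift while keeping each voxel's mean, converts to percent signal change, shifts by 3 volumes, and time reverses clockwise runs. Motion compensation and the 0.01 Hz high-pass filter are omitted, the circular shift assumes a periodic steady state, and any additional alignment of reversed runs is left out; these are simplifications, not the published pipeline, and the function name is hypothetical.

```python
import numpy as np


def preprocess_run(raw, n_discard, clockwise=False, lag_vols=3):
    """raw: (n_volumes, m_voxels) intensities -> percent signal change."""
    ts = np.asarray(raw, dtype=float)[n_discard:]
    mean = ts.mean(axis=0)
    # Remove a least-squares linear drift per voxel while keeping its mean.
    t = np.arange(len(ts)) - (len(ts) - 1) / 2.0
    slope = (t @ (ts - mean)) / (t @ t)
    ts = ts - np.outer(t, slope)
    # (The 0.01 Hz high-pass filter is omitted in this sketch.)
    pct = 100.0 * (ts / mean - 1.0)
    # Shift back in time by lag_vols volumes (3 volumes = 4.5 s at TR = 1.5 s);
    # np.roll wraps the first volumes to the end, assuming a periodic steady state.
    pct = np.roll(pct, -lag_vols, axis=0)
    if clockwise:
        # Reverse so that the direction sequence runs in the counterclockwise order.
        pct = pct[::-1]
    return pct
```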

Retinotopic maps.

Retinotopy was measured using nonperiodic traveling bar stimuli and analyzed using the pRF method (Dumoulin and Wandell, 2008). Bars were 3° wide and traversed the field of view in sweeps lasting 24 or 30 s. Eight different bar configurations (four orientations and two traversal directions) were presented in randomly shuffled order within each run. The pRF of each voxel was estimated using standard fitting procedures (Dumoulin and Wandell, 2008) implemented in mrTools (http://www.cns.nyu.edu/heegerlab/?page=software). Visual area boundaries were drawn by hand on the flat maps following published conventions (Engel et al., 1997; Larsson and Heeger, 2006; Wandell et al., 2007). In four of the observers, retinotopy was also measured using periodically rotating wedges and expanding/contracting rings and analyzed using the conventional traveling-wave method (Engel et al., 1994; Sereno et al., 1995; DeYoe et al., 1996; Engel et al., 1997; Larsson and Heeger, 2006), which yielded similar visual area definitions as those obtained from the pRF method.

Functional localizers.

Visual area MT+ (including both MT and MST) was delineated by measuring responses to coherently versus incoherently moving dots. MT and MST were then identified separately from one another by their topographic organization relative to neighboring visual areas obtained from retinotopic mapping (Huk et al., 2002; Smith et al., 2006; Gardner et al., 2008; Amano et al., 2009). We were only able to identify MST with certainty in approximately half of the 10 hemispheres. We performed all analyses separately for MT (in all hemispheres), for MST (for those hemispheres in which it could be conclusively identified), and for MT+ (in all hemispheres). The results were similar, supporting the same conclusions (i.e., low decoding accuracies, Figs. 1F, 5A, and similar fovea-inward biases, Fig. 4B,D,F). We therefore grouped MT and MST areas together and report the results for MT+.

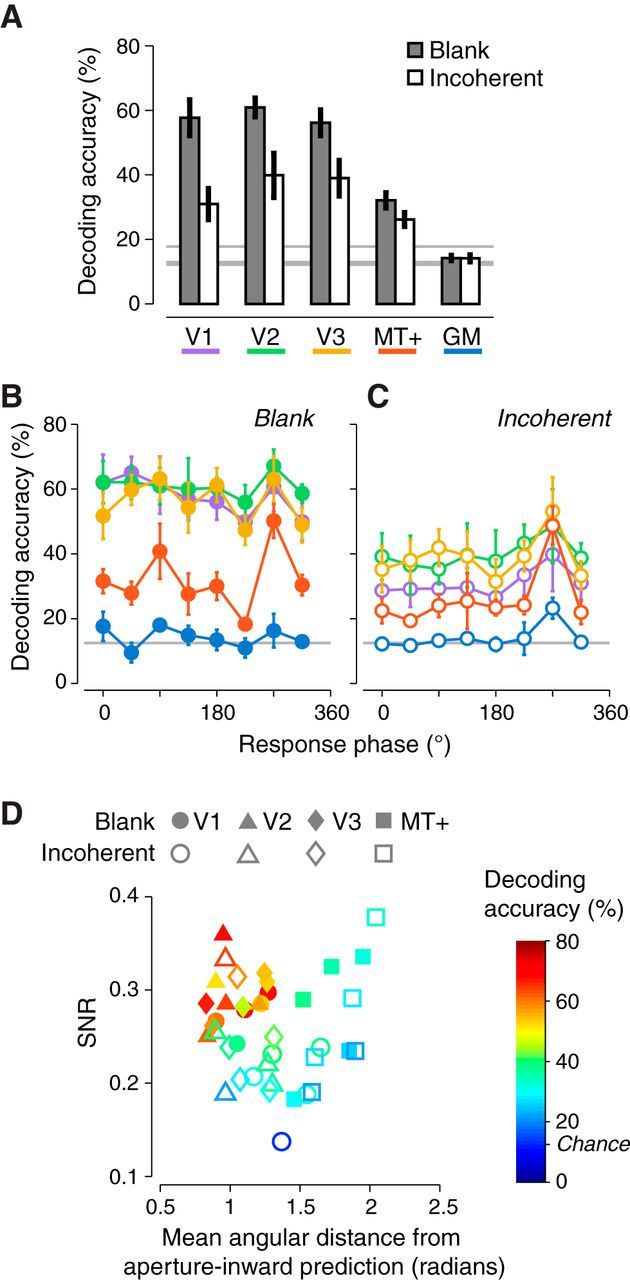

Figure 5.

Motion decoding for the two circles experiment. A, Decoding accuracies were averaged across observers (n = 5) and are shown separately for V1, V2, V3, MT+, and a patch of gray matter voxels (GM) that did not exhibit visually evoked responses. Same format as Figure 1F. B, C, Decoding accuracies shown separately for each response phase, defined as in Figure 2A. Each curve indicates a single visual area ROI. Color is defined as in A. Decoding accuracies were averaged across observers. Error bars indicate SEM across observers. Horizontal gray line indicates the chance decoding accuracy (12.5%). B, Blank background condition. C, Incoherent background condition. D, Aperture-inward bias and SNR predict decoding accuracies across observers. Each symbol is a single observer (n = 5). Circles, triangles, diamonds, and squares denote V1, V2, V3, and MT+, respectively. Filled symbols indicate the blank background condition. Empty symbols indicate the incoherent background condition. The abscissa is the mean angular distance (bounded between 0 and π) between each voxel's direction preference and the aperture inward prediction, averaged across voxels. The ordinate is the average SNR across voxels. Color indicates decoding accuracy.

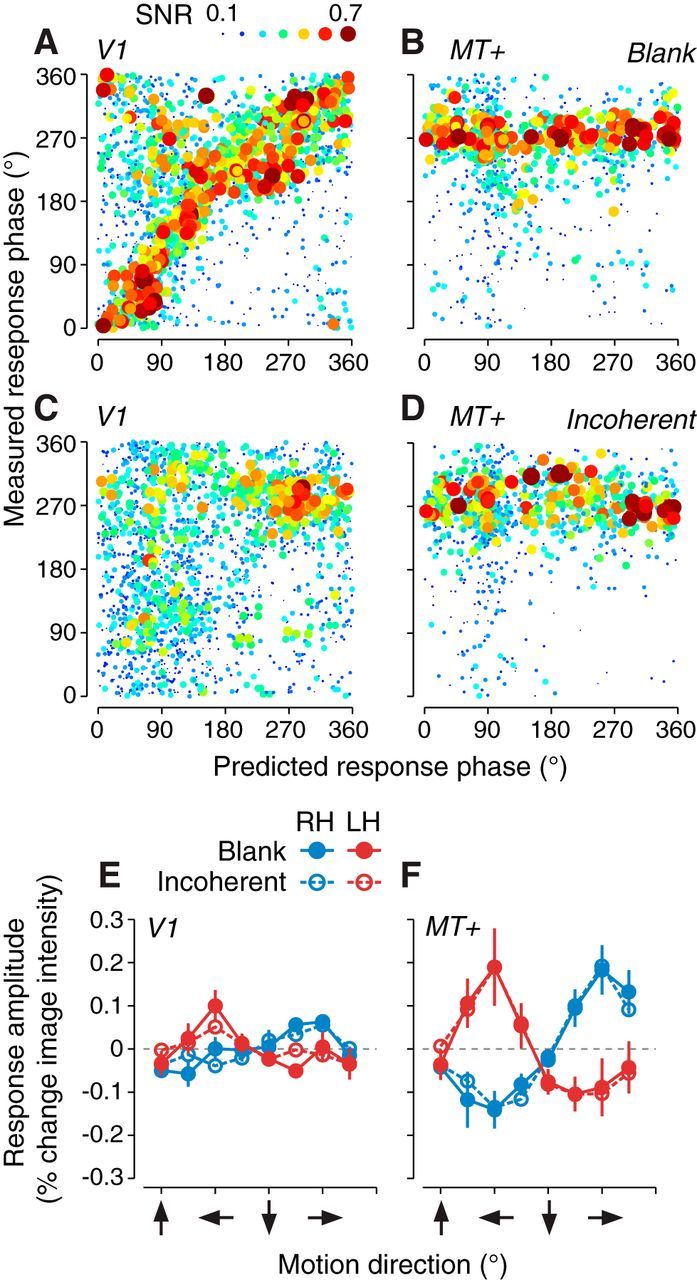

Figure 4.

Aperture-inward and fovea-inward motion direction biases. A–D, Measured response phase of each voxel from the two circles experiment (ordinate) plotted against the response phase predicted by an aperture-inward motion preference (abscissa). Each circle is a single voxel. Data were pooled across observers (n = 5). Circle size and color indicate voxel SNR. Response phases, corresponding to motion direction preferences, are defined as in Figure 2A. A, V1 blank background condition. B, MT+ blank background condition. C, V1 incoherent background condition. D, MT+ incoherent background condition. E, F, Mean fMRI response amplitude as a function of motion direction. Error bars indicate SEM across observers (n = 5). Blue and red symbols indicate left and right hemisphere visual regions, respectively. Filled and empty symbols indicate blank and incoherent background conditions, respectively. E, V1. F, MT+.

Functional localizers were used to restrict each visual area to only those voxels retinotopically corresponding to stimuli in each of the main experiments. Dots translated coherently for 9 s, changing direction randomly (to one of eight possible directions) every second. After 9 s, dots were removed from the screen and the observer viewed only a black screen with a fixation cross for the next 9 s. This stimulus-on/off cycle was repeated 11 times in each run. For the large annulus and two strips experiments, dots were presented in the same apertures as those used for the main experimental stimulus (i.e., a large annulus and two rectangular strips, respectively). For the two circles experiment, the circular apertures in which dots were presented were similar to those in the main experiment, but with a narrower transition region at the edges. Specifically, each circular aperture was centered at 8° eccentricity to the left or right of fixation, with a radius that extended from 4.5 to 5.5° as dot contrast transitioned from 100% to 0%.

For each observer and each functional localizer, we computed the average response time series across runs. The average time series of each voxel was fit with a sinusoid matched to the period of the stimulus alternation (18 s; 9 s on, 9 s off). For each voxel, we computed the correlation (“SNR”) between the best-fitting sinusoid and the time series (Engel et al., 1997) (see “Motion direction preferences”). We defined a stimulus-activated ROI for each visual area by restricting it to voxels with SNR > 0.4. We further restricted each ROI to include only those voxels for which the phase of the best-fitting sinusoid fell within a π interval that corresponded to the “on” phase of the stimulus.
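The sinusoid fit and the two ROI criteria can be illustrated as follows. This is a sketch under stated assumptions: the fit is an ordinary least-squares fit of cosine, sine, and constant terms at the stimulus frequency; `n_cycles` is the number of whole stimulus cycles in the averaged time series; and `on_center` (hypothetical) stands for the response phase at the middle of the "on" half-cycle after hemodynamic delay, which the text does not give numerically.

```python
import numpy as np

def fit_sinusoid(ts, n_cycles):
    """Least-squares sinusoid at the stimulus frequency.

    Returns amplitude, phase (radians, [0, 2*pi)), and the correlation ("SNR")
    between the best-fitting sinusoid and the time series.
    """
    ts = np.asarray(ts, dtype=float)
    t = np.arange(ts.size)
    w = 2 * np.pi * n_cycles / ts.size
    X = np.column_stack([np.cos(w * t), np.sin(w * t), np.ones(ts.size)])
    (a, b, c), *_ = np.linalg.lstsq(X, ts, rcond=None)
    fit = X @ np.array([a, b, c])
    amplitude = np.hypot(a, b)
    phase = np.arctan2(b, a) % (2 * np.pi)   # ts ~ amplitude * cos(w*t - phase) + c
    snr = np.corrcoef(fit, ts)[0, 1]
    return amplitude, phase, snr

def in_roi(snr, phase, on_center, snr_min=0.4):
    """SNR above threshold and phase within a pi-wide interval centered on on_center."""
    dphi = np.angle(np.exp(1j * (np.asarray(phase) - on_center)))  # wrapped to (-pi, pi]
    return (np.asarray(snr) > snr_min) & (np.abs(dphi) <= np.pi / 2)
```

For a localizer run with the first cycle discarded, an 18 s period at a 1.5 s TR gives 12 volumes per cycle, so 120 remaining volumes would be fit with `n_cycles=10`.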

Eye movement measurements.

In a subset of the fMRI sessions (large annulus experiment: each session for all four observers; two circles experiment: both sessions for O2–O4 and one session for O5), we measured eye positions (500 Hz, monocular) using an infrared video eye tracker (Eyelink 1000, SR Research). Raw gaze positions were calibrated using a nine-point calibration procedure and converted to degrees of visual angle. Blinks were identified with the Eyelink blink detection algorithm and removed from the eye position data, along with samples from 200 ms before to 350 ms after each blink interval.
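The blink padding rule (200 ms before to 350 ms after each blink) can be sketched as below. The blink intervals are assumed to be given as (start, end) sample indices with an exclusive end, for example parsed from the tracker's blink events; that input format and the name `remove_blinks` are hypothetical.

```python
import numpy as np

FS = 500  # Hz, eye-tracker sampling rate

def remove_blinks(x, y, blink_intervals, pre_ms=200, post_ms=350, fs=FS):
    """Set gaze samples near blinks to NaN.

    blink_intervals: iterable of (start, end) sample indices, end exclusive (assumed format).
    """
    x = np.asarray(x, dtype=float).copy()
    y = np.asarray(y, dtype=float).copy()
    pre = int(round(pre_ms * fs / 1000))    # 100 samples at 500 Hz
    post = int(round(post_ms * fs / 1000))  # 175 samples at 500 Hz
    for start, end in blink_intervals:
        lo = max(start - pre, 0)
        hi = min(end + post, x.size)
        x[lo:hi] = np.nan
        y[lo:hi] = np.nan
    return x, y
```

A 50-sample blink therefore removes 100 + 50 + 175 = 325 samples (650 ms) of gaze data.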

Saccades were detected using an established algorithm (Engbert and Kliegl, 2003). For each run, a velocity threshold for saccade detection was computed from the 2D (horizontal and vertical) eye-movement velocity across the entire eye position trace (after blink removal). Specifically, we set the threshold to 7 times the SD of the 2D eye-movement velocity, using a median-based estimate of the SD (Engbert and Kliegl, 2003). A saccade was identified when the eye-movement velocity exceeded this threshold for at least 8 ms (4 consecutive eye-position samples). We also imposed a minimum intersaccadic interval (between the last sample of one saccade and the first sample of the next saccade) of 10 ms so that potential overshoot corrections were not considered new saccades (Møller et al., 2002). We have previously shown that these methods detect saccades as small as 0.1° in observers participating in a similar fMRI experiment (Merriam et al., 2013). Most (98.5%) of the saccades that were detected in the present study were smaller than 1° in amplitude.
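A sketch of this detection scheme, following the Engbert and Kliegl (2003) recipe as we understand it: velocities from a 5-sample moving window, a median-based SD per component, an elliptic threshold at λ = 7, a 4-sample minimum duration, and merging of events separated by less than 10 ms. Merging (rather than discarding the second event) is our reading of "not considered new saccades", and the function name is hypothetical.

```python
import numpy as np

def detect_saccades(x, y, fs=500, lam=7.0, min_samples=4, min_isi_ms=10):
    """Velocity-threshold saccade detection; returns (onset, offset) indices, offset inclusive.

    x, y: gaze position in degrees, NaN where samples were removed.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dt = 1.0 / fs

    def velocity(p):
        # 5-sample moving-window velocity; the two samples at each end stay NaN
        v = np.full(p.size, np.nan)
        v[2:-2] = (p[4:] + p[3:-1] - p[1:-3] - p[:-4]) / (6 * dt)
        return v

    vx, vy = velocity(x), velocity(y)
    # Median-based SD estimate per component, scaled by lambda
    eta_x = lam * np.sqrt(np.nanmedian(vx ** 2) - np.nanmedian(vx) ** 2)
    eta_y = lam * np.sqrt(np.nanmedian(vy ** 2) - np.nanmedian(vy) ** 2)
    with np.errstate(invalid="ignore"):
        above = (vx / eta_x) ** 2 + (vy / eta_y) ** 2 > 1   # NaN compares as False

    # Runs of consecutive supra-threshold samples
    padded = np.concatenate([[False], above, [False]]).astype(int)
    edges = np.flatnonzero(np.diff(padded))
    runs = [(on, off - 1) for on, off in zip(edges[::2], edges[1::2])]
    runs = [(on, off) for on, off in runs if off - on + 1 >= min_samples]

    # Merge events closer than the minimum intersaccadic interval
    min_gap = int(round(min_isi_ms * fs / 1000))
    merged = []
    for on, off in runs:
        if merged and on - merged[-1][1] < min_gap:
            merged[-1] = (merged[-1][0], off)
        else:
            merged.append((on, off))
    return merged
```

Applied to a trace with 0.005° of positional noise and a single 0.5° step spread over 20 ms, this returns one event spanning the step.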

Eye position samples and saccades were separately segmented and assigned to 3 s epochs corresponding to the presentation of each stimulus. Saccades that occurred in epochs corresponding to the same motion direction (large annulus experiment) or response phase (two circles experiment) were grouped together, resulting in eight categories of saccades for each experiment. A single eye position measure (horizontal and vertical) was obtained for each epoch by computing the median eye position across time. We excluded from the eye position analysis any epoch in which 1.5 s (of 3 s) or more of the samples were missing (e.g., because of excessive blinking or because the eye tracker was partially occluded during some runs). Median eye positions for these excluded epochs were likely to be unreliable (if more than half of the samples were missing) or could not be estimated at all (if all samples were missing). Eye positions and saccades were separately pooled across runs, sessions, and observers for each experiment, yielding ∼4600 median eye positions and ∼15,000 saccades for the large annulus experiment and ∼5400 median eye positions and ∼16,000 saccades for the two circles experiment.
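The per-epoch median and the exclusion rule can be sketched as follows, assuming a contiguous trace whose length is a whole number of epochs (1500 samples per 3 s epoch at 500 Hz) and NaN for removed samples. The function name is hypothetical.

```python
import numpy as np

def epoch_medians(x, y, epoch_len, max_missing_frac=0.5):
    """Median gaze position per epoch, excluding epochs with too many missing samples.

    x, y: gaze traces (deg), NaN where missing; length = n_epochs * epoch_len.
    Returns (medians, keep): medians is (n_kept, 2); keep is a boolean mask over epochs.
    """
    x = np.asarray(x, dtype=float).reshape(-1, epoch_len)
    y = np.asarray(y, dtype=float).reshape(-1, epoch_len)
    missing = np.isnan(x) | np.isnan(y)
    keep = missing.mean(axis=1) < max_missing_frac   # drop epochs missing >= half
    med = np.column_stack([np.nanmedian(x[keep], axis=1),
                           np.nanmedian(y[keep], axis=1)])
    return med, keep
```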

Decoding motion direction from eye movements.

A multivariate classifier was used to decode motion direction from eye position or saccade vector, analogous to decoding from voxel responses (see “Decoding motion direction”). For each experiment, the median horizontal and vertical eye positions (across the 3 s presentation epoch) were each stacked across epochs, forming a k × 2 matrix, where k corresponded to the number of stimulus presentation epochs (across runs, sessions, and observers). Saccade vectors (horizontal and vertical amplitudes) were also stacked to form an s × 2 matrix, where s corresponded to the number of saccades (across presentation epochs, runs, sessions, and observers). For either type of eye movement measurement, a split-halves procedure was used to assess decoding accuracy. Specifically, data were split into training and test halves. We trained a linear classifier on the 2D eye movement measurements from the training data and used it to predict the motion directions for the test data. The 2.5–97.5th percentiles across 1000 repeated cross-validations provided the 95% confidence interval of the decoding accuracy. A nonparametric randomization test was used to determine the statistical significance of decoding accuracy based on constructing a null distribution of decoding accuracies. The null distribution was generated by randomly permuting the stimulus labels for the eye positions or saccades 1000 times and recomputing the decoding accuracy each time. Decoding accuracy for the original data was then considered statistically significant if it was higher than the 95th percentile of the null distribution (p < 0.05, one-tailed randomization test).
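The split-halves procedure, percentile confidence interval, and label-permutation test can be sketched as below. The text specifies only "a linear classifier"; here we substitute a nearest-class-mean (minimum Euclidean distance) classifier, which has linear decision boundaries, and we stratify each split by class so that every direction appears in the training half. Treating the mean accuracy across splits as the observed accuracy is also our assumption, as are all function names.

```python
import numpy as np

def nearest_centroid_accuracy(Xtr, ytr, Xte, yte, classes):
    """Train a nearest-class-mean classifier (a linear stand-in) and return test accuracy."""
    centroids = np.array([Xtr[ytr == c].mean(axis=0) for c in classes])
    d = ((Xte[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    pred = np.asarray(classes)[d.argmin(axis=1)]
    return np.mean(pred == yte)

def split_half_accuracy(X, y, rng):
    """Random split into training/test halves, stratified by class (needs >= 2 per class)."""
    classes = np.unique(y)
    train = np.zeros(len(y), dtype=bool)
    for c in classes:
        idx = rng.permutation(np.flatnonzero(y == c))
        train[idx[: len(idx) // 2]] = True
    return nearest_centroid_accuracy(X[train], y[train], X[~train], y[~train], classes)

def decode_with_stats(X, y, n_iter=1000, seed=0):
    """Mean split-half accuracy, its 2.5-97.5th percentile interval, and a permutation test."""
    rng = np.random.default_rng(seed)
    accs = np.array([split_half_accuracy(X, y, rng) for _ in range(n_iter)])
    ci = np.percentile(accs, [2.5, 97.5])
    null = np.array([split_half_accuracy(X, rng.permutation(y), rng) for _ in range(n_iter)])
    observed = accs.mean()
    significant = observed > np.percentile(null, 95)   # one-tailed, p < 0.05
    return observed, ci, significant
```

Here `X` would be the k × 2 matrix of median eye positions (or the s × 2 matrix of saccade vectors) and `y` the motion direction label of each row.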

Saccade removal analysis.

To determine whether differences in saccade vectors across motion directions contributed to fMRI motion decoding, we “removed” saccade-evoked responses from the time series of each voxel before recomputing the motion direction preferences and decoding accuracies from the residual voxel responses. Specifically, for each run, a regressor vector (at 500 Hz) was constructed corresponding to the occurrence of saccades in that run. Entries in the vector contained 1's at time points corresponding to the onset of each saccade and 0's otherwise. The regressor was convolved with a canonical hemodynamic impulse response function (difference of two gamma functions, also at 500 Hz), down-sampled to 0.67 Hz (corresponding to the 1.5 s TR), and band-pass filtered with the same filter as that used for the fMRI time series. The resulting time series was the predicted saccade-evoked response for that run. This predicted time series was removed from the original time series of each voxel via linear projection as follows:

$$r = y - \frac{x^{\top} y}{x^{\top} x}\, x$$

where x corresponded to the predicted saccade-evoked response, y corresponded to the original time series, and r corresponded to the resulting residual time series. Removal by projection ensured that the residual time series r was orthogonal to the removed component x.
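The regressor construction and projection can be sketched as follows. The text does not give the double-gamma parameters; we assume SPM-like values (response shape 6, undershoot shape 16, undershoot ratio 1/6, unit scale in seconds). We also down-sample by taking every 750th sample and omit the band-pass filtering step, which in practice would be applied to the regressor before projection. Saccade onsets are given as 500 Hz sample indices, and the function names are hypothetical.

```python
import numpy as np
from math import gamma as gamma_fn

FS = 500   # eye-tracker rate (Hz)
TR = 1.5   # fMRI sampling interval (s)

def double_gamma_hrf(fs=FS, duration=32.0, a1=6.0, a2=16.0, ratio=1 / 6):
    """Difference of two gamma densities (assumed SPM-like parameters), unit sum."""
    t = np.arange(0.0, duration, 1.0 / fs)

    def gamma_pdf(a):
        return t ** (a - 1) * np.exp(-t) / gamma_fn(a)

    h = gamma_pdf(a1) - ratio * gamma_pdf(a2)
    return h / h.sum()

def saccade_regressor(onset_samples, n_vols, fs=FS, tr=TR):
    """Predicted saccade-evoked response at the TR (filtering step omitted)."""
    n = int(round(n_vols * tr * fs))
    stick = np.zeros(n)
    idx = np.asarray(onset_samples, dtype=int)
    stick[idx[idx < n]] = 1.0                     # 1 at each saccade onset, 0 otherwise
    pred = np.convolve(stick, double_gamma_hrf(fs))[:n]
    step = int(round(tr * fs))                    # 750 eye samples per volume
    return pred[::step][:n_vols]

def project_out(y, x):
    """r = y - (x'y / x'x) x for a time series y of shape (n,) or (n, n_voxels)."""
    coef = (x @ y) / (x @ x)
    return y - np.multiply.outer(x, coef)
```

By construction, each residual column is orthogonal to the saccade regressor, matching the property stated above.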

Results

Motion direction biases in early visual areas

We characterized the organization of motion direction preferences in visual cortex using periodic stimulation analogous to that used for mapping retinotopic organization. Observers viewed a field of coherently translating dots presented within a large annular aperture centered on fixation; the direction of the dots changed every 3 s and cycled through 8 evenly spaced directions around the clock (Fig. 1A). The responses of each voxel were fit to a sinusoid with the period of the stimulus. The phase of the best-fitting sinusoid corresponded to the voxel's motion direction preference. The SNR of the responses for each voxel was quantified as the correlation between the best-fitting sinusoid and the time series (Engel et al., 1997). Direction preferences of the voxels were visualized on flattened representations of each observer's visual cortex thresholded by SNR (Fig. 1B,C). Retinotopic maps were measured in the same observers using the pRF method (Dumoulin and Wandell, 2008; see Materials and Methods, “Retinotopic maps”).

We observed a coarse-scale organization of motion direction preferences in early visual areas (V1–V3; Fig. 1B,C). Voxels with peripheral pRFs preferred inward motion: in the left hemisphere, colors traversed from purple-pink in dorsal regions (upward motion) to red (leftward motion) to yellow-green in ventral regions (downward motion); in the right hemisphere, colors traversed from purple-blue in dorsal regions (upward motion) to blue-cyan (rightward motion) to yellow-green in ventral regions (downward motion). Voxels with central/foveal pRFs preferred outward motion: color traversal from dorsal to ventral regions in the left hemisphere represented downward, rightward, and then upward motions, whereas color traversal from dorsal to ventral regions in the right hemisphere represented downward, leftward, and then upward motions. Voxels with pRFs at intermediate eccentricities had low SNR and failed to show any systematic preference for any motion directions (not visible on the flat maps thresholded by SNR). At both small and large eccentricities, motion direction preferences varied smoothly with the polar angle component of the retinotopic maps, with neighboring voxels in cortex preferring similar motion directions. In summary, voxels encoding visual field locations near or at the edges of the annular stimulus aperture showed a systematic organization of direction preferences for motion directions orthogonal to the aperture edge and pointing into the aperture. We did not observe a similar organization of motion direction preferences in the motion-selective visual area MT+.

Replicating previous results (Kamitani and Tong, 2006; Serences and Boynton, 2007; Apthorp et al., 2013; Beckett et al., 2012), motion directions were decoded from the fMRI responses using a multivariate classification analysis (Fig. 1D–F). Specifically, we treated the eight directions as eight different stimulus categories. For each observer and each visual area, the response amplitudes of each voxel to each of the eight categories were measured separately for each run. The data were then split into training and test sets. We trained a linear classifier on the multivariate pattern of voxel responses from the training data and used it to predict the stimulus motion directions from the fMRI responses in the test data (see Materials and Methods, “Decoding motion direction”).

Decoding accuracy increased with the number of voxels (randomly selected from each visual area), reaching asymptotic performance at ∼100 voxels in each observer (Fig. 1D,E). Consistent with previous studies (Kamitani and Tong, 2006; Serences and Boynton, 2007; Beckett et al., 2012), motion-decoding accuracies were higher in V1–V3 than in MT+, regardless of whether the number of voxels was restricted to compensate for the smaller size (cortical surface area) of MT+ compared with the other visual cortical areas (Fig. 1D–F). As a control, for each observer we also performed decoding on the responses from voxels in a patch of gray matter outside of visually responsive regions of cortex. As expected, decoding accuracy for this gray matter region hovered around the theoretical chance performance (12.5%).
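The dependence of decoding accuracy on the number of randomly selected voxels can be illustrated with the sketch below. It assumes one response-amplitude pattern per direction per run, uses leave-one-run-out cross-validation, and substitutes a nearest-class-mean classifier (linear decision boundaries) for the unspecified linear classifier; the array layout and the name `decode_runs` are hypothetical.

```python
import numpy as np

def decode_runs(amps, n_voxels, rng, n_draws=20):
    """Mean leave-one-run-out accuracy over random voxel subsets.

    amps: (n_runs, n_dirs, n_total_voxels) response amplitudes (assumed layout).
    """
    n_runs, n_dirs, n_total = amps.shape
    accs = []
    for _ in range(n_draws):
        vox = rng.choice(n_total, size=n_voxels, replace=False)   # random voxel subset
        sub = amps[:, :, vox]
        correct = 0
        for test in range(n_runs):
            means = np.delete(sub, test, axis=0).mean(axis=0)     # class means from training runs
            d = ((sub[test][:, None, :] - means[None, :, :]) ** 2).sum(axis=2)
            correct += np.sum(d.argmin(axis=1) == np.arange(n_dirs))
        accs.append(correct / (n_runs * n_dirs))
    return float(np.mean(accs))
```

Evaluating `decode_runs` over a range of `n_voxels` values traces out an accuracy-versus-voxel-count curve; with synthetic voxels carrying weak, random direction tuning, accuracy rises from near chance toward ceiling as more voxels are added.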

Aperture-inward versus fovea-inward bias of motion direction preferences

Previous studies have suggested that fMRI responses at different eccentricities in early visual cortex show either an inward or outward motion direction bias relative to fixation (Raemaekers et al., 2009). The response bias that we observed might therefore reflect the organization of motion selectivity at different eccentricities. Alternatively, the bias might be linked to the edges of the stimulus aperture, which we refer to as an “aperture-inward” bias. If this were the case, then the bias would change systematically with the location and geometry of the stimulus aperture.

To distinguish between these two possibilities, we presented coherently moving dots in two smaller circular apertures on either side of fixation. As in the large annulus experiment, dots in each aperture cycled through eight evenly spaced directions every 24 s, but the directions in the left and right apertures were always opposite to one another (Fig. 2A,B). Response phases of the voxels, which corresponded to opposite motion directions in the left and right hemifields, were visualized on the flattened cortical maps (Fig. 2A).

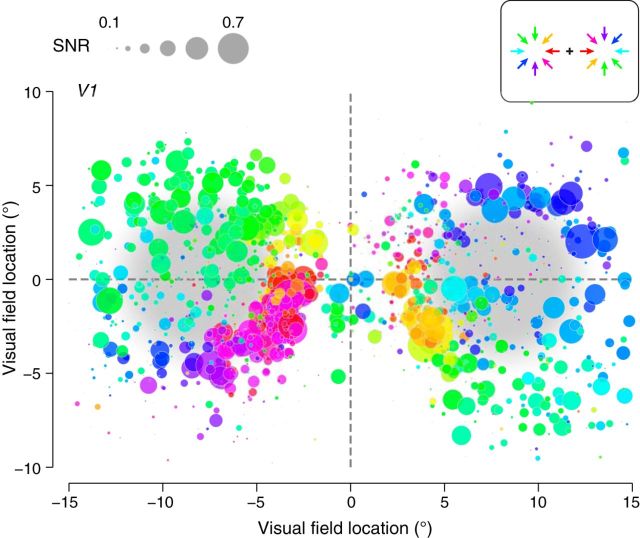

We again observed a smooth progression of response phases (corresponding to motion direction preferences) in regions of V1–V3 corresponding to the edges of the stimulus apertures (Fig. 2C,D). When voxels were visualized as a function of their pRF locations in the visual field, they showed a clear, systematic organization of motion direction preferences, which pointed radially toward the centers of the stimulus apertures (Fig. 3). Voxels with pRFs at the centers of the apertures had low SNRs and failed to show any systematic preference for any motion directions. For each observer, the motion preference of each voxel was reliable across sessions, as quantified with circular correlation rc between the direction preferences estimated from separate scanning sessions on different days (p < 0.001 for V1, V2, V3 in each observer; Table 1, top; see Materials and Methods, “Map similarity”).
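The across-session reliability measure can be sketched with a circular-circular correlation and a one-tailed randomization test. The text does not say which circular correlation coefficient was used; the sketch uses the Jammalamadaka–SenGupta form (sines of deviations from each variable's circular mean), and the add-one p-value correction and function names are our choices.

```python
import numpy as np

def circ_mean(a):
    """Circular mean of angles (radians)."""
    return np.angle(np.mean(np.exp(1j * np.asarray(a))))

def circ_corr(a, b):
    """Circular-circular correlation (Jammalamadaka-SenGupta form), angles in radians."""
    sa = np.sin(np.asarray(a) - circ_mean(a))
    sb = np.sin(np.asarray(b) - circ_mean(b))
    return np.sum(sa * sb) / np.sqrt(np.sum(sa ** 2) * np.sum(sb ** 2))

def circ_corr_perm_p(a, b, n_perm=1000, seed=0):
    """Observed rc and one-tailed randomization p value from shuffling voxel pairings."""
    rng = np.random.default_rng(seed)
    obs = circ_corr(a, b)
    null = np.array([circ_corr(a, rng.permutation(b)) for _ in range(n_perm)])
    return obs, (np.sum(null >= obs) + 1) / (n_perm + 1)
```

Here `a` and `b` would be the direction preferences (response phases) of the same voxels estimated in two sessions; because the coefficient is built from deviations around each circular mean, a constant phase offset between sessions does not lower it.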

Figure 3.

Motion direction preferences of voxels in V1 from the blank background condition pooled across observers (n = 5). Each circle corresponds to a single voxel, the coordinates of which represent the center of the voxel's pRF. Circle size is proportional to SNR. Colors indicate motion direction preferences, opposite in left and right hemifields (inset). Dashed lines indicate horizontal and vertical visual field meridians. Pale gray patches indicate stimulus apertures.

Table 1.

Direction preferences within and across experiments

| | V1 | V2 | V3 | V1–V3 | MT+ |
|---|---|---|---|---|---|
| Within | | | | | |
| O1 | 0.37 | 0.55 | 0.55 | 0.45 | 0.15 |
| O2 | 0.37 | 0.40 | 0.48 | 0.40 | 0.18 |
| O3 | 0.32 | 0.40 | 0.44 | 0.42 | 0.15 |
| O4 | 0.42 | 0.67 | 0.46 | 0.56 | 0.04 |
| O5 | 0.27 | 0.32 | 0.30 | 0.28 | 0.17 |
| Group | 0.46 | 0.54 | 0.44 | 0.51 | 0.17 |
| Between | | | | | |
| O1 | 0.000 | 0.000 | 0.000 | 0.000 | 0.523 |
| O2 | 0.021 | 0.157 | 0.000 | 0.001 | 0.019 |
| O3 | 0.000 | 0.001 | 0.000 | 0.000 | 0.638 |
| O4 | 0.000 | 0.000 | 0.019 | 0.000 | 0.153 |
| Group | 0.000 | 0.000 | 0.000 | 0.000 | 0.046 |

Top (Within), Circular correlations, across voxels, between direction preferences measured across two sessions of the two circles (blank background) experiment on separate days. Correlations are listed for each visual area, for each individual observer (O1–O5), for data combined across V1, V2, and V3, and for data combined across observers (“group”). Bold font indicates correlations that were significantly higher than chance as determined by a one-tailed randomization test (p < 0.05, Bonferroni corrected for the number of visual areas).

Bottom (Between), Differences (p values) in direction preferences across experiments (two circles versus large annulus). Bold font indicates that within-experiment circular correlations were significantly greater than across-experiment correlations (one-tailed randomization test with Bonferroni correction).

The organization of direction preferences depended systematically on the stimulus aperture. Direction preferences of voxels obtained from the large annulus experiment were not well predicted by the direction preferences from the two circles experiment. Four observers participated in both the large annulus and two circles experiments. For each of those observers and each visual area, motion direction preferences across voxels were significantly less similar across the two experiments with different stimulus apertures than across scanning sessions with the same aperture (Table 1, bottom).

Motion direction preferences of voxels in the two circles experiment correlated strongly with an aperture-inward motion prediction. We quantified the aperture-inward bias in a visual area by comparing the measured response phase of each voxel with the response phase predicted by preferred motion directions pointing radially toward the centers of the circular apertures. The aperture-inward prediction was computed analytically using the retinotopic locations corresponding to the center of each voxel's pRF (estimated from separate scanning sessions; see Materials and Methods, “Aperture-inward predictions”). The correlation between measured and predicted response phases for V1 (combined across observers) is shown in Figure 4A (rc = 0.39, p < 0.001). Similar results were observed for V1, V2, and V3 for four of five individual observers (Table 2, top).
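The analytic aperture-inward prediction can be sketched as the direction of the vector from each voxel's pRF center to the center of the aperture in the same hemifield. We assume aperture centers at (±8°, 0), as in the localizer description. Converting predicted directions to response phases depends on the phase convention; because the two apertures always moved in opposite directions, `predicted_phase` assumes that right-hemifield phases are offset by π. That convention is a hypothetical choice, not taken from the text, and both function names are ours.

```python
import numpy as np

def aperture_inward_direction(px, py, aperture_x=8.0, aperture_y=0.0):
    """Direction (radians; 0 = rightward, counterclockwise positive) from each pRF
    center (px, py in deg) toward the center of the aperture in the same hemifield.
    Aperture centers at (+/-aperture_x, aperture_y) are an assumption; the
    direction is undefined for a pRF exactly at an aperture center."""
    px = np.asarray(px, dtype=float)
    py = np.asarray(py, dtype=float)
    cx = np.where(px >= 0, aperture_x, -aperture_x)
    return np.arctan2(aperture_y - py, cx - px) % (2 * np.pi)

def predicted_phase(px, py, **kwargs):
    """Predicted response phase; right-hemifield values shifted by pi (assumed convention)."""
    d = aperture_inward_direction(px, py, **kwargs)
    return np.where(np.asarray(px) >= 0, (d + np.pi) % (2 * np.pi), d)
```

The measured phases can then be compared with these predictions using a circular correlation across voxels.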

Table 2.

Aperture-inward bias

| | V1 | V2 | V3 | V1–V3 | MT+ |
|---|---|---|---|---|---|
| Blank background | | | | | |
| O1 | 0.38 | 0.34 | 0.56 | 0.49 | −0.13 |
| O2 | 0.52 | 0.59 | 0.43 | 0.52 | −0.04 |
| O3 | 0.05 | −0.06 | 0.04 | 0.00 | 0.10 |
| O4 | 0.24 | 0.34 | 0.23 | 0.24 | −0.07 |
| O5 | 0.30 | 0.25 | −0.02 | 0.23 | −0.05 |
| Group | 0.39 | 0.34 | 0.36 | 0.38 | −0.19 |
| Incoherent background | | | | | |
| O1 | 0.02* | 0.16* | 0.30* | 0.20* | −0.05 |
| O2 | −0.01* | 0.25* | 0.09* | 0.09* | 0.11 |
| O3 | 0.05 | 0.01 | −0.07 | −0.05 | 0.31 |
| O4 | −0.02* | 0.12* | 0.15 | 0.06* | 0.20 |
| O5 | 0.02* | 0.43 | 0.25 | 0.23 | 0.07 |
| Group | −0.02* | 0.22* | 0.21* | 0.12* | 0.03 |

Circular correlations, across voxels, between measured direction preferences and the direction preferences predicted by an aperture-inward bias. Same format as Table 1, top. Asterisks in the incoherent background section indicate correlations that were significantly smaller than those in the blank background section (p < 0.05, one-tailed randomization test, Bonferroni corrected).

An aperture-inward bias was not evident in MT+. Instead, MT+ exhibited preferences for motion toward fixation, a “fovea-inward” bias. We adopted this term to distinguish the bias from the aperture-inward bias, which was defined with respect to the location of the stimulus aperture. This fovea-inward bias was evident as a clustering of measured response phases near 270° in Figure 4B. It was also evident in the flat maps (Fig. 2C,D, cyan). Computing the average responses to each of the eight directions, separately for left and right MT+, confirmed a consistent fovea-inward bias in both hemispheres: left MT+ responded most strongly to leftward motion and right MT+ responded most strongly to rightward motion (Fig. 4F, filled circles). Unlike early visual areas, there was no evidence that motion direction preferences in MT+ were less similar across experiments with different stimulus apertures than across scanning sessions with the same aperture (Table 1, bottom).

Although motion direction preferences of voxels in V1–V3 were dominated by the aperture-inward bias (Figs. 2C,D, 3, 4A), there was also evidence for a weaker fovea-inward bias (Fig. 4A, clustering at 270°). Averaging the responses across voxels as a function of motion direction revealed that both left and right V1 responded more to fovea-inward motion than to other directions (Fig. 4E, filled circles). V2 and V3 (data not shown) were similar to V1. Therefore, early visual areas showed a weak preference for fovea-inward motion in addition to the bias related to the stimulus aperture.

To further test the hypothesis that the aperture-inward bias was linked to the edges of the stimulus aperture, we surrounded the coherently moving dots with a larger field of incoherent dots, which removed the contrast-defined aperture edge (Fig. 2E). The aperture-inward bias in V1–V3 was largely attenuated (Fig. 2F,G). The response phases of voxels in V1 were not significantly correlated with the aperture-inward prediction (Fig. 4C; rc = −0.02, p = 0.81), though a weaker aperture-inward bias remained in V2 and V3 (Table 2, bottom). The fovea-inward bias remained in MT+ (Fig. 4D,F, empty circles). A weak fovea-inward bias also remained in V1–V3 (Fig. 4C,E, empty circles).

Motion decoding depends on aperture-inward bias

Motion-decoding accuracies depended on the aperture-inward bias (Fig. 5). When the dot patches were presented against a blank background, decoding accuracies were high in V1–V3, where the aperture-inward bias was evident, and low in MT+ (Fig. 5A, filled bars). The magnitude of the bias was manipulated by surrounding the coherent dot stimulus with a larger field of incoherent dots. Surrounding the stimulus with incoherent dots lowered the SNR in V1–V3 and attenuated the aperture-inward motion biases in V1–V3 (Table 2, bottom). Decoding accuracies were significantly lower in V1–V3 and remained low in MT+ (Fig. 5A, empty bars).

Variability in decoding accuracies across observers, visual areas, and stimulus conditions was well predicted by a combination of both the aperture-inward bias and SNR (Fig. 5D). Decoding accuracy was high only when SNR was high and when direction preferences matched the aperture-inward prediction (Fig. 5D, red and orange symbols in top left corner). MT+ exhibited direction preferences far from the aperture-inward prediction regardless of SNR and exhibited low decoding accuracies (Fig. 5D, square symbols on the right). Multiple regression revealed that the aperture-inward bias in tandem with SNR accounted for 75% of the variance of decoding accuracy (p < 0.0001; $a = b_1 d + b_2 s + c$, where $a$ is decoding accuracy, $d$ is the mean angular distance between each voxel's direction preference and the aperture-inward prediction, $s$ is SNR, $b_1 = -0.28 \pm 0.08$, $b_2 = 1.79 \pm 0.48$, and $c = 0.32 \pm 0.16$). SNR increases with response amplitude, which is also known to affect decoding accuracy (Smith et al., 2011).
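The two quantities in this regression can be computed as sketched below: the mean wrapped angular distance between measured and predicted direction preferences (bounded between 0 and π, as on the abscissa of Fig. 5D), and an ordinary least-squares fit of $a = b_1 d + b_2 s + c$ with its $R^2$. Standard errors of the coefficients are omitted, and the function names are hypothetical.

```python
import numpy as np

def mean_angular_distance(pref, pred):
    """Mean absolute wrapped difference between two sets of angles, in [0, pi]."""
    diff = np.asarray(pref, dtype=float) - np.asarray(pred, dtype=float)
    return float(np.mean(np.abs(np.angle(np.exp(1j * diff)))))

def fit_accuracy_model(d, s, acc):
    """Least-squares fit of acc = b1*d + b2*s + c; returns ([b1, b2, c], R^2)."""
    acc = np.asarray(acc, dtype=float)
    X = np.column_stack([d, s, np.ones(len(acc))])
    coef, *_ = np.linalg.lstsq(X, acc, rcond=None)
    resid = acc - X @ coef
    r2 = 1 - (resid @ resid) / np.sum((acc - acc.mean()) ** 2)
    return coef, r2
```

Each row of the regression would correspond to one observer, visual area, and background condition, with `d` from `mean_angular_distance` and `s` the mean SNR across voxels.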

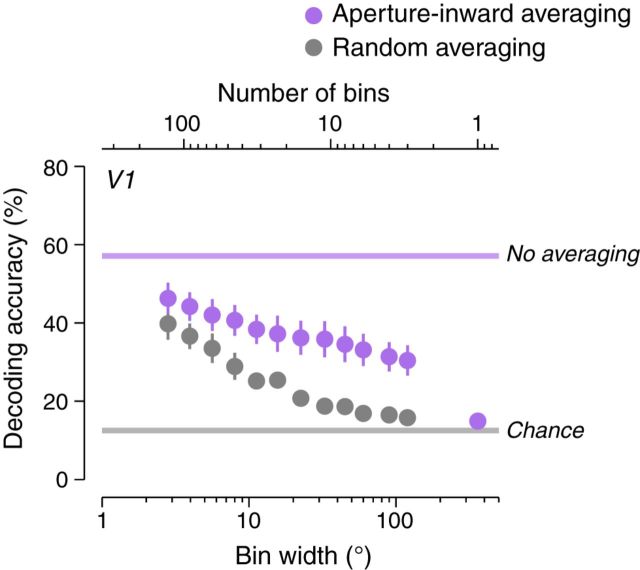

To test the hypothesis that the aperture-inward bias was sufficient to account for motion decoding in early visual areas, we binned voxels predicted by an aperture-inward bias to have similar response phases (i.e., similar motion direction preferences) and performed decoding on the averaged responses (see Materials and Methods, “Binning by aperture-inward predictions”). The aperture-inward prediction was calculated analytically using the pRF locations of the voxels. For comparison, we also performed decoding after binning voxels randomly rather than based on the aperture-inward prediction. If the voxels within each bin had dissimilar direction preferences (as in the case of random binning), then increasing binning would result in averaged responses that would be decreasingly direction selective, and decoding accuracy would be worse based on these averaged responses. If the aperture-inward bias were sufficient for decoding, then binning based on the aperture-inward prediction would average together voxels with relatively similar direction preferences and preserve direction selectivity in averaged responses. Although decoding was still expected to degrade with more binning, decoding accuracy should have been high relative to random binning and should have remained above chance (Freeman et al., 2011; Beckett et al., 2012).
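The binning comparison can be sketched as follows: voxels are grouped into equal-width bins of predicted response phase and their responses averaged, or, for the control, the same bin labels are shuffled across voxels so that bin sizes are preserved. Decoding then uses the binned responses. The leave-one-run-out nearest-class-mean decoder is a stand-in for the unspecified linear classifier, and the array layout and function names are assumptions.

```python
import numpy as np

def bin_voxels(amps, pred_phase, n_bins, rng=None):
    """Average responses within bins of predicted response phase.

    amps: (..., n_voxels) responses; pred_phase: (n_voxels,) radians.
    If rng is given, bin labels are shuffled across voxels (random-binning control).
    """
    edges = np.linspace(0, 2 * np.pi, n_bins + 1)
    labels = np.digitize(np.mod(pred_phase, 2 * np.pi), edges) - 1
    labels = np.clip(labels, 0, n_bins - 1)
    if rng is not None:
        labels = rng.permutation(labels)           # same bin sizes, random membership
    out = [amps[..., labels == k].mean(axis=-1) for k in range(n_bins) if np.any(labels == k)]
    return np.stack(out, axis=-1)

def loro_accuracy(amps):
    """Leave-one-run-out nearest-class-mean decoding; amps: (n_runs, n_dirs, n_features)."""
    n_runs, n_dirs, _ = amps.shape
    correct = 0
    for test in range(n_runs):
        means = np.delete(amps, test, axis=0).mean(axis=0)
        d = ((amps[test][:, None, :] - means[None, :, :]) ** 2).sum(axis=2)
        correct += np.sum(d.argmin(axis=1) == np.arange(n_dirs))
    return correct / (n_runs * n_dirs)
```

In simulated data where each voxel's preferred direction lies near its predicted phase, averaging by prediction keeps the bins direction selective and decoding stays high, whereas random binning averages away most of the selectivity.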

Decoding accuracy was high in V1 when responses were averaged according to the aperture-inward prediction (Fig. 6A, purple circles). As the bin width was increased (i.e., the number of bins decreased), decoding accuracies remained well above chance, even with three bins. Conversely, decoding accuracy for random binning (Fig. 6A, gray circles) degraded close to chance for moderate bin widths. These results were consistent with the hypothesis that the aperture-inward bias was sufficient to account for decoding accuracy in V1. V2 and V3 were similar to V1.

Figure 6.

Aperture-inward bias was sufficient for motion decoding. Decoding accuracies (V1, blank background condition) were computed after binning and averaging across voxels. Purple circles indicate voxels binned according to whether they were predicted by an aperture-inward bias to have similar response phases. Gray circles are voxels binned randomly and averaged. The rightmost purple circle is the decoding accuracy after averaging all voxels within the ROI. Error bars indicate SEM across observers. Horizontal purple line is the decoding accuracy without binning (mean across observers). Horizontal gray line is the chance decoding accuracy (12.5%).

A weak or absent aperture-inward bias corresponded to low decoding accuracies, but decoding was still significantly above chance. We speculate that residual decoding was largely due to the fovea-inward bias for the following three reasons. First, the fovea-inward bias was evident in MT+ for both the blank and incoherent background conditions (Fig. 4B,D,F, response phases near 270°) and decoding accuracy in MT+ was above chance in both conditions. Second, decoding accuracies were highest in MT+ for the fovea-inward motion directions and lower for other directions (Fig. 5B,C, response phase = 270°). Decoding accuracies for the other directions were not at chance because any motion preference that deviated from perfectly fovea-inward enabled above-chance decoding. However, we did not observe this asymmetry in decoding accuracies for V1–V3 in the blank background condition, where the aperture-inward bias corresponded to voxels with all response phases, and thus all motion direction preferences. Third, although the incoherent background attenuated the aperture-inward bias in V1–V3, there was still a weak fovea-inward bias (Fig. 4C,E). Decoding accuracies in V1–V3 for the incoherent background condition were highest for the fovea-inward motion directions and lower for other directions, similar to MT+ (Fig. 5C, response phases = 270°). There remained a weak aperture-inward bias in V2 and V3 (Table 2, bottom), which also contributed to decoding in the incoherent background condition. This weak aperture-inward bias corresponded to higher decoding accuracies in V2 and V3 than in V1 and MT+ for all motion directions (Fig. 5C).

Response amplitudes and location of motion origin

Previous studies have reported asymmetries in fMRI response amplitudes between the leading and trailing edges of a motion stimulus (Whitney et al., 2003; Liu et al., 2006), suggesting that response amplitudes may vary systematically with distance from the location of motion origin. We hypothesized that the aperture-inward bias in early visual areas arises from such variations in response amplitudes. Following and extending previous work (e.g., see Figure 5 in Liu et al., 2006), we characterized changes in response amplitude as a function of distance from the aperture edges by measuring responses to two horizontal “strips” of motion extending 13° of visual angle. Observers viewed coherently moving dots in two sharp-edged, rectangular apertures to either side of fixation (Fig. 7A). Dots in each aperture alternated between fovea-inward and fovea-outward motion every 9 s. The response amplitude of each voxel (Fig. 7B) was characterized by projecting the measured time series onto a fixed-phase sinusoid. This procedure yielded a signed response amplitude that indicated which of the two motion directions the voxel responded to preferentially (see Materials and Methods, “Response amplitude as a function of distance”).
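Projection onto a fixed-phase sinusoid can be sketched as below. With a whole number of cycles, the projection of $A\cos(\omega t - \phi) + c$ onto a unit-amplitude $\cos(\omega t - \phi_0)$, scaled by $2/N$, equals $A\cos(\phi - \phi_0)$, so the sign indicates which half-cycle's direction evoked the larger response. Which reference phase corresponds to inward motion (after hemodynamic delay) is left as the parameter `ref_phase`, an assumption; the function name is hypothetical.

```python
import numpy as np

def signed_amplitude(ts, n_cycles, ref_phase):
    """Signed amplitude: projection of a mean-removed time series onto a fixed-phase
    unit sinusoid, scaled so that A*cos(w t - ref_phase) returns A."""
    ts = np.asarray(ts, dtype=float)
    ts = ts - ts.mean()
    t = np.arange(ts.size)
    ref = np.cos(2 * np.pi * n_cycles * t / ts.size - ref_phase)
    return 2.0 * (ts @ ref) / ts.size
```

A voxel responding in phase with the reference gets a positive value, one in antiphase a negative value, and one responding equally to both directions (phase at 90° to the reference) a value near zero.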

Responses were largest in voxels corresponding to the location of motion origin at the aperture edge and decreased gradually with distance from that location. Voxels with pRFs near the fovea exhibited larger responses to outward motion (Fig. 7B, light purple), corresponding to negative response amplitudes (Fig. 7C,D, left ends of purple curves). Voxels near the periphery exhibited larger responses to inward motion (Fig. 7B, dark purple), corresponding to positive response amplitudes (Fig. 7C,D, right ends of purple curves). Intermediate voxels exhibited no clear direction preference (Fig. 7C,D, purple curve near 5° and 20 mm). Results for V2 and V3 (data not shown) were similar to V1. The absolute values of the response amplitudes were smaller in voxels corresponding to the outer edge than to the inner edge of the stimulus (Fig. 7C,D, purple curves), presumably because receptive fields (RFs) are larger at peripheral locations and were therefore less stimulated by the rectangular stimulus. The position where the preference switched from inward motion to outward motion was biased toward lower eccentricities (Fig. 7C), presumably because RFs at the outer edge were larger and thus covered a greater portion of the stimulus. After accounting for cortical magnification, the crossing point was approximately centered between the inner and outer aperture edges (Fig. 7D). Response amplitudes varied over a distance of ∼35 mm on the cortical surface, consistent with previous fMRI measurements of the spatial extent of visual cortical areas using a comparable field of view (Amano et al., 2009). It is unlikely that this variation in response amplitudes was due to hemodynamic blurring, because the distance was far greater than the spatial extent of hemodynamic blurring, which has an estimated point spread of ∼3.5 mm (Engel et al., 1997; Parkes et al., 2005).
In contrast, the responses of MT+ voxels exhibited a fovea-inward bias regardless of which aperture edge corresponded to motion origin (Fig. 7C,D, red curves). We conclude that the aperture-inward bias in V1–V3 arose from a systematic decrease in response amplitudes with distance from the location of motion origin.

We observed similar results (data not shown) in the two circles experiment, even though that experiment was not designed to measure response amplitudes as a function of distance: the edges of its apertures spanned a range of eccentricities, and it used eight motion directions. These results support the same conclusion.

Eye movements were not a confound

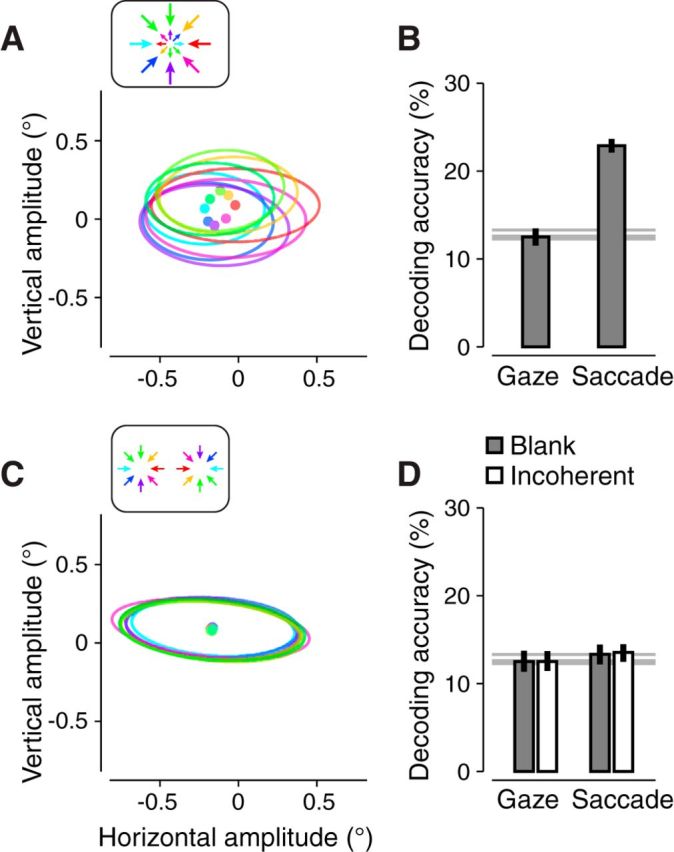

It was critical that observers maintained fixation for the duration of each run. Large eye movements were unlikely because all observers were experienced psychophysical observers and because the demanding fixation task encouraged accurate fixation. We monitored eye movements in a subset of the fMRI sessions (see Materials and Methods, “Eye movement measurements”). These data confirmed that large saccades were rare (0.1% of saccades >2°; 1.5% of saccades >1°) and that eye position remained within a small window around fixation (1 SD: 0.29°). However, there were small, involuntary eye movements, such as microsaccades, and possibly optokinetic nystagmus (OKN). OKN is characterized by periods of pursuit-like drift in eye position in the direction of stimulus motion (slow phase), followed by a rapid, saccade-like return toward fixation (fast phase) (Cohen et al., 1977). These small eye movements would confound the interpretation of our results if they varied systematically with motion direction. To address this possibility, we measured eye position across the 3 s of each stimulus presentation epoch and detected small saccades (<2°). These saccades may have included both microsaccades and small return saccades during the fast phase of OKN.
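
One common way to detect such small saccades is the velocity-threshold algorithm of Engbert and Kliegl (2003), sketched below. This is an illustrative assumption rather than the procedure described in Materials and Methods; the function name, the threshold multiplier λ = 6, the three-sample minimum duration, and the 500 Hz sampling rate in the example are hypothetical, while the 2° amplitude cutoff comes from the text.

```python
import numpy as np

def detect_saccades(x, y, fs, lam=6.0, min_samples=3, max_amp=2.0):
    """Velocity-threshold saccade detection in the style of Engbert & Kliegl (2003).

    x, y        : eye position (degrees) sampled at fs Hz
    lam         : threshold multiplier on the median-based velocity SD
    min_samples : minimum event duration in samples
    max_amp     : keep only events smaller than this amplitude (degrees)
    Returns a list of (start_index, end_index, dx, dy) tuples.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dt = 1.0 / fs
    # Five-sample moving-window velocity; the first and last two samples stay 0
    vx = np.zeros_like(x)
    vy = np.zeros_like(y)
    vx[2:-2] = (x[4:] + x[3:-1] - x[1:-3] - x[:-4]) / (6.0 * dt)
    vy[2:-2] = (y[4:] + y[3:-1] - y[1:-3] - y[:-4]) / (6.0 * dt)
    # Median-based estimate of velocity spread, robust to the saccades themselves
    sx = np.sqrt(np.median(vx ** 2) - np.median(vx) ** 2)
    sy = np.sqrt(np.median(vy ** 2) - np.median(vy) ** 2)
    # Samples outside the elliptic threshold are candidate saccade samples
    above = (vx / (lam * sx)) ** 2 + (vy / (lam * sy)) ** 2 > 1.0

    events = []
    i, n = 0, above.size
    while i < n:
        if not above[i]:
            i += 1
            continue
        j = i
        while j + 1 < n and above[j + 1]:
            j += 1
        if j - i + 1 >= min_samples:
            dx, dy = x[j] - x[i], y[j] - y[i]
            if np.hypot(dx, dy) < max_amp:
                events.append((i, j, dx, dy))
        i = j + 1
    return events

# Usage: 1 s of noisy fixation at a hypothetical 500 Hz, with one 0.5 deg rightward saccade
rng = np.random.default_rng(1)
fs, n = 500, 500
x = rng.normal(0.0, 0.005, n)
y = rng.normal(0.0, 0.005, n)
x[250:] += np.minimum(0.05 * np.arange(1, n - 249), 0.5)   # 10-sample ramp to 0.5 deg
for start, end, dx, dy in detect_saccades(x, y, fs):
    print(f"samples {start}-{end}: dx = {dx:.2f} deg, dy = {dy:.2f} deg")
```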

Eye position did not covary with stimulus motion direction. The angular component of eye position was not significantly correlated with motion direction (large annulus experiment: rc = 0.01, p = 0.23). We also trained a multivariate classifier to decode motion direction from eye position (see Materials and Methods, “Decoding motion direction from eye movements”). The classifier performed at chance for both the large annulus and two circles experiments (Fig. 8B,D, “Gaze”), indicating that motion direction could not be discriminated from eye position during stimulus presentation.
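
The circular correlation rc relates two angular variables, such as motion direction and the angular component of eye position. The sketch below assumes the Jammalamadaka–SenGupta circular–circular coefficient with its normal-approximation p-value, as implemented in the CircStat toolbox's circ_corrcc; whether this matches the exact definition used here is an assumption, and the example angles are hypothetical.

```python
import math
from statistics import NormalDist

def circ_mean(angles):
    """Circular mean direction (radians)."""
    return math.atan2(sum(math.sin(a) for a in angles),
                      sum(math.cos(a) for a in angles))

def circ_corrcc(alpha, beta):
    """Circular-circular correlation and normal-approximation p-value.

    Follows the Jammalamadaka-SenGupta coefficient as implemented in the
    CircStat toolbox (circ_corrcc); assumed here, not taken from this paper.
    alpha, beta : equal-length sequences of angles in radians
    """
    n = len(alpha)
    a_bar, b_bar = circ_mean(alpha), circ_mean(beta)
    sa = [math.sin(a - a_bar) for a in alpha]
    sb = [math.sin(b - b_bar) for b in beta]
    num = sum(p * q for p, q in zip(sa, sb))
    den = math.sqrt(sum(p * p for p in sa) * sum(q * q for q in sb))
    rho = num / den
    # Test statistic, approximately standard normal under independence
    l20 = sum(p * p for p in sa) / n
    l02 = sum(q * q for q in sb) / n
    l22 = sum((p * q) ** 2 for p, q in zip(sa, sb)) / n
    z = math.sqrt(n * l20 * l02 / l22) * rho
    pval = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return rho, pval

# Usage: hypothetical motion directions and saccade directions (radians)
motion = [0.1, 0.5, 1.2, 2.0, 2.3, 3.0, 4.1, 5.2]
saccade = [m + math.pi for m in motion]      # exactly opposite to the motion
rc, p = circ_corrcc(motion, saccade)
print(rc, p)
```

Because the coefficient depends only on how deviations from each circular mean covary, it is unchanged by a constant rotation of either variable: saccades directed exactly opposite to the motion give rc = 1 in this example, so saccades opposite to stimulus motion would appear as a positive rc.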

Figure 8.

Eye position measurements. A, B, Large annulus experiment. C, D, Two circles experiment. A, C, Median saccade vector for each stimulus motion direction. Colors indicate stimulus motion directions (insets). Ellipses indicate 1 SD across saccades. B, D, Motion decoding using either median eye positions (“Gaze”) or saccade vectors (“Saccade”). Error bars indicate 95% confidence intervals. Thick and thin horizontal gray lines indicate the mean and 95th percentile of the null distribution of decoding accuracies. Filled bars indicate the blank background condition; empty bars indicate the incoherent background condition. Measurements were pooled across runs, sessions, and observers for each experiment.

Saccade vectors did vary systematically with motion direction, but this was unlikely to have confounded the interpretation of the fMRI results. During the large annulus experiment, small saccades tended to be directed opposite to the direction of stimulus motion (Fig. 8A; rc = 0.35, p < 0.001). We interpret these saccades as corrections for fixation errors induced by the slow phase of OKN. Accordingly, motion direction could be decoded from these 2D saccade vectors (Fig. 8B, “Saccade”). However, this covariation between saccade vector and motion direction was unlikely to account substantially for the voxel responses, for two reasons. First, we observed little covariation between saccade direction and stimulus motion in the two circles experiment (Fig. 8C), and correspondingly, motion direction could not be decoded from the saccade vectors in this experiment (Fig. 8D, “Saccade”). The two circles experiment was designed to minimize systematic fixation errors by presenting opposite stimulus motion in the left and right hemifields (Fig. 2A). Nonetheless, the aperture-inward bias (Figs. 2C,D, 3, 4A) and fMRI motion decoding (Fig. 5A) were robust for the two circles arrangement, indicating that neither depended on differences in saccade vectors across motion directions. Second, motion-decoding accuracies from saccade vectors were similar for the blank and incoherent background conditions (Fig. 8D, filled vs empty bars), suggesting that saccade vectors were similarly predictive of motion direction in both conditions. In contrast, the robustness of the aperture-inward bias and the accuracy of fMRI motion decoding differed substantially between the two background conditions.
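
Decoding motion direction from 2D saccade vectors, and comparing the result against a label-permutation null distribution summarized by its mean and 95th percentile (as in Figure 8), can be sketched as follows. The nearest-centroid classifier with leave-one-out cross-validation is a stand-in chosen for brevity, not necessarily the classifier described in Materials and Methods; the function names and synthetic data are hypothetical.

```python
import numpy as np

def loo_nearest_centroid_accuracy(X, labels):
    """Leave-one-out accuracy of a nearest-centroid classifier.

    X      : (n_trials, n_features) array, e.g., 2D saccade vectors
    labels : (n_trials,) motion-direction labels; each class needs >= 2 trials
    """
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    correct = 0
    for i in range(labels.size):
        train = np.arange(labels.size) != i          # hold out trial i
        centroids = np.array([X[train & (labels == c)].mean(axis=0) for c in classes])
        dist2 = ((centroids - X[i]) ** 2).sum(axis=1)
        correct += classes[np.argmin(dist2)] == labels[i]
    return correct / labels.size

def permutation_null(X, labels, n_perm=200, seed=0):
    """Mean and 95th percentile of accuracies obtained with shuffled labels."""
    rng = np.random.default_rng(seed)
    null = np.array([loo_nearest_centroid_accuracy(X, rng.permutation(labels))
                     for _ in range(n_perm)])
    return null.mean(), np.percentile(null, 95)

# Usage: synthetic saccades directed opposite to eight motion directions
rng = np.random.default_rng(2)
labels = np.repeat(np.arange(8), 10)                  # 8 directions x 10 trials
directions = labels * np.pi / 4
X = -0.3 * np.column_stack([np.cos(directions), np.sin(directions)])
X += rng.normal(0.0, 0.05, X.shape)                   # hypothetical scatter (deg)
acc = loo_nearest_centroid_accuracy(X, labels)
null_mean, null_95 = permutation_null(X, labels, n_perm=100)
print(f"accuracy {acc:.2f}; null mean {null_mean:.2f}, 95th percentile {null_95:.2f}")
```

Shuffling labels keeps the number of trials per class fixed, so the null distribution reflects chance performance for exactly this design rather than a nominal 1/8.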

To test whether small saccades contributed partially to the fMRI results in the large annulus experiment, we removed saccade-evoked responses via a linear projection (see Materials and Methods, “Saccade removal analysis”) and recomputed motion direction preferences and decoding accuracy from the saccade-removed voxel time series. Small saccades are known to be correlated with stimulus onset (Engbert and Kliegl, 2003), so removing saccade-evoked activity also removed some stimulus-evoked activity. Unsurprisingly, motion direction preferences, as visualized on each observer's visual cortex, were less robust than before saccade removal. Nonetheless, motion direction preferences across voxels were highly correlated with those before saccade removal (V1: rc = 0.52 ± 0.07; MT+: rc = 0.53 ± 0.03; mean ± SEM across 4 observers). Decoding accuracies were also similar with and without saccade removal (reductions of at most 4% across all visual areas and observers; V1: 1.6 ± 0.7%; MT+: 0.4 ± 0.4%; mean ± SEM across 4 observers).
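
A linear projection of this kind can be sketched with a finite-impulse-response (FIR) model of saccade-evoked responses: saccade counts per TR, delayed by 0 to n_lags − 1 TRs, form a design matrix, and each voxel time series is replaced by its least-squares residual, that is, its projection onto the orthogonal complement of that design. The FIR basis, the eight-lag length, and the function names are assumptions for illustration; the actual regressors are described in Materials and Methods.

```python
import numpy as np

def fir_design(saccade_counts, n_lags):
    """FIR design matrix: column k is the saccade count delayed by k TRs."""
    counts = np.asarray(saccade_counts, dtype=float)
    T = counts.size
    S = np.zeros((T, n_lags))
    for lag in range(n_lags):
        S[lag:, lag] = counts[:T - lag]
    return S

def remove_saccade_responses(Y, saccade_counts, n_lags=8):
    """Project saccade-evoked components out of voxel time series.

    Y : (n_timepoints, n_voxels) array. Returns the residuals of a least-squares
    fit of the FIR saccade regressors, i.e., Y projected onto the orthogonal
    complement of the column space of the design matrix.
    """
    S = fir_design(saccade_counts, n_lags)
    beta, *_ = np.linalg.lstsq(S, Y, rcond=None)
    return Y - S @ beta

# Usage with synthetic data (all values hypothetical)
rng = np.random.default_rng(3)
T, n_vox = 200, 5
counts = rng.poisson(0.3, T)                                   # saccades per TR
saccade_part = fir_design(counts, 8) @ rng.normal(size=(8, n_vox))
stimulus_part = np.sin(2 * np.pi * np.arange(T) / 12)[:, None] * np.ones(n_vox)
Y_clean = remove_saccade_responses(saccade_part + stimulus_part, counts)
```

In real data, detected saccades cluster near stimulus onsets, so the FIR regressors are partly collinear with stimulus-evoked responses and the projection removes some stimulus-driven signal as well, which is the trade-off described in the text.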

Discussion

We observed a systematic organization of motion direction preferences in human V1–V3 that depended on the stimulus aperture. Modulation of fMRI responses was largest at the aperture edges, inducing an “aperture-inward” bias for motion directions toward the aperture center. fMRI motion decoding depended strongly on this aperture-inward bias rather than on the absolute motion direction. We conclude that motion decoding did not reflect the underlying direction-selective columnar functional organization.

Origins of aperture-inward bias

Our results are consistent with previous reports that the trailing edges of a motion stimulus evoke larger responses in V1 than the leading edges (Whitney et al., 2003; Liu et al., 2006). The interpretation of this finding has remained controversial. Whitney et al. (2003) interpreted it as a displacement of the peak neural activation and concluded that the retinotopic representation in cortex shifts depending on stimulus motion. Liu et al. (2006) replicated the finding but disputed this conclusion; they found that differences in response amplitude to opposite motion directions did not significantly shift the retinotopic representation.

The aperture-inward bias in V1–V3 may reflect spatial interactions between visual motion signals along the path of motion (Raemaekers et al., 2009; Schellekens et al., 2013). Neural responses might have been suppressed when the stimulus could be predicted from the responses of neighboring neurons nearer the location of motion origin, a form of predictive coding (Rao and Ballard, 1999; Lee and Mumford, 2003). Under this hypothesis, spatial interactions between neurons depend on both stimulus motion direction and the neuron's relative RF locations, but the neurons themselves need not be direction selective. Perhaps consistent with this hypothesis, psychophysical sensitivity is enhanced at locations further along the path of motion than at motion origin (van Doorn and Koenderink, 1984; Verghese et al., 1999).

Alternatively, the aperture-inward bias may arise from an asymmetry in transient response amplitudes evoked by dot onsets and offsets. Dots that appear suddenly within the RF center of a neuron might evoke a larger onset-transient response than dots that have gradually moved into the RF. Therefore, a neuron with its RF at the aperture edge where motion originates might show a larger onset-transient response than a neuron with its RF at the center or opposite edge of the stimulus. We took two precautions to mitigate any potential effect of onset/offset transients. First, we used dots with limited lifetime, which helped to equalize dot onsets and offsets across the entire stimulus aperture. Second, we used stimulus edges with tapered contrast transitions, so that the onset transient within any given RF at the edge should have been greatly attenuated relative to that evoked by a sharp contrast edge. Nonetheless, it remains possible that residual onset-transient responses accounted for some portion of the aperture-inward bias. However, previous studies that reported response asymmetries between leading and trailing edges used other types of moving stimuli, including Gabor stimuli and contrast-modulated gratings (Whitney et al., 2003; Liu et al., 2006; Whitney and Bressler, 2007), and their results cannot be explained by asymmetries in onset-transient responses.
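
A toy one-dimensional simulation illustrates why limited dot lifetimes help equalize onsets across the aperture. It assumes that dots leaving the aperture re-enter at the origin edge and that expired dots are replotted at uniformly random positions; the aperture width, speed, lifetime, and function name are hypothetical, not the stimulus parameters used in these experiments.

```python
import numpy as np

def onset_positions(n_dots=500, n_frames=600, width=10.0, step=0.1,
                    lifetime=None, seed=0):
    """Positions at which dots (re)appear in a 1D aperture [0, width).

    Dots drift toward +x by `step` per frame, so x = 0 is the edge of motion
    origin. A dot that exits at x >= width wraps back to the origin edge. If
    `lifetime` is given (frames), a dot that reaches that age is instead
    replotted at a uniformly random position. Wraps and replots are onsets.
    """
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, width, n_dots)
    # Stagger initial ages so that expirations are spread over time
    age = rng.integers(0, lifetime, n_dots) if lifetime else np.zeros(n_dots, dtype=int)
    onsets = []
    for _ in range(n_frames):
        x += step
        age += 1
        exited = x >= width
        x[exited] -= width                         # re-enter at the origin edge
        expired = np.zeros(n_dots, dtype=bool)
        if lifetime:
            expired = (age >= lifetime) & ~exited
            x[expired] = rng.uniform(0.0, width, expired.sum())
        new = exited | expired
        age[new] = 0
        onsets.append(x[new])
    return np.concatenate(onsets)

# Fraction of onsets within the first tenth of the aperture (origin edge)
for lt in (None, 10):
    pos = onset_positions(lifetime=lt)
    print(f"lifetime={lt}: {np.mean(pos < 1.0):.2f} of {pos.size} onsets at origin edge")
```

With these hypothetical settings, every onset in the unlimited-lifetime case lands at the origin edge, whereas with a 10-frame lifetime under a fifth do (compared with a tenth for perfectly uniform onsets); the remaining excess comes from dots that wrap around, consistent with the possibility of residual onset transients.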

The aperture-inward bias might also arise from biases in the responses of direction-selective neurons with RFs at the aperture edges (Carlson, 2014). Carlson (2014) demonstrated that the edges of a grating stimulus produced biases in the responses of simulated orientation-selective neurons that depended on the orientation of the grating relative to the RF orientation, and that grating orientation could be decoded from these biases. A similar model of direction-selective neural responses might explain our finding in V1–V3 that motion direction biases and motion decoding depended on the shape of the stimulus aperture. Such a model, however, would also predict similar results in MT+, whereas we found no evidence for the aperture-inward bias in MT+.

We considered, and ruled out, two alternative possibilities for how the aperture-inward bias might arise. First, the bias might have arisen from orientation-selective responses to motion streaks (Geisler, 1999; Apthorp et al., 2013). This explanation implies that voxels would have shown equal responses for motion directions 180° apart (i.e., no direction selectivity), which is inconsistent with our results. Second, the response bias might have been evoked by the motion-defined (second-order) contour (Reppas et al., 1997; Larsson et al., 2010) or pop-out (perceptual saliency) associated with the aperture edge (Treisman and Gelade, 1980; Bergen and Julesz, 1983). This is also unlikely because, although the motion stimuli in our experiments created motion-defined contours, these contours did not change as a function of motion direction.

Motion direction preferences in visual cortex

Macaque MT exhibits a fovea-outward bias in the periphery beyond 12° (Albright, 1989). We observed a fovea-inward bias in human MT+ at locations within the central 14°. Further experiments with a wider field of view are needed to determine whether human MT+ contains a fovea-outward bias at greater eccentricities.

Several studies have reported radial direction biases in human V1–V3 (Raemaekers et al., 2009; Beckett et al., 2012; Schellekens et al., 2013). All of these results can be explained by the aperture-inward bias that we have described here. For example, Raemaekers et al. (2009) reported a fovea-outward bias at the smallest eccentricities, corresponding to the inner edge of the stimulus aperture, and a fovea-inward bias at greater eccentricities. Analogous arguments apply to the other two studies.

One fMRI study reported a fovea-inward bias in MT+ (Giaschi et al., 2007), consistent with our results. However, our results and those of Giaschi et al. (2007) seemingly contradict two studies that did not find a fovea-inward bias in MT+ (Raemaekers et al., 2009; Beckett et al., 2012). We found that MT+ voxels with pRFs to the left and right of fixation exhibited a fovea-inward bias (the two circles and two strips experiments), but our experiments did not determine whether voxels at other visual field locations in MT+ also exhibited a bias toward fixation (e.g., a downward bias for voxels with pRFs above fixation). Data from the large annulus experiment did not resolve this question conclusively (Fig. 1B,C). One reason might be that the fovea-inward bias is relatively small and we did not have sufficient data to detect it reliably. Another possibility is that MT+ contains an overrepresentation of inward horizontal motion, which would potentially explain the seemingly contradictory findings: Giaschi et al. (2007) reported a fovea-inward bias in MT+ but tested only horizontal and vertical motion directions, whereas the two studies that failed to find any motion direction bias in MT+ (Raemaekers et al., 2009; Beckett et al., 2012) both tested a wide range of motion directions spanning 360°.

We did not observe an aperture-inward bias in MT+, but it might exist nonetheless. The aperture-inward bias might be harder to detect in MT+ because of less precise retinotopic maps (due to larger RFs), broader tuning, or lower response amplitudes (Smith et al., 2011).

fMRI decoding