Abstract

A decision may be difficult because complex information processing is required to evaluate choices according to deterministic decision rules and/or because it is not certain which choice will lead to the best outcome in a probabilistic context. Factors that tax decision making such as decision rule complexity and low decision certainty should be disambiguated for a more complete understanding of the decision making process. Previous studies have examined the brain regions that are modulated by decision rule complexity or by decision certainty but have not examined these factors together in the context of a single task or study. In the present functional magnetic resonance imaging study, both decision rule complexity and decision certainty were varied in comparable decision tasks. Further, the level of certainty about which choice to make (choice certainty) was varied separately from certainty about the final outcome resulting from a choice (outcome certainty). Lateral prefrontal cortex, dorsal anterior cingulate cortex, and bilateral anterior insula were modulated by decision rule complexity. Anterior insula was engaged more strongly by low than high choice certainty decisions, whereas ventromedial prefrontal cortex showed the opposite pattern. These regions showed no effect of the independent manipulation of outcome certainty. The results disambiguate the influence of decision rule complexity, choice certainty, and outcome certainty on activity in diverse brain regions that have been implicated in decision making. Lateral prefrontal cortex plays a key role in implementing deterministic decision rules, ventromedial prefrontal cortex in probabilistic rules, and anterior insula in both.

Keywords: Decision, Choice, Certainty, Uncertainty, Probability, Rule-use, Reward, fMRI

Introduction

When faced with a decision, we strive to maximize positive outcomes and minimize negative outcomes. In some circumstances, we can predict with high accuracy the outcome of a given response. In these cases, decisions may be guided by a response rule that maps the best possible outcome to the best possible response option based on contextual information. For example, if I am trying to decide where to go for coffee in the morning, and I know that café A gives out free samples every Friday, then I might apply the rule “if today is Friday, go to café A.” We refer to this process of deliberation as rule-based decision making. Complex rule-based decisions require multiple steps of information processing from various sources of information to predict the outcomes of possible responses and make the best choice. For example, taking into account other information such as the time of my first meeting of the day and the distance to the café increases the complexity of my café choice rule.

In other circumstances, it may be difficult to perfectly predict the outcomes that result from possible responses, and a positive outcome may be more or less certain. We refer to this process of deliberation as probabilistic decision-making. In probabilistic decisions, individuals may not be certain as to which is the best option, and – having made their choice – they may not be certain about the resulting outcome. For example, if I know two cafes that each give free samples only to the first 50 customers, I may not be certain about which café to choose, and on my walk to the chosen café I will not be certain whether I will receive a free sample. In contrast to complexity in rule-based decisions, difficulty in probabilistic decisions arises from low certainty rather than from information processing demands.

Although complexity and low probabilistic certainty both contribute to the difficulty of a decision, they exert different influences on the decision-making process. A simple decision may involve a choice between two responses. Certainty in this case is minimal when both responses are equally likely to lead to a positive outcome and maximal when one response definitely leads to a positive outcome. Thus, low certainty makes a decision difficult by decreasing the predictability of outcomes from possible responses. Unlike low certainty, complexity increases the level of information processing necessary to map possible responses to possible outcomes in a decision. Choice complexity is high when multiple sources of information must be monitored to implement a rule (e.g., incorporating information about the day of the week, time of day, and traffic patterns to implement a rule about which route to use on a drive home from work). Thus, both rule complexity and low probabilistic certainty contribute to decision difficulty, but in different ways. The present study compares the effects of rule complexity and low probabilistic certainty, and how these factors may differentially or similarly influence decision-making activity in the brain.

Separate lines of research examining complexity and certainty in decision making suggest that these factors influence activity in both overlapping and distinct regions of prefrontal cortex (PFC). In one line of research, brain imaging studies focusing on rule-guided behavior have found that different parts of PFC are engaged as a function of the particular task rules involved (Bunge and Wallis, 2007). In these studies, participants learn rules instructing them on how to choose a response for particular classes of stimuli. For example, lateral PFC (LPFC) is engaged when participants make decisions involving abstract conditional rules with a set of response contingencies, taking the form of “if stimulus or condition X, then respond A; if stimulus or condition Y, then respond B” (Bunge et al., 2003). LPFC activation levels increase with the complexity of the rule involved (e.g., when the context in which the stimulus is presented determines which decision rule must be implemented; Bunge and Zelazo, 2006; Crone et al., 2006).

Conversely, more inferior regions of PFC often referred to as orbitofrontal cortex (i.e., portions of Brodmann's area (BA) 11 and BA 47) or an overlapping region referred to as ventromedial prefrontal cortex (VMPFC, i.e., medial portions of BA 11, BA 47, BA 10, and BA 25) are involved in representing and updating associations between a stimulus and a reward (Bunge and Zelazo, 2006; Crone et al., 2006; Hampton et al., 2006; O'Doherty et al., 2001). These stimulus–reward associations may serve as the basis for simple response rules in a decision context, suggesting that VMPFC may be preferentially recruited for simple as opposed to complex decisions (Bunge and Zelazo, 2006). In contrast to findings with simple stimulus–reward associations, however, VMPFC also appears to be important in monitoring diffuse sources of social and emotional contextual information to determine optimal behavior (Beer et al., 2006a,b; De Martino et al., 2006). Thus, additional research is needed to determine the precise influence of complexity on VMPFC involvement in decision making.

Certainty influences activity in regions that partly overlap with, and are partly distinct from, those influenced by complexity in decision making. Research examining influences of certainty has primarily focused on choice certainty by employing guessing tasks, where limited information is available to select the best response. As certainty decreases, activity increases in the lateral orbitofrontal cortex (LOFC), dorsal anterior cingulate cortex (DACC), dorsomedial prefrontal cortex (DMPFC), LPFC, anterior insula, and ventral striatum (Critchley et al., 2001; Huettel et al., 2005; Paulus et al., 2001; Preuschoff et al., 2006; van Leijenhorst et al., 2006; Volz et al., 2003).

Further complicating the study of decision making, there are often several sources of low certainty associated with the decision: low certainty regarding which choice to make (low choice certainty), as well as low certainty regarding the outcome of a particular decision (low outcome certainty). For example, choice certainty is low when making a selection between two unfamiliar driving routes because there is no information to support one option over another. On the other hand, after a route is selected, outcome certainty would be determined by information gained about the result of the choice (e.g., a radio report comes on describing traffic conditions ahead on the chosen route). These two forms of low certainty should be examined separately for a better understanding of how low certainty is resolved in the brain.

Previous research has demonstrated that VMPFC activity is related to choice and/or outcome certainty, but it is unclear whether this brain region is more active under conditions of low choice/outcome certainty (Elliott et al., 1999) or high choice/outcome certainty (Daw et al., 2006; Hampton et al., 2006; Knutson et al., 2005; Tobler et al., 2007). VMPFC is more active when choosing between four possible responses, each equally likely to be the correct response (low choice and outcome certainty), than when choosing between two possible responses (relatively higher choice certainty and outcome certainty) in a task that did not involve incentives for correct guesses (Elliott et al., 1999). In incentivized decisions, higher probability of reward translates to higher outcome certainty, but also to higher choice certainty, as information about reward probability will influence choice certainty. VMPFC activity correlates with the probability of an anticipated reward in incentivized tasks, thus suggesting a positive relationship to choice and/or outcome certainty (Daw et al., 2006; Hampton et al., 2006; Knutson et al., 2005; Tobler et al., 2007). Other research has demonstrated that VMPFC activity correlates with subjective preference and more generally encodes the value of stimuli (McClure et al., 2004; O'Doherty, 2004; Plassmann et al., 2007). However, none of the studies reviewed included distinct measures of choice certainty versus outcome certainty. Thus, the VMPFC region is likely related to certainty in decision making, but it is unclear exactly how it responds to choice certainty or outcome certainty.

Although choice complexity and low choice certainty differentially influence decision making, they both contribute to decision difficulty. The research reviewed above shows that diverse and overlapping brain regions are related to either complex rule-based choice or probabilistic choice, but in prior research, these types of decisions have been examined separately. The current study aims to connect research on rule-based decision making with research on probabilistic decision making by directly comparing factors of complexity and certainty that contribute to rule-based decision difficulty and probabilistic decision difficulty.

The present study addressed questions concerning the neural distinctions between decision rule complexity, choice certainty, and outcome certainty in decisions where the relevant rules and probabilistic contingencies are known ahead of time rather than learned. First, which brain regions are associated with rule-based choice complexity when certainty is equated, to the extent possible? Second, which brain regions are associated with probabilistic choice certainty when complexity is equated, to the extent possible? The present study examined neural activity in relation to rule-based choice complexity and probabilistic choice certainty with a choice task in which participants made repeated incentivized decisions.

Drawing on prior studies that focused either on choice complexity or certainty, we predicted that LPFC – in particular ventrolateral PFC (VLPFC) – would be engaged more strongly for decisions involving higher rule complexity (Bunge et al., 2003, 2005; Bunge and Zelazo, 2006; Crone et al., 2006), whereas VMPFC would be engaged in decisions involving higher choice certainty and/or outcome certainty (Daw et al., 2006; Hampton et al., 2006; Knutson et al., 2005; Tobler et al., 2007). We also predicted that anterior insula, DACC, and ventral striatum would be more engaged in decisions involving lower choice certainty and/or outcome certainty (Critchley et al., 2001; Paulus et al., 2001; Preuschoff et al., 2006). The present study was designed to disambiguate the influence of decision rule complexity, choice certainty, and outcome certainty on activity in these brain regions.

Materials and methods

Participants

Fifteen right-handed participants were included in the study (7 females; ages 18–28 years, mean age = 21.9, SD = 3.09). Data from an additional participant were excluded from analysis due to excessive head movement (>4 mm over the scanning session). All participants provided informed consent, and the study was approved by the institutional review board of the University of California at Davis.

Task

Participants learned to perform a binary choice task prior to scanning (Fig. 1). In the choice task, participants made decisions that varied in Choice Certainty (high versus low choice certainty) and Choice Complexity (simple probabilistic versus complex rule-based). All stimuli were presented visually. The cues were pictures of common objects: playing cards, billiard balls, highway route signs, tickets, sports jerseys, and football helmets. Embedded in each image was a number between 2 and 9. Participants pressed one of two response buttons on each trial, based on information provided by the cue. Correct responses were associated with a gain of either 20 cents or 1 cent, whereas incorrect responses were associated with a loss of either 20 cents or 1 cent. The total amount won on the task was provided to the participant at the end of the study, together with a fixed sum of $30 as compensation for participating in the study.

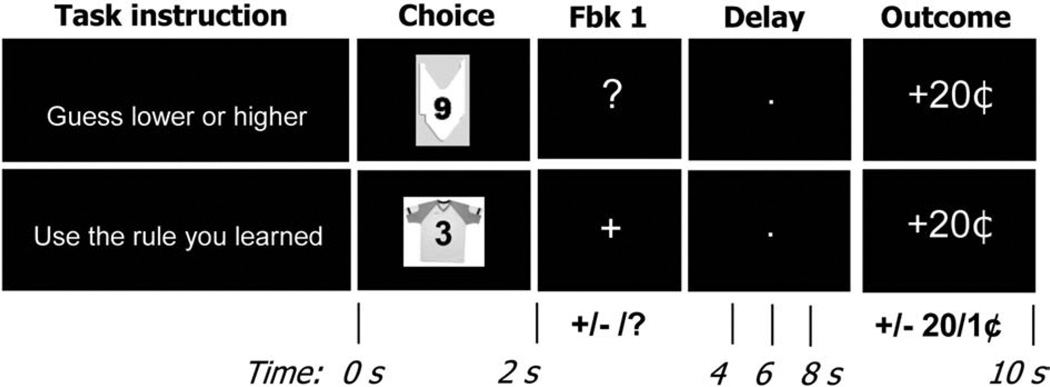

Fig. 1.

Stimuli and timing in choice task. Participants saw a cue (Choice screen), made a response, saw an initial feedback stimulus (Fbk1 screen; immediate partial feedback: ‘+’/‘−’, or delayed feedback: ‘?’; delayed feedback occurred in probabilistic choice trials only), waited through a delay (Delay screen), then received final feedback (Outcome screen; ‘+’ or ‘−’ 20¢ or 1¢) showing valence and magnitude of the trial outcome.

Trials were organized into two types of blocks, Gambling blocks and Rule blocks. Gambling blocks consisted of four experimental conditions resulting from two crossed variables: certainty (high or low) and feedback type (immediate or delayed). Rule blocks consisted of a single complex rule-based choice condition, based on previous research on rule-guided behavior (e.g., Bunge and Wallis, 2007; Wallis and Miller, 2003). Within Gambling blocks, the information in the cue probabilistically determined the correct response in a guessing task based on previous research on probabilistic decision making (Critchley et al., 2001; Delgado et al., 2000, 2005). Within Rule blocks, a response rule based on information in the cue fully determined the correct response. Training took place immediately before the scan, and consisted of 20 Gambling trials and 20 Rule trials, in separate blocks.

Each 10-s trial started with a 250-ms small green fixation point, followed by a cue that was presented for a 2000-ms choice phase (Fig. 1, “Choice” screen), during which participants made their response. An initial feedback stimulus was then presented for 500 ms (Fig. 1, “Fbk 1” screen), followed by a large white fixation point presented for a 6500-ms delay period (Fig. 1, “Delay” screen), and finally a second feedback stimulus for 750 ms (Fig. 1, “Outcome” screen). Inter-trial intervals varied from 0 to 8 s; the order and length of inter-trial intervals were determined with an algorithm designed to maximize the efficiency of recovery of the BOLD response (Dale, 1999).
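As a check on the timing described above, the phase durations can be laid out as a simple timeline. The following is a minimal sketch, not the presentation code used in the study; the phase labels and the function name `phase_onsets` are illustrative assumptions.

```python
# Phase durations in milliseconds, taken from the task description.
# The phase labels themselves are hypothetical names chosen for this sketch.
TRIAL_PHASES_MS = [
    ("fixation", 250),
    ("choice", 2000),
    ("feedback_1", 500),
    ("delay", 6500),
    ("outcome", 750),
]


def phase_onsets(phases):
    """Return (onset of each phase in ms, total trial duration in ms)."""
    onsets = {}
    elapsed = 0
    for name, duration in phases:
        onsets[name] = elapsed
        elapsed += duration
    return onsets, elapsed


onsets, total_ms = phase_onsets(TRIAL_PHASES_MS)
# Window from the onset of initial feedback to the onset of the final outcome.
feedback_to_outcome_ms = onsets["outcome"] - onsets["feedback_1"]
```

Under these durations the phases sum to the stated 10-s trial, and the interval from initial feedback to final outcome is 7 s.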

Choice Complexity manipulation

Choice Complexity was manipulated across Gambling and Rule blocks (see Fig. 1). Choices in Rule block trials were based on a complex rule learned in a practice session before the scan (“complex rule-based choice trials”). The complex rule required consideration of two features of the cue. Specifically, in complex rule-based choice trials, participants were instructed to press the ‘lower’ response key if the cue was an even-numbered sports jersey or an odd-numbered football helmet, and to press the ‘higher’ response key if the cue was an odd-numbered sports jersey or an even-numbered football helmet. Choices that were made correctly according to the complex rule were rewarded every time and the complex rule remained constant throughout the session.
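The complex rule amounts to a two-feature mapping from cue to response. A minimal sketch follows, assuming the cue is encoded as an object label plus a number; the labels `"jersey"` and `"helmet"` and the function name are hypothetical, not taken from the study materials.

```python
def complex_rule_response(cue_object, cue_number):
    """Return the rule-consistent response key for a Rule block cue.

    Even-numbered jersey or odd-numbered helmet -> 'lower';
    odd-numbered jersey or even-numbered helmet -> 'higher'.
    """
    if cue_object not in ("jersey", "helmet"):
        raise ValueError("Rule block cues are jerseys or helmets only")
    is_even = cue_number % 2 == 0
    if cue_object == "jersey":
        return "lower" if is_even else "higher"
    return "higher" if is_even else "lower"
```

Neither feature alone predicts the response: the same number maps to opposite keys depending on the object, which is what makes the rule require both features.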

Cue pictures in complex rule-based choice trials were always sports jerseys or football helmets, and those objects never appeared in the Gambling blocks. All responses made in accordance with the rule in the Rule block were deemed correct, and therefore Rule block choices are considered high choice certainty trials. Immediate partial feedback (‘+’ or ‘−’) followed each complex rule-based choice. To best equate for choice certainty, high choice certainty probabilistic trials (immediate feedback type) constituted the condition that was contrasted with complex rule-based choice trials for the Choice Complexity manipulation. High choice certainty probabilistic trials (immediate feedback type) are described below.

Choice Certainty manipulation

Choice Certainty was manipulated within Gambling blocks by varying the difference between the probability of a positive outcome resulting from one choice and the probability of a positive outcome resulting from the other choice. A greater difference between the probabilities meant higher Choice Certainty, since participants could be more confident in one choice over the other. During Gambling blocks, participants pressed a ‘lower’ (index finger of right hand) or ‘higher’ (middle finger of right hand) response key during the choice phase to guess whether a randomly generated number between 1 and 10, inclusive, would be lower or higher than the number in the cue (participants were informed that this number would always be lower or higher but never equal to the number in the cue). Trials in the Gambling blocks were classified as low choice certainty probabilistic trial types if the cue contained the number 4, 5, 6, or 7; high choice certainty probabilistic trial type cues contained the number 2, 3, 8, or 9. On every trial, one response was associated with a higher probability of reward (for low choice certainty trials, the higher probability choice resulted in a positive outcome 61% of the time; for high choice certainty trials, the higher probability choice resulted in a positive outcome 83% of the time). These trial types were interspersed in pseudorandom order throughout Gambling blocks. The probabilistic contingencies associated with each cue remained the same through the experiment and were known before participants began the task. Thus, the task differed from others involving dynamic updating of response contingencies (e.g., Delgado et al., 2005; Huettel et al., 2005). This feature of the task facilitated comparison with the complex rule-based decision making task.
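The reported reward probabilities can be reproduced with a short calculation. The sketch below assumes that the random number is drawn uniformly from the nine integers between 1 and 10 that differ from the cue, and that the reported percentages are averages over the four cues in each condition; neither assumption is stated explicitly in the task description. Function names are illustrative.

```python
from fractions import Fraction

LOW_CERTAINTY_CUES = (4, 5, 6, 7)
HIGH_CERTAINTY_CUES = (2, 3, 8, 9)


def p_higher(cue, low=1, high=10):
    """Probability that the drawn number exceeds the cue.

    Assumes a uniform draw over the integers low..high, excluding the cue.
    """
    outcomes = [n for n in range(low, high + 1) if n != cue]
    return Fraction(sum(n > cue for n in outcomes), len(outcomes))


def best_choice(cue):
    """Return the higher-probability response and its success probability."""
    ph = p_higher(cue)
    return ("higher", ph) if ph > 1 - ph else ("lower", 1 - ph)


def mean_best_probability(cues):
    """Average success probability of the optimal response across cues."""
    return sum(best_choice(c)[1] for c in cues) / len(cues)


low_mean = mean_best_probability(LOW_CERTAINTY_CUES)    # Fraction(11, 18)
high_mean = mean_best_probability(HIGH_CERTAINTY_CUES)  # Fraction(5, 6)
```

Under these assumptions the optimal response succeeds with probability 11/18 (about 61%) on low choice certainty trials and 5/6 (about 83%) on high choice certainty trials, consistent with the values given above.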

Outcome Certainty manipulation

Gambling block trials were associated with either immediate or delayed feedback. A ‘+’ or ‘−’ symbol immediately followed each choice in immediate feedback trials to indicate a correct or incorrect response (Fig. 1). A ‘?’ symbol immediately followed each choice in delayed feedback trials, meaning that the choice would not be revealed as correct or incorrect until the second feedback stimulus appeared after the delay period. Final feedback came at the end of a trial and consisted of ‘+’ or ‘−’ indicating accuracy and ‘20¢’ or ‘1¢’ indicating the magnitude of gain or loss. Participants were not cued as to the magnitude of gain or loss on the trial until the final feedback stimulus appeared at the end of the trial, so that there was always some unknown information during the delay period. What varied between the immediate (high outcome certainty) and delayed (low outcome certainty) feedback trials was the amount of unknown information during the delay. As such, we conceptualized this distinction between trials as a manipulation of outcome certainty, independent of the choice certainty manipulation. Mini-blocks within the Gambling blocks alternated between sequences of immediate feedback and delayed feedback trials, with an 8-s delay between mini-blocks.

Data acquisition

Participants performed a total of 225 experimental trials over the course of five functional scans. Each scan included one Gambling block and one Rule block. Each Gambling block contained 36 trials organized in strings of mini-blocks of 9 trials; each mini-block consisted of either all immediate feedback trials or all delayed feedback trials. The mini-blocks appeared in an alternating order that was counterbalanced across subjects. High choice certainty probabilistic and low choice certainty probabilistic trials occurred in a pseudorandom order within each mini-block. Each Rule block consisted of 9 trials with cues arranged in pseudorandom order. Gambling blocks and Rule blocks alternated, each block beginning with an instruction screen presented for 4000 ms followed by 4000 ms of blank screen. Over the course of the scanning session, participants performed 45 complex rule-based choice trials, 45 low choice certainty probabilistic choice (immediate feedback) trials, 45 high choice certainty probabilistic choice (immediate feedback) trials, 45 low choice certainty probabilistic choice (delayed feedback) trials, and 45 high choice certainty probabilistic choice (delayed feedback) trials. The order of trial types and inter-trial intervals within each block were determined with an algorithm designed to maximize the efficiency of recovery of the BOLD response (Dale, 1999).
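The trial counts above follow from the block structure, as the arithmetic sketch below illustrates. The constant names are hypothetical; the even split of Gambling trials across the four certainty-by-feedback cells is taken from the per-condition totals reported above rather than from any per-block constraint.

```python
N_SCANS = 5
GAMBLING_TRIALS_PER_BLOCK = 36
TRIALS_PER_MINI_BLOCK = 9
RULE_TRIALS_PER_BLOCK = 9
N_GAMBLING_CELLS = 4  # high/low choice certainty x immediate/delayed feedback

# One Gambling block and one Rule block per scan.
mini_blocks_per_gambling_block = GAMBLING_TRIALS_PER_BLOCK // TRIALS_PER_MINI_BLOCK
gambling_total = N_SCANS * GAMBLING_TRIALS_PER_BLOCK
rule_total = N_SCANS * RULE_TRIALS_PER_BLOCK
session_total = gambling_total + rule_total

# Session-level count per Gambling cell, assuming an even split across cells.
trials_per_gambling_cell = gambling_total // N_GAMBLING_CELLS
```

This gives four mini-blocks per Gambling block, 180 Gambling trials and 45 Rule trials per session (225 in total), and 45 trials in each of the four probabilistic conditions.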

Scanning was performed with a standard head coil on a 1.5-T GE scanner at the UC Davis Imaging Research Center. Functional magnetic resonance imaging (fMRI) data were acquired using a gradient echoplanar pulse sequence (TR = 2 s, TE = 40 ms, 24 slices, 3.475 × 3.475 × 5 mm, 0 mm inter-slice gap, 304 volumes per scan). Functional volume acquisitions were time-locked to the onset of the cue at the beginning of each trial. The first five volumes of each scan were discarded. Coplanar and high-resolution T1-weighted anatomical images were also collected. Visual stimuli were projected onto a screen that was viewed through a mirror. Button presses were recorded from a response keypad in the participant's right hand.

fMRI data analysis

Data were preprocessed using SPM2 (Wellcome Department of Cognitive Neurology, London). Images were corrected for differences in timing of slice acquisition, followed by rigid body motion correction. Structural and functional volumes were normalized to T1 and EPI templates, respectively. The normalization algorithm used a 12-parameter affine transformation together with a nonlinear transformation involving cosine basis functions and resampled the volumes to 2-mm cubic voxels. Templates were based on the MNI305 stereotaxic space (Cocosco et al., 1997), an approximation of Talairach space (Talairach and Tournoux, 1988). Functional volumes were spatially smoothed with an 8-mm FWHM isotropic Gaussian kernel.

Statistical analyses were performed on individual participants' data using the general linear model in SPM2. The fMRI time series data were modeled by regressors representing events convolved with a canonical hemodynamic response function and its temporal derivative.

Choice Certainty and Choice Complexity were hypothesized to influence regional brain activity at the onset of the choice phase. These effects were examined with a regression model consisting of regressors describing choice phase and outcome phase events (0 ms duration). Choice phase regressors modeled activity beginning at the onset of the choice phase (see Fig. 1) in three distinct trial types: 1) complex rule trials, 2) high choice certainty probabilistic trials (immediate and delayed feedback), and 3) low choice certainty probabilistic trials (immediate and delayed feedback). The immediate and delayed feedback conditions were collapsed in this model because a preliminary analysis showed no effects of feedback type at the onset of the choice phase, as expected. Outcome phase regressors modeled 3 types of final feedback outcome events: 1) positive outcomes on all probabilistic choices, 2) negative outcomes on all probabilistic choices, and 3) positive outcomes on all complex rule-based choices. Error trials were modeled by a regressor of noninterest (10-s event duration). Error trials included trials on which participants failed to respond and Rule block trials on which participants used the response rule incorrectly. A separate regressor of noninterest described instruction screen epochs (4-s duration). These regressors were entered into a general linear model along with a set of cosine functions that high-pass filtered the data (cutoff at 128 s) and a covariate for session effects. The least-squares parameter estimates for each condition were used to create contrast images comparing activity across different conditions.
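To illustrate how zero-duration events become regressors, the sketch below convolves event onsets with a double-gamma response function and with a finite-difference approximation to its temporal derivative. This is not SPM2's implementation: the shape parameters (6 and 16, with an undershoot ratio of 1/6) are commonly used defaults assumed here, the onsets are hypothetical, and all function names are illustrative.

```python
import math

TR_S = 2.0  # repetition time in seconds, from the acquisition parameters


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def double_gamma_hrf(t):
    """Assumed double-gamma HRF: response term minus a scaled undershoot term."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def hrf_derivative(t, dt=1.0):
    """Finite-difference approximation to the temporal derivative of the HRF."""
    return (double_gamma_hrf(t) - double_gamma_hrf(t - dt)) / dt


def build_regressor(onsets_s, n_volumes, kernel, tr_s=TR_S, kernel_length_s=32.0):
    """Convolve zero-duration events (stick functions) with a kernel sampled at the TR."""
    samples = [kernel(k * tr_s) for k in range(int(kernel_length_s / tr_s) + 1)]
    regressor = [0.0] * n_volumes
    for onset in onsets_s:
        start = int(round(onset / tr_s))
        for k, value in enumerate(samples):
            if 0 <= start + k < n_volumes:
                regressor[start + k] += value
    return regressor


# Hypothetical choice-phase onsets (seconds) for one condition in one scan.
example_onsets = [0.0, 20.0, 44.0]
canonical = build_regressor(example_onsets, n_volumes=40, kernel=double_gamma_hrf)
derivative = build_regressor(example_onsets, n_volumes=40, kernel=hrf_derivative)
```

Each condition would contribute one such pair of columns to the design matrix; the derivative column lets the fit absorb small shifts in response latency.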

To test whether regions responded differentially according to Choice Complexity, a contrast image compared high choice certainty probabilistic choice with complex rule-based choice. To test whether regions responded differentially according to Choice Certainty, another contrast image compared high choice certainty probabilistic choice with low choice certainty probabilistic choice.

Group statistical maps were computed for each contrast by calculating one-sample t-tests on participants' contrast images (participants were treated as a random effect). Clusters were selected for further examination if they survived small volume correction (FWE, p<.05) based on a priori volumes of interest from the Automated Anatomical Labeling (AAL) map (Tzourio-Mazoyer et al., 2002) (left LPFC based on left triangularis AAL region, DACC on the middle and anterior cingulum regions, VMPFC on the left and right medial orbital AAL regions, left amygdala on the left amygdala AAL region). Based on specific interest in the anterior insula (Critchley et al., 2001; Paulus et al., 2003), small volume correction in right anterior insula was based on voxels in the right insula AAL region with a y-coordinate greater than 0. The focus on these regions is motivated by previous research demonstrating that LPFC activity relates to complex rule use (Bunge et al., 2003) and low certainty decisions (Huettel et al., 2005), VMPFC activity relates to anticipation of high versus low probability reward and preferred versus non-preferred items (Daw et al., 2006; Hampton et al., 2006; Knutson et al., 2005; McClure et al., 2004; Plassmann et al., 2007; Tobler et al., 2007; van Leijenhorst et al., 2006), DACC and anterior insula are implicated in low certainty decisions (Critchley et al., 2001; Paulus et al., 2003), and amygdala is implicated in choice in preferred contexts (Arana et al., 2003).
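At a single voxel, the random-effects test described above reduces to a one-sample t-test of participants' contrast values against zero. A minimal sketch follows; the function name is illustrative and it does not reproduce SPM2's voxel-wise implementation or the small volume correction.

```python
import math
import statistics


def one_sample_t(values, popmean=0.0):
    """Return (t statistic, degrees of freedom) for a one-sample t-test."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    t = (mean - popmean) / (sd / math.sqrt(n))
    return t, n - 1
```

With one contrast value per participant, fifteen participants yield 14 degrees of freedom.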

Outcome Certainty was hypothesized to influence regional brain activity during the delay phase preceding the final feedback stimulus. This effect was examined with a separate regression model (Delay Model) consisting of regressors for the delay phase events (7-s duration) and outcome phase events. Delay phase regressors modeled the period beginning with the onset of initial feedback (Feedback 1) following the choice phase and preceding the final outcome, when participants have limited or no information about the outcome of their choice and are awaiting full final feedback. Five distinct trial types were modeled: complex rule-based trials, high choice certainty probabilistic trials (immediate feedback), low choice certainty probabilistic trials (immediate feedback), high choice certainty probabilistic trials (delayed feedback), and low choice certainty probabilistic trials (delayed feedback). Three outcome phase regressors modeled (1) positive outcomes on all probabilistic choices, (2) negative outcomes on all probabilistic choices, and (3) positive outcomes on all complex rule-based choices. Error trials were modeled as 10-s epochs and were excluded from further analysis, as were the 4-s instruction epochs. Regressors were entered into a general linear model along with a set of cosine functions that high-pass filtered the data (cutoff at 128 s) and a covariate for session effects. The least-squares parameter estimates for each condition were used to create contrast images comparing activity across different conditions.

To test whether regions responded differentially according to Outcome Certainty, a contrast image compared immediate feedback probabilistic delay phase activity (high and low choice certainty) with delayed feedback probabilistic delay phase activity (high and low choice certainty). Group statistical maps were computed for each contrast by calculating one-sample t-tests on participants’ contrast images. This contrast revealed no significant regions of activation at the threshold of p<.001, uncorrected for family-wise error, with a cluster criterion of 10 contiguous voxels. Thus, this analysis is not described further, although ROI analyses also tested for effects of Outcome Certainty in regions identified by other contrasts.

To test for brain regions that responded to both Choice Complexity and Choice Certainty, a conjunction analysis was conducted using the Minimum Statistic compared to the Conjunction Null (Nichols et al., 2005). This conjunction analysis examined the common voxels of activation across the Choice Complexity and Choice Certainty contrasts. The Choice Complexity and Choice Certainty maps were thresholded at p<.001, uncorrected for family-wise error, with a cluster criterion of 10 contiguous voxels.
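The logic of a minimum-statistic conjunction against the conjunction null can be sketched as follows: a voxel counts as jointly active only if the smaller of its two t-values exceeds the threshold, which is equivalent to both maps exceeding it. The function name is illustrative, the t-values in the example are hypothetical, and the 10-voxel cluster criterion is not implemented here.

```python
def conjunction_min_statistic(t_map_a, t_map_b, t_threshold):
    """Flag voxels whose minimum t-value across two maps exceeds the threshold."""
    if len(t_map_a) != len(t_map_b):
        raise ValueError("maps must cover the same voxels")
    return [min(a, b) > t_threshold for a, b in zip(t_map_a, t_map_b)]


# Hypothetical t-values for three voxels in each contrast map.
complexity_t = [5.0, 4.0, 1.0]
certainty_t = [4.2, 2.0, 6.0]
# Threshold of about 3.79, assuming a one-tailed p < .001 with 14 degrees of freedom.
joint = conjunction_min_statistic(complexity_t, certainty_t, t_threshold=3.79)
```

Only the first voxel survives in this example, because the other two exceed the threshold in one contrast but not the other.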

The Choice Complexity and Choice Certainty contrasts identified significant activation in several regions previously associated with complex rule use and probabilistic choice. The Choice Complexity contrast identified regions of interest (ROIs) within left VLPFC and DACC. The Choice Certainty contrast identified regions within VMPFC and left amygdala. The conjunction of the Choice Complexity and Choice Certainty contrasts identified a region in right anterior insula. These regions defined by the Choice Certainty and Choice Complexity contrasts and their conjunction were then examined further in ROI analyses.

Choice and outcome phase parameter estimates were extracted from each ROI using the Marsbar toolbox in SPM2 (Brett et al., 2002). Choice phase parameter estimates from ROIs were used for visualization of effects. Outcome phase parameter estimates from ROIs were entered into paired tests for differences in positive versus negative feedback outcome phase activity. Additionally, delay phase parameter estimates were extracted from each ROI based on the Delay Model for each probabilistic choice condition. Delay phase estimates were entered into paired tests of Outcome Certainty effects (immediate versus delayed feedback for high choice certainty trials, as well as immediate versus delayed feedback for low choice certainty trials). Reported effects from all ROI analyses are significant at p<.05, uncorrected.

For event-related response visualization, average raw signal change values were extracted for complex rule-based, high choice certainty probabilistic, and low choice certainty probabilistic choice phase events from VLPFC, DACC, VMPFC, and right anterior insula for 8 time points (16 s) following trial onset using the Marsbar toolbox in SPM2 (Brett et al., 2002).

Results

Behavioral results

Probabilistic versus complex rule-based choice

We expected that complex rule-based decisions would take longer than probabilistic decisions, because they require the consideration of two features of the cue stimulus (number and object), while probabilistic decisions require only the consideration of one feature (number). In contrast to hypothesized response time differences, we expected that the optimal response would be selected with about the same frequency in the complex rule-based choice condition as in the high choice certainty probabilistic condition. Responses according to the learned behavioral rule were optimal in Rule blocks, while responses for the option with the higher probability of success were optimal in Gambling blocks. We compared average response times and average frequencies of the optimal response for complex rule-based choices and high choice certainty probabilistic choices (Fig. 2). As expected, response times were longer for complex rule-based choices (M ± SD: 1111 ms ± 153 ms) compared to both high choice certainty probabilistic choices (841 ms ± 111 ms; t(14) = 6.62, p<.001) and low choice certainty probabilistic choices (971 ms ± 132 ms; t(14) = 4.17, p = .001). However, the frequency of optimal responses in the complex rule-based choice condition (M ± SD: 94.9% ± 4%) was not significantly different from that of the high choice certainty probabilistic choice condition (94.8% ± 10%; t(14) < 1, p > .9). Unlike with high certainty probabilistic choices, optimal responses for low certainty probabilistic choices were less frequent than for complex rule-based choices (low certainty probabilistic: 79.4% ± 12.9%; t(14) = 5.05, p<.001).

Fig. 2.

Behavioral results for choice task. Left bar graph shows mean response times, right bar graph shows mean frequency that participants selected the optimal response on high choice certainty probabilistic, low choice certainty probabilistic, and complex rule-based choices. ‘*’ indicates significant difference (two-tailed, p<.05). Error bars represent standard error of the mean.

High versus low choice certainty probabilistic choice

Decisions under low choice certainty should be more difficult than decisions under high choice certainty because the outcome is less predictable. Consistent with this prediction, response times were longer for low than high choice certainty probabilistic trials (t(14) = 6.27, p<.001). Participants also chose the optimal response more frequently on high than low choice certainty trials (t(14) = 6.60, p<.001).

In summary, responses for complex rule-based choices took longer than low choice certainty choices, which took longer than high choice certainty choices. Further, participants chose the optimal response less frequently in the low choice certainty condition than in the complex rule-based and high choice certainty conditions.
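The behavioral comparisons above are reported as t statistics with 14 degrees of freedom, which is consistent with paired comparisons across 15 participants. The following is a minimal sketch of such a paired-samples t statistic, assuming a within-participant design; the function name `paired_t` and the four-participant response times are hypothetical and are not data from the study.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Return (t, df) for a paired-samples t-test on two equal-length samples."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    # Standard error of the mean difference (sample SD uses n - 1).
    se = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / se, n - 1


# Hypothetical response times in ms for 4 participants (illustration only).
rule_rt = [1100.0, 1250.0, 980.0, 1175.0]
prob_rt = [850.0, 900.0, 800.0, 905.0]
t_stat, df = paired_t(rule_rt, prob_rt)
```

With 15 participants the same function would return `df == 14`, matching the degrees of freedom reported in the text.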

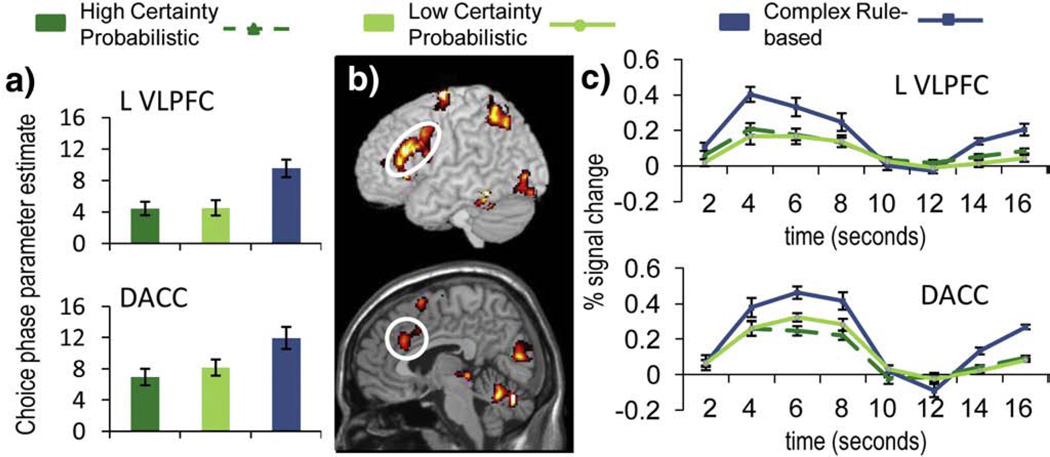

fMRI results

Complex rule-based versus probabilistic choice

We predicted that LPFC, and in particular VLPFC, would be engaged more strongly by complex rule-based choices than by probabilistic choices (Bunge and Zelazo, 2006). Regional activity associated with Choice Complexity was examined by contrasting the complex rule-based condition with the high choice certainty probabilistic condition (see Table 1). Complex rule-based choice was associated with regions in left VLPFC (BA 44/45), DACC, right and left anterior insula, and ventral striatum (Figs. 3b and 4c). In contrast, high choice certainty probabilistic choice was associated with areas in ventral anterior cingulate (BA 25), superior frontal gyrus (BA 8/9), left temporal pole (BA 38), and left middle temporal gyrus (BA 21), among others.

Table 1.

Activation foci from high choice certainty probabilistic versus complex rule-based choice contrast

| Region of activation (right/left) | Brodmann area | MNI x | MNI y | MNI z | t value |
|---|---|---|---|---|---|
| High choice certainty probabilistic – complex rule-based | | | | | |
| Superior frontal (R) | 8/9 | 16 | 48 | 54 | 5.60 |
| Ventral anterior cingulate (R) | 25 | 6 | 30 | 0 | 4.46 |
| Temporal pole (L) | 20 | −38 | 18 | −42 | 7.31 |
| Inferior temporal (R) | 20 | 48 | 10 | −42 | 4.61 |
| Inferior temporal (L) | 20 | −46 | 6 | −50 | 4.84 |
| Middle temporal (L) | 21 | −52 | −6 | −16 | 5.19 |
| Parahippocampal (R) | 35 | 14 | −16 | −30 | 6.10 |
| Angular gyrus (L) | 39 | −56 | −70 | 32 | 5.75 |
| Complex rule-based – high choice certainty probabilistic | | | | | |
| Lateral prefrontal (R) | 46 | 40 | 50 | 20 | 5.06 |
| Lateral prefrontal (L) | 45 | −42 | 46 | 12 | 4.09 |
| Lateral prefrontal (L) | 45 | −52 | 36 | 32 | 4.15 |
| Dorsal anterior cingulate (R) | 24/32 | 6 | 28 | 28 | 5.00 |
| Anterior insula (L) | | −32 | 24 | 4 | 8.45 |
| Anterior insula (R) | | 32 | 22 | −6 | 10.99 |
| Lateral prefrontal (L) | 44/45 | −40 | 22 | 24 | 6.83 |
| Ventral striatum (R) | | 10 | 4 | −14 | 5.67 |
| Supplementary motor area (L/R) | 6 | −2 | 0 | 68 | 5.37 |
| Insula (R) | | 36 | −2 | 16 | 4.95 |
| Ventral striatum (L) | | −14 | −4 | −10 | 4.87 |
| Precentral gyrus (R) | 6 | 52 | −6 | 58 | 7.14 |
| Superior frontal (L) | 6 | −26 | −8 | 76 | 8.83 |
| Superior frontal (R) | 6 | 18 | −10 | 70 | 5.39 |
| Hippocampus (R) | 27 | 20 | −32 | −2 | 5.87 |
| Thalamus (L) | | −8 | −32 | −2 | 5.73 |
| Inferior temporal (L) | 20 | −52 | −48 | −16 | 8.14 |
| Inferior parietal (L) | 40/7 | −38 | −54 | 54 | 5.62 |
| Occipital (L) | 18 | −28 | −64 | −8 | 4.21 |
| Occipital (R) | 18 | 20 | −66 | −4 | 4.14 |
| Superior parietal (L) | 7 | −14 | −66 | 62 | 4.43 |
| Precuneus (L) | 7 | −6 | −70 | 34 | 4.31 |
| Cerebellum (L/R) | | −4 | −70 | −24 | 7.96 |
| Occipital (L) | 19 | −42 | −84 | −2 | 5.31 |
| Occipital (L) | 18 | −14 | −92 | −16 | 4.43 |
| Occipital (R) | 18 | 18 | −98 | −8 | 8.28 |

Regions listed contain 10 contiguous resampled voxels with t value significant at p<.001, uncorrected. Approximate Brodmann’s areas are shown. Regions are ordered from anterior to posterior by y-coordinate of the peak. Regions printed in bold are depicted in Fig. 3.
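The reporting criterion in the table note (a voxelwise threshold combined with a minimum extent of 10 contiguous voxels) can be sketched as a simple cluster-extent filter. This is an illustrative sketch rather than the SPM procedure used in the study: the connectivity rule (face-sharing neighbours), the name `surviving_clusters`, the critical value `T_CRIT`, and the toy map are all assumptions.

```python
from collections import deque

# Face-sharing neighbours (6-connectivity). The connectivity criterion is an
# assumption; the source does not state which one was used.
NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def surviving_clusters(tmap, t_crit, min_extent=10):
    """Return clusters (sets of voxel indices) that pass both thresholds.

    tmap : dict mapping (x, y, z) voxel indices to t values
    """
    supra = {v for v, t in tmap.items() if t > t_crit}
    seen, kept = set(), []
    for seed in sorted(supra):
        if seed in seen:
            continue
        # Breadth-first flood fill collects one connected cluster.
        cluster, queue = {seed}, deque([seed])
        seen.add(seed)
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBORS:
                nb = (x + dx, y + dy, z + dz)
                if nb in supra and nb not in seen:
                    seen.add(nb)
                    cluster.add(nb)
                    queue.append(nb)
        if len(cluster) >= min_extent:
            kept.append(cluster)
    return kept


T_CRIT = 3.8  # hypothetical critical t value standing in for p < .001
toy_map = {(x, 0, 0): 5.0 for x in range(12)}      # 12 contiguous voxels
toy_map.update({(x, 5, 5): 6.0 for x in range(3)})  # only 3 contiguous voxels
toy_map[(20, 20, 20)] = 1.0                         # below threshold
clusters = surviving_clusters(toy_map, T_CRIT)
```

In this toy map only the 12-voxel cluster survives; the 3-voxel cluster exceeds the voxelwise threshold but fails the extent criterion.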

Fig. 3.

VLPFC and DACC regions of interest defined by the complex rule-based choice versus high choice certainty probabilistic choice contrast. Center column shows t-statistic maps from the complex rule-based choice versus high choice certainty probabilistic choice contrast (top: VLPFC, bottom: DACC; threshold at p<.001 uncorrected, 10 contiguous voxels). (a) Choice phase parameter estimates for high choice certainty probabilistic, low choice certainty probabilistic, and complex rule-based conditions. (b) Outcome phase parameter estimates for probabilistic choice positive outcomes, probabilistic choice negative outcomes, and complex rule-based positive outcomes. Significant differences (two-tailed, p<.05) are denoted in (b) with a ‘*’. (c) VLPFC (top) and DACC (bottom) activation time course for high choice certainty probabilistic, low choice certainty probabilistic, and complex rule-based conditions. Onset of the choice phase corresponds to 0 s. Error bars in each chart represent the standard error of the mean.

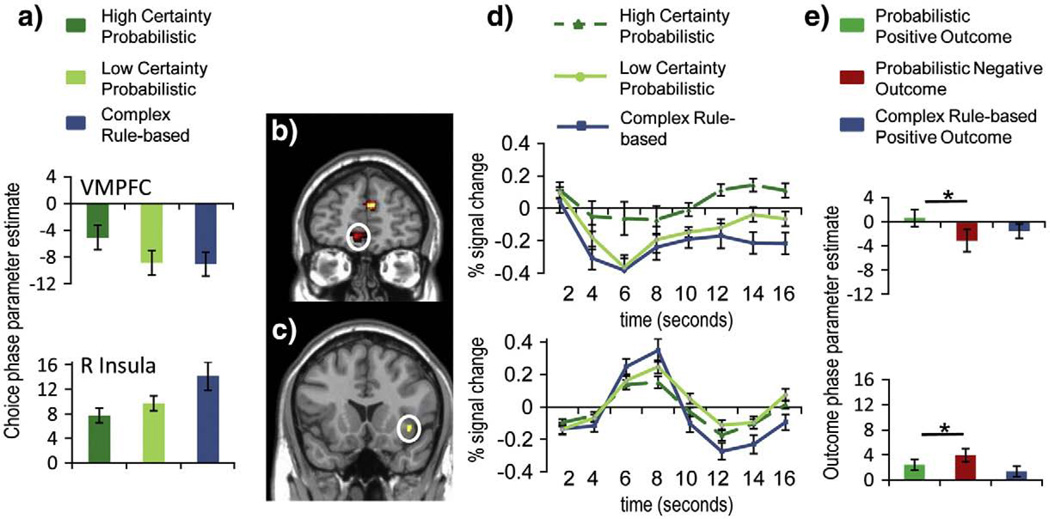

Fig. 4.

VMPFC region of interest defined by the high choice certainty probabilistic versus low choice certainty probabilistic contrast, and right anterior insula (R Insula) region defined by the conjunction of the low choice certainty probabilistic versus high choice certainty probabilistic contrast with the complex rule-based choice versus high choice certainty probabilistic choice contrast. (a) Choice phase parameter estimates for high choice certainty probabilistic, low choice certainty probabilistic, and complex rule-based conditions (VMPFC: top, R Insula: bottom). (b) VMPFC in t-statistic map from the high choice certainty probabilistic choice versus low choice certainty probabilistic choice contrast. (c) R Insula significant voxels from conjunction of the low choice certainty probabilistic versus high choice certainty probabilistic contrast with the complex rule-based choice versus high choice certainty probabilistic choice contrast. (d) VMPFC (top) and R Insula (bottom) activation time course for high choice certainty probabilistic, low choice certainty probabilistic, and complex rule-based conditions. Onset of the choice phase corresponds to 0 s. (e) Outcome phase parameter estimates for probabilistic choice positive outcomes, probabilistic choice negative outcomes, and complex rule-based positive outcomes (VMPFC: top, R Insula: bottom). Significant differences are noted in the bar graphs in (e) with a ‘*’. Error bars in each chart represent the standard error of the mean.

High versus low choice certainty

Within the probabilistic choice conditions, a contrast between low choice certainty probabilistic and high choice certainty probabilistic choice phase activity (immediate and delayed feedback) was used to identify regions that were engaged according to choice certainty at the time of the choice (see Table 2). As expected, VMPFC showed greater activity for high choice certainty compared to low choice certainty probabilistic choices (Fig. 4b). Other regions that showed greater activity for high compared to low choice certainty included dorsal regions of medial prefrontal cortex, medial orbitofrontal cortex, left amygdala, and regions of superior temporal cortex. Only right anterior insula exhibited greater activation for low choice certainty.

Table 2.

Activation foci from high choice certainty probabilistic choice versus low choice certainty probabilistic choice contrasts.

| Region of activation (right/left) | Brodmann area | MNI x | MNI y | MNI z | t value |
|---|---|---|---|---|---|
| High choice certainty – low choice certainty | | | | | |
| Medial prefrontal (L/R) | 10/32 | 8 | 52 | 22 | 6.48 |
| Ventromedial prefrontal (L/R) | 10/11 | −8 | 50 | −10 | 4.54 |
| Dorsomedial prefrontal (L) | 8/9 | −2 | 44 | 54 | 5.54 |
| Medial orbitofrontal (R) | 11 | 12 | 36 | −10 | 4.73 |
| Dorsal anterior cingulate (L) | 24 | −10 | 28 | 14 | 4.52 |
| Ventral anterior cingulate (R) | 25 | 14 | 28 | 2 | 4.41 |
| Amygdala (L) | 34 | −28 | 4 | −20 | 5.18 |
| Superior temporal (R) | 21 | 68 | −2 | 2 | 4.4 |
| Thalamus (L) | | −12 | −10 | 20 | 5.3 |
| Postcentral gyrus (R) | 43 | −56 | −10 | 14 | 4.45 |
| Superior temporal (L) | 42 | −52 | −26 | 20 | 4.9 |
| Posterior insula (L) | | −34 | −30 | 12 | 4.19 |
| Superior temporal (R) | 42 | 54 | −32 | 20 | 5.59 |
| Postcentral gyrus (R) | 4 | 16 | −32 | 74 | 4.68 |
| Superior temporal (L) | 42 | −68 | −34 | 24 | 4.58 |
| Precuneus (R) | 29 | 20 | −44 | 16 | 4.84 |
| Posterior cingulate (L) | 23 | −16 | −44 | 36 | 4.83 |
| Precuneus (R) | 5 | 6 | −46 | 56 | 4.43 |
| Cerebellum (L) | | −32 | −46 | −30 | 4.32 |
| Cerebellum (L/R) | | 0 | −48 | −2 | 5.22 |
| Occipital (L) | 17 | −16 | −52 | 24 | 5.15 |
| Cerebellum (L) | | −44 | −52 | −32 | 4.36 |
| Middle temporal (R) | 39 | 36 | −56 | 14 | 4.71 |
| Occipital (R) | 19 | 54 | −70 | −22 | 4.47 |
| Occipital (R) | 17 | 6 | −74 | 10 | 5.1 |
| Occipital (L) | 17 | −42 | −74 | 2 | 4.93 |
| Occipital (L) | 18 | −16 | −78 | −6 | 4.26 |
| Low choice certainty – high choice certainty | | | | | |
| Anterior insula (R) | | 40 | 16 | −6 | 4.27 |

Regions listed contain 10 contiguous resampled voxels with t value significant at p<.001, uncorrected. Approximate Brodmann’s areas are shown. Regions are ordered from anterior to posterior by y-coordinate of the peak. Regions printed in bold are depicted in Fig. 4.

Choice Complexity and Choice Certainty conjunction

The intersection of the Choice Complexity and Choice Certainty contrasts revealed regions that were sensitive to both manipulations. A region of right anterior insula (center of mass located at MNI coordinates 41, 17, −5) showed greater activity for complex rule-based choice compared to probabilistic choice as well as greater activity for low certainty compared to high certainty choice (Figs. 4a, c, and d). Additionally, foci in left cerebellum (center of mass −30, −48, −30) and right occipital cortex (center of mass 5, −75, 12) showed greater activity for complex rule-based choice compared to probabilistic choice as well as greater activity for high certainty compared to low certainty choice.
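The conjunction described above can be sketched as the intersection of two thresholded maps, which is equivalent to requiring that the smaller of the two t values at a voxel exceed the threshold. The sketch below also computes an unweighted center of mass for the overlapping voxels. The function names, the threshold value, the unweighted averaging, and the toy coordinates are assumptions for illustration and are not taken from the study.

```python
def conjunction(map_a, map_b, t_crit):
    """Voxels whose t value exceeds t_crit in BOTH maps (minimum statistic)."""
    shared = map_a.keys() & map_b.keys()
    return {v for v in shared if min(map_a[v], map_b[v]) > t_crit}


def center_of_mass(voxels):
    """Unweighted mean coordinate of a non-empty collection of (x, y, z) voxels."""
    if not voxels:
        raise ValueError("cannot compute a center of mass for an empty region")
    n = len(voxels)
    return tuple(sum(v[i] for v in voxels) / n for i in range(3))


# Toy t maps keyed by coordinate (hypothetical values, illustration only).
complexity_t = {(10, 20, 30): 5.0, (12, 22, 32): 4.5, (0, 0, 0): 6.0}
certainty_t = {(10, 20, 30): 4.2, (12, 22, 32): 4.0, (0, 0, 0): 1.0}

overlap = conjunction(complexity_t, certainty_t, t_crit=3.8)
com = center_of_mass(overlap)
```

The voxel at the origin is excluded because it passes the threshold in only one of the two maps, which is the property that makes the conjunction stricter than either contrast alone.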

High versus low outcome certainty

All ROIs defined by the above contrasts were examined for differences during the delay phase between the high and low outcome certainty conditions. Parameter estimates were extracted from each ROI based on estimation of the Delay Model, as described in the Materials and methods section. No ROIs showed significant differences between high and low outcome certainty (high choice certainty trials and low choice certainty trials were tested separately; p>.3 in all comparisons).

Positive versus negative outcome

All ROIs defined by the above contrasts were examined for differences between activity related to positive versus negative final feedback (Fig. 4e). Right anterior insula activity was greater for negative compared to positive outcomes (t(14)= 3.10, p<.05), while VMPFC activity was greater for positive compared to negative outcomes (t(14)= 2.78, p<.05).

Discussion

Choice difficulty is an important but general factor that influences the recruitment of brain regions in decision-making. Both choice complexity (the degree of information processing required) in deterministic choices and choice certainty (predictability of outcomes from responses) in probabilistic choices influence the level of difficulty in a decision. The current study builds on previous studies examining neural correlates of probabilistic or rule-based choices by manipulating choice complexity and choice certainty in comparable decision tasks and directly comparing them. Behaviorally, we found that high choice certainty was associated with high frequencies of optimal decisions regardless of choice complexity, and that low choice certainty was associated with a reduced rate of optimal decisions. However, participants took longer to reach the optimal decision in the complex rule condition compared to the high choice certainty probabilistic condition.

The distinction between complex rule-based choice and high choice certainty probabilistic choice was also evident at the neural level. Left VLPFC (BA 44/45), right anterior insula, DACC, and ventral striatum activity was greater for complex rule-based choices than for high choice certainty probabilistic choices (Table 1, Figs. 3a–c). The conjunction analysis showed that activity in a region of right anterior insula was greater for low compared to high choice certainty probabilistic choices as well as for complex rule-based choices compared to high choice certainty probabilistic choices (Figs. 4a, c, d). Regarding probabilistic choices made under high versus low choice certainty, VMPFC and left amygdala activity was associated with higher choice certainty (Table 2). Regarding outcome phase activity, right anterior insula showed greater activity on probabilistic choice trials for negative compared to positive final outcomes, while VMPFC showed greater activity on probabilistic choice trials for positive compared to negative final outcomes (Fig. 4e).

Occipital and cerebellar regions also showed effects in both the Choice Complexity and Choice Certainty contrasts, as well as in the conjunction analysis. These regions showed greater activity in complex rule-based choice compared to high certainty probabilistic choice, and also greater activity in high certainty probabilistic choice compared to low certainty probabilistic choice. This pattern is broadly consistent with previous research and may reflect an influence of expected reward or task engagement (Huettel et al., 2005; Preuschoff et al., 2006).

Choice certainty was high in both the complex rule-based choice and high certainty probabilistic choice conditions (i.e., optimal decision rates were high and did not differ between the two conditions). Longer response times in complex rule-based decisions reflected greater information processing demands compared to high certainty probabilistic decisions. Thus, both conditions could be considered high choice certainty, and choice complexity was the primary factor differing between the rule-based choice and high certainty probabilistic choice conditions. However, it should be noted that participants received fewer positive outcomes on the high certainty probabilistic choices (82% positive outcomes) compared to complex rule-based choices (95% positive outcomes) because the optimal response was not rewarded every time, as it was on complex rule-based choices. Thus, the two conditions also differed in reward outcome certainty. Reward outcome certainty, however, is addressed by the outcome certainty manipulation, which was designed to examine the effect of certainty during outcome anticipation. No region showed an effect of the outcome certainty manipulation. These results taken together suggest that choice complexity was the primary factor influencing brain activity observed in the Choice Complexity contrast.

Complex rule-based choice engages putative cognitive control and effort regions

Left VLPFC and DACC exhibited greater activity for complex rule-based choices compared to probabilistic choices (Table 1, Figs. 3a–c). This activity represented the increased information processing required to represent and implement the response rule based on contextual features of the environment (two pieces of information in the cue). VLPFC and DACC activity in the current study may reflect the increased monitoring demands associated with the complex rule-based choices (MacDonald et al., 2000). These complex rule-based choices required that participants monitor two pieces of information in the pictures and integrate that information to make a decision. Importantly, there was high choice certainty in the complex rule-based choice condition because all choices made according to the decision rule were rewarded. In both low and high choice certainty probabilistic conditions in the current study, the attentional demands were equal because participants only needed to monitor a single stimulus feature to make a decision.

Previous research has shown greater activity in LPFC for low certainty choices compared to high certainty choices. One study showed greater LPFC activation with low certainty on a task in which participants made choices based on predictive stimuli (Huettel et al., 2005). A key difference between the task in that previous study and the task in the current study is that participants were not required to dynamically update predictions based on successive stimuli in the current task. In that previous study, low certainty occurred when successive stimuli conflicted, increasing the demand on attention. Thus, LPFC activation may have reflected increased demand on attention, in agreement with the finding in the current study that VLPFC activity increased for complex rule-based choice compared to probabilistic choice.

Additionally, right anterior insula activity was greater for complex rule-based choices compared to probabilistic choice as well as greater for the low choice certainty condition compared to the high choice certainty condition. This pattern in right anterior insula mirrored the pattern of response times, which provide an index of the difficulty of each choice (Figs. 2 and 4a). Anterior insula activity may be influenced by a broad range of factors that contribute to decision difficulty in general. Previous studies have found a relation between anterior insula decision-making activity and factors related to low certainty such as anticipated risk, risk-averse preferences, and low confidence decisions (Fleck et al., 2006; Kuhnen and Knutson, 2005; Paulus et al., 2003; Paulus and Stein, 2006). Other studies have found a relation between anterior insula activity and factors that relate to general decision difficulty, such as retrieval effort, task-switching, and multi-attribute decisions (Buckner et al., 1998; Bunge et al., 2002b; Zysset et al., 2006).

The combination of the anterior insula activity and VLPFC activity in the current study is consistent with previous research suggesting that these two areas work together as parts of separable networks that contribute to task-set maintenance and adaptive (trial-to-trial) control, respectively (Bunge et al., 2002a; Dosenbach et al., 2007). The complex rule-based condition required task-set maintenance because the decision rule was consistent throughout the study, whereas the low choice certainty condition required the most adaptive trial-to-trial control to monitor the low certainty stimulus-reward relationship. Consistent with this research, VLPFC and anterior insula display different response profiles in the current study, with right anterior insula activity but not VLPFC demonstrating an influence of choice certainty in the choice phase (low > high choice certainty) and feedback valence (negative > positive) in the outcome phase (Figs. 4a–e).

Choice certainty modulates activity in VMPFC and amygdala

Several brain regions were more active for high compared to low choice certainty probabilistic choices. Notably, VMPFC and left amygdala showed greater choice-related activity for high choice certainty compared to low choice certainty decisions (Tables 1 and 2, Figs. 4a, b, d, and e). The manipulation of outcome certainty had no detectable effect on VMPFC activity in the delay period, suggesting that VMPFC activity is related to choice certainty rather than outcome certainty as conceptualized in this study. The low outcome certainty condition involved low certainty about both the valence and magnitude of an incentive outcome, and therefore, a high outcome certainty trial did not mean that a preferred outcome was more likely than for a low outcome certainty trial. In this way, the outcome certainty manipulation was orthogonal to the choice certainty manipulation and differed from other research that has examined brain activity influenced by the probability of an anticipated preferred outcome (Daw et al., 2006; Hampton et al., 2006; Knutson et al., 2005; Tobler et al., 2007). In the current study, a preferred outcome was more probable in the high choice certainty probabilistic condition than in the low choice certainty probabilistic condition, but a preferred outcome was most probable in the complex rule-based condition. VMPFC activity was lowest for complex rule-based choices, which were most likely to result in a preferred outcome. Therefore, VMPFC activity may have been related to the probability of an anticipated preferred outcome, but only in probabilistic choices. Indeed, anticipating a preferred outcome is the essential problem in a probabilistic choice, and VMPFC activity may be important in resolving low choice certainty by encoding a predicted value for a probabilistic choice (Daw et al., 2006; Hampton et al., 2006; O'Doherty, 2004; Plassmann et al., 2007). 
Previous research has shown that VMPFC activity during anticipation of outcomes correlates with risk-seeking preferences, suggesting that VMPFC activity may influence behavioral choices (Tobler et al., 2007).

Other research suggests that VMPFC may show a relative decrease in activity for tasks that demand more attention (Gusnard et al., 2001; Raichle et al., 2001). High choice certainty probabilistic choices were less attention demanding than complex rule-based choices, but attentional demand does not account for the difference in VMPFC activity between high and low choice certainty conditions, as only one piece of information needed to be monitored in both conditions.

The finding of different response profiles in VMPFC and anterior insula builds upon previous research demonstrating these regions to be critical in low choice certainty decisions. Studies have demonstrated that lesions to VMPFC and insula have different effects on choices; for example, VMPFC lesions increase overall risk-seeking behavior while insular lesions result in reduced sensitivity to outcome probabilities (Clark et al., 2008; Weller et al., 2007, 2009). The current study provides convergent evidence that VMPFC and anterior insula are modulated by choice certainty and further shows that VMPFC activity decreases while anterior insula activity increases in low choice certainty decisions. Further research might examine the relation between VMPFC and anterior insula activation and how these regions interact to influence response selection.

Left amygdala also showed greater activity for high compared to low choice certainty choices. In the current study, participants made choices based on known probabilities, so high choice certainty choices were more likely to lead to positive outcomes than low choice certainty choices, and complex rule-based choices were most likely to lead to a positive outcome. The pattern of amygdala activity mirrored the frequency of optimal response and accorded with previous research showing that amygdala activity is greater for choice in preferred compared to less preferred contexts and correlates with predicted reward value (Arana et al., 2003; Gottfried et al., 2003).

Conclusion and future directions

The current study represents one way of separating factors that contribute to difficulty in decision-making, and how those factors influence the brain regions that are recruited in the course of a decision. Choice certainty and choice rule complexity are two factors among many others (e.g., option similarity) that contribute to the difficulty of a decision. This study demonstrates that these factors are separable and useful in describing how distinct regions of the PFC are recruited in decision-making. We observed that choice certainty correlates with activity in brain regions involved in anticipating incentives (VMPFC, amygdala), choice rule complexity correlates with activity in regions involved in cognitive control (VLPFC, DACC), and both factors influence activity in a region involved in completing tasks involving various types of difficulty (anterior insula). The findings reveal differences as well as similarities in how choice complexity and low probabilistic certainty tax decision making resources within the framework of a single study, instead of requiring inferences across studies.

Though not addressed by the current study, complex rule-based decisions might be contrasted with simple heuristic-based decisions that have been examined in previous research. Researchers have argued that separable psychological systems carry out simple heuristic-based decisions compared to more cognitively demanding decisions that involve considering multiple pieces of information (e.g., Kahneman, 2003). Research that varies the complexity of a heuristic used for a decision might specifically show how brain regions are involved in heuristic decision making. Further studies might also examine other factors that increase decision difficulty, how they influence the way the brain carries out a decision, and how these factors affect basic variables such as anticipated reward probability and attentional demand.

References

- Arana FS, Parkinson JA, Hinton E, Holland AJ, Owen AM, Roberts AC. Dissociable contributions of the human amygdala and orbitofrontal cortex to incentive motivation and goal selection. J. Neurosci. 2003;23:9632–9638. doi: 10.1523/JNEUROSCI.23-29-09632.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beer JS, John OP, Scabini D, Knight RT. Orbitofrontal cortex and social behavior: integrating self-monitoring and emotion-cognition interactions. J. Cogn. Neurosci. 2006a;18:871–879. doi: 10.1162/jocn.2006.18.6.871. [DOI] [PubMed] [Google Scholar]

- Beer JS, Knight RT, D'Esposito M. Controlling the integration of emotion and cognition: the role of frontal cortex in distinguishing helpful from hurtful emotional information. Psychol. Sci. 2006b;17:448–453. doi: 10.1111/j.1467-9280.2006.01726.x. [DOI] [PubMed] [Google Scholar]

- Brett M, Anton J, Valabregue R, Poline J. Region of interest analysis using an SPM toolbox; 8th International Conference on Functional Mapping of the Human Brain.2002. [Google Scholar]

- Buckner RL, Koutstaal W, Schacter DL, Wagner AD, Rosen BR. Functional-anatomic study of episodic retrieval using fMRI: I. Retrieval effort versus retrieval success. Neurolmage. 1998;7:151–162. doi: 10.1006/nimg.1998.0327. [DOI] [PubMed] [Google Scholar]

- Bunge SA, Wallis JD. Neuroscience of Rule-Guided behavior. Oxford Univ. Press: USA; 2007. [Google Scholar]

- Bunge SA, Zelazo PD. A brain-based account of the development of rule use in childhood. Curr. Dir. Psychol. Sci. 2006;15:118–121. [Google Scholar]

- Bunge SA, Dudukovic NM, Thomason ME, Vaidya CJ, Gabrieli JD. Immature frontal lobe contributions to cognitive control in children: evidence from fMRI. Neuron. 2002a;33:301–311. doi: 10.1016/s0896-6273(01)00583-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bunge SA, Hazeltine E, Scanlon MD, Rosen AC, Gabrieli JD. Dissociable contributions of prefrontal and parietal cortices to response selection. Neuroimage. 2002b;17:1562–1571. doi: 10.1006/nimg.2002.1252. [DOI] [PubMed] [Google Scholar]

- Bunge SA, Kahn I, Wallis JD, Miller EK, Wagner AD. Neural circuits subserving the retrieval and maintenance of abstract rules. J. Neurophysiol. 2003;90:3419–3428. doi: 10.1152/jn.00910.2002. [DOI] [PubMed] [Google Scholar]

- Bunge SA, Wallis JD, Parker A, Brass M, Crone EA, Hoshi E, Sakai K. Neural circuitry underlying rule use in humans and nonhuman primates. J. Neurosci. 2005;25:10347–10350. doi: 10.1523/JNEUROSCI.2937-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Clark L, Bechara A, Damasio H, Aitken MR, Sahakian BJ, Robbins TW. Differential effects of insular and ventromedial prefrontal cortex lesions on risky decision-making. Brain. 2008;131:1311–1322. doi: 10.1093/brain/awn066. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cocosco CA, Kollokian V, Kwan RKS, Evans AC. Brainweb: online interface to a 3D MRI simulated brain database. Neurolmage. 1997;5:425. [Google Scholar]

- Critchley HD, Mathias CJ, Dolan RJ. Neural activity in the human brain relating to uncertainty and arousal during anticipation. Neuron. 2001;29:537–545. doi: 10.1016/s0896-6273(01)00225-2. [DOI] [PubMed] [Google Scholar]

- Crone EA, Wendelken C, Donohue SE, Bunge SA. Neural evidence for dissociable components of task-switching. Cereb. Cortex. 2006;16:475–486. doi: 10.1093/cercor/bhi127. [DOI] [PubMed] [Google Scholar]

- Dale AM. Optimal experimental design for event-related fMRI. Hum. Brain. Mapp. 1999;8:109–114. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<109::AID-HBM7>3.0.CO;2-W. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Daw ND, O’Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Delgado MR, Nystrom LE, Fissell C, Noll DC, Fiez JA. Tracking the hemodynamic responses to reward and punishment in the striatum. J. Neurophysiol. 2000;84:3072–3077. doi: 10.1152/jn.2000.84.6.3072. [DOI] [PubMed] [Google Scholar]

- Delgado MR, Miller MM, Inati S, Phelps EA. An fMRI study of reward-related probability learning. Neuroimage. 2005;24:862–873. doi: 10.1016/j.neuroimage.2004.10.002. [DOI] [PubMed] [Google Scholar]

- De Martino B, Kumaran D, Seymour B, Dolan RJ. Frames, biases, and rational decision-making in the human brain. Science. 2006;313:684–687. doi: 10.1126/science.1128356. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dosenbach NU, Fair DA, Miezin FM, Cohen AL, Wenger KK, Dosenbach RA, Fox MD, Snyder AZ, Vincent JL, Raichle ME, Schlaggar BL, Petersen SE. Distinct brain networks for adaptive and stable task control in humans. Proc. Natl. Acad. Sci. USA. 2007;104:11073–11078. doi: 10.1073/pnas.0704320104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Elliott R, Rees G, Dolan RJ. Ventromedial prefrontal cortex mediates guessing. Neuropsychologia. 1999;37:403–411. doi: 10.1016/s0028-3932(98)00107-9. [DOI] [PubMed] [Google Scholar]

- Fleck MS, Daselaar SM, Dobbins IG, Cabeza R. Role of prefrontal and anterior cingulate regions in decision-maldng processes shared by memory and nonmemory tasks. Cereb. Cortex. 2006;16:1623–1630. doi: 10.1093/cercor/bhj097. [DOI] [PubMed] [Google Scholar]

- Gottfried JA, O'Doherty J, Dolan RJ. Encoding predictive reward value in human amygdala and orbitofrontal Cortex. Science. 2003;301:1104–1107. doi: 10.1126/science.1087919. [DOI] [PubMed] [Google Scholar]

- Gusnard DA, Akbudak E, Shulman GL, Raichle ME. Medial prefrontal cortex and self-referential mental activity: relation to a default mode of brain function. Proc. Natl. Acad. Sci. USA. 2001;98:4259–4264. doi: 10.1073/pnas.071043098. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hampton AN, Bossaerts P, O'Doherty JP. The role of the ventromedial prefrontal cortex in abstract state-based inference during decision making in humans. J. Neurosci. 2006;26:8360–8367. doi: 10.1523/JNEUROSCI.1010-06.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huettel SA, Song AW, McCarthy G. Decisions under uncertainty: probabilistic context influences activation of prefrontal and parietal cortices. J. Neurosci. 2005;25:3304–3311. doi: 10.1523/JNEUROSCI.5070-04.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kahneman D. A perspective on judgment and choice: mapping bounded rationality. Am. Psychol. 2003;58:697–720. doi: 10.1037/0003-066X.58.9.697. [DOI] [PubMed] [Google Scholar]

- Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. J. Neurosci. 2005;25:4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005.

- Kuhnen CM, Knutson B. The neural basis of financial risk taking. Neuron. 2005;47:763–770. doi: 10.1016/j.neuron.2005.08.008.

- MacDonald AW, III, Cohen JD, Stenger VA, Carter CS. Dissociating the role of the dorsolateral prefrontal and anterior cingulate cortex in cognitive control. Science. 2000;288:1835–1838. doi: 10.1126/science.288.5472.1835.

- McClure SM, Li J, Tomlin D, Cypert KS, Montague LM, Montague PR. Neural correlates of behavioral preference for culturally familiar drinks. Neuron. 2004;44:379–387. doi: 10.1016/j.neuron.2004.09.019.

- Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25:653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- O’Doherty JP. Reward representations and reward-related learning in the human brain: insights from neuroimaging. Curr. Opin. Neurobiol. 2004;14:769–776. doi: 10.1016/j.conb.2004.10.016.

- O’Doherty J, Kringelbach ML, Rolls ET, Hornak J, Andrews C. Abstract reward and punishment representations in the human orbitofrontal cortex. Nat. Neurosci. 2001;4:95–102. doi: 10.1038/82959.

- Paulus MP, Stein MB. An insular view of anxiety. Biol. Psychiatry. 2006;60:383–387. doi: 10.1016/j.biopsych.2006.03.042.

- Paulus MP, Hozack N, Zauscher B, McDowell JE, Frank L, Brown GG, Braff DL. Prefrontal, parietal, and temporal cortex networks underlie decision-making in the presence of uncertainty. Neuroimage. 2001;13:91–100. doi: 10.1006/nimg.2000.0667.

- Paulus MP, Rogalsky C, Simmons A, Feinstein JS, Stein MB. Increased activation in the right insula during risk-taking decision making is related to harm avoidance and neuroticism. Neuroimage. 2003;19:1439–1448. doi: 10.1016/s1053-8119(03)00251-9.

- Plassmann H, O’Doherty J, Rangel A. Orbitofrontal cortex encodes willingness to pay in everyday economic transactions. J. Neurosci. 2007;27:9984. doi: 10.1523/JNEUROSCI.2131-07.2007.

- Preuschoff K, Bossaerts P, Quartz SR. Neural differentiation of expected reward and risk in human subcortical structures. Neuron. 2006;51:381–390. doi: 10.1016/j.neuron.2006.06.024.

- Raichle ME, MacLeod AM, Snyder AZ, Powers WJ, Gusnard DA, Shulman GL. A default mode of brain function. Proc. Natl. Acad. Sci. USA. 2001;98:676–682. doi: 10.1073/pnas.98.2.676.

- Talairach J, Tournoux P. Co-planar Stereotaxic Atlas of the Human Brain. Stuttgart: Thieme; 1988.

- Tobler PN, O'Doherty JP, Dolan RJ, Schultz W. Reward value coding distinct from risk attitude-related uncertainty coding in human reward systems. J. Neurophysiol. 2007;97:1621–1632. doi: 10.1152/jn.00745.2006.

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978.

- van Leijenhorst L, Crone EA, Bunge SA. Neural correlates of developmental differences in risk estimation and feedback processing. Neuropsychologia. 2006;44:2158–2170. doi: 10.1016/j.neuropsychologia.2006.02.002.

- Volz KG, Schubotz RI, von Cramon DY. Predicting events of varying probability: uncertainty investigated by fMRI. Neuroimage. 2003;19:271–280. doi: 10.1016/s1053-8119(03)00122-8.

- Wallis JD, Miller EK. Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. Eur. J. Neurosci. 2003;18:2069–2081. doi: 10.1046/j.1460-9568.2003.02922.x.

- Weller JA, Levin IP, Shiv B, Bechara A. Neural correlates of adaptive decision making for risky gains and losses. Psychol. Sci. 2007;18:958–964. doi: 10.1111/j.1467-9280.2007.02009.x.

- Weller JA, Levin IP, Shiv B, Bechara A. The effects of insula damage on decision-making for risky gains and losses. Soc. Neurosci. 2009;4:347–358. doi: 10.1080/17470910902934400.

- Zysset S, Wendt CS, Volz KG, Neumann J, Huber O, von Cramon DY. The neural implementation of multi-attribute decision making: a parametric fMRI study with human subjects. Neuroimage. 2006;31:1380–1388. doi: 10.1016/j.neuroimage.2006.01.017.