Abstract

Problem behavior often has sensory consequences that cannot be separated from the target response, even if external, social reinforcers are removed during treatment. Because sensory reinforcers that accompany socially mediated problem behavior may contribute to persistence and relapse, research must develop analog sensory reinforcers that can be experimentally manipulated. In this research, we devised analogs to sensory reinforcers in order to control for their presence and determine how sensory reinforcers may impact treatment efficacy. Experiments 1 and 2 compared the efficacy of differential reinforcement of alternative behavior (DRA) versus noncontingent reinforcement (NCR) with and without analog sensory reinforcers in a multiple schedule. Experiment 1 measured the persistence of key pecking in pigeons, whereas Experiment 2 measured the persistence of touchscreen responses in children with intellectual and developmental disabilities. Across both experiments, the presence of analog sensory reinforcers increased the levels, persistence, and variability of responding relative to when analog sensory reinforcers were absent. Also in both experiments, target responding was less persistent under conditions of DRA compared to NCR regardless of the presence or absence of analog sensory reinforcers.

Keywords: sensory reinforcement, automatic reinforcement, persistence, key peck, pigeons, screen touch, children with intellectual disability

Severe problem behavior such as aggression, property destruction, or self-injury has at least two types of consequences. One is attention by other people. Even the most patient caregiver, attempting to ignore a client’s problem behavior during an intervention such as extinction, will be unable to remain passive in the presence of severe problem behavior, whether it is directed toward other people, objects, or the client’s own body. Another is the immediate sensory effect of the problem behavior itself. Striking another person produces a bodily reaction; smashing a window produces the sound of shattered glass; and biting one’s arm can produce pain. Sensory stimuli like these are inextricably linked to the problem behavior itself and therefore have been termed “intrinsic” or “automatic” reinforcers (e.g., Vollmer, 1994). If sensory stimuli function as reinforcers for the responses that produce them, they may summate with the reinforcing effects of external events that can be administered or withheld according to arbitrary contingencies, such as caregiver attention and access to preferred activities, and make problem behavior more resistant to change during intervention (Ahearn, Clark, Gardenier, Chung, & Dube, 2003).

Sensory reinforcers cannot be administered or withheld by clinicians or experimenters because they are linked to responding (i.e., they are produced by engaging in the response). Therefore, empirical efforts to evaluate their effects must employ analogs that can be presented contingent on behavior in controlled settings.

Background and Rationale

In multiple schedules with equal reinforcer rates in two components, the addition of alternative reinforcers to one component typically lowers the rate of target responding in that component but paradoxically increases its resistance to change. While the decrease in target responding is evident in the immediate change in behavior, resistance to change is typically evaluated during subsequent tests that disrupt behavior. With nonhuman animals, resistance tests have included prefeeding and extinction; with humans, resistance tests often include distraction by novel stimuli or competing tasks. Decreases in target response rate and increases in its resistance to change have been obtained with alternative reinforcers that are the same as those maintaining behavior and with those that are qualitatively different from the reinforcer for target responding, regardless of whether they are presented independently of responding (NCR) or contingent upon an explicitly defined alternate response (DRA; Ahearn et al., 2003; Cohen, 1996; Grimes & Shull, 2001; Harper, 1999; Igaki & Sakagami, 2004; Mace et al., 1990; Nevin, Tota, Torquato, & Shull, 1990; Pyszczynski & Shahan, 2011; Shahan & Burke, 2004). Increases in resistance to change have also been reported in clinical applications that arrange NCR or DRA (e.g., Lieving, DeLeon, Carreau-Webster, Frank-Crawford, & Triggs, in press; Mace et al., 2010).

Behavioral momentum theory proposes that whereas steady-state response rate depends on response–reinforcer contingencies, resistance to change depends on the correlation between reinforcers and environmental stimuli such as the cues signaling multiple-schedule components (for review see Nevin & Grace, 2000). The addition of alternative reinforcers in one component both weakens the relation between target responding and reinforcers and strengthens the stimulus–reinforcer relation in that component. Accordingly, baseline response rate decreases but its resistance to change increases. Another interpretation of the decrease in baseline response rate is that added reinforcers serve to disrupt ongoing target behavior (e.g., Nevin & Shahan, 2011). Also, the increase in resistance to change may be ascribed to the higher probability of reinforcement for the target response that necessarily occurs on VI schedules when response rate decreases (McLean, Grace, & Nevin, 2012). Whatever the interpretation, the basic phenomenon of increased persistence resulting from alternative reinforcement remains a challenge, most especially in clinical intervention when decreasing problem behavior is paramount.

An extension of momentum theory also accounts for relapse of problem behavior when treatment is interrupted or discontinued altogether. Specifically, the omission of alternative reinforcers removes their immediate disruptive effect while retaining the strengthening effect of their historical correlation with environmental stimuli. Nevin and Shahan (2011) and Shahan and Sweeney (2011) have expressed these effects of alternative reinforcement in a quantitative model that accounts for the data of nonhuman animals in experimental analyses and of human participants in treatment settings (Wacker et al., 2011).

In clinical applications, extinction of problem behavior is often arranged concurrently with DRA or NCR in order to provide maximally effective treatment (e.g., Iwata, Dorsey, Slifer, Bauman, & Richman, 1994; Petscher, Rey, & Bailey, 2009). However, as noted above, extinction cannot be implemented for the sensory consequences that follow automatically from the problem behavior itself; therefore, experimental analyses must arrange extrinsic analogs to sensory reinforcers in order to evaluate their possible effects on behavior during alternative reinforcement and extinction. Here, we describe two studies employing pigeons and children with intellectual disabilities in an experimental paradigm designed to mimic the availability of sensory reinforcers during and after analog treatments with DRA and NCR in multiple schedules.

Experiment 1

In Experiment 1, we used food deliveries in a standard pigeon operant chamber to develop an animal model of a target behavior maintained by a combination of socially mediated reinforcement and sensory reinforcement. Pigeons’ key pecking was examined across a three-component multiple schedule, with components distinguished by key color. Phase I established similar baseline performances of the target response in the three components. Phase II then compared the effectiveness of treatment with extinction of the target behavior plus either DRA (component 2, C2) or NCR (C3); C1 served as a no-treatment control. This phase was meant to model interventions that combine extinction with contingent (DRA) or noncontingent (NCR) alternative reinforcement, relative to a context in which treatment is not implemented. Phase III examined the effects of these treatments on resistance to extinction; the initial sessions evaluated posttreatment resurgence of the target response in C2 and C3, when alternative reinforcers were removed. Phase IV evaluated potential reinstatement of problem behavior by response-independent reinforcers presented twice during each component. This phase was conducted primarily as an experimental test of relative behavioral strength, and not to model any specific clinical situation.

This sequence of experimental phases occurred first with analog sensory reinforcement (Condition 1) and then without analog sensory reinforcement (Condition 2). Condition 3 was a replication of Condition 1. Table 1 shows a summary of the Experiment 1 procedure, including reinforcement rates.

Table 1.

Phases and Conditions of Experiment 1

| Phase | Component | Treatment | Condition 1: Target | Condition 1: Alternative | Condition 2: Target | Condition 2: Alternative |
|---|---|---|---|---|---|---|
| Phase I (Baseline), 30 sessions | C1 | (No treatment) | VI 120 RT + VI 30 rT | - | VI 120 RT | - |
| | C2 | (DRA) | VI 120 RT + VI 30 rT | - | VI 120 RT | - |
| | C3 | (NCR) | VI 120 RT + VI 30 rT | - | VI 120 RT | - |
| Phase II (Treatment), 30 sessions | C1 | (No treatment) | VI 120 RT + VI 30 rT | - | VI 120 RT | - |
| | C2 | (DRA) | VI 30 rT | VI 30 RT | Ext | VI 30 RT |
| | C3 | (NCR) | VI 30 rT (VT 30 RT) | - | Ext (VT 30 RT) | - |
| Phase III (Extinction), 30 sessions | C1 | (No treatment) | VI 30 rT | - | Ext | - |
| | C2 | (DRA) | VI 30 rT | Ext | Ext | Ext |
| | C3 | (NCR) | VI 30 rT | - | Ext | - |
| Phase IV (Reinstatement), 10 sessions | C1 | (No treatment) | VI 30 rT + RI RT | - | Ext + RI RT | - |
| | C2 | (DRA) | VI 30 rT + RI RT | Ext | Ext + RI RT | Ext |
| | C3 | (NCR) | VI 30 rT + RI RT | - | Ext + RI RT | - |

Note. Target = left or right side key (counterbalanced), Alternative = opposite side key. C1, C2, and C3 = Multiple-schedule component 1 (no treatment), 2 (DRA), and 3 (NCR), respectively. RT represents 3-s food presentations with a steady hopper light; rT represents 1-s food presentations with a flashing hopper light and key light. VI 120 and VI 30 = variable-interval 120-s and 30-s schedules, respectively. VT 30 = variable-time 30-s schedule. Ext = extinction for pecks to a lit key. RI = response-independent food at 2 and 8 s into each component. Condition 3 replicated Condition 1.

Method

Subjects and apparatus

Subjects were seven experimentally naïve homing pigeons (Double T Farm, Glenwood, IA). Pigeons were maintained at approximately 80–90% of their free-feeding weights by supplementary postsession food. Subject living conditions and the operant chambers have been described elsewhere in detail (Sweeney & Shahan, 2013).

Procedure

Pretraining

Manual shaping of successive approximations was used to train pigeons to peck the illuminated (white) center key. Then, all subjects completed three sessions (one each for center, right, and left active keys), each of which ended after the subject earned fifty 2-s hopper presentations delivered on a fixed-ratio (FR) 1 schedule of reinforcement.

Phase I

During Phase I (baseline), a three-component multiple schedule was implemented where components were signaled by target response key colors of red, green, and blue. The positions of the target response (left or right key) and component colors were counterbalanced across subjects. The components lasted 3 min and were separated by 1-min intercomponent intervals (ICIs). There were 12 total components and component order was randomized in blocks of three, such that sessions included 36 min of time in the components, excluding ICIs and reinforcement time. In all components, pecks to the target key (either the left or right key) produced 3-s access to food with a steady hopper light (hereafter abbreviated RT) on a variable-interval (VI) 120-s schedule. During Condition 1 and Condition 3, pecks to the target side key also produced analog sensory reinforcement, 1-s access to food with a flashing hopper light and flashing white target key light (rT), on a superimposed VI 30-s schedule. In Conditions 1 and 3, rT was available for the target response in all components during all phases. If both rT and RT were set to deliver a response-contingent reinforcer at the same time, a peck to the target key produced rT, a 0.5-s blackout, and then RT. Phase I lasted at least 30 sessions. In Condition 1, pigeons required between zero and four additional Phase I sessions to ensure no downward trends in target responding over the last three sessions. In Conditions 2 (no rT events) and 3, Phase I lasted 30 sessions for all pigeons.
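The superimposed schedules and the precedence rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the software used in the experiment: the class and function names are hypothetical, and the exponential distribution of intervals is an assumption, because the text does not state how the VI intervals were generated.

```python
import random


class VISchedule:
    """Variable-interval timer: sets up a reinforcer after a random interval;
    the next response after setup collects it."""

    def __init__(self, mean_s, rng):
        self.mean_s = mean_s
        self.rng = rng
        self.next_setup = self._draw(0.0)

    def _draw(self, now):
        # Assumption: exponentially distributed intervals with the stated mean.
        return now + self.rng.expovariate(1.0 / self.mean_s)

    def armed(self, now):
        return now >= self.next_setup

    def collect(self, now):
        # Restart the timer once the reinforcer has been delivered.
        self.next_setup = self._draw(now)


def consequences_for_peck(now, vi_rt_small, vi_rt_large):
    """Ordered consequences of one target-key peck at time `now`.

    vi_rt_small: VI 30-s schedule for rT (1-s food, flashing lights).
    vi_rt_large: VI 120-s schedule for RT (3-s food, steady hopper light).
    If both are set up, the peck produces rT, a 0.5-s blackout, then RT.
    """
    events = []
    if vi_rt_small.armed(now):
        events.append("rT")
        vi_rt_small.collect(now)
    if vi_rt_large.armed(now):
        if events:
            events.append("blackout_0.5s")
        events.append("RT")
        vi_rt_large.collect(now)
    return events


rng = random.Random(1)
vi_small = VISchedule(30, rng)
vi_large = VISchedule(120, rng)
```

Timing details such as whether the VI timers pause during food delivery are not specified in the text and are ignored in this sketch.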

Phase II

Phase II introduced alternative reinforcement treatments in C2 and C3, while Phase I schedules of reinforcement continued in C1. In C2, corresponding to DRA treatment, RT was no longer available for the target response. Also in C2, the alternative response key (opposite side key from the target response key) was illuminated with the same stimulus color as the target response key. RT was available for pecks to the alternative response key on a VI 30-s schedule. There was a changeover delay (COD) in place such that the alternative key could not produce RT and the target key could not produce rT if the other response had occurred in the last 3 s. P196 had difficulty acquiring the alternative response in C2, and after 12 sessions of Phase II was manually shaped to the alternative key (illuminated in isolation) immediately prior to the session. In C3, corresponding to NCR treatment, RT was no longer available for the target response, but RT was delivered on a variable-time (VT) 30-s schedule of alternative reinforcement. Due to a software error, data from session 25 of Phase II in Condition 2 and from session 18 of Phase II in Condition 3 were not saved for P314, P251, or P348. Phase II lasted 30 sessions.
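The changeover-delay rule in C2 can be illustrated with a short Python sketch. The names are hypothetical, and the treatment of a peck occurring exactly 3 s after the other response (counted here as eligible) is an assumption, because the text does not specify the boundary case.

```python
COD_S = 3.0  # changeover delay in seconds, as described for C2


class ChangeoverDelay:
    """Tracks the most recent peck on each key and reports whether a new peck
    is eligible to collect a scheduled reinforcer."""

    def __init__(self, cod_s=COD_S):
        self.cod_s = cod_s
        self.last_peck = {"target": None, "alternative": None}

    def peck(self, key, t):
        """Record a peck on `key` at time `t` (s); return True if the other
        response has not occurred within the last `cod_s` seconds."""
        other = "alternative" if key == "target" else "target"
        last_other = self.last_peck[other]
        # Assumption: a peck exactly cod_s after the other response is eligible.
        eligible = last_other is None or (t - last_other) >= self.cod_s
        self.last_peck[key] = t
        return eligible


cod = ChangeoverDelay()
```

An eligible peck still produces a reinforcer only if the relevant VI schedule has one set up; the delay merely blocks collection shortly after a switch between keys.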

Phase III

In Phase III, RT was discontinued in all components; rT remained available for target-key pecks in Conditions 1 and 3. Response keys were lit as in Phase II. Phase III lasted 30 sessions.

Phase IV

In Phase IV, RT was introduced response-independently at 2 and 8 s into each component. Response keys were lit as in Phases II and III. Phase IV lasted 10 sessions. Phase IV was a test of reinstatement that replicates prior research (e.g., Pyszczynski & Shahan, 2011).

Results and Discussion

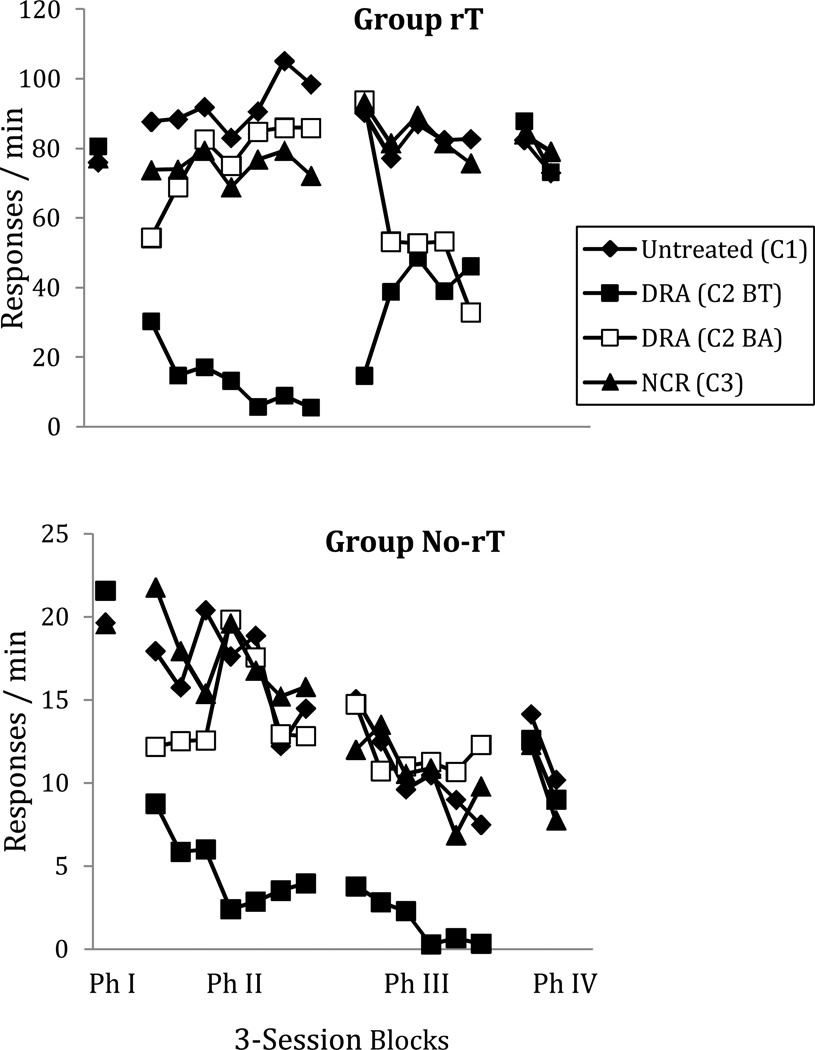

To summarize the results, Figure 1 presents average response rates for all seven pigeons during Conditions 1, 2, and 3. Baseline response rates, averaged for the final five sessions of Phase I in each condition, appear above the “Ph I” marker on the x-axis in each panel. There were no consistent differences in baseline rates between Condition 2 (no rT) and Conditions 1 and 3 (with rT). Likewise, there is little or no difference across conditions with and without rT in Phase II for the no-treatment component C1 (filled diamonds). Thus, rT did not affect response rates before treatment was introduced in components C2 and C3.

Fig. 1.

Responses per min on the target and alternative response key (C2 B Alt) across all phases and conditions of Experiment 1. Ph I = Phase I baseline, Ph II = Phase II treatment, Ph III = Phase III RT discontinued, Ph IV = Phase IV reinstatement. C1, C2, and C3 = multiple-schedule components: Component 1 (no treatment), 2 (DRA), and 3 (NCR), respectively. Phase I data points are the average of the last five sessions of baseline.

In Phase II, the introduction of DRA in C2 led to rapid increases in responding to the DRA alternative (unfilled squares), accompanied by rapid decreases in target responding (filled squares). The introduction of NCR in C3 led to progressive decreases in target responding (filled triangles) that were substantially smaller than for DRA in C2. Both DRA and NCR were increasingly effective across successive conditions. In the no-treatment component (C1), baseline response rates were generally lower than during Phase I (albeit with substantial individual variation). This effect is consistent with negative behavioral contrast resulting from the addition of DRA and NCR reinforcers in C2 and C3.

In Phase III, the discontinuation of RT food reinforcers in all components led to systematic decreases in alternative responding in the DRA component, with initial increases in target responding in C2 (formerly DRA)—an effect known as resurgence. Responding in C3 (formerly NCR) increased similarly. The magnitude of resurgence when DRA was discontinued in C2 was greater in Conditions 1 and 3 (with rT) than in Condition 2 (no rT), but there were no consistent differences across conditions when NCR was discontinued in C3. In all conditions and components, target response rates generally decreased over subsequent sessions during Phase III; Figure 1 shows their persistence during extinction was greater in Conditions 1 and 3 (with rT) than in Condition 2 (no rT).

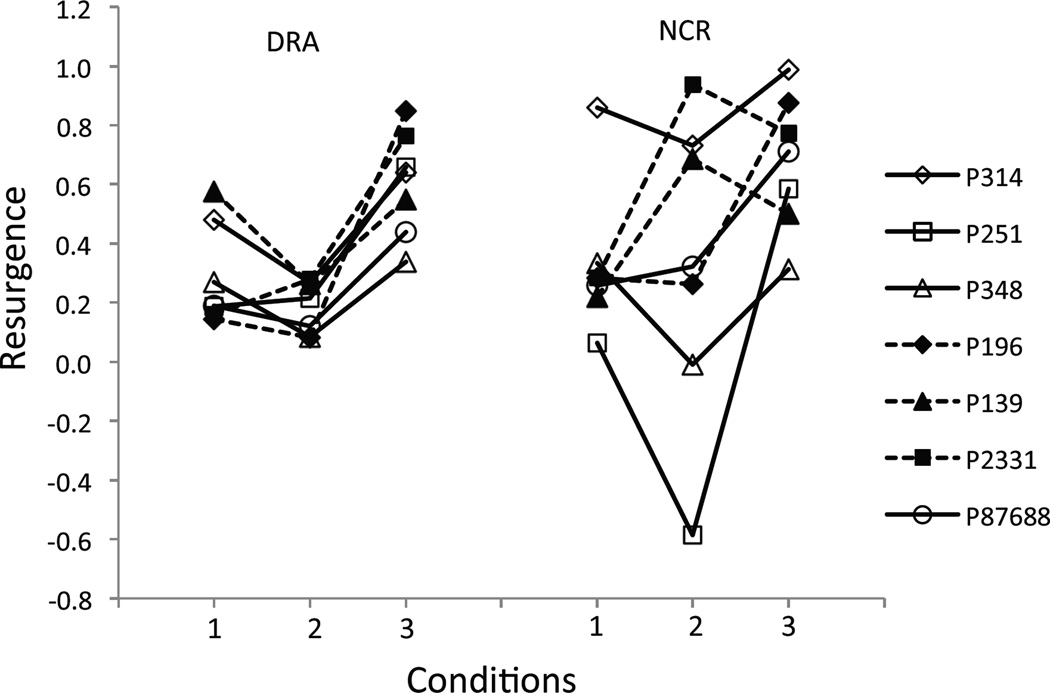

Figure 2 examines resurgence as the difference in proportions of baseline responding between the first five sessions of Phase III and the last five sessions of Phase II; a positive value demonstrates that target responding increased when alternative reinforcement was discontinued. Results for the DRA and NCR components are shown across successive conditions in the left and right portions of the figure. In the DRA component, resurgence was generally greater in Conditions 1 (five of seven pigeons) and 3 (all seven pigeons) with rT than in Condition 2 with no rT. In the NCR component, there were no systematic differences across conditions and individuals.

Fig. 2.

Resurgence of the target response in C2 (DRA) and C3 (NCR), Conditions 1–3, Experiment 1. Here, resurgence is the difference in proportions of baseline between the first five sessions of Phase III and the last five sessions of Phase II. A positive value shows that target responding increased when alternative reinforcement was removed.
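The resurgence measure defined above can be written out as a short calculation. The Python sketch below is illustrative only: the function name and the example rates are hypothetical, and averaging the session rates before dividing by the baseline rate is an assumption about the order of operations.

```python
from statistics import mean


def resurgence_index(phase2_rates, phase3_rates, baseline_rate, n=5):
    """Difference in proportion of baseline between the first n sessions of
    Phase III and the last n sessions of Phase II.

    Rates are target responses per min, one value per session, in order.
    A positive value means target responding increased when alternative
    reinforcement was removed.
    """
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    if len(phase2_rates) < n or len(phase3_rates) < n:
        raise ValueError("need at least n sessions in each phase")
    late_treatment = mean(phase2_rates[-n:]) / baseline_rate
    early_extinction = mean(phase3_rates[:n]) / baseline_rate
    return early_extinction - late_treatment


# Hypothetical response rates (responses per min), for illustration only.
phase2 = [20, 12, 8, 6, 5, 5, 4, 4, 3, 4]
phase3 = [10, 14, 12, 9, 5, 3, 2]
index = resurgence_index(phase2, phase3, baseline_rate=50)
```

With these made-up values the index is positive, which would be read as resurgence of the target response.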

Reinstatement by response-independent reinforcers in Phase IV was generally greater in Conditions 1 and 3 (with rT) than in Condition 2 (no rT; see Fig. 1). Alternative responding also was reinstated in each condition, with the magnitude of the effect decreasing across conditions. There were no systematic differences between components or conditions in the magnitude of reinstatement.

There were some idiosyncratic differences in the response to DRA and NCR treatments as well as differences in the effects of rT on target responding in the absence of RT (in Phase III). In the DRA treatment component, target responding was reduced rapidly to near-zero levels during Phase II, with the exception of P196, Condition 1 (see procedure note on this pigeon). Target responding in the NCR component showed substantial variability across pigeons during Phase II (see Supporting Information). In NCR, target responding decreased during Phase II for five pigeons but remained high or increased for P314 and P196 in Condition 1 and for P251 and P348 in Condition 2; decreasing response rates were the rule in Condition 3. In Phase III (no RT available for any response), during Conditions 1 and 3 (rT and rT replication), individual target response rates in all components varied widely, with two subjects in Condition 1 and three subjects in Condition 3 showing reacquisition of the target response for rT alone, whereas responding decreased to near zero across sessions for all pigeons in Condition 2 (no rT). Individual subject data for Phases II and III for each component are available in Supporting Information.

In summary, the availability of analog sensory reinforcement (i.e., rT) in Conditions 1 and 3 had little or no effect across conditions on baseline responding or on response rates in Component 1 during Phase II. There were no clear effects of rT on target responding in the DRA component during Phase II, perhaps because response rates fell so rapidly for all but one pigeon as to mask any small effects. Likewise, there were no clear across-subject effects of rT on target responding in the NCR component during Phase II. However, during Phase III, target responding increased substantially after DRA was discontinued in Conditions 1 and 3 with rT; the effect was reliably greater than in Condition 2 with no rT. Resurgence was less clear and less differentiated across successive conditions after NCR was discontinued. Overall, during Phase III, rT increased the levels, the persistence, and the variability of responding between subjects in all components.

Regardless of the presence or absence of rT, target responding was eliminated rapidly for all pigeons with treatment by DRA but not with NCR. One plausible account of this difference is that DRA establishes explicitly defined alternative behavior (i.e., pecking a second key for reinforcers on a VI schedule) whereas NCR contingencies leave alternative behavior unspecified. Still, this result is somewhat surprising given that NCR and DRA are both established treatments for problem behavior (for review see Carr, Severtson, & Lepper, 2009; Petscher, Rey, & Bailey, 2009), although they are rarely directly compared while controlling for other extraneous variables. One other reason for the relative differences in DRA and NCR effectiveness in our study may be that clinicians, in an effort to avoid adventitious reinforcement of the target response, often implement NCR contingencies with the additional specification that the target response must not have occurred too closely before the delivery of NCR, whereas we made no such requirement.

If rT is considered an adequate analog of the sensory consequences associated with engaging in a behavior, the present results suggest that such sensory reinforcers might be more likely to contribute to the persistence of problem behavior during lapses of treatment than during treatment itself. Potential theoretical and applied implications of these findings will be further explored in the General Discussion.

Experiment 2

This experiment examined the effects of the presence or absence of analog sensory reinforcers during and after simulated DRA and NCR treatments in multiple schedules with children with intellectual disabilities. Comparisons were made between subjects rather than within subjects as in Experiment 1. To limit the overall duration of research participation, we did not conduct within-subject replications: the experimental protocol required approximately 65 sessions over 5 months, and we were reluctant to request parental consent and clinical team approval for a protocol of twice this duration. The contingencies for Group rT included analog sensory reinforcers presented on VI schedules, as in Experiment 1, Condition 1, and the contingencies in Group No-rT did not include analog sensory reinforcers, as in Experiment 1, Condition 2.

Method

Participants

Ten individuals with intellectual disabilities who attended a private school for children with autism participated in this study. Each participant’s gender, chronological age, mental-age equivalent score from the Peabody Picture Vocabulary Test 4 (PPVT; Dunn, Dunn, & Pearson Assessments, 2007), diagnosis, and experimental group are listed in Table 2. A trained research assistant administered the PPVT and clinical diagnoses were obtained from student records.

Table 2.

Experiment 2 Participant Characteristics

| Participant | Gender | Age | PPVT | Diagnosis | Group |
|---|---|---|---|---|---|
| JHG | M | 18 | < 2:0 | PDD | rT |
| 391 | M | 13 | 4:8 | PDD-NOS | rT |
| 158 | M | 18 | 2:2 | Autism | rT |
| 2242 | M | 12 | 4:3 | Autism | rT |
| BHH | M | 20 | 3:0 | PDD, Autism | No rT |
| 359 | M | 16 | < 2:0 | Autism | No rT |
| AEA | M | 14 | 3:1 | Autism | No rT |
| 1659 | M | 13 | 2:2 | PDD-NOS | No rT |
| 1664 | F | 15 | 5:7 | Angelman Syndrome | No rT |
| JET** | M | 11 | 2:2 | Autism | |

Note. PPVT = Peabody Picture Vocabulary Test 4 Mental age equivalent score (years:months). PDD = Pervasive Developmental Disability, NOS = Not Otherwise Specified, Group rT = with analog sensory reinforcers, Group No rT = without analog sensory reinforcers.

JET did not complete the experimental conditions.

Apparatus and setting

Sessions were conducted in a 1.5 × 1.8 m laboratory testing room located at the participants’ school. Participants sat facing a wall in which a 17″ touchscreen color monitor was flush mounted at eye level. Speakers on both sides of the touchscreen were flush mounted behind 7-cm square metal screens. Tokens were dispensed by an automated poker-chip dispenser (Med ENV-703) into a small opening in the wall to the left of the monitor. There was a narrow countertop extending 18 cm from the wall below the monitor and token dispenser opening. Participants were given a small plastic container in which to place tokens as they were collected. The experimenter sat in the corner behind the participant and did not interact with him or her during the experiment. A computer running software written in MATLAB (MathWorks, Inc.) controlled experimental events and data collection.

Procedure

Experimental sessions of approximately 20-min duration were conducted once per day, usually 4 or 5 days per week.

Preliminary discrimination test

An initial tabletop pretest served two functions: to verify discrimination of the experimental stimuli and to introduce the tokens and token exchange procedure. The pretest consisted of a sorting task. The participant sat at a table with an array of four laminated cards, each displaying a colored shape (red circle, blue square, green triangle, and yellow diamond). Four demonstration trials were presented in which the experimenter placed an additional card with one of the shapes on top of the matching shape on the table. The experimenter then presented 32 test trials. On each trial, the experimenter gave the participant a card, said “Match,” and presented a token after each correct matching response. All participants were 100% correct on the sorting test.

Token exchange procedure and training

Throughout the experiment, accumulated tokens were exchanged for food or drink items immediately after each session. Food items for each participant were based on recommendations from classroom teachers. The exchange area was outside of the test room; there were five to seven food items in small containers arranged in a row on a countertop. One or two clear plastic tubes that held 10 tokens were placed in front of each container, signaling the price of the item. The participant was taught by vocal instructions to select an item, fill the tube(s) with tokens, and then exchange the token tube for the container of food. During preliminary testing and introductory sessions with relatively rich schedules of reinforcement (details below), participants typically earned more than 60 tokens and the price of each item was 20 tokens; during the experiment the price was 10 tokens.

Reinforcer function test

A second preliminary test introduced the touchscreen and the computer-generated stimuli that accompanied automatically dispensed tokens, and determined whether presentation of tokens functioned as a conditioned reinforcer (similar to Dube, McIlvane, Mazzitelli, & McNamara, 2003). When the token dispenser was activated during a session, on-screen stimuli disappeared and were replaced by a 3-s animated fireworks display that filled the active screen area and 3 s of chimes presented through the speakers. The token with fireworks display and chimes corresponded to the RT consequence in Experiment 1.

Each test session consisted of two 1-min sampling components presenting individual stimuli to which responses did or did not produce tokens, followed by one 3-min choice component with both stimuli presented concurrently. In the first component, a 4-cm purple star appeared on the left third of the computer screen and touching it produced a token on a fixed-interval (FI) 2-s schedule. During the second component, a 4-cm orange star was presented in the right third of the screen, and touching it did not produce a token (EXT). In the third component, both stars were displayed simultaneously, with the same reinforcement contingencies just described and a 1.5-s COD. The dependent variable was the proportion of responding to the left side (tokens) during the 3-min concurrent-choice component. The criterion to continue testing was at least 80% responses to the left side for one session, within a limit of five sessions. All participants met this criterion. Sessions then continued with the reinforcement contingencies and order of presentation for the first two components reversed. The orange star on the right side was presented first for 1 min with tokens dispensed on FI 2 s, the purple star on the left side followed for 1 min with EXT, and the 3-min choice component presented both of these stimuli concurrently and with these schedules. Sessions continued until 80% of responses were made to the right side during the third component for one session, or to a maximum of five test sessions. All participants met this criterion except one, JET, who was excused from the experiment at this point.
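The continuation criterion for the reinforcer function test can be expressed as a simple check. The Python sketch below is a hypothetical illustration: the function names and example counts are invented, and the input is assumed to be per-session touch counts from the 3-min choice component, listed in the order the sessions were run.

```python
def token_side_proportion(token_side_touches, other_side_touches):
    """Proportion of choice-component responses made to the side that
    produced tokens."""
    total = token_side_touches + other_side_touches
    if total == 0:
        raise ValueError("no responses recorded in the choice component")
    return token_side_touches / total


def meets_criterion(sessions, threshold=0.80, max_sessions=5):
    """Return True if any of the first `max_sessions` sessions has at least
    `threshold` of choice responses on the token side.

    sessions: list of (token_side_touches, other_side_touches) tuples.
    """
    for token_side, other_side in sessions[:max_sessions]:
        if token_side + other_side == 0:
            continue  # a session with no responses cannot meet the criterion
        if token_side_proportion(token_side, other_side) >= threshold:
            return True
    return False


# Hypothetical counts: criterion met in the second session (45 of 50 touches).
example_sessions = [(10, 10), (45, 5)]
passed = meets_criterion(example_sessions)
```

The same check applies to the reversal, with the right side treated as the token side.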

Experimental stimuli and responses

The experimental stimuli presented on the touchscreen monitor were colored shapes (blue squares, red circles, or green triangles) each approximately 1.5 cm square in size. Stimuli appeared on a black background. The screen was divided vertically into three equal-sized areas (i.e., left, center, and right), analogous to the three response keys in Experiment 1. During each component, only one type of stimulus appeared. Six copies of that stimulus were presented at random locations within one screen area. When the participant touched a stimulus, it disappeared with a soft “pop” sound and another copy appeared at a different random location within the same screen area. Software recorded all responses to the touchscreen within the active screen area (both hits and misses) and the number of tokens delivered. When a token was dispensed, it was accompanied by the fireworks and chimes as described above. All touches within an active screen area were used for calculation of response rates, including both hits and misses.

Introductory experimental sessions

The VI schedules, ICI, and longer session durations for the experiment proper were introduced gradually over two sessions. The first session included nine components with component order randomized in blocks of three. Component duration was 1 min for all components. Assignment of stimuli to components was counterbalanced across subjects. All stimuli appeared on the left screen area, and the schedules of reinforcement were the same for all stimuli. The schedule for token delivery changed from FI 3 s to VI 5 s within the session. During ICIs, the stimuli disappeared and the screen turned gray. ICIs were gradually increased from 4 s to 10 s within the session. The second session was similar to the first, except there were six 2-min components, the schedule of reinforcement changed from VI 5 s to VI 8 s within the session, and ICIs increased from 10 to 15 s. The criterion to continue in the experiment was average response rate greater than 5/min in the second session. All participants met this criterion.

Phase I

Table 3 shows a summary of the procedure for Experiment 2, including reinforcement schedules and contingencies for each component and group during Phases I–IV. Phase I baseline sessions consisted of nine components per session, with order randomized into blocks of three. Components were 2 min and ICIs were 15 s, thus the total duration of each session was 20 min. In all components tokens were delivered on a VI 32 s schedule (with 3 s of fireworks and chimes as described above). Phase I continued for 12 sessions; Participant 2242 received only 11 sessions because of experimenter error.
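The session structure and the 20-min total can be checked with a brief Python sketch. The function names are hypothetical. The duration calculation assumes that ICIs occurred only between consecutive components (eight ICIs for nine components) and that reinforcer time is excluded; the text does not state either point explicitly, but these assumptions reproduce the reported total.

```python
import random


def block_randomized_order(n_components, labels=("C1", "C2", "C3"), rng=None):
    """Component order randomized in blocks, so that each block contains
    every component exactly once."""
    rng = rng or random.Random()
    if n_components % len(labels) != 0:
        raise ValueError("n_components must be a multiple of the block size")
    order = []
    for _ in range(n_components // len(labels)):
        block = list(labels)
        rng.shuffle(block)
        order.extend(block)
    return order


def session_duration_s(n_components, component_s, ici_s):
    """Total session time in seconds.

    Assumption: one ICI between each pair of consecutive components,
    with no ICI after the final component.
    """
    return n_components * component_s + (n_components - 1) * ici_s


order = block_randomized_order(9, rng=random.Random(0))
duration_min = session_duration_s(9, component_s=120, ici_s=15) / 60
```

Under these assumptions, nine 2-min components contribute 18 min and eight 15-s ICIs contribute 2 min.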

Table 3.

Phases and Conditions of Experiment 2

| Phase | Component | Treatment | Group rT: Target | Group rT: Alternative | Group No-rT: Target | Group No-rT: Alternative |
|---|---|---|---|---|---|---|
| Phase I (Baseline), 12 sessions | C1 | (No treatment) | VI 32 token + VI 8 rT | - | VI 32 token | - |
| | C2 | (DRA) | VI 32 token + VI 8 rT | - | VI 32 token | - |
| | C3 | (NCR) | VI 32 token + VI 8 rT | - | VI 32 token | - |
| Phase II (Treatment), 21–33 sessions | C1 | (No treatment) | VI 32 token + VI 8 rT | - | VI 32 token | - |
| | C2 | (DRA) | VI 8 rT | VI 8 token | Ext | VI 8 token |
| | C3 | (NCR) | VI 8 rT + VT 8 token | - | Ext + VT 8 token | - |
| Phase III (Extinction), 15–18 sessions | C1 | (No treatment) | VI 8 rT | - | Ext | - |
| | C2 | (DRA) | VI 8 rT | Ext | Ext | Ext |
| | C3 | (NCR) | VI 8 rT | - | Ext | - |
| Phase IV (Reacquisition), 6 sessions | C1 | (No treatment) | VI 32 token + VI 8 rT + RI | - | VI 32 token + RI | - |
| | C2 | (DRA) | VI 32 token + VI 8 rT + RI | - | VI 32 token + RI | - |
| | C3 | (NCR) | VI 32 token + VI 8 rT + RI | - | VI 32 token + RI | - |

Note. Target = left screen area, Alternative = right screen area. C1, C2, and C3 = Multiple-schedule component 1 (no treatment), 2 (DRA), and 3 (NCR), respectively. VI 32 and VI 8 = variable-interval 32-s and 8-s schedules, respectively. VT 8 = variable-time 8-s schedule. Token = token deliveries accompanied by 3-s of animated fireworks and chimes, rT = 0.5-s smaller fireworks and muted chime (no token). Ext = extinction for responses to a screen area. RI = two response-independent tokens in the first three components.

For Group rT, the analog sensory reinforcer was a small 5 × 5 cm animated fireworks display of 0.5 s duration, accompanied by a 0.5-s muted chime, but with no token. The rT consequence was delivered on a superimposed VI 8 s schedule for responses to the left (target) screen area within all components. Participants in Group No-rT never received this consequence. The rT contingencies modeled a combination of socially mediated reinforcement (tokens) and sensory reinforcement (rT) for responses.
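To make the superimposed arrangement concrete, the following is a minimal sketch of two independent VI schedules operating on the same target response, as for Group rT during baseline. It assumes exponentially distributed intervals, with each new interval timed from the previous delivery; the source does not state how the intervals were generated. The class `VISchedule`, the function `simulate_component`, and the one-response-per-second stream are hypothetical and serve only as illustration.

```python
import random


class VISchedule:
    """Variable-interval schedule: a consequence is set up after a random
    interval and delivered for the first response that follows setup."""

    def __init__(self, mean_s, rng):
        self.mean_s = mean_s
        self.rng = rng
        # Assumption: intervals are exponential with the nominal mean.
        self.next_setup = rng.expovariate(1.0 / mean_s)

    def respond(self, t):
        """Return True if a response at time t (in seconds) is reinforced."""
        if t < self.next_setup:
            return False
        # Consequence delivered; time the next interval from this moment.
        self.next_setup = t + self.rng.expovariate(1.0 / self.mean_s)
        return True


def simulate_component(duration_s=120, seed=0):
    """Count tokens and rT events for a hypothetical participant who
    touches the target area once per second during a 2-min component."""
    rng = random.Random(seed)
    token = VISchedule(32, rng)  # VI 32 s token schedule
    r_t = VISchedule(8, rng)     # superimposed VI 8 s rT schedule
    tokens = rts = 0
    for t in range(1, duration_s + 1):
        tokens += token.respond(t)
        rts += r_t.respond(t)
    return tokens, rts


tokens, rts = simulate_component()
```

Because the two schedules run independently, a single response can in principle produce both consequences, and the VI 8 s schedule will usually deliver rT more often than the VI 32 s schedule delivers tokens.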

Phase II

For both groups, the structure of Phase II (treatment) sessions was similar to baseline sessions: nine block-randomized 2-min components. For participants in Group rT, the rT consequence continued on a superimposed VI 8-s schedule for responses to the left screen area in all components. Component 1 (C1, no treatment) was identical to the baseline procedure. Component 2 (C2) modeled treatment with DRA, with C2 stimuli presented in both the left and right screen areas. In C2, tokens were discontinued for responses to the left area (modeling extinction of target behavior), and responses to the right screen area produced tokens on a VI 8-s schedule (modeling rich reinforcement for an alternative response). A 1.5-s COD applied to both token deliveries and presentations of rT. C2 was discontinued for Participant JHG after eight sessions because he did not respond to the DRA alternative stimulus. A procedure that attempted to establish responding to this stimulus by presenting it in isolation at various points in the session (similar to that used with P196 in Exp. 1) was not successful. Component 3 (C3) modeled an NCR procedure; response-independent tokens were presented on a VT 8-s schedule, and response-contingent tokens were no longer available for the target response. As with all other token deliveries, VT tokens were accompanied by the 3-s fireworks and chimes. Phase II continued for 21 sessions, plus 3–18 additional sessions for four participants, as described below.

Disruption test with concurrent distracting stimulus

Four participants (JHG, 391, 158, and BHH) received disruption tests with a concurrent distracting stimulus. Disruption sessions were identical to other Phase-II sessions for the first block of three components. During the next six components, a large yellow star, similar to those used during the reinforcer function pretest, was presented in the center screen area (analogous to the concurrent distracting stimulus in Mace et al., 1990). Responses to the star were followed by the token consequence on a VI 16-s schedule. Participants completed three disruption sessions, followed by additional Phase II treatment sessions until response rates became stable. The disruption test was discontinued after the first four participants because of floor or ceiling effects; responding continued at characteristic rates for two subjects (no effect) and fell to extremely low rates for two others (data not presented).

Phase III

During Phase III (extinction), session structure and stimuli were identical to Phase II, but no tokens were delivered. Participants in Group rT continued to receive the rT consequence on VI 8 s for responses to the left screen area. Phase III continued until one of three criteria was met: (a) at least 15 sessions with visually stable responding for the last six sessions; (b) three consecutive sessions with refusals to attend the session or remain in the testing room for at least six components; (c) repeated sessions with significant destructive or health-threatening behavior.

Phase IV

After extinction the initial baseline conditions were reinstated for six sessions. During the first three sessions, two response-independent tokens were delivered in each of the first three components to assist in response reacquisition. If Phase III had been terminated because of refusal to attend sessions, the participant was invited back to the lab by bringing a few tokens and food items to the classroom and telling the participant during the invitation that she or he would now be able to get tokens and other reinforcers.

Results and Discussion

Figure 3 shows average response rates for Groups rT (top panel) and No-rT (bottom panel). This analysis includes data for all subjects except Participant JHG, who did not receive C2. Baseline response rates for each participant (including JHG) are shown in the Appendix; figures showing individual response rates for each condition as proportions of baseline are included in the Supporting Information. In Figure 3, Phase I baseline response rates, averaged for the final six sessions of each condition, appear above the “Ph 1” marker in each panel. Response rates were compared between subjects (in contrast to Exp. 1), so differences in baseline rates between Groups rT and No-rT may reflect individual variation in response characteristics; the Appendix shows substantial overlap between groups in individual baseline response rates. Therefore, the data cannot support conclusions regarding the effects of rT on baseline response rates.
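As a worked illustration of the baseline measure, the sketch below averages the final six Phase I sessions for one participant and component, using the C1 rates listed for Participant 1664 in the Appendix. The function name `baseline_rate` is illustrative.

```python
from statistics import mean

# Phase I response rates (responses/min) for Participant 1664, C1,
# sessions 1-12, copied from the Appendix.
c1_1664 = [83.8, 77.3, 66.1, 54.8, 35.6, 54.6,
           71.8, 66.5, 57.2, 83.8, 66.8, 58.9]


def baseline_rate(session_rates, n_last=6):
    """Mean response rate over the final n_last baseline sessions."""
    return mean(session_rates[-n_last:])


baseline = baseline_rate(c1_1664)  # about 67.5 responses/min
```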

Fig. 3.

Responses per min on the target (C1, C2 BT, C3) and alternative response (C2 BA) screen areas across all phases and conditions of Experiment 2. Phase I data points are the average of the last six sessions of baseline. See Figure 1 for further details of phases (Ph) and multiple-schedule conditions (C).

In Phase II, C1 (no treatment), evidence for the negative behavioral contrast seen with pigeons in Experiment 1 was not found with the human subjects, although response rates eventually declined in Group No-rT. Target response rates decreased in C2 in both groups when the alternative (DRA) response was concurrently available. Otherwise, there were only minor differences between groups, with a trend toward accelerating response rates in Group rT, and a trend toward decelerating rates in Group No-rT.

In Phase III (extinction), there were decreasing trends in C1 and C3 in both groups. The major difference between groups is seen in C2, with increased target rates and decreased alternative response rates in Group rT, but decreasing target and alternative response rates in Group No-rT. In Phase IV (reacquisition), response rates were comparable to baseline in Group rT, but the rates were substantially lower than baseline for Group No-rT.

Figure 4 examines resurgence in C2 and C3 as the difference in proportions of baseline between the first six sessions of Phase III and the last six sessions of Phase II; a positive value indicates that target responding increased when reinforcement was discontinued. Resurgence occurred in one of the treatment components for three participants in Group rT (391 in DRA, 158 and 2242 in NCR) and for one participant in No-rT (BHH in NCR). No participant showed evidence of resurgence in both components. The primary difference between the results of Groups rT and No-rT was that procedural extinction in Phase III was generally less consistent and slightly less effective in rT.

Fig. 4.

Resurgence of the target response in C2 (DRA) and C3 (NCR) for Groups rT and No-rT in Experiment 2. Resurgence is the difference in proportions of baseline between the first six sessions of Phase III and the last six sessions of Phase II. A positive value shows that target responding increased when reinforcement was discontinued.
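The resurgence measure defined above can be sketched as follows. The session rates are made-up values chosen only to illustrate the arithmetic, not data from the study, and the function names are hypothetical.

```python
from statistics import mean


def proportion_of_baseline(rates, baseline):
    """Express each session's response rate as a proportion of baseline."""
    return [r / baseline for r in rates]


def resurgence(phase2_rates, phase3_rates, baseline, n=6):
    """Mean proportion of baseline in the first n Phase III sessions minus
    the mean proportion of baseline in the last n Phase II sessions."""
    first_p3 = proportion_of_baseline(phase3_rates[:n], baseline)
    last_p2 = proportion_of_baseline(phase2_rates[-n:], baseline)
    return mean(first_p3) - mean(last_p2)


# Illustrative (made-up) target response rates, in responses per min.
baseline = 50.0
phase2 = [30, 22, 15, 12, 10, 10, 10, 10, 10, 10]  # treatment
phase3 = [20, 20, 20, 20, 20, 20, 8, 5]            # extinction
score = resurgence(phase2, phase3, baseline)
```

In this made-up case the target response averaged 0.2 of baseline at the end of treatment and 0.4 of baseline early in extinction, so the score is positive, which corresponds to resurgence.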

Two overall aspects of the results are consistent with those in Experiment 1, at least to a first approximation. First, during Phase III, rT increased the levels and the persistence of responding between subjects in all components. In addition, the data presented in Supporting Information exhibit greater between-subject variability in Group rT, as in Experiment 1.

Figure 5 shows average response rates in Phase-III extinction as mean proportions of baseline in Experiments 1 and 2. The three panels on the left compare resistance to extinction for pigeons over successive conditions with and without rT. The right panel compares resistance to extinction between separate groups of children with and without rT. For both pigeons and humans, resistance to extinction was greater with rT than without rT, and greater in C1 and C3 than in C2. Second, regardless of the presence or absence of rT, target responding was eliminated to a greater extent with treatment by DRA than by NCR. As suggested in relation to Experiment 1, this difference may arise from the fact that DRA arranges reinforcers for explicitly defined alternative behavior whereas NCR contingencies leave alternative behavior unspecified.

Fig. 5.

Mean proportions of baseline during Phase III, extinction, in Experiments 1 and 2. The left, center-left, and center-right panels compare resistance to extinction, averaged over seven pigeons, in successive conditions with and without rT. The right panel compares resistance to extinction between separate groups of children with and without rT. Error bars display standard errors.
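A group summary of the kind plotted in Figure 5 can be sketched as the mean and standard error of individual proportions of baseline. The values below are hypothetical. Using the sample standard deviation (n - 1 denominator) is an assumption, because the source does not state how the standard errors were computed.

```python
from math import sqrt
from statistics import mean, stdev


def mean_and_sem(values):
    """Return the mean and the standard error of the mean.

    Assumption: SEM = sample standard deviation / sqrt(n).
    """
    return mean(values), stdev(values) / sqrt(len(values))


# Hypothetical proportions of baseline for four subjects in one component.
with_rt = [0.9, 0.7, 1.1, 0.5]
without_rt = [0.3, 0.2, 0.4, 0.1]

m_rt, sem_rt = mean_and_sem(with_rt)
m_no, sem_no = mean_and_sem(without_rt)
```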

General Discussion

The primary purpose of this research was to systematically evaluate the effects of intrinsic or automatic reinforcers on behavior in the context of commonly used behavior deceleration procedures. Automatic reinforcement, though used as a working hypothesis in applied research and applied practice, is controversial in that behavior analysts typically reserve the term reinforcement for situations in which functional control over responding can be empirically demonstrated. However, automatically reinforced behavior does not easily lend itself to such demonstrations in that the consequence (i.e., reinforcer occurrence/delivery) is not readily observable/measurable. Automatic reinforcement is inferred as having taken place because behavior, putatively maintained by such consequences, has properties similar to those displayed by overt operant response–reinforcer relations. It has long been presumed that automatic reinforcers are crucial for maintaining operant responding, including complex human behavior (Vaughan & Michael, 1982), but there is little direct evidence for this presumption.

One exception is Ahearn et al. (2003), which tested whether automatically reinforced stereotypic behavior would conform to a prediction of behavioral momentum theory: that adding reinforcers to an environmental context would increase the persistence of stereotypy. These reinforcers increased behavioral persistence, suggesting that stereotypy was discriminated operant responding. Similar findings of increased persistence have been obtained for tightly controlled operant responding maintained by access to consumable items both when the additional reinforcers were qualitatively distinct from those maintaining operant responding (Grimes & Shull, 2001) and when reinforcement was delivered independent of responding (Nevin, Tota, Torquato, & Shull, 1990). Thus the automatically reinforced behavior in the Ahearn et al. preparation produced the same type of behavioral persistence observed with experimentally controlled reinforcers.

Given that automatically reinforced behavior is reinforced by the sensory products of responding, it is reasonable to assume that automatic reinforcers may also affect socially mediated responding, as there are also sensory products for this behavior. Moreover, best clinical practices relative to treating socially mediated problem behavior involve identifying the specific functional reinforcer (i.e., the aspect of the social environment) maintaining problem behavior and developing an intervention that addresses this social function (see Hanley, Iwata, & McCord, 2003; Pelios, Morren, Tesch, & Axelrod, 1999). Though automatic reinforcement as a maintaining variable is also assessed when evaluating problem behavior, little attention has been directed to the possible contribution of sensory reinforcers for socially maintained problem behavior.

In the two current studies, analogs to automatic reinforcers (rT) were devised as a means of controlling their presence. The analog reinforcers did not produce a difference in the rate of the pigeons’ key pecking during baseline in Experiment 1. On average, baseline rates of responding were higher for the human participants with rT than without rT in Experiment 2, but there was substantial overlap between groups.

In Phase II, two commonly used interventions for problem behavior, DRA and NCR, were arranged in multiple schedule components. DRA arranges an explicit contingency and responding shifted to the alternative response option in both experiments. In Experiment 1, this shift approached exclusive allocation to the alternative response (Fig. 1, C2) while in Experiment 2 the reallocation of behavior was less extreme (Fig. 3, C2). There was, again, no apparent difference in response rates across the rT and No-rT conditions in Experiment 1. In Experiment 2, the decrease in target response rates relative to baseline in the DRA component was comparable for groups with and without rT. This indicates that rT did not function as a conditioned reinforcer with sufficient strength to compete with the token reinforcers available for the alternative response.

NCR arranges access to reinforcing stimuli that either accelerate extinction by disrupting responding or decrease the motivation to engage in the response (Kahng, Iwata, Thompson, & Hanley, 2000). In Experiment 1, NCR produced a clear effect relative to control (C1), yet one that was less pronounced than in DRA. This rate-decreasing effect of NCR increased across the three sequential conditions but, even in the final condition, was still much smaller than the change in behavior obtained with DRA. As in the previous two phases, there was no difference attributable to rT across the rT and No-rT conditions. In Experiment 2, there was no clear differentiation between the NCR and no-treatment conditions, and rT generally enhanced responding relative to its absence. Overall, it seems that Phase II responding is more variable when rT is present, but it is not clear whether this is attributable to the presence of rT.

In both experiments there was a clear effect of rT on behavioral persistence during extinction (Phase III—see summary in Fig. 5). In Experiment 1 responding was more persistent in all components during extinction with rT, with no clear differentiation across the no-treatment control condition and the two treatment conditions. In the rT replication condition, persistence during extinction was more pronounced, implying that the extended history of reinforcement may have enhanced persistence. Similarly, in Experiment 2 responding was more persistent across components during extinction with rT. In both experiments NCR, both with and without rT, was associated with greater persistence than DRA, although this difference was less pronounced in Experiment 1 than Experiment 2. As noted during Phase II, the presence of rT, while associated with a clear persistence effect, also seemed to produce greater variability in responding. It may be that intrinsic automatic reinforcers increase variability within and between participants.

In Experiment 1 there was greater resurgence during the rT conditions in the DRA component. While there was a generally higher level of responding with rT in Experiment 2, there was no difference in resurgence associated with any condition, although this may be related to the general ineffectiveness of procedural extinction in reducing response rates. As observed in the previous two phases, rT was associated with greater variability in responding across participants (details in Appendix and Supporting Information).

The presence of rT did not consistently affect reinstatement in Experiment 1; in Experiment 2, however, Group rT generally exhibited greater levels of responding than Group No-rT during reacquisition.

There are two potential clinical implications of these findings. First, the present results suggest that intrinsic or automatic reinforcers have their most notable impact on behavior during extinction. Specifically arranging extinction-based treatment for socially-mediated behavior may provide the most salient context for this enhanced behavioral persistence to occur. Given that most behavior deceleration procedures either directly or indirectly impose extinction for problem behavior, more research is necessary to understand the potential effect of rT on clinical interventions. Second, because DRA produced more rapid and pronounced reductions in target behavior than NCR and because the persistence of target behavior during extinction was greater in the NCR than the DRA component, our findings support the use of DRA over NCR. In the applied literature there have been some direct comparisons of DRA and NCR for treating problem behavior. Hanley, Piazza, Fisher, Contrucci, and Maglieri (1997) and Kahng, Iwata, DeLeon, and Worsdell (1997) showed that both procedures produce substantial reductions in problem behavior but recipients of both treatments generally prefer DRA (Hanley et al., 1997; Luczynski & Hanley, 2009). In addition, problem behavior is more persistent when instances of this behavior closely precede an NCR-arranged reinforcer (DeLeon, Williams, Gregory, & Hagopian, 2005; Holden, 2005). The greater persistence of problem behavior in the current research during NCR is relevant because NCR has been found to interfere with reinforcement contingencies imposed with the purpose of establishing alternative responding (Goh, Iwata, & DeLeon, 2000). Thus, alternative responding may be difficult to establish when NCR is in place.

Despite the superior performance of DRA over NCR, there is the issue of persistence of problem behavior following DRA; for example, when alternative reinforcers are discontinued. One approach proposed by Mace and colleagues (2010) is to train the alternative response in a novel environmental context. In their study, when DRA was implemented in novel and familiar contexts there was less persistence in the novel context. Another approach would be to increase the behavioral persistence of reinforced alternative responding with additional extrinsic reinforcers. Additional research is warranted focusing on increasing the persistence of functional alternatives to problem behavior. It would also be helpful to clinical practice if techniques to reduce the impact of rT during treatment were identified.

The current research also has limitations. First and foremost, these two experiments did not include study of the actual treatment of socially maintained problem behavior. A natural extension would be to directly or systematically replicate these results with individuals who present with socially maintained problem behavior. It should also be noted that the nature of the analog intrinsic or automatic reinforcers differed across the two experiments: the analogs for Experiment 1 consisted of conditioned (or small-magnitude unconditioned) reinforcement closely associated with the primary reinforcer (food delivered via a hopper), while the analogs for Experiment 2 were conditioned reinforcers (small 0.5-s fireworks display) similar to other conditioned reinforcers (large 3-s fireworks display plus token). The degree to which sensory reinforcers resemble the primary reinforcer may be important, as one could argue that the increased persistence and variability of response rates observed here with rT may be the result of decreasing the discriminability of extinction with small-scale primary reinforcers. Finally, the intrinsic reinforcers were analogs rather than the sensory products of responding, and were presented intermittently rather than following each response, as might occur in a clinical setting. Further support for the present findings may be found when exposing automatically reinforced behavior (e.g., stereotypy) to NCR and DRA interventions in a similar manner to the current research.

Conclusion

This article began by suggesting that automatic, intrinsic, or sensory reinforcers that are not readily measured or controlled might summate with explicit socially mediated reinforcers in clinical settings to make problem behavior more persistent. From the perspective of behavioral momentum theory, all reinforcers that occur within a stimulus context increase the persistence of a target response in that context even if they are contingent on an alternative response. Here, the discontinuation of alternative reinforcers after a phase corresponding to treatment of analog problem behavior was arranged to test the persistence of treatment effects. Both pigeons and children with intellectual and developmental disabilities exhibited greater resistance to extinction in conditions where response-contingent analog sensory reinforcers were available than when they were absent (see Fig. 5 for summary). Therefore, to the extent that our analogs correspond functionally to the naturally occurring intrinsic consequences of problem behavior, such reinforcers may contribute to the persistence of problem behavior in clinical settings after seemingly effective treatment with explicit alternative reinforcement.

Acknowledgments

The research reported here was supported in part by the Eunice Kennedy Shriver National Institute of Child Health and Human Development under award number R01HD064576 to the University of New Hampshire and P30HD004147 to University of Massachusetts Medical School. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. These results were presented in part at the 39th Annual Convention for the Association for Behavior Analysis International in Minneapolis, May 2013. The authors thank the members of the USU Behavior Analysis Lab for their assistance in conducting this research.

Appendix

Mean response rates for individual sessions in Experiment 2, Phase I.

| Sess | JHG C1 | JHG C2 | JHG C3 | 391 C1 | 391 C2 | 391 C3 | 158 C1 | 158 C2 | 158 C3 | 2242 C1 | 2242 C2 | 2242 C3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 29.4 | 20.7 | 35.4 | 118.0 | 124.9 | 120.4 | 28.8 | 53.2 | 33.7 | 53.7 | 75.8 | 69.4 |
| 2 | 19.4 | 22.0 | 25.3 | 130.6 | 125.2 | 139.4 | 33.9 | 45.0 | 39.2 | 69.9 | 50.5 | 70.2 |
| 3 | 26.5 | 19.9 | 18.5 | 136.6 | 123.4 | 124.0 | 25.7 | 20.8 | 22.7 | 68.1 | 70.0 | 77.8 |
| 4 | 15.9 | 18.4 | 19.5 | 128.4 | 130.6 | 126.9 | 39.8 | 25.8 | 32.0 | 31.9 | 60.5 | 62.2 |
| 5 | 13.7 | 15.4 | 15.6 | 135.6 | 144.3 | 130.6 | 1.1 | 9.2 | 0.9 | 62.7 | 77.2 | 76.5 |
| 6 | 17.5 | 14.1 | 16.4 | 123.6 | 131.3 | 119.2 | 67.8 | 36.0 | 56.3 | 66.5 | 91.3 | 80.8 |
| 7 | 11.1 | 11.4 | 14.7 | 131.8 | 116.1 | 114.2 | 39.5 | 43.1 | 25.1 | 73.0 | 71.4 | 85.4 |
| 8 | 33.3 | 32.3 | 29.6 | 137.4 | 132.7 | 119.1 | 23.2 | 20.2 | 17.8 | 84.9 | 77.1 | 89.3 |
| 9 | 15.5 | 17.0 | 16.2 | 117.3 | 117.0 | 99.7 | 27.0 | 22.1 | 8.3 | 87.3 | 97.3 | 103.7 |
| 10 | 15.5 | 14.8 | 13.3 | 113.9 | 123.5 | 119.4 | 15.2 | 18.1 | 32.8 | 97.3 | 101.1 | 91.6 |
| 11 | 9.2 | 10.0 | 8.8 | 95.3 | 115.7 | 109.4 | 24.4 | 54.4 | 56.6 | 77.9 | 93.3 | 85.4 |
| 12 | 9.5 | 12.4 | 13.2 | 101.1 | 123.1 | 103.0 | 27.5 | 26.8 | 30.5 | | | |

| Sess | BHH C1 | BHH C2 | BHH C3 | 359 C1 | 359 C2 | 359 C3 | AEA C1 | AEA C2 | AEA C3 | 1659 C1 | 1659 C2 | 1659 C3 | 1664 C1 | 1664 C2 | 1664 C3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 48.1 | 58.7 | 55.5 | 17.1 | 11.6 | 19.9 | 28.3 | 7.7 | 19.9 | 21.6 | 15.5 | 20.6 | 83.8 | 81.8 | 86.0 |
| 2 | 36.3 | 27.0 | 26.0 | 14.4 | 2.5 | 7.4 | 15.8 | 30.3 | 15.7 | 30.9 | 29.2 | 30.6 | 77.3 | 70.8 | 60.8 |
| 3 | 64.4 | 62.0 | 50.1 | 9.6 | 14.6 | 13.5 | 29.6 | 13.9 | 22.9 | 5.4 | 8.6 | 9.1 | 66.1 | 58.3 | 71.5 |
| 4 | 27.2 | 29.2 | 38.4 | 2.5 | 4.4 | 4.9 | 11.8 | 35.9 | 45.7 | 17.5 | 17.6 | 9.4 | 54.8 | 45.3 | 59.5 |
| 5 | 37.3 | 40.9 | 26.5 | 4.5 | 0.5 | 4.1 | 16.5 | 6.4 | 1.5 | 14.8 | 7.7 | 19.4 | 35.6 | 30.9 | 26.7 |
| 6 | 31.9 | 39.1 | 28.3 | 2.6 | 3.8 | 2.6 | 32.2 | 26.7 | 23.9 | 14.1 | 6.7 | 15.7 | 54.6 | 56.2 | 54.8 |
| 7 | 26.6 | 25.7 | 22.2 | 0.8 | 1.0 | 6.6 | 13.3 | 7.5 | 3.8 | 14.6 | 15.2 | 18.4 | 71.8 | 73.6 | 65.7 |
| 8 | 12.6 | 16.3 | 14.9 | 0.5 | 0.2 | 8.1 | 0.9 | 5.8 | 9.1 | 6.0 | 3.4 | 12.5 | 66.5 | 76.5 | 73.9 |
| 9 | 26.4 | 20.4 | 17.2 | 16.6 | 16.0 | 11.8 | 8.7 | 18.8 | 0.0 | 9.2 | 6.2 | 13.9 | 57.2 | 70.9 | 45.5 |
| 10 | 25.7 | 26.7 | 18.9 | 1.0 | 7.9 | 0.2 | 0.0 | 2.1 | 5.3 | 6.9 | 8.6 | 13.1 | 83.8 | 83.5 | 80.8 |
| 11 | 13.6 | 31.3 | 10.3 | 19.2 | 8.1 | 9.2 | 3.0 | 10.1 | 14.4 | 4.3 | 1.4 | 4.7 | 66.8 | 71.8 | 70.9 |
| 12 | 16.2 | 18.9 | 19.1 | 5.8 | 2.7 | 5.8 | 2.8 | 11.6 | 6.5 | 7.0 | 5.2 | 2.8 | 58.9 | 51.8 | 76.8 |

References

- Ahearn WH, Clark K, Gardenier N, Chung B, Dube WV. Persistence of automatically reinforced stereotypy: Examining the effects of external reinforcers. Special Issue on Translational Research - Journal of Applied Behavior Analysis. 2003;36:439–448. doi: 10.1901/jaba.2003.36-439. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carr JE, Severtson JM, Lepper TL. Noncontingent reinforcement is an empirically supported treatment for problem behavior exhibited by individuals with developmental disabilities. Research in Developmental Disabilities. 2009;30(1):44–57. doi: 10.1016/j.ridd.2008.03.002. [DOI] [PubMed] [Google Scholar]

- Cohen SL. Behavioral momentum of typing behavior in college students. Journal of Behavior Analysis and Therapy. 1996;1:36–51. [Google Scholar]

- DeLeon IG, Williams DC, Gregory MK, Hagopian LP. Unexamined potential effects of the noncontingent delivery of reinforcers. European Journal of Behavior Analysis. 2005;6:57–71. [Google Scholar]

- Dube WV, McIlvane WJ, Mazzitelli K, McNamara B. Reinforcer rate effects and behavioral momentum in individuals with developmental disabilities. American Journal of Mental Retardation. 2003;108:134–143. doi: 10.1352/0895-8017(2003)108<0134:RREABM>2.0.CO;2. [DOI] [PubMed] [Google Scholar]

- Dunn LM, Dunn DM Pearson Assessments. PPVT-4: Peabody picture vocabulary test. Minneapolis, MN: Pearson Assessments; 2007. [Google Scholar]

- Goh HL, Iwata BA, DeLeon IG. Competition between noncontingent and contingent reinforcement schedules during response acquisition. Journal of Applied Behavior Analysis. 2000;33(2):195–205. doi: 10.1901/jaba.2000.33-195. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grimes JA, Shull RL. Response-independent milk delivery enhances persistence of pellet-reinforced lever pressing by rats. Journal of the Experimental Analysis of Behavior. 2001;76:179–194. doi: 10.1901/jeab.2001.76-179. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hanley GP, Iwata BA, McCord BE. Functional analysis of problem behavior: A review. Journal of Applied Behavior Analysis. 2003;36:147–185. doi: 10.1901/jaba.2003.36-147. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hanley GP, Piazza CC, Fisher WW, Contrucci SA, Maglieri KM. Evaluation of client preference for function-based treatments. Journal of Applied Behavior Analysis. 1997;30:459–473. doi: 10.1901/jaba.1997.30-459. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Harper DN. Drug-induced changes in responding are dependent upon baseline stimulus-reinforcer contingencies. Psychobiology. 1999;27:95–104. [Google Scholar]

- Holden B. Noncontingent reinforcement: An introduction. European Journal of Behavior Analysis. 2005;6:1–8. [Google Scholar]

- Igaki T, Sakagami T. Resistance to change in goldfish. Behavioural Processes. 2004;66:139–152. doi: 10.1016/j.beproc.2004.01.009. [DOI] [PubMed] [Google Scholar]

- Iwata BA, Dorsey MF, Slifer KJ, Bauman KE, Richman GS. Toward a functional analysis of self-injury. Journal of Applied Behavior Analysis. 1994;27:197–209. doi: 10.1901/jaba.1994.27-197. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kahng S, Iwata BA, DeLeon IG, Worsdell AS. Evaluation of the “control over reinforcement” component in functional communication training. Journal of Applied Behavior Analysis. 1997;30:267–277. doi: 10.1901/jaba.1997.30-267. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kahng SW, Iwata BA, Thompson RH, Hanley GP. A method for identifying satiation versus extinction effects under noncontingent reinforcement schedules. Journal of Applied Behavior Analysis. 2000;33:419–432. doi: 10.1901/jaba.2000.33-419. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lieving GA, DeLeon IG, Carreau-Webster AB, Frank-Crawford MA, Triggs MM. Additional free reinforcers increase persistence of problem behavior in a clinical context: A partial replication of laboratory findings. Journal of the Experimental Analysis of Behavior. in press doi: 10.1002/jeab.310. [DOI] [PubMed] [Google Scholar]

- Luczynski KC, Hanley GP. Do children prefer contingencies? An evaluation of the efficacy of and preference for contingent versus noncontingent social reinforcement during play. Journal of Applied Behavior Analysis. 2009;42:511–525. doi: 10.1901/jaba.2009.42-511. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mace FC, Lalli JS, Shea MC, Pinter Lalli E, West BJ, Nevin JA. The momentum of human behavior in a natural setting. Journal of the Experimental Analysis of Behavior. 1990;54:163–172. doi: 10.1901/jeab.1990.54-163. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mace FC, McComas JJ, Mauro BC, Progar PR, Taylor B, Ervin R, Zangrillo AN. Differential reinforcement of alternative behavior increases resistance to extinction: Clinical demonstration, animal modeling, and clinical test of one solution. Journal of the Experimental Analysis of Behavior. 2010;93:349–367. doi: 10.1901/jeab.2010.93-349. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McLean AP, Grace RC, Nevin JA. Response strength in extreme multiple schedules. Journal of the Experimental Analysis of Behavior. 2012;97:51–70. doi: 10.1901/jeab.2012.97-51. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nevin JA, Grace RC. Behavioral momentum and the Law of Effect. Behavioral and Brain Sciences. 2000;23:73–130. doi: 10.1017/s0140525x00002405. [DOI] [PubMed] [Google Scholar]

- Nevin JA, Shahan TA. Behavioral momentum theory: Equations and applications. Journal of Applied Behavior Analysis. 2011;44:877–895. doi: 10.1901/jaba.2011.44-877. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nevin JA, Tota ME, Torquato RD, Shull RL. Alternative reinforcement increases resistance to change: Pavlovian or operant contingencies? Journal of the Experimental Analysis of Behavior. 1990;53:359–379. doi: 10.1901/jeab.1990.53-359. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pelios L, Morren J, Tesch D, Axelrod S. The impact of functional analysis methodology on treatment choice for self-injurious and aggressive behavior. Journal of Applied Behavior Analysis. 1999;32:185–195. doi: 10.1901/jaba.1999.32-185.

- Petscher ES, Rey C, Bailey JS. A review of empirical support for differential reinforcement of alternative behavior. Research in Developmental Disabilities. 2009;30:409–425. doi: 10.1016/j.ridd.2008.08.008.

- Pyszczynski AD, Shahan TA. Behavioral momentum and relapse of ethanol seeking: Nondrug reinforcement in a context increases relative reinstatement. Behavioural Pharmacology. 2011;22:81–86. doi: 10.1097/FBP.0b013e328341e9fb.

- Shahan TA, Burke KA. Ethanol-maintained responding of rats is more resistant to change in a context with added non-drug reinforcement. Behavioural Pharmacology. 2004;15:279–285. doi: 10.1097/01.fbp.0000135706.93950.1a.

- Shahan TA, Sweeney MM. A model of resurgence based on behavioral momentum theory. Journal of the Experimental Analysis of Behavior. 2011;95(1):91–108. doi: 10.1901/jeab.2011.95-91.

- Sweeney MM, Shahan TA. Testing a model of resurgence: Time in extinction and repeated resurgence tests. Learning & Behavior. 2013;41(4):414–424. doi: 10.3758/s13420-013-0116-8.

- Vaughan ME, Michael JL. Automatic reinforcement: An important but ignored concept. Behaviorism. 1982;10:217–228.

- Vollmer TR. The concept of automatic reinforcement: Implications for behavioral research in developmental disabilities. Research in Developmental Disabilities. 1994;15(3):187–207. doi: 10.1016/0891-4222(94)90011-6.

- Wacker DP, Harding JW, Berg WK, Lee JF, Schieltz KM, Padilla YC, Shahan TA. An evaluation of persistence of treatment effects during long-term treatment of destructive behavior. Journal of the Experimental Analysis of Behavior. 2011;96:261–282. doi: 10.1901/jeab.2011.96-261.
