Abstract

How do we apply learning from one situation to a similar, but not identical, situation? The principles governing the extent to which animals and humans generalize what they have learned about certain stimuli to novel compounds containing those stimuli vary depending on a number of factors. Perhaps the best studied among these factors is the type of stimuli used to generate compounds. One prominent hypothesis is that different generalization principles apply depending on whether the stimuli in a compound are similar or dissimilar to each other. However, the results of many experiments cannot be explained by this hypothesis. Here we propose a rational Bayesian theory of compound generalization that uses the notion of consequential regions, first developed in the context of rational theories of multidimensional generalization, to explain the effects of stimulus factors on compound generalization. The model explains a large number of results from the compound generalization literature, including the influence of stimulus modality and spatial contiguity on the summation effect, the lack of influence of stimulus factors on summation with a recovered inhibitor, the effect of spatial position of stimuli on the blocking effect, the asymmetrical generalization decrement in overshadowing and external inhibition, and the conditions leading to a reliable external inhibition effect. By integrating rational theories of compound and dimensional generalization, our model provides the first comprehensive computational account of the effects of stimulus factors on compound generalization, including spatial and temporal contiguity between components, which have posed longstanding problems for rational theories of associative and causal learning.

Imagine choosing the destination of your next vacation. You love large cities, but also enjoy beaches. Would you predict even more pleasure from going to a large city near a beach? In contrast, suppose that you want to invest in the stock market, and you read in two different financial newspapers that a certain stock is predicted to rise 10-15% over the next year. In the past, the predictions from each newspaper have been accurate and you trust both of them. Would you predict a higher profit given the two sources of information, as compared to one source? And would this change if you knew that the two newspapers base their predictions on different market variables?

When confronted with combinations of stimuli that are predictive of an outcome, why do we summate predictions for outcomes in some cases (e.g., predictions for enjoyment from the city and from the beach), but average predictions in other cases (e.g., the stock market)? What factors affect how we combine the effects of multiple stimuli, and how does the similarity between different stimuli (two financial newspapers that use the same vs. different variables for their analyses) affect our tendency to summate predictions?

These questions are important not only to vacation planners and stock market investors, as they represent instantiations of a general problem in daily life: although our environment is complex and multidimensional, we naturally try to isolate what elements in a certain situation were predictive of consequences such as pleasure or pain. We then have to combine these learned predictions anew each time we are faced with a different combination of the elements. In essence, this is a problem of generalization: how do we apply learning from one situation to another that is not identical?

For psychologists studying learning, this question is fundamental: we may understand how animals and humans learn to associate simple stimuli such as lights and tones with rewards, but without understanding the principles that determine generalization across compound stimuli in associative and causal learning tasks, we will not be able to explain anything but the simplest laboratory experiment. Not surprisingly, this problem of compound generalization has been the focus of one of the most active areas of research in the psychology of learning for the past 20 years.

Two types of explanations, mechanistic and rational, have been proposed for compound generalization phenomena. Mechanistic explanations explicitly propose representations and processes that would underlie the way in which an agent learns and behaves. Rational explanations (also called normative or computational; Anderson, 1990; Marr, 1982) formalize the task and goals of the agent, and derive the optimal rules of behavior under such circumstances. Although sometimes viewed as mutually exclusive, these two types of explanations can provide complementary accounts of behavior (Marr. 1982).

Most recent research on compound generalization has been motivated by a controversy between two types of mechanistic theory: configural and elemental models. These models agree in that they represent knowledge about the environment in the form of associations (e.g., an association between beaches and enjoyment and between large cities and enjoyment), but they disagree on how the stimuli are represented when they are presented in a compound (e.g., the large-city-on-the-water compound), and thus on how the compound can be associated with a predicted outcome.

Elemental theories, such as the Rescorla-Wagner model (Rescorla & Wagner, 1972), propose that associations with an outcome are acquired and expressed separately by each of the elements in a compound. In the Rescorla-Wagner model, the associative strength of a compound is equal to the algebraic sum of the associative strength of its components. For example, the model would predict that since beaches and cities were each independently associated with enjoyable vacations in the past, compounding the two should be expected to double the pleasure; that is, the model predicts a “summation” effect.

In contrast, configural theories, such as Pearce's (1987; 1994; 2002) model, propose that associations with an outcome are acquired and expressed by entire stimulus configurations. In Pearce's model, generalization from one configuration to another depends on the components shared by the configurations. In particular, generalization strength is computed according to the proportion of elements from the trained configuration that are present in the new configuration multiplied by the proportion that these shared elements comprise of in the new configuration. According to this theory, if cities and beaches are each independently associated with enjoyment, then a vacation in a city by the beach should be expected to yield 50% of the enjoyment expected from a big city (as 100% of the components of the trained ‘big city’ stimulus are present in the compound, but they comprise only 50% of the compound), plus 50% of the enjoyment expected when vacationing at a beach; that is, the model predicts an “averaging” effect instead of summation.

The most important difference between elemental and configural theories is not so much the type of representation that they propose (both theories require some form of configural and elemental representation to work), but the principles of generalization that they implement. Configural theories predict less generalization across compounds sharing a given number of elements than do elemental theories.

What generalization principles do animals and humans use in compound generalization tasks? As suggested by the examples above, the answer is that it depends. Both humans and animals seem to use different generalization principles depending on a number of factors, including the type of stimuli used to form compounds and the structure of tasks which they have previously experienced (reviewed in Melchers, Shanks, & Lachnit, 2008; Wagner, 2003, 2007).

Among all the factors known to affect compound generalization, one has attracted the most attention in the field: the type of stimuli used to create compounds. For example, animal associative learning studies have found that a summation effect is easily observed with components that belong to different sensory modalities, but not with those belonging to the same modality (Kehoe, A. J. Horne, P. S. Horne, & Macrae, 1994). Similar effects have been observed using other generalization tests and discrimination designs (Wagner, 2003, 2007), and it is now generally accepted that many contradictory results in the literature can be explained as a function of the type of stimuli used in each study.

More generally, many authors (Harris, 2006; Kehoe et al., 1994; Myers, Vogel, Shin, & Wagner, 2001; Wagner, 2003, 2007) hypothesize that elemental processing, like that proposed by the Rescorla-Wagner model, should occur more easily with stimuli that are very dissimilar, such as stimuli coming from different modalities. On the other hand, configural processing, like that proposed by Pearce's configural theory, should occur with similar stimuli, such as those coming from the same modality. A number of flexible models, which can act as configural or elemental theories depending on changes in their free parameters (Harris, 2006; Kinder & Lachnit, 2003; McLaren & Mackintosh, 2002; Wagner, 2003, 2007), can implement this similarity hypothesis by changing parameter values as a function of stimulus similarity.

The similarity hypothesis makes intuitive sense, because a group of similar stimuli would be more easily processed as parts of a configuration which predicts one shared outcome (two newspapers predicting a single outcome of stock market value), whereas a group of disparate stimuli (beach, city) would be more easily processed as independent entities that each predict its own outcome.

Although there is evidence in line with the similarity hypothesis (reviewed by Melchers et al., 2008; Wagner, 2003, 2007), there are also examples of compound generalization effects that are not modulated by type of stimuli (e.g., Pearce & Wilson, 1991) and compound generalization effects that show more “configural” processing with more dissimilar stimuli (e.g., Gonzalez, Quinn, & Fanselow, 2003). Also, other stimulus factors besides similarity affect elemental/configural processing in similar ways. For example, both spatial and temporal contiguity seem to foster configural encoding of stimuli, while spatial and temporal separation fosters elemental encoding (Glautier, 2002; Livesey & Boakes, 2004; Martin & Levey, 1991; Rescorla & Coldwell, 1995).

Thus, similarity between elements is not truly a unifying principle that explains the effect of stimulus factors in compound generalization. One approach to discovering a unifying principle is to formalize a model of the computational task that a learner is faced with in compound generalization situations. Such a theory would generate predictions about how a rational agent should act. Knowledge about why some specific circumstances foster the use of a particular strategy might enlighten the search for mechanisms to explain how this happens.

The motivation for the present work is to develop such rational theory of compound generalization. In the following sections, we first briefly review the literature on rational theories of compound generalization and on rational theories of dimensional generalization. We then propose a new model that combines concepts from both types of theory, and can explain compound generalization phenomena through rational principles of dimensional generalization. We show that this proposed model can explain both data for which mechanistic explanations already exist (e.g., the similarity hypothesis) and data that cannot be explained by current mechanistic models. Importantly, as a rational model, our model suggests a new conceptualization of the principles underlying compound generalization in causal learning.

Rational theories of compound generalization

Two types of rational models have been proposed that are applicable to compound generalization in causal and associative learning tasks. A large class of models, most of them proposed in the field of human causal and contingency learning (e.g., Cheng, 1997; Dayan, Kakade, & Montague, 2000; Griffiths & Tenenbaum, 2005, 2007; Kakade & Dayan, 2002; Lu, Yuille, Liljeholm, Cheng, & Holyoak, 2008; Novick & Cheng, 2004), assume that observable stimuli can directly cause an outcome. According to these models, the task of the learner is to infer the strength of the causal relations between stimuli and outcomes, which determine the probability distribution of the outcome conditional on the presence or absence of the stimuli. Work using these models has focused largely on the problem of how people learn estimates of causal strength, but has ignored the issue of compound generalization. As a result, we know little about the ability of these models to explain most generalization phenomena.

In contrast, generative models are a class of models that define a causal structure that is presumed to generate the observable events in the world. These models propose that all observable events, that is, both the stimuli and the outcomes are generated by latent (unobserved) causes. Intuitively, the distinction between observable events (stimuli and outcomes) and latent causes is similar to the distinction between the symptoms of a disease and the virus causing the disease. Imagine that you wake up one morning with a sore throat. Later in the day, you also start coughing and get a fever. Instead of inferring that your sore throat caused your coughing and fever, you immediately realize that there is an unobserved cause for all these symptoms: you caught a cold. In this example, you have learned about a latent cause (the cold virus) by making inferences from observable events (your symptoms).

Thus, the task of the learner according to the generative modeling perspective is to infer the latent causes responsible for generating observable variables. One model in this tradition, due to Courville and colleagues (Courville, 2006; Courville, Daw, & Touretzky, 2002) is able to explain a number of compound generalization phenomena in Pavlovian conditioning (Pavlov, 1927).

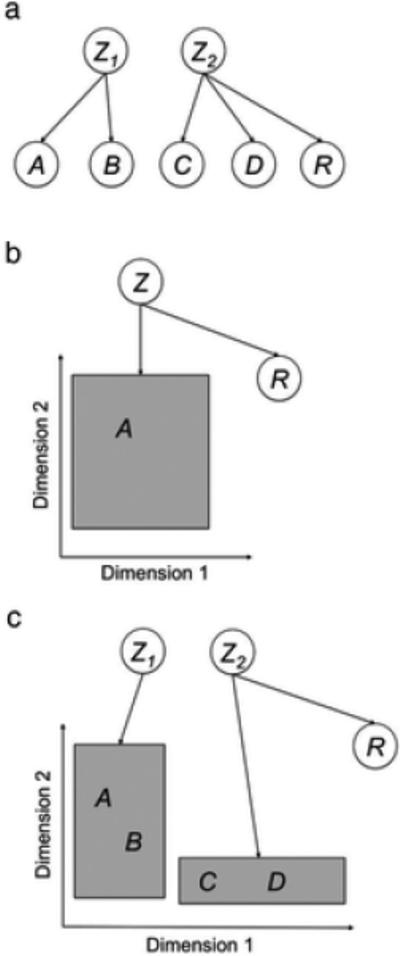

Figure 1a shows a schematic representation of the latent cause model of Courville and colleagues. Each circle represents a different variable, either a latent cause (represented by the letter Z), an observable stimulus (represented by letters A-D), or an observable outcome (represented by the letter R for reward). Arrows represents causal links between latent causes and observable events. There is a weight associated with each of these links, and the probability that a stimulus or outcome is observed when the latent cause is active is a function of those weights. For simplicity, assume that all links in Figure 1a are positive and strong, so that if a latent cause is present, it generates a linked observable variable with very high probability. In this particular example, whenever the latent cause Z1 is active it generates two stimuli, A and B, but no outcome due to the absence of a link from Z1 to R. Whenever latent cause Z2 is active, it generates two stimuli, C and D, and also an outcome.

Figure 1.

Schematic representations of the most important rational models discussed here. In each model, latent causes (Z) are assumed to generate observable stimuli (A-D) and outcomes (R). This generative process is represented by arrows. In the latent causes model of Courville and colleagues (panel a; Courville, Daw & Touretzky, 2002), one or more latent causes can produce one or more stimuli. In the rational theory of dimensional generalization (panel b), a single latent cause produces a single stimulus with values in a number of stimulus dimensions. In our model (panel c), one or more latent cause can produce one or more stimuli with values in a number of stimulus dimensions.

In order to predict the observable events (and specifically, the outcomes), the learner must infer both which latent causes are active in each trial, and the links between each latent cause and observable stimuli, from the (observable) training data alone. When a new stimulus compound is presented during a generalization test, the previously inferred knowledge allows the animal to estimate the probability of an outcome given the observed stimulus compound. This is done by inferring what latent causes are more likely to be active, given the observed stimulus configuration, and what is the probability of an outcome given these latent causes.

This latent cause model is able to explain a number of basic phenomena of compound generalization (Courville et al., 2002). Consider a summation effect, in which A and B are separately paired with an outcome, and presentation of AB leads to a larger response than presentation of A or B. The model can explain some forms of summation by assuming learning of the following structure: one latent cause, Z1, produces A with high likelihood and the outcome with medium likelihood, and a second latent cause, Z2, produces B with high likelihood and the outcome with medium likelihood. When the learner is presented with AB, she infers that both Z1 and Z2 must be active and thus the likelihood of the outcome being produced is higher than when only one of them is active.

This model, however, cannot explain why different stimuli can lead to different predictions (summation versus averaging) in this same experimental design. Furthermore, because in Courville et al.'s (2002) model the outcome is a binary variable, the only thing that can be estimated during compound generalization is probability of the outcome's occurrence. As such, the model cannot explain summation for two 100% reliable stimuli, that is, the observation that sometimes animals respond more to a compound of two stimuli that each reliably predict an outcome than to each stimulus alone (e.g., Kehoe, A. J. Horne, P. S. Horne, & Macrae, 1994; Collins & Shanks, 2006; Rescorla, 1997; Rescorla & Coldwell, 1995; Soto, Vogel, Castillo & Wagner, 2009; Whitlow & Wagner, 1972). As Courville et al. (2002) admit, it seems likely that the summation effect is concerned with the estimation of expected outcome magnitude and not just its probability.

More recently, Gershman, Blei and Niv (2010) used a generative model to explain results from extinction procedures. In extinction, a stimulus that was previously paired with an outcome is repeatedly presented without the outcome, until the learner eventually stops responding as if the stimulus predicts the outcome. Interestingly, this ‘extinction learning’ is fragile, with various manipulations showing that the prediction of the outcome is but dormant, and can be revived. To explain this, Gershman et al. (2010) suggested that the learner attributes training trials and extinction trials to separate latent causes. Thus manipulations that promote inference of the existence of the latent cause that was active at training, will lead to renewed outcome predictions.

An important advance introduced by this model was the inclusion of an infinite-capacity distribution from which latent causes are sampled on each trial. Such a distribution allows the learner to add new latent causes as needed, meaning that the number of possible latent causes need not be determined in advance. However, the distribution over latent causes in this model allows for only one latent cause to be active on each trial, and thus cannot account for compound generalization effects, as Courville et al.'s model does.

How could the class of rational theories be extended to account for the effect of stimulus factors on compound generalization? One way, which we explore here, is by integrating Courville et al.'s latent causes theory of compound generalization with the rational theory of dimensional generalization (Navarro, 2006; Navarro, Lee, Dry, & Schultz, 2008; Shepard, 1987; Tenenbaum & Griffiths, 2001a, 2001b) which we detail in the next section.

The rational theory of dimensional generalization

Dimensional generalization refers to the finding that if a stimulus controlling a response is changed in an orderly fashion along an arbitrarily chosen physical dimension (e.g., color, size), then the probability of the response decreases in an orderly monotonic fashion as the difference between the training stimulus and the testing stimulus increases (Guttman & Kalish, 1956).

Despite the robustness of this finding, the exact shape of the function relating response probability and changes in the relevant physical dimension tends to vary, depending on the choice of sensory continuum, species, training conditions, and even the particular dimensional value that originally controls the response (Shepard, 1965, 1987). Roger Shepard (1965) provided a solution to this problem based on the idea that there is a non-arbitrary transformation of the dimensional scale that makes generalization gradients that were obtained for the same physical dimension (each time using a different value as the rewarded stimulus) assume the same shape.

If such a transformation is found, then the re-scaled dimensional values represent the distance between stimuli, not measured on a physical scale, but in “psychological space.” Work with such a scaling procedure, and later work with multidimensional scaling, has shown that stimulus generalization follows an exponential-decay function of psychological distance for a variety of stimulus dimensions, training conditions, and species (Shepard, 1987).

To explain the shape of the generalization function, Shepard (1987) proposed that when an animal encounters a stimulus S1 followed by some significant consequence, S1 is represented as a point in a psychological space. The animal assumes that any such stimulus is a member of a natural class associated with the consequence. This class occupies a region in the animal's psychological space, called a consequential region. The only information that the animal has about this consequential region is that it overlaps with S1 in psychological space. If the animal encounters a new stimulus, S2, the inferential problem that it faces is to determine the probability that S2 belongs to the same natural kind as S1—the same consequential region—thus leading to the same consequence.

Assuming that the consequential region is connected and centrally symmetric, it is possible to compute the probability that a consequential region overlapping S1 would also overlap S2, given a particular size of the consequential region. Because this size is unknown, Shepard proposed putting a prior over this parameter and integrating over all possible sizes to obtain the probability that S2 falls in the consequential region, given that S1 does. Importantly, Shepard showed that, regardless of the choice of the prior over size, this probability falls approximately exponentially with distance between S1 and S2 in psychological space.

In Shepard's theory, observed stimuli and consequential regions are sampled independently. Tenenbaum and Griffiths (2001a) replaced this with the assumption that observed stimuli are directly sampled from all possible values of the consequential region, incorporating consequential regions into the generative model that produces observable data in a task. Under this assumption, consequential regions act as latent causes that produce observed stimuli. This is schematically represented in Figure 1b, where latent cause Z is linked not to discrete stimuli, as in Figure 1a, but to a whole region in stimulus space. The latent cause is also linked to some significant consequence, represented by the variable R. In this model, the learner knows that stimulus A belongs to a consequential region linked to R, but it doesn't know the size or location of the region. The inferential task is to determine the probability that a new stimulus was also caused by Z, depending on its position in stimulus space.

An important consequence of sampling stimuli from the consequential regions is that the likelihood of any particular stimulus is higher for smaller, more precise consequential regions, what Tenenbaum and Griffiths named the size principle. As a result, as more stimuli with similar values in a dimension are observed to lead to a particular consequence, the learner will tend to infer smaller sizes for the consequential region, and generalization to values outside the observed range will decrease. Tenenbaum & Griffiths (2001a, 2001b) review evidence from the literature on concept and word learning in human adults and children that agrees with this prediction (see also Navarro, Dry, & Lee, 2012; Navarro & Perfors, 2010; Xu & Tenenbaum, 2007).

Some evidence suggests that the extent to which people use the size principle during generalization is variable and might depend on factors such as the specific task to which they are exposed and their previous knowledge about the task (Navarro, Dry, & Lee, 2012; Navarro, Lee, Dry, & Schultz, 2008; Tenenbaum & Griffiths, 2001b; Xu & Tenenbaum, 2007). However, it is likely that the assumption that stimuli are sampled directly from consequential regions is a good approximation to the processes generating observations in a number of environmental settings, which has led to the proposal that the size principle could be considered a “cognitive universal” (Tenenbaum & Griffiths, 2001a), holding across a number of cognitive tasks and domains.

More recently, Navarro (2006) has extended the rational theory of dimensional generalization to explain some stimulus categorization phenomena. In Navarro's model, each category is composed of a number of subtypes, each associated with a particular consequential region. This structure is proposed to implement the fact that complex natural categories are likely composed of objects with disparate sensory features, thus consisting of more than one consequential region. Navarro has shown how this rational model can explain typicality and selective attention effects in categorization.

In the following section, we present a latent cause model of compound generalization that incorporates central ideas from the rational theory of dimensional generalization developed by Shepard and others. We will see that the concept of consequential regions addresses the shortcomings of earlier latent cause models, enabling it to explain the effect of stimulus factors on compound generalization.

The Model

Generative Model

We assume that the task of the animal during an associative learning situation, and the task of humans in a causal learning or contingency learning experiment, is to infer the latent causes that have produced observable stimuli and an outcome.

More specifically, we assume a generative model of the observed stimuli in which each latent cause is linked to a consequential region (see Figure 1c), from which stimuli are sampled. Each latent cause also generates an outcome with a specific magnitude (which could be zero) and valence (positive or negative). Thus, in our model stimulus values are generated as in the rational theory of dimensional generalization (Figure 1b). However, several latent causes can be active in any given trial and each of them can generate any number of observable stimuli, as in the latent causes theory of Courville and colleagues (Figure 1a).

If the learner could infer the latent causes that produce each particular configuration of stimuli and outcome value, this knowledge would allow solving two more specific inferential tasks. First, given the observation of a number of stimuli, it would be possible to predict future outcome values. Second, given that inferences about outcomes are based on the presence or absence of latent causes and not the observable stimuli produced by those causes, any learning about a specific latent cause would automatically generalize to other stimuli produced by the same cause.

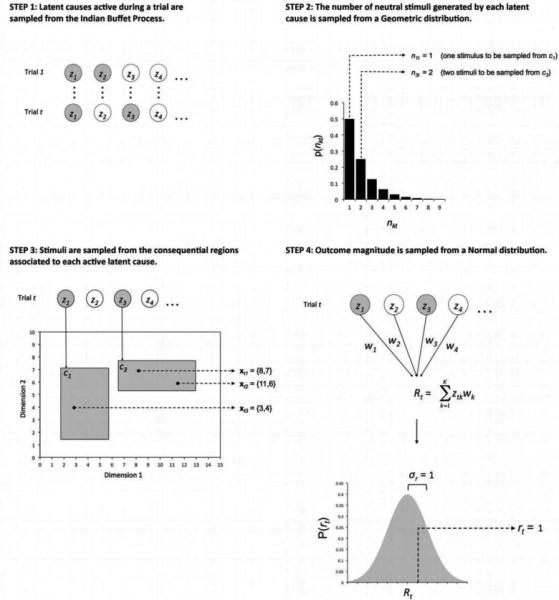

Figure 2 shows a schematic representation of the generative process implemented in our model. The information that the learner observes on each trial t is: (1) a number of stimuli (indexed by i) observed at the beginning of trial t, each described by a vector xti = {xti1, ..., xtiJ} of J continuous variables or stimulus dimensions, and (2) a scalar rt representing the magnitude of the outcome occurring at the end of trial t. The generative process that produces these data is as follows. On each trial, the process starts (Step 1 in Figure 2) by sampling active latent causes from a distribution (the Indian Buffet Process, explained in detail below). In Figure 2, latent causes are represented through nodes labeled z1, z2,..., zk, and shaded nodes represent latent causes active during a trial (in the example, latent causes z1 and z3 are active during trial t). Each of the active latent causes can generate a number of observable stimuli, with the number sampled from a geometric distribution (Step 2 in Figure 2). In the example shown in Figure 2, in trial t latent cause z1 generates one stimulus (n1t = 1) and latent cause z3 generates two stimuli (n3t=2). Each of the stimuli has a value on each of a number of dimensions; such dimensional values are sampled from a consequential region ck associated with the latent cause (Step 3 in Figure 2). The consequential regions, represented by shaded rectangles in Step 3 of Figure 2, determine all possible values that a stimulus can have along dimensions 1, 2, ..., J. In this example, the number of dimensions is two, the stimulus produced by latent cause z1 has values {3,4} in these dimensions, whereas the two stimuli produced by latent cause z3 have values {8,7} and {11,6}. Finally, all latent causes together produce an outcome with magnitude rt (Step 4 in Figure 2). Each latent cause is associated with a single weight parameter wk. The total outcome magnitude observed is sampled from a normal distribution with mean equal to the sum of the weights of all active latent causes. In the example shown in Figure 2, the final sampled value of outcome magnitude is 1. Our model thus differs from most previous rational models of associative and causal learning, in that it assumes that the outcome can vary in magnitude from trial to trial, instead of simply being present or absent. The following sections describe in more detail each of the steps in this generative process.

Figure 2.

A schematic representation of the generative process assumed by the model. In trial t of a compound generalization experiment, each active (shaded) latent cause zk for k=1, 2,...K (Step 1) generates a number nkt of observed stimuli (Step 2). The values of each of these stimuli on several dimensions are sampled from a consequential region ck associated with the latent cause (Step 3). The active latent causes also produce an outcome with magnitude rt, which is sampled from a normal distribution around the sum of weights associated with all active latent causes (Step 4).

Latent Causes

Latent causes in this model (see Step 1 in Figure 2) are defined as binary variables with zkt=1 if the kth cause is active on trial t, and zkt=0 if the cause is not active. One can think of all the latent causes in the experiment as a matrix Z with K columns representing latent causes and T rows representing experimental trials. The choice of distribution on Z should implement the assumption that several latent causes can be present during a single trial and independently produce the observed stimuli. This is the assumption that allowed Courville et al. (2005) to explain basic phenomena of compound generalization, such as summation. On the other hand, the number of causes that could possibly be present (K) is not known in advance, as in the model presented by Gershman et al. (2010). Following earlier work on models with simultaneously active latent causes of a priori unknown number (e.g., Austerweil & Griffiths, 2011; Navarro & Griffiths, 2008), we use the Indian Buffet Process (IBP; see Griffiths & Ghahramani, 2011) as an infinite-capacity distribution on Z:

| (1) |

The IBP generates sparse matrices of zeros and ones with an infinite number of latent causes (columns) and a limited number of experimental trials (rows). Although there are an infinite number of columns in a matrix produced by the IBP, the matrix becomes sparse as K→∞, with most columns completely filled with zeroes (that is, most latent causes are never active and thus can be ignored). For a more complete description of the IBP, see Appendix A. For a tutorial introduction to Bayesian nonparametric models, including the IBP, see Gershman & Blei (2012).

The IBP has a single free parameter, α, that governs the number of different latent causes that will be active in an experiment with a given length. The value of α was fixed to 5 in all the simulations reported here.

Generation of Stimuli

The observation of a compound of stimuli in trial t is represented by N vectors xti, each describing a single discrete stimulus in a continuous multidimensional stimulus space. Following previous work (Navarro, 2006; Navarro & Perfors, 2009; Shepard, 1987; Tenenbaum & Griffiths, 2001a), we assume that each latent cause zk is associated with a consequential region ck in the stimulus space, which determines the range of possible observations xi that can be produced by that latent cause. Each region ck is assumed to be an axis-aligned hyperrectangle parameterized by two vectors of variables θk={mk,sk}. The position parameter vector mk determines the center of the region in the stimulus space in each of the dimensions, whereas the size parameter vector sk determines the extent of the region on each dimension of the space. Step 3 in Figure 2 shows a schematic representation of a set of latent causes and their associated consequential regions in a two-dimensional space.

Unlike other models involving consequential regions, in which a single stimulus is sampled in each trial, our model requires a way to implement the assumption—from Courville et al.'s latent cause theory—that a single latent cause can generate any number of observable stimuli within a single trial (see Figure 1a). This assumption was implemented by setting a distribution on the number of observable stimuli sampled from each active consequential region.

The generative process by which stimuli are produced on each trial is the following. First, for each active latent cause, it is determined whether or not the cause generates stimuli during this trial, through draws from a Bernoulli distribution. The Bernoulli distribution parameter λ was fixed to .99 in all our simulations to permit a small, non-zero probability that an active latent cause does not generate any observable stimuli on a particular trial. This simplifies inference in the model, as discussed below and in Appendix B. Second, for each latent cause that generates stimuli, a number of observations, nkt, is sampled from a geometric distribution with parameter π (fixed to .9 in all our simulations, giving high prior probability for each latent cause to produce only a small number of stimuli). That is, the probability of sampling nkt stimuli from region ck on trial t is given by:

| (2) |

The geometric distribution has the desirable property of allowing any number of stimuli to be sampled, while favoring a small number. This biases the model to infer a larger number of active latent causes as the number of observed stimuli grows, rather than infer that a small number of latent causes each generate many different stimuli.

In the final step of the generative process, nkt stimuli (values of x) are sampled from the consequential region k. The distribution of observations xti on the consequential region ck is uniform along each jth dimension:

| (3) |

Given that the consequential regions are shaped as hyperrectangles with size sj in dimension j, then the probability density of sampling any particular stimulus xti from region ck is equal to:

| (4) |

for all xti that fall within the consequential region, and 0 otherwise, where skj is the length of region ck on dimension j.

For inference purposes, we will need to evaluate how likely a set of specific stimuli are, given a specific configuration of active latent causes and their consequential regions. If we knew exactly what active consequential regions have generated each stimulus, we could use equations 2 and 4 (and the probability λ of each latent cause generating observations) to compute the likelihood of the stimuli presented during trial t:

| (5) |

However, it is not always possible to know which consequential regions have generated each stimulus on trial t. For example, if a particular stimulus lands within the area in which two consequential regions overlap, the likelihood will usually differ depending on whether the stimulus was generated by one consequential region or the other. In cases such as this, we have a number Y of possible assignments y of stimuli to consequential regions. Given that Y is a small number in all the situations that will be of interest here, it is possible to compute the likelihood of xt: by marginalizing over all possible assignments:

| (6) |

If we assume that all assignments have equal prior probability, then we have:

| (7) |

where nkt|y is the value of nkt for assignment y. Finally, assuming that xt: is sampled independently for different trials, the likelihood term for the whole set of observations X is the product of the likelihood of the observations in each individual trial:

| (8) |

Prior on the size and location of consequential regions

Following Navarro (2006), we assume the existence of a consequence distribution over the possible locations and sizes of regions, which is shared by all latent causes. The location parameter mkj for ck along each dimension j is drawn from a Gaussian distribution with zero mean and variance :

| (9) |

where is given a large value relative to the scale of the stimulus space, resulting in a diffuse prior over locations ( in all simulations presented here). Regarding skj, it is assumed that the possible sizes of consequential regions have both a lower bound a and an upper bound b (here, a and b were fixed to 0 and 5, respectively), and all sizes within those bounds are equally likely:

| (10) |

Two important things must be noted about the consequence distribution. First, the values of the parameters μm, , a, and b can vary for different dimensions j, to represent the different scale of each stimulus dimension. For our simulations, a simpler distribution that scaled all dimensions similarly sufficed.

Second, the way in which the size of the consequential region is generated for each dimension in the space will have important consequences for the predictions derived from the model. Because of the uniform distribution for x within a region, small regions have a higher likelihood to have produced a particular data point than large regions (see Equation 4). As noted before, this “size principle” will be important in explaining compound generalization phenomena. Here we assume that the sizes for each dimension j of a consequential region are generated independently from each other, leading to hyperrectangular consequential regions. Note that since the area of the region is the product of the length of its sides, a small value on any one dimension is sufficient to reduce the overall area and thus to increase the likelihood of stimuli that are sampled from this region.

Because consequential regions in our model are axis-aligned, generalization is stronger along the axes of the stimulus space (Austerweil & Griffiths, 2010; Shepard, 1987). Empirically, such a pattern of generalization is observed for stimuli with dimensions that are psychologically distinct (e.g., shape and orientation), known as separable dimensions (Garner, 1974). This suggests that separable dimensions should each be represented as a separate axis (and dimension) in our stimulus space.

On the other hand, dimensions that are not psychologically distinct (e.g., brightness and saturation), known as integral dimensions (Garner, 1974), are not privileged with respect to generalization. In this case, there is no reason to align consequential regions with these stimulus dimensions, and one way in which consequential regions theory has dealt with integrality is by allowing for inference of consequential regions that are aligned in any direction in space (Austerweil & Griffiths, 2010; Shepard, 1987)1. Assuming such non-aligned consequential regions, and since our model preferentially infers smaller consequential regions, we can approximate all integral dimensions using a single axis in space, because for two stimuli differing on any number of integral dimensions the smallest consequential region will be a line connecting the stimuli. As we will discuss later in the context of an experiment involving three stimuli, for more than two stimuli this approximation does not necessarily hold, however, even in that case integral dimensions are different from separable dimensions.

In sum, only when two dimensions are assumed to be separable we will consider them as distinct dimensions in stimulus space. When two stimuli vary on a number of integral dimensions, each of them separable from dimension j, we will represent the set of integral dimensions as a single dimension in stimulus space, with consequential regions aligned to this axis, as defined above.

Both theoretical and practical arguments support our suggestion to treat multidimensional stimuli with integral dimensions as varying along a single dimension. Most important among these are issues relating to the concepts of correspondence and dimensional interaction. Correspondence (Dunn, 1983) refers to the assumption— widespread in research and theory involving multidimensional stimuli (e.g., Ashby & Townsend, 1986; Dunn, 1983; Hyman & Well, 1967; Ronacher & Bautz, 1985; Soto & Wasserman, 2010)—that stimulus dimensions manipulated or identified by a researcher correspond to perceptual dimensions. In general, there is no reason that the dimensions chosen by a researcher would necessarily correspond to atomic units of processing in perceptual systems. For example, in some applications color hue is treated as a single stimulus dimension, although a higher-dimensional space could be used (e.g., the RGB color model). Conversely, in other cases hue is combined with other color and shape properties into extremely abstract dimensions (e.g., “identity” and “emotional expression” of faces; see recent examples in: Fitousi & Wenger, 2013; Soto & Wasserman, 2011). Representing hue as a single dimension is thus not fundamentally different from, say, representing the integral dimensions of hue and saturation as a single dimension, as we will do here. The arbitrariness of the dimensions selected to represent a set of stimuli is a problem that plagues the study of multidimensional generalization and is in no way aggravated by the assumptions of our model. That is, although our choice to represent several perceptually real (integral) dimensions as a single dimension may be a violation of correspondence, this assumption is violated in most work with multidimensional stimuli.

The popularity of the correspondence assumption, despite it being wrong for most applications, is perhaps due to the fact that it allows one to focus on the more interesting problem of dimensional interaction. That is, regardless of whether or not two perceptual dimensions represent indivisible atoms of perceptual processing, the question is how do those two dimensions or their components interact with each other (Ashby & Townsend, 1986). Following this tradition, our model implies different interaction rules for separable and integral dimensions: as demonstrated below, stimuli differing on separable dimensions (that is, dimensions that lie on distinct axes of stimulus space in our generative model) tend to give rise to inference of separate consequential regions, whereas stimuli differing on integral dimensions are more likely to be attributed to a common consequential region.

Finally, we note that there is a theoretical precedent to our implementation of integrality, as one interpretation of integrality is that dimensions that interact in this way are, in effect, combined into a single new stimulus dimension (Felfoldy & Garner, 1971; Garner, 1970). Thus, our representation of integral dimensions can be seen as an implementation of this “integration of dimensions” interpretation, in addition to being an approximation to other interpretations of integrality from consequential regions theory, as previously discussed. However, there are many other ways in which dimensions can be integral (see Ashby & Maddox, 1994; Maddox, 1992; Pomerantz & Sager, 1975), as integrality is not a single form of dimensional interaction, but rather a blanket category used to refer to dimensions that are not separable. While there is no doubt that a more complete treatment of this issue is worth pursuing in the future, our results will show that our simple implementation of integrality is sufficient to account for the empirical results of most interest to our study of compound generalization.

Generation of outcome magnitudes

All latent causes present during trial t produce an outcome with magnitude rt according to the following distribution:

| (11) |

where

| (12) |

The outcome observed in a trial is generated from a normal distribution with mean Rt and variance (in our simulations, the variance was fixed to ). Each latent cause influences the mean of the outcome distribution through its weight wk and we assume that the influences of different latent causes are additive, such that the mean of the outcome distribution is equal to the sum of the weights of all active causes.

The values of wk are also sampled from a normal distribution:

| (13) |

with the parameters fixed at μw = 0 and in our simulations. Step 4 of Figure 2 shows schematically how the latent causes z generate rt in the model.

Inference Algorithm

Inferences in our model are aimed at determining the expected value of the outcome on test trial t, or rt, given the current observation of the compound of stimuli xt:, and the data observed on previous trials. That is, inference is focused on finding E(rt | X, r1:t-1, θ), where θ is a vector of all the variables describing the prior, which are fixed and assumed as known in our model θ = {α = 5, π = 0.9, λ = 0.99, a = 0, b = 5, μm = 0, σm = 101/2, μw = 0, σw = 11/2, σr = 0.011/2}, X is a matrix of observed stimuli (both those observed so far and the current observation), and r1:t-1 is a vector of previously observed outcome values. In order to calculate the distribution p(rt | X, r1:t-1, θ) and from it the expected value of rt, a number of hidden variables of the model that are not specified and thus not known, need to be integrated out (or averaged over). Specifically:

| (14) |

Since this integral is not tractable, we approximate it using a set of L samples {, Z1:L, w1:L, m1:L, s1:L} drawn from the posterior distribution using a Markov Chain Monte Carlo (MCMC) procedure. Our MCMC algorithm involves a combination of Gibbs and Metropolis-Hastings sampling (Gilks, Gilks, Richardson, & Spiegelhalter, 1996). The general strategy is to use a Gibbs sampler to cycle repeatedly through each variable, sampling them from its posterior distribution conditional on the previously sampled values of all the other variables. In the cases in which the conditional posterior is itself intractable, we use Metropolis-Hastings to approximate sampling from the posterior. A more complete description of the inference algorithm can be found in Appendix B.

For the simulations presented here, the MCMC sampler was run for at least 3,000 iterations so as to converge on the correct posterior distribution (“burn in”). Then, the algorithm was run for another 2,000 iterations, from which every 20th iteration was taken as a sample, for a total of 100 samples. This sampling interval was used because successive samples produced by the MCMC sampler are not independent from each other. The approximated expected value of rt is then the average of the 100 samples:

| (15) |

Note that a small number of samples is sufficient to accurately compute the expected outcome value, as the standard error of this estimator decreases as the square root of the number of samples (Mackay, 2003).

Finally, we assume that behavioral measures in Pavlovian conditioning experiments (e.g., rate or strength of response to a stimulus), and in human contingency and causal learning experiments (e.g., causal ratings), are monotonically related to the outcome expectation computed according to Equation 15. However, the exact mapping between outcome expectation and response measures in different paradigms is unknown and not necessarily linear. Therefore, the simulations presented next have the aim of documenting the ability of our model to reproduce only the qualitative patterns of results observed in the experimental data.

Simulation of empirical results

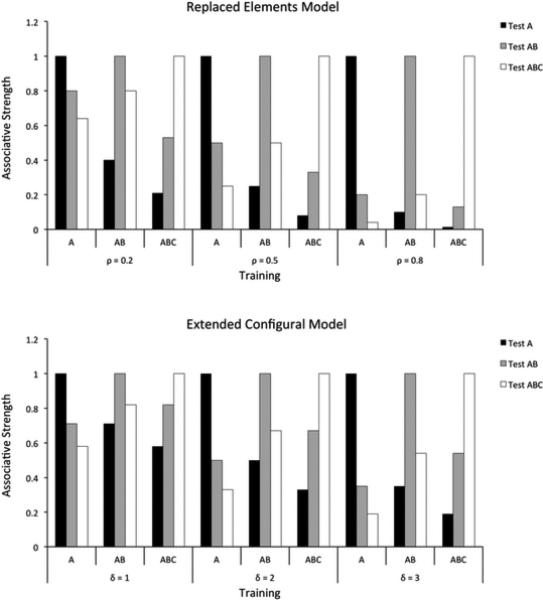

In this section, we evaluate the predictions of our model against empirical data from several experiments in compound generalization. To evaluate the model's performance, it will be useful to compare its predictions against those from previous models of associative and causal learning. No previous rational model can handle the effects of stimulus factors on compound generalization, but there are several flexible mechanistic models that have been developed with this goal in mind (Harris, 2006; Kinder & Lachnit, 2003; McLaren & Mackintosh, 2002; Wagner, 2003, 2007). We will compare the predictions of our model to those of two of these flexible models: the replaced elements model (REM; Wagner, 2003, 2007) and an extension of Pearce's configural model (ECM; Kinder & Lachnit, 2003). These two models represent recent extensions to traditional elemental (REM) and configural (ECM) models of associative learning, and show enough flexibility to predict opposite patterns of results for compound generalization experiments depending on the value of free parameters in the model.

Simulations with REM and ECM were carried out using the simulation software ALTSim (Thorwart et al., 2009; which can be downloaded from http://www.staff.unimarburg.de/~lachnit/ALTSim/). All parameters were set to their default values, except for the free parameters controlling the proportion of replaced elements in REM, represented here by ρ (r in the original articles), and the amount of generalization across configurations in ECM, represented by δ (d in the original article). Due to space limitations, the interested reader should consult the original articles by Wagner (2003), Kinder and Lachnit (2003), and Thorwart et al. (2009) for a more detailed description of the models and their implementation.

Two features of these models are important for a correct interpretation of the results of our simulations. First, REM and ECM are mechanistic models of associative learning, so they provide a different kind of explanation of behavioral phenomena than the rational model presented here. However, the two types of explanation can constrain each other, so that a successful rational explanation gives clues to what is required from a successful mechanistic explanation, and vice-versa. Thus we provide predictions from mechanistic models only as a benchmark to compare our model to. We do not present a systematic comparison of the different models with the aim of showing that one is categorically better than others, neither in terms of simulating all the relevant data from the literature, nor in terms of evaluating what model offers the best quantitative fit to the data.

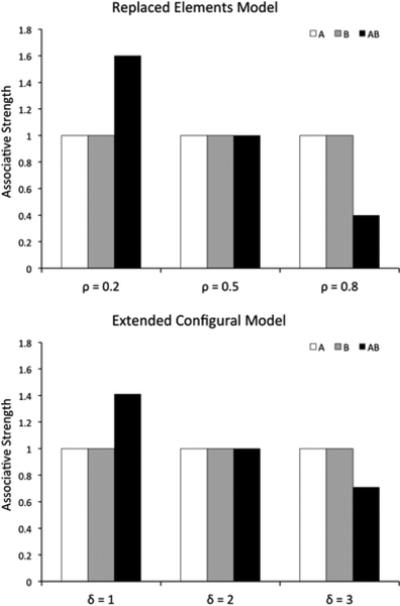

Second, both REM and ECM include a free parameter that affects the amount of associative strength that is generalized from one stimulus configuration to another. This allows both models to flexibly reproduce any possible result from several experimental designs in the literature. However, the models can be constrained by fixing their free parameters to the same value for all experiments using similar stimuli. For example, Wagner (2007) has pointed out that results from experiments using stimuli from different modalities are reproduced by REM with ρ = 0.2, whereas results from experiments using stimuli from the same modality are reproduced by using ρ ≥ 0.5. Accordingly, here we present simulations of REM with ρ equal to 0.2, 0.5 and 0.8.

Similarly, the ECM acts as Pearce's configural model when its free parameter δ is equal to 2. Lower values lead to behavior that is more similar to elemental models of associative learning and higher values lead to more configural processing. Here, we present simulations of ECM with δ equal to 1, 2 and 3.

In sum, both REM and ECM can implement the similarity hypothesis, if we assume that larger values of their free parameters correspond to stimuli that are more similar (e.g., within the same modality), whereas lower values correspond to stimuli which are more dissimilar (e.g., from different modalities). What our model adds is a principled explanation for why parameters of a specific mechanistic implementation may differ between conditions. In addition, as will be seen below, despite their flexibility, the REM and ECM cannot account for the full range of phenomena that our model explains.

Associative and rational models offer different and complementary explanations of behavior, and thus we do not conduct a quantitative comparison of the models, but rather concentrate on qualitative comparisons. Additional considerations discourage an evaluation of quantitative fits of the models to data: In general, quantitative predictions are outside the scope of most associative models, including REM and ECM. These models make predictions about an unobservable theoretical quantity, usually termed “associative strength.” Although this quantity is assumed to be monotonically related to overt behavior, the shape of this relation is left unspecified. This should not be seen as a criticism of REM and ECM – our model makes the same assumption regarding the relation between expected outcome value and measures of behavior. However, as a result of this assumption, it is difficult to adjudicate between the three models based on quantitative fits to behavioral data. Moreover, even if we added some simple assumptions to the models (e.g., a linear relation between associative strength/expected outcome and a response measure) and obtained measures of model fit to data, it would be unclear whether a better quantitative fit is due to a model's ability to capture a psychological process versus simply being more flexible than its competitors. To perform quantitative model selection taking into account model complexity, it would be necessary to make further modifications to associative models to make them not only quantitative, but also statistically defined (Pitt et al., 2008). Such modifications could potentially change the predictions of the original associative models (see Lee, 2008). As a result, we believe that the qualitative comparison proposed here is the most informative comparison of the models as defined.

Simple summation with stimuli from the same or different modalities

A summation experiment involves training with two stimuli separately paired with an outcome until both acquire a strong response (presumably due to prediction of the outcome), followed by a test in which each stimulus is presented separately, as well as in compound, and the strength of responding is measured. The critical comparison is the degree of responding to the compound as compared to that for the separate stimuli. Elemental models of associative learning predict that the response to the compound should be larger than the response to each of its components due to summation of the predictions for each element, whereas configural models predict that the response to the compound should be equal or lower than the average of the response to each component due to generalization decrement for a never-seen compound that is not wholly similar to any of the previous conditioning trials.

Early literature reviews by Weiss (1972) and Kehoe and Gormezano (1980) concluded that summation tests in Pavlovian conditioning can lead to any of these results. Thus, empirical evidence regarding this test does not allow us to reach any conclusion about whether stimulus processing is elemental or configural. More recent investigations have led to the same pattern of results, with some finding response summation (e.g., Kehoe et al., 1994; Rescorla, 1997), others something closer to response averaging (e.g., Rescorla & Coldwell, 1995), and still others a response to the compound that is lower than the average response to the components (e.g., Aydin & Pearce, 1995, 1997).

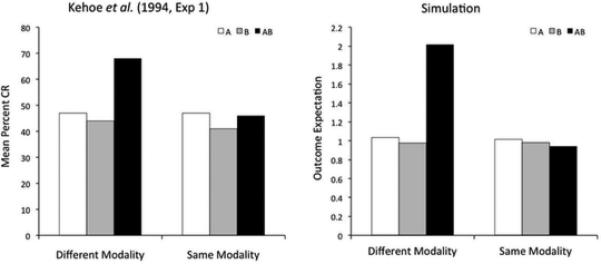

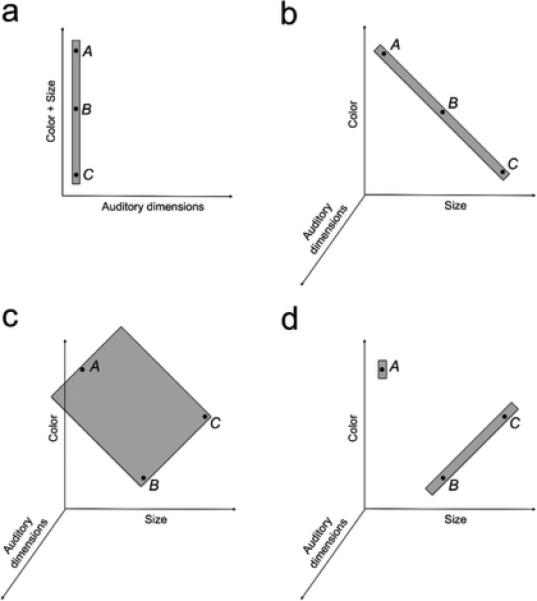

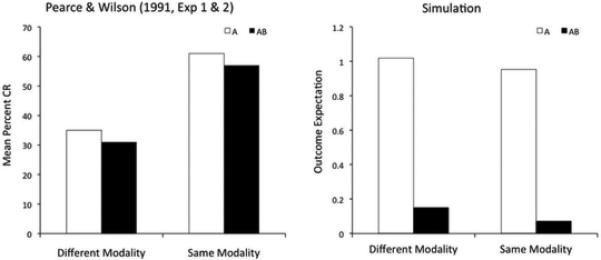

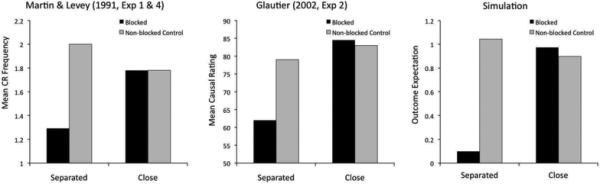

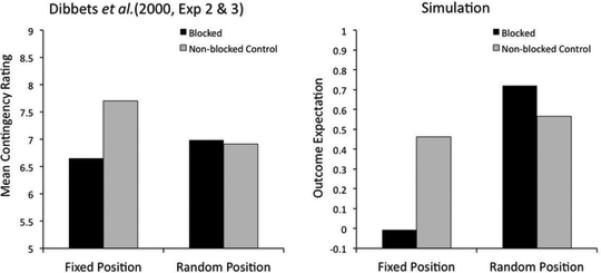

One important difference among summation studies leading to different results is that many of those supporting a configural hypothesis (i.e., no summation) have used stimuli from the same sensory modality, whereas those supporting an elemental hypothesis (i.e., summation of predictions) have used stimuli from different sensory modalities. Kehoe et al. (1994; see also Rescorla & Coldwell, 1995) directly tested the consequences of training with stimuli from the same or different modalities for the summation effect in rabbit nictitating membrane conditioning. Their results, reproduced in the left panel of Figure 3, show that the response to a compound of tone and light is larger than the response to each individual stimulus, whereas the response to a compound of a tone and a noise is closer to the average of the response to each individual stimulus.

Figure 3.

Experimental data (left) and simulated results (right) of an experiment by Kehoe et al. (1994) on the modulation of the summation effect by stimulus modality. A significant summation effect was found with stimuli from the different modalities, but not with stimuli from the same modality.

To simulate this experiment using our model, each stimulus was represented by a vector of two variables, one encoding sound and the other encoding visual stimulation. Representing the tone and noise stimuli as each varying along a single perceptual dimension is appropriate for two reasons. First, it is commonly assumed that the primary perceptual dimensions of a sound are pitch, loudness and timbre (e.g., Melara & Marks, 1993). Kehoe et al. matched the loudness of the tone and noise, and the latter does not have a characteristic pitch. Therefore, the two sounds varied mostly in timbre. Second, there is some evidence that these three sound dimensions are not separable (e.g., Grau & Nelson, 1988; Melara & Marks, 1993). On the other hand, different modalities are the quintessential example of stimulus dimensions that are separable (Garner, 1974). Although there is evidence suggesting violations of separability between dimensions, such violations seem to stem from decisional rather than perceptual processes (for a review, see Marks, 2004). Thus we represent stimuli from different modalities as varying along two separable dimensions. This means that, in our framework, multimodality can be seen as a special case of dimensional separability. We assumed that all stimuli are represented in a common stimulus space and have a value on each dimension. Finally, only dimensions relevant for the experimental task were included in this simulation and all those that follow.

To simulate the “different modality” condition, the tone was represented as the vector (sound=1, visual=0), whereas a light was represented as the vector (sound=0, visual=1). To simulate the “same modality” condition, the tone was represented as the vector (sound= 21/2, visual=0) and the noise was represented as the vector (sound=0, visual=0). These values were chosen to match the distance between stimuli in the two simulations, so as to establish that inference of a common latent cause in our model is determined due to the alignment of stimuli along a dimension rather than their distance. Note that here a value of zero is arbitrary and does not represent the absence of a feature (i.e., there was visual stimulation coming from the speaker and the noise had a particular timbre).

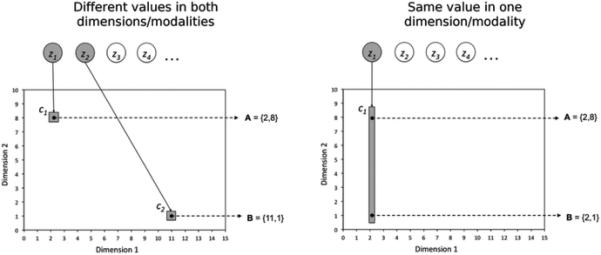

The right panel of Figure 3 shows the results of our simulations. The model correctly predicts the observed pattern of results, and Figure 4 presents a schematic explanation of the reason behind this success. When the two stimuli are from different modalities (left panel of Figure 4), their spatial separation is too large for them to have been generated by a single large consequential region. Instead, the model infers the presence of two different small regions, each producing a single stimulus and an outcome of magnitude one. When the two stimuli are presented together during the compound test, the model infers that both latent causes are active, and so the outcome magnitudes produced by each cause are added together (according to Equation 12) and a summation effect occurs.

Figure 4.

Schematic explanation of why the model predicts a summation effect with stimuli from different modalities/dimensions (left panel) and the lack of a summation effect with stimuli from the same modality/dimension (right panel). Active latent causes and their consequential regions are shaded.

On the other hand, when the two stimuli are from the same modality (right panel of Figure 4), the model infers that a single consequential region, very small along the irrelevant dimension (Dimension 1 in Figure 4) and elongated along the relevant dimension (Dimension 2 in Figure 4), has produced both observations. This is true even for stimuli that are very dissimilar along the relevant dimension, because the size of the consequential region along the irrelevant dimension can be arbitrarily small, leading to a small area for the region and a high likelihood for stimuli contained in that area (see Equation 4). Because the two stimuli are inferred to be generated by one latent cause, the expected value of the outcome for trials with each single stimulus and their compound is the same (one unit of outcome, as typically generated by this latent cause) and no summation is observed.

In sum, the likelihood function for stimuli (Equation 4) embeds in it the principle that stimuli that have similar values on a particular dimension are likely to have been produced by the same latent cause. This is an instantiation of the size principle proposed by the rational theory of dimensional generalization of Tenenbaum and Griffiths (2001a), which was discussed in the introduction section. The size principle explains why stimuli varying on a single dimension do not produce summation effects, and it will prove useful in explaining several other contradictory results observed in the literature on compound generalization.

It is important to underscore that what our model predicts is an effect of separability and integrality of stimulus dimensions on the summation effect. The effect of stimulus modality is considered a special case of the separability/integrality distinction, with different modalities being one case of separable dimensions. However, regardless of whether cues vary within a modality or across modalities, the model predicts a summation effect if cues vary across separable dimensions and no summation effect if cues vary across integral dimensions. This also means that, according to our model, stimuli used in previous studies finding no summation effect (notably, experiments using visual stimuli and autoshaping with pigeons) must have varied either along integral dimensions or along a single dimension, as in Kehoe et al. (1994).

According to previous mechanistic theories (Harris, 2006; McLaren & Mackintosh, 2002; Wagner, 2003, 2007), similarity between two stimuli is what modulates the extent to which they are processed configurally, that is, as a whole rather than as a sum of their parts. Figure 5 shows the predictions of the REM (top) and ECM (bottom) models for a summation experiment. Assuming that more similar stimuli produce more configural processing (larger values of ρ and δ), both models can correctly reproduce the experimental results of Kehoe and colleagues (Figure 3).

Figure 5.

Results of simulations of a summation experiment using REM (top) and ECM (bottom). Each simulation was run using three different values of the free parameters ρ and δ. Both models can predict any pattern of results from a summation experiment, with responding to the compound higher, equal or lower than to each stimulus alone.

However, explanations based on the concept of stimulus similarity have difficulty explaining the results of a related experiment by Rescorla and Coldwell (1995, Experiment 6), who found that although simultaneous presentations of two visual stimuli in compound does not produce a summation effect, the sequential presentation of the same stimuli does lead to such effect. The problem for approaches based on the concept of similarity is that it is difficult to think of stimuli that are close in time as more “similar” to each other than the same stimuli when they are separated in time. Our model incorporates the idea that similarity is an important factor in compound generalization, because distance along several dimensions can be interpreted as inversely proportional to the similarity between two stimuli. However, the model also expands beyond the limitations of the similarity hypothesis, because for other dimensions distance is better interpreted as inversely proportional to the contiguity between two stimuli. This is the case for the relative temporal and spatial position of stimuli during an experimental trial. Temporal contiguity–and spatial contiguity, as we will see later–can both be cast as differences on the dimensions of time and space, so that stimuli that are closer together in these dimensions have a higher likelihood of having been produced by the same latent cause.

The only additional assumption required to apply our model to such dimensions is that the origin of each dimension is set in each trial by a landmark event. The position of stimuli in space and time is encoded relative to such landmarks. For example, “trial time” would be encoded relative to an event that signals the beginning of a trial, such as the beginning or end of an inter-trial interval (as demarcated by the outcome in a previous trial, or the first stimulus in the current trial, respectively). This is a reasonable assumption as our model is a trial level model, in which we assume that latent causes become active at the beginning of a trial and only stimuli happening after that point (and before the beginning of the next trial) can be generated by the active latent causes. Similarly, “task space” would be encoded relative to the position of a landmark stimulus, such as the corner of a monitor or the experimental chamber. Importantly, one of the cues could itself serve the function of a landmark to set up ‘trial time’ and ‘task space’ (e.g., the first cue presented or the most salient cue in a compound). These assumptions are common to most other theories dealing with contiguity, which usually parse an experiment coarsely into individual trials, simulating events within a single trial.

To summarize, a focus on generalization as an inference task gives a unified and principled explanation of the effects of stimulus modality and temporal separation over the summation effect.

Differential summation with stimuli from the same and different modalities

A variant of the summation design involves training with three stimuli, A, B, and C, followed by an outcome, and testing with the compound ABC. In this experiment there are two training conditions: in the single condition, all stimuli are independently paired with the outcome (A+, B+, C+), whereas in the compound condition, all combinations of two stimuli are paired with the outcome (AB+, AC+, BC+). If one of these conditions leads to a higher level of responding during the summation test with ABC, then a “differential summation” effect is said to be found (Wagner, 2003). Traditional elemental models (Rescorla & Wagner, 1972) predict more summation in the single condition, whereas traditional configural models (e.g., Pearce, 1987) predict more summation in the compound condition.

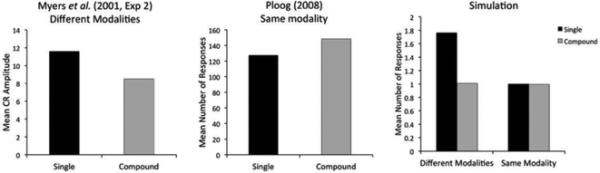

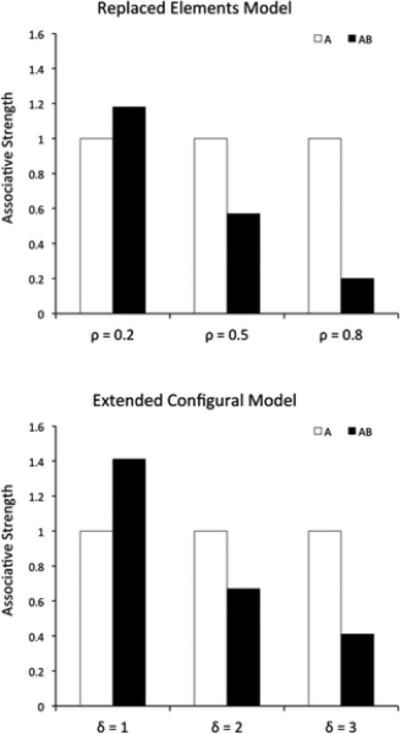

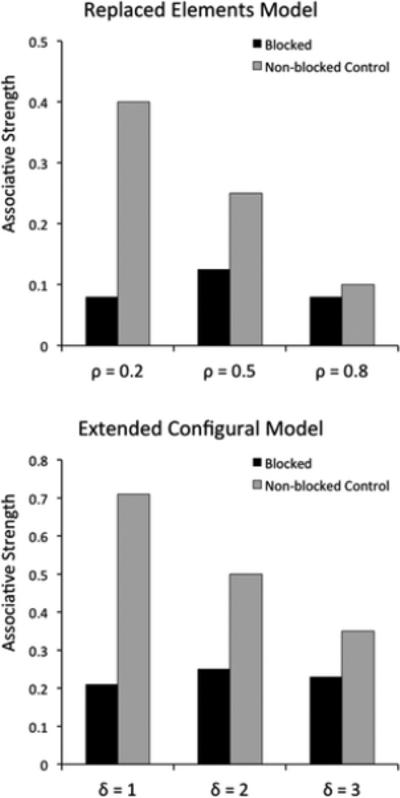

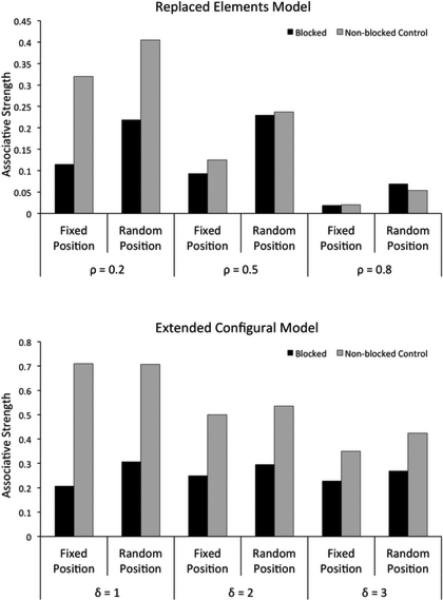

The left panel of Figure 6 shows the results of a differential summation experiment carried out by Myers and colleagues (Myers, Vogel, Shin, & Wagner, 2001, Exp. 2) using a tone, a light and a vibrotactile stimulus as conditioned stimuli. The experiment confirmed the predictions of elemental theory, finding higher summation in the single condition than in the compound condition. The middle panel of Figure 6 shows the results of a differential summation experiment carried out by Ploog (2008), using only visual stimuli that differed in color and size. In this case, there was no evidence of summation for either condition (responding to ABC was not higher than to the training stimuli) and the small difference between conditions observed in the figure is not statistically significant.

Figure 6.

Experimental data from differential summation experiments using stimuli from different modalities (left) and from the same modality (middle panels), and simulated results (right). Bar height represents responding to the compound ABC in the different conditions. The difference between conditions found by Myers et al. (2001; left) was statistically significant, whereas Ploog (2008, middle) did not find a statistically significant difference between the conditions. The simulation (right) reproduces this pattern of results.

To simulate the experiment of Myers et al. (2001), which used stimuli from different modalities, we represented the tone as the vector (sound=1, visual=0, somatosensory=0), the light as (sound=0, visual=1, somatosensory=0) and the vibrotactile stimulus as (sound=0, visual=0, somatosensory=1). To simulate the experiment of Ploog (2008), which used stimuli from the same modality, we represented the first visual stimulus as (sound=0, visual=0, somatosensory=0), the second as (sound=0, visual=21/2, somatosensory=0) and the third as (sound=0, visual=2×21/2, somatosensory=0). That is, our simulation assumes that the dimensions of size and color are integral for pigeons, at least in the stimuli used in this experiment. To the best of our knowledge, there is currently no empirical evidence that contradicts this assumption. In both cases, our choice of values on each dimension makes the present simulation comparable to the simulation of a simple summation effect presented in the previous section.

The results from our simulation are presented in the last panel of Figure 6. It can be seen that the model predicts the results obtained by Myers et al. (2001) using stimuli from different modalities and the results obtained by Ploog (2008) using stimuli from the same modality. The explanation is the same as for our simulation of the simple summation effect: stimuli that vary along multiple separable dimensions lead to the inference of a separate latent cause for each stimulus, whereas stimuli that vary along a single dimension lead to the inference of a single latent cause for all stimuli (see Figure 4).

Using visual stimuli and the same experimental paradigm as Ploog (2008), Pearce and colleagues (Pearce, Aydin, and Redhead, 1997, Exp. 1) found higher summation in the compound condition than in the single condition. As is clear from our simulation, the latent causes model cannot predict this result using the current parameter values and stimulus encoding. However, the differential summation effect found by Pearce and colleagues was statistically significant only in 5 out of 15 testing trials, and no correction for multiple comparisons was applied in this analysis. Thus, both the experiments of Ploog (2008) and those of Pearce et al. (1997) seem to suggest that it is difficult to obtain a reliable differential summation effect using stimuli from the same modality.

Flexible mechanistic models, such as REM and ECM, can predict all the observed patterns of results in differential summation experiments. This can be seen in Figure 7, which shows the results from simulating these models. However, both models run into the same problem as our latent causes model when attempting to explain the contradictory results of Ploog (2008) and Pearce et al. (1997). These studies used similar visual stimuli, the same species and the same experimental paradigm, so there is little reason to justify different values of ρ and δ to explain their different results. Only further empirical research will shed light on whether specific experimental conditions can produce a robust differential summation effect using visual stimuli.

Figure 7.

Results of simulations of a differential summation experiment using REM (top) and ECM (bottom). Bar height represents responding to the compound ABC in the different conditions. Each simulation was run using three different values of the free parameters ρ and δ. Both models can predict any pattern of results from a differential summation experiment, with responding to ABC in the single condition being lower, equal or higher than responding to ABC in the compound condition.

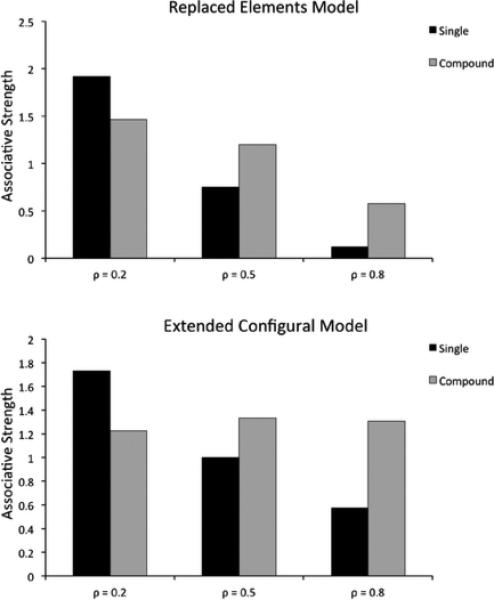

As these are the only experiments involving more than two stimuli varying along integral dimensions discussed in this paper, a short discussion of the validity of our assumptions in the case of three stimuli is in line. In our simulation of the study by Ploog (2008) we have represented all visual stimuli as varying along a single dimension, as exemplified in Figure 8a. As discussed previously, variation along a single dimension is a good approximation for cases in which two stimuli vary along integral dimensions, because if consequential regions can orient in any direction of integral space (Shepard, 1987), the size principle will result in the regions orienting in the direction of a line connecting the two stimuli, which is equivalent to representing the stimuli as points along one axis in stimulus space.

Figure 8.

Schematic explanation of how the latent causes model explains the effect of stimulus modality on differential summation. In our implementation, the integral dimensions of size and color are collapsed in a single dimension (a). A separable auditory dimension is also depicted, for didactic purposes. The application of the size principle would be similar if each of the two dimensions was represented separately and the three stimuli were aligned, as regions can be oriented in any direction in integral space (b). However, the size principle applies even without stimulus alignment as long as the consequential regions are small along the auditory dimension (in the figure, the size on this dimension is so small that the regions are essentially planes), as they can then include more than one cue with relatively high likelihood (c). Moreover, because in integral space consequential regions do not have to be aligned to axes, the size principle can be applied again to generate two consequential regions, essentially a line and a point in space, rather than three (d). Thus integral dimensions imply less summation than separable dimensions, even for more than two non-aligned stimuli.

What happens when three (or more) stimuli differ on two integral dimensions? In this case, if the stimuli are aligned in integral space (that is, if they can be connected by a straight line, as in Figure 8b), our choice to represent the stimuli as points along a single axis is still reasonable as in the integral subspace consequential regions need not be aligned to axes. If the stimuli are not aligned the approximation of consequential regions that are not axis-aligned by representing all integral dimensions as one axis no longer holds. However, even in this case, we argue that application of the size principle would still lead to more “configural” representations when stimuli vary on two integral dimensions, as in Ploog (2008), as compared to three separable dimensions as in Myers et al. (2001), for two reasons. First, even if we represent size and color as two separate axes in stimulus space, large regions encompassing two or three stimuli are more likely in the two-dimensional space of color and size (as shown in Figure 8c) than in the three-dimensional auditory-visual-tactile space needed to represent Myers et al.'s (2001) multimodal stimuli, because a hyperrectangle in a two dimensional plane has a much smaller volume than one in a three dimensional space. In general, low-dimensional consequential regions are considerably more likely than high-dimensional ones. This is demonstrated in Figure 8b-d by using the auditory dimension, on which consequential regions are essentially planes regardless of the configuration of stimuli in the dimensions of color and size. Second, because within the two-dimensional integral space consequential regions do not have to be aligned to axes (as no specific direction of axes is privileged), in integral space hypotheses involving thin elongated regions that include pairs of cues as in Figure 8d are possible. Due to the size principle, such hypotheses will have high posterior probability, thus implying a summation effect that is smaller than when each cue is produced by its own latent cause, as would be the only high probability solution for three separable dimensions. If color and size were separable dimensions rotation of consequential regions would not be possible, so unless two stimuli shared values on either of the dimensions, this configuration of consequential regions would not be possible.

In sum, regardless of whether we choose to represent the integral dimensions of size and color as one or two axes in space, that is, whether Ploog's (2008) cues are aligned in the integral space or not, the theory predicts that cues varying along two integral dimensions should lead to less elemental summation than cues that differ on three clearly separable dimensions. Our simulations capture this effect of modality on differential summation qualitatively by collapsing integral dimensions into a single axis in stimulus space, as in Figure 8a, but the results would not change qualitatively if we assumed a two dimensional space with consequential regions that are not necessarily axis-aligned, as in Figure 8d.

Summation with a recovered inhibitor

Perhaps the best-known design for the study of inhibitory learning involves a feature-negative discrimination, in which presentations of a stimulus followed by an outcome (denoted A+) are intermixed with presentations of the same stimulus in compound with a second stimulus and not followed by the outcome (AB-). After this training, B acquires the ability to inhibit responding to an excitatory stimulus (Rescorla, 1969).