Abstract

The feasibility and efficacy of Virtual Reality Job Interview Training (VR-JIT) was assessed in a single-blinded randomized controlled trial. Adults with autism spectrum disorder were randomized to VR-JIT (n=16) or treatment as usual (TAU) (n=10) groups. VR-JIT consisted of simulated job interviews with a virtual character and didactic training. Participants attended 90% of lab-based training sessions and found VR-JIT easy-to-use, enjoyable, and they felt prepared for future interviews. VR-JIT participants had greater improvement during live standardized job interview role-play performances than TAU participants (p=0.046). A similar pattern was observed for self-reported self-confidence at a trend level (p=0.060). VR-JIT simulation performance scores increased over time (R-Squared=0.83). Results indicate preliminary support for the feasibility and efficacy of VR-JIT, which can be administered using computer software or via the internet.

Keywords: autism spectrum disorder, Internet-based Intervention, job interview skills, vocational training

The 1990’s witnessed a rapid increase in the prevalence of autism spectrum disorders (ASD) (Gurney et al. 2003), with approximately 50,000 individuals with ASD turning 18 years old each year and transitioning into adult-based services (Shattuck et al. 2012). Historically, the community-based employment rate for these individuals ranges from 25-50% (Hendricks 2010; Shattuck et al. 2012; Taylor and Seltzer 2011), suggesting a need for programs and services to assist with the transition to employment (Shattuck et al. 2012). For example, Project SEARCH recently demonstrated efficacy at helping youth with ASD transition from high school to finding employment (Wehman et al. 2013). However, research developing adult-based services to help individuals obtain competitive employment appears to be much more limited. A common gateway to obtaining competitive employment is the job interview, but this experience may be a significant barrier for individuals with ASD (Higgins et al. 2008; Strickland et al. 2013). Thus, improving job interview performance is a critical target for employment services and is especially important for individuals with ASD given their significant social deficits (APA 2013; Wing and Gould 1979).

A review of the occupational literature (Huffcutt 2011) and a meta-analysis (Salgado and Moscoso 2002) indicate that successful job interviewees are proficient at conveying job-relevant interview content (e.g., experience, core knowledge) and providing a strong interviewee performance (e.g., social effectiveness, interpersonal presentation). Thus, interventions designed to target both of these constructs may improve job interview performance. There is also evidence that greater self-confidence in one’s ability to perform a job interview is associated with greater social engagement during interviews as well as more effective verbal and nonverbal communicative strategies during interviews (Hall et al. 2011; Tay et al. 2006). This suggests that improving job interview skills may also increase interview-based self-confidence, which may increase the likelihood that individuals will be motivated to go on job interviews.

Highly interactive virtual reality role-play training based on behavioral learning principles demonstrated greater effectiveness than conventional role-playing for training other types of interactive skills. This software has been used to train federal law enforcement agents on interrogation methods (Olsen et al. 1999), primary care physicians to perform brief psychosocial intervention to treat alcohol abuse (Fleming et al. 2009), and individuals with ASD to improve their social skills (Trepagnier et al. 2011). Other virtual reality training systems have been designed to train social skills in individuals with ASD (Kandalaft et al. 2013). Research suggests that virtual reality training offers trainees several advantages over traditional learning methods (Issenberg et al. 2005). These include: 1) repetitive practice with the simulation, 2) active participation and not passive observation, 3) a unique training experience with each simulation, 4) consistent in-the-moment feedback, 5) opportunity for trainees to make, detect, and correct errors without adverse consequences, 6) accurate representation of real-life situations as opposed to avatar-type environments, 7) opportunity to apply multiple learning strategies across a range of difficulty levels, and 8) access to web-based educational material that promotes learning skillful strategies before and during the simulation (Issenberg et al. 2005). The training advantages of virtual reality simulations should be equally helpful for individuals with ASD who can benefit from being able to progress at their own rate of learning and to repeat the exercises as often as necessary until they achieve mastery.

The purpose of the present study was to test the feasibility and efficacy of a highly interactive virtual reality role-play simulation, “Virtual Reality Job Interview Training” (VR-JIT), to improve job interview skills that relate to job relevant interview content and interviewee performance (Huffcutt 2011), among individuals with ASD. The intervention was initially developed for use by individuals with chronic mental illness (e.g., schizophrenia, bipolar disorder, depression) (BLINDED REFERENCE), and has limited e-learning material and interviewing scripts that focus on autism. However, this study evaluated the feasibility and efficacy of VR-JIT in a sample of adults with ASD. The feasibility of VR-JIT was evaluated via the number of training sessions that participants attended, the total time participants engaged in simulated interviews, and participant feedback on the intervention’s efficacy via a brief self-report measure. In a randomized, single-blinded controlled trial, efficacy of VR-JIT was evaluated by measuring participant performance on standardized job interview role-plays and their self-reports of job interview self-confidence before and after training. The VR-JIT group was compared to participants randomized to a Treatment-As-Usual (TAU) control condition. VR-JIT performance scores were examined as a process measure to determine if there was improvement across trials.

Based on participant feedback during the prototype development of VR-JIT (BLINDED REFERENCE), the hypotheses were that training would be well attended, and participants would rate the intervention as easy to use, enjoyable, and helpful, as well as increase their confidence and readiness for interviewing. A second hypothesis was that the VR-JIT group would demonstrate increased job-interview skills (job relevant interview content and interviewee performance) and enhanced interview-based self-confidence between baseline and follow-up, while the TAU control group would remain stable over time. A third hypothesis was that VR-JIT performance scores would increase over the course of their training. Lastly, the study explored whether the follow-up role-play performance, self-confidence, and the performance scores were associated with demographic characteristics, ASD-related symptoms, vocational history, neurocognitive, and social cognitive functioning.

Methods

Participants

Participants (ages 18-31) included 26 individuals with an autism spectrum disorder (ASD) recruited through advertisements at community-based service providers, local universities, community-based support groups (e.g., Anixter Center, Chicagoland Autism Connection, Autism Society of Illinois, Illinois Department of Rehabilitation Services), and online (e.g., Facebook). A non-specific diagnosis on the autism spectrum was required for participation in this pilot study and was determined with a T-score of 60 or higher using parent and self-report versions of the Social Responsiveness Scale, Second Edition (SRS-2) (Constantino and Gruber 2012). The SRS-2 has high sensitivity and moderate specificity (Aldridge et al. 2012). Four participants did not meet the required SRS-2 cutoff (scoring 51-59), but were included based on documentation of an ASD diagnosis by their clinician. Participants were also required to: 1) have at least a 6th grade reading level as determined by the sentence comprehension subtest of the Wide Range Achievement Test-IV (WRAT-IV) (Wilkinson and Robertson 2006), 2) be willing to be video-recorded, 3) be unemployed or underemployed (i.e., working less than half time and looking for additional work), and 4) be actively seeking employment.

Participants were excluded from the study for: 1) having a medical illness that significantly compromises cognition (e.g., Traumatic Brain Injury), 2) having an uncorrected vision or hearing problem that would prevent full participation in the intervention, or 3) having a current diagnosis of substance abuse or dependence as assessed using the MINI International Neuropsychiatric Interview (MINI) (Sheehan et al. 1998). The corresponding University’s Institutional Review Board approved the study protocol, and all participants provided informed consent. After enrollment, participants were randomized with a 2 out of 3 chance into the intervention (n=16) or treatment-as-usual (TAU) groups (n=10). The uneven randomization was intended to enable us to learn more about the intervention process.

Intervention

Virtual Reality Job Interview Training (VR-JIT) is a computerized virtual reality training simulation that can be used as computer software or via the internet. VR-JIT was developed by (BLINDED Company) (Blinded Company Website) in consultation with expert panels convened with academic and vocational experts. BLINDED COMPANY’s patented PeopleSIM™ technology is the main platform for the simulated interactions in VR-JIT and has been used in several other studies (Blinded Citations). It uses nonbranching logic, which provides users with variation and freedom in their responses and provides a virtual reality interviewer displaying a wide range of emotions, personality, and memory. The nonbranching nature of the interview creates a different interview each time from >1000 video-recorded interview questions and 2000 trainee responses, the novelty of which further encourages repeated plays (BLINDED REFERENCE). VR-JIT is designed to improve job interview performance by targeting the following domains recommended by the expert panel, which were grouped into job relevant interview content and interviewee performance constructs as outlined in the literature (Huffcutt 2011). The job relevant interview content includes: conveying oneself as a hard worker (dependable), sounding easy to work with (teamwork), conveying that one behaves professionally, and negotiating a workable schedule. Interviewee performance includes: sharing things in a positive way, sounding honest, sounding interested in the position, and establishing overall rapport with the interviewer.

VR-JIT uses the following strategies to target improvement in the aforementioned domains: 1) providing repeatable VR interviews, 2) offering in-the-moment feedback, 3) displaying scores on key dimensions of performance and 4) allowing review of audio and written transcripts color coded for strong, neutral or weak interview responses. In addition, VR-JIT provides didactic electronic learning (e-learning) guidance on how to successfully perform job interviews and covers related topics such as creating a resume, researching a position, hygiene, what to wear, what types of questions to ask, reminders about eye contact, and whether to disclose a disability. The e-learning material is available to trainees at any time while working with the intervention.

Since many jobs now require an on-line application, the program also provides training in how to complete such a process and uses an on-line application that is later used by the program to personalize the simulated job interviews. The job application section prompts users for information such as education, employment history, and job-related skills. Thus, the VR-JIT simulated interviewer might ask questions regarding prior work experience, work history gaps, or the specific skills necessary to perform the position. The program allows trainees to choose from eight different employment positions when completing the job application, including: cashier, inventory worker, food service worker, grounds worker, stock clerk, janitor, customer service representative, and security. The variation in these positions enables trainees to learn how to talk generally about their skills and strengths across a variety of positions.

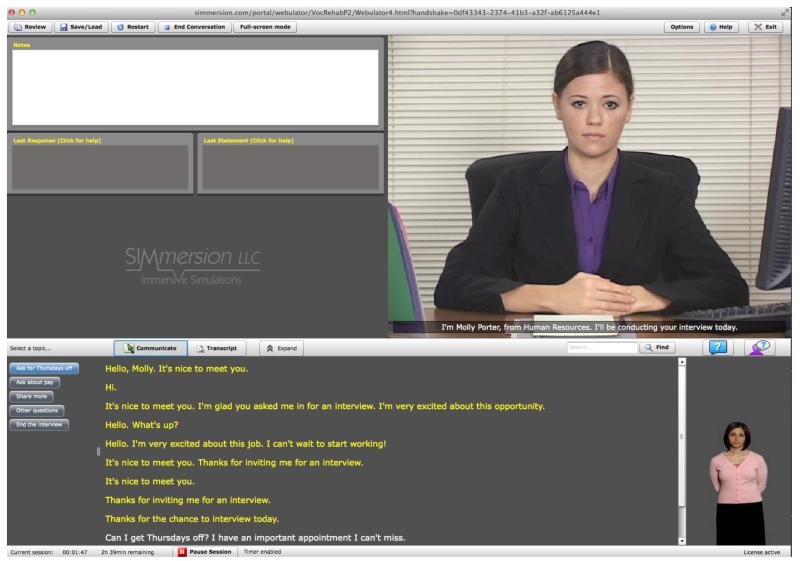

VR-JIT’s simulated interviews allow trainees to interact with Molly Porter (the virtual human resources (HR) representative) while using speech recognition software (Figure 1). This feature allows trainees to practice speaking their responses to Molly’s questions. The variation in available responses provides multiple options that can enhance or damage rapport with the interviewer. This approach allows trainees to make mistakes and learn how to improve their responses. VR-JIT provides trainees with in-the-moment feedback on their performance using an on-screen nonverbal job coach (Figure 1). Trainees can receive verbal feedback from the on-screen coach by clicking on a help button. VR-JIT also enables trainees to review a transcript of every simulated question and response, which indicates why responses were appropriate/inappropriate and gives related advice to the trainee. The transcripts can be reviewed using an audio format where they can hear themselves respond to questions (if trainees used speech recognition). Following each simulated interview, trainees are also provided feedback on why certain training objectives received a particular score.

Figure 1.

VR-JIT Simulated Interview Interface. Molly’s interview question is asked aloud and displayed at the bottom of her video image. SIMantha, the virtual job coach, is displayed in the bottom-right corner. Available relevant responses, coded in yellow, can be read aloud and are understood by Molly through voice recognition software. Buttons to the left of the responses allow trainees to change the direction of the conversation. When Molly asks her question, the computer screen transitions into full screen mode.

There are additional features that individualize the VR-JIT learning experience. To promote hierarchical learning, the simulated interviews have three difficulty levels where Molly is friendly (easy), business-oriented (medium), or brusque (hard). At the hard level she is unforgiving of errors and may even ask illegal questions. Also, Molly’s character continually evolves so her demeanor or questions may change depending on the trainee’s prior responses and the rapport that has been established. This emotional realism creates a dynamic experience in which the trainee sees Molly become nicer when responded to honestly and respectfully, or sees her become more abrupt and dismissive when responded to evasively or rudely. These features, taken together with the scope of VR-JIT’s main components and nonbranching logic, provide a comprehensive and interactive learning experience for practicing and performing a successful job interview.

Training Fidelity

Two research staff members were trained to administer the intervention using a checklist devised by the scientific team (see Appendix A). Participant orientation using the checklist covered the following topic areas: navigating the graphic user interface (GUI), creating a user profile, completing a job application, e-learning materials, starting the simulation, reading transcripts, using in-the-moment feedback and help modules, reviewing transcripts, and reviewing summarized interview performance. Staff engaged in practice sessions where they oriented team members on administering the intervention.

Study Procedures

Both groups completed baseline and follow-up assessments in the research lab. Baseline assessments included psychosocial and vocational interviews, clinical assessments, and neurocognitive and social cognitive assessments. The TAU group completed baseline assessments, and then, after a two-week waiting period, they returned to repeat the two standardized role-plays and the self-confidence measure as follow-up tests. Following the completion of baseline measures, the intervention group was asked to complete 10 hours (approximately 20 trials) of VR-JIT training over the course of 5 visits (within a two-week period), and then complete the Treatment Experience Questionnaire (TEQ), 2 standardized role-plays, and the self-confidence measure as follow-up tests.

Once VR-JIT orientation was completed, participants were given the opportunity to ask questions and receive clarification on any aspect of the program. Then participants began a trial run by creating a practice job application and engaging in a single practice session to demonstrate that they could navigate VR-JIT. Staff provided feedback and assistance until the participant felt ready to begin. VR-JIT was administered in private offices to provide a safe environment where participants felt comfortable using the speech recognition component.

Participants were encouraged to use the e-learning materials prior to each simulated interview. To promote hierarchical learning, participants were required to progress through three difficulty levels. First, at least three ‘easy’ interviews needed to be completed. One score of 80 or higher was required to advance to the ‘medium’ level. Participants were automatically advanced to medium if a score of at least 80 was not achieved prior to 5 completed interviews. This process was repeated for participants at the ‘medium’ level before advancing to the ‘hard’ level. Participants played on the ‘hard’ level for the remainder of training.
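The advancement rules above can be read as a small state machine. The following Python sketch is one interpretation of those rules, not the VR-JIT software's implementation. It assumes a participant advances once at least three interviews at a level are complete and at least one of them scored 80 or higher, or automatically after five completed interviews at that level. The function names `next_level` and `assign_levels` are hypothetical.

```python
LEVELS = ["easy", "medium", "hard"]
MIN_INTERVIEWS = 3   # minimum completed interviews at a level (from the protocol)
PASSING_SCORE = 80   # one score at or above this permits advancement
MAX_INTERVIEWS = 5   # automatic advancement after this many interviews


def next_level(level, scores_at_level):
    """Return the level for the next interview, given scores at the current level."""
    if level == "hard":
        return "hard"  # participants stay on 'hard' for the rest of training
    n = len(scores_at_level)
    passed = any(score >= PASSING_SCORE for score in scores_at_level)
    if (n >= MIN_INTERVIEWS and passed) or n >= MAX_INTERVIEWS:
        return LEVELS[LEVELS.index(level) + 1]
    return level


def assign_levels(scores):
    """Replay a sequence of interview scores; return the level each was played at."""
    level, at_level, played = "easy", [], []
    for score in scores:
        played.append(level)
        at_level.append(score)
        new_level = next_level(level, at_level)
        if new_level != level:
            level, at_level = new_level, []  # reset the count at the new level
    return played


# Hypothetical score sequence for one participant.
print(assign_levels([60, 85, 70, 50, 55, 60, 65, 70, 90]))
```

In this hypothetical sequence the participant leaves the easy level after three interviews (one score reached 80) and leaves the medium level only through the five-interview automatic rule.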

Participants were asked to notify the research team once an interview was completed so the staff could record the simulated interview scores. The research team then reviewed the completed transcript with the participant, particularly emphasizing places where improvement could be made. Only after this information had been reviewed could the participant begin another interview. Participants could make notes within VR-JIT that could be printed out for their use at home.

Study Measures

Demographic Characteristics, Vocational History and Clinical Assessment

A psychosocial interview was used to obtain the participants’ demographic characteristics (e.g., age, gender, race), and vocational history (e.g., months since prior employment, prior vocational training). Raw scores on the 65-item SRS-2 were converted to T-scores to generate the following domain scores of social deficits: social awareness, social cognition, social communication, social motivation, and restricted interests and repetitive behaviors (Constantino and Gruber 2012).

Neurocognitive and Social Cognitive Measures

The Repeatable Battery for the Assessment of Neuropsychological Status (RBANS) (Randolph et al. 1998) was administered to assess neurocognitive functioning. The total score of the RBANS is a general reflection of the following cognitive functions: immediate memory, visuospatial capacity, language, attention, and delayed memory.

Basic social cognition was measured using the Bell-Lysaker Emotion Recognition Task (BLERT) (Bell et al. 1997). The BLERT assesses emotion recognition using twenty-one video-recorded vignettes of an affective monologue; participants watch each vignette and label it with the emotion that is most prominently displayed. An accuracy rating was generated based on the number of correct responses. The BLERT recently received the highest scientific rating for measures of its kind from an independent RAND panel (Pinkham et al. 2013).

Advanced social cognition was measured using the Emotional Perspective-Taking Task, which is a proxy of cognitive empathy. Participants were shown 60 scenes of 2 actors engaged in social interactions. The face of one actor is masked and participants were asked to select which of two faces reflected how the masked character would feel in the interaction. An accuracy rating was generated based on the number of correct responses (Smith et al. 2013).

Feasibility Assessments

Participants were invited to attend five training sessions during which they would spend up to two hours working with VR-JIT. Attendance was recorded across the five training sessions, as was the number of minutes (out of a possible 600 minutes) that each participant engaged in training with VR-JIT.

Participants completed the training experience questionnaire (TEQ) to assess the extent to which VR-JIT was easy to use, enjoyable, helpful, instilled confidence in interviewing, and prepared them for interviews (BLINDED reference). The TEQ’s 5 items were rated on a 7-point Likert scale, with higher scores reflecting more positive opinions of VR-JIT (α=0.84).

Primary Efficacy Assessments

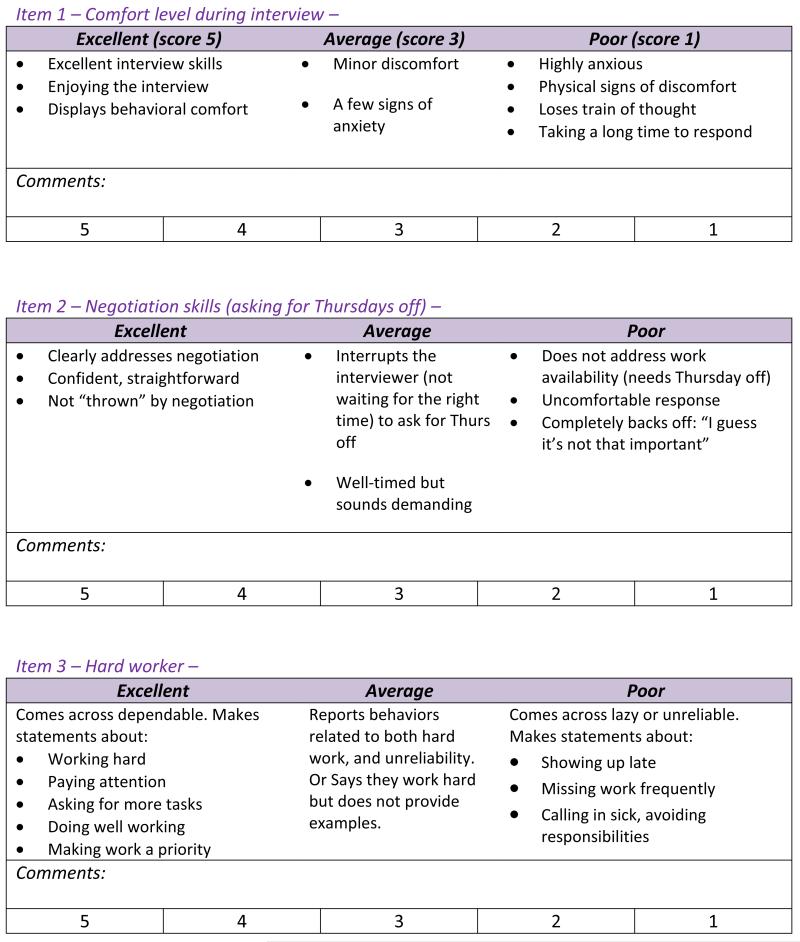

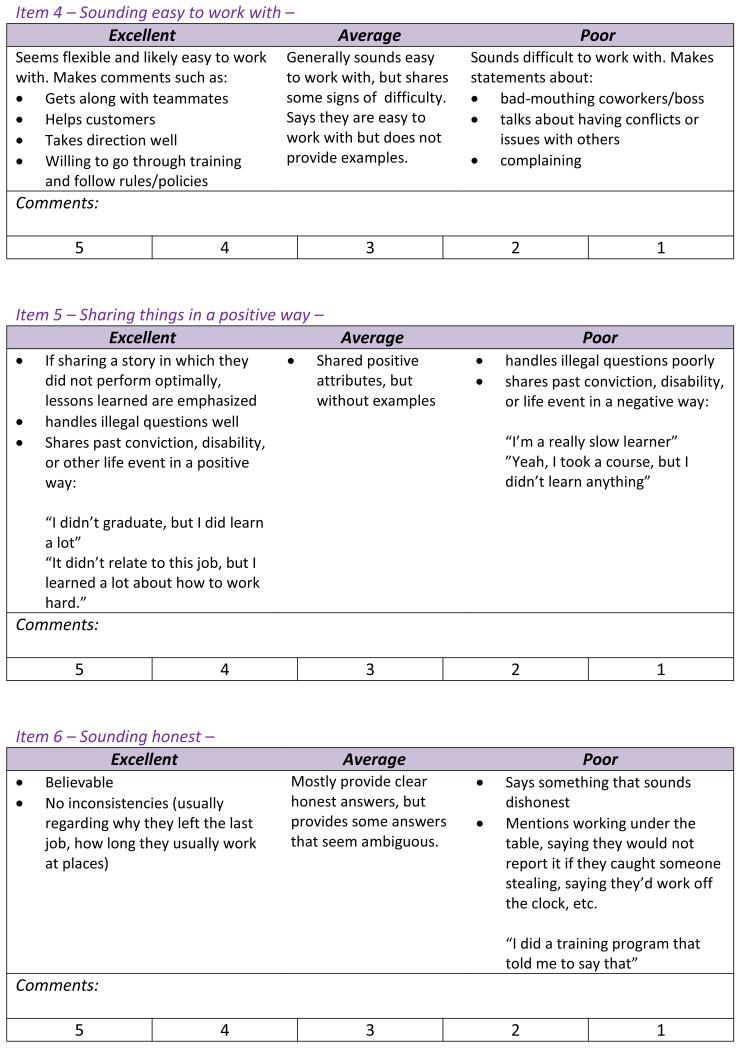

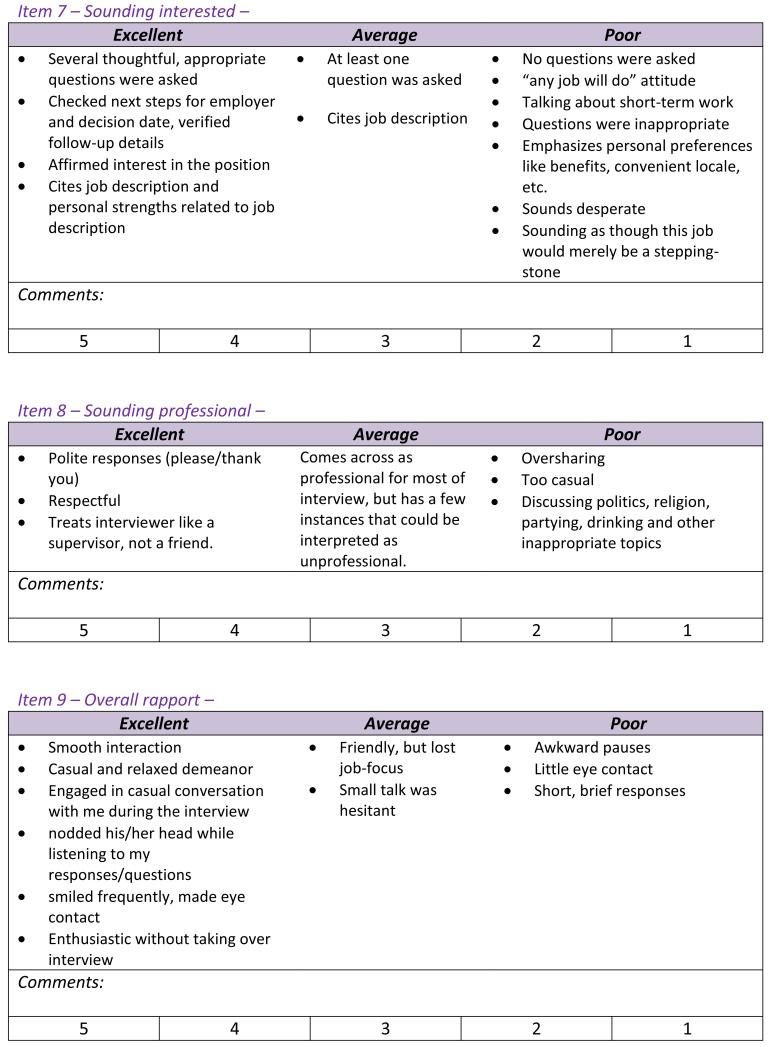

Role-play job interviews were the first primary outcome. Each lasted approximately 20 minutes and was intended to assess nine communication skills that contribute to successful job interviews: 1) comfort level, 2) negotiation skills (asking for Thursdays off), 3) conveying oneself as a hard worker (dependable), 4) sounding easy to work with (teamwork), 5) sharing things in a positive way, 6) sounding honest, 7) sounding interested in the position, 8) conveying that one behaves professionally, and 9) establishing overall rapport with the interviewer. These role-play scoring domains were the same as those used for feedback in VR-JIT and are consistent with the job relevant interview content and interviewee performance constructs from the literature (Huffcutt 2011) as outlined above.

Participants completed two baseline role-plays and two follow-up role-plays. They selected four of eight employment scenarios and completed a job application to guide each of their role-play sessions. Participants were provided the following instructions prior to each interview: “You are interviewing for part-time work, particularly because you need to have Thursdays off for personal reasons. You will need to negotiate for a schedule that will accommodate for Thursdays off.” Interview role-plays were conducted by standardized role-play actors (SRAs) posing as HR representatives. Eight employment scenarios were developed by the research team and vetted through a panel of vocational rehabilitation experts. Interviews entailed 13 standard questions (e.g., “Why did you leave your last position?” “If you were offered this job, how long do you see yourself working here?”), with additional questions optionally asked, to help make the interview as naturalistic as possible. All role-plays were video-recorded for scoring purposes.

Standardized role-play actors (SRAs) are extensively used to act as standardized patients in the field of medical education (Issenberg et al. 2005; Sommers et al. 2013) and are used to improve clinicians’ clinical and communication skills (Barrows 1993; Cohen et al. 1996). SRAs were recruited from the Clinical Education Center at [Blinded University] to act as HR representatives during the role-plays. They were selected based on their experience as SRAs and having previously worked as human resource interviewers. They were trained to follow the study protocol (i.e., ask 13 required questions in a naturalistic way) and were monitored by the research team for any deviations in performance, which were addressed with feedback and additional training.

Role-play videos were randomly assigned to two raters who were blind to condition. The raters were human resource experts. Raters were trained with 10 practice videos before independently rating the study videos. The raters established reliability with the study data by double scoring 20% of the videos and attained a high degree of reliability (ICC=.94). In order to prevent rater drift, both raters met with the research team every 20 videos to review two videos and discuss inconsistencies and reach a consensus score. A total score was computed across nine domains (range of 1-5 per domain) for each of the two baseline role-plays, and then averaged to compute a single score. A similar method was used to compute a single follow-up role-play score. The role-play interview scoring (including anchors) can be found in Appendix B.

Job interview self-confidence was the second primary outcome. Participants rated their confidence in performing job interviews using a 7-point Likert scale to answer nine questions (e.g., “How comfortable are you going on a job interview?” “How skilled are you at making a good first impression?” and “How skilled are you at maintaining rapport throughout the interview?”). Total baseline and follow-up job interview self-confidence scores were computed. The internal consistencies at baseline (α=0.90) and follow-up (α=0.94) across all subjects were strong.

Process Measure

Participants’ VR-JIT performance scores and time spent engaged with the simulated interviews were also tracked in the lab. The VR-JIT program scored each simulated interview from 0-100 using an algorithm programmed into the software based on the appropriateness of their responses throughout the interview in the eight domains outlined above: negotiation skills (asking for Thursdays off), conveying you are a hard worker (dependable), sounding easy to work with (teamwork), sharing things in a positive way, sounding honest, sounding interested in the position, acting professionally, and establishing overall rapport with the interviewer.

Data Analysis

Continuous data were examined for normality and no transformations were necessary. Examination of the role-play data revealed that although participants were instructed to negotiate for Thursdays off, participants forgot during 25% of the role-plays despite prompting from the SRAs. The mean value of the other role-play scores was used as an imputed value for the missing variable (Myers 2000; Sterne et al. 2009). No other ratings were missing.
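The imputation step can be illustrated with a short Python sketch. It assumes that "the other role-play scores" refers to the remaining eight item ratings within the same role-play, which the text does not state explicitly. The item keys, the function name `impute_missing_item`, and the ratings are hypothetical.

```python
from statistics import mean


def impute_missing_item(item_scores, missing_key="negotiation"):
    """Fill a missing item rating with the mean of the remaining item ratings."""
    filled = dict(item_scores)  # copy so the original ratings are left untouched
    if filled.get(missing_key) is None:
        others = [v for k, v in filled.items() if k != missing_key and v is not None]
        filled[missing_key] = mean(others)
    return filled


# Hypothetical ratings (1-5) for one role-play in which negotiation was not attempted.
role_play = {
    "comfort": 3, "negotiation": None, "hard_worker": 4, "teamwork": 3,
    "positive": 3, "honest": 5, "interested": 2, "professional": 3, "rapport": 3,
}
completed = impute_missing_item(role_play)
print(completed["negotiation"], sum(completed.values()))
```

With these hypothetical ratings the eight observed items sum to 26, so the imputed negotiation rating is 3.25 and the nine-item total is 29.25.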

In addition to analyzing the total score of the role-play assessments, subscores were created for the job-relevant interview content (using item scores from hard worker, easy to work with, sounding professional, and negotiation skills) and interviewee performance (using item scores from sharing things positively, sounding honest, sounding interested, comfort level, and overall rapport) constructs (Huffcutt 2011).
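As a minimal sketch of the item groupings just described, the Python snippet below sums item ratings into the two subscores and the total. The groupings follow the text; the item keys, the function name `subscores`, and the example ratings are hypothetical.

```python
# Item groupings as described in the text (keys are illustrative names).
CONTENT_ITEMS = ("hard_worker", "teamwork", "professional", "negotiation")
PERFORMANCE_ITEMS = ("positive", "honest", "interested", "comfort", "rapport")


def subscores(items):
    """Sum 1-5 item ratings into content, performance, and total scores."""
    content = sum(items[k] for k in CONTENT_ITEMS)          # possible range 4-20
    performance = sum(items[k] for k in PERFORMANCE_ITEMS)  # possible range 5-25
    return {"content": content, "performance": performance,
            "total": content + performance}                 # possible range 9-45


# Hypothetical ratings for a single role-play.
ratings = {
    "hard_worker": 4, "teamwork": 3, "professional": 3, "negotiation": 4,
    "positive": 3, "honest": 5, "interested": 2, "comfort": 3, "rapport": 3,
}
print(subscores(ratings))
```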

T-tests and Chi-Square analyses were used to determine between-group differences related to demographics, vocational history, global neurocognition, social cognition, and ASD-related social deficits. Feasibility of VR-JIT was determined from descriptive statistics that characterized the frequency of attended sessions, the mean number of minutes required to complete the simulated interviews, and mean responses to the training experience questionnaire. Repeated measures analysis of variance (RM-ANOVA) was performed to examine whether there was a group effect and a time-by-group interaction for the primary outcome measures (role-play performance and job interview self-confidence). For significant interactions, Cohen’s d effect sizes were generated to characterize the differences between baseline and follow-up scores.
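The text does not specify which variant of Cohen's d was used. The Python sketch below assumes one common formulation, the mean change divided by the root mean square of the baseline and follow-up standard deviations. Applied to the self-confidence means and SDs in Table 3, it yields values close to the effect sizes reported there, which suggests but does not confirm that a similar formula was used. The function name `cohens_d` is hypothetical.

```python
from math import sqrt


def cohens_d(mean_pre, sd_pre, mean_post, sd_post):
    """Mean change divided by the root mean square of the two SDs (assumed variant)."""
    pooled_sd = sqrt((sd_pre ** 2 + sd_post ** 2) / 2)
    return (mean_post - mean_pre) / pooled_sd


# Job interview self-confidence, means (SD) taken from Table 3.
d_vrjit = cohens_d(41.4, 10.6, 50.6, 8.4)
d_tau = cohens_d(41.0, 9.6, 43.8, 9.1)
print(round(d_vrjit, 2), round(d_tau, 2))
```

Other variants (for example, dividing by the baseline SD only, or by the SD of the change scores) would give somewhat different values.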

VR-JIT total performance scores were evaluated as a process measure. The VR-JIT performance across trials was determined by computing linear regression slopes for each subject based on the regression of their performance scores on the log of trial number. In addition, the group-level performance average was plotted for each successive VR-JIT trial, and the R-squared from the regression of average performance on the log of trial number is reported.
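The learning-curve analysis can be sketched in Python as an ordinary least-squares fit of score on the logarithm of trial number. This is an illustration under stated assumptions: the natural logarithm is used (the base is not given in the text; it rescales the slope but not R-squared), trials are numbered from 1, and the function names and example scores are hypothetical.

```python
from math import log


def fit_log_trial(scores):
    """Least-squares fit of score = intercept + slope * ln(trial)."""
    x = [log(t) for t in range(1, len(scores) + 1)]
    n = len(scores)
    mx, my = sum(x) / n, sum(scores) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, scores))
    syy = sum((yi - my) ** 2 for yi in scores)
    slope = sxy / sxx
    intercept = my - slope * mx
    r_squared = sxy ** 2 / (sxx * syy)  # squared correlation for simple regression
    return slope, intercept, r_squared


def mean_by_trial(score_lists):
    """Average score at each trial across the participants who reached that trial."""
    longest = max(len(s) for s in score_lists)
    averages = []
    for i in range(longest):
        reached = [s[i] for s in score_lists if len(s) > i]
        averages.append(sum(reached) / len(reached))
    return averages


# Hypothetical simulated-interview scores (0-100) for two participants.
participants = [[55, 62, 70, 68, 75, 78], [48, 60, 63, 71, 70, 77, 80, 82]]
slopes = [fit_log_trial(s)[0] for s in participants]   # per-subject slopes
group_fit = fit_log_trial(mean_by_trial(participants))  # group-level curve
print(slopes, group_fit[2])
```

A positive per-subject slope indicates that scores rose across trials, and the group-level R-squared describes how well the averaged curve follows a logarithmic trend.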

Partial correlations explored whether the primary outcome scores at follow-up and VR-JIT performance slopes were associated with age, gender, months since prior employment, global neurocognition, basic and advanced social cognition, and ASD-related symptoms (while co-varying for baseline outcome scores).
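For a single covariate, a partial correlation can be computed from three pairwise Pearson correlations using the standard first-order formula. The Python sketch below shows that calculation with the baseline outcome score as the covariate. It is not the authors' analysis code; the function names and the data are hypothetical.

```python
from math import sqrt


def pearson_r(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


def partial_r(x, y, covariate):
    """First-order partial correlation of x and y, controlling for one covariate."""
    r_xy = pearson_r(x, y)
    r_xz = pearson_r(x, covariate)
    r_yz = pearson_r(y, covariate)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical data for five participants.
follow_up = [30.0, 34.5, 28.0, 36.0, 31.5]  # follow-up role-play totals
predictor = [0.70, 0.85, 0.60, 0.90, 0.75]  # e.g., an emotion recognition accuracy
baseline = [29.0, 31.0, 27.5, 30.0, 31.0]   # baseline role-play totals
print(partial_r(follow_up, predictor, baseline))
```

When the covariate is uncorrelated with both variables, the partial correlation equals the ordinary Pearson correlation, which is a convenient sanity check for the implementation.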

Study data were collected and managed using REDCap electronic data capture tools hosted at [BLINDED INSTITUTION] (Harris et al. 2009). REDCap (Research Electronic Data Capture) is a secure, web-based application designed to support data capture for research studies.

Results

Our between-group analyses revealed that the VR-JIT and control groups did not statistically differ with respect to background and baseline characteristics (Table 1). Thus, covariates were not analyzed in the RM-ANOVAs. The feasibility results are presented in Table 2, and suggest VR-JIT was well-attended, easy to use, enjoyable, helpful, instilled confidence in interviewing, and prepared participants for future interviews.

Table 1. Characteristics of the Study Sample.

| | TAU Group (n=10) | VR-JIT Group (n=16) | χ²/T-Statistic |
|---|---|---|---|
| Demographics | | | |
| Mean age (SD) | 23.2 (3.0) | 24.9 (6.7) | −0.7 |
| Gender (% male) | 80% | 75% | 0.1 |
| Parental education, mean years (SD) | 15.9 (1.3) | 15.0 (2.6) | 0.9 |
| Race | | | |
| % Caucasian | 40% | 50% | |
| % African-American | 30% | 25% | 2.4 |
| % other | 30% | 25% | |
| Vocational History | | | |
| Prior full-time employment (%) | 10% | 12.5% | <0.1 |
| Prior paid employment (any type) (%) | 30% | 62.5% | 2.6 |
| Prior participation in vocational training program | 20% | 43.8% | 1.5 |
| Months since any prior employment, mean (SD) | 26.5 (24.9) | 32.7 (22.2) | −0.5 |
| Social Responsiveness Scale | 65.7 (11.1) | 69.1 (7.9) | −0.8 |
| % Normal (<60)a | 30% | 6.3% | |
| % Mild (60-65) | 40% | 37.5% | 4.2 |
| % Moderate (66-75) | 10% | 37.5% | |
| % Severe (76+) | 20% | 18.8% | |
| Total Score, mean (SD) | 65.7 (11.1) | 68.8 (7.7) | −0.8 |
| Social Awareness, mean (SD) | 62.8 (9.9) | 63.7 (6.2) | −0.3 |
| Social Cognition, mean (SD) | 63.5 (12.0) | 66.5 (9.5) | −0.7 |
| Social Communication, mean (SD) | 64.8 (10.8) | 68.5 (11.5) | −0.8 |
| Social Motivation, mean (SD) | 60.3 (11.8) | 61.3 (9.0) | −0.2 |
| Restricted Interests and Repetitive Behaviors, mean (SD) | 68.8 (9.2) | 70.9 (11.4) | −0.5 |
| Cognitive function | | | |
| Global Neurocognition, mean (SD) | 89.0 (19.2) | 89.8 (21.4) | −0.1 |
| Basic Social Cognition, mean (SD) | .81 (.11) | .72 (.17) | 1.5 |
| Advanced Social Cognition, mean (SD) | .80 (.13) | .76 (.08) | 1.0 |

Note: TAU, treatment-as-usual participants; VR-JIT, intervention participants.

The 4 subjects in the ‘normal’ category provided clinical documentation of an ASD diagnosis.

Table 2. Feasibility Characteristics of VR-JIT Training (SD)

| Measure | Mean (SD) |
|---|---|
| Attendance Measures | |
| % Session Attendance | 91.3 (0.1) |
| Elapsed Simulation Time (min) | 532.5 (92.6) |
| Simulated Interviews (count) | 15.8 (4.8) |
| Training Experience Questionnaire | |
| Ease of use | 5.8 (1.2) |
| Enjoyable | 5.1 (1.6) |
| Helpful | 5.4 (1.6) |
| Instilled confidence | 5.4 (1.7) |
| Prepared for interviews | 5.8 (1.4) |

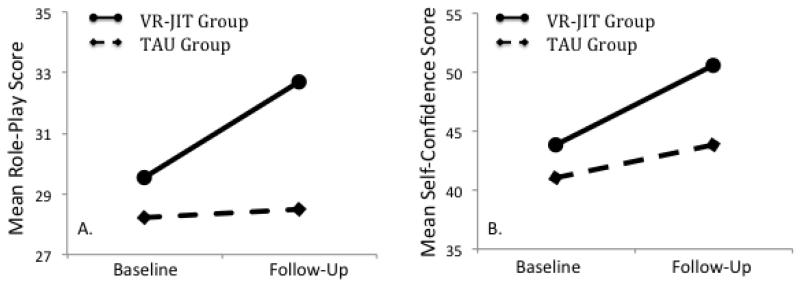

The results of the primary outcome analyses are displayed in Table 3. A significant Group-by-Time interaction was found for the total role-play assessment scores (F(1,24)=4.4, p=0.046) that was characterized by a moderate effect size in the VR-JIT group and no change in the TAU group (d=0.55 and d=0.05, respectively) (Figure 2a). The VR-JIT group demonstrated trend-level improvements in the subdomains of job relevant interview content (F(1,24)=4.0, p=0.056) and interviewee performance (F(1,24)=3.2, p=0.086) that were characterized by moderate effect sizes (d=0.42 and d=0.55, respectively), while the TAU group did not demonstrate improvement (d=0.04 and d=0.06, respectively). VR-JIT subjects improved on the following individual items: hard worker, easy to work with, sharing things positively, sounding interested in the job, and establishing overall rapport, which were characterized by moderate-to-large effect sizes (Cohen’s d range from 0.40 to 0.82). The TAU group did not improve on any individual items (all d<0.15).

Table 3. Primary Outcome Measures.

| Measure | TAU Baseline, mean (SD) | TAU Follow-Up, mean (SD) | TAU Cohen’s d | VR-JIT Baseline, mean (SD) | VR-JIT Follow-Up, mean (SD) | VR-JIT Cohen’s d |
|---|---|---|---|---|---|---|
| Role-Play Performance Total Score | 28.2 (5.0) | 28.5 (6.1) | 0.05 | 29.5 (5.7) | 32.7 (5.7) | 0.55 |
| Job Relevant Interview Content Score | 12.7 (2.4) | 12.8 (2.6) | 0.04 | 13.5 (2.5) | 14.6 (2.7) | 0.42 |
| Hard Worker | 3.3 (0.9) | 3.3 (0.8) | 0.00 | 3.3 (0.8) | 3.8 (0.9) | 0.60 |
| Easy to work with/teamwork | 2.9 (0.6) | 3.0 (0.7) | 0.15 | 3.3 (0.6) | 3.6 (0.7) | 0.40 |
| Sounding Professional | 3.0 (0.9) | 3.1 (1.0) | 0.11 | 3.2 (0.7) | 3.5 (0.9) | 0.35 |
| Negotiation skills | 3.7 (0.7) | 3.5 (0.6) | −0.31 | 3.7 (1.0) | 3.8 (0.8) | 0.11 |
| Interviewee Performance Score | 15.5 (2.8) | 15.7 (3.6) | 0.06 | 16.2 (3.4) | 18.0 (3.3) | 0.55 |
| Sharing things positively | 3.0 (0.6) | 3.0 (0.7) | 0.00 | 3.0 (0.9) | 3.7 (0.9) | 0.82 |
| Sounding honest | 4.5 (0.8) | 4.6 (0.7) | 0.13 | 4.7 (0.4) | 4.8 (0.4) | 0.39 |
| Sounding interested in the job | 2.5 (1.2) | 2.5 (1.2) | 0.00 | 2.4 (1.1) | 2.8 (0.8) | 0.49 |
| Comfort level | 2.7 (0.9) | 2.7 (0.9) | 0.00 | 3.0 (0.8) | 3.3 (0.9) | 0.33 |
| Establishing overall rapport | 2.9 (0.7) | 3.0 (0.8) | 0.13 | 3.0 (0.9) | 3.4 (0.9) | 0.40 |
| Job interview self-confidence rating | 41.0 (9.6) | 43.8 (9.1) | 0.30 | 41.4 (10.6) | 50.6 (8.4) | 0.96 |

Note: TAU, treatment as usual participants; VR-JIT, Virtual Reality Job Interview Training intervention participants.
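The within-group effect sizes in Table 3 can be approximated from the summary statistics alone. The sketch below assumes Cohen's d was computed as the mean change divided by the root mean square of the baseline and follow-up standard deviations. This formula is an assumption, since the text here does not state which one was used, although it reproduces the self-confidence values in the table. The function name `cohens_d` is illustrative.

```python
import math


def cohens_d(baseline_mean, baseline_sd, followup_mean, followup_sd):
    """Within-group effect size from summary statistics.

    Assumed formula: mean change divided by the pooled SD, taken as
    the root mean square of the baseline and follow-up SDs.
    """
    pooled_sd = math.sqrt((baseline_sd ** 2 + followup_sd ** 2) / 2)
    return (followup_mean - baseline_mean) / pooled_sd


# Job interview self-confidence rating, values taken from Table 3.
vr_jit_d = cohens_d(41.4, 10.6, 50.6, 8.4)
tau_d = cohens_d(41.0, 9.6, 43.8, 9.1)
print(f"{vr_jit_d:.2f} {tau_d:.2f}")  # prints 0.96 0.30
```

Because the table reports rounded means and SDs, values recomputed this way can differ from the published d by a few hundredths on other rows.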

Figure 2.

Primary Outcomes. Panel A plots the significant Group-by-Time interaction for baseline and follow-up role-play scores. Panel B plots the trend-level Group-by-Time interaction for baseline and follow-up self-confidence measures.

A trend-level Group-by-Time interaction was found for the total score on the job interview self-confidence measure (F(1,22)=3.9, p=0.060) (Figure 2b). The improvement in the VR-JIT group was characterized by a large effect size (d=0.96), while the improvement in the TAU group was characterized by a small effect size (d=0.30).

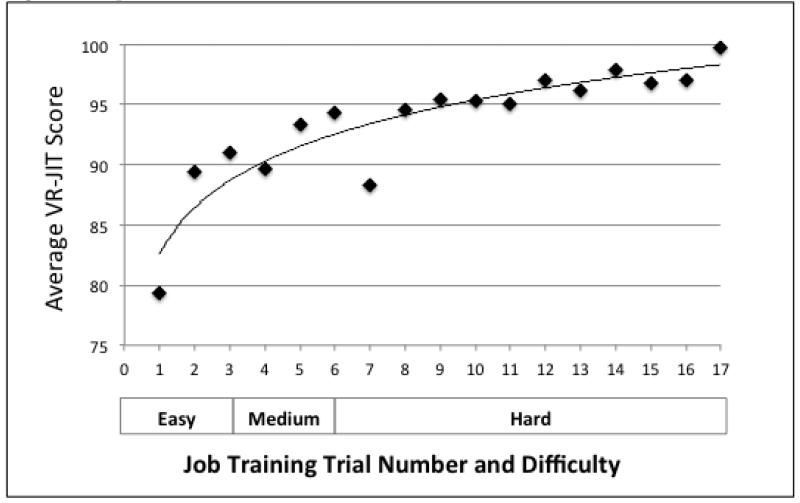

The results of the process measure demonstrated that VR-JIT performance scores were lower on the first trial at each difficulty level (easy, medium, and hard) and then improved as the number of trials progressed within each level (Figure 3). Specifically, the slope (mean=4.7, SD=6.1) suggests that performance improves by 4.7 points for every 1-unit increase in the natural log of the trial number (R-Squared=0.83). There were no significant exploratory correlations between the outcome measures and baseline variables across the entire sample or within the VR-JIT group alone. However, given the small sample size, these correlations may not be stable.

Figure 3.

VR-JIT Learning Curve in Adults with an Autism Spectrum Disorder. This figure plots the average score for each successive VR-JIT simulated interview trial. Trials 1-3 were at easy, trials 4-6 at medium, and trials 7-17 at hard. Scores on the initial trial at each difficulty level (trials 1, 4, and 7) are much lower than on subsequent trials at that level. Overall model fit, R²=0.83.
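The learning-curve model described above regresses simulation score on the natural log of the trial number. A minimal sketch of such a fit follows, using ordinary least squares in plain Python. The function name, the intercept of 60, and the noise-free synthetic scores are assumptions made for illustration; they are not the study data or the authors' analysis code.

```python
import math


def fit_log_curve(trials, scores):
    """Least-squares fit of score = intercept + slope * ln(trial).

    Returns (intercept, slope, r_squared).
    """
    x = [math.log(t) for t in trials]
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(scores) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, scores))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    ss_res = sum((yi - (intercept + slope * xi)) ** 2
                 for xi, yi in zip(x, scores))
    ss_tot = sum((yi - y_bar) ** 2 for yi in scores)
    return intercept, slope, 1 - ss_res / ss_tot


# Synthetic, noise-free scores built with a slope of 4.7 (illustration only).
trials = list(range(1, 18))
scores = [60 + 4.7 * math.log(t) for t in trials]

intercept, slope, r_squared = fit_log_curve(trials, scores)
print(f"slope = {slope:.1f}, R-squared = {r_squared:.2f}")
```

With real data the fit would not be perfect. A slope of 4.7 means the expected score rises by about 4.7 × ln(2) ≈ 3.3 points each time the trial number doubles.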

Discussion

The goal of the study was to evaluate the feasibility and efficacy of VR-JIT in a small randomized single-blinded controlled trial. The feasibility results suggest that participants: 1) were largely compliant with attendance at training sessions (>90%), 2) were engaged with the simulated interviews during these sessions (>500 minutes of training out of a maximum of 600 minutes), and 3) reported that VR-JIT was easy-to-use, enjoyable, helpful, instilled them with confidence, and prepared them for future interviews. The efficacy results suggest that when compared to the TAU group, the VR-JIT group demonstrated: 1) significantly improved job interview skills that were characterized by moderate effect sizes, 2) enhanced job interview self-confidence, and 3) a progressive increase in simulated interview scores across trials and increasing levels of difficulty. Thus, this study provides initial evidence that VR-JIT may be a feasible and efficacious program to enhance practical job interview skills for adults with ASD.

The finding that job interview role-play performance can be improved with virtual reality training is consistent with a recent study demonstrating that job interview skills practiced in a virtual environment and performed in a live role-play were amenable to change (Strickland et al. 2013). In that study, participants 1) completed a pre-training interview with a human resources expert, 2) reviewed multimedia educational content at their discretion for one week, 3) used a human-controlled avatar to practice the interviewing skills on which they had displayed weaknesses during the pre-training interview, and 4) completed a post-training interview with a human resources expert. This approach was associated with significant improvements in the content of responses to job interview questions and a trend towards improving the delivery of these responses (Strickland et al. 2013).

There were some limitations to the current study. First, given the limited scope and resources of this pilot study, there was no access to trained clinicians who could administer the standard ASD diagnostic instruments, the ADI-R (Lord et al. 1994) and ADOS (Lord et al. 1989). Future work assessing the efficacy of VR-JIT will include diagnostic confirmation via these instruments. Next, the intervention was initially developed for use by adults with chronic mental illness (e.g., schizophrenia and bipolar disorder) (BLINDED REFERENCE), and has limited e-learning material and interviewing scripts that focus on autism. However, the findings suggest that the training is still generalizable to adults with ASD as they demonstrated significantly improved job interview skills between the baseline and follow-up role-play sessions as well as increased self-confidence. Although a greater increase in self-confidence in the intervention group was observed, this finding should be interpreted with caution as individuals with ASD have difficulty with self-report and may overestimate their abilities (Knott et al. 2006). Moreover, unexpected interview responses may potentially be more common among individuals with ASD and present possible limitations to the use of VR-JIT, which uses scripted responses. Thus, future development of VR-JIT for use with individuals with ASD will specifically address these issues.

Although the e-learning component of VR-JIT provides information on eye contact, personal appearance, and appropriate behavior and responses, a current limitation of the software is that users do not receive feedback about these issues directly from the program. Unfortunately, use of the e-learning materials was not tracked, so it is unknown whether that use directly benefited the outcome and process measures. Such data would provide a more thorough assessment of how participants use and benefit from the virtual reality training. Some participants did not consistently use the speech recognition option, which provides the opportunity to practice saying responses out loud. The inconsistent use of this option could limit the potential benefit from training. Inclusion criteria required that participants be actively seeking employment, which may have created a self-selection sampling bias, although these are the individuals who are likely to use the software. In the future, a larger sample will be needed to demonstrate the effectiveness of VR-JIT, because the main interaction was significant but the observed changes in the subdomains were only at the trend level. In addition, greater statistical power would be needed to evaluate whether the observed effectiveness of VR-JIT is associated with ASD symptoms or with neurocognitive and social cognitive functioning. Lastly, employment outcome data for the participants in this study have not yet been collected. Future studies will examine whether VR-JIT completion is associated with completing more interviews and obtaining employment.

In conclusion, virtual reality training is an efficacious and highly accessible strategy for improving community-based outcomes among individuals with ASD. There is a major gap in services available to address job interview skills among adults with ASD after they transition out of high school (Taylor et al. 2012). This study presents preliminary evidence that the use of VR-JIT may be a feasible and efficacious tool to improve job interview skills for adults with ASD. This training system uses a virtual reality platform (via computer software or the internet), and as such, is widely accessible to families, support groups, and service providers.

Acknowledgments

Support for this work was provided by a grant to Dr. Dale Olsen (R44 MH080496) from the National Institute of Mental Health with a subcontract to Dr. Michael Fleming at Northwestern University Feinberg School of Medicine. We would like to thank Dr. Zoran Martinovich for his consultation on the statistical analyses. The authors acknowledge research staff at Northwestern University’s Clinical Research Program for data collection and our participants for volunteering their time. The authors have declared that there are no conflicts of interest in relation to the subject of this study.

Virtual Reality Job Interview Training in Adults with Autism Spectrum Disorder

Appendix A

Fidelity Checklist for Facilitator of Virtual Reality Job Interview Training

First Training Session:

- □ Oriented trainee to the training environment
- □ Related the training to the trainee’s personal goals for job seeking
- Explain the following:
  - □ User PIN number
  - □ Navigation buttons
  - □ E-learning material
  - □ Application (filling out, saving, and submitting)
  - □ Disability page
  - □ Simulated interviews
  - □ Scenario
  - □ Instructions (video and written)
  - □ Coach (click light bulb for verbal feedback)
  - □ Voice recognition
  - □ Responses highlighted in yellow vs. white
- Explain and demonstrate how to:
  - □ Launch interviews
    - ○ New application, old application, or no application
    - ○ With or without disabilities
    - ○ Return to e-learning
  - □ Saving simulated interview session
  - □ Ending simulated interview session (Do NOT use “red X”)
  - □ Return to help video
  - □ Change response topics
  - □ Review and interpret transcript
  - □ Review summary feedback

First and Subsequent Training Sessions

- □ Set the correct level for Molly
- □ Monitored trainee’s motivation and attention
- □ Asked trainee to use E-learning materials
- □ Asked trainee to review the transcript
- □ Available to answer any questions or concerns

Appendix B

Role-Play Interview Scoring

References

- Aldridge FJ, Gibbs VM, Schmidhofer K, Williams M. Investigating the clinical usefulness of the Social Responsiveness Scale (SRS) in a tertiary level, autism spectrum disorder specific assessment clinic. Journal of autism and developmental disorders. 2012;42:294–300. doi: 10.1007/s10803-011-1242-9.

- APA. Diagnostic and Statistical Manual of Mental Disorders. Fifth Edition edn. American Psychiatric Publishing; Arlington, VA: 2013.

- Barrows HS. An overview of the uses of standardized patients for teaching and evaluating clinical skills. AAMC. Academic medicine: journal of the Association of American Medical Colleges. 1993;68:443–51. doi: 10.1097/00001888-199306000-00002. discussion 451-3.

- Bell M, Bryson G, Lysaker P. Positive and negative affect recognition in schizophrenia: a comparison with substance abuse and normal control subjects. Psychiatry research. 1997;73:73–82. doi: 10.1016/s0165-1781(97)00111-x.

- Cohen DS, Colliver JA, Marcy MS, Fried ED, Swartz MH. Psychometric properties of a standardized-patient checklist and rating-scale form used to assess interpersonal and communication skills. Academic medicine: journal of the Association of American Medical Colleges. 1996;71:S87–9. doi: 10.1097/00001888-199601000-00052.

- Constantino JN, Gruber CP. Social Responsiveness Scale, Second Edition (SRS-2) Second edn. Western Psychological Services; Los Angeles, CA: 2012.

- Fleming M, et al. Virtual reality skills training for health care professionals in alcohol screening and brief intervention. Journal of the American Board of Family Medicine: JABFM. 2009;22:387–98. doi: 10.3122/jabfm.2009.04.080208.

- Gurney JG, Fritz MS, Ness KK, Sievers P, Newschaffer CJ, Shapiro EG. Analysis of prevalence trends of autism spectrum disorder in Minnesota. Archives of pediatrics & adolescent medicine. 2003;157:622–7. doi: 10.1001/archpedi.157.7.622.

- Hall NC, Gradt-Jackson SE, Goetz T, Musu-Gillette LE. Attributional retraining, self-esteem, and the job interview: benefits and risks for college student employment. Journal of Experimental Education. 2011;79:318–339.

- Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap) - A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–81. doi: 10.1016/j.jbi.2008.08.010.

- Hendricks D. Employment and adults with autism spectrum disorders: challenges and strategies for success. J Vocat Rehabil. 2010;32:125–134.

- Higgins KK, Koch LC, Boughfman EM, Vierstra C. School-to-work transition and Asperger Syndrome. Work. 2008;31:291–8.

- Huffcutt AI. An empirical review of the employment interview construct literature. International Journal of Selection and Assessment. 2011;19:62–81.

- Issenberg SB, McGaghie WC, Petrusa ER, Lee Gordon D, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Medical teacher. 2005;27:10–28. doi: 10.1080/01421590500046924.

- Kandalaft MR, Didehbani N, Krawczyk DC, Allen TT, Chapman SB. Virtual reality social cognition training for young adults with high-functioning autism. Journal of autism and developmental disorders. 2013;43:34–44. doi: 10.1007/s10803-012-1544-6.

- Knott F, Dunlop AW, Mackay T. Living with ASD: how do children and their parents assess their difficulties with social interaction and understanding? Autism: the international journal of research and practice. 2006;10:609–17. doi: 10.1177/1362361306068510.

- Lord C, et al. Autism diagnostic observation schedule: a standardized observation of communicative and social behavior. Journal of autism and developmental disorders. 1989;19:185–212. doi: 10.1007/BF02211841.

- Lord C, Rutter M, Le Couteur A. Autism Diagnostic Interview-Revised: a revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. Journal of autism and developmental disorders. 1994;24:659–85. doi: 10.1007/BF02172145.

- Myers WR. Handling missing data in clinical trials: an overview. Drug Information Journal. 2000;34:525–533.

- Olsen DE, Sellers WA, Phillips RG. Office of National Drug Control Policy. Washington, D.C.: 1999. The simulation of a human subject for law enforcement training.

- Pinkham AE, Penn DL, Green MF, Buck B, Healey K, Harvey PD. The Social Cognition Psychometric Evaluation Study: Results of the Expert Survey and RAND Panel. Schizophrenia bulletin. 2013. doi: 10.1093/schbul/sbt081.

- Randolph C, Tierney MC, Mohr E, Chase TN. The Repeatable Battery for the Assessment of Neuropsychological Status (RBANS): preliminary clinical validity. Journal of clinical and experimental neuropsychology. 1998;20:310–9. doi: 10.1076/jcen.20.3.310.823.

- Shattuck PT, Narendorf SC, Cooper B, Sterzing PR, Wagner M, Taylor JL. Postsecondary education and employment among youth with an autism spectrum disorder. Pediatrics. 2012;129:1042–9. doi: 10.1542/peds.2011-2864.

- Sheehan DV, et al. The Mini-International Neuropsychiatric Interview (M.I.N.I.): the development and validation of a structured diagnostic psychiatric interview for DSM-IV and ICD-10. The Journal of clinical psychiatry. 1998;59(Suppl 20):22–33. quiz 34-57.

- Smith MJ, et al. Performance-Based Empathy Mediates the Influence of Working Memory on Social Competence in Schizophrenia. Schizophrenia bulletin. 2013. doi: 10.1093/schbul/sbt084.

- Sommers MS, et al. Emergency Department-Based Brief Intervention to Reduce Risky Driving and Hazardous/Harmful Drinking in Young Adults: A Randomized Controlled Trial. Alcoholism, clinical and experimental research. 2013. doi: 10.1111/acer.12142.

- Sterne JA, et al. Multiple imputation for missing data in epidemiological and clinical research: potential and pitfalls. BMJ. 2009;338:b2393. doi: 10.1136/bmj.b2393.

- Stichter JP, Laffey J, Galyen K, Herzog M. iSocial: Delivering the Social Competence Intervention for Adolescents (SCI-A) in a 3D Virtual Learning Environment for Youth with High Functioning Autism. Journal of autism and developmental disorders. 2013. doi: 10.1007/s10803-013-1881-0.

- Strickland DC, Coles CD, Southern LB. JobTIPS: A Transition to Employment Program for Individuals with Autism Spectrum Disorders. Journal of autism and developmental disorders. 2013. doi: 10.1007/s10803-013-1800-4.

- Tay C, Ang S, Van Dyne L. Personality, biographical characteristics, and job interview success: A longitudinal study of the mediating effects of interviewing self-efficacy and the moderating effects of internal locus of causality. Journal of Applied Psychology. 2006;91:446–454. doi: 10.1037/0021-9010.91.2.446.

- Taylor JL, McPheeters ML, Sathe NA, Dove D, Veenstra-Vanderweele J, Warren Z. A systematic review of vocational interventions for young adults with autism spectrum disorders. Pediatrics. 2012;130:531–8. doi: 10.1542/peds.2012-0682.

- Taylor JL, Seltzer MM. Employment and post-secondary educational activities for young adults with autism spectrum disorders during the transition to adulthood. Journal of autism and developmental disorders. 2011;41:566–74. doi: 10.1007/s10803-010-1070-3.

- Trepagnier CY, Olsen DE, Boteler L, Bell CA. Virtual conversation partner for adults with autism. Cyberpsychology, behavior and social networking. 2011;14:21–7. doi: 10.1089/cyber.2009.0255.

- Wehman PH, et al. Competitive Employment for Youth with Autism Spectrum Disorders: Early Results from a Randomized Clinical Trial. Journal of autism and developmental disorders. 2013. doi: 10.1007/s10803-013-1892-x.

- Wilkinson GS, Robertson GJ. Wide Range Achievement Test 4 Professional Manual. Psychological Assessment Resources; Lutz, FL: 2006.

- Wing L, Gould J. Severe impairments of social interaction and associated abnormalities in children: epidemiology and classification. Journal of autism and developmental disorders. 1979;9:11–29. doi: 10.1007/BF01531288.