Abstract

The topic of compression has been discussed quite extensively in the last 20 years (eg, Braida et al., 1982; Dillon, 1996, 2000; Dreschler, 1992; Hickson, 1994; Kuk, 2000 and 2002; Kuk and Ludvigsen, 1999; Moore, 1990; Van Tasell, 1993; Venema, 2000; Verschuure et al., 1996; Walker and Dillon, 1982). However, the latest comprehensive update by this journal was published in 1996 (Kuk, 1996). Since that time, use of compression hearing aids has increased dramatically, from half of hearing aids dispensed only 5 years ago to four out of five hearing aids dispensed today (Strom, 2002b). Most of today's digital and digitally programmable hearing aids are compression devices (Strom, 2002a). It is probable that within a few years, very few patients will be fit with linear hearing aids. Furthermore, compression has increased in complexity, with greater numbers of parameters under the clinician's control. Ideally, these changes will translate to greater flexibility and precision in fitting and selection. However, they also increase the need for information about the effects of compression amplification on speech perception and speech quality. As evidenced by the large number of sessions at professional conferences on fitting compression hearing aids, clinicians continue to have questions about compression technology and when and how it should be used. How does compression work? Who are the best candidates for this technology? How should adjustable parameters be set to provide optimal speech recognition? What effect will compression have on speech quality? These and other questions continue to drive our interest in this technology. This article reviews the effects of compression on the speech signal and the implications for speech intelligibility, quality, and design of clinical procedures.

Categorizing Compression

With a linear hearing aid, a constant gain is applied to all input levels until the hearing aid's saturation limit is reached. Because daily speech includes such a wide range of intensity levels, from low-intensity consonants such as /f/ to high-intensity vowels such as /i/, and from whispered speech to shouting, the benefit of a linear hearing aid is restricted when the amplification needed to make low-intensity sounds audible amplifies high-intensity sounds to the point of discomfort. In other words, linear hearing aids have a limited capacity to maximize audibility across a range of input intensities. The smaller the dynamic range (ie, the difference between hearing threshold and loudness discomfort level) of the listener, the more difficult it is to make speech (and other daily sounds) audible in a variety of situations.

To solve this problem, most hearing aids now offer some form of compression, in which gain is automatically adjusted based on the intensity of the input signal. The higher the input intensity, the more gain is reduced. This seems like a reasonable strategy. High-intensity signals (such as shouted speech) require less gain to be heard by the listener than low-intensity signals (such as whispered speech). We might expect patients wearing compression hearing aids to perform better than those wearing linear peak clipping aids in listening conditions that include a wide range of speech levels. However, the benefits of compression are not clear-cut. We begin by describing the characteristics of compression hearing aids.

Compression hearing aids are generally described according to a set of fixed or adjustable compression parameters. The compression threshold or kneepoint is the lowest level at which gain reduction occurs. Linear gain is usually applied below this level. Alternatively, some digital hearing aids use expansion rather than linear gain below the compression threshold. With expansion, the lower the input level, the less gain is applied. The intent is to reduce amplification of microphone noise or low-level ambient noise (eg, Kuk, 2001).

For example, a hearing aid with a compression threshold of 80 dB SPL could apply constant (linear) gain below the compression threshold and reduce its gain automatically for signals exceeding 80 dB SPL. In contrast, a hearing aid with a compression threshold of 40 dB SPL would have variable gain over nearly the entire intensity range of speech. For the purposes of this article, compression threshold is described as low (50 dB SPL or less), moderate (approximately 55–70 dB SPL) and high (75 dB SPL or greater). Hearing aids with low compression thresholds are referred to as wide-dynamic range compression (WDRC) (eg, Dillon, 1996 and 2000; Kuk, 2000) or full-dynamic range compression (FDRC) aids (eg, Kuk, 2000; Verschuure, 1997). Hearing aids with high compression thresholds are referred to as compression limiting aids (Walker and Dillon, 1982).

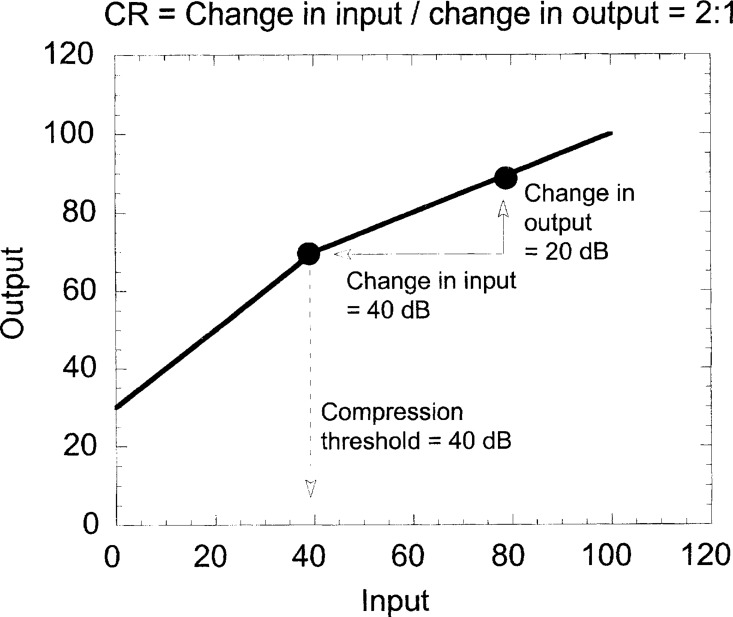

The compression ratio determines the magnitude of gain reduction. It is the ratio of the increase in input level to the resulting increase in output level. For example, a compression ratio of 2:1 means that for every 2 dB increase in the input signal, the output signal increases by 1 dB. Figure 1 shows an example of an input-output function for a compression hearing aid. Linear gain of 30 dB is applied below the compression threshold of 40 dB SPL. Above this input level, a compression ratio of 2:1 is applied.

Figure 1.

An example of input-output function, compression threshold, and compression ratio.
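The input-output rule in Figure 1 can be written as a small function. This is a minimal Python sketch assuming an idealized static curve with a sharp kneepoint (commercial circuits often round the knee); the function name `output_level_db` and its defaults are illustrative choices matched to the Figure 1 values (30 dB linear gain, 40 dB SPL compression threshold, 2:1 ratio).

```python
def output_level_db(input_db, gain_db=30.0, threshold_db=40.0, ratio=2.0):
    """Static output level (dB SPL) of an idealized single-kneepoint compressor.

    Below the compression threshold the aid is linear: output = input + gain.
    Above it, each `ratio` dB of input increase yields 1 dB of output increase.
    """
    if input_db <= threshold_db:
        return input_db + gain_db
    return threshold_db + gain_db + (input_db - threshold_db) / ratio


if __name__ == "__main__":
    for level in (30, 40, 60, 80):
        out = output_level_db(level)
        print(f"input {level} dB SPL -> output {out:.1f} dB SPL (gain {out - level:.1f} dB)")
```

With these values, raising the input from 40 to 80 dB SPL raises the output by only 20 dB (from 70 to 90 dB SPL), so gain falls from 30 dB to 10 dB.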

Compression ratios for WDRC aids are typically low (<5:1), while compression ratios for compression limiting aids are usually high (>8:1) (Walker and Dillon, 1982). Often, both features are combined in the same aid, with a low compression ratio for low-to-moderate level signals and a high compression ratio to limit saturation as the output level approaches the listener's discomfort threshold.

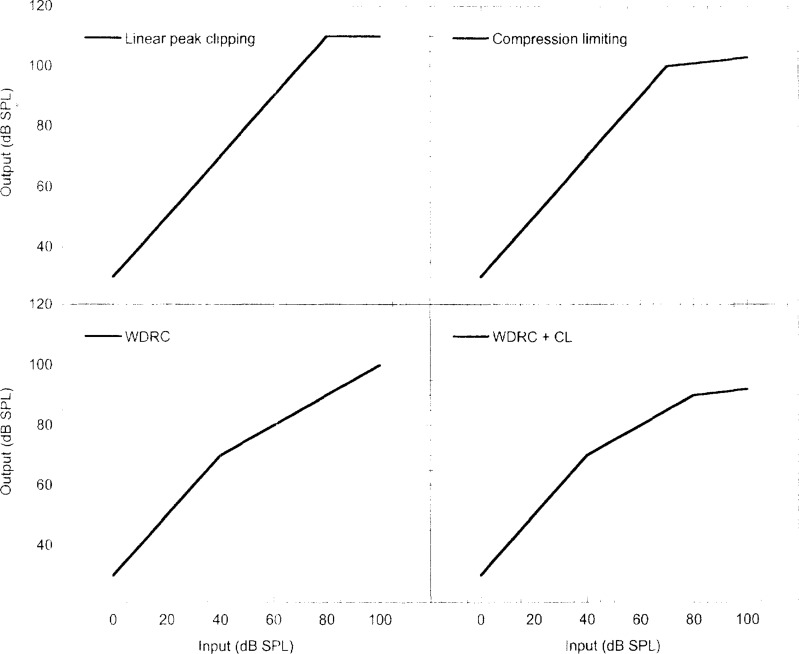

Figure 2 shows examples of input-output functions of four different circuit configurations. Figure 3 shows the gain plotted as a function of input level for the same four circuits.

Figure 2.

An example of four input-output functions. The upper left panel is a linear peak clipping hearing aid; the upper right panel shows a linear compression limiting aid. The lower left panel shows a wide-dynamic range compression (WDRC) hearing aid. The lower right panel shows a WDRC hearing aid using output limiting function.

Figure 3.

An example of four input-gain functions. The upper left panel is a linear peak clipping hearing aid with a constant gain of 30 dB and an output limit of 110 dB SPL. The upper right panel shows a linear compression limiting aid with a constant gain of 30 dB for input signals lower than 80 dB SPL, and a compression ratio of 10:1 for input levels greater than 80 dB SPL. The lower left panel shows a wide-dynamic range compression (WDRC) hearing aid with a 40 dB SPL compression threshold and a compression ratio of 2:1. The lower right panel shows a WDRC hearing aid using output limiting function. It operates linearly (with 30 dB of gain) for inputs below 40 dB SPL. There are two compression thresholds, one at 40 dB SPL and the other at 80 dB SPL. For input levels between 40 and 80 dB SPL, a 2:1 compression ratio is applied. For inputs above 80 dB SPL, the hearing aid acts as a compression limiter with a compression ratio of 10:1.
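The four input-gain functions in Figure 3 differ only in where their kneepoints sit and what ratio applies above each one, so a single piecewise function can describe all of them. The sketch below assumes idealized, sharp kneepoints; `piecewise_output_db` and the `CIRCUITS` table are hypothetical names, and the peak clipping case is modeled only as a ceiling on output level (the waveform distortion that clipping creates is not represented).

```python
def piecewise_output_db(input_db, gain_db, kneepoints=(), clip_db=None):
    """Output level (dB SPL) for an idealized piecewise-linear input-output curve.

    kneepoints: (threshold_db, ratio) pairs in ascending threshold order; above
    each threshold the input-output slope becomes 1/ratio.
    clip_db: optional hard output ceiling, standing in for peak clipping.
    """
    if kneepoints:
        # Linear segment up to the first kneepoint.
        out = min(input_db, kneepoints[0][0]) + gain_db
    else:
        out = input_db + gain_db
    uppers = [t for t, _ in kneepoints[1:]] + [float("inf")]
    for (threshold, ratio), upper in zip(kneepoints, uppers):
        if input_db > threshold:
            # Add the compressed contribution of the input that falls in this segment.
            out += (min(input_db, upper) - threshold) / ratio
    if clip_db is not None:
        out = min(out, clip_db)
    return out


# Hypothetical encodings of the four Figure 3 circuits.
CIRCUITS = {
    "linear peak clipping": dict(gain_db=30.0, clip_db=110.0),
    "compression limiting": dict(gain_db=30.0, kneepoints=((80.0, 10.0),)),
    "WDRC": dict(gain_db=30.0, kneepoints=((40.0, 2.0),)),
    "WDRC + output limiting": dict(gain_db=30.0, kneepoints=((40.0, 2.0), (80.0, 10.0))),
}

if __name__ == "__main__":
    for name, params in CIRCUITS.items():
        gains = [piecewise_output_db(x, **params) - x for x in range(30, 91, 10)]
        print(f"{name:24s}", " ".join(f"{g:5.1f}" for g in gains))
```

At a 90 dB SPL input, for example, the four configurations supply 20, 21, 5, and 1 dB of gain, respectively.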

An important parameter of a compression hearing aid is the speed with which it adjusts its gain to changes in input levels. Attack time refers to the time it takes the hearing aid to stabilize to the reduced gain state following an abrupt increase in input level. For measurement purposes, the attack time is defined as the time it takes the output to drop to within 3 dB of the steady-state level after a 2000 Hz sinusoidal input changes from 55 dB SPL to 90 dB SPL (ANSI, 1996). Because compression should respond quickly to reduce gain in the presence of high-level sounds that might otherwise exceed the listener's discomfort threshold, attack times are usually short. An informal review of commonly prescribed WDRC hearing aids shows that most have attack times of less than 5 milliseconds (Buyer's Guide, 2001).

Release time refers to the time it takes the hearing aid to recover to linear gain following an abrupt decrease in input level. For measurement purposes, the release time is defined as the time it takes the 2000 Hz sinusoidal output to stabilize to within 4 dB of the steady-state level after input changes from 90 dB SPL to 55 dB SPL (ANSI, 1996). Clinicians can choose among hearing aids with release times ranging from a few milliseconds to several seconds.
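The ANSI definitions can be illustrated with a simple level-domain simulation of the 55 to 90 to 55 dB SPL step. This is a sketch under stated assumptions, not the ANSI procedure itself: the 2000 Hz tone is represented only by its level, the level detector is modeled as a one-pole smoother in dB with separate attack and release time constants, the static curve reuses the Figure 1 values, and "stabilize" is simplified to the first sample inside the ±3 dB or ±4 dB window. All function names are hypothetical.

```python
import math


def static_output_db(level_db, gain_db=30.0, threshold_db=40.0, ratio=2.0):
    """Idealized static input-output curve (linear below threshold, ratio:1 above)."""
    if level_db <= threshold_db:
        return level_db + gain_db
    return threshold_db + gain_db + (level_db - threshold_db) / ratio


def simulate_output_db(input_db, fs, attack_tau_ms, release_tau_ms):
    """Output level per sample for an input level track (dB SPL).

    The level detector is a one-pole smoother operating on the dB level,
    using the attack time constant when the input rises above the detected
    level and the release time constant when it falls below.
    """
    a_att = math.exp(-1000.0 / (attack_tau_ms * fs))
    a_rel = math.exp(-1000.0 / (release_tau_ms * fs))
    detected = input_db[0]
    output = []
    for x in input_db:
        coeff = a_att if x > detected else a_rel
        detected = coeff * detected + (1.0 - coeff) * x
        gain = static_output_db(detected) - detected  # gain chosen from the detector
        output.append(x + gain)                       # applied to the current input
    return output


def settling_time_ms(output_db, start, steady_db, tolerance_db, fs):
    """Time after `start` until the output first comes within tolerance of steady state."""
    for i in range(start, len(output_db)):
        if abs(output_db[i] - steady_db) <= tolerance_db:
            return (i - start) * 1000.0 / fs
    return None


if __name__ == "__main__":
    fs = 10_000  # level samples per second (0.1 ms resolution)
    # Step test: 55 dB SPL -> 90 dB SPL (at 100 ms) -> 55 dB SPL (at 600 ms).
    levels = [55.0] * 1000 + [90.0] * 5000 + [55.0] * 10000
    out = simulate_output_db(levels, fs, attack_tau_ms=2.0, release_tau_ms=50.0)
    attack = settling_time_ms(out, 1000, static_output_db(90.0), 3.0, fs)
    release = settling_time_ms(out, 6000, static_output_db(55.0), 4.0, fs)
    print(f"attack time ~{attack:.1f} ms, release time ~{release:.1f} ms")
```

With detector time constants of 2 and 50 milliseconds, the measured attack and release times come out near 3.5 and 73.7 milliseconds. The specified attack and release times therefore describe the aid's response to a particular test signal and need not equal the underlying circuit time constants.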

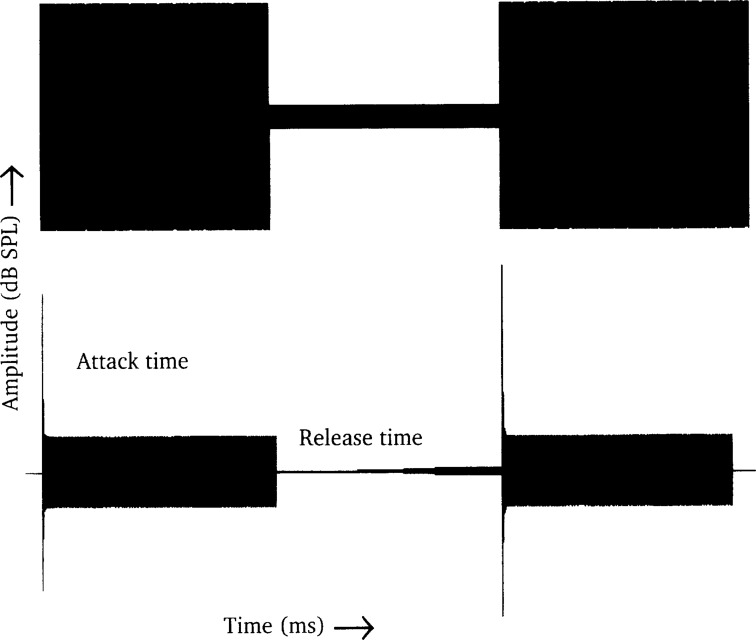

Attack and release time are illustrated in Figure 4. Compression amplifiers are traditionally classified based on their time constants as slow-acting (release times greater than 200 milliseconds) or fast-acting (release times less than 200 milliseconds) (Dreschler, 1992; Walker and Dillon, 1982). This nomenclature has become somewhat blurred in current use, with some aids referred to as fast-acting even with release times greater than 200 milliseconds. Fast-acting compression systems can serve two distinct purposes. In conjunction with a high compression threshold, they act as an output limiter, controlling maximum output while preventing saturation distortion. This is referred to as compression limiting. In conjunction with a low compression threshold, they act on syllable-length segments of speech and are referred to as syllabic compressors because they reduce the level differences between syllables or phonemes (Braida et al., 1982). Although technically any compressor with a release time shorter than a syllable (about 200 milliseconds) can be termed a syllabic compressor, in practice syllabic compression uses release times of 150 milliseconds or less (Hickson, 1994).

Figure 4.

The attack and release time for a 2 kHz test signal. The top panel shows a 2 kHz input signal that abruptly decreases from an initial level of 90 dB SPL to 55 dB SPL and then increases again to 90 dB SPL. The lower panel shows the response of a compression hearing aid with an attack time of 3 milliseconds and a release time of 50 milliseconds to the same signal.

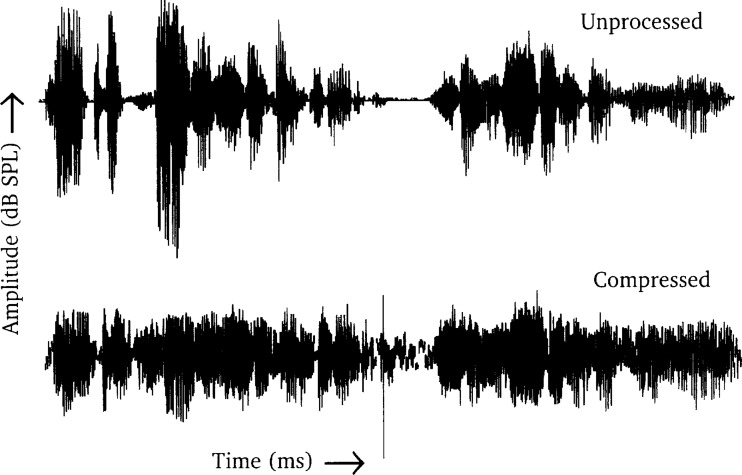

Figure 5 shows an example of speech processed with syllabic compression. The upper panel shows the unprocessed sentences “Joe took father's shoe bench out. She was seated at my lawn.” In the unprocessed version, there is a marked contrast between low-intensity (typically consonants) and high-intensity (typically vowels) inputs. The lower panel shows the same sentences processed by a syllabic compression circuit with a compression threshold of 45 dB SPL, an attack time of 3 milliseconds, and a release time of 50 milliseconds. The most obvious effect is the reduced amplitude variation: high-intensity phonemes are reduced in level relative to low-intensity phonemes, resulting in an overall smoothing of amplitude variations. This is also known as amplitude smearing.

Figure 5.

A comparison of unprocessed sentences (top panel) to wide-dynamic range compression-amplified speech (lower panel). See text for details.
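The amplitude smearing in Figure 5 can be reproduced in miniature by compressing a synthetic level track that alternates between "consonant" and "vowel" segments. The sketch assumes hypothetical segment levels (50 and 70 dB SPL), hypothetical durations (60 and 150 milliseconds), and a hypothetical 30 dB of gain; it treats the 3 ms and 50 ms values from Figure 5 as detector time constants, compares them with a slow 1000 ms release, and measures the mean vowel-minus-consonant level difference, averaged in dB. Function names are illustrative.

```python
import math


def static_output_db(level_db, gain_db=30.0, threshold_db=45.0, ratio=2.0):
    """Idealized static curve: linear below threshold, ratio:1 above (hypothetical gain)."""
    if level_db <= threshold_db:
        return level_db + gain_db
    return threshold_db + gain_db + (level_db - threshold_db) / ratio


def compress_levels(input_db, fs, attack_tau_ms, release_tau_ms):
    """Apply gain chosen by a one-pole dB-domain level detector to a level track."""
    a_att = math.exp(-1000.0 / (attack_tau_ms * fs))
    a_rel = math.exp(-1000.0 / (release_tau_ms * fs))
    detected = input_db[0]
    out = []
    for x in input_db:
        coeff = a_att if x > detected else a_rel
        detected = coeff * detected + (1.0 - coeff) * x
        out.append(x + static_output_db(detected) - detected)
    return out


def make_cv_track(cycles, fs, c_ms=60, v_ms=150, c_db=50.0, v_db=70.0):
    """Hypothetical alternating consonant (C) / vowel (V) level track and labels."""
    n_c, n_v = c_ms * fs // 1000, v_ms * fs // 1000
    levels, labels = [], []
    for _ in range(cycles):
        levels += [c_db] * n_c + [v_db] * n_v
        labels += ["C"] * n_c + ["V"] * n_v
    return levels, labels


def mean_contrast_db(levels, labels):
    """Mean vowel level minus mean consonant level (averaged in dB)."""
    v = [lv for lv, lab in zip(levels, labels) if lab == "V"]
    c = [lv for lv, lab in zip(levels, labels) if lab == "C"]
    return sum(v) / len(v) - sum(c) / len(c)


if __name__ == "__main__":
    fs = 1000  # one level sample per millisecond
    levels, labels = make_cv_track(40, fs)
    skip = len(levels) // 2  # analyse the second half, after start-up transients
    print(f"unprocessed: {mean_contrast_db(levels[skip:], labels[skip:]):.1f} dB")
    for release in (50.0, 1000.0):
        out = compress_levels(levels, fs, attack_tau_ms=3.0, release_tau_ms=release)
        print(f"release {release:.0f} ms: {mean_contrast_db(out[skip:], labels[skip:]):.1f} dB")
```

With the 50 ms release, the 20 dB input contrast shrinks to roughly 16 dB, whereas the slow release leaves it close to 20 dB: a detector with fast attack and slow release stays near the vowel level and applies nearly the same gain to both segment types.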

Slow-acting compression uses a long release time. The intent is to maintain a relatively constant output level, thus avoiding the need for frequent adjustments of the volume control. One potential problem with a long release time is that if the hearing aid has just responded to a high-intensity sound by decreasing gain, it may not be able to provide sufficient gain for a low-intensity sound that occurs while the aid is still at reduced gain. A release time that is very short can compensate quickly for changes in input levels but often causes an unpleasant “pumping” sensation as the aid cycles rapidly in and out of compression. As a compromise, some hearing aids have used an adaptive release time in which the release time depends on the duration of the activating signals. For brief, intense sounds (such as a door slamming), the release time is short, allowing the hearing aid to increase gain quickly to amplify successive low-intensity speech sounds. For longer intense sounds (such as a raised voice), the release time is long, allowing the hearing aid to maintain a comfortable output level. In these types of hearing aids, the typical release time is about 200 milliseconds for most daily situations.
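The adaptive release behavior described above can be sketched by making the release time constant depend on how long the most recent intense sound lasted. Everything here is hypothetical and chosen only for illustration: the 80 dB SPL level that counts as "intense", the 100 ms boundary between brief and sustained sounds, the 50 ms and 1000 ms release time constants, and the function names. Commercial hearing aids may implement adaptive release quite differently, so this is not any particular manufacturer's scheme.

```python
import math


def static_gain_db(level_db, gain_db=30.0, threshold_db=40.0, ratio=2.0):
    """Gain (dB) of an idealized static curve: linear below threshold, ratio:1 above."""
    if level_db <= threshold_db:
        return gain_db
    return gain_db - (level_db - threshold_db) * (1.0 - 1.0 / ratio)


def adaptive_gain_track(input_db, fs, attack_tau_ms=2.0, fast_release_ms=50.0,
                        slow_release_ms=1000.0, loud_db=80.0, brief_ms=100.0):
    """Gain per sample with a hypothetical duration-dependent release time."""
    a_att = math.exp(-1000.0 / (attack_tau_ms * fs))
    detected = input_db[0]
    loud_run = 0    # samples the current intense event has lasted so far
    last_event = 0  # duration (samples) of the most recent intense event
    gains = []
    for x in input_db:
        if x >= loud_db:
            loud_run += 1
            last_event = loud_run
        else:
            loud_run = 0
        if x > detected:
            coeff = a_att
        else:
            # Brief events get a short release, sustained ones a long release.
            brief = last_event * 1000.0 / fs < brief_ms
            tau = fast_release_ms if brief else slow_release_ms
            coeff = math.exp(-1000.0 / (tau * fs))
        detected = coeff * detected + (1.0 - coeff) * x
        gains.append(static_gain_db(detected))
    return gains


def recovery_ms(gains, start, target_db, tolerance_db, fs):
    """Time after `start` until gain first returns within tolerance of target."""
    for i in range(start, len(gains)):
        if abs(gains[i] - target_db) <= tolerance_db:
            return (i - start) * 1000.0 / fs
    return None


if __name__ == "__main__":
    fs = 1000
    quiet = [55.0] * 200
    door = quiet + [95.0] * 20 + [55.0] * 5000     # 20 ms door slam
    voice = quiet + [85.0] * 1000 + [55.0] * 5000  # 1 s raised voice
    target = static_gain_db(55.0)
    print("door slam:", recovery_ms(adaptive_gain_track(door, fs), 220, target, 1.0, fs), "ms")
    print("raised voice:", recovery_ms(adaptive_gain_track(voice, fs), 1200, target, 1.0, fs), "ms")
```

With these settings, gain returns to within 1 dB of its quiet-room value about 0.15 s after the door slam but only after about 2.7 s following the raised voice.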

When we consider the effect of compression hearing aids for our patients, it is important to keep in mind that the listed compression characteristics are measured using a steady-state signal (ANSI, 1996). Such measurements do not adequately describe the effects of compression on complex waveforms such as speech (Stelmachowicz et al., 1995). In particular, the effective compression ratio for speech will be lower than the compression ratio noted in the hearing aid specifications (Stone and Moore, 1992). This happens when the modulation period of the input signal does not exceed the attack and/or release times of the compression hearing aid. The circuit reaches maximum compression only at the end of the attack time, and it remains at maximum compression only as long as the signal does not drop below the compression threshold. Because the level of the speech signal fluctuates from moment to moment, maximum compression is seldom achieved with everyday inputs. The higher the specified compression ratio and the longer the attack and release times, the greater the discrepancy between specified and actual (or effective) compression ratio. For example, a compression aid with a specified compression ratio of 5:1 will provide closer to 3.5:1 for actual speech, depending on the time constants used (Stelmachowicz et al., 1994).
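The gap between specified and effective compression ratio can be demonstrated by driving a nominal 5:1 compressor with a level that swings sinusoidally by ±15 dB around 65 dB SPL at 4 Hz, a rough stand-in for syllable-rate fluctuations. The sketch assumes a level-domain one-pole detector, a 5 ms attack time constant, a 40 dB SPL compression threshold, and 30 dB of gain, and it defines effective ratio as input level range divided by output level range; all of these, and the function names, are illustrative assumptions.

```python
import math


def static_gain_db(level_db, gain_db=30.0, threshold_db=40.0, ratio=5.0):
    """Gain of an idealized static curve with a nominal ratio:1 above threshold."""
    if level_db <= threshold_db:
        return gain_db
    return gain_db - (level_db - threshold_db) * (1.0 - 1.0 / ratio)


def effective_ratio(mod_hz, release_tau_ms, attack_tau_ms=5.0, fs=1000,
                    mean_db=65.0, depth_db=15.0, seconds=4.0):
    """Input level range divided by output level range for a level-modulated input."""
    a_att = math.exp(-1000.0 / (attack_tau_ms * fs))
    a_rel = math.exp(-1000.0 / (release_tau_ms * fs))
    n = int(seconds * fs)
    levels = [mean_db + depth_db * math.sin(2.0 * math.pi * mod_hz * i / fs)
              for i in range(n)]
    detected = levels[0]
    outputs = []
    for x in levels:
        # One-pole level detector in dB: attack constant when rising, release when falling.
        coeff = a_att if x > detected else a_rel
        detected = coeff * detected + (1.0 - coeff) * x
        outputs.append(x + static_gain_db(detected))
    tail = slice(n // 2, n)  # analyse the second half, after start-up transients
    in_range = max(levels[tail]) - min(levels[tail])
    out_range = max(outputs[tail]) - min(outputs[tail])
    return in_range / out_range


if __name__ == "__main__":
    print("nominal ratio 5:1")
    for tau in (10.0, 50.0, 200.0, 1000.0):
        print(f"release tau {tau:6.0f} ms -> effective ratio {effective_ratio(4.0, tau):.2f}:1")
```

Lengthening the release time constant from 10 to 1000 ms steadily lowers the effective ratio, and even the fastest setting stays below the nominal 5:1.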

Single-channel compression systems vary gain across the entire frequency range of the signal. Thus, they cannot accommodate variations in the listener's dynamic range that may occur for different frequency regions. For example, many listeners with a sloping loss have a normal or near-normal dynamic range for low-frequency sounds but a sharply reduced dynamic range for high-frequency sounds where hearing loss is more severe. In some single-channel systems, an intense low-frequency sound can decrease overall gain and cause high-frequency sounds to become inaudible, although inclusion of an appropriate prefilter can minimize this problem (Kuk, 1996).

In a multichannel compression hearing aid, the incoming speech signal is filtered into two or more frequency channels. Compression is then performed independently within each channel prior to summing the output of all channels. The cutoff frequency between channels is termed the crossover frequency, and it may be either fixed or adjusted by the clinician. It is also important to consider whether each compression channel can be controlled independently. In some hearing aids, shallow filter slopes and/or preset interdependence between channels effectively limit how much one channel can be adjusted without affecting other channels. In general, digital hearing aids capable of steeper filter slopes provide greater channel independence.
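The single-channel problem and its multichannel remedy can be compared with a static, two-band calculation. The sketch assumes perfectly independent channels (ideal filters), hypothetical prescribed gains of 10 dB (low band) and 35 dB (high band) for a sloping loss, and the same hypothetical compressor (45 dB SPL threshold, 2:1 ratio) wherever compression is applied; the single-channel detector sees the power sum of both bands. Function names are illustrative.

```python
import math


def gain_reduction_db(level_db, threshold_db=45.0, ratio=2.0):
    """Gain reduction for an idealized compressor: none below threshold."""
    return max(0.0, (level_db - threshold_db) * (1.0 - 1.0 / ratio))


def power_sum_db(levels_db):
    """Combine band levels (dB) into an overall level by summing power."""
    return 10.0 * math.log10(sum(10.0 ** (lv / 10.0) for lv in levels_db))


def single_channel(band_levels, base_gains):
    # One detector sees the overall level; the same reduction hits every band.
    reduction = gain_reduction_db(power_sum_db(band_levels))
    return [lv + g - reduction for lv, g in zip(band_levels, base_gains)]


def multichannel(band_levels, base_gains):
    # Each band is compressed according to its own level only.
    return [lv + g - gain_reduction_db(lv) for lv, g in zip(band_levels, base_gains)]


if __name__ == "__main__":
    bands = [85.0, 45.0]  # hypothetical: intense low-frequency sound, soft high-frequency consonant
    gains = [10.0, 35.0]  # hypothetical prescribed gains for a sloping loss
    print("single-channel output:", [round(v, 1) for v in single_channel(bands, gains)])
    print("two-channel output:   ", [round(v, 1) for v in multichannel(bands, gains)])
```

For an 85 dB SPL low-frequency sound combined with a 45 dB SPL high-frequency consonant, the single-channel aid lowers high-band output by about 20 dB (to roughly 60 dB SPL, versus 80 dB SPL with independent channels), while the low band is treated the same in both cases.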

A recent report on the state of the hearing aid industry notes, “virtually all high-performance products … are exclusively multichannel, nonlinear processing devices” (Strom, 2002a). Commercially available multichannel aids offer between 2 and 20 channels, although 2-channel or 3-channel systems are still the most likely choice. A survey of product lines available from major manufacturers indicates that about one third are single-channel, one third are two-channel and the remaining one third are divided equally between three-channel systems and those with more than three channels (Buyer's Guide, 2001).

A multichannel compression hearing aid may be better able than a single-channel compression hearing aid to accommodate variations in hearing threshold at each frequency (Venema, 2000), especially for atypical audiometric configurations. For example, a listener with a “cookie bite” configuration could be fit with a three-channel compression system with amplification in each channel that is precisely suited to her hearing loss. From the clinician's perspective, this is easier to accomplish if each channel can be independently controlled. However, it is not clear whether a larger number of channels will result in greater hearing aid benefit and/or higher listener satisfaction. These issues are discussed later in this article.

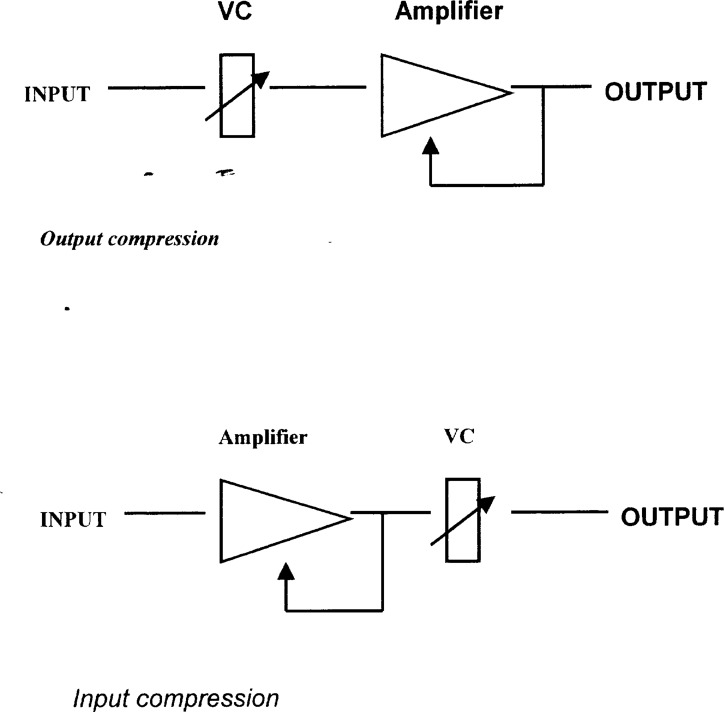

Finally, compression can be described as input or output compression. Unlike the compression parameters previously described, this is not a parameter within the control of the clinician but instead is determined by the circuit configuration of the hearing aid. In a compression hearing aid, a level detector monitors signal level. The level detector may rely on peak or average amplitude, on the root mean square level of the signal, or on some statistical property of the signal (Kuk and Ludvigsen, 1999). The output of the level detector is then connected via a feedback loop to the amplifier, whose gain is controlled by this output level. In a simple compression circuit with gain controlled by a level detector, gain is automatically varied once the level of the input signal exceeds the compression threshold. The distinction between input and output compression refers to the position of the level detector relative to the volume control.

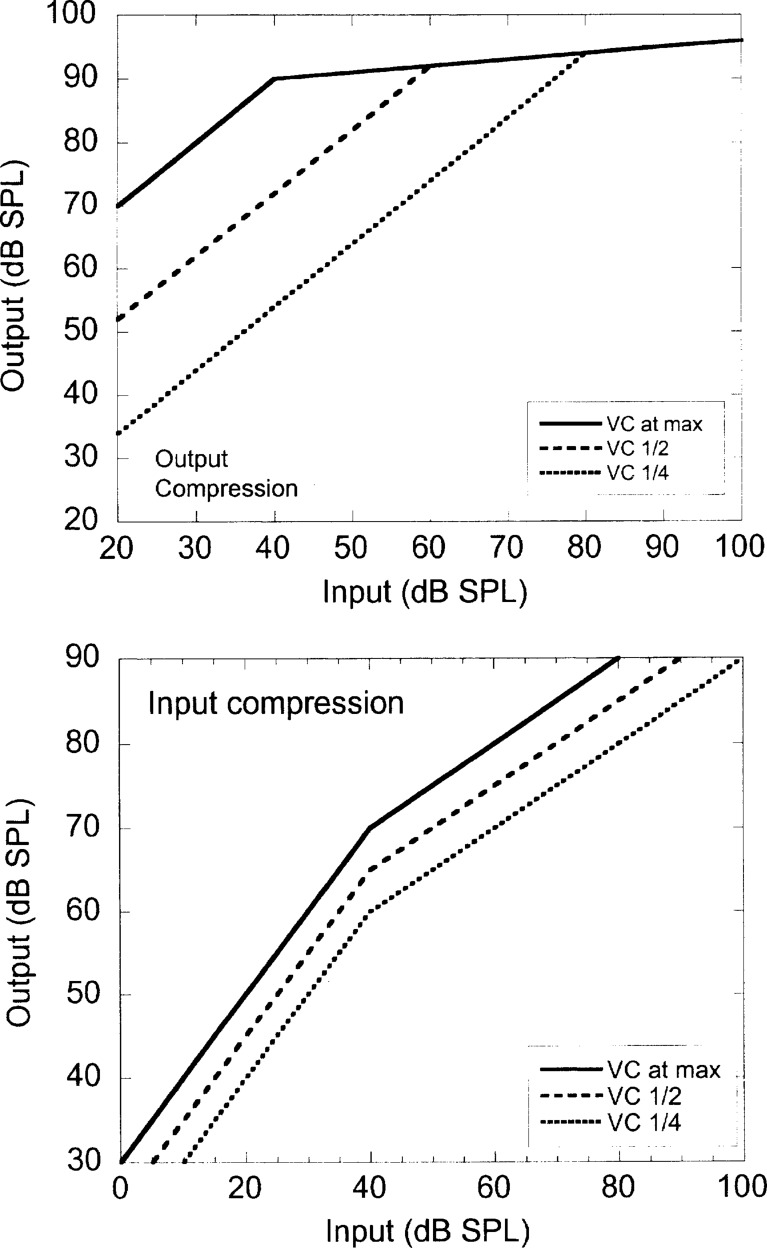

In output compression, also called AGC-O, the volume control is positioned before the level detector (Figure 6). Activation of compression (ie, gain reduction) is based on the output level from the amplifier (which is determined by the input level plus the volume control setting on the hearing aid). Thus in output compression, the maximum output level is not influenced by volume control setting (Figure 7). For this reason, compression limiting is most often implemented in an output compression circuit.

Figure 6.

Block diagrams for output (top panel) and input compression (lower panel).

Figure 7.

Effect of volume control position for output compression (top panel) and input compression (lower panel).

In input compression, the volume control is positioned after the level detector (Figure 6). Activation of compression is based on the level of the input signal. The compressed signal is then amplified according to the frequency–gain response of the hearing aid before it is modified by the volume control setting. Thus in input compression, the volume control setting influences the maximum output level received by the listener (Figure 7).
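The effect of volume control position shown in Figure 7 follows directly from where the volume control sits relative to the level detector. This static sketch assumes hypothetical compression-limiting settings: an output-referred kneepoint of 100 dB SPL for output compression, an input-referred kneepoint of 70 dB SPL for input compression, and a 10:1 ratio and 30 dB of gain in both. The parameter `volume_db` is a hypothetical volume control offset, negative when the control is turned down.

```python
def agc_o_output(input_db, volume_db, gain_db=30.0, knee_db=100.0, ratio=10.0):
    """Output compression: the level detector sees the signal after the volume control."""
    pre = input_db + gain_db + volume_db
    if pre <= knee_db:
        return pre
    return knee_db + (pre - knee_db) / ratio


def agc_i_output(input_db, volume_db, gain_db=30.0, knee_db=70.0, ratio=10.0):
    """Input compression: the detector sees the input; the volume control acts afterwards."""
    reduction = max(0.0, (input_db - knee_db) * (1.0 - 1.0 / ratio))
    return input_db + gain_db - reduction + volume_db


if __name__ == "__main__":
    for vc in (0.0, -10.0):
        print(f"volume {vc:+.0f} dB: output compression {agc_o_output(90.0, vc):.1f} dB SPL, "
              f"input compression {agc_i_output(90.0, vc):.1f} dB SPL")
```

For a 90 dB SPL input, both circuits deliver 102 dB SPL at full volume. Turning the volume down by 10 dB lowers the output-compression result by only 1 dB (to 101 dB SPL) but lowers the input-compression result by the full 10 dB (to 92 dB SPL).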

Compression is available in analog, digitally programmable analog, and digital hearing aids. Thus far, no evidence shows that compression in some digital aids is superior to compression in a programmable aid (Walden et al., 2000), although digitally implemented compression may allow more control over compression parameters. As with other amplification features available in both digital and analog aids (Valente et al., 1999), it is the signal processing strategy that matters rather than the underlying digital or analog “hardware”.

Using Compression for Output Limiting

Compression limiting refers to compression with a high compression threshold, high compression ratio, and fast attack time. Its purpose is to serve as an output limiter that prevents discomfort (or hearing damage) from high-level signals while avoiding saturation distortion. Thus, this type of compression is an alternative to peak clipping. In peak clipping, maximum electric output is controlled by instantaneously limiting the output of the hearing aid and, thereby, clipping the peaks of the signal. When the input signal plus gain is below the saturation point, a hearing aid with peak clipping is expected to perform similarly to one with compression limiting.

Peak clipping causes undesirable distortion as input increases beyond the output limit of the hearing aid. Electroacoustically, there are marked differences in distortion levels between linear peak clipping and compression limiting aids once input level plus gain exceeds the saturation threshold. Listeners perceive this distortion as degraded speech clarity and sound quality (eg, Larson et al., 2000). The greater the amount of saturation, the stronger the preference for compression limiting over peak clipping (Hawkins and Naidoo, 1993; Stelmachowicz et al., 1999; Storey et al., 1998).

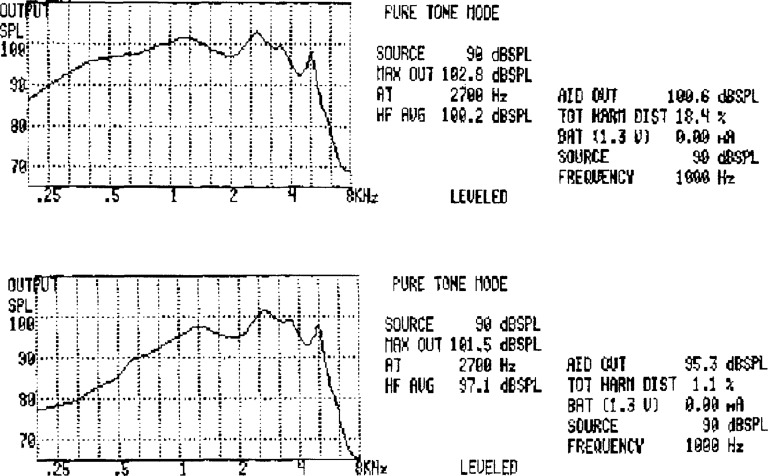

Figure 8 shows results of electroacoustic tests of the same programmable hearing aid, set for peak clipping (top panel) or compression limiting (lower panel). In each case, the input signal was a 90 dB SPL pure-tone sweep. In saturation, the peak clipping setting produces high harmonic distortion (18.4%); operating as a compression limiter, the same aid produces very little (1.1%). In a peak clipping aid, this increased distortion (eg, when the speech level increases relative to the aid's maximum output) is associated with reduced speech intelligibility, whereas output limiting has little effect on intelligibility in a compression limiting aid (Crain and Van Tasell, 1994; Dreschler, 1988a). Therefore, for most listeners, compression limiting should be used rather than peak clipping. One potential exception is listeners with a severe-to-profound loss. These listeners, who require maximum power and often are accustomed to wearing high-gain linear aids in saturation, may report (at least initially) that compression limiting aids are not loud enough (Dawson et al., 1991).

Figure 8.

Coupler measurements of peak clipping (top) and compression limiting (lower panel).
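The difference in harmonic distortion shown in Figure 8 can be illustrated numerically. The sketch assumes an idealized 500 Hz tone driven about 6 dB above a hypothetical output ceiling: peak clipping is modeled as hard clipping of the waveform, and compression limiting is modeled in its settled state as a simple gain reduction that leaves the waveform shape intact. Total harmonic distortion is computed from the first ten harmonics with single-bin DFT correlations. The values are not meant to reproduce the 18.4% and 1.1% coupler measurements; real compression limiters add some distortion while their gain is changing.

```python
import math


def harmonic_amplitudes(signal, fs, f0, n_harmonics=10):
    """Amplitude of harmonics 1..n_harmonics of f0 via single-bin DFT correlations.

    Assumes the signal spans a whole number of f0 periods, so the harmonic
    bins are orthogonal over the analysis window.
    """
    n = len(signal)
    amps = []
    for k in range(1, n_harmonics + 1):
        w = 2.0 * math.pi * k * f0 / fs
        re = sum(x * math.cos(w * i) for i, x in enumerate(signal))
        im = sum(x * math.sin(w * i) for i, x in enumerate(signal))
        amps.append(2.0 * math.hypot(re, im) / n)
    return amps


def thd(signal, fs, f0):
    """Root-sum-square of harmonics 2..10 relative to the fundamental amplitude."""
    amps = harmonic_amplitudes(signal, fs, f0)
    return math.sqrt(sum(a * a for a in amps[1:])) / amps[0]


fs, f0, periods = 16000, 500, 50
n = fs // f0 * periods  # 1600 samples, a whole number of periods
tone = [math.sin(2.0 * math.pi * f0 * i / fs) for i in range(n)]
ceiling = 0.5  # hypothetical output limit, about 6 dB below the tone's peak

clipped = [max(-ceiling, min(ceiling, x)) for x in tone]  # peak clipping
limited = [ceiling * x for x in tone]  # settled compression limiter: gain reduced, shape kept

if __name__ == "__main__":
    print(f"peak clipping THD:        {100 * thd(clipped, fs, f0):.1f}%")
    print(f"compression limiting THD: {100 * thd(limited, fs, f0):.4f}%")
```

The clipped tone shows substantial distortion, while the gain-scaled tone shows essentially none.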

Using Compression to Normalize Loudness

One characteristic of sensorineural hearing loss is a steeper-than-normal loudness growth curve. Loudness normalization aims to restore normal loudness growth through amplification, a goal consistent with the idea that patients with a sensorineural hearing impairment lose the compressive nonlinearity that is part of a normally functioning cochlea (Dillon, 1996; Moore, 1996). Use of WDRC has been proposed as a means to compensate for abnormal loudness growth, and several fitting procedures have been developed in accordance with this philosophy (eg, Allen et al., 1990; Cox, 1995 and 1999; Kiessling et al., 1997; Kiessling et al., 1996; Ricketts, 1996; Ricketts et al., 1996). The intent of these procedures is to set compression parameters such that a listener wearing a WDRC aid will perceive changes in loudness in the same way as a normal-hearing listener (Kuk, 2000). Some of these procedures recommend that the patient's own loudness growth functions be measured in one or more frequency bands prior to the hearing aid fitting. Alternatively, loudness growth functions can be estimated based on the wearer's hearing threshold measurements, without the need to conduct specific loudness growth measures at fitting (Jenstad et al., 2000; Moore, 2000; Moore et al., 1999).
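The logic of a loudness-based fitting can be illustrated for a single frequency band: find the gain that brings each input level to the loudness it would have for a normal-hearing listener, then derive the compression ratio between adjacent points. This is a simplified sketch with hypothetical loudness-category levels and illustrative function names, not a reproduction of IHAFF, ScalAdapt, or any other cited procedure.

```python
# Hypothetical levels (dB SPL) at which one frequency band is judged soft,
# comfortable, and loud by a normal-hearing and a hearing-impaired listener.
NORMAL = {"soft": 30.0, "comfortable": 60.0, "loud": 85.0}
IMPAIRED = {"soft": 65.0, "comfortable": 80.0, "loud": 95.0}
CATEGORIES = ("soft", "comfortable", "loud")


def required_gains(normal, impaired):
    """Gain that lifts each normal-hearing level to the same loudness for the impaired ear."""
    return {cat: impaired[cat] - normal[cat] for cat in CATEGORIES}


def segment_ratios(normal, impaired):
    """Compression ratio between adjacent categories: input span / output span."""
    return [
        (normal[hi] - normal[lo]) / (impaired[hi] - impaired[lo])
        for lo, hi in zip(CATEGORIES, CATEGORIES[1:])
    ]


if __name__ == "__main__":
    print("gains:", required_gains(NORMAL, IMPAIRED))
    print("ratios:", [round(r, 2) for r in segment_ratios(NORMAL, IMPAIRED)])
```

With these hypothetical values the required gain falls from 35 dB for soft sounds to 10 dB for loud sounds, implying a compression ratio of 2:1 between soft and comfortable levels and about 1.7:1 between comfortable and loud levels.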

Recent data confirm that WDRC amplification can normalize loudness growth better than linear amplification (Fortune, 1999; Jenstad et al., 2000). It is less clear whether a fitting based on normalized loudness is superior in terms of speech intelligibility and/or speech quality to a fitting based on some other criterion, such as speech audibility. Kiessling et al. (1996) demonstrated improved speech recognition with a loudness-normalization-based fitting procedure (ScalAdapt) versus a threshold-based fitting strategy. In contrast, Keidser and Grant (2001a) found superior speech recognition in noise with NAL-NL1, an audibility maximization strategy, over IHAFF, which is based on normalizing loudness growth. Given that loudness normalization is implemented differently in each fitting method, the differences in hearing aid benefit seen in research studies are likely due to the individual procedure rather than to the underlying philosophy of loudness normalization.

The idea of placing amplified speech within the listener's loudness comfort range seems reasonable and is implicit within many nonlinear prescriptive procedures. However, there is little direct evidence that normalizing loudness will provide optimal amplification characteristics (eg, Byrne, 1996; Van Tasell, 1993). In fact, Byrne (1996) argues against strict loudness normalization, pointing out that normal-hearing subjects can easily adjust to situational variations in loudness. Byrne (1996) also notes that hearing-impaired listeners might do better with compression parameters that explicitly do not normalize loudness growth, such as equalizing loudness across frequency (Byrne et al., 2001).

Finally, clinical measures of loudness growth rely on brief, steady-state signals which are used to determine the desired compression ratio. However, the effective compression ratio obtained with a complex, time-varying speech signal will be lower than that specified for a static signal such as a pure tone (Stone and Moore, 1992). Thus, normalizing loudness for individual frequency bands or pure tones does not mean loudness growth will be normalized for more complex, broad-band signals such as speech (Dillon, 1996; Moore, 1990 and 2000), although recent work (Moore, Vickers et al., 1999) suggests that loudness judgments using steady-state sounds should be adequate for predicting loudness with time-varying signals in compression systems with long time constants.

Using Compression to Improve Speech Intelligibility

Studies of the effects of compression amplification on speech intelligibility have generally taken one of two forms: laboratory-based research and clinical trials. Clinical trials may provide the most realistic assessment because the subjects wear the hearing aids in the home environment for several weeks or months and then complete one or more outcome measures. The disadvantage of clinical trials is that many variables are manipulated simultaneously, making it difficult to isolate specific effects. For example, a number of clinical trials compared aids that not only differed in compression characteristics, but also in frequency-gain responses, microphones, receivers, acoustic modifications, and/or fitting algorithms (eg, Knebel and Bentler, 1998; Newman and Sandridge, 1998; Valente et al., 1998 and 1997; Walden et al., 2000). In such studies, it is difficult to attribute differences between hearing aids to differences in compression processing versus other amplification variables.

Laboratory studies provide better control over experimental variables, so their results can be interpreted more straightforwardly. However, some laboratory-based studies may not incorporate variables inherent in wearable hearing aids, such as venting or earmold acoustics, or the acoustic test environment used in the laboratory may be dissimilar to that encountered by the subjects in everyday life. Both types of research are needed to understand the benefits and limitations of nonlinear amplification.

In a much-anticipated study, a large-scale clinical trial was conducted with 360 patients recruited from audiology clinics at eight Department of Veterans Affairs medical centers (Larson et al., 2000). An important feature of this study was double-blinding, in which neither the subjects nor the test audiologists knew which type of circuit was being tested. All patients wore three different hearing aids (peak clipping, compression limiting, and WDRC) for 3 months each. The compression limiting aid had an 8:1 compression ratio and adaptive release time; the WDRC hearing aid had a compression threshold of 52 dB SPL, a compression ratio between 1.1:1 and 2.7:1, and a 50-millisecond release time.

After each 3-month trial period, the patient completed a series of outcome measures, including speech recognition in quiet and in noise, speech quality ratings, and a questionnaire assessing overall communication performance. At the end of the three trials, each patient rank-ordered the three aids. As expected, the WDRC circuit provided more favorable loudness comfort for a range of input levels than the other circuits.

Although there were significant differences in speech intelligibility among circuits for selected conditions, there was no consistent pattern and the mean differences in performance between circuits were small. In the rank-order test, the patients preferred compression limiting (41.6%), followed by WDRC (29.8%) and peak clipping (28.6%).

In a similar but smaller trial, Humes et al. (1999) fit 55 hearing-impaired adults with linear peak clipping aids (fit according to linear NAL-R targets) and two-channel WDRC aids (fit according to nonlinear DSL [i/o] targets). All patients wore the linear aids for 2 months, followed by the WDRC aids for 2 months. At the end of each 2-month trial period, a battery of outcome measures was completed that included word recognition in quiet and in noise at various presentation levels, judgments of sound quality, and subjective ratings of hearing aid benefit. In general, results showed better speech intelligibility with the WDRC aid at all but high-level inputs. Patients also reported that the WDRC hearing aids provided greater ease of listening for low-level speech in quiet. The authors attributed these results to the greater gain at low input levels provided by the WDRC circuit and the higher DSL target gain levels for the WDRC aid.

Many focused studies have compared linear and compression amplification in a controlled environment, using either simulated hearing aid responses or wearable hearing aids. A good example is recent work by Jenstad, Seewald et al. (1999). Five conditions were included, representing different speech spectra that varied in levels and frequency responses. The same hearing aid was used for both linear and WDRC conditions, with targets generated using the same prescriptive procedure (DSL[i/o]). Outcome measures included sentence and nonsense syllable intelligibility and speech loudness ratings. For average speech levels, both circuits provided similar loudness comfort and speech intelligibility. For low and high speech levels, the WDRC aid provided better intelligibility and loudness comfort.

Bentler and Duve (2000) tested a variety of hearing aids that represented advances in amplification technology during the 20th century. Among the devices were a linear peak clipping analog aid, a single-channel analog compression aid, a two-channel analog WDRC aid, and two digital multichannel WDRC aids, all in behind-the-ear versions. Each device was fit using its recommended prescriptive procedure: NAL-R for the linear aid, FIG6 for the single-channel compression hearing aid, and the manufacturers' proprietary fitting algorithms for the remaining devices. Despite the differences in circuitry, speech recognition scores in quiet and in noise were similar across devices. The exception was poorer performance at very high speech levels (93 dB SPL) for the linear aid. This is not a surprising result given the distortion generated by peak clipping at such high input levels.

Moore and his colleagues (eg, Laurence et al., 1983; Moore and Glasberg, 1986; Moore et al., 1985 and 1992) worked extensively with an amplification system that applies a first-stage, slow-acting compression with a compression threshold of 75 dB SPL to compensate for overall level variations, followed by fast-acting compression amplifiers, acting independently in two frequency channels. Results showed improved speech reception threshold in quiet and in noise (Moore, 1987) and improved speech intelligibility, particularly at low input levels (Moore and Glasberg, 1986; Laurence et al., 1983) when compared to linear amplification or to slow-acting compression.

An important issue is the ability of compression amplification to improve speech intelligibility in noise. Although this was initially expected to be a benefit of nonlinear amplification, compression does not appear to provide substantial benefit in noise compared to linear amplification (eg, Boike and Souza, 2000a; Dreschler et al., 1984; Hohmann and Kollmeier, 1995; Kam and Wong, 1999; Nabelek, 1983; Stone et al., 1997; van Buuren et al., 1999; van Harten-de Bruijn et al., 1997). This contrasts with directional microphones, which do provide benefit in noise (Ricketts, 2001; Valente, 1999; Yueh et al., 2001).

More recently, some investigators have suggested that the modulation properties of the background noise may influence the benefit of compression (Boike and Souza, 2000b; Moore et al., 1999; Stone et al., 1999; Verschuure et al., 1998). Specifically, compression may improve intelligibility when the background noise is modulated instead of unmodulated. This may be related to improved speech audibility during the noise “dips”.

In summary, WDRC provides the greatest advantage over linear amplification for low-level speech in quiet. In background noise, WDRC and linear amplification provide similar benefit. Several factors emerge as possible explanations for the disparate results seen across research studies. First, in some studies, performance with recently fitted and validated compression aids was compared to performance with the patient's own (linear) hearing aids (Benson et al., 1992; Schuchman et al., 1996). In addition to expectation bias (subjects may have anticipated better performance from the “new” aid), the patients' own aids may have differed in other ways, such as a narrower frequency response or higher distortion. Second, the ability of compression to improve speech intelligibility in noise may be linked to the characteristics of the background noise (eg, Moore et al., 1999) or to the specific signal-to-noise ratio (Yund and Buckles, 1995a). Third, in some studies, potential differences in speech audibility were not accounted for and may have affected results of comparisons among amplification conditions. This issue is discussed in the next section.

Effects of Compression on Speech Audibility

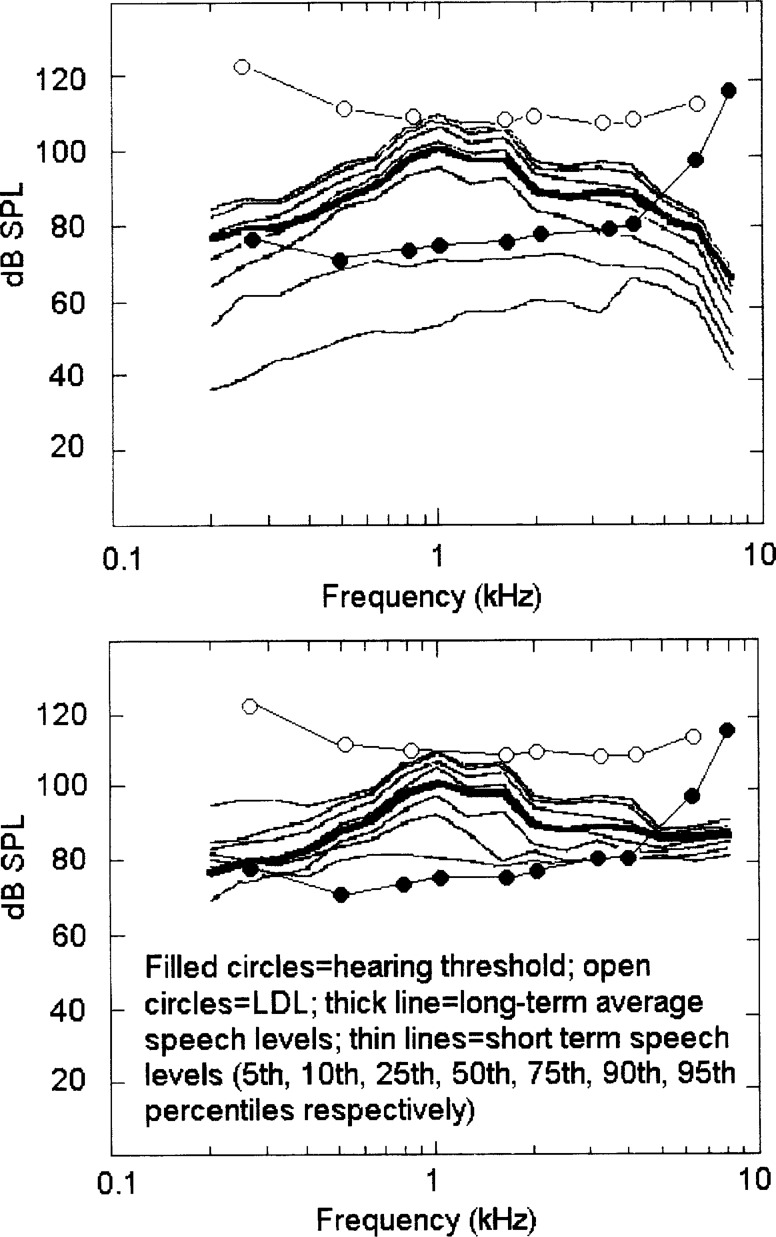

A primary goal of compression is to place more of the speech signal within the listener's dynamic range (ie, between threshold and loudness discomfort level) without the wearer having to adjust the volume control. This is particularly true of fast-acting WDRC, which can improve audibility of short-term speech components by providing gain suited to each syllable or phoneme. The well-known “speech banana” should actually become narrower, with the amplified output varying across a smaller intensity range than the unamplified input.
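To make the narrowing concrete, the sketch below models the steady-state input/output function of a hypothetical single-channel WDRC aid. It assumes 20 dB of linear gain below a 45 dB SPL compression threshold and a 3:1 compression ratio above it (the threshold and ratio echo values used in a study discussed later; the gain is arbitrary). It ignores attack and release behavior, so it shows only the static effect: a 30-dB range of input levels above the threshold emerges as a 10-dB range of output levels.

```python
def wdrc_output_level(input_db, gain_db=20.0, ct_db=45.0, cr=3.0):
    """Steady-state output level (dB SPL) of a hypothetical single-channel WDRC aid.

    Below the compression threshold (ct_db) gain is constant, as in a linear aid;
    above it, each 1-dB increase in input raises the output by only 1/cr dB.
    """
    if input_db <= ct_db:
        return input_db + gain_db
    return ct_db + gain_db + (input_db - ct_db) / cr


# Hypothetical speech levels spanning 50 to 80 dB SPL (a 30-dB input range)
soft_out = wdrc_output_level(50.0)
loud_out = wdrc_output_level(80.0)
print(round(loud_out - soft_out, 1))  # 10.0: the input range is divided by the 3:1 ratio
```

A real multichannel aid applies a curve of this kind separately in each channel, and its time constants determine how closely short-term speech levels follow the static curve.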

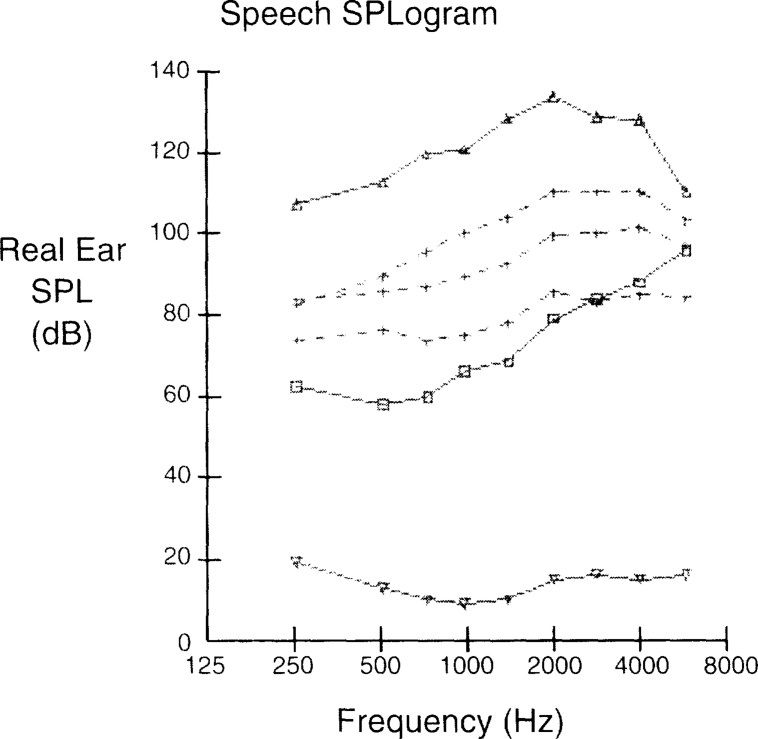

A few investigators have actually compared measured distributions of short-term RMS speech levels for linear and compressed speech (Verschuure et al., 1996; Souza and Turner, 1996, 1998, 1999). Such studies show that the range of speech levels is indeed reduced by compression. The size of the reduction depends on the compression parameters of the amplification system, most notably the compression ratio and the release time, and on the length of the measurement window. With multichannel compression, the speech level distribution is reduced across frequency in accordance with the compression ratio in each channel (Souza and Turner, 1999). The higher the compression ratio, the greater the effect on the speech level distribution. Even for a single-channel compressor, the speech level distribution is unevenly affected across frequency (Verschuure et al., 1996).

Figure 9 shows the measured speech level distribution for linear (top panel) and compressed (lower panel) speech. In each panel, the filled circles represent audiometric thresholds for a listener with a moderate-to-severe loss. The filled diamonds are the loudness discomfort levels for the same listener. The thick line shows the long-term speech level, and the thin lines represent the range of short-term speech levels. For linear speech, when gain is set so that speech peaks do not cause discomfort, the lower speech levels fall below threshold and are inaudible. In contrast, compression narrows the speech level distribution, so that the entire range of speech levels is audible.

Figure 9.

Short-term RMS speech level distribution for linear (top panel) and compressed speech (lower panel). In each panel, filled circles are hearing thresholds and filled diamonds are loudness discomfort levels for a single patient. The long-term RMS level is shown by the thick line.
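The short-term RMS level distribution plotted in Figure 9 can be estimated by dividing the signal into consecutive windows and expressing each window's RMS value in decibels. The sketch below is a minimal version under stated assumptions: non-overlapping rectangular windows, a hypothetical default window of 125 milliseconds, levels in dB re a full-scale value of 1.0 rather than dB SPL, and a single broadband band (the studies cited above also examined the distribution across frequency). Because the window length is a parameter, the same code can be used to explore how the measured range depends on it.

```python
import math


def short_term_levels_db(samples, fs, window_ms=125.0):
    """Short-term RMS levels (dB re 1.0) in consecutive, non-overlapping windows."""
    n = int(round(fs * window_ms / 1000.0))
    levels = []
    for start in range(0, len(samples) - n + 1, n):
        frame = samples[start:start + n]
        rms = math.sqrt(sum(s * s for s in frame) / n)
        levels.append(20.0 * math.log10(max(rms, 1e-12)))  # floor avoids log10(0)
    return levels


def level_range_db(levels):
    """Spread of the distribution, here simply the highest minus the lowest level."""
    return max(levels) - min(levels)


# Synthetic test signal: a 1-kHz tone whose amplitude alternates every 0.5 s
# between 1.0 and 0.1 (a 20-dB contrast), sampled at 16 kHz for 2 s.
fs = 16000
signal = [
    (1.0 if (i // 8000) % 2 == 0 else 0.1) * math.sin(2 * math.pi * 1000 * i / fs)
    for i in range(2 * fs)
]
levels = short_term_levels_db(signal, fs)
print(round(level_range_db(levels), 1))  # 20.0
```

Running the same measurement on a signal before and after compression would show the narrowing of the distribution described above.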

Another possible explanation for the conflicting results seen among previous studies of compression hearing aids is that compression did not always improve audibility. In many studies, the subject was allowed to adjust the volume control or choose the presentation level (eg, Dreschler et al., 1984; Laurence et al., 1983; Tyler and Kuk, 1989). While this is the most realistic procedure because it reflects the way listeners will use the aids in everyday communication, adjustment of the volume control could minimize the difference between the linear and compressed conditions. For example, listeners might show similar performance with a linear versus a compression hearing aid if they adjusted hearing aid output to the same level in both conditions.

How do hearing aid wearers adjust the speech presentation level in a compression aid relative to a linear aid? Souza and Kitch (2001b) measured speech audibility at the volume setting chosen by mild-to-moderately impaired listeners. The hearing aid was a programmable single-channel aid, programmed (in sequential order) for peak clipping, compression limiting, or wide-dynamic range compression processing. For each amplification condition, listeners were instructed to adjust the volume control while listening to a variety of different test signals. Regardless of the speech input level or the background (quiet or noise), listeners adjusted each circuit configuration to similar output levels. In essence, the volume control adjustment eliminated the natural audibility advantage of WDRC hearing aids.

Of course, the subjects in this study were specifically directed to adjust volume to accommodate changes in input level. We expect that in everyday use of compression hearing aids, subjects will also choose to make volume adjustments to accommodate changes in the listening environment. This is supported by research showing that subjects fit with WDRC amplification prefer to have a manual volume control (Knebel and Bentler, 1998; Kochkin, 2000; Valente et al., 1998) and that once one is provided, most experienced hearing aid wearers report using it (Barker and Dillon, 1999).

However, there are numerous situations in which use of a manual volume control is not possible or practical. For example, completely-in-the-canal (CIC) hearing aids rarely include manual volume adjustments and instead rely on compression to accommodate changes in the communication environment. Manual volume controls are also omitted when the patient cannot operate them, whether because of poor manual dexterity, loss of motor control from stroke or arthritis, or impaired cognitive function from Alzheimer's disease or developmental delay, or because the patient is a young child. How well does compression work when a range of speech levels is presented at a fixed volume control setting?

Several investigators have noted improved performance with compression amplification relative to linear amplification only at low speech levels and/or when a wide range of speech levels was processed with a single volume control setting (Jenstad, Seewald, et al., 1999; Kam and Wong, 1999; Laurence et al., 1983; Mare et al., 1992; Moore and Glasberg, 1986; Peterson et al., 1990; Stelmachowicz et al., 1995). The recent large-scale study sponsored by the National Institute on Deafness and Other Communication Disorders and the Department of Veterans Affairs found minimal differences in speech intelligibility between WDRC and linear (compression limiting or peak clipping) hearing aids when hearing aid volume was adjusted to National Acoustic Laboratories-Revised (NAL-R) targets (Larson et al., 2000). Presumably, NAL-R targets were similar across amplification types for moderate input levels, resulting in similar speech audibility and no net advantage for the WDRC hearing aid in that situation.

In a more direct test of the relationship between audibility and the benefit of compression for speech intelligibility, Souza and Turner (1996, 1998) measured the distribution of short-term RMS speech levels for a set of nonsense syllables. Speech identification scores were then measured for a two-channel WDRC amplification scheme and for a linear amplification scheme under conditions of varying speech audibility. Speech audibility was defined as the proportion of the short-term RMS level distribution that was above the listener's hearing threshold. Listeners with a mild-to-moderate loss performed better with compression as long as it improved speech audibility. When compression and linear amplification provided equivalent audibility, there was no difference in performance.
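The audibility metric described above, the proportion of the short-term level distribution lying above threshold, reduces to a simple count. The sketch below uses a single band, invented levels, a single threshold value, and an idealized 3:1 compression that leaves the highest (75 dB SPL) level unchanged so that peak output is the same in both conditions; all of these values are hypothetical, and the upper limit set by the loudness discomfort level is ignored.

```python
def proportion_audible(levels_db, threshold_db):
    """Fraction of short-term speech levels that exceed the listener's threshold."""
    return sum(1 for lv in levels_db if lv > threshold_db) / len(levels_db)


# Hypothetical short-term levels (dB SPL) of linearly amplified speech, one band
linear = [40.0, 45.0, 50.0, 55.0, 60.0, 65.0, 70.0, 75.0]
# Hypothetical 3:1 compression that leaves the 75-dB peak unchanged
compressed = [75.0 - (75.0 - lv) / 3.0 for lv in linear]
threshold = 52.0

print(proportion_audible(linear, threshold))      # 0.625
print(proportion_audible(compressed, threshold))  # 1.0
```

Under these assumptions compression raises the audible proportion without raising the peak level, which is the situation in which Souza and Turner observed a benefit; when both conditions yield the same proportion, the metric predicts no difference.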

In summary, wide-dynamic range compression has been shown to improve audibility of speech components at low input levels (eg, Moore and Glasberg, 1986; Souza and Turner, 1998; Larson et al., 2000). One caution is that improved audibility is usually defined according to an optimal volume setting determined by the dispensing clinician. However, audibility could vary considerably in everyday listening situations where the patient controls the volume setting. This may partially explain the poor correlation between improved speech audibility measured in the clinic and everyday communication benefit described by the patient (Souza et al., 2000).

We cannot explain the results of all compression research in terms of speech audibility. Some studies have shown no improvements with compression even under conditions where compression clearly increased the amount of audible information in the speech signal (eg, DeGennaro et al., 1986). To account for such results, numerous investigators have speculated that essential cues for intelligibility are disrupted by WDRC (eg, Boothroyd et al., 1988; Dreschler, 1989; Festen et al., 1990; Plomp, 1988; Verschuure et al., 1996). This issue is discussed in the next section.

Effects of Compression on Acoustic Cues for Speech Identification

Speech intelligibility is determined by the listener's ability to identify acoustic cues essential to each sound. Implicit in this process is accurate transmission of these cues by the hearing aid. Certainly audibility of specific speech cues is a major factor in speech intelligibility. However, it is also important to consider whether acoustic cues are distorted or enhanced by compression amplification.

The work of DeGennaro et al. (1986) provides a convincing demonstration that more than simple audibility changes are involved. These investigators began by measuring the distribution of short-term RMS levels at each frequency. They then processed speech with compression systems that placed progressively greater amounts of the range of amplitude distributions above the subject's hearing threshold. Interestingly, no subject showed a consistent improvement with compression, although from an audibility perspective some improvement would be expected as greater amounts of auditory information exceeded detection thresholds and thus became audible.

It is possible that compression distorts some speech cues, offsetting the benefits of improved audibility, at least for some compression systems and for some listeners. Recently, interest has been renewed in the importance of temporal cues for speech intelligibility (eg, Shannon et al., 1995; Turner et al., 1995; van der Horst et al., 1999; Van Tasell et al., 1987 and 1992) and speculation that these cues are disrupted by fast-acting WDRC (eg, Boothroyd et al., 1988; Dreschler, 1989; Festen et al., 1990; Plomp, 1988; Verschuure et al., 1996). Temporal cues include the variations in speech amplitude over time and range from the very slow variations of the amplitude envelope to the rapid “fine-structure” fluctuations in formant patterns or voicing pulses (Rosen, 1992). With regard to compression, most attention has focused on fluctuations in the amplitude envelope, in part because alteration of the amplitude envelope is the most prominent temporal effect of fast-acting WDRC. The amplitude envelope contains information about manner and voicing (Rosen, 1992; Van Tasell et al., 1992) and some cues to prosody and also the suprasegmentals of speech (Rosen, 1992). Compression alters the variations in the amplitude envelope and reduces the contrast between high-intensity and low-intensity speech sounds. Of course, the reduced intensity variation is a desirable effect of compression. However, because both normal-hearing and hearing-impaired listeners can extract identification information from amplitude envelope variations (Turner et al., 1995), it is possible that alterations of these cues could affect speech intelligibility.

This has not been a simple issue to resolve. Because most studies use natural speech, which simultaneously varies in spectral and temporal content, it is difficult to separate the effect of altered temporal variations from other possible consequences, such as spectral distortion. The most direct method is to use a speech signal processed to limit spectral information. One processing technique is to digitally multiply the time-intensity variations of the speech signal by a broad-band noise (eg, Van Tasell et al., 1992; Turner et al., 1995). Naïve listeners describe these signals as “robotic” or “noisy” speech. Although more difficult to understand than natural speech, these signals can provide some identification information. Such signals can then be used to compare speech intelligibility for linear and compressed speech, while focusing on transmission of temporal cues. Results of such studies show that WDRC reduces consonant (Souza, 2000; Souza and Turner, 1996 and 1998) and sentence (Souza and Kitch, 2001a; Van Tasell and Trine, 1996) intelligibility. This effect is greater for higher compression ratios and/or short time constants (Souza and Kitch, 2001a).
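A minimal sketch of this kind of processing is shown below. It assumes the envelope is extracted by full-wave rectification and a one-pole low-pass filter with a hypothetical 50-Hz cutoff, and that the carrier is uniformly distributed white noise; the cited studies may have used different envelope filters and noise carriers. The point is only that the output keeps the signal's amplitude variations over time while discarding its spectral fine structure.

```python
import math
import random


def envelope(samples, fs, cutoff_hz=50.0):
    """Amplitude envelope: full-wave rectification followed by a one-pole low-pass filter."""
    alpha = math.exp(-2.0 * math.pi * cutoff_hz / fs)  # filter pole for the chosen cutoff
    env, state = [], 0.0
    for s in samples:
        state = alpha * state + (1.0 - alpha) * abs(s)
        env.append(state)
    return env


def envelope_modulated_noise(samples, fs, cutoff_hz=50.0, seed=0):
    """Multiply the signal's envelope by broadband noise, discarding spectral detail."""
    rng = random.Random(seed)
    return [e * rng.uniform(-1.0, 1.0) for e in envelope(samples, fs, cutoff_hz)]


# Usage with a stand-in signal: 0.5 s of a 500-Hz tone followed by 0.5 s of silence
fs = 16000
tone = [math.sin(2 * math.pi * 500 * i / fs) for i in range(fs // 2)]
processed = envelope_modulated_noise(tone + [0.0] * (fs // 2), fs)
```

Passing speech through a compressor before or after this step would make it possible to compare linear and compressed versions in which only temporal (envelope) cues remain.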

These studies measured the effects of compression under conditions where the listener was forced to rely on temporal cues. Of course, the natural speech signal also contains spectral information. What is the clinical impact of these findings? The impact is probably minimal when one considers conversational speech presented in quiet to listeners with a mild-to-moderate loss who wear WDRC hearing aids with a small number of channels and a low compression ratio (<3:1). These listeners have normal or near-normal spectral discrimination ability (Moore, 1996; Van Tasell, 1993) and should be able to extract sufficient spectral and contextual information to compensate for altered temporal cues. The clinical impact may be greater for listeners who depend to a greater extent on temporal cues—most obviously, listeners with a severe-to-profound loss (Lamore et al., 1990; Moore 1996; Van Tasell et al., 1987).

Are all sounds equally susceptible to distortion of temporal cues, or are some sounds affected more than others? Today's sophisticated digital algorithms could, in theory, allow hearing aids to be programmed to provide compression characteristics customized to each phoneme. To reach this point, we need to understand what aspects of each phoneme are enhanced or degraded by multichannel WDRC and which compression parameters will preserve these cues optimally. We might expect the greatest effect for sounds where critical information is carried by variations in sound amplitude over time. For example, important features of the stop consonants (/p, t, k, b, d, g/) include a stop gap (usually 50 to 100 milliseconds in duration) followed by a noise burst (5 to 40 milliseconds in duration). Voiced stops (/b, d, g/) are distinguished from voiceless stops (/p, t, k/) by the onset of voicing relative to the start of the burst. For syllable-initial stops, voice onset time (VOT) ranges from close to 0 milliseconds for voiced stops to 25 milliseconds or more for voiceless stops (Kent and Read, 1992). Perception of stop consonants can therefore be modeled as a series of temporal cues (ie, a falling or rising burst spectrum followed by a late or early onset of voicing) (Kewley-Port, 1983).

Because stop consonant identification depends on transmission of temporal cues (Turner et al., 1992; van der Horst et al., 1999), we expect these sounds to be especially susceptible to WDRC-induced alterations in the amplitude envelope. For example, hearing-impaired listeners may place more weight on the relative amplitude between the consonant burst and the following vowel (Hedrick and Younger, 2001), a cue that can be significantly changed by WDRC. The limited data available suggest that WDRC can have negative effects on stop consonant intelligibility. In one study, single-channel, fast-acting compression applied to synthetic speech increased the amplitude of the consonant burst, resulting in erroneous perception (/t/ for /p/) (Hedrick and Rice, 2000). Similarly, Sreenivas et al. (1997) noted that a two-channel syllabic compressor increased the amplitude of the consonant burst, particularly in the mid-frequency region, resulting in more errors of /g/ for /d/ (for unprocessed speech, the peaks of /g/ are more prominent in the 1–2 kHz range, with the spectral peaks for /d/ mainly in the 4–5 kHz range). Alternatively, stop consonant errors could occur if the attack time overshoot is mistaken for a burst (Franck et al., 1999), so errors might be reduced by careful selection of time constants.

As another example, the affricates /dʒ, tʃ/ are distinguished from stops by the rise-time of their noise energy and the duration of frication noise (Howell and Rosen, 1983). Specifically, the rise-time of affricates is intermediate between the short rise-time of stop consonants and the long rise-time of fricatives. Because one effect of WDRC would be to alter the rise-time pattern of the phoneme, it is possible that fast-acting WDRC systems would be detrimental (Dreschler, 1988b). Indeed, recent data from our laboratory suggest that affricate perception is impaired by multichannel WDRC systems, and that the most common error is perceiving the affricate as a stop consonant (Jenstad and Souza, 2002b).

In summary, we expect multichannel WDRC to have diverse effects on acoustic cues that depend on the individual phoneme and on the salient cues for identification of that phoneme. Additionally, we expect these effects will be contingent on the parameters of the compressor. Compression with a high compression ratio and short time constants will produce the most dramatic alterations. These changes should be considered in conjunction with the improved speech audibility possible with compression amplification.

Effects of WDRC on Speech Quality

There is increased interest in using sound-quality judgments as an aid to the hearing aid fitting process (eg, Byrne, 1996; Mueller, 1996). Sound-quality judgments appeal to clinicians for a number of reasons. In the absence of a standard protocol for adjusting compression parameters, they can be used to guide compression settings according to patient preference; they can be completed in a short amount of time; and they involve the patient in the fitting process (Iskowitz, 1999). Of course, they are also subject to the patient's previous experience or biases.

Studies of speech quality have used paired comparisons (Byrne and Walker, 1982; Neuman et al., 1994; Kam and Wong, 1999), sound quality ratings (Neuman et al., 1998) or ratings of speech intelligibility (Bentler and Nelson, 1997). Generally, patients prefer the quality of speech with the least complex processing. Specifically, they prefer lower compression ratios (Boike and Souza, 2000a; Neuman et al., 1994; Neuman et al., 1998; van Buuren et al., 1999); longer release times (Hansen, 2002; Neuman et al., 1998) and smaller numbers (<3) of compression channels (Souza et al., 2000). Listeners with sloping loss show a slight preference for two-channel over single-channel compression (Keidser and Grant, 2001b; Preminger et al., 2001).

Before using sound-quality judgments to determine whether compression is “better” than linear amplification, it is important to consider the relationship between sound quality and speech intelligibility. Previous research has shown that listeners who were asked to choose a system on the basis of sound quality did not necessarily choose the system that maximizes speech intelligibility. An example of this is the frequency-gain response used in a linear aid; generally, patients prefer a frequency response with greater low-frequency gain, while greater high-frequency gain usually provides better speech intelligibility (Punch and Beck, 1980).

With regard to compression, a few studies have examined the relationship between intelligibility and sound quality for compressed speech. Boike and Souza (2000a) measured speech intelligibility and sound-quality ratings for speech processed with single-channel WDRC amplification at a range of compression ratios. Quality ratings and speech intelligibility were highest for linear speech, and decreased with increasing compression ratios. Higher quality ratings were significantly correlated with higher speech intelligibility scores. Souza et al. (2001) asked patients with a severe loss to choose among four digitally simulated amplification conditions (linear peak clipping, compression limiting, two-channel WDRC, and three-channel WDRC) in a paired-comparison test. The WDRC systems used a compression threshold of 45 dB SPL, a compression ratio of 3:1, and attack and release times of 3 and 25 milliseconds, respectively. Speech intelligibility was also measured in each condition. The pattern of preference rankings paralleled that of speech intelligibility, with compression limiting preferred most often and providing the highest intelligibility. The least preferred (and least intelligible) was the three-channel compression system.

In studies with wearable hearing aids, patients preferred compression limiting over peak clipping or single-channel, fast-acting WDRC hearing aids in the large-scale clinical trial sponsored by the Department of Veterans Affairs and the National Institute on Deafness and Other Communication Disorders (Larson et al., 2000). Humes et al. (1999) reported that 76% of patients tested preferred a two-channel WDRC aid to a linear peak clipping aid. Given the higher distortion from peak clipping aids at high input levels, a preference for WDRC is not surprising. Additionally, Humes et al. (1999) noted that the WDRC aid was the last circuit option used and may have represented a “new” aid to some of the subjects. Kam and Wong (1999) found WDRC was preferred over compression limiting for “loudness appropriateness” and for “pleasantness” of high-level signals in paired-comparison testing.

In summary, current research studies indicate that patients prefer simpler sound processing strategies to those with large numbers of compression channels and/or high compression ratios. In the few studies that assessed patient preference and speech intelligibility, subjects also selected amplification systems that provided good speech intelligibility. These results are encouraging because they imply that sound-quality judgments or patient preference could be used clinically to select compression parameters without compromising speech intelligibility.

Setting Compression Parameters

The electroacoustic parameters on conventional compression hearing aids were generally fixed by the manufacturer, or were adjustable only within a small range. Today's digital and digitally programmable aids are increasingly adjustable by the clinician. How, then, should compression parameters be adjusted for a particular patient?

The hearing aid fitting procedures recommended by the American Speech-Language-Hearing Association rely heavily on probe microphone testing (ASHA, 1998). However, this approach provides little guidance in setting compression parameters. Differences in attack and release time, for example, would not be evident with standard probe microphone procedures but could have significant effects on speech intelligibility and/or speech quality.

In a recent study (Jenstad, Van Tasell et al., 1999), clinicians were surveyed about their solutions to common fitting problems noted by the patient, including lack of clarity, excessive loudness, and complaints about background noise. Some problems received consistent responses. For example, the majority of clinicians surveyed would adjust either maximum output or gain in response to complaints that the aid was too loud. Knowledge of the effect of compression adjustments was much less consistent. Only about half the respondents answered the questions about compression parameters. Of those who did, responses were often inconsistent or conflicting. For example, survey respondents sometimes stated that they would solve the same fitting problem in opposite ways (ie, both by increasing the release time and by decreasing the release time). Given the obvious uncertainty about setting compression parameters, how should these aids be set in the clinic?

One approach is to accept the manufacturer's default settings. In programmable aids, these are usually applied automatically when the manufacturer's fitting algorithm is used. While this may provide a good starting point, it does not account for the effects of later adjustments in response to patient complaints about intelligibility or sound quality. Additionally, many manufacturers' fitting recommendations are based on the audiogram only and may not address individual differences in suprathreshold processing, loudness growth, or preference.

Obviously, there is a need for guidance in setting compression parameters. Fortunately, a number of researchers have directly or indirectly addressed these questions (eg, Barker and Dillon, 1999; Boike and Souza, 2000a; Fikret-Pasa, 1994; Hansen, 2002; Hornsby and Ricketts, 2001; Neuman et al., 1994). Although it is difficult to compare results directly across studies, as each study used different amplification systems, subject population, and fitting procedures, their research can provide some guidance to setting compression characteristics.

Setting Compression Threshold and Compression Ratio

For compression limiting aids, the primary goal is to avoid discomfort for high-level inputs without saturation distortion. Assuming a hearing aid that processes sound linearly below the compression threshold, the compression ratio per se is less important than setting the aid appropriately to prevent loudness discomfort. For example, the NAL-R prescriptive procedure recommends setting compression limiting parameters (compression threshold and/or compression ratio, depending on the hearing aid) so that maximum output is halfway between the listener's loudness discomfort level and a level that allows a 75 dB SPL input to be amplified without saturation (Dillon and Storey, 1998).
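The arithmetic of this rule can be written out as below. The sketch reads "a level that allows a 75 dB SPL input to be amplified without saturation" simply as the output produced by a 75 dB SPL input at the prescribed gain; the published procedure may include allowances (for example, for speech peaks) that this hypothetical helper ignores, and it assumes the loudness discomfort level lies above that output.

```python
def target_max_output(ldl_db_spl, gain_db, input_db_spl=75.0):
    """Hypothetical helper: maximum output halfway between the loudness discomfort
    level and the unsaturated output for a 75 dB SPL input at the given gain."""
    unsaturated_output = input_db_spl + gain_db
    return (ldl_db_spl + unsaturated_output) / 2.0


# Example: LDL of 110 dB SPL and 25 dB of gain -> 75 + 25 = 100 dB SPL lower bound
print(target_max_output(110.0, 25.0))  # 105.0
```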

Interestingly, Fortune and Scheller (2000) found that a hearing aid with a low compression threshold, low compression ratio, and slow attack time produced loudness discomfort levels that varied with signal duration (ie, increasing loudness discomfort levels with decreasing signal duration). Use of compression limiting resulted in flat loudness discomfort functions (ie, signal duration did not affect the loudness discomfort level). The authors suggested that use of such parameters rather than the conventional high compression threshold, high compression ratio, and short attack time of a compression limiting aid might allow greater signal audibility without discomfort for brief speech components.

For wide-dynamic range compression amplification, the compression threshold and compression ratio are usually low (Walker and Dillon, 1982). Generally, the lower the compression threshold, the more audibility is improved for low-level speech (Souza and Turner, 1998). Some recently introduced hearing aids use compression thresholds as low as 0 dB HL, in contrast to the 40–50 dB SPL compression thresholds normally used in WDRC aids. However, most hearing aid wearers prefer substantially higher compression thresholds (Dillon et al., 1998; Barker and Dillon, 1999). Barker and Dillon, as well as Ricketts (in Mueller, 1999), speculate that listeners reject a low compression threshold because it amplifies low-level background noise, resulting in undesirable sound quality. A low compression threshold might be more acceptable if the hearing aid used expansion instead of linear processing below the compression threshold.
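One way to picture that suggestion is a static input/output function with expansion below the compression threshold: instead of receiving constant gain, inputs below the threshold receive progressively less gain, so very low-level sounds such as circuit or room noise are amplified less. In the sketch below the low compression threshold (20 dB SPL), the gain, both ratios, and the convention that the output drops `er` dB for each 1-dB drop in input are hypothetical choices for illustration.

```python
def io_level(input_db, gain_db=30.0, ct_db=20.0, cr=2.0, er=2.0, expansion=True):
    """Static output level (dB SPL) of a hypothetical aid with a low compression threshold.

    Above ct_db: compression (output rises 1/cr dB per dB of input).
    Below ct_db: expansion (output falls er dB per dB of input decrease),
    or constant linear gain when expansion is False.
    """
    if input_db >= ct_db:
        return ct_db + gain_db + (input_db - ct_db) / cr
    if not expansion:
        return input_db + gain_db
    return ct_db + gain_db - (ct_db - input_db) * er


for level in (10.0, 15.0, 25.0):
    linear_below = io_level(level, expansion=False) - level
    expanded = io_level(level) - level
    print(f"{level:4.0f} dB SPL input: gain {linear_below:.1f} dB (linear below CT), "
          f"{expanded:.1f} dB (expansion below CT)")
```

Above the threshold the two versions are identical; below it, the expansion version gives less gain the quieter the input becomes.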

In many programmable aids, compression ratio is the primary adjustment available to the clinician. How should this parameter be adjusted? In a recent study, Boike and Souza (2000a) measured sentence recognition for compression ratios ranging from 1:1 to 10:1. For each condition, listeners also rated clarity, pleasantness, ease of listening, and overall sound quality. Sentences were presented in quiet and in noise at a +10 dB signal-to-noise ratio.

In quiet, increasing compression ratio had no effect on speech intelligibility. In background noise, performance decreased as compression ratio increased. Goedegebure et al. (2001) and Hohmann and Kollmeier (1995) reported similar findings for speech in noise. Speech level may also have an effect. Hornsby and Ricketts (2001) found decreased speech intelligibility at increased compression ratios ranging up to 6:1 for conversational-level speech. However, there were only minimal effects of increasing compression ratio for high-level (95 dB SPL) speech.

In the laboratory studies previously described, speech audibility was purposely held constant. In many compression aids, the compression ratio is controlled by adjusting gain separately for low-intensity versus high-intensity input signals. Most often, the gain for high-intensity sounds is fixed according to the listener's loudness discomfort level, and the compression ratio is increased by increasing the gain for low-intensity speech. In other words, a higher compression ratio could also improve speech audibility. This may account for the results of some studies with wearable aids in which increasing the compression ratio did not show a detrimental effect (eg, Fikret-Pasa, 1994); perhaps the improved audibility offset any negative effects. If we consider improved speech audibility as a primary goal, in conjunction with the potential for reduced speech intelligibility and decreased preference at higher compression ratios, it seems reasonable to use the lowest possible compression ratio that will maximize audibility across a range of speech levels. This may require decreasing gain for soft sounds and/or increasing gain for loud sounds, as long as soft sounds remain audible and loud sounds do not cause discomfort.
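The dependence of the compression ratio on these two gains can be seen with a little arithmetic. The hypothetical helper below computes the static compression ratio between two input levels, assumed both to lie above the compression threshold, from the gain applied at each. Holding the high-level gain fixed and raising the low-level gain increases the ratio while also increasing the output for soft inputs, which is the audibility trade-off described above.

```python
def compression_ratio(low_in_db, high_in_db, gain_low_db, gain_high_db):
    """Static compression ratio between two input levels, given the gain at each."""
    input_range = high_in_db - low_in_db
    output_range = (high_in_db + gain_high_db) - (low_in_db + gain_low_db)
    return input_range / output_range


# Hypothetical fitting: 50 and 80 dB SPL inputs, high-level gain fixed at 10 dB
for gain_low in (20.0, 30.0):
    ratio = compression_ratio(50.0, 80.0, gain_low, 10.0)
    print(f"low-level gain {gain_low:.0f} dB -> compression ratio {ratio:.1f}:1")
```

Read in reverse, the same arithmetic shows that lowering the ratio for a fixed loud-sound gain means giving up some gain for soft sounds, which is acceptable only while they remain audible.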

Setting Attack and Release Time

Attack time does not vary much and usually cannot be adjusted by the clinician. A short attack time is important to allow the hearing aid to respond quickly to increases in sound level. However, an attack time that is too short (<1 millisecond) may be perceived by the listener as a click. Walker and Dillon (1982) recommend attack times between 2 and 5 milliseconds.

Release times have a wide range of possible settings and may have an impact on speech intelligibility and sound quality. There is no consensus about the optimal release time (Dillon, 1996; Hickson, 1994; Jenstad et al., 1999). Shorter release times minimize intensity differences between speech peaks (eg, high-intensity vowels) and valleys (eg, low-intensity consonants) and therefore can provide greater speech audibility (eg, Jenstad and Souza, 2002a). As an example, recall that a compression hearing aid applies greater gain to low-intensity input signals and less gain to high-intensity input signals. Consider a speech signal with a high-intensity vowel followed by a low-intensity consonant. The variable gain amplifier would respond to the high-intensity vowel with increased compression (ie, decreased gain). With a short release time, gain recovers quickly for the low-intensity consonant, allowing for greater consonant audibility.

Next, consider the same speech stimulus processed with a long release time. Again, the variable gain amplifier would respond to the high-intensity vowel with increased compression (ie, decreased gain). In this case, however, the hearing aid recovers its gain slowly; that is, it takes a longer time to return to higher gain (and output). With gain still low, the low-intensity consonant receives little gain, and may be inaudible to the listener.
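The vowel-consonant example can be simulated with a simple level detector. The sketch below smooths the input level (in dB) with separate attack and release time constants and converts the smoothed level to gain with a static WDRC rule. All parameters are hypothetical (compression threshold 45 dB SPL, ratio 3:1, 30 dB of gain below threshold, 5-millisecond attack), and treating the release time as an exponential time constant is a simplification; standardized definitions of attack and release time differ.

```python
import math

def static_gain_db(level_db, gain_below_ct=30.0, ct_db=45.0, ratio=3.0):
    """Gain of a hypothetical WDRC stage at a given (smoothed) input level."""
    if level_db <= ct_db:
        return gain_below_ct
    return gain_below_ct - (level_db - ct_db) * (1.0 - 1.0 / ratio)

def gain_track(levels_db, attack_ms, release_ms, dt_ms=1.0):
    """Smooth the input level with separate attack/release time constants, then map to gain."""
    a_att = math.exp(-dt_ms / attack_ms)
    a_rel = math.exp(-dt_ms / release_ms)
    smoothed = levels_db[0]
    gains = []
    for x in levels_db:
        a = a_att if x > smoothed else a_rel  # rising level -> attack, falling -> release
        smoothed = a * smoothed + (1.0 - a) * x
        gains.append(static_gain_db(smoothed))
    return gains

# Hypothetical input: a 150-ms vowel at 75 dB SPL followed by a 60-ms consonant at 50 dB SPL,
# in 1-ms steps. Index 169 is 20 ms after consonant onset.
signal = [75.0] * 150 + [50.0] * 60
for release in (12.0, 800.0):
    g = gain_track(signal, attack_ms=5.0, release_ms=release)
    print(f"release {release:5.0f} ms: gain 20 ms into consonant = {g[169]:.1f} dB")
```

With these assumptions, the 12-millisecond release has restored gain to about 23.5 dB by 20 milliseconds into the consonant, whereas the 800-millisecond release leaves it near 10.4 dB, close to the 10 dB applied to the vowel.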

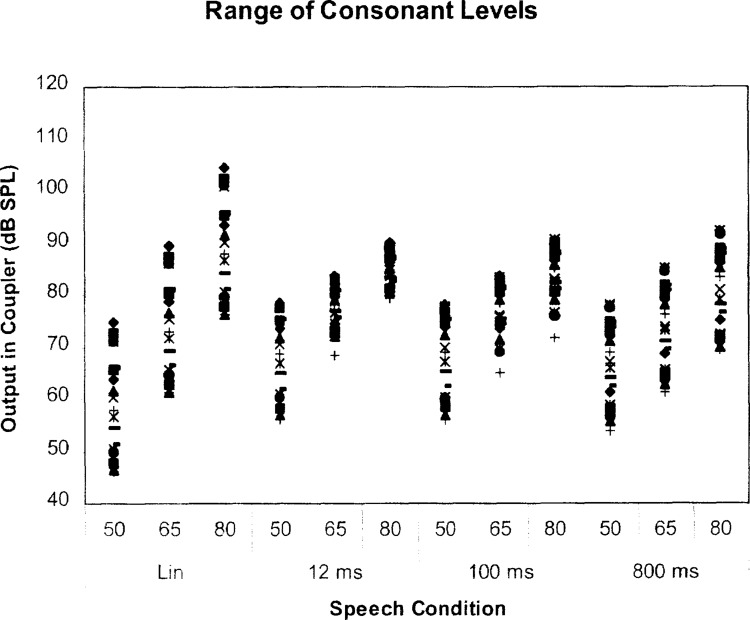

We can use acoustic measurements to illustrate the consequences of release time for speech audibility. Figure 10 summarizes the effect of input level and release time on consonant audibility. The most obvious effect is a systematic increase in output level at higher input levels. Additionally, the spread of consonant levels (ie, the difference between the lowest-intensity and highest-intensity consonants) is reduced with shorter release times, both within an input level and across input levels.

Figure 10.

Consonant levels (in dB SPL) measured in a 2cc coupler as a function of release time and input level. Each point represents the level of an individual consonant. Data are shown for simulated linear amplification and for three simulated WDRC amplification conditions, with release times of 12, 100, and 800 milliseconds. A flat frequency response was used. In each amplification condition, results are presented for three input levels: 50, 65, and 80 dB SPL.

In addition to improving audibility for brief, low-intensity speech components, a short release time may even out small amplitude fluctuations, making it easier to detect gaps in the signal (Glasberg and Moore, 1992; Moore et al., 2001). However, fast-acting compression also alters the natural amplitude contrast between vowels and consonants, which may be an invariant cue for speech identification (Hickson and Byrne, 1997; Hickson et al., 1999; Plomp, 1988). Thus, shorter release times can also minimize or distort temporal cues.

A hearing aid with a long release time cannot respond quickly to changes in level between individual phonemes. However, with longer release times, the natural amplitude contrast between the vowel and consonant is preserved (Jenstad and Souza, 2002a). Therefore, compression with longer release times may be a better choice for listeners who rely on variations in speech amplitude.

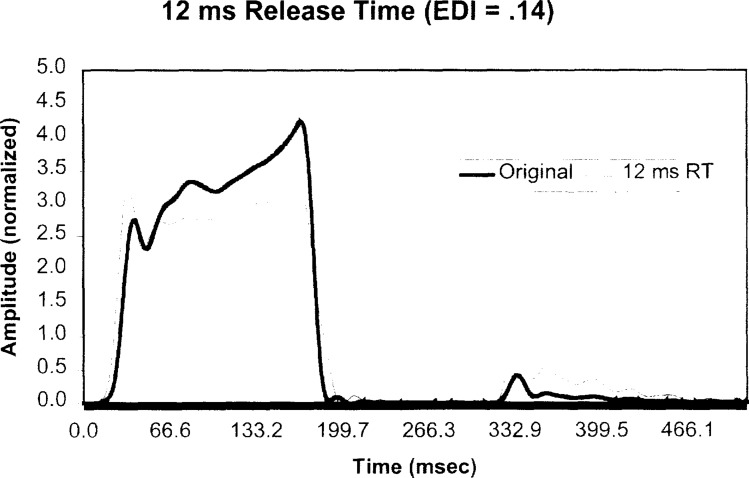

Acoustic measurements can also be used to describe the effect of release time on speech amplitude variations. Figure 11 compares the amplitude envelope for the syllable /ip/ for a linear circuit (thick line) and a WDRC circuit with a 12-millisecond release time (thin line). Amplitude was normalized to emphasize the differences in amplitude envelope rather than differences in overall level, which also depend on the individual gain prescription and volume control setting. Acoustically, WDRC markedly alters both the amplitude contrast between the consonant and vowel and the rise time of the initial portion of the consonant. Specifically, WDRC reduces the vowel level and increases the consonant level relative to the original speech token.

Figure 11.

A comparison of amplitude envelopes for linear (thick line) and compressed (thin line) amplification of the syllable /ip/.

To describe these effects, it is helpful to use an index that quantifies the degree of temporal change to the signal. One available measure is the envelope difference index (EDI; Fortune et al., 1994), which was designed to describe the temporal effects of amplification by quantifying the change in temporal envelope between two signals. Briefly, the amplitude envelope of each signal is obtained (by rectifying and low-pass filtering), and the difference between the two envelopes is expressed on a scale from 0 to 1, with 0 representing complete correspondence and 1 representing no correspondence. For the syllables compared in Figure 11, the calculated EDI is 0.14. How much the speech is altered depends not only on the release time but also on input level, compression threshold, compression ratio, frequency response, and number of compression channels. Nonetheless, the EDI can be used to describe systematically the effect of varying release time on individual phonemes.
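The EDI calculation can be sketched as follows, assuming the commonly cited formulation: each envelope is scaled to a mean of 1, and the EDI is the summed absolute difference between the envelopes divided by twice the number of samples, which keeps it between 0 and 1. A simple one-pole smoother stands in for the low-pass filter; the smoother, sampling rate, and test tokens are assumptions for illustration, not the exact procedure of Fortune et al. (1994).

```python
import math

def envelope(signal, smoothing=0.99):
    """Amplitude envelope: full-wave rectification, then a one-pole low-pass smoother (assumed)."""
    env, state = [], 0.0
    for x in signal:
        state = smoothing * state + (1.0 - smoothing) * abs(x)
        env.append(state)
    return env

def envelope_difference_index(sig_a, sig_b, smoothing=0.99):
    """EDI between two equal-length signals: 0 = identical envelopes, 1 = no correspondence."""
    if len(sig_a) != len(sig_b) or not sig_a:
        raise ValueError("signals must be non-empty and of equal length")
    env_a = envelope(sig_a, smoothing)
    env_b = envelope(sig_b, smoothing)
    mean_a = sum(env_a) / len(env_a)
    mean_b = sum(env_b) / len(env_b)
    if mean_a == 0.0 or mean_b == 0.0:
        raise ValueError("signals must not be silent")
    # Scale each envelope to a mean of 1 so that overall level differences are ignored.
    norm_a = [e / mean_a for e in env_a]
    norm_b = [e / mean_b for e in env_b]
    return sum(abs(a - b) for a, b in zip(norm_a, norm_b)) / (2 * len(norm_a))

FS = 8000             # hypothetical sampling rate (Hz)
N_SAMPLES = FS // 2   # 500-ms token

def token(consonant_scale):
    """Hypothetical token: a 500-Hz 'vowel' (first half) and a weaker 'consonant' (second half)."""
    return [(1.0 if n < N_SAMPLES // 2 else consonant_scale)
            * math.sin(2 * math.pi * 500 * n / FS) for n in range(N_SAMPLES)]

original = token(0.1)
compressed = token(0.4)  # consonant raised relative to the vowel, as WDRC tends to do
print(envelope_difference_index(original, original))    # identical envelopes give 0.0
print(envelope_difference_index(original, compressed))  # a value between 0 and 1
```

Because each envelope is normalized to its own mean, a copy of the token that is simply louder yields an EDI of 0; only changes in envelope shape, such as the reduced vowel-to-consonant contrast in `compressed`, raise the index.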

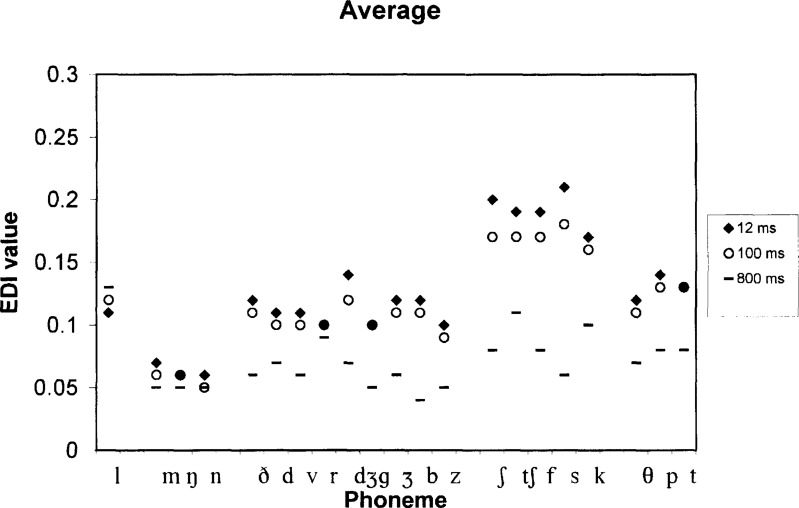

The relationship between release time and EDI is plotted in Figure 12 for a variety of consonants presented in an /iC/ format. Shorter release times altered the amplitude envelope more than longer release times, although there was little difference between release times of 12 and 100 milliseconds for most consonants. These effects were also phoneme-dependent, with the greatest alteration for voiceless fricatives and affricates and minimal effects for nasals and glides.

Figure 12.

Envelope Difference Index (EDI) for vowel-consonant syllables as a function of release time.

Evidently, varying release time can introduce significant differences in speech audibility or temporal cues. The consequences of these acoustic changes for intelligibility are less clear. Based on research data available at the time, Walker and Dillon (1982) suggested that a release time between 60 and 150 milliseconds would provide the best speech intelligibility. Several studies show little effect of release time on sentence intelligibility in listeners with a mild-to-moderate loss, for release times up to 200 milliseconds (Bentler and Nelson, 1997; Jerlvall and Lindblad, 1978; Novick et al., 2001). However, changes in release time may have more subtle effects. The amplitude envelope has been shown to carry cues important for the identification of some phonemes (eg, Turner et al., 1995), and altering these cues may affect phoneme recognition for listeners who rely on them (Souza et al., 2001). Finally, the issue is complicated by the use of adaptive release times, which vary depending on the duration, intensity, and crest factor of the triggering signal (Fortune, 1997).

Changes in release time may also affect speech quality. Results have been mixed: some studies show no distinct preference for any release time (Neuman et al., 1994; Bentler and Nelson, 1997), while others show that speech processed with longer release times (>200 milliseconds) is rated as more pleasant or less noisy than speech processed with shorter release times (Hansen, 2002; Neuman et al., 1998).

In summary, there is a possible conflict between listener preference, which may favor longer release times, and speech intelligibility, which may favor shorter release times. It is also important to consider the interaction between release time and other processing features, such as the number of channels and the compression threshold of the hearing aid. For example, because a lower compression threshold can improve audibility, a hearing aid with a slow release time may improve intelligibility if paired with a lower compression threshold. Also, the combination of multiple compression channels with short release times may cause significant temporal and spectral smearing (eg, Moore and Glasberg, 1986). Thus, in a multichannel hearing aid, a longer release time may improve intelligibility over a shorter release time.

Number of Compression Channels

How many channels should be used? Presumably, the more channels, the more control the clinician has over signal characteristics and speech audibility. With a large number of compression channels, however, relative differences in level across frequency (ie, spectral peak-to-valley differences) will be reduced. Therefore, use of more than two or three channels may substantially reduce spectral contrast in the speech signal, potentially degrading temporal and spectral cues (Bustamante and Braida, 1987; Dreschler, 1992; Moore and Glasberg, 1986). Any negative effects of increasing numbers of channels are likely to have the greatest consequences for sounds that carry important information in the spectral domain, such as vowels and the nasal consonants /m, n, ŋ/ (Kent and Read, 1992). For example, the most important cue for vowel identity is detection of spectral peaks relative to the surrounding frequency components. Even if overall audibility of the sound is improved, reduced spectral contrast may reduce intelligibility. Differences in the number of channels could explain the discrepancy between investigators who demonstrate improved vowel intelligibility using WDRC with a small number of channels (eg, Dreschler et al., 1988b and 1989; Stelmachowicz et al., 1995) and those who show a detrimental effect. For example, Franck et al. (1999) showed that vowels were harder to identify via an eight-channel compression hearing aid than via a single-channel compression hearing aid.
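The loss of spectral contrast can be illustrated with a static input/output rule applied to two adjacent frequency regions, a formant peak and a spectral valley. In the sketch, a single channel applies one gain to both regions, whereas two independent channels compress each region according to its own level. The levels, threshold, ratio, and gain are hypothetical, both regions are assumed to lie above the compression threshold, and the single channel's control level is approximated by the peak level.

```python
def compressed_level(level_db, ct_db=45.0, ratio=3.0, gain_db=20.0):
    """Output level of one hypothetical compression channel (static input/output rule)."""
    if level_db <= ct_db:
        return level_db + gain_db
    return ct_db + gain_db + (level_db - ct_db) / ratio

# Hypothetical spectrum: a formant peak at 70 dB SPL next to a valley at 50 dB SPL.
peak_in, valley_in = 70.0, 50.0

# Single channel: one gain for both regions, set by the (peak-dominated) overall level.
shared_gain = compressed_level(peak_in) - peak_in
single = (peak_in + shared_gain) - (valley_in + shared_gain)

# Two channels: each region is compressed according to its own level.
multi = compressed_level(peak_in) - compressed_level(valley_in)

print(f"contrast: input {peak_in - valley_in:.1f} dB, single-channel {single:.1f} dB, "
      f"two-channel {multi:.1f} dB")
```

Under these assumptions, independent channels shrink the 20-dB peak-to-valley difference to about 6.7 dB (20 dB divided by the 3:1 ratio), while the single channel preserves it.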

In a review of published data on multichannel amplification prior to 1994, Hickson (1994) concluded that the best results were obtained with compression systems having three or fewer channels. For speech intelligibility in general, recent data suggest that multichannel systems with up to four channels are equivalent to, but not superior to, single-channel systems (eg, Keidser and Grant, 2001b; van Buuren et al., 1999).

For studies that demonstrated improved performance with greater numbers of channels, the advantage appears to be one of improved audibility rather than the number of channels per se. For example, Yund and Buckles (1995b) demonstrated improved nonsense syllable recognition in noise as the number of channels increased from four to eight. Comparison of consonant confusions and frequency responses for the different numbers of channels was consistent with improved high-frequency audibility. The authors note that results of multichannel compression experiments should be interpreted in the context of the stimuli used. In this case, no additional improvement was seen with more than eight channels, perhaps because the eight-channel system already provided sufficient information for recognition of high-frequency consonants. Similarly, Braida et al. (1982) pointed out that some early studies that showed a large advantage for multichannel compression likely provided improved high-frequency audibility relative to a linear condition.

For most audiometric configurations, two-channel or three-channel compression hearing aids seem to offer a good compromise between customizing the hearing aid response and preserving coherent spectral contrast. For more unusual audiometric configurations (eg, rising or cookie-bite audiograms), larger numbers of channels are appealing. Available data on larger numbers of channels are mixed, although larger numbers of channels should be most advantageous when adequate frequency shaping is provided (Crain and Yund, 1995); when adding more channels improves speech audibility over a smaller number of channels; and when compression ratios are low enough to avoid distortion of speech components (Yund and Buckles, 1995b). Larger numbers of channels also have potential benefits for feedback cancellation. The audibility advantage of multichannel compression may be greatest for listeners with a mild-to-moderate loss (Yund and Buckles, 1995a).

Candidacy for Compression Amplification

Is one type of compression system best for every patient? No, almost certainly not. In research studies, conclusions usually reflect the average or majority result. For example, in a recent study, 7 of 16 subjects demonstrated improved performance with a compression aid versus a linear aid, 5 showed no difference, and 4 showed degraded performance. The overall conclusion was that WDRC was superior to linear amplification (Yund and Buckles, 1995a). While such statistical conclusions follow accepted research standards, the underlying individual variability is of great interest to clinicians, whose goal is to determine the optimal hearing aid processing for an individual patient. Numerous studies show such differences in performance across subjects, with improved scores with compression for some listeners but not for others (eg, Benson et al., 1992; Laurence et al., 1983; Moore, Johnson et al., 1992; Tyler and Kuk, 1989; Walker et al., 1984). Individual performance differences are noted even across listeners with the same amount of hearing loss (eg, Boothroyd et al., 1988). Clearly, more research is needed to relate individual audiometric characteristics, suprathreshold processing ability, and previous hearing aid experience to performance with nonlinear amplification. For example, differences in compression benefit may be related to individual differences in dynamic range (Moore, Johnson et al., 1992; Peterson et al., 1990), to the configuration of the audiogram (Souza and Bishop, 2000), or to the perceptual weights individual listeners place on different portions of the signal (Doherty and Turner, 1996). The next sections review recent research on the benefits of compression amplification for specific audiometric groups.

Use of WDRC for Severe-to-Profound Loss

Until recently, most listeners with a severe-to-profound loss were fit with either linear peak clipping or linear compression limiting aids, both of which operate linearly in most situations. The availability of high-gain wide dynamic range compression aids offers new options for those with greater degrees of hearing loss. Nearly all of the major programmable and digital product lines are now available in a power behind-the-ear style (Buyer's Guide, 2001). However, most research has focused on listeners with mild-to-moderate hearing losses. According to dispensing professionals, 23% of hearing aids were dispensed to listeners with hearing thresholds exceeding 70 dB HL (Strom, 2002). Clearly, there is a need for research focused on this special group.

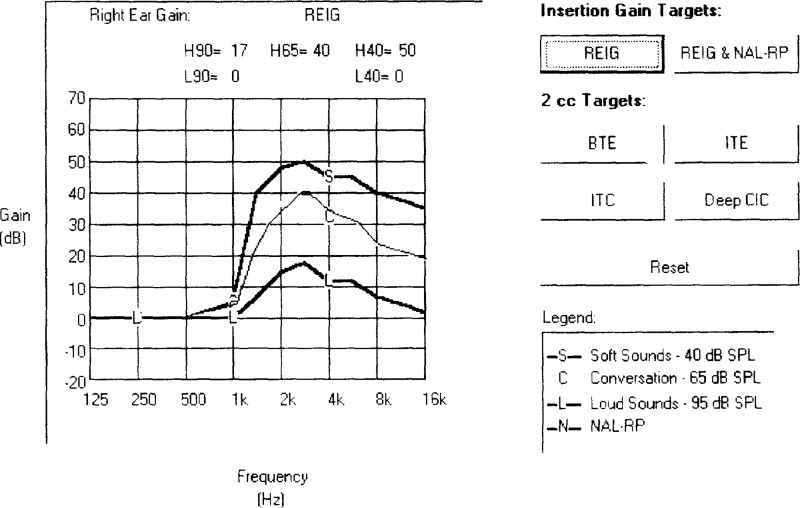

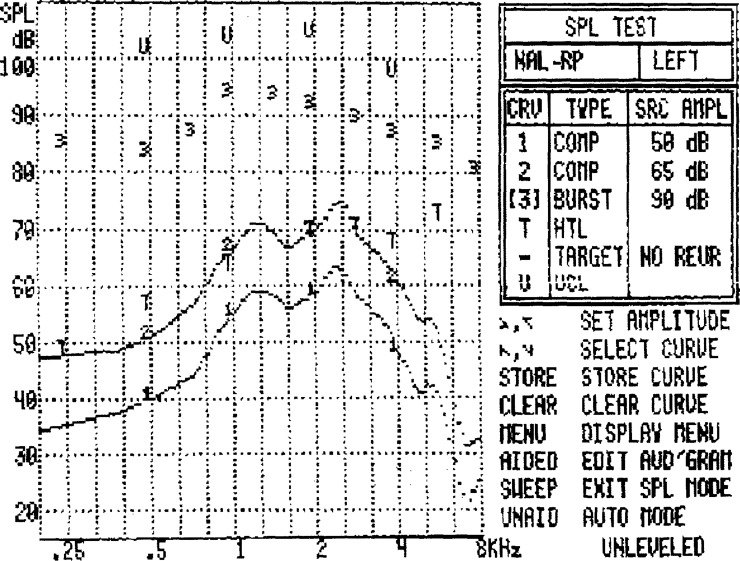

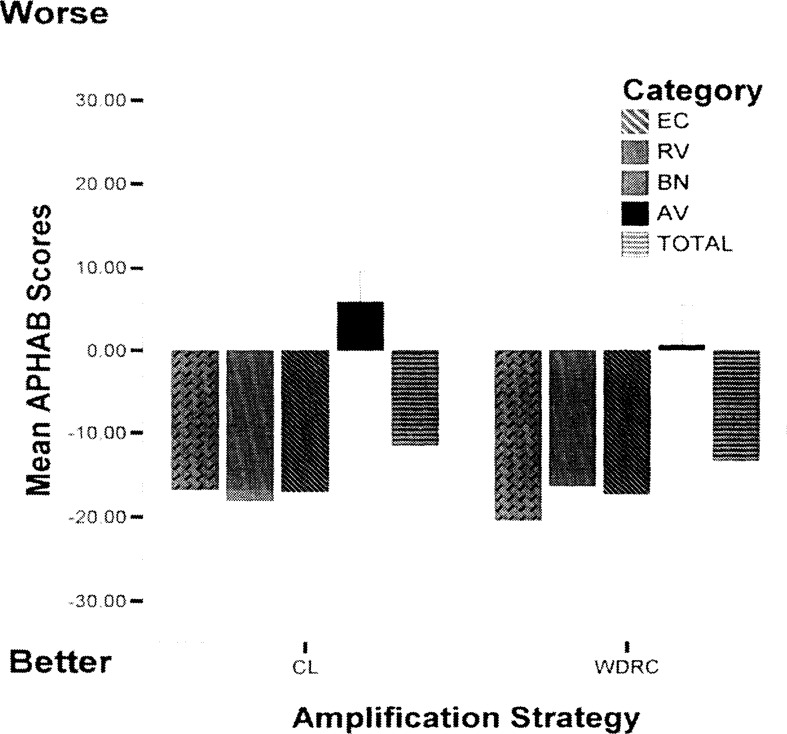

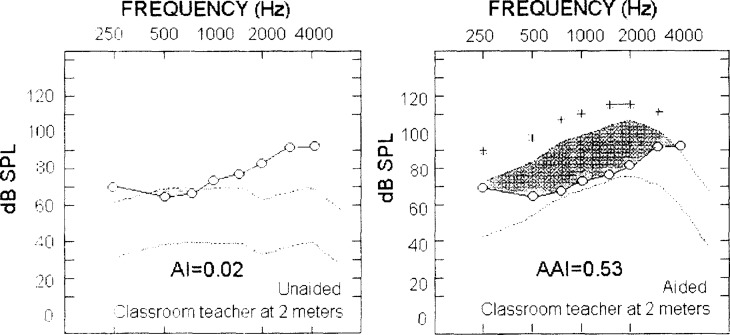

A drastically reduced dynamic range is characteristic of a severe-to-profound hearing loss. The range from threshold to loudness discomfort level can be as small as 5 dB at some frequencies. Because the levels of conversational speech span 30 dB or more, it is difficult to place the full range of speech components within the listener's dynamic range using only linear amplification. In theory, WDRC amplification could be used to solve this problem.
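The arithmetic behind this problem is straightforward: if a range of input levels must fit within the listener's dynamic range, the compression ratio must be at least the input range divided by the dynamic range. The sketch below applies that rule to a 30-dB speech range. It ignores frequency dependence, the compression threshold, and any headroom below the discomfort level, so it is an illustration under simplifying assumptions rather than a fitting rule.

```python
def required_ratio(speech_range_db, dynamic_range_db):
    """Smallest compression ratio that maps a speech level range into a listener's dynamic range."""
    if dynamic_range_db <= 0:
        raise ValueError("dynamic range must be positive")
    # A ratio of 1 (linear) suffices whenever the speech range already fits.
    return max(1.0, speech_range_db / dynamic_range_db)

for dr in (40, 20, 10, 5):
    print(f"dynamic range {dr:2d} dB -> compression ratio of at least {required_ratio(30, dr):.1f}:1")
```

For the 5-dB dynamic range described above, a 30-dB speech range would call for a ratio of at least 6:1, well above the ratios that earlier sections associate with reduced intelligibility and preference.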