Abstract

Immediately before a large eye movement, a target object is crowded by clutter placed near the target’s future location. This new finding, from a recent study, shows that the brain’s remapping for the anticipated eye movement unavoidably combines features from the target’s current and future retinal locations into one perceptual object.

Object recognition proceeds through the selection and combination of features, governed by rules of grouping and crowding [1,2,3,4,5]. This combination is variously called binding, grouping, region growing, or crowding, depending partly on whether the observer recognizes the object. In “crowding”, target identification fails because the feature combination extends unavoidably over an inappropriately large area, jumbling elements of the adjacent clutter with those of the target, which spoils identification [6,7]. The size of this minimum area over which features are combined is roughly the same for all simple objects, like letters, and grows proportionally with eccentricity (distance from fixation) [5,8,9]. Transformed by the cortical magnification factor, the minimum size of the combination region corresponds to a fixed area in mm² on the surface of the cortex [10,11]. This compact region of compulsory combination is roughly centered on the target. In their recent Current Biology paper, Harrison et al. [12] find that, immediately before a large eye movement (saccade), features from two widely separated regions, at the present and future locations of the target, are combined to produce one perceptual object.
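The proportional growth of the combination region with eccentricity can be illustrated with a minimal sketch. The proportionality constant used here (0.5, often quoted as a rough value in the crowding literature) is an assumption for illustration and is not a figure given in this article; the function names are likewise hypothetical.

```python
# Illustrative sketch only. BOUMA_FACTOR is an assumed value, not taken
# from this article; the function names are hypothetical.
BOUMA_FACTOR = 0.5  # assumed ratio of critical spacing to eccentricity


def critical_spacing_deg(eccentricity_deg, bouma_factor=BOUMA_FACTOR):
    """Approximate extent (deg) of the combination region at an eccentricity.

    Implements simple proportionality: spacing = factor * eccentricity.
    """
    if eccentricity_deg < 0:
        raise ValueError("eccentricity must be non-negative")
    return bouma_factor * eccentricity_deg


def is_crowded(target_ecc_deg, flanker_spacing_deg, bouma_factor=BOUMA_FACTOR):
    """True if clutter lies closer to the target than the critical spacing."""
    return flanker_spacing_deg < critical_spacing_deg(target_ecc_deg, bouma_factor)


# The critical spacing doubles when the eccentricity doubles.
for ecc in (2.5, 5.0, 10.0):
    print(f"eccentricity {ecc:4.1f} deg -> critical spacing "
          f"{critical_spacing_deg(ecc):.2f} deg")
```

Under this assumed constant, clutter 3 deg from a target at 10 deg eccentricity would fall inside the combination region, while clutter 6 deg away would not.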

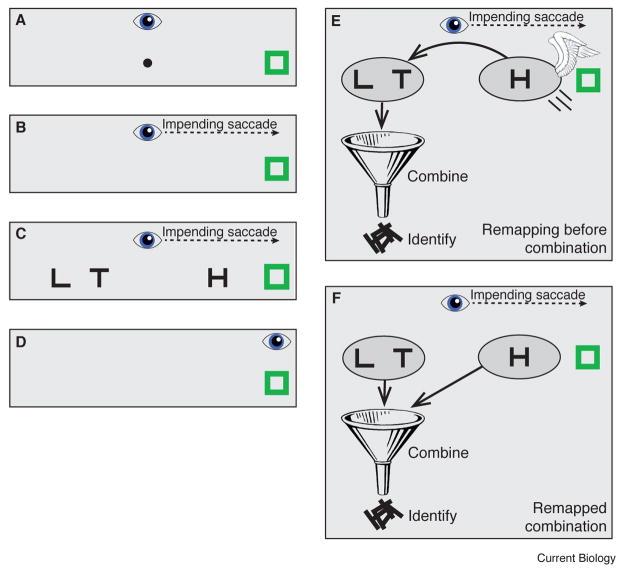

Harrison et al. present target and clutter briefly, immediately before a large saccade (Figure 1). When clutter is placed next to the future retinal location of the target (far away, in the other hemifield), the clutter impairs recognition nearly as strongly as when it is placed next to the target’s current location. As in normal crowding, the strength of the effect depends on similarity between the target and the clutter [13]: An L crowds an H, but an X does not crowd an O. The sensitivity to similarity shows that the impairment is due to crowding, not to the indiscriminate impairment of saccadic suppression [14,15].

Figure 1.

(A) Initially, the observer fixates the central dot. (B) Disappearance of that central dot cues the observer to move his or her eyes rightward to the green square. (C) The random target (shown as H) is briefly presented to the right of fixation. At the same time, clutter is briefly presented to the left of fixation. The duration is 17 ms. (D) The brief target and clutter are gone by the time the eye moves and finally arrives at the green square. (Our simplified diagram omits the other placeholders and the changing content of the green square, which are not discussed here.) (E) Feature Remapping Theory: The features of the target are displaced from its original retinotopic location to its anticipated post-saccadic location. That places the target amid the clutter. Identification unavoidably combines information over a region that includes the (displaced) target and clutter. The combination region, the critical zone for crowding, is represented by a gray oval. This combination degrades identification despite the large offset between the flankers and target in the display. (F) Attention Remapping Theory: The remapping causes identification to be based on a combination of features from two locations: the present and future locations of the target [19]. The combination regions are shown as gray ovals. Again, combining the features of the target and clutter degrades identification. Based on Harrison et al. [12]. Funnel image from Merriam-Webster’s Learner’s Dictionary. http://www.learnersdictionary.com/search/funnel

The story of remapping began with physiological studies of cells that direct saccades to their targets, showing a correction in their target locations for an upcoming eye movement [16]. It was then extended to the allocation of attention, where a similar correction for upcoming saccades was found [17,18]. Now, Harrison et al. use crowding to show that remapping displaces not just location information, but also content, effectively combining the content of two distant regions.

The discovery of remapping by Duhamel, Colby, and Goldberg in 1992 [16] launched intense scientific activity evaluating the mechanisms, brain areas, and pathways involved. They found that almost all the neurons in lateral intraparietal cortex (LIP) begin to fire in response to a stimulus that will be brought into their respective fields by an eye movement, even if the stimulus is extinguished before the eyes arrive. This remapped response is found only for attended targets, either flashed or task-relevant (e.g., [19]), and always moves in the direction opposite the saccade, predicting where the attended target will be after the saccade. The predictive response of these neurons can begin as early as 100 ms before the saccade and tends to peak at the onset of the saccade, much earlier than the cell would be able to respond if the stimulus simply appeared in the cell’s receptive field following the eye movement. This pre-saccadic stimulus activates the cells for the remapped location (which depends on the saccade vector) and, at the same time, it also activates cells that normally respond to the target’s location. As a result, activity can be seen just before the saccade in two widely separated sets of cells, at the target’s actual retinal location and its remapped location, both in response to the same brief stimulus.
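The geometry of the remapped location can be sketched as a simple vector subtraction: the expected post-saccadic retinal location is the current retinal location shifted opposite to the saccade. The sketch below is only an illustration of that arithmetic. The coordinates, the saccade size, and the combination radius are hypothetical values chosen to resemble the layout of Figure 1, not measurements from Harrison et al., and the function names are our own.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in degrees, relative to current fixation


def remapped_location(retinal_xy: Point, saccade_vector: Point) -> Point:
    """Expected post-saccadic retinal location of a stationary stimulus.

    The shift is opposite to the saccade: new = old - saccade_vector.
    """
    return (retinal_xy[0] - saccade_vector[0],
            retinal_xy[1] - saccade_vector[1])


def within_radius(a: Point, b: Point, radius_deg: float) -> bool:
    """True if points a and b are closer together than radius_deg."""
    return math.hypot(a[0] - b[0], a[1] - b[1]) < radius_deg


# Hypothetical layout (all values are assumptions for illustration).
target = (5.0, 0.0)     # target to the right of fixation
saccade = (10.0, 0.0)   # rightward saccade
clutter = (-6.0, 0.0)   # clutter to the left of fixation
radius = 2.5            # assumed combination radius in degrees

future = remapped_location(target, saccade)  # (-5.0, 0.0)

near_current = within_radius(clutter, target, radius)
near_future = within_radius(clutter, future, radius)

print("remapped target location:", future)
print("clutter near current location:", near_current)
print("clutter near remapped location:", near_future)
```

In this hypothetical layout the clutter is far from the target's current retinal location but close to its remapped location, which is the configuration in which remapped crowding is reported.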

These physiological studies were followed by behavioral studies that showed a similar pre-saccadic shift of attention by placing probes [17] at the target’s remapped location just before a saccade. Moreover, there is compelling evidence from time stamping and masking, and now crowding, showing that remapping does not just displace location information but generates a perceived target object that combines target information from the target’s pre- and (expected) post-saccadic retinal locations. When observers are asked to saccade to a clock with rapidly spinning hands and report the time at which their eyes arrive, they report times 40–60 ms before their eyes actually arrive [20]. Masking and crowding are very different [7]. Masking requires overlap; crowding requires proximity. Without a saccade, a mask must overlap the target to impair its visibility, but immediately before a saccade a mask is effective at the target’s post-saccadic location [18]. These “bi-local” results are now buttressed by the Harrison et al. finding of remapped crowding. As already noted, without a saccade, clutter must be near the target to crowd it, but, immediately before a saccade, clutter is also effective at the target’s post-saccadic retinal location [12]. Since the range of masking and crowding is fairly small, and the size of saccades is quite variable, it would be interesting to measure the spatial profile of remapping to discover how accurately the pre-saccadic remapping predicts the saccade.

Thus, Harrison et al. now show that the brain’s remapping for the anticipated eye movement unavoidably combines features from the current and future retinal locations of the target into one perceptual object (see Figure 1 for two proposals on how this might happen).

Contributor Information

Denis G. Pelli, Email: denis.pelli@nyu.edu, Psychology Department and Center for Neural Science, New York University, New York, New York, USA

Patrick Cavanagh, Laboratoire Psychologie de la Perception, Université Paris Descartes, CNRS UMR 8158, Paris, France.

References

- 1. Pelli DG, Burns CW, Farell B, Moore-Page DC. Feature detection and letter identification. Vision Res. 2006;46:4646–4674. doi: 10.1016/j.visres.2006.04.023.

- 2. Suchow JW, Pelli DG. Learning to detect and combine the features of an object. Proceedings of the National Academy of Sciences. 2013;110(2):785–790. doi: 10.1073/pnas.1218438110.

- 3. Wertheimer M. Untersuchungen zur Lehre von der Gestalt, II [Laws of organization in perceptual forms]. Psychologische Forschung. 1923;4:301–350.

- 4. Levi DM. Crowding–an essential bottleneck for object recognition: a mini-review. Vision Res. 2008;48:635–654. doi: 10.1016/j.visres.2007.12.009.

- 5. Pelli DG, Tillman KA. The uncrowded window of object recognition. Nature Neuroscience. 2008;11(10):1129–1135. doi: 10.1038/nn.2187.

- 6. Stuart JA, Burian HM. A study of separation difficulty: Its relationship to visual acuity in normal and amblyopic eyes. American Journal of Ophthalmology. 1962;53:471–477.

- 7. Pelli DG, Palomares M, Majaj NJ. Crowding is unlike ordinary masking: distinguishing feature integration from detection. J Vis. 2004;4:1136–1169. doi: 10.1167/4.12.12.

- 8. Bouma H. Interaction effects in parafoveal letter recognition. Nature. 1970;226:177–178. doi: 10.1038/226177a0.

- 9. Toet A, Levi DM. The two-dimensional shape of spatial interaction zones in the parafovea. Vision Res. 1992;32:1349–1357. doi: 10.1016/0042-6989(92)90227-a.

- 10. Pelli DG. Crowding: A cortical constraint on object recognition. Current Opinion in Neurobiology. 2008;18(4):445. doi: 10.1016/j.conb.2008.09.008.

- 11. Freeman J, Simoncelli EP. Metamers of the ventral stream. Nature Neuroscience. 2011;14(9):1195–1201. doi: 10.1038/nn.2889.

- 12. Harrison WJ, Retell JD, Remington RW, Mattingley JB. Visual crowding at a distance during predictive remapping. Curr Biol. 2013; 6th May issue.

- 13. Kooi FL, Toet A, Tripathy SP, Levi DM. The effect of similarity and duration on spatial interaction in peripheral vision. Spatial Vision. 1994;8(2):255–279. doi: 10.1163/156856894x00350.

- 14. Burr DC, Morrone MC, Ross J. Selective suppression of the magnocellular visual pathway during saccadic eye movements. Nature. 1994;371(6497):511–513. doi: 10.1038/371511a0.

- 15. Castet E, Masson GS. Motion perception during saccadic eye movements. Nature Neuroscience. 2000;3(2):177–183. doi: 10.1038/72124.

- 16. Duhamel JR, Colby CL, Goldberg ME. The updating of the representation of visual space in parietal cortex by intended eye movements. Science. 1992;255:90. doi: 10.1126/science.1553535.

- 17. Rolfs M, Jonikaitis D, Deubel H, Cavanagh P. Predictive remapping of attention across eye movements. Nature Neuroscience. 2010;14(2):252–256. doi: 10.1038/nn.2711.

- 18. Hunt AR, Cavanagh P. Remapped visual masking. Journal of Vision. 2011;11(1). doi: 10.1167/11.1.13. http://www.journalofvision.org/content/11/1/13.

- 19. Kusunoki M, Goldberg ME. The time course of perisaccadic receptive field shifts in the lateral intraparietal area of the monkey. Journal of Neurophysiology. 2003;89:1519–1527. doi: 10.1152/jn.00519.2002.

- 20. Hunt AR, Cavanagh P. Looking ahead: The perceived direction of gaze shifts before the eyes move. Journal of Vision. 2009;9(9):1, 1–7. doi: 10.1167/9.9.1. http://www.journalofvision.org/content/9/9/1.