Abstract

Objective

To evaluate the efficacy of a mammography boot camp (MBC) to improve radiologists' performance in interpreting mammograms in the National Cancer Screening Program (NCSP) in Korea.

Materials and Methods

Between January and July of 2013, 141 radiologists were invited to a 3-day educational program composed of lectures and group practice readings using 250 digital mammography cases. The radiologists' performance in interpreting mammograms was evaluated using a pre- and post-camp test set of 25 cases validated prior to the camp by experienced breast radiologists. Factors affecting the radiologists' performance, including age, type of attending institution, and type of test set cases, were analyzed.

Results

The average scores of the pre- and post-camp tests were 56.0 ± 12.2 and 78.3 ± 9.2, respectively (p < 0.001). The post-camp test scores were higher than the pre-camp test scores for all age groups and all types of attending institutions (p < 0.001). The rate of incorrect answers in the post-camp test decreased compared to the pre-camp test for all suspicious cases, but not for negative cases (p > 0.05).

Conclusion

The MBC improves radiologists' performance in interpreting mammograms irrespective of age and type of attending institution. Improved interpretation is observed for suspicious cases, but not for negative cases.

Keywords: Breast, Breast neoplasms, Mammography, Education, Mass screening

INTRODUCTION

Since 1999, the National Cancer Screening Program (NCSP) in Korea has recommended biennial screening mammograms for females 40 years of age or older (1, 2). However, the outcome of breast cancer screening has been poor, showing the worst results among the five cancers included in the NCSP (3, 4). Additionally, the cancer detection rate, sensitivity, and positive predictive value did not reach the levels recommended by the American College of Radiology (ACR) (5). Therefore, to improve breast cancer screening, quality control of mammography equipment, technicians, and radiologists is needed. Quality control of mammography equipment and technicians has been performed by the Korean Institute for Accreditation of Medical Imaging since 2003 (6); however, that of radiologists has never been implemented. Because mammograms in the NCSP should be interpreted by radiologists, their performance may be the most important factor in improving the quality of breast cancer screening (7).

The Korean Society of Breast Imaging (KSBI) was formed in 1992 and was officially included as a sub-specialty of the Korean Society of Radiology (KSR) in 1994 (8). Breast imaging was included recently in the resident training programs of the KSR (9); the training, especially interpretation of mammograms, was previously insufficient. Therefore, a dedicated educational program for interpreting mammograms is required as part of the NCSP.

To improve the interpretation of mammograms, the KSR and KSBI developed the mammography boot camp (MBC). The MBC is a 3-day educational program modeled on the Breast Imaging Boot Camp (BIBC) of the ACR (10). In 2012, the KSR and KSBI, with support from the National Cancer Center, conducted MBCs that demonstrated an improvement in radiologists' skill in interpreting mammograms (11). The average post-camp test score was higher than the average pre-camp test score (71.7 ± 9.2 vs. 58.3 ± 12.8), and attendees' program satisfaction averaged 96.4%. Several weak points, including validation of the test set cases, were addressed in the 2013 update.

In this study, we evaluated radiologists' performance in interpreting mammograms using the updated MBC and analyzed the factors affecting their performance.

MATERIALS AND METHODS

This study was approved by the Institutional Review Board of the corresponding author's institution and the need for informed consent from participants was waived.

Attendees

Between January and July 2013, the KSR and KSBI conducted the updated MBC four times. The target population was radiologists, excluding physicians, surgeons, and technicians because the Ministry of Health and Welfare Notice states that NCSP mammograms should be interpreted only by radiologists (7). Any radiologist interested in interpreting mammograms could apply online to attend the MBC irrespective of participation in the NCSP. The registration form included the attendee's name, age, gender, the name of the attending institution, and the year and acquisition number of board certification in radiology. Applying radiologists were registered on a first-come, first-served basis.

The MBC Group Practice Reading

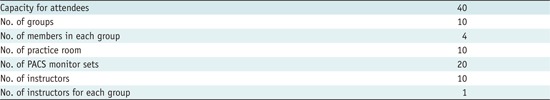

The MBC was a 3-day event consisting of lectures, group practice readings, and pre- and post-camp tests (Appendix 1, 2). For group practice reading, the applicants were divided into 10 groups, each of which was composed of four attendees of similar age. For each group, two sets of 3- or 5-megapixel monitors (WIDE, WIDE Corp., Yongin, Korea; BARCO, Barco Inc., Duluth, GA, USA; or TOTOKU, JVCKENWOOD Corporation, Yokohama, Japan) were provided. Two types of picture archiving and communication system (PACS) software were used: Marosis (INFINITT Healthcare, Seoul, Korea) and Deja-View (Dongeun IT, Bucheon, Korea). A brief lecture on the use of the PACS software was given immediately before the start of the group practice reading.

Ten instructors facilitated group practice readings; all were experienced members of the KSBI. Seven had attended the BIBC of the ACR and facilitated the MBCs in 2012. During the group practice reading, attendees rotated 10 times among stations and interpreted 250 digital screening mammography cases prepared by instructors. Images of patients with palpable markers or surgical scars were excluded. Each case was composed of four routine mammography views without prior examinations. The ratio of negative/benign cases to suspicious cases was approximately 1:4. Attendees were asked to recall suspicious finding(s) of category 4 or 5 according to the Breast Imaging Reporting and Data System (12) and choose one answer from the following four choices reflecting side-specific accuracy: if the case was negative or had benign finding(s) only, choice 1 (Fig. 1); if the case had suspicious finding(s) in the right breast, choice 2 (Fig. 2); if the case had suspicious finding(s) in the left breast, choice 3 (Fig. 3); if the case had suspicious findings in both breasts, choice 4 (Fig. 4). After completion of each group practice, the instructor provided a case review with slides prepared using PowerPoint (Microsoft).

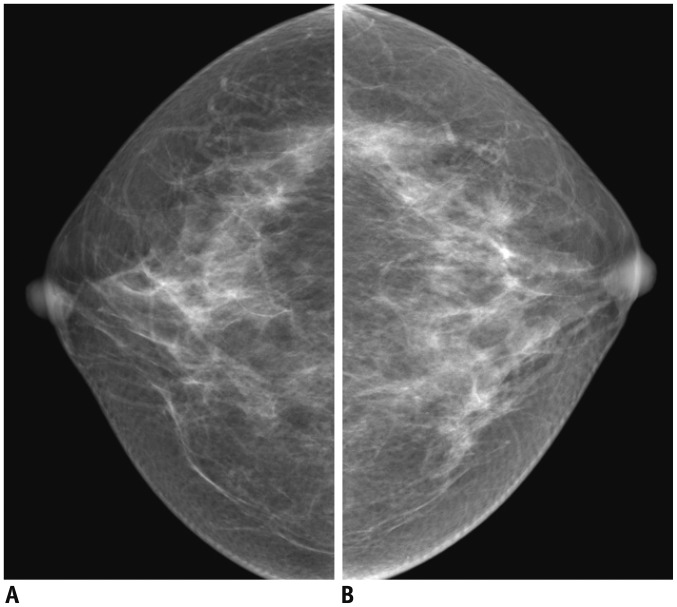

Fig. 1.

Example case of choice 1 in group practice reading.

Both craniocaudal views (A, B) show negative findings. Follow-up mammogram after 3 years (not shown) shows negative findings confirming case as truly negative.

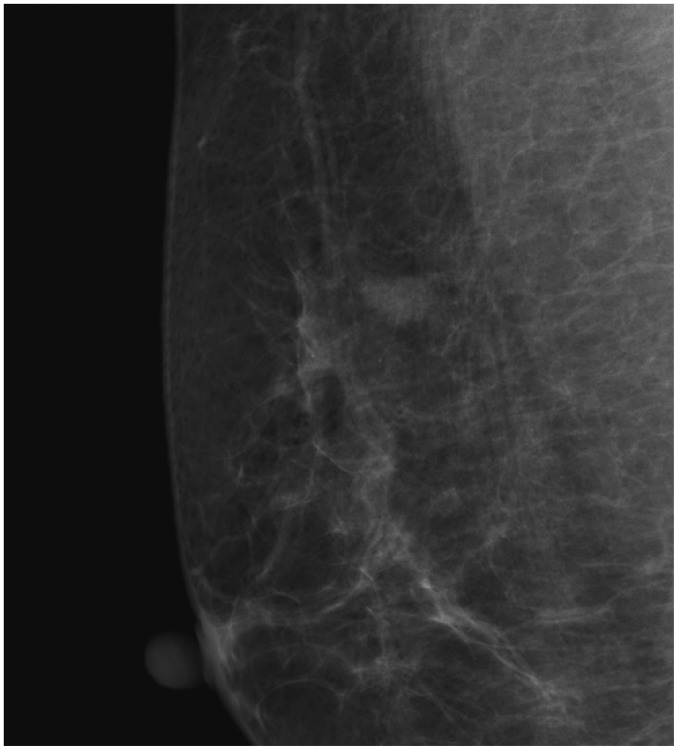

Fig. 2.

Example case of choice 2 in group practice reading.

Right mediolateral oblique view shows small lobular microlobulated isodense mass in upper portion that was confirmed to be invasive ductal carcinoma.

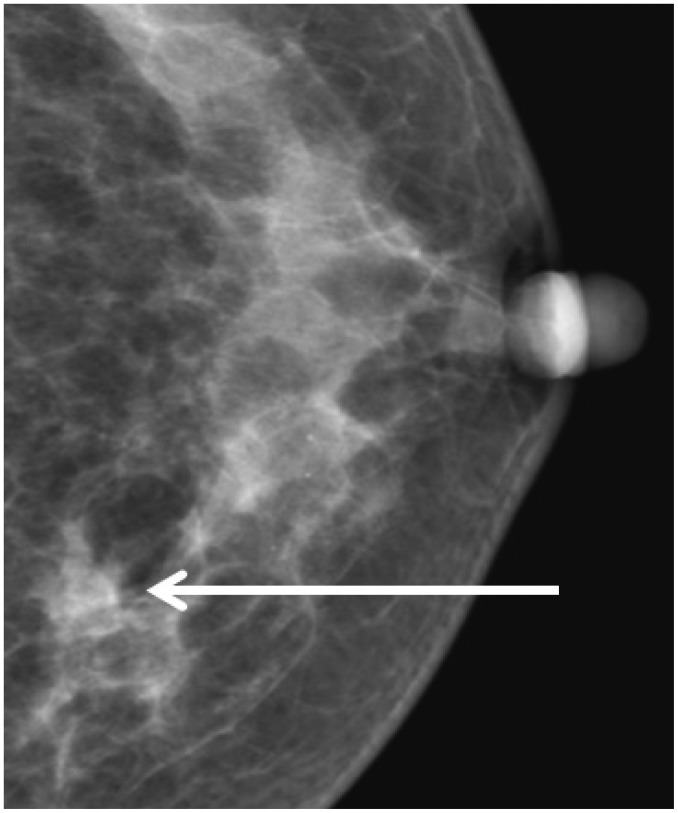

Fig. 3.

Example case of choice 3 in group practice reading. Left craniocaudal view shows asymmetry (arrow) in inner portion that was confirmed to be invasive ductal carcinoma.

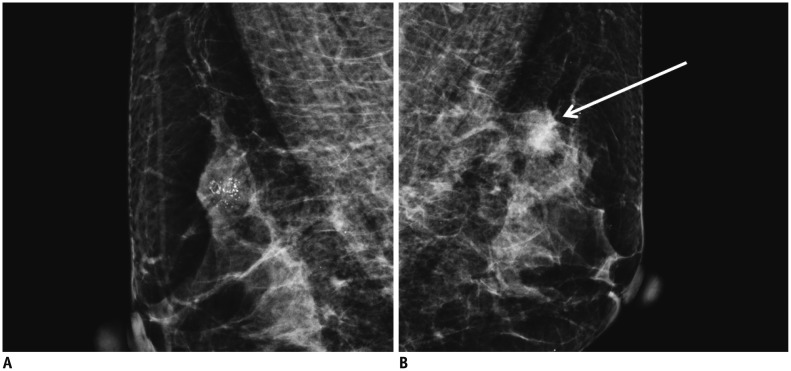

Fig. 4.

Example case of choice 4 in group practice reading.

Right mediolateral oblique (MLO) view (A) shows clustered fine pleomorphic calcifications in upper portion and left MLO view (B) shows round, obscured hyperdense mass (arrow) in upper portion. These were confirmed to be ductal carcinoma in situ in right breast and invasive ductal carcinoma in left breast.

Pre- and Post-Camp Tests

Pre- and post-camp tests were performed to evaluate attendees' improvement in interpreting mammograms. Prior to the MBC, the test set cases were selected from among 30 candidate cases. Twenty-six experienced members of the KSBI were invited to validate the candidate cases and participated as a control group. The answering method was the same as for the group practice reading. As a result of the validation, four cases were considered too difficult, with a rate of incorrect answers over 50%, and two cases were considered too easy, with a 0% rate of incorrect answers. These six cases were excluded and one new case was added via consensus of the authors. Finally, 25 test set cases were prepared with a ratio of negative/benign cases to suspicious cases of 1:2.5. The patterns in the 18 suspicious cases were as follows: 13 masses or asymmetries, 4 pure microcalcifications, and 1 axillary lymphadenopathy. The remaining seven cases comprised six negative cases and one benign case with a false-positive finding.
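The exclusion rule applied during validation can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `select_validated_cases`, the case IDs, and the per-case counts are hypothetical and are not the study data. Only the two thresholds (a rate of incorrect answers over 50%, or a rate of 0%) and the number of validating readers (26) come from the text.

```python
def select_validated_cases(incorrect_counts, n_readers, max_rate=0.5):
    """Keep candidate cases that are neither too difficult nor too easy.

    incorrect_counts: dict mapping a case ID to the number of validating
                      readers who answered that case incorrectly
    n_readers:        number of validating readers (26 in the study)
    max_rate:         cases with a rate of incorrect answers above this
                      value are treated as too difficult
    """
    kept = []
    for case_id, n_incorrect in incorrect_counts.items():
        rate = n_incorrect / n_readers
        too_difficult = rate > max_rate   # rate of incorrect answers over 50%
        too_easy = n_incorrect == 0       # 0% rate of incorrect answers
        if not (too_difficult or too_easy):
            kept.append(case_id)
    return kept


# Hypothetical counts of incorrect answers among 26 readers (not study data).
example_counts = {"case_01": 0, "case_02": 5, "case_03": 14, "case_04": 13}
print(select_validated_cases(example_counts, n_readers=26))
```

In this hypothetical input, `case_01` is dropped as too easy and `case_03` (14 of 26 incorrect) as too difficult, while a case at exactly 50% is retained because the text excludes only rates over 50%.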

To use the PACS monitor set individually during the post-camp test, odd-numbered attendees took the test first, followed by even-numbered attendees. To ensure fairness, the participants were isolated during the interim. The answering method was the same as for the group practice reading. Attendee scores were calculated by multiplying the number of right answers by a factor of four.
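The scoring rule can be written out as a minimal sketch. The function name `score_attendee`, the constant names, and the sample answer key below are hypothetical; the four answer choices and the factor of four are taken from the text, so that a 25-case test set yields a maximum score of 100.

```python
# Answer choices as described for the group practice reading:
# 1 = negative or benign only, 2 = suspicious finding(s) in the right breast,
# 3 = suspicious finding(s) in the left breast, 4 = suspicious findings in both breasts.
NEGATIVE_OR_BENIGN, RIGHT, LEFT, BOTH = 1, 2, 3, 4

POINTS_PER_CORRECT_ANSWER = 4  # 25 cases x 4 points = 100-point maximum


def score_attendee(answers, answer_key):
    """Return the test score: number of correct answers multiplied by four."""
    if len(answers) != len(answer_key):
        raise ValueError("answers and answer_key must have the same length")
    n_correct = sum(1 for given, truth in zip(answers, answer_key) if given == truth)
    return n_correct * POINTS_PER_CORRECT_ANSWER


# Hypothetical 5-case excerpt of an answer key (not the study's test set).
key = [NEGATIVE_OR_BENIGN, RIGHT, LEFT, BOTH, NEGATIVE_OR_BENIGN]
print(score_attendee([1, 2, 3, 4, 2], key))  # four of five cases correct
```

Because an answer counts as correct only when the side matches, an attendee who recalls the wrong breast in a suspicious case receives no credit for that case.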

Data Analysis

We compared the pre- and post-camp test scores of all attendees, the pre- and post-camp test scores of the attendees in each camp, and the pre- and post-camp test scores of all attendees with the control group scores. The pre- and post-camp test scores of all attendees were compared according to age group and type of attending institution. Age groups were as follows: under 40 years, 40-49, 50-59, and 60 years or older. The types of attending institution were as follows: university-affiliated hospital, hospital, private clinic, and screening center. A hospital was defined as a medical institution where sick or injured people are given medical or surgical care; a university-affiliated hospital was defined as a higher-grade hospital affiliated with a university and providing all types of medical and surgical services; and a private clinic was defined as a healthcare facility for outpatient care operated by a private owner or a group of medical specialists.

Before comparison, the scores were evaluated for normal distribution using the Shapiro-Wilk test. If the scores were normally distributed, a parametric test such as a paired t test or analysis of variance was used. Otherwise, a non-parametric test such as the Wilcoxon signed-rank test was used. The scores of pre- and post-camp tests, most age groups, most types of attending institution, and the control group showed normal distributions. In contrast, pre- and post-camp test scores of attendees under 40 years of age and the screening center did not show normal distributions.
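For the parametric branch, the paired t statistic is simply the mean of the per-attendee score differences divided by its standard error. The sketch below uses only the Python standard library; the function name `paired_t_statistic` and the sample scores are hypothetical, and the normality check and the conversion of the statistic to a p value are deliberately omitted because they require a statistics package (the study used SAS).

```python
import math
import statistics


def paired_t_statistic(pre_scores, post_scores):
    """Return (mean difference, SD of differences, t statistic, degrees of freedom).

    Each position in the two lists must refer to the same attendee.
    """
    if len(pre_scores) != len(post_scores):
        raise ValueError("pre_scores and post_scores must have the same length")
    diffs = [post - pre for pre, post in zip(pre_scores, post_scores)]
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two paired observations are required")
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    return mean_diff, sd_diff, t_stat, n - 1


# Hypothetical scores for four attendees (not study data).
pre = [52, 60, 48, 56]
post = [76, 80, 72, 84]
mean_diff, sd_diff, t_stat, df = paired_t_statistic(pre, post)
print(f"mean difference = {mean_diff}, t = {t_stat:.2f}, df = {df}")
```

When the differences are not normally distributed, this statistic is not appropriate and a rank-based method such as the Wilcoxon signed-rank test is used instead, as described above.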

Lastly, we compared the rates of incorrect answers for each case between the pre- and post-camp tests using the McNemar test and reported them together with the rates in the control group. The rate of incorrect answers was defined as the proportion of attendees answering incorrectly. A p value < 0.05 was considered statistically significant. All statistical analyses were performed using SAS software version 9.2 (SAS Institute Inc., Cary, NC, USA).
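The per-case comparison can be illustrated with an exact form of the McNemar test, which depends only on the discordant pairs, that is, attendees whose answer to a given case changed between the two tests. This is a sketch under stated assumptions: the text does not say whether the exact or the chi-square form was used, the function names are hypothetical, and the counts in the example are invented for illustration.

```python
from math import comb


def incorrect_rate(n_incorrect, n_attendees):
    """Rate of incorrect answers: proportion of attendees answering incorrectly."""
    return n_incorrect / n_attendees


def mcnemar_exact_p(b, c):
    """Two-sided exact McNemar p value for one case.

    b: attendees incorrect in the pre-camp test but correct in the post-camp test
    c: attendees correct in the pre-camp test but incorrect in the post-camp test
    Attendees with the same result in both tests do not enter the statistic.
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, so no evidence of change
    k = min(b, c)
    # Binomial(n, 0.5) probability of a split at least as extreme as k vs. n - k.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical case: 30 attendees improved and 5 worsened (not study data).
p_value = mcnemar_exact_p(30, 5)
print(f"p = {p_value:.6f}; significant at 0.05: {p_value < 0.05}")
```

The exact form avoids the large-sample approximation and behaves sensibly when few attendees change their answer on a case.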

RESULTS

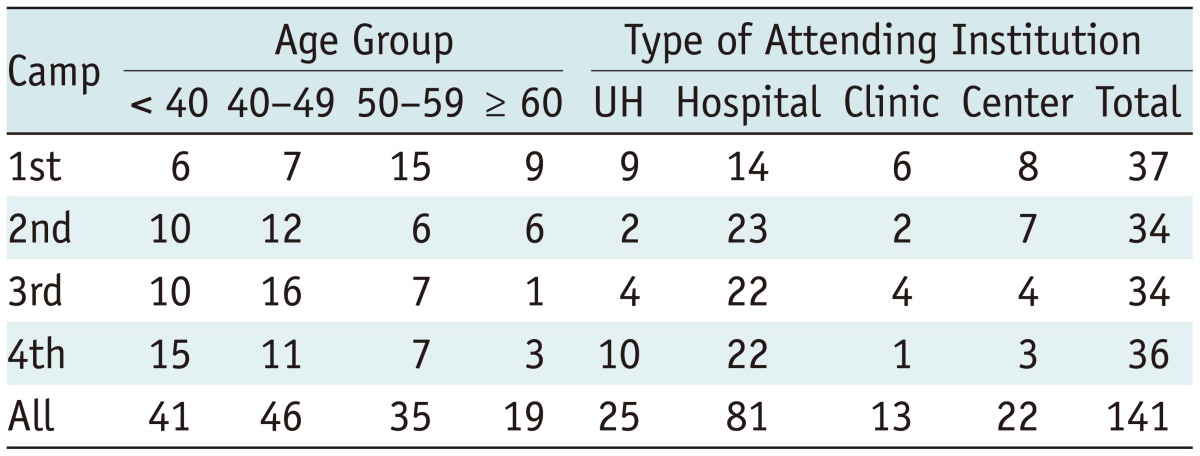

In total, 141 radiologists attended four MBCs. There were 63 males and 78 females (mean age 46.2 years, range 29-68 years). Table 1 shows the attendee characteristics for each camp.

Table 1.

Characteristics of Attendees in Each Camp

Note.-Center = screening center, Clinic = private clinic, UH = university-affiliated hospital

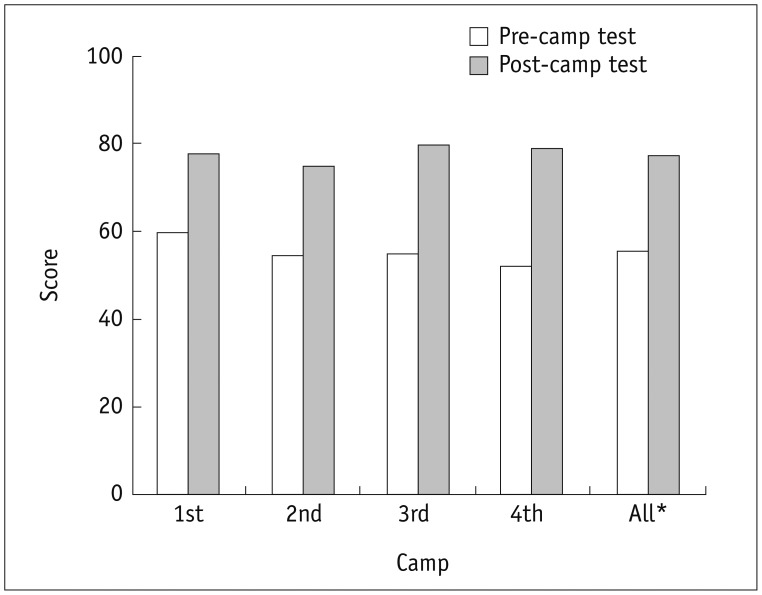

The average pre- and post-camp test scores of all attendees were 56.0 ± 12.2 and 78.3 ± 9.2, respectively, showing a significant improvement (p < 0.001) (Fig. 5). The score difference between the pre- and post-camp tests was 22.3 ± 14.1. The average pre-camp test scores for each MBC were 60.7 ± 10.6, 55.2 ± 10.1, 55.4 ± 14.2, and 52.6 ± 12.7 in the first, second, third, and fourth camp, respectively. The average post-camp test scores for each MBC were 78.4 ± 9.4, 75.4 ± 9.8, 79.9 ± 8.7, and 79.4 ± 8.6 in the first, second, third, and fourth camp, respectively, showing improvement in each camp (p < 0.001). For the pre-camp test, the average scores of each camp were in the following order: the first, third, and second camps, and lastly the fourth camp (p = 0.03). For the post-camp test, however, the scores did not differ significantly among camps (p = 0.38).

Fig. 5.

Attendees' pre- and post-camp test scores. Average score of attendees in each mammography boot camp as well as those of all attendees showed significant improvement when pre- and post-camp test scores were compared. *P value based on Wilcoxon signed-rank test (otherwise, p value according to paired t test). Score = average score of attendees

When the scores of all camp attendees were compared with those of the control group using the 24 common cases, the average control group score was higher than the pre-camp test score of all attendees (79.6 ± 7.2 vs. 54.2 ± 12.7; p < 0.01). However, the control group score did not differ significantly from the post-camp test score of all attendees (79.6 ± 7.2 vs. 77.5 ± 9.6; p = 0.31) or from the post-camp test scores of the individual MBCs (79.6 ± 7.2 vs. 77.5 ± 9.8 vs. 74.6 ± 10.2 vs. 79.1 ± 9.1 vs. 76.5 ± 14.6; p = 0.33).

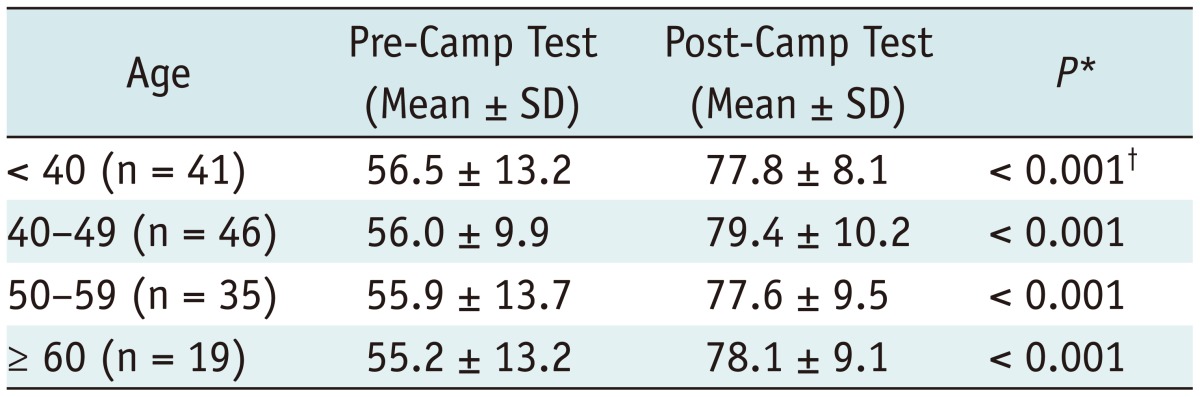

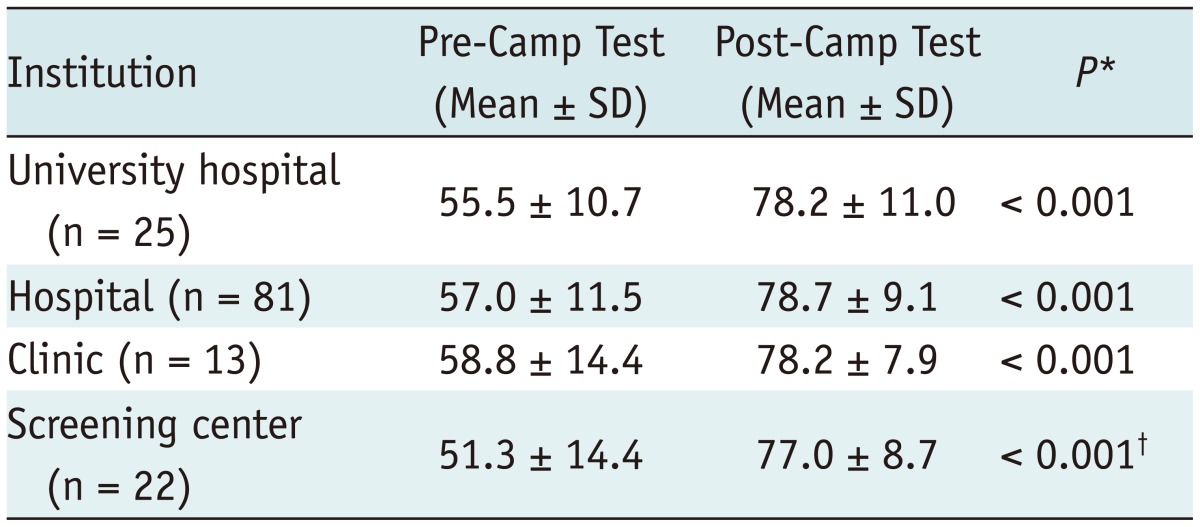

For all age groups, the average post-camp test scores were higher than the pre-camp test scores (p < 0.001) (Table 2). However, the pre- and post-camp test scores were similar among the age groups. For all types of attending institution, the post-camp test scores were higher than the pre-camp test scores (p < 0.001) (Table 3) although the pre- and post-camp test scores did not differ according to the type of attending institution (p = 0.38).

Table 2.

Attendees' Pre- and Post-Camp Test Scores According to Age

Note.-*P value by paired t test, †P value by Wilcoxon signed-rank test. SD = standard deviation

Table 3.

Attendees' Pre- and Post-Camp Test Scores According to Type of Attending Institution

Note.-*P value by paired t test, †P value by Wilcoxon signed-rank test. SD = standard deviation, University hospital = university-affiliated hospital

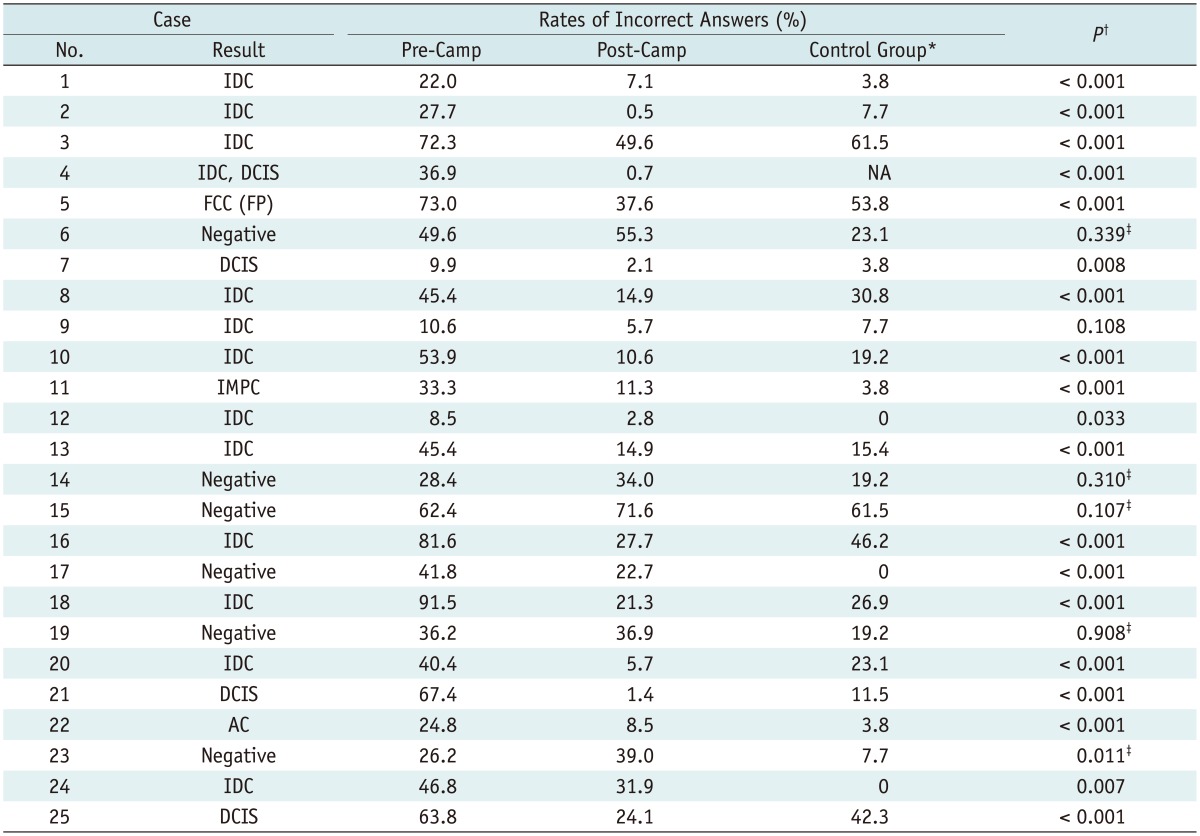

The rate of incorrect answers was lower in the post-camp test than in the pre-camp test for all but five cases, all of which were negative cases (Table 4). In these five negative cases, the rates of incorrect answers ranged from 26.2% to 62.4% in the pre-camp test, from 39.0% to 71.6% in the post-camp test, and from 7.7% to 61.5% in the control group. For the only negative case showing improvement, the rates of incorrect answers were 41.8% in the pre-camp test, 22.7% in the post-camp test, and 0% in the control group. Compared with the pre-camp test, the average rate of incorrect answers in the post-camp test increased for negative cases (40.7 ± 13.7 vs. 43.3 ± 17.4) and decreased for suspicious cases (43.5 ± 24.7 vs. 13.4 ± 13.2).

Table 4.

Rates of Incorrect Answers in Pre- and Post-Camp Tests Compared with Control Group

Note.-*Rates of incorrect answers from 26 experienced members of Korean Society of Breast Imaging (KSBI) as control group, †P value by McNemar test comparing rates of incorrect answers in pre- and post-camp tests, ‡Effectiveness of education of case was poor. AC = apocrine carcinoma, DCIS = ductal carcinoma in situ, FCC = fibrocystic change, FP = false-positive case, IDC = invasive ductal carcinoma, IMPC = invasive micropapillary cancer, NA = not applied, No. = number

DISCUSSION

The MBC improved the radiologists' performance in interpreting mammograms; all attendees achieved a higher average score in the post-camp test compared with the pre-camp test. Moreover, the education received in the MBC was equally effective for all age groups and all types of attending institution.

In the USA, all physicians interpreting mammograms must meet the qualifications required by the Mammography Quality Standards Act. The initial qualification includes board certification in radiology (or equivalent) and initial experience of interpreting 240 mammograms in any 6 months within the last 2 years of residency (13). The BreastScreen Australia National Accreditation Standards require radiologists in the national mammography screening program to hold a fellowship in the Royal Australian and New Zealand College of Radiologists (or equivalent) (14). The National Health Service Breast Screening Program of the United Kingdom requires screening mammography readers to participate in formal performance audits, such as Personal Performance in Mammographic Screening, and to read a minimum of 5000 screening and/or symptomatic cases per year (15). In contrast, the NCSP in Korea does not require any qualifications for interpreting mammograms except board certification in radiology (7).

Presently, a resident's training in interpreting mammograms may be insufficient (9, 16). Lee and Lyou (16) reported that 31.6% of institutions showed no 'training effects' in interpreting mammograms, defined as a difference in scores between trained and non-trained residents. Most of the radiologists participating in the NCSP are exposed to relatively few cancer cases each year because the incidence rate of breast cancer in Korean females is approximately one-third of that in non-Hispanic white females (45.4 vs. 127.3 per 100000, respectively) (17, 18). Because proficiency in mammogram interpretation requires a period of training, this limited exposure may hinder the performance of the NCSP.

Kundel et al. (19) reported that false-negative errors in pulmonary nodule detection were divided into three classes: scanning errors (30%), recognition errors (25%), and decision-making errors (45%). Brem et al. (20) reported that perceptual error accounts for up to 60% of all diagnostic errors in screening mammograms. Lee et al. (21) suggested that overcoming the main causes of false-negative examinations, such as misperception and misinterpretation, is essential to improve the quality of breast screening. Perceptual errors arise from several factors, including insufficient knowledge for proper evaluation of an image, inability to recognize an abnormality, and faulty decision-making as to whether an imaging finding is a candidate for recall (19, 22). Perceptual errors depend on the radiologist's specialization and experience. Thus, the MBC is designed mainly to improve radiologists' perception in interpreting mammograms.

The MBC is a quality-enhancement program that provides practice and test sets of challenging mammographic cases. Attendees interpret more than 200 breast cancer cases within a short period, significantly more than they would usually experience as radiologists in Korea. The MBC has several unique features. First, immediate feedback is provided by the instructors for all practice set cases. This feedback is tailored to attendees' level of experience, potentially improving performance and sensitivity in the NCSP; the rate of incorrect answers in the post-camp test markedly improved for suspicious cases but not for negative cases. Second, prior to the MBC, we validated the test set cases with the help of experienced members of the KSBI. Prior validation can eliminate inappropriate cases; the post-camp validation in 2012 revealed that 16.0% of the cases were vague or inappropriate (11). Third, we doubled the number of PACS monitor sets compared with the previous camp, providing one set per attendee for the post-camp test, and provided attendees with a real-world environment. Experimental test set interpretations do not represent real-world clinical readings and limit the effectiveness of quality-enhancement programs (23). Fourth, we reviewed all practice set cases prior to the MBC and ensured uniform teaching points among the instructors. The last three features were updated on the basis of the lessons learned from the MBCs in 2012 (11). These updates contributed to the improvement in post-camp test scores; the difference between the pre- and post-camp test scores was 22.3 points in 2013 vs. 13.4 points in 2012 (11).

This study showed that both pre- and post-camp test scores were similar for all age groups, suggesting that residents' training in interpreting mammograms remains insufficient despite the fact that breast imaging is now included in KSR resident training programs (9). Lee and Lyou (16) previously reported that trained residents showed better performance in interpreting mammograms compared to non-trained residents, which differs from our experience. Further studies comparing the performance between trained residents and camp attendees are required to explain this discrepancy.

This study showed uniform improvement in the post-camp test scores for all types of attending institutions and all age groups. Although we could not identify a relationship between attendees' pre-existing experience in interpreting mammograms and performance in the MBC, we suggest that post-camp test scores should be higher than pre-camp test scores, irrespective of pre-existing experience. Tohno et al. (24) established an educational and testing program for the standardization of breast cancer screening using ultrasonography in Japan. They reported that attendees who had experienced fewer than 100 cases over the previous 5 years and physicians over the age of 50 years showed significantly lower test scores compared with more experienced and younger attendees. The discrepancy between the two studies may have resulted from the instructors' instant and tailored feedback regarding practice set cases in the MBC.

The present study and the MBC had several limitations. First, the pre- and post-camp tests could not fully reflect real-life clinical readings because prior images were not available and the proportion of suspicious cases was high. We plan to provide an environment similar to real-life clinical readings via prior images and to lower the proportion of suspicious cases in future test sets. Second, experiencing 250 cases in the MBC may be insufficient to improve NCSP specificity over a short period, although sufficient to improve sensitivity. Rawashdeh et al. (22) reported that reading volume was associated with reader performance, and 1000 mammography readings per year were suggested as the minimum desirable level of experience. We anticipate decreased specificity and increased recall rate in the NCSP because this study showed inadequate improvement in benign cases. Lastly, instructors' participation could be a major obstacle hindering regular MBCs. The improvement in the post-camp test scores resulted mainly from instructors providing instant and tailored feedback. Although all of the instructors volunteered their time in 2013, their participation in future MBC events may be limited. Therefore, an increased pool of instructors is necessary, which will require a significant investment.

In conclusion, the MBC successfully improved the performance of radiologists interpreting mammograms across all age groups and types of attending institution. Performance for suspicious cases improved in the post-camp test scores whereas that for negative cases did not. We expect regular MBCs to improve the quality of breast cancer screening in the NCSP in Korea in the near future.

Acknowledgments

The authors thank all instructors who voluntarily served for the MBC, including (in alphabetical order) An JK from Eulji General Hospital, Bae KK from Ulsan University Hospital, Choi BB from Chungnam National University Hospital, Choi SH and Hwang KW from Kangbuk Samsung Medical Center, Kim DB from Dongguk University Medical Center, Kim KW from Konyang University Hospital, Kim SH from The Catholic University of Korea Seoul St. Mary's Hospital, Kim SJ from Inje University Haeundae Paik Hospital, Kim SJ from Chung-Ang University Hospital, Kim TH from Ajou University Medical Center, Ko KL and Lee CW from the National Cancer Center, Lee JH from Dong-A Medical Center, Moon HJ from Severance Hospital, Nam SY from Gachon University Gil Hospital, and Park YM from Inje University Busan Paik Hospital.

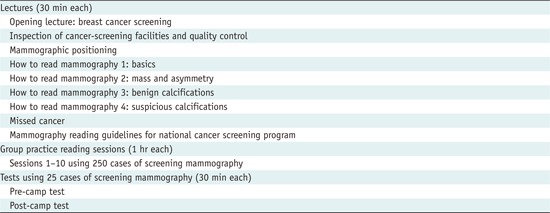

Appendix 1

Contents of Mammography Boot Camp Program

Appendix 2

Specifications of Group Practice Reading

Note.- No. = number, PACS = picture archiving and communication system

Footnotes

This work was supported in part by the Soonchunhyang University Research Fund.

References

- 1. Suh M, Choi KS, Lee YY, Jun JK. Trends in Cancer Screening Rates among Korean Men and Women: Results from the Korean National Cancer Screening Survey, 2004-2012. Cancer Res Treat. 2013;45:86–94. doi: 10.4143/crt.2013.45.2.86.

- 2. Park B, Choi KS, Lee YY, Jun JK, Seo HG. Cancer screening status in Korea, 2011: results from the Korean National Cancer Screening Survey. Asian Pac J Cancer Prev. 2012;13:1187–1191. doi: 10.7314/apjcp.2012.13.4.1187.

- 3. Kim Y, Jun JK, Choi KS, Lee HY, Park EC. Overview of the National Cancer screening programme and the cancer screening status in Korea. Asian Pac J Cancer Prev. 2011;12:725–730.

- 4. Kang MH, Park EC, Choi KS, Suh M, Jun JK, Cho E. The National Cancer Screening Program for breast cancer in the Republic of Korea: is it cost-effective? Asian Pac J Cancer Prev. 2013;14:2059–2065. doi: 10.7314/apjcp.2013.14.3.2059.

- 5. Sickles EA, D'Orsi CJ. ACR BI-RADS® Follow-up and outcome monitoring. In: D'Orsi CJ, Sickles EA, Mendelson EB, Morris EA, editors. ACR BI-RADS® Atlas Breast Imaging Reporting and Data System. 5th ed. Reston: ACR; 2013. pp. 21–31.

- 6. Ministry of health and welfare regulation 386 (2007.02.20). Web site. [Accessed March 28, 2014]. http://www.mw.go.kr/front_new/al/sal0101vw.jsp?PAR_MENU_ID=04&MENU_ID=040101&page=1&CONT_SEQ=40424&SEARCHKEY=TITLE&SEARCHVALUE=특수의료장비의 설치 및 운영에 관한 규칙.

- 7. Ministry of health and welfare notice 2006-5. Web site. [Accessed March 28, 2014]. http://www.bokjiro.go.kr/policy/legislationView.do?board_sid=300&data_sid=151815&searchGroup=AR01&searchSort=REG_DESC&pageIndex=1&searchWrd=%EA%B2%80%EC%A7%84&searchCont=&pageUnit=10&searchProgrYn=

- 8. History of Korean Society of Breast Imaging. Web site. [Accessed March 28, 2014]. http://ksbi.radiology.or.kr/

- 9. Ministry of health and welfare notice 2002-23. Web site. [Accessed March 28, 2014]. http://www.mw.go.kr/front_new/jb/sjb0402vw.jsp?PAR_MENU_ID=03&MENU_ID=030402&BOARD_ID=220&BOARD_FLAG=03&CONT_SEQ=22366&page=1.

- 10. Breast Imaging Boot Camp. Web site. [Accessed March 28, 2014]. http://www.acr.org/Meetings-Events/EC-BIBC.

- 11. Lee EH, Jun JK, Kim YM, Bae K, Hwang KW, Choi BB, et al. Mammography boot camp to improve a quality of national cancer screening program in Korea: a report about a test run in 2012. J Korean Soc Breast Screen. 2013;10:162–168.

- 12. ACR BI-RADS® Committee. BI-RADS® breast imaging and reporting data system: breast imaging atlas. 4th ed. Reston: American College of Radiology; 2003.

- 13. American College of Radiology. Mammography Accreditation Program Requirements. [Accessed March 28, 2014]. http://www.acr.org/~/media/ACR/Documents/Accreditation/Mammography/Requirements.pdf.

- 14. National Accreditation Committee. BreastScreen Australia National Accreditation Standards. [Accessed March 28, 2014]. http://www.cancerscreening.gov.au/internet/screening/publishing.nsf/Content/A03653118215815BCA257B41000409E9/$File/standards.pdf.

- 15. NHS Breast Cancer Screening Program. [Accessed March 28, 2014]. http://www.cancerscreening.nhs.uk/breastscreen/index.html.

- 16. Lee EH, Lyou CY. Radiology residents' performance in screening mammography interpretation. J Korean Soc Radiol. 2013;68:333–341.

- 17. National Cancer Center. Annual report of cancer statistics in Korea in 2010. [Accessed March 28, 2014]. http://ncc.re.kr/manage/manage03_033_list.jsp.

- 18. DeSantis C, Ma J, Bryan L, Jemal A. Breast cancer statistics, 2013. CA Cancer J Clin. 2014;64:52–62. doi: 10.3322/caac.21203.

- 19. Kundel HL, Nodine CF, Carmody D. Visual scanning, pattern recognition and decision-making in pulmonary nodule detection. Invest Radiol. 1978;13:175–181. doi: 10.1097/00004424-197805000-00001.

- 20. Brem RF, Baum J, Lechner M, Kaplan S, Souders S, Naul LG, et al. Improvement in sensitivity of screening mammography with computer-aided detection: a multiinstitutional trial. AJR Am J Roentgenol. 2003;181:687–693. doi: 10.2214/ajr.181.3.1810687.

- 21. Lee EH, Cha JH, Han D, Ryu DS, Choi YH, Hwang KT, et al. Analysis of previous screening examinations for patients with breast cancer. J Korean Radiol Soc. 2007;56:191–202.

- 22. Rawashdeh MA, Lee WB, Bourne RM, Ryan EA, Pietrzyk MW, Reed WM, et al. Markers of good performance in mammography depend on number of annual readings. Radiology. 2013;269:61–67. doi: 10.1148/radiol.13122581.

- 23. Soh BP, Lee W, McEntee MF, Kench PL, Reed WM, Heard R, et al. Screening mammography: test set data can reasonably describe actual clinical reporting. Radiology. 2013;268:46–53. doi: 10.1148/radiol.13122399.

- 24. Tohno E, Takahashi H, Tamada T, Fujimoto Y, Yasuda H, Ohuchi N. Educational program and testing using images for the standardization of breast cancer screening by ultrasonography. Breast Cancer. 2012;19:138–146. doi: 10.1007/s12282-010-0221-x.