Abstract

Randomized controlled trials and their meta-analyses occupy the pinnacle of the levels of evidence available for research. However, these trials have several limitations. Network meta-analysis (NMA) is a recent tool for evidence-based medicine that draws strength from direct and indirect evidence generated from randomized controlled trials. It facilitates comparisons across multiple treatment options that have not been compared directly to date for a multitude of reasons. These indirect treatment comparisons of randomized controlled trials rest on the similarity and consistency assumptions and can be analyzed using Bayesian or frequentist statistics. Most NMAs to date use the WinBUGS software for Microsoft Windows, which relies on Bayesian statistics. The methodology of NMA is expected to undergo further refinement and become more robust with usage. The power and precision of indirect comparisons in NMA are a concern, as they depend on the effective number of trials, the sample size and complete statistical information. Nevertheless, NMA can synthesize results of considerable relevance to experts and policy makers.

Keywords: Indirect, multiple, network meta-analysis, treatment comparisons

INTRODUCTION

Evidence-based practice aims to support well-informed decisions in healthcare. Comparison of relevant competing interventions forms the core of the decision-making process.[1,2,3] Medicine is a dynamic field in which newer technologies and therapies constantly replace earlier ones. Meta-analyses and systematic reviews of well-conducted randomized controlled trials (RCTs) occupy the pinnacle of the “levels of evidence” for health research.[4,5] While compliance with the Nuremberg Code has ensured the best possible treatment for study subjects, it has limited the freedom of researchers to experiment with drugs.[6] RCTs compare a given treatment against placebo or standard treatment. Not all active interventions can be tested against each other because of ethical and resource constraints. Thus, RCTs provide only a fragment of the evidence in the complex decision-making process and do not satisfy the needs of experts and policy makers.

Traditional pairwise meta-analysis, conducted in systematic reviews of RCTs, emerged as a solution when the felt need was to synthesize evidence from two or more RCTs. However, it assimilates evidence only from trials making the same pairwise comparison. Hence, the need was recognized for sound statistical methods that can generate evidence across related interventions and trials, making it feasible to compare interventions that have not been compared directly in any RCT. A network meta-analysis (NMA) is a study that combines results from more than two RCTs connecting three or more interventions.[7] The scientific literature is witnessing an increasing number of studies using indirect comparison of interventions.[8,9]

NMA

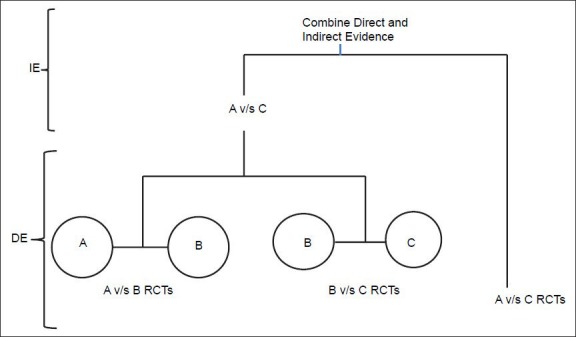

Direct evidence to inform the choice between interventions is available when RCTs making head-to-head comparisons are available. In the absence of direct evidence, the researcher resorts to indirect evidence, where each of the two interventions has been individually compared against a common comparator but not with each other. The common comparator is the anchor to which the treatment comparisons are tied. NMA is credited with the ability to draw conclusions from both direct and indirect evidence by statistically pooling results across treatments to obtain the pooled estimate, a combined weighted average [Figure 1].

Figure 1.

Network meta-analysis using a combination of direct and indirect evidence with a common comparator. IE: Indirect evidence, DE: Direct evidence

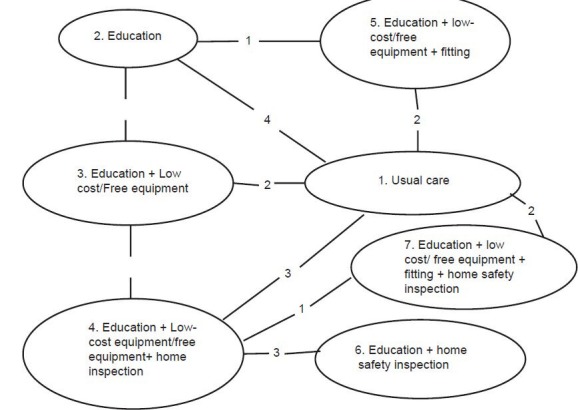

The basis of NMA is a network diagram in which each node represents an intervention and the connecting lines represent one or more RCTs in which the interventions have been compared.[10] A closed loop exists in a network when both direct and indirect evidence is available for a given pair of interventions. Direct evidence is generated by a head-to-head comparison in an RCT, whereas an indirect estimate is obtained from other direct comparisons. When a network has at least one closed loop, the analysis is termed “multiple treatment comparison (MTC).” Analysis of an open loop (at least one pair of interventions for which direct evidence is not available) is termed “indirect treatment comparison (ITC)”[11] [Figure 2].

Figure 2.

Cooper et al. Network meta-analysis to evaluate the effectiveness of interventions to increase the uptake of smoke alarms. Epidemiologic Reviews 2012;34

Figure 2 demonstrates ITCs with both open and closed loops. The loop formed by interventions 5, 2, 1 and 3 is a closed loop, whereas the one formed by interventions 7 and 6 is an open loop. Each node represents an intervention, and the numbers on the connecting lines are the numbers of trials performed. For example, two RCTs comparing interventions 1 and 5 have been included in the NMA [Figure 2].
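
The distinction between open and closed loops can be sketched in code. The example below is a minimal illustration, not the method used in any cited study: it stores a network as pairs of interventions with trial counts and reports whether a given pair is informed by direct evidence, indirect evidence or both. The network `TRIALS` and the helper names are hypothetical, and the trial counts are illustrative rather than read from Figure 2.

```python
from collections import deque

# Hypothetical network: each key is a pair of intervention labels and each
# value is the number of RCTs comparing them. Illustrative numbers only;
# they are NOT taken from Figure 2.
TRIALS = {
    (1, 2): 3,
    (1, 3): 1,
    (1, 5): 2,
    (2, 5): 1,
    (1, 6): 2,
    (6, 7): 1,
}


def build_adjacency(trials):
    """Turn the pair -> trial-count mapping into an undirected adjacency map."""
    adjacency = {}
    for a, b in trials:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    return adjacency


def has_path(adjacency, start, goal, skip_edge=None):
    """Breadth-first search from start to goal, optionally ignoring one edge."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in adjacency.get(node, ()):
            if skip_edge is not None and {node, nxt} == set(skip_edge):
                continue  # do not use the direct comparison itself
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def evidence_type(trials, a, b):
    """Classify the evidence available for the comparison of a and b."""
    adjacency = build_adjacency(trials)
    direct = (a, b) in trials or (b, a) in trials
    # Indirect evidence exists if a and b stay connected without their own edge.
    indirect = has_path(adjacency, a, b, skip_edge=(a, b))
    if direct and indirect:
        return "direct and indirect (closed loop)"
    if direct:
        return "direct only"
    if indirect:
        return "indirect only"
    return "not connected"


for pair in [(1, 5), (6, 7), (3, 7)]:
    print(pair, evidence_type(TRIALS, *pair))
```

In this hypothetical network, interventions 1 and 5 sit in a closed loop, interventions 6 and 7 are linked by direct evidence only, and interventions 3 and 7 can be compared only indirectly.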

The diversity and strength of a network are determined by the number of different interventions and comparisons available, how well they are represented in the network and the evidence they carry.[11]

It is important to understand that ITCs use the relative effects of two treatments, each tested against a common comparator. This is because the researcher does not want to break randomization within individual RCTs.[11] A simple comparison of responders in the arms of interest cannot be conducted, as the arms come from different trials with differing baseline risks. Moreover, the placebo effect needs to be separated from the drug effect.
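
A minimal sketch of an indirect comparison built from relative effects is given below. It assumes a binary outcome summarized as a log odds ratio, one trial per comparison, and independence between the two trials; the responder counts and function names are hypothetical and serve only to illustrate the arithmetic. Each trial first yields the effect of its treatment relative to the common comparator C, so randomization within each trial is preserved, and the indirect estimate of A versus B is the difference of those two relative effects, with their variances added.

```python
import math


def log_odds_ratio(events_t, n_t, events_c, n_c):
    """Log odds ratio of treatment vs comparator and its approximate variance."""
    a, b = events_t, n_t - events_t  # treatment arm: responders, non-responders
    c, d = events_c, n_c - events_c  # comparator arm: responders, non-responders
    log_or = math.log((a * d) / (b * c))
    variance = 1 / a + 1 / b + 1 / c + 1 / d
    return log_or, variance


def indirect_comparison(effect_ac, var_ac, effect_bc, var_bc):
    """Indirect estimate of A vs B through the common comparator C."""
    effect_ab = effect_ac - effect_bc
    var_ab = var_ac + var_bc  # uncertainty from both trials accumulates
    half_width = 1.96 * math.sqrt(var_ab)
    return effect_ab, var_ab, (effect_ab - half_width, effect_ab + half_width)


# Hypothetical trials (responders, arm size): A vs placebo and B vs placebo.
effect_ac, var_ac = log_odds_ratio(30, 100, 20, 100)
effect_bc, var_bc = log_odds_ratio(40, 100, 20, 100)
effect_ab, var_ab, ci_ab = indirect_comparison(effect_ac, var_ac, effect_bc, var_bc)

print(f"indirect log OR, A vs B: {effect_ab:.3f}")
print(f"95% CI: {ci_ab[0]:.3f} to {ci_ab[1]:.3f}")
```

Because the variances add, the indirect estimate is less precise than either of the direct comparisons it is built from, which is one reason the precision of indirect evidence needs attention.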

Approaches of statistical analysis in NMA

NMA can use a fixed effect or a random effects approach. The fixed effect approach assumes that all studies are estimating one true effect size and that any difference between estimates from different studies is attributable to sampling error only (within-study variation). The random effects approach assumes that, in addition to sampling error, the observed differences in effect size reflect variation of the true effect size across studies (between-study variation), otherwise called heterogeneity. To illustrate the fixed effect model, suppose the effect of a drug in study 1 is E1 and the effect of the same drug in study 2 is E2; the difference between E1 and E2 is then due to chance, i.e. sampling error in the studies. If the studies had infinitely large sample sizes, their results would be identical. Thus, under the fixed effect model, the observed treatment effect equals the common effect plus within-study variation, whereas under the random effects approach it equals the mean of the true study effects plus within-study variation plus between-study variation. Such between-study differences can be due to severity of disease, age of patients, dose of drug received, follow-up period, etc.[12] Extending this concept to NMA, we expect effect size estimates to vary not only across studies but also across comparisons (direct and indirect), otherwise called inconsistency.
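
The difference between the two approaches can be made concrete with a small pooling sketch for a single pairwise comparison. The text does not prescribe an estimator for the between-study variance; the DerSimonian-Laird moment estimator is used here as an assumption because it is a common choice, and the study effects and variances are hypothetical.

```python
def fixed_effect(effects, variances):
    """Inverse-variance pooled estimate and its variance (one common effect)."""
    weights = [1 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return pooled, 1 / sum(weights)


def between_study_variance(effects, variances):
    """DerSimonian-Laird moment estimate of the between-study variance tau^2."""
    weights = [1 / v for v in variances]
    pooled, _ = fixed_effect(effects, variances)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    scale = sum(weights) - sum(w * w for w in weights) / sum(weights)
    return max(0.0, (q - df) / scale)  # truncated at zero


def random_effects(effects, variances):
    """Pooled estimate when each study variance is inflated by tau^2."""
    tau2 = between_study_variance(effects, variances)
    inflated = [v + tau2 for v in variances]
    pooled, variance = fixed_effect(effects, inflated)
    return pooled, variance, tau2


# Hypothetical log odds ratios and within-study variances from three trials.
effects = [0.1, 0.3, 0.8]
variances = [0.04, 0.04, 0.04]

fe_mean, fe_var = fixed_effect(effects, variances)
re_mean, re_var, tau2 = random_effects(effects, variances)
print(f"fixed effect:   {fe_mean:.3f} (variance {fe_var:.4f})")
print(f"random effects: {re_mean:.3f} (variance {re_var:.4f}, tau^2 {tau2:.3f})")
```

With equal within-study variances the two pooled estimates coincide, but the random effects variance is larger because it also carries the between-study variation.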

Assumptions in NMA

Similarity assumption

Trial selection should be based on rigorous criteria. Besides study population, design and outcome measures, trials must be comparable on effect modifiers to obtain an unbiased pooled estimate. Effect modifiers are study and patient characteristics (e.g., age, disease severity, duration of follow-up) that are known to influence the treatment effect of interventions. An imbalanced distribution of effect modifiers between studies can bias comparisons, resulting in heterogeneity and inconsistency.[13]

Consistency assumption

In a closed loop of a mixed treatment comparison, where both direct and indirect evidence is available, it is assumed that the direct and indirect estimates are consistent for each pairwise comparison. Violation of either or both of these assumptions undermines transitivity: one can then no longer conclude that C is better than A from trial results showing that C is better than B and B is better than A.[13]
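
One simple way to examine the consistency assumption within a single closed loop is sketched below. It assumes that a direct and an indirect estimate of the same comparison are already available on the log odds ratio scale, that they are independent and that both are approximately normally distributed; the numbers and function names are hypothetical. The difference between the two estimates is tested against zero, and if no marked disagreement is found, the two are combined by inverse-variance weighting into a mixed estimate.

```python
import math
from statistics import NormalDist


def inconsistency_test(direct, var_direct, indirect, var_indirect):
    """Difference between direct and indirect estimates with a two-sided z-test."""
    difference = direct - indirect
    standard_error = math.sqrt(var_direct + var_indirect)
    z = difference / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return difference, z, p_value


def mixed_estimate(direct, var_direct, indirect, var_indirect):
    """Inverse-variance weighted average of direct and indirect evidence."""
    w_direct, w_indirect = 1 / var_direct, 1 / var_indirect
    pooled = (w_direct * direct + w_indirect * indirect) / (w_direct + w_indirect)
    return pooled, 1 / (w_direct + w_indirect)


# Hypothetical log odds ratios for the same comparison of two interventions.
direct, var_direct = -0.50, 0.04
indirect, var_indirect = -0.44, 0.09

difference, z, p_value = inconsistency_test(direct, var_direct, indirect, var_indirect)
pooled, pooled_var = mixed_estimate(direct, var_direct, indirect, var_indirect)
print(f"direct minus indirect: {difference:.3f} (z = {z:.2f}, p = {p_value:.2f})")
print(f"mixed estimate: {pooled:.3f} (variance {pooled_var:.4f})")
```

A non-significant difference does not prove consistency, since such tests tend to have low power when few trials inform the loop; the sketch only shows the mechanics of the comparison.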

Statistical methods in NMA

Analysis of a network involves pooling of individual study results. Factors such as the total number of trials in a network, the number of trials with more than two comparison arms, heterogeneity (i.e., clinical, methodological and statistical variability within direct and indirect comparisons), inconsistency (i.e., discrepancy between direct and indirect comparisons) and bias may influence the effect estimates obtained from network meta-analyses.[14] Statistical methods for NMA include the adjusted indirect comparison method with aggregate data, meta-regression, hierarchical models and Bayesian methods. An imbalance of effect modifiers between studies may lead to heterogeneity or inconsistency and can be adjusted for statistically using meta-regression models.[11] Most NMAs to date use the WinBUGS statistical software for Microsoft Windows to conduct analyses based on Bayesian statistics. Results are presented as direct evidence, indirect evidence and combined evidence (mean [standard deviation] or odds ratio [confidence interval (CI)]) from the NMA. Recently, Chaimani et al. described graphical tools for NMA in Stata (StataCorp), which have made the methodology of NMA accessible to non-statisticians.[15]

NMA results can be presented and interpreted within a frequentist or a Bayesian framework. With a frequentist approach, the result of the analysis is presented as a point estimate with a 95% CI. However, these CIs cannot be interpreted in terms of probabilities. This shortcoming is overcome by Bayesian methods, which yield probabilities that support prediction and are of relevance to the decision maker.[16,17] These methods assume a prior probability distribution, i.e. a prior belief about the possible values of the model parameters based on what is already known on the subject. Then, in the light of the data observed in the study, the likelihood of these parameters is used to obtain the corresponding posterior probability distribution. For NMA, a specific advantage is that the posterior distribution allows probability statements to be made about the competing interventions, for example the probability that a given intervention is the best. Results are expressed as credible intervals, as opposed to CIs in frequentist analysis. Other advantages of Bayesian meta-analysis include the straightforward way of making predictions and the possibility of incorporating different sources of uncertainty.[18]
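
The step from prior to posterior can be illustrated with the simplest possible case. The sketch below is not how WinBUGS fits an NMA, which relies on simulation; it assumes instead a single treatment effect on the log odds ratio scale, a normal prior and a normally distributed estimate, for which the posterior has a closed form. The prior, the estimate and its standard error are hypothetical, as is the convention that a negative log odds ratio favours the treatment.

```python
from statistics import NormalDist


def posterior_normal(prior_mean, prior_sd, estimate, standard_error):
    """Posterior of a normal mean given a normal prior and a normal likelihood."""
    prior_precision = 1 / prior_sd ** 2
    data_precision = 1 / standard_error ** 2
    post_var = 1 / (prior_precision + data_precision)
    post_mean = post_var * (prior_precision * prior_mean + data_precision * estimate)
    return NormalDist(post_mean, post_var ** 0.5)


# Vague prior centred on no effect; hypothetical estimate from the data.
posterior = posterior_normal(prior_mean=0.0, prior_sd=10.0,
                             estimate=-0.44, standard_error=0.30)

# 95% credible interval: the effect lies in this range with 95% probability.
credible_interval = (posterior.inv_cdf(0.025), posterior.inv_cdf(0.975))

# Probability that the treatment is beneficial, assuming log OR < 0 is a benefit.
prob_benefit = posterior.cdf(0.0)

print(f"posterior mean: {posterior.mean:.3f}")
print(f"95% credible interval: {credible_interval[0]:.3f} to {credible_interval[1]:.3f}")
print(f"P(log OR < 0): {prob_benefit:.3f}")
```

Unlike a frequentist CI, the credible interval and the probability of benefit are direct probability statements about the treatment effect, which is what makes them convenient for decision makers.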

Issues with NMA

NMA inherits all the challenges of a standard meta-analysis (bias, heterogeneity and precision), with added complexity due to the multitude of comparisons involved. As the number of links separating the interventions to be compared increases, indirect comparisons become less reliable.[19] The power and precision of indirect evidence can be estimated from the effective number of trials, the effective sample size and the effective statistical information (Fisher information). Varying levels of power and precision across comparisons, particularly indirect comparisons, are a major challenge in analysis. Nevertheless, indirect evidence adds to the direct estimates in the pooled analysis.[20] Statistical heterogeneity can be tested with Cochran's Q and quantified with the I² statistic. It overlaps considerably with conceptual heterogeneity, which refers to differences in methods, study designs, study populations, settings, definitions, measurement of outcome, follow-up, co-interventions or other features that make trials different. Inconsistency can be assessed in closed loops by node splitting, a method that compares the point estimates of direct and indirect evidence informing the same comparison. In contrast to the fixed effect model, the random effects model takes unexplained heterogeneity into account.
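
As a minimal sketch of how statistical heterogeneity is quantified within one pairwise comparison, Cochran's Q and I² can be computed from study estimates and their variances. The input data and function names are hypothetical. Q is the weighted sum of squared deviations from the inverse-variance pooled estimate and is usually referred to a chi-square distribution with k - 1 degrees of freedom for k studies; I² expresses the share of Q in excess of its degrees of freedom as a percentage.

```python
def cochran_q(effects, variances):
    """Weighted sum of squared deviations from the inverse-variance pooled mean."""
    weights = [1 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))


def i_squared(effects, variances):
    """Percentage of total variation attributed to heterogeneity, floored at 0."""
    q = cochran_q(effects, variances)
    df = len(effects) - 1
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100


# Hypothetical log odds ratios and within-study variances from three trials.
effects = [0.1, 0.3, 0.8]
variances = [0.04, 0.04, 0.04]

q = cochran_q(effects, variances)
i2 = i_squared(effects, variances)
print(f"Q = {q:.2f} on {len(effects) - 1} degrees of freedom, I² = {i2:.1f}%")
```

Both statistics describe statistical heterogeneity only; conceptual differences between trials still have to be judged from the trial characteristics themselves.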

It is essential to assess the internal and external validity of the network. The internal validity of an NMA depends on: (1) appropriate identification of the studies that form the evidence network, (2) the quality of the individual RCTs and (3) the extent of confounding bias due to violations of similarity and consistency. Song et al. conducted a meta-epidemiological study to assess the quality of studies reporting indirect comparisons. The methodological problems identified were: poor understanding of underlying assumptions, inappropriate search and selection of trials, use of inappropriate or flawed methods, lack of objective and validated methods to assess or improve trial similarity, and inadequate comparison or inappropriate combination of direct and indirect evidence.[21] The external validity of an NMA is limited by the external validity of the RCTs included in the evidence network; decision makers should review whether the results can be extrapolated to the population of interest.

In the absence of evidence from RCTs, researchers can use observational studies in MTCs, keeping in mind the inherent biases and shortcomings of observational studies in epidemiology.

Examples of NMA

Cipriani et al. conducted a multiple-treatments meta-analysis of the comparative efficacy and acceptability of anti-manic drugs in acute mania, assessing nearly 13 anti-manic drugs using direct and indirect comparisons from nearly 68 RCTs. This enabled a comparative assessment of the treatments available for acute mania, with antipsychotic drugs being significantly more effective than mood stabilizers. Out of the pool of drugs, risperidone, olanzapine and haloperidol were rated best for the treatment of manic episodes.[22] A similar NMA was performed for patients with rheumatoid arthritis who were refractory to synthetic disease-modifying anti-rheumatic drugs and a tumour necrosis factor inhibitor. Several new biologicals have demonstrated efficacy in this subgroup of patients when compared with placebo, but their comparative efficacy had not been assessed. The NMA was performed with RCTs comparing these biologicals with placebo. Its results showed significant improvement with four biologicals and acceptable safety outcomes in the study population. Thus, NMA can well form the basis of clinical decision making.[23]

Reporting of NMA

In January 2009, the Board of Directors of the International Society for Pharmacoeconomics and Outcomes Research approved the formation of an ITC Good Research Practices Task Force to develop good research practices document(s) for ITC.[11] The task force prescribed reporting standards to be adopted for NMA. The methodology of NMA is currently undergoing further refinement. It has already gained popularity among researchers, with 121 NMAs published to date. Among them, nearly 83% were based on pharmacological interventions and 9% on non-pharmacological interventions. A major methodological shortcoming of these NMAs was poor reporting of the electronic search strategy. Nearly half of the NMAs did not report any information on the assessment of risk of bias of individual studies, and 85% did not report any methods to assess the likelihood of publication bias.[24] Methodological issues of sample size, power, sources of bias and heterogeneity are the focus of ongoing research.

CONCLUSION

NMA is an interesting research methodology that will fortify the evidence-based practice of healthcare. Sound NMAs should be conducted on the existing research to update our healthcare decisions. However, one should acknowledge the need to focus on its methodological issues; the methodology will evolve with increasing use of this technique by researchers.

Footnotes

Source of Support: Nil.

Conflict of Interest: None declared.

REFERENCES

- 1. Sackett DL. Evidence-based medicine. Semin Perinatol. 1997;21:3–5. doi: 10.1016/s0146-0005(97)80013-4.

- 2. Evidence-Based Medicine Working Group. Evidence-based medicine. A new approach to teaching the practice of medicine. JAMA. 1992;268:2420–5. doi: 10.1001/jama.1992.03490170092032.

- 3. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: What it is and what it isn’t. BMJ. 1996;312:71–2. doi: 10.1136/bmj.312.7023.71.

- 4. Harbour R, Miller J. A new system for grading recommendations in evidence based guidelines. BMJ. 2001;323:334–6. doi: 10.1136/bmj.323.7308.334.

- 5. Atkins D, Best D, Briss PA, Eccles M, Falck-Ytter Y, Flottorp S, et al. Grading quality of evidence and strength of recommendations. BMJ. 2004;328:1490. doi: 10.1136/bmj.328.7454.1490.

- 6. The Nuremberg Code. In: Trials of War Criminals before the Nuremberg Military Tribunals under Control Council Law No. 10. Vol. 2. Washington DC: US Government Printing Office; 1949. pp. 181–2. Reprinted in: Reiser SJ, Dyck AJ, Curran WJ, editors. Ethics in medicine: Historical perspectives and contemporary concerns. Cambridge, Mass.: MIT Press; 1978. pp. 272–3.

- 7. Caldwell DM, Ades AE, Higgins JP. Simultaneous comparison of multiple treatments: Combining direct and indirect evidence. BMJ. 2005;331:897–900. doi: 10.1136/bmj.331.7521.897.

- 8. Song F, Xiong T, Parekh-Bhurke S, Loke YK, Sutton AJ, Eastwood AJ, et al. Inconsistency between direct and indirect comparisons of competing interventions: Meta-epidemiological study. BMJ. 2011;343:d4909. doi: 10.1136/bmj.d4909.

- 9. Glenny AM, Altman DG, Song F, Sakarovitch C, Deeks JJ, D’Amico R, et al. Indirect comparisons of competing interventions. Health Technol Assess. 2005;9(iii-vi):134. doi: 10.3310/hta9260.

- 10. Hoaglin DC, Hawkins N, Jansen JP, Scott DA, Itzler R, Cappelleri JC, et al. Conducting indirect-treatment-comparison and network-meta-analysis studies: Report of the ISPOR Task Force on Indirect Treatment Comparisons Good Research Practices: Part 2. Value Health. 2011;14:429–37. doi: 10.1016/j.jval.2011.01.011.

- 11. Jansen JP, Fleurence R, Devine B, Itzler R, Barrett A, Hawkins N, et al. Interpreting indirect treatment comparisons and network meta-analysis for health-care decision making: Report of the ISPOR Task Force on Indirect Treatment Comparisons Good Research Practices: Part 1. Value Health. 2011;14:417–28. doi: 10.1016/j.jval.2011.04.002.

- 12. Riley RD, Higgins JP, Deeks JJ. Interpretation of random effects meta-analyses. BMJ. 2011;342:d549. doi: 10.1136/bmj.d549.

- 13. Jansen JP, Naci H. Is network meta-analysis as valid as standard pairwise meta-analysis? It all depends on the distribution of effect modifiers. BMC Med. 2013;11:159. doi: 10.1186/1741-7015-11-159.

- 14. Li T, Puhan MA, Vedula SS, Singh S, Dickersin K; Ad Hoc Network Meta-analysis Methods Meeting Working Group. Network meta-analysis-highly attractive but more methodological research is needed. BMC Med. 2011;9:79. doi: 10.1186/1741-7015-9-79.

- 15. Chaimani A, Higgins JP, Mavridis D, Spyridonos P, Salanti G. Graphical tools for network meta-analysis in STATA. PLoS One. 2013;8:e76654. doi: 10.1371/journal.pone.0076654.

- 16. Jansen JP, Crawford B, Bergman G, Stam W. Bayesian meta-analysis of multiple treatment comparisons: An introduction to mixed treatment comparisons. Value Health. 2008;11:956–64. doi: 10.1111/j.1524-4733.2008.00347.x.

- 17. Spiegelhalter DJ, Abrams KR, Myles JP. Bayesian approaches to clinical trials and health-care evaluation. Pharm Stat. 2004;3:230–1.

- 18. Sutton AJ, Abrams KR, Jones DR, Sheldon TA, Song F. Methods for Meta-Analysis in Medical Research. Chichester, United Kingdom: J. Wiley; 2000.

- 19. Hawkins N, Scott DA, Woods B. How far do you go? Efficient searching for indirect evidence. Med Decis Making. 2009;29:273–81. doi: 10.1177/0272989X08330120.

- 20. Thorlund K, Mills EJ. Sample size and power considerations in network meta-analysis. Syst Rev. 2012;1:41. doi: 10.1186/2046-4053-1-41.

- 21. Song F, Loke YK, Walsh T, Glenny AM, Eastwood AJ, Altman DG. Methodological problems in the use of indirect comparisons for evaluating healthcare interventions: Survey of published systematic reviews. BMJ. 2009;338:b1147. doi: 10.1136/bmj.b1147.

- 22. Cipriani A, Barbui C, Salanti G, Rendell J, Brown R, Stockton S, et al. Comparative efficacy and acceptability of antimanic drugs in acute mania: A multiple-treatments meta-analysis. Lancet. 2011;378:1306–15. doi: 10.1016/S0140-6736(11)60873-8.

- 23. Schoels M, Aletaha D, Smolen JS, Wong JB. Comparative effectiveness and safety of biological treatment options after tumour necrosis factor α inhibitor failure in rheumatoid arthritis: Systematic review and indirect pairwise meta-analysis. Ann Rheum Dis. 2012;71:1303–8. doi: 10.1136/annrheumdis-2011-200490.

- 24. Bafeta A, Trinquart L, Seror R, Ravaud P. Analysis of the systematic reviews process in reports of network meta-analyses: Methodological systematic review. BMJ. 2013;347:f3675. doi: 10.1136/bmj.f3675.