Summary

The primate visual system consists of multiple hierarchically organized cortical areas, each specialized for processing distinct aspects of the visual scene. For example, color and form are encoded in ventral pathway areas such as V4 and inferior temporal cortex, while motion is preferentially processed in dorsal pathway areas such as the middle temporal area. Such representations often need to be integrated perceptually to solve tasks which depend on multiple features. We tested the hypothesis that the lateral intraparietal area (LIP) integrates disparate task-relevant visual features by recording from LIP neurons in monkeys trained to identify target stimuli composed of conjunctions of color and motion features. We show that LIP neurons exhibit integrative representations of both color and motion features when they are task relevant, and task-dependent shifts of both direction and color tuning. This suggests that LIP plays a role in flexibly integrating task-relevant sensory signals.

Introduction

We often face the challenge of selecting behaviorally relevant stimuli among competing distracters. For example, we might need to choose a red target moving rightwards at one moment, but a green target moving leftwards a moment later. Solving such a task relies on neuronal representations of basic visual features such as color and direction, as well as the ability to keep track of which features are task-relevant (Treisman and Gelade, 1980). Decades of work have described neuronal representations of visual features in a network of cortical areas specialized for processing different aspects of the visual scene. For example, motion is processed in dorsal stream areas such as the middle temporal (MT) and medial superior temporal (MST) areas (Born and Bradley, 2005; Maunsell and Van Essen, 1983), while color and form are represented in ventral stream areas such as V4 and the inferior temporal cortex (ITC) (Desimone et al., 1985; Zeki, 1976). One appealing theory posits that feature-based selective attention allows visual feature representations in visual cortex to be flexibly read out by downstream areas (Treisman and Gelade, 1980). However, the neuronal mechanisms that allow such flexible feature integration remain unknown. While much previous work has focused on the role of the lateral intraparietal area (LIP) in visuo-spatial functions (e.g. spatial attention, saccadic eye movements) (Bisley and Goldberg, 2003; Gnadt and Andersen, 1988; Goldberg et al., 1990), we hypothesize that feature-based attention allows LIP neurons to flexibly integrate multiple visual feature representations from upstream areas.
LIP is interconnected with both dorsal and ventral stream visual areas (Felleman and Van Essen, 1991; Lewis and Van Essen, 2000), and can encode visual features such as direction (Fanini and Assad, 2009), color (Toth and Assad, 2002), and form (Fitzgerald et al., 2011; Sereno and Maunsell, 1998), particularly when stimuli are task-relevant (Assad, 2003; Oristaglio et al., 2006). LIP is also interconnected with areas of the prefrontal cortex (PFC) (Cavada and Goldman-Rakic, 1989), which has been associated with executive functions and the voluntary control of attention (Armstrong et al., 2009; Funahashi et al., 1989; Ibos et al., 2013; Miller and Cohen, 2001; Miller et al., 1996). Furthermore, LIP activity can reflect extra-retinal and/or cognitive factors such as categories (Freedman and Assad, 2006; Swaminathan and Freedman, 2012), task rules (Stoet and Snyder, 2004), and salience (Gottlieb et al., 1998; Leathers and Olson, 2012). Because LIP participates in both sensory and cognitive functions, it is well positioned to flexibly integrate diverse visual and cognitive inputs. This is further supported by recent work showing that LIP neurons can independently encode, or multiplex, both sensory-motor and cognitive signals (Meister et al., 2013; Rishel et al., 2013).

In this study, we tested the hypothesis that LIP integrates task-relevant visual feature representations. We employed a visual matching task in which monkeys used two visual features (color and motion direction) to identify target (i.e. match) stimuli. One of two sample stimuli was followed by a succession of test stimuli. Monkeys had to indicate whether the test stimuli matched the sample in both color and direction. Because the identity of the sample stimulus varied across trials, the task-relevant color and direction also varied from trial to trial. This allowed us to determine how color and direction selectivity in LIP varied according to which features were task relevant. Neuronal recordings revealed substantial color and direction selectivity in LIP. Interestingly, many LIP neurons showed task-dependent shifts in their direction and/or color tuning, with most neurons shifting toward the direction and/or color that was task relevant. This shows that visual feature representations in LIP are flexible on short time scales, and that LIP integrates multiple task-relevant visual features. Furthermore, our observations are consistent with a model in which LIP linearly integrates attention-related response-gain modulations of feature representations from upstream sensory areas.

Results

Delayed conjunction matching (DCM) task and behavioral performance

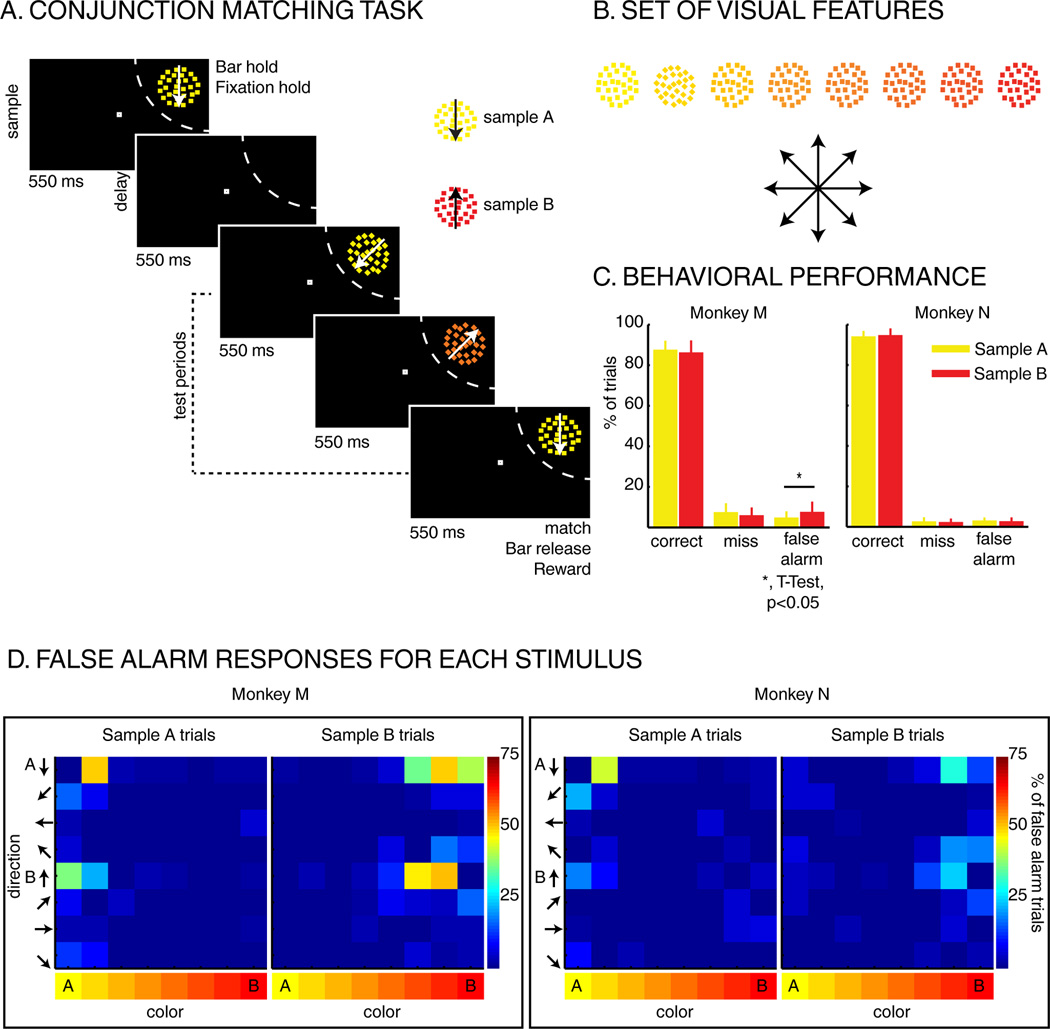

Two monkeys performed a DCM task in which a sample stimulus was followed by 1 to 3 test stimuli (Figure 1A). The stimuli were circular patches (3° radius) of random-dot motion patterns that varied across 8 directions and 8 hues (Figure 1B). To receive a fluid reward, monkeys were required to release a manual touch bar in response to a test stimulus that matched both the sample’s direction and its color. On 25% of trials, none of the test stimuli matched the sample, and monkeys were rewarded for withholding their response. On each trial, one of two sample stimuli (“A” or “B”) instructed the monkeys about which feature values were relevant. Sample A was always yellow dots moving downward, while sample B was always red dots moving upward. Each test stimulus could be any conjunction of the 8 hues and 8 directions (64 unique test stimuli). For each of the 3 test periods, the test stimulus was pseudo-randomly drawn from five types: 1) the stimulus matching the sample, 2) one of the 7 non-match stimuli whose color matched the sample color, 3) one of the 7 non-match stimuli whose direction matched the sample direction, 4) the sample stimulus that was not presented on the current trial (e.g. sample B during sample A trials), or 5) one of the 48 remaining non-match stimuli (both color and direction non-match).
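As a check on the counts above, the sketch below enumerates the 64 color/direction conjunctions and sorts them into the five test types for a given sample. The integer color coding (0 = yellow to 7 = red) and the angle convention (90° = upward, 270° = downward) are assumptions for illustration, not the encoding used in the task software.

```python
from itertools import product

# Hypothetical encoding: color index 0 (yellow) .. 7 (red); directions in degrees,
# evenly spaced by 45 degrees, with 90 = upward and 270 = downward (assumed).
COLORS = range(8)
DIRECTIONS = range(0, 360, 45)

SAMPLE_A = (0, 270)  # yellow dots moving downward
SAMPLE_B = (7, 90)   # red dots moving upward


def classify(test, sample, other_sample):
    """Assign a (color, direction) test stimulus to one of the five test types."""
    color_match = test[0] == sample[0]
    direction_match = test[1] == sample[1]
    if color_match and direction_match:
        return "match"
    if color_match:
        return "color match only"
    if direction_match:
        return "direction match only"
    if test == other_sample:
        return "other sample"
    return "neither feature matches"


def count_types(sample, other_sample):
    """Count how many of the 64 color/direction conjunctions fall into each type."""
    counts = {}
    for test in product(COLORS, DIRECTIONS):
        label = classify(test, sample, other_sample)
        counts[label] = counts.get(label, 0) + 1
    return counts


print(count_types(SAMPLE_A, SAMPLE_B))
```

For either sample, the counts come out as 1 match, 7 color-only matches, 7 direction-only matches, 1 other-sample stimulus, and 48 stimuli matching neither feature, which sum to 64.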

Figure 1.

Task and behavior: A) Delayed conjunction matching task: one of two sample stimuli was presented for 550 ms in the RF of the recorded neuron (dashed arc, not shown to monkeys). The sample could be either sample A (yellow dots moving downwards) or sample B (red dots moving upwards). After a delay of 550 ms, 1 to 3 test stimuli were presented in succession for 550 ms each. All stimuli were conjunctions of one color and one direction. To receive a reward, monkeys had to release a manual lever when the test stimulus matched the sample in both color and direction. On 25% of trials, none of the test stimuli matched the sample, and monkeys had to hold fixation and withhold their manual response to receive a reward. B) Test-stimulus features: eight colors and eight directions were combined to generate 64 different test stimuli. Colors varied from yellow to red, and directions were evenly spaced across 360 degrees. C) Behavioral performance: both monkeys (M and N) performed the task with high accuracy, with ~90% of trials correct, ~5% misses and ~5% false alarms (excluding fixation breaks). D) Percentage of false-alarm responses for each of the 64 test stimuli: each row represents one direction, and each column represents one color.

Both monkeys performed the DCM task accurately (percent correct: Monkey M: ~86%, Monkey N: ~90%), consistent with the monkeys using both features to guide their responses (Figure 1C). An examination of false-alarm errors (i.e. responding to a non-match test stimulus) revealed elevated error rates for test stimuli that matched the sample color and whose direction was visually similar to the sample direction (Figure 1D).

Direction and color selectivity in LIP during DCM task

A central aim of this study is to examine whether feature tuning in LIP varies according to the features that are task-relevant. The DCM task allows this by independently assessing each neuron’s test-period direction and color tuning on sample A and B trials. Importantly, throughout this manuscript (except for Figure S2), we analyzed only non-matching test stimuli. This ensures that neuronal activity related to the monkeys’ manual responses does not contribute to the observed effects.

We recorded from 127 LIP neurons (Monkey M: N=65; Monkey N: N=62) during DCM task performance. A substantial fraction of LIP neurons were selective for the direction and/or the color of test stimuli (three-way ANOVA with test direction, test color, and sample identity as factors; P<0.05). During the test period, 99/127 (77%) LIP neurons were direction selective and 55/127 (43%) were color selective (main effect of the feature and/or an interaction between that feature and sample identity). 12/99 direction-selective neurons showed an interaction between direction and sample identity, while a color-sample interaction was observed in 39/55 color-selective neurons. This suggests that neuronal selectivity for test stimuli varied according to the directions and colors that were relevant on each trial. Most color-selective LIP neurons were also direction selective (N=46; 83% of color-selective and 46% of direction-selective neurons).

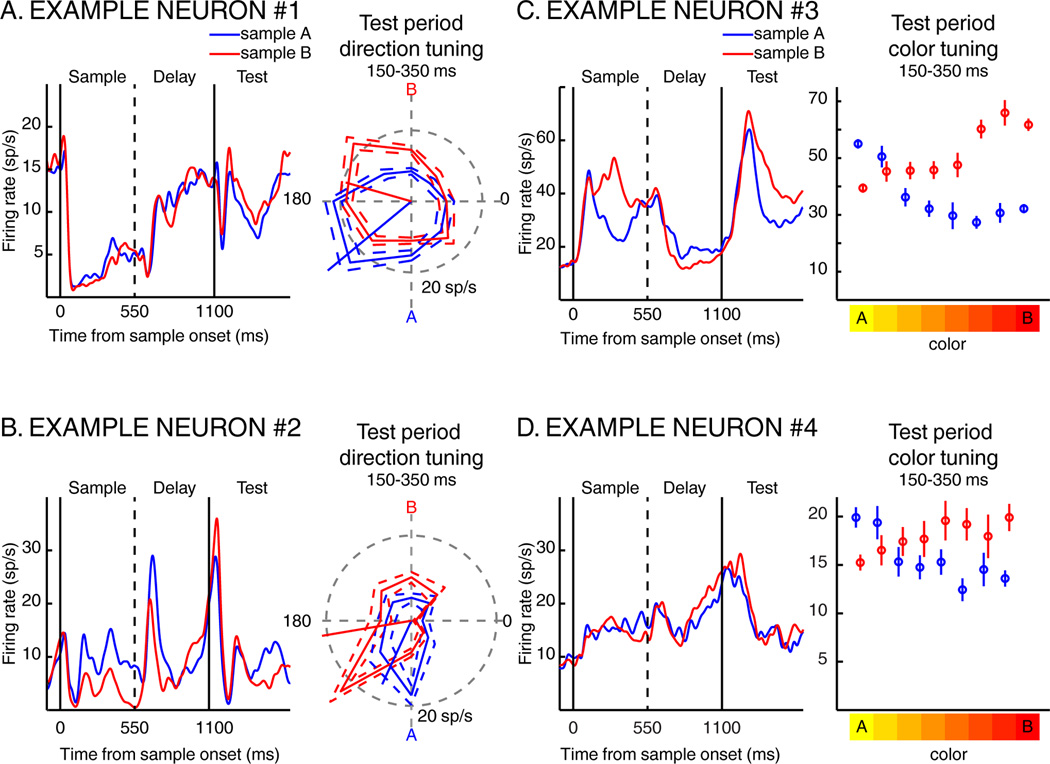

Figure 2 shows example neurons whose direction and color selectivity during the DCM task depended on sample identity. For each neuron, the left panel shows average activity across the sample, delay, and first test periods for sample A and B trials. The neurons in Figures 2B and 2C responded preferentially to samples A and B, respectively. The right panel depicts test-period responses to each motion direction (Figures 2A and 2B) or each color (Figures 2C and 2D) on sample A (blue) and B (red) trials. Both neurons in Figures 2A and 2B shifted their preferred directions toward the direction of the relevant sample. The example neurons in Figures 2C and 2D showed test-period color selectivity that was strongly influenced by sample identity, with both neurons responding more strongly to test stimuli whose color was similar to that of the sample. These examples suggest that visual feature selectivity in LIP can be modulated from trial to trial depending on the task relevance of visual features.

Figure 2.

Four example LIP neurons. For each neuron, we present the time course of the response to sample A (blue traces) and to sample B (red traces) during the sample, delay, and first test epochs. A and B) Shifts of direction tuning. The polar plots to the right of each PSTH show average test-period firing rate to each test direction on sample A (blue) and sample B (red) trials. The solid trace indicates the mean firing rate, while the dotted traces indicate the standard error of the mean (SEM). The blue and red oriented line segments indicate each neuron’s preferred direction on sample A and sample B trials. C and D) Shifts of color tuning. The plots to the right of each PSTH show each neuron’s average test-period firing rate to each test color on sample A (blue) and sample B (red) trials. Error bars indicate SEM.

For population analyses, neuronal direction and color selectivity were characterized separately on sample A and B trials. Each neuron’s preferred direction was computed as a vector sum: the average response to each direction was weighted by the cosine and by the sine of that direction, giving the horizontal and vertical components of a vector whose angle defines the preferred direction. Across the population of direction-selective neurons (N=99), the mean preferred direction was significantly shifted toward the relevant sample direction, by 21.6° (Watson-Williams test, P=0.001). We examined whether this population-level shift in direction tuning could be driven by a few neurons showing large tuning shifts. A bootstrap analysis (Figure S1, P<0.05) revealed that 18% (18/99) of LIP neurons showed significant differences in their preferred direction between sample A and B trials. Not surprisingly, this subpopulation showed a large (117.9°) and significant (P=0.005) shift toward the relevant sample. After removing these 18 neurons, we still observed a significant shift toward the relevant direction (N=81, shift amplitude = 5.6°, Watson-Williams test, P=0.002).
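The vector-sum estimate of preferred direction can be written in a few lines. The sketch below is illustrative: it assumes mean test-period rates are already available for the 8 directions (0° to 315° in 45° steps), and the shift index (how much closer to sample A's direction, 270°, the preferred direction lies on sample A trials than on sample B trials) is one plausible definition following the axes of Figure 3C, not necessarily the statistic the authors used. The Watson-Williams test itself is not reimplemented.

```python
import math

DIRECTIONS_DEG = [0, 45, 90, 135, 180, 225, 270, 315]
SAMPLE_A_DIR = 270.0  # downward (assumed angle convention)


def preferred_direction(mean_rates, directions_deg=DIRECTIONS_DEG):
    """Vector-sum preferred direction in degrees, in [0, 360).

    x and y are the responses weighted by the cosine and sine of each direction."""
    x = sum(r * math.cos(math.radians(d)) for r, d in zip(mean_rates, directions_deg))
    y = sum(r * math.sin(math.radians(d)) for r, d in zip(mean_rates, directions_deg))
    return math.degrees(math.atan2(y, x)) % 360.0


def angular_distance(a_deg, b_deg):
    """Unsigned angular distance in degrees, in [0, 180]."""
    d = abs(a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)


def shift_toward_relevant(rates_on_a_trials, rates_on_b_trials):
    """Hypothetical shift index (deg): positive when the preferred direction is closer
    to sample A's direction on A trials than on B trials. Because samples A and B
    move in opposite directions, this also means closer to sample B's direction on B trials."""
    pref_a = preferred_direction(rates_on_a_trials)
    pref_b = preferred_direction(rates_on_b_trials)
    return angular_distance(pref_b, SAMPLE_A_DIR) - angular_distance(pref_a, SAMPLE_A_DIR)


# Toy tuning curves (made-up rates): peak at 270 deg on A trials, at 90 deg on B trials.
rates_a = [1, 1, 1, 1, 1, 1, 5, 1]
rates_b = [1, 1, 5, 1, 1, 1, 1, 1]
print(preferred_direction(rates_a), preferred_direction(rates_b), shift_toward_relevant(rates_a, rates_b))
```

Because the cosine and sine weights of evenly spaced directions sum to zero, a uniform baseline rate does not bias the estimate; only the modulated part of the tuning curve sets the angle.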

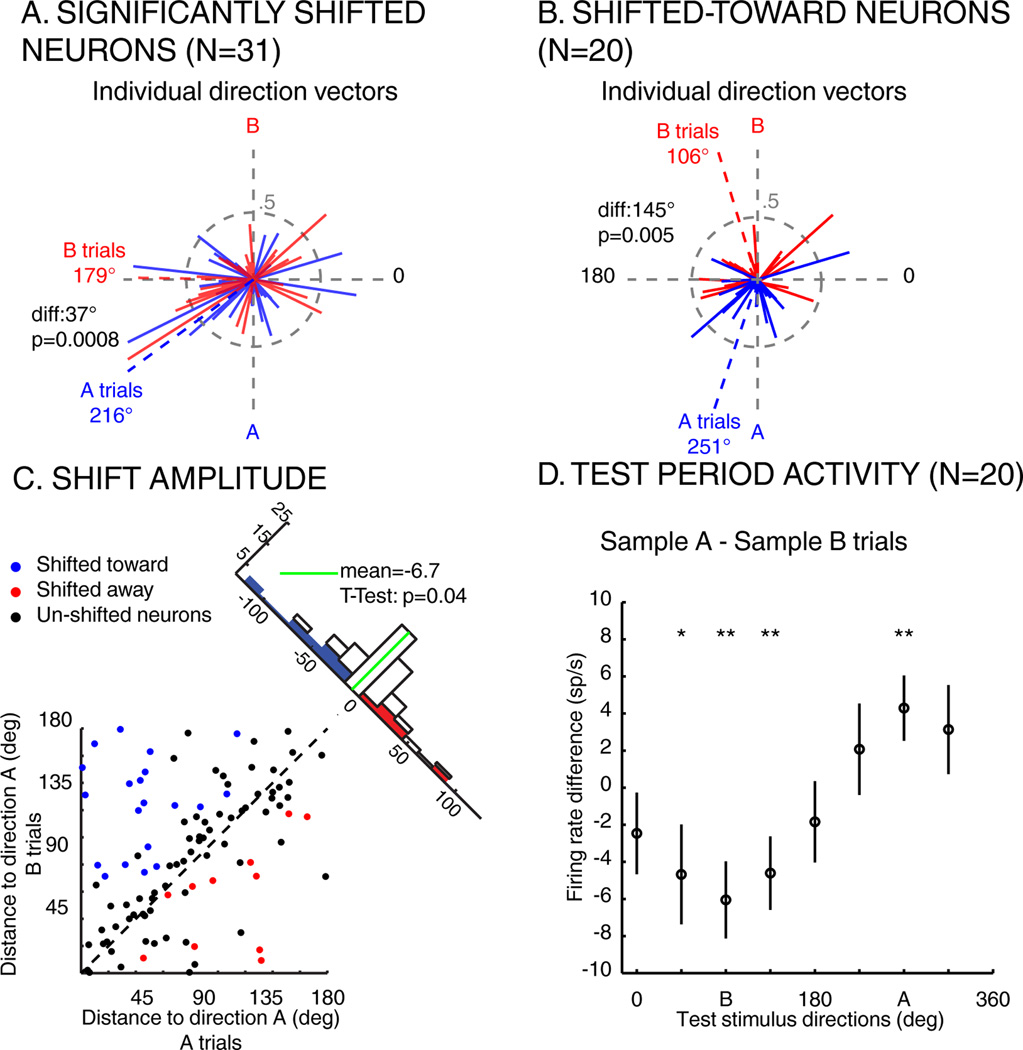

Although the above analysis used a fixed time epoch, the time course of shift effects during the test period varied across the population (mean latency = 176.1 ms, standard deviation = 101 ms). We therefore also computed direction-tuning shifts using a sliding-window approach, and found 31/127 neurons whose preferred direction was significantly (P<0.05) shifted (20/31 toward, 11/31 away; Figure 3A; average shift amplitude = 37.0° toward the sample, Watson-Williams test, P=8 × 10⁻⁴). All 18 neurons that showed significant shifts using the fixed window showed the same direction of shift with the sliding-window method. Because the two methods gave qualitatively similar results, we focus below on the population defined by the sliding-window approach, which is larger. For each neuron, we computed the difference between the responses to each direction on sample A and B trials. The average differential response of the shifted-toward neurons (N=20; Figure 3D) shows that the representation of the relevant sample direction is emphasized.
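Whether a single neuron's preferred direction differs between sample A and B trials can be assessed by shuffling trials between the two conditions. The sketch below is a generic permutation test on the absolute change in vector-sum preferred direction within one time window (it would be repeated for each window in a sliding-window analysis); the trial format, the number of permutations, and the synthetic neuron are assumptions for illustration, not the authors' exact procedure.

```python
import math
import random


def preferred_direction(trials):
    """Vector-sum preferred direction (deg) from (direction_deg, rate) trials,
    using the mean rate at each direction."""
    totals, counts = {}, {}
    for direction, rate in trials:
        totals[direction] = totals.get(direction, 0.0) + rate
        counts[direction] = counts.get(direction, 0) + 1
    x = sum(totals[d] / counts[d] * math.cos(math.radians(d)) for d in totals)
    y = sum(totals[d] / counts[d] * math.sin(math.radians(d)) for d in totals)
    return math.degrees(math.atan2(y, x)) % 360.0


def angular_distance(a_deg, b_deg):
    """Unsigned angular distance in degrees, in [0, 180]."""
    d = abs(a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)


def tuning_shift_permutation_test(trials_a, trials_b, n_perm=500, seed=0):
    """Return (observed shift in degrees, permutation p-value).

    The null distribution is built by randomly reassigning trials to the
    sample A and sample B conditions while keeping each trial's direction."""
    rng = random.Random(seed)
    observed = angular_distance(preferred_direction(trials_a), preferred_direction(trials_b))
    pooled = list(trials_a) + list(trials_b)
    n_a = len(trials_a)
    n_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        null_shift = angular_distance(preferred_direction(pooled[:n_a]),
                                      preferred_direction(pooled[n_a:]))
        if null_shift >= observed:
            n_extreme += 1
    return observed, (n_extreme + 1) / (n_perm + 1)


def synthetic_trials(peak_deg, n_per_direction=10):
    """Hypothetical noiseless neuron whose tuning peaks at peak_deg."""
    return [(d, 5.0 + 20.0 * math.exp(2.0 * (math.cos(math.radians(d - peak_deg)) - 1.0)))
            for d in range(0, 360, 45) for _ in range(n_per_direction)]


shift, p = tuning_shift_permutation_test(synthetic_trials(330.0), synthetic_trials(30.0))
print(round(shift, 1), p)
```

For the synthetic neuron, whose tuning peaks at 330° on A trials and 30° on B trials, the observed shift is about 60° and the permutation p-value is small. Adding 1 to numerator and denominator keeps the p-value strictly positive.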

Figure 3.

Impact of task demands on LIP direction tuning. A) Individual direction vectors and normalized activity of shifted neurons (N=31) during sample A (blue) and B (red) trials. Solid lines represent the direction vector of each neuron during sample A (blue) or B (red) trials. Blue and red dashed lines represent the sums of the blue and red direction vectors, respectively. diff: angular distance between sample A and sample B vectors. B) Individual direction vectors and normalized population activity of shifted-toward neurons, same conventions as in A. C) Angular distance between the preferred direction of each direction-selective neuron (N=99) and the direction of sample A (270 degrees), when monkeys are looking for sample A (x-axis) or for sample B (y-axis). Blue points: neurons whose preferred direction is significantly shifted toward the relevant direction. Red points: neurons whose preferred direction is significantly shifted away from the relevant direction. Black points: neurons whose preferred direction is not significantly shifted. Histogram along diagonal: projection of each point of the scatter plot onto the diagonal. D) Average difference of response to each direction (+/− SEM) between sample A and B trials (N=20, shifted-toward neurons; *P<0.05, **P<0.01, paired T-Test).

We also characterized the color tuning of each LIP neuron by fitting a linear regression to its responses to the 8 test-stimulus colors. Colors were ordered from yellow to red along the regression axis, so a neuron tuned to yellow had a negative slope, while a neuron tuned to red had a positive slope. A bootstrap analysis, similar to the one used to characterize shifts in direction tuning, revealed that 44% (56/127) of LIP neurons showed significant (P<0.05) differences in their tuning slope between sample A and sample B trials (49/56 shifted toward, 7/56 shifted away). Using the sliding-window permutation test (mean latency = 165.5 ms, standard deviation = 66.1 ms), we found that the color tuning of 70/127 LIP neurons was significantly shifted (61 shifted toward, 9 shifted away). Among color-selective neurons (according to the three-way ANOVA described above), 13/55 were not significantly shifted.
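The regression-based color tuning measure can be sketched as an ordinary least-squares slope of mean rate against color index. This is an illustrative version that assumes colors are indexed 0 (yellow) to 7 (red) and uses raw rates; any normalization applied before fitting (the reported population slopes of −0.56 and 0.58 suggest normalized responses) is not specified here, and the shift index is a hypothetical convenience.

```python
def color_tuning_slope(mean_rates):
    """Least-squares slope of mean rate vs. color index, assuming the 8 colors are
    indexed 0 (yellow) to 7 (red). Negative slope = yellow preference."""
    n = len(mean_rates)
    x_mean = (n - 1) / 2.0
    y_mean = sum(mean_rates) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(mean_rates))
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    return sxy / sxx


def color_shift_toward_relevant(rates_on_a_trials, rates_on_b_trials):
    """Hypothetical index: positive when tuning is more red-preferring on sample B (red)
    trials than on sample A (yellow) trials, i.e. a shift toward the relevant color."""
    return color_tuning_slope(rates_on_b_trials) - color_tuning_slope(rates_on_a_trials)


# Made-up mean rates (spikes/s) for colors 0..7.
rates_a = [12.0, 11.0, 10.0, 9.0, 8.0, 7.0, 6.0, 5.0]  # prefers yellow on A trials
rates_b = list(reversed(rates_a))                      # prefers red on B trials
print(color_tuning_slope(rates_a), color_tuning_slope(rates_b),
      color_shift_toward_relevant(rates_a, rates_b))
```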

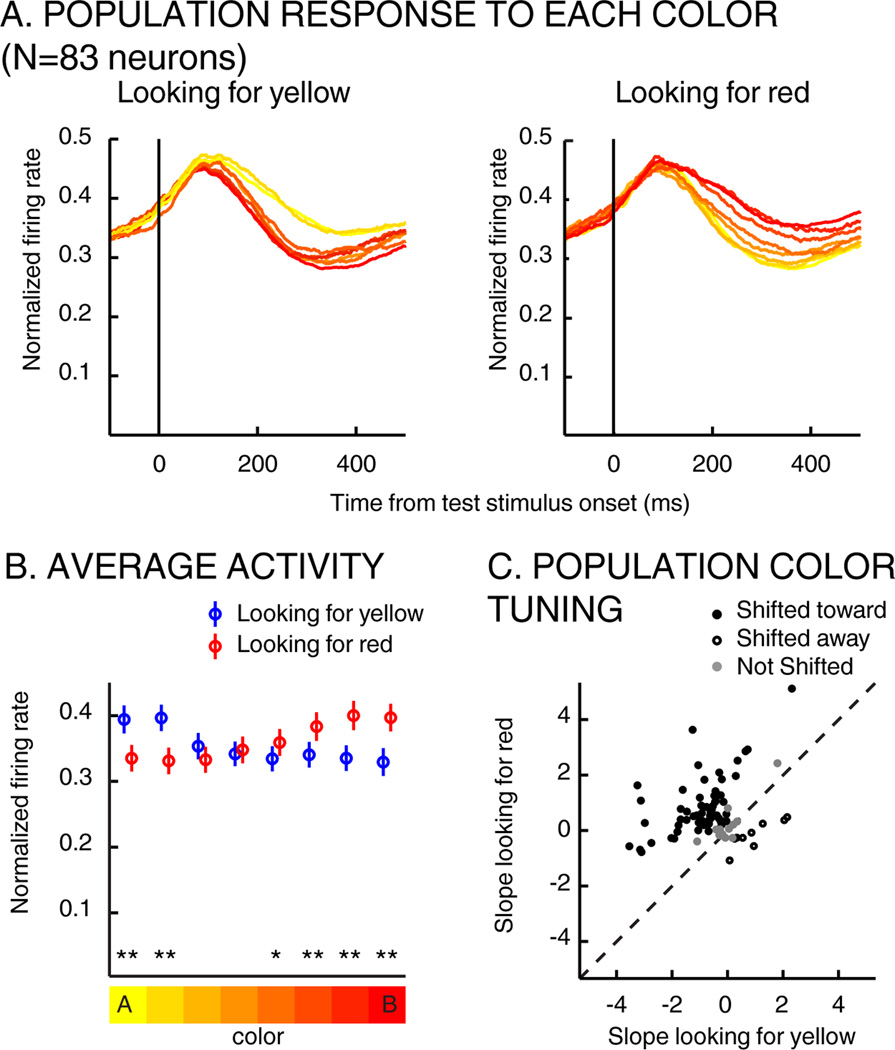

Figures 4A and 4B show the average test-period activity of this population (N=83 neurons that were color selective or showed significant shifts of color tuning, i.e. the 70 shifted neurons plus the 13 color-selective neurons that were not shifted). Population-level color preferences reversed depending on the task relevance of each color. Linear regression of each neuron’s (N=83) average responses to the 8 colors revealed a mean slope of −0.56 on A trials (indicating a preference for yellow) and 0.58 on B trials (red preference) (paired T-Test, P≈10⁻¹³).

Figure 4.

Impact of task demands on LIP color tuning. A) Average time course of test-period responses to each test-stimulus color (across color-selective neurons, N=83) when monkeys were looking for yellow (left) or red (right). The color of each trace indicates test-stimulus color. B) Average test-period activity among color-selective neurons (N=83) when monkeys were looking for yellow (blue) or red (red), in a 100 to 400 ms time window (paired T-Test, * p<0.05, ** p<0.001). Error bars indicate SEM. C) Each point represents the slope of the linear regression fit for one neuron when monkeys were looking for yellow (x-axis) vs. red (y-axis).

Relationship between direction and color tuning

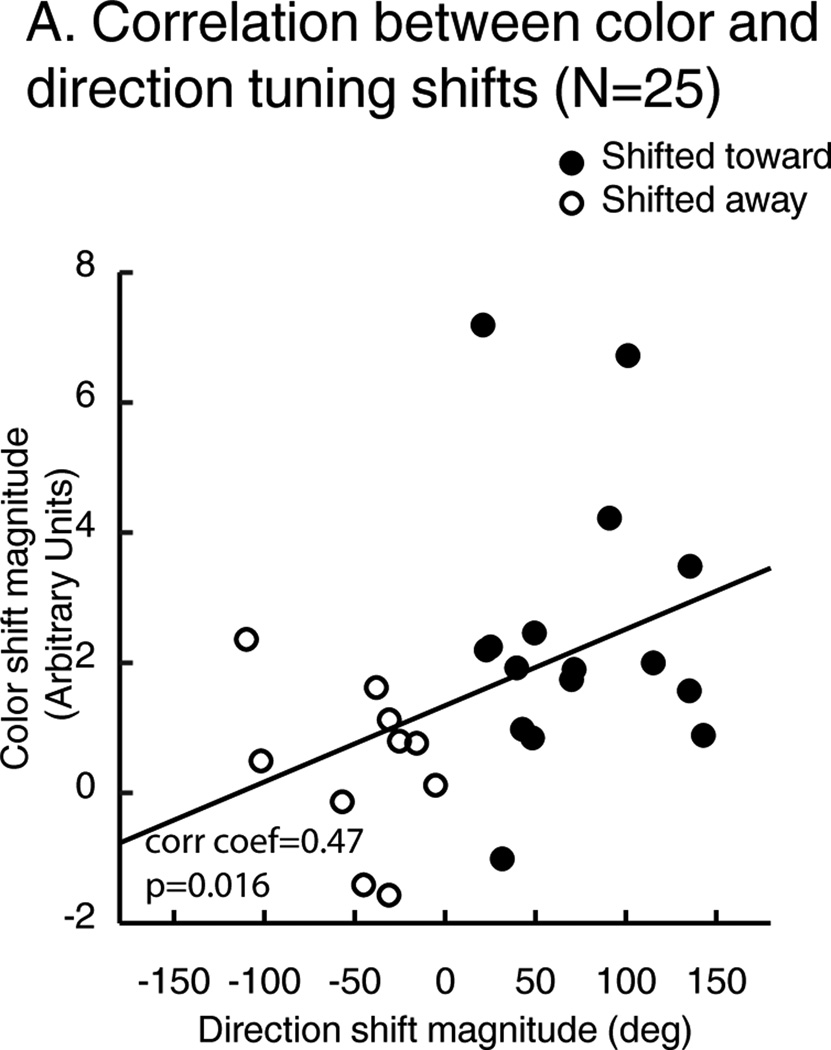

Because LIP showed significant sample-dependent shifts of direction and/or color selectivity, we tested whether both tuning-shift effects were evident in the same neurons. Of the 31 neurons that showed significant direction tuning shifts, 25 also showed significant color tuning shifts. We examined the relationship between each neuron’s direction-shift and color-shift effects (Figure 5), and found that neurons with larger direction shifts also showed larger color shifts (Spearman correlation coefficient: 0.47, P=0.016).
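Spearman's coefficient is the Pearson correlation of the ranks, with tied values assigned their average rank. A minimal standard-library sketch follows; it returns only the coefficient (the P value reported above would additionally require a t-approximation or a permutation test, omitted here), and the shift values are made up for illustration.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman rank correlation between, e.g., per-neuron direction and color shifts."""
    return pearson(average_ranks(x), average_ranks(y))


# Made-up shift amplitudes for five hypothetical neurons (not data from the study).
direction_shifts = [10.0, 35.0, 20.0, 80.0, 55.0]
color_shifts = [0.1, 0.4, 0.5, 0.9, 0.6]
print(spearman(direction_shifts, color_shifts))
```

For this toy input the function returns 0.9: only the second and third neurons swap rank order between the two measures.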

Figure 5.

A) Correlation between color and direction shift effects. Each point represents the amplitude of one neuron's tuning shift for direction (X axis) and color (Y axis). Filled points: neurons whose preferred direction is shifted toward the attended direction. Open points: neurons whose preferred direction is shifted away from the attended direction.

This raises the question of whether feature encoding in LIP is used to detect and respond to match stimuli. To examine this, we compared neuronal responses to stimulus A and stimulus B when they were shown as match and non-match test stimuli. We focused on neurons that were both color and direction selective according to the three-way ANOVA, or that showed significant color and direction tuning shifts (N=71) during the DCM task (using different populations led to similar results). Responses to match stimuli (Figure S2A) were higher than responses to non-match stimuli on both sample A (paired T-Test, P=4 × 10⁻⁵) and sample B trials (P=0.001). Moreover, the average population response was greater for match than for non-match stimuli during both sample A and B trials (Figure S2B). This shows that, in addition to flexible visual feature selectivity, LIP also reflects the match/non-match status of test stimuli.

Our results suggest that tuning shifts in LIP stem from modulations of selective attention. However, we considered whether they could instead reflect other cognitive or behavioral processes, such as arousal or motor planning, that could co-vary with the similarity between sample and test stimuli. For example, arousal could increase in proportion to sample-test similarity, which could in turn modulate LIP activity. This could yield an apparent tuning shift (i.e. greater firing to test features similar to the sample) and correlated amplitudes of color and direction shifts. We examined test-period activity among neurons with significant shifts of both color and direction tuning (N=25) as a function of sample-test similarity (or “spectral distance”; Figure S3A). We analyzed activity separately on sample A and B trials, and separately for neurons preferring the direction of sample A or of sample B (four plots in total). In all four plots, a spectral distance of 1 corresponds to the non-matching test stimuli most similar to the sample. A global sample-test similarity effect would produce stronger activity at smaller spectral distances in every plot. Instead, such similarity effects were evident only when the relevant sample was the neurons’ preferred sample (sample A trials, sample A-preferring neurons: correlation coefficient = −0.099, P = 0.0023; sample B trials, sample B-preferring neurons: correlation coefficient = −0.231, P = 4 × 10⁻⁸), but not when they differed (sample A trials, sample B-preferring neurons: correlation coefficient = −0.048, P = 0.25; sample B trials, sample A-preferring neurons: correlation coefficient = −0.009, P = 0.76). This pattern is consistent with a tuning shift rather than a global similarity effect. Moreover, the responses of these neurons to the 64 test stimuli did not increase with the probability of false-alarm responses (Figure S3B). This suggests that neuronal activity does not simply track sample-test similarity, and that tuning shifts are unlikely to be due to factors such as arousal or motor planning.

Visual feature tuning during DCM task and passive viewing

For a subpopulation of LIP neurons (N=83/127), we assessed direction and color selectivity during passive viewing (PV) in addition to the DCM task. Nearly half of these neurons (N=40/83; one-way ANOVA across 8 directions, P<0.05) were direction selective during PV, while a comparatively small population was color selective (N=17/83; one-way ANOVA across 8 colors, P<0.05). We examined whether feature selectivity during PV was related to selectivity during the DCM task. Most neurons that were direction selective during PV were also direction selective during the DCM task (N=36/40; three-way ANOVA for the DCM task, one-way ANOVA for PV, P<0.05). In contrast, color selectivity was much more common during the DCM task (N=53) than during PV (N=17), and only 9 of the 17 neurons that were color selective during PV were also color selective during the DCM task (three-way ANOVA for the DCM task and one-way ANOVA for PV, P<0.05).

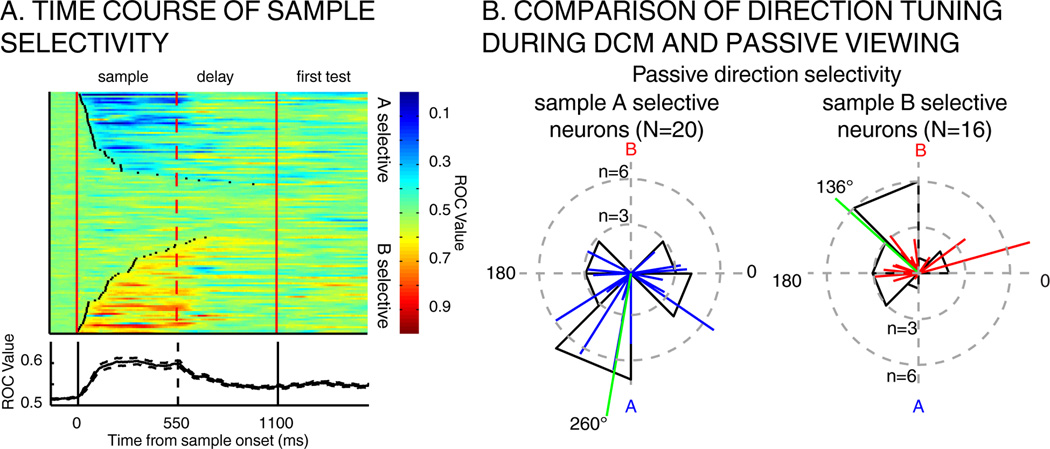

A large proportion of LIP neurons (104/127) responded preferentially to one of the sample stimuli during the DCM task (T-Test, P<0.01). The time course of sample selectivity is shown for each LIP neuron in Figure 6A (median latency for sample A-selective neurons: 129 ms, absolute deviation from the median: 83 ms; for sample B-selective neurons: 200 ms, absolute deviation from the median: 155 ms). We asked whether neurons showed similar directional preferences in the DCM and PV tasks, focusing on the neurons (N=36/83) that were direction selective during PV and responded differentially to the two samples during the sample period of the DCM task. Neurons that preferred sample A during the DCM task had a mean preferred direction of 260° during PV (direction of sample A = 270°). Likewise, neurons that preferred sample B had a mean preferred direction of 136° during PV (direction of sample B = 90°) (Figure 6B). This reveals a relationship between DCM sample selectivity and direction selectivity during PV. In contrast, we did not detect an obvious relationship between the relatively weak color selectivity during PV and DCM sample selectivity. Of the 17 neurons that were color selective during PV, 14 were sample selective during the DCM task (8 preferred sample A, 6 preferred sample B). The slope of the linear regression for test-period color tuning was negative for 2/8 sample A-selective neurons and positive for 6/6 sample B-selective neurons. The small size of this pool precludes strong conclusions about the relationship between color selectivity during the passive and DCM tasks.

Figure 6.

A) Time course of sample selectivity. Top: each row depicts the time course of sample selectivity (A vs. B) for one neuron from sample onset to the offset of the first test stimulus. Black points on each row mark the latency at which selectivity becomes significant for that neuron (N=102/127). Neurons are sorted according to their latency of sample selectivity. Bottom: average time course of sample selectivity (ROC) across the population shown above. B) Comparison of direction tuning during the DCM task and passive viewing. Left: preferred directions during the passive viewing task, shown for direction-selective neurons that preferred sample A during the DCM task (N=20). Right: the same, for neurons that preferred sample B during the DCM task. Green lines represent the mean angle across all vectors.
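The ROC measure of sample selectivity in panel A can be sketched as the area under the ROC curve (AUC) for discriminating sample A from sample B trials, computed in sliding windows. The AUC below is computed by pairwise comparison (equivalent to a normalized Mann-Whitney U statistic); the 100 ms window, the window start times, and the toy spike times are assumptions for illustration, and the criterion used to mark significance latencies in Figure 6A is not reproduced.

```python
def roc_auc(counts_a, counts_b):
    """Area under the ROC curve: P(count on a sample A trial > count on a sample B trial),
    with ties counted as 1/2. 0.5 = no selectivity; > 0.5 = prefers sample A."""
    score = 0.0
    for a in counts_a:
        for b in counts_b:
            if a > b:
                score += 1.0
            elif a == b:
                score += 0.5
    return score / (len(counts_a) * len(counts_b))


def spike_count(spike_times_ms, start_ms, width_ms):
    """Number of spikes in [start_ms, start_ms + width_ms)."""
    return sum(1 for t in spike_times_ms if start_ms <= t < start_ms + width_ms)


def auc_time_course(trials_a, trials_b, starts_ms, width_ms=100.0):
    """Sliding-window AUC; each trial is a list of spike times (ms from sample onset)."""
    return [
        roc_auc([spike_count(t, s, width_ms) for t in trials_a],
                [spike_count(t, s, width_ms) for t in trials_b])
        for s in starts_ms
    ]


# Toy example (made-up spike times): sample A trials fire early, sample B trials late.
trials_a = [[40.0, 80.0], [55.0]]
trials_b = [[240.0], [260.0, 290.0]]
print(auc_time_course(trials_a, trials_b, starts_ms=[0.0, 100.0, 200.0]))
```

For the toy trials, the three windows give AUC values of 1.0 (sample A preferred), 0.5 (no spikes in either condition) and 0.0 (sample B preferred).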

These results indicate that many LIP neurons show “native” direction tuning outside the DCM task, while a distinct pool of neurons shows direction selectivity that is more task dependent. To determine whether these two groups exhibit the same degree of sample-dependent tuning shifts, we compared the magnitude of tuning shifts between them. Interestingly, LIP neurons that were not direction selective during PV showed larger tuning shifts (61.9°) than passively direction-tuned neurons (40.3°; T-Test, P=0.029). This suggests that natively untuned neurons show more flexible tuning during the DCM task.

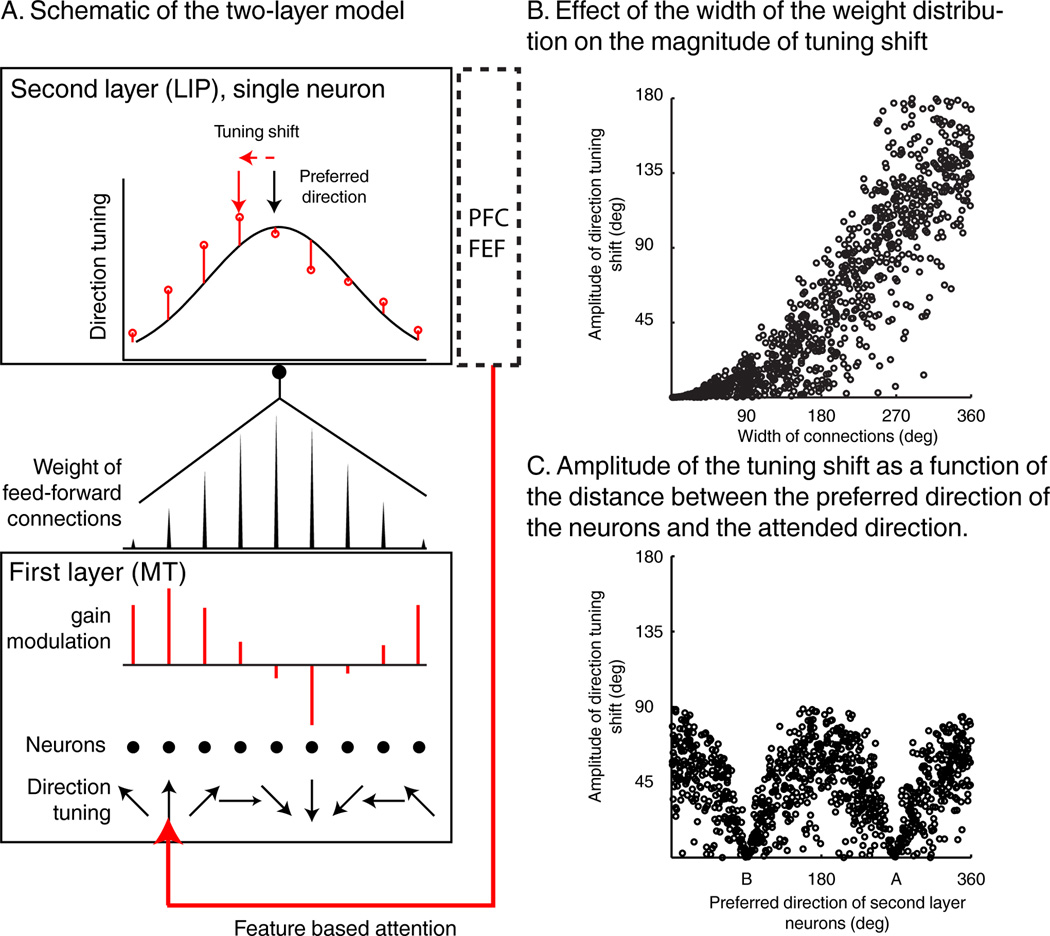

Gain modulations in upstream visual areas could produce tuning shifts in LIP

We propose a model in which shifts of feature tuning in LIP could arise via linear integration of attention-related gain changes in upstream visual areas, such as those described in MT and V4 (Martinez-Trujillo and Treue, 2004; Maunsell and Treue, 2006; McAdams and Maunsell, 1999; Treue and Martínez Trujillo, 1999). The model consists of two neuronal layers, with the first layer sending feedforward connections to the second. Here we focus on shifts of direction tuning in LIP, so the first and second layers correspond to areas MT and LIP, respectively. The same model applies to color, with layer-one neurons corresponding to color-selective V4 neurons. Layer-two (L2) neurons integrate inputs from a population of direction-tuned layer-one (L1) neurons (Figure 7A). The distribution of connection weights between layers determines the direction selectivity of L2 neurons: the sharper the distribution of synaptic weights, the sharper the direction tuning of the LIP neuron. Conversely, an L2 neuron that receives uniform input from L1 neurons would respond equally to all directions.

Figure 7.

Visual feature integration model. A) Schematic representation of the two-layer model. Bottom: each point represents one neuron. Each L1 neuron is tuned to a specific direction. The length and direction (up or down) of the red lines represent the multiplicative gain applied to each neuron due to feature-based attention. The red arrow indicates the attended direction. Middle: representation of synaptic weights between L1 neurons and a single L2 neuron. Top: tuning curve of a layer-two (i.e. LIP) neuron that linearly integrates inputs from the first-layer population, during passive viewing (black curve) or when attention is applied to one direction (red points). B) Amplitude of the tuning shift between A and B trials as a function of the width of the distribution of connection weights between the two layers. C) Amplitude of the tuning shift as a function of the distance between the attended direction and the preferred direction of L2 neurons, for a fixed connection-weight width of 180 degrees.

Previous work in MT and V4 found that feature-selective neurons show changes in response consistent with a gain modulation due to feature-based attention (Bichot et al., 2005; Martinez-Trujillo and Treue, 2004; McAdams and Maunsell, 1999, 2000; Treue and Martínez Trujillo, 1999). We therefore applied gain modulations to L1 neurons and examined their impact on tuning in L2 (Figure 7). When monkeys are looking for a specific direction, L1 neurons tuned to nearby directions should show an increase in response gain. Gain increases are expected to be strongest for neurons tuned to directions closest to the relevant direction, and progressively weaker (or even to become gain decreases) for larger mismatches in tuning (Martinez-Trujillo and Treue, 2004). As a consequence, linear integration of gain-modulated L1 activity by L2 neurons shifts L2 direction tuning toward the relevant (i.e. attended) direction (Figure 7A). The model can also account for tuning shifts away from the attended direction, as observed in a small neuronal pool in our study. Such shifts occurred in model units when attention-related gain modulations of L1 neurons were smaller for the attended than for the unattended direction, as previously reported in a subset of MT neurons (Treue and Martínez Trujillo, 1999). L2 neurons that specifically integrate inputs from L1 neurons with such gain modulations show direction tuning shifted away from the attended direction.
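A minimal simulation of this two-layer scheme is sketched below. All parameter choices are assumptions for illustration, not the authors' model: 360 L1 units with von Mises-shaped direction tuning, L2 input weights given by a von Mises profile around the L2 neuron's native preferred direction (smaller `weight_kappa` means broader weights; 0 means uniform), and a cosine-shaped feature-similarity gain `1 + alpha*cos(pref - attended)` that exceeds 1 near the attended direction and falls below 1 opposite it. Setting `alpha` negative mimics the reversed modulation that produces shifts away from the attended direction.

```python
import math

L1_PREFS = [float(p) for p in range(360)]                # one L1 (MT-like) unit per degree
TEST_DIRECTIONS = [float(d) for d in range(0, 360, 45)]  # 8 test directions, as in the task


def bump(delta_deg, kappa):
    """Unnormalized von Mises profile, equal to 1 at delta = 0."""
    return math.exp(kappa * (math.cos(math.radians(delta_deg)) - 1.0))


def l2_tuning(native_pref, weight_kappa, attended=None, alpha=0.2, l1_kappa=2.0, baseline=0.1):
    """Responses of one L2 (LIP-like) unit that linearly sums gain-modulated L1 activity."""
    weights = [bump(p - native_pref, weight_kappa) for p in L1_PREFS]
    if attended is None:
        gains = [1.0] * len(L1_PREFS)
    else:
        # Feature-similarity gain: > 1 near the attended direction, < 1 opposite it.
        gains = [1.0 + alpha * math.cos(math.radians(p - attended)) for p in L1_PREFS]
    return [
        sum(w * g * (baseline + bump(theta - p, l1_kappa)) for w, g, p in zip(weights, gains, L1_PREFS))
        for theta in TEST_DIRECTIONS
    ]


def preferred_direction(tuning):
    """Vector-sum preferred direction (deg, 0-360) of a tuning curve over TEST_DIRECTIONS."""
    x = sum(r * math.cos(math.radians(d)) for r, d in zip(tuning, TEST_DIRECTIONS))
    y = sum(r * math.sin(math.radians(d)) for r, d in zip(tuning, TEST_DIRECTIONS))
    return math.degrees(math.atan2(y, x)) % 360.0


def offset(angle_deg, reference_deg):
    """Signed angle (deg) from reference to angle, wrapped to [-180, 180)."""
    return (angle_deg - reference_deg + 180.0) % 360.0 - 180.0


native = 0.0  # L2 unit natively preferring rightward motion (assumed)
for kappa in (0.5, 1.0, 4.0):
    pref_a = preferred_direction(l2_tuning(native, kappa, attended=270.0))  # sample A trials
    pref_b = preferred_direction(l2_tuning(native, kappa, attended=90.0))   # sample B trials
    print(f"weight_kappa={kappa}: A-trial offset {offset(pref_a, native):+.1f} deg, "
          f"B-trial offset {offset(pref_b, native):+.1f} deg")
```

With these illustrative parameters, the preferred direction moves toward 270° on sample A trials and toward 90° on sample B trials, and the movement shrinks as the weights sharpen; a negative `alpha` reverses the sign of the shifts (the "shift away" case).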

This model provides a candidate mechanism for two main observations in our study. First, shifts in direction tuning were smaller for LIP neurons that were direction tuned during passive viewing than for those that were not. Second, LIP neurons that were weakly color selective during passive viewing strongly represented the relevant color during the DCM task. In the model, these effects arise because DCM-task feature tuning in L2 neurons is a linear combination of two factors: 1) the native feature selectivity of L2 neurons, and 2) attention-related gain modulations of L1 activity. The model assumes that direction tuning in L2 (LIP) neurons arises from inputs from populations of L1 (MT) neurons with non-uniform distributions of preferred directions, whereas passively untuned L2 neurons receive inputs from L1 populations with uniform distributions of preferred directions. This predicts that passively direction-tuned L2 neurons will show modest tuning shifts (Figure 7B). In contrast, passively untuned L2 neurons (e.g. most LIP neurons were not color selective during passive viewing) can show large shifts, since their tuning primarily reflects the readout of gain modulations in L1, as we observed for LIP color tuning during the DCM task (Figure 7B).
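Why broader input weights produce larger shifts can be seen analytically in a continuous version of the same scheme. The following derivation is an illustrative sketch rather than the authors' formulation, and assumes: L1 preferred directions $\varphi$ tile the circle; the L2 neuron's input weights $w(\varphi)$ are symmetric about its native preferred direction, set to $0$; the L1 tuning kernel $f$ is symmetric and unimodal; and attention multiplies L1 activity by a cosine gain with amplitude $\alpha$ around the attended direction $\theta_a$. The vector-sum preferred direction is the argument of the first circular harmonic $\hat R_1$ of the L2 tuning curve $R(\theta)$:

$$
\begin{aligned}
R(\theta) &= \int_0^{2\pi} w(\varphi)\, g(\varphi)\, f(\theta - \varphi)\, d\varphi, \qquad g(\varphi) = 1 + \alpha\cos(\varphi - \theta_a),\\
\hat{R}_1 &= \int_0^{2\pi} R(\theta)\, e^{i\theta}\, d\theta
 = \hat{f}_1 \int_0^{2\pi} w(\varphi)\, g(\varphi)\, e^{i\varphi}\, d\varphi
 = \hat{f}_1 \Big[ w_1 + \tfrac{\alpha}{2}\big( w_0\, e^{i\theta_a} + w_2\, e^{-i\theta_a} \big) \Big],\\
\theta_{\text{pref}} &= \arg \hat{R}_1 = \operatorname{atan2}\!\Big( \tfrac{\alpha}{2}(w_0 - w_2)\sin\theta_a,\; w_1 + \tfrac{\alpha}{2}(w_0 + w_2)\cos\theta_a \Big),
\end{aligned}
$$

with $w_n = \int_0^{2\pi} w(\varphi)\cos(n\varphi)\, d\varphi$ and $\hat f_1 = \int_0^{2\pi} f(u)\cos u\, du > 0$. When the two sample directions lie $90^\circ$ on either side of the native preference ($\theta_a = \pm 90^\circ$), the difference between the preferred directions on the two trial types is

$$
\Delta\theta = 2\arctan\frac{\alpha\,(w_0 - w_2)}{2\,w_1}.
$$

For uniform weights, $w_1 = w_2 = 0$ and the preferred direction simply follows the attended direction ($\Delta\theta \to 180^\circ$); as the weights sharpen, $w_0$, $w_1$ and $w_2$ approach one another and $\Delta\theta \to 0$, in line with the prediction illustrated in Figure 7B.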

The model also predicts that the amplitude of the tuning shift depends on the distance between each neuron’s native preferred direction and the direction of the task-relevant sample stimulus (Figure 7C). L2 neurons whose native preferred directions are similar to the attended direction show gain modulations (but not tuning shifts) similar to those in upstream visual areas. We could not test this specific prediction given the limited size of our neuronal pool; it will be a focus of future work.
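The distance dependence in Figure 7C can be explored with a shortcut: when the L1 tuning kernel is symmetric, the vector-sum preferred direction of a linearly integrating L2 unit equals (up to sampling effects) the direction of the vector sum of its gain-modulated input weights. The sketch below uses that shortcut under illustrative assumptions (360 input units, von Mises-shaped weights with concentration `weight_kappa` around the native preference, and a cosine feature-similarity gain with amplitude `alpha`); the mapping from `weight_kappa` to the 180° weight width used in the figure is not specified and is not attempted here.

```python
import math


def effective_preferred_direction(native_pref, attended, weight_kappa=0.5, alpha=0.2, n_units=360):
    """Direction (deg, 0-360) of the vector sum of gain-modulated input weights."""
    x = y = 0.0
    for j in range(n_units):
        phi = 360.0 * j / n_units
        weight = math.exp(weight_kappa * (math.cos(math.radians(phi - native_pref)) - 1.0))
        gain = 1.0 + alpha * math.cos(math.radians(phi - attended))
        x += weight * gain * math.cos(math.radians(phi))
        y += weight * gain * math.sin(math.radians(phi))
    return math.degrees(math.atan2(y, x)) % 360.0


def wrap(angle_deg):
    """Wrap an angle to [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def shift_toward_attended(native_pref, attended, **model_params):
    """Signed shift (deg) of the preferred direction; positive = toward the attended direction."""
    moved = wrap(effective_preferred_direction(native_pref, attended, **model_params) - native_pref)
    return moved if wrap(attended - native_pref) >= 0.0 else -moved


for distance in range(0, 181, 30):
    print(distance, round(shift_toward_attended(0.0, float(distance)), 1))
```

In this sketch the shift is zero when the attended direction equals the native preference or lies opposite to it, and is largest at intermediate distances.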

Discussion

We examined the hypothesis that feature-based attention selectively gates information about task-relevant visual features between LIP and upstream visual areas. Previous work showed that color and motion are primarily processed in distinct visual areas, which raises the question of how information about multiple visual features is read out by downstream areas. We recorded LIP activity during a DCM task that manipulated the task relevance of color and direction features. This revealed substantial color and direction selectivity during the DCM task. Furthermore, we observed significant shifts of color and direction tuning depending on which color and direction were relevant for solving the task. A majority of neurons showed shifts toward the attended direction or color, and the amplitude of direction tuning shifts depended on neurons’ “native” direction selectivity (assessed during passive viewing): neurons that were natively direction tuned showed smaller shifts than neurons that were not natively tuned. This is consistent with a model in which LIP neurons integrate the activity of pools of upstream neurons (e.g. MT and V4) that are known to show response-gain changes as a result of feature-based attention. Together, this suggests that feature-based attention gates inputs from upstream visual areas to LIP, resulting in integrative representations of task-relevant visual features.

Although previous theoretical work has focused on the potential utility of such tuning shifts (Carrasco et al., 2004; Compte and Wang, 2006), this study is, to our knowledge, the first demonstration of shifts of direction and color tuning. Previous studies of feature-based attention in the motion processing system showed that attending to a particular direction increases the response gain of MT neurons tuned to the attended direction (Martinez-Trujillo and Treue, 2004; Treue and Martínez Trujillo, 1999). Similar gain modulations of form (Bichot et al., 2005), color (Bichot et al., 2005; Motter, 1994) or orientation (McAdams and Maunsell, 1999) tuning have also been shown in area V4 as a result of feature-based attention. One previous study showed that visual representations in V4 during natural image viewing can shift as a function of feature-based attention (David et al., 2008). In that study, monkeys had to detect target images during either delayed matching or free-viewing search tasks. The authors showed that the joint selectivity of V4 neurons for orientation and spatial frequency shifted toward the spectral properties of the target. This raises the possibility that visual areas upstream of LIP may also show attention-related tuning shifts. Moreover, earlier work showed that feature selectivity can emerge in visual cortex when those features are task relevant. For example, direction selectivity has been described in V4 during a delayed matching task (Ferrera et al., 1994), and color selectivity has been described in MT during visual search (Buracas and Albright, 2009). Although the DCM task engages feature-based attention, the monkeys’ high accuracy, together with the lack of invalid trials (in which attention is cued to task-irrelevant features), precludes a direct examination of the relationship between neuronal tuning shifts and behavior.

A previous study reported that LIP neurons show color selectivity when the stimulus color cued monkeys about the direction of a saccade (Toth and Assad, 2002). The color selectivity we describe differs from that study in at least two important ways. First, the previous study reported that LIP neurons differentiated between pairs of complementary colors, but did not report shifts in color tuning or examine fine color discrimination. We show that LIP color selectivity exhibits trial-to-trial shifts in tuning toward the task-relevant color in monkeys making fine color discriminations. Second, color selectivity in the previous study appeared to emerge with a relatively long latency following stimulus onset, during the late-stimulus and pre-saccadic delay periods. During this period of the task, monkeys had to use non-spatial information (cue color) to plan a spatially targeted motor response. In contrast, we found comparatively short-latency, task-dependent color selectivity that was independent of planned actions.

We present a model which can account for the observed effects of task demands on color and direction tuning in LIP. The model may also relate to visual feature selectivity reported in previous studies in LIP (Fanini and Assad, 2009; Sereno and Maunsell, 1998; Toth and Assad, 2002). A model prediction is that LIP can exhibit selectivity for any visual feature encoded by neurons located one synapse upstream from LIP (e.g. MT or V4), which are assumed to show multiplicative gain changes as a result of feature-based attention.

LIP has been extensively studied in the context of visual-spatial processing (Bisley and Goldberg, 2003, 2010; Goldberg et al., 1990; Gottlieb et al., 1998; Herrington and Assad, 2009; Ibos et al., 2013; Ipata et al., 2009). However, mounting evidence suggests that LIP is also involved in a number of non-spatial and cognitive functions. For example, LIP activity can reflect the learned category membership of visual stimuli in monkeys performing a visual categorization task (Freedman and Assad, 2006; Swaminathan and Freedman, 2012). Furthermore, non-spatial representations are encoded independently from, and multiplexed with, spatial signals related to saccadic eye movements (Rishel et al., 2013). Likewise, encoding of stimulus salience can be integrated with effector signals (Oristaglio et al., 2006). The current study gives additional insight into the flexibility of non-spatial representations in LIP, and shows that LIP feature selectivity can vary rapidly from trial to trial according to changes in task demands (in contrast to the long training durations in the studies mentioned above). In the DCM task, monkeys were extensively trained with the two sample stimuli used during LIP recordings, raising the possibility that the patterns of selectivity and tuning shifts in LIP might vary according to the set of stimuli used during training, a question that should be examined in future work.

Finally, LIP has been described as a priority map, in which the behavioral valences of stimuli are spatially mapped and used to guide behavior (Bisley and Goldberg, 2010; Ipata et al., 2009). At the population level, tuning shifts, both toward and away from the attended feature values, could serve to enhance the representation of stimuli containing those features and, as a consequence, enhance their priority. Consistent with this hypothesis, LIP neurons responded strongly to match stimuli which required a behavioral response. Similar match/non-match selectivity has been observed in a number of areas including ITC (Miller et al., 1991, 1993) and PFC (Miller et al., 1996). However, the DCM task was not designed to precisely characterize match/non-match selectivity, which could reflect a mixture of perceptual, cognitive and premotor processes.

Recent studies have raised the possibility that the PFC could play a leading role in the voluntary control of both spatial (Armstrong et al., 2009; Ibos et al., 2013; Schafer and Moore, 2011) and feature-based (Zhou and Desimone, 2011) attention, as it exerts top-down control on both visual and parietal cortices (Ekstrom et al., 2008; Gregoriou et al., 2009; Noudoost et al., 2010). Moreover, PFC also encodes task-dependent representations of cognitive variables. For example, changes in task context or rules significantly modulate neuronal selectivity in PFC (Bichot et al., 1996; Miller and Cohen, 2001; Wallis et al., 2001). Importantly, we posit that the dynamic feature encoding we observed in LIP reflects the bottom-up integration of activity in upstream visual areas, in which attentional modulations could be driven primarily by PFC. Therefore, our data support the hypothesis that the PFC directs the flow of task-relevant information from early sensory areas to LIP. Key issues for future work will be to directly test this hypothesis and to better understand the brain-wide neuronal circuitry underlying this process.

Experimental procedures

Behavioral task and stimulus display

Two male monkeys (Macaca mulatta, ~10–11 kg) were seated in primate chairs, head restrained, and faced a 21-inch color CRT monitor on which stimuli were presented (1280 × 1024 resolution, 85 Hz, 57 cm viewing distance). Monkeys maintained fixation within a 2°-radius window during each trial. Stimuli were 6°-diameter circular patches of 476 colored random dots moving at 10°/s with 100% coherence. Sample and test stimuli were presented for 550 ms. Stimulus colors were generated in the CIE 1976 L*a*b* (LAB) color space, and all colors were measured to be isoluminant under experimental conditions using a luminance meter (Minolta).

For a subset of recording sessions, we tested neuronal color and direction selectivity after the DCM task during a passive viewing task (PV). Monkeys were rewarded for maintaining fixation and holding the bar while stimuli (550 ms each) were shown sequentially in the receptive field (RF) of the neuron being recorded. Both the sequence of stimuli and the conjunctions of color and direction differed between the two monkeys. For monkey N, each trial consisted of a succession of 4 stimuli randomly picked from the set of 64 stimuli. For monkey M, each trial consisted of a succession of 8 stimuli. In half of the trials (direction trials), the stimuli varied in direction from direction 1 to direction 8 and all had the same color (color 3 in Figure 1). In the other half of the trials (color trials), the stimuli varied in color from color 1 to color 8 and all moved in the same direction (direction 4 in Figure 1).

The fixed sequence of stimuli used for monkey M could bias selectivity toward either direction/color 1 (via stronger responses to the first stimulus) or direction/color 8 (as reward expectation increased toward the end of each trial). This could result in co-varying responses to colors and directions. We tested 56 of monkey M’s 66 neurons during PV. More than half of them (N=37/56) were exclusively direction selective (N=17), exclusively color selective (N=6), or both color and direction selective (N=14) during PV (ANOVA, P<0.05). Activity during color and direction trials was correlated for 11 of these 37 neurons (P<0.05); these neurons were excluded from further PV analysis.

Gaze position was measured with an optical eye tracker (SR Research) at a 1.0 kHz sampling rate and stored for offline analysis. Task events, stimulus presentation, behavioral signals, and rewards were controlled and measured by Monkeylogic software (Asaad and Eskandar, 2008a, 2008b; Asaad et al., 2013) (www.monkeylogic.net) running in MATLAB on a Windows-based PC.

Electrophysiological recordings

Monkeys were implanted with a headpost and recording chamber under aseptic surgical conditions. Both the stereotaxic coordinates and the angle of the chambers were determined from 3D anatomical images obtained by magnetic resonance imaging prior to surgery. The recording chambers were positioned over the left intraparietal sulcus. All procedures were in accordance with the University of Chicago’s Animal Care and Use Committee and US National Institutes of Health guidelines.

During each experimental session, a single 75 µm tungsten microelectrode (FHC) was lowered into the cortex using a motorized microdrive (NAN Instruments) and a dura-piercing guide tube. Neurophysiological signals were amplified, digitized and stored for offline spike sorting (Plexon) and analysis.

RF mapping

Neurons’ RFs were tested prior to running the DCM task, using a memory-saccade (MS) task (Gnadt and Andersen, 1988). Neurons were considered to be in LIP if they showed spatially selective visual or delay-period responses, spatially selective presaccadic responses, or were located between such neurons in an electrode penetration. Although the color and direction selectivity of some LIP neurons was assessed outside the context of the DCM task, color and direction selectivity were not used as selection criteria for choosing which neurons to record. DCM and PV task stimuli were always presented inside the neurons’ RFs.

Data analysis

Behavioral and neuronal results were similar in both monkeys. Thus, we merged their data for population analysis. All analyses were conducted using only correct trials (except for Figure S2).

Normalization

Except where indicated otherwise, population analysis utilized normalized firing rates for each neuron. Each neuron’s maximum firing rate was determined by applying a moving average (window width = 100 ms, step size = 1 ms) across the sample, delay, and test periods, separately to the average activity for each of the 64 unique sample stimuli. Each neuron’s normalized firing rate was computed by dividing its responses by this maximum response. Similar results were obtained for all analyses when using raw, rather than normalized, firing rates.
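A minimal Python sketch of this normalization, assuming each neuron's condition-averaged firing rates are held in a NumPy array of shape (64 sample stimuli, time) at 1 ms resolution and already restricted to the sample, delay, and test periods. The function names are hypothetical, and dividing the unsmoothed rates by the peak of the smoothed rates is one reading of the procedure rather than the authors' code.

```python
import numpy as np

def moving_average(rates, width_ms=100):
    """Boxcar average along the last (time) axis in 1 ms steps.

    Only windows lying fully inside the trace are kept (mode='valid')."""
    kernel = np.ones(width_ms) / width_ms
    return np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), -1, rates)

def normalize_neuron(cond_means, width_ms=100):
    """Divide one neuron's condition-averaged rates by its peak smoothed rate.

    cond_means: array (n_conditions, n_ms), e.g. 64 sample stimuli x time bins,
    assumed to be already restricted to the sample, delay and test periods."""
    peak = moving_average(cond_means, width_ms).max()  # one maximum over all stimuli and times
    return cond_means / peak
```

Taking a single maximum over all 64 stimuli keeps the relative differences between stimuli intact while placing every neuron on a common 0 to 1 scale.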

Sample selectivity

Firing rates on sample A and B trials were computed in two time windows: the sample and delay periods. Both windows were 450 ms long and began 50 ms after sample onset (sample period) or after sample offset (delay period). Sample-selective neurons were those that showed a significantly different response between sample A and B trials during either the sample or the delay period (t-test, P<0.01). The time course of this selectivity for each neuron (Figure 6A) was computed using sliding receiver operating characteristic (ROC) comparisons (Green and Swets, 1966). Firing rates were computed in 100 ms moving-average windows (1 ms step size) from the beginning of the fixation period to the end of the first test stimulus and compared using ROC analysis. Latencies were computed for all sample-selective neurons and defined as the first time bin at which the ROC comparison was significant (Wilcoxon rank-sum test, P<0.01) for 100 consecutive 1 ms time steps. Each neuron’s latency is depicted by a black vertical line in Figure 6A.
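The sliding ROC comparison and the latency criterion can be sketched as follows. This assumes 1 ms binned single-trial rates for sample A and sample B trials, computes the ROC area directly as P(A > B) + 0.5·P(A = B), and uses SciPy's `mannwhitneyu` (the Wilcoxon rank-sum test) for significance. Indexing each window by its first bin is an assumption, and the helper names are hypothetical.

```python
import numpy as np
from scipy.stats import mannwhitneyu

def roc_auc(x, y):
    """ROC area: P(x > y) + 0.5 * P(x == y), over all trial pairs."""
    x = np.asarray(x, float)[:, None]
    y = np.asarray(y, float)[None, :]
    return float((x > y).mean() + 0.5 * (x == y).mean())

def sliding_roc(rates_a, rates_b, width_ms=100, alpha=0.01):
    """rates_a, rates_b: arrays (n_trials, n_ms) of 1 ms binned rates on sample-A
    and sample-B trials. Returns per-window AUC and significance (rank-sum test);
    window t covers bins t .. t + width_ms - 1."""
    n_win = rates_a.shape[1] - width_ms + 1
    auc = np.empty(n_win)
    sig = np.zeros(n_win, dtype=bool)
    for t in range(n_win):
        fa = rates_a[:, t:t + width_ms].mean(axis=1)  # mean rate per trial in this window
        fb = rates_b[:, t:t + width_ms].mean(axis=1)
        auc[t] = roc_auc(fa, fb)
        pooled = np.concatenate([fa, fb])
        if pooled.max() > pooled.min():  # the rank-sum test is undefined if all values tie
            sig[t] = mannwhitneyu(fa, fb, alternative="two-sided").pvalue < alpha
    return auc, sig

def latency(sig, min_run=100):
    """First window that starts a run of >= min_run consecutive significant
    windows (100 x 1 ms steps in the text); None if there is no such run."""
    run = 0
    for i, s in enumerate(sig):
        run = run + 1 if s else 0
        if run == min_run:
            return i - min_run + 1
    return None
```

Requiring 100 consecutive significant steps guards against isolated chance crossings of the P<0.01 threshold being taken as the onset of selectivity.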

Color and direction selectivity

Because monkeys report the presence of matching test stimuli with an arm/hand movement, that response could influence the firing rate of LIP neurons. In order to eliminate the impact of motor-related responses on the results of test-period analyses, we only included non-matching test stimuli in these analyses. Test period selectivity was examined for each neuron using a fixed time window between 100 and 400 ms following test onset. Selectivity was evaluated with a 3-way ANOVA (P<0.05), with sample identity (A or B), test color, and test direction as factors.

Direction tuning

Neuronal direction tuning was quantified by computing a direction vector for each neuron separately on sample A and B trials. Direction vectors were defined by the following equation:

$$X = \sum_{i=1}^{8} FR(i)\cos\theta_i, \qquad Y = \sum_{i=1}^{8} FR(i)\sin\theta_i$$

where FR(i) is the firing rate of the neuron to the ith direction, θ_i is the ith direction, and [0 X] and [0 Y] are the Cartesian coordinates of the vector. The significance of the angular distances between direction vectors on sample A and B trials was computed in a time window of 100–400 ms after test onset (Figure S1). From each pool of trials corresponding to each direction, we sampled n_i trials with replacement for the ith direction. For example, for a neuron with n1 trials for direction 1, the resampling procedure consisted of picking one trial at random from the pool of direction-1 trials only (trials of one direction were never shuffled with trials of other directions), returning it to the pool of direction-1 trials, and repeating this until n1 trials had been picked for that direction. We proceeded similarly for each direction within its own pool of trials (n2 trials for direction 2, n3 trials for direction 3… n8 trials for direction 8) and computed the preferred directions on sample A and sample B trials (Pref-dirA and Pref-dirB) from those resampled trials. Pref-dirA and Pref-dirB were then compared to the direction of sample A (270°), yielding the angular distances DirAtoPref-dirA and DirAtoPref-dirB. This procedure was repeated 1000 times to generate 1000 values each of DirAtoPref-dirA and DirAtoPref-dirB. We then computed all pairwise differences between the 1000 DirAtoPref-dirA and the 1000 DirAtoPref-dirB values (1,000,000 differences, DirAtoPref-dirA − DirAtoPref-dirB). A negative difference means that Pref-dirA is closer to the direction of sample A than Pref-dirB. If at least 97.5% (P≤0.05) of these differences were negative, the preferred direction of the neuron was considered to be significantly shifted toward the attended direction. Conversely, if at least 97.5% of these differences were positive, the preferred direction of the neuron was considered to be significantly shifted away from the attended direction.
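A sketch of the direction-vector computation and the within-direction bootstrap test described above. It assumes eight directions spaced 45° apart with the first at 0°, sample A at 270°, and single-trial firing rates already averaged over the 100–400 ms test window; the function names are hypothetical, and this illustrates the procedure rather than reproducing the authors' analysis code.

```python
import numpy as np

DIRS_DEG = np.arange(8) * 45.0  # assumption: direction i+1 lies at i*45 degrees
SAMPLE_A_DEG = 270.0            # direction of sample A (from the text)

def preferred_direction(mean_rates, dirs_deg=DIRS_DEG):
    """Angle (deg, 0-360) of the vector [X, Y] = sum_i FR(i) * [cos, sin](theta_i)."""
    th = np.deg2rad(dirs_deg)
    x = np.sum(mean_rates * np.cos(th))
    y = np.sum(mean_rates * np.sin(th))
    return np.rad2deg(np.arctan2(y, x)) % 360.0

def angular_distance(a_deg, b_deg):
    """Unsigned circular distance in degrees (0-180)."""
    d = np.abs(a_deg - b_deg) % 360.0
    return np.minimum(d, 360.0 - d)

def bootstrap_shift_test(trials_a, trials_b, n_boot=1000, seed=0):
    """trials_a / trials_b: lists of 8 arrays; element i holds single-trial rates
    to direction i on sample-A / sample-B trials. Trials are resampled with
    replacement only within their own direction. Returns 'toward', 'away' or
    'none' relative to the direction of sample A."""
    rng = np.random.default_rng(seed)

    def boot_distances(trials):
        out = np.empty(n_boot)
        for k in range(n_boot):
            means = np.array([rng.choice(t, size=len(t), replace=True).mean()
                              for t in trials])
            out[k] = angular_distance(preferred_direction(means), SAMPLE_A_DEG)
        return out

    dist_a = boot_distances(trials_a)              # DirAtoPref-dirA values
    dist_b = boot_distances(trials_b)              # DirAtoPref-dirB values
    diffs = dist_a[:, None] - dist_b[None, :]      # all n_boot**2 pairwise differences
    if np.mean(diffs < 0) >= 0.975:
        return "toward"
    if np.mean(diffs > 0) >= 0.975:
        return "away"
    return "none"
```

Resampling only within each direction preserves the tuning curve's shape while estimating how much the vector angle varies from trial sampling alone.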

Conventional statistics such as the t-test or the Wilcoxon test are not suited to circular data. Thus, the equality of circular means was tested using the parametric Watson-Williams test.

Sliding window permutation test

Inspection of individual neurons revealed variability across neurons in the time at which tuning-shift effects appeared during the early test period. To take this variability into account, we ran the same permutation test (described above) using a 100 ms window (step size = 5 ms), with the start of the window sliding between test onset and the reaction time (RT) for that session. Similar effects were observed with a range of window widths, step sizes, and starting and ending points. A set of trials for each of the 8 directions was randomly picked and used to compute the time course of Pref-dirA and Pref-dirB. This procedure was repeated 1000 times, and differences in preferred direction were considered significant if at least 97.5% of the differences between DirAtoPref-dirA and DirAtoPref-dirB were negative (shifted toward) or positive (shifted away) for at least 10 consecutive time bins. The direction vectors presented in Figure 3 were computed over a 100 ms window following the first significant time bin.
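The sliding-window criterion (a shift counts only if the 97.5% threshold is met for at least 10 consecutive windows) can be sketched as below. It assumes the pairwise bootstrap differences DirAtoPref-dirA − DirAtoPref-dirB have already been computed for each 100 ms window (5 ms steps). The text does not say how a neuron meeting both criteria at different times is labeled, so reporting the earlier run is an assumption, as are the helper names.

```python
import numpy as np

def first_run_start(mask, min_run):
    """Index at which the first run of >= min_run consecutive True values starts."""
    run = 0
    for i, m in enumerate(mask):
        run = run + 1 if m else 0
        if run == min_run:
            return i - min_run + 1
    return None

def classify_sliding_shift(diffs_per_window, min_run=10, crit=0.975):
    """diffs_per_window: sequence of 1-D arrays, one per sliding window, holding
    the bootstrap differences DirAtoPref-dirA - DirAtoPref-dirB (or slopeA - slopeB).
    Returns (label, index of the first window of the qualifying run)."""
    frac_neg = np.array([np.mean(np.asarray(d) < 0) for d in diffs_per_window])
    frac_pos = np.array([np.mean(np.asarray(d) > 0) for d in diffs_per_window])
    toward = first_run_start(frac_neg >= crit, min_run)
    away = first_run_start(frac_pos >= crit, min_run)
    # Assumption: if both criteria are met somewhere, report the earlier run.
    hits = [(i, label) for i, label in ((toward, "toward"), (away, "away")) if i is not None]
    if not hits:
        return "none", None
    start, label = min(hits)
    return label, start
```

Because the same function accepts slopeA − slopeB differences, it applies unchanged to the color analysis.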

Color Tuning

Color tuning shifts were characterized with a method similar to that used for direction. However, the color space we used was linear rather than circular, which precluded a vector-based measure of color tuning. Instead, we fitted a linear regression to each neuron’s responses to the 8 colors, because most color-selective neurons appeared to show monotonically increasing or decreasing activity across the tested color space (e.g. Figure 2). Color A (yellow) was arbitrarily defined as color 1 and color B (red) as color 8, so that negative and positive slopes reflected neuronal tuning to colors A and B, respectively. Color tuning shifts as a function of sample identity were examined using the same permutation tests as for direction selectivity. However, instead of comparing DirAtoPref-dirA and DirAtoPref-dirB, we compared the slopes of the linear fits on A trials (slopeA) and B trials (slopeB) to assess the magnitude of the tuning shifts. A negative difference (slopeA − slopeB) indicates that slopeB is greater than slopeA. If at least 97.5% (P≤0.05) of the differences between slopeA and slopeB were negative, the color tuning was considered significantly shifted toward the attended color. Conversely, if at least 97.5% (P≤0.05) of these differences were positive, the color tuning of the neuron was considered significantly shifted away from the attended color. The same sliding-window procedure described for the direction shift analysis was also applied to color tuning.
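For color, the preferred-direction vector is replaced by the slope of a least-squares line across colors 1 to 8. A hedged sketch, assuming single-trial rates per color averaged over the 100–400 ms test window and the same within-condition resampling as for direction; `np.polyfit` supplies the linear fit, and the helper names are hypothetical.

```python
import numpy as np

COLORS = np.arange(1, 9)  # color 1 = color A (yellow), ..., color 8 = color B (red)

def color_slope(mean_rates):
    """Slope of a least-squares line through the mean responses to colors 1-8;
    negative slopes favor color A and positive slopes favor color B."""
    slope, _intercept = np.polyfit(COLORS, mean_rates, 1)
    return slope

def bootstrap_color_shift(trials_a, trials_b, n_boot=1000, seed=0):
    """trials_a / trials_b: lists of 8 arrays of single-trial rates (one per color)
    on sample-A / sample-B trials. Returns 'toward', 'away' or 'none'."""
    rng = np.random.default_rng(seed)

    def boot_slopes(trials):
        # Resample with replacement within each color, then refit the line.
        return np.array([
            color_slope(np.array([rng.choice(t, size=len(t)).mean() for t in trials]))
            for _ in range(n_boot)
        ])

    diffs = boot_slopes(trials_a)[:, None] - boot_slopes(trials_b)[None, :]  # slopeA - slopeB
    if np.mean(diffs < 0) >= 0.975:
        return "toward"
    if np.mean(diffs > 0) >= 0.975:
        return "away"
    return "none"
```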

Distance to the sample

We analyzed neuronal sensitivity to the visual similarity or “spectral distance” between all possible combinations of sample and test stimuli. A distance of 0 corresponds to identical (i.e. “match”) sample and test stimuli. Each step away from the color or the direction of the sample adds 1.0 to the spectral distance. The maximal spectral distance between sample and test stimuli is 11 (7 color steps + 4 direction steps). Test period neuronal responses to different spectral distances were computed across the population that showed significant color and direction tuning shifts, and were separately examined for each sample stimulus. Moreover, neurons were segregated based on their preferred direction during the test period: one population had preferred directions closer to sample A, and the other population had preferred directions closer to sample B. The average preferred direction of each neuron was computed by summing the direction vectors Pref-dirA and Pref-dirB.
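The spectral distance is simple arithmetic: color steps are counted along the linear color scale (at most 7) and direction steps around the circle of eight directions (at most 4). A minimal sketch, assuming stimuli are indexed 1 to 8 along each feature and the directions are evenly spaced; the function name is hypothetical.

```python
def spectral_distance(sample_color, sample_dir, test_color, test_dir, n_dirs=8):
    """Number of color steps plus number of direction steps between two stimuli.

    Colors (1-8) lie on a linear scale, so up to 7 steps; directions (1-8) lie on
    a circle, so the shortest path is at most n_dirs // 2 = 4 steps.
    """
    color_steps = abs(test_color - sample_color)
    d = abs(test_dir - sample_dir) % n_dirs
    direction_steps = min(d, n_dirs - d)
    return color_steps + direction_steps

# Largest possible distance over all sample/test combinations of the 8 x 8 set.
MAX_DISTANCE = max(
    spectral_distance(sc, sd, tc, td)
    for sc in range(1, 9) for sd in range(1, 9)
    for tc in range(1, 9) for td in range(1, 9)
)
```

For example, a test stimulus three colors and two directions away from the sample has a spectral distance of 5, and directions 8 and 1 count as neighbors.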

Two-layer integration model

The integration model we propose consists of two neuronal layers. Each of 1000 second-layer (L2) neurons received weighted inputs from 8 neurons of the first layer (L1). L1 neurons were feature tuned according to equation (1): each L1 neuron’s direction tuning was a Gaussian centered on its preferred direction, with an amplitude modulated by task demands.

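$$\mathrm{Tun1}(i)(\theta) = \mathrm{attgain}(\mu_i)\,\exp\!\left(-\frac{(\theta-\mu_i)^2}{2\sigma^2}\right) \tag{1}$$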

Tun1(i) is the tuning curve of the ith neuron of L1. In our example, Tun1 are Gaussian distributions centered at µ (0, 45, 90, 135, 180, 225, 270 and 315 degrees) with a 50° standard deviation (σ) (different values of σ give similar results). attgain is the gain applied to the activity of an L1 neuron when monkeys attended to one specific direction. During passive viewing, attgain was equal to 1 for all L1 neurons. When one direction was relevant, attgain was a 360°-wide Gaussian distribution centered on the relevant direction (in our example, direction A (270°) or direction B (90°)), with a standard deviation of 45°. Similar results were obtained with different values of the standard deviations of Tun1 and attgain. For each of the 1000 iterations, gain modulation amplitudes were randomly assigned. The lower bound of the distribution (which modulates neurons tuned to the unattended direction) was randomly picked between 0.85 and 1, while the upper bound (which modulates neurons tuned to the attended direction) was randomly picked between 0.95 and 1.25. If gain modulation values for the attended feature were set lower than those for the unattended feature, the model produced shifts away from the attended feature value in L2.

Feature tuning of L2 neurons depended on the linear integration of L1 inputs and followed equation (2):

$$\mathrm{Tun2}(\theta) = \sum_{j=1}^{8} W(j)\,\mathrm{Tun1}(j)(\theta) + \mathrm{const} \tag{2}$$

Tun2 is the direction tuning of an L2 neuron after linear integration of the weighted inputs from the 8 neurons of L1. W(j) is the synaptic weight of the connection between the jth neuron of L1 and the L2 neuron; const is a constant term, arbitrarily set to 10.0 spikes/s, which corresponds to other inputs that L2 neurons also integrate, such as purely non-selective visual responses, stimulus saliency, spatial attention, motivation, or other cognitive factors.

For each of the 1000 L2 neurons, attgain was centered on either 270° or 90°. The standard deviation of the distribution of synaptic weights (W) was randomly assigned between 1° and 360°. This modulated the sharpness of the tuning of the L2 neurons: a sharp distribution of synaptic weights resulted in a sharply direction-tuned L2 neuron, whereas a broad distribution resulted in an un-tuned L2 neuron. We also randomly defined the center of the distribution of connection weights (between 1° and 360°), which set the preferred direction of the L2 neuron.
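A compact simulation of the two-layer model, written as a sketch under stated assumptions: circular distances are used inside the Gaussians, the L2 synaptic weights W(j) are a Gaussian over the L1 preferred directions (its center sets the L2 preferred direction, its width the L2 tuning sharpness), tuning is probed at the eight L1 directions, and the gain bounds are passed in rather than drawn at random. All names are hypothetical.

```python
import numpy as np

MU = np.arange(8) * 45.0     # preferred directions of the 8 L1 neurons (deg)
THETA = np.arange(8) * 45.0  # stimulus directions used to probe tuning (assumption)

def circ_diff(a, b):
    """Unsigned circular distance in degrees (0-180)."""
    d = np.abs(np.asarray(a, float) - b) % 360.0
    return np.minimum(d, 360.0 - d)

def gaussian(x_deg, center_deg, sd_deg):
    """Unnormalized Gaussian of the circular distance between x and center."""
    return np.exp(-circ_diff(x_deg, center_deg) ** 2 / (2.0 * sd_deg ** 2))

def attgain(attended_deg, low, high, sd=45.0):
    """Gain of each L1 neuron: `high` for the neuron tuned to the attended
    direction, decaying toward `low` for neurons tuned away from it."""
    if attended_deg is None:                       # passive viewing: gain of 1
        return np.ones_like(MU)
    return low + (high - low) * gaussian(MU, attended_deg, sd)

def l2_tuning(w_center, w_sd, attended_deg=None, low=1.0, high=1.0,
              sigma=50.0, const=10.0):
    """Tun2(theta) = sum_j W(j) * Tun1(j)(theta) + const, where Tun1 already
    includes the attentional gain of L1 neuron j."""
    tun1 = gaussian(THETA[None, :], MU[:, None], sigma)         # rows: L1 neurons
    tun1 = tun1 * attgain(attended_deg, low, high)[:, None]
    w = gaussian(MU, w_center, w_sd)                             # weights W(j)
    return w @ tun1 + const

def preferred_direction(tuning):
    """Direction (deg) of the vector sum of responses across THETA."""
    th = np.deg2rad(THETA)
    return np.rad2deg(np.arctan2(np.sum(tuning * np.sin(th)),
                                 np.sum(tuning * np.cos(th)))) % 360.0
```

Comparing `preferred_direction(l2_tuning(...))` with attention at 270° versus 90° for a broad and a sharp weight distribution illustrates the model's qualitative prediction: broadly connected (nearly un-tuned) L2 units shift substantially toward the attended direction, whereas sharply connected units barely shift.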

Supplementary Material

Highlights.

LIP neurons encode both color and direction when those features are task relevant

LIP shows task-dependent shifts in visual color and motion-direction tuning

Encoding in LIP is consistent with integration of inputs from upstream visual areas

Acknowledgements

We thank G. Huang and S. Thomas for animal training and technical assistance, N.Y. Masse for analysis advice, and the University of Chicago Animal Resources Center for expert veterinary assistance. We thank J. Assad, J. Clemens, J. Fitzgerald, N.Y. Masse, and J.H. Maunsell for comments and discussion on earlier versions of this manuscript. This work was supported by NIH R01 EY019041 and NSF CAREER award 0955640, with additional support from a McKnight Scholar award, the Alfred P. Sloan Foundation, The Brain Research Foundation, and the Fyssen Foundation (G.I.).

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Author Contributions

G.I. conceived and designed the experiment, trained the animals, acquired and analyzed the data, and wrote the manuscript. D.J.F. assisted in experiment design, data analysis, and writing the manuscript.

References

- Armstrong KM, Chang MH, Moore T. Selection and maintenance of spatial information by frontal eye field neurons. J. Neurosci. 2009;29:15621–15629. doi: 10.1523/JNEUROSCI.4465-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Asaad WF, Eskandar EN. Achieving behavioral control with millisecond resolution in a high-level programming environment. J. Neurosci. Methods. 2008a;173:235–240. doi: 10.1016/j.jneumeth.2008.06.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Asaad WF, Eskandar EN. A flexible software tool for temporally-precise behavioral control in Matlab. J. Neurosci. Methods. 2008b;174:245–258. doi: 10.1016/j.jneumeth.2008.07.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Asaad WF, Santhanam N, McClellan S, Freedman DJ. High-performance execution of psychophysical tasks with complex visual stimuli in MATLAB. J. Neurophysiol. 2013;109:249–260. doi: 10.1152/jn.00527.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Assad JA. Neural coding of behavioral relevance in parietal cortex. Curr. Opin. Neurobiol. 2003;13:194–197. doi: 10.1016/s0959-4388(03)00045-x. [DOI] [PubMed] [Google Scholar]

- Bichot NP, Schall JD, Thompson KG. Visual feature selectivity in frontal eye fields induced by experience in mature macaques. Nature. 1996;381:697–699. doi: 10.1038/381697a0. [DOI] [PubMed] [Google Scholar]

- Bichot NP, Rossi AF, Desimone R. Parallel and serial neural mechanisms for visual search in macaque area V4. Science. 2005;80-(308):529–534. doi: 10.1126/science.1109676. [DOI] [PubMed] [Google Scholar]

- Bisley JW, Goldberg ME. Neuronal activity in the lateral intraparietal area and spatial attention. Science. 2003;299:81–86. doi: 10.1126/science.1077395. [DOI] [PubMed] [Google Scholar]

- Bisley JW, Goldberg ME. Attention, intention, and priority in the parietal lobe. Annu. Rev. Neurosci. 2010;33:1–21. doi: 10.1146/annurev-neuro-060909-152823. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Born RT, Bradley DC. Structure and function of visual area MT. Annu. Rev. Neurosci. 2005;28:157–189. doi: 10.1146/annurev.neuro.26.041002.131052. [DOI] [PubMed] [Google Scholar]

- Buracas GT, Albright TD. Modulation of neuronal responses during covert search for visual feature conjunctions. Proc. Natl. Acad. Sci. U.S.A. 2009;106:16853–16858. doi: 10.1073/pnas.0908455106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carrasco M, Ling S, Read S. Attention alters appearance. Nat. Neurosci. 2004;7:308–313. doi: 10.1038/nn1194. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cavada C, Goldman-Rakic PS. Posterior parietal cortex in rhesus monkey: I. Parcellation of areas based on distinctive limbic and sensory corticocortical connections. J. Comp. Neurol. 1989;287:393–421. doi: 10.1002/cne.902870402. [DOI] [PubMed] [Google Scholar]

- Compte A, Wang XJ. Tuning curve shift by attention modulation in cortical neurons: a computational study of its mechanisms. Cereb. Cortex. 2006;16:761–778. doi: 10.1093/cercor/bhj021. [DOI] [PubMed] [Google Scholar]

- David SV, Hayden BY, Mazer JA, Gallant JL. Attention to stimulus features shifts spectral tuning of V4 neurons during natural vision. Neuron. 2008;59:509–521. doi: 10.1016/j.neuron.2008.07.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Desimone R, Schein SJ, Moran J, Ungerleider LG. Contour, color and shape analysis beyond the striate cortex. Vision Res. 1985;25:441–452. doi: 10.1016/0042-6989(85)90069-0. [DOI] [PubMed] [Google Scholar]

- Ekstrom LB, Roelfsema PR, Arsenault JT, Bonmassar G, Vanduffel W. Bottom-up dependent gating of frontal signals in early visual cortex. Science. 2008;321:414–417. doi: 10.1126/science.1153276. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fanini A, Assad JA. Direction selectivity of neurons in the macaque lateral intraparietal area. J. Neurophysiol. 2009;101:289–305. doi: 10.1152/jn.00400.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate cerebral cortex. Cereb. Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a. [DOI] [PubMed] [Google Scholar]

- Ferrera VP, Rudolph KK, Maunsell JH. Responses of neurons in the parietal and temporal visual pathways during a motion task. J. Neurosci. 1994;14:6171–6186. doi: 10.1523/JNEUROSCI.14-10-06171.1994. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fitzgerald JK, Freedman DJ, Assad JA. Generalized associative representations in parietal cortex. Nat. Neurosci. 2011;14:1075–1079. doi: 10.1038/nn.2878. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Freedman DJ, Assad JA. Experience-dependent representation of visual categories in parietal cortex. Nature. 2006;443:85–88. doi: 10.1038/nature05078. [DOI] [PubMed] [Google Scholar]

- Funahashi S, Bruce CJ, Goldman-Rakic PS. Mnemonic coding of visual space in the monkey’s dorsolateral prefrontal cortex. J. Neurophysiol. 1989;61:331–349. doi: 10.1152/jn.1989.61.2.331. [DOI] [PubMed] [Google Scholar]

- Gnadt JW, Andersen RA. Memory related motor planning activity in posterior parietal cortex of macaque. Exp. Brain Res. 1988;70:216–220. doi: 10.1007/BF00271862. [DOI] [PubMed] [Google Scholar]

- Goldberg ME, Colby CL, Duhamel JR. Representation of visuomotor space in the parietal lobe of the monkey. Cold Spring Harb. Symp. Quant. Biol. 1990;55:729–739. doi: 10.1101/sqb.1990.055.01.068. [DOI] [PubMed] [Google Scholar]

- Gottlieb JP, Kusunoki M, Goldberg ME. The representation of visual salience in monkey parietal cortex. Nature. 1998;391:481–484. doi: 10.1038/35135. [DOI] [PubMed] [Google Scholar]

- Green DM, Swets JA. Signal Detection Theory and Psychophysics. John Wiley and Sons; 1966. [Google Scholar]

- Gregoriou GG, Gotts SJ, Zhou H, Desimone R. High-frequency, long-range coupling between prefrontal and visual cortex during attention. Science. 2009;324:1207–1210. doi: 10.1126/science.1171402. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Herrington TM, Assad JA. Neural activity in the middle temporal area and lateral intraparietal area during endogenously cued shifts of attention. J. Neurosci. 2009;29:14160–14176. doi: 10.1523/JNEUROSCI.1916-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ibos G, Duhamel J-R, Ben Hamed S. A Functional Hierarchy within the Parietofrontal Network in Stimulus Selection and Attention Control. J. Neurosci. 2013;33:8359–8369. doi: 10.1523/JNEUROSCI.4058-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ipata AE, Gee AL, Bisley JW, Goldberg ME. Neurons in the lateral intraparietal area create a priority map by the combination of disparate signals. Exp. Brain Res. 2009;192:479–488. doi: 10.1007/s00221-008-1557-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leathers ML, Olson CR. In monkeys making value-based decisions, LIP neurons encode cue salience and not action value. Science. 2012;338:132–135. doi: 10.1126/science.1226405. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lewis JW, Van Essen DC. Corticocortical connections of visual, sensorimotor, and multimodal processing areas in the parietal lobe of the macaque monkey. J. Comp. Neurol. 2000;428:112–137. doi: 10.1002/1096-9861(20001204)428:1<112::aid-cne8>3.0.co;2-9. [DOI] [PubMed] [Google Scholar]

- Martinez-Trujillo JC, Treue S. Feature-based attention increases the selectivity of population responses in primate visual cortex. Curr. Biol. 2004;14:744–751. doi: 10.1016/j.cub.2004.04.028. [DOI] [PubMed] [Google Scholar]

- Martinez-trujillo JC, Treue S. Feature-Based Attention Increases the Selectivity of Population Responses in Primate Visual Cortex. 2004;14:744–751. doi: 10.1016/j.cub.2004.04.028. [DOI] [PubMed] [Google Scholar]

- Maunsell JH, Van Essen DC. Functional properties of neurons in middle temporal visual area of the macaque monkey. I. Selectivity for stimulus direction, speed, and orientation. J. Neurophysiol. 1983;49:1127–1147. doi: 10.1152/jn.1983.49.5.1127. [DOI] [PubMed] [Google Scholar]

- Maunsell JHR, Treue S. Feature-based attention in visual cortex. Trends Neurosci. 2006;29:317–322. doi: 10.1016/j.tins.2006.04.001. [DOI] [PubMed] [Google Scholar]

- McAdams CJ, Maunsell JH. Effects of attention on orientation-tuning functions of single neurons in macaque cortical area V4. J. Neurosci. 1999;19:431–441. doi: 10.1523/JNEUROSCI.19-01-00431.1999.

- McAdams CJ, Maunsell JH. Attention to both space and feature modulates neuronal responses in macaque area V4. J. Neurophysiol. 2000;83:1751–1755. doi: 10.1152/jn.2000.83.3.1751.

- Meister MLR, Hennig JA, Huk AC. Signal multiplexing and single-neuron computations in lateral intraparietal area during decision-making. J. Neurosci. 2013;33:2254–2267. doi: 10.1523/JNEUROSCI.2984-12.2013.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Miller EK, Li L, Desimone R. A neural mechanism for working and recognition memory in inferior temporal cortex. Science. 1991;254:1377–1379. doi: 10.1126/science.1962197.

- Miller EK, Li L, Desimone R. Activity of neurons in anterior inferior temporal cortex during a short-term memory task. J. Neurosci. 1993;13:1460–1478. doi: 10.1523/JNEUROSCI.13-04-01460.1993.

- Miller EK, Erickson CA, Desimone R. Neural mechanisms of visual working memory in prefrontal cortex of the macaque. J. Neurosci. 1996;16:5154–5167. doi: 10.1523/JNEUROSCI.16-16-05154.1996.

- Motter BC. Neural correlates of feature selective memory and pop-out in extrastriate area V4. J. Neurosci. 1994;14:2190–2199. doi: 10.1523/JNEUROSCI.14-04-02190.1994.

- Noudoost B, Chang MH, Steinmetz NA, Moore T. Top-down control of visual attention. Curr. Opin. Neurobiol. 2010;20:183–190. doi: 10.1016/j.conb.2010.02.003.

- Oristaglio J, Schneider DM, Balan PF, Gottlieb J. Integration of visuospatial and effector information during symbolically cued limb movements in monkey lateral intraparietal area. J. Neurosci. 2006;26:8310–8319. doi: 10.1523/JNEUROSCI.1779-06.2006.

- Rishel CA, Huang G, Freedman DJ. Independent category and spatial encoding in parietal cortex. Neuron. 2013;77:969–979. doi: 10.1016/j.neuron.2013.01.007.

- Schafer RJ, Moore T. Selective attention from voluntary control of neurons in prefrontal cortex. Science. 2011;332:1568–1571. doi: 10.1126/science.1199892.

- Sereno AB, Maunsell JH. Shape selectivity in primate lateral intraparietal cortex. Nature. 1998;395:500–503. doi: 10.1038/26752.

- Stoet G, Snyder LH. Single neurons in posterior parietal cortex of monkeys encode cognitive set. Neuron. 2004;42:1003–1012. doi: 10.1016/j.neuron.2004.06.003.

- Swaminathan SK, Freedman DJ. Preferential encoding of visual categories in parietal cortex compared with prefrontal cortex. Nat. Neurosci. 2012;15:315–320. doi: 10.1038/nn.3016.

- Toth LJ, Assad JA. Dynamic coding of behaviourally relevant stimuli in parietal cortex. Nature. 2002;415:165–168. doi: 10.1038/415165a.

- Treisman AM, Gelade G. A feature-integration theory of attention. Cogn. Psychol. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

- Treue S, Martínez Trujillo JC. Feature-based attention influences motion processing gain in macaque visual cortex. Nature. 1999;399:575–579. doi: 10.1038/21176.

- Wallis JD, Anderson KC, Miller EK. Single neurons in prefrontal cortex encode abstract rules. Nature. 2001;411:953–956. doi: 10.1038/35082081.

- Zeki SM. Colour coding in the superior temporal sulcus of the rhesus monkey. J. Physiol. 1976;263:169–170.

- Zhou H, Desimone R. Feature-based attention in the frontal eye field and area V4 during visual search. Neuron. 2011;70:1205–1217. doi: 10.1016/j.neuron.2011.04.032.

Associated Data
