Abstract

Background

Since October 2012, Medicare’s Hospital Readmissions Reduction Program has fined 2,200 hospitals a total of $500 million. While the program penalizes readmission to any hospital, many institutions can track readmissions only to their own hospitals. We sought to determine the extent to which same-hospital readmission rates may be used to estimate all-hospital readmission rates following major surgery.

Study Design

We evaluated 3,940 hospitals treating 741,656 Medicare fee-for-service beneficiaries undergoing coronary artery bypass grafting, hip fracture repair, or colectomy between 2006 and 2008. We used hierarchical logistic regression to calculate risk- and reliability-adjusted rates of 30-day readmission to the same hospital and to any hospital. We next evaluated the correlation between same-hospital and all-hospital rates. To analyze the impact on hospital profiling, we compared rankings based on same-hospital rates to those based on all-hospital rates.

Results

The mean risk- and reliability-adjusted all-hospital readmission rate was 13.2% (SD 1.5%), and the mean same-hospital readmission rate was 8.4% (SD 1.1%). Depending on the operation, between 57% (colectomy) and 63% (coronary artery bypass grafting) of hospitals were reclassified when profiling was based on same-hospital rather than all-hospital readmission rates. Reclassification was particularly pronounced in the middle three quintiles, where 66–73% of hospitals were reclassified.

Conclusions

In evaluating hospital profiling under Medicare’s Hospital Readmissions Reduction Program, same-hospital rates provide unstable estimates of all-hospital readmission rates. To better anticipate penalties, hospitals require novel approaches for accurately tracking the totality of their post-operative readmissions.

Introduction

Readmissions affect nearly one in eight post-operative patients, costing Medicare $28 billion per year.1 In 2012, the Centers for Medicare and Medicaid Services (CMS) aimed to curb unnecessary readmissions through monetary penalties. Under the Hospital Readmissions Reduction Program (HRRP), institutions are fined for greater-than-expected readmissions following select medical and surgical hospitalizations. During the program’s first year, more than 2,200 hospitals received penalties totaling $500 million.2 While most hospitals are able to track readmissions to their own facilities (i.e. the same-hospital readmission rate), few routinely track all of their readmissions to any hospital (i.e. the all-hospital readmission rate). In fact, this inability is a commonly cited limitation of studies examining readmission in both medical and surgical patients.3–5 It poses a significant challenge for hospitals seeking to implement real-time changes aimed at reducing readmissions.

The extent to which readmissions to other institutions affect a hospital’s ability to track its readmissions remains unknown. Currently, institutions must use same-hospital readmission rates to estimate all-hospital readmission rates. Yet some evidence suggests that same-hospital readmissions are an unreliable and biased predictor of all-hospital readmissions. For example, Nasir et al evaluated hospitals profiled on heart failure readmissions and found that same-hospital readmission rates underestimated all-hospital readmission rates by approximately 5% (range 1% to >10%).6 While this study sheds some light on the reliability of same-hospital readmission rates, it examined only a single medical condition, and there are currently no studies evaluating this phenomenon in surgical patients.

In this study, we used data on Medicare beneficiaries undergoing one of three common surgical procedures to address three questions. First, what is the correlation between same-hospital and all-hospital readmission rates? Second, to what extent do hospital rankings change when using all-hospital rates instead of same-hospital rates? Finally, are any hospital characteristics associated with greater underestimation of all-hospital rates?

Methods

Data source, patient population, and patient variables

We obtained patient-level data from two sources: the national Medicare Provider Analysis and Review files and the Medicare denominator file. Using International Classification of Diseases, 9th Revision, Clinical Modification (ICD-9-CM) codes, we created operative cohort datasets for patients undergoing coronary artery bypass grafting (CABG), hip fracture repair, or open colectomy between 2006 and 2008 (Table 1). Hip fracture repair was selected because it is the only surgical procedure currently scrutinized under the HRRP;7 CABG was selected because of its relatively high readmission rate (17%) and because CMS had previously indicated its intention to target cardiovascular procedures;8 and colectomy was selected because it is the most frequently performed major general surgical operation with a significant readmission rate (14%).9

Table 1.

ICD-9-CM Codes Used to Define Operative Cohorts

| Operative Cohort | ICD-9-CM Procedure Code | Description |
|---|---|---|
| Open Colectomy | 45.71 | Open and other multiple segmental resection of large intestine |
| | 45.73 | Open and other right hemicolectomy |
| | 45.74 | Open and other transverse colectomy |
| | 45.75 | Open and other left hemicolectomy |
| | 45.76 | Open and other sigmoidectomy |
| | 45.79 | Other and unspecified partial excision of large intestine |
| | 45.82 | Open total intra-abdominal colectomy |
| | 45.83 | Other and unspecified total intra-abdominal colectomy |
| Coronary Artery Bypass Grafting | 36.10 | Aortocoronary bypass not otherwise specified |
| | 36.11 | Aortocoronary bypass 1-artery |
| | 36.12 | Aortocoronary bypass 2-artery |
| | 36.13 | Aortocoronary bypass 3-artery |
| | 36.14 | Aortocoronary bypass 4-artery |
| | 36.15 | 1 internal mammary coronary artery bypass grafting |
| | 36.16 | 2 internal mammary coronary artery bypass grafting |
| | 36.17 | Abdominal-coronary artery bypass |
| | 36.19 | Other bypass anastomosis for heart revascularization |
| Hip Fracture Repair | 79.00 | Closed reduction of fracture without internal fixation, unspecified site |
| | 79.05 | Closed reduction of fracture without internal fixation, femur |
| | 79.10 | Closed reduction of fracture with internal fixation, unspecified site |
| | 79.15 | Closed reduction of fracture with internal fixation, femur |
| | 79.20 | Open reduction of fracture without internal fixation, unspecified site |
| | 79.25 | Open reduction of fracture without internal fixation, femur |
| | 79.30 | Open reduction of fracture with internal fixation, unspecified site |
| | 79.35 | Open reduction of fracture with internal fixation, femur |
| | 79.40 | Closed reduction of separated epiphysis, unspecified site |
| | 79.45 | Closed reduction of separated epiphysis, femur |
| | 79.50 | Open reduction of separated epiphysis, unspecified site |
| | 79.55 | Open reduction of separated epiphysis, femur |

A three-year time period was selected to mirror the methodology used by CMS’ HRRP.10,11 We next generated variables for patient age and female gender; dates of admission, discharge and death; and comorbidities defined by Elixhauser’s methods.12 We excluded patients younger than age 65, older than age 99, those with a diagnosis of end-stage renal disease, and patients not surviving to discharge.
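The cohort definition in Table 1 and the exclusion criteria above can be expressed as a small filter. The sketch below is a minimal illustration, not the authors’ code: the function and variable names are hypothetical, codes are written with a decimal point as in Table 1 (claims files may store them in a different format), and a stay matching more than one cohort is simply assigned to the first match, which is an assumption the text does not address.

```python
# Hypothetical sketch of the cohort assignment and exclusion rules described above.
COHORT_CODES = {
    "colectomy": {"45.71", "45.73", "45.74", "45.75", "45.76", "45.79", "45.82", "45.83"},
    "cabg": {"36.10", "36.11", "36.12", "36.13", "36.14", "36.15", "36.16", "36.17", "36.19"},
    "hip_fracture": {
        "79.00", "79.05", "79.10", "79.15", "79.20", "79.25",
        "79.30", "79.35", "79.40", "79.45", "79.50", "79.55",
    },
}


def assign_cohort(procedure_codes):
    """Return the first operative cohort whose ICD-9-CM codes appear in the stay, else None."""
    codes = set(procedure_codes)
    for cohort, cohort_codes in COHORT_CODES.items():  # dicts keep insertion order
        if codes & cohort_codes:
            return cohort
    return None


def is_eligible(age, has_esrd, died_before_discharge):
    """Apply the exclusions: keep ages 65-99, no end-stage renal disease, survived to discharge."""
    return 65 <= age <= 99 and not has_esrd and not died_before_discharge
```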

Hospital Readmissions Reduction Program

HRRP relies on patient-level administrative billing data to identify index admissions and readmissions. As a result, CMS data capture all inpatient episodes of care for which Medicare received a bill from an acute care hospital, and in the Medicare fee-for-service population there is essentially no “lost to follow-up” effect. Two theoretical exceptions exist: (1) in the “dual eligible” (Medicare Advantage) population, when a patient is readmitted but a private payer is wholly responsible for the hospital stay; and (2) in the small subgroup of Medicare patients who also receive Veterans Administration (VA) benefits, when a patient is readmitted to a VA hospital and no Medicare bill is generated. Consequently, readmission rates calculated under HRRP are often higher than those reported in studies using clinical registries, because CMS data are more complete: they capture readmissions to any hospital rather than only same-hospital or same-health-system readmissions.13 In contrast, data from non-Medicare sources may underestimate a hospital’s true readmission rate owing to either a reliance on patient memory (e.g. ACS-NSQIP) or an inability to track readmissions to non-participating hospitals (e.g. Veterans Affairs Surgical Quality Improvement Program, University Health Consortium).3,14,15 Finally, we must consider the possibility that CMS data overestimate readmission rates owing to clerical error or fraudulent billing.

CMS’ HRRP operates via two mechanisms, and performance under both is based on all-hospital readmission rates. The first is public reporting of hospital readmission rates using one of three descriptions: better than the national average (the upper limit of the 95% confidence interval [CI] falls below the national mean), no different than the national average (the 95% CI includes the national mean), or worse than the national average (the lower limit of the 95% CI falls above the national mean). The second mechanism is monetary penalties. This “payment adjustment” decreases the hospital’s total base operating diagnosis-related group (DRG) payments from Medicare by up to 3%, in proportion to the excess readmission ratio. A more complete discussion of the penalty calculation is available on the CMS.gov website.7
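The three-way public-reporting rule described above compares a hospital’s 95% CI with the national mean. A minimal sketch, assuming the interval bounds have already been estimated (how CMS derives them is not shown here); the function name is hypothetical, lower readmission rates are treated as better, and an interval that merely touches the national mean is counted as including it.

```python
def classify_vs_national(ci_lower, ci_upper, national_mean):
    """Label a hospital's readmission rate relative to the national mean.

    Lower readmission rates are better, so an interval lying entirely below
    the national mean is "better than the national average".
    """
    if ci_lower > ci_upper:
        raise ValueError("ci_lower must not exceed ci_upper")
    if ci_upper < national_mean:
        return "better than the national average"
    if ci_lower > national_mean:
        return "worse than the national average"
    return "no different than the national average"
```

For example, with a hypothetical national mean of 13.2%, an interval of 11.0% to 12.5% is labeled better, 12.0% to 15.0% no different, and 14.0% to 16.0% worse.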

Unit of Analysis and Primary Outcome Measure

The unit of analysis for this study was the hospital. For each hospital, we generated two risk- and reliability-adjusted readmission rates: (1) readmission to any hospital, i.e. the all-hospital readmission rate (a-HRR); and (2) readmission to the index hospital, i.e. the same-hospital readmission rate (s-HRR). We defined readmission using CMS’ criteria: “a subsequent acute care inpatient hospital admission for any reason within 30 days of discharge of the index admission.”10,11
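To make the two definitions concrete, the sketch below computes unadjusted (crude) s-HRR and a-HRR from hypothetical stay records. It omits the risk and reliability adjustment described below, and several choices are assumptions rather than CMS specifications: the `Stay` record is invented for illustration, a readmission is any later admission for the same patient whose admission date falls 1 to 30 days after the index discharge, and each index stay counts at most once.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Stay:
    """Hypothetical inpatient stay record."""
    patient_id: str
    hospital_id: str
    admit: date
    discharge: date


def readmission_rates(index_stays, all_stays, window_days=30):
    """Return {hospital_id: (s_hrr, a_hrr)} as crude proportions."""
    by_patient = {}
    for stay in all_stays:
        by_patient.setdefault(stay.patient_id, []).append(stay)
    counts = {}  # hospital_id -> [index stays, same-hospital readmits, any-hospital readmits]
    for idx in index_stays:
        c = counts.setdefault(idx.hospital_id, [0, 0, 0])
        c[0] += 1
        readmits = [
            s for s in by_patient.get(idx.patient_id, [])
            if 0 < (s.admit - idx.discharge).days <= window_days
        ]
        if readmits:
            c[2] += 1  # readmitted to any hospital
            if any(s.hospital_id == idx.hospital_id for s in readmits):
                c[1] += 1  # readmitted to the index hospital
    return {h: (same / n, any_ / n) for h, (n, same, any_) in counts.items()}
```

Because every same-hospital readmission is also an all-hospital readmission, the crude s-HRR can never exceed the crude a-HRR under this definition.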

Calculation of Hospital Readmission Rates

To calculate readmission rates for each hospital, we used hierarchical logistic regression. This technique uses empirical Bayes methods to account for differences in the reliability of rates between hospitals. It produces a point estimate of each hospital’s risk-adjusted readmission rate that is “shrunk” toward the overall population grand mean. The degree of shrinkage depends on the reliability of each hospital’s rate: because reliability increases with sample size, estimates for hospitals with lower operative volumes are shrunk more and lie closer to the grand mean than to their unadjusted readmission rates. For example, if the population grand mean readmission rate is 15%, a hospital performing 10 operations per year with 5 readmissions (an unadjusted rate of 50%) will have an adjusted rate closer to 15% than to 50%. In contrast, a hospital performing 1,000 operations per year with 500 readmissions will have an adjusted rate closer to 50%. Reliability adjustment has previously been used to report outcomes for general, trauma, and vascular surgery.16–19 Further, our use of hierarchical logistic models mirrors the procedure used by CMS to calculate rates for the HRRP.20
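The shrinkage idea can be illustrated with a simple empirical Bayes weighting. This is a simplified stand-in, not the hierarchical logistic model used in this study: it assumes a normal approximation in which reliability equals the between-hospital (signal) variance divided by signal plus sampling (noise) variance, and the signal variance of 0.0009 (an SD of 3 percentage points) is a hypothetical value chosen only for illustration.

```python
def reliability_adjust(readmissions, n, grand_mean, signal_var):
    """Shrink a hospital's observed rate toward the grand mean.

    reliability = signal_var / (signal_var + noise_var), where noise_var is the
    binomial sampling variance of a rate based on n cases (evaluated at the
    grand mean here, a simplifying assumption). Small n -> low reliability ->
    estimate pulled close to the grand mean.
    """
    observed = readmissions / n
    noise_var = grand_mean * (1 - grand_mean) / n
    reliability = signal_var / (signal_var + noise_var)
    adjusted = reliability * observed + (1 - reliability) * grand_mean
    return adjusted, reliability


# The two hospitals from the example in the text, both with an observed rate of 50%.
small, _ = reliability_adjust(5, 10, grand_mean=0.15, signal_var=0.0009)
large, _ = reliability_adjust(500, 1000, grand_mean=0.15, signal_var=0.0009)
print(f"{small:.3f} {large:.3f}")  # 0.173 0.457
```

Under these assumed inputs, the low-volume hospital’s reliability is about 0.07 and the high-volume hospital’s about 0.88, reproducing the qualitative pattern described in the text.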

We included patient age, gender, an indicator variable for procedure type, and Elixhauser12 comorbidities in our risk-adjustment model. To align with CMS’ methodology, we specifically excluded race and socioeconomic status as explanatory variables.10,21 We assessed model discrimination with the c-statistic: the probability that the model assigns a higher predicted probability of readmission to a randomly selected readmitted patient than to a randomly selected non-readmitted patient. The c-statistic ranges from 0.5 (predictive ability equivalent to a coin toss) to 1.0 (perfect predictive ability). Our models’ c-statistic was 0.66 for both same-hospital and all-hospital readmissions, similar to previous reports of model discrimination for readmissions and to Medicare’s data on surgical readmissions.20–23
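The c-statistic definition above translates directly into a pairwise comparison of readmitted and non-readmitted patients. The O(n²) sketch below is for illustration only (statistical packages compute the same quantity more efficiently); it counts tied predictions as half-concordant, the usual convention, and the function name is hypothetical.

```python
def c_statistic(predicted, outcome):
    """Probability that a random readmitted patient gets a higher predicted risk
    than a random non-readmitted patient (ties count as 0.5)."""
    cases = [p for p, y in zip(predicted, outcome) if y]
    controls = [p for p, y in zip(predicted, outcome) if not y]
    if not cases or not controls:
        raise ValueError("need at least one readmitted and one non-readmitted patient")
    concordant = 0.0
    for p_case in cases:
        for p_control in controls:
            if p_case > p_control:
                concordant += 1.0
            elif p_case == p_control:
                concordant += 0.5
    return concordant / (len(cases) * len(controls))


print(c_statistic([0.2, 0.6, 0.4, 0.7], [1, 1, 0, 0]))  # 0.25
```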

Statistical Approach

Our first analysis examined the correlation between s-HRRs and a-HRRs, which we quantified using Pearson’s product-moment correlation coefficient. As a preliminary step, we visually inspected each variable’s histogram to determine whether our data met Pearson’s assumption of bivariate normality. To ensure our findings were robust to outliers, we also calculated Spearman’s rank correlation coefficient.
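Both coefficients can be computed from first principles: Spearman’s coefficient is Pearson’s coefficient applied to ranks, with tied values given their average rank. A dependency-free sketch (the P values reported in the Results are not computed here, and the function names are hypothetical):

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average 1-based rank of the tied block i..j
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))
```

Because Spearman’s coefficient depends only on ordering, a single hospital with an extreme rate affects it less than it affects Pearson’s coefficient, which is why it serves as the robustness check.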

The second goal of our study was to assess the impact on hospital profiling of using a-HRRs instead of s-HRRs. We report these findings in a quintile reclassification matrix, derived as follows. First, we ranked hospitals by s-HRR and by a-HRR. Next, we divided hospitals into quintiles based on performance on each measure (worst-, poor-, median-, good-, and best-performing hospitals). We then compared migration between quintiles when profiling on a-HRR instead of s-HRR. For this analysis, we included only the 2,879 institutions that were neither critical access hospitals nor low-volume centers (fewer than 25 operations per year). Reclassification tables are reported by operation to parallel CMS’ methodology for public reporting.
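The reclassification matrix can be sketched as follows. Assumptions not stated in the text: quintiles are formed by rank (quintile 1 = lowest rate = best-performing), ties are broken by input order, and the function names are hypothetical. Rows index the s-HRR quintile and columns the a-HRR quintile, so off-diagonal cells count reclassified hospitals.

```python
def quintiles(rates):
    """Assign rank-based quintiles: 1 = lowest rate (best) ... 5 = highest (worst)."""
    n = len(rates)
    order = sorted(range(n), key=lambda i: rates[i])
    q = [0] * n
    for rank, i in enumerate(order):
        q[i] = rank * 5 // n + 1
    return q


def reclassification_matrix(s_hrr, a_hrr):
    """5x5 counts: row = s-HRR quintile, column = a-HRR quintile."""
    matrix = [[0] * 5 for _ in range(5)]
    for qs, qa in zip(quintiles(s_hrr), quintiles(a_hrr)):
        matrix[qs - 1][qa - 1] += 1
    return matrix


def percent_reclassified(s_hrr, a_hrr):
    """Share of hospitals whose quintile differs between the two measures."""
    pairs = list(zip(quintiles(s_hrr), quintiles(a_hrr)))
    return 100 * sum(qs != qa for qs, qa in pairs) / len(pairs)
```

For ten hypothetical hospitals whose a-HRR ranking is the exact reverse of their s-HRR ranking, `percent_reclassified` returns 80.0, because only the two hospitals in the middle quintile keep their rank group.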

Finally, we sought to determine whether certain hospital characteristics were associated with greater underestimation of a-HRR. We first divided hospitals into quintiles based on the relative difference between a-HRR and s-HRR (i.e. [a-HRR minus s-HRR] / a-HRR). Hospitals in the highest quintile of relative difference (i.e. the greatest underestimation) were considered to have a positive outcome. Next, we used multivariable logistic regression to generate adjusted odds ratios for each hospital characteristic (urban location, teaching status, network membership, and full implementation of an electronic health record).
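The outcome variable for this analysis can be sketched as below (hypothetical names; quintiles formed by rank as in the reclassification analysis, with ties broken by input order). Fitting the multivariable logistic regression itself is left to a statistical package and is not shown. As a rough illustration only, plugging the mean rates from the Results (a-HRR 13.2%, s-HRR 8.4%) into the formula gives a relative difference of about 0.36.

```python
def relative_difference(a_hrr, s_hrr):
    """Relative underestimation of a-HRR by s-HRR: (a-HRR - s-HRR) / a-HRR."""
    return (a_hrr - s_hrr) / a_hrr


def top_quintile_flags(values):
    """Return 1 for hospitals ranked in the highest quintile of `values`, else 0."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    flags = [0] * n
    for rank, i in enumerate(order):
        if rank * 5 // n == 4:  # rank-based quintile index 4 is the highest (fifth) quintile
            flags[i] = 1
    return flags


print(round(relative_difference(0.132, 0.084), 2))  # 0.36
```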

Results

Our study population included 3,940 hospitals covering 741,656 patients. The mean risk- and reliability-adjusted a-HRR was 13.2% (standard deviation [SD] 1.5%; range 8.4% to 21.2%) and the mean risk- and reliability-adjusted s-HRR was 8.4% (SD 1.1%; range 2.6% to 15.3%). Summary statistics for patient demographics and hospital characteristics are provided in Table 2.

Table 2.

Patient Demographics and Hospital Characteristics

| Variable | CABG | Colectomy | Hip Fracture |
|---|---|---|---|
| Patient Demographics | | | |
| Count | 233,256 | 226,050 | 282,350 |
| Median age [IQR] | 73 [69–78] | 76 [71–82] | 76 [71–81] |
| Gender (% female) | 31.0 | 58.5 | 64.0 |
| Black race (%) | 5.3 | 8.4 | 4.6 |
| 3+ comorbidities (%) | 39.6 | 36.3 | 27.3 |
| Hospital Characteristics§ | | | |
| Count | 1,001 | 1,985 | 1,880 |
| Teaching Status (%) | 21 | 12.6 | 11.5 |
| Network Membership (%) | | | |
| Yes | 36.3 | 35.1 | 32.4 |
| No | 48.8 | 47.2 | 47.9 |
| Unverified | 15 | 17.7 | 17.7 |
| Technology Category (%) | | | |
| Transplant | 11.1 | 3.9 | 5.5 |
| Transplant plus fully implemented EHR | 7.6 | 6.1 | 3.8 |
| Neither | 81.3 | 90.1 | 90.7 |

IQR, interquartile range; EHR, electronic health record.

§Derived from self-reported data on the 2009 American Hospital Association Survey.

Correlation Between Measures

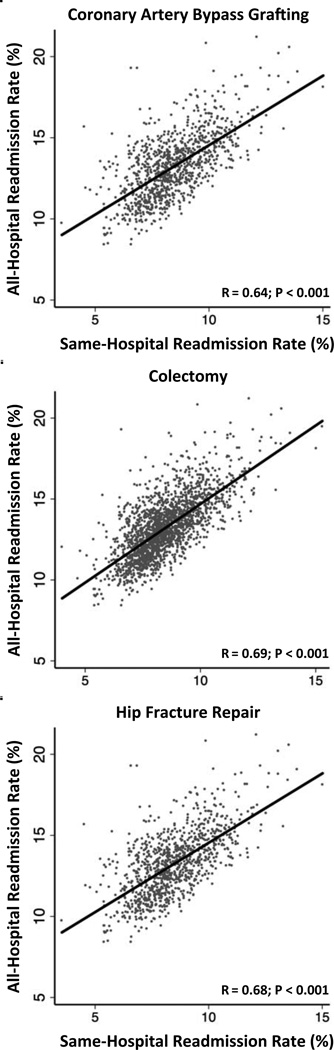

The Pearson correlation coefficient between s-HRR and a-HRR ranged from 0.64 to 0.69 (P < 0.001), depending on the operation (Figure 1). Results were similar when we evaluated the association using Spearman’s correlation (range 0.62 to 0.68, P < 0.001). Same-hospital rates underestimated all-hospital rates by an average of 4.7 to 4.8 percentage points, depending on the operation. However, because the range of underestimation was wide (2.6 to 7.4 percentage points), simply adding 5 percentage points to each hospital’s s-HRR would not be an adequate method of estimating a-HRR. For example, one hospital may underestimate its a-HRR by only 3 percentage points, whereas another may underestimate it by 10.
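Why a single correction factor fails can be seen with two hypothetical hospitals (the rates below are invented for illustration, echoing the 3- and 10-point example above; the function name is also hypothetical). Adding the average offset to each s-HRR overestimates one hospital’s a-HRR and underestimates the other’s by the same amount.

```python
def constant_offset_errors(s_hrr, a_hrr):
    """Estimate each a-HRR as s-HRR plus the mean offset; return (offset, errors)."""
    offsets = [a - s for s, a in zip(s_hrr, a_hrr)]
    mean_offset = sum(offsets) / len(offsets)
    errors = [(s + mean_offset) - a for s, a in zip(s_hrr, a_hrr)]
    return mean_offset, errors


# Hypothetical hospitals: underestimation of 3 and 10 percentage points.
offset, errors = constant_offset_errors([0.08, 0.09], [0.11, 0.19])
print(f"{offset:.3f}", [f"{e:+.3f}" for e in errors])  # 0.065 ['+0.035', '-0.035']
```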

Figure 1.

Same-Hospital Readmission Rate versus All-Hospital Readmission Rate.

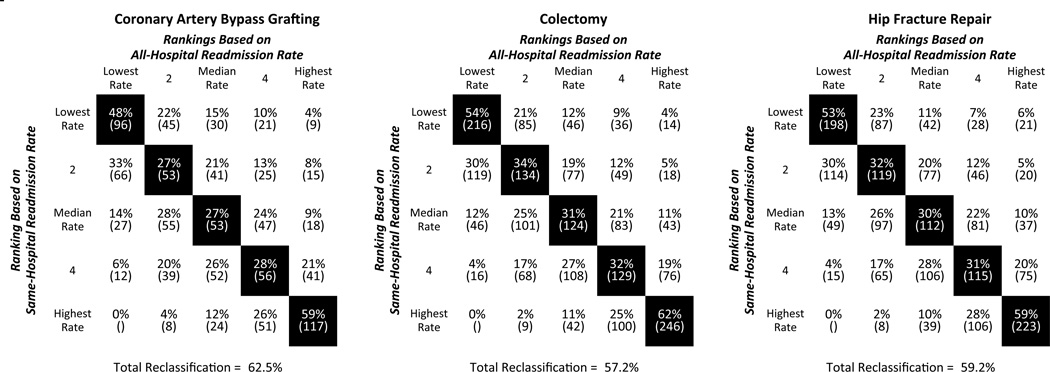

Impact of Changing Measures on Hospital Profiling

After the best-performing hospitals (lowest s-HRRs) were re-ranked according to a-HRR, 41% were reclassified into another performance quintile, with 6% reclassified into the worst quintile (Figure 2). Similarly, when the worst-performing hospitals (highest s-HRRs) were re-ranked according to a-HRR, 40% were reclassified. Reclassification was greatest for the middle three quintiles (64–69% reclassified). These “average” institutions are particularly important under the HRRP because Medicare’s profiling methodology is mean-centric; therefore, reclassification can have significant effects on their reported performance. We observed similar reclassification when examining each operation individually.

Figure 2.

Comparison of Quintile Rankings Based on All-Hospital Readmission Rate and Same-Hospital Readmission Rate. There were 1,001 hospitals performing CABG, 1,985 performing colectomy, and 1,880 performing hip fracture repair. (n) represents the number of hospitals in each quintile combination. If the correlation between rankings were perfect, cells with black backgrounds would be 100%.

Hospital Characteristics Associated With Greater Underestimation

For hospitals in the highest quintile of relative difference, s-HRR underestimated a-HRR by 47%. In contrast, for hospitals in the lowest quintile of relative difference, s-HRR underestimated a-HRR by 27%. In multivariable analysis, urban location and teaching status were associated with greater underestimation (Table 3).

Table 3.

Differences in Selected Hospital Characteristics by Readmissions Performance

Outcome: Highest Quintile of Relative Difference Between a-HRR and s-HRR

| Hospital Characteristic | Adjusted Odds Ratio | 95% Confidence Interval |
|---|---|---|
| Urban Location† | 3.14 | 2.39–4.14 |
| Teaching Hospital | 1.89 | 1.38–2.60 |
| Network Membership | 1.05 | 0.83–1.33 |
| Fully Implemented EHR | 0.97 | 0.74–1.27 |

Data are presented for a composite of all operations.

†Hospitals located in one of the 50 largest American cities were considered urban.

Discussion

This is the first study of surgical patients examining whether s-HRRs provide adequate estimates of a-HRRs. We found that s-HRRs consistently underestimated a-HRRs by an average of five absolute percentage points; however, the range of underestimation was wide (1.5 to 12.7 percentage points). Additionally, the majority of hospitals were reclassified when profiled on all-hospital rates instead of same-hospital rates. Taken together, our findings suggest that individual hospitals may not be able to rely solely on their own data to accurately estimate their true post-operative readmission rates.

Given the recent emphasis on leveraging coordination of care to decrease costs, readmission reduction will likely remain a national priority for years to come.1,24 To formulate effective strategies to reduce readmissions, individual hospitals must have a method of accurately tracking their own readmission rates. Our findings add to the existing readmissions literature by quantifying the extent to which hospitals may underestimate their true readmission rate when relying on internal data.

Nasir et al previously evaluated the correlation between s-HRR and a-HRR in patients with congestive heart failure.6 In that study, the difference between s-HRR and a-HRR ranged from 1% to 11%. As in our study, nearly half (48%) of the hospitals changed rankings when a-HRRs were used instead of s-HRRs. Specifically, of the 935 best-performing hospitals (based on s-HRRs), 35% were reclassified after rankings were based on a-HRRs.

There are at least two possible solutions to the problem of underestimation when relying on same-hospital readmission rates. One option is for CMS to provide hospitals with more frequent reports. Currently, hospitals receive only annual reports, leaving no time to implement quality improvement initiatives before monetary penalties are assessed. Many quality improvement efforts, with aims ranging from reducing length of stay to increasing patient satisfaction, rely on monthly or quarterly feedback to hospitals.25,26 The significant infrastructure and resources required to implement such a reporting system may be one reason many hospitals do not participate in quality improvement programs such as NSQIP. For instance, although our cohort included 2,430 hospitals, as of 2014 only 487 hospitals participated in NSQIP.27 Further, one may argue that, in the absence of a federal mandate for participation in quality improvement (QI) collaboratives, CMS should provide more timely data to institutions that are not affiliated with a collaborative.

However, as healthcare makes further gains in collecting, storing, and analyzing large amounts of patient data, true real-time feedback may become feasible. Under the current model, communication between providers is often too delayed for exchanging clinically actionable information. For instance, inpatient physicians generally send discharge summaries to the patient’s other providers (e.g. primary care physicians, specialists) after the fact, often in hard copy and by mail. Instead, one may envision a system that automatically sends an email notification to these other providers whenever one of their patients is readmitted. This might facilitate the exchange of timely and clinically actionable information between providers. Alternatively, electronic health records that can exchange information across institutions may provide similar benefits.
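The notification idea could be prototyped as a simple rule evaluated whenever a new admission is recorded. The sketch below is hypothetical throughout: the function and its arguments are invented for illustration, it only builds message strings (actual delivery by email or through an EHR interface is out of scope), and it borrows the 30-day window from CMS’ readmission definition, counting admissions 1 to 30 days after the most recent prior discharge.

```python
from datetime import date


def readmission_alerts(admit_date, admitting_hospital, prior_discharges, care_team, window_days=30):
    """Build notification messages if this admission is a 30-day readmission.

    prior_discharges: list of (discharge_date, hospital_id) for the patient.
    care_team: names of the patient's other providers (e.g. PCP, specialists).
    """
    recent = [
        (d, h) for d, h in prior_discharges if 0 < (admit_date - d).days <= window_days
    ]
    if not recent:
        return []
    discharge_date, index_hospital = max(recent)  # most recent qualifying discharge
    days = (admit_date - discharge_date).days
    return [
        f"To {provider}: patient discharged from {index_hospital} on "
        f"{discharge_date.isoformat()} was readmitted to {admitting_hospital} after {days} days"
        for provider in care_team
    ]
```

Because the rule runs on the admitting side, it would flag readmissions to any hospital that feeds the notification system, which is precisely the information a same-hospital rate misses.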

Another option is to increase incentives for hospitals to participate in quality improvement networks. These networks are often administered through specialty societies (e.g. the American College of Surgeons’ National Surgical Quality Improvement Program) or regional collaboratives (e.g. the Michigan Surgical Quality Collaborative).28,29 Given the unique challenges of tracking readmissions, such collaboratives offer a particularly important feature: the ability to collect data directly from patients. This makes it possible to track readmissions to any hospital, whether or not the readmitting hospital participates in the collaborative. Collaboratives can also capture other interactions with the healthcare system that are not easily captured in administrative data, e.g. emergency department visits, non-billable post-operative visits to surgery clinic, and use of urgent care facilities. To the extent that the goal of HRRP is to reduce readmissions rather than solely to recoup the costs of unnecessary hospitalizations, Medicare may consider using a portion of a hospital’s penalty dollars to help institutions enroll in a quality improvement collaborative.

Limitations

This paper is not without limitations. First, because our study relies on administrative data, we were unable to adjust for risk factors not captured by patient demographics or ICD-9 diagnostic billing codes. To mitigate this drawback, we used advanced statistical methods, namely risk adjustment and reliability adjustment. These techniques are standard practice for profiling hospitals using administrative data.10 Second, as measured by c-statistics, our model performance was suboptimal. However, this was similar to prior reports analyzing Medicare data.6,30,31 More importantly, our study design shares the limitations of Medicare’s Hospital Readmissions Reduction Program. From a broader perspective, the consistently poor model performance across clinical situations may indicate that readmissions are subject to more unmeasured confounding than other metrics of hospital quality, such as mortality.

Conclusions

Understanding the limitations of using s-HRR to estimate a-HRR is critical for hospitals and providers. As currently implemented under CMS’ HRRP, hospitals lack access to performance data until after monetary penalties have already been assessed. Our findings therefore have important policy implications: hospitals are unable to estimate their true readmission rates without external data. Further studies are needed to determine the best methods of providing hospitals with complete and timely readmissions information.

Acknowledgments

Funding: This study was supported by grants R01 AG039434-03 (Dr. Dimick) from the National Institute on Aging; K08 HS017765-04 (Dr. Dimick) from the Agency for Healthcare Research and Quality; Ruth L. Kirschstein National Research Service Award T32 HL076123-09 from the National Heart, Lung, and Blood Institute (Dr. Gonzalez; Dr. Shih); and the Eleanor B. Pillsbury Research Fellowship Award from the University of Illinois Chicago Medical Center.

Abbreviations and Acronyms

- CMS: Centers for Medicare and Medicaid Services
- HRRP: Hospital Readmissions Reduction Program
- ICD-9-CM: International Classification of Diseases, 9th Revision, Clinical Modification
- CABG: Coronary Artery Bypass Grafting
- CI: Confidence Interval
- a-HRR: All-Hospital Readmission Rate
- s-HRR: Same-Hospital Readmission Rate
- AHA: American Hospital Association
- SD: Standard Deviation

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Meeting Presentation: This abstract was presented at the 2013 American College of Surgeons Clinical Congress in Washington, DC (session SF15, Quality, Outcomes and Costs II).

References

- 1. Lavizzo-Mourey R. The Revolving Door: A Report on U.S. Hospital Readmissions. 2013. Available at: http://bit.ly/1gwqC0V.

- 2. Rau J. Medicare Revises Hospitals’ Readmissions Penalties. Kaiser Health News. 2012. Available at: http://bit.ly/1eyqWxA. Accessed April 16, 2013.

- 3. Vogel TR, Kruse RL. Risk factors for readmission after lower extremity procedures for peripheral artery disease. J Vasc Surg. 2013;58(1):90–97.e1–e4. doi: 10.1016/j.jvs.2012.12.031.

- 4. Roy CL, Kachalia A, Woolf S, Burdick E, Karson A, Gandhi TK. Hospital readmissions: physician awareness and communication practices. J Gen Intern Med. 2009;24(3):374–380. doi: 10.1007/s11606-008-0848-x.

- 5. Jackson BM, Nathan DP, Doctor L, Wang GJ, Woo EY, Fairman RM. Low rehospitalization rate for vascular surgery patients. J Vasc Surg. 2011;54(3):767–772. doi: 10.1016/j.jvs.2011.03.255.

- 6. Nasir K, Lin Z, Bueno H, et al. Is same-hospital readmission rate a good surrogate for all-hospital readmission rate? Med Care. 2010;48(5):477–481. doi: 10.1097/MLR.0b013e3181d5fb24.

- 7. Centers for Medicare & Medicaid Services. Readmissions Reduction Program. 2012. Available at: http://go.cms.gov/1eUTfaO. Accessed February 12, 2014.

- 8. Medicare Payment Advisory Commission (MedPAC). Chapter 4: Refining the hospital readmissions reduction program. In: Report to the Congress: Medicare and the Health Care Delivery System. 2013:91–114. Available at: http://1.usa.gov/1gkJ418. Accessed April 1, 2014.

- 9. Tsai TC, Joynt KE, Orav EJ, Gawande AA, Jha AK. Variation in surgical-readmission rates and quality of hospital care. N Engl J Med. 2013;369(12):1134–1142. doi: 10.1056/NEJMsa1303118.

- 10. Centers for Medicare & Medicaid Services. Frequently Asked Questions: CMS Publicly Reported Risk-Standardized Outcome Measures. 2013. Available at: http://bit.ly/1hrSC9s.

- 11. QualityNet. Measure Methodology Reports: Readmission Measures. 2013. Available at: http://bit.ly/1hrSC9s. Accessed November 11, 2013.

- 12. Elixhauser A, Steiner C, Harris D, Coffey RM. Comorbidity measures for use with administrative data. Med Care. 1998;36(1):8–27. doi: 10.1097/00005650-199801000-00004.

- 13. Lucas DJ, Haider A, Haut E, et al. Assessing readmission after general, vascular, and thoracic surgery using ACS-NSQIP. Ann Surg. 2013;258(3):430–439. doi: 10.1097/SLA.0b013e3182a18fcc.

- 14. Sellers MM, Merkow RP, Halverson A, et al. Validation of new readmission data in the American College of Surgeons National Surgical Quality Improvement Program. J Am Coll Surg. 2013;216(3):420–427. doi: 10.1016/j.jamcollsurg.2012.11.013.

- 15. Kassin MT, Owen RM, Perez S, et al. Risk factors for 30-day hospital readmission among general surgery patients. J Am Coll Surg. 2012;215(3):322–330. doi: 10.1016/j.jamcollsurg.2012.05.024.

- 16. Dimick JB, Ghaferi AA, Osborne NH, Ko CY, Hall BL. Reliability adjustment for reporting hospital outcomes. Ann Surg. 2012;255(4):703–707. doi: 10.1097/SLA.0b013e31824b46ff.

- 17. Dimick JB, Staiger DO, Birkmeyer JD. Ranking hospitals on surgical mortality: the importance of reliability adjustment. Health Serv Res. 2010;45(6 Pt 1):1614–1629. doi: 10.1111/j.1475-6773.2010.01158.x.

- 18. Osborne NH, Ko CY, Upchurch GR, Dimick JB. The impact of adjusting for reliability on hospital quality rankings in vascular surgery. J Vasc Surg. 2011;53(1):1–5. doi: 10.1016/j.jvs.2010.08.031.

- 19. Hashmi ZG, Dimick JB, Efron DT, et al. Reliability adjustment: a necessity for trauma center ranking and benchmarking. J Trauma Acute Care Surg. 2013;75(1):166–172. doi: 10.1097/TA.0b013e318298494f.

- 20. Suter LG, Grady JN, Lin Z, et al. 2013 Measure Updates and Specifications: Elective Primary Total Hip Arthroplasty (THA) and/or Total Knee Arthroplasty (TKA) All-Cause Unplanned 30-Day Risk-Standardized Readmission Measure. New Haven; 2013:1–58. Available at: http://bit.ly/Pnuj2o.

- 21. Krumholz HM, Lin Z, Drye EE, et al. An administrative claims measure suitable for profiling hospital performance based on 30-day all-cause readmission rates among patients with acute myocardial infarction. Circ Cardiovasc Qual Outcomes. 2011;4(2):243–252. doi: 10.1161/CIRCOUTCOMES.110.957498.

- 22. McPhee JT, Nguyen LL, Ho KJ, Ozaki CK, Conte MS, Belkin M. Risk prediction of 30-day readmission after infrainguinal bypass for critical limb ischemia. J Vasc Surg. 2013;57(6):1481–1488. doi: 10.1016/j.jvs.2012.11.074.

- 23. McPhee JT, Barshes NR, Ho KJ, et al. Predictive factors of 30-day unplanned readmission after lower extremity bypass. J Vasc Surg. 2013;57(4):955–962. doi: 10.1016/j.jvs.2012.09.077.

- 24. Medicare Payment Advisory Commission (MedPAC). Chapter 5. In: Report to the Congress: Promoting Greater Efficiency in Medicare. 2007. Available at: http://1.usa.gov/1lp2gJ9. Accessed August 27, 2012.

- 25. Quinn GP, Jacobsen PB, Albrecht TL, et al. Real-time patient satisfaction survey and improvement process. Hosp Top. 2004;82(3):26–32. doi: 10.3200/HTPS.82.3.26-32.

- 26. Van der Veer SN, de Vos MLG, van der Voort PHJ, et al. Effect of a multifaceted performance feedback strategy on length of stay compared with benchmark reports alone: a cluster randomized trial in intensive care. Crit Care Med. 2013;41(8):1893–1904. doi: 10.1097/CCM.0b013e31828a31ee.

- 27. ACS NSQIP. Participants. 2014.

- 28. American College of Surgeons National Surgical Quality Improvement Program. ACS NSQIP: How It Works. 2012. Available at: http://bit.ly/1h6fVXC. Accessed November 21, 2013.

- 29. Birkmeyer NJO, Share D, Campbell DA, Prager RL, Moscucci M, Birkmeyer JD. Partnering with payers to improve surgical quality: the Michigan plan. Surgery. 2005;138(5):815–820. doi: 10.1016/j.surg.2005.06.037.

- 30. Ghaferi AA, Birkmeyer JD, Dimick JB. Variation in hospital mortality associated with inpatient surgery. N Engl J Med. 2009;361(14):1368–1375. doi: 10.1056/NEJMsa0903048.

- 31. Ghaferi AA, Birkmeyer JD, Dimick JB. Complications, failure to rescue, and mortality with major inpatient surgery in Medicare patients. Ann Surg. 2009;250(6):1029–1034. doi: 10.1097/SLA.0b013e3181bef697.