Abstract

The increasing use of medical checklists to promote patient safety raises the question of their utility in diagnostic radiology. This study evaluates the efficacy of a checklist-style reporting template in reducing resident misses on cervical spine CT examinations. A checklist-style reporting template for cervical spine CTs was created at our institution and mandated for resident preliminary reports. Ten months after implementation of the template, we performed a retrospective cohort study comparing rates of emergent pathology missed on reports generated with and without the checklist-style reporting template. In 1,832 reports generated without using the checklist-style template, 25 (17.6 %) out of 142 emergent findings were missed. In 1,081 reports generated using the checklist-style template, 13 (11.9 %) out of 109 emergent findings were missed. The decrease in missed pathology was not statistically significant (p = 0.21). However, larger differences were noted in the detection of emergent non-fracture findings, with 17 (28.3 %) out of 60 findings missed on reports without use of the checklist template and 5 (9.3 %) out of 54 findings missed on reports using the checklist template, representing a statistically significant decrease in missed non-fracture findings (p = 0.01). The use of a checklist-style structured reporting template resulted in a statistically significant decrease in missed non-fracture findings on cervical spine CTs. The lack of statistically significant change in missed fractures was expected given that residents’ search patterns naturally include fracture detection. Our findings suggest that the use of checklists in structured reporting may increase diagnostic accuracy.

Keywords: Structured reporting, Radiology reporting, Quality assurance

Introduction

Radiology reports have historically been generated by using free-text dictations, in which the radiologist dictates in a narrative style without a standardized order or format. The content of radiology reports generated in this manner has been shown to be error-prone and often unclear to referring physicians [1–5]. As other fields of medicine have achieved quality improvement by reducing variability within their practices, there has been an effort to reduce variability in the field of radiology by shifting from conventional free-text reporting to structured reporting [6, 7].

Structured reporting is a term that can encompass a variety of data entry and report generation techniques. Essential attributes of structured reports include a standardized lexicon, predetermined data elements, and consistent format and organization. Structured reports typically contain headings and paragraphs that distinguish the basic elements of the report, and frequently contain subheadings to delineate the anatomic areas evaluated [8]. Many benefits have been attributed to structured reporting techniques, including increased clarity of reports, decreased report turnaround times, improved quality and consistency of reports, and increased satisfaction with reports among referring physicians [9–13].

While there are a variety of structured reporting formats, many structured reports take the form of a checklist, with a list of anatomic areas or findings thought to be part of a thorough search pattern for a particular study. The increasing use of checklists in other fields of medicine and the ease with which checklists can be incorporated into structured reporting templates raise the question of their utility in improving structured reporting practices. After Pronovost’s widely publicized study in which a simple five-item central-line safety checklist resulted in startling reductions in catheter-related infections [14], a growing body of research has emerged supporting the efficacy of even the simplest of checklists in various areas of medicine [15–20]. However, few studies have evaluated the use of checklist-style structured reporting for improving diagnostic accuracy in radiology.

In this study, a checklist-style structured reporting template for cervical spine CT examinations was introduced at a tertiary care hospital with a level-one trauma center. We then evaluated the efficacy of the checklist-style template in reducing missed pathology in resident preliminary reports for cervical spine CTs.

Methods

Our study was performed with an institutional review board exemption and is compliant with the requirements of the Health Insurance Portability and Accountability Act. Informed consent was waived.

This study was conducted within a 25-resident radiology residency program based at a 1,076-bed hospital with a level-one trauma center. Workflow within the hospital's radiology and emergency departments is frequently assisted by full preliminary reports conveying imaging interpretations rendered by radiology residents, with the majority of preliminary reports generated during on-call hours when there is limited coverage by attending radiologists. Preliminary reports are later reviewed and finalized when an attending radiologist is available, with additional comments added to convey any discrepancies between the interpretations of the resident and the attending radiologist. When there are discrepancies that may affect patient management, these findings are communicated to the covering physician. If the patient has been discharged from the emergency department (ED), the patient is recalled to the ED and an electronic patient care reporting system (Med-Media EMStat™, Harrisburg, Pennsylvania) is used to document these resident misses.

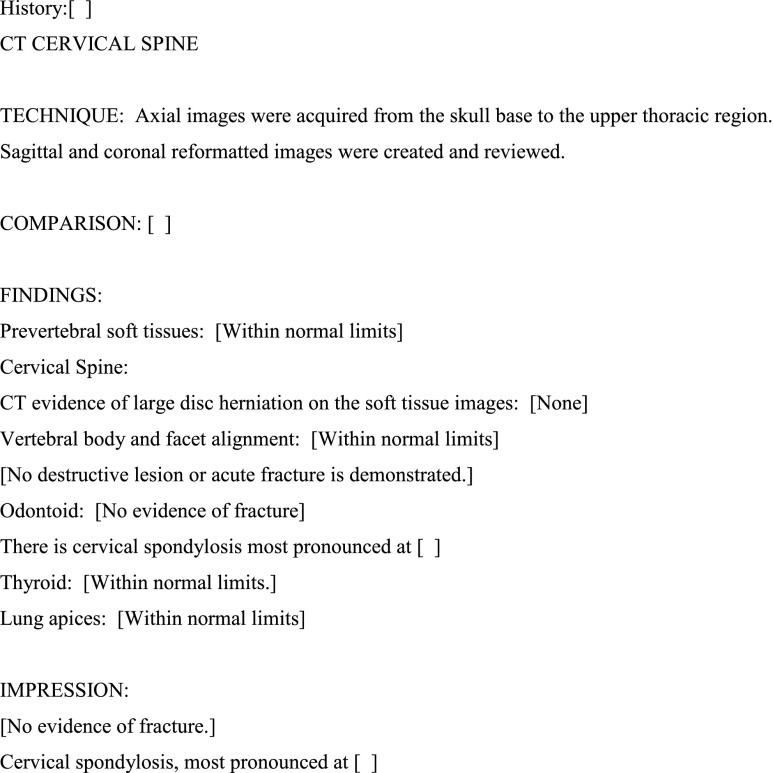

A checklist-style structured reporting template for cervical spine CTs was created by residents and attending neuroradiologists within this residency program in December 2010. Contents of the template were decided upon after review of discrepancies between resident preliminary reports and attending final reports for cervical spine CTs over a 2-year period, with more frequently missed pathology chosen for inclusion in the checklist (Fig. 1). Radiological Society of North America (RSNA) best-practices radiology report templates were also referenced during the creation of the template [21].

Fig. 1.

Checklist-style reporting template implemented at the study institution. Brackets indicate fill-in fields, with text within brackets representing default text

Prior to the creation of this checklist template, preliminary and final reports for cervical spine CTs were generated without a standardized structured reporting system. The structure of preliminary reports was left to the discretion of the reporting radiology resident or attending radiologist: some reports were generated using free-text dictation, while others were generated using a variety of non-checklist templates that, collectively, lacked the standardized content, format, language, and organization typical of structured reporting systems. Once the cervical spine checklist template was introduced to residents within the program, its use was mandated for all resident preliminary reports on cervical spine CTs ordered from the emergency department. The checklist template was added to the autotext list in each resident's user profile in the department's speech recognition reporting system (Nuance PowerScribe 360, Burlington, Massachusetts). The mandated use of this checklist template represented the department's first attempt at standardized structured reporting outside of breast imaging.

A retrospective review of the institution’s radiologic database was subsequently performed, evaluating all cervical spine CT reports generated during a 28-month period from July 2009 to October 2011. All CTs were performed on 64-slice multidetector scanners (Toshiba Aquilion 64, Tokyo, Japan or Siemens Somatom Sensation 64, Munich, Germany). Sagittal, axial, and coronal images in soft tissue and bone algorithms were obtained for all CTs. Only reports generated prior to our decision to perform this study—and thus prior to resident knowledge of this study—were included.

We identified 2,913 cervical spine CTs ordered in the emergency department with preliminary resident reports during this time period. One thousand eighty-one reports generated between December 2010 and October 2011 using the checklist-style reporting template were compared with 1,832 reports generated between July 2009 and March 2011 using free-text dictation or non-checklist templates. Both study periods included the first 3 months of an academic year (July to September), when the call pool is typically composed of less experienced residents. The period from December 2010 to March 2011 was included in both the pre- and posttest groups because of a transition period with incomplete compliance with the template; however, only reports using the checklist-style template during this transition period were included in the posttest group, and only reports using free text or other templates were included in the pretest group.
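The group-assignment rule for the overlapping December 2010 to March 2011 window can be expressed as a small function, which makes explicit that no report is counted in both groups. The sketch below is illustrative only and is not part of the original analysis; the function name `assign_group`, the day-level window boundaries, and the choice to return `None` for reports matching neither rule are assumptions.

```python
from datetime import date
from typing import Optional

# Study windows taken from the text; day-level boundaries are an assumption.
PRE_START, PRE_END = date(2009, 7, 1), date(2011, 3, 31)
POST_START, POST_END = date(2010, 12, 1), date(2011, 10, 31)


def assign_group(report_date: date, used_checklist: bool) -> Optional[str]:
    """Hypothetical helper: place a preliminary report in the pretest or posttest group.

    Reports in the overlapping transition window are assigned by template use,
    so no report can fall into both groups.
    """
    if used_checklist and POST_START <= report_date <= POST_END:
        return "posttest"
    if not used_checklist and PRE_START <= report_date <= PRE_END:
        return "pretest"
    return None  # assumption: anything else is excluded from both groups


# Two reports from the same transition month land in different groups.
print(assign_group(date(2011, 1, 15), used_checklist=True))   # posttest
print(assign_group(date(2011, 1, 15), used_checklist=False))  # pretest
```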

Preliminary resident findings later confirmed by a certificate of added qualifications (CAQ)-certified attending neuroradiologist, and resident misses later detected by a CAQ-certified attending neuroradiologist, were counted. Only findings considered relevant to emergency department management were included: all acute fractures, all causes of severe spinal canal stenosis, prevertebral soft tissue swelling or masses, abnormal spinal cord or extradural densities, intracranial hemorrhage, and emergent lung findings (consolidation, pneumothorax, or pleural effusions). In studies with multiple findings, each finding was counted separately, except that multiple fractures in a single study were counted as one fracture finding. In one study with multiple fractures, one fracture was identified and another was missed in the preliminary report; for this study, both an identified and a missed finding were counted. Findings that were also identified on other studies (e.g., intracranial hemorrhage detected on a concurrently performed head CT, or pneumothoraces detected on a concurrently performed chest CT or radiograph) were not counted.
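The per-study counting rules above, in particular the pooling of multiple fractures, can be sketched as follows. This is an illustrative reconstruction in Python, not the authors' procedure; the `Finding` class, its category labels, the `seen_elsewhere` flag, and the `count_findings` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Finding:
    category: str                 # hypothetical labels, e.g. "fracture", "lung", "prevertebral"
    detected: bool                # True if reported in the resident's preliminary report
    seen_elsewhere: bool = False  # True if also identified on a concurrent study (e.g. head CT)


def count_findings(study: List[Finding]) -> Tuple[int, int]:
    """Return (identified, missed) counts for one cervical spine CT under the stated rules."""
    identified = missed = 0
    fractures = []
    for f in study:
        if f.seen_elsewhere:
            continue  # findings also identified on other studies are not counted
        if f.category == "fracture":
            fractures.append(f)  # fractures are pooled and handled below
        elif f.detected:
            identified += 1      # every other emergent finding counts separately
        else:
            missed += 1
    if fractures:
        # Multiple fractures count as one finding; a study with both an identified
        # and a missed fracture contributes one identified and one missed finding.
        if any(f.detected for f in fractures):
            identified += 1
        if any(not f.detected for f in fractures):
            missed += 1
    return identified, missed


example = [
    Finding("fracture", detected=True),
    Finding("fracture", detected=False),
    Finding("lung", detected=False),
    Finding("intracranial", detected=False, seen_elsewhere=True),
]
print(count_findings(example))  # (1, 2)
```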

Emergency department radiology recall numbers for other neuroradiology studies during the study period were also analyzed to assess for overall changes in neuroradiology reporting accuracy by residents within the call pool. Data for resident misses requiring patient recall were obtained from the hospital's electronic patient care reporting system, and we analyzed the frequency of such misses on head and lumbar spine CTs, which were reported without checklist-style templates at our institution. The number and rate of missed findings on head and lumbar spine CTs were counted for the pretest period from July 2009 to December 2010 (before the institution of our cervical spine CT template) and the posttest period from January 2011 to October 2011 (after the institution of our template). These rates were then compared to assess for differences in resident accuracy on neuroradiology studies between the pre- and posttest periods.

Differences in the rate of missed emergent findings on cervical spine CTs before and after the institution of checklist-style reports were compared by use of chi-square testing. Differences in rates of missed emergent non-fracture findings, missed emergent prevertebral soft tissue and spinal canal findings, and missed findings requiring patient recalls on head and lumbar spine CTs were also compared by use of chi-square testing. Differences in rates of missed lung findings were compared by use of Fisher's exact test because of the presence of a zero cell. Statistical software (StataCorp Stata 12.1, College Station, Texas) was used for the analysis. A p value of 0.05 or less was considered to indicate statistical significance.
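As a check on the statistical method, the 2 × 2 chi-square comparison can be reproduced in a few lines of Python (the original analysis used Stata). The text does not state whether a continuity correction was applied; this sketch assumes the uncorrected Pearson statistic, which reproduces the p values reported in the Results. The function name `chi2_2x2` is hypothetical.

```python
import math
from typing import Tuple


def chi2_2x2(missed_a: int, total_a: int, missed_b: int, total_b: int) -> Tuple[float, float]:
    """Pearson chi-square test (no continuity correction) on missed vs. identified findings.

    Groups a and b are the two reporting conditions; returns (statistic, p value)
    with 1 degree of freedom.
    """
    observed = [[missed_a, total_a - missed_a], [missed_b, total_b - missed_b]]
    n = total_a + total_b
    row_totals = [total_a, total_b]
    col_totals = [missed_a + missed_b, n - missed_a - missed_b]
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom the statistic is a squared standard normal,
    # so P(X >= stat) = erfc(sqrt(stat / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))


# All emergent findings: 25 of 142 missed without the template, 13 of 109 with it.
_, p_all = chi2_2x2(25, 142, 13, 109)
# Emergent non-fracture findings: 17 of 60 vs. 5 of 54.
_, p_nonfx = chi2_2x2(17, 60, 5, 54)
print(f"{p_all:.2f} {p_nonfx:.2f}")  # 0.21 0.01
```

The same function applied to the head CT recall counts (24 of 15,273 vs. 26 of 8,148) gives p of about 0.01, matching the value reported for that comparison.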

Results

Following the creation of the checklist-style template in December 2010, the use of the template was mandated for all preliminary resident dictations for cervical spine CTs ordered in the emergency department. In December 2010, 45 (49.5 %) out of 91 preliminary dictations were compliant with the mandated use of the checklist-style template. From January 2011 through March 2011, 277 (93.6 %) out of 296 preliminary dictations used the checklist-style template. From April 2011 to the end of our study period, compliance with the checklist-style template was greater than 99 %.

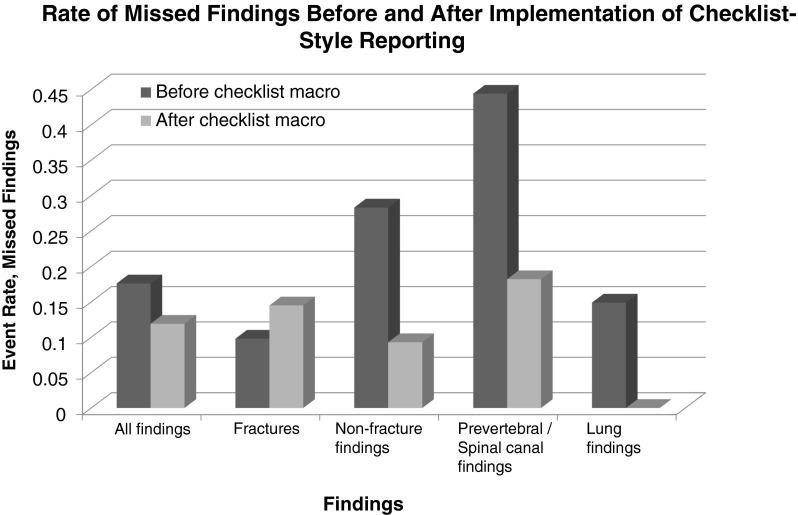

In 1,832 reports generated without use of the checklist-style reporting template, 25 (17.6 %) out of 142 emergent findings were missed by on-call residents. In 1,081 reports generated with the checklist-style template, 13 (11.9 %) out of 109 emergent findings were missed by on-call residents. The decrease in missed emergent findings after implementation of the checklist-style template was not statistically significant (p = 0.21). Fractures accounted for the majority of emergent findings. There was an increase in frequency of missed fractures after institution of the checklist template, with 8 (9.8 %) out of 82 fractures missed on reports without use of the checklist-style templates and 8 (14.5 %) out of 55 fractures missed on reports with the checklist-style templates. The increase in missed fractures was not statistically significant (p = 0.39).

Larger differences were noted in the detection of emergent non-fracture findings, with 17 (28.3 %) out of 60 findings missed on reports without use of checklist-style templates and 5 (9.3 %) out of 54 findings missed on reports using checklist-style templates, corresponding to a statistically significant decrease in rates of missed emergent non-fracture findings (p = 0.01). For emergent lung findings, 7 (14.9 %) out of 47 findings were missed on reports without use of the checklist-style templates and 0 (0 %) out of 27 findings were missed on reports using checklist-style templates, representing a statistically significant decrease in rates of missed emergent lung findings (p = 0.04). For emergent prevertebral and spinal canal findings, 8 (44.4 %) out of 18 findings were missed on reports without use of the checklist-style templates and 4 (18.2 %) out of 22 findings were missed on reports using checklist-style templates, but the decrease did not meet criteria for statistical significance (p = 0.07). There were only two cases with emergent intracranial findings on cervical spine CTs without concurrently performed head CTs during the pretest period, so analysis of statistics for intracranial findings was not performed.
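Because one cell of the lung-finding table is zero, Fisher's exact test was used for that comparison. A minimal two-sided version is sketched below in Python; it uses the usual definition that sums the probabilities of all tables with the observed margins that are no more likely than the observed table. The function name is hypothetical, and this is not the Stata routine used in the study, although it reproduces the reported p value.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same row and
    column totals that is no more likely than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x: int) -> float:
        # Probability that the top-left cell equals x given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_observed = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # The small relative tolerance keeps tables tied with the observed one
    # despite floating-point rounding.
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_observed * (1 + 1e-7))


# Lung findings: 7 missed / 40 identified without the template, 0 / 27 with it.
print(f"{fisher_exact_two_sided(7, 40, 0, 27):.2f}")  # 0.04
```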

In comparisons of patient recall rates during the study periods before and after the institution of the cervical spine CT checklist-style template, 24 (0.16 %) out of 15,273 resident-generated head CT reports during the pretest time period had a missed finding requiring an emergency department patient recall, compared with 26 (0.32 %) out of 8,148 head CTs during the posttest time period. The increase in ED recall rates for head CTs during the posttest period was statistically significant (p = 0.01). For lumbar spine CTs, 3 (0.78 %) out of 384 studies performed during the pretest period required a patient recall, compared with 2 (1.0 %) out of 193 studies during the posttest period. The increase in ED recall rates for lumbar spine CTs was not statistically significant (p = 0.76). No checklist-style templates or other changes in reporting practices were instituted for head or lumbar spine CTs during the study period (Fig. 2).

Fig. 2.

Graph depicting the rate of resident misses before and after the implementation of the checklist-style structured reporting template

Discussion

The use of checklists in medicine has been widely supported, with research in various specialties demonstrating their efficacy in achieving safety goals ranging from decreased catheter-related bloodstream infections to decreased surgical morbidity and mortality [14–20]. Studies evaluating structured radiology reports, many of which have a checklist format, have reported benefits ranging from increased clarity to increased satisfaction among referring physicians [9–13]. However, relatively little has been published regarding the efficacy of checklists in improving the diagnostic accuracy of radiologic studies, particularly among radiologists-in-training. The one study we are aware of that addressed this question found lower accuracy and completeness scores in resident reports of a preselected set of 25 brain MR imaging cases when residents used a structured reporting software system than when they used free-text dictation [22]. However, that study was limited by sparse training of resident subjects on the structured reporting software system and by its performance outside the clinical setting, without subject blinding. Our study involved the sustained use of a checklist-style structured reporting template in a clinical setting, with evaluation of its efficacy in decreasing resident misses on preliminary cervical spine CT reports.

The results of this study suggest that checklist-style structured reporting templates may improve the accuracy and thoroughness of resident reports. The use of checklists in standardized reporting templates for cervical spine CTs resulted in an observed reduction in the number of misses by on-call residents. Although the decrease in overall emergent missed pathology was not statistically significant (p = 0.21), there was a statistically significant decrease in the number of missed emergent non-fracture findings (p = 0.01). A particularly large reduction was noted in emergent lung findings (p = 0.04); before the checklist-style template, residents often overlooked the lung apices included on cervical spine studies. A larger sample size might have shown a statistically significant decrease in overall emergent missed pathology as well.

The reduction in missed emergent non-fracture findings—but not missed fractures—was expected, given that residents’ search patterns on cervical spine CTs naturally include fracture detection, particularly at a level-one trauma center. A checklist-style reporting template with one field addressing fractures was unlikely to improve the accuracy of fracture detection in this setting. The rate of missed fractures actually increased after the institution of the checklist-style template, but the increase was not statistically significant (p = 0.39).

Given the suggestion by some authors that structured reporting systems may be distracting and possibly even detrimental to the accuracy of a radiologist's normal search habits [8, 23], we were initially concerned that the increase in missed fractures, although not statistically significant, might indicate a negative effect of the checklist-style template on resident search patterns for fractures. However, analysis of patient recall numbers from our electronic patient care reporting system suggested that this finding was more likely secondary to an overall decline in the accuracy of neuroradiology reporting by the group of on-call residents during the posttest period (after the institution of the cervical spine CT checklist template). There was a statistically significant increase in the rate of missed findings requiring patient recalls on head CTs during the posttest period (p = 0.01). There was also an increase in the rate of missed findings requiring patient recalls on lumbar spine CTs during the posttest period, although this increase was not statistically significant (p = 0.76). Checklist-style structured reporting templates were not instituted for either head or lumbar spine CTs, and the increased rate of missed findings was thought to be secondary to differences in the residents within the call pool during the pre- and posttest periods. These findings also support the validity of the statistically significant decrease in missed emergent non-fracture findings on cervical spine CTs during the posttest period (Table 1).

Table 1.

Summary of results

p values and odds ratios for resident missed findings

| Variable | Odds ratio of a miss, checklist vs. no checklist (95 % CI) | p value |
|---|---|---|
| All emergent findings | 0.63 (0.31–1.31) | 0.21 |
| Fractures | 1.57 (0.55–4.48) | 0.39 |
| Emergent non-fracture findings | 0.26 (0.09–0.76) | 0.01 |
| Lung findings | 0.10 (0.01–1.79) | 0.04 |
| Prevertebral/spinal canal findings | 0.28 (0.07–1.16) | 0.07 |
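The odds ratios in Table 1 correspond to the odds of a missed finding with the checklist template relative to the odds without it. The article does not state how the confidence intervals were obtained; as a sketch, the Woolf (logit) interval below, with 0.5 added to every cell when any cell is zero (the Haldane-Anscombe correction, needed for the lung row), reproduces the tabulated values to two decimal places. The function name `odds_ratio_woolf` and the choice of interval method are assumptions, not the authors' stated procedure.

```python
import math
from statistics import NormalDist
from typing import Tuple

Z_95 = NormalDist().inv_cdf(0.975)  # about 1.96


def odds_ratio_woolf(missed_with: int, total_with: int,
                     missed_without: int, total_without: int) -> Tuple[float, float, float]:
    """Odds of a miss with the checklist template relative to without it, with a Woolf 95 % CI.

    If any cell is zero, 0.5 is added to every cell (Haldane-Anscombe correction).
    """
    cells = [missed_with, total_with - missed_with,
             missed_without, total_without - missed_without]
    if 0 in cells:
        cells = [x + 0.5 for x in cells]
    a, b, c, d = cells
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of log(OR)
    return math.exp(log_or), math.exp(log_or - Z_95 * se), math.exp(log_or + Z_95 * se)


rows = {  # (missed with, total with, missed without, total without), from the Results
    "All emergent findings": (13, 109, 25, 142),
    "Fractures": (8, 55, 8, 82),
    "Emergent non-fracture findings": (5, 54, 17, 60),
    "Lung findings": (0, 27, 7, 47),
    "Prevertebral/spinal canal findings": (4, 22, 8, 18),
}
for name, counts in rows.items():
    or_, lower, upper = odds_ratio_woolf(*counts)
    print(f"{name}: {or_:.2f} ({lower:.2f}-{upper:.2f})")
```

The logit interval is a common default for 2 × 2 tables; an exact interval would be wider for the sparse lung and prevertebral rows.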

There were several limitations to this study. First, it was performed with a small group of residents from a single radiology residency program, with only 12–18 residents in the call pool at any given time. Second, the composition of the resident call pool naturally changed with each academic year, with new residents joining the call pool each July. Because our sample of reports without checklist-style templates was generated from July 2009 to March 2011, and our sample of reports with checklist-style templates was generated from December 2010 to October 2011, the composition of residents reading cervical spine CTs changed both within and between the pre- and posttest periods. Consequently, it is difficult to exclude the possibility that differences in rates of missed findings on cervical spine CTs resulted from different residents reading the studies rather than from the new checklist-style reporting template. We attempted to assess for differences in the accuracy of residents generating reports during the pre- and posttest periods by analyzing patient recall rates for head and lumbar spine CTs. However, this method fails to account for multiple variables, including, but not limited to, differences in the rates of positive findings between the two study periods and differences in the number of missed findings on studies of admitted patients, for whom patient recall was unnecessary. Third, it is difficult to exclude the Hawthorne effect in this study [24]. Although this was a retrospective study and residents were not aware of the intent to perform it at the time of CT reporting, residents were still aware that the residency program director was monitoring use of the checklist-style reporting template, which may have increased the thoroughness of resident search patterns during the posttest period.
Finally, this was a study of only a single specific checklist-style template, designed after review of missed findings within our residency program, and the results may not be generalizable to all checklist-style reporting templates.

Conclusion

Our study suggested an improvement in the accuracy of reports generated with a checklist-style reporting template. There was a reduction in resident missed findings after the institution of a checklist-style structured reporting template for cervical spine CTs, particularly for emergent non-fracture findings, an expected result given that resident search patterns naturally include fracture detection. However, this study had several limitations, and further research is warranted to determine the generalizability of our findings.

Acknowledgments

The authors thank Christopher Moosavi, MD and Shain Wallis, MD for their work on the checklist-style structured reporting template. The authors also thank David Lucido, PhD for his assistance with the statistical analysis.

Conflicts of Interest

There are no financial disclosures or conflicts of interest to report.

Contributor Information

Eaton Lin, Phone: (212) 636-3379, Email: elin@chpnet.org

Daniel K. Powell, Phone: (212) 420-2540, Email: dpowell@chpnet.org

Nolan J. Kagetsu, Phone: (212) 636-3379, Email: nkagetsu@chpnet.org

References

1. Gagliardi RA. The evolution of the X-ray report. AJR Am J Roentgenol. 1995;164(2):501–502. doi: 10.2214/ajr.164.2.7839998.

2. Hobby JL, Tom BD, Todd C, et al. Communication of doubt and certainty in radiological reports. Br J Radiol. 2000;73:999–1001. doi: 10.1259/bjr.73.873.11064655.

3. Plumb AA, Grieve FM, Khan SH. Survey of hospital clinicians' preferences regarding the format of radiology reports. Clin Radiol. 2009;64(4):386–394. doi: 10.1016/j.crad.2008.11.009.

4. Sierra AE, Bisesi MA, Rosenbaum TL, et al. Readability of the radiologic report. Invest Radiol. 1992;27:236–239. doi: 10.1097/00004424-199203000-00012.

5. Sobel JL, Pearson ML, Gross K, et al. Information content and clarity of radiologists' reports for chest radiography. Acad Radiol. 1996;3:709–717. doi: 10.1016/S1076-6332(96)80407-7.

6. Groom RC, Morton JR. Outcomes analysis in cardiac surgery. Perfusion. 1997;12:257–261. doi: 10.1177/026765919701200409.

7. Tobler HG, Sethi GK, Grover FL, et al. Variations in processes and structures in cardiac surgery practice. Med Care. 1995;33(10):OS43–OS58. doi: 10.1097/00005650-199510001-00006.

8. Weiss DL, Langlotz CP. Structured reporting: patient care enhancement or productivity nightmare? Radiology. 2008;249(3):739–747. doi: 10.1148/radiol.2493080988.

9. Khorasani R, Bates DW, Teeger S, Rothschild JM, Adams DF, Seltzer SE. Is terminology used effectively to convey diagnostic certainty in radiology reports? Acad Radiol. 2003;10(6):685–688. doi: 10.1016/S1076-6332(03)80089-2.

10. Kong A, Barnett GO, Mosteller F, Youtz C. How medical professionals evaluate expressions of probability. N Engl J Med. 1986;315(12):740–744. doi: 10.1056/NEJM198609183151206.

11. Liu D, Berman GD, Gray RN. The use of structured radiology reporting at a community hospital: a 4-year case study of more than 200,000 reports. Appl Radiol. 2003;32(7):23–26.

12. Naik SS, Hanbidge A, Wilson SR. Radiology reports: examining radiologist and clinician preferences regarding style and content. AJR Am J Roentgenol. 2001;176:591–598. doi: 10.2214/ajr.176.3.1760591.

13. Schwartz LH, Panicek DM, Berk AR, Li Y, Hricak H. Improving communication of diagnostic radiology findings through structured reporting. Radiology. 2011;260(1):174–181. doi: 10.1148/radiol.11101913.

14. Pronovost P, Needham D, Berenholtz S, et al. An intervention to decrease catheter-related bloodstream infections in the ICU. N Engl J Med. 2006;355:2725–2732. doi: 10.1056/NEJMoa061115.

15. Berenholtz SM, Pham JC, Thompson DA, et al. Collaborative cohort study of an intervention to reduce ventilator-associated pneumonia in the intensive care unit. Infect Control Hosp Epidemiol. 2011;32:305–314. doi: 10.1086/658938.

16. Hales B, Terblanche M, Fowler R, Sibbald W. Development of medical checklists for improved quality of patient care. Int J Qual Health Care. 2008;20(1):22–30. doi: 10.1093/intqhc/mzm062.

17. Hart EM, Owen H. Errors and omissions in anesthesia: a pilot study using a pilot's checklist. Anesth Analg. 2005;101:246–250. doi: 10.1213/01.ANE.0000156567.24800.0B.

18. Haynes AB, Weiser TG, Berry WR, et al. A surgical safety checklist to reduce morbidity and mortality in a global population. N Engl J Med. 2009;360:491–499. doi: 10.1056/NEJMsa0810119.

19. Lingard L, Espin S, Rubin B, et al. Getting teams to talk: development and pilot implementation of a checklist to promote interprofessional communication in the OR. Qual Saf Health Care. 2005;14:340–346. doi: 10.1136/qshc.2004.012377.

20. Van Roozendaal BW, Krass I. Development of an evidence-based checklist for the detection of drug related problems in type 2 diabetes. Pharm World Sci. 2009;31(5):580–595. doi: 10.1007/s11096-009-9312-1.

21. Radiological Society of North America (RSNA) radiology reporting initiative. Available at http://www.radreport.org/specialty/nr. Accessed 26 November 2010.

22. Johnson AJ, Chen MY, Swan JS, Applegate KE, Littenberg B. Cohort study of structured reporting compared with conventional dictation. Radiology. 2009;253(1):74–80. doi: 10.1148/radiol.2531090138.

23. Langlotz CP. Structured radiology reporting: are we there yet? Radiology. 2009;253(1):23–25. doi: 10.1148/radiol.2531091088.

24. McCarney R, Warner J, Iliffe S, et al. The Hawthorne effect: a randomized, controlled trial. BMC Med Res Methodol. 2007;7:30. doi: 10.1186/1471-2288-7-30.