INTRODUCTION

When fitting hearing aids, there are three guidelines that should always be followed by the dispenser:

restore audibility, so that the amplified sound is above the user's threshold;

limit the output, so that the amplified signal does not exceed the user's discomfort level; and

do no harm, so that the amplified signal is not unintentionally or undesirably altered by the hearing aid.

The first two of these three guidelines are obvious. The first is to present sounds above the user's threshold of hearing in order to create an acoustic environment with the maximum amount of audible speech cues. The second ensures that discomfort level is not exceeded, so that the user does not wince or remove the hearing aids when loud sounds are present.

The third guideline, do no harm, is less obvious. It means that the hearing aids should alter the signal only in the ways the fitter intends. Among other things, this is a subtle way of saying that well-fitted hearing aids should not distort the desired sound. Distortion is the subject of this issue of Trends in Amplification. The intention of this issue is to explain why distortion occurs in some hearing aids, to explain how distortion is measured, to summarize what can be done to prevent distortion, and to summarize how distortion is perceived by the hearing aid wearer.

Distortion in hearing aids can be broadly defined as the generation of undesired audible components that are present in the output but not in the input. When distortion occurs, hearing aids produce these undesired elements through the interaction of the processed signal with some internal non-linear mechanism. These components may interfere to some degree with the listener's reception of sound. If they are small compared to the overall signal level, they may cause no effective interference at all. If they are large, they can be so disruptive that the desired sound becomes irritating or even incomprehensible.

All audio systems inevitably contain some amount of distortion. The practical questions for the listener are the type of distortion present and the level of distortion that is acceptable or tolerable in hearing aids before it becomes disruptive to speech intelligibility and sound quality. It is known that highly distorted speech in quiet can remain intelligible (Licklider, 1946); however, as dispensers often experience, intelligibility is not the only measure of whether or not a user will accept and wear hearing aids. Gabrielsson and Sjogren (1979b) have shown that overall perceived sound quality is important to users when selecting a hearing aid. Punch (1978) has shown that listeners with mild to moderate sensorineural hearing losses retain the same ability to make distinctions in sound quality judgments as listeners with normal hearing. This implies that good sound quality is as important to listeners with a hearing impairment as it is to listeners with normal hearing.

Three characteristics of distortion in hearing aids modify the sound delivered to the user:

the type of distortion,

the relative amount of distortion at different frequencies, and

the variation of distortion with different input levels.

Since the most important goal for fitting hearing aids is to restore or facilitate communication ability, undistorted sound is important for optimum speech intelligibility and sound quality. However, it is important to also recognize that there are other reasons to provide undistorted amplification of sound. For example, undistorted and pleasant reproduction of music may be an important sensory experience for some listeners.

This issue concentrates on different types of undesired modification to the waveshape of the sound, primarily total harmonic distortion (THD) and intermodulation distortion (IMD). However, in a more general sense, any undesired component added to the sound by hearing aids is a form of distortion. Thus, this issue also discusses sound generated by hearing aids with no signal present at the microphone, an artifact more commonly called internal noise. Internal noise fits within the definition of distortion given earlier, since it is an undesired product generated in the output of hearing aids that is not present at the input. In some cases, distortion and internal noise are so closely linked that they cannot be separated into discrete entities. For example, instability in the frequency and shape of the clock pulses in a Class D output stage (often loosely called clock jitter) results in distortion of the signal. However, this artifact may be audibly revealed in the output sound primarily as noise, rather than as distortion of the waveform.

According to this broad definition of distortion, undesired noises such as “motorboating” and other acoustic feedback oscillations can also be considered to be forms of distortion. However, acoustic feedback and other similar audible artifacts occurring within hearing aids will not be discussed here. These types of distortion have been discussed in detail by Agnew (1996b) in a previous issue of Trends in Amplification.

In this issue distortion is discussed in two general categories:

Undesired modification of the waveform of the incoming sound by some mechanism occurring within the hearing aids. The severity of this effect may or may not vary with the acoustic level of the incoming sound. This type of audible artifact is generally what most dispensers refer to as “distortion”. This will be the subject of Part I of this issue, and will follow the definition used for distortion in this text.

The production of undesired audible components in the output sound, regardless of whether or not there is any acoustic input to the hearing aid. This is generally called “noise”. This will be the subject of Part II of this issue.

The first part of this issue discusses the causes and effects of distortion. First, different types of distortion are described, followed by an explanation of how they are measured. This is followed by a discussion of the sources of distortion that may be present in hearing aids and the various mechanisms by which distortion is created. The final section of Part I discusses the effects of distortion on the hearing aid wearer. Readers who find the technical concepts in the first part of this tutorial difficult may find a good overview from a different perspective in Kuk (1996).

The second part of this issue discusses the causes and effects of internal noise in hearing aids. This part starts with a discussion of sources of internal noise and continues with a description of methods for the measurement of internal noise. This part concludes with a discussion of the perception of internal noise and its effects on the user of hearing aids.

At the end of the text is an intentionally long list of references, which is intended to serve as a reasonably comprehensive resource for the reader seeking further information on distortion and noise in hearing aids.

TYPES OF DISTORTION

There are two fundamental and significant forms of distortion that occur in hearing aids. These are harmonic distortion (HD), usually more broadly called total harmonic distortion (THD), and intermodulation distortion (IMD). Both of these result from non-linearities in the amplifying system, and are sometimes collectively called amplitude distortion (Langford-Smith, 1960). Harmonic distortion occurs when a single frequency is presented to the input of a hearing aid and the output contains the original frequency plus additional undesired frequencies that are harmonically related to the original frequency. Intermodulation distortion occurs when two frequencies are presented simultaneously to a hearing aid and the output contains one or more frequencies that are related to the sum and difference of the two input frequencies.

There are other forms of distortion that may be present in the output of hearing aids. For example, transient intermodulation distortion (TIM) occurs when a large, abrupt change in the level of the input sound creates IMD products in the output. Such distortions will be discussed in more detail later. However, these other distortions are not generally perceived as readily by a listener as THD and IMD, and are therefore considered less important for this discussion. Most of the discussion presented here will thus center on THD and IMD.

A frequent source of confusion is that a hearing aid has no single inherent distortion value. Rather, the level and type of distortion present in the output sound delivered to the user depend on the applied test conditions and on the characteristics of the hearing aid being tested (Burnett, 1967). Thus, the type and level of input signal applied and the associated test conditions must be carefully considered when evaluating reported distortion performance.

One final comment should be made before addressing the details of distortion. This discussion will treat distortion from the point of view that distortion is an undesirable characteristic of amplified sound. While this is generally true for listeners with normal hearing, and for those with a mild to moderate hearing loss, it is possible that some amount of distortion may be useful for some listeners with severe or profound hearing losses.

It has been theorized that distortion may increase the perception of loudness of the signal through the creation of additional harmonic energy and, through these additional harmonics, may provide additional identification cues for speech understanding for some listeners with hearing losses. This viewpoint has been discussed among hearing scientists, but there has not been an unequivocal conclusion.

One such technique adds frequencies to the processed sound at one-half and double the frequencies present in the 1000 Hz to 2000 Hz range (DuPret and Lefevre, 1991). These added components could be considered distortion under the definition used for this discussion, because audible components are being added to the output, even though in this case they are added intentionally. Although this technique has shown promise when evaluated in wearable devices on patients, only limited clinical data are available on its usefulness (Parent et al, 1997).

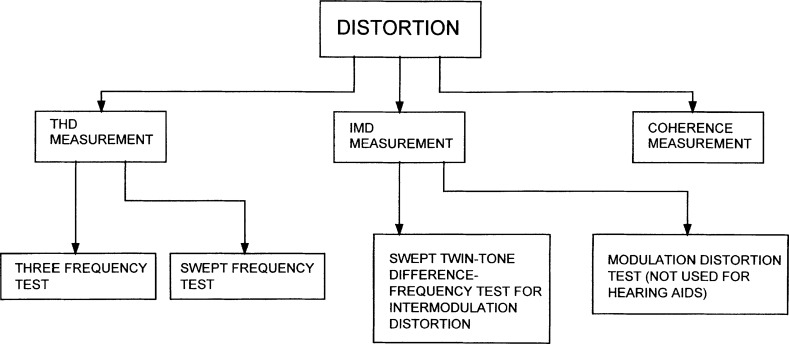

It may be helpful to refer to Figure 1 during the following discussion of distortion and its measurement. Figure 1 presents an overview of the three major types of distortion measurement commonly used for objective measurement of hearing aid distortion, and then shows methods by which each is accomplished.

Figure 1.

An overview of the three major types of distortion measurement commonly used for quantifying hearing aid distortion and the methods by which each is accomplished.

Total Harmonic Distortion

Harmonic distortion is present when undesired frequencies that are harmonics of an input frequency are created in the output. For example, if the input is 1000 Hz, an output with harmonic distortion could contain 2000 Hz, 3000 Hz, 4000 Hz, and other multiples (harmonics) of 1000 Hz, as well as the original signal at 1000 Hz. The even-numbered multiples of the fundamental (2 × 1000, 4 × 1000, 6 × 1000, etc.) are called even-order harmonics. The odd-numbered multiples of the fundamental (3 × 1000, 5 × 1000, 7 × 1000, etc.) are called odd-order harmonics. The fundamental, the original frequency, is sometimes called the first harmonic.

Figure 2 is a graph of output versus frequency for a hearing aid with an input signal of 1000 Hz, showing the harmonics present in the output. The graph shows that 2nd and 3rd harmonics are present. Numerical values for these harmonics are listed in Table 1. The harmonic values in Table 1 may be converted to a percentage of distortion at each harmonic frequency, as shown in the last column of the table. This conversion is made by measuring or estimating how many decibels the harmonic of interest lies below the fundamental, and then looking up this difference in Table 2. In Table 1 the level of the 1000 Hz fundamental was 89.5 dB SPL. The second harmonic, 2000 Hz, was 50.5 dB SPL. Thus, the second harmonic is 39 dB down from (i.e., lower than) the fundamental. In Table 2, a difference of 39 dB corresponds to a distortion level of 1.1%. Similarly, the 3rd harmonic is 47 dB down from the fundamental, which corresponds to a distortion level of 0.45%.

Figure 2.

Harmonic distortion components present in a hearing aid adjusted below saturation with an input signal of 1000 Hz. This graph clearly reveals 2nd and 3rd harmonics present at 2000 Hz and 3000 Hz, respectively. Table 1 lists the corresponding numerical values for the three output frequencies.

Table 1.

Levels of distortion present in Figure 2.

| Frequency (Hz) | Output level (dB SPL) | Level down from fundamental (dB) | Level of distortion (%) |
|---|---|---|---|
| 1000 | 89.5 | n/a | n/a |
| 2000 | 50.5 | 39.0 | 1.10 |
| 3000 | 42.5 | 47.0 | 0.45 |
| Noise floor at 3500 Hz | 29.7 | 59.8 | Equivalent to 0.10 |

Table 2.

Conversion from decibels below the signal level of the fundamental to percentage of distortion.

| Decibels | Percent | Decibels | Percent | Decibels | Percent |
|---|---|---|---|---|---|
| 1 | 89.1 | 28 | 4.0 | 55 | 0.18 |
| 2 | 79.4 | 29 | 3.5 | 56 | 0.16 |
| 3 | 70.8 | 30 | 3.2 | 57 | 0.14 |
| 4 | 63.1 | 31 | 2.8 | 58 | 0.13 |
| 5 | 56.2 | 32 | 2.5 | 59 | 0.11 |
| 6 | 50.1 | 33 | 2.2 | 60 | 0.10 |
| 7 | 44.7 | 34 | 2.0 | 61 | 0.09 |
| 8 | 39.8 | 35 | 1.8 | 62 | 0.08 |
| 9 | 35.5 | 36 | 1.6 | 63 | 0.07 |
| 10 | 31.6 | 37 | 1.4 | 64 | 0.06 |
| 11 | 28.2 | 38 | 1.3 | 65 | 0.06 |
| 12 | 25.1 | 39 | 1.1 | 66 | 0.05 |
| 13 | 22.4 | 40 | 1.0 | 67 | 0.04 |
| 14 | 19.9 | 41 | 0.89 | 68 | 0.04 |
| 15 | 17.8 | 42 | 0.79 | 69 | 0.03 |
| 16 | 15.8 | 43 | 0.71 | 70 | 0.03 |
| 17 | 14.1 | 44 | 0.63 | 71 | 0.03 |
| 18 | 12.6 | 45 | 0.56 | 72 | 0.02 |
| 19 | 11.2 | 46 | 0.50 | 73 | 0.02 |
| 20 | 10.0 | 47 | 0.45 | 74 | 0.02 |
| 21 | 8.9 | 48 | 0.40 | 75 | 0.02 |
| 22 | 7.9 | 49 | 0.35 | 76 | 0.02 |
| 23 | 7.1 | 50 | 0.32 | 77 | 0.01 |
| 24 | 6.3 | 51 | 0.28 | 78 | 0.01 |
| 25 | 5.6 | 52 | 0.25 | 79 | 0.01 |
| 26 | 5.0 | 53 | 0.22 | 80 | 0.01 |
| 27 | 4.5 | 54 | 0.20 | | |

When considering these types of graphs, two useful numbers that are easy to remember are that harmonics that are 20 dB down from the fundamental correspond to 10% distortion, and 40 dB down correspond to 1%. As will be discussed in the later section on the perception of distortion, distortion below 1% is probably negligible for hearing aid purposes; values above 10% are probably audible and may be objectionable. Values between 1% and 10% are considered on a case-by-case basis.
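Table 2 follows directly from the decibel definition for sound pressure: a component d dB below the fundamental has 10^(−d/20) times its amplitude. The short Python sketch below (the function names are illustrative, not taken from any standard) regenerates the table entries and the two rules of thumb; any last-digit differences from Table 2 reflect rounding.

```python
import math

def db_down_to_percent(db_down):
    """Percent distortion for a component db_down dB below the fundamental.

    Sound pressure levels use 20*log10, so the amplitude ratio is 10**(-d/20).
    """
    return 100 * 10 ** (-db_down / 20)

def percent_to_db_down(percent):
    """Inverse conversion: percent distortion to dB below the fundamental."""
    return -20 * math.log10(percent / 100)

# Rules of thumb (20 dB -> 10 %, 40 dB -> 1 %) and the Table 1 harmonics.
for d in (20, 40, 39, 47):
    print(f"{d} dB down -> {db_down_to_percent(d):.2f} %")
```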

If the fundamental frequency is filtered from the output and all the other harmonic frequencies are measured and summed, the resulting combination is a measure of the amount of all the harmonic distortion present. Since all the harmonics are summed together into one measurement, the resulting figure is called the total harmonic distortion (THD) that is present. THD is usually expressed as an (undesired) percentage of the desired signal level.

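The basic concept for the formula for calculating THD is:

$$\mathrm{THD} = \frac{\text{combined level of the harmonics}}{\text{level of the fundamental}} \times 100\%$$

thus,

$$\mathrm{THD}\,(\%) = \frac{\sqrt{f_2^2 + f_3^2 + f_4^2 + \cdots}}{f_1} \times 100$$

where f1 is the level of the fundamental and f2, f3, f4, and so forth, are the levels of the harmonics present, all expressed as sound pressures rather than in decibels. Note that this calculation will also include any circuit noise that may be present. If the levels of the harmonics are comparable to the inherent noise, the internal noise will be a significant factor in the calculations.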
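As a worked example, the Python sketch below applies THD = 100·√(f2² + f3² + …)/f1 to the Table 1 levels, assuming a reference pressure of 20 µPa for dB SPL and ignoring the noise floor. Each level is converted from dB SPL to pressure before the harmonics are combined, because decibel values cannot be summed directly.

```python
import math

P_REF = 20e-6  # reference pressure for dB SPL, in pascals

def spl_to_pressure(db_spl):
    """Convert a level in dB SPL to an RMS sound pressure in pascals."""
    return P_REF * 10 ** (db_spl / 20)

def thd_percent(fundamental_db, harmonic_dbs):
    """THD (%) = 100 * sqrt(f2**2 + f3**2 + ...) / f1, computed on pressures."""
    f1 = spl_to_pressure(fundamental_db)
    harmonic_power = sum(spl_to_pressure(level) ** 2 for level in harmonic_dbs)
    return 100 * math.sqrt(harmonic_power) / f1

# Table 1: 1000 Hz fundamental at 89.5 dB SPL; harmonics at 50.5 and 42.5 dB SPL.
print(f"THD = {thd_percent(89.5, [50.5, 42.5]):.2f} %")
```

For Table 1 the result is about 1.2%, only slightly above the 1.1% contributed by the second harmonic alone: the 3rd harmonic adds in power, and at 8 dB below the 2nd harmonic it raises the total only modestly.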

The standard method of measuring THD in hearing aids in the United States is according to ANSI standard S3.22 (1996). This is a measure of the THD present at 500 Hz with an input level of 70 dB SPL, at 800 Hz with 70 dB SPL, and at 1600 Hz with 65 dB SPL, or at three special-purpose frequencies with the same levels. In the event that the frequency response curve rises 12 dB or more between any test frequency and its second harmonic, the test may be omitted at that particular frequency. For example, if the frequency response curve rises 15 dB between 500 Hz and 1000 Hz, the distortion test at 500 Hz may be omitted.
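The omission rule can be expressed as a small decision function. In this Python sketch, `thd_test_plan` and the response values are hypothetical; the sketch covers only the three standard frequencies and input levels given above, and ignores special-purpose frequencies and any other conditions in the standard.

```python
ANSI_THD_POINTS = [(500, 70), (800, 70), (1600, 65)]  # (test frequency Hz, input dB SPL)

def thd_test_plan(response_db):
    """Return the (frequency, input level) pairs at which THD should be measured.

    response_db maps frequency (Hz) to the hearing aid's output response in dB.
    A point is skipped when the response rises 12 dB or more from the test
    frequency to its second harmonic.
    """
    plan = []
    for freq, level in ANSI_THD_POINTS:
        rise = response_db[2 * freq] - response_db[freq]
        if rise < 12:
            plan.append((freq, level))
    return plan

# Hypothetical response with a 15 dB rise from 500 Hz to 1000 Hz, as in the example above.
response = {500: 100.0, 800: 108.0, 1000: 115.0, 1600: 117.0, 3200: 110.0}
print(thd_test_plan(response))  # [(800, 70), (1600, 65)]
```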

ANSI standard S3.22 (1996) is intended to be a quality control standard for manufacturers to test their product, and this measurement is easy to perform with automated equipment. An example of results from this type of testing is shown in Figure 3, which shows a hearing aid tested according to this standard. The results of distortion testing at the three required frequencies are in the text section at the top.

Figure 3.

Example of a printout from the measurement of a hearing aid according to ANSI standard S3.22 (1996).

In countries outside the United States, the IEC (1983a) standard method for characterizing the electroacoustic performance of hearing aids specifies sweeping the test frequency from 200 Hz to 5000 Hz at an input level of 70 dB SPL and plotting the THD content of the output at each frequency. Examples of this type of graph will be shown and discussed later.

One limitation of the ANSI standard S3.22 (1996) method is that it requires distortion tests at only three frequencies. This is not necessarily a flaw, however, because ANSI (1996) is intended to be a quality control standard for manufacturers to test their product, not an indication of how the hearing aid will perform on the user.

A more complete method for measuring THD sweeps the input test frequency across the whole range, in order to find possible distortion spikes that could occur between the three designated ANSI measuring frequencies. An example of a swept-frequency THD graph is shown in Figure 4. This graph shows the level of THD generated by the hearing aid between 200 Hz and 6200 Hz with an input level of 70 dB SPL. Immediately below the graph are data gathered from the same hearing aid according to ANSI (1996). Even though the ANSI data showed distortion levels of less than 3% at the three ANSI frequencies measured, the hearing aid produced a distortion peak of 12.8% at 2500 Hz. This distortion peak could degrade the performance of the hearing aid for the user. This frequency is not routinely measured by the ANSI test, unless the hearing aid is a special-purpose hearing aid that requires measurement at this frequency. See ANSI (1996) for further details on the definition and measurement of special-purpose hearing aids.
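To illustrate why a sweep can reveal what three spot checks miss, the Python sketch below measures the THD of a simulated device at each step of a sweep. Everything about the device is an assumption: `toy_aid` is a hypothetical soft clipper (a `tanh` curve) whose drive rises near 2500 Hz, standing in for a resonance in a real hearing aid. Harmonic amplitudes are read with a single-bin discrete Fourier transform, which is exact here because every test frequency completes a whole number of cycles in the analysis block.

```python
import math

FS = 48_000   # sample rate in Hz (assumed)
N = 2_400     # 0.05 s block: any multiple of FS/N = 20 Hz completes whole cycles

def tone(freq, amp=1.0):
    """Sampled sine wave at freq Hz."""
    return [amp * math.sin(2 * math.pi * freq * n / FS) for n in range(N)]

def amplitude_at(x, freq):
    """Single-bin DFT: peak amplitude of the component at freq (a multiple of FS/N)."""
    w = 2 * math.pi * freq / FS
    re = sum(v * math.cos(w * n) for n, v in enumerate(x))
    im = sum(v * math.sin(w * n) for n, v in enumerate(x))
    return 2 * math.hypot(re, im) / len(x)

def measure_thd(system, freq, max_harmonic=5):
    """THD (%) of the system output for a pure tone, using harmonics below FS/2."""
    y = system(tone(freq), freq)
    f1 = amplitude_at(y, freq)
    harmonics = [amplitude_at(y, k * freq)
                 for k in range(2, max_harmonic + 1) if k * freq < FS / 2]
    return 100 * math.sqrt(sum(a * a for a in harmonics)) / f1

def toy_aid(x, freq):
    """HYPOTHETICAL device: a response peak near 2500 Hz drives a soft clipper harder.

    For a single sine, a linear filter ahead of the clipper only rescales (and
    phase-shifts) the tone, so the response is modeled as a scalar drive per frequency.
    """
    drive = 0.3 + 1.5 * math.exp(-((freq - 2500) / 400) ** 2)
    return [math.tanh(drive * v) for v in x]

# Three ANSI-style spot checks versus a 200-6200 Hz sweep in 100 Hz steps (step assumed).
spot = {f: round(measure_thd(toy_aid, f), 2) for f in (500, 800, 1600)}
sweep = {f: measure_thd(toy_aid, f) for f in range(200, 6201, 100)}
peak = max(sweep, key=sweep.get)
print("spot checks (%):", spot)
print(f"swept peak: {sweep[peak]:.1f} % at {peak} Hz")
```

With this toy device the spot checks at 500, 800, and 1600 Hz stay below 1%, while the sweep finds a much larger peak near 2500 Hz, the same qualitative pattern as Figure 4. Harmonics above half the sample rate are ignored, and aliasing of very high harmonics is not modeled.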

Figure 4.

Data from a hearing aid showing the measured three-frequency ANSI data, compared to a graph showing the levels of THD that occurred as the distortion was measured continuously between 200 Hz and 6200 Hz.

In general, THD measurements are meaningful only up to about half the upper frequency limit of the hearing aid, which in practice means up to about 3000 Hz. The reason is the limited bandwidth of most hearing aids. Hearing aids typically have severely limited high frequency response above about 6000 Hz, due to the falling high frequency response inherent in the receiver. The second harmonics of test frequencies at and above 3000 Hz therefore fall at 6000 Hz and above, where they are so severely attenuated that they are buried in either the measurement system noise or the internal hearing aid noise. The noise floor of a typical measurement is shown in Figure 2: the wavy line between about 30 dB and 40 dB SPL at frequencies other than 1000, 2000, and 3000 Hz is due to internal noise in the hearing aid. At 3500 Hz the level of the noise is 29.7 dB SPL, only 12.8 dB below the harmonic being measured at 3000 Hz. This illustrates how any high frequency harmonics present eventually become buried in the internal noise.

A further complication in THD measurement is that the 2 cm³ coupler and its associated microphone, used together for hearing aid measurements, also severely attenuate the signal at high frequencies. This makes reliable measurement of high frequency harmonics very difficult.

Intermodulation Distortion

Intermodulation distortion is created when two frequencies (f1 and f2) are present simultaneously at the input of the hearing aid and the output contains components related to the sum (f2 + f1) and difference (f2 − f1) of these two frequencies and their multiples. Thus IMD can create many frequencies across the spectrum. For example, if the input frequencies are 1000 Hz and 1200 Hz, the output might contain added distortion frequencies at 200 Hz (the difference frequency), 400 Hz (twice the difference frequency), and other frequencies spaced every 200 Hz across the spectrum. In addition, the output may contain 2200 Hz (the sum of the frequencies), 4400 Hz (twice the sum of the frequencies), and many other frequencies related to the sum and difference of the input frequencies. Direct harmonics of the input frequencies may also be present at 2000 Hz and 2400 Hz. In practice, the distortion products of most interest for hearing aid measurement are the difference frequency (f2 − f1) and the third-order product (2f1 − f2). Distortion products higher than third order typically decrease rapidly in level and do not contribute significantly to the final distortion value (Brockbank and Wass, 1945).
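The product frequencies in this example can be enumerated mechanically as |m·f1 + n·f2| for nonzero integers m and n, where the order of a product is |m| + |n|. The Python sketch below (a hypothetical helper, not part of any standard) lists the mixed products up to a chosen order.

```python
def imd_products(f1, f2, max_order=3):
    """Map each intermodulation frequency |m*f1 + n*f2| to its lowest order |m| + |n|.

    Only mixed terms (m and n both nonzero) are kept; pure harmonics such as
    2*f1 belong to harmonic distortion rather than IMD.
    """
    products = {}
    for m in range(-max_order, max_order + 1):
        for n in range(-max_order, max_order + 1):
            order = abs(m) + abs(n)
            if m == 0 or n == 0 or order > max_order:
                continue
            freq = abs(m * f1 + n * f2)
            if freq > 0:
                products[freq] = min(order, products.get(freq, order))
    return dict(sorted(products.items()))

print(imd_products(1000, 1200))
```

For 1000 Hz and 1200 Hz it returns {200: 2, 800: 3, 1400: 3, 2200: 2, 3200: 3, 3400: 3}. The 400 Hz and 4400 Hz components mentioned above are fourth-order products, 2(f2 − f1) and 2(f1 + f2), and appear with max_order=4.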

Because many of the difference frequencies produced by typical input sounds fall within the passband of hearing aids, audible IMD products tend to appear in the middle and higher frequencies, although they also commonly appear in the low frequencies. A measurement of IMD is probably a more realistic measure of hearing aid distortion than THD, since speech and music present many frequencies to the hearing aid simultaneously rather than a single frequency. Cabot (1988) has stated that this type of testing may come closest to measuring what the ear actually hears.

Figure 5 shows how an intense high frequency sibilant sound can overload a linear hearing aid and produce many IMD products at lower frequencies. Figure 6 is the acoustic spectrum of a test signal that resembles an extended "…sssss…" sound at a level equivalent to that in a spoken word. This type of sound often reaches the microphone at a level high enough to overdrive and saturate a hearing aid. Its energy is concentrated primarily in a band between 3500 Hz and 8500 Hz.

Figure 5.

Output of a linear hearing aid tested with the signal shown in Figure 6, with the test signal subtracted in order to reveal residual products in the output. The output shown in this graph is the resulting IMD with energy primarily in the 500 Hz to 2000 Hz region.

Figure 6.

Test signal consisting of a band of noise with energy primarily between 3500 Hz and 8500 Hz, used to simulate an extended sibilant speech sound.

Figure 5 shows the resulting output from a particular hearing aid, after the test signal has been electronically subtracted. This graph shows that a large amount of IMD products not present in the input signal have been created between about 500 Hz and 2000 Hz. The level of the test signal at the input of the hearing aid was 68 dB SPL, showing that even relatively low levels of high frequency energy can produce significant IMD in some hearing aids.

This knowledge can be used in a dispenser's office as a rough test for the presence of IMD. If a suspect hearing aid is held fairly close to the mouth and the word “tesssssssssst” is spoken slowly into the microphone, the sound level on the sibilant portion will probably be on the order of 75 to 80 dB SPL, which is enough to saturate many hearing aids. If a raspy, harsh or buzzing quality is simultaneously heard in the output of the hearing aid, then the hearing aid may produce the same type of sound quality under conditions of intense sibilant input sounds. A loud talker's own voice can easily reach these levels at the hearing aid microphone.

There are two primary methods for measuring IMD in audio systems. Both methods apply two frequencies to the input of the hearing aid and measure the resulting distortion products at the output. The first of these methods is generally not suitable for hearing aid measurement; however, it is important to understand why, so it is discussed briefly here for completeness.

The first standardized method of testing for IMD in audio amplifiers is called the SMPTE (Society of Motion Picture and Television Engineers) test. This is a test for frequency modulation (FM) distortion, which is sometimes incorrectly called Doppler distortion. This type of distortion occurs when one sound at the input modulates another. For example, consider listening to choral music accompanied by a sustained low note on an organ. If the sound reproduction quality is poor, the voices may take on a wavering quality because the singing has been modulated by the low organ note. The test is applied in a similar way in practice: a low frequency of 60 Hz is applied to the amplifier under test along with a high test frequency of 7000 Hz (Metzler, 1993). The low frequency is applied at an amplitude four times (12 dB) greater than that of the high frequency. The resulting distortion is measured and recorded as a single number.

The SMPTE test for IMD is not considered suitable for hearing aid testing (Burnett, 1967), because the 60 Hz low frequency and the 7000 Hz high frequency are both outside the passband (amplifying frequency range) of most hearing aids. Also, if the test is performed by sweeping the high frequency, the relationship of the amplitudes of the low and high frequencies would vary drastically according to the frequency response of the hearing aid, thus making the resulting measurement difficult to interpret.

The other method for testing for IMD that has been standardized in the audio industry is useful for hearing aid measurement. The test is variously known as the CCIF (International Telephonic Consultative Committee) test, the twin-tone test, the CCITT (International Telephone and Telegraph Consultative Committee) test, or the IHF (Institute of High Fidelity) intermodulation distortion test. Common frequencies used for testing audio equipment are fixed at 13 kHz and 14 kHz, or at 19 kHz and 20 kHz (Metzler, 1993), though frequencies from 1000 Hz to 9000 Hz with difference frequencies from 50 Hz to 500 Hz have also been used (Langford-Smith, 1960).

As when measuring THD, a more complete method for measuring IMD in practice is to sweep the two input frequencies across the whole frequency range while maintaining a constant difference frequency between them. The IEC (1983a) standard for characterizing hearing aids uses a difference frequency of 125 Hz and sweeps the test frequencies over a range from 350 Hz to 5000 Hz, with an input sound level of 64 dB SPL. The small frequency difference ensures that the two tones remain at approximately the same level within the hearing aid as they are swept across the frequency range. If the relative amplitudes of the two test frequencies vary with respect to each other because of normal variations in the frequency response of the hearing aid, errors in the measurement result may occur. Specific techniques for the measurement of IMD in hearing aids in an acoustics laboratory are described in Thomsen and Moller (1975) and White (1977).
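A twin-tone sweep of this kind can be simulated in a few lines. In the Python sketch below, the device `toy_aid` is a hypothetical memoryless nonlinearity with small square-law and cubic terms, the tone amplitude is an arbitrary digital value rather than 64 dB SPL, the 250 Hz sweep step is an assumption, and each product is expressed relative to the output at f1, which is one reasonable reference choice rather than necessarily the one the IEC standard prescribes. The 125 Hz spacing and the 350 Hz to 5000 Hz range follow the text.

```python
import math

FS = 48_000   # sample rate in Hz (assumed)
N = 1_920     # 40 ms block -> 25 Hz bins, so 125 Hz spacing lands on exact bins

def amplitude_at(x, freq):
    """Single-bin DFT: peak amplitude of the component at freq (a multiple of FS/N)."""
    w = 2 * math.pi * freq / FS
    re = sum(v * math.cos(w * n) for n, v in enumerate(x))
    im = sum(v * math.sin(w * n) for n, v in enumerate(x))
    return 2 * math.hypot(re, im) / len(x)

def twin_tone_imd(system, f1, spacing=125, amp=0.5):
    """Apply equal-amplitude tones f1 and f2 = f1 + spacing; report two IMD products.

    Returns the difference-frequency (f2 - f1) and third-order (2*f1 - f2)
    products as percentages of the output at f1.
    """
    f2 = f1 + spacing
    x = [amp * (math.sin(2 * math.pi * f1 * n / FS) + math.sin(2 * math.pi * f2 * n / FS))
         for n in range(N)]
    y = system(x)
    ref = amplitude_at(y, f1)
    return {
        "f2-f1": 100 * amplitude_at(y, f2 - f1) / ref,
        "2f1-f2": 100 * amplitude_at(y, 2 * f1 - f2) / ref,
    }

def toy_aid(x, a2=0.1, a3=0.2):
    """HYPOTHETICAL memoryless device: y = x + a2*x**2 + a3*x**3."""
    return [v + a2 * v * v + a3 * v ** 3 for v in x]

# Sweep the lower tone so that both tones stay within 350-5000 Hz.
for f1 in range(350, 4876, 250):
    result = twin_tone_imd(toy_aid, f1)
    print(f1, {k: round(v, 2) for k, v in result.items()})
```

Because this toy device has no frequency response, the percentages stay constant across the sweep; in a real hearing aid the response shape would make them vary with frequency. In this model the square-law term produces the f2 − f1 product and the cubic term produces the 2f1 − f2 product.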

Useful as the measurement and interpretation of IMD would appear to be, routine measurement and reporting of IMD by manufacturers in user brochures and specification sheets is not currently widespread. One obstacle is that equipment for routine IMD measurement is not readily available to the clinician. Also, this measurement is not required by ANSI standard S3.22 (1996) or by IEC (1983b), which are quality control standards intended for testing hearing aids during manufacturing.

Other Types of Distortion

There are several other forms of distortion that may be present in hearing aids, but which are generally of lesser practical importance than THD and IMD. Some of these distortions are discussed in more detail in Agnew (1988).

Frequency distortion is the unequal amplification of different parts of the spectrum. In hearing aids, this type of “distortion” is introduced deliberately as a method of compensating for hearing loss that varies with frequency. This is sometimes also called spectral distortion.

Phase distortion is the alteration of timing relationships between input and output, and between different frequencies that exist simultaneously in a particular sound. It should be distinguished from a phase shift that is proportional to frequency, which does not cause phase distortion (Langford-Smith, 1960). Phase distortion occurs in almost all hearing aids due to the use of capacitors and inductors for amplifying and tailoring the frequency response in the circuitry. For example, band-split filters, commonly used in hearing aids with signal processing in two frequency bands, produce large alterations in phase at the cross-over frequency. Though phase relationships between the two ears are important for localization of sound (Batteau, 1967; Rodgers, 1981; Blauert, 1983), the significance to a listener of changes in relative phase within a complex sound at a single ear is uncertain. The ear may be unable to detect phase shifts in continuous tones (Scroggie, 1958), though phase is important to undistorted reproduction of transient sounds (Langford-Smith, 1960; Moller, 1978a; 1978b). Changes in phase between harmonically related components of a complex sound can be perceived, and can change the perception of timbre and pitch (Moore, 1982). Phase distortion is probably not significant at frequencies of interest for hearing aids (Douglas-Young, 1981; Moore, 1982). Killion (1979) has indicated that phase changes of less than 90° per octave are generally inaudible.
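The distinction between a phase shift proportional to frequency and phase distortion can be checked numerically. A phase lag of φ degrees at frequency f corresponds to a time shift of φ/(360·f) seconds. In the Python sketch below (both phase curves are hypothetical), a lag proportional to frequency yields the same delay at every frequency, so the waveform is merely delayed, while a constant 90° lag delays each component by a different amount of time and so alters the timing relationships between components.

```python
def phase_delay_ms(phase_lag_deg, freq_hz):
    """Time shift in milliseconds implied by a phase lag (degrees) at freq_hz."""
    return 1000 * (phase_lag_deg / 360) / freq_hz

freqs = (250, 500, 1000, 2000, 4000)
linear = {f: 0.36 * f for f in freqs}      # hypothetical lag proportional to frequency
constant = {f: 90.0 for f in freqs}        # hypothetical constant 90-degree lag

for name, curve in (("proportional", linear), ("constant 90 deg", constant)):
    delays = [round(phase_delay_ms(lag, f), 3) for f, lag in curve.items()]
    print(name, delays)
```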

Transient distortion occurs when a hearing aid or other audio system cannot respond rapidly enough to sounds that change very rapidly or have short duration, such as drums and cymbal clashes. This type of distortion is related to an inaccurate response to phase changes. A typical symptom of poor transient response is an oscillation that continues for a brief period after the test signal has ceased. This is called ringing; it introduces a blurred quality into the sound and degrades the clarity and sharpness of transient sounds.

Crossover distortion occurs primarily in Class B (push-pull) amplifiers. It results from a discontinuity in amplification around the zero crossings of the wave, when the amplifier switches from one side of the Class B output to the other side. For this reason, what are loosely called Class B amplifiers in hearing aids are, in reality, Class AB amplifiers. This type of amplifier is not a true Class B amplifier, but contains some forward bias as in a Class A amplifier—hence the addition of the “A” to create Class AB. This creates a smooth transition between the two sides of the amplifier and reduces crossover distortion.
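The effect of a discontinuity around the zero crossings can be illustrated with a simple model. The Python sketch below treats crossover distortion as a hypothetical dead zone of width d around zero (no output until the input exceeds d) and measures THD by removing the fundamental and measuring what remains. Because the dead zone has a fixed width, it removes a larger fraction of a small signal, so this model predicts relatively more distortion at low signal levels. The sample rate, test frequency, and d are assumptions.

```python
import math

FS = 48_000   # sample rate in Hz (assumed)
N = 4_800     # 0.1 s block: 100 whole cycles of the test tone
F0 = 1000     # test frequency in Hz (assumed)

def dead_zone(v, d):
    """HYPOTHETICAL crossover model: no output while |v| < d, then unity slope."""
    return math.copysign(max(abs(v) - d, 0.0), v)

def thd_residual(amplitude, d):
    """THD (%): power left after removing the fundamental, relative to the fundamental."""
    y = [dead_zone(amplitude * math.sin(2 * math.pi * F0 * n / FS), d) for n in range(N)]
    w = 2 * math.pi * F0 / FS
    re = sum(v * math.cos(w * n) for n, v in enumerate(y))
    im = sum(v * math.sin(w * n) for n, v in enumerate(y))
    fund_power = 2 * (re * re + im * im) / N ** 2   # (fundamental amplitude)**2 / 2
    total_power = sum(v * v for v in y) / N
    return 100 * math.sqrt(max(total_power - fund_power, 0.0) / fund_power)

for amp in (1.0, 0.3, 0.1):
    print(amp, round(thd_residual(amp, d=0.02), 2))
```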

Frequency Modulation (FM) distortion occurs when a low frequency modulates a higher frequency or frequencies. One method for testing for FM distortion was described earlier as the SMPTE method.

The preceding distortions are forms of distortion that are static with a constant input signal. There are also dynamic forms of distortion that change the characteristics of the processed signal as the input varies. For example, hearing aids may distort the amplitude relationship between sound levels within speech. Thus the relationship of the relative sound levels between a loud sound (perhaps a vowel) followed closely by a soft sound (perhaps a consonant) is not maintained and speech identification cues may be altered. This phenomenon is common in compression hearing aids, which deliberately distort this relationship in order to fit sounds into the residual dynamic range of a hearing aid user.
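The effect on level relationships can be seen from a static input/output rule. The Python sketch below assumes a hypothetical compressor with a 50 dB SPL threshold, a 3:1 ratio, and 20 dB of gain, and ignores attack and release times. With both sounds above threshold, a 15 dB difference between a vowel and a following consonant shrinks to 5 dB at the output.

```python
def static_compressor(level_db, threshold_db=50.0, ratio=3.0, gain_db=20.0):
    """HYPOTHETICAL static input/output rule: linear gain below threshold,
    slope 1/ratio above it. Attack and release times are ignored."""
    if level_db <= threshold_db:
        return level_db + gain_db
    return threshold_db + (level_db - threshold_db) / ratio + gain_db

vowel_in, consonant_in = 70.0, 55.0            # hypothetical input levels, dB SPL
vowel_out = static_compressor(vowel_in)
consonant_out = static_compressor(consonant_in)
print(f"input difference:  {vowel_in - consonant_in:.1f} dB")
print(f"output difference: {vowel_out - consonant_out:.1f} dB")
```

In a real compression hearing aid, attack and release times also determine how much a soft sound that closely follows a loud one is attenuated, so the dynamic behavior can differ from this static picture.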

Little work has been reported in the literature quantifying the effects of this type of distortion on intelligibility and sound quality. Kates (1992) has reported indications that certain compression settings can adversely affect the signal-to-distortion ratio (SDR).

Because of space limitations, only a broad picture of distortion measurement can be presented here. Many specific improvements in measurement techniques have been proposed; for more details, the interested reader is referred to Corliss et al (1968), Leinonen et al (1977), Cordell (1983), Skritek (1983; 1987), Thiele (1983), Small (1986), Levitt et al (1987), Williamson et al (1987), Schneider and Jamieson (1995), and Anderson et al (1996).

COHERENCE

Another technique for measuring distortion in hearing aids uses coherence. Coherence shows the degree to which the output of a hearing aid is correlated with the input (ANSI, 1992). For a random noise test signal, coherence is degraded by non-linearity and by system noise. Coherence is not degraded by steady-state magnitude (i.e., gain) changes, because a distortionless change in gain is a linear operation. Thus, in its most fundamental sense, coherence is a measure of how well the output signal of a system, such as a hearing aid, is linearly related to the input signal.

Coherence is reported as a dimensionless quantity between 0 and 1. If the measured coherence is 0, then the output signal is completely unrelated to the input signal. If the coherence is 1, then the output is linearly related to the input with no corrupting influences. If the coherence is between 0 and 1, then there is some amount of distortion in the signal.
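Coherence can be estimated by averaging cross-spectra and auto-spectra over windowed segments of a noise test signal (the Welch approach). The sketch below is a minimal NumPy version, not the ANSI procedure; `scipy.signal.coherence` provides a comparable library estimator. It compares a pure gain change, hard clipping and added noise, to show that clipping and noise both pull coherence below 1 while gain alone does not.

```python
import numpy as np

def coherence(x, y, nperseg=256):
    """Magnitude-squared coherence from Hann-windowed, 50%-overlapped segments."""
    win = np.hanning(nperseg)
    step = nperseg // 2
    bins = nperseg // 2 + 1
    sxx = np.zeros(bins)
    syy = np.zeros(bins)
    sxy = np.zeros(bins, dtype=complex)
    for start in range(0, len(x) - nperseg + 1, step):
        X = np.fft.rfft(win * x[start:start + nperseg])
        Y = np.fft.rfft(win * y[start:start + nperseg])
        sxx += np.abs(X) ** 2          # input auto-spectrum
        syy += np.abs(Y) ** 2          # output auto-spectrum
        sxy += np.conj(X) * Y          # cross-spectrum
    return np.abs(sxy) ** 2 / (sxx * syy)

rng = np.random.default_rng(0)
x = rng.standard_normal(2 ** 15)                     # random-noise test signal
cases = {
    "gain only": 10.0 * x,                           # linear change: coherence stays at 1
    "clipped": np.clip(10.0 * x, -5.0, 5.0),         # saturation-style peak clipping
    "added noise": x + rng.standard_normal(x.size),  # system noise at 0 dB SNR
}
for name, y in cases.items():
    print(f"{name:12s} mean coherence = {coherence(x, y).mean():.3f}")
```

For uncorrelated additive noise of equal power, coherence settles near 0.5, the same value a comparable amount of distortion would produce; the measurement alone cannot tell the two apart.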

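The basic formula for calculating the percentage of distortion from a coherence measurement is (Preves, 1994):

$$\mathrm{SDR} = \frac{C}{1 - C}, \qquad \text{distortion (\%)} = \frac{100}{\sqrt{\mathrm{SDR}}} = 100\sqrt{\frac{1 - C}{C}}$$

where: SDR = signal-to-distortion ratio (a power ratio) and C = coherence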

The percentages of distortion for selected coherence values from 0.5 to 1, calculated by this formula, are listed in Table 3. Note that 100% distortion occurs for a coherence value of 0.5. In other words, at a coherence of 0.5, the distortion and the signal contribute equally to the output. As the coherence value decreases below 0.5 and approaches 0, the output signal bears less and less resemblance to the input signal. Finally, as the coherence value reaches 0, the input signal has been totally degraded by the time it appears at the output. Further tutorial information and specific details of hearing aid test methodology are described in ANSI (1992), Kates (1992), and IEC (1997).

Table 3.

Percentage of distortion for selected coherence values.

| Coherence value | Percentage of distortion |
|---|---|
| 1.00 | 0 |
| 0.99 | 10 |
| 0.97 | 17 |
| 0.95 | 23 |
| 0.90 | 33 |
| 0.85 | 42 |
| 0.80 | 50 |
| 0.70 | 65 |
| 0.60 | 82 |
| 0.50 | 100 |
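Assuming the relation SDR = C/(1 − C), with percent distortion = 100/√SDR, the entries in Table 3 can be regenerated. The computed values agree with the table to within one percentage point; the small differences appear to come from rounding in the published figures.

```python
import math

def sdr_from_coherence(c):
    """Signal-to-distortion power ratio implied by a coherence value c (0 < c < 1)."""
    return c / (1.0 - c)

def percent_distortion(c):
    """Percent distortion = 100 / sqrt(SDR) = 100 * sqrt((1 - c) / c)."""
    if c >= 1.0:
        return 0.0          # perfectly coherent: no distortion
    if c <= 0.0:
        return math.inf     # output unrelated to input
    return 100.0 * math.sqrt((1.0 - c) / c)

for c in (1.00, 0.99, 0.97, 0.95, 0.90, 0.85, 0.80, 0.70, 0.60, 0.50):
    print(f"{c:.2f}  {percent_distortion(c):5.1f}%")
```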

Coherence is an overall measure of anything that makes the output different from the input, except for any system gain that may be present. Thus, with one measurement of coherence it is possible to quantify the level of all the artifacts that cause the output to be different from the input, a situation which meets the definition of distortion given in the introduction to this issue. However, because coherence is a global measurement, the results do not distinguish the individual characteristics that make the output and input different. While this difference may well be due to THD or IMD, differences may also be due to phase shift (time delay), internal oscillations, or internal noise. The specific nature of these differences is not clear from the measurement of coherence. For example, coherence may decrease around the frequencies of the resonant peaks in a hearing aid receiver; however, this is due to differences in group delay (phase shift) and not to what may be traditionally considered to be distortion.

In general, for low input levels, such as 50 dB SPL, a low coherence value in a hearing aid measurement often indicates high system noise. This may be due either to inherent noise within the hearing aid or to external noise in the test environment corrupting the measurement results. For high input levels, such as 80 or 90 dB SPL, a low coherence value often indicates that saturation distortion is present.

Two examples of coherence measured across frequency are shown in Figure 7. Figure 7a shows a coherence measurement for what would generally be considered to be a “good” hearing aid. It can be seen that the coherence is almost 1 across the entire frequency range, particularly above about 500 Hz. This indicates a very high correlation between output and input. Figure 7b shows a coherence measurement for what would generally be considered to be a “poor” hearing aid. In the low frequencies, particularly below about 2000 Hz, the coherence drops to about 0.7, then drops even further at frequencies below 1000 Hz. This indicates very poor correlation between output and input in these lower frequencies. However, Figure 7b also illustrates the difficulty that may be encountered when interpreting coherence measurements. This hearing aid had a very large decrease in amplification in the low frequencies. This decrease probably resulted in a degraded signal-to-noise ratio (SNR) in the low frequencies which, in turn, may have resulted in the poor coherence below 2000 Hz because the measurement does not distinguish between distortion and noise.

Figure 7.

Example of coherence measurements from two hearing aids.

Though a considerable amount of effort has been expended on coherence measurements for hearing aids (Dyrlund, 1989; 1992; Preves and Newton, 1989; Preves, 1990; Preves and Woodruff, 1990; Kates, 1992; Dyrlund et al, 1994; Schneider and Jamieson, 1995) and the ANSI S3.48 hearing aid working group has studied the application of the measurement in detail, the use of coherence has been slow to be adopted by hearing aid manufacturers for reporting individual hearing aid data to the dispenser.

In a similar effort to improve hearing aid distortion reporting, Kates (1990) has proposed a distortion measure similar to the Articulation Index (AI). The signal-to-distortion ratio (SDR) of the hearing aid is measured in each auditory critical band. Each value is then limited to a minimum of 0 dB SDR and a maximum of 30 dB SDR. The resulting SDR values are summed and then divided by 30 times the number of critical bands. The result is a number between 0 and 1, where 0 is the poorest and 1 is the best.
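The procedure just described fits in a few lines. The per-band SDR values below are hypothetical, and the choice and number of critical bands are left to the caller; only the 0 dB and 30 dB limits and the normalization come from the description above.

```python
def kates_sdr_index(band_sdr_db):
    """AI-like index: clip each critical-band SDR to 0..30 dB, sum, divide by 30 * bands."""
    clipped = [min(max(sdr, 0.0), 30.0) for sdr in band_sdr_db]
    return sum(clipped) / (30.0 * len(clipped))

bands = [35.0, 28.0, 20.0, 12.0, -5.0]    # hypothetical per-band SDRs, dB
print(kates_sdr_index(bands))             # (30 + 28 + 20 + 12 + 0) / (30 * 5) = 0.6
```

Clipping at 30 dB means that bands already clean beyond that point earn no extra credit, while clipping at 0 dB keeps a badly distorted band from pulling the index below zero.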

SOURCES OF DISTORTION

Distortion that occurs in hearing aids may be categorized into two different types, depending on the level of the input signal present. The first type is a fixed level of distortion that is present at all levels of input, but which is primarily observed with low levels of input. The exact input level below which this may be observed will vary according to the hearing aid undergoing measurement and the composition of the input signal; however, in general, “low level” in this context refers to input levels that are below about 70 dB SPL. The distortion resulting from low levels of input is inherent in the design and operation of the amplifier.

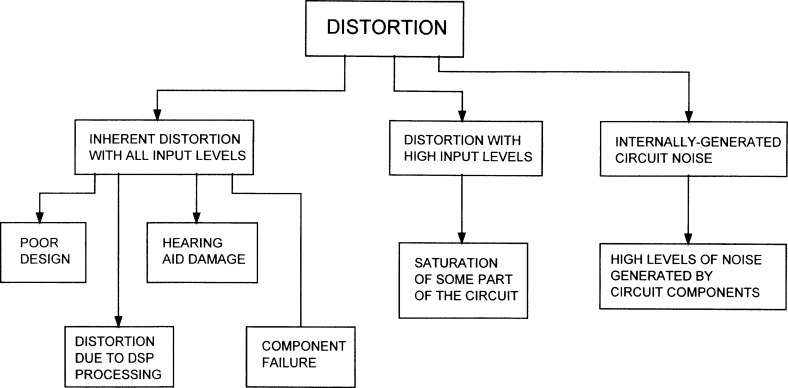

The second type is distortion that occurs primarily with higher levels of input (i.e., above about 70 dB SPL) and typically varies in measured level with the level of the input signal. This is often called saturation distortion because saturation of some part of the circuit results in overload and the generation of distortion. It is also called non-linear distortion because it results from non-linearities in the hearing aid, and may be called amplitude distortion or overload distortion because it occurs and worsens as the amplitude of the input signal increases. It results in high levels of THD and IMD in the output. These generic categories of distortion are illustrated as a diagram in Figure 8.

Figure 8.

Diagram illustrating the relationship of different categories of distortion to some of the possible causes.

Distortion Caused by Low-level Inputs

Due to inherent limitations in hearing aid circuits and transducers, some small percentage of distortion normally occurs in most hearing aids. This is known and accepted by hearing aid designers, fitters and users. This distortion may have several causes, from slight inaccuracies in the matching and linearity of components to minor electromechanical non-linearities in receivers. In Class A circuits, distortion may occur due to non-linearities and output loading effects. In Class B circuits, crossover distortion may occur. In Class D circuits, distortion may arise from clock jitter. Hearing aid receivers also produce inherent distortion because of non-linearities in their electromechanical operation. All these contributions to overall distortion usually result in less than 5% THD and IMD across the frequency spectrum. This low level of distortion is typically unnoticed by the user and has no significant effect on hearing aid use.

Objectionable levels of distortion in hearing aids with low-level inputs are primarily caused by one of two mechanisms: inadequate design or hearing aid failure. Distortion due to poor design is rare in modern hearing aids; when it does occur, it can easily be observed by performing a distortion frequency sweep, as shown in Figure 4.

A more likely cause of persistent objectionable distortion in hearing aids with low-level inputs is that some component in the hearing aid has failed, or that the hearing aid has been damaged. For example, accidentally dropping a hearing aid onto a hard surface, such as a tiled floor, may damage the receiver and produce levels of distortion that are higher than normal. Typically this type of problem is not a subtle effect, but results in large and very noticeable increases in distortion. This distortion will most likely be present with all inputs, all the time. A diagnosis can easily be made by performing standard distortion tests. The obvious solution is to return the hearing aid for repair.

Fully digital hearing aids may have additional potential sources of low-level distortion, such as A/D converter non-linearities, aliasing, clock instability, quantization errors, quantization noise and phase distortion (Pohlmann, 1992). To date, very little work has been performed to determine how significant these sources of distortion will be to the user with a hearing impairment. Readers who are unfamiliar with digital signal processing (DSP) in hearing aids may wish to refer to Levitt (1987), Staab (1990), Williamson and Punch (1990), Agnew (1991), and Murray and Hansen (1992) for comprehensive background information. General information on digital signal processing at a reasonably readable technical level for the non-technical reader may be found in Bloom (1985) and Pohlmann (1991, 1992).
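As an illustration of quantization noise on its own, the sketch below quantizes a near-full-scale sine with an ideal uniform (rounding) quantizer and compares the measured signal-to-noise ratio with the textbook approximation of 6.02N + 1.76 dB for N bits. It assumes no dither and a perfect converter, so it says nothing about the other converter non-linearities listed above; the 48 kHz rate and 997 Hz tone are arbitrary choices.

```python
import numpy as np

def quantization_snr_db(bits, fs=48_000, freq=997.0):
    """SNR of a near-full-scale sine after ideal uniform (rounding) quantization."""
    step = 2.0 / 2 ** bits                                  # step size for a [-1, 1) range
    n = np.arange(fs)                                       # one second of samples
    x = (1.0 - step) * np.sin(2 * np.pi * freq * n / fs)    # keep peaks inside the range
    error = np.round(x / step) * step - x                   # quantization error
    return 10 * np.log10(np.mean(x ** 2) / np.mean(error ** 2))

for bits in (8, 12, 16):
    measured = round(float(quantization_snr_db(bits)), 1)
    print(bits, "bits:", measured, "dB measured,", round(6.02 * bits + 1.76, 1), "dB predicted")
```

Each extra bit buys roughly 6 dB; real converters fall short of this ideal to the extent that their own non-linearities add error.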

Distortion Caused by High-level Inputs

Distortion that occurs with low-level inputs is usually reduced to a minimum by the hearing aid circuit designer, and is not usually a particular problem. A more significant type of distortion occurs when high levels of input are presented to the hearing aid. This type of distortion is often not constant, but varies with the level of the input, typically becoming more severe with higher levels of input. This is saturation distortion, so named because some internal part of the hearing aid has saturated and overloaded.

This phenomenon is also known as peak-clipping, as illustrated in Figure 9. An undistorted sine wave is shown in Figure 9a. Figures 9b and 9c show the effects of peak-clipping on a sine wave. Figure 9b shows clipping on one half of the sine wave; this is called asymmetrical peak clipping. Figure 9c shows equal clipping on both halves of the sine wave; this is called symmetrical peak clipping.

Figure 9.

The effects of peak clipping on a sine wave.
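The two clipping patterns in Figure 9 differ in their harmonic content: symmetrical clipping of a sine wave produces only odd harmonics, while asymmetrical clipping adds even harmonics (and a DC offset). The sketch below checks this numerically for an assumed clipping level of half the peak amplitude, with the positive half chosen arbitrarily for the asymmetrical case.

```python
import math

N, CYCLES = 1000, 5      # analysis window holds exactly 5 cycles of the test tone

def harmonic_amplitudes(y, max_harmonic=6):
    """Amplitudes of harmonics 1..max_harmonic, from a single-bin DFT at each multiple."""
    amps = []
    for k in range(1, max_harmonic + 1):
        w = 2 * math.pi * k * CYCLES / N
        c = sum(v * math.cos(w * i) for i, v in enumerate(y))
        s = sum(v * math.sin(w * i) for i, v in enumerate(y))
        amps.append(2 * math.hypot(c, s) / N)
    return amps

sine = [math.sin(2 * math.pi * CYCLES * i / N) for i in range(N)]
symmetric = [max(-0.5, min(0.5, v)) for v in sine]   # both halves clipped (as in Figure 9c)
asymmetric = [min(0.5, v) for v in sine]             # one half clipped (as in Figure 9b)

for name, y in (("symmetrical", symmetric), ("asymmetrical", asymmetric)):
    amps = harmonic_amplitudes(y)
    # harmonics 2..6 as a percentage of the fundamental
    print(name, [round(100 * a / amps[0], 1) for a in amps[1:]])
```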

Peak-clipping is used deliberately as a simple and inexpensive method of limiting the output of a hearing aid in response to loud sounds. The clipping threshold is set so that the peaks of the amplified waves are cut off, or clipped (as shown in Figure 9c), at a level that produces the desired reduction in acoustic output. Hawkins and Naidoo (1993), reporting on a survey of hearing aid manufacturers, stated that in 1990, 82% of the hearing aids sold in the United States used peak-clipping as a method of output limitation.

The side effect of peak clipping as a form of output limitation is the production of THD and IMD. Because of its deleterious effect on sound quality, it has also been half-humorously called “crummy” peak clipping. Revit (1994) has described how a lack of smoothness in the family of hearing aid output curves, obtained by varying the input level of composite noise from 50 dB SPL to 80 dB SPL in 10 dB steps, may be used to indicate the presence of peak-clipping in linear hearing aids.

An example of the increase in distortion that may occur when a hearing aid saturates is illustrated in Figure 10a. This graph shows output versus frequency for the same hearing aid that was shown below saturation in Figure 2. The corresponding numerical results are shown in Table 4. The graph shows large increases in the levels of the 2nd and 3rd harmonics and the corresponding distortion. The 4th through the 9th harmonics have now appeared on the graph, though the distortion figures in Table 4 indicate that the 5th through the 9th harmonics are so small as to be effectively negligible. Figure 10a shows the harmonic distortion products for the condition under which the hearing aid was driven slightly into saturation. In Figure 10b, the hearing aid was driven further into saturation by increasing the level of the input signal. In this instance, the level of the 2nd harmonic has increased, but the levels of the 3rd and 4th have decreased. The other harmonics may be neglected. The difference between Figures 10a and 10b shows that the types and levels of harmonic distortion products, even during saturation, can vary widely.

Figure 10.

Graph of output versus frequency for the hearing aid measured in Figure 2, showing HD present when the hearing aid was driven into saturation.

Table 4.

Levels of distortion present in Figures 10a and 10b.

| Frequency (Hz) | Output level (dB SPL) | Level down from fundamental (dB) | Level of distortion (%) |
|---|---|---|---|
| Figure 10a | | | |
| 1000 | 101 | n/a | n/a |
| 2000 | 87 | 14 | 19.90 |
| 3000 | 75 | 26 | 5.00 |
| 4000 | 71 | 30 | 3.20 |
| 5000 | 57 | 44 | 0.63 |
| 6000 | 60 | 41 | 0.89 |
| 7000 | 42 | 59 | 0.11 |
| 8000 | 31 | 70 | 0.03 |
| 9000 | 19 | 82 | 0.01 |
| Figure 10b | | | |
| 1000 | 102 | n/a | n/a |
| 2000 | 92 | 10 | 31.60 |
| 3000 | 65 | 37 | 1.40 |
| 4000 | 68 | 34 | 2.00 |
| 5000 | 61 | 41 | 0.89 |
| 6000 | 61 | 41 | 0.89 |
| 7000 | 43 | 59 | 0.11 |
| 8000 | 41 | 61 | 0.09 |
| 9000 | 31 | 71 | 0.03 |
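The last column of Table 4 follows from the one before it: a component x dB below the fundamental has an amplitude of 10^(−x/20) times the fundamental. The sketch below reproduces that conversion and, as an additional step not reported in the table, combines the harmonics by root-sum-of-squares, which is one common way of expressing total harmonic distortion as a single figure.

```python
import math

def percent_from_db_down(db_down):
    """Convert a level 'down from the fundamental' (dB) to percent of the fundamental."""
    return 100.0 * 10 ** (-db_down / 20.0)

def combined_thd_percent(db_down_values):
    """Root-sum-of-squares combination of the individual harmonic percentages."""
    return math.sqrt(sum(percent_from_db_down(d) ** 2 for d in db_down_values))

# 'Level down from fundamental' for the 2nd through 9th harmonics, from Table 4
figure_10a = [14, 26, 30, 44, 41, 59, 70, 82]
figure_10b = [10, 37, 34, 41, 41, 59, 61, 71]

print([round(percent_from_db_down(d), 2) for d in figure_10a])
print("Figure 10a THD %:", round(combined_thd_percent(figure_10a), 1))
print("Figure 10b THD %:", round(combined_thd_percent(figure_10b), 1))
```

The combined figure is dominated by the 2nd harmonic in both conditions, so it hides the shift in the 3rd harmonic that, as noted below, may matter more for perception.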

As will be discussed further in the section on the perception of distortion, the presence and relative levels of different harmonics contribute to how annoying a distorted sound is perceived to be. Even though Figure 10b shows measurements with the hearing aid driven further into saturation than in Figure 10a, and shows a higher level of second harmonic distortion, the condition in Figure 10a may actually be perceived as worse by a listener because of the higher level of third harmonic present.

Saturation distortion occurs primarily because only a limited amount of amplification can be obtained from the battery used to power a hearing aid. At some combination of input level and amplification, some part of the hearing aid (the microphone, amplifier, or receiver) reaches the limit of what it can amplify and deliver to the ear without distortion. Overload can occur at any stage in the hearing aid.

Contemporary microphones in hearing aids operate with very low distortion across the range of sound levels typically present in the environment. Distortion figures of less than 0.5% are typical for electret microphones with an input frequency of 1000 Hz at 60 dB SPL. Distortion remains on the order of 1% up to about 105 dB SPL input, then increases to about 10% by 120 dB SPL. With a broadband noise input signal, coherence typically remains essentially 1 up to about 110 dB SPL input. Even though these levels are higher than those required to reproduce the normal range of speech, sounds louder than speech commonly occur in a listener's environment. Table 5 illustrates some of the high levels of sound that may be encountered in household environments. Teder (1995) measured crowd noise at a baseball game as high as 120 dB SPL. Peaks of orchestral music may reach 120–125 dB SPL.

Table 5.

Levels of loud sounds commonly encountered in everyday environments.

| Source | Peak sound level (dB SPL) |
|---|---|
| Common kitchen sounds (compiled from Teder, 1995) | |
| Cupboard door closing | 84 |
| Pots and pans put in cupboard | 89 |
| Setting plate in sink | 91 |
| Dropped pot lid | 102 |
| Fork dropped on plate | 104 |
| Spoon tapped on cup | 104 |
| Common environmental sounds (Agnew, 1995) | |
| Electric hair dryer (slow setting) | 82–88 |
| Conversational speech | 85–90 |
| Man's electric shaver | 89 |
| Electric hair dryer (fast setting) | 90–98 |
| 3/8″ electric drill in wood | 95 |
| Gasoline-powered lawn mower | 105 |
| 7″ circular saw cutting wood | 110 |
| Hammer driving nail in wood | 125–135 |

The supply voltage limits the amount of amplification that a linear preamplifier can provide without distortion. If the gain is high enough that the signal at the output of a Class A preamplifier exceeds this signal-swing limit, the preamplifier will overload, saturate and distort whenever the input signal rises beyond the level at which the limit is reached.

Just as the preamplifier overloads and distorts as the input signal increases, the electrical interaction of the output amplifier and receiver can also produce significant distortion if the input level to the amplifier is high enough. The level at which saturation occurs is determined by the type and design of the output amplifier and receiver specified by the hearing aid designer. A Class A output stage can produce a voltage swing of slightly less than two times the battery voltage (Agnew et al, 1997). A Class B output amplifier can produce a swing of four times the battery voltage. This is one reason that a Class B amplifier is typically used in high-power hearing aids: for a given battery voltage, it can provide twice the output voltage swing of a Class A amplifier.

An erroneous assumption that is often made is that low distortion amplification may be achieved up to the maximum SSPL90 value of a hearing aid. (Note that the term SSPL90 has been replaced by the newer term OSPL90 in ANSI standard S3.22 (1996) in order to harmonize with IEC (1983b) specifications; however, the older term SSPL90 will be retained in this issue due to widespread contemporary usage). The value for SSPL90 defines the maximum level of hearing aid output with a 90 dB SPL input; however, this is not necessarily either the maximum output of the hearing aid, or the point of the onset of distortion. Maximum output of a hearing aid may occur a few decibels above the maximum SSPL90 value if the input signal is greater than 90 dB SPL, and may occur below this value at different frequencies. The test signal of 90 dB SPL input is used as a convenient and consistent input value for testing a hearing aid for quality control purposes, which is the intent of ANSI Standard S3.22 (1996).

Amplifier Headroom

Saturation distortion basically occurs because of a lack of headroom in hearing aids. In sound system engineering, headroom is defined as the difference, in decibels, between the highest amplified level present in a given output signal and the maximum output level that the system can produce without noticeable distortion (White, 1993). This maximum level is the upper end of the dynamic range of the amplifying system (Foreman, 1987). High-quality audio amplifiers can be designed to have minimal distortion until clipping occurs due to saturation, because sufficiently high supply voltages are readily available.

Headroom and distortion level definitions are more complex in a hearing aid because significant distortion occurs in most Class A and Class D hearing aid output stages below the hearing aid saturation level. Thus, it is more appropriate to say that hearing aid amplifier headroom is the amount of amplification range remaining between the instantaneous signal output level and the maximum undistorted output capability of the hearing aid, bearing in mind that this maximum undistorted output level varies with frequency and is not necessarily the SSPL90 value of the hearing aid.

Although saturation and distortion do not occur only in the output stage, the onset of receiver saturation is often assumed to be the SSPL90 value of the hearing aid. However, the onset of distortion does not necessarily occur at this value. The onset of distortion in a linear hearing aid usually occurs below the values plotted in the SSPL90 graph, and the amount of distortion usually varies with frequency. An example illustrating this is shown in Figure 11. The solid black line is the SSPL90 curve of a typical linear ITE hearing aid with a Class A output amplifier stage. This hearing aid had 40 dB of peak gain, a peak SSPL90 of 108 dB SPL, and a rising frequency response curve of about 12 dB from 500 Hz to the first peak at 1500 Hz. The dashed line is the output level of the hearing aid at which 10% distortion occurs. From 200 Hz to about 1000 Hz, this level is about 6 dB to 8 dB lower than the SSPL90 curve. At 2000 Hz, the 10% distortion level is only about 2 dB below the SSPL90 value. Thus low frequency sounds will tend to saturate this hearing aid sooner than will high frequency sounds, and will therefore produce harmonic distortion components that spread upwards into the higher frequencies.

Figure 11.

Graph showing the SSPL90 of a hearing aid (black line), and the output level (dashed line) at which 10% distortion occurred.

Linear hearing aids with Class D output stages have been presented as having more headroom, and thus lower distortion, than linear hearing aids with Class A output stages. The maximum voltage swing across the receiver in a linear Class A or Class D output amplifier is approximately twice the voltage available from the battery. In both types of output stage there are some losses of output drive capability due to finite resistances in the output transistors that do not allow the use of the full battery voltage to drive the receiver (Grebene, 1984). However, due to different concepts used to drive the receiver in the two types of output stage, the levels of the output signal that may be obtained without appreciable distortion also differ.

Depending on the receiver and the design of the output stage, an empirical rule-of-thumb is that the voltage swing that appears across the receiver without appreciable distortion in a linear Class D output stage is approximately 3 dB less than twice the full battery voltage. Beyond this point the levels of distortion rise very rapidly. In a linear Class A output stage, similar empirical findings are that the maximum undistorted voltage swing across the receiver is approximately 6 dB less than twice the battery voltage. Therefore the difference between Class A and Class D maximum undistorted voltage swing across the receiver is approximately 3 dB, when using receivers of the same type and impedance (Agnew et al, 1997). Thus, when configured to produce the same SSPL90, a Class D output stage will have approximately 3 dB more headroom than will the equivalent Class A output stage, and the Class D will not reach the same distortion levels as the equivalent Class A output stage until a 3 dB higher output level is achieved.
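The rule of thumb above reduces to simple decibel arithmetic. The sketch assumes a 1.3 V battery purely for illustration; the 3 dB and 6 dB reductions are the empirical values quoted above, not properties of any particular circuit.

```python
import math

V_BATT = 1.3    # assumed battery voltage, volts (illustrative only)

def db_below(volts, db):
    """Reduce a voltage by the given number of decibels."""
    return volts / 10 ** (db / 20.0)

nominal = 2 * V_BATT                      # swing of about twice the battery voltage
class_d_max = db_below(nominal, 3.0)      # rule of thumb: about 3 dB below nominal
class_a_max = db_below(nominal, 6.0)      # rule of thumb: about 6 dB below nominal

print("max undistorted swing, Class D:", round(class_d_max, 2), "V")
print("max undistorted swing, Class A:", round(class_a_max, 2), "V")
print("extra Class D headroom:", round(20 * math.log10(class_d_max / class_a_max), 1), "dB")
print("Class B (4x battery) over 2x battery:", round(20 * math.log10(4 / 2), 1), "dB")
```

The 3 dB difference is independent of the assumed battery voltage, since both limits scale with it.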

Agnew et al (1997) showed that, for low and medium level input signals, both Class A and Class D output stages can perform equally well. As will be discussed further in the section on the perception of distortion, Palmer et al (1995) noted that when the design of the Class A amplifier that they tested was changed to increase the current drive through the receiver, comparable sound quality performance of the Class A circuit to the Class D circuit was obtained with no obvious audible difference. Johnson and Killion (1994) have stated that “all other things being equal, competently designed amplifiers of any class cannot be distinguished from one another on the basis of even the most careful listening tests”.

Because the headroom phenomenon is noticeable at levels approaching maximum amplification and output, it is only for higher input levels that the Class A circuit will distort sooner than the Class D circuit with equivalent SSPL90. In other words, there is additional headroom available in the Class D circuit. When either output stage amplifies the signal close to the saturation level, either hearing aid will distort, whether the output stage is a Class A or Class D.

It is important to recognize that this discussion relates to Class A and Class D output stages with equal SSPL90 values, and to understand how this relates to the amount of headroom available in both. Inadequate headroom in any hearing aid can result from high gain with a low SSPL90, a combination that causes clipping and other types of nonlinear distortion at high input levels (Palmer et al, 1995). Increased headroom reduces the generation of these distortion products that degrade the coherence and sound quality of a hearing aid (Preves, 1990). However, the specific value of SSPL90 is set primarily by the receiver type and the configuration of the output stage chosen by the design engineer, not by the generic type of output stage. In practice, a hearing aid with a Class D or a Class A output stage will have the combination of SSPL90 and gain that the circuit designer chooses. This could include a combination of high gain and high SSPL90, low gain and low SSPL90, low gain and high SSPL90, or high gain and low SSPL90, though it is unlikely that this last combination would be found in an appropriately-fitted hearing aid.

There is also another important factor in this discussion that should not be overlooked: a Class D amplifier is inherently more efficient than a Class A amplifier (Carlson, 1988). Thus, for a particular SSPL90, a hearing aid with a Class D output stage will typically draw less current than an equivalent hearing aid with a Class A output stage. Agnew et al (1997) studied the sound quality performance of a hearing aid with a Class A output stage with 1.2 mA of current drain, and found that equivalent distortion performance was obtained with a hearing aid with a Class D output stage that had an idle current drain of 0.27 mA, increasing to 0.51 mA at 90 dB SPL input. Depending on the design, a Class D amplifier may dissipate as little as one-fourth the power of a Class AB design for the same output power (Subbarao, 1974).

Preves and Woodruff (1990) have presented an example of how increasing the headroom can improve coherence measurements in linear hearing aids. They compared a linear hearing aid with a Class A output stage, 35 dB of peak gain and an HFA SSPL90 of 103 dB (hearing aid A) to one with a different linear amplifier and a Class D output stage and receiver that provided 40 dB of peak gain and an HFA SSPL90 of 117 dB (hearing aid B). They showed that coherence measurements were improved for hearing aid B, with the higher SSPL90 value. Hearing aid B used a Class D output stage; however, the same experiment could have been performed by comparing two Class A output stages with different SSPL90 values, one configured to provide increased headroom. The key factor was therefore not Class A versus Class D, but that hearing aid B had more headroom because of its different amplifier and receiver. This gave hearing aid B a higher HFA SSPL90 than hearing aid A, and the greater headroom produced lower distortion.

Preves and Newton (1989), and Preves and Woodruff (1990) have presented expanded explanations of the concept of headroom and the distortion problems that can occur in hearing aids due to the lack of headroom.

Multiple Input Levels for Distortion Testing

Since a hearing aid user may enter many different acoustic environments, it is important to characterize hearing aid distortion performance with different input levels. A range of sound levels may be encountered from soft conversational speech (60 dB SPL), through average speech (70 dB SPL) and intense speech (80 dB SPL), to shouted speech and loud music (90 dB SPL). Thus it is important to determine hearing aid distortion performance across a range of these levels.

Though it would seem that distortion would not be present during normal speech communication, since conversational speech occurs at an average level of around 65 dB SPL, peaks in speech occur at 12 dB to 20 dB above this average level. This potential peak level of 85 dB SPL can quickly drive a hearing aid amplifier into saturation and produce distortion. In addition, many other common sounds in the environment occur at levels of greater than 85 dB SPL, as shown in Table 5.
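Using the definition of headroom given earlier (the maximum undistorted output minus the instantaneous output level), the effect of speech peaks can be checked with simple arithmetic. The gain of 25 dB and maximum undistorted output of 100 dB SPL below are hypothetical values chosen for illustration; a negative result means the peak would drive the hearing aid into saturation.

```python
def headroom_db(input_db, gain_db, max_undistorted_db):
    """Headroom: maximum undistorted output minus the output the input would require."""
    return max_undistorted_db - (input_db + gain_db)

GAIN_DB = 25.0               # hypothetical linear gain
MAX_UNDISTORTED_DB = 100.0   # hypothetical maximum undistorted output, dB SPL
AVERAGE_SPEECH_DB = 65.0     # average conversational speech level, from the text

for peak_offset in (0.0, 12.0, 20.0):   # average level, then peaks 12 and 20 dB above it
    level = AVERAGE_SPEECH_DB + peak_offset
    print(level, "dB SPL input ->", headroom_db(level, GAIN_DB, MAX_UNDISTORTED_DB), "dB headroom")
```

In this example the average speech level leaves 10 dB of headroom, yet the peaks overshoot the undistorted limit by up to 10 dB.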

Hearing aids that have the same specifications when measured according to ANSI S3.22 (1996) may have very different distortion performance when the input levels are varied. (Note that this is not necessarily a shortcoming of the standard; ANSI standard S3.22 (1996) is intended to be a quality control standard for manufacturers to test their products, not an indication of how the hearing aid will perform on the user.) Thus, one method used to comprehensively characterize the distortion performance of a hearing aid is to perform swept measurements of THD and IMD at input levels of 60, 70, 80 and 90 dB SPL. Agnew (1994) has described measurements made on three different hearing aids that had the same specifications and exhibited low levels of distortion when measured according to ANSI test methods. Distortion performance was very similar for the three hearing aids with a test input level of 60 dB SPL. However, the three aids had significantly different levels of distortion when tested with a swept frequency at 75 dB SPL input: one aid had a distortion peak of 27%, compared with peaks of 7% and 17% for the other two.

Graphs obtained from THD measurement of these three hearing aids are shown in Figure 12. Figure 12a shows a comparison of THD graphs measured with 70 dB SPL input level. Under this condition, all three hearing aids exhibited similar low distortion performance. However, when measured with 80 dB SPL input level with no adjustment to the hearing aids, hearing aid #1 exhibited significantly lower levels of THD than did hearing aids #2 and #3, as shown in Figure 12b.

Figure 12.

Graphs of a comparison of THD on a scale of 0% to 50% measured on three hearing aids, showing the difference in distortion levels that were obtained when measured with two different input levels.

A further example of graphs obtained from hearing aid measurements with different input levels is shown in Figure 13a and 13b. Figure 13a shows swept-frequency THD measurements made on a linear hearing aid with 60, 70, 80 and 90 dB SPL input. With 60 dB and 70 dB SPL input, the distortion levels are low, being less than 2% across most of the frequency range. However, with 80 dB SPL input, the distortion suddenly rises, peaking at over 50% at 900 Hz. With 90 dB SPL input, the distortion is 100% at 1300 Hz. These results indicate that it is probable that, at some input level between 70 dB and 80 dB SPL, some part of the hearing aid amplifier has saturated, thus resulting in a rapid increase in measured distortion. Similar performance is observed in Figure 13b, which shows swept-frequency IMD measurements on the same hearing aid with 60, 70, 80 and 90 dB SPL input and a 200 Hz separation of the test frequencies.

Figure 13.

Example results of swept-frequency measurements for a linear hearing aid at input levels of 60, 70, 80 and 90 dB SPL.
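Concluding that saturation set in between 70 and 80 dB SPL amounts to finding the pair of adjacent test levels between which distortion jumps. The sketch below assumes a 10% THD threshold and uses hypothetical readings shaped like the description of Figure 13a (they are not the published data); the function name saturation_bracket is likewise hypothetical.

```python
def saturation_bracket(thd_by_level, threshold_percent=10.0):
    """Return the pair of adjacent test levels (dB SPL) between which THD first
    crosses threshold_percent, or None if it never does."""
    levels = sorted(thd_by_level)
    for low, high in zip(levels, levels[1:]):
        if thd_by_level[low] < threshold_percent <= thd_by_level[high]:
            return low, high
    return None


# Hypothetical peak-THD readings (%) following the pattern described for Figure 13a.
measured = {60: 1.5, 70: 1.8, 80: 55.0, 90: 100.0}
bracket = saturation_bracket(measured)
print(bracket)  # (70, 80)

# If the aid's gain were known (40 dB here, an assumed value), the output level at
# which the amplifier saturates would lie between the bracketing levels plus the gain.
gain_db = 40
print(tuple(level + gain_db for level in bracket))  # (110, 120)
```

Finer input-level steps would narrow the bracket; the four-level sweep only localizes the onset to within 10 dB.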

Reduction of Saturation Distortion

One solution to the amplifier saturation problem is to use compression to limit the gain of the various amplifier stages, so that the processed signal never exceeds the limits imposed by the available gain, the battery voltage, the output amplifier and the receiver. For example, if appropriate compression is added to the preamplifier and output amplifier circuits, these circuits operate in their linear region until the limit of undistorted amplification is approached; the circuitry then limits the available gain to the maximum that can be achieved without distortion (Agnew, 1997a).
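A minimal sketch of the difference between letting an amplifier stage saturate and using compression to keep it below its limit. It assumes an idealized, slow-acting compressor that applies a single gain to a steady tone (real compressors have attack and release times, which are not modeled here), and compares it with hard peak clipping at the same output limit. The function names and level values are hypothetical.

```python
import math

FS, F0, N = 16_000, 500, 1_600  # illustrative sample rate, tone frequency, 50 whole cycles


def sine(peak):
    """N samples of an F0 sine with the given peak amplitude."""
    return [peak * math.sin(2 * math.pi * F0 * i / FS) for i in range(N)]


def peak_clip(x, gain, limit):
    """Linear gain followed by hard clipping at +/- limit (saturation)."""
    return [max(-limit, min(limit, gain * s)) for s in x]


def compression_limit(x, gain, limit):
    """Idealized slow-acting compression: one gain for the whole steady tone, reduced
    just enough that the output peak stays at or below the limit."""
    peak_in = max(abs(s) for s in x)
    g = min(gain, limit / peak_in)
    return [g * s for s in x]


def distortion_percent(y):
    """RMS of everything except the F0 sine component, relative to that component."""
    basis = sine(1.0)
    # Least-squares fit of the in-phase F0 component; the signals here are sines or
    # symmetrically clipped sines, so there is no cosine term to fit.
    coef = sum(a * b for a, b in zip(y, basis)) / sum(b * b for b in basis)
    residual_rms = math.sqrt(sum((a - coef * b) ** 2 for a, b in zip(y, basis)) / N)
    return 100 * residual_rms / (abs(coef) / math.sqrt(2))


# Assumed values on an arbitrary dB scale: 85 dB input peak, 40 dB gain, output
# limit at 118 dB peak, so the unlimited output would exceed the limit by 7 dB.
x = sine(10 ** (85 / 20))
gain, limit = 10 ** (40 / 20), 10 ** (118 / 20)
clipped = peak_clip(x, gain, limit)
limited = compression_limit(x, gain, limit)
print(f"peak clipping:        {distortion_percent(clipped):.1f}%")
print(f"compression limiting: {distortion_percent(limited):.4f}%")
```

Both outputs stay within the limit, but only the clipped one changes the waveform shape; the compressed output is simply a scaled copy of the input, so its distortion is essentially zero.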

Agnew (1995) presented data comparing three hearing aids: a linear hearing aid, a hearing aid with input compression, and a hearing aid with multiple compression functions configured to prevent saturation. Swept-frequency THD graphs at 60 and 70 dB SPL input showed that the distortion performance was similar for all three circuits and very low (a maximum of only about 2% or 3%). However, with 80 and 90 dB SPL input, the distortion performance diverged considerably. With 80 dB SPL input the linear hearing aid peaked at 50% distortion, and with 90 dB SPL input its distortion was well over 50%. Distortion in the hearing aid with input compression peaked at about 20% with 80 dB SPL input and about 40% with 90 dB SPL input. Thus the use of input compression lowered the distortion compared to the linear hearing aid. The hearing aid with circuitry to prevent saturation was superior in distortion performance to both the linear hearing aid and the hearing aid with input compression, and kept the measured distortion consistently below 5% at all input levels.

THE PERCEPTION OF DISTORTION

It is difficult to state definitively which type of distortion listeners perceive as worst; however, it is recognized that most of the unpleasant sound quality that occurs when distortion is present in an audio system is due to IMD rather than THD (Scroggie, 1954; Thomsen and Moller, 1975). IMD typically generates dissonant frequencies, that is, frequencies that are not pleasantly related to the input by musical intervals. Thus, IMD products are often considered strident and more objectionable to listeners than THD products (Durrant and Lovrinic, 1984). Also, a given non-linearity in a system typically produces more IMD components than harmonic components, and the IMD components are often higher in amplitude than the harmonic distortion present (Thomsen and Moller, 1975). Schweitzer et al (1977) expressed the belief that IMD is equal to or more important than THD for specifying hearing aid performance and suggested that IMD is a pronounced possibility in every spoken syllable.
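To see why IMD products tend to be dissonant, and why a non-linearity yields more IMD components than harmonics, the sketch below lists the second- and third-order products of two tones 200 Hz apart (the separation used for the Figure 13b measurements; the 1000 and 1200 Hz values are an illustrative choice). It considers only where the products fall in frequency, not their amplitudes, which depend on the particular non-linearity.

```python
def imd_products(f1, f2, max_order=3):
    """Intermodulation frequencies |m*f1 + n*f2| with m, n nonzero and |m|+|n| <= max_order."""
    products = set()
    for m in range(-max_order, max_order + 1):
        for n in range(-max_order, max_order + 1):
            if m != 0 and n != 0 and abs(m) + abs(n) <= max_order:
                f = abs(m * f1 + n * f2)
                if f > 0:
                    products.add(f)
    return sorted(products)


def harmonics(f1, f2, max_order=3):
    """Harmonic distortion frequencies k*f for each input tone, 2 <= k <= max_order."""
    return sorted({k * f for f in (f1, f2) for k in range(2, max_order + 1)})


f1, f2 = 1000, 1200  # two test tones 200 Hz apart (illustrative choice)
imd = imd_products(f1, f2)
hd = harmonics(f1, f2)
print(imd)  # [200, 800, 1400, 2200, 3200, 3400]
print(hd)   # [2000, 2400, 3000, 3600]
# None of the IMD products is a whole-number multiple of either input tone.
print(any(f % f1 == 0 or f % f2 == 0 for f in imd))  # False
```

Up to third order, the two tones generate six IMD frequencies but only four harmonics; none of the IMD products is a multiple of either input tone, and several (200 and 800 Hz) fall below both inputs.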

The audible effect of IMD has been described as making the signal sound “blurred”, “fuzzy”, “tinny”, “harsh”, “rattling”, “shrill”, “mushy”, “raucous”, “muddy”, “grating”, “rasping”, “buzzing” and “rough” (Scroggie, 1958; Langford-Smith, 1960). Although sound containing IMD products may be intelligible to a hearing aid user, listening to these objectionable components for long periods may lead to auditory fatigue (Ashley, 1976).

Fortune et al (1991) showed that linear hearing aids that generate saturation distortion result in lower loudness discomfort levels (LDLs) than hearing aids with enough headroom to reduce saturation distortion. These authors contend that, to compensate, wearers of such hearing aids may turn down the gain to the point where they receive too little gain for beneficial amplification.

THD levels below approximately 10% in a hearing aid are often not perceived as audible or particularly objectionable (Killion, 1979; Dillon and Macrae, 1984; Agnew, 1988; Cole, 1993). There are two possible reasons for this. The first is that, by definition, THD products in the output are harmonically related to the input frequencies.

The second harmonic of a frequency is twice that frequency, or one octave above it. Tones an octave apart sound very similar in pitch (Handel, 1993), and the ear tolerates a fairly large proportion of second-harmonic content in a sound before it becomes objectionable (Scroggie, 1958). Indeed, it is precisely because of these harmonic relationships that music and voices have a rich-sounding timbre. The second possible reason that low levels of harmonic distortion in hearing aids may not be particularly objectionable is that many of the harmonic products fall above the passband of the hearing aid and are therefore inaudible.
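Both reasons can be checked with simple arithmetic. The sketch below expresses harmonic frequency ratios as equal-tempered musical intervals and lists which harmonics of a tone fall within a passband whose upper cut-off is assumed, purely for illustration, to be 5 kHz; actual hearing aid bandwidths vary.

```python
import math


def interval_semitones(ratio):
    """Musical interval, in equal-tempered semitones, for a frequency ratio."""
    return 12 * math.log2(ratio)


def audible_harmonics(f0, upper_cutoff_hz, max_harmonic=10):
    """Harmonics 2..max_harmonic of f0 that fall at or below the aid's upper cut-off."""
    return [k * f0 for k in range(2, max_harmonic + 1) if k * f0 <= upper_cutoff_hz]


print(interval_semitones(2))             # 12.0 -> the second harmonic is one octave up
print(round(interval_semitones(3), 2))   # 19.02 -> an octave plus a fifth
# Assumed 5 kHz upper cut-off for the hearing aid passband (illustrative only).
print(audible_harmonics(1000, 5000))     # [2000, 3000, 4000, 5000]
print(audible_harmonics(3000, 5000))     # []
```

For a 3 kHz fundamental, every harmonic lies above the assumed cut-off, so none of its harmonic distortion would reach the listener.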

The Effect of Distortion on Sound Quality Judgments

Although distorted sounds may remain highly intelligible (Licklider, 1946), the distortion present may severely degrade a listener's perception of sound quality. Criteria used in judgments of sound quality are commonly based on those of Gabrielsson (Gabrielsson and Sjogren, 1979a; 1979b; Gabrielsson and Lindstrom, 1985; Gabrielsson et al, 1988). These judgments use 10-point rating scales for descriptors such as clarity, fullness and spaciousness, and for contrasts such as sharpness/softness, fullness/thinness and brightness/dullness. Examples of scales used to record subject responses are shown in Figure 14. Gabrielsson and Sjogren (1979b) found good agreement between the sound quality judgments of hearing aid users and those obtained in similar experiments using sound engineers and listeners with normal hearing as subjects.

Figure 14.

Examples of scales used to record subject responses in sound quality testing.
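Recording responses on such scales reduces to collecting one number per descriptor per listener and summarizing across listeners. The sketch below assumes scale endpoints of 1 and 10, uses hypothetical descriptors and ratings, and reports only the mean for each descriptor; it does not reproduce the analysis of any particular study.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 10  # assumed endpoints of a 10-point rating scale


def mean_ratings(responses):
    """Average each descriptor over listeners; responses is a list of
    {descriptor: rating} dicts, one per listener, for a single test condition."""
    by_descriptor = {}
    for response in responses:
        for descriptor, rating in response.items():
            if not SCALE_MIN <= rating <= SCALE_MAX:
                raise ValueError(f"{descriptor} rating {rating} is outside the scale")
            by_descriptor.setdefault(descriptor, []).append(rating)
    return {d: mean(r) for d, r in by_descriptor.items()}


# Hypothetical ratings from three listeners for one hearing aid and input level.
responses = [
    {"clarity": 7, "fullness": 6},
    {"clarity": 8, "fullness": 5},
    {"clarity": 6, "fullness": 7},
]
print(mean_ratings(responses))
```

Running the same summary for each hearing aid and input level gives the per-condition means that comparisons between circuits are built on.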

Punch et al (1980) found a high correlation between the low-frequency cut-off of the amplified spectrum and perceived sound quality; their subjects preferred lower cut-off frequencies. Similarly, Franks (1982) found that although subjects with hearing impairments were unable to detect or appreciate the high-frequency components of music, they did perceive and appreciate the low frequencies.

Yanick (1977), reporting on the results from 12 subjects wearing hearing aids with and without transient intermodulation distortion, concluded that the hearing aid amplifier which minimized distortion was consistently more effective in improving the clarity of speech sounds. Witter and Goldstein (1971), using 30 listeners with normal hearing to compare frequency response, THD, IMD and transient response, concluded that transient response was the best predictor of the listener's judgments of sound quality.

Kates and Kozma-Spytek (1994), studying the responses of eight listeners with normal hearing, showed that speech quality was significantly affected by peak clipping. Because their subjects could detect small amounts of distortion, and because peak clipping significantly affected speech quality, they proposed good sound quality as a design goal for hearing aids and concluded that clipping distortion should be minimized in all stages of a hearing instrument. Van Tasell and Crain (1992), in a study of adaptive frequency response hearing aids, suggested avoiding peak clipping altogether as a method of output limitation. Hawkins and Naidoo (1993) studied 12 subjects with mild-to-moderate hearing loss and found that they preferred the sound quality and clarity of compression limiting to those of asymmetrical peak clipping as a method of output limitation.
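Asymmetrical peak clipping also differs from symmetrical clipping in its harmonic content: clipping a sine symmetrically produces only odd harmonics, whereas clipping it asymmetrically also produces even harmonics such as the second. The sketch below shows this for a hypothetical sine of peak 2.0 clipped either at +1.0 and -1.0 or at +1.0 and -1.6; the levels are arbitrary.

```python
import math

FS, F0, N = 16_000, 500, 1_600  # illustrative sample rate, tone frequency, 50 whole cycles


def clip(x, upper, lower):
    """Hard-clip samples to the range [lower, upper]."""
    return [max(lower, min(upper, s)) for s in x]


def component_amplitude(signal, k):
    """Amplitude of the k-th harmonic of F0, from a single DFT bin."""
    f = k * F0
    re = sum(s * math.cos(2 * math.pi * f * i / FS) for i, s in enumerate(signal))
    im = sum(s * math.sin(2 * math.pi * f * i / FS) for i, s in enumerate(signal))
    return 2 * math.hypot(re, im) / len(signal)


tone = [2.0 * math.sin(2 * math.pi * F0 * i / FS) for i in range(N)]
outputs = {
    "symmetric": clip(tone, 1.0, -1.0),
    "asymmetric": clip(tone, 1.0, -1.6),
}
second_harmonic_pct = {
    name: 100 * component_amplitude(y, 2) / component_amplitude(y, 1)
    for name, y in outputs.items()
}
for name, pct in second_harmonic_pct.items():
    print(f"{name} clipping: 2nd harmonic = {pct:.2f}% of the fundamental")
```

The second harmonic appears only when the clipping is asymmetric; symmetric clipping leaves it essentially at zero while still generating odd harmonics such as the third.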

Several studies have reported on distortion in relation to the different types of output stage used in hearing aids. Kochkin and Ballad (1991) reported that participants in a focus group study, and 110 participants in listening tests at a trade show exhibit, felt that a Class D output stage produced higher sound quality than a Class A output stage. This difference was theorized to result from the greater headroom available in the hearing aids with the Class D output stage (Longwell and Gawinski, 1992). However, electroacoustic data on the hearing aids were not reported, and to evaluate these results properly it is important to know whether the test devices had identical gain, frequency responses, saturation levels and peak frequencies (Johnson and Killion, 1994).

Agnew (1997a) had seventeen listeners with hearing impairments compare the sound quality of two kinds of hearing aid, both with a Class D output stage: hearing aids containing circuitry to prevent saturation distortion, and linear hearing aids that were allowed to amplify into saturation. The stimuli were a female talker, orchestral music and solo piano music presented at input levels of 60, 80 and 90 dB SPL. With 60 dB SPL input, where the measured distortion was low, there was no particular preference for either hearing aid, and the differences noted were not statistically significant. With 80 and 90 dB SPL inputs, the listeners preferred the sound quality of the hearing aid with the antisaturation compression, which had significantly lower distortion than the standard hearing aid, and at these higher input levels the preferences were statistically significant.

Agnew and Mayhugh (1997) reported on a perception study using fourteen listeners with normal hearing to determine whether distortion differences could be perceived between two hearing aids with Class D output stages and identical ANSI specifications: a linear hearing aid, and one with compression added to minimize saturation distortion. The stimuli were recordings made through the hearing aids at different input levels, then equalized to remove the effects of loudness on the judgments. In three-alternative forced-choice trials, the listeners consistently and correctly identified the hearing aid with the higher measured distortion.
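In a three-alternative forced-choice trial, a listener who hears no difference still picks the correct alternative one time in three, so consistently correct identification is judged against that chance rate. The sketch below computes the binomial probability of scoring at least k correct out of n trials by guessing alone; the trial counts are hypothetical, since the number of trials is not given here.

```python
from math import comb


def p_at_least(k, n, p_chance=1 / 3):
    """Probability of k or more correct responses in n forced-choice trials by guessing,
    where p_chance is the per-trial chance rate (1/3 for three alternatives)."""
    return sum(comb(n, i) * p_chance ** i * (1 - p_chance) ** (n - i)
               for i in range(k, n + 1))


# Hypothetical example: 10 trials.
print(f"{p_at_least(10, 10):.2e}")  # 1.69e-05 (all 10 correct by chance)
print(f"{p_at_least(8, 10):.4f}")   # 0.0034   (8 or more correct by chance)
```

The smaller this probability, the less plausible it is that the listeners were guessing; math.comb requires Python 3.8 or later.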

Palmer et al (1995) reported sound quality judgments obtained in a comparison of hearing aids with a starved Class A output stage and hearing aids with a Class D output stage. The term “starved” here indicates a Class A output stage deliberately biased for low current drain, and therefore unable to keep distortion low. The subjects rated the Class D circuit as having superior sound quality. Palmer et al (1995) noted, however, that the study was not intended to compare Class D and Class A amplifiers, but to compare a Class D output stage with a “starved” Class A output stage. They also noted that when the Class A amplifier was rebiased to draw significantly more current, its performance became comparable to that of the Class D circuit, with no obvious audible difference.

Agnew et al (1997) studied the relationship between headroom and perceived sound quality measures for a Class A output stage compared to a Class D output stage with the same measured SSPL90 value. Measured THD and IMD levels were approximately the same for both output stages at low input levels; however, at higher input levels, the output stage with lower headroom produced higher distortion. When the hearing aids were matched to have equal SSPL90, the hearing aid with the Class A output stage produced higher levels of distortion than the Class D output stage, particularly for input levels of 75 dB SPL and 90 dB SPL. When the SSPL90 of the hearing aid with the Class A output stage was raised 4 dB over that of the Class D in order to provide equivalent headroom in the two output stages, the Class D circuit had higher levels of distortion than the Class A circuit. Subjective preferences of sound quality obtained from four hearing aid wearers coincided with the trends in objective electroacoustic measurements of THD and IMD made for both conditions. There was generally a preference for the hearing aid circuit that exhibited lower distortion, particularly with high input levels.

Although the studies reported in the literature show a link between increasing distortion and decreasing perceived sound quality, a definitive correlation has not yet been demonstrated. For example, in the study of Agnew et al (1997), even though the distortion was lower for the Class D than for the Class A circuit under the same conditions, there was a subjective preference for the Class A. Trends in the data suggested that other, subtler features of sound quality perception, not quantified by the rating scales used in that study, were also involved, which left the link between the distortion measurements and the sound quality perceptions inconclusive.

The Effect of Distortion on Speech Intelligibility

There have also been attempts to link increased hearing aid distortion to reduced speech intelligibility. However, a clear-cut link between hearing aid distortion and intelligibility has not yet been established (Peters and Burkhard, 1968; Curran, 1974; Williamson et al, 1987). This is probably because speech is highly redundant, so a highly disruptive combination of corrupting influences must be present in the signal, the hearing mechanism and the hearing aid before intelligibility is significantly degraded.