INTRODUCTION

As is widely known, the pace of technological change in electronics and computer-related communications has been extremely rapid in the past decade. The effect of this remarkable transformation, including a proliferation of personal computers and greatly improved telecommunication systems, has been to alter the daily lives of most citizens in the developed regions of the world. Previous technically-driven sociological transformations were coupled to prominent developments, such as the printing press, the internal combustion engine, or television. The current great metamorphosis has the underlying driving theme of “digital.” Indeed, there is the suggestion that a new elite social class is emerging, which the Wall Street Journal (October 28, 1996) has labeled with tongue in cheek, “the digerati.” The obvious implication is that expertise, or at least minimal understanding, of digital developments is an inescapable and essential aspect of the knowledge base for those who intend to “keep up” with the rapid changes that will inevitably continue. The digital transformation in hearing aids is certainly a profound technological “trend” in amplification as this article will illustrate.

The introduction of digital signal processing into hearing aids has been heralded with great acclaim and high expectations. The promise of such technology has always included advantages such as better fidelity, greater flexibility, improved performance in noise, and greater restoration of function for a variety of perplexing auditory deficits. Numerous gallant attempts and a variety of disappointments have preceded what appears to be the final emergence of a sustainable class of commercially available digital signal processing (DSP) hearing aids. Kruger and Kruger (1994) proposed that the late 1990s would be known as the “period of the digital hearing aid,” and indications of the accuracy of that forecast continue to accumulate. Indeed, it seems clear that digital hearing aids have not only arrived after a multidecade, often fitful and arduous birthing process, but are almost certainly beginning to claim their long awaited place as the dominant mode of rehabilitative amplification. The path to fulfillment of such promise deserves review and study by professionals involved in the application of all types of hearing aids.

SCOPE

This issue of Trends will explore some of the fundamental challenges of “digitizing” the hearing aid industry, and then discuss specific attributes of some of the early entries into the arena. The issue will conclude with a brief glance at developments that may follow in the near future. The intention is to provide, for a wide range of readers, useful information regarding the rapidly developing digital technologies for auditory rehabilitation purposes. The number of research efforts with direct links to the realization of commercially viable digital hearing aids far exceeds the space available for this manuscript. Apologies are due to the many investigators not cited in the limited pages here.

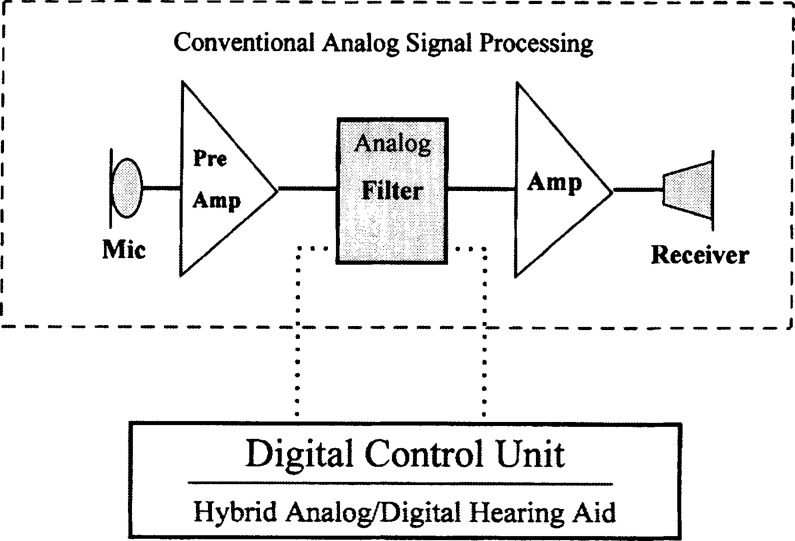

Several authors (e.g., Widen, 1987, 1990; Miller, 1988; Levitt, 1993a) have attempted to clarify the differences between traditional analog hearing aids, the so-called “hybrid” class, which incorporated digital program instructions, and those devices that are genuinely digital in terms of their audio processing. Agnew (1996a) correctly termed the hybrid class Digitally Controlled Analog (DCA) hearing aids. The DCA programmable hearing aids were sometimes confusing to consumers (and some dispensers) because of the use of the word “digital” in the marketing materials. In terms of audio signal processing, however, hybrid programmable devices retained the analog properties of traditional non-programmable hearing aids. This is illustrated in Figure 1. Conventional analog and DCA “hybrid” hearing aids will receive little further explanation here, because this paper focuses entirely on fully digital processing approaches. However, the historic progression of commercial hearing aids from analog to hybrid to digital (DSP) forms is undeniable and should not be dismissed as unimportant.

Figure 1.

Simplified block diagrams illustrating the basic elements of analog and “hybrid” hearing aids. The upper area, within the dashed line, contains the simplest elements of a conventional analog hearing aid. The addition of the digital control box was the essential requirement for producing digitally programmable “hybrid” devices.

HISTORICAL WORK TOWARDS DIGITAL HEARING AIDS

Many decades of evolutionary progress within the technical domains of electronics, signal processing theory, telecommunications, and low voltage power supply production have all contributed to the arrival of viable, consumer-acceptable digital hearing aids. Digital signal processing techniques were first applied to speech as simulations of complex analog systems. Early simulations were very slow, requiring as much as an hour or more for just a few seconds of speech (Rabiner and Schafer, 1978). In the mid-1960s, major improvements in computer processing speeds and digital theory made it clear that digital systems had virtues far exceeding their value as simple simulation devices. The telecommunications industry, in particular, was strongly motivated to continue work on digital speech processing in the expectation of pay-offs in noise resistance and transmission economy.

Digital hardware advantages of reliability and compactness became increasingly evident in the late 1960s and early 1970s. Continued increases in the speed of logic devices and the extended development of integrated circuits (ICs) also contributed. These could perform large numbers of computations on a single chip and meant that many processing functions could be implemented in real time at speech sampling rates.

With regard to hearing aid-specific research and development in the United States, Levitt and his colleagues at the City University of New York (CUNY) and Engebretsen and his associates at the Central Institute for the Deaf (CID) deserve credit for many early and sustained contributions. The CUNY team began exploring computerized psychophysical testing in the mid-1960s, while the CID team extended their work with computerized evoked potentials into digital speech systems. At CUNY, those efforts eventually resulted in a digital master hearing aid built around an array processor (Levitt, 1982), before high-speed DSP chips had been developed. Array processors, computing devices that process large arrays of numbers simultaneously, were an important link in the developmental steps leading to digital hearing aids. They allowed computationally intensive manipulations to be carried out at high speed. This is an essential requirement for digital processing of rapidly changing acoustical signals, since DSP processing loads are often measured in millions of instructions per second (MIPS).

At CID, versions of early DSP laboratory digital hearing aids went through several evolutionary iterations beginning in the early 1980s (Engebretsen, 1990). As in other labs, the developmental cycle was accelerated by incorporation of a number of processing techniques that were first developed for the telecommunications industry. A body-worn, battery operated experimental digital hearing aid was running at CID by 1985.

Technical progress in a relatively small industry, such as that involved in the manufacture of hearing rehabilitation products, depends heavily on developments in the larger supplier industries, such as the makers of semiconductors and embedded software (programmable hardware). Hence, the development in the 1980s of fast DSP chip technology in a small size was quickly followed by a number of other notable efforts towards construction of wearable DSP hearing aids (Cummins and Hecox, 1987; Dillier, 1988; Engebretsen, 1993; Engebretsen et al, 1986; Harris et al, 1988; Nunley et al, 1983).

The accomplishments of other teams at such diverse locations as Bell Labs, Brigham Young University, Gallaudet University, the Massachusetts Institute of Technology (M.I.T.), the Universities of Minnesota, Wisconsin, Wyoming, Utah, and numerous labs in Northern Europe and Japan, were plentiful. They served to generate the precedents and sustained impetus towards products that were finally released in the second half of the 1990s. The commercial efforts at Audiotone, Nicolet, and Rion were also of undeniable importance in the historic path to consumer-ready hearing aid products of digital design.

Audiotone (Nunley et al, 1983; Staab, 1985) had an early working true digital hearing processor of body-aid configuration. It had essentially no commercial circulation, but was a noteworthy technical development. The Phoenix body-worn digital processor developed by Nicolet connected to one or two behind-the-ear units (Hecox and Miller, 1988; Roeser and Taylor, 1988; Sammeth, 1990a). The company eventually attempted to produce a self-contained, rather large-sized BTE, with three 675-size batteries stacked one on top of another, but production was very limited before the project was terminated. Description of the Rion product will follow in a later section.

Presently, as the momentum towards digital hearing aids has greatly accelerated, research in numerous places throughout the world will continue to affect the hearing aid industry in the coming decades. Many of the developmental accomplishments are excellent examples of partnerships between government agencies, such as the National Institutes of Health (NIH) and the Veterans Administration (VA), and university and commercial efforts. This may be especially true of the ongoing development of software algorithms that will enable digital products to perform previously unattainable processing operations with attractive clinical and listener benefits.

SOME DIGITAL SIGNAL PROCESSING FUNDAMENTALS

To some extent, digital signal processing is as old as some of the numerical procedures invented by Newton and Gauss in the 17th and 19th centuries (Roberts and Mullis, 1987). Dramatic reductions in the cost of hardware and increases in the speed of computation are the key evolutionary advances that led to this late-1990s discussion of digital processing in hearing aids. Readers will benefit from a solid understanding of some fundamental properties and terms relevant to DSP as applied to low power devices such as hearing aids. Some of the principles also apply to computerized diagnostic instrumentation, such as evoked potential measurement devices, while others are more specific to hearing aid applications. Sammeth (1990b) provided a useful glossary of the basic terms relevant to the topic. A number of additional terms (such as Sigma-Delta converters) have emerged since her publication, which is otherwise still valid and instructive.

From Analog Smooth to Digital Discrete

Many, including Hussung and Hamill (1990), Brunved (1996) and Negroponte (1995), have observed that the world of experience is essentially analog; that is, characterized by the appearance, at least, of continuity and smoothness. Smoothness of motion is consistent with most perceptual experiences. In analog systems, such as scales of sound pressure or temperature, essentially any fractionally reduced value can be assigned, thus providing the perception of a continuous flow of data. In a digital system, continuity is replaced by discreteness and a highly constrained, finite set of symbols. Monetary values are a familiar example of a discrete unit system: prices vary in finite, discrete steps. Similarly, reading this text requires familiarity with a fairly large, but finite, set of symbols (the alphabet and various punctuation marks).

The symbols in the simplest digital code (binary) are reduced to either “1” or “0.” As the word bi-nary implies, there are only two symbol choices from which to make decisions. One binary digit (bit) can be either of two symbols. Hence, in the digital domain, there are specific discrete, or step-like, values. Unlike an analog system with the possibility for many (theoretically, infinite) fractional sub-divisions, nothing is allowed in between the finite values of the code.

One could make the light-hearted suggestion that the first digital hearing aid was the hand cupped over the ear, the fingers of course being “digits.” Fingers still often serve a counting purpose and Lebow (1991) shared this topical limerick:

An inventive young man named Shapiro

Became a sensational hero,

By doing his sums

With only his thumbs,

And calling them One and Zero.

Furthermore, it might be noted that Longfellow attributed a kind of binary code to Paul Revere's historic ride with the famous “one if by land, two if by sea” lines.

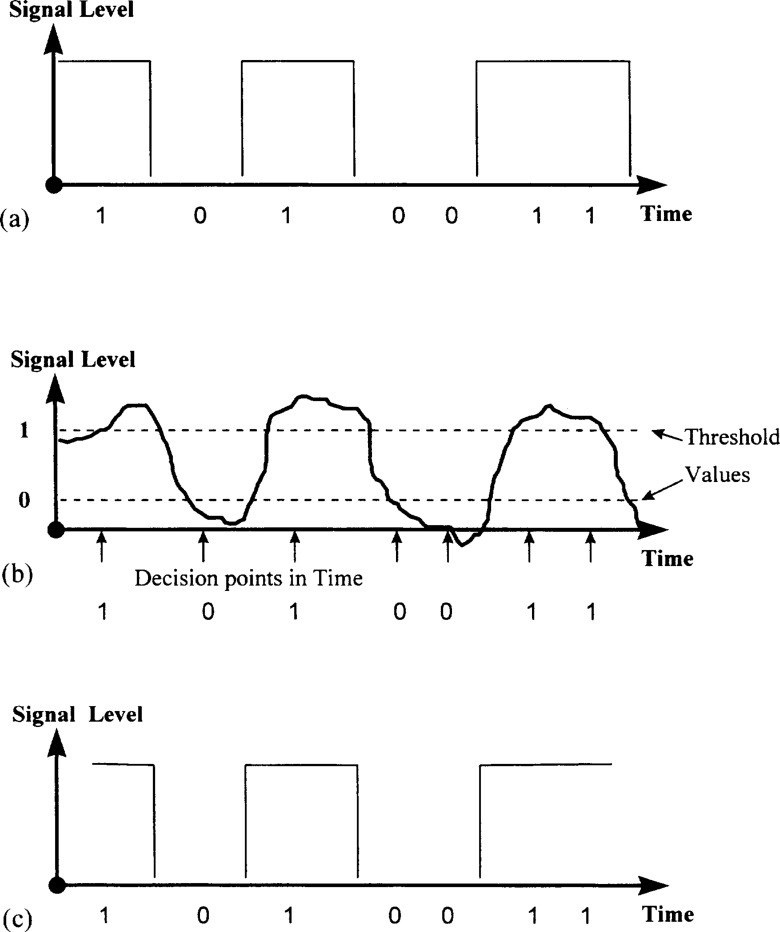

A binary code constructed of either of two digits has an immediate advantage of robustness. That is, it is relatively impervious to mistakes and interference from noise, since faithful transmission depends only on recognizing whether a “1” or a “0” has been sent. An illustration of this principle in telecommunications is given in Figure 2. Section (a) of Figure 2 shows a signal of the form 1010011. In section (b), waveform distortion and noise are quite evident as the signal is transmitted through a noisy medium. Nevertheless, the code received (c) is faithful to the source code, and an errorless receipt of 1010011 is accomplished even though the details of the waveform are obviously altered. Certainly it is possible for noise or distortion to be high enough to generate errors and to require increases in the signal strength. But the theory and science of digital information transmission through noise is highly advanced, and threshold values can generally be selected so that errors in deciding whether a sample point is a “1” or a “0” are kept minimal by proper application of the statistical tools used in digital theory (Bissell and Chapman, 1992). More discussion of this property of digital code will follow later.
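The threshold decision described above can be illustrated with a small simulation. The Python sketch below is a minimal illustration, not a model of any real transmission channel: it assumes ideal levels of 0.0 and 1.0, a decision threshold of 0.5, and uniformly distributed noise, all hypothetical values chosen for clarity.

```python
import random

def transmit(bits, noise_amplitude, seed=0):
    """Map each bit to an ideal level (0.0 or 1.0) and add uniform random noise."""
    rng = random.Random(seed)
    return [float(b) + rng.uniform(-noise_amplitude, noise_amplitude) for b in bits]

def receive(levels, threshold=0.5):
    """Decide '1' or '0' for each received level by comparing it to a threshold."""
    return [1 if v >= threshold else 0 for v in levels]

source = [1, 0, 1, 0, 0, 1, 1]             # the 1010011 code from the text
noisy = transmit(source, noise_amplitude=0.3)
decoded = receive(noisy)
print(noisy)     # the "waveform" values are visibly altered...
print(decoded)   # ...but each either/or decision still recovers the original bit
```

As long as the noise stays smaller than the margin between each ideal level and the threshold (0.5 here), every decision is correct; larger noise makes errors possible, which is the case the text notes may require a stronger signal.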

Figure 2.

An illustration of the robustness of a binary code system. A signal of the form 10110001 is indicated in section (a). In (b) this signal is subjected to noise and distortion; however, because of the either/or property of the code, with decision values for 1 and 0, the derived signal (c) is a faithful rendering of the binary message.

The appearance in Figure 2 of a pulse-like sequence in time is a useful image for conceptualizing the streams of 1s and 0s basic to digital processing. Some type of modulation of a stream of pulses is a fundamental aspect of digital signal transmission and control (Lebow, 1991).

Although a binary code contains only two symbols, it can represent larger values by adding digits: appending a 0 to the end of a binary string doubles its value (for example, the decimal numbers 1, 2, and 4 are rendered as 1, 10, and 100 in binary). In contrast, of course, appending a 0 to the end of a decimal number multiplies it by 10. A simple binary progression contrasted with the more familiar decimal code is given in Table 1.

Table 1.

Progression of a Binary Code as contrasted to the more familiar Decimal System.

| Decimal System | Binary Code |
|---|---|
| 0 | 0 |
| 1 | 1 |
| 2 | 10 |
| 3 | 11 |
| 4 | 100 |
| 5 | 101 |
| 6 | 110 |
| 7 | 111 |
| 8 | 1000 |
| 9 | 1001 |
| 10 | 1010 |
| 16 | 10000 |
| 32 | 100000 |
| 64 | 1000000 |
| 128 | 10000000 |
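The rows of Table 1 and the doubling property can be checked directly. This Python sketch uses the built-in `format(n, "b")` to produce binary strings like those in the table, and shows that appending a 0 to a binary string doubles the number, just as appending a 0 to a decimal string multiplies it by 10. The helper names are illustrative only.

```python
def to_binary(n: int) -> str:
    """Return the binary digits of a non-negative integer, as in Table 1."""
    return format(n, "b")

def append_zero_binary(n: int) -> int:
    """Append a 0 to the binary string of n and read the result back as an integer."""
    return int(to_binary(n) + "0", 2)

def append_zero_decimal(n: int) -> int:
    """Append a 0 to the decimal string of n and read the result back as an integer."""
    return int(str(n) + "0")

for n in [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 16, 32, 64, 128]:
    print(n, to_binary(n))

print(append_zero_binary(5))   # 101 -> 1010, i.e. 10
print(append_zero_decimal(5))  # 5 -> 50
```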

It is especially important to appreciate that the number of possible code combinations in the binary system increases as 2^n, where n is the number of bits. Hence, a string of four binary digits (4 bits) can form only 16 possible states (0000 through 1111, such as 1001, 0011, 0001), 8 bits can form 256 permutations beginning with 00000000 and ending with 11111111, while a 16-bit binary number has 65,536 possible states. Each added bit doubles the number of available values, so the relationship between an increasing number of bits and a substantially increased complexity of signal representation should be clear to the reader.
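The 2^n growth can be confirmed by brute-force enumeration. This short Python sketch (illustrative only) lists every n-bit pattern with `itertools.product` and counts them:

```python
from itertools import product

def all_patterns(n_bits: int) -> list[str]:
    """Every distinct string of n_bits binary digits, from 00...0 to 11...1."""
    return ["".join(p) for p in product("01", repeat=n_bits)]

for n in (4, 8, 16):
    patterns = all_patterns(n)
    # The count always equals 2 ** n
    print(n, "bits:", len(patterns), "states, first", patterns[0], "last", patterns[-1])
```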

To the engineering community, DSP is actually a special case of a larger class of “discrete-time signal processing” (Ahmed and Natarajan, 1983; Oppenheim and Schafer, 1989). That is, one could slice up an analog signal into specified, discrete, time points but still allow the magnitude to take on all possible values over some range, and thereby remain continuous. This time-domain discretizing of analog data, which can be done by switched capacitor filters sampling analog data, actually describes the process used in some hybrid analog-digital applications (Levitt, 1987; Preves, 1990). However, the rules, or algorithms, for true DSP operations use discrete-time and discrete-amplitude premises and assumptions, although the theory relating to DSP assumes only discrete-time properties (Simpson, 1994).

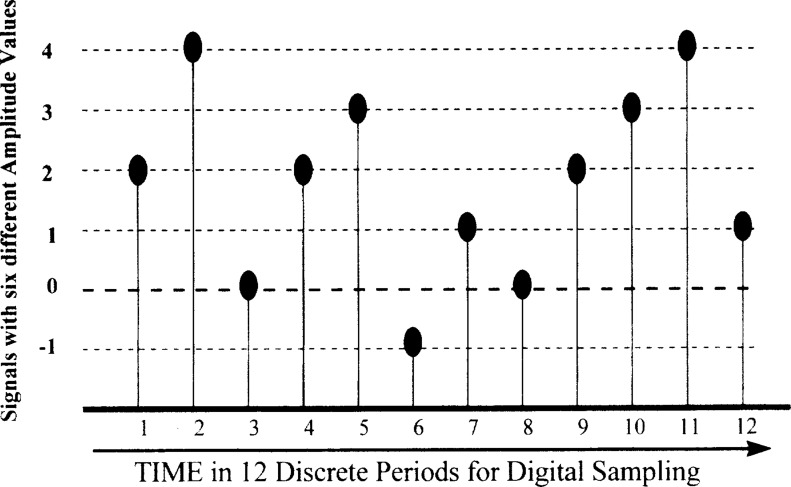

To summarize, then, unlike analog signals, and some types of discrete time signals, digital signals are discrete in both time and amplitude. Hence, to be processed as digital signals they must be characterized by their specific locations in discrete time and level (Proakis and Manolakis, 1996). Figure 3 illustrates the positioning of digital signals at discrete points in time and magnitude, an essential requirement for digital signal processing.

Figure 3.

For signals to be processed digitally they must exist as discrete in both time and magnitude. This figure indicates a signal represented at six possible magnitudes on an arbitrary scale. Each signal point is positioned at a specific location in hypothetical time.

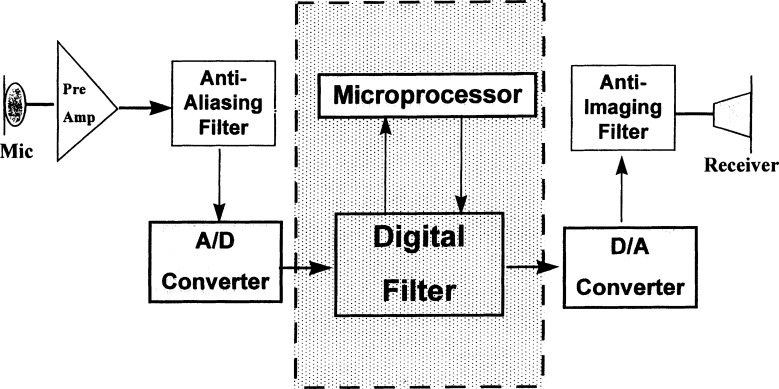

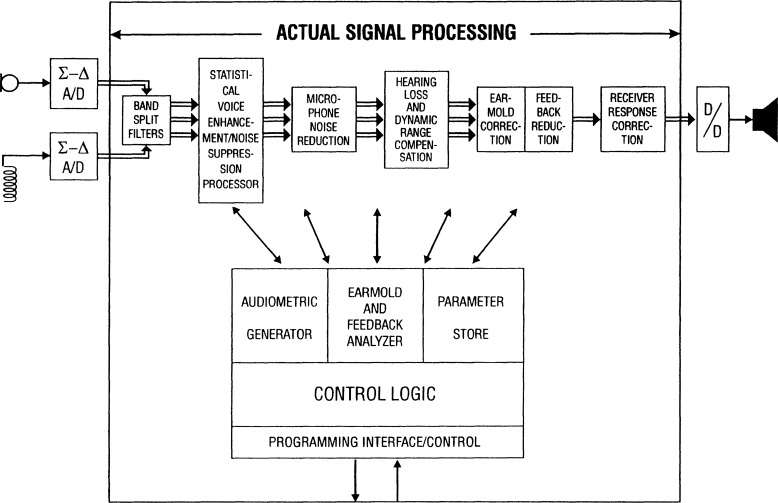

Architecture for a Prototype Digital Hearing Aid

It should now be clear to the reader that digital signal processing refers to the manipulation of a binary numeric code prior to converting the signal back to its analog form. For hearing aid purposes, the output signal will presumably be manipulated in a manner specified to achieve some desirable adjustments, such as spectral shaping or amplitude compression, in order to address the user's communication requirements. To help introduce some of the unique operations of digital processing in hearing aids, a basic schematized prototype might be helpful at this time. A layout for a generic digital hearing aid was outlined by Levitt (1993a), and a rendering of that basic flow chart for a prototype DSP hearing aid is shown as Figure 4.

Figure 4.

Basic architecture for a digital hearing aid indicating several components not found in analog or hybrid hearing aids (Figure 1). This layout is an adaptation of one by Levitt (1993a) with permission.

The reader will observe that these fundamentals, as suggested by Levitt, will vary somewhat as “enabling technologies” become available. Nevertheless, Figure 4 is a useful starting point for the reader to become familiar with the signal management concerns in digital hearing devices.

Several of the components will receive more attention later in this article, but a few preliminary comments about Figure 4 may help readers who are new to digital processing.

The Anti-Aliasing and Anti-Imaging filters serve to reduce distortions due to certain properties of digital signal processing. Hence, those specific filters are not found in conventional hearing aids (Figure 1). The two “converter” boxes refer to components in the hearing aid design that transform the signal into either digital or analog states. Throughout the first part of this article reference will be made to Figure 4 to either elaborate a concept or to illustrate contrasting innovations.

Sampling and Quantization

Since acoustical signals are, by nature, continuous-time and continuous-amplitude (that is, analog), a process of conversion into discrete-time and discrete-amplitude (digital) signals must precede any actual processing. The conversion of an analog signal to digits involves two fundamental and related processes. The first is sampling. The sampling process involves the generation of a train of pulses at specified intervals in time. These pulses “sample” the waveform by capturing the amplitude at each of those intervals. The more frequently those sampling pulses occur, the more samples will be obtained within a given period, or window, of time. Hence, more detail of the changes in a signal occurring over time can be captured by sampling at a higher rate.

At each of the sample points the amplitude of the waveform is converted from a continuous analog signal to a number (digit) consistent with the precision of the measurement. This stage of converting the amplitudes of the waveform at the sample points into digits is called quantization. The combination of sampling and quantization is what is typically referred to as the analog to digital (A/D) conversion.
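To make the two steps concrete, the following Python sketch samples a pure tone at a chosen rate and then quantizes each sample to a signed integer code. The tone frequency, sampling rate, amplitude, and 8-bit word width are hypothetical values chosen for illustration, and the simple rounding quantizer is a generic textbook choice rather than the design of any particular hearing aid.

```python
import math

def sample(freq_hz, fs_hz, n_samples, amplitude=0.9):
    """Sampling: read a sine wave's amplitude at the instants t = n / fs."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / fs_hz) for n in range(n_samples)]

def quantize(x, bits):
    """Quantization: map a value in [-1, 1] to the nearest signed integer code."""
    max_code = 2 ** (bits - 1) - 1        # e.g. 127 for 8 bits
    min_code = -(2 ** (bits - 1))         # e.g. -128 for 8 bits
    code = round(x * max_code)
    return max(min_code, min(max_code, code))   # clip to the representable range

samples = sample(freq_hz=1_000, fs_hz=16_000, n_samples=16)   # one cycle of a 1 kHz tone
codes = [quantize(s, bits=8) for s in samples]
print(codes)
```

Together, the two functions perform a simplified A/D conversion: `sample` fixes the signal in discrete time and `quantize` fixes it in discrete amplitude.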

Decisions regarding the rate, or frequency, of sampling and the number of binary units (bits) that will represent the amplitude properties of the waveform directly affect how faithfully the analog signal is represented in digital code. As a general rule, the faster the sampling rate and the greater the number of bits used to represent the data, the closer the digital signal will be to the original analog signal.

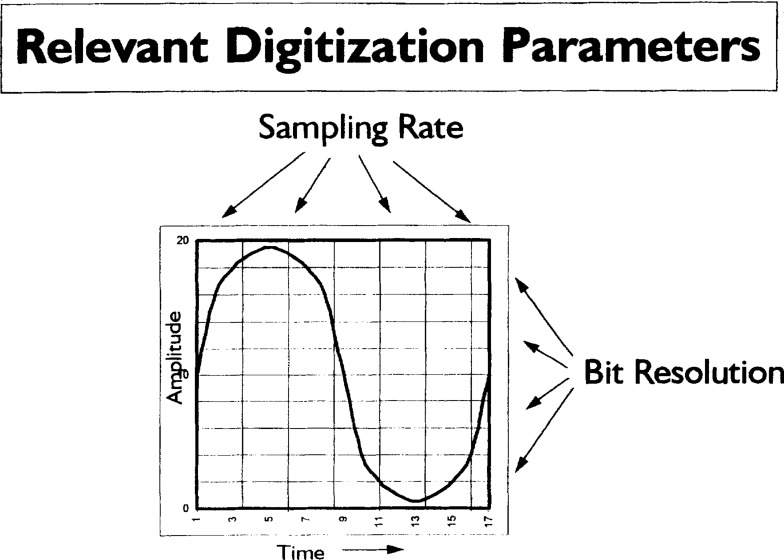

The number of bits (sometimes referred to as the “word width”) determines the “digitizer resolution,” that is, the accuracy of the amplitude detail. This may also be expressed as the quantization granularity. The sampling rate (typically expressed in samples per second, or Hz), on the other hand, has a direct bearing on the frequency bandwidth that can be accurately represented. The illustration in Figure 5 should help readers new to these concepts see how the sampling rate and the number of bits relate to signal resolution.
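The link between word width and amplitude accuracy can be measured numerically. The Python sketch below assumes a generic rounding quantizer with a uniform step of 2/2^bits over the range -1 to 1 (an illustrative model, not any manufacturer's converter) and sweeps many input values to find the worst-case quantization error for several word widths. Each added bit halves the step size, and the worst-case error stays within half a step.

```python
def quantize_to_level(x, bits):
    """Round x in [-1, 1] to the nearest point on a uniform grid with step 2 / 2**bits."""
    step = 2.0 / (2 ** bits)
    return round(x / step) * step

def worst_case_error(bits, n_points=10_000):
    """Largest |error| over a dense sweep of input values from -1 to 1."""
    xs = [-1.0 + 2.0 * i / n_points for i in range(n_points + 1)]
    return max(abs(x - quantize_to_level(x, bits)) for x in xs)

for bits in (4, 8, 12, 16):
    step = 2.0 / (2 ** bits)
    print(f"{bits:2d} bits: step = {step:.6f}, worst error = {worst_case_error(bits):.6f}")
```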

Figure 5.

The two primary parameters that determine digital signal resolution: the sampling rate in the time domain, which determines the frequency range that can be represented, and the number of bits per sample, which determines amplitude resolution. (Adapted with permission from Oticon.)

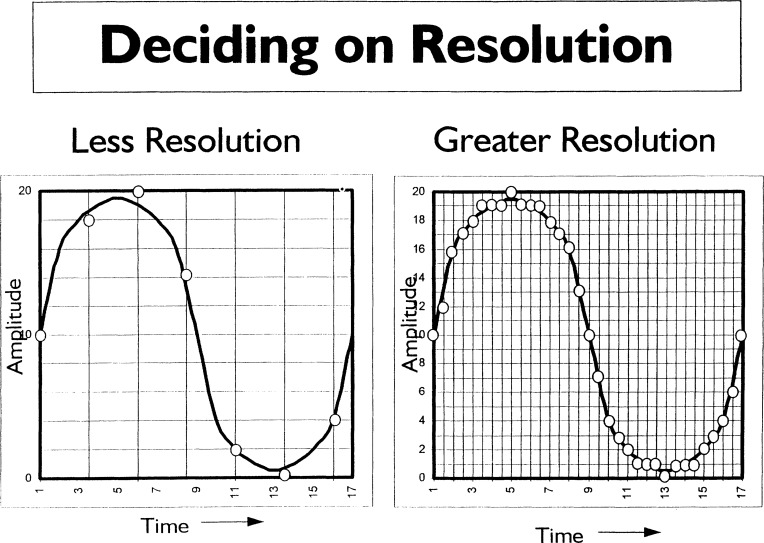

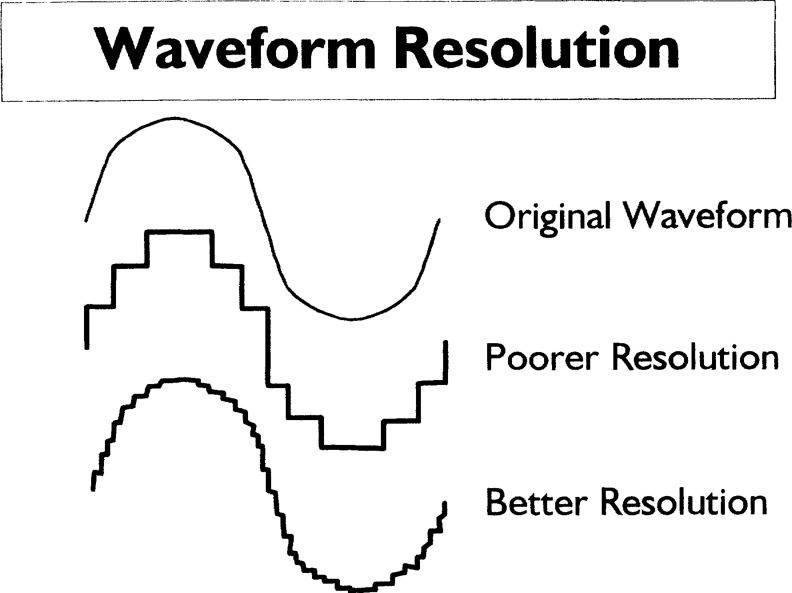

Additional illustrations of these fundamental concepts will be found in Figures 6 and 7.

Figure 6.

The effects on a waveform of low (left) and high (right) resolution. Finer gradations of resolution result in signals more closely representing analog inputs. (Adapted with permission from Oticon.)

Figure 7.

Illustration of two digital renderings of a smooth analog signal (top) with discrete step sizes associated with relatively poor and good levels of digital resolution. (Adapted with permission from Oticon).

The marketing departments for digital audio products often boast about the number of bits or the sampling rates of their products. But a point of diminishing returns is eventually reached, beyond which adding bits to the conversion process increases cost without conveying any audible improvement (Harris et al, 1991). Indeed, Rosen and Howell (1991) comment that, for most digital audio systems using 10 bits or more, the quantization is fine enough to represent the original signal so well that the effects of quantization are essentially unimportant. Recall that 8-bit quantization provides 256 amplitude levels; 10 bits provide 1,024.
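One common way to quantify this diminishing return is the textbook rule of thumb that an ideal N-bit quantizer driven by a full-scale sine wave has a signal-to-quantization-noise ratio (SQNR) of about 6.02N + 1.76 dB. The Python sketch below simply evaluates that formula alongside the number of amplitude levels; it describes an idealized converter, not the measured performance of any hearing aid.

```python
import math

def quantization_levels(bits: int) -> int:
    """Number of distinct amplitude values an N-bit word can represent."""
    return 2 ** bits

def ideal_sqnr_db(bits: int) -> float:
    """Ideal SQNR for a full-scale sine: 20*log10(2**N) + 1.76 dB, about 6.02 dB per bit."""
    return 20 * math.log10(2 ** bits) + 1.76

for bits in (8, 10, 12, 16):
    print(f"{bits:2d} bits: {quantization_levels(bits):6d} levels, ~{ideal_sqnr_db(bits):.1f} dB")
```

Each added bit buys only about 6 dB, while the number of levels (and, in practice, converter cost and power) doubles.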

Aliasing

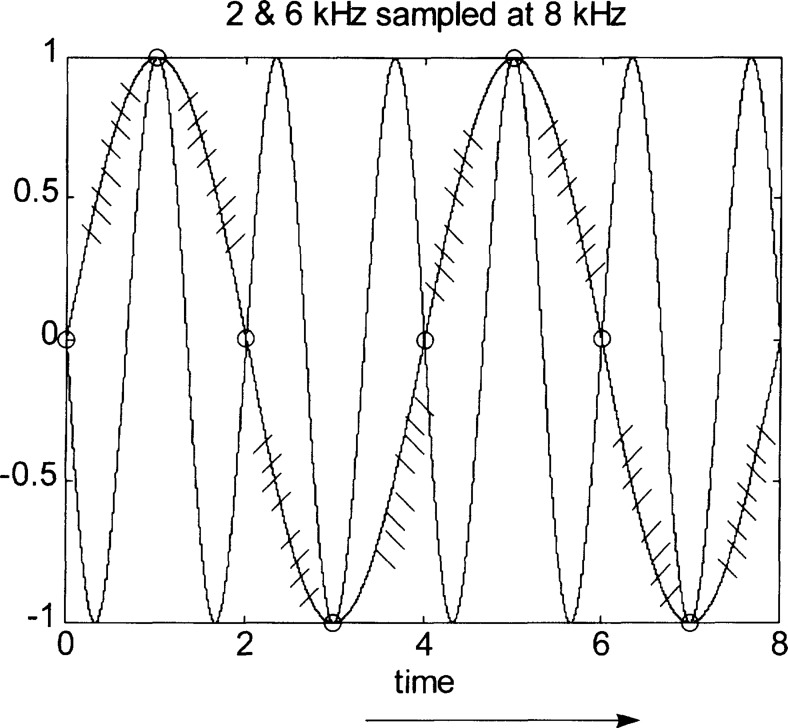

A basic rule of digital sampling is that the analog to digital (A/D) conversion stage (see Figure 4) must sample the input signal at a rate (or frequency) at least twice as high as the highest frequency to be included in the signal processing. This elegant and powerful rule is known as the Nyquist-Shannon sampling theorem, or simply the Nyquist criterion, named for Harry Nyquist, who preceded Claude Shannon at Bell Laboratories. Sampling at rates below this Nyquist rate can produce a type of error known as aliasing. These errors, which appear as false, or “alias,” frequencies, result from an insufficient number of sample points per cycle. Frequencies above half the sampling rate cannot be distinguished from lower frequencies at the sample points, so they appear in the analysis as frequencies that are not actually present in the input. To illustrate this “folding” of high frequencies down into the analysis range, consider Figure 8.

Figure 8.

Example of aliasing. An input of 6 kHz, when sampled at 8 kHz will generate an alias frequency of 2 kHz. Sampling theory indicates that without filtering, an alias signal frequency of fs – fm, will appear, where fs is the sample frequency and fm is the frequency of maximum signal. Hence, 8 kHz − 6 kHz equals 2 kHz.

Figure 8 shows the waveforms of 2 kHz and 6 kHz signals sampled at a rate of 8 kHz. The small circles show the sample points (pulses) that capture the entering signals. The Nyquist rule indicates that if signals as high as 6 kHz are to be included in the sampling, then the sampling frequency must be at least 12 kHz (twice the upper signal frequency). Figure 8 indicates that the sample points fall on both the 2 kHz and the 6 kHz waveforms at exactly the same instants. As a result, a 6 kHz signal would be misrepresented as a 2 kHz signal, because the sample rate is not fast enough to capture the details of the more rapidly changing 6 kHz waveform. In other words, the aliased (2 kHz) signal shown in Figure 8 could be due either to a true 2 kHz signal or to 6 kHz folding into the analysis range. The same would of course be true for other frequencies. To be precise, for a signal frequency between fs/2 and fs, sampling theory dictates that an alias signal frequency of fs – fm will appear, where fs is the sample frequency and fm is the frequency of maximum signal to be analyzed or processed. In the present case, 8 kHz − 6 kHz equals 2 kHz.
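The Figure 8 example can be reproduced numerically. In this Python sketch (illustrative only), cosines are used so that the 2 kHz and 6 kHz tones give identical values at every sample instant; with sines, the samples would match in magnitude but be inverted in sign. The helper `alias_frequency` applies the general folding rule, |f − k·fs| with k the integer nearest to f/fs, which reduces to fs – fm when fm lies between fs/2 and fs.

```python
import math

def alias_frequency(f_hz, fs_hz):
    """Apparent frequency of a tone at f_hz after sampling at fs_hz (folded into 0..fs/2)."""
    return abs(f_hz - fs_hz * round(f_hz / fs_hz))

def sampled_cosine(f_hz, fs_hz, n_samples):
    """Values of a unit cosine at the sample instants t = n / fs."""
    return [math.cos(2 * math.pi * f_hz * n / fs_hz) for n in range(n_samples)]

FS = 8_000
true_2k = sampled_cosine(2_000, FS, 16)
input_6k = sampled_cosine(6_000, FS, 16)

# At the sample instants the two tones are indistinguishable
print(all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(true_2k, input_6k)))  # True
print(alias_frequency(6_000, FS))       # 2000: the fs - fm case from the text
print(alias_frequency(6_000, 16_000))   # 6000: no aliasing once fs exceeds 2 x 6 kHz
```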

To avoid aliasing errors, DSP designs generally must include anti-aliasing filtering before the A/D converter. Such filters are intended to block signals higher in frequency than half the sampling rate (the Nyquist frequency). They pass frequencies below that limit and reject those above it.

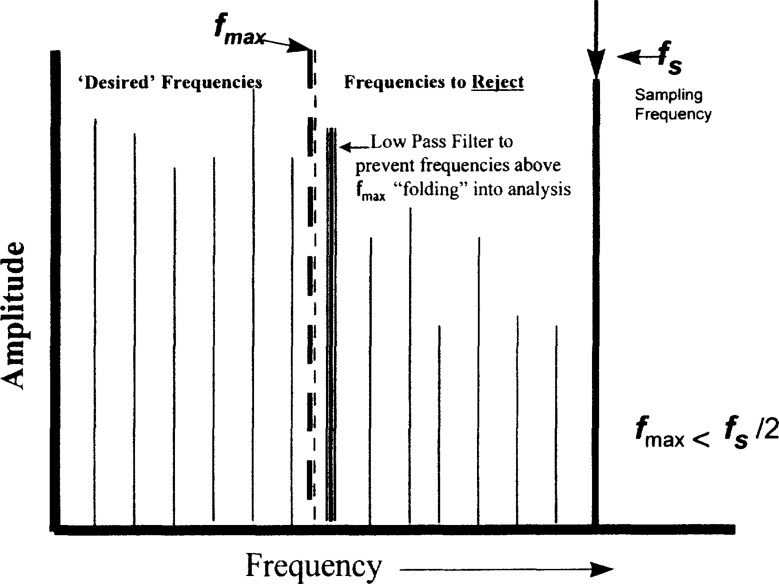

While Figure 8 indicates how aliasing may inject unwanted low frequencies based on the difference between the sampling rate and the maximum input signal (fs – fm), Figure 9 illustrates the use of low pass filtering to reject signals that exceed the Nyquist criterion. In Figure 9, an anti-aliasing filter is shown rejecting signals above the desired region. The frequency region that can be accurately sampled to represent the analog signal is constrained by the expression fmax < fs/2. That is, the maximum frequency must be less than one half the sampling frequency. As indicated above, if the highest frequency of interest in a hearing aid were 6 kHz, then the sampling frequency must be at least 12 kHz to ensure accurate signal representation in the digital domain.

Figure 9.

To address the alias problem, filter techniques are required to reduce the interference of aliasing by eliminating frequencies above the theoretical maximum predicted by the Nyquist theorem. Signals greater than fmax are excluded by the low pass filter (see text).
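The effect of an anti-aliasing low pass filter can be simulated. In a hearing aid this filtering happens before the A/D converter, in the analog domain (or through the microphone's own response); the Python sketch below only imitates it, using a finely sampled signal (a hypothetical 48 kHz rate) as a stand-in for the analog input and a windowed-sinc FIR filter with a Hamming window as a stand-in for the analog filter. This filter design is a common textbook technique chosen for illustration, not one described in the text. The cutoff is set a little below the 4 kHz Nyquist frequency of the 8 kHz converter from the Figure 8 example, leaving room for the filter's transition band.

```python
import cmath
import math

def lowpass_fir(cutoff_hz, fs_hz, num_taps=101):
    """Windowed-sinc (Hamming) low-pass FIR design; num_taps should be odd."""
    fc = cutoff_hz / fs_hz                       # cutoff in cycles per sample
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        k = n - mid
        # Ideal low-pass impulse response sin(2*pi*fc*k) / (pi*k), with its k = 0 limit
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]             # normalize to unity gain at 0 Hz

def gain_at(taps, freq_hz, fs_hz):
    """Magnitude of the FIR filter's frequency response at freq_hz."""
    w = 2 * math.pi * freq_hz / fs_hz
    return abs(sum(t * cmath.exp(-1j * w * n) for n, t in enumerate(taps)))

FS_SIM = 48_000      # hypothetical fine-grained rate standing in for the analog signal
FS_TARGET = 8_000    # converter rate from the Figure 8 example (Nyquist frequency 4 kHz)
taps = lowpass_fir(cutoff_hz=0.875 * FS_TARGET / 2, fs_hz=FS_SIM)   # 3.5 kHz cutoff

print(f"gain at 1 kHz: {gain_at(taps, 1_000, FS_SIM):.3f}")    # passed almost unchanged
print(f"gain at 6 kHz: {gain_at(taps, 6_000, FS_SIM):.5f}")    # strongly attenuated
```

With the 6 kHz component suppressed before sampling at 8 kHz, there is essentially nothing left to fold down to 2 kHz.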

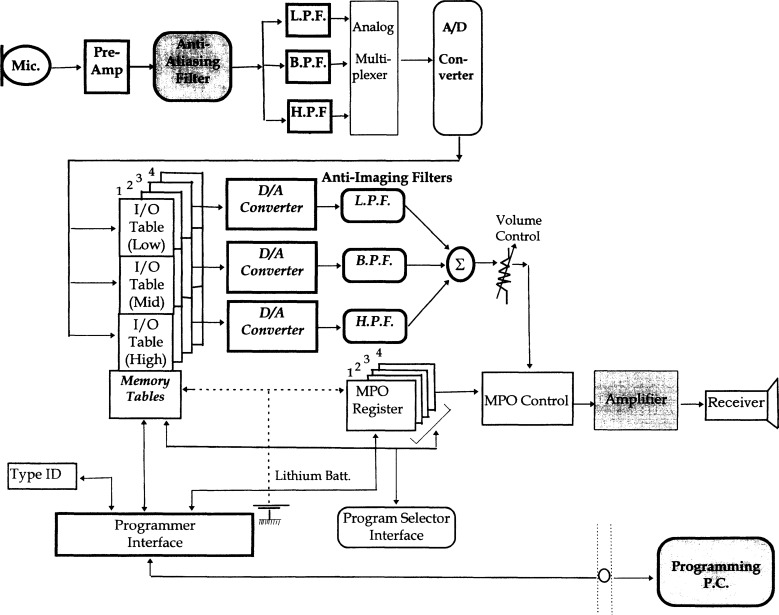

To summarize, an anti-aliasing filter is commonly located early in the signal path of a digital hearing aid to exclude signals above the desired maximum frequency. It is usually placed just before the A/D converter, as shown in Figure 4. Another schematic, of an actual digital hearing aid that reached limited commercial production, is shown in Figure 10. This figure illustrates a digital hearing aid manufactured by Rion, as reported by Schweitzer (1992). Among numerous other enhancements, such as four addressable memories, note again the presence of the anti-aliasing filter just after the pre-amplifier.

Figure 10.

Block diagram of the Rion HD-10 digital hearing aid. This well-constructed body type hearing aid was never officially introduced in the U.S.

The Rion HD-10 device was introduced as a relatively small body aid. It used a lithium battery to maintain memory and AAA cells for power. The HD-10 divided the signal into three control bands, indicated in Figure 10 as LPF, BPF, and HPF for Low Pass, Band (middle) Pass, and High Pass Filters. Users could select from four available program memories. The A/D converter came relatively late in the signal path of the Rion HD-10, which was released on a limited basis, mostly to the Asian market, beginning around 1991. Other notable components in Figure 10 are the “I/O” tables. These refer to the input/output curves that could be applied to each of the three bands so that appropriate gains (linear or compression) could be applied according to the user's audiological requirements at different input levels. Separate I/O configurations could be set for each of the four selectable memories, as could the maximum output level settings (MPO). The programming personal computer (PC) allowed the fitter to implement a wide range of gain response patterns in a very direct and expedient manner.
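The idea of per-band I/O tables stored in selectable memories can be sketched in code. The source does not describe the HD-10's actual I/O rules, so the Python sketch below assumes a generic input/output curve (linear gain up to a kneepoint, a fixed compression ratio above it, and an MPO ceiling); the class name, band labels, and every numeric setting are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class IOCurve:
    """Hypothetical I/O rule for one band: linear gain up to a kneepoint,
    compression above it, and a maximum power output (MPO) ceiling. Levels in dB."""
    gain_db: float
    knee_db: float
    ratio: float       # 1.0 means linear above the knee as well
    mpo_db: float

    def output_level(self, input_db: float) -> float:
        if input_db <= self.knee_db:
            out = input_db + self.gain_db
        else:
            # Above the knee, each `ratio` dB of input adds only 1 dB of output
            out = self.knee_db + self.gain_db + (input_db - self.knee_db) / self.ratio
        return min(out, self.mpo_db)    # never exceed the MPO setting

# One hypothetical program memory: an I/O curve for each of the three bands
memory_1 = {
    "LPF": IOCurve(gain_db=15, knee_db=50, ratio=1.0, mpo_db=110),
    "BPF": IOCurve(gain_db=25, knee_db=50, ratio=2.0, mpo_db=112),
    "HPF": IOCurve(gain_db=30, knee_db=45, ratio=3.0, mpo_db=115),
}

def apply_memory(memory, band_inputs_db):
    """Map each band's input level to an output level using that band's I/O curve."""
    return {band: memory[band].output_level(level) for band, level in band_inputs_db.items()}

print(apply_memory(memory_1, {"LPF": 60, "BPF": 70, "HPF": 75}))
```

A four-memory device would simply hold four such dictionaries, each with its own curves and MPO values, and switch among them.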

As various technologies advance, some conceptual requirements are met by new methods, or processes, and may no longer appear as specific physical components on the flow diagram. That is because a specific operation may be accomplished as a process nested within the newer design elements. This is now becoming the case for anti-aliasing filters. For example, a lowpass-filtered microphone may control and eliminate spurious high frequency components just as conventional filters do. In other words, if a microphone were chosen that was designed (or modified) to exclude signals above 6 kHz, the same protection against aliasing could be achieved at a sample rate of 12 kHz as if an actual filter unit had been inserted. This is the approach used by some manufacturers of commercial digital hearing aids: the filtering is simply accomplished by the properties of the microphone, which does not pass frequencies above the Nyquist frequency. Hence, while numerous textbooks have been written on the subject of digital filtering techniques, some advances in production efficiency can be made by the clever use and proper adjustment of indispensable components, such as microphones and receivers. This also applies to another type of filter (anti-imaging) that will be discussed in a later section.

Analog to Digital (A/D) Conversion Techniques

A considerable number of methods have been devised and used to accomplish the A/D stage of conversion. Haykin (1989) states that analog to digital conversion was often accomplished by a variation of some type of digital pulse modulation. He lists several types of digital pulse modulation, including Delta Modulation, Pulse-Code Modulation (PCM), and Differential Pulse-Code Modulation. The intent here is not to discuss the details of the different methods, but rather to illustrate that A/D conversion techniques are important and well developed. Horowitz and Hill (1989) describe six basic A/D conversion techniques and many additional variations. Their list includes parallel encoders, successive approximation, voltage-to-frequency conversion, single-slope integration, charge-balancing techniques, and dual-slope integration. They go on to list over 35 commercial A/D converters available at the time of their text. Setting aside the technical details of these methods, the goal of any A/D converter is to generate “a train of pulses which sample the waveform at periodic intervals of time by capturing its amplitude at those particular instants of time” (Lebow, 1991). Most methods, such as those mentioned above, appear to have evolved along with electronic computing devices that did not face the size and power constraints that characterize wearable hearing aids. Capacitors, counters, resistive networks, comparators, and buffers are among the elements required by most historic conversion processes. Both the size of these components and their vulnerability to value variations made all of these approaches questionable for digital hearing aid applications. As a general rule, the fewer the components external to the DSP core, the greater the overall efficiency of the device. Additionally, as Levitt (1993a) noted, the analog-to-digital converter in early digital hearing aids was a major source of power consumption.
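As an illustration of one technique on the Horowitz and Hill list, the Python sketch below models an idealized successive-approximation converter for a unipolar input. It ignores comparator noise, internal DAC mismatch, and timing, and its function name and defaults are hypothetical. The converter performs a binary search: starting from the most significant bit, it tentatively sets each bit, compares the input with the corresponding internal reference voltage, and keeps the bit only if the input is at least that large, using one comparison per bit.

```python
def sar_convert(v_in, v_ref=1.0, bits=8):
    """Idealized successive-approximation A/D conversion for 0 <= v_in < v_ref."""
    code = 0
    for b in reversed(range(bits)):
        trial = code | (1 << b)                    # tentatively set bit b
        dac_voltage = trial * v_ref / (2 ** bits)  # idealized internal DAC output
        if v_in >= dac_voltage:                    # comparator decision
            code = trial                           # keep the bit
    return code

print(sar_convert(0.5))                 # 128 (binary 10000000)
print(format(sar_convert(0.3), "08b"))  # 01001100 (decimal 76)
```

Each of the comparator, reference network, and control steps in this loop corresponds to hardware that a real converter must supply, which is part of why such approaches raised size and power concerns for wearable devices.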

A more recent approach to converting the analog input signal into discrete form uses what are known as Sigma-Delta techniques. This method requires no external components for trimming, which is desirable both for reducing power consumption and for lowering system noise levels. As of this writing, Sigma-Delta conversion is widely considered the best technique for digital audio products. Readers may recall that the Greek letters σ (sigma) and Δ (delta) are commonly used to represent summation and difference, respectively. The Sigma-Delta converter uses a very high sampling rate (often called ‘oversampling’), taking hundreds of thousands, or even a million (1 MHz), samples per second. The high-rate samples are encoded as a very robust stream in which each sample is represented by a single bit. The one bit indicates whether the signal has increased or decreased, and the resulting bits are then summed.

The conversion to a single-bit stream typically uses a Pulse Density Modulation (PDM) approach, another variation of pulse code modulation. In this case, density refers to how frequently pulses occur in the serial stream, a rate that changes over time with the signal. PDM signals are common in Compact Disc (CD) players because of the high efficiency and sound quality associated with this approach.
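The summing-and-differencing idea can be sketched in a few lines. What follows is a minimal first-order loop under stated assumptions: inputs are normalized to the range -1 to 1, the single bit is written as +1 or -1, and the function name `sigma_delta_first_order` is hypothetical. It is a textbook illustration, not a description of the converters in any of the products discussed here. The pulse density (fraction of +1 bits) follows the input level, so the average of the bits approaches the input value.

```python
def sigma_delta_first_order(samples):
    """Encode samples in [-1, 1] as a +1/-1 bit stream using a first-order loop."""
    integrator = 0.0
    feedback = 0.0
    bits = []
    for x in samples:
        # "Delta": difference between the input and the fed-back 1-bit output.
        # "Sigma": accumulate (sum) that difference over time.
        integrator += x - feedback
        bit = 1 if integrator >= 0 else -1
        bits.append(bit)
        feedback = float(bit)
    return bits

# A constant input of 0.5 produces a stream in which three of every four bits
# are +1 (pulse density 0.75), so the mean of the bits is 0.5.
bits = sigma_delta_first_order([0.5] * 1000)
print(sum(bits) / len(bits))
```

Because the integrator stays bounded, the running sum of the bits can never drift far from the running sum of the input; that is what lets a crude 1-bit stream carry an accurate average once it is filtered.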

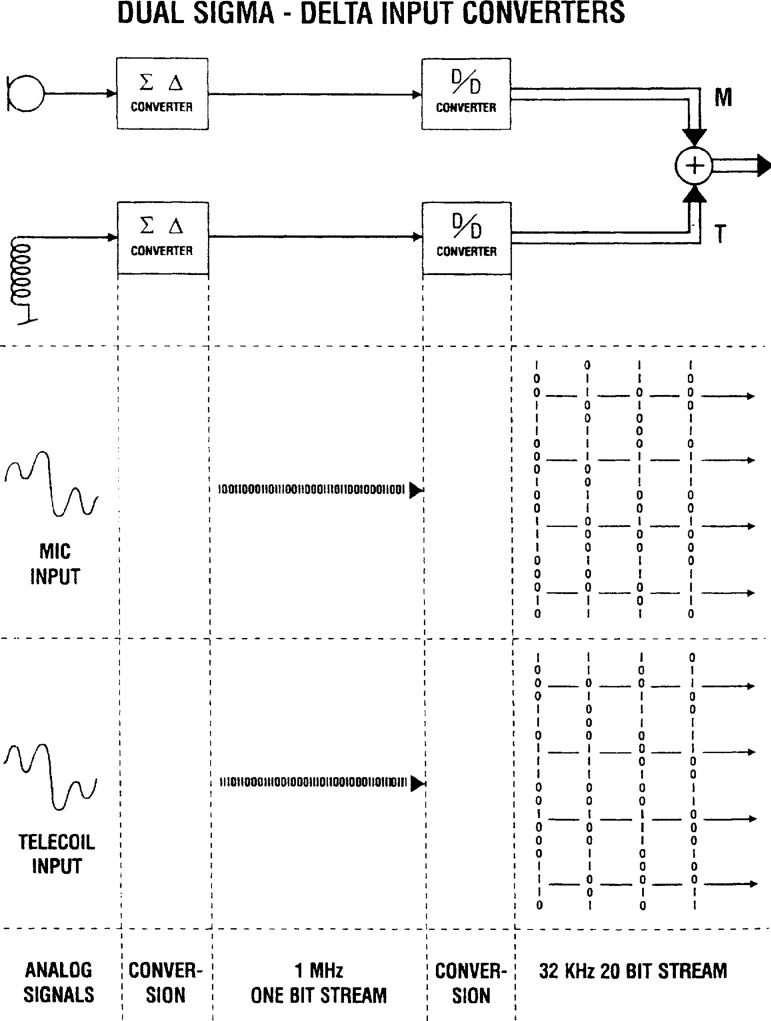

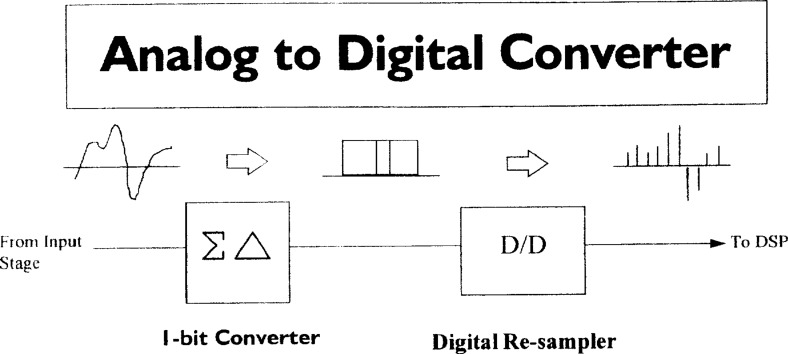

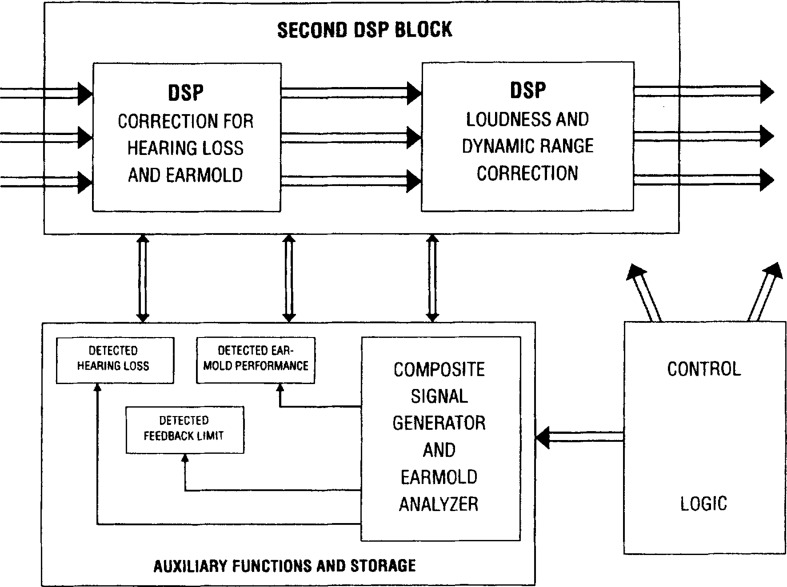

Figure 11 shows a diagram of the Sigma-Delta conversion technique used in the Widex Senso architecture. Figure 12 illustrates the Oticon version of the σ-Δ approach, as used in the DigiFocus hearing aid. The digital hearing aids in development at AudioLogic, GN Danavox, and ReSound are understood to use variations of σ-Δ converters. Presumably other entries into the digital hearing aid market will do so as well.

Figure 11.

Illustration of Widex's use of the Sigma-Delta (σ-Δ) conversion process on analog inputs from either the microphone or telecoil in the Senso hearing aid. (From Sandlin, 1996 with permission.)

Figure 12.

A representation of the Sigma-Delta conversion approach used by Oticon in the DigiFocus product (from Oticon with permission).

Note that in both Figures 11 and 12 a conversion, or re-sampling, process is indicated. One ramification of using σ-Δ converters is that a Digital-to-Digital conversion must be implemented to convert the fast 1-bit digital stream into a format suitable for digital processing operations. For example, the Oticon DigiFocus converts a 504 kHz 1-bit PDM stream into a more conventional 16-bit, 16 kHz signal. Through parallel processing within the DSP chip, calculations of up to 32 bits can be managed with this signal. In the Widex approach shown in Figure 11, the 1-bit stream runs at 1 MHz, and the digital re-sampler produces a final 32 kHz, 20-bit stream.

The reader should note that this D/D conversion, or re-sampling, stage does not appear in earlier “prototype” architectures, such as Levitt's (Figure 4). The operation involves decimation of the 1-bit stream (retaining only a fraction of the samples) together with filtering of the result.
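A minimal sketch of the decimation step follows, assuming the simplest possible low-pass filter: an average over each block of bits, keeping one value per block. The function name `decimate` and the factor of 32 are illustrative assumptions. Practical re-samplers use much sharper filters. The factor is nonetheless of the same order as the roughly 31:1 rate reductions implied by the figures quoted above (504 kHz to 16 kHz, and 1 MHz to 32 kHz).

```python
def decimate(bits, factor):
    """Turn a +1/-1 stream into multi-bit samples at 1/factor of the rate.

    Each output sample is the average of one block of `factor` input bits:
    a boxcar low-pass filter followed by keeping one value per block.
    """
    out = []
    for start in range(0, len(bits) - factor + 1, factor):
        block = bits[start:start + factor]
        out.append(sum(block) / factor)
    return out

# Hypothetical stream in which 3 of every 4 bits are +1 (pulse density 0.75).
stream = [1, 1, 1, -1] * 32        # 128 one-bit samples
print(decimate(stream, 32))        # [0.5, 0.5, 0.5, 0.5]
```

The output samples are no longer restricted to +1 or -1. Averaging 32 bits yields 33 possible values, so word length grows as the rate falls, which is the essence of converting a fast 1-bit stream into a slower multi-bit one.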

Quantization “Noise”

Recall that the maximum frequency that can be processed (the Nyquist criterion) is constrained to no more than half of the input sampling rate to avoid aliasing errors. Another form of error results from quantization, which maps the continuous magnitude of the analog signal onto discrete digital levels. This is known as quantization error, and it arises from forcing signal amplitude values to be rounded (or truncated) to the nearest discrete value. This loss of amplitude resolution is considered a kind of noise in digital processing.

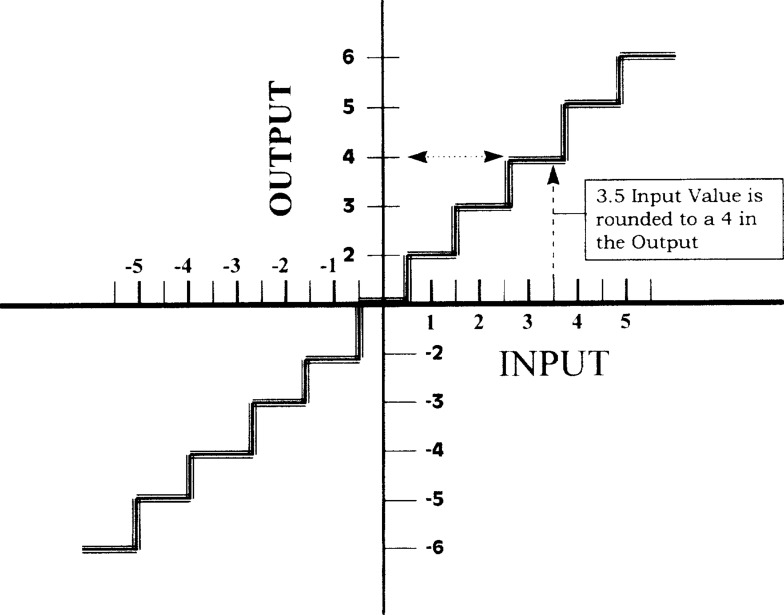

Figure 13 illustrates an input-output function for the processing of a simple sinusoidal signal. It can be observed that input magnitudes (x-axis) falling between the whole numbers of the output (y-axis) are forced to be represented as whole numbers by the resolving properties of the implemented quantization.

Figure 13.

An input-output function for a simple sinusoidal signal converted to the digital domain. The figure illustrates how the output values are forced to discrete magnitudes in the quantization (rounding) process.

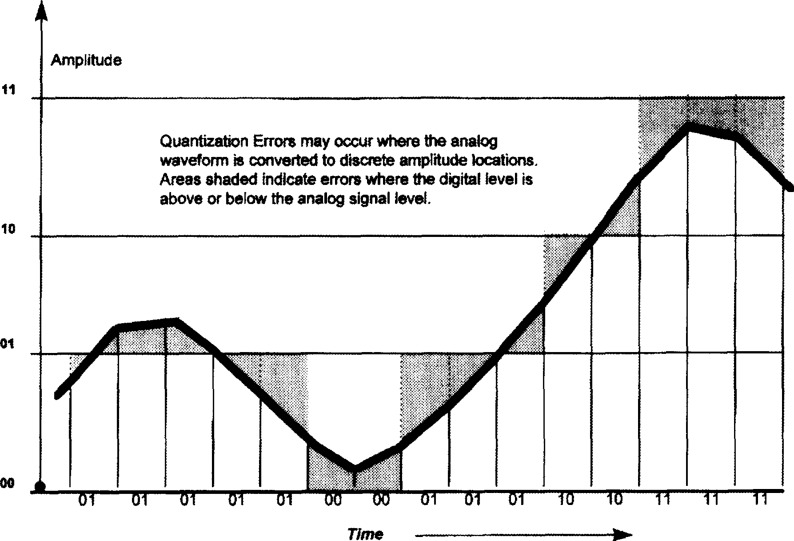

Another illustration of the concept of quantization noise is given as Figure 14. This figure, adapted from one by Hojan (1996), shows an analog signal sampled at 15 points in time. At each sample point the signal is converted into one of four amplitude values. The areas where the analog signal and the digital values do not precisely coincide are quantization errors due to rounding. Some readers may find this figure easier to understand than the input-output illustration of Figure 13.

Figure 14.

Another illustration of the concept of quantization errors. An analog signal with a continuous amplitude waveform is represented in each sample period as one of four possible amplitude states. Shaded areas indicate errors due to the rounding of magnitude values to discrete values. (Adapted from Hojan, 1997 with permission.)
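The rounding shown in Figure 14 can be reproduced numerically. In this sketch the four levels are assumed to be equally spaced across the range -1 to 1, and the input is assumed to be one cycle of a sine sampled 15 times. Both choices are made only to echo the figure, and the function name `quantize` is hypothetical. For any in-range input, the error never exceeds half the spacing between levels.

```python
import math

def quantize(x, levels, lo=-1.0, hi=1.0):
    """Round x to the nearest of `levels` equally spaced values in [lo, hi]."""
    step = (hi - lo) / (levels - 1)
    index = round((x - lo) / step)
    index = min(max(index, 0), levels - 1)   # clip out-of-range inputs
    return lo + index * step

# 15 samples of one sine cycle, each forced onto one of four amplitude levels.
samples = [math.sin(2 * math.pi * n / 15) for n in range(15)]
for x in samples:
    q = quantize(x, 4)
    print(f"{x:+.3f} -> {q:+.3f}  error {x - q:+.3f}")
```

With only four levels the step is 2/3, so individual errors can reach 1/3 of full scale. Each added bit doubles the number of levels and halves that worst-case error.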

It has been shown that a greater number of sampling points (a higher sampling rate) extends the range of frequencies over which the analog signal is faithfully represented. Using as many bits as economically possible in the quantization process correspondingly improves the amplitude resolution. In addition, increasing the number of quantization bits improves the signal-to-noise ratio. This is an important aspect of DSP for hearing aids because the lower the noise of a system, the greater its overall dynamic range. As a rule, each additional bit of quantization improves the signal-to-noise ratio (SNR) by 6 dB (Bissell and Chapman, 1992). Theoretically, then, a 16-bit converter yields an SNR of 96 dB, whereas a 12-bit converter would have a more constrained SNR of 72 dB. Microphone noise and other practical limitations in a sound processing system may result in lower net SNR values. The relation of SNR to the number of bits is discussed further in a later section.
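The 6 dB-per-bit rule is the rounded form of the textbook expression SNR ≈ 6.02N + 1.76 dB for N bits. That expression assumes a full-scale sine input and rounding errors spread uniformly over one step. Under it, 16 bits gives about 98 dB, slightly above the 96 dB obtained from 6 dB × 16. The sketch below checks the rule by simulation. The sine frequency, the sample count, and the function name `measured_snr_db` are arbitrary assumptions, and clipping at full scale is ignored.

```python
import math

def measured_snr_db(bits, n_samples=20000):
    """Quantize a full-scale sine to `bits` bits and measure the resulting SNR in dB."""
    step = 2.0 / (2 ** bits)          # quantization step for a [-1, 1] range
    ratio = math.sqrt(2) / 10         # frequency / sample rate, chosen so samples never repeat
    signal_power = 0.0
    noise_power = 0.0
    for n in range(n_samples):
        x = math.sin(2 * math.pi * ratio * n)
        q = step * round(x / step)    # rounding quantizer (clipping ignored)
        signal_power += x * x
        noise_power += (x - q) ** 2
    return 10 * math.log10(signal_power / noise_power)

for bits in (10, 11, 12, 16):
    theory = 6.02 * bits + 1.76
    print(bits, round(measured_snr_db(bits), 1), round(theory, 1))
```

Successive rows differ by about 6 dB, which is the per-bit improvement cited above.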

Re-conversion to Analog: D/A or D/D Methods

Before discussing the specific manipulations of the digitized signal, the re-conversion to acoustic information will be considered. Ultimately, of course, the final stage in air conduction hearing aids is to push air molecules in the small space immediately adjacent to the wearer's tympanic membrane. The method for returning the signal to analog form shown in both Figures 4 and 10 is to use one or more digital-to-analog (D/A) converters. This step brings the processed signal back to analog form, as a re-engineered version of the original input signal.

However, the conversion process, literally from discreteness to smoothness, can also introduce errors related to the Nyquist-Shannon theorem. At this stage of abrupt change from numbers to a waveform, artificial signals may again be introduced. These are called “images,” and they usually require “anti-imaging” filters, which are again low-pass filters that reject unwanted high frequencies. Hence, anti-imaging filters are necessary and can be seen in the architectures illustrated in Figures 4 and 10. These filters are also described as “smoothing” filters, installed to reduce high-frequency digital imaging noise. In the first commercial digital hearing aids (Oticon DigiFocus and Widex Senso), anti-imaging filtering is accomplished within the Digital-to-Digital conversion methods described below.

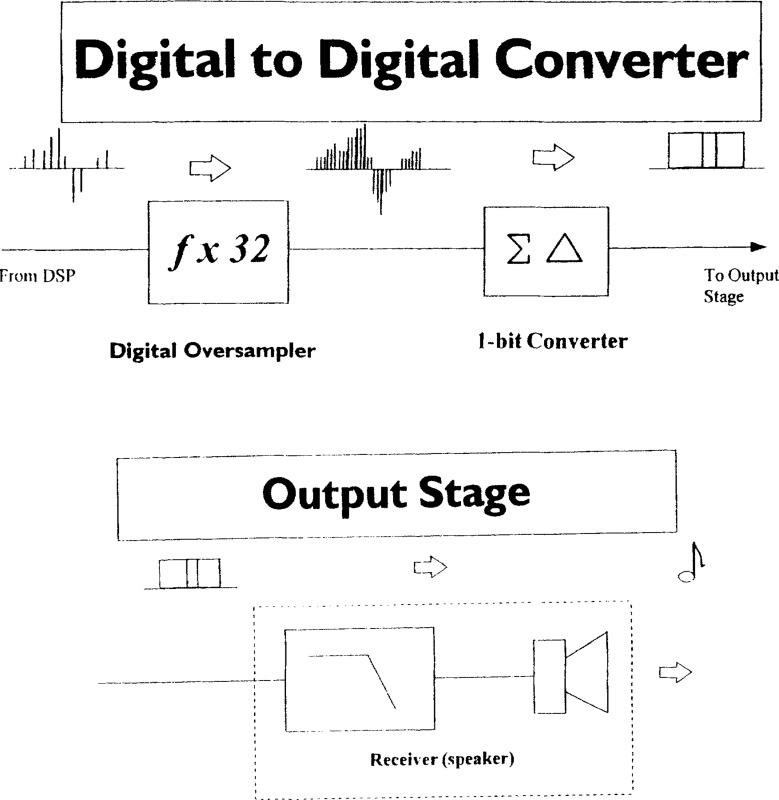

Some of the available power and space in laboratory digital designs was commonly reserved for D/A conversion. However, another approach, used in both the Widex and Oticon products, eliminates the need for a discrete D/A converter. Both products use a Digital-to-Digital (D/D) operation in the final conversion stage. This method, which has been called Digital Direct Drive (DDD), takes advantage of the operating principles of Class D amplifiers, or output stages. Kruger and Kruger (1994) described this alternative to conventional D/A converters in their discussion of future applications of digital technologies. A brief description of Class D amplifiers will help to illustrate the process.

A Class D amplifier, also known as a pulse-width modulation output stage, is noted for its low current consumption and improved “headroom” (Carlson, 1988; Preves, 1990). Headroom refers to the amount of undistorted gain available. The current savings come from the Class D practice of switching the output stage “fully on” or “fully off” at a high rate of electrical pulses, or digital switchings. The pulse rate, or carrier frequency, is usually 100 kHz or higher. Basically, the amplitude and frequency form of the analog signal modulate the width of the pulses proportionately, through several operational steps. The process includes averaging of voltage polarities and results in a representation of the analog signal that can be applied to the coil of a hearing aid receiver for retransduction to an acoustic (analog) signal. The net result is that digital signals can be coded directly into the carrier, which, as noted, consists of a stream of discrete pulses.
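The pulse-width principle can be sketched in discrete form. In this sketch each carrier period is assumed to be divided into 20 time slots; real carriers run at about 100 kHz or higher, with continuously variable pulse edges. The output is “fully on” (+1) for a fraction (x + 1)/2 of each period and “fully off” (-1) for the rest. Averaging each period stands in for the low-pass behavior of the receiver. The function names and the slot count are hypothetical.

```python
def pwm_encode(samples, slots_per_period=20):
    """Pulse-width modulate samples in [-1, 1] into a +1/-1 (fully on/off) stream.

    Each input sample occupies one carrier period of `slots_per_period` slots;
    the output is on (+1) for a fraction (x + 1) / 2 of the period, off (-1) otherwise.
    """
    stream = []
    for x in samples:
        on_slots = round((x + 1) / 2 * slots_per_period)
        stream.extend([1] * on_slots + [-1] * (slots_per_period - on_slots))
    return stream

def period_averages(stream, slots_per_period=20):
    """Average each carrier period, as a low-pass load would, to recover the signal."""
    return [sum(stream[i:i + slots_per_period]) / slots_per_period
            for i in range(0, len(stream), slots_per_period)]

audio = [0.0, 0.5, 1.0, -0.5]
print(period_averages(pwm_encode(audio)))   # [0.0, 0.5, 1.0, -0.5]
```

The output stage only ever switches between two states, which is why so little current is wasted. All of the audio information lies in the timing of the switching.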

The actual addressing of a Class D output stage by the digital code may be accomplished by a second Sigma-Delta operation, similar to that previously described for the analog-to-digital conversion process. Recall that a σ-Δ conversion generates a high-speed, 1-bit stream. This modulated pulse stream can be demodulated into audible sound by the inductance of the coil in the hearing aid receiver. The process generates some very high frequency noise, but the receiver acts as a low-pass filter and effectively removes the unwanted frequencies. Figure 15 represents some of these operations as schematized for the Oticon DigiFocus hearing aid. A similar process is reportedly applied in the Widex Senso.

Figure 15.

Schematic of the final stages of the Oticon DigiFocus showing that a σ-Δ converter is again used. The pulse density modulated 504 kHz signal from the σ-Δ converter is passed on to the receiver which demodulates it to an audible signal. The receiver doubles as a lowpass (anti-imaging) filter.
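The receiver's role as a low-pass filter can be illustrated with the simplest digital low-pass, a one-pole smoother. The receiver is of course an electroacoustic device, not a one-pole filter. The smoothing constant `alpha`, the function name `one_pole_lowpass`, and the fixed test pattern are assumptions for illustration only. Fed a 1-bit stream with pulse density 0.75, the smoothed output settles near 0.5 with only a small residual ripple at the pulse rate.

```python
def one_pole_lowpass(stream, alpha=0.02):
    """First-order low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    y = 0.0
    out = []
    for x in stream:
        y += alpha * (x - y)
        out.append(y)
    return out

# A 1-bit stream with pulse density 0.75 (three +1s in every four bits).
stream = [1, 1, 1, -1] * 500
smoothed = one_pole_lowpass(stream)
settled = smoothed[-400:]
print(min(settled), max(settled))   # both close to 0.5
```

A smaller `alpha` lowers the cutoff, which reduces the ripple but slows the response. A real output stage faces the same tradeoff, set by the receiver's physical properties.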

The use of Class D amplifier techniques thus enables the return to analog audio by a means other than a conventional digital-to-analog converter. The D/D technique was not anticipated in the designs of early DSP hearing aid prototypes. In fact, it might be considered a kind of technical serendipity that Class D output amplifiers operate on a pulse-width modulation basis. This permits an attractive interface to the digital processing operations and allows DSP hearing aid designers to eliminate a step in the processing, saving both power and physical space in the hearing aid architecture.

TECHNICAL CHALLENGES AND ADVANTAGES OF DIGITAL HEARING AIDS

Economy and Stability of Components

While the rationale and incentives for DSP hearing aids have been understood for many years (Preves, 1987, 1990), the technical challenges have been numerous and often daunting. Although he acknowledged the considerable obstacles to digitizing hearing aids, Preves (1990) noted that one advantage of “true” digital processing is that software algorithms can replace conventional components such as transistors, resistors, capacitors, and diodes, provided “the digital hardware is in place” (p.49). Greenberg and her colleagues (1996) at M.I.T. point out that the development of DSP hearing aids has been greatly aided by developments in semiconductor technology. Murray and Hansen (1992) indicated that over half of the 25 DSP algorithms they discussed had been developed in just the previous three years as a result of new capabilities in integrated circuit technology. Before the most recent technical advances, engineers had to weigh manufacturing tradeoffs of size and noisiness in choosing among bipolar semiconductor circuits, CMOS (complementary metal oxide semiconductor) circuits, and variations of both, such as BICMOS, which combines bipolar and CMOS (Preves, 1994).

The pressure to make use of steeper filters in hearing aid products pushed the use of switched capacitors in BICMOS. This permitted lower noise and higher order filter capacity. According to Andersen and Weis (1996), the Widex Senso hearing aid required new BICMOS technology on a custom integrated circuit to achieve all the design goals. Expanded use of both newly developed “off the shelf” components and the introduction of more custom circuits in digital hearing aids are expected as more digital hearing products are introduced.

Filter Construction and Phase Control

In analog technology of any type, filter specifications are difficult to control precisely, and values change as components age. In digital signal processing, however, precision is greatly increased because coefficients are represented by exact numbers. Problems related to the aging of components are essentially eliminated (Lunner et al, 1993).

Studebaker et al (1987) reported that digital filters enabled greater precision in frequency response shaping than is possible with analog filters. This has obvious clinical ramifications for the fitting of unusual, or particularly steep, hearing loss patterns. But digital filters have other important advantages related to stability, power, and size. In analog technology, filters with steep slopes (high order) generally require large, power-consuming capacitors that characteristically add noise to the system.

Finite impulse response (FIR) filters are often used in digital technology. FIR filters are considerably more adjustable in shape than analog filters and are notably more stable. Lunner and Hellgren (1991) and Engebretsen (1990) reported the use of FIR filters in multi-band digital hearing aid designs, and such filters have been included in many subsequent DSP hearing aid implementations. Magotra and Hamill (1995) used an adaptive FIR filter construction in a digital hearing aid unit and reported inter-band isolation of up to 80 dB, substantially more than might be obtained with analog filters.

FIR filters also have advantages in controlling the phase characteristics of signals. This property of linear phase, or constant group delay, is important to some processing goals that will be described in a later section. Essentially, it means that all frequencies simultaneously present in the signal are delayed by the same amount, so their phase or temporal relations are preserved.
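A short FIR example makes the linear-phase property concrete. The sketch designs a low-pass FIR filter by the windowed-sinc method with a Hamming window. This is one standard textbook design method and is not claimed to be the one used in any of the hearing aids cited. The 31 taps and the cutoff of 0.1 of the sample rate are arbitrary choices, and the function names are hypothetical. Because the coefficients are symmetric, removing a pure delay of (31 − 1)/2 = 15 samples leaves a purely real frequency response. In other words, every frequency is delayed by the same 15 samples.

```python
import cmath
import math

def lowpass_fir(num_taps, cutoff):
    """Windowed-sinc low-pass FIR (Hamming window); cutoff as a fraction of the sample rate."""
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        t = n - mid
        ideal = 2 * cutoff if t == 0 else math.sin(2 * math.pi * cutoff * t) / (math.pi * t)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)          # symmetric: taps[n] == taps[num_taps - 1 - n]
    return taps

def frequency_response(taps, f):
    """H(f) = sum_n h[n] * exp(-j*2*pi*f*n), with f as a fraction of the sample rate."""
    return sum(h * cmath.exp(-2j * math.pi * f * n) for n, h in enumerate(taps))

taps = lowpass_fir(31, 0.1)
delay = (len(taps) - 1) / 2
for f in (0.02, 0.05, 0.08):
    H = frequency_response(taps, f)
    # Undoing a pure delay of `delay` samples leaves (numerically) no imaginary part,
    # i.e. the phase is exactly linear in frequency.
    print(f, round(abs(H), 4), (H * cmath.exp(2j * math.pi * f * delay)).imag)
```

The coefficients are ordinary numbers fixed at design time, so the response cannot drift with component aging in the way an analog filter's can.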

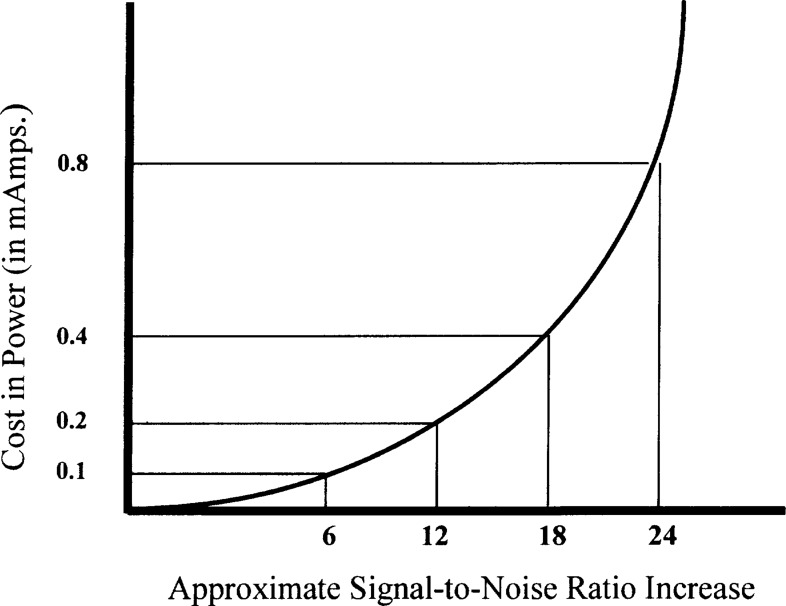

System Noise Advantages

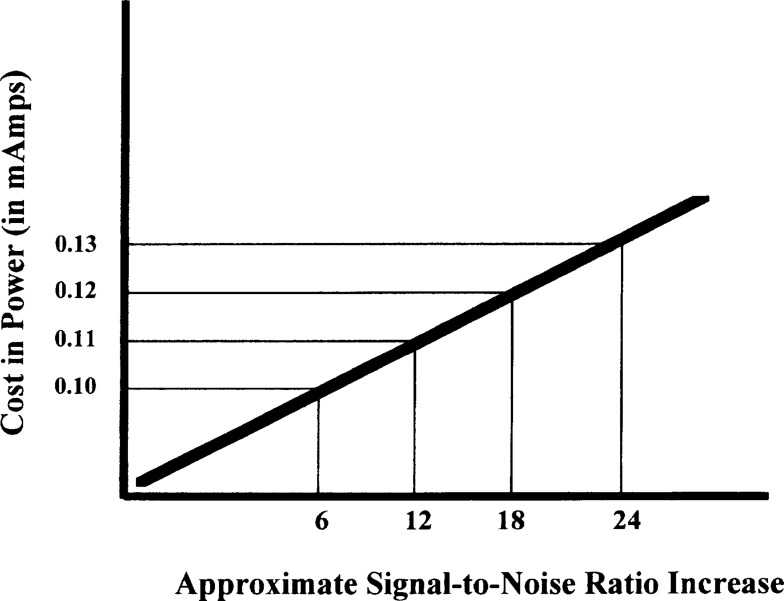

In analog technology, maintaining (or improving) the signal-to-noise ratio (SNR) has a relatively high cost in power consumption, because each additional electronic component adds thermal noise. The approximate relation is illustrated in Figure 16, which indicates that a 6 dB gain in SNR comes at a price of approximately a doubling in power consumption.

Figure 16.

Illustration of the approximate relation in analog technology of power consumption to signal-to-noise ratio expressed in units of 6 dB.

In the digital domain, however, a clear advantage can be demonstrated. The same 6 dB SNR improvement can be achieved at a cost of only about 10% more power consumption, simply by using 11 binary digits instead of 10. The much more favorable slope of the relation between power consumption and SNR in a digital construction is illustrated in Figure 17.

Figure 17.

In contrast to Figure 16, the approximate relation in digital technology of power consumption to signal-to-noise ratio. Each 6 dB increase in signal-to-noise ratio requires an additional bit of digital calculation.
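The arithmetic behind Figures 16 and 17 can be extended to larger SNR improvements. This sketch takes the two approximate rules above at face value. In the analog model, power doubles for every 6 dB. In the digital model, one bit is added per 6 dB and power is assumed proportional to word length, which is the assumption implied by the 11-versus-10-bit example. The 10-bit starting point and the function names are hypothetical, and both figures are schematic.

```python
def analog_power_factor(snr_gain_db):
    """Approximate analog rule (Figure 16): power doubles for every 6 dB of SNR."""
    return 2 ** (snr_gain_db / 6)

def digital_power_factor(snr_gain_db, start_bits):
    """Approximate digital rule (Figure 17): one extra bit per 6 dB,
    with power assumed proportional to word length."""
    extra_bits = snr_gain_db / 6
    return (start_bits + extra_bits) / start_bits

print(analog_power_factor(6), digital_power_factor(6, 10))     # 2.0 1.1
print(analog_power_factor(24), digital_power_factor(24, 10))   # 16.0 1.4
```

Under these rules the gap widens quickly. A 24 dB improvement would multiply analog power by 16 but digital power by only 1.4.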

Digital coding strategies may include an extra bit, or bits, used strictly to verify that a preceding group of bits has a particular form. Various error-checking and error-correction techniques may further increase the robustness and accuracy of digital signal management (Bissell and Chapman, 1992) and are often used in digital telecommunication algorithms and other applications.
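The simplest such check bit is a parity bit. The sketch below uses even parity, with hypothetical function names and an arbitrary 8-bit data word. The extra bit is chosen so that the whole group always contains an even number of 1s, so a single flipped bit is detected. Parity cannot locate or correct the error, and it misses any even number of flips. Error-correcting codes add more check bits to overcome those limits.

```python
def even_parity_bit(bits):
    """Return the extra bit that makes the total number of 1s even."""
    return sum(bits) % 2

def parity_ok(word_with_parity):
    """True if the group of bits (data plus parity bit) still has an even number of 1s."""
    return sum(word_with_parity) % 2 == 0

data = [1, 0, 1, 1, 0, 0, 1, 0]
word = data + [even_parity_bit(data)]
print(parity_ok(word))        # True: no error
corrupted = word.copy()
corrupted[3] ^= 1             # flip one bit, as circuit noise might
print(parity_ok(corrupted))   # False: the error is detected
```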

Packaging and Power Consumption Challenges

Packaging and power consumption constraints continued to confound the construction of headworn digital hearing aids through the mid-1990s. As Levitt (1993a) indicated, first-generation wearable digital hearing aids were relatively cumbersome and required a rather large battery pack because of high power consumption. Preves (1994) noted that the prospective use of “off the shelf” DSP chips had the obvious advantage of minimal development expense, but the clear disadvantage of excessive size for hearing aid applications. Yet the supposition for many years was that DSP chips customized for hearing aid applications could be developed if sufficient financial investment were made. In fact, a number of companies have invested considerably in the development of custom DSP chips for hearing aids since the early 1990s. According to Lunner et al (1993), customized digital circuits consume far less power than general-purpose DSP chips.

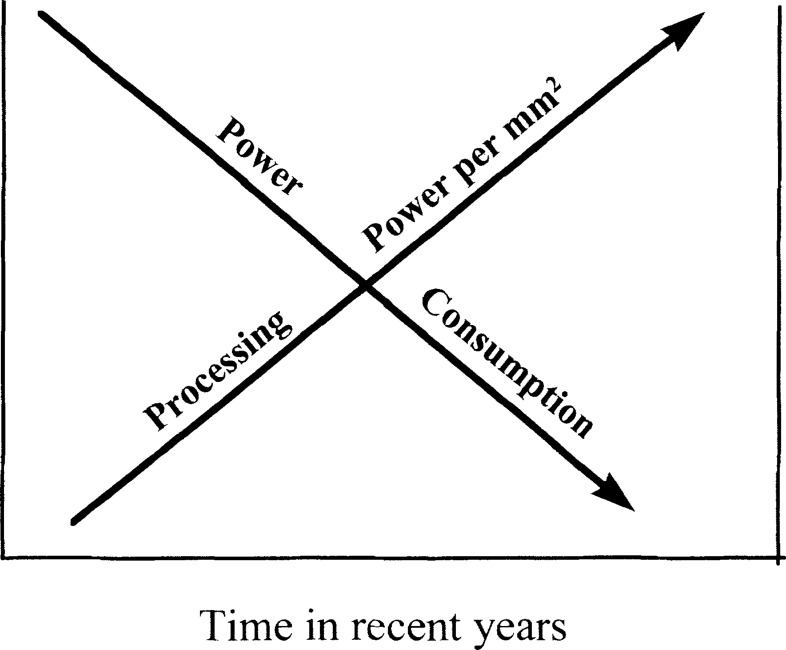

A number of technological developments that can be viewed as “enabling gateways” for hearing aid applications have emerged at a remarkable pace. As recently as 1990, Engebretsen indicated that the technology used in digital audio systems could not be transferred into wearable hearing aids because of the size and power demands of A/D, D/A, and DSP chips. In 1991 this author attended an international hearing aid conference while evaluating a commercially ready digital hearing aid produced by the Japanese company Rion (Schweitzer, 1992). During the conference several prominent researchers from major companies proclaimed, “no one will introduce a commercial digital hearing aid before the year 2000.” Obviously, the pace of change throughout the 1990's was extremely steep. Indeed, the rapid introduction of new, related technologies helped to nullify numerous prior forecasts. The pressure for greater density of processing capability (the amount of computation possible within a small physical area) and for ever lower power consumption was driven largely by the telecommunications and computer industries. Demand for smaller and more versatile cell phones, handheld computers, and other consumer electronic devices clearly accelerated developments for wearable digital hearing aids. These developments in collateral industries resulted in an “all bets are off” situation for the introduction of DSP hearing aids. Figure 18 illustrates two important trends in microcircuitry that have both accelerated DSP developments in hearing aids. As indicated in the figure, rapidly falling power consumption and steeply rising processing capability in DSP circuitry combined to quicken progress substantially beyond previous estimates.

Figure 18.

Two significant macrodevelopments in the computer electronics industry that impacted the pace of development of digital hearing aids. The contrasting slopes for power consumption and processing capacity within DSP circuits are obviously highly schematized to illustrate the trends.

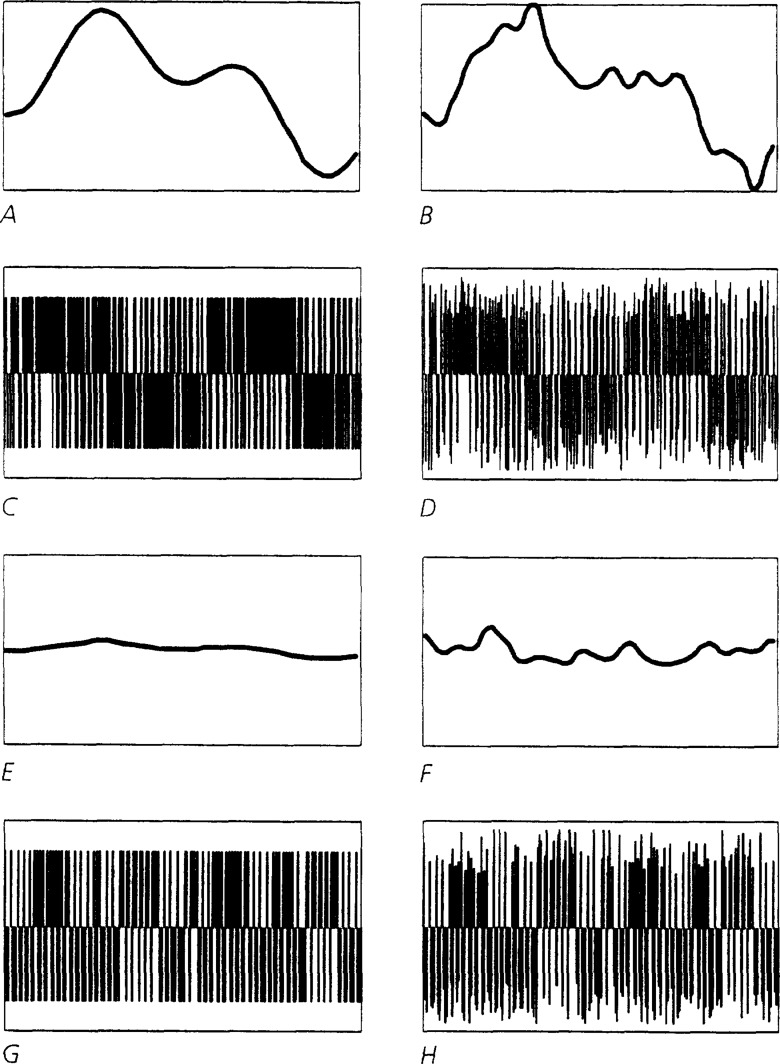

The evidence that digital signals can be managed with greater precision and reliability, and with less additive noise, than analog signals argues strongly for the digitization of audio. Even the problem of basic circuit noise is mitigated to some extent by digitization. An illustration from Andersen and Weis (1996) is useful for describing this technical advantage of DSP amplification. Figure 19 shows how noise in an analog system may have a more deleterious effect on a signal than an equivalent noise would have on a signal represented in a binary code.

Figure 19.

Visual analogy showing how the robustness of a binary code in digital amplification reduces the interference of circuit noise. Panel A shows a large analog signal without noise, and Panel B the same signal with noise added; Panels C and D show a representation of the same events in the digital domain; Panels E and F show a smaller analog signal with and without noise; Panels G and H repeat the sequence in digital form. Examination of the sequence of black and white bars shows that the coded signal may still be perceivable by an optical reader that ignores the lengths of the lines. (Adapted from Andersen and Weis, 1996 with permission.)

In the figure, the vertical black lines can be thought of as representing “1”s, and the absence of a black line as a “0.” The figure makes the argument that the binary code is more impervious to circuit noise regardless of whether the signal is weak or strong. It should be understood that the information theory from which DSP has evolved is based on probabilities. In a noise environment that may easily corrupt an analog signal, a digitally encoded signal has a greater probability of being transmitted correctly, including from the front end of a DSP hearing aid through its various other stages. Statistical tools for modeling the probability of signal properties constitute a significant portion of signal processing theory. Of course, the noise may still become so high relative to the signal level that even a simple binary code is rendered untransmittable, that is, entirely obscured by the noise.
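The probabilistic argument can be sketched with a small simulation. The model below is a textbook one and a simplification of real circuitry. Gaussian noise of the same assumed size is added once to an analog value and once to a binary symbol of +1 or -1, and the receiver decides only which of the two symbols was sent. The noise level (0.25), sample count, seed, and function name `simulate` are hypothetical. The analog value carries the full noise error. The binary decision is almost never wrong, and the theoretical error rate for this model is ½·erfc(1/(σ√2)).

```python
import math
import random

def simulate(noise_sd=0.25, n=10000, seed=1):
    """Return (analog RMS error, bit error rate) under the same additive Gaussian noise."""
    rng = random.Random(seed)              # fixed seed so runs are repeatable
    analog_sq_err = 0.0
    bit_errors = 0
    for _ in range(n):
        noise = rng.gauss(0.0, noise_sd)
        # Analog: the noise rides directly on whatever value is passed on.
        analog_sq_err += noise ** 2
        # Binary: the symbol is +1 or -1, and the receiver only decides its sign.
        bit = rng.choice((-1, 1))
        decided = 1 if bit + noise >= 0 else -1
        bit_errors += decided != bit
    return math.sqrt(analog_sq_err / n), bit_errors / n

analog_rms, ber = simulate()
theory = 0.5 * math.erfc(1 / (0.25 * math.sqrt(2)))   # expected bit error rate for +/-1 symbols
print(analog_rms, ber, theory)
```

Raising `noise_sd` toward 1 shows the limit noted above: once the noise is comparable to the symbol size, even the binary decision starts to fail.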

Finally, the prospect for simultaneously accomplishing these technical advantages in a greatly reduced circuit component space strengthens the rationale and impetus for developing DSP amplification for hearing aid products. It seems inevitable that the conversion of most new hearing aids to DSP circuits will continue on a steady basis throughout the next decade. The fact that a single chip can be programmed by a fitter so it can effectively address a wide range of electroacoustic requirements further supports the economic advantages of digital production. In short, DSP is a highly attractive option for use in hearing aids.

To summarize, the technical benefits of digital hearing aids noted here are greater stability of operation, lower system noise, greater filter flexibility in reduced space, control over phase, and signal robustness in noise. Although the past obstacles of packaging and power consumption are rapidly evaporating, especially with investment in custom integrated circuit (IC) chips, the hearing aid industry's long-range ability to profit from digitization, and thereby to expand development efforts, necessarily depends on the improvements delivered to hearing aid consumers. To elaborate an old economic adage, consumers will vote with their ears, as well as with their pocketbooks.

APPLICATIONS-SPECIFIC USE OF DIGITAL PROCESSING IN HEARING AIDS

As mentioned in the introductory comments, this paper is intended to focus primarily on fully digital hearing aids. However, a brief mention of some digital processing approaches for very limited and focused purposes is warranted because of their close linkage to the development of true digital hearing aids.

By the late 1980's it was widely assumed that the first commercial use of DSP designs would be in “applications-specific” roles. That is, some particular function, such as noise management (as with the Zeta Noise Blocker; Graupe et al, 1986, 1987) or the reduction of acoustic feedback, could be installed in conjunction with a more conventional analog amplifier. The use of digital processing in noise management will be discussed in a later section.

Feedback Management

With regard to feedback control, DSP has an apparent technical (and clinical) advantage. One of the first implementations of a digital circuit in a sustainable product was a DSP feedback control circuit introduced by Danavox (Bisgaard, 1993; Bisgaard and Dyrlund, 1991; Dyrlund and Bisgaard, 1991; Smriga, 1993). The Danavox DFS (Digital Feedback System) was inserted as a digital control circuit into a line of analog behind-the-ear hearing aids. The technique was reported to increase the usable gain of some hearing aids by 8 to 12 dB. It was a good example of an “applications-specific” use of DSP in hearing aids.

Others working on laboratory DSP hearing aids improved control over feedback by a variety of methods, including frequency and phase shifting, notch filtering, and phase warbling (Bustamante et al, 1989; Egolf et al, 1985; Engebretsen and French-St. George, 1993; French-St. George et al, 1993a; 1993b). Kates (1991) has worked extensively on the theoretical considerations of feedback management in the digital domain.

Several of the most promising approaches involve some element of control or manipulation of phase. Phase management is considerably more feasible to implement in a digital system than in an analog one. A good review of work in this area appears in Preves (1994), whose doctoral dissertation in 1985 included a method for phase management of feedback. Alwan et al (1995) have also reported progress in work on adaptive feedback cancellation algorithms. Agnew (1996a) provided a recent comprehensive review of feedback theory and management strategies. It is highly probable that further developmental progress in DSP hearing aids will include more sophisticated and robust feedback management algorithms in future products (Kates, personal communication, 1997). However, the problem of feedback is a particularly daunting one. Most conceivable solutions are constrained by trade-offs among effectiveness, speed, and the potential for introducing processing artifacts that listeners may find objectionable.
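To make “adaptive feedback cancellation” concrete, the sketch below uses the normalized least-mean-squares (NLMS) algorithm. NLMS is a standard adaptive-filter method that is widely used for this kind of problem. It is not presented as the algorithm of Alwan et al or of any product. An adaptive FIR filter learns a model of the acoustic path from receiver back to microphone, so that the modeled feedback can be subtracted from the microphone signal. The 4-tap “true” feedback path, the step size `mu`, and the function name `nlms_identify` are all hypothetical. The example also omits any external sound.

```python
import random

def nlms_identify(reference, observed, num_taps, mu=0.5, eps=1e-8):
    """Normalized LMS: adapt FIR weights w so that w applied to `reference` tracks `observed`."""
    w = [0.0] * num_taps
    history = [0.0] * num_taps        # most recent reference samples, newest first
    for u, d in zip(reference, observed):
        history = [u] + history[:-1]
        estimate = sum(wi * xi for wi, xi in zip(w, history))
        error = d - estimate          # what remains after cancelling the estimated feedback
        norm = eps + sum(xi * xi for xi in history)
        w = [wi + mu * error * xi / norm for wi, xi in zip(w, history)]
    return w

# Hypothetical feedback path from receiver back to microphone (4 taps).
true_path = [0.0, 0.3, -0.2, 0.1]
rng = random.Random(0)
receiver_out = [rng.uniform(-1.0, 1.0) for _ in range(5000)]
# Microphone pickup via the feedback path only (no external sound, to keep it minimal).
mic = [sum(h * receiver_out[n - k] for k, h in enumerate(true_path) if n - k >= 0)
       for n in range(len(receiver_out))]
estimated_path = nlms_identify(receiver_out, mic, 4)
print([round(wi, 3) for wi in estimated_path])
```

With no external sound this identification is easy. In a worn hearing aid the incoming sound and the receiver output are strongly correlated, which can bias the estimate. That is one source of the speed-versus-artifact trade-offs described above.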

CLINICAL ARGUMENTS FOR DIGITAL HEARING AIDS

While reduction of acoustic feedback is both a clinically and technically attractive benefit of digital design, a host of other clinically oriented DSP advantages are theoretically possible and generally expected. Audiological solutions enabled by DSP may require research efforts nearly equaling the scale of the technical advances that have enabled the development of wearable digital chips at reasonable power requirements.

Harry Levitt (1993b), drawing on his considerable experience and studied perspective, identified three general advantages that might be anticipated from digital aids. Essentially, he proposed that digital hearing aids should be expected to:

do what conventional analog aids can do, but more efficiently in several regards;

process signals in ways not possible by analog methods; and

change the fundamental ways of thinking about hearing aids.

Regarding the first point, efficiency might be considered essentially a technical advantage that underlies the other two, which are clearly clinical in nature. The complexities of sensorineural hearing loss and the difficulty of predicting user sound quality preferences require new solutions not possible in analog and new ways of thinking about hearing aid fitting.

It has been suggested that otopathologic listeners, particularly the elderly and those with sensorineural type deficits, rarely manifest a simple loss of sensitivity (Dreschler and Plomp, 1985; Fitzgibbons and Gordon-Salant, 1987, 1996; Humes, 1993; Humes et al, 1988; Stach et al, 1990; Van Tassel, 1993). Traditional amplification techniques, of course, have had limited ability to address more than sensitivity as a function of frequency, and to some extent suprathreshold loudness processing. Moore (1996) provided an excellent summary of the multiple auditory consequences of cochlear damage. In addition to the familiar audibility (threshold) loss pattern, other pertinent potential effects of hearing loss on communication he cited include:

Impaired Frequency Selectivity

Altered Loudness Perception

Altered Intensity Resolution

Altered Temporal Resolution

Reduced Temporal Integration

Altered Pitch Perception and Frequency Discrimination

Impaired Localization Ability

Reduced Binaural Masking Level Differences

Impaired Spatial Separation Ability

Increased Susceptibility to Noise Interference

Moore concluded by saying that the research results to date “strongly suggest that one or more factors other than audibility contribute to the difficulties of speech perception” especially for those with moderate to severe cochlear losses.

This variable, and possibly incomplete, cluster of sound processing complications that may affect the typical hearing aid user argues for hearing aid signal processing strategies that are more computationally intensive and more individually customizable, and that incorporate expanded diagnostic fitting approaches. Quite simply, it argues for digital solutions. Addressing the various problems listed by Moore evokes a number of signal processing solutions, many of which have been researched in laboratories using experimental DSP devices.

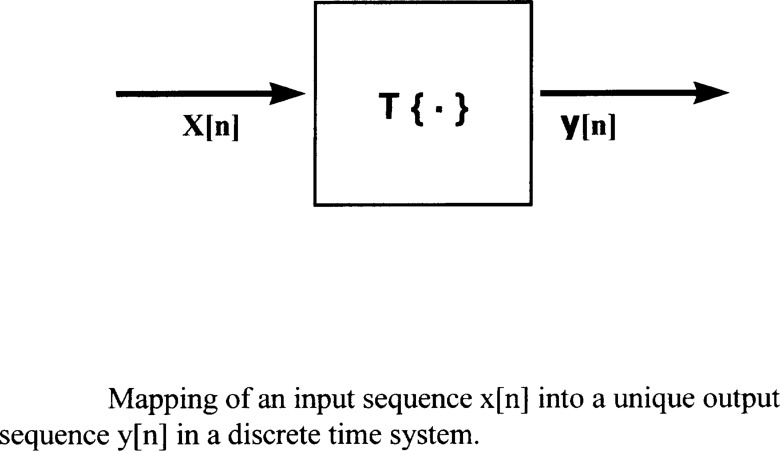

Since the obvious goal of any hearing aid design is to transform speech signals so as to improve communication, a fundamental digital concept should be illustrated. Recalling that DSP algorithms operate in a discrete-time system, a simplified “black box” concept can be used to represent the mathematical possibilities for transforming such signals. Figure 20 attempts to do that in highly simplified form. It illustrates the underlying conceptual mapping that must be planned and organized by the DSP engineer: a theoretically desired output sequence is produced by intelligent manipulation of the input sequence once it enters the digital box. Rearrangements of both the time and amplitude characteristics fall within the domain of mathematical possibilities in DSP.

Figure 20.

The digital “black box” concept. Input (x) events can be transformed (T) in both temporal (e.g., system delays, specific or overall phase properties) and amplitude (magnitude) properties within a discrete time system to a desired output (y) sequence. (Adapted from Oppenheim & Schafer, 1989 with permission.)
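In code, the black box of Figure 20 is simply a function from one sequence to another. The sketch below uses a deliberately trivial transformation T, a fixed delay plus a fixed gain, to show the temporal and amplitude manipulations the caption mentions. The function name `transform` and its parameter values are hypothetical. Any real processing recipe, such as a filter bank or a compressor, would take their place.

```python
def transform(x, gain=2.0, delay=3):
    """Hypothetical T{x}: y[n] = gain * x[n - delay] (zero before the delay has elapsed)."""
    return [gain * x[n - delay] if n >= delay else 0.0 for n in range(len(x))]

x = [1.0, 0.5, 0.25, 0.0, 0.0, 0.0]
print(transform(x))   # [0.0, 0.0, 0.0, 2.0, 1.0, 0.5]
```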

It is assumed that much of the important work in the future will involve organizing resources to insert recipes into the middle of the box in Figure 20. As Van Tassel (1993) described, such recipes will need to be guided by the Hippocratic principle of “first do no harm,” that is, they must not worsen the signal for the listener. Once that fundamental goal is secured, the range of exotic processing schemes that might be added for the benefit of users should be enormous, given the multitude of processing problems presently identified. A few are discussed in the following sections.

Noise Management

Another early applications-specific digital processing circuit in hearing aids was the Zeta Noise Blocker (ZNB), developed by Graupe and Causey (1977) and made available to hearing aid manufacturers for a time by the Intelletech company. The premise of the algorithm was that while speech generally varies relatively rapidly over time (several times a second), many noise sources have longer duration. The ZNB filter was of an adaptive Wiener filter design. That is, it changed according to computational estimates of the duration of signals passing into the several frequency bands of the circuit. Wiener filters use a relatively old strategy that attempts, with reasonable efficiency, to optimize the signal-to-noise ratio by adjusting gain within each band for a given, stationary situation. For signals that vary, such as most real-world noises and certainly speech, adaptive updating of a Wiener filter improves the possibility of SNR enhancement. Some limited clinical benefit for the ZNB was reported (Stein and Dempsey-Hart, 1984), but consumers apparently were not well pleased with the changing sound quality caused by the filtering, and few companies continued to use the circuit. It was nevertheless an important effort toward applying digital filter theory in hearing aid products.
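The per-band gain rule at the heart of a Wiener filter can be written in one line. The sketch uses the textbook stationary Wiener gain S/(S + N), where S and N are estimated speech and noise powers in a band. The ZNB's actual estimator is not described here, so the band power values and the function name `wiener_gain` are hypothetical. In an adaptive version these power estimates would be updated continuously over time.

```python
def wiener_gain(signal_power, noise_power):
    """Per-band Wiener gain S / (S + N) for estimated speech and noise powers."""
    total = signal_power + noise_power
    return signal_power / total if total > 0 else 0.0

# Hypothetical band power estimates (speech, noise) for three bands.
bands = [(10.0, 0.1), (1.0, 1.0), (0.1, 10.0)]
for s, n in bands:
    print(round(wiener_gain(s, n), 3))
```

Bands dominated by speech pass nearly unchanged, and bands dominated by noise are strongly attenuated. When the estimates fluctuate, the gains fluctuate too, which is one plausible source of the changing sound quality that users disliked.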

Spectral Subtraction and Spectral Shaping

Weiss and Neuman (1993) identified several other digital noise management approaches that could be implemented in a hearing aid with a single microphone. Two are mentioned here.

The spectral subtraction method uses Fast Fourier Transform (FFT) operations to compute the amplitude and phase spectra within each time frame. Distortion is reasonably well controlled by overlapping the frames, or windows, and by applying weighting to scale the amplitudes. Like Wiener filtering, spectral subtraction exploits differences in the temporal properties of speech and noise, estimating the noise power spectrum during periods when speech is not present. The estimated noise spectrum is then subtracted from frames estimated to contain both speech and noise, reducing the interference of the noise. The noise spectrum estimates are continuously updated over specified time intervals. Finally, the stored phase information is recombined with the modified amplitude spectrum by an inverse FFT.
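The steps just described (windowed FFT, noise magnitude estimate, subtraction, recombination with the stored phase, inverse FFT) can be sketched as follows. For simplicity this sketch assumes a separate noise-only segment is available and keeps its noise estimate fixed, whereas a practical system would update it continuously; the frame length, hop, and spectral floor `beta` are hypothetical choices.

```python
import numpy as np

def spectral_subtraction(x, noise_only, frame=256, hop=128, beta=0.02):
    """Magnitude spectral subtraction with 50%-overlapped Hann windows (a sketch).

    x: noisy input signal.
    noise_only: a segment assumed to contain no speech, used as a fixed noise estimate.
    beta: hypothetical spectral floor that limits over-subtraction.
    """
    win = np.hanning(frame + 1)[:-1]  # periodic Hann: sums to 1 at 50% overlap
    # Average noise magnitude spectrum over the noise-only frames.
    noise_frames = [noise_only[i:i + frame] * win
                    for i in range(0, len(noise_only) - frame + 1, hop)]
    noise_mag = np.mean([np.abs(np.fft.rfft(f)) for f in noise_frames], axis=0)

    y = np.zeros(len(x))
    for i in range(0, len(x) - frame + 1, hop):
        spec = np.fft.rfft(x[i:i + frame] * win)
        mag, phase = np.abs(spec), np.angle(spec)
        clean = np.maximum(mag - noise_mag, beta * mag)  # subtract, keep a small floor
        # Recombine the modified magnitude with the stored (noisy) phase; overlap-add.
        y[i:i + frame] += np.fft.irfft(clean * np.exp(1j * phase), n=frame)
    return y
```

Frames containing only noise are reduced to a small residual, while a strong voiced component such as a steady tone passes nearly intact. The floor `beta` is one simple way to limit the "musical" artifacts that appear when isolated noise peaks survive subtraction.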

Spectral subtraction techniques are effective for extracting voiced speech components, such as formants, from random background noise. Versions of the technique have therefore been used for some time in cochlear implant pre-processing schemes. Unfortunately, the reasonable improvements reported with implant users (Hochberg et al, 1992; McKay and McDermott, 1993) have not been matched by successes in hearing aid applications.

Spectrum shaping is another digital noise strategy that shares many operations with spectral subtraction. In spectrum shaping, however, each point in the spectrum is scaled in amplitude according to an estimate, made within each band, of whether noise or speech is present, with the aim of attenuating components assumed to be noise. Work in the 1970s and 1980s did not yield noteworthy improvements in speech intelligibility in the presence of wideband noise.
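A minimal sketch of the band-wise decision in spectrum shaping follows, assuming the frame and noise magnitude spectra have already been computed as in spectral subtraction. The band edges, the decision ratio `threshold`, and the attenuation `atten_db` are hypothetical; the point is that bins are only scaled, never subtracted.

```python
import numpy as np

def spectrum_shaping_gains(frame_mag, noise_mag, band_edges,
                           threshold=2.0, atten_db=-15.0):
    """Band-wise speech/noise decision for spectrum shaping (a sketch).

    frame_mag, noise_mag: magnitude spectra of the current frame and of the
        running noise estimate (same length).
    band_edges: bin indices delimiting the analysis bands, e.g. [0, 8, 32, 129].
    threshold, atten_db: hypothetical decision ratio and attenuation.
    """
    frame_mag = np.asarray(frame_mag, dtype=float)
    noise_mag = np.asarray(noise_mag, dtype=float)
    gains = np.ones_like(frame_mag)
    noise_gain = 10.0 ** (atten_db / 20.0)
    for lo, hi in zip(band_edges[:-1], band_edges[1:]):
        band_power = np.sum(frame_mag[lo:hi] ** 2)
        noise_power = np.sum(noise_mag[lo:hi] ** 2)
        if band_power < threshold * noise_power:  # band judged noise-dominated
            gains[lo:hi] = noise_gain
    return gains
```

The resulting gains would multiply the complex spectrum of the frame before the inverse FFT. Because the decision is binary per band, misclassified bands switch abruptly between full and reduced gain, which is one plausible source of the audible distortions discussed below.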

While electroacoustic measures tend to show improvements in the signal-to-noise ratio for several of the noise filtering approaches tried to date, the benefits in speech understanding in noise for hearing aid wearers have often been ambiguous and variable. Some versions introduce audible distortions, sometimes of a musical quality. Levitt et al (1993c) suggested that the tradeoff between noise reduction and tolerance for distortion is a beguiling one, and clinical experience at AudioLogic agrees (Schweitzer et al, 1996a). It appears that when hearing a speech message is particularly difficult because of background noise, listeners may temporarily set aside their objections to the sound quality of processing distortions. Yet identical distortion in less demanding listening situations may be more objectionable to the same listeners. This harks back to the “do no harm” premise and implies that what counts as “harm” may vary with the listening circumstances as well as with the individual listener.

Ephraim et al (1995) described preliminary results with another newly developed process, called signal subspace processing, which as part of its operation attempts to mask the processed noise with the speech signal, a reversal of the usual situation. The investigators reported sound quality improvements over spectral subtraction and potential value for improving speech understanding.

MULTIPLE MICROPHONE (ARRAY) TECHNIQUES AND BINAURAL APPROACHES

Strategic approaches to the noise interference problem have led to considerable work on multi-microphone, or array, processing techniques. These have taken many forms and variations, but fundamental to all array processing approaches is the computation of acoustical differences between two or more microphone inputs. Such computation necessarily requires digital signal processing. A relatively recent review of work in this area appears in Schweitzer and Krishnan (1996). Kates and Weiss (1996) comment that microphone arrays are one of the few approaches proposed in hearing aid research that have actually yielded gains in speech intelligibility in noise for hearing-impaired listeners. This has no doubt contributed to the diverse and extensive efforts with array processing methods, which include research by Asano et al (1996), Bilsen et al (1993), Bodden (1993, 1994), Bodden and Anderson (1995), Desloge et al (1995), Gaik (1993), Greenberg and Zurek (1992, 1995), Grim et al (1995), Hoffman (1995), Hoffman et al (1994), Kates and Weiss (1995, 1996), Kollmeier and Peissig (1990), Kollmeier et al (1993), Kompis and Dillier (1994), Lindemann (1995, 1996), Peterson et al (1987, 1990), Schweitzer and Terry (1995), Schweitzer et al (1996a), Soede et al (1993a, 1993b), Stadler and Rabinowitz (1993), Sullivan and Stern (1993), Van Compernolle (1990), Weiss and Neuman (1993), and Yao et al (1995), among others.
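The simplest illustration of computing acoustical differences between microphone inputs is a two-microphone "delay-and-subtract" (first-order differential) array. It is offered only as a generic example of array processing, not as the method of any study cited above, and it assumes the inter-microphone travel time is a whole number of samples; real designs typically need fractional-delay filters.

```python
import numpy as np

def delay_and_subtract(front, back, delay):
    """First-order differential array from two microphones (a sketch).

    delay: acoustic travel time between the microphones, assumed here to be a
        whole number of samples.
    The rear microphone signal is delayed and subtracted from the front one, so
    sound arriving from directly behind cancels while frontal sound passes
    (with a high-frequency tilt that a real design would equalize).
    """
    front = np.asarray(front, dtype=float)
    back = np.asarray(back, dtype=float)
    delayed_back = np.concatenate([np.zeros(delay), back[:len(back) - delay]])
    return front - delayed_back
```

For a source directly behind, the rear microphone hears the sound `delay` samples before the front one, so the delayed rear signal exactly matches the front signal and the output is zero.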

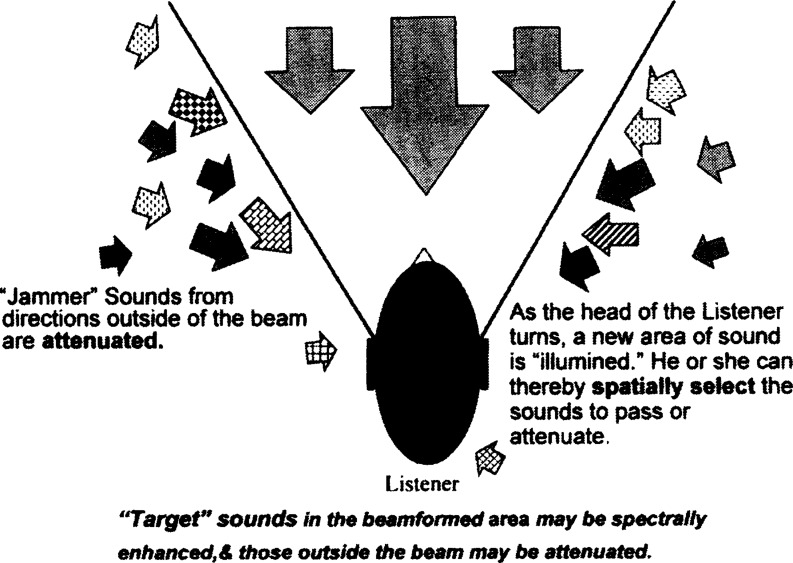

Since Blauert's important discussion of “spatial hearing” (1983), it has become particularly tantalizing to conceive of a true binaural processor that might manage complex, rapidly changing acoustics with some of the robust processing apparently used by the normal auditory system. Colburn et al (1987) and Bodden (1997) make strong and cogent arguments for the use of binaural models in advancing the processing capabilities of digital hearing aids. Beamforming is one example of how a binaural processing system with a simple ear-mounted array might be used in a digital hearing aid. A beamforming hearing aid system rapidly computes the phase and magnitude differences between the signals arriving at the two ears and attempts to selectively attenuate sounds from regions other than in front of the head (Schweitzer, 1997b). This concept is illustrated in Figure 21. One obvious requirement of such a system is a mechanism for interaural communication in the computational processing, such as some sort of hardwired connection. This has generally been considered somewhat impractical and unattractive to hearing aid users, and such a constraint clearly complicates commercialization of this type of spatial filtering approach to the noise problem. Although many consumers report that they are so highly motivated to hear with less difficulty in noise that cosmetics are unimportant, a commercially viable beamforming hearing aid system may require some form of wireless data transmission or a cosmetically acceptable wire design.
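The beamforming principle described above can be sketched in the frequency domain: a source straight ahead produces nearly identical phase and level at the two ears, so frequency bins with small interaural differences are kept and the rest are attenuated. This is a hypothetical illustration, not the AudioLogic algorithm; the tolerances and attenuation are assumed values, and a real system would work on overlapped frames and smooth its gains over time.

```python
import numpy as np

def frontal_mask(left_frame, right_frame, phase_tol=0.3, level_tol_db=3.0,
                 atten_db=-20.0):
    """Per-bin gains that favor sound from straight ahead (a sketch).

    A frontal source reaches the two ears with nearly equal phase and level,
    so bins whose interaural phase difference (radians) and level difference
    (dB) fall inside the tolerances keep unity gain; all others are attenuated.
    All thresholds are hypothetical; this is not the AudioLogic algorithm.
    """
    L = np.fft.rfft(left_frame)
    R = np.fft.rfft(right_frame)
    eps = 1e-12
    ipd = np.angle(L * np.conj(R))  # interaural phase difference
    ild = 20.0 * np.log10((np.abs(L) + eps) / (np.abs(R) + eps))  # interaural level difference
    frontal = (np.abs(ipd) < phase_tol) & (np.abs(ild) < level_tol_db)
    gains = np.where(frontal, 1.0, 10.0 ** (atten_db / 20.0))
    # Apply the same gains at both ears so the remaining interaural cues are not altered.
    left_out = np.fft.irfft(L * gains, n=len(left_frame))
    right_out = np.fft.irfft(R * gains, n=len(right_frame))
    return gains, left_out, right_out
```

Note that a source directly behind the head also produces near-zero interaural differences, so this simple rule cannot separate front from back; head shadow at high frequencies or additional microphones would be needed for that.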

Figure 21.

A conceptual representation of the beamforming DSP strategy as developed and investigated at AudioLogic. Sounds from the frontal region can be estimated by phase and magnitude comparisons at the two ears. Attenuation rules are applied to those estimated to arrive from regions other than forward of the listener. (From Schweitzer et al, 1996b.)

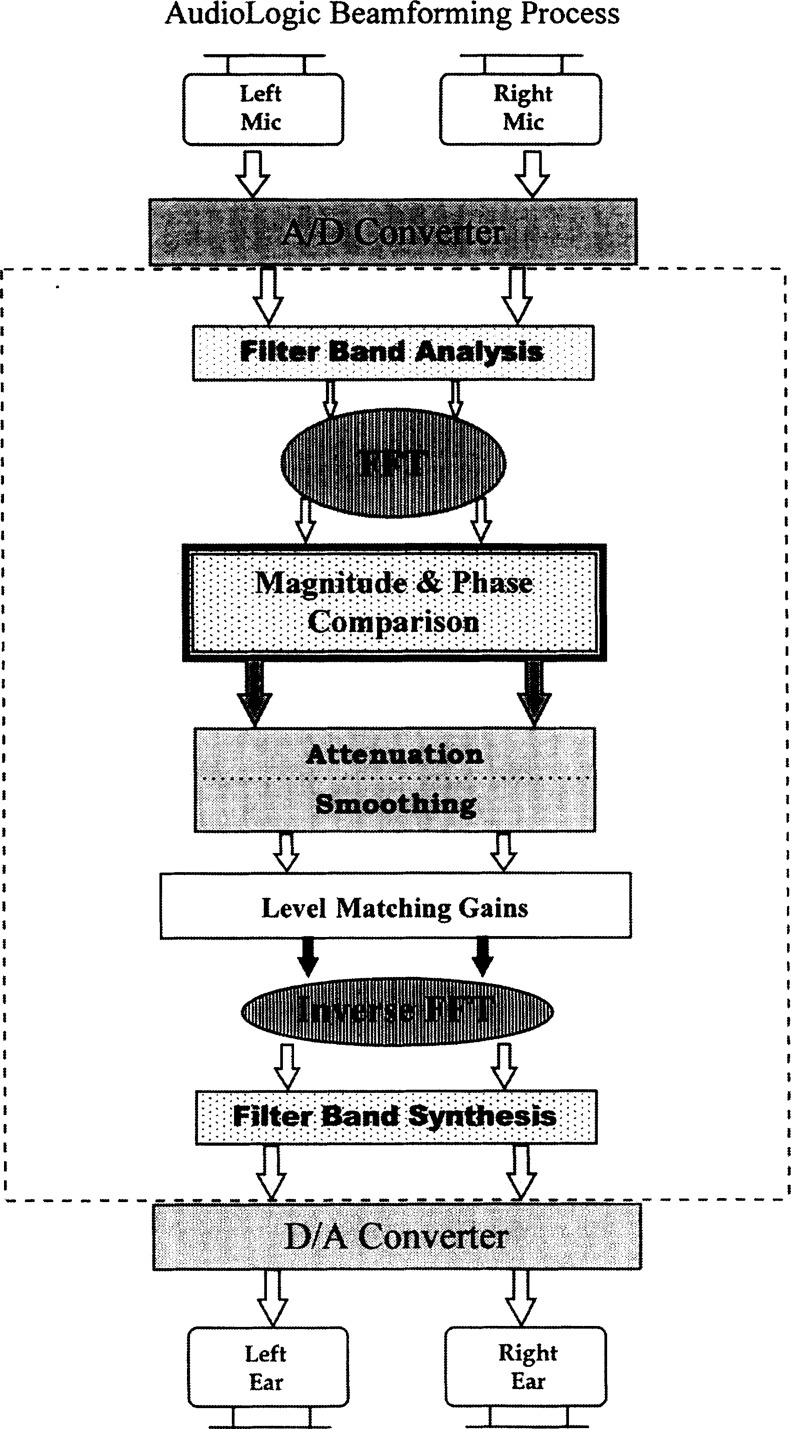

The binaural processing used in the AudioLogic experiments showed that a real-time digital processor could produce a fairly robust beam. In other words, with sufficient processing speed and innovative, computationally efficient algorithms, a spatial filter could be realized in a wearable device. Hearing aid users who tried this beamforming algorithm consistently reported reductions in noise interference (Schweitzer et al, 1996a). While it remains to be seen whether such processing can be implemented in a commercial hearing aid product, it shows definite promise for cochlear implant applications (Margo et al, 1996, 1997; Terry et al, 1994). A basic block diagram of how beamforming can be implemented appears in Figure 22. The illustrated technique is similar to the one used at AudioLogic (Lindemann, 1995, 1996) and to one reported by Kollmeier et al (1993). Essential to the processing is the correlation of the phase and magnitude properties that differ between the ears (the inputs) to the system. This operation, not unlike human auditory processing as it is generally understood (Agnew, 1996b; Blauert, 1983; Yost and Gourevitch, 1987; Yost and Dye, 1997; Zurek, 1993), generates rapid estimates of which sounds have arrived from regions in front of the listener. Those forward-originating signals are treated more favorably than sounds estimated to have originated from the sides: the off-target sounds are attenuated, while the on-target sounds are amplified according to the wearer's audiological requirements.
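The correlation step can be illustrated in the time domain with a plain interaural cross-correlation: the lag at which the two ear signals best match estimates the interaural time difference, and a lag near zero suggests a frontal source. This generic sketch is not the processing of Figure 22; the search limit `max_lag` is a hypothetical parameter that would correspond roughly to the acoustic width of the head.

```python
import numpy as np

def interaural_lag(left, right, max_lag):
    """Estimate the interaural delay in samples by cross-correlation (a sketch).

    Positive values mean the sound reached the left ear first. A lag near zero
    suggests a source in front of (or directly behind) the listener.
    max_lag: hypothetical search limit, roughly the head's acoustic width.
    """
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    lags = range(-max_lag, max_lag + 1)
    # For each lag k, correlate left[n] with right[n + k] over the overlapping samples.
    scores = [np.dot(left[max(0, -k):len(left) - max(0, k)],
                     right[max(0, k):len(right) - max(0, -k)]) for k in lags]
    return lags[int(np.argmax(scores))]
```

A beamformer built on this idea would compute such estimates rapidly and in many frequency bands, then pass or attenuate each band depending on whether its estimated lag is close to zero.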

Figure 22.

A schematic block diagram of the processing approach used by AudioLogic to introduce beamforming into a wearable digital hearing aid system. (From Schweitzer and Krishnan, 1996 with permission.)

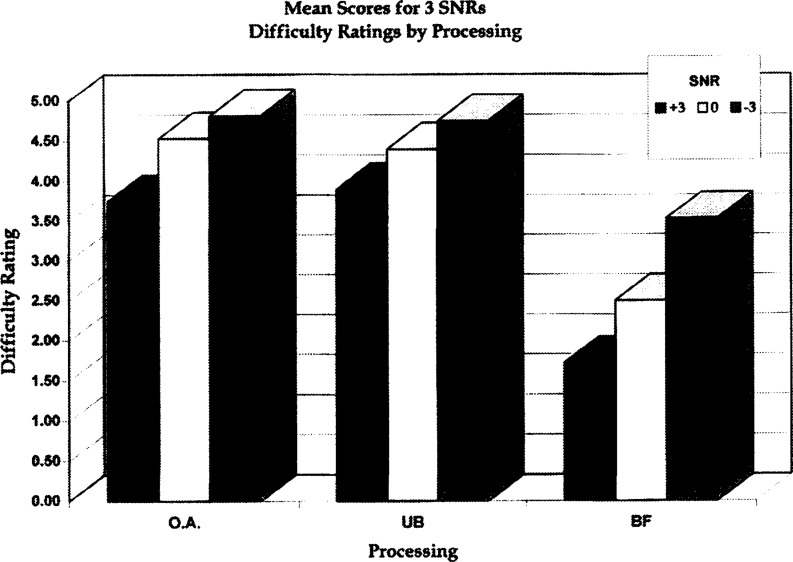

In experiments comparing beamforming processing with subjects' own hearing aids, beamformed “target” signals proved significantly more resistant to noise interference from speech “jammers” located outside the beam area. Some of the mean results reported previously (Schweitzer et al, 1996a) appear in Figure 23 and indicate an unambiguous advantage. The performance measure was a subjective estimate of the “Difficulty” of hearing connected discourse from a frontally located speaker in the presence of four uncorrelated competing voices at the locations shown in the figure.

Figure 23.

Some results of experiments with beamforming. Mean subjective difficulty ratings for 16 subjects (lower numbers indicate less perceived difficulty) for three signal-to-noise ratios with listeners' own hearing aids (O.A.), Unbeamed (UB) digital processing, and with beamforming (BF). (From Schweitzer et al, 1996a with permission.)

An alternative approach to a digital binaural hearing aid system has been pursued by a research team at the House Ear Institute in cooperation with Q-Sound and Starkey Labs (Agnew, 1997; Gao et al, 1995; Gelnett et al, 1995; Soli et al, 1996). Their prototype used a body-worn processor connected to in-the-ear microphones and receivers, and was designed to preserve the amplitude and phase cues at the two ears by means of Finite Impulse Response (FIR) filter techniques. The Cetera DSP hearing aid system that Starkey is preparing to introduce (Wahl et al, 1996) is designed to manipulate the magnitude and phase of incoming sounds to compensate for individual ear canal acoustics and for the alterations of the signal caused by inserting the hearing aids. The intention is to reproduce, without disruption, the rich binaural cues that naturally occur in the unaided ear canal. Conventional hearing aid systems often disrupt these cues because they have no way of accounting for such information. Starkey reports that the Cetera technology should enable users to take better advantage of head shadow effects. Additionally, noise interference may be lessened by facilitating the listener's natural binaural suppression (squelch) processes. The hearing aids are designed to be essentially transparent to binaural acoustic cues. The Cetera fitting system uses a probe-tube measurement approach to, among other things, calculate and correct for the phase and magnitude alterations introduced by the inserted hearing aid.
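One reason FIR filters suit cue preservation is that a filter with symmetric coefficients has linear phase: every frequency is delayed by the same amount, so applying it to both ears leaves the interaural time difference unchanged. The sketch below demonstrates only that property. It is not the House Ear Institute or Cetera design, and the taps (a Hann window, used here as a simple lowpass) are a hypothetical choice.

```python
import numpy as np

def filter_both_ears(left, right, taps):
    """Apply one linear-phase FIR filter to both ear signals (a sketch).

    Symmetric taps give linear phase: every frequency is delayed by the same
    (len(taps) - 1) / 2 samples, so interaural time differences are preserved.
    The taps are hypothetical; a fitted system would derive its filters from
    measurements such as probe-tube data.
    """
    taps = np.asarray(taps, dtype=float)
    if not np.allclose(taps, taps[::-1]):
        raise ValueError("taps must be symmetric to guarantee linear phase")
    return np.convolve(left, taps), np.convolve(right, taps)
```

With an 11-tap filter, clicks arriving at the left ear at sample 20 and at the right ear at sample 23 emerge with their peaks at samples 25 and 28: both are delayed by 5 samples, and the 3-sample interaural difference survives.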

Further development work on DSP hearing aids is expected to give greater attention to the natural interaural acoustic conditions of hearing aid users. Such attention was clearly outside the realm of possibility with traditional analog hearing aids, in which the two instruments operated independently.

DIGITAL ENHANCEMENT OF SPEECH SIGNALS

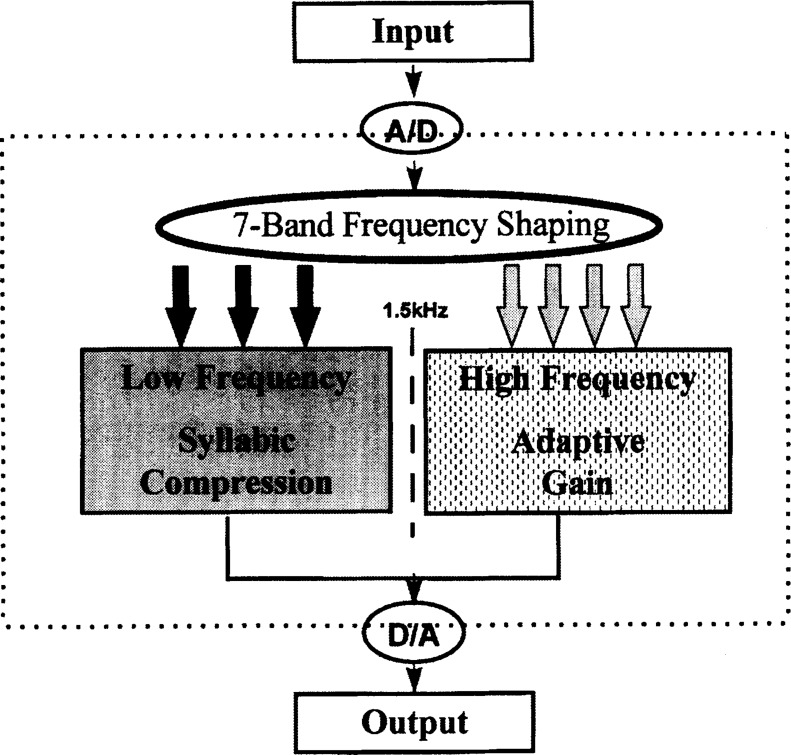

Loudness Compensation

Of the problems on Moore's list, aberrations of loudness perception have probably received the most attention. Many digital single-band and multiband algorithms have been proposed and evaluated in recent years. Kuk (1996) provides a comprehensive discussion of many of the issues related to nonlinear, compression designs. The general assumption is that some type of nonlinear amplification may be desirable for many sensorineural hearing losses. Whether compression should be applied over the entire dynamic range or only to the highest-level signals, as in compression limiting, and how different forms of nonlinear processing should best be allocated across bands, remain quite murky. Recent reports by Lunner et al (1997) and Van Harten-de Bruijn et al (1997) further illustrate the imprecise and hard-to-predict relations among listener performance, subjective impressions, conventional audiometric patterns, and the various multiband nonlinear processing approaches possible in digital amplifiers.
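To make the allocation question concrete, here is a minimal sketch of a static compression rule applied independently in each band: below its threshold a band receives its full gain, and above it the output grows only 1/ratio dB per dB of input. All parameter values are hypothetical, and the example is generic rather than any product's rule. Giving one band a high threshold and a large ratio approximates compression limiting.

```python
import numpy as np

def band_gains_db(level_db, gain_db, threshold_db, ratio):
    """Static per-band compression gain in dB (a sketch; parameters hypothetical).

    Each argument is a scalar or an array with one entry per band. Below the
    threshold the band gets its full gain; above it, output level rises only
    1/ratio dB per dB of input, so gain falls as input level rises.
    """
    level_db, gain_db, threshold_db, ratio = (
        np.asarray(a, dtype=float) for a in (level_db, gain_db, threshold_db, ratio))
    excess = np.maximum(level_db - threshold_db, 0.0)  # dB above the compression threshold
    return gain_db - excess * (1.0 - 1.0 / ratio)
```

For example, with 20 dB of gain in each of three bands, thresholds of 50, 50, and 85 dB and ratios of 2, 2, and 10, band input levels of 40, 70, and 90 dB receive 20, 10, and 15.5 dB of gain: the first band is below threshold, the second is in wide-range compression, and the third is near-limiting.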

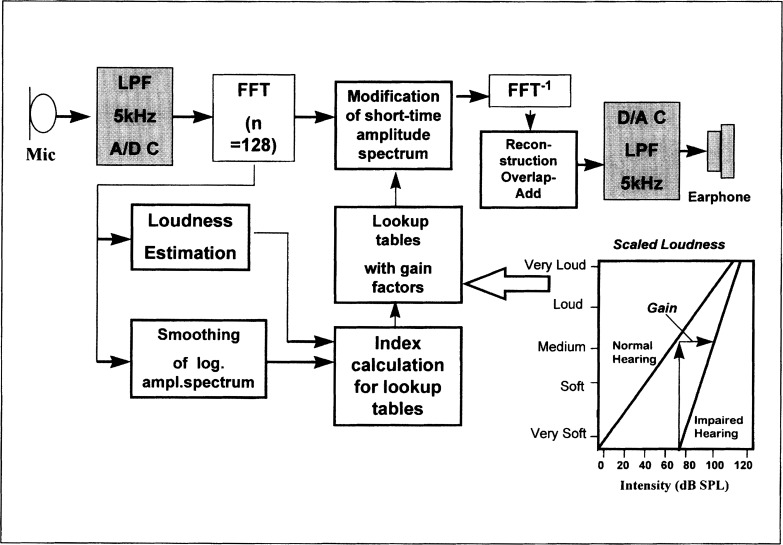

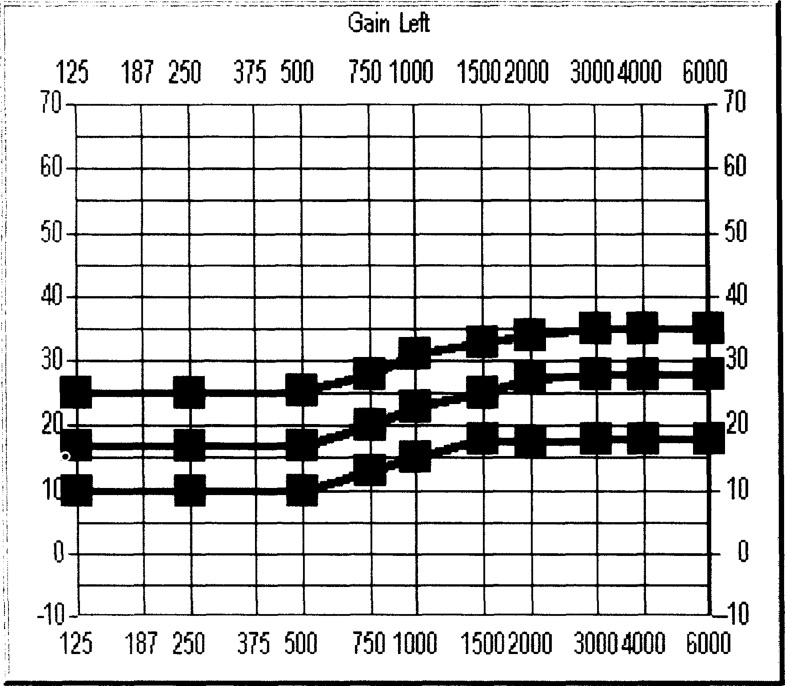

An example of an earlier application of a digital multiband nonlinear correction approach (Dillier et al, 1993) is given in Figure 24; note the integration of listener loudness data. The use of listener-interactive loudness scaling to drive the level-dependent gains, as in the processing used in Switzerland by Dillier and his colleagues (see also Dillier and Frolich, 1993), is a concept also expressed in work by Kollmeier (1990), Kollmeier et al (1993), and Kiessling (1995). Variations on this idea, in which listener responses to test signals delivered in situ drive digitally controlled filter gain adjustments, also appear in such products as the Danavox/Madsen ScalAdapt (Grey and Dyrlund, 1996; Schweitzer, 1997a), the ReSound RELM fitting system (Humes et al, 1996), and the Widex Senso (Sandlin, 1996).
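One way loudness scaling data can be turned into level-dependent gains is loudness matching: for each input level, find the loudness category a normal-hearing listener would report, then the level at which the hearing-impaired listener reports the same category, and take the difference as the gain. The sketch below assumes a categorical scale running from 0 to 50, linear interpolation between measured points, and made-up example data; it is not the procedure of Dillier et al or of any product named above.

```python
import numpy as np

def loudness_gain_table(input_levels, normal_levels, normal_cu,
                        impaired_levels, impaired_cu):
    """Level-dependent gains from categorical loudness scaling (a sketch).

    *_levels: presentation levels in dB; *_cu: loudness categories reported at
    those levels (assumed monotonic). Curves are linearly interpolated.
    """
    # Loudness a normal-hearing listener would report at each input level.
    target_cu = np.interp(input_levels, normal_levels, normal_cu)
    # Level at which the impaired listener reports that same loudness.
    needed_levels = np.interp(target_cu, impaired_cu, impaired_levels)
    return needed_levels - np.asarray(input_levels, dtype=float)

# Hypothetical loudness growth data, for illustration only.
normal = ([0, 30, 60, 90, 110], [0, 10, 25, 40, 50])
impaired = ([40, 55, 75, 95, 110], [0, 10, 25, 40, 50])
gains = loudness_gain_table([30, 60, 90], *normal, *impaired)
```

With these made-up curves the gains come out as 25, 15, and 5 dB for inputs of 30, 60, and 90 dB, so the recruiting listener's steeper loudness growth translates directly into compressive, level-dependent gain.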

Figure 24.

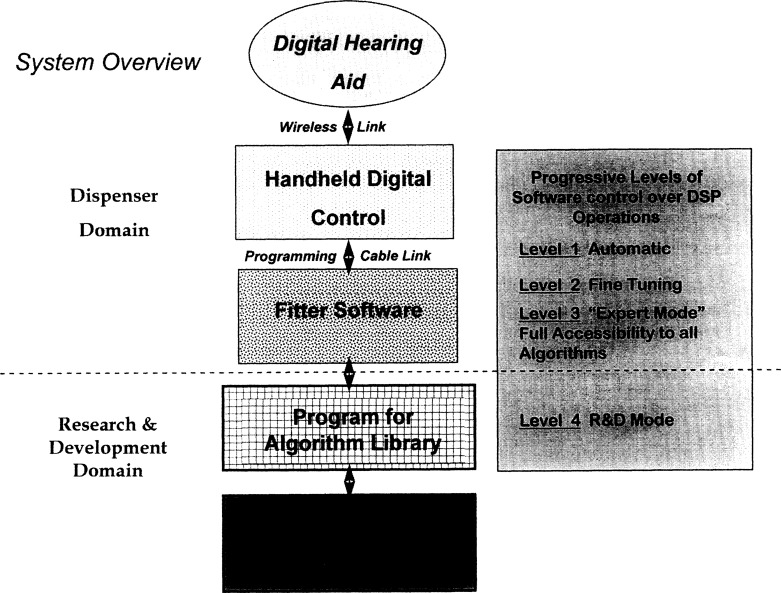

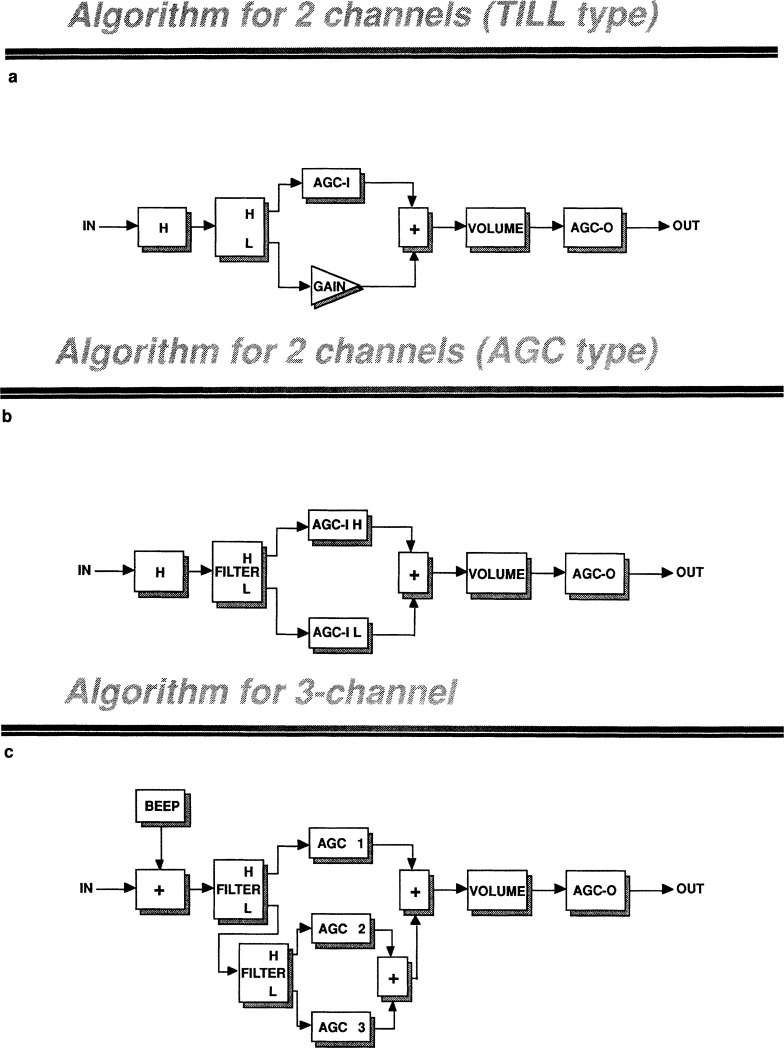

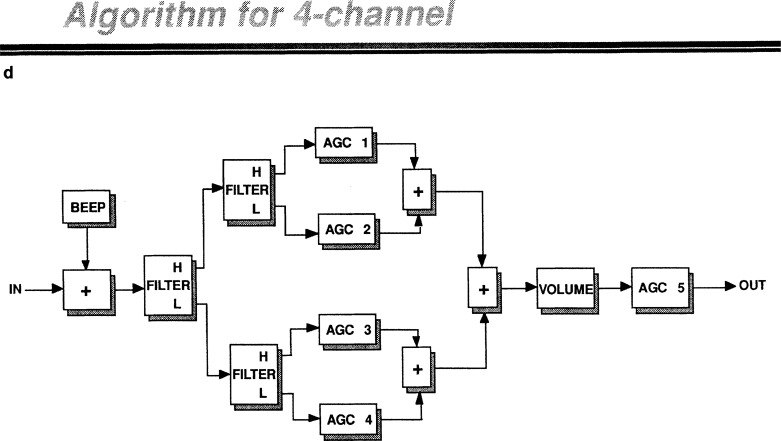

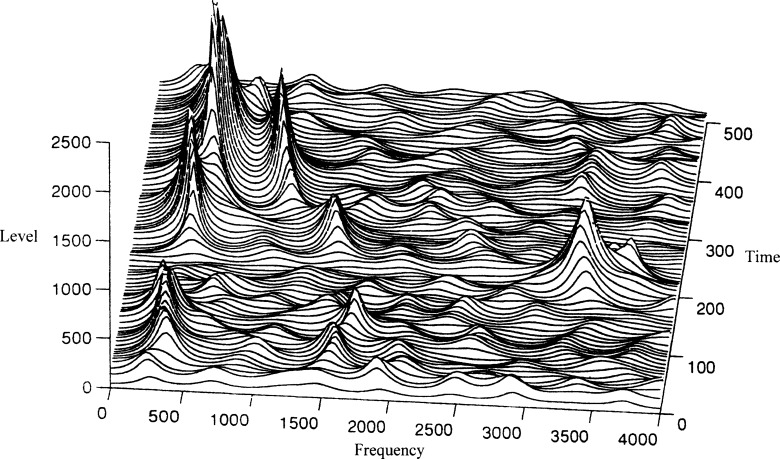

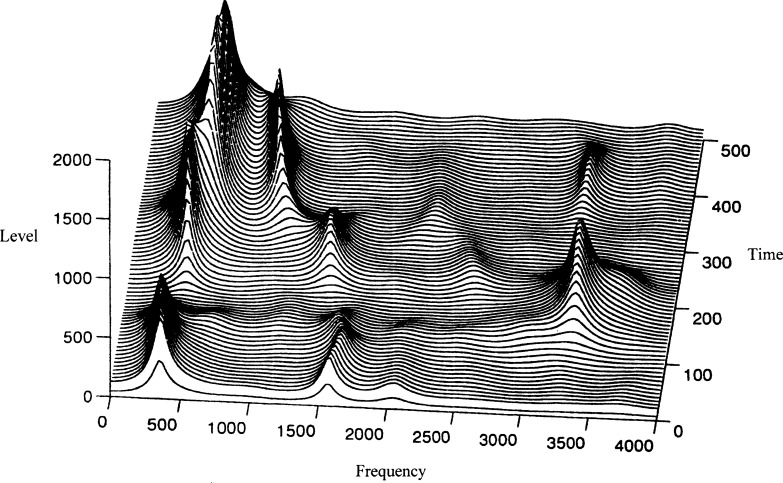

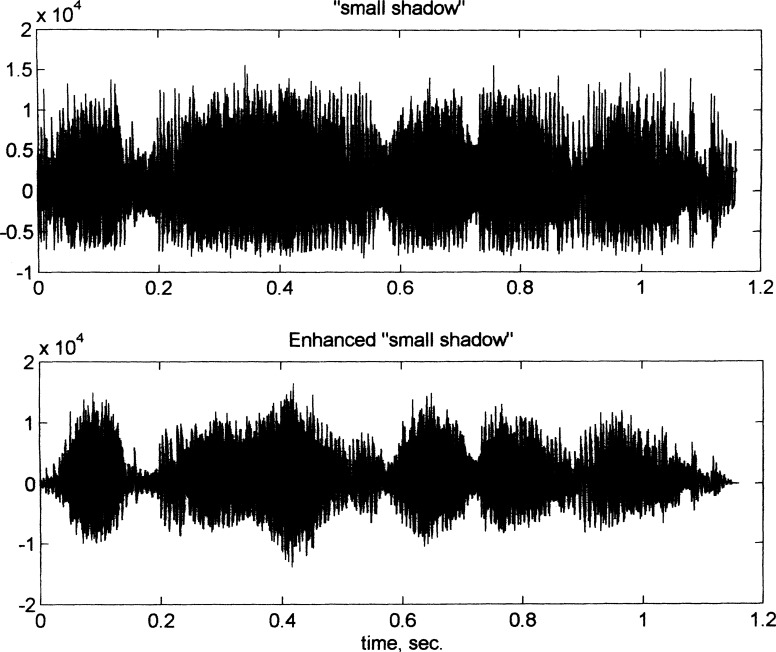

Application of digital processing for loudness compensation in a Swiss laboratory DSP hearing aid reported by Dillier et al (1993). Note the low-pass filter after the input for anti-aliasing, and a similar operation at the return to analog (D/A). In this design, listener loudness scaling was fed back into the gain tables and used in the amplitude modification stage. (Adapted from J Rehab Res Dev Vol 30(1) 1993 with permission.)