Abstract

This study re-examines the issue of how much of working memory storage is central, or shared across sensory modalities and verbal and nonverbal codes, and how much is peripheral, or specific to a modality or code. In addition to the exploration of many parameters in 9 new dual-task experiments and re-analysis of some prior evidence, the innovations of the present work compared to previous studies of memory for two stimulus sets include (1) use of a principled set of formulas to estimate the number of items in working memory, and (2) a model to dissociate central components, which are allocated to very different stimulus sets depending on the instructions, from peripheral components, which are used for only one kind of material. We consistently find that the central contribution is smaller than was suggested by Saults and Cowan (2007), and that the peripheral contribution is often much larger when the task does not require the binding of features within an object. Previous capacity estimates are consistent with the sum of central plus peripheral components observed here. We consider the implications of the data as constraints on theories of working memory storage and maintenance.

A key issue in cognitive psychology is the nature of limitations in working memory, the small amount of information temporarily held and used in various cognitive tasks. An important question is to what extent working memory storage depends on a mental faculty that is general across domains as opposed to domain-specific (e.g., Kane et al., 2004). Dual-task methods lend themselves to the examination of this question and have a long history of use (e.g., Allen, Baddeley, & Hitch, 2006; Baddeley, 1986; Baddeley & Hitch, 1974; Cocchini, Logie, Della Sala, MacPherson, & Baddeley, 2002; Cowan & Morey, 2007; Fougnie & Marois, 2011; Morey & Cowan, 2004, 2005; Morey, Cowan, Morey, & Rouder, 2011; Saults & Cowan, 2007; Stevanovski & Jolicoeur, 2007). With this method, it has been fairly well established that there is some dual-task interference between very different tasks. To some degree, for example, the need to retain visual items interferes with concurrent storage of verbal items, and vice versa. Yet, this methodology has never lived up to its promise because, we contend, a thorough analysis of the problem has not been available.

We apply what we believe to be an improved methodology to ask how much of the working memory storage capacity is central, or capable of being allocated to different materials to be remembered according to task demands. This concept is separate from peripheral storage, which has different varieties, each of which can only be allocated to a single type of stimulus regardless of task instructions. In our case, storage capacity could be allocated to colored objects (sometimes differing also in shape) and/or words (spoken or, in one experiment, written). Assuming that there is a limited amount of central storage to be allocated freely, the number of colored objects remembered should be reduced when the participant must also remember words at the same time, and vice versa.

A dual-task design, in which memory for one stimulus set or for two sets of different types is required on a given trial, is needed to estimate central and peripheral components of working memory. The reason is that memory for a single stimulus set theoretically can be based on multiple storage mechanisms, only some of which are shareable resources. With a single type of stimulus, these mechanisms cannot be separated. Here we apply a model in which memory for items in each modality is assumed to come from the sum of the contributions of central and peripheral components. The central component can be estimated as the portion of memory for stimuli of a certain type (e.g., colors) that has to be shared with stimuli of a second type (e.g., words) if that second type also is to be remembered. The peripheral component can be estimated as the portion of memory for stimuli of a certain type that does not have to be shared. Before the results can be analyzed into central and peripheral components, the number of items of each type retained in working memory must be estimated, as in past work (Cowan, 2001; Cowan, Blume, & Saults, 2013), separately for single- and dual-task conditions (cf. Saults & Cowan, 2007). Then certain subtractions between conditions can be carried out to estimate the central and peripheral components, as we explain below. First, though, we discuss in more detail the theoretical implications of central and peripheral storage.

THEORETICAL IMPLICATIONS OF CENTRAL AND PERIPHERAL STORAGE

The notion of central storage is assumed to involve categorical as opposed to sensory information. Categorical information refers to information that allows the assignment of each item to one of a finite number of categories. The stimuli in our experiments were chosen so that categorical information would suffice to distinguish the different items. Information about a color is categorical in our experiments because it is enough to remember the conventional color group of each stimulus (red, blue, green, etc.) to perform correctly. We did not require non-categorical color information, as we did not test multiple shades of the same basic color. The shapes we used included only one of each familiar category (star, square, circle, and so on) and therefore, again, could be retained as categorical information. The same was true of spoken digits or words; there are a finite number of categories and a very small number of different words were used over and over in any one experiment. Finally, although spoken voices must at first be distinguished on the basis of fine-grained acoustic information, the same few, quite discriminable voices were presented over and over in any one experiment, to allow categories reflecting the voices to be built up in memory quickly. Thus, we assess whether the amount of categorical information in a central part of working memory may be limited across modalities or types of stimuli, with interference even across different modalities.

Unlike categorical information, sensory information appears to be represented separately in each modality (vision and hearing in our case) with little interference between modalities (e.g., Cowan, 1988). However, sensory information is quickly lost (e.g., Darwin, Turvey, & Crowder, 1972; Sperling, 1960), so categorical information is needed for recall in working memory tasks. We ensure that this is the case in most of our experimental conditions by including a pattern mask after a reasonable perceptual period, to overwrite any residual sensory information (cf. Saults & Cowan, 2007).

It is also possible to have peripheral information that is categorical rather than sensory in nature. A possible example is phonological information, which can be derived from either spoken or written words (e.g., Conrad, 1964). According to one traditional theory of working memory (Baddeley, 1986; Cocchini et al., 2002), all working memory storage could be peripheral. According to this theory, phonemes might be retained in one mechanism termed the phonological store, whereas visual or spatial features (line orientations, colors, spatial arrangements of objects, visual patterns, and so on) might be retained in another mechanism termed the visuospatial sketchpad. Provided that sufficient attention is made available to encode items of both types, the number of phonemes stored in working memory would not depend on the number of visuospatial elements stored concurrently, nor vice versa. Both would therefore be considered peripheral types of storage.

If the numbers of verbal and visuospatial units trade off, so that storage of one type limits concurrent storage of the other type, then according to our terminology there is a central storage mechanism that must be shared between the different types of information. Theories differ as to the amount of central storage versus peripheral storage to expect. One way in which central storage could work is that there could be a limit on the total number of items that can be remembered (cf. Cowan, 2001; Cowan, Rouder, Blume, & Saults, 2012). Each item in this approach is called a chunk of information (Miller, 1956), i.e., a group of elements that are strongly associated with one another and together form a member of a conceptual category. For example, a string of phonemes making up a known word together comprises a single chunk, as does an array of lines or curves making up a known geometrical shape such as a square, circle, or other familiar shape. According to this theoretical view, the central component of working memory would be limited to several chunks at once, and these chunks could be of any type (e.g., spoken words, shapes) or combination of types.

Peripheral stores could supplement central storage. To measure central storage, therefore, a method must be developed to separate it from peripheral storage; we explain our method for doing so below.

According to some other theoretical views, central storage should exist but it should be more severely limited. Attention could be focused on different memory representations in a rotating fashion, at a certain rate, to refresh them before a decay process makes them inaccessible to working memory (cf. Barrouillet, Portrat, & Camos, 2011). The working memory information could be stored as activated chunks in long-term memory, which is presumably not capacity-limited except that items soon become inactive because of decay, unless attention is used to refresh them (Camos, Mora, & Oberauer, 2011; Cowan, 1988, 1992). In that case, there would be a tradeoff between different types of storage; if attention cannot refresh all items at once, then some items will decay when they are not being refreshed. Yet, the sharing of the refreshing process between modalities might be enough to produce only a relatively small amount of tradeoff between visual and verbal memory, and therefore only a small estimate of central storage.

For another popular view, we cannot make predictions, because the amount of central storage it implies appears to have been left unspecified. Specifically, Baddeley (2000, 2001) described an episodic buffer component that could hold several chunks and could supplement the phonological and visuospatial stores from his earlier model. This episodic buffer clearly is said to hold various types of information, including the binding between information from other stores, and semantic information. It is unclear, however, whether this buffer is used only when the other stores are inappropriate to hold the information. For example, if words and visual objects were held in separate buffers, could the episodic buffer hold a version of the items concurrently, perhaps in a more semantic form? Because we do not know, we will not suggest predictions of the total amount of central and peripheral storage based on this view.

The present experiments are designed to assess how much information is central and how much is peripheral, despite not knowing the exact nature of the central or peripheral memory representations. This assessment helps greatly to constrain models of working memory. Below, we discuss our analytic technique and its boundary conditions or limits, the historical context that motivated it, and the application of the technique to the present set of experiments.

A NEW ANALYTIC TECHNIQUE AND ITS LIMITS

Our work provides a new analytic technique to assess central versus peripheral storage, as described above. The steps in doing so involve (1) the use of a discrete slot model to estimate the number of items in working memory appropriate to the test procedure; (2) examination of memory for two different stimulus sets, separately and also when memory for both sets is required at once; and (3) use of a new method to distinguish central and peripheral components of working memory, based on these data. To anticipate the results, relatively plentiful information about item features (in this study, color, shape, voice, and word) tends to be held in peripheral storage, whereas feature binding information does not seem to benefit from peripheral storage as much. For both item and binding information, a small but typically above-zero amount of information is held centrally.

Limits of the Method

Before describing the analytic technique, we hasten to mention two important constraints in our investigation. First, we do not examine the conflict between two sets of stimuli with the same code (two nonverbal object sets or two verbal sets). Undoubtedly, more interference between sets at the time of memory maintenance would be obtained in studies with two stimulus sets of the same kind than with two different kinds (see for example Cowan and Morey, 2007; Oberauer, Lewandowsky, Farrell, Jarrold, & Greaves, 2012). It is of course also possible for visual stimuli to be encoded verbally (e.g., Conrad, 1964) or for verbal stimuli to be encoded visually (e.g., Logie, Della Sala, Wynn, & Baddeley, 2000), which would allow for more interference during maintenance, but any such recoding should be minimized by the presentation of verbal and visual stimuli on the same trial. Still, we used articulatory suppression to avoid verbal rehearsal of either stimulus set. This technique should also prevent the verbal coding of visual materials (cf. Baddeley, Lewis, & Vallar, 1984). Our results should not be taken to indicate the most interference that is possible between two sets, only the amount of interference between two rather different sets at the time of memory maintenance.

A second constraint is roughly the converse of the first. We cannot rule out the possibility that if one stimulus set required the retention of object identities whereas another stimulus set required the retention of something different, such as the precise locations of a set of objects, there would be no interference between them at all. This, in fact, has been proposed in one recent investigation (Marois, 2013). Other investigations have similarly emphasized a separation between item capacity and precision as separate resources (e.g., Machizawa, Goh, & Driver, 2012; Zhang & Luck, 2008). However, one must also consider the possibility that a spatial arrangement of locations can be combined to form a spatial configuration, a kind of item amalgamation or chunking that would in effect reduce the number of items to be held in memory independently (Jiang, Chun, & Olson, 2004; Miller, 1956). In any case, any conclusions we draw are specific to situations in which both sets to be remembered contain categorizable items or identities, not locations.

Methods of Analysis for Several Slightly Different Procedures

Previous dual-task memory studies have examined the tradeoff between memory for one stimulus set and memory for the other using various metrics (e.g., Cocchini et al., 2002; Fougnie & Marois, 2011; Morey et al., 2011). These metrics, however, have not been based on the estimated number of items in memory, which must take into account the effects of guessing in a recognition task. The importance of such estimates is that they allow a principled description of the allocation of storage to different kinds of items. We now have well-considered estimates of the number of items in storage for various versions of tasks in which a single set of items is followed by a single-item probe to be judged the same as one of the items in the set or different from all of them (Cowan, 2001; Cowan, Blume, & Saults, 2013; Rouder et al., 2008; Rouder, Morey, Morey, & Cowan, 2011).

In the tasks we will use, there is a set of N items to be remembered (with no duplicates in the set), followed by a probe to be judged the same as one item or different from all of them. The assumption is that k of them actually are remembered and we wish to estimate an individual's k value. Response to the probe can be based on a comparison of that probe to a single list or array item in working memory, if the probe appears in a way that makes clear which item may be identical to the probe. If, on the other hand, the task is such that the probe is always presented without an identifying cue, it must be compared to all items in working memory. Finally, if an item matching the probe is not found in working memory and some items were forgotten, the response can only be based on a guess. Based on this fundamental logic, Appendix A reviews the formulae used for slightly different procedures in this study, to estimate the number of items held in working memory. We will discuss these methods to estimate k before going on to explain how they are used to estimate central and peripheral components of working memory.

Probe in the Location of a Specific Stimulus, with an Old or New Feature

In each case, the formula for items in working memory shown and explained in Appendix A represents a logical analysis of the task demands. In one visual task version we use, an array is followed by a single-item probe appearing at the location of an array item; the probe is either identical to that array item (e.g., both of them blue) or differs from it by one feature (e.g., the probe red unlike any item in the array). This task version is also extended here to verbal lists in some experiments, by presenting a probe word within a series of identical nonverbal sounds to indicate the serial position of the probed item.

For this kind of task in which a specific stimulus is probed, if the participant has the probed item in working memory, he or she presumably will correctly judge whether it is the same as or different from the probe. If the item at the probed location in the array is not in working memory, either because it was not presented or because it was presented but not remembered, the participant will not have any relevant information and must guess.

This is the model proposed by Cowan (2001) and, as shown in Appendix A, the formula is simply k=N(H-F), where H is the proportion of hits, i.e., changes successfully detected, and F is the proportion of false alarms, i.e., incorrect reports of a change. This kind of model makes predictions that have been strikingly confirmed for visual arrays (Rouder et al., 2008). It correctly predicts that the receiver operating characteristic (ROC) curve should be linear, whereas signal detection theory incorrectly predicts a curvilinear ROC curve, and Cowan's model also correctly predicts exactly how the effects of criterion bias should be combined with k for different values of N. Donkin, Nosofsky, Gold, and Shiffrin (2013) further show that models with discrete slots provide the preferred account of reaction times.
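The in-location logic can be made concrete with a short Python sketch. It assumes, as the model above does, that the probed item is either fully held in working memory or absent, and it adds one hypothetical guessing parameter, `guess`, the probability of answering "change" when the probed item is not held. Generating hit and false-alarm rates from a known k and then applying k=N(H-F) recovers that k, which is the sense in which the formula corrects for guessing. The function names are our own, not taken from any published code.

```python
from __future__ import annotations


def k_in_location(n_items: int, hit_rate: float, fa_rate: float) -> float:
    """Cowan (2001) estimate for a probe at the location of one item: k = N(H - F)."""
    return n_items * (hit_rate - fa_rate)


def predicted_rates_in_location(n_items: int, k: float, guess: float) -> tuple[float, float]:
    """Hypothetical forward model: the probed item is held with probability k/N;
    otherwise the participant guesses "change" with probability `guess`."""
    p_known = k / n_items
    hit = p_known + (1 - p_known) * guess  # change trials: item known, or a lucky guess
    fa = (1 - p_known) * guess             # no-change trials: only guesses produce errors
    return hit, fa


if __name__ == "__main__":
    # Simulated (not observed) rates for N = 6, k = 3, guess = 0.4.
    h, f = predicted_rates_in_location(6, 3.0, 0.4)
    print(round(h, 3), round(f, 3), round(k_in_location(6, h, f), 3))  # 0.7 0.2 3.0
```

Because the guessing term appears in both rates, subtracting F from H cancels it, leaving k/N.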

The essential assumption of the model is that the knowledge of a particular item is all-or-none; there can be no partial knowledge of an item in the model. This need be assumed only insofar as the tested feature is concerned. For example, if one always tests for color and never for shape, the model's assumptions could be satisfied sufficiently to make it appropriate as a measurement model for the colors, regardless of whether knowledge of the shapes is as good as knowledge of the colors. Luck and Vogel (1997) suggested that knowledge of an item in working memory carries with it knowledge of all of its features, but that assumption has been brought into question (Cowan, Blume, & Saults, 2013; Oberauer & Eichenberger, 2013; Xu, 2002) and we no longer hold it.

The assumption of discrete knowledge of the items is an oversimplification. Zhang and Luck (2008) devised a technique in which the items were drawn from a continuum: colors from any point on a color wheel or figures rotated to any orientation. The response was to reproduce the stimulus value as a location on a response wheel, so that the precision of the memory representation could be measured. Zhang and Luck found that participants did not spread their attention evenly over all items, which would have produced steadily less precise representations as the number of array items increased; instead, attention was spread to only about three items. In fact, participants are unable to spread attention to more objects in working memory at once even when the incentives favor it (Zhang & Luck, 2011). The evidence suggests that when there is only one item, precision is greatest, whereas precision is diminished with two items and further with three items, reaching a stable plateau after that (for further evidence see Anderson, Vogel, & Awh, 2011).

The simpler model of Cowan (2001) and the other models used in this article do not include a precision factor. Our assumption based on the evidence is that the precision factor is likely to be important only when the stimuli are chosen from a continuum or differ by small amounts. We chose stimuli that were designed to be categorically different, with features including colors with different basic names (red, green, etc.), simple shapes (star, cross, etc.), simple familiar words, and voices that sounded very different from one another. If there is a partial loss of precision in the representation based on decay or interference, we simply assume that this degradation does not matter until it passes some threshold.

Not everyone in the field is convinced that the models with a limited number of discrete slots are correct. Bays and Husain (2008) argued that attention can be spread thinly across all items (but see the response by Cowan & Rouder, 2009). Van den Berg, Shin, Chou, George, and Ma (2012) proposed a model with a variable precision of items (as did Fougnie, Suchow, & Alvarez, 2012) and Sims, Jacobs, and Knill (2012) proposed a model in which the number of items in working memory can vary from trial to trial. Although there may well be some truth to these approaches that include precision, they do not seem to serve as an argument against using a measurement model with a simpler formula that just counts how many items are in working memory. An item might be considered out of working memory because there is no information about it, or because the working memory representation is too poor to be used to guide a response to the probe. Given that our aim is to determine how much of working memory is central between two different stimulus sets or peripheral to just one of them, it seems at worst a helpful and convenient simplification to use discrete slot models for this purpose, given the categorically distinct stimuli that we use in these experiments.

Probe in a Central Location, to be Matched to All List or Array Items

New-feature probe

In another task version that we use, a probe is centrally presented and is identical to one array item or has a new feature not found in the list or array. In the verbal list version, a single probe is presented and could match any list item, or none of them. In either version, if there is a matching item from the array or list in working memory, the participant presumably will know that there was a match. If there is no matching item in working memory, however, and there are some array items not represented in working memory, the participant has no way to know whether the forgotten items do or do not include the probe, and therefore the participant must guess. This model was presented by Cowan, Blume, and Saults (2013). Its formula is k=N(H-F)/H, with terms as defined above; that is, it is the formula for in-location probes divided by the proportion of changes detected, or hit rate. This formula is similar, but not identical, to a model for a whole-array probe provided by Pashler (1988), as Appendix A shows.
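A minimal Python sketch of the central new-feature logic follows. The forward model is our reading of the description above, with a hypothetical guessing rate `guess`: a new-feature probe never matches a held item, so unless every item is held the participant must guess, and H therefore equals the guessing rate. Solving F=(1-k/N)H for k gives k=N(H-F)/H. The commonly cited form of Pashler's (1988) estimate, k=N(H-F)/(1-F), is included only to show that the two formulas diverge for the same rates; Appendix A remains the authoritative comparison.

```python
from __future__ import annotations


def k_central_new_feature(n_items: int, hit_rate: float, fa_rate: float) -> float:
    """Cowan, Blume, and Saults (2013) estimate for a central new-feature probe: k = N(H - F)/H."""
    if hit_rate <= 0:
        raise ValueError("hit rate must be positive")
    return n_items * (hit_rate - fa_rate) / hit_rate


def k_pashler(n_items: int, hit_rate: float, fa_rate: float) -> float:
    """Commonly cited form of Pashler's (1988) whole-array estimate, for comparison only."""
    if fa_rate >= 1:
        raise ValueError("false-alarm rate must be below 1")
    return n_items * (hit_rate - fa_rate) / (1 - fa_rate)


def predicted_rates_central(n_items: int, k: float, guess: float) -> tuple[float, float]:
    """Hypothetical forward model of the central new-feature task."""
    p_known = k / n_items
    # A new-feature probe matches nothing held; unless all N items are held,
    # a forgotten match cannot be ruled out, so "change" is a guess.
    hit = 1.0 if k >= n_items else guess
    # A matching probe is recognized when its item is held; otherwise guess.
    fa = (1 - p_known) * guess
    return hit, fa


if __name__ == "__main__":
    h, f = predicted_rates_central(5, 2.0, 0.6)  # simulated, not observed, rates
    print(round(k_central_new_feature(5, h, f), 3))  # 2.0
    print(round(k_central_new_feature(4, 0.9, 0.2), 3), round(k_pashler(4, 0.9, 0.2), 3))  # 3.111 3.5
```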

Feature-binding probe

Finally, we also used a task in which a central probe was either identical to one list item, or else consisted of a recombination of the features of two items (the shape of one item combined with the color of another, or the voice of one item combined with the word identity of another). In this task, if there is a match, the participant can get the answer by having the matching item in working memory. If there is no match, then the participant can get the answer by having in working memory either of two items: for example, either the item with a shape matching the visual probe or the item with the color matching the visual probe. If neither of these items is in working memory, the participant must guess. This model, too, was presented by Cowan, Blume, and Saults (2013). Its form is complex, as indicated in Appendix A.
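Because the Appendix A formula is not reproduced here, the following Python sketch is only a simplified reconstruction from the verbal description above, not the model of Cowan, Blume, and Saults (2013). It assumes that items are held whole (ignoring the possibility, discussed later, that an object is retained with only one of its features) and that a single hypothetical guessing rate `guess` applies whenever the participant is uninformed. A recombination probe is then missed only if both source items are among the N-k forgotten ones. Eliminating the guessing rate leaves a quadratic in N-k. One consequence of these assumptions is a ceiling: perfect performance returns k=N-1, because holding N-1 items already suffices when no guessing occurs.

```python
from __future__ import annotations

import math


def predicted_rates_binding(n_items: int, k: float, guess: float) -> tuple[float, float]:
    """Hypothetical forward model for a central feature-binding probe (whole items held)."""
    if n_items < 2:
        raise ValueError("a recombination probe needs at least two items")
    p_match_known = k / n_items
    # Probability that both items contributing to a recombined probe were forgotten.
    p_neither = (n_items - k) * (n_items - k - 1) / (n_items * (n_items - 1))
    hit = (1 - p_neither) + p_neither * guess  # recombined probe correctly called "different"
    fa = (1 - p_match_known) * guess           # matching probe wrongly called "different"
    return hit, fa


def k_binding(n_items: int, hit_rate: float, fa_rate: float) -> float:
    """Invert the simplified model; valid for 0 <= k <= N - 1."""
    # With m = N - k, eliminating the guessing rate gives
    #   m^2 - (1 + F*N) m + [F*N - (1 - H) N (N - 1)] = 0,
    # and the larger root is the one consistent with k <= N - 1.
    n = n_items
    b = 1 + fa_rate * n
    c = fa_rate * n - (1 - hit_rate) * n * (n - 1)
    disc = b * b - 4 * c
    if disc < 0:
        raise ValueError("rates are inconsistent with the simplified model")
    m = (b + math.sqrt(disc)) / 2
    return n - m


if __name__ == "__main__":
    h, f = predicted_rates_binding(6, 3.0, 0.3)  # simulated rates: 0.86, 0.15
    print(round(k_binding(6, h, f), 3))  # 3.0
```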

Central and Peripheral Components Based on Single and Dual Tasks Together

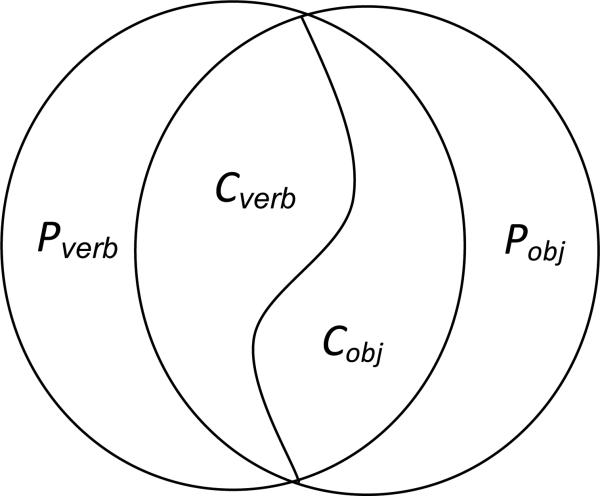

The present work extends the discrete-slots analysis of items in working memory (Appendix A) to tasks with two sets of items potentially in competition for memory capacity. Figure 1 is a schematic diagram illustrating how this application of the capacity-estimation models to the dual-task situation occurs when there is one set of verbal items and one set of nonverbal objects to be remembered. The number of items in working memory is partitioned into four subsets: the number of verbal items that are retained no matter what the task instructions, or Pverb; the number of nonverbal objects that are retained no matter what the task instructions, or Pobj; the number of slots that are filled with verbal items when both sets are to be remembered, but with objects when only those are to be remembered, or Cverb; and the number of slots that are filled with nonverbal objects when both sets are to be remembered, but with verbal items when only they are to be remembered, or Cobj. In this nomenclature, P stands for peripheral and C stands for central. Moreover, conceptually we care about the total number of slots that can be allocated freely to either verbal or nonverbal information, or C, such that C = Cverb + Cobj. These quantities can be calculated if one has estimates of the number of objects and verbal items retained both when the task is to retain only one set, and when the task is to retain both objects and verbal items at the same time. For example, the difference between the number of verbal items remembered alone and the smaller number of verbal items remembered in a dual task yields an estimate of the number of verbal items that were sacrificed for the sake of object memory in the dual task, Cobj. When one adds this to the converse, namely the number of objects sacrificed for the sake of verbal information in a dual task, Cverb, the sum C is obtained and is termed the central portion of working memory. C is thus the portion that can be reallocated depending on task demands. It also follows that, in a single-stimulus-set condition, memory results from the peripheral portion plus the central portion: for verbal items, C+Pverb and, for nonverbal objects, C+Pobj. These expressions allow the calculation of the peripheral portions of working memory, Pverb and Pobj. This basic description of the method is explained in a more mathematical form in Appendix B.
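The subtractions just described can be written as a few lines of Python. The sketch takes four k estimates (verbal and object memory, each in single- and dual-task conditions) and returns the components; the function and field names are our own, and the example inputs are hypothetical values chosen for round arithmetic, not results from any experiment.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Components:
    c_verb: float  # central slots holding verbal items in the dual task
    c_obj: float   # central slots holding objects in the dual task
    c: float       # total central capacity, C = Cverb + Cobj
    p_verb: float  # peripheral storage usable only for verbal items
    p_obj: float   # peripheral storage usable only for objects


def central_peripheral(k_verb_single: float, k_obj_single: float,
                       k_verb_dual: float, k_obj_dual: float) -> Components:
    # Objects lost when words must also be held: (C + Pobj) - (Cobj + Pobj) = Cverb
    c_verb = k_obj_single - k_obj_dual
    # Words lost when objects must also be held: (C + Pverb) - (Cverb + Pverb) = Cobj
    c_obj = k_verb_single - k_verb_dual
    c = c_verb + c_obj
    # Single-task memory is C plus the peripheral part for that material.
    p_verb = k_verb_single - c
    p_obj = k_obj_single - c
    return Components(c_verb, c_obj, c, p_verb, p_obj)


if __name__ == "__main__":
    # Hypothetical k values: verbal single 3.0, object single 3.5, verbal dual 2.5, object dual 2.75.
    print(central_peripheral(3.0, 3.5, 2.5, 2.75))
    # Components(c_verb=0.75, c_obj=0.5, c=1.25, p_verb=1.75, p_obj=2.25)
```

As a check, the components reproduce the dual-task inputs: Cverb+Pverb = 2.5 and Cobj+Pobj = 2.75.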

Figure 1.

A simple theoretical model of the distribution of working memory resources in the present tasks, including peripheral resources specific to memory of the verbal items (Pverb), peripheral resources specific to memory of the visual objects (Pobj), central resources devoted to verbal items during divided attention (Cverb), and central resources devoted to visual objects during divided attention (Cobj). In divided-attention situations, the number of items retained for modality x is Px+Cx, where x=verb or x=obj. In a single-modality-attention situation, the number of items retained is Px+C, where C= Cverb+Cobj.

CONTEXT FOR THE PRESENT ANALYSIS

We first discuss the relevant literature broadly, and then the immediate precursor to our study. In an early popular model of working memory (e.g., Baddeley, 1986), information was said to be stored in code-specific buffers, the phonological loop and the visuo-spatial sketchpad. Cowan (1988) proposed that information was stored as the activated portion of long-term memory, which could exhibit feature-specific interference that would make storage seem domain-specific like Baddeley's buffers. Additionally, though, Cowan suggested that there was some storage that took place in the focus of attention, regardless of the domain of coding. The peripheral storage in the present work might map onto the activated portion of long-term memory and the central storage might map onto the focus of attention. Note that this mapping depends, though, on the focus of attention being voluntarily directed. The mapping theoretically could break down if, for some reason, there is domain-specific information in the focus of attention that cannot be traded off between domains. For example, it is possible that some stimuli linger for a while in the focus of attention even after they become irrelevant to the task (Oberauer, 2002). To the extent that the allocation of attention is beyond the participant's control, any such information uncontrollably in focus contributes to the peripheral components of our model, not to the central component.

Other models also have proposed some type of central store in addition to some type of peripheral storage (e.g., Atkinson & Shiffrin, 1968; Baddeley, 2000; Baddeley & Hitch, 1974; Broadbent, 1958; Oberauer, 2002). The balance between peripheral and central storage, however, remains unclear. Cowan (2001) suggested that when mnemonic strategies like rehearsal and grouping are prevented and sensory memory is eliminated, adults are restricted to central storage and can remember only about 3-5 items, not about seven as Miller (1956) famously proposed. Oberauer et al. (2012) suggested that this capacity limitation does not reflect the focus of attention, and that only one item at a time is in the focus of attention.

An important reason to raise the possibility of central storage is that peripheral storage cannot, in principle, include the links between very different types of material such as spatial and verbal materials. This reasoning led Baddeley (2000) to add to his model a central component called the episodic buffer, with a function that includes storage of the binding between the different attributes of a stimulus.

One can distinguish between two questions about any particular store: how many objects it can include, and how many attribute bindings it can include. The answer to these two questions is the same, according to Luck and Vogel (1997), based on their seminal task in which an array of objects is followed by a probe to be judged the same as the array or differing from it in one feature. Specifically, they found that objects with multiple features (e.g., color, orientation, length, and presence or absence of a gap within a bar) are retained in an all-or-none fashion, such that items that are remembered include all of their features. This result is, however, seemingly at odds with some subsequent work. Wheeler and Treisman (2002) found that the proportion correct for detecting feature changes was, under some circumstances, higher than for the binding between features. Vul and Rich (2010) found that features are remembered independently across objects, with the probability of remembering the binding between two features no higher than one would expect based on the probability that the two features were by chance retained for the same object (see also Bays, Wu, & Husain, 2011; Fougnie & Alvarez, 2011). Cowan, Blume, and Saults (2013) replicated that effect with the finding that people retained about 3 array items in various conditions, but that if retention of two attributes (color and shape) was required, some of the objects were retained with only color or only shape. (This model was confirmed and extended to more features by Oberauer & Eichenberger, 2013.) In some of our experiments we used items with two attributes (colored shapes, and digits spoken in different voices) in order to compare the means of storage of the attributes, and of the binding of attributes. With our new analysis method to be presented, we aim to examine the retention of both attributes and their binding in both peripheral and central stores.
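The independence benchmark attributed to Vul and Rich (2010) above can be illustrated with a small Python sketch. If color and shape are each retained independently, both features of the same object survive with probability equal to the product of the single-feature rates; under the all-or-none view of Luck and Vogel (1997), the two rates would instead be equal and joint retention would equal that common rate. The retention rates below are hypothetical, and the Monte Carlo function is merely a check on the product rule, not the analysis used in either study.

```python
import random


def expected_joint_if_independent(p_color: float, p_shape: float) -> float:
    """Probability that both features of one object survive if each survives independently."""
    return p_color * p_shape


def simulate_joint(p_color: float, p_shape: float,
                   n_objects: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo check: keep each feature of each object independently."""
    rng = random.Random(seed)
    both = 0
    for _ in range(n_objects):
        color_kept = rng.random() < p_color
        shape_kept = rng.random() < p_shape
        if color_kept and shape_kept:
            both += 1
    return both / n_objects


if __name__ == "__main__":
    # Hypothetical single-feature retention rates, not data from any study.
    p_color, p_shape = 0.6, 0.5
    print(expected_joint_if_independent(p_color, p_shape))  # 0.3
    print(simulate_joint(p_color, p_shape))  # close to the independence value
```

An observed joint rate near the product supports independent feature storage; a joint rate near the single-feature rate supports all-or-none object storage.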

Immediate Precursor to the Present Study: Saults & Cowan (2007)

One study (Saults & Cowan, 2007) did produce estimates of the number of items in working memory in a dual task. Because the present study was designed to resolve issues remaining after that study, we describe it in some detail. In five experiments, spoken digits were presented concurrently from four loudspeakers in different voices to avoid rehearsal, while an array of several colored squares was also presented. Either the visual or the spoken array was then repeated as a probe. The probe was either identical to the first array of that modality or differed from it in the identity of one item. The task was to indicate whether the studied array and the probe array were the same or different. In some trial blocks, the spoken digits were to be ignored; in others, the visual items were to be ignored; and in still others, both modalities were to be attended. On some trials, a difference consisted of a new feature (e.g., a red item where a green item had been, when no other studied item on that trial was red). On other trials, a difference consisted of a changed but familiar item (e.g., a red item where a green item had been, when another array item was also red).

In some of the experiments of Saults and Cowan (2007), a pattern mask was presented to eliminate any residual sensory memory. The mask appeared after an interval shown to be long enough to encode the stimuli (cf. Jolicoeur & Dell'Acqua, 1998; Vogel, Woodman, & Luck, 2006). Some evidence suggests that sensory memory ends within several hundred milliseconds (e.g., Efron, 1970a, 1970b, 1970c; Massaro, 1975; Sperling, 1960). Other evidence, however, points to modality-specific memory that might be considered sensory and that lasts several seconds, both in audition (e.g., Cowan, Lichty, & Grove, 1990; Darwin et al., 1972) and in vision (e.g., Phillips, 1974; Sligte, Scholte, & Lamme, 2008). Massaro referred to a brief, literal sensory memory and a longer, synthesized sensory memory. Cowan (1988) similarly distinguished between two phases of sensory memory in every modality: a brief literal afterimage, followed by a second, more processed sensory memory. The mask was included to eliminate this longer form of sensory memory (Massaro's synthesized sensory memory).

In some experiments of Saults and Cowan (2007), stimuli in the two modalities were presented one after the other in order to eliminate any interference at the time of encoding. In most experiments, digit-location associations and color-location associations could be used to carry out the task; in the last experiment in the series, though, the only possible information that could be used was digit-voice associations and color-location associations (because the digits were spatially shuffled between study and test).

The results of all of the experiments of Saults and Cowan (2007) showed a tradeoff between modalities. In the experiments with masks to eliminate sensory memory, people could remember about 4 visual items or 2 acoustic items in unimodal attention conditions, and about 4 items total in the bimodal condition (about 3 visual and 1 acoustic).

THE PRESENT EXPERIMENTS

The present work follows up on Saults and Cowan (2007) and aims to improve on that study in several ways. (1) First, we presented only a single item as a probe in order to limit the number of decisions that the participant must make (Luck & Vogel, 1997). (2) Second, we more consistently incorporated what we considered to be the best practices of Saults and Cowan: use of a mask, and sequential rather than concurrent presentation of the two stimulus sets to be remembered. (3) Third, Saults and Cowan found that only about two items could be remembered from concurrent arrays of sounds. We therefore presented the verbal stimulus sets as sequences rather than arrays, and we incorporated articulatory suppression (repetition of the word “the” during stimulus presentation and retention intervals) to prevent rehearsal. The use of verbal sequences avoids the perceptual bottleneck of encoding concurrent sounds into working memory. (4) Fourth, we separated the procedures so that, on a given trial, a participant had to remember either a single kind of feature of each object or the binding between features of each object. We did this to simplify the task so that the existing performance formulas (Appendix A) would apply.

The other main change has to do with the manner in which the results were evaluated. Saults and Cowan (2007) assumed that, because unimodal visual capacity and bimodal capacity both were about 4 items, this must measure the capacity of the focus of attention. We now believe that logic to be faulty. Using the method of analysis of bimodal results described above and in Appendix B, we estimate that the peripheral storage of visual information included 2 to 3 items, the peripheral storage of acoustic information from spatial arrays of sounds included at most about 1 item, and the central storage component included only 1 to 2 items. Thus, the analysis method made a big difference even in the understanding of the results of Saults and Cowan.

Overview of the Present Series of Experiments

Nine experiments were conducted to test the generality of the estimates obtained from Saults and Cowan (2007) according to our re-analysis of their data. The experiments can be divided into four sets based on the nature of the probe and conditions. Conditions in the first three sets of experiments are described in Tables 1-3, respectively. First, in Experiments 1a and 1b (see Table 1), the stimuli were the simplest we could devise but, as a result, the modalities were not closely comparable. Specifically, the acoustic probe (test) stimulus was a single digit (with no indication of serial position in the list), whereas the visual probe stimulus was a single item presented in the same spatial location as the corresponding array item. Different formulas were therefore used to estimate items in working memory in the two modalities. In these experiments, effects of rehearsal were also investigated by comparing suppression with tapping, but no consistent difference was observed.

Table 1.

Accuracy and working memory parameter values in experiments with a single acoustic probe (no position cue) or with a visual probe presented in a target location.

| Condition | Unimodal Digits h | Unimodal Digits fa | Unimodal Objects h | Unimodal Objects fa | Bimodal Digits h | Bimodal Digits fa | Bimodal Objects h | Bimodal Objects fa | C | Pverb | Pobj |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Experiment 1a | | | | | | | | | | | |
| Articulatory Suppression | | | | | | | | | | | |
| M | 0.87 | 0.09 | 0.82 | 0.24 | 0.87 | 0.11 | 0.79 | 0.32 | 0.68 | 3.75 | 2.23 |
| SEM | 0.03 | 0.02 | 0.03 | 0.03 | 0.02 | 0.02 | 0.04 | 0.03 | 0.27 | 0.29 | 0.34 |
| Tapping | | | | | | | | | | | |
| M | 0.97 | 0.06 | 0.89 | 0.23 | 0.96 | 0.08 | 0.82 | 0.33 | 0.93 | 3.73 | 2.39 |
| SEM | 0.01 | 0.01 | 0.02 | 0.02 | 0.01 | 0.01 | 0.03 | 0.04 | 0.21 | 0.20 | 0.24 |
| Experiment 1b | | | | | | | | | | | |
| Articulatory Suppression | | | | | | | | | | | |
| M | 0.77 | 0.21 | 0.81 | 0.30 | 0.76 | 0.24 | 0.75 | 0.37 | 0.87 | 2.62 | 1.63 |
| SEM | 0.04 | 0.02 | 0.03 | 0.04 | 0.03 | 0.03 | 0.03 | 0.03 | 0.39 | 0.39 | 0.36 |
| Tapping | | | | | | | | | | | |
| M | 0.87 | 0.15 | 0.83 | 0.26 | 0.83 | 0.14 | 0.83 | 0.34 | 0.30 | 3.83 | 2.56 |
| SEM | 0.02 | 0.02 | 0.03 | 0.03 | 0.02 | 0.02 | 0.03 | 0.03 | 0.24 | 0.23 | 0.24 |

Note. h = hit rate; fa = false-alarm rate. C = items in central storage; Pverb = items in peripheral verbal storage; Pobj = objects in peripheral visual storage. Parameter values that are above zero by t test, p<.05, are shown in bold.

Table 3.

Accuracy and working memory parameter values in Experiments 3a, 3b, and 4, with a single acoustic probe with no position cue or a visual probe presented at the center of the screen.

| Condition | Unimodal Digits h | Unimodal Digits fa | Unimodal Objects h | Unimodal Objects fa | Bimodal Digits h | Bimodal Digits fa | Bimodal Objects h | Bimodal Objects fa | C | Pverb | Pobj |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Experiment 3a (Changes in Digit-Position or Color-Location Binding) | | | | | | | | | | | |
| No Mask | | | | | | | | | | | |
| M | 0.72 | 0.13 | 0.85 | 0.24 | 0.67 | 0.17 | 0.74 | 0.37 | 0.73 | 0.73 | 0.96 |
| SEM | 0.03 | 0.02 | 0.03 | 0.04 | 0.04 | 0.04 | 0.03 | 0.05 | 0.19 | 0.15 | 0.20 |
| Experiment 3b (Changes in Digit-Position or Color-Location Binding) | | | | | | | | | | | |
| Same-Modality Mask | | | | | | | | | | | |
| M | 0.63 | 0.22 | 0.75 | 0.23 | 0.64 | 0.26 | 0.65 | 0.44 | 0.61 | 0.52 | 0.87 |
| SEM | 0.03 | 0.02 | 0.03 | 0.02 | 0.03 | 0.03 | 0.04 | 0.03 | 0.18 | 0.17 | 0.16 |
| No Mask | | | | | | | | | | | |
| M | 0.70 | 0.17 | 0.77 | 0.23 | 0.69 | 0.19 | 0.64 | 0.39 | 0.48 | 0.82 | 1.09 |
| SEM | 0.03 | 0.03 | 0.02 | 0.03 | 0.03 | 0.03 | 0.03 | 0.03 | 0.22 | 0.20 | 0.20 |
| Different-Modality Mask | | | | | | | | | | | |
| M | 0.73 | 0.17 | 0.75 | 0.24 | 0.63 | 0.22 | 0.64 | 0.39 | 0.69 | 0.78 | 0.75 |
| SEM | 0.03 | 0.02 | 0.03 | 0.03 | 0.03 | 0.03 | 0.04 | 0.03 | 0.20 | 0.17 | 0.17 |
| Experiment 4 (Changes to a New Item) | | | | | | | | | | | |
| Same-Modality Mask | | | | | | | | | | | |
| M | 0.96 | 0.08 | 0.94 | 0.15 | 0.91 | 0.15 | 0.88 | 0.23 | 0.80 | 2.87 | 2.51 |
| SEM | 0.01 | 0.02 | 0.02 | 0.03 | 0.02 | 0.03 | 0.03 | 0.03 | 0.25 | 0.23 | 0.24 |
| No Mask | | | | | | | | | | | |
| M | 0.98 | 0.06 | 0.95 | 0.17 | 0.95 | 0.12 | 0.85 | 0.26 | 0.68 | 3.08 | 2.60 |
| SEM | 0.01 | 0.01 | 0.01 | 0.04 | 0.01 | 0.03 | 0.03 | 0.03 | 0.25 | 0.24 | 0.25 |
| Different-Modality Mask | | | | | | | | | | | |
| M | 0.95 | 0.07 | 0.91 | 0.12 | 0.97 | 0.13 | 0.86 | 0.21 | 0.78 | 2.94 | 2.69 |
| SEM | 0.01 | 0.02 | 0.02 | 0.02 | 0.01 | 0.03 | 0.03 | 0.03 | 0.25 | 0.23 | 0.22 |

Note. h = hit rate; fa = false-alarm rate. C = items in central storage; Pverb = items in peripheral verbal storage; Pobj = objects in peripheral visual storage. Parameter values that are above zero by t test, p<.05, are shown in bold.

In Experiments 2a-2c (see Table 2), a serial position cue was added to the verbal probe so that the task became more directly comparable in the two modalities. Moreover, given that we observed a smaller central component than Saults and Cowan (2007), we considered whether aspects of the sequential presentation of verbal items were responsible (in Saults & Cowan, the items were presented concurrently as acoustic arrays). Specifically, participants might combine temporally adjacent items to form larger chunks (Miller, 1956). To discourage such chunking, and thus make retention more dependent on attention, we introduced semantic-category and voice changes within lists. We tried lists of words from different semantic categories, reasoning that words from the same semantic category might be chunked together (e.g., McElree, 1998; Miller, 1956), which could reduce the load on central processing. We also used lists with voice changes in some conditions of Experiment 2b. Goldinger, Pisoni, and Logan (1991) found that, under certain circumstances, lists of words spoken in different voices were harder to retain (i.e., an effect of speaker variability). This finding may occur because the words in a variable-voice list are not perceived as falling within a single perceptual stream (Bregman, 1990; Macken, Tremblay, Houghton, Nicholls, & Jones, 2003). Despite these attempts, the same basic pattern of results was obtained in all conditions. Finally, we considered that the peripheral storage upon which participants relied might be a form of auditory sensory memory that somehow survived the mask. To rule this out, the acoustic stimuli were replaced by printed lists of verbal items in Experiment 2c. This manipulation, however, failed to reduce peripheral storage or increase central storage; the pattern of results remained basically the same.

Table 2.

Accuracy and working memory parameter values in experiments with a single verbal probe with a serial position cued, or a visual probe presented in a target location.

| Condition | Unimodal Words h | Unimodal Words fa | Unimodal Objects h | Unimodal Objects fa | Bimodal Words h | Bimodal Words fa | Bimodal Objects h | Bimodal Objects fa | C | Pverb | Pobj |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Experiment 2a | | | | | | | | | | | |
| Same Category Within a Spoken List | | | | | | | | | | | |
| M | 0.93 | 0.07 | 0.91 | 0.17 | 0.86 | 0.11 | 0.87 | 0.31 | 1.15 | 2.29 | 1.81 |
| SEM | 0.02 | 0.02 | 0.02 | 0.04 | 0.03 | 0.03 | 0.02 | 0.05 | 0.30 | 0.29 | 0.29 |
| Different Categories Within a Spoken List | | | | | | | | | | | |
| M | 0.93 | 0.13 | 0.92 | 0.20 | 0.92 | 0.09 | 0.81 | 0.28 | 0.67 | 2.54 | 2.23 |
| SEM | 0.02 | 0.03 | 0.02 | 0.04 | 0.02 | 0.02 | 0.04 | 0.04 | 0.29 | 0.28 | 0.29 |
| Experiment 2b | | | | | | | | | | | |
| Same Voice and Category Within a Spoken List | | | | | | | | | | | |
| M | 0.90 | 0.09 | 0.88 | 0.23 | 0.91 | 0.09 | 0.83 | 0.31 | 0.46 | 2.79 | 2.11 |
| SEM | 0.03 | 0.02 | 0.04 | 0.04 | 0.03 | 0.04 | 0.05 | 0.05 | 0.30 | 0.35 | 0.38 |
| Different Voices and Categories Within a Spoken List | | | | | | | | | | | |
| M | 0.90 | 0.11 | 0.90 | 0.23 | 0.91 | 0.09 | 0.88 | 0.27 | 0.14 | 3.04 | 2.54 |
| SEM | 0.03 | 0.04 | 0.04 | 0.04 | 0.02 | 0.02 | 0.03 | 0.06 | 0.29 | 0.26 | 0.32 |
| Experiment 2c | | | | | | | | | | | |
| Same Category Within a Printed List | | | | | | | | | | | |
| M | 0.93 | 0.12 | 0.94 | 0.18 | 0.80 | 0.17 | 0.89 | 0.23 | 1.13 | 2.12 | 1.91 |
| SEM | 0.02 | 0.02 | 0.02 | 0.03 | 0.03 | 0.03 | 0.03 | 0.03 | 0.32 | 0.29 | 0.29 |
| Different Categories Within a Printed List | | | | | | | | | | | |
| M | 0.90 | 0.14 | 0.92 | 0.19 | 0.81 | 0.16 | 0.88 | 0.27 | 0.94 | 2.13 | 1.98 |
| SEM | 0.03 | 0.04 | 0.02 | 0.04 | 0.05 | 0.03 | 0.03 | 0.04 | 0.37 | 0.33 | 0.32 |

Note. h = hit rate; fa = false-alarm rate. C = items in central storage; Pverb = items in peripheral verbal storage; Pobj = objects in peripheral visual storage. Parameter values that are above zero by t test, p<.05, are shown in bold.

Experiments 3a-3b and 4 (see Table 3) were conducted in order to apply the central / peripheral storage distinction to the case of attribute binding information. This purpose required some changes in design, the key one being the presentation of items with two physical attributes each (object color and shape; spoken word identity and voice). Also, the probe position cues were omitted; the spoken digit probe was presented without a serial position cue and the visual probe (on other trials) was presented at the center of the screen, not in the spatial location of one array item. This ensured that position information could not be used to reconstruct the binding between attributes.

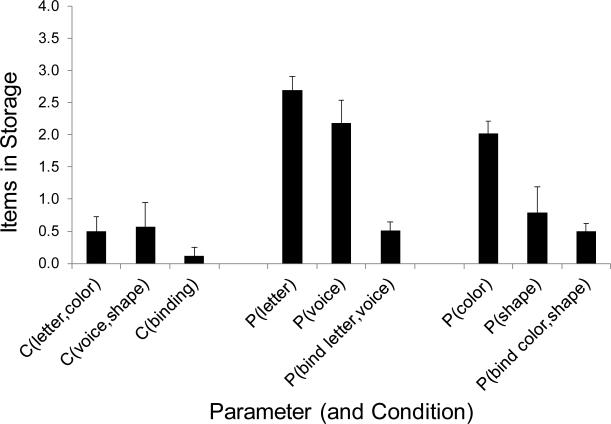

Finally, Experiment 5 was conducted to assess the generality of the findings of the previous experiments. It included both item and binding trial blocks in one experiment. It also examined the features underlying binding in more detail: not only memory for spoken words but also, separately, for the voices in which they were spoken, and not only memory for object colors but also, separately, for the shapes in which they were presented.

These experiments included 26 different combinations of experimental variables, each yielding estimates of central, verbal, and visual nonverbal working memory parameters. To preview, the two most notable findings are these. First, the estimate of items in the central resource, C, is consistently small, in contrast to the conclusions of Saults and Cowan (2007). Second, estimates of peripheral storage are larger for item-change tasks than for binding-change tasks. These results call for a theoretical modification of the view that individuals hold several items concurrently in the focus of attention continually from the time of the presentation of these items until the time of test (Cowan, 1988, 1999, 2001, 2005). We will argue that a multi-item focus does exist, but that it is not continually loaded with all the stored items during the retention interval. Support for the concept of working memory items both inside and outside of the focus of attention comes from recent neuroimaging work. That work showed stimulus-specific activation patterns for items to be considered for a current task (i.e., items in the focus of attention), but not for all items to be remembered (Lewis-Peacock, Drysdale, Oberauer, & Postle, 2012).

Fougnie and Marois (2011) also carried out a set of experiments similar to some of ours. They did not, however, modify the capacity formula in the manner we suggest, and they did not apply the formulas to separate central and peripheral components of storage. Moreover, they used articulatory suppression to prevent rehearsal in only one experiment. They observed more dual-task tradeoff when the task required feature binding than when it required only feature information. Their experiments in which feature binding was not required (their 3a and 3b), however, involved tonal stimuli, whereas their studies of memory for feature binding involved verbal acoustic stimuli. Using a wider variety of verbal stimuli, we find that the difference between attribute memory and binding memory lies not in the central component but primarily in the peripheral components.

EXPERIMENT SERIES 1: EFFECTS OF ARTICULATORY SUPPRESSION

Experiment 1a

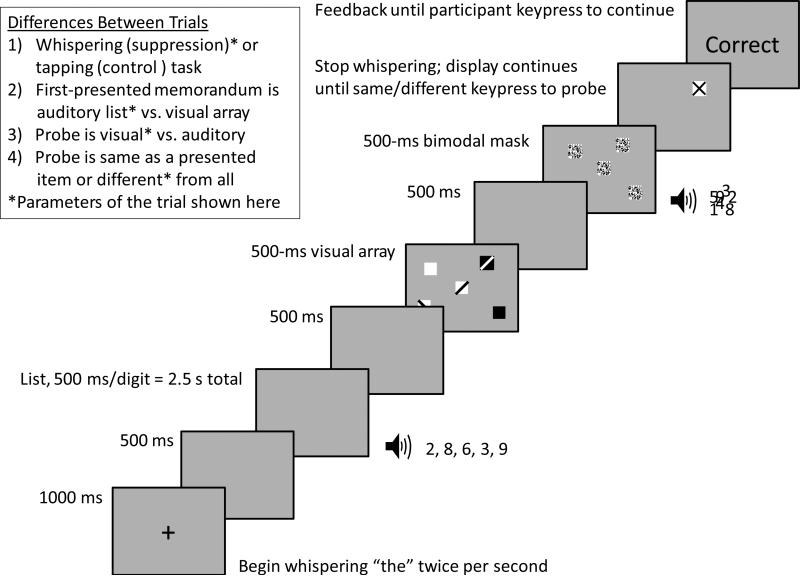

In this experiment, we used the stimulus arrangement that seemed simplest and most natural (Figure 2; Figure 3, top panel). Arrays of colored squares were combined with lists of spoken digits. The conditions included attention to visual stimuli only, acoustic stimuli only, or both modalities. Masks were presented in order to eliminate any residual sensory memory (Saults & Cowan, 2007). Then a probe was presented in an attended modality: either a single spoken digit or a colored square in the spatial location of one of the array items. The probe was always either identical to a studied item (in the visual case, the item that had occupied the probed location) or different from all of the studied items. During the trial, participants either articulated, to suppress any covert verbal rehearsal, or tapped a finger repeatedly, as a secondary-task control.

Figure 2.

A detailed illustration of a trial in Experiment 1a. The inset explains the different trial types in this experiment.

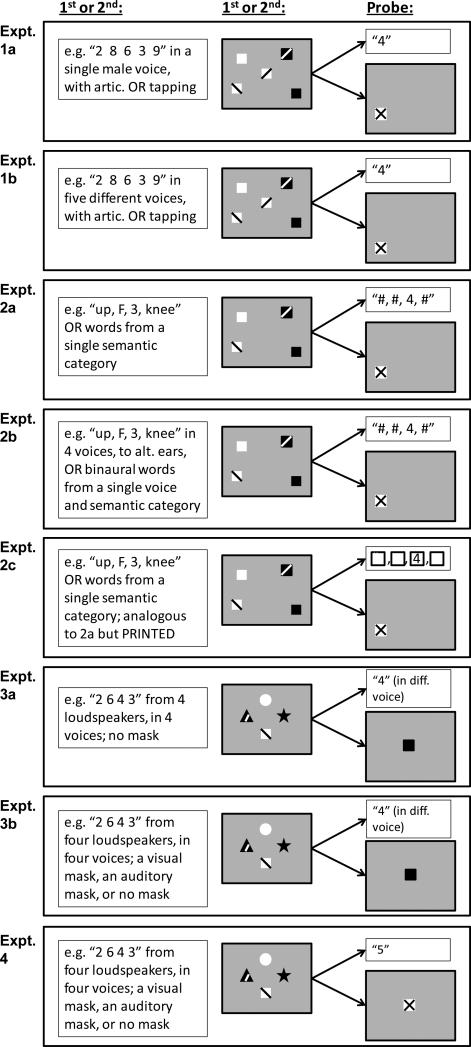

Figure 3.

Illustration of two change trials, one for each stimulus type, in Experiments 1-4. On half the trials the nonverbal visual display preceded the verbal display (unlike what is shown in the figure). There was a bimodal mask before the probe, except as otherwise stated. In bimodal trial blocks, the probe could be either verbal or nonverbal. Objects’ patterns within the arrays represent different colors.

Method

Participants

The participants were 24 college students (14 female) who received course credit for their participation. Two female participants were excluded because of equipment problems.

Apparatus and Stimuli

Auditory stimuli were the spoken digits 1-9, digitally recorded in an adult male voice. Recorded digits were temporally compressed to a maximum duration of 250 ms but presented at a pace of 2 digits per second. Temporal compression, ranging from 65% to 95% of each word's original duration, was accomplished without altering pitch using the software Praat (Boersma & Weenink, 2009). The auditory mask combined all digits with onsets aligned. Digits and mask were presented to each ear at an intensity of 65-75 dB(A) using Telephonics TDH-39 headphones. Visual stimuli were presented on a 17-inch cathode ray tube monitor (1024 by 768 pixels). Each visual study array was presented for 500 ms. It consisted of 5 squares whose colors were sampled without replacement from 10 colors (black, white, red, blue, green, yellow, orange, cyan, magenta, and dark-blue-green). Squares were randomly positioned on the screen as in Cowan et al. (2005). At a viewing distance of 50 cm, the array fell within a region of 9.8° horizontally and 7.3° vertically of visual angle. Squares 0.75° on a side were randomly placed within this region, except that the minimum center-to-center separation between squares was 2.0° and no square was located within 2.0° of the center of the array. Patterned masks consisted of identical multicolored squares in the same locations as the items in the studied array, also presented for 500 ms.
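The spatial constraints on the visual arrays can be made concrete with a short sketch. It makes three assumptions: the region is centered on the array center, whole squares (not just their centers) must fall inside it, and positions are drawn by simple rejection sampling. The original presentation software and its placement algorithm are not described here, so the function names and the sampling scheme are hypothetical. A helper also converts visual angle to physical size at the 50-cm viewing distance using size = 2d·tan(θ/2).

```python
import math
import random


def deg_to_cm(angle_deg: float, distance_cm: float = 50.0) -> float:
    """Physical extent (cm) that subtends angle_deg at the given viewing distance."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


def place_squares(n: int = 5, width_deg: float = 9.8, height_deg: float = 7.3,
                  square_deg: float = 0.75, min_sep_deg: float = 2.0,
                  center_clear_deg: float = 2.0, seed=None,
                  max_draws: int = 1000, max_restarts: int = 100):
    """Return n (x, y) square centers in degrees, with the origin at the array center.

    Hypothetical rejection-sampling sketch: draw a candidate center and keep it only
    if it lies at least center_clear_deg from the array center and at least
    min_sep_deg (center to center) from every square already placed.
    If the draws run out, start the whole array over.
    """
    rng = random.Random(seed)
    # Keep the whole square inside the region, not just its center.
    x_lim = width_deg / 2 - square_deg / 2
    y_lim = height_deg / 2 - square_deg / 2
    for _ in range(max_restarts):
        centers = []
        for _ in range(max_draws):
            if len(centers) == n:
                break
            x, y = rng.uniform(-x_lim, x_lim), rng.uniform(-y_lim, y_lim)
            if math.hypot(x, y) < center_clear_deg:
                continue
            if all(math.hypot(x - cx, y - cy) >= min_sep_deg for cx, cy in centers):
                centers.append((x, y))
        if len(centers) == n:
            return centers
    raise RuntimeError("could not satisfy the spacing constraints")


print(round(deg_to_cm(9.8), 2), round(deg_to_cm(7.3), 2), round(deg_to_cm(0.75), 2))
print(place_squares(seed=0))
```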

Procedure

Each participant was tested individually in a quiet room. An experimenter was present throughout each session. Visual, auditory, and combined bimodal memory capacities were tested using a general procedure similar to that of Experiment 4 of Saults and Cowan (2007), with a few important differences. Most notably, in the current study, we presented sequences of 5 spoken digits before or after arrays of 5 colored squares. The probe was a single spoken digit or colored square that either matched a study item or differed from all of the study items. One of two secondary tasks, manual tapping or articulatory suppression, was performed during encoding and retention of the study items.

All conditions were blocked and their order counterbalanced between participants. Each session was divided into two subsessions, one with each secondary task, tapping or articulatory suppression; half had tapping first. Each of these subsessions was sub-divided into three blocks of memory load conditions: remember visual, remember auditory, and remember both. Each participant did these three memory load blocks in the same order within each secondary task subsession; the six possible orders of memory load were distributed equally across participants who did each order of the secondary tasks.
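This counterbalancing scheme crosses the two secondary-task orders with the six orders of the three memory-load blocks, giving 12 session sequences. The minimal sketch below assumes that participants are assigned to these sequences in simple rotation; the actual assignment procedure is not described, and the names are hypothetical. Under that assumption, a sample of 24 participants fills each sequence twice, and half of the participants begin with tapping.

```python
from collections import Counter
from itertools import permutations

LOAD_BLOCKS = ("remember visual", "remember auditory", "remember both")
SECONDARY_ORDERS = (("tapping", "articulatory suppression"),
                    ("articulatory suppression", "tapping"))


def session_plan(participant_index: int):
    """Ordered (secondary task, memory-load block) pairs for one participant.

    Hypothetical rotation: the 2 secondary-task orders are crossed with the
    6 permutations of the load blocks, and the same load order is reused in
    both secondary-task subsessions.
    """
    load_orders = list(permutations(LOAD_BLOCKS))  # 6 orders
    cells = [(sec, loads) for sec in SECONDARY_ORDERS for loads in load_orders]  # 12 cells
    secondary, loads = cells[participant_index % len(cells)]
    return [(task, block) for task in secondary for block in loads]


plans = [session_plan(i) for i in range(24)]
print(Counter(plan[0][0] for plan in plans))  # which secondary task came first
```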

Each secondary task subsession began with general instructions about the memory task and the secondary task, as well as practice doing the secondary task at the proper rate. For the articulatory task, participants practiced whispering “the” in time with a click from the computer presented twice per second for 30 seconds. For the tapping task, participants practiced tapping the control key in time with a click presented twice per second for 30 seconds. During the memory task, they were instructed to begin the secondary task (tap or whisper “the”) when they saw the fixation cross, and stop when they saw or heard the probe.

Next, the participant began the first block of memory trials. An illustration of a trial is shown in Figure 2, which also summarizes the main differences between trial types (inset). All stimuli were presented on a screen with a uniform medium gray background. Each trial began with a fixation cross presented in the center of the screen for 1000 ms, followed by a 500-ms blank screen. This was followed by an array of 5 different colored squares lasting 500 ms and a sequence of 5 different digits presented 2 per second over headphones. The two modalities were separated by an interstimulus interval (ISI) of 500 ms. One modality was completed before the other started (Figure 2), and each modality occurred first on half of the trials. A blank screen appeared for 500 ms after the study items. Then five multicolored mask squares appeared in the same locations as the squares in the study array. At the same time, an auditory mask consisting of the combined digits was presented via headphones. Thus, even though the target memoranda were presented consecutively by modality (all auditory before all visual or vice versa), the mask was presented in both modalities at once. As a result, the target-mask interval differed between the two modalities on a trial, but the timing was counterbalanced across trials. We considered this arrangement important because it avoided separate, distracting switches of attention from one mask modality to the other.
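Because a single mask followed both study sets, the interval between each study set and the mask depended on which modality came first. The sketch below lays out one trial's timeline from the durations given above. It relies on two simplifying assumptions not stated in the text. First, the 5-digit sequence is taken to occupy five 500-ms slots (2 digits per second). Second, the 500-ms ISI is measured from the end of the first set to the start of the second. The constant and function names are hypothetical.

```python
FIXATION_MS = 1000
PRE_STUDY_BLANK_MS = 500
ARRAY_MS = 500
DIGIT_SLOT_MS = 500  # 2 digits per second
N_DIGITS = 5
ISI_MS = 500
POST_STUDY_BLANK_MS = 500
MASK_MS = 500


def trial_timeline(visual_first: bool):
    """List of (event, onset_ms, offset_ms) tuples for one bimodal trial.

    Assumption: the digit list occupies N_DIGITS slots of DIGIT_SLOT_MS each.
    """
    events = []
    clock = 0

    def add(label, duration):
        nonlocal clock
        events.append((label, clock, clock + duration))
        clock += duration

    add("fixation", FIXATION_MS)
    add("blank", PRE_STUDY_BLANK_MS)
    study_sets = [("visual array", ARRAY_MS), ("digit list", N_DIGITS * DIGIT_SLOT_MS)]
    if not visual_first:
        study_sets.reverse()
    add(*study_sets[0])
    add("ISI", ISI_MS)
    add(*study_sets[1])
    add("blank", POST_STUDY_BLANK_MS)
    add("bimodal mask", MASK_MS)
    events.append(("probe", clock, None))  # stays up until the participant responds
    return events


def study_to_mask_interval(events, study_label):
    """Time from the offset of a study set to the onset of the mask."""
    study_offset = next(off for label, _, off in events if label == study_label)
    mask_onset = next(on for label, on, _ in events if label == "bimodal mask")
    return mask_onset - study_offset


for visual_first in (True, False):
    events = trial_timeline(visual_first)
    print("visual first" if visual_first else "auditory first",
          study_to_mask_interval(events, "visual array"),
          study_to_mask_interval(events, "digit list"))
```

Under these assumptions, the array-to-mask interval is 3500 ms when the visual array comes first and 500 ms when it comes second. For the digit list, the corresponding intervals are 1500 ms when it comes first and 500 ms when it comes second.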

Immediately after the 500-ms mask, a probe was presented. The auditory probe was a spoken digit identical to one in the study list or different from any in the study list. When an auditory probe occurred, a “?” appeared in the center of the screen. The visual probe was a square in the same location and the same color as a study square or in the same location as a square but a different color than any square in the study array. The participant was to press the “S” key if the probe was the same as a study item and the “D” key if the probe was different from all of the study items. The “?” or probe square remained on the screen until a response was recorded. Then participants saw “Correct” or “Incorrect” as feedback in the center of screen until they pressed “Enter” to begin the next trial.

For each subsession (tapping or articulatory suppression), there were three blocks of memory trials. Each visual-load block consisted of 8 practice and 40 experimental trials, in which participants were instructed to remember only the colored squares and were always tested with a probe square. Analogously, each auditory-load block consisted of 8 practice and 40 experimental trials, in which participants were instructed to remember only the spoken digits and were always tested with a probe digit. Finally, each bimodal-load block consisted of 16 practice and 80 experimental trials, in which participants were instructed to remember both the colored squares and the spoken digits. In these blocks, there were equal numbers of auditory- and visual-probe trials, randomly intermixed. In every block of trials, half of the trials for each probe modality and presentation order (visual-first or auditory-first) were change (different) trials. In experimental blocks with auditory probes, the probe digit in no-change (same) trials occurred equally often in each serial position of the study list.
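These trial counts imply a fully crossed design within each block. The minimal sketch below shows one way to generate such a block. It assumes fixed cell counts followed by random shuffling, because the text does not say how trial orders were generated, and the names are hypothetical. In the 80-trial bimodal block, each of the eight combinations of probe modality, presentation order, and change type receives 10 trials. Each serial position then serves as the matching position on 4 auditory no-change trials.

```python
import random
from collections import Counter
from itertools import product

ORDERS = ("visual-first", "auditory-first")
N_POSITIONS = 5  # serial positions in the spoken digit list


def make_block(probe_modalities, n_trials, seed=None):
    """Balanced, shuffled list of trial specifications for one block.

    Crosses probe modality x presentation order x change/no-change equally.
    For auditory no-change trials, the serial position of the matching digit
    is cycled so that every position is probed equally often.
    """
    cells = list(product(probe_modalities, ORDERS, (False, True)))
    per_cell, remainder = divmod(n_trials, len(cells))
    if remainder or ("auditory" in probe_modalities and per_cell % N_POSITIONS):
        raise ValueError("trial count does not allow full balancing")
    trials = []
    for modality, order, change in cells:
        for i in range(per_cell):
            trial = {"probe": modality, "order": order, "change": change}
            if modality == "auditory" and not change:
                trial["probe_position"] = i % N_POSITIONS + 1
            trials.append(trial)
    random.Random(seed).shuffle(trials)
    return trials


bimodal = make_block(("auditory", "visual"), 80, seed=1)
print(Counter(t["probe_position"] for t in bimodal if "probe_position" in t))
```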

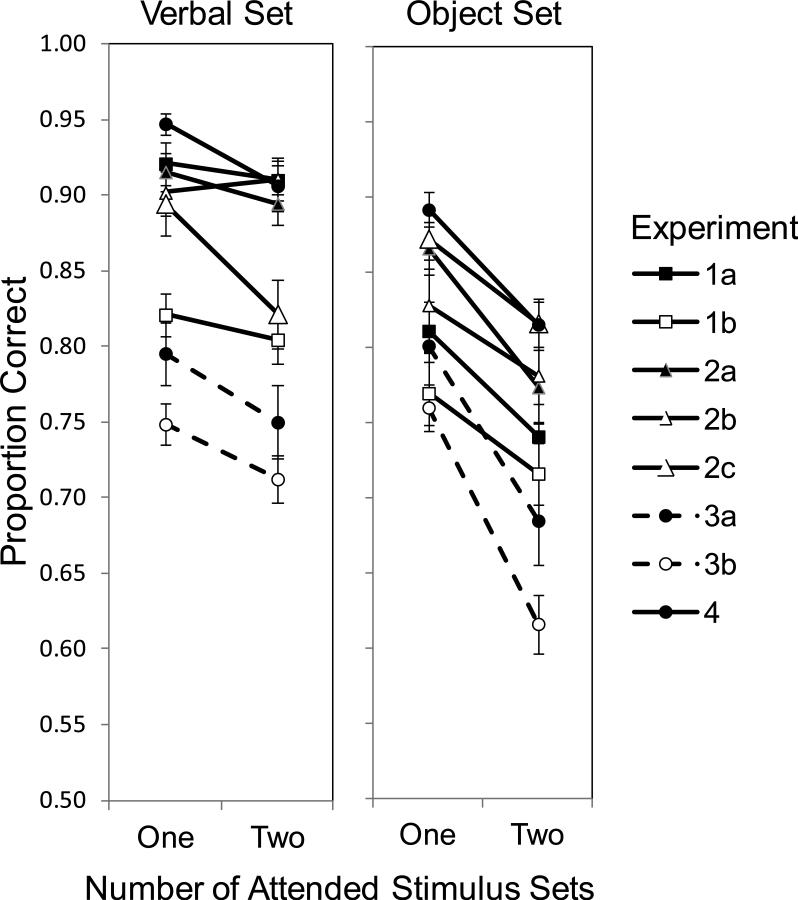

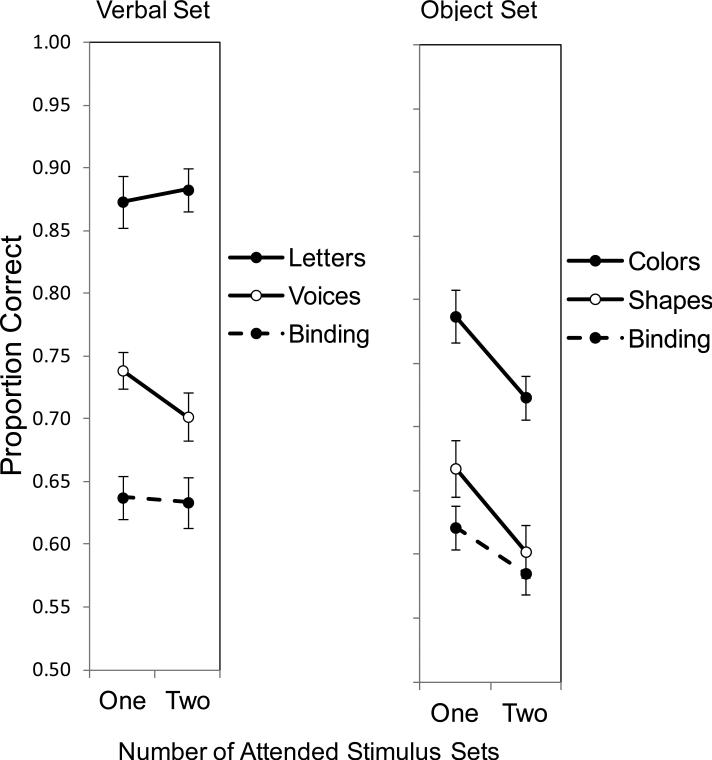

Results and Discussion

Hit and false-alarm rates for each condition are shown in Table 1. Figure 4 shows the unimodal and bimodal proportions correct for verbal and object sets, collapsed across other conditions, along with those of the next seven experiments. Performance was clearly diminished in the bimodal conditions relative to the unimodal conditions. The loss was modest for verbal items in Experiment 1a and more notable for nonverbal objects, in support of the claim of an asymmetry in dual-task tradeoffs (Morey & Mall, 2012; Morey, Morey, van der Reijden, & Holweg, 2013; Vergauwe, Barrouillet, & Camos, 2010).

Figure 4.

Mean proportion correct across conditions in each of the first eight experiments (graph parameter). The left panel shows the tests on verbal items and the right panel shows the tests on nonverbal objects. On the X axis is the number of stimulus sets attended: one (unimodal) versus two (bimodal) conditions. Error bars are standard errors.

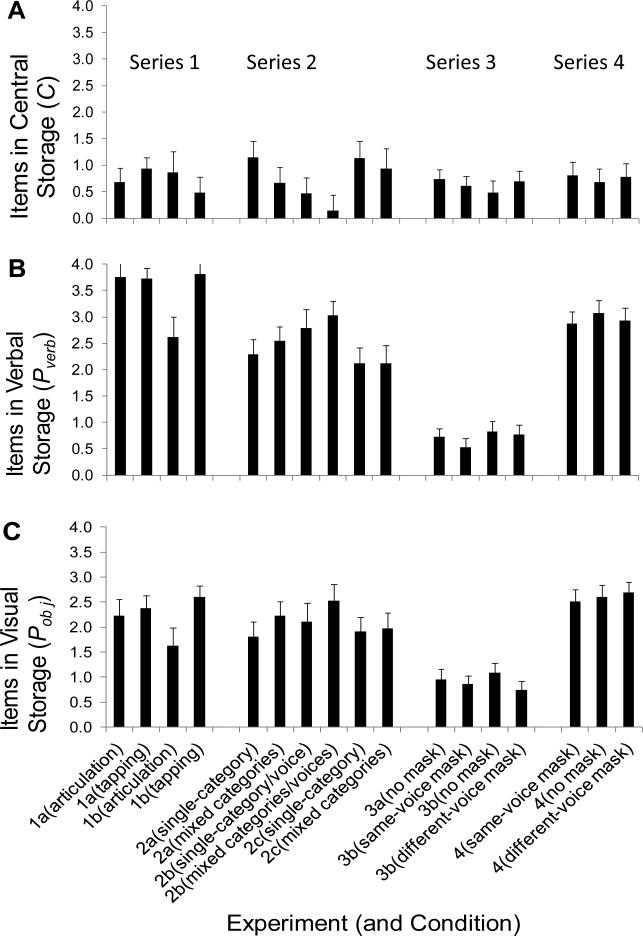

The verbal and object results in this experiment had to be analyzed with different formulas (Appendix A). In the verbal case, the probe could match any list item, whereas in the object case, memory for a specific item was probed. The central and peripheral storage parameters are shown both in Table 1 and in Figure 5. The central parameter was less than 1 item in both the tapping and suppression conditions, and the auditory and visual peripheral storage parameters were both several items in both conditions. Articulation versus tapping made no statistical difference in t tests for the C, Pverb, and Pobj parameters, but all six means were significantly above zero by t test (as shown in Table 1).
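The two kinds of probe call for different capacity formulas. As a hedged sketch, the block below uses the two formulas most commonly applied in this situation, which are not necessarily the exact Appendix A versions. For a probe presented at a studied location, k = N(h - fa) (Cowan, 2001). For a probe that may match any studied item, k = N(h - fa)/(1 - fa), the form usually attributed to Pashler (1988). The second formula follows from two assumptions. A participant holding k of N items recognizes a matching probe with probability k/N and otherwise guesses "same" at rate g. A new probe can never be recognized as new, so fa = g and h = k/N + (1 - k/N)g. The block applies these formulas to group means from Table 1 (Experiment 1a, articulatory suppression). The results are only approximate, because the tabled parameters are presumably averages of individual estimates rather than estimates computed from averaged rates.

```python
def k_location_probe(hits: float, false_alarms: float, set_size: int) -> float:
    """Cowan's k for a single probe tied to one studied item (e.g., its location).

    Model: h = k/N + (1 - k/N) * g and fa = (1 - k/N) * g, so h - fa = k/N.
    """
    return set_size * (hits - false_alarms)


def k_any_item_probe(hits: float, false_alarms: float, set_size: int) -> float:
    """Pashler-style k for a probe that may match any studied item.

    Model: a new probe cannot be recognized as new, so fa = g; an old probe is
    recognized with probability k/N, so h = k/N + (1 - k/N) * fa.
    Solving for k gives N * (h - fa) / (1 - fa).
    """
    if false_alarms >= 1:
        raise ValueError("false-alarm rate must be below 1")
    return set_size * (hits - false_alarms) / (1 - false_alarms)


# Experiment 1a, articulatory suppression, unimodal means from Table 1 (N = 5).
k_objects = k_location_probe(0.82, 0.24, 5)  # colored square at a studied location
k_digits = k_any_item_probe(0.87, 0.09, 5)   # digit probe with no position cue
print(round(k_objects, 2), round(k_digits, 2))  # 2.9 4.29
```

These group-level values lie close to the unimodal totals implied by the Table 1 parameters under an additive central-plus-peripheral model (C + Pobj = 2.91 and C + Pverb = 4.43).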

Figure 5.

Mean values of parameters of working memory for 17 conditions from the first 8 experiments. (A) Top panel: Parameter C, items in central storage; (B) middle panel, Parameter Pverb, items in peripheral verbal storage; (C) bottom panel, Parameter Pobj, objects in peripheral visual storage. Experiments in Series 3 (3a and 3b) are the only ones that required binding of visual features (colors with shapes) and verbal features (digits with spoken voices), and they produced much lower estimates of peripheral information storage than did the other experiments.

The absence of an effect of articulatory suppression suggests that, with suppression or without it, there was no effective use of verbal rehearsal to recode and rehearse the visual stimuli in phonological or verbal form. This result mirrors the finding of previous research (notably Morey & Cowan, 2004) showing no effect of articulatory suppression on visual array memory.

Consistency with past capacity estimates

Previous work has put the mean capacity estimate at 3-5 items and, for simple visual items, typically toward the lower end of that range (e.g., Cowan, 2001; Cowan et al., 2012; Luck & Vogel, 1997; Rouder et al., 2008). To compare the present results with these estimates, one should use not the central component alone but the sum of the central and peripheral components. For example, in the articulatory suppression condition of the present experiment, the verbal capacity (based on the means in Table 1) is 0.68 (central) + 3.75 (peripheral) = 4.43 (total). For objects, it is 0.68 (central) + 2.23 (peripheral) = 2.91 (total). These numbers are quite consistent with past work. The finding of limited tradeoffs between modalities is also consistent with past work in which an acoustic or verbal series was paired with a visual array (Cocchini et al., 2002; Fougnie & Marois, 2011). These observations hold across the series of experiments taken as a whole.
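The same bookkeeping can be applied to every condition in Table 1. This short sketch simply adds the tabled mean C to each tabled mean peripheral parameter (Pverb, Pobj). The dictionary and its labels are ours, and the sums are only as precise as the rounded table entries.

```python
# Mean parameter estimates (C, Pverb, Pobj) from Table 1.
TABLE_1 = {
    ("Experiment 1a", "articulatory suppression"): (0.68, 3.75, 2.23),
    ("Experiment 1a", "tapping"): (0.93, 3.73, 2.39),
    ("Experiment 1b", "articulatory suppression"): (0.87, 2.62, 1.63),
    ("Experiment 1b", "tapping"): (0.30, 3.83, 2.56),
}


def total_capacities(c: float, p_verb: float, p_obj: float) -> tuple[float, float]:
    """Total verbal and object capacity, each as central plus peripheral storage."""
    return round(c + p_verb, 2), round(c + p_obj, 2)


for (experiment, condition), params in TABLE_1.items():
    verbal, objects = total_capacities(*params)
    print(f"{experiment}, {condition}: verbal {verbal}, objects {objects}")
```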

Experiment 1b

Perhaps the central resource parameter was small in Experiment 1a because the acoustic stimuli were all presented in the same male voice. A single voice may have allowed an auditory sensory form of storage for that modality despite the presence of a mask. Therefore, in the present experiment, we presented the auditory stimuli in different voices and to alternating ears, making it more difficult to use a coherent auditory sensory stream (Figure 3, second panel).

Method

Participants

The participants were 25 college students (17 female) who received course credit for their participation.

Apparatus and stimuli

This experiment replicated Experiment 1a in every way except for the auditory stimuli, which were the digits 1-9 spoken in five voices: those of a male adult, two distinctive female adults (one with a deeper voice than the other), a male child, and a female child. The temporal compression algorithm described for Experiment 1a was again applied so that none of the recordings was longer than 250 ms. Then, 20 different auditory masks were created by combining different digits spoken by all 5 voices in each channel (left and right) with aligned onsets. Auditory study items on each trial consisted of 5 digits randomly sampled without replacement, each digit spoken by a different voice. On each trial, study digits were presented alternately to the left and right ear. Which ear received the first item alternated from trial to trial. A 2-channel auditory mask was randomly selected on each trial, and presented at the same time as the visual mask.
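The construction of the auditory study lists can be sketched as follows. The sketch assumes that voices are randomly assigned to serial positions on each trial and that even-numbered trials start in the left ear. Neither detail is specified in the text, and the function and voice labels are hypothetical.

```python
import random

DIGITS = tuple(range(1, 10))
VOICES = ("adult male", "adult female, deeper", "adult female, higher",
          "male child", "female child")
N_MASKS = 20


def make_auditory_trial(trial_index: int, rng: random.Random):
    """Return the study list as (digit, voice, ear) triples plus a mask index."""
    digits = rng.sample(DIGITS, 5)            # 5 digits, sampled without replacement
    voices = rng.sample(VOICES, len(VOICES))  # each digit in a different voice
    first_ear = "left" if trial_index % 2 == 0 else "right"  # alternates across trials
    second_ear = "right" if first_ear == "left" else "left"
    ears = [first_ear if pos % 2 == 0 else second_ear for pos in range(5)]  # alternates within list
    mask = rng.randrange(N_MASKS)             # one of the 20 two-channel masks
    return list(zip(digits, voices, ears)), mask


study_list, mask_index = make_auditory_trial(0, random.Random(42))
for item in study_list:
    print(item)
print("mask", mask_index)
```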

Results and Discussion

As shown in Table 1 and Figures 4 (overall proportion correct) and 5 (parameter values), the results were very similar to Experiment 1a. Most importantly, it was again found that there was a dual-task tradeoff but that most of the storage was peripheral (modality- or code-specific), and only a smaller amount was central in nature. The fact that the results were similar across experiments goes against the notion that an auditory sensory stream in Experiment 1a was an important contributing factor to the results.

In this experiment, unlike the first experiment, the articulation condition produced lower estimates of Pverb, t(24)=3.57, p=.002, and lower estimates of Pobj, t(24)=3.33, p=.003, compared to the tapping condition. This finding, in combination with the absence of such an effect in Experiment 1a, suggests that the presentation of changing voices may cause distraction. In the tapping condition, rehearsal may help to overcome or compensate for that distraction.

Of the six parameters for this experiment in Table 1, five were significantly above zero; the exception was the C parameter in the tapping condition. Perhaps in that condition, verbal rehearsal tended to replace central storage as a mnemonic strategy.
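Because the standard error of the mean is SD/√n, the one-sample t statistic against zero is simply t = M/SEM. The tabled values therefore allow a rough recheck of this count. The sketch makes three assumptions: df = 24 (the value reported for the paired comparisons above), a two-tailed α of .05 with a critical t of 2.064, and the rounded Table 1 entries. Borderline cases could therefore differ from the authors' exact tests. A one-tailed criterion (about 1.71) would not change the outcome here. The names are ours.

```python
T_CRIT = 2.064  # two-tailed critical t for alpha = .05 with df = 24 (n = 25)

# Experiment 1b means and standard errors of the parameters, from Table 1.
EXPERIMENT_1B = {
    ("articulatory suppression", "C"): (0.87, 0.39),
    ("articulatory suppression", "Pverb"): (2.62, 0.39),
    ("articulatory suppression", "Pobj"): (1.63, 0.36),
    ("tapping", "C"): (0.30, 0.24),
    ("tapping", "Pverb"): (3.83, 0.23),
    ("tapping", "Pobj"): (2.56, 0.24),
}


def one_sample_t(mean: float, sem: float) -> float:
    """t statistic for H0: population mean = 0, using SEM = SD / sqrt(n)."""
    return mean / sem


above_zero = {key for key, (m, se) in EXPERIMENT_1B.items()
              if one_sample_t(m, se) > T_CRIT}
for key, (m, se) in EXPERIMENT_1B.items():
    print(key, round(one_sample_t(m, se), 2), key in above_zero)
```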

As in Experiment 1a and in Morey and Cowan (2004), the absence of an effect of articulatory suppression suggests that, with or without suppression, verbal rehearsal was not effectively used to recode and rehearse the visual stimuli in phonological or verbal form. (This would not prevent phonological encoding of the spoken verbal stimuli, and phonological storage could be the basis of peripheral verbal storage; see, for example, Baddeley et al., 1984.) Nevertheless, we continued to use suppression in all of the experiments to make sure that rehearsal was discouraged in all test circumstances.

EXPERIMENT SERIES 2: EFFECTS OF SEMANTIC CATEGORY MIXTURE

Experiment 2a

Next we explored whether semantic differences between the acoustic items would increase central storage (Figure 3, third panel). Mixed-category lists might help to prevent a potential mnemonic strategy that concerned us: combining list items into semantically coherent, multi-word chunks (McElree, 1998; Miller, 1956), which would lower the load on the central store.

In this experiment, we also modified the stimuli so that the same formula could be used to assess the verbal and object stimulus sets. Specifically, acoustic markers were used to indicate the serial position of the list to which the probe word was to be compared. This is conceptually comparable to putting a visual probe object at a specific item location to indicate to which array item the probe was to be compared.
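The formula itself is not given in this paragraph. Assuming it is the single-probe capacity estimate commonly used when the probe marks the item to be compared, k = N(H − FA), where N is the set size, H is the proportion of change trials on which a change was correctly reported, and FA is the proportion of no-change trials on which a change was incorrectly reported, a minimal sketch follows. The function name and the rates in the usage line are hypothetical, not data from this study.

```python
def capacity_k(set_size, hit_rate, false_alarm_rate):
    """Single-probe capacity estimate k = N * (H - FA).

    hit_rate: proportion of change trials answered "different"
    false_alarm_rate: proportion of no-change trials answered "different"
    """
    return set_size * (hit_rate - false_alarm_rate)

# Hypothetical rates for a 4-item list, not data from this study.
print(capacity_k(4, 0.85, 0.15))  # about 2.8
```

Because the auditory probe now marks a serial position just as the visual probe marks a location, the same function can be applied to both stimulus sets, which is the point of the modification described above.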

Method

Participants

The participants were 24 college students (13 female) who received course credit for their participation.

Apparatus and stimuli

The number of visual stimuli per array was reduced to 4, and spoken lists were likewise reduced to 4 items, to accommodate the greater amount of information per spoken list in this experiment. Acoustic stimuli were spoken in a male voice and were drawn from four categories: directions (up, down, in, out, on, off, left, right), letters (B, F, H, J, L, Q, R, Y), digits (1-9), and body parts (head, foot, knee, wrist, mouth, nose, ear, toe). Each list consisted of either four words drawn from the same category or one word drawn from each of the four categories (as illustrated in the third panel of Figure 3). The word “go”, spoken in the same voice, was recorded and used as an auditory mask. All auditory stimuli were shorter than 500 ms. They were presented at intensities between 65 and 75 dB(A) over Telephonics TDH-39 headphones.
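The two kinds of auditory list can be sketched as follows, using the category members listed above. The names `make_list` and `CATEGORIES`, sampling without replacement within a same-category list, and the randomized order of categories in mixed lists are assumptions made for illustration.

```python
import random

# Category members as listed in the text (digits stored as strings).
CATEGORIES = {
    "directions": ["up", "down", "in", "out", "on", "off", "left", "right"],
    "letters": ["B", "F", "H", "J", "L", "Q", "R", "Y"],
    "digits": [str(d) for d in range(1, 10)],
    "body parts": ["head", "foot", "knee", "wrist", "mouth", "nose", "ear", "toe"],
}

def make_list(kind, rng, category=None):
    """Return four (category, word) pairs for a same-category or mixed list."""
    if kind == "same":
        # Assumption: four different words from one category.
        return [(category, w) for w in rng.sample(CATEGORIES[category], 4)]
    if kind == "mixed":
        cats = list(CATEGORIES)
        rng.shuffle(cats)  # assumption: category order randomized
        return [(c, rng.choice(CATEGORIES[c])) for c in cats]
    raise ValueError(f"unknown list kind: {kind}")

rng = random.Random(0)
print(make_list("same", rng, "letters"))
print(make_list("mixed", rng))
```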

Procedure

All trials in this experiment included articulatory suppression to prevent rehearsal. Each session began with instructions and a practice example of each kind of trial. The instructions included a step-by-step demonstration of exactly what a participant would see and hear during each trial. Then there was a real-time practice example of each kind of trial so that the participant had a chance to see and hear each kind of cue and stimulus. More extended practice followed this instruction phase. First, the participant received instruction and practice in articulatory suppression. As in the previous experiments, the participants practiced for 30 s whispering “the” twice a second in time to a click. Then they completed practice blocks of each memory condition while also performing articulatory suppression. They first did 8 auditory practice trials, each preceded by instructions to attend to and remember only the auditory stimuli and ending with an auditory probe. Next they did 8 visual practice trials, each preceded by instructions to attend to and remember only the visual stimuli and ending with a visual probe. Finally, they did a practice block of bimodal memory trials, each preceded by instructions to attend to and remember both auditory and visual stimuli. Half of these trials ended with an auditory probe and half ended with a visual probe. Each block of practice trials included examples of the same combinations of stimuli the participant would see and hear in the experimental trials. For example, half of the trials in each practice block presented auditory study stimuli first, and half presented visual study stimuli first. In half of the practice trials, the four auditory study stimuli in a list were from the same category; the remaining practice trials were mixed lists with one word from each category.
After participants practiced a block of each kind of memory trial, with articulatory suppression, the experimenter left the booth but continued to monitor participants via a camera and microphone while they completed 128 experimental trials, including all kinds of memory trials intermixed in a different random order for each participant.

The stimuli and their relative timings were similar for all trials, with the exception of the pretrial memory cue, the order of study modalities (auditory-first or visual-first), and the modality of the probe stimuli. Each trial began with one of the following memory cues displayed in the center of the screen: “Attend to and remember ONLY AUDITORY STIMULI”, “Attend to and remember ONLY VISUAL STIMULI”, or “Attend to and remember BOTH VISUAL and AUDITORY STIMULI”, each accompanied by “Press enter to continue.” When participants pressed the enter key, they saw a fixation cross in the center of the screen for 1000 ms, followed by a 500 ms blank screen. Then the first study stimuli were presented. If it was an auditory-first trial, the screen remained blank while the participant heard 4 words with onset-to-onset times of 500 ms. Next, the visual array of 4 colored squares appeared 1500 ms after the onset of the last auditory stimulus. The visual array was displayed for 500 ms, followed by a 500 ms blank screen and then by the bimodal mask, consisting of the word “go” and multicolored squares in the same locations as the colored squares. The mask was displayed for 500 ms, followed by a 500 ms blank screen and then the probe stimuli. If it was a visual-first trial, the 500 ms blank screen was followed by a visual study display for 500 ms, and, 1500 ms after the onset of the visual array, the participant heard 4 words with onset-to-onset times of 500 ms. The bimodal mask occurred 1000 ms after the onset of the last auditory stimulus. The 500 ms mask was followed by a 500 ms blank screen and then the probe stimuli. If the probe modality was the modality that had been presented first, the onset of the probe occurred 1000 ms after the onset of the mask.
If the probe modality was the modality that had been presented last, an additional delay was inserted before the probe so that the retention interval, from the onset of the study stimuli in the probed modality to the onset of the probe, was always a constant 5000 ms.
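The timing rules above can be checked with a short calculation. This sketch places time 0 at the onset of the first study stimulus and assumes that the 5000 ms retention interval runs from the onset of the study stimuli in the probed modality to the probe onset; the function name and dictionary keys are hypothetical.

```python
WORD_SOA = 500     # onset-to-onset time between spoken words (ms)
N_WORDS = 4
RETENTION = 5000   # study onset (probed modality) to probe onset (ms)

def trial_timeline(first, probe):
    """Onsets in ms from the first study stimulus; first/probe are 'auditory' or 'visual'."""
    if first == "auditory":
        aud_onset = 0
        last_word = aud_onset + (N_WORDS - 1) * WORD_SOA
        vis_onset = last_word + 1500           # array 1500 ms after last word onset
        last_study = vis_onset
    else:
        vis_onset = 0
        aud_onset = vis_onset + 1500           # words begin 1500 ms after array onset
        last_study = aud_onset + (N_WORDS - 1) * WORD_SOA
    mask_onset = last_study + 1000             # 1000 ms after the last study onset
    earliest_probe = mask_onset + 500 + 500    # 500 ms mask + 500 ms blank
    probe_onset = (aud_onset if probe == "auditory" else vis_onset) + RETENTION
    return {"mask_onset": mask_onset, "probe_onset": probe_onset,
            "extra_delay": probe_onset - earliest_probe}

for first in ("auditory", "visual"):
    for probe in ("auditory", "visual"):
        print(first, probe, trial_timeline(first, probe))
```

Under this reading, a probe of the first-presented modality needs no extra delay, whereas a probe of the last-presented modality waits an extra 3000 ms (visual probe on an auditory-first trial) or 1500 ms (auditory probe on a visual-first trial).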

After the mask and any additional delay needed to complete the 5000 ms retention interval, the probe occurred. The visual probe was a single square at the location of one study square, either in the same color as that square or in a color different from any in the study array. The probe square remained on the screen for 2 s or until the participant responded. The auditory test consisted of a sequence of four sounds with the same timing as the study list. The auditory probe was presented in the serial position of the probed study item, and each of the other positions contained a brief buzz. Each buzz served as a placeholder for a non-probed word and indicated the serial position of the probed item (e.g., when probing the third item for the word foot, the test would proceed as buzz – buzz – foot – buzz). The probe was either identical to the auditory stimulus presented in the same serial position of the study list or a word from the same category that was not in the study list. Immediately after the test sequence, a “?” appeared in the center of the screen and remained for 2 s or until the participant responded. Immediately after each response, accuracy feedback (correct or incorrect) was displayed for 1 s.
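A sketch of how the auditory test sequence could be assembled, reproducing the buzz – buzz – foot – buzz example. The function name, the 0-based position index, and the choice of a different-trial probe uniformly at random from the unstudied members of the probed word's category are assumptions.

```python
import random

BUZZ = "buzz"  # placeholder for each non-probed serial position

def make_auditory_test(study, probe_pos, change, categories, rng):
    """Build the four-item test sequence for a study list of (category, word) pairs.

    probe_pos is 0-based; change=True substitutes a same-category word
    that was not in the study list.
    """
    category, word = study[probe_pos]
    if change:
        studied = {w for _, w in study}
        word = rng.choice([w for w in categories[category] if w not in studied])
    return [word if i == probe_pos else BUZZ for i in range(len(study))]

body_parts = {"body parts": ["head", "foot", "knee", "wrist", "mouth", "nose", "ear", "toe"]}
study = [("body parts", w) for w in ["head", "knee", "foot", "toe"]]
print(make_auditory_test(study, 2, False, body_parts, random.Random(0)))
# ['buzz', 'buzz', 'foot', 'buzz']
```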

The experimental block consisted of 128 trials with all trial types randomly intermixed; half were bimodal memory trials and half were unimodal memory trials. Of the 64 bimodal (remember-both) trials, 32 had auditory probes and 32 had visual probes. Of the 64 unimodal trials, 32 were remember-auditory trials and 32 were remember-visual trials. Each trial type included 8 change and 8 no-change trials for each modality order, visual-first and auditory-first. An important characteristic of auditory lists was whether all words were from the same category (same-list) or each word was from a different category (mixed-list). There were equal numbers of same- and mixed-list trials for each memory condition, even when auditory stimuli were irrelevant. Also, same-list trials drew on each of the four categories equally often for each trial type. The serial position of the probed item was another important variable balanced across the different kinds of auditory probe trials. Of the 32 auditory probe trials for each memory load condition, each serial position was probed once for each combination of modality order (visual- or auditory-first), list type (same- or mixed-list), and correct response (same or different).
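The balancing of auditory probe trials can be verified by enumerating the crossing of serial position (4), modality order (2), list type (2), and correct response (2), which yields the 32 trials per memory-load condition. This sketch only checks the counts; it does not reproduce the randomization or the balancing of categories within same-list trials, and its names are hypothetical.

```python
from collections import Counter
from itertools import product

POSITIONS = [1, 2, 3, 4]
ORDERS = ["visual-first", "auditory-first"]
LIST_TYPES = ["same-list", "mixed-list"]
RESPONSES = ["same", "different"]  # no-change vs. change trials

def auditory_probe_cells():
    """One trial for every position x order x list type x response combination."""
    return [{"position": p, "order": o, "list_type": l, "response": r}
            for p, o, l, r in product(POSITIONS, ORDERS, LIST_TYPES, RESPONSES)]

cells = auditory_probe_cells()
print(len(cells))  # 32
# 8 change and 8 no-change trials for each modality order
print(Counter((c["order"], c["response"]) for c in cells))
```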

Results and Discussion

Table 2 and Figures 4 and 5 show that the mixed semantic lists and the changed method did not notably alter the results relative to the previous experiments: with mixed-category lists, central storage averaged only 0.67 items, again with several items in peripheral storage in each modality. When each list was drawn from a single category, average central storage increased to 1.15 items, but this difference was not significant by t test. Fundamentally, the results are unchanged: t tests on each parameter showed no difference between single-category and mixed-category lists, and all six parameter values were significantly above zero (Table 2). Thus, the results cannot be explained in terms of semantic grouping in uniform lists.

Experiment 2b

In this experiment we attempted to give as much distinctiveness as possible to each acoustic item, by varying not only the semantic category within mixed lists, but also the voice and ear of presentation in those lists (Figure 3, fourth panel). Perhaps that would increase the central storage component and prevent any chunking that might occur. As mentioned, voice variability does make recall more difficult in many situations (Goldinger et al., 1991).

Method

Participants

The participants were 24 college students (7 female) who received course credit for their participation.

Apparatus and stimuli

The equipment and setting were the same as in the previous experiment. The only difference in the stimuli was that the spoken stimuli were synthesized in four different voices (whereas they were naturally spoken in previous experiments), so that each stimulus in a mixed-category list could be presented in a different voice. Speech synthesis was done using the built-in speech synthesis capabilities of the Macintosh operating system (Mac OS X 10.6.7, Snow Leopard). Synthesized words were saved for playback as WAV files with 16-bit resolution and a 44.1 kHz sample rate. To obtain four high-quality and distinctive voices, we selected two built-in voices (Alex and Victoria) and added two from a third-party source, the Infovox iVox voices Ryan and Heather from AssistiveWare (http://www.assistiveware.com/). The duration and intensity of the stimuli, reproduced over the same headphones, were similar to those in the previous experiment. Each stimulus was presented with equal intensity to the two ears. For each trial presenting different-category auditory stimuli, each word in the four-word study list came from a different category and was presented in a different voice, randomly selected from the four available voices. Also, the presentation side alternated between the right and left ear for each successive study stimulus, and the side of the first stimulus was randomly selected for each trial. Likewise, at the time of test, the “buzz” and probe stimuli alternated from side to side in the same way as the study stimuli.

For each trial presenting same-semantic-category auditory stimuli, all speech stimuli were presented in the same voice, which was randomly selected from the four possible voices.

The auditory mask was the word “go”, spoken in the same voice(s) as the study stimuli. In trials with different-category stimuli, the mask in each ear consisted of “go” spoken simultaneously by the two voices that had presented study stimuli to that ear on that trial, superimposed. In both conditions, the probe was presented in the same voice and location as the study stimulus in the probed serial position; half the time it was the same word as that study stimulus, and half the time it was a different word from the same category.
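The voice, ear, and mask assignments for a mixed-category trial can be sketched as below. The sketch assumes that voices are assigned to the four words as a random permutation, so each voice is used exactly once, and it represents voices only by the names given above; `assign_mixed_list` is a hypothetical name.

```python
import random

VOICES = ["Alex", "Victoria", "Ryan", "Heather"]  # the four synthesized voices

def assign_mixed_list(rng):
    """Return (voice, ear) for each of the four study words and the mask voices per ear."""
    voices = rng.sample(VOICES, 4)                # a different voice for each word
    first = rng.choice(["left", "right"])         # side of first word chosen at random
    other = "right" if first == "left" else "left"
    ears = [first if i % 2 == 0 else other for i in range(4)]  # alternate sides
    # The mask in each ear superimposes "go" in the two voices heard in that ear.
    mask = {ear: [v for v, e in zip(voices, ears) if e == ear]
            for ear in ("left", "right")}
    return list(zip(voices, ears)), mask

items, mask = assign_mixed_list(random.Random(7))
print(items)
print(mask)
```

Because the ears alternate over four words, each ear always receives exactly two voices, which is why the mask in each ear combines two superimposed voices.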

Counterbalancing and randomization of conditions, both within and between subjects, were the same as for the previous experiment. Likewise, the numbers of trials in each block and the assignments of trials to each condition within each block were the same, including assignments of same- and different-category conditions for the four auditory stimuli. The only difference from Experiment 2a was the assignment in Experiment 2b of different voices and spatial channels to auditory stimuli in the mixed semantic category condition.

Results and Discussion

Table 2 and Figures 4 and 5 show that the results were similar to the previous experiment. The central component was quite small for both the uniform and mixed-category list conditions in this experiment, and the peripheral visual and auditory storage components again held several items each. Apparently, auditory chunking cannot explain why a larger central component is not found.

As in the previous experiment, t tests for each parameter revealed no significant effects of pure versus mixed lists. The Pverb and Pobj parameter values were significantly above zero for both kinds of list but, in this experiment, the C parameter was not significantly above zero for either kind of list (Table 2). The low C values suggest that for participants to use central storage to best advantage, there must be a certain amount of predictability about the nature of materials not only within a list, but also between lists within a session.

Experiment 2c

To this point, the central storage component has been quite small, but it is not clear why. It could be that separate peripheral acoustic and visual stores retain the information. Alternatively, the peripheral storage may reflect different verbal and nonverbal codes rather than different sensory modalities. In this study, therefore, the acoustic digital lists were replaced with printed lists (Figure 3, fifth panel) in order to determine what would happen with two visual domains, verbal versus nonverbal.

Method

Participants

The participants were 24 college students (5 female) who received course credit. One additional male participant was excluded for falling asleep.

Apparatus and stimuli