Abstract

Objectives

The study aims are twofold. First, to investigate the suitability of hand hygiene as an indicator of accreditation outcomes and, second, to test the hypothesis that hospitals with better accreditation outcomes achieve higher hand hygiene compliance rates.

Design

A retrospective, longitudinal, multisite comparative survey.

Setting

Acute public hospitals in New South Wales, Australia.

Participants

96 acute hospitals that had accreditation results from two surveys during 2009–2012 and had submitted data for more than four hand hygiene audits between 2010 and 2013.

Outcomes

Our primary outcome comprised observational hand hygiene compliance data from eight audits during 2010–2013. The explanatory variables in our multilevel regression model included: accreditation outcomes and scores for the infection control standard; timing of the surveys; and hospital size and activity.

Results

Average hand hygiene compliance rates increased from 67.7% to 80.3% during the study period (2010–2013), with 46.7% of hospitals achieving the target compliance rate of 70% in audit 1, versus 92.3% in audit 8. Average hand hygiene rates at small hospitals were 7.8 percentage points (pp) higher than those at the largest (principal referral) hospitals (p<0.05). The association between hand hygiene rates, accreditation outcomes and infection control scores is less clear.

Conclusions

Our results indicate that accreditation outcomes and hand hygiene audit data are measuring different parts of the quality and safety spectrum. Understanding what is being measured when selecting indicators to assess the impact of accreditation is critical, as focusing on accreditation results alone would discount successful hand hygiene implementation by smaller hospitals. Conversely, relying on hand hygiene results alone would discount the infection control-related research and leadership investment by larger hospitals. Our hypothesis appears to be confounded by an accreditation programme that makes it more difficult for smaller hospitals to achieve high infection control scores.

Strengths and limitations of this study

The main strengths of this study are the comprehensive data set, covering 96 acute hospitals participating in the accreditation process, and the length of follow-up over eight hand hygiene audits and two accreditation cycles.

This study also addresses an important research question in terms of identifying and assessing the components of hospital accreditation and quantifying their inter-related benefits.

The results have important implications for health policymakers internationally in terms of designing health service accreditation programmes that accurately monitor a wide range of hospital sizes and types.

The main limitation was the lack of a control group, as all hospitals in the survey were accredited. This meant that it was not possible to assess direct or reverse causal relationships or to rule out omitted variable bias.

Other limitations include potential measurement error resulting from the use of self-reported hand hygiene data; however, the data collection methods adhered to the WHO's best practice guidelines.

Introduction

Hospital accreditation programmes are designed to set clinical and organisational standards, assess compliance with those standards and strengthen quality improvement efforts. Accreditation is widely practised, with national-level accreditation agencies active in at least 27 countries.1 The problems associated with measuring accreditation benefits are well documented.2 3 A clear understanding of the inputs, in terms of costs and resource use, and the outcomes, in terms of improved patient safety and quality, is essential to ensure that accreditation programmes are achieving their aims of improving patient safety and healthcare quality.4 5 Measuring the effects of accreditation on clinical practice and quality of care is important because we need to determine whether the cost burden of data collection and audit processes is outweighed by the expected improvements in quality and safety outcomes.5

In this study, we analyse the relationship between hand hygiene compliance rates and accreditation outcomes to test the suitability of hand hygiene as an indicator of accreditation outcomes. Our hypothesis is that better accreditation outcomes and infection control scores reflect organisational processes that support a positive culture towards improving quality and safety,6 and therefore that hospitals achieving them would also achieve higher hand hygiene compliance rates.

Hand hygiene assessment is an integral component of the infection control standards used to evaluate whether Australian hospitals are compliant with accreditation standards.7–9 Hand hygiene compliance rates have been proposed as a potential process indicator for accreditation outcomes. Moreover, healthcare-associated infections are recognised as a leading cause of increased morbidity and healthcare costs.10 A US study estimated that there were 1.7 million healthcare-associated infections in 2002, comprising 4.5% of admissions, and resulting in nearly 99 000 deaths.11 A recent meta-analysis estimated the cost of the five most common healthcare-associated infections at US$9.8 billion per annum.12 In Australia, the most recent figures available indicate that healthcare-associated infections resulted in an extra two million bed days in 2005, with estimated additional costs of $A21 million from postdischarge surgical infections.13

There is increasing evidence that improving hand hygiene reduces healthcare-associated infections and the spread of antimicrobial resistance.14–18 However, it is difficult to demonstrate a causal link between hand hygiene and healthcare-associated infections due to a multiplicity of interventions and scarcity of randomised trials.19–21 Nevertheless, the WHO has identified good hand hygiene as a major factor in reducing healthcare-associated infections based on epidemiological evidence.18

Hand hygiene policies in Australian hospitals follow international best practices. They are based on WHO's recommendations with a multimodal approach incorporating: access to cleaning agents at the point of care; training and education; monitoring and feedback; reminders in the workplace; and development of an institutional safety climate.22 Auditors trained by Hand Hygiene Australia monitor hand hygiene activity by direct observation of hospital staff and compare hand hygiene activity against the total number of potential ‘moments’ for hand hygiene.23 The national target for hand hygiene compliance is 70% and audit results are publicly reported three times a year. The Australian Commission on Safety and Quality in Health Care (ACSQHC) has recommended that hand hygiene programmes need to be repeatedly monitored using both process indicators (compliance rates) and outcome indicators (infection rates).24

Methods

Study design, setting and context

The study comprised a retrospective, longitudinal, multisite comparative survey of hand hygiene compliance rates and accreditation outcomes in acute public hospitals in New South Wales (NSW), Australia. NSW, with a population of 7.2 million, accounts for 30.5% of Australia's 736 public hospitals and 32.0% of its population.25 We employed retrospective data matching techniques over the study period, 2009–2013, to analyse the relationship between hand hygiene compliance data and accreditation outcomes.

Data matching and analysis

Hand hygiene compliance data

Hand hygiene policies include five ‘moments’ when hand hygiene should be performed: before touching a patient; before a procedure; after a procedure or body fluid exposure risk; after touching a patient; and after touching a patient's surroundings.23 26 Audits are carried out three times a year by healthcare workers who have been accredited by Hand Hygiene Australia. Audits use a standardised observation tool that measures hand hygiene activity against the total number of observed possible ‘moments’. Auditors are trained in selecting the wards or units for the audit, and the minimum number of ‘moments’ required for each audit is determined by hospital size and activity. We obtained hand hygiene compliance rate data from late 2010 to early 2013 from the NSW Clinical Excellence Commission, the quality and safety body responsible for implementing the hand hygiene initiative in NSW and collecting hand hygiene audit data.27
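The audit arithmetic described above can be sketched as follows. This is a minimal illustration, not the Hand Hygiene Australia observation tool: the class and field names are hypothetical, and we assume that both the 70% national target and the 50-moment publication minimum (mentioned under Analysis) are inclusive thresholds.

```python
from dataclasses import dataclass

NATIONAL_TARGET = 0.70      # national compliance target (assumed inclusive)
MIN_PUBLISHED_MOMENTS = 50  # publication minimum during the study period (assumed inclusive)


@dataclass(frozen=True)
class AuditResult:
    """One hospital audit: observed 'moments' versus moments with hand hygiene performed.

    Hypothetical structure for illustration only.
    """
    hospital: str
    moments_observed: int
    moments_compliant: int

    def __post_init__(self) -> None:
        if self.moments_observed <= 0:
            raise ValueError("at least one moment must be observed")
        if not 0 <= self.moments_compliant <= self.moments_observed:
            raise ValueError("compliant moments must lie between 0 and moments observed")

    @property
    def compliance_rate(self) -> float:
        # Hand hygiene activity divided by the total number of observed moments
        return self.moments_compliant / self.moments_observed

    @property
    def meets_target(self) -> bool:
        return self.compliance_rate >= NATIONAL_TARGET

    @property
    def publishable(self) -> bool:
        return self.moments_observed >= MIN_PUBLISHED_MOMENTS


# Hypothetical audits, not study data
audits = [AuditResult("A", 120, 90), AuditResult("B", 40, 36)]
for a in audits:
    print(a.hospital, f"{a.compliance_rate:.1%}", a.meets_target, a.publishable)
```

Hypothetical hospital B shows why small sites can drop out of the published data: a 90% rate from only 40 observed moments would fall below the publication minimum.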

Accreditation programme and infection control standards

Data on accreditation outcomes from 2009 to 2013 were provided by the Australian Council on Healthcare Standards (ACHS). The ACHS Evaluation and Quality Improvement Program (EQuIP) was introduced in 1997 and comprises a 4-year cycle with external surveys in years 2 and 4.28 During these surveys, hospitals are assessed by an external team of surveyors against ACHS-developed standards. The EQuIP has undergone several revisions, none of which materially affected this study. Our study period included EQuIP4, which was introduced in 2007 and used for the surveys in 2009 and 2010, and EQuIP5, which was introduced in 2011. Accreditation standards changed significantly following the introduction of national mandatory standards in 2013,9 but the infection control criteria were the same in both versions of the EQuIP standards assessed during the study period (see online supplementary file 1). Surveyors scored facilities on a five-point Likert scale for each standard or criterion assessed in the survey. Scores were designated as Outstanding Achievement (OA), Extensive Achievement (EA), Moderate Achievement (MA), Some Achievement (SA) and Little Achievement (LA). Hospitals needed to achieve OA, EA or MA scores in each mandatory standard or criterion to meet accreditation requirements.

Infection control related criteria were part of a broader standard regarding the safe provision of care and services.7 8 To meet accreditation requirements, hospitals needed to ensure that the infection control policy: met all regulatory requirements and industry guidelines; had executive support; incorporated ongoing education activities; and included indicators to show both compliance with the policy and effective outcome measurements. Additional activities which counted towards achieving higher (EA and OA) scores included: benchmarking of performance indicators; use of feedback to inform and improve; contributing to infection control research and recognised leadership in infection control systems.7 8 Accreditation is often granted to a cluster of facilities within a local health district and therefore reflects conditions at all the facilities within that survey group. These clusters are subject to boundary changes, as seen in the NSW 2011 health system reorganisation, which took place during the study period.29 We therefore identified the different hospitals within each cluster in order to match the accreditation scores with the hand hygiene data from individual hospitals.
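The scoring rules above amount to an ordered five-point scale with two cut-offs. The sketch below encodes only the thresholds stated in the text: MA or above meets a mandatory criterion, and EA or OA counts as a higher score. The enum and function names are our own illustration, not ACHS terminology.

```python
from enum import IntEnum


class Rating(IntEnum):
    """EQuIP five-point achievement scale, ordered from lowest to highest."""
    LA = 1  # Little Achievement
    SA = 2  # Some Achievement
    MA = 3  # Moderate Achievement
    EA = 4  # Extensive Achievement
    OA = 5  # Outstanding Achievement


def meets_requirement(rating: Rating) -> bool:
    """A mandatory standard or criterion is met with an MA, EA or OA score."""
    return rating >= Rating.MA


def is_high_score(rating: Rating) -> bool:
    """EA and OA are the higher scores that require additional activities."""
    return rating >= Rating.EA


for r in Rating:
    print(r.name, meets_requirement(r), is_high_score(r))
```

Using an ordered `IntEnum` keeps the comparison logic to a single threshold per rule, which mirrors how the standards describe minimum and higher achievement.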

Study variables

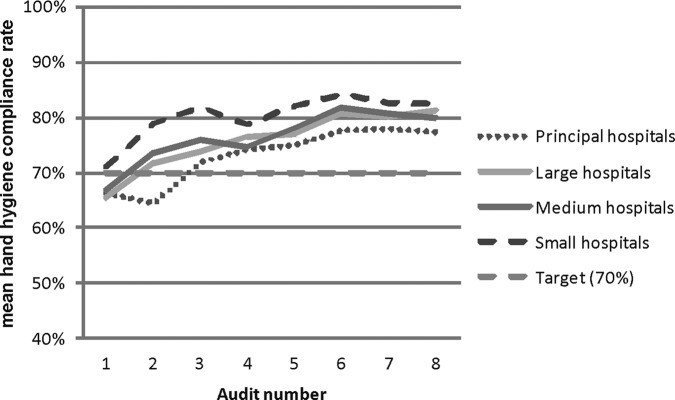

To analyse the matched data, we used hand hygiene compliance rates as our outcome of interest, expressed as a continuous variable. Data were available at eight time points from the end of 2010 to early 2013 (see figure 1). We classified accreditation outcomes as either full or partial accreditation. Partial accreditation was defined as accreditation granted for a reduced period or accompanied by a recommendation for action. No hospitals in the study were refused accreditation during the study period. We included infection control scores in the model according to whether hospitals achieved a higher score in none (our reference case), one or both surveys. To test for a possible timing effect, we included a variable identifying whether surveys were carried out in the 2009 and 2011 accreditation cycle (cycle=0) or in the 2010 and 2012 cycle (cycle=1).

Figure 1.

Timeline of accreditation surveys and hand hygiene audits.

Acute hospitals were grouped according to the Australian Institute of Health and Welfare activity matrix, based on annual numbers of acute episodes of care adjusted for patient complexity, and geographic location (see online supplementary file 2).30 We used these groups to create a categorical variable with principal referral and specialist hospitals as the reference case (principal=0), with large hospitals scored as 1, medium hospitals as 2 and small hospitals as 3.
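Because the peer group variable is categorical, it enters the regression as a set of indicators measured against the principal referral reference, which is why table 2 reports separate coefficients for large, medium and small hospitals. The sketch below shows that coding for one hospital. The function and key names are hypothetical, and we assume that the accreditation variable contrasts full accreditation in both surveys with all other outcomes, as the first row of table 2 suggests.

```python
from typing import Dict

# "principal" (principal referral and specialist hospitals) is the reference category
PEER_GROUPS = ("principal", "large", "medium", "small")


def encode_hospital(full_in_both: bool, high_ic_surveys: int,
                    later_cycle: bool, peer_group: str) -> Dict[str, int]:
    """Dummy-code one hospital's explanatory variables (hypothetical helper)."""
    if high_ic_surveys not in (0, 1, 2):
        raise ValueError("high_ic_surveys must be 0, 1 or 2")
    if peer_group not in PEER_GROUPS:
        raise ValueError(f"unknown peer group: {peer_group!r}")
    row = {
        "full_accreditation_both": int(full_in_both),
        "high_ic_one": int(high_ic_surveys == 1),   # reference: no high score
        "high_ic_both": int(high_ic_surveys == 2),
        "later_cycle": int(later_cycle),            # 0 = 2009/2011, 1 = 2010/2012
    }
    # One indicator per non-reference peer group; principal hospitals are all zeros
    for group in PEER_GROUPS[1:]:
        row[group] = int(peer_group == group)
    return row


print(encode_hospital(False, 1, True, "small"))
```

Dropping the reference category avoids perfect collinearity with the intercept, so each peer group coefficient reads as a difference from principal referral hospitals.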

Analysis

The nature of the data, with irregular audit dates and clustering within hospitals, indicated that a multilevel model would be most appropriate and would allow adjustment for hospital-level variance.31 32 After matching the accreditation and hand hygiene data, our sample comprised 96 hospitals, each with two accreditation surveys. For some hospitals, we were not able to match the accreditation outcomes because of changes in the accreditation clusters. Missing data were more common during the first two audits, while the programme was being progressively implemented. We did not impute values for missing data, as the pattern of missing results indicated that these were not missing at random. For example, data from some of the smaller hospitals were not included because they did not meet the minimum publication requirement during the study period (50 moments of hand hygiene), which we judged was likely to be related to hospital size.

We tested our main model using hand hygiene data from audits 1 through 8 as the outcome variable, and accreditation outcomes, infection control scores, accreditation cycle and peer groups as our explanatory variables. We tested the model fit versus ordinary least squares by calculating the intraclass correlation to assess the within-hospital effect. We also tested whether to use a random coefficient or random intercept model. Analysis was conducted using Stata statistical software (V.12SE),33 applying a two-sided significance level of 5%. We also fitted a restricted model using data from audits 3–8 to determine whether the different peer group mix in the first two audits was affecting the results.
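For readers who prefer the specification in symbols, one way to write the random intercept model described above is shown below. The notation is ours rather than the authors': $y_{ij}$ is the compliance rate (as a proportion) at audit $i$ in hospital $j$, $\mathbf{x}_{ij}$ collects the explanatory variables listed above, $u_{0j}$ is the hospital-level random intercept, $\varepsilon_{ij}$ is the audit-level residual, and $\rho$ is the intraclass correlation.

```latex
\begin{aligned}
y_{ij} &= \beta_0 + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta} + u_{0j} + \varepsilon_{ij},
  \qquad u_{0j} \sim \mathcal{N}(0, \sigma_{u}^{2}),\quad
  \varepsilon_{ij} \sim \mathcal{N}(0, \sigma_{\varepsilon}^{2}),\\
\rho &= \frac{\sigma_{u}^{2}}{\sigma_{u}^{2} + \sigma_{\varepsilon}^{2}}.
\end{aligned}
```

The random coefficient alternative adds a hospital-specific slope term $u_{1j} z_{j}$ on the infection control score $z_{j}$. The random intercept model is the special case in which that slope variance is zero, which is what the likelihood ratio comparison tests.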

Results

Hand hygiene and accreditation data analysis

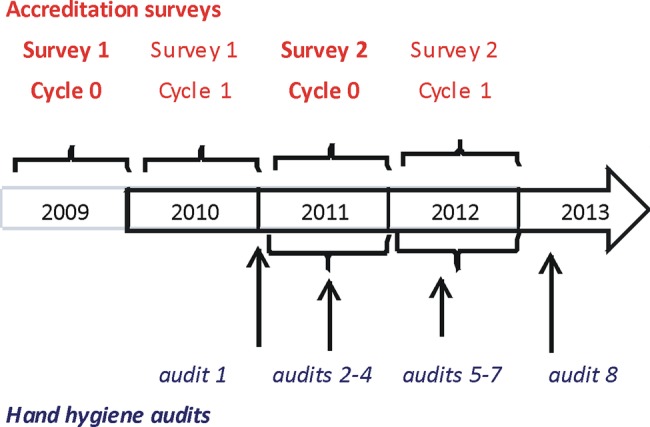

We assessed hand hygiene data on 118 hospitals from eight different audit points during 2010–2013. Overall, hand hygiene rates showed an improvement during the study period, with 28 out of 60 hospitals (46.7%) achieving 70% compliance rates in the first audit in 2010 vs 108 out of 117 hospitals (92.3%) in the final audit, in early 2013. Average hand hygiene compliance rates increased from 67.7% in audit 1 to 80.3% in audit 8, and remained above the 70% national target rate from audit 2 onwards. The average audit compliance rates by audit and hospital peer group are shown in figure 2.

Figure 2.

Mean hand hygiene compliance rates by audit and hospital peer group, 2010–2013.

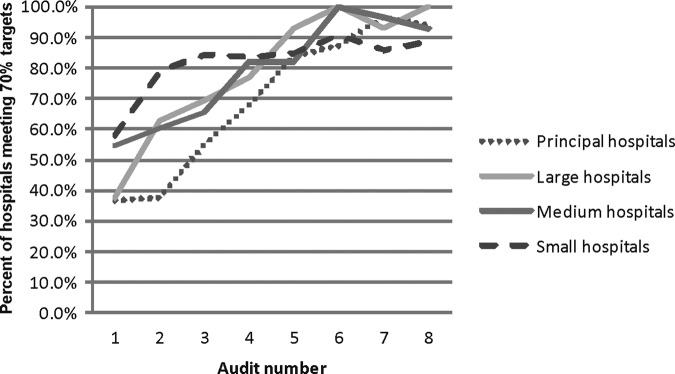

During the study period, 2009–2013, 61 hospitals underwent an accreditation survey in cycle 0, and 44 in cycle 1. The accreditation outcomes showed that 59% of organisations were granted full accreditation in the first survey in each cycle (during 2009–2010) versus 77% in the second survey (2011–2012). The proportion of hospitals receiving higher infection control scores also increased over time, with 13% receiving a high score (EA) in the first survey of each accreditation cycle versus 18% in the second surveys during 2011 and 2012. No hospitals received an OA score for infection control during the study period. We further examined whether there was a difference between meeting, or not meeting, the target compliance rate by comparing the partial data from audit 1, when the programme was being rolled out, with the final audit in our study, audit 8 (see figure 3). Large hospitals showed the biggest increase, with 30% meeting the target in audit 1, rising to 100% by audit 8.

Figure 3.

Percentage of hospitals meeting hand hygiene targets by audit and hospital peer group.

We also noted that principal and large hospitals comprised 51.7% of hospitals in audit 1 versus 39.3% in audit 8, suggesting they were early adopters of the programme. We tested whether this would influence the results using the restricted model (comprising data from audits 3–8). Infection control outcomes also improved over time, with 3% of organisations receiving high scores in both surveys in cycle 0 versus 17% in cycle 1; however, we note the small absolute numbers involved (n=8). A size effect was noted, with 6.5% (n=2) of small hospitals receiving a higher infection control score in one or both surveys versus 35.7% (n=10) of principal hospitals and 46.2% (n=6) of large hospitals (see table 1).

Table 1.

Summary characteristics of accreditation and infection control scores, breakdown by peer group and timing of surveys

| Hospital peer group | Principal (N=28), % | Large (N=13), % | Medium (N=24), % | Small (N=31), % |
|---|---|---|---|---|
| Full accreditation on both surveys | 57.1 | 61.5 | 33.3 | 3.2 |
| Full accreditation in cycle 0 (surveys in 2009 and 2011) | 58.8 | 62.5 | 21.4 | 0.0 |
| Full accreditation in cycle 1 (surveys in 2010 and 2012) | 54.5 | 60.0 | 50.0 | 10.0 |
| High IC scores in one survey | 25.0 | 30.8 | 8.3 | 3.2 |
| High IC scores in both surveys | 10.7 | 15.4 | 8.3 | 3.2 |
| High IC scores in cycle 0 (surveys in 2009 and 2011) | 29.4 | 25.0 | 7.1 | 0.0 |
| High IC scores in second survey of each cycle (2011 or 2012) | 45.5 | 80.0 | 30.0 | 20.0 |

Peer group as defined by the Australian Institute of Health and Welfare.30

IC, infection control.
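The percentages in table 1 and the counts quoted in the text can be cross-checked with simple arithmetic. The sketch below back-calculates hospital counts from the rounded table percentages and the peer group sizes; it uses only figures reported in this section, and rounding to the nearest whole hospital is our assumption.

```python
PEER_GROUP_SIZE = {"principal": 28, "large": 13, "medium": 24, "small": 31}

# Rounded percentages from table 1
HIGH_IC_ONE = {"principal": 25.0, "large": 30.8, "medium": 8.3, "small": 3.2}
HIGH_IC_BOTH = {"principal": 10.7, "large": 15.4, "medium": 8.3, "small": 3.2}


def counts_from_percent(percent: dict) -> dict:
    """Convert rounded percentages back to whole-hospital counts per peer group."""
    return {g: round(percent[g] / 100 * PEER_GROUP_SIZE[g]) for g in percent}


one = counts_from_percent(HIGH_IC_ONE)
both = counts_from_percent(HIGH_IC_BOTH)
print(sum(PEER_GROUP_SIZE.values()), sum(one.values()), sum(both.values()))

# Share of each peer group with a high IC score in one or both surveys
for g, n in PEER_GROUP_SIZE.items():
    print(g, round(100 * (one[g] + both[g]) / n, 1))
```

The back-calculated totals give 96 hospitals, 14 with a high score in one survey and 8 with high scores in both, and the one-or-both shares reproduce the 35.7%, 46.2% and 6.5% quoted for principal, large and small hospitals.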

Testing the model

The intraclass correlation coefficient indicated that 38% of the variance was attributable to clustering of audits within hospitals, which was sufficient to justify a random intercept model.32 We ran a likelihood ratio test against a null model without the random intercept. This gave a χ2 test result of 190 (p=0.001), which supported our approach over ordinary least squares. We also tested the model using infection control scores as a random coefficient, but the results (χ2=0.81, p=0.67) indicated that the random intercept model was more appropriate. We noted a ceiling effect in our data, with 100% of large hospitals and 92% of all hospitals meeting the target compliance rate by audit 8, leaving less incentive to reach higher levels (see figure 3). Although hospitals were incrementally enrolled in the hand hygiene programme, the lack of hand hygiene compliance data for unenrolled hospitals meant that we could not use a stepped wedge design to provide controls or evaluate a before-and-after effect. Our results are also subject to omitted variable bias. A fixed effects panel data model might be a more traditional approach to reduce sample variation, but we judged the random intercept model more appropriate given the policy requirement for all public hospitals to submit hand hygiene data and the presence of time-invariant variables.34
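The two likelihood ratio tests above can be checked against their reported statistics. The sketch below computes the chi-squared upper-tail probability with the Python standard library only, using exact closed forms for integer degrees of freedom. Two assumptions are ours: that the random coefficient comparison has 2 degrees of freedom (one slope variance plus one covariance), which is consistent with the reported χ2=0.81, p=0.67; and that the random intercept test has 1 degree of freedom. Because that test places a variance at its boundary of zero, mixed-model software commonly reports half the naive p value (Stata labels it chibar2(01)). The ICC helper uses hypothetical variance components, not study estimates.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-squared variable with integer df."""
    if df < 1 or x < 0:
        raise ValueError("df must be >= 1 and x must be >= 0")
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) + exp(-x/2) * sum_{i=1}^{(df-1)/2} (x/2)^(i-1/2) / Gamma(i+1/2)
    total = math.erfc(math.sqrt(half))
    for i in range(1, (df - 1) // 2 + 1):
        total += math.exp(-half) * half ** (i - 0.5) / math.gamma(i + 0.5)
    return total


def boundary_lr_pvalue(stat: float) -> float:
    """LR test of one variance component against zero: 50:50 mixture of chi2(0) and chi2(1)."""
    return 0.5 * chi2_sf(stat, 1)


def icc(var_hospital: float, var_residual: float) -> float:
    """Share of unexplained variance at hospital level in a random intercept model."""
    return var_hospital / (var_hospital + var_residual)


print(f"{chi2_sf(0.81, 2):.3f}")          # random coefficient vs random intercept
print(boundary_lr_pvalue(190.0) < 0.001)  # random intercept vs OLS
print(f"{icc(0.0019, 0.0031):.2f}")       # hypothetical variance components
```

With 2 degrees of freedom the tail probability reduces to exp(-x/2), so the reported p=0.67 follows directly from χ2=0.81. A statistic of 190 is far beyond any conventional critical value, whether or not the boundary correction is applied.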

Multilevel model

After matching the hand hygiene data with hospitals that underwent two accreditation surveys, our main model included data from 661 hand hygiene audits from 96 hospitals, an average of 6.9 audits per hospital. The results (table 2) show that achieving full accreditation in both surveys was not significantly associated with higher hand hygiene rates compared with achieving full accreditation in only one survey. The association between hand hygiene rates and infection control scores is less clear. Hospitals achieving high infection control scores (EA) in one survey (n=14) had hand hygiene rates 4.2 percentage points (pp) lower than hospitals that just met the accreditation standard (MA score), and this result was significant (p=0.033). Hospitals achieving high infection control scores in both surveys (n=8) had higher rates (2.1 pp), but this difference was not significant (p=0.40). Small and medium hospitals had significantly higher hand hygiene compliance rates than principal referral hospitals (7.8 pp and 3.5 pp, respectively). The restricted model, using data from audit 3 onwards, also showed a negative relationship between higher infection control scores and hand hygiene audit results. However, the effect was smaller (2.9 pp) and no longer significant (p=0.14). The size effect in the restricted model was consistent with the main model, with small and medium hospitals showing significantly higher hand hygiene rates than principal referral hospitals. These results do not lend support to our study hypothesis that high infection control scores are associated with higher hand hygiene rates.

Table 2.

Multilevel model to show effect of accreditation outcomes on hand hygiene audit rates

| Variables | Main model (audits 1–8): coefficient | Main model: SE | Main model: p value | Restricted model (audits 3–8): coefficient | Restricted model: SE | Restricted model: p value |
|---|---|---|---|---|---|---|
| Full accreditation in both surveys | 0.004 | 0.016 | 0.809 | 0.0077 | 0.016 | 0.620 |
| High infection control scores in one survey | −0.042 | 0.020 | **0.033** | −0.029 | 0.020 | 0.135 |
| High infection control scores in two surveys | 0.021 | 0.025 | 0.404 | 0.033 | 0.024 | 0.172 |
| Later cycle (surveys in 2010/2012) | 0.024 | 0.014 | 0.073 | 0.0205 | 0.013 | 0.123 |
| Hospital peer group* (principal referral=0) | | | | | | |
| Large | 0.024 | 0.020 | 0.247 | 0.0233 | 0.023 | 0.244 |
| Medium | 0.035 | 0.017 | **0.046** | 0.0343 | 0.034 | **0.045** |
| Small | 0.078 | 0.018 | **<0.001** | 0.0807 | 0.081 | **<0.001** |
| Number of observations | 661 | | | 563 | | |
| Number of hospitals | 96 | | | 96 | | |
| Average compliance rates | 0.741 | | | 0.744 | | |
| Log likelihood | 662 | | | 634 | | |

Bold typeface indicates significance at p<0.05.

*Peer group as defined by the Australian Institute of Health and Welfare.30
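Because compliance was modelled as a proportion, each coefficient in table 2 converts to percentage points by multiplying by 100 (0.078 becomes 7.8 pp), and its p value follows from a two-sided Wald z test, the usual way fixed effects are reported in Stata's mixed-model output. The sketch below applies that conversion to a few main-model rows. Recomputed p values only approximate the table because the published coefficients and standard errors are rounded.

```python
import math

# (coefficient, standard error) from the main model in table 2; proportions, not percentages
MAIN_MODEL = {
    "High IC score in one survey": (-0.042, 0.020),
    "High IC score in both surveys": (0.021, 0.025),
    "Small hospital": (0.078, 0.018),
}


def wald_p(coef: float, se: float) -> float:
    """Two-sided p value for z = coef / se against a standard normal reference."""
    z = abs(coef / se)
    return math.erfc(z / math.sqrt(2.0))


for name, (coef, se) in MAIN_MODEL.items():
    p = wald_p(coef, se)
    p_text = "<0.001" if p < 0.001 else f"{p:.3f}"
    print(f"{name}: {100 * coef:+.1f} pp, z = {coef / se:.2f}, p = {p_text}")
```

For the one-survey row this gives roughly 0.036 against the published 0.033, a gap consistent with rounding of the reported standard error.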

Discussion

Our analysis of 118 NSW hospitals showed that hand hygiene rates increased from 67.7% in audit 1 in late 2010 to 80.3% by audit 8 in early 2013. These rates compare with 62.2% observed in a sample of NSW hospitals in February 2007, following the introduction of the Clean Hands Save Lives campaign during 2006–2007,15 and continue the improvement shown nationally in Australia, with average hand hygiene rates estimated at 68.3% in 2011.35 International comparisons are challenging. A US study estimated average hand hygiene rates of 56.6% in 40 hospitals using data collected for 1 year before and after the introduction of national hand hygiene guidelines in 2002.36 However, that programme differed from the one followed by Hand Hygiene Australia, so the results are not directly comparable.

Smaller hospitals in our study had higher hand hygiene compliance rates but the relationship between accreditation outcomes and hand hygiene data was less clear. This hospital size effect on hand hygiene compliance has been confirmed by other research investigating the link between hand hygiene rates and healthcare-associated Staphylococcus aureus bacteraemia.37 38 We consider that the results from small and medium hospitals in our study can be explained by looking at the organisational infrastructure necessary to meet the hand hygiene and accreditation requirements. Both are dependent on a widespread organisational response in terms of education, monitoring, infrastructure and management involvement.6 Achieving higher infection control scores requires additional benchmarking, feedback and research capabilities.7 8 The organisational size effect suggests that small-sized and medium-sized hospitals can effectively embrace multimodal quality improvement strategies as seen by the higher compliance rates. However, the requirements for achieving high infection control scores within an accreditation survey may be measuring different aspects of quality that are not reflected in the hand hygiene compliance rates. The results indicate that smaller hospitals are able to focus on the practical implementation of a national hand hygiene policy. Having the resources to meet the requirements for higher infection control scores, in terms of conducting research or being recognised leaders in infection control, may not be practical for these smaller organisations. Although some smaller hospitals will be accredited as part of a larger cluster of hospitals, which includes principal and large hospitals, this is not always the case. Our hypothesis that higher accreditation scores would be reflected in hand hygiene rates appears to be confounded by an accreditation programme that makes it more difficult for smaller hospitals to achieve high infection control scores.

This study uses one indicator for evaluating accreditation, whereas multiple measures may be more effective.39 For example, outcome indicators are widely used in the US hospital system,40–42 and include hospital-acquired Staphylococcus aureus bacteraemia (SAB) rates and surgical site infection rates. These incorporate a broader mix of the antimicrobial, hand hygiene and specialist cleaning practice modules of the infection control standard. Using outcome indicators would complete Donabedian's triad of performance measures to include structural (accreditation results), process (hand hygiene compliance rates) and outcome (S. aureus infection rates) measures.43 44

Measurement issues in our study include a possible observer effect since the hospital staff might be aware that the audit was taking place. However, although this may increase the compliance rate, the results would still be valid as the standards include requirements for staff education and installation of appropriate infrastructure. Increased rates during an audit imply that the correct infrastructure is in place, in terms of availability of functioning hand washing stations, and that the staff are aware of the hand hygiene policies. In addition, any observer effect is likely to be mitigated as all the hospitals used the same methods for collecting data and thus are equally subjected to this bias. Other methods to measure hand hygiene activity include measuring consumption rates of hand hygiene products, such as alcohol rubs,45 and electronic systems for monitoring compliance,46 but the WHO guidelines suggest that direct observation is still the gold standard.40 We also note that although accreditation surveys are assessed on a five-point scale, only two scores (MA and EA) were used in the infection control standard during the four surveys in our study. The lack of granularity in the accreditation scores makes it difficult to differentiate accreditation performance. Intersurveyor reliability is recognised as a limitation of audit systems that are based on subjective assessments.47 To reduce idiosyncratic scoring, ACHS surveyors need to provide evidence to ACHS for their scoring methods, and in the decision to award higher scores, but some variation between surveyors may remain.

Limitations of the model include reverse causality: higher compliance rates could lead to higher infection control scores at the next survey if hand hygiene audit rates are used as evidence of implementation during an accreditation survey. This is likely to become more relevant, as ACSQHC includes hand hygiene audit results as part of the evidence of implementation of standard 3 under the new national standards.9 48

Implications

Different indicators will give different perspectives on how an organisation approaches and implements relevant policy. However, the costs of measurement in healthcare need to be weighed against the benefits of using a range of indicators to capture a broader mix of infection control policies across the different standards assessed during accreditation surveys. Indicator selection should include both process indicators, recognised as a method of measuring organisational change,49 and outcome indicators. Public reporting of indicator data further increases the need to accurately identify and measure the parts of the patient safety and quality spectrum that are being addressed. In this study, a focus on accreditation results would underestimate the successful implementation of the hand hygiene policy by smaller hospitals. Conversely, relying on hand hygiene results alone would underestimate the research and leadership investment in infection control by larger hospitals. Disentangling these two outcomes within the same safety and quality initiative is a prerequisite to understanding how they can be effectively assessed and monitored. For example, consideration could be given to changing the criteria for awarding higher infection control scores so that they are evidence based and attainable by hospitals of all sizes. Although we focus on Australian hospitals in our study, international accreditation programmes will also need to ensure that indicators accurately capture outcomes and reflect performance across a range of hospital sizes and types.

Conclusion

Identifying indicators to measure the effectiveness of accreditation is challenging due to the complexity of implementing a wide range of accreditation-related processes across multiple hospital activities. Our results do not support our study hypothesis that high infection control scores are associated with higher hand hygiene rates. Instead, this study suggests that accreditation outcomes and hand hygiene audit data measure different parts of the quality and safety spectrum. Developing a framework to identify suitable indicators is an important contribution to understanding the impact of hospital accreditation internationally. Policymakers need to appreciate the assumptions behind the choice of indicators and understand exactly what is being measured to ensure that key performance indicators encourage quality improvements in the delivery of hospital services.

Supplementary Material

Acknowledgments

This study forms part of the Accreditation Collaborative for the Conduct of Research, Evaluation and Designated Investigations through Teamwork (ACCREDIT) project. The ACCREDIT collaboration involves researchers in the Centre for Clinical Governance Research and Centre for Health Systems and Safety Research in the Australian Institute of Health Innovation at the University of New South Wales, Australia. The ACCREDIT research team benefits from a high-profile international advisory group containing researchers in health safety and quality from the UK, Spain and Sweden. The collaboration includes two leading health safety and quality bodies (ACSQHC and the NSW Clinical Excellence Commission) plus three of the major Australian health services accreditation agencies: ACHS, Australian General Practice Accreditation Limited, and the Australian Aged Care Quality Agency. The authors would like to thank ACHS and the NSW Clinical Excellence Commission for providing data for the study. They would also like to acknowledge the feedback received on the first draft of the paper from the PhD group at the Centre for Health Economics Research and Evaluation at the University of Technology Sydney, in particular: Professor Marion Haas; Dr Rebecca Reeve and Ms Bonny Parkinson.

Footnotes

Contributors: JB, DG, JW and VM contributed to the concept and design of the study. VM, JB and DG contributed to the acquisition of data. EG contributed to the model specification and model analysis, and to the drafting of the results sections. KF and AH contributed to the data collection and analysis, and to the drafting of the methods section. VM wrote the first draft of the manuscript. JB and DG provided a critical revision of the first draft. JW contributed to the drafting of the discussion section. All authors reviewed and approved the final manuscript.

Funding: This research is supported by the National Health and Medical Research Council Program Grant 568612, and under the Australian Research Council's Linkage Projects scheme (project LP100200586). Industry partners (ACHS, Australian General Practice Accreditation Limited, and the Australian Aged Care Quality Agency) contributed to the project design and may provide assistance with its implementation. They may also contribute to data analysis, interpretation of findings and presentation of results. Final decision-making responsibility for all research activities, including the decision to submit research for publication, resides with UNSW.

Competing interests: None.

Ethics approval: The University of New South Wales Human Research Ethics Committee has approved this project (approval number HREC 10274).

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

References

1. Shaw CD, Braithwaite J, Moldovan M, et al. Profiling health-care accreditation organizations: an international survey. Int J Qual Health Care 2013;25:222–31.

2. Mumford V, Forde K, Greenfield D, et al. Health services accreditation: what is the evidence that the benefits justify the costs? Int J Qual Health Care 2013;25:606–20.

3. Groene O, Botje D, Suñol R, et al. A systematic review of instruments that assess the implementation of hospital quality management systems. Int J Qual Health Care 2013;25:525–41.

4. Australian Commission on Safety and Quality in Health Care. Australian safety and quality framework for health care, putting the framework into action: getting started. Sydney: ACSQHC, 2011.

5. van Harten WH, Casparie TF, Fisscher OAM. Methodological considerations on the assessment of the implementation of quality management systems. Health Policy 2000;54:187–200.

6. Braithwaite J, Greenfield D, Westbrook J, et al. Health service accreditation as a predictor of clinical and organisational performance: a blinded, random, stratified study. Qual Saf Health Care 2010;19:14–21.

7. Australian Council on Healthcare Standards. The ACHS EQuIP 4 guide: part 2—standards. Ultimo: ACHS, 2007.

8. Australian Council on Healthcare Standards (ACHS). EQuIP5 standards and criteria. Ultimo: ACHS, 2010.

9. Australian Commission on Safety and Quality in Health Care (ACSQHC). National safety and quality health service standards. Sydney: ACSQHC, 2012.

10. Productivity Commission. Public and private hospitals: research report. Canberra: Productivity Commission, 2009.

11. Klevens RM, Edwards JR, Richards C, et al. Estimating health care-associated infections and deaths in U.S. hospitals (2002). Public Health Rep 2007;122:160–6.

12. Zimlichman E, Henderson D, Tamir O, et al. Health care–associated infections: a meta-analysis of costs and financial impact on the US health care system. JAMA Intern Med 2013;173:2039–46.

13. Graves N, Weinhold D, Tong E, et al. Effect of healthcare-acquired infection on length of hospital stay and cost. Infect Control Hosp Epidemiol 2007;28:280–92.

14. Pittet D, Allegranzi B, Sax H, et al. Evidence-based model for hand transmission during patient care and the role of improved practices. Lancet Infect Dis 2006;6:641–52.

15. Grayson ML, Jarvie LJ, Martin R, et al. Significant reductions in methicillin-resistant Staphylococcus aureus bacteraemia and clinical isolates associated with a multisite, hand hygiene culture-change program and subsequent successful statewide roll-out. Med J Aust 2008;188:633–40.

16. Pincock T, Bernstein P, Warthman S, et al. Bundling hand hygiene interventions and measurement to decrease health care-associated infections. Am J Infect Control 2012;40(4 Suppl):S18–27.

17. Johnson P, Martin R, Burrell LJ, et al. Efficacy of an alcohol/chlorhexidine hand hygiene program in a hospital with high rates of nosocomial methicillin-resistant Staphylococcus aureus (MRSA) infection. Med J Aust 2005;183:509–14.

18. World Health Organization. WHO guidelines on hand hygiene in health care: a summary. First global patient safety challenge—clean care is safer care. Geneva, Switzerland: World Health Organization, 2009.

19. Gould DJ, Moralejo D, Drey N, et al. Interventions to improve hand hygiene compliance in patient care. Cochrane Database Syst Rev 2010;(9):CD005186.

20. Backman C, Zoutman DE, Marck PB. An integrative review of the current evidence on the relationship between hand hygiene interventions and the incidence of health care-associated infections. Am J Infect Control 2008;36:333–48.

21. ACSQHC. Surveillance of healthcare associated infections: Staphylococcus aureus bacteraemia & Clostridium difficile infection—version 4.0. Sydney: Australian Commission on Safety and Quality in Health Care, 2012.

22. World Health Organization. A guide to the implementation of the WHO multimodal hand hygiene improvement strategy. Geneva: WHO, 2009.

23. Hand Hygiene Australia. Hand Hygiene Australia manual: 5 moments for hand hygiene. 3rd edn. Heidelberg, VIC: Commonwealth of Australia, 2013.

24. Grayson L, Hunt C, Johnson P, et al. Hand hygiene. In: Cruickshank M, Ferguson J, eds. Reducing harm to patients from health care associated infection: the role of surveillance. Sydney: Australian Commission on Safety and Quality in Health Care, 2008:259–73.

25. Australian Institute of Health and Welfare. Australian hospital statistics 2011–12. Health services series no. 50. Cat. no. HSE 134. Canberra: AIHW, 2013.

26. ACSQHC. Australian guidelines for the prevention and control of infection in healthcare. Canberra: Australian Government, NHMRC & the Australian Commission on Safety and Quality in Healthcare, 2010.

27. Clinical Excellence Commission. Clinical excellence commission strategic plan 2012–2015. Sydney: CEC, 2012.

28. Australian Council on Healthcare Standards. National report on health services accreditation performance 2003–2006. Ultimo: ACHS, 2007.

29. Commonwealth of Australia. National health reform: progress and delivery. Canberra: Department of Health and Ageing, 2011.

30. Independent Hospital Pricing Authority. National hospital cost data collection, Australian public hospitals cost report 2010–2011, round 15. Sydney: IHPA, 2013.

31. Garson D. Fundamentals of hierarchical linear and multilevel modeling. In: Garson D, ed. Hierarchical linear modeling: guide and applications. Sage Publications Inc, 2013:3–25.

32. Rabe-Hesketh S, Skrondal A. Multilevel and longitudinal modelling using Stata. 3rd edn. College Station: Stata Press, 2012.

33. Stata Statistical Software: Release 12 [program]. College Station, TX: StataCorp LP, 2011.

34. Allison PD. Using panel data to estimate the effects of events. Sociol Methods Res 1994;23:174–99.

35. Grayson ML, Russo PL, Cruickshank M, et al. Outcomes from the first 2 years of the Australian National Hand Hygiene Initiative. Med J Aust 2011;195:615–19.

36. Larson EL, Quiros D, Lin SX. Dissemination of the CDC's Hand Hygiene Guideline and impact on infection rates. Am J Infect Control 2007;35:666–75.

37. Playford EG, McDougall D, McLaws M-L. Problematic linkage of publicly disclosed hand hygiene compliance and health care-associated Staphylococcus aureus bacteraemia rates. Med J Aust 2012;197:29–30.

38. Australian Commission on Safety and Quality in Health Care. National core, hospital-based outcome indicator specification, Version 1.1—CONSULTATION DRAFT. Sydney: ACSQHC, 2012.

39. Brown C, Hofer T, Johal A, et al. An epistemology of patient safety research: a framework for study design and interpretation. Part 1. Conceptualising and developing interventions. Qual Saf Health Care 2008;17:158–62.

40. World Health Organization. Automated/electronic systems for hand hygiene monitoring: a systematic review. Geneva, Switzerland: WHO, 2013.

41. The Joint Commission. Improving America's hospitals: The Joint Commission's annual report on quality and safety. Oakbrook Terrace, IL: The Joint Commission, 2013.

42. Agency for Healthcare Research and Quality. AHRQ quality indicators: inpatient quality indicators. Rockville: AHRQ, 2012.

43. Donabedian A. The quality of care: how can it be assessed? JAMA 1988;260:1743–8.

44. Mountford J, Shojania KG. Refocusing quality measurement to best support quality improvement: local ownership of quality measurement by clinicians. BMJ Qual Saf 2012;21:519–23.

45. Behnke M, Gastmeier P, Geffers C, et al. Establishment of a national surveillance system for alcohol-based hand rub consumption and change in consumption over 4 years. Infect Control Hosp Epidemiol 2012;33:618–62.

46. Boyce JM. Measuring healthcare worker hand hygiene activity: current practices and emerging technologies. Infect Control Hosp Epidemiol 2011;32:1016–28.

47. Greenfield D, Pawsey M, Naylor J, et al. Are healthcare accreditation surveys reliable? Int J Health Care Qual Assur 2009;22:105–16.

48. Australian Commission on Safety and Quality in Health Care. Safety and quality improvement guide, standard 3: preventing and controlling healthcare associated infections. Sydney: ACSQHC, 2012.

49. Mant J. Process versus outcome indicators in the assessment of quality of health care. Int J Qual Health Care 2001;13:475–80.
