Abstract

Imaging utilization in emergency departments (EDs) has increased significantly. More than half of the 1.2 million patients with mild traumatic brain injury (MTBI) presenting to US EDs receive head CT. While evidence-based guidelines can help emergency clinicians decide whether to obtain head CT in these patients, adoption of these guidelines has been highly variable. Promulgation of imaging efficiency guidelines by the National Quality Forum has intensified the need for performance reporting, but measuring adherence to these imaging guidelines currently requires labor-intensive and potentially inaccurate manual chart review. We implemented clinical decision support (CDS) based on published evidence to guide emergency clinicians towards appropriate head CT use in patients with MTBI and to automate capture of the data needed for unambiguous guideline adherence metrics. Implementation of the CDS was associated with a 56% relative increase in documented adherence to evidence-based guidelines for imaging in ED patients with MTBI.

Keywords: Decision Support Systems, Clinical; Computerized Physician Order Entry System; Electronic Health Records; Evidence-Based Practice; Radiology

Background

The use of CT in the emergency department (ED) increased sixfold from 1995 to 2007.1 Although the rate of growth may have slowed recently, the absolute numbers of such examinations are large.2 CT imaging provides timely and efficient diagnoses for trauma and complex diseases, but inappropriate use contributes to waste and potential patient harm from unnecessary radiation exposure.3 4 A number of evidence-based guidelines exist for appropriate CT use,5–7 and policymakers, including the Centers for Medicare and Medicaid Services, are developing reporting measures for imaging efficiency to assess physician adherence to such evidence, which may eventually be used for reimbursement.8

Patients with mild traumatic brain injury (MTBI) are those who have had traumatically induced disruption of brain function, manifested by at least one of the following: loss of consciousness, amnesia, alteration of mental state, or a focal neurologic deficit that may or may not be transient.9 Further, patients defined as having MTBI cannot have had loss of consciousness for >30 min, an initial Glasgow Coma Scale10 <13 (range 3–15), or post-traumatic amnesia >24 h.9
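The definition above combines one inclusion criterion (at least one manifestation of disrupted brain function) with three exclusion thresholds. A minimal Python sketch of that logic, assuming a hypothetical `Presentation` record whose field names are ours and do not come from any EMR or from the CDS described here:

```python
from dataclasses import dataclass


@dataclass
class Presentation:
    """Hypothetical record of the findings named in the MTBI definition."""
    loss_of_consciousness: bool
    loc_minutes: float          # duration of loss of consciousness (0 if none)
    amnesia: bool
    altered_mental_state: bool
    focal_neuro_deficit: bool   # transient or persistent
    initial_gcs: int            # Glasgow Coma Scale, range 3-15
    pta_hours: float            # duration of post-traumatic amnesia (0 if none)


def meets_mtbi_definition(p: Presentation) -> bool:
    """Return True if the presentation fits the MTBI definition described above."""
    if not 3 <= p.initial_gcs <= 15:
        raise ValueError("Glasgow Coma Scale must lie in the range 3-15")
    # Inclusion: at least one manifestation of disrupted brain function.
    has_manifestation = (
        p.loss_of_consciousness
        or p.amnesia
        or p.altered_mental_state
        or p.focal_neuro_deficit
    )
    # Exclusions: LOC > 30 min, initial GCS < 13, or PTA > 24 h.
    excluded = p.loc_minutes > 30 or p.initial_gcs < 13 or p.pta_hours > 24
    return has_manifestation and not excluded
```

Note that the thresholds are strict: a GCS of exactly 13 or post-traumatic amnesia of exactly 24 h does not exclude a patient.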

MTBI accounts for more than 1.2 million visits to US EDs annually, with 63% resulting in head CTs.11 While up to 15% of ED patients with MTBI have an acute finding on CT, <1% require neurosurgical intervention.12 Although evidence-based guidelines may help emergency physicians decide whether to obtain a head CT for the evaluation of MTBI,5 6 13 14 their adoption into practice has been highly variable. A survey of US emergency physicians found that only 30% were aware of one well-validated guideline; only 12% reported using it clinically.15 One-third of emergency physicians cited forgetting guideline details in clinical practice.16

In 2008, the American College of Emergency Physicians published a clinical policy on neuroimaging and decision-making for adult head trauma in the ED.17 Subsequently, the National Quality Forum endorsed similar consensus standards in 2012 to promote quality and efficiency in medical imaging delivery.18 In 2013, the Choosing Wisely campaign disseminated recommendations to reduce unnecessary testing, including head CT imaging for MTBI based on validated decision rules.19 Despite these attempts, it has been difficult to promote guidelines and measure adherence. Current methods to assess guideline adherence require labor-intensive manual chart review to collect granular clinical data.

Clinical decision support (CDS) integrated with computerized provider order entry (CPOE) has been shown to decrease utilization, and improve yield, of ED CT imaging.20 CDS implementation has been mandated by the Health Information Technology for Economic and Clinical Health Act and federal meaningful use requirements as a tool to promote evidence-based practice.21–23 Therefore, we aimed to determine the impact of an electronic CDS tool, based on validated evidence, designed to guide emergency clinician decision-making for use of head CT for patients with MTBI. We hypothesized that health information technology in the form of CPOE-integrated CDS can be used as a tool to measure and improve documented adherence to evidence-based guidelines.

Methods

Setting and population

The requirement to obtain informed consent was waived by the institutional review board for this Health Insurance Portability and Accountability Act (HIPAA)-compliant, prospective study, performed in the ED of a 793-bed, urban, academic level 1 trauma center. We evaluated the 27-month periods before and after the month in which a CDS tool based on the New Orleans Criteria, the Canadian CT Head Rule, and the CT in Head Injury Patients Prediction Rule was implemented.5–7 Using the same inclusion and exclusion criteria as these decision rules, we included all ED patients for whom clinicians ordered CTs for MTBI during the baseline (1 August 2007 to 31 October 2009) and post-intervention (1 December 2009 to 29 February 2012) periods. Because our ED is a level 1 trauma center, it uses multi-body-part trauma CT combinations; these were excluded from the MTBI CDS.
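As a quick arithmetic check that both study windows span 27 calendar months, with November 2009 (the implementation month) falling between them, a small Python sketch; the helper name is ours:

```python
from datetime import date


def months_inclusive(start: date, end: date) -> int:
    """Count calendar months from start's month through end's month, inclusive."""
    return (end.year - start.year) * 12 + (end.month - start.month) + 1


baseline_months = months_inclusive(date(2007, 8, 1), date(2009, 10, 31))
post_months = months_inclusive(date(2009, 12, 1), date(2012, 2, 29))
print(baseline_months, post_months)
```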

CDS intervention

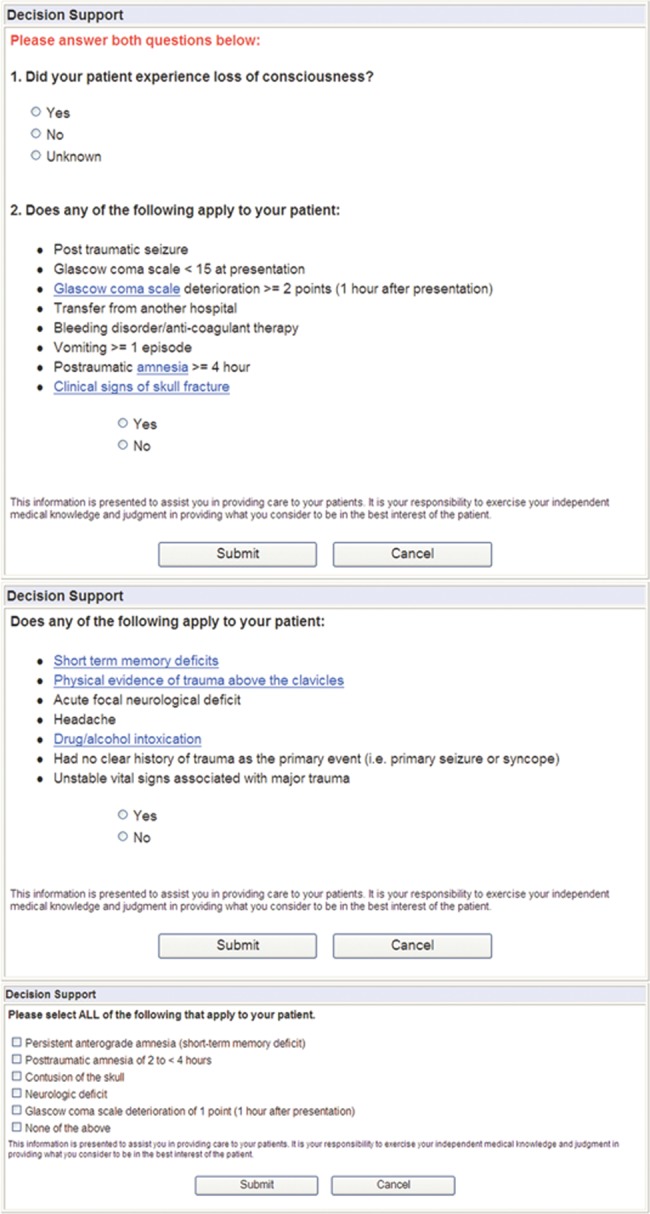

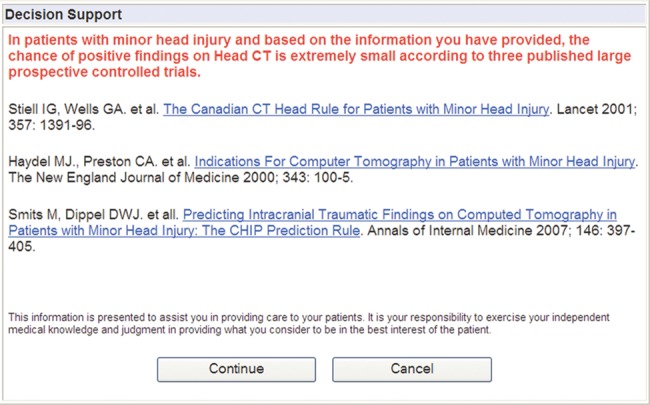

All imaging orders at our institution are placed via a web-based CPOE system (Percipio; Medicalis Corp, San Francisco, California, USA); implementation details have been described previously.24 The intervention consisted of electronic CDS that launched when a head CT for MTBI was ordered. The requesting clinician was required to enter data into CDS screens designed specifically to capture the evidence-based criteria needed to justify imaging (figure 1). If, based on the entered data, the order met the criteria of at least one guideline, no CDS recommendation was made and the request proceeded. For orders that met the criteria of none of the three guidelines, the CDS recommended against CT imaging and presented the clinician with references (figure 2). The clinician could then either ignore the advice and proceed with imaging, or cancel the order.
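The decision flow above is an "any rule passes" check followed by a purely advisory recommendation. A minimal Python sketch, assuming each decision rule can be reduced to a yes/no predicate over the clinician's structured answers; the types, names, and toy age-only predicates are hypothetical and are not the Medicalis implementation or complete encodings of the published rules:

```python
from enum import Enum
from typing import Callable, Mapping

# Hypothetical types: structured CDS answers keyed by finding name, and each
# decision rule reduced to a yes/no predicate over those answers.
Answers = Mapping[str, object]
Rule = Callable[[Answers], bool]


class CdsOutcome(Enum):
    PROCEED = "order proceeds with no recommendation"
    RECOMMEND_AGAINST = "CDS recommends against CT and shows references"


def evaluate_order(answers: Answers, rules: Mapping[str, Rule]) -> CdsOutcome:
    """Pass silently if at least one rule justifies CT; otherwise advise against."""
    if any(rule(answers) for rule in rules.values()):
        return CdsOutcome.PROCEED
    # Advisory only: the clinician may still proceed with imaging or cancel.
    return CdsOutcome.RECOMMEND_AGAINST


# Toy placeholder predicates using only the age criteria quoted in the text;
# they are NOT complete encodings of the published rules.
rules = {
    "New Orleans Criteria": lambda a: a.get("age", 0) > 60,
    "Canadian CT Head Rule": lambda a: a.get("age", 0) >= 65,
    "CT in Head Injury Patients rule": lambda a: a.get("age", 0) > 60,
}
```

Keeping each rule as a separate predicate mirrors the "at least one guideline" logic: adding or revising a rule does not change the evaluation step.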

Figure 1.

Screenshots of electronic clinical decision support data collection screens.

Figure 2.

Electronic clinical decision support output for non-adherent studies.

Developing this CDS required harmonizing multiple validated prediction rules for MTBI in order to maximize sensitivity. Harmonization involved reviewing the three decision rules for overlap and incorporating risk factors from each rule into the CDS as guideline-adherent indications for performing head CT. Where the rules contained similar criteria, we chose the most sensitive. For example, both the New Orleans Criteria6 and the CT in Head Injury Patients Prediction Rule7 identify age above 60 years as a risk factor, whereas the Canadian CT Head Rule5 identifies age 65 or greater; in this case, performing a head CT for patients above 60 years old was considered guideline adherent. Without a CPOE-integrated CDS tool, assessing head CT appropriateness against each of these rules would have required complex clinical input and would have been cumbersome for clinicians to remember and document.
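The "most sensitive criterion wins" step can be illustrated for the age example. A minimal Python sketch, assuming ages are recorded in whole years and encoding each rule's age criterion as a hypothetical `(threshold, strict)` pair taken from the text above:

```python
from typing import Dict, Tuple

# Hypothetical encoding of each rule's age criterion as (threshold, strict),
# where strict=True means "age > threshold" and False means "age >= threshold".
# Values follow the text above; ages are assumed to be whole years.
AGE_CUTOFFS: Dict[str, Tuple[int, bool]] = {
    "New Orleans Criteria": (60, True),
    "CT in Head Injury Patients Prediction Rule": (60, True),
    "Canadian CT Head Rule": (65, False),
}


def lowest_qualifying_age(threshold: int, strict: bool) -> int:
    """Smallest whole-year age that satisfies the criterion."""
    return threshold + 1 if strict else threshold


def harmonize_age(cutoffs: Dict[str, Tuple[int, bool]]) -> int:
    """Most sensitive choice: flag risk at the youngest age any rule flags."""
    return min(lowest_qualifying_age(t, s) for t, s in cutoffs.values())


def age_is_adherent_indication(
    age_years: int, cutoffs: Dict[str, Tuple[int, bool]] = AGE_CUTOFFS
) -> bool:
    """True if age alone makes head CT guideline adherent after harmonization."""
    return age_years >= harmonize_age(cutoffs)
```

A 63-year-old is flagged by the harmonized criterion even though that age would not meet the Canadian cutoff alone; this is the sensitivity-for-specificity trade-off acknowledged in the Discussion.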

Data collection

We included a random sample of 200 head CTs on patients with MTBI from each of the baseline and intervention periods. For the baseline period, we initially identified all patients who had a trauma-related head CT based on information entered into the CPOE system. From this list of patients, we subsequently performed random chart reviews until we identified 200 patients with MTBI, based on inclusion criteria listed above. Any visits that were not unambiguously associated with MTBI presentation were excluded. The intervention period's CDS implementation allowed identification of patients with MTBI without the need for chart review. Two attending physician abstractors reviewed the electronic medical records (EMRs) of the patients associated with these orders to determine documented guideline adherence for head CT evaluation in patients with MTBI. Differences were reconciled via discussion and consensus. In the baseline period, the abstractors conducted a structured implicit review of clinician notes in the EMR. If the notes lacked sufficient documentation to determine adherence, the CT was considered non-adherent. As the post-intervention period included the granular clinical data collected from the CDS tool, documented guideline adherence was measured directly from data input into the CDS, without EMR abstraction. To evaluate the accuracy of the information entered by clinicians in the CDS, we manually reviewed a sample of clinician notes and calculated the concordance rates between data in the EMR and that entered into CDS.
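The baseline sampling procedure (reviewing randomly ordered trauma-related head CTs until 200 eligible MTBI visits are found) can be sketched in Python as follows. `is_eligible` is a hypothetical stand-in for manual chart review, and the fixed seed is only for reproducibility:

```python
import random
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def sample_until(candidates: Sequence[T], is_eligible: Callable[[T], bool],
                 target: int, seed: int = 0) -> List[T]:
    """Review candidates in random order until `target` eligible ones are found.

    `is_eligible` stands in for manual chart review (hypothetical here).
    Raises ValueError if the pool runs out before the target is reached.
    """
    order = list(candidates)
    random.Random(seed).shuffle(order)
    selected: List[T] = []
    for item in order:
        if is_eligible(item):
            selected.append(item)
            if len(selected) == target:
                return selected
    raise ValueError(f"only {len(selected)} eligible candidates found")
```

Visits judged ineligible (including those not unambiguously associated with MTBI) are simply skipped, so the number of charts reviewed can exceed 200.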

Outcome measures and statistical analyses

The primary outcome measure was documented adherence to evidence-based guidelines for use of head CT for ED patients with MTBI, assessed in the random sample of head CTs. The study was powered to detect a 15% effect size (power=0.8, α=0.05) with an estimated baseline adherence rate of 40%, yielding a target sample size of 400 records (200 in each group) for the primary outcome. The secondary outcome measure was concordance of adherence documentation between the CDS tool and the clinical note in the EMR. Whereas the CDS tool enforced granular data collection, sufficient comparable data were often lacking in the EMR clinical note; we therefore assumed that an imaging order was adherent if at least one hard sign justifying head CT for MTBI was documented in the clinical note. Analyses were performed using Microsoft Excel 2008 and JMP Pro V.10. χ2 tests were used to compare proportions between the baseline and post-intervention periods. A two-tailed p value of <0.05 was considered significant.
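The sample size statement can be checked with the standard normal-approximation formula for comparing two proportions. This is a sketch under an assumed reading of "15% effect size" as an absolute increase of 15 percentage points (40% to 55%) with a two-sided test; the authors do not state which formula they used:

```python
import math
from statistics import NormalDist


def n_per_group(p1: float, p2: float, alpha: float = 0.05, power: float = 0.8,
                continuity: bool = False) -> int:
    """Per-group sample size for a two-sided comparison of two proportions.

    Uses the normal-approximation formula; optionally applies the Fleiss
    continuity correction.
    """
    z_a = NormalDist().inv_cdf(1 - alpha / 2)
    z_b = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    d = abs(p2 - p1)
    n = (z_a * math.sqrt(2 * p_bar * (1 - p_bar))
         + z_b * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2 / d ** 2
    if continuity:
        n = n / 4 * (1 + math.sqrt(1 + 4 / (n * d))) ** 2
    return math.ceil(n)


# Assumed reading: 40% baseline adherence, 15 percentage-point absolute increase.
print(n_per_group(0.40, 0.55), n_per_group(0.40, 0.55, continuity=True))
```

Under these assumptions both the uncorrected and continuity-corrected figures fall below 200 per group, so the planned 200 records per period leave some margin.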

Results

Patient population

Of 249 014 ED visits during the study period, 19 726 (7.9%) were associated with a head CT (for any indication). Before CDS implementation, the CPOE system captured imaging indications for trauma but not the granular data needed to unambiguously identify MTBI; therefore, we could not determine the rate of head CTs specifically for MTBI. The mean age of patients receiving head CTs was significantly lower in the baseline period (57.8 years, 95% CI 57.4 to 58.2) than in the post-intervention period (60.0 years, 95% CI 59.6 to 60.4) (p<0.05), but there was no significant difference in the proportion of men and women receiving head CTs (45.4% and 46.5% of CTs were performed in men during the baseline and post-intervention periods, respectively; p=0.11).

Documented guideline adherence and concordance

During the baseline period, documented guideline adherence, as assessed by manual chart review, was 49.0% (98/200). After CDS implementation, documented guideline adherence, evaluated using data entered into the CDS, increased to 76.5% (153/200). This absolute increase of 27.5 percentage points (a 56.1% relative increase) was significant (p<0.001).
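The reported effect sizes and significance can be re-derived from the counts (98/200 vs 153/200). A minimal Python sketch of a 2×2 Pearson χ2 test with an optional Yates correction; this is our own implementation for illustration, not the authors' Excel/JMP workflow:

```python
import math


def two_by_two_chi2(a: int, n1: int, b: int, n2: int, yates: bool = False):
    """Pearson chi-square (1 df) comparing proportions a/n1 and b/n2."""
    table = [[a, n1 - a], [b, n2 - b]]
    total = n1 + n2
    col_totals = [a + b, total - a - b]
    row_totals = [n1, n2]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            diff = abs(table[i][j] - expected)
            if yates:
                diff = max(diff - 0.5, 0.0)
            chi2 += diff ** 2 / expected
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


baseline, post = 98 / 200, 153 / 200
absolute = post - baseline           # absolute increase, in proportion units
relative = absolute / baseline       # increase relative to baseline
chi2, p = two_by_two_chi2(98, 200, 153, 200)
```

With or without the continuity correction, the p value is well below 0.001, consistent with the result reported above.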

Among the 50 charts reviewed, overall concordance for documented guideline adherence between manual chart review and electronic CDS data entry was 90% (45/50; table 1). Thirty-five studies (70%) were adherent by both CDS and manual review. Ten studies (20%) were non-adherent by CDS and lacked adequate documentation on manual review to determine adherence, so all 10 were categorized as non-adherent by both methods. The remaining five studies (10%) were discordant: four met adherence criteria on manual review but not by CDS, and one met adherence criteria by CDS but lacked adequate documentation on manual chart review.

Table 1.

Concordance of manual clinician note review compared with electronic clinical decision support (CDS) in determining guideline adherence (n=50)

| Electronic CDS review | Manual review: adherent | Manual review: not adherent | Manual review: incomplete data | Total |
|---|---|---|---|---|
| Adherent | 35 | 0 | 1 | 36 |
| Not adherent | 4 | 0 | 10 | 14 |
| Incomplete data | 0 | 0 | 0 | 0 |
| Total | 39 | 0 | 11 | 50 |
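Overall concordance can be computed directly from the table 1 counts once incomplete documentation is scored as non-adherent, as described in the Methods. A minimal Python sketch; the dictionary layout and helper names are ours:

```python
# Table 1 counts: outer keys = electronic CDS classification,
# inner keys = manual clinician note review classification.
TABLE_1 = {
    "adherent": {"adherent": 35, "not adherent": 0, "incomplete": 1},
    "not adherent": {"adherent": 4, "not adherent": 0, "incomplete": 10},
    "incomplete": {"adherent": 0, "not adherent": 0, "incomplete": 0},
}


def is_adherent(category: str) -> bool:
    """Binary adherence, treating incomplete documentation as non-adherent."""
    return category == "adherent"


def concordance(table: dict) -> float:
    """Share of studies with the same binary adherence call by both methods."""
    total = agree = 0
    for cds_category, row in table.items():
        for manual_category, count in row.items():
            total += count
            if is_adherent(cds_category) == is_adherent(manual_category):
                agree += count
    return agree / total
```

Collapsing the three-way table this way yields 45 concordant and 5 discordant studies.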

Discussion

Integrating CDS into a CPOE system improved documented guideline adherence for use of head CT in evaluating ED patients with MTBI. Compared with baseline, documented guideline adherence after CDS implementation improved by 27.5 percentage points.

Documenting clinical information is critical for improving healthcare delivery, communication among caregivers, reimbursement, patient safety, and, increasingly, measuring individual clinician and health system performance against established best practices. Many public forums report guideline adherence as a quality measure for the purpose of comparison.19 25 Although clinical practice guidelines have been promoted to improve quality of care,26–29 accurately measuring adherence to guideline-based metrics can be difficult and potentially lead to erroneous conclusions.30 31 A recent study assessing referral information for chest CT pulmonary embolus imaging requests found that documented relevant clinical information on requests was lacking and only 0.4% of requests contained adequate documentation to calculate a Wells score.32 The use of structured CDS can provide documentation of clinical attributes needed for unambiguous assessment of guideline adherence, which we found lacking in prose-form clinician notes. An alternate approach may be to create structured clinician notes to enforce granular data capture; however, such initiatives have not been broadly accepted or adopted in EMRs.33 34

In the baseline period, the vast majority of studies characterized as non-adherent actually lacked the granular documentation necessary to determine adherence. In particular, lack of documentation of specific variables did not equate to lack of symptomatology: if a clinician documented neither the presence nor the absence of a clinical finding, it is not possible to determine whether the finding was not evaluated or was truly absent. The CDS served as a prompt for the clinician to document information that may show the imaging order to be guideline adherent. Similarly, by eliciting comprehensive, relevant clinical information, the CDS improves the data the radiologist receives, potentially enhancing the quality of image interpretation.
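The distinction between an undocumented finding and a documented-absent one is essentially a three-valued variable. A minimal Python sketch, with hypothetical names, of how a chart-review status collapses under the baseline scoring rule described in the Methods (at least one documented hard sign means adherent; insufficient documentation means non-adherent):

```python
from enum import Enum
from typing import Iterable


class Finding(Enum):
    PRESENT = "documented present"
    ABSENT = "documented absent"
    NOT_DOCUMENTED = "not documented"


def classify(risk_factors: Iterable[Finding]) -> str:
    """Three-way status of one imaging order from its documented risk factors."""
    findings = list(risk_factors)
    if any(f is Finding.PRESENT for f in findings):
        return "adherent"          # at least one hard sign justifies CT
    if all(f is Finding.ABSENT for f in findings):
        return "non-adherent"      # every risk factor explicitly ruled out
    return "indeterminate"         # some factors never documented either way


def scored_as_adherent(risk_factors: Iterable[Finding]) -> bool:
    """Baseline rule: indeterminate documentation is scored as non-adherent."""
    return classify(risk_factors) == "adherent"
```

Under this scoring, an indeterminate order and a truly non-adherent order look identical in the final metric, which is why structured CDS data entry can raise documented adherence without any change in clinical behavior.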

Manual chart review is a resource-intensive process frequently fraught with incomplete documentation. Although the CDS tool automated and enforced collection of granular data, providing the clarity necessary to assess each imaging request's adherence to guidelines, it did increase the workflow burden on the ordering ED clinician, because additional screens and mouse clicks were required to submit a head CT order. In this study, we did not measure these additional time requirements or clinician satisfaction.

The CDS tool launches on the basis of data that clinicians enter into the CPOE system. Although this design allows clinicians to enter erroneous data to bypass intrusive CDS screens, previous literature indicates that clinicians largely use CDS as intended without attempting to ‘game’ the system.35 Consistent with this, we found only 10% of CDS-entered data to be discordant with manual review.

We designed our CDS tool to incorporate the leading evidence available at the time of implementation by combining three validated prediction rules. While our initial aim was to maximize sensitivity and prevent patient harm, we likely sacrificed specificity. Our approach led to clinician buy-in from both departments of radiology and emergency medicine and serves as a platform for iterative improvement.

Limitations

Our study was performed in a single academic setting with an established history of CPOE, CDS, and systematic quality improvement efforts, making its generalizability uncertain. However, as federal mandates for integrated EMRs increase, more commercial vendor systems are including tools such as CDS that could be similarly employed to enable documentation and measurement of national quality measures.

This study was not powered to measure CDS impact on utilization or yield of head CT for patients with MTBI. While previous studies have shown improvements in both metrics, this study's purpose was to assess documentation capabilities of a CDS tool for measuring adherence to established practice guidelines. Moreover, while documented adherence to evidence improved after CDS, we cannot assess the appropriateness of CT examinations performed. However, the documentation of care is measurable and increasingly a method used to assess physician performance.8 25

Finally, we were unable to identify reasons why physicians decided to over-ride the CDS and order a non-guideline-adherent head CT. Furthermore, we were unable to assess the clinical validity of physicians’ over-ride of the CDS recommendations from chart review, as accurately recreating the context of the clinical judgment from the medical record is subjective. Subsequent iterations of CDS implemented at our facility require physicians to provide a reason for over-riding CDS recommendations, which will aid assessment of appropriateness in future studies.

Conclusion

Implementing CDS significantly increased documented adherence to published evidence for imaging in ED patients with MTBI. The CDS tool also provided an efficient, unambiguous method for retrieving the data needed to compute adherence performance metrics, which can support iterative quality improvement projects. However, even after CDS implementation, one-fourth of CTs in ED patients with MTBI remained inconsistent with evidence, suggesting that further opportunities for performance improvement remain.

Acknowledgments

We would like to thank Laura E Peterson, BSN, SM for her assistance in editing this manuscript.

Footnotes

Contributors: All authors participated in research design, execution, analysis, and preparation of this manuscript.

Funding: This study was funded in part by Grant T15LM007092 from the National Library of Medicine and by Grant 1UC4EB012952-01 from the National Institute of Biomedical Imaging and Bioengineering.

Competing interests: The computerized physician order entry and clinical decision support systems used at our hospital and in this study were developed by Medicalis. Brigham and Women's Hospital and its parent entity, Partners Healthcare System, Inc, have equity and royalty interests in Medicalis and some of its products. RK is a consultant to Medicalis and is named on a patent held by Brigham and Women's Hospital that was licensed to Medicalis in 2000.

Ethics approval: Partners Healthcare Institutional Review Board.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Larson DB, Johnson LW, Schnell BM, et al. National trends in CT use in the emergency department: 1995–2007. Radiology 2011;258:164–73

- 2. Shinagare A, Ip I, Abbett S, et al. Inpatient imaging utilization: trends of the last decade. AJR Am J Roentgenol 2013. In press.

- 3. Brenner DJ, Hall EJ. Computed tomography—an increasing source of radiation exposure. N Engl J Med 2007;357:2277–84

- 4. Sodickson A, Baeyens PF, Andriole KP, et al. Recurrent CT, cumulative radiation exposure, and associated radiation-induced cancer risks from CT of adults. Radiology 2009;251:175–84

- 5. Stiell IG, Wells GA, Vandemheen K, et al. The Canadian CT Head Rule for patients with minor head injury. Lancet 2001;357:1391–6

- 6. Haydel MJ, Preston CA, Mills TJ, et al. Indications for computed tomography in patients with minor head injury. N Engl J Med 2000;343:100–5

- 7. De Haan GG, Dekker HM, Vos PE, et al. Predicting intracranial traumatic findings on computed tomography in patients with minor head injury: the CHIP prediction rule. Imaging Minor Head Inj 2008;146:55.

- 8. Imaging Measures [Internet]. [cited 2013 Jun 25]. http://www.imagingmeasures.com/

- 9. American Congress of Rehabilitation Medicine, Head Injury Interdisciplinary Special Interest Group. Definition of mild traumatic brain injury. J Head Trauma Rehabil 1993;8:86–7

- 10. Teasdale G, Jennett B. Assessment of coma and impaired consciousness. A practical scale. Lancet 1974;2:81–4

- 11. Mannix R, O'Brien MJ, Meehan WP. The epidemiology of outpatient visits for minor head injury. Neurosurgery 2013;73:129–34

- 12. Faul M, Xu L, Wald MM, et al. Traumatic Brain Injury in the United States: Emergency Department Visits, Hospitalizations and Deaths 2002–2006 [Internet]. Centers for Disease Control and Prevention, National Center for Injury Prevention and Control; 2010. [cited 2013 Feb 6]. http://www.cdc.gov/traumaticbraininjury/

- 13. Smits M, Dippel DW, de Haan GG, et al. External validation of the Canadian CT head rule and the New Orleans Criteria for CT scanning in patients with minor head injury. JAMA 2005;294:1519–25

- 14. Stiell IG, Clement CM, Rowe BH, et al. Comparison of the Canadian CT head rule and the New Orleans Criteria in patients with minor head injury. JAMA 2005;294:1511–18

- 15. Eagles D, Stiell IG, Clement CM, et al. International survey of emergency physicians’ awareness and use of the Canadian cervical-spine rule and the Canadian computed tomography head rule. Acad Emerg Med 2008;15:1256–61

- 16. Stiell IG, Bennett C. Implementation of clinical decision rules in the emergency department. Acad Emerg Med 2007;14:955–9

- 17. Jagoda AS, Bazarian JJ, Bruns JJ, et al. Clinical Policy: neuroimaging and decision making in adult mild traumatic brain injury in the acute setting. Ann Emerg Med 2008;52:714–48

- 18. NQF. National Voluntary Consensus Standards for Imaging Efficiency: A Consensus Report [Internet]. [cited 2013 Jan 25]. http://www.qualityforum.org/Publications/2012/01/National_Voluntary_Consensus_Standards_for_Imaging_Efficiency__A_Consensus_Report.aspx

- 19. Choosingwisely—An Initiative of the ABIM Foundation [Internet]. [cited 2013 Nov 10]. http://choosingwisely.org/

- 20. Raja AS, Ip IK, Prevedello LM, et al. Effect of computerized clinical decision support on the use and yield of CT pulmonary angiography in the emergency department. Radiology 2012;262:468–74

- 21. Blumenthal D. Launching HITECH. N Engl J Med 2010;362:382–5

- 22. Jha AK. Meaningful use of electronic health records: the road ahead. JAMA 2010;304:1709–10

- 23. Federal Register. Health information technology: standards, implementation specifications, and certification criteria for electronic health record technology, 2014 edn. Vol. 77 2012:54163–292

- 24. Ip IK, Schneider LI, Hanson R, et al. Adoption and meaningful use of computerized physician order entry with an integrated clinical decision support system for radiology: ten-year analysis in an urban teaching hospital. J Am Coll Radiol 2012;9:129–36

- 25. Hospital Compare [Internet]. [cited 2013 Jun 25]. http://www.medicare.gov/hospitalcompare/

- 26. Lugtenberg M, Burgers JS, Westert GP. Effects of evidence-based clinical practice guidelines on quality of care: a systematic review. Qual Saf Health Care 2009;18:385–92

- 27. Tierney WM. Improving clinical decisions and outcomes with information: a review. Int J Med Inform 2001;62:1–9

- 28. Hunt DL, Haynes RB, Hanna SE, et al. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA 1998;280:1339–46

- 29. Grimshaw JM, Russell IT. Effect of clinical guidelines on medical practice: a systematic review of rigorous evaluations. Lancet 1993;342:1317–22

- 30. Schuur JD, Brown MD, Cheung DS, et al. Assessment of Medicare's imaging efficiency measure for emergency department patients with a traumatic headache. Ann Emerg Med 2012;60:280–290.e4

- 31. Sox HC. Evaluating the quality of decisionmaking for diagnostic tests: a methodological misadventure. Ann Emerg Med 2012;60:291–2

- 32. Hedner C, Sundgren PC, Kelly AM. Associations between presence of relevant information in referrals to radiology and prevalence rates in patients with suspected pulmonary embolism. Acad Radiol 2013;20:1115–21

- 33. Rosenbloom ST, Denny JC, Xu H, et al. Data from clinical notes: a perspective on the tension between structure and flexible documentation. J Am Med Inform Assoc 2011;18:181–6

- 34. Linder JA, Schnipper JL, Middleton B. Method of electronic health record documentation and quality of primary care. J Am Med Inform Assoc 2012;19:1019–24

- 35. Gupta A, Raja AS, Khorasani R. Examining clinical decision support integrity: is clinician self-reported data entry accurate? J Am Med Inform Assoc 2014;21:23–6. doi: 10.1136/amiajnl-2013-001617