Abstract

The idea of continuously monitoring well-being using mobile-sensing systems is gaining popularity. In-situ measurement of human behavior has the potential to overcome the shortcomings of the gold-standard surveys that the medical community has used for decades. However, current sensing systems have mainly focused on tracking physical health; some have approximated aspects of mental health from proximity measurements, but these have not been compared against medically accepted screening instruments. In this paper, we show the feasibility of a multi-modal mobile sensing system that simultaneously assesses mental and physical health. By continuously capturing fine-grained motion and privacy-sensitive audio data, we derive metrics that reflect the results of surveys commonly used by the medical community to assess well-being. In addition, we present a case study that highlights how assessment errors caused by the subjective nature of survey responses could potentially be avoided by continuously sensing and inferring social interactions and physical activities.

Author Keywords: mental health, physical health, activity inference, mobile sensing, machine learning

ACM Classification Keywords: H.1.2 User/Machine Systems, I.5 Pattern Recognition, J.3 Life and Medical Sciences

INTRODUCTION

One of the pillars of population health is to improve overall quality of life by promoting cognitive, physical and mental well-being [1, 2]. Everyday behaviors are often reflective of physical and mental health and can be predictive of future health problems. The standard practice for collecting behavioral data in the health sciences relies on observational data collected in laboratory settings or through periodic recall surveys or self-reports. These proxy measures have several limitations: (i) the time and resource requirements are too high to simultaneously gather data from a large number of individuals; (ii) the measurements are prone to considerable bias and the manual and sporadic recording of information often fails to capture the finer details of behavior that may be important; and (iii) the end user effort is too high to be suitable for continuous long-term monitoring.

With medical costs continuing to rise, models that support screening and early diagnosis, together with increased efforts in prevention, are essential concerns for healthcare providers and administrators. Consequently, a growing number of studies are demonstrating the potential of behavior-monitoring devices to assist in one or more of these three clinical applications (screening, early diagnosis, and prevention) [3, 4, 5]. The ultimate vision is to develop a mobile sensing system that contributes significantly to cognitive, physical and mental well-being while maintaining easy and universal applicability, security, patient privacy protection, and low cost.

BACKGROUND

Monitoring physical activity and mental health has been extensively investigated in the past via a variety of traditional recall surveys or Ecological Momentary Assessments (EMA) [6]. Paper-based surveys like the Yale Physical Activity Survey (YPAS) [7], SF-36 [8], and Center for Epidemiological Studies - Depression (CES-D) [9] are examples of commonly accepted surveys and are some of the primary metrics for assessing physical and mental well-being in medicine. These paper-based surveys use recall techniques to capture daily, weekly, and seasonal patterns of behavior, but they may require in-person administration and are limited by recall bias, memory dependence, current mood, and their obtrusive nature [10, 11]. Furthermore, answers to paper-based survey questions are subjective, carry a risk of social desirability bias, and sometimes suffer from “backfilling” due to non-adherence to time-sensitive protocols (e.g., completing daily surveys at the end of the week [6]). Electronic survey tools such as digital diaries, smartphone and web-based surveys allow investigators to tailor and improve survey questions dynamically, reduce recall bias by collecting responses close in time to the experience, and avoid backfilling by digitally time-stamping submitted surveys. However, recall surveys and EMA, both paper-based and electronic, do not capture activities continuously, rely on subjects to be responsive, can be cumbersome, and may require periodic re-administration, all of which hinders their consistent use in the primary care setting.

More recently, a variety of sensor-based systems have emerged with great potential to circumvent the recall limitations and subjectivity of the aforementioned techniques. These systems differ in mobility, sensor placement, number of sensors, and continuity of data capture, and each has its own advantages and limitations. For example, rooms equipped with video cameras and RFID have been used to capture users' physical activity and sleep patterns [12]. Such systems usually capture only a portion of a user's daily life and can be prohibitively costly for mass use. In contrast, wearable single-sensor devices such as pedometers and monoaxial accelerometers have been used to measure physical activity continuously and unobtrusively while observing subjects in their natural environment [13, 14, 15]. Although useful, single-sensor systems are limited in the types of activities they can capture. To overcome this limitation, investigators have combined multiple sensors placed at different locations on the body to learn more about subject behavior [16]. These systems can capture a richer set of activities and can recognize them with higher accuracy. Another approach investigators have explored is the use of a single mobile device equipped with multiple types of sensors. Devices such as Actigraph [17], Sensewear [18], the Mobile Sensing Platform (MSP) [19] and the LifeShirt system (Vivometrics, Ventura, CA) [20] provide versatility by detecting modalities ranging from light and directional acceleration to physiological signals. These devices and new sensor-equipped smartphones have been used in a wide range of applications, from measuring physical activity and energy expenditure to providing context-aware instantaneous feedback with the goal of preventing future health complications.
Furthermore, a network of wearable sensors communicating with a central server has demonstrated the potential benefits of monitoring and assisting in the care of the aged, a rapidly growing segment of the population [21].

In contrast, the use of automated sensing techniques to assess mental health has been very limited. Mental illness costs $30.7 billion per year [22] in the US alone. Depression, a common psychiatric disorder, has a prevalence of 5–25% [23, 24] and contributes significantly to the cost of healthcare [25, 26]. Previous research has shown that increased social activity correlates negatively with depressive behavior and can even improve depressive symptoms [27, 28]. Others have used subjective methods to demonstrate that physical activity delays cognitive decline [29]. Some aspects of mental health have been approximated using proximity measurements [30]. We see three potential limitations of proximity-based approaches: (i) they cannot detect reductions in physical activity and speech, which limits the information available to clinicians for treating and managing mental dysfunction; (ii) interactions inferred from speech and from colocation have been shown to differ [31], so behavioral indicators of social isolation may be present but go undetected by colocation; and (iii) they have not been compared against medically approved screening techniques that are validated in the medical literature and used in practice. Nonetheless, purely proximity-based approaches may be a useful tool for screening for mental health risk, but we believe a detailed measure of behavior will be more useful to practitioners for diagnosis and is a worthy line of investigation. There is also a growing body of evidence supporting the use of acoustic properties of speech to detect changes in emotional health [32], variations in mood [33, 34] and periods of stress [35]. Furthermore, voice analysis can detect changes in verbal initiation (difficulty in starting sentences) and perseveration, characteristics highly associated with changes in mental health and with an inability to perform Instrumental Activities of Daily Living [36].
We believe these results, together with the fact that face-to-face conversation is still perceived as a common and important medium of social communication [37], make the continuous study of human speech in people's daily lives a valuable line of investigation for mental health assessment.

CONTRIBUTIONS

In this paper we present an automated system for measuring mental well-being from behavioral indicators in natural everyday settings, in addition to measuring physical well-being. We describe the sensing and activity recognition system and its deployment in a real-world setting of older adults. We assess its reliability, its feasibility in this population, and its validity against established health instruments.

We hypothesized that continuous recording of daily audio patterns, specifically the amount of human speech, would be linked to social and mental well-being. To address the challenges of survey implementation and to test our hypothesis, we used a previously developed mobile multimodal sensor platform for continuous and objective evaluation of mental health in a small group of elders. Specific contributions include:

Automatic analysis of the amount of speech occurring in natural settings, while avoiding raw audio collection to protect privacy. Correlations between the total amount of sensed human speech and well-established paper-based mental health surveys (CES-D score, SF-36, and the Friendship Scale) approached statistical significance for CES-D and were statistically significant for the SF-36 Mental Health Component and the Friendship Scale.

In addition to mental health, an overall physical activity score was computed as a weighted count of simple physical activity categories: stationary (sitting/standing), walking on a flat surface, and walking up and down inclines. This physical activity score showed a significant correlation with the Yale Physical Activity Survey (YPAS), a survey commonly used by the medical community.

Presentation of a case study that illustrates how automated sensing could potentially improve screening for mental health disorders and overcome some of the limitations of current paper-based surveys. Specifically, for a subject whose CES-D scores were well below the threshold indicating depressive symptoms, the sensor-based measurements agreed more closely with the observational assessments of the physician and the medical trainee on the research team.

STUDY OVERVIEW: ASSESSING MENTAL AND PHYSICAL WELL-BEING

Fine-grained sensor data were collected continuously from a group of older adults living in a continuing care retirement community. Located at the bottom of a hill, the retirement community includes a variety of facilities including a dining center, a library, group meeting rooms, and a fitness center. Apartment buildings surround the community center and are situated at a relatively higher elevation. Sloping roads connect the community center to the apartments.

Letters were sent to resident mailboxes and posted on major announcement boards across the retirement community requesting unpaid volunteers for a study on automatic sensing of mental and physical well-being. The team physician interviewed interested residents to establish their availability for the entire pilot and their comfort level with electronic devices; participants were selected on a first-come basis as a convenience sample. Eight self-selected adults were recruited: 4 singles and 2 couples, half male and half female. The pilot study ran for 10 consecutive days in August 2009 from 7am to 7pm; inter-individual variability in pickup and drop-off times was roughly ±2 hours. One participant withdrew one day before the start of the study for personal reasons unrelated to the study protocol. Investigators found a replacement but lost one day of observation for that subject due to the recruitment delay. All participants were informed of their right to leave the study at any time and were briefed on the Institutional Review Board (IRB) approved pilot design, its goals, and the extent of use of the collected data. All participants accepted the terms and conditions by signing a written informed consent form during face-to-face meetings with project investigators. Four surveys (CES-D, YPAS, SF-36, and Friendship Scale) [9, 7, 8, 38] were administered before and after the pilot. A usability survey was administered only after the study to evaluate participants' experience with the device and surveys and to collect comments on the technology's potential and on privacy concerns.

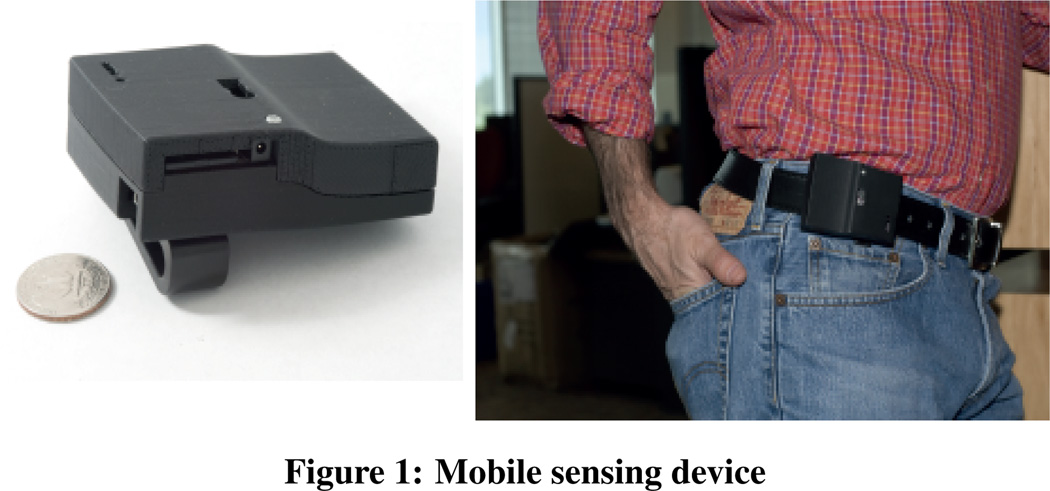

During data collection, each participant wore a 2-inch waist-mounted device equipped with multiple sensors: a 3-axis accelerometer, barometer, light sensors, temperature sensor, humidity sensor, compass and microphone. Each device had a clip so the subject could wear it comfortably around the waist. Participants were trained on basic usage of the device one day before the start of the pilot. For situations in which participants might feel uncomfortable being recorded, they were shown how to turn the device on and off and how to contact the medical trainee, who was always present on-site during the study. Acceptable ways of wearing the device were also demonstrated. Participants were instructed not to place the device in pockets or wet environments, as it is not waterproof. Participants received no instructions to change their daily routine and were allowed to travel outside the facility with the device. At the start and end of the protocol (i.e., on two different days), participants completed a series of activities (a short walk, walking up and down inclines) and engaged in casual conversation in different parts of the retirement community, amounting to about 80 minutes of total data (8–15 minutes per subject), so that recognition accuracy could be compared with previously published results [39, 40, 41, 42]. Devices were collected every evening, at which point data were extracted and batteries recharged. Participants were asked to check the recording LED occasionally and were instructed to seek assistance from onsite support or contact one of the principal investigators (a computer scientist and a family medicine physician) if there was an issue or the device stopped recording.

Raw audio was not recorded for privacy reasons (the recorded audio features are described in detail in the Overview of Speech and Conversation Detection section). Although the recorded features do not allow audio to be reconstructed afterwards, they enabled us to infer when human voice was present and whether a conversation was taking place. This information is also sufficient for estimating who was speaking when, and how a specific subject was speaking (energy and pitch) [41, 43]. During the study we learned that the privacy-sensitive audio data collection was very well accepted by users: not a single participant turned off the device, or sought assistance in turning it off, because of privacy concerns. It can be argued that we could have recorded raw audio for further analysis in such a pilot study. However, the privacy-sensitive features enabled us to collect data continuously and unobtrusively in very realistic environments. Furthermore, interesting analyses related to health and mental well-being remain possible using non-verbal aspects of speech (e.g., pitch, speaking rate, loudness), as supported by previous research [44, 45, 46]. In addition, the Health Insurance Portability and Accountability Act (HIPAA) provides strict guidelines on protecting patients' personal health information and privacy. Since this act prevents access to and use of health information without the explicit consent of all patients, recording raw health information becomes extremely challenging, because it is almost impossible to control when unconsented individuals in the background will be recorded. Even for our experiments, the management of the retirement community would not have approved continuous audio recording.
Although focused recording in pre-specified rooms may have been a possibility, it defeats the goal of our study, which aims to assess mental health from passive sensing of everyday speech in naturalistic conditions.

Overview of Speech and Conversation Detection

Audio is processed on-the-fly to extract and record features that are informative for inferring the presence and style of speech and conversations but not enough to reconstruct the words that are spoken. We utilize the privacy-sensitive speech processing methods developed in [47, 42]. Features that are recorded include: (i) non-initial maximum auto-correlation peak, (ii) the total number of auto-correlation peaks, and (iii) relative spectral entropy – these features have been shown to be particularly useful for detecting the structure of voiced speech and are more robust than energy based methods.
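The three recorded audio features can be illustrated with a minimal NumPy sketch for a single audio frame. The frame length, sampling rate, peak definition (a strict local maximum of the normalized autocorrelation, excluding lag 0) and the form of relative spectral entropy (a KL-style divergence of the frame's normalized power spectrum from a running mean spectrum) are assumptions for illustration; the exact definitions are those in [47, 42].

```python
import numpy as np


def autocorr_peak_features(frame: np.ndarray) -> tuple[float, int]:
    """Return (largest non-initial autocorrelation peak, number of peaks) for one frame."""
    x = frame - frame.mean()
    ac = np.correlate(x, x, mode="full")[len(x) - 1:]  # lags 0 .. N-1
    if ac[0] <= 0:
        return 0.0, 0  # silent frame: no periodic structure to measure
    ac = ac / ac[0]  # normalize so that lag 0 equals 1
    # A peak is a lag whose value exceeds both neighbours; lag 0 is never counted.
    inner = ac[1:-1]
    is_peak = (inner > ac[:-2]) & (inner > ac[2:])
    peaks = inner[is_peak]
    max_peak = float(peaks.max()) if peaks.size else 0.0
    return max_peak, int(peaks.size)


def relative_spectral_entropy(frame: np.ndarray, mean_power: np.ndarray,
                              eps: float = 1e-12) -> float:
    """KL-style divergence of the frame's normalized power spectrum from a mean spectrum."""
    power = np.abs(np.fft.rfft(frame)) ** 2
    p = power / (power.sum() + eps)
    m = mean_power / (mean_power.sum() + eps)
    return float(np.sum(p * np.log((p + eps) / (m + eps))))
```

Voiced speech is quasi-periodic, so its autocorrelation has a strong non-initial peak, while noise does not; a 200 Hz tone sampled at 8 kHz, for example, yields a peak near lag 40 that a white-noise frame lacks.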

The first step in the inference pipeline is to find audio segments that contain human voice. A two-state hidden Markov model (HMM) classifies speech vs. non-speech segments using the three recorded features described above. For each hidden state, the observation probability is modeled with a multivariate Gaussian distribution (see [47] for a detailed description of the classification approach). Once human voice has been detected, the mutual information between the voicing segments of each pair of microphones is used to find conversations among subjects. If two individuals are in the same physical environment and engaged in a conversation, they take turns speaking, which results in high mutual information between the two sensor streams. If a third individual who is also wearing a device joins the conversation, our method places that person in the conversation as well, because mutual information is computed for every pair of users wearing devices (see [48, 42] for a detailed discussion of the conversation detection technique).
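A two-state HMM of this kind can be decoded with the Viterbi algorithm. The sketch below assumes the Gaussian means, covariances, transition matrix and initial probabilities have already been estimated from labeled frames; the function names and any parameter values used with them are hypothetical. It shows how the transition probabilities smooth out isolated frames that a frame-by-frame classifier would flip.

```python
import numpy as np


def log_gaussian(X, mean, cov):
    """Row-wise log N(x; mean, cov) for observations X of shape (T, D)."""
    d = X.shape[1]
    diff = X - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = np.sum(diff * np.linalg.solve(cov, diff.T).T, axis=1)  # Mahalanobis terms
    return -0.5 * (d * np.log(2 * np.pi) + logdet + maha)


def viterbi_speech(X, means, covs, trans, init):
    """Most likely state per frame (0 = non-speech, 1 = speech) for a 2-state HMM."""
    X = np.asarray(X, dtype=float)
    T, S = X.shape[0], len(means)
    log_b = np.column_stack([log_gaussian(X, means[s], covs[s]) for s in range(S)])
    log_a = np.log(trans)
    delta = np.log(init) + log_b[0]      # best log-score of a path ending in each state
    back = np.zeros((T, S), dtype=int)   # back-pointers for path recovery
    for t in range(1, T):
        scores = delta[:, None] + log_a  # scores[i, j]: move from state i into state j
        back[t] = scores.argmax(axis=0)
        delta = scores.max(axis=0) + log_b[t]
    path = np.empty(T, dtype=int)
    path[-1] = int(delta.argmax())
    for t in range(T - 1, 0, -1):        # follow back-pointers from the last frame
        path[t - 1] = back[t, path[t]]
    return path
```

With "sticky" transitions (high self-transition probability), a single frame that looks slightly more speech-like than non-speech-like is absorbed into the surrounding non-speech run, because switching states twice costs more than the small emission gain.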

Overview of Physical Activity Detection

Physical activities such as walking on a flat surface, walking up and down inclines, and being stationary (both sitting and standing) were detected from features extracted from accelerometer and barometric pressure data. We segmented the data into quarter-second segments and manually labeled the activity for supervised learning and testing. The features included energy, mean, variance, and a suite of spectral features for the accelerometer data, and variance and signed change in pressure over various time windows for the barometric pressure data. In our current experiments, we use simple boosted decision-stump classifiers [49] to train binary activity classifiers. Boosted decision stumps have been used successfully in a variety of classification tasks [50], including human activity recognition [39, 51]. For each activity $A_i$, we iteratively learn an ensemble of weak binary classifiers $C_i = \{c_1^i, c_2^i, c_3^i, \ldots, c_M^i\}$ and their associated weights $\alpha_m^i$ using the variation of the AdaBoost algorithm proposed by [52]. The final output is a weighted combination of the weak classifiers. The prediction of classifier $C_i$ is:

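$$C_i(x) = \operatorname{sign}\left( \sum_{m=1}^{M} \alpha_m^i \, c_m^i(x) \right) \qquad (1)$$

where $x$ is the feature vector of a segment.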

The classification approach builds on the approach previously developed and validated by Lester et al.; we refer the reader to [39, 51] for further details.
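The weighted combination of weak classifiers described above can be illustrated with a minimal sketch of standard discrete AdaBoost over decision stumps. This is textbook AdaBoost, not necessarily the variant of [52] used in the paper; it trains one binary (one-vs-rest) activity classifier with labels in {-1, +1}, and all function names are hypothetical.

```python
import numpy as np


def fit_stump(X, y, w):
    """Weighted-error-minimizing stump: (feature, threshold, polarity, error)."""
    best = (0, 0.0, 1, np.inf)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for pol in (1, -1):
                pred = np.where(pol * (X[:, j] - thr) >= 0, 1, -1)
                err = w[pred != y].sum()
                if err < best[3]:
                    best = (j, thr, pol, err)
    return best


def stump_predict(X, j, thr, pol):
    return np.where(pol * (X[:, j] - thr) >= 0, 1, -1)


def adaboost_fit(X, y, M=20):
    """Discrete AdaBoost for one binary activity classifier; y in {-1, +1}."""
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    w = np.full(len(y), 1.0 / len(y))                # uniform initial sample weights
    ensemble = []
    for _ in range(M):
        j, thr, pol, err = fit_stump(X, y, w)
        err = float(np.clip(err, 1e-10, 1 - 1e-10))  # avoid log(0) for a perfect stump
        alpha = 0.5 * np.log((1 - err) / err)        # weight alpha_m of weak learner c_m
        pred = stump_predict(X, j, thr, pol)
        w *= np.exp(-alpha * y * pred)               # up-weight misclassified samples
        w /= w.sum()
        ensemble.append((alpha, j, thr, pol))
    return ensemble


def adaboost_predict(X, ensemble):
    """Sign of the alpha-weighted sum of stump outputs."""
    X = np.asarray(X, dtype=float)
    score = sum(a * stump_predict(X, j, thr, pol) for a, j, thr, pol in ensemble)
    return np.where(score >= 0, 1, -1)
```

One such ensemble would be trained per activity $A_i$, with that activity's segments as the positive class and all other segments as the negative class.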

Overview of Survey Instruments

Four common surveys used by healthcare practitioners to measure well-being (Friendship Scale, SF-36, CES-D, YPAS) were administered in a pre-post study design. Subjects completed these paper-based surveys one day before the study began and again one day after it concluded (three subjects took the post-study surveys two days after the study concluded).

The Friendship Scale is a self-administered, previously validated [38] scale that measures six dimensions of social isolation and connectedness. Each of its six questions is scored from 0 to 4 points, for a maximum total of 24 points. Higher scores indicate social connectedness and low scores indicate social isolation.

SF-36 [8] is a self-administered survey commonly used to evaluate overall well-being. Its eight sections are weighted and combined to produce a mental health score and a physical health score. We focused on the SF-36 Mental Health Score and compared it with the sensed audio measures. Table 2 summarizes the questions used to obtain the mental health score, which is based on five of the eight sections.

Table 2.

Questions asked in different components of the Mental Health Score of SF-36

| Dimension | Summary of Questions Used To Evaluate Dimensions |
|---|---|
| Social Functioning | During the past 4 weeks, to what extent has your physical health or emotional problems interfered with your normal social activities with family, friends, neighbors, or groups? (Not at all, Slightly, Moderately, Quite a bit, Extremely) During the past 4 weeks, how much of the time has your physical health or emotional problems interfered with your social activities (like visiting friends, relatives, etc.)? (all the time, most of the time, a good bit of the time, some of the time, little of the time, none of the time) |
| Role Emotional | During the past 4 weeks, have you had any of the following problems with your work or other regular daily activities as a result of any emotional problems (such as feeling depressed or anxious)? |
| Mental Health | How much of the time during the past 4 weeks (all the time, most of the time, a good bit of the time, some of the time, little of the time, none of the time) |
| General Health | In general, would you say your health is (Excellent, Very Good, Good, Fair, Poor)? |
| Vitality | How much of the time during the past 4 weeks (all the time, most of the time, a good bit of the time, some of the time, little of the time, none of the time) |

CES-D (Center for Epidemiological Studies Depression Scale) is one of the most frequently used surveys for screening for depressive symptoms and behaviors. Each of its 20 questions is scored from 0 to 3 points, for a maximum of 60 points. Individuals scoring 16 or higher are considered to have symptoms indicative of clinical depression [9].
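The CES-D and Friendship Scale totals described above are simple sums with a fixed range per item. The sketch below scores both instruments (CES-D: 20 items, 0–3 each, cutoff 16; Friendship Scale: 6 items, 0–4 each). It assumes item responses have already been coded numerically, including any reverse-keyed items, and the helper names are hypothetical.

```python
def total_score(items, n_items, max_per_item):
    """Sum of coded item scores after checking item count and range."""
    if len(items) != n_items:
        raise ValueError(f"expected {n_items} items, got {len(items)}")
    if any(not 0 <= s <= max_per_item for s in items):
        raise ValueError(f"each item must be scored between 0 and {max_per_item}")
    return sum(items)


CESD_ITEMS, CESD_MAX_ITEM, CESD_CUTOFF = 20, 3, 16  # 20 x 3 = 60-point maximum
FRIENDSHIP_ITEMS, FRIENDSHIP_MAX_ITEM = 6, 4        # 6 x 4 = 24-point maximum


def cesd_screen(items):
    """Return (total, flag); flag is True when the total reaches the cutoff of 16."""
    score = total_score(items, CESD_ITEMS, CESD_MAX_ITEM)
    return score, score >= CESD_CUTOFF


def friendship_score(items):
    """Total Friendship Scale score; higher means more socially connected."""
    return total_score(items, FRIENDSHIP_ITEMS, FRIENDSHIP_MAX_ITEM)
```

Validating the item count and range up front catches transcription errors from paper forms before they silently shift a total across the CES-D cutoff.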

YPAS (Yale Physical Activity Survey) [7] is an interviewer-administered survey that asks respondents to recall the activities they performed during a typical period in the previous month. This validated survey estimates energy expenditure (kcal/week) and total time spent in vigorous or leisure activities, and provides a total activity summary index.

Evaluation Methodology

Evaluating data collected in everyday environments is challenging because it is often impossible to obtain “ground truth” for every recorded data point. To be confident in our analysis and findings, we took a multi-pronged approach to evaluation. The first step was to label some data from the subjects in the retirement community and compare the resulting physical activity and speech classification accuracy with the accuracy obtained on labeled datasets collected from other individuals, in different geographical locations and from different age groups. For speech classification, the comparison dataset included more than twenty-five individuals aged 18–50 and about an hour of labeled speech [48, 42]. For activity classification, the comparison dataset included ten individuals aged 20–30 and more than ten hours of labeled data [39, 40]. The labeled data from the retirement community was much smaller but was collected on two different days and in different locations of the facility. The speech data was labeled at a very fine granularity to accurately capture human voice, which changes on the order of milliseconds. We had 16,000 labeled data points from the retirement community, amounting to a little over 4 minutes of audio. For the activity data, we had slightly over 19,000 labeled data points, amounting to 80 minutes of data. Our validation approach was to ensure consistency of classification results across datasets. Since neither the sensing device nor the algorithms changed, we believe it is sufficient to show that the new results are consistent with previous results, and that it is not necessary to collect an extensive new labeled dataset for this population, which would not be feasible given the age of our subjects and their limited willingness to generate such a dataset.

In addition to verifying that our classification accuracy was consistent with existing results, a family care practitioner interviewed each subject during recruitment, and a medical trainee was present on-site throughout every day of the study. The physician trained the medical trainee to administer the surveys to ensure reliability and compliance. The trainee typically had brief interactions with the subjects when he handed out the sensing devices every morning and collected them every evening, and occasional interactions with elders who chose to come by his room during the day with questions related to the study. A few of the elders used the opportunity to stop by for social interaction. This gave us some opportunistically collected observational data and an indication of whether the subjects' survey responses were consistent with the observations of the medical trainee and the physician.

RESULTS AND DISCUSSION

In this section we present the classification accuracy of the various activity classifiers as well as our findings related to the in-situ assessment of physical and mental well-being.

Classification Accuracy

As mentioned previously, a small amount of labeled data was used to test whether our performance is similar to previously published performance numbers on larger datasets [48, 42] and whether it is robust across diverse scenarios in the retirement community and across subjects. For speech, the typical accuracy numbers for inferring human speech ranged between 85%–95% [48, 42] and conversation detection was approximately 95%. For activity recognition, detection accuracy numbers ranged from 80%–95% for stationary, walking on a flat surface, walking up and walking down inclines [39, 40].

As described in the Evaluation Methodology section, we used 4 minutes of raw audio that included speech both inside the meeting room and around the retirement center. These data were manually labeled into speech and non-speech segments. Three minutes of this data were used for training and the remainder for testing. On the test data, the human speech classifier achieved 85% accuracy, 84% precision and 82% recall in the meeting room, and 83% accuracy, 92% precision and 84% recall along the corridors of the retirement center. Overall accuracy was 83.7%, precision 90%, and recall 84%.
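The accuracy, precision and recall figures above follow the usual frame-level definitions, with speech as the positive class. The sketch assumes one binary label per frame (1 = speech, 0 = non-speech); how the overall figures combine the two locations is not stated, so pooling all test frames before computing the metrics is an assumption, and the function name is hypothetical.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision and recall for frame labels (1 = speech, 0 = non-speech)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # speech correctly detected
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # non-speech called speech
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # speech missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall
```

Because pooled metrics weight each location by its number of frames, an overall figure can sit closer to one location's value than to the simple average of the two.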

One concern, however, is that the speech/non-speech classifier can overhear TV programs and classify the human speech in them as speech. Since our subsequent analysis uses the amount of human speech in a subject's conversational vicinity for mental health assessment, we describe how we filter out speech that occurs in TV programs. In a preliminary test on a TV recording that included a movie and news (including commercials), the speech inference algorithm classified 19% of the recording as speech. To filter out TV speech, we use two energy features derived from the audio signal. During speech in conversational vicinity, the energy intensity in voiced regions has a broader spread because the speech source is not at a fixed location (unlike a TV), whereas the energy values of voiced regions for TV are more uniform across time. Thus, the entropy of energy intensity is higher for human speech occurring in the same physical space than for human speech on TV, and the entropy of the energy distribution over time is lower for speech in the same physical space than for TV speech. These features are computed over three-minute windows. Thirty-three minutes of conversation data and fifty-seven minutes of TV program data (comprising a short movie segment and a news program) were used to train a model. A simple Gaussian classifier, with one Gaussian trained for TV and one for conversation, is used for classification. On a separate test dataset that included one hundred and three minutes of conversation and forty-two minutes of TV data, the classifier achieved 100% classification accuracy for TV and 94% accuracy for human voice occurring in the same location as the subject.
The conversation data used for training and testing in this experiment were collected from a research group meeting, casual conversation between colleagues in the department and during the orientation session at the retirement community. We apply this filtering algorithm after the speech inference step to eliminate instances of TV speech.
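The TV filter can be sketched in two parts: the two entropy features for one three-minute window of voiced-frame energies, and a classifier with one Gaussian per class. The histogram bin count, the fixed log-energy range, the covariance ridge term and the class names are assumptions for illustration, not the paper's settings.

```python
import numpy as np


def energy_entropy_features(voiced_energy, n_bins=20, log_range=(-10.0, 5.0)):
    """Two entropy features for one window of voiced-frame energies.

    n_bins and log_range are hypothetical; fixed bin edges are needed so that a
    narrow spread of loudness really produces a low histogram entropy.
    """
    e = np.asarray(voiced_energy, dtype=float)
    log_e = np.clip(np.log(e + 1e-12), *log_range)
    # (1) Entropy of the energy-intensity histogram: high when loudness varies a lot.
    hist, _ = np.histogram(log_e, bins=n_bins, range=log_range)
    p = hist[hist > 0] / hist.sum()
    intensity_entropy = float(-np.sum(p * np.log(p)))
    # (2) Entropy of energy spread over time: high when every frame is equally loud.
    q = e / e.sum()
    q = q[q > 0]
    temporal_entropy = float(-np.sum(q * np.log(q)))
    return np.array([intensity_entropy, temporal_entropy])


class TwoGaussianClassifier:
    """One full-covariance Gaussian per class; predicts the more likely class."""

    def fit(self, tv_feats, conv_feats):
        self.params = {"tv": self._fit_one(tv_feats),
                       "conversation": self._fit_one(conv_feats)}
        return self

    @staticmethod
    def _fit_one(feats, ridge=1e-6):
        F = np.asarray(feats, dtype=float)
        cov = np.cov(F, rowvar=False) + ridge * np.eye(F.shape[1])  # keep invertible
        return F.mean(axis=0), cov

    @staticmethod
    def _log_likelihood(x, mean, cov):
        diff = x - mean
        _, logdet = np.linalg.slogdet(cov)
        # The shared -d/2 * log(2*pi) constant is dropped: it cannot change the argmax.
        return -0.5 * (logdet + diff @ np.linalg.solve(cov, diff))

    def predict(self, x):
        return max(self.params, key=lambda c: self._log_likelihood(x, *self.params[c]))
```

The fixed `range` passed to `np.histogram` matters: with the default data-dependent range, even a very narrow spread of TV loudness would be stretched across all bins and look as varied as live conversation.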

Table 5 shows the percentage of recorded time inferred as human speech for each subject over the entire 10-day data collection. During our analysis, we found that subject 08's microphone was faulty and the audio recorded by the device was unusable for speech and conversation detection. Furthermore, subject 04 stopped recording on several occasions before the afternoon, so most of his data collection was heavily skewed towards the morning. We omitted subject 04's data when computing correlations with the survey scores, as the amount and distribution of his data were very different from those of the rest of the population. (See Figure 4 for data availability for different subjects.)

Table 5.

Percentages of sensed human speech during the 10 day study and mental health scores from the surveys. (*ignored for further analysis because of lack of data throughout the day)

| Subject | Amount of Human Speech (%) | SF-36 Mental Health Score | CES-D Score | Friendship Scale |
|---|---|---|---|---|
| SU01 | 2.6 | 68 | 25 | 14.5 |
| SU02 | 9.9 | 90 | 13 | 22 |
| SU03 | 11.6 | 90 | 0.5 | 21 |
| SU04 | 6.3* | 96 | 1.5 | 22 |
| SU05 | 15.3 | 90 | 5.5 | 24 |
| SU06 | 6.9 | 88 | 4.5 | 18 |
| SU07 | 12.03 | 88 | 5.5 | 21 |
| SU08 | Faulty Mic. | 96 | 2 | 24 |

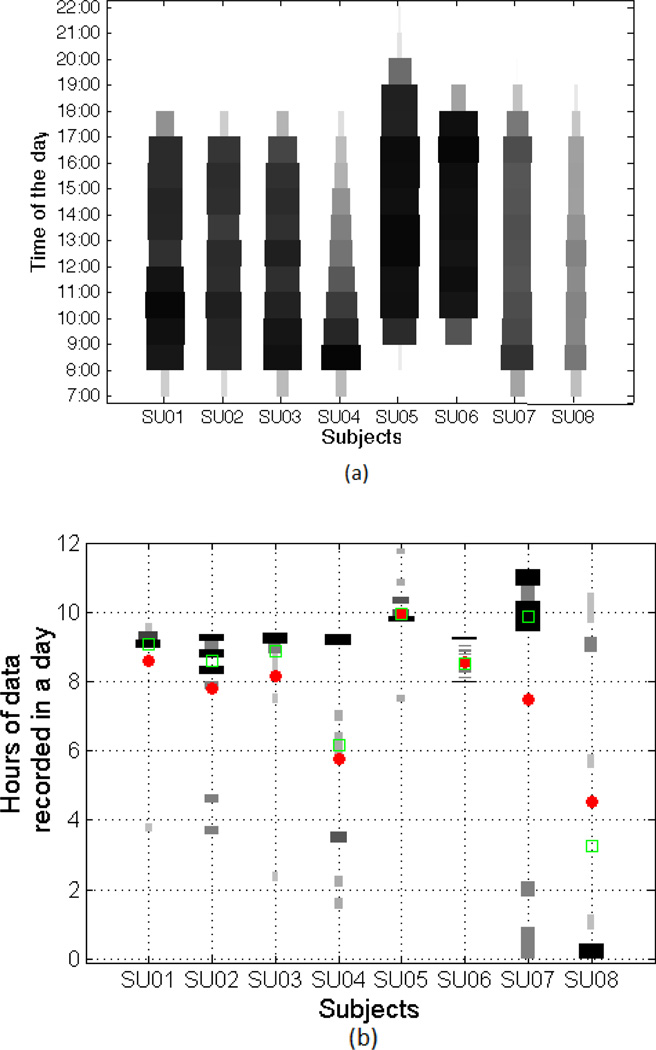

Figure 4.

Amount of data recorded for different subjects throughout the 10-day pilot study. (a) Amount of recorded data at different parts of the day; wider and darker horizontal bars represent more recording during that part of the day. (b) Distribution of the hours of data recorded each day for a given subject. For example, subject 02 recorded close to 9 hours of data on most days but only 3.5–4.5 hours on a couple of days. The red dot represents the mean and the green square the median number of recorded hours per day for each subject.

Conversation is detected only between study participants, as the detection algorithm assumes that each conversation participant carries his or her own microphone. The mutual information between the inferred speech streams is calculated for each pair of subjects for every two minutes of data, and conversation is identified using a threshold on the mutual information score obtained via cross-validation [48, 42]. Table 3 shows the number of minutes of conversation between each pair of subjects over the 10-day period. Notably, subjects 03 and 04 are a couple, and they have the highest amount of conversation between them (the other couple in the experiment is not presented here because it includes subject 08, whose audio data, as stated above, was unusable). We present this conversation analysis not just as a sanity check of our data but to show that it reveals important interpersonal information about the subjects of the experiment. Conversation analysis is also pivotal for speaker segmentation [42] and subsequent user-specific speech analysis (e.g., pitch and speaking rate), which we will pursue in future research.
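The pairwise conversation test can be sketched as the mutual information between two binary voicing streams, computed per window and compared against a threshold. The number of frames per two-minute window and the threshold value are hypothetical parameters here; in the study the threshold was obtained via cross-validation [48, 42], and the function names are illustrative.

```python
import numpy as np


def binary_mutual_information(a, b):
    """Mutual information (bits) between two equal-length 0/1 voicing sequences."""
    a, b = np.asarray(a, dtype=int), np.asarray(b, dtype=int)
    mi = 0.0
    for va in (0, 1):
        for vb in (0, 1):
            p_joint = np.mean((a == va) & (b == vb))
            if p_joint > 0:  # 0 * log 0 terms contribute nothing
                p_a, p_b = np.mean(a == va), np.mean(b == vb)
                mi += p_joint * np.log2(p_joint / (p_a * p_b))
    return float(mi)


def detect_conversation(voicing_a, voicing_b, frames_per_window, threshold):
    """Flag each window in which the two streams share more than `threshold` bits."""
    n_windows = min(len(voicing_a), len(voicing_b)) // frames_per_window
    flags = []
    for w in range(n_windows):
        sl = slice(w * frames_per_window, (w + 1) * frames_per_window)
        flags.append(binary_mutual_information(voicing_a[sl], voicing_b[sl]) > threshold)
    return flags
```

Perfect turn-taking (one stream voiced exactly when the other is silent) gives 1 bit per frame, while two unrelated streams give close to 0 bits; mutual information captures either kind of dependence between the streams, not just positive correlation.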

Table 3.

Pairwise and total conversation times (minutes) for each subject over the 10-day study period

| | SU01 | SU02 | SU03 | SU04 | SU05 | SU06 | SU07 |
|---|---|---|---|---|---|---|---|
| SU01 | – | 12 | 20 | 4 | 24 | 10 | 12 |
| SU02 | 12 | – | 70 | 10 | 48 | 12 | 30 |
| SU03 | 20 | 70 | – | 108 | 42 | 38 | 32 |
| SU04 | 4 | 10 | 108 | – | 12 | 10 | 8 |
| SU05 | 24 | 48 | 42 | 12 | – | 20 | 26 |
| SU06 | 10 | 12 | 38 | 10 | 20 | – | 12 |
| SU07 | 12 | 30 | 32 | 8 | 26 | 12 | – |
| TOTAL | 82 | 182 | 310 | 152 | 172 | 102 | 120 |
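Because Table 3 is symmetric, each subject's total is the sum of their pairwise conversation times. The short check below recomputes the TOTAL row from the upper triangle of the table and confirms that the SU03–SU04 pair (the couple discussed above) has the largest pairwise value. The dictionary layout is simply one convenient encoding chosen for illustration.

```python
from itertools import combinations

SUBJECTS = ["SU01", "SU02", "SU03", "SU04", "SU05", "SU06", "SU07"]

# Upper triangle of Table 3: minutes of conversation for each pair.
PAIR_MINUTES = {
    ("SU01", "SU02"): 12, ("SU01", "SU03"): 20, ("SU01", "SU04"): 4,
    ("SU01", "SU05"): 24, ("SU01", "SU06"): 10, ("SU01", "SU07"): 12,
    ("SU02", "SU03"): 70, ("SU02", "SU04"): 10, ("SU02", "SU05"): 48,
    ("SU02", "SU06"): 12, ("SU02", "SU07"): 30, ("SU03", "SU04"): 108,
    ("SU03", "SU05"): 42, ("SU03", "SU06"): 38, ("SU03", "SU07"): 32,
    ("SU04", "SU05"): 12, ("SU04", "SU06"): 10, ("SU04", "SU07"): 8,
    ("SU05", "SU06"): 20, ("SU05", "SU07"): 26, ("SU06", "SU07"): 12,
}

# Every unordered pair of subjects should appear exactly once.
assert set(PAIR_MINUTES) == set(combinations(SUBJECTS, 2))


def conversation_totals(pair_minutes, subjects):
    """Total conversation minutes per subject, summed over all partners."""
    return {s: sum(m for pair, m in pair_minutes.items() if s in pair) for s in subjects}


totals = conversation_totals(PAIR_MINUTES, SUBJECTS)
busiest_pair = max(PAIR_MINUTES, key=PAIR_MINUTES.get)
print(totals)
print(busiest_pair, PAIR_MINUTES[busiest_pair])
```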

For physical activity recognition, a total of 80 minutes of physical activity data were collected from the subjects. Of these, 25 minutes were labeled as stationary, walking on a flat surface, walking up inclines, or walking down inclines. During labeling, we ensured that data from every subject were represented and that all classes had roughly the same number of labeled data points. Leave-one-subject-out cross-validation results are reported in Table 4. Because the labeled test set is small, we expect these performance numbers to be optimistic; actual performance is likely closer to previously published results [39, 40]. We reiterate that these evaluations mainly serve to check that classification accuracy is consistent with the performance of prior systems. Furthermore, cross-validation indicates that the classification worked robustly across individuals and variations in device placement.
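Leave-one-subject-out cross-validation holds out all data from one subject at a time, so each fold measures performance on a person the classifier has never seen. The sketch below illustrates only this splitting logic; the nearest-centroid classifier, the two features (`motion_energy`, `pressure_trend`), and the toy data are hypothetical stand-ins, not the classifier or features used in this study [39, 40].

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) per subject."""
    for subject in sorted(set(subject_ids)):
        test = [i for i, s in enumerate(subject_ids) if s == subject]
        train = [i for i, s in enumerate(subject_ids) if s != subject]
        yield subject, train, test


def fit_centroids(features, labels):
    """Hypothetical stand-in classifier: mean feature vector per class."""
    sums, counts = {}, {}
    for x, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(x))
        for j, v in enumerate(x):
            acc[j] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, x):
    """Assign x to the class with the nearest centroid (squared Euclidean)."""
    return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(centroids[c], x)))


# Toy data (hypothetical): [motion_energy, pressure_trend] per segment,
# with a small per-subject offset standing in for individual differences.
BASE = {"stationary": (0.1, 0.0), "walking": (1.0, 0.0), "up": (1.0, -1.0), "down": (1.0, 1.0)}
OFFSETS = {"s1": 0.05, "s2": -0.05, "s3": 0.0}
subjects, X, y = [], [], []
for subject, offset in OFFSETS.items():
    for label, (energy, trend) in BASE.items():
        subjects.append(subject)
        X.append([energy + offset, trend + offset])
        y.append(label)

correct = 0
for _, train, test in leave_one_subject_out(subjects):
    centroids = fit_centroids([X[i] for i in train], [y[i] for i in train])
    correct += sum(predict(centroids, X[i]) == y[i] for i in test)
accuracy = correct / len(y)
print(f"LOSO accuracy: {accuracy:.2f}")
```

The key design point is that no segment from the held-out subject ever contributes to training, which is what distinguishes this scheme from ordinary k-fold cross-validation over pooled segments.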

Table 4.

Performance of the physical activity classifier

| Activity | Precision | Recall | Accuracy |
|---|---|---|---|
| stationary | 92.4% | 100% | 96.05% |
| walking flat surface | 100% | 75.86% | 93.1% |
| walking up | 94.9% | 100% | 99.6% |
| walking down | 86.7% | 100% | 97.4% |
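Table 4 reports precision, recall, and accuracy for each activity. The paper does not state how per-class accuracy was computed; the sketch below assumes the common one-vs-rest definition, in which each class is scored as a binary problem against all other classes. The confusion matrix is hypothetical and is not the study's data.

```python
def per_class_metrics(confusion, labels):
    """Per-class precision, recall, and one-vs-rest accuracy.

    confusion[i][j] counts segments of true class i predicted as class j."""
    total = sum(sum(row) for row in confusion)
    metrics = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        fp = sum(row[k] for row in confusion) - tp  # predicted k, truly another class
        fn = sum(confusion[k]) - tp                 # truly k, predicted another class
        tn = total - tp - fp - fn
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        accuracy = (tp + tn) / total
        metrics[label] = (precision, recall, accuracy)
    return metrics


# Hypothetical confusion matrix (rows = true class, columns = predicted class).
LABELS = ["stationary", "walking flat surface", "walking up", "walking down"]
CONFUSION = [
    [10, 0, 0, 0],
    [1, 7, 1, 1],
    [0, 0, 10, 0],
    [0, 0, 0, 10],
]

for label, (p, r, a) in per_class_metrics(CONFUSION, LABELS).items():
    print(f"{label:22s} precision={p:.1%} recall={r:.1%} accuracy={a:.1%}")
```

Under this definition, a class can have perfect recall yet accuracy below 100% when segments from other classes are misclassified into it, which is the pattern seen for the stationary row of Table 4.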

Automated Measurement of Mental Well-Being

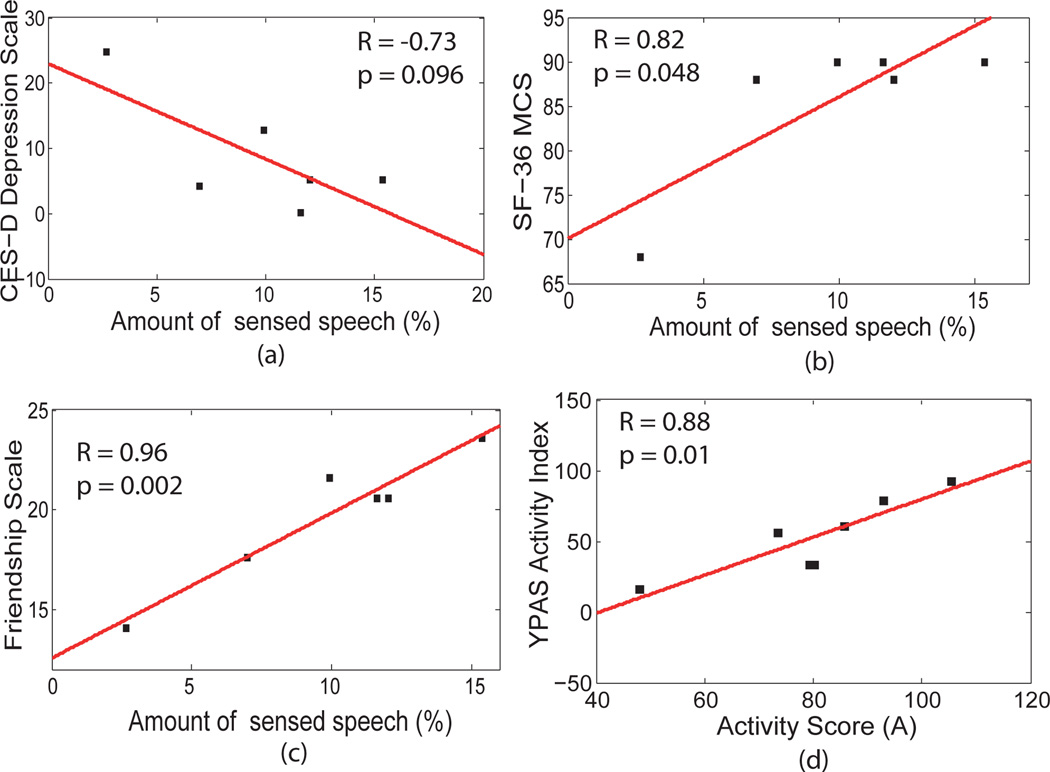

To determine the relationship between sensed speech and mental well-being, we calculated, for each subject, the fraction of time that human speech was present within conversational proximity (Table 5). This fraction is the ratio of the time during which human speech was present within conversational proximity to the total duration of recording. We used this fraction rather than the total amount of speech because the amount of recorded data differed across subjects. Univariate regression analysis comparing sensed speech with the SF-36 Mental Health Score revealed a positive correlation of R = 0.82 (p = 0.048). Interpreting this result requires a closer look at the SF-36 Mental Health Score. It is the average of five of the eight sections of the survey: Social Functioning, Role Emotional, Mental Health, General Health, and Vitality. Table 2 contains the questions used in these five sections. The Social Functioning dimension is concerned with physical or emotional problems that interfere with normal social activities. Role Emotional is concerned with emotions that interfere with daily social and occupational activities. The Mental Health dimension captures subjective self-perception, which can be clouded by pathologic negative interpersonal interactions and the subject's current mood, especially in those with mood disorders [53, 54, 55]. General Health represents the subjective perception of one's health compared to others. Lastly, Vitality captures the subjective perception of energy and stamina. Thus, the mental health score as a whole accounts for emotional or physical stressors that interfere with socialization and seeks to quantify their effect on the emotional integrity of the participant. Directly capturing the amount of human speech in an individual's close vicinity can indicate whether the individual is socially engaged and can provide valuable information about mental health.
Although measuring speech alone is unlikely to provide a conclusive or comprehensive measure of mental health, it could serve as a continuous indicator and early warning system.
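The speech measure and its correlation with survey scores can be sketched as follows. The fraction is computed as defined above (speech time over total recording time). For the p-value, this sketch swaps in an exact permutation test, which is practical for samples as small as this study's; it is not necessarily the test used to obtain the reported p-values. All inputs in the usage lines are hypothetical.

```python
import itertools
import math


def speech_fraction(speech_minutes, recorded_minutes):
    """Fraction of recorded time with human speech in conversational proximity."""
    return speech_minutes / recorded_minutes


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def permutation_p_value(x, y):
    """Exact two-sided permutation p-value for Pearson r.

    Enumerates all n! orderings of y, so it is only feasible for small n
    (n = 7 gives 5040 permutations)."""
    observed = abs(pearson_r(x, y))
    hits = total = 0
    for perm in itertools.permutations(y):
        total += 1
        if abs(pearson_r(x, perm)) >= observed - 1e-12:  # tolerance for float ties
            hits += 1
    return hits / total


# Hypothetical inputs for five subjects: minutes of sensed speech,
# minutes recorded, and a survey score.
speech = [30, 55, 20, 70, 45]
recorded = [600, 550, 500, 700, 600]
survey = [70, 85, 60, 90, 80]

fractions = [speech_fraction(s, r) for s, r in zip(speech, recorded)]
r = pearson_r(fractions, survey)
p = permutation_p_value(fractions, survey)
print(f"r = {r:.2f}, permutation p = {p:.3f}")
```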

Regression analysis comparing the fraction of human speech sensed in conversational proximity with CES-D scores revealed a negative correlation of R = −0.73 (p = 0.096), which falls just short of statistical significance at the 0.05 level in this small sample. Note that higher CES-D scores indicate more depressive symptoms, so a negative correlation is the expected direction. In addition to CES-D and SF-36, regression analysis comparing the fraction of human speech sensed in conversational proximity with friendship scores revealed a strong correlation of R = 0.96 (p = 0.002).

Anecdotal qualitative observations, which are often used as an early screening method for depression, also support the use of sensed measurements to assess mental health. Table 5 shows that subject 01 scored above 25 on the CES-D and had a much lower SF-36 Mental Health score. These scores were consistent with the interaction-based assessment of the participant by the medical trainee and the physician on the team (made before the survey results were obtained), which had raised concerns that the participant appeared socially isolated, avoided large groups, and was dysthymic (exhibiting depressive symptoms without a formal diagnosis of depression) and unhappy. The automatically inferred speech duration for subject 01 was low compared to the rest of the group. We discuss another example in the case study below.

Automated Measurement of Physical Well-Being

Table 6 shows the breakdown of classified activities, namely walking on a flat surface, walking up and down inclines, and being stationary (sitting and standing). The unknown category contains all segments of the data that were not associated with any of the four classified activity types. Comparing the percentage of time spent in each activity with the daily patterns and physical limitations of individual subjects shows good qualitative agreement. Subject 05 reported walking or participating in other physical activities for multiple hours daily and had the highest percentage of walking up inclines, the highest combined percentage of walking (flat, up, and down), and the lowest percentage of stationary time in the group. In contrast, subject 07 had a neurological disease that limited the ability to walk and take part in other physical activities. This is reflected in the inferred amount of stationary activity, the highest in the group, and in the lowest combined percentage of walking. Furthermore, the retirement community is situated in a hilly area with pathways that go up and down; a few of the subjects took long daily walks along those paths and, as a result, have high percentages of walking up and down inclines.

Table 6.

Percentages of time spent in different physical activities for each subject during the 10-day study (Going Up and Going Down refer to walking up and walking down inclines, respectively)

| Subject | Stationary (%) | Walking (%) | Going Up (%) | Going Down (%) | Unknown (%) | Assistive Devices |
|---|---|---|---|---|---|---|
| SU01 | 63.41 | 3.98 | 10.81 | 6.24 | 15.56 | No |
| SU02 | 57.29 | 11.20 | 6.39 | 5.08 | 20.04 | Walker/Cane |
| SU03 | 62.76 | 18.43 | 1.24 | 3.17 | 14.39 | No |
| SU04 | 65.20 | 9.71 | 0.66 | 7.70 | 16.72 | Cane |
| SU05 | 54.60 | 2.72 | 14.49 | 11.42 | 16.77 | No |
| SU06 | 66.13 | 2.95 | 3.58 | 17.54 | 9.80 | No |
| SU07 | 73.39 | 4.83 | 1.82 | 2.12 | 17.84 | Wheelchair/Cane |
| SU08 | 62.85 | 3.25 | 7.42 | 11.62 | 14.85 | No |
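The qualitative comparison of subjects 05 and 07 can be checked directly against Table 6. The sketch below sums the three walking categories (flat, up, down) for each subject and reports the extremes; grouping them into a single "combined walking" figure is an illustrative choice, not a measure defined in the study.

```python
# Table 6 percentages: (stationary, walking, going up, going down, unknown).
TABLE6 = {
    "SU01": (63.41, 3.98, 10.81, 6.24, 15.56),
    "SU02": (57.29, 11.20, 6.39, 5.08, 20.04),
    "SU03": (62.76, 18.43, 1.24, 3.17, 14.39),
    "SU04": (65.20, 9.71, 0.66, 7.70, 16.72),
    "SU05": (54.60, 2.72, 14.49, 11.42, 16.77),
    "SU06": (66.13, 2.95, 3.58, 17.54, 9.80),
    "SU07": (73.39, 4.83, 1.82, 2.12, 17.84),
    "SU08": (62.85, 3.25, 7.42, 11.62, 14.85),
}


def combined_walking(row):
    """Sum of walking on flat surfaces, going up, and going down (percent)."""
    _, walking, up, down, _ = row
    return round(walking + up + down, 2)


walking_totals = {s: combined_walking(row) for s, row in TABLE6.items()}
most_active = max(walking_totals, key=walking_totals.get)
least_active = min(walking_totals, key=walking_totals.get)
most_stationary = max(TABLE6, key=lambda s: TABLE6[s][0])
least_stationary = min(TABLE6, key=lambda s: TABLE6[s][0])
print(walking_totals)
print(most_active, least_active, most_stationary, least_stationary)
```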

To demonstrate that automatically sensed behavior can partly capture the information gathered by surveys, we compute a weighted sum of the inferred physical activity measures using Equation 2 and compare it to the YPAS Activity Index. In the equation, $a_i$ (in percentage) represents the different types of activities (stationary, walking, walking up inclines, walking down inclines, and unknown) and $w_i$ is the weight of each activity in the overall inferred activity score $A$.

$$A = \sum_{i} w_i a_i \qquad (2)$$

A multi-dimensional linear regression is used to determine the relationship between YPAS scores and the measures computed from the sensed data. The coefficients (weights) generated by this regression are 0.6 for stationary, 3 for walking, 5 for going up, 1 for going down, and −1.5 for unknown. These weights make intuitive sense: moving from stationary to walking down inclines, walking on flat surfaces, and walking up inclines, the activities become increasingly strenuous and burn more calories, and the weights increase accordingly. There are two possible explanations for the negative weight for unknown: (i) the unknown category may contain segments that do not correspond to any physical activity; and (ii) a high value for unknown makes the values for the other physical activities lower than usual (i.e., it has a negative effect on the other physical activities).
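One way to fit such weights is ordinary least squares, sketched below without an intercept (whether the study used an intercept is not stated) and solved via the normal equations. Because individual YPAS scores are not reproduced in this section, the targets here are synthetic: they are generated from the reported weights and the Table 6 rows (excluding SU04, as in the correlation analysis), so the fit should simply recover those weights. The helper names `solve` and `least_squares` are hypothetical.

```python
def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def least_squares(X, y):
    """Weights minimizing ||X w - y||^2 (no intercept), via X^T X w = X^T y."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * t for row, t in zip(X, y)) for i in range(k)]
    return solve(xtx, xty)


# Table 6 rows (stationary, walking, up, down, unknown), SU04 excluded.
X = [
    (63.41, 3.98, 10.81, 6.24, 15.56),  # SU01
    (57.29, 11.20, 6.39, 5.08, 20.04),  # SU02
    (62.76, 18.43, 1.24, 3.17, 14.39),  # SU03
    (54.60, 2.72, 14.49, 11.42, 16.77),  # SU05
    (66.13, 2.95, 3.58, 17.54, 9.80),  # SU06
    (73.39, 4.83, 1.82, 2.12, 17.84),  # SU07
    (62.85, 3.25, 7.42, 11.62, 14.85),  # SU08
]
reported_w = [0.6, 3.0, 5.0, 1.0, -1.5]
# Synthetic targets: stand-ins for YPAS scores, NOT the study's data.
y_synthetic = [sum(w * a for w, a in zip(reported_w, row)) for row in X]

fitted_w = least_squares(X, y_synthetic)
print([round(w, 3) for w in fitted_w])
```

With seven subjects and five weights, the system is only slightly overdetermined, so with real, noisy survey scores the fitted weights would be sensitive to individual subjects.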

The percentages of time spent in different activities, inferred from sensor measurements, can be found in Table 6, and the activity score is their weighted sum computed with Equation 2. The inferred activity score shows a correlation of R = 0.88 (p = 0.01) with the YPAS activity index. Subject 04's data were not included in the correlation analysis because of a lack of data throughout the day.
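Equation 2 with the reported weights can be applied directly to the Table 6 percentages. The sketch below does this for the two subjects contrasted earlier; it computes only $A$ and does not reproduce the correlation with the YPAS index. The names `WEIGHTS`, `PERCENTAGES`, and `activity_score` are illustrative.

```python
# Weights reported for Equation 2, in the order
# (stationary, walking, going up, going down, unknown).
WEIGHTS = (0.6, 3.0, 5.0, 1.0, -1.5)

# Activity percentages from Table 6, same order.
PERCENTAGES = {
    "SU05": (54.60, 2.72, 14.49, 11.42, 16.77),
    "SU07": (73.39, 4.83, 1.82, 2.12, 17.84),
}


def activity_score(percentages, weights=WEIGHTS):
    """Equation 2: A = sum_i w_i * a_i."""
    return sum(w * a for w, a in zip(weights, percentages))


for subject, row in PERCENTAGES.items():
    print(subject, round(activity_score(row), 3))
```

This yields A ≈ 99.6 for SU05 and A ≈ 43.0 for SU07, consistent with the contrast between these two subjects described above.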

An unexpected finding was observed for subject 02 regarding the relationship between the inferred activity score A (from Equation 2) and the YPAS activity score. Direct observation of her daily activities indicated that she spent most of the day being active as a volunteer and also spent time cooking and cleaning her home. Her inferred activity level was comparable to the rest of the group, which paralleled the observational assessment by the medical trainee who was always at the facility during data collection. However, the YPAS reported a much lower estimate of her physical activity level. Even though the YPAS was administered by the medical trainee, inaccurate recall of daily activities, human error in calculating the time spent on specific activities, and the survey's limited ability to capture the time spent on the specific volunteer task she was engaged in may have contributed to this discrepancy.

Case Study: Limitations of Survey-based Assessment

Our primary goal was to validate sensor-based measurement of in-situ behavior against paper surveys, the current gold standard in the health industry for assessing mental and physical health. We also wanted to explore the potential limitations of self-reported measurement of behavior and how continuous sensing of behavior using easily carried mobile sensors can supplement and improve currently available tools and methods in the health behavioral sciences. Analysis of the total amount of speech for subject 06 provides a case example that illustrates the limitations of standard methods for measuring mental health and suggests that automated measurements could potentially overcome these limitations. A comparison of subject 06's CES-D score, the Mental Health component of the SF-36, and the inferred activities indicates that: (i) neither the CES-D nor the SF-36 detected major mental health concerns; and (ii) the surveys disagree with the measurement made using the mobile sensors (6.9% of human speech sensed in conversational proximity, the second lowest after subject 01), which raised concerns of social isolation, a major risk factor for emotional well-being [56]. The sensor-based measurement agreed with direct observations made by the medical trainee. The subjective, survey-based measurement of mental health could have been inappropriately influenced by one or more factors, including skewed self-perception, misinterpretation of questionnaires, and purposeful misrepresentation. Thus, continuous sensing could be used to reduce exclusive dependence on the subject for accurate recall, interpretation of, and response to questions.

LIMITATIONS AND SHORTFALLS

Our study has several limitations. The sample size was small, which makes it difficult to draw definitive conclusions; the study should be viewed as a promising pilot. In addition, this study focused on older adults, and further investigation in other populations is needed to determine whether the findings generalize. While we did collect a small amount of observational data, we did not perform continuous direct observation. Although continuous observation would have made it easier to compare self-reported and actual behavior, it would likely have biased observations by disrupting the participants' natural setting.

Figure 4 shows the amount of data recorded at different parts of the day during the 10-day study. It shows that subject 08 provided the least amount of data because he chose not to wear the device during voluntary duties outside the retirement facility. Subject 04 provided data mostly in the morning, so his data distribution is heavily skewed towards the morning, as Figure 4 also shows. To increase reliability and decrease user burden, the participants dropped off the sensing device at the end of each day; the data were offloaded and the device was charged overnight, and the subjects picked up the devices the following morning. Because participants picked up and returned the devices at different times, unequal amounts of data were collected for different subjects. Finally, significantly less data were collected on day 8 because the devices were insufficiently charged the previous night. Despite these limitations, this pilot work demonstrates the potential of commonly available sensors, combined with sophisticated processing techniques, to improve the detection of specific physical and behavioral activities. As more people carry sensors in their everyday mobile devices, detecting health problems and monitoring treatment could become more efficient and effective.

CONCLUSION

In this paper, we demonstrated that daily human behaviors inferred from mobile sensors correlate highly with well-established survey metrics, including measures of depressive symptoms, in older adults. In an informal usability survey, most study participants stated that they found the device easy to use and comfortable to wear, and all participants thought the sensor-based approach was preferable to surveys. Though the strong quantitative results combined with qualitative acceptance are encouraging, there is scope for future improvement in scalability, usability, robustness, and the detection of finer aspects of mental health. We are currently implementing our sensing and inference system on smartphones. We believe that the recent proliferation of smartphones can enable passive, objective well-being assessment to scale to large populations. Smartphones will not only improve usability but also enable us to collect large amounts of data in diverse environments and to build models that are robust across scenarios. Beyond data collection, we plan to focus on different aspects of mental health, for example aspects that change rapidly over time (e.g., non-chronic stress, mood) and aspects that are less transient (e.g., personality). We believe this work is a first step towards our goal, showing that passive and continuous sensing of activity and behavior is feasible and comparable to traditional, more cumbersome methods of assessment for a growing population such as older adults. With continued advancement of these technologies, there is great potential to improve early detection of changes in well-being and overall quality of life.

Figure 1.

Mobile sensing device

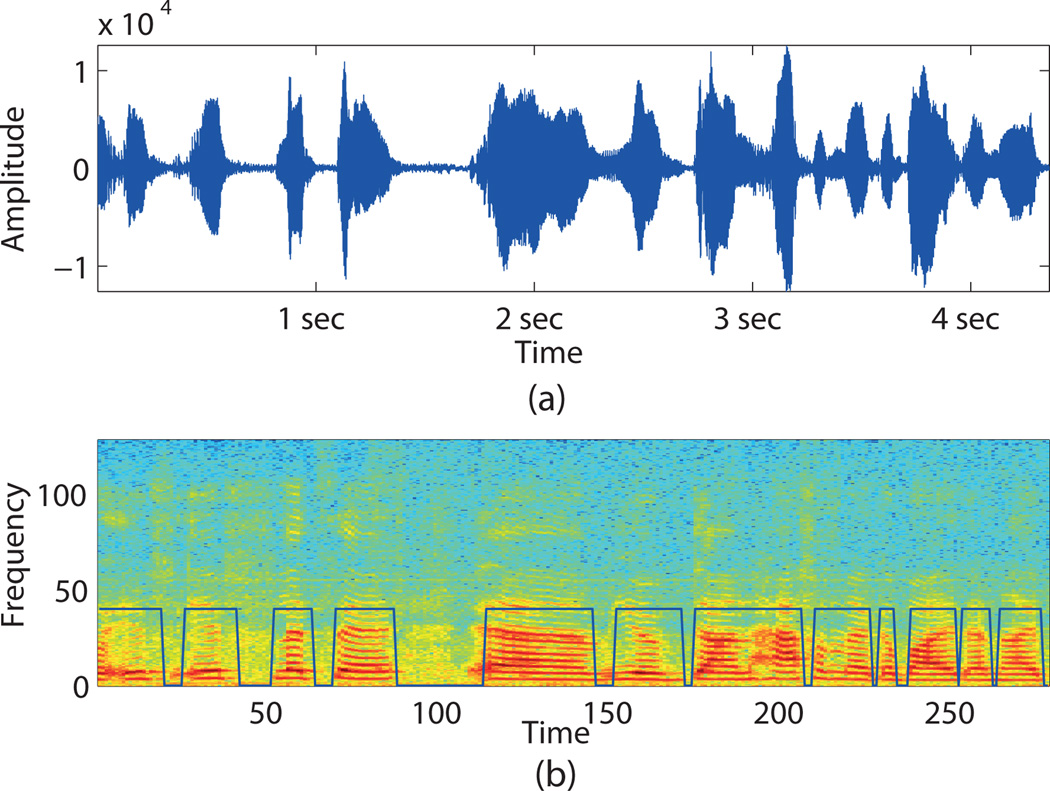

Figure 2.

(a) Amplitude values for a 5-second audio recording; (b) spectrogram of the recording, with blue lines depicting inferred human speech

Figure 3.

Correlation between (a) sensed human speech and CES-D; (b) sensed human speech and SF-36 Mental Health Score; (c) sensed human speech and friendship scale; (d) physical activity score A and YPAS

Table 1.

Subject profile

| Participant ID | Marital Status | Age (years) |
|---|---|---|
| SU01 | Single | 90 |
| SU02 | Single | 89 |
| SU03 | Married | 83 |
| SU04 | Married | 82 |
| SU05 | Single | 93 |
| SU06 | Single | 81 |
| SU07 | Married | 84 |
| SU08 | Married | 86 |

Contributor Information

Mashfiqui Rabbi, Dept. of Information Science, Cornell University, ms2749@cornell.edu.

Shahid Ali, Community and Family Medicine, Dartmouth Medical School, shahid.a.ali@dartmouth.edu.

Tanzeem Choudhury, Dept. of Information Science, Cornell University, tanzeem.choudhury@cornell.edu.

Ethan Berke, Community and Family Medicine, Dartmouth Medical School, ethan.berke@tdi.dartmouth.edu.

References

1. Camicioli R, Moore MM, Sexton G, Howieson DB, Kaye JA. Age-related brain changes associated with motor function in healthy older people. Journal of the American Geriatrics Society. 1999;47(3):330. doi: 10.1111/j.1532-5415.1999.tb02997.x.

2. Sheridan PL, Solomont J, Kowall N, Hausdorff JM. Influence of executive function on locomotor function: divided attention increases gait variability in Alzheimer’s disease. Journal of the American Geriatrics Society. 2003;51(11):1633–1637. doi: 10.1046/j.1532-5415.2003.51516.x.

3. Olguin Daniel Olguin, Waber Benjamin N, Kim Taemie, Mohan Akshay, Ara Koji, Pentland Alex. Sensible organizations: technology and methodology for automatically measuring organizational behavior. IEEE Transactions on Systems, Man, and Cybernetics, Part B: Cybernetics. 2009;39(1):43–55. doi: 10.1109/TSMCB.2008.2006638.

4. Rothney Megan P, Schaefer Emily V, Neumann Megan M, Choi Leena, Chen Kong Y. Validity of physical activity intensity predictions by actigraph, actical, and rt3 accelerometers. Obesity (Silver Spring). 2008;16(8):1946–1952. doi: 10.1038/oby.2008.279.

5. Consolvo S, McDonald DW, Toscos T, Chen MY, Froehlich J, Harrison B, Klasnja P, LaMarca A, LeGrand L, Libby R, et al. Activity sensing in the wild: a field trial of ubifit garden. Proceedings of the twenty-sixth annual SIGCHI conference on Human factors in computing systems; 2008. pp. 1797–1806.

6. Wenze Susan J, Miller Ivan W. Use of ecological momentary assessment in mood disorders research. Clinical psychology review. 2010. doi: 10.1016/j.cpr.2010.06.007.

7. Dipietro L, Caspersen CJ, Ostfeld AM, Nadel ER. A survey for assessing physical activity among older adults. Medicine and science in sports and exercise. 1993;25:628–628.

8. SF-36. 2008. http://www.sf-36.org.

9. Andresen EM, Malmgren JA, Carter WB, Patrick DL. Screening for depression in well older adults: evaluation of a short form of the ces-d (center for epidemiologic studies depression scale). American Journal of Preventive Medicine. 1994;10(2):77–84.

10. Stone AA, Schwartz JE, Neale JM, Shiffman S, Marco CA, Hickcox M, Paty J, Porter LS, Cruise LJ. A comparison of coping assessed by ecological momentary assessment and retrospective recall. Journal of Personality and Social Psychology. 1998;74(6):1670–1680. doi: 10.1037//0022-3514.74.6.1670.

11. Schwartz JE, Neale J, Marco C, Shiffman SS, Stone AA. Does trait coping exist? a momentary assessment approach to the evaluation of traits. Journal of personality and social psychology. 1999;77(2):360–369. doi: 10.1037//0022-3514.77.2.360.

12. Bang Sunlee, Kim Minho, Song Sa-Kwang, Park Soo-Jun. Toward real time detection of the basic living activity in home using a wearable sensor and smart home sensors. Proc IEEE Engineering in Medicine and Biology Society. 2008:5200–5203. doi: 10.1109/IEMBS.2008.4650386.

13. Bravata DM, Smith-Spangler C, Sundaram V, Gienger AL, Lin N, Lewis R, Stave CD, Olkin I, Sirard JR. Using pedometers to increase physical activity and improve health: a systematic review. JAMA. 2007;298(19):2296. doi: 10.1001/jama.298.19.2296.

14. Tudor-Locke C, Sisson SB, Collova T, Lee SM, Swan PD. Pedometer-determined step count guidelines for classifying walking intensity in a young ostensibly healthy population. Applied Physiology, Nutrition, and Metabolism. 2005;30(6):666–676. doi: 10.1139/h05-147.

15. Bergman R, Bassett D Jr. Validity of 2 devices for measuring steps taken by older adults in assisted-living facilities. Journal of physical activity & health. 2008;5:S166. doi: 10.1123/jpah.5.s1.s166.

16. Bao L, Intille SS. Activity recognition from user-annotated acceleration data. Pervasive Computing. 2004:1–17.

17. Actigraph. http://www.theactigraph.com/

18. Sensewear. http://sensewear.com/

19. Choudhury T, Borriello G, Consolvo S, Haehnel D, Harrison B, Hemingway B, Hightower J. The mobile sensing platform: An embedded activity recognition system. IEEE Pervasive Computing. 2008:32–41.

20. Conrad Ansgar, Wilhelm Frank H, Roth Walton T, Spiegel David, Taylor C Barr. Circadian affective, cardiopulmonary cortisol variability in depressed and nondepressed individuals at risk for cardiovascular disease. Journal of psychiatric research. 2008;42(9):769–777. doi: 10.1016/j.jpsychires.2007.08.003.

21. Reder S, Ambler G, Philipose M, Hedrick S. Technology and Long-term Care (TLC): A pilot evaluation of remote monitoring of elders. Gerontechnology. 2010;9(1):18–31.

22. Revenues and expenditures reports from 2006

23. Kirmayer LJ, Robbins JM, Dworkind M, Yaffe MJ. Somatization and the recognition of depression and anxiety in primary care. American Journal of Psychiatry. 1993;150(5):734–741. doi: 10.1176/ajp.150.5.734.

24. Olfson M, Fireman B, Weissman MM, Leon AC, Sheehan DV, Kathol RG, Hoven C, Farber L. Mental disorders and disability among patients in a primary care group practice. American Journal of Psychiatry. 1997;154(12):1734–1740. doi: 10.1176/ajp.154.12.1734.

25. Simon G, Ormel J, VonKorff M, Barlow W. Health care costs associated with depressive and anxiety disorders in primary care. American Journal of Psychiatry. 1995;152(3):352–357. doi: 10.1176/ajp.152.3.352.

26. Henk HJ, Katzelnick DJ, Kobak KA, Greist JH, Jefferson JW. Medical costs attributed to depression among patients with a history of high medical expenses in a health maintenance organization. Archives of General Psychiatry. 1996;53(10):899–904. doi: 10.1001/archpsyc.1996.01830100045006.

27. Isaac Vivian, Stewart Robert, Artero Sylvaine, Ancelin Marie-Laure, Ritchie Karen. Social activity and improvement in depressive symptoms in older people: a prospective community cohort study. American Journal of Geriatric Psychiatry. 2009;17(8):688–696. doi: 10.1097/JGP.0b013e3181a88441.

28. Bosworth Hayden B, Hays Judith C, George Linda K, Steffens David C. Psychosocial and clinical predictors of unipolar depression outcome in older adults. International journal of geriatric psychiatry. 2002;17(3):238–246. doi: 10.1002/gps.590.

29. Walk WW. A prospective study of physical activity and cognitive decline in elderly women. Arch Intern Med. 2001;161:1703–1708. doi: 10.1001/archinte.161.14.1703.

30. Madan Anmol, Cebrian Manuel, Lazer David, Pentland Alex. Social sensing for epidemiological behavior change. Ubicomp ’10: Proceedings of the 12th ACM international conference on Ubiquitous computing. New York, NY, USA: ACM; 2010. pp. 291–300.

31. Wyatt Danny, Choudhury Tanzeem, Kitts James, Bilmes Jeff. Inferring Colocation and Conversation Networks from Privacy-sensitive Audio with Implications for Computational Social Science. To appear in ACM Transactions on Intelligent Systems and Technology. 2010.

32. France DJ, Shiavi RG, Silverman S, Silverman M, Wilkes M. Acoustical properties of speech as indicators of depression and suicidal risk. IEEE Transactions on Biomedical Engineering. 2002;47(7):829–837. doi: 10.1109/10.846676.

33. Cowie R, Douglas-Cowie E. Automatic statistical analysis of the signal and prosodic signs of emotion in speech. Spoken Language, 1996. ICSLP 96. Proceedings., Fourth International Conference on. 2002;vol. 3:1989–1992.

34. Frick RW. Communicating Emotion: The Role of Prosodic Features. Psychological Bulletin. 1985;97(3):412–429.

35. Moore Elliot, Clements Mark A, Peifer John W, Weisser Lydia. Critical analysis of the impact of glottal features in the classification of clinical depression in speech. IEEE Transactions on Biomedical Engineering. 2008;55(1):96–107. doi: 10.1109/TBME.2007.900562.

36. Kiosses DN, Klimstra S, Murphy C, Alexopoulos GS. Executive dysfunction and disability in elderly patients with major depression. American Journal of Geriatric Psych. 2001;9(3):269.

37. Kirkman BL, Rosen B, Tesluk PE, Gibson CB. The impact of team empowerment on virtual team performance: The moderating role of face-to-face interaction. The Academy of Management Journal. 2004;47(2):175–192.

38. Hawthorne G. Measuring social isolation in older adults: Development and initial validation of the friendship scale. Social Indicators Research. 2006:1–28.

39. Lester Jonathan, Choudhury Tanzeem, Kern Nicky, Borriello Gaetano, Hannaford Blake. A hybrid discriminative/generative approach for modeling human activities. Proc. of the International Joint Conference on Artificial Intelligence (IJCAI); 2005. pp. 766–772.

40. Lester Jonathan, Choudhury Tanzeem, Borriello Gaetano, Hannaford Blake. A practical approach to recognizing physical activities. Proc. of Pervasive. 2006.

41. Wyatt D, Choudhury T, Bilmes J, Kitts JA. Inferring colocation and conversation networks from privacy-sensitive audio with implications for computational social science. ACM Transactions on Intelligent Systems and Technology (TIST). 2011;2(1):7.

42. Wyatt D, Choudhury T, Bilmes J. Conversation detection and speaker segmentation in privacy sensitive situated speech data. Proceedings of Interspeech. 2007:586–589.

43. Wyatt D, Bilmes J, Choudhury T, Kitts JA. Towards the automated social analysis of situated speech data. Proceedings of the 10th international conference on Ubiquitous computing; 2008. pp. 168–171. ACM.

44. Dellaert F, Polzin T, Waibel A. Recognizing emotion in speech. Spoken Language, 1996. ICSLP 96. Proceedings., Fourth International Conference on. 1996;vol. 3:1970–1973. IEEE.

45. Gatica-Perez D, McCowan I, Zhang D, Bengio S. Detecting group interest-level in meetings. Proc. IEEE Int. Conf. on Acoustics, Speech and Signal Processing (ICASSP); 2005.

46. Hurlburt RT, Koch M, Heavey CL. Descriptive experience sampling demonstrates the connection of thinking to externally observable behavior. Cognitive Therapy and Research. 2002;26(1):117–134.

47. Basu S. A linked-HMM model for robust voicing and speech detection. Proceedings of International Conference on Acoustic, Speech, and Signal Processing (ICASSP); 2003.

48. Choudhury Tanzeem, Basu Sumit. Modeling conversational dynamics as a mixed-memory markov process. Proc. of NIPS. 2004.

49. Friedman Jerome, Hastie Trevor, Tibshirani Robert. Additive logistic regression: a statistical view of boosting. Annals of Statistics. 1998;28:2000.

50. Torralba Antonio, Murphy Kevin P. Sharing visual features for multiclass and multiview object detection. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2007;29(5):854–869. doi: 10.1109/TPAMI.2007.1055.

51. Blanke Ulf, Schiele Bernt. Daily routine recognition through activity spotting. LoCA ’09: Proceedings of the 4th International Symposium on Location and Context Awareness. Berlin, Heidelberg: Springer-Verlag; 2009. pp. 192–206.

52. Viola P, Jones M. Robust real-time object detection. International Journal of Computer Vision. 2002;57(2):137–154.

53. Nezlek JB, Kowalski RM, Leary MR, Blevins T, Holgate S. Personality moderators of reactions to interpersonal rejection: Depression and trait self-esteem. Personality and Social Psychology Bulletin. 1997;23(12):1235.

54. Simoneau TL, Miklowitz DJ, Saleem R. Expressed emotion and interactional patterns in the families of bipolar patients. Journal of Abnormal Psychology. 1998;107(3):497–507. doi: 10.1037//0021-843x.107.3.497.

55. Shiffman Saul, Stone Arthur A, Hufford Michael R. Ecological momentary assessment. Annual review of clinical psychology. 2008;4:1–32. doi: 10.1146/annurev.clinpsy.3.022806.091415.

56. Seeman TE. Social ties and health: the benefits of social integration. Annals of Epidemiology. 1996;6(5):442–451. doi: 10.1016/s1047-2797(96)00095-6.