Abstract

Memory encoding engages multiple concurrent and sequential processes. While the individual processes involved in successful encoding have been examined in many studies, the sequence of events and the importance of the modules associated with memory encoding have not been established. For this reason, we sought to perform a comprehensive examination of the network for memory encoding using data-driven methods and to determine the directionality of the information flow in order to build a viable model of visual memory encoding. Forty healthy controls aged 19–59 performed a visual scene encoding task. FMRI data were preprocessed using SPM8 and then processed using independent component analysis (ICA), with the reliability of the identified components confirmed using ICASSO as implemented in GIFT. The directionality of the information flow was examined using Granger causality analyses (GCA). All participants performed the fMRI task well above the chance level (>90% correct on both active and control conditions) and the post-fMRI testing recall revealed correct memory encoding at 86.33±5.83%. ICA identified involvement of components of five different networks in the process of memory encoding, and the GCA allowed for the directionality of the information flow to be assessed, from visual cortex via ventral stream to the attention network and then to the default mode network (DMN). Two additional networks involved in this process were the cerebellar and the auditory-insular network. This study provides evidence that successful visual memory encoding is dependent on multiple modules that are part of other networks that are only indirectly related to the main process. This model may help to identify the node(s) of the network that are affected by specific disease processes and explain the presence of memory encoding difficulties in patients in whom focal or global network dysfunction exists.

Introduction

Episodic memory is defined as the ability to consciously recall dated information and spatiotemporal relations from previous experiences, while semantic memory consists of stored information about features and attributes that define concepts [1], [2]. The visual encoding of a scene in order to remember and recognize it later (i.e., visual memory encoding) engages both episodic and semantic memory, and an efficient retrieval system is needed for later recall [3]. This entire process typically includes several important sequential and concurrent steps (e.g., visual attention, analysis of visual features and encoding of the scene features) that are crucial for it to be efficient and consistent.

The cortical underpinnings of these steps and processes have been examined in numerous neuroimaging studies. Primary visual features are encoded through a process of retinotopy [4], [5]. Then, a more precise categorization of visual information occurs via ventral and dorsal visual streams [6]–[9]. The capacity of the visual system to analyze a multi-object scene is limited [10]. Therefore, attentional mechanisms are needed to select relevant information and filter out irrelevant information. Visual attention has been shown to improve the quality of visual encoding [11] by increasing contrast sensitivity [12], diminishing the influence of distractors [13], and improving acuity [14]. Visual attention processes are widely distributed over the human cortex and appear to be controlled by networks located in frontal and parietal areas that generate feedback information to the visual areas [11], [15]–[19].

A model for visual memory encoding based on human brain activity and functional connectivity during a scene-encoding task has not been developed to date. The aim of the present study was to build such a model using data-driven methods. To this end, we used independent component analysis (ICA) of fMRI data combined with Granger causality analysis (GCA). These advanced methods complement and add to the commonly used hypothesis-driven general linear modeling (GLM) method. While GLM permits identification of cortical and subcortical areas that constitute the underpinnings of the cognitive processes in question [20], it does not allow for the more detailed dissection and temporal arrangement of the individual components that potentially constitute the process to be examined, nor does it allow for the examination of the directionality of the information flow. Current theories agree that human higher cognitive functions emerge from a network of areas with precise interaction dynamics [21]. This is where the recently developed methods allow for in-depth analysis of the group fMRI data in order to uncover the processes that underlie visual memory encoding and the sub-networks that support these processes. Further, these methods permit building a model for a specific cognitive process, with that model later serving as the basis for examining the effects of a disease state (e.g., epilepsy [22]) on such a model and for identifying nodes of the network that are specifically affected by the disease. The combined application of ICA and GCA to the analysis of blood oxygenation-level dependent (BOLD) data allows for the analysis of functionally connected cognitive networks and of the causal relations between them without requiring a priori information or preconceptions. Previously, the combination of ICA and GCA has been successfully applied to various cognitive fMRI paradigms [23]–[28].

The first step in assessing the effects of disease states on cognitive networks is to build a robust model of the said network in healthy subjects so that these models can then be applied to testing and understanding of the cognitive deficits produced by the disease state. Recently, we have applied ICA to language fMRI data in order to build models for semantic decision, verb generation, and story processing [29]–[32], and we are currently testing the effects of stroke on such models. The aim of this study was to perform a comprehensive examination of the network for visual memory encoding using ICA and GCA of fMRI data to determine the directionality of the information flow and build a viable model of visual memory encoding that can serve as the basis for testing the effects of epilepsy (e.g., temporal vs. extra-temporal) on such a network.

Methods

Participants

Forty healthy controls (39% female) aged 19–59 (mean age = 33) with no history of neurological disorders or memory complaints were recruited. All subjects were included in our previous analyses of this task using GLM to provide functionally-defined fMRI regions of interest (ROIs) for the analyses of fMRI data collected in patients with epilepsy [22], [33]. This study was approved by the Institutional Review Boards (IRB) at the University of Cincinnati and the University of Alabama at Birmingham, and all participants provided written informed consent prior to enrollment. Data sharing permission has been obtained from the IRB and the raw data are available upon request from the authors.

Functional MRI task

A block-design functional MRI scene encoding task was employed for the purpose of this study [33]–[35]. This task was used and described in our recent publication [22]. Briefly, during the active condition, participants were presented with stimuli that represented a balanced mixture of indoor (50%) and outdoor (50%) scenes that included both images of inanimate objects and pictures of people and faces with neutral expressions. Attention to the task was monitored by asking participants to indicate whether the scene was indoor or outdoor using a button box held in the right hand. Participants were also instructed to memorize all scenes for later memory testing. During the control condition, participants viewed pairs of scrambled images and were asked to indicate using the same button box whether both images in each pair were the same or not (50% of pairs contained the same images). Use of the control condition allowed for subtraction of visuo-perceptual, decision-making, and motor aspects of the task, with the goal of improved isolation of the memory encoding aspect of the active condition. Participants completed a practice run before entering the scanner in order to ensure full comprehension of the task. Practice items included five indoor/outdoor scenes as well as five scrambled pictures. Participants did not proceed to the scanner until they responded to all 10 images correctly. The paradigm included 14 alternating blocks of scrambled pictures (7 blocks) and scenes (7 blocks), starting with a block of scrambled pictures, for a total of 70 target pictures and 70 scrambled control pairs. The duration of the task was 7′15″. Each image was presented for 2.5 seconds, followed by a white blank screen for 0.5 seconds. Five whole brain volumes (15 seconds) were collected prior to initiation of the fMRI task run to allow for T2* equilibration; these volumes were discarded.
Within 10–15 minutes of completing the scan, participants were administered a post-scan recognition test that included 60 indoor/outdoor scenes, with a balanced content of target and foil pictures. Foil pictures were chosen by matching contents and parameters of foil images to those presented in the scanner. Participants indicated whether they remembered seeing the picture in the scanner by pressing “Y” or “N” on a standard laptop keyboard (respectively “Yes” or “No”).
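
The block timing described above can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes that the 70 scenes are split evenly across the 7 active blocks (10 stimuli per block) and that the reported 7′15″ includes the five discarded volumes, which is one reading under which the reported numbers are mutually consistent. All variable names are hypothetical.

```python
TR_S = 3.0                    # repetition time of the EPI protocol (3000 ms)
STIMULUS_S = 2.5              # image presentation time
BLANK_S = 0.5                 # blank screen after each image
N_BLOCKS = 14                 # 7 control blocks + 7 active blocks
STIMULI_PER_BLOCK = 70 // 7   # assumption: stimuli split evenly over blocks
DISCARDED_VOLUMES = 5         # volumes acquired before the task starts

trial_s = STIMULUS_S + BLANK_S              # one trial spans exactly one TR
block_s = STIMULI_PER_BLOCK * trial_s       # duration of one block
task_s = N_BLOCKS * block_s                 # task duration without dummies
total_s = task_s + DISCARDED_VOLUMES * TR_S
task_volumes = int(task_s / TR_S)           # volumes retained for analysis
minutes, seconds = divmod(int(total_s), 60)

print(f"block = {block_s:.0f} s, task = {task_volumes} volumes, "
      f"run = {minutes}'{seconds:02d}\"")
```

Under these assumptions each trial coincides with one volume, which simplifies the mapping between stimuli and time points in later analyses.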

Functional MRI

Images were collected on a 4-Tesla Varian MRI scanner. For each participant, an anatomical T1 scan was first collected (TR = 13 ms; TE = 6 ms; FOV = 25.6×19.2×15.0; flip angle array of 3: 22/90/180 with a voxel size of 1×1×1 mm). Manual shimming was performed next and was followed by a multi-echo reference scan (MERS) collected for correction of geometric distortion and ghosting artifacts that occur at high field (Schmithorst et al., 2001). Then, fMRI scanning was completed in thirty 4-mm thick contiguous planes sufficient to encompass the apex of the cerebrum to the inferior aspect of the cerebellum in the adult brain using the following echo planar imaging (EPI) protocol: TR/TE = 3000/25 ms, FOV = 25.6×25.6 cm, matrix = 64×64 pixels, slice thickness = 4 mm, flip angle array: 85/180/180/90. Task stimuli were delivered using Psyscope 1.125 [36] running on an Apple Macintosh G3 computer. Subjects were equipped with a button box to record responses and to alert the MRI technologist to any problems if necessary. Head movement was minimized with the use of foam padding and head restraints.

Functional MRI data analysis

All imaging data were preprocessed and modeled using the Matlab toolbox SPM8 (http://www.fil.ion.ucl.ac.uk/spm/software/spm8/). First, functional images were corrected for time discrepancy between slices (slice timing, interleaved mode, second to last slice as reference), corrected for motion (motion parameters were calculated with SPM8), normalized (EPI-weighted template, trilinear interpolation, 2×2×2 mm voxel size), and spatially smoothed with an 8-mm full width at half-maximum kernel. The first 5 volumes were discarded from further analysis to allow for magnetic equilibration.

General Linear Modeling (GLM) analysis

The fMRI data were initially processed using standard GLM methods to contrast active and control task conditions in single-subject analysis, while also covarying for head motion parameters and MR signal drift. In order to determine whether typical activations were obtained with this task, group-level analysis was performed using a one-sample t-test of the GLM results. The resultant group activation maps were comparable to the results of our previous studies [22], [33] and to the results of similar investigations from the literature [35], [37]. These analyses indicated a typical pattern of BOLD signal changes when compared to these investigations (data not shown).

Independent component analysis (ICA)

For the purpose of this study we adopted a previously developed group ICA method [38]. Group ICA is commonly used for making group inferences from fMRI data of multiple subjects. In our study, this was carried out using the Group ICA of fMRI Toolbox (GIFT; http://icatb.sourceforge.net), which not only estimates individual spatial patterns but also facilitates investigation of group differences under the same study condition. The individual datasets were temporally concatenated and reduced for computational feasibility through three stages of principal component analysis in order to reach the final dataset, which was then decomposed by ICA with the Infomax algorithm into thirty-two spatially independent components [39]. Briefly, in GIFT, after each subject's functional data were reduced, the data were concatenated into groups (partitions) and put through another data reduction step. The number of datasets in a partition equals one-fourth of the number of datasets selected for analysis (here N = 40, thus each partition had 10 datasets). After reduction within each partition, the data were stacked into one group and put through the final data reduction. At this stage, the number of components was estimated using the minimum description length criteria [40], similarly to the common settings used in previous ICA-GCA studies [26]–[28], [41], [42]. The Infomax algorithm was repeated twenty times with randomly initialized decomposition matrices and the same convergence threshold using the ICASSO approach in GIFT [43]. Because finite data never follow the ICA model exactly, the estimated components can vary from run to run; ICASSO quantifies this variability and thereby allows the reliability of the generated components to be estimated [40], [43]. 
Subsequent to clustering of the obtained components, all centrotype-based components were selected and considered to be a stable result of the decomposition. Following back-reconstruction using the GICA3 algorithm [44], components and their timecourses were averaged over all subjects. After careful visual inspection of the spatio-temporal characteristics of each identified independent component (IC), components reflecting noise were discarded [45]. In order to select components that actively participate in visual memory processes, components whose time courses showed a significant increase during control blocks compared to active blocks were discarded. Further analyses were conducted on the full time course of the selected group-ICA components.
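
The selection rule described above (discarding components whose time course is significantly higher during control blocks than during active blocks) can be illustrated with a minimal sketch. The statistical test used for this step is not specified in the text; the sketch assumes a Welch t statistic on volumes labeled by block, ignores the hemodynamic delay, and uses a hypothetical cutoff and synthetic time courses. It is not the procedure implemented in GIFT.

```python
import math
import random
import statistics

VOLUMES_PER_BLOCK = 10   # assumption: 30-s blocks at TR = 3 s
N_BLOCKS = 14            # alternating blocks, starting with the control condition


def block_labels():
    """Return one condition label per volume for the alternating block design."""
    labels = []
    for block in range(N_BLOCKS):
        condition = "control" if block % 2 == 0 else "active"
        labels.extend([condition] * VOLUMES_PER_BLOCK)
    return labels


def welch_t(sample_a, sample_b):
    """Welch's t statistic for the difference of means (a minus b)."""
    se_a = statistics.variance(sample_a) / len(sample_a)
    se_b = statistics.variance(sample_b) / len(sample_b)
    return (statistics.mean(sample_a) - statistics.mean(sample_b)) / math.sqrt(se_a + se_b)


def control_minus_active_t(timecourse):
    """t statistic for control > active on a component time course."""
    labels = block_labels()
    control = [v for v, lab in zip(timecourse, labels) if lab == "control"]
    active = [v for v, lab in zip(timecourse, labels) if lab == "active"]
    return welch_t(control, active)


# Synthetic time courses (not study data): one component is higher during
# active blocks, the other is higher during control blocks.
rng = random.Random(1)
design = block_labels()
task_positive = [(1.0 if lab == "active" else 0.0) + rng.gauss(0.0, 0.5) for lab in design]
control_related = [(1.0 if lab == "control" else 0.0) + rng.gauss(0.0, 0.5) for lab in design]

T_THRESHOLD = 2.0  # hypothetical cutoff, chosen only for illustration
keep_task_positive = control_minus_active_t(task_positive) <= T_THRESHOLD
keep_control_related = control_minus_active_t(control_related) <= T_THRESHOLD
print(keep_task_positive, keep_control_related)
```

In a real analysis the block labels would typically be shifted or convolved with a hemodynamic response function before the comparison; that step is omitted here to keep the sketch short.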

Granger causality analysis (GCA)

Granger formalized the concept of causality for econometric purposes [46]. It is based on the common-sense notion that causes imply effects during the future evolution of events and, conversely, that the future cannot affect the past or present. Applied to temporal signals, if a time series “A” causes a time series “B”, then knowledge about “A” should improve the prediction of “B”. More specifically, causality may be evaluated by comparing the variance of the residuals after an autoregressive (AR) model is fitted to the past values of the reference signal “B” alone with the variance obtained when the autoregression is evaluated on the past values of both the signal “B” and the potentially causing signal “A”. GCA has been shown to be a viable technique for analyzing fMRI data [47]–[49], and its results have been shown not to vary after filtering [50]. Analysis of effective connectivity between the independent components was thus conducted using GCA, which models directional causality among multiple time series based on a vector autoregressive model [51]. The model order that represents the maximum time lag can be estimated using the Bayesian Information Criterion [52]. GCA was conducted by using a previously implemented MATLAB toolbox [53].
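
The residual-variance comparison that underlies GCA can be illustrated with a minimal bivariate sketch. This is not the MATLAB toolbox used in the study [53]: it assumes a fixed model order of 1 (no BIC selection), fits the two autoregressive models by ordinary least squares, reports only the log ratio of residual variances without a significance test, and runs on synthetic signals. The function names are hypothetical.

```python
import math
import random


def ols_residual_variance(rows, targets):
    """Fit targets ~ rows by ordinary least squares; return the residual variance."""
    n, k = len(rows), len(rows[0])
    # Normal equations (X^T X) beta = X^T y, stored as an augmented matrix.
    aug = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
           + [sum(r[i] * t for r, t in zip(rows, targets))]
           for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda row: abs(aug[row][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(k):
            if row != col:
                factor = aug[row][col] / aug[col][col]
                aug[row] = [u - factor * v for u, v in zip(aug[row], aug[col])]
    beta = [aug[i][k] / aug[i][i] for i in range(k)]
    residuals = [t - sum(b * v for b, v in zip(beta, r))
                 for r, t in zip(rows, targets)]
    return sum(e * e for e in residuals) / n


def granger_index(source, target, order=1):
    """Log ratio of restricted to full residual variance for source -> target."""
    restricted, full, y = [], [], []
    for t in range(order, len(target)):
        own_past = [target[t - lag] for lag in range(1, order + 1)]
        src_past = [source[t - lag] for lag in range(1, order + 1)]
        restricted.append([1.0] + own_past)          # target's own past only
        full.append([1.0] + own_past + src_past)     # plus the source's past
        y.append(target[t])
    return math.log(ols_residual_variance(restricted, y)
                    / ols_residual_variance(full, y))


# Synthetic demonstration: sig_b depends on the previous value of sig_a,
# but sig_a does not depend on sig_b.
rng = random.Random(0)
sig_a = [rng.gauss(0.0, 1.0) for _ in range(500)]
sig_b = [0.0]
for t in range(1, 500):
    sig_b.append(0.8 * sig_a[t - 1] + 0.1 * rng.gauss(0.0, 1.0))

a_to_b = granger_index(sig_a, sig_b)
b_to_a = granger_index(sig_b, sig_a)
print(f"A -> B: {a_to_b:.3f}, B -> A: {b_to_a:.3f}")
```

A large index in one direction and a near-zero index in the other corresponds to a unidirectional arrow in a causality diagram; in practice each index would also be tested for significance and the model order chosen by a criterion such as BIC.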

Results

Behavioral results

All subjects performed well above chance on the in-scanner scene and scrambled picture pair identification. Performance for the scrambled picture pair identification (control condition; 93.25±4.1%) was significantly better (p = 0.003) than for the indoor/outdoor scenes (active condition; 90.86±3%). All subjects also performed well above chance on the post-scan scene recognition task (86.33±5.83%).

Independent component analysis

Thirty-two ICA components were identified. Of these, 10 were determined to be task-related (i.e., not representing noise or components related to the control condition) and were included in further analyses and model generation (Table 1). Each retained component was attributed to a particular network based on previously published data.

Table 1. Cortical localizations of the 10 task-related independent components: for each component we present the anatomical location, corresponding Brodmann area(s), and the maximum Z-score with its Talairach coordinates (obtained using the Talairach utility provided in the GIFT toolbox on group-ICA component maps).

| Component ID | Area | Brodmann Area | Max Z-score (x, y, z) L/R |
| --- | --- | --- | --- |
| 2 | Superior Frontal Gyrus | 6, 8, 9, 10 | 7.8 (−2, 59, 23)/8.7 (4, 56, 34) |
| | Medial Frontal Gyrus | 6, 8, 9, 10 | 7.6 (−4, 56, 34)/7.9 (6, 52, 36) |
| | Anterior Cingulate | 32 | 3.9 (−2, 47, 9)/3.7 (2, 45, 9) |
| | Middle Frontal Gyrus | 8, 9, 10 | 3.1 (−22, 59, 21)/3.2 (22, 59, 21) |
| 5 | Cingulate Gyrus | 23, 24, 31 | 6.9 (−2, −49, 28)/7.3 (2, −47, 28) |
| | Precuneus | 7, 19, 23, 31, 39 | 6.7 (−2, −49, 32)/7.1 (2, −47, 32) |
| | Posterior Cingulate | 23, 29, 30, 31 | 6.5 (−2, −49, 25)/7.0 (2, −47, 24) |
| | Cuneus | 7, 18, 19 | 6.0 (0, −66, 33)/5.5 (4, −66, 31) |
| | Angular Gyrus | 39 | 3.5 (−46, −66, 36)/3.1 (51, −63, 31) |
| | Inferior Parietal Lobule | 39, 40 | 3.3 (−46, −64, 40)/3.0 (50, −60, 38) |
| | Supramarginal Gyrus | 40 | 2.9 (−51, −59, 32)/3.3 (53, −59, 31) |
| | Superior Temporal Gyrus | 39 | 3.1 (−53, −61, 29)/3.2 (53, −59, 27) |
| | Middle Temporal Gyrus | 39 | 3.2 (−50, −63, 29)/3.1 (53, −61, 23) |
| 10 | Middle Frontal Gyrus | 6, 8, 9, 10, 46 | 7.3 (−50, 17, 29)/NA |
| | Inferior Frontal Gyrus | 9, 10, 44, 45, 46 | 7.0 (−50, 13, 29)/NA |
| | Precentral Gyrus | 6, 9, 44 | 5.2 (−46, 19, 36)/NA |
| | Medial Frontal Gyrus | 6, 8, 9 | 4.4 (−2, 39, 40)/3.9 (2, 39, 40) |
| | Superior Frontal Gyrus | 6, 8, 9 | 4.1 (−30, 20, 52)/3.5 (2, 35, 46) |
| | Inferior Parietal Lobule | 7, 39, 40 | 3.2 (−46, −56, 43)/NA |
| | Precuneus | 19, 39 | 3.0 (−40, −70, 42)/NA |
| | Angular Gyrus | 39 | 2.9 (−50, −61, 33)/NA |
| | Supramarginal Gyrus | * | 2.8 (−51, −57, 30)/NA |
| | Superior Parietal Lobule | 7 | 2.8 (−42, −58, 51)/NA |
| | Middle Temporal Gyrus | * | 2.8 (−50, −61, 29)/NA |
| | Cingulate Gyrus | * | 2.7 (−2, 23, 39)/NA |
| 19 | Inferior Frontal Gyrus | 6, 9, 10, 44, 45, 46, 47 | NA/5.6 (53, 19, 25) |
| | Middle Frontal Gyrus | 6, 8, 9, 10, 11, 46, 47 | NA/5.6 (51, 17, 32) |
| | Superior Frontal Gyrus | 6, 8, 9, 10 | NA/4.7 (34, 22, 50) |
| | Precentral Gyrus | 6, 9, 44 | NA/4.4 (46, 21, 36) |
| | Medial Frontal Gyrus | 6, 8, 9 | NA/3.4 (6, 31, 37) |
| | Inferior Parietal Lobule | 7, 39, 40 | NA/3.2 (50, −58, 40) |
| | Angular Gyrus | 39 | NA/3.1 (50, −58, 36) |
| | Precuneus | 19, 39 | NA/2.9 (40, −68, 38) |
| | Cingulate Gyrus | 32 | NA/2.9 (6, 23, 39) |
| | Supramarginal Gyrus | 40 | NA/2.8 (53, −57, 30) |
| 20 | Posterior Cingulate | 23, 29, 30, 31 | 7.4 (−4, −60, 9)/7.4 (4, −62, 10) |
| | Culmen of Vermis | * | 7.3 (0, −60, 1)/6.2 (4, −60, 0) |
| | Cuneus | 7, 17, 18, 19, 23, 30 | 7.2 (−4, −64, 9)/7.0 (4, −64, 7) |
| | Culmen | * | 7.1 (−2, −56, 1)/6.9 (2, −56, 1) |
| | Lingual Gyrus | 18, 19 | 7.0 (−4, −64, 5)/5.9 (4, −68, 5) |
| | Precuneus | 23, 31 | 6.7 (0, −69, 18)/6.4 (4, −61, 18) |
| | Cingulate Gyrus | 31 | 3.5 (0, −59, 27)/3.0 (4, −61, 29) |
| | Parahippocampal Gyrus | 30 | NA/2.7 (12, −48, 4) |
| 23 | Lingual Gyrus | 17, 18, 19 | 9.0 (0, −85, 3)/8.4 (4, −85, 3) |
| | Cuneus | 17, 18, 19, 23, 30 | 8.6 (−2, −87, 6)/7.6 (4, −83, 6) |
| | Declive | * | 6.6 (−4, −80, −11)/6.2 (6, −80, −11) |
| | Declive of Vermis | * | 5.1 (−2, −74, −11)/5.1 (2, −74, −10) |
| | Middle Occipital Gyrus | 18 | 4.6 (−10, −91, 14)/4.0 (10, −91, 16) |
| | Culmen | * | 4.4 (−10, −68, −8)/3.6 (12, −68, −8) |
| | Fusiform Gyrus | 19 | 4.1 (−20, −80, −11)/3.0 (22, −82, −13) |
| 24 | Postcentral Gyrus | 1, 2, 4, 5, 7 | 6.1 (−4, −51, 67)/5.3 (6, −49, 65) |
| | Precuneus | 7 | 5.2 (−2, −55, 60)/5.8 (4, −59, 60) |
| | Paracentral Lobule | 4, 5, 6, 31 | 4.4 (−2, −44, 54)/4.7 (2, −42, 54) |
| | Superior Parietal Lobule | 7 | 4.2 (−6, −63, 57)/3.8 (10, −65, 57) |
| | Medial Frontal Gyrus | 6 | 3.0 (−4, −18, 67)/4.0 (4, −10, 67) |
| | Precentral Gyrus | 4, 6 | 3.8 (−32, −22, 67)/2.6 (36, −20, 67) |
| | Inferior Parietal Lobule | 40 | 3.2 (−46, −44, 57)/NA |
| | Superior Frontal Gyrus | * | 2.8 (−30, −6, 65)/NA |
| | Thalamus | * | 2.8 (−4, −5, 9)/2.8 (4, −5, 9) |
| | Anterior Cingulate | * | NA/2.7 (2, 11, 25) |
| 29 | Culmen | * | 8.1 (−22, −49, −11)/9.6 (24, −51, −11) |
| | Declive | * | 8.4 (−24, −53, −11)/8.8 (24, −55, −12) |
| | Fusiform Gyrus | 19, 20, 37 | 7.3 (−22, −53, −7)/8.4 (24, −55, −9) |
| | Parahippocampal Gyrus | 19, 30, 36, 37 | 5.9 (−26, −45, −10)/7.2 (26, −47, −8) |
| | Lingual Gyrus | 18, 19, 30 | 3.3 (−28, −60, −5)/4.9 (22, −59, −5) |
| 30 | Superior Temporal Gyrus | 13, 21, 22, 38, 41 | 5.7 (−46, −12, −6)/5.7 (46, −16, −6) |
| | Insula | 13, 22 | 5.3 (−42, −16, −6)/5.5 (44, −12, −6) |
| | Middle Temporal Gyrus | 21, 22, 38 | 5.0 (−50, −16, −6)/4.8 (50, −20, −4) |
| | Claustrum | * | 4.2 (−38, −23, 1)/3.7 (36, −14, −4) |
| | Superior Frontal Gyrus | 6, 8 | 3.0 (−8, 41, 50)/3.1 (4, 39, 51) |
| | Lentiform Nucleus | * | 2.9 (−32, −16, 1)/2.7 (32, −19, −1) |
| | Caudate | * | 2.8 (−34, −27, −4)/2.6 (34, −25, −4) |
| 31 | Culmen | * | 16.0 (0, −47, −9)/15.0 (4, −47, −9) |
| | Cerebellar Lingual | * | 15.3 (0, −43, −10)/14.4 (4, −43, −10) |
| | Declive | * | 11.0 (0, −55, −12)/9.7 (4, −55, −12) |
| | Culmen of Vermis | * | 9.6 (0, −63, −9)/7.9 (4, −62, −5) |
| | Declive of Vermis | * | 5.8 (0, −71, −12)/3.9 (0, −69, −15) |
| | Lingual Gyrus | 18, 19 | 3.8 (4, −74, −6)/3.3 (16, −60, −5) |
| | Fusiform Gyrus | 19, 37 | 3.6 (−24, −49, −9)/3.9 (24, −51, −9) |
| | Parahippocampal Gyrus | 19, 36, 37 | 3.4 (−24, −45, −10)/3.7 (24, −47, −9) |

Granger causality analysis

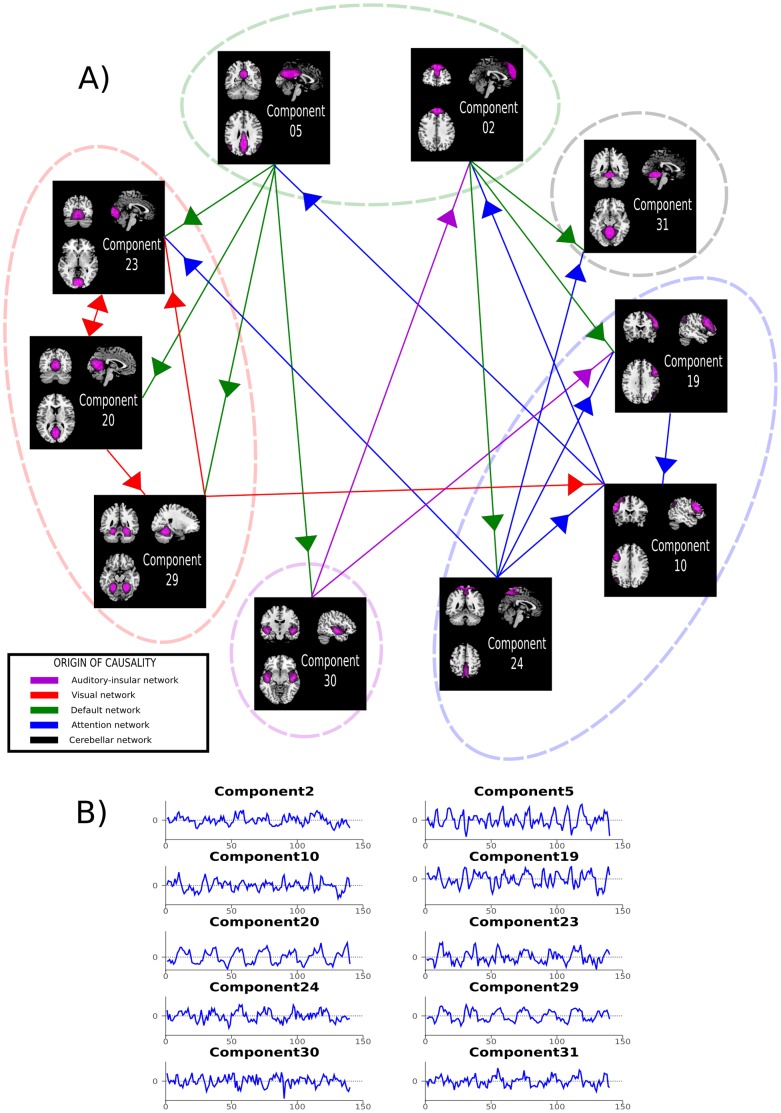

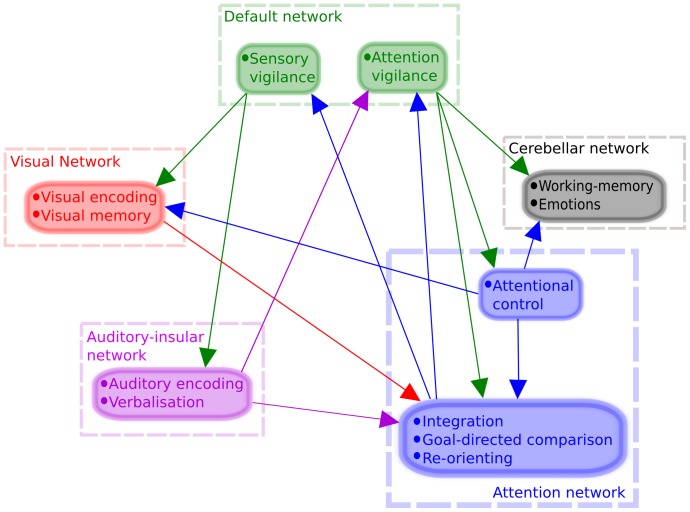

Significant causality relations between each of the ten components (p<0.05; corrected for FDR) have been observed (Figure 1). These components are grouped into five networks that take part in the process of visual memory encoding: auditory, visual, default mode, attention, and cerebellar. Based on these networks a model of memory encoding is created, and the relative contributions of each of the specific networks are depicted in Figure 2. Below, we provide a description of each of the components with their potential implication for the scene-encoding memory network (Figure 1), the results of the causality analyses, and the construction of a visual memory model based on those results.
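
The FDR correction mentioned above can be illustrated with a short sketch. The text does not state which FDR procedure was used; the sketch assumes the Benjamini-Hochberg step-up procedure, and the p-values are hypothetical values chosen for illustration, not results from this study. With ten components, there are 10 × 9 = 90 directed pairs that can be tested.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return booleans marking which tests survive Benjamini-Hochberg FDR control at level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k (1-based) whose sorted p-value satisfies p_(k) <= (k / m) * q.
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            cutoff_rank = rank
    # All tests ranked at or below the cutoff are declared significant.
    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= cutoff_rank:
            significant[idx] = True
    return significant


n_components = 10
n_directed_pairs = n_components * (n_components - 1)  # ordered pairs, A -> B and B -> A

# Hypothetical p-values for ten directed relations (illustration only).
example_p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216]
flags = benjamini_hochberg(example_p)
print(n_directed_pairs, flags)
```

In this example several uncorrected p-values fall below 0.05, but only the two smallest survive the correction, which shows why corrected and uncorrected causality diagrams can differ substantially.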

Figure 1.

A) Relations and directionality of the information flow between task-related ICs. Details regarding each component are provided in Table 1. Each component was attributed to a particular network (see Discussion section for a precise analysis). Each arrow indicates a significant (p<0.05, FDR corrected) causal relation between two components. Component representations are in neurological convention (left hemisphere is on the left side of the image). B) Respective timecourses of the components depicted in A).

Figure 2. Proposed model for visual memory encoding based on results obtained in Figure 1.

A precise description of the model is provided in the Discussion section.

It should be kept in mind that GCA only provides information about causality between two events. The inferences made below about the temporal relationship between multiple events, which are based on the GCA results, add to the current state of knowledge about the cognitive functions related to the task performed by the subjects. However, no statistical test of the significance of such inferences is provided (see Discussion section).

Independent components of the default mode network

Two ICs (IC 02 and IC 05) were identified that are typically shown to be activated when subjects are at rest and relaxed (Figure 1) [54]. However, in this study, these components are task-positive, i.e., the activation in these areas occurred while the participants were performing the task at hand. Although seemingly counterintuitive, the default mode network that is activated here has been described as being involved in maintaining vigilance; it may be responsible for preparation for the new stimulus that is expected to come [55] and/or be involved in the modulation of the level of attention [56].

IC 02 is the superior frontal and anterior cingulate component of the default mode network that was previously found to participate in stimulus-oriented attention [57]. Further, this area has been linked to working memory and episodic memory encoding [58]. The IC 05 is the posterior/retrosplenial component of the default mode network that has been suggested to be an important node for information integration [59]. It was also previously shown to be involved in arousal and awareness [60], [61], controlling balance between internal and external attention [62], and in detection of environmental changes [55], [63], [64]. It was recently suggested that the posterior cingulate cortex also participates in a system that regulates the attentional focus [56].

Independent components of the attention network

Three of the identified components appear to be a part of the network responsible for maintenance of attention. These components include ICs 10, 19, and 24 (Figure 1). The components 10 and 19 need to be considered at the same time as they are thought to constitute the fronto-parietal attention network [65]. The hemispheric temporal divergence between those two components suggests differences in cognitive functions within the same network. It has been suggested that the left and right frontal lobes have different involvement in the encoding and retrieval process with the left responsible for retrieval of semantic memory and simultaneous encoding of novel information into episodic memory while the right prefrontal regions are involved in the process of episodic memory retrieval (hemispheric encoding/retrieval asymmetry or ‘HERA’ model) [66]. Thus, participation in the attention network but somewhat different timing of that participation is easily explained when the HERA model of memory is taken into account. Further, in the right hemisphere, these components have been linked to inhibition and attentional control [67], stimulus-driven reorienting and resetting task-relevant networks [68], and also selective attention and target detection [69]. The right inferior frontal cortex (rIFC) was shown to be critical for behavioral updating, as in a go/no-go task [70], [71]. Clinical studies have put this component forward as a strong candidate for cortical area responsible for cognitive control [72], [73]. This area was also implicated in maintaining attention [67], [74]–[76]. Further, some studies have identified bilateral inferior frontal junction (IFJ) in the detection of visual motion whereas color detection preferentially engaged right IFJ [18], [77]. Other studies also identified hemispheric differences in IFJ activity using visual stimuli in that different fronto-parietal regions were found to be involved in attention to motion versus color features [78], [79]. 
The right IFJ has also been suggested to be involved in the selection of behaviorally relevant stimulus features [80].

Finally, IC 24 involves activation of the intra-parietal sulcus and its surrounding cortical areas, which have been strongly implicated in many higher cognitive functions such as spatial orienting and reorienting [81], [82]. These functions are necessary for the performance of the fMRI task, which involves rapid shifting between visual analysis of indoor and outdoor scenes and analysis of matched and unmatched scrambled pictures.

Independent components of the visual network

Three specific components belonging to this network were identified: ICs 20, 23 and 29 (Figure 1). The identified components cover primary and secondary visual areas (V1, V2 and V3). Due to the visual nature of this fMRI task, the involvement of the primary and secondary visual cortices (ICs 20 and 23) is expected, as such involvement was previously seen in this and similar versions of the task [34], [83]. Further, since the scenes and scrambled pictures were presented visually, the visual cortices are involved in the processing of this task first. The sequential involvement of these areas most likely reflects the differences in retinotopy between the polar part and the frontal part of the calcarine sulcus [4], [5]. IC 29 appears to be a part of the cortical network for vision; it was previously identified as belonging to the ventral/ventrolateral visual stream [84]. This cortical area was found to be activated in studies where participants had to perform a task of face vs. non-face recognition [85]. This component probably reflects the process of categorization of the visual stimulus and/or the differences in processing formed vs. unformed images.

Independent component of the auditory-insular network

A single component, IC 30, was identified as part of the auditory-insular network. Anatomically, this component includes predominantly primary and association auditory cortices. The activation in this cortical area corresponds most strongly to action–execution–speech, cognition–language–speech, and perception–audition paradigms [84]. Further, this component also includes the posterior insula. Recently, three distinct cytoarchitectonic areas were identified in the human posterior insula [86]. Thus, it appears reasonable to think that these subdivisions form the anatomical substrate of a diversified mosaic of structurally and functionally distinct cortical areas. This may explain why activations in the insula have been reported for virtually all cognitive, affective, and sensory paradigms tested in functional imaging studies and have also been implicated by research in nonhuman primates. However, the most reliable evidence of an involvement of the posterior insula has been obtained in studies investigating painful [87], somatosensory [88], auditory [89], and interoceptive stimuli [90], as well as motor and language paradigms [91]–[93]. Thus, it is not surprising to note the involvement of these cortical areas in the execution of a task that involves not only visual processing but also other cognitive processes such as working memory, face and scene recognition, and decision making.

Independent component of the cerebellar network

A single and fairly large superior cerebellar component, IC 31, was identified. The superior cerebellum, especially the midline cerebellum, has been implicated in many specific processes including, e.g., language, emotion processing, and visual memory manipulation [94]–[96]. Because of the inclusion of scenes with people in them and of faces with neutral expressions, it is possible that subjects not only judged whether the individual was indoors or outdoors but also focused on facial emotions. Previous studies have postulated that the cerebellum is involved in emotional processing via the cerebellar-hypothalamic pathways [97], [98]. A recent meta-analysis of “cerebellar” studies documented the involvement of the vermis and superior cerebellar hemispheres in emotional processing and postulated that these activations may be related to the decision-making process in the studies of emotions rather than to emotional processing itself [99]. Further, as alluded to above, cerebellar involvement could be related to the process of working memory manipulation [94].

Network for visual encoding of scenes

Taken together, several nodes from multiple cognitive networks take part in the process of visual scene encoding. This process, the participation of the various nodes, and the directionality of the relationships are depicted in Figure 2. It is clear that the visually presented information enters the cognitive process via occipital visual cortices. A visual stimulus is retinotopically encoded in the primary visual cortex [5]; the participation of ICs 20 and 23 likely reflects this encoding through the bidirectional information exchange between the two components. The third component of the visual network, IC 29, is characteristic of the so-called ventral visual pathway, associated with object recognition and form representation [100]. The presence of this component is important because it explains the further passage of the visually presented information to the other parts of the network via occipito-temporal connections, which are responsible for fine encoding and maintenance of a visual stimulus in visual working memory through feedforward and feedback connections [6]. The only causal outflow from IC 29 is to IC 10, one of the attentional components, which has several uni- or bidirectional connections within the attention network and later outflow connections to other components of the network for visual scene encoding.

This attention network is subdivided into fronto-parietal nodes (ICs 10 and 19) and a parietal node (IC 24). Altogether, these three components have been suggested to reflect a network that emphasizes start-cue and error-related activity and may initiate and adapt control on a trial-by-trial basis [101]. Considering that within this network causal relations were found from IC 24 to both ICs 10 and 19, but none toward IC 24, we suggest that the parietal component (IC 24) is the one responsible for adapting attentional control. The fact that this component receives a causal influence only from the default mode network (IC 02) further strengthens this hypothesis. Therefore, we posit that the two other components play a role in integrating information and analyzing that information in a task-driven way (cue and error-related activity). Indeed, both of the fronto-parietal components receive causal influence from sensory networks (visual and auditory). These findings are in agreement with the previously proposed HERA model for memory encoding and retrieval [66].

The left fronto-parietal component (IC 10) receives a causal influence from the visual ventral pathway network (IC 29). This causal link from the so-called “what” visual pathway to cortical regions highly involved in language is likely to reflect the verbalization of the visual stimulus [102]. Moreover, the right fronto-parietal node (IC 19) receives a causal influence from the auditory-insular network (IC 30) and has a causal influence on the left fronto-parietal component (IC 10). Both of those links could also reflect an involvement in the verbalization of the visual stimulus. We have recently shown that right hemisphere regions encompassed by the right fronto-parietal component (IC 19) can influence intra- or extra-scanner behavioral performance in semantic processing [103].

Within the attentional network, the left fronto-parietal component is the only one to have a causal influence on both components of the default-mode network (ICs 02 and 05). After encoding a visual stimulus with visual features and semantic information, the left fronto-parietal component is likely “activating” the default mode network in order to get ready for the next stimulus to come. Although it has been shown that right fronto-parietal regions are involved in stimulus-driven reorienting and resetting of the task-relevant networks [68], it is possible that the flow of information corresponding to this reorienting process needs to go through left fronto-parietal regions. This hypothesis is in agreement with recent results showing that the left dorso-lateral prefrontal cortex plays a necessary role in the implementation of choice-induced preference change [104].

The default mode network and the cerebellar network play very important roles in the process of scene encoding. It is likely that the default network is involved in maintaining a certain level of vigilance, preparing for a new stimulus to come [55], and modulating the level of attentional focus [56]. Our results document a dual role of the default mode network. The posterior component (IC 05) has a causal influence on sensory networks (visual and auditory), and the frontal component (IC 02) has a causal influence on the attention network and on the cerebellar network.

Within the framework of the present study, one can only speculate about the role of the cerebellar component. This component does not have a causal influence on any other network described here. It is possible that this component is actively involved in several cognitive processes related to this task, as described above.

Finally, the auditory-insular network, like the visual network, receives causal influence from the parietal component of the default network. This is consistent with the hypothesis that the default network is responsible for maintaining a certain level of vigilance in sensory areas [55]. The auditory network has a causal influence on the left fronto-parietal component, sending auditory information for spatial and verbal integration (see attention network paragraph in discussion).
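The causal links named in this section can be collected into a small directed graph, which makes it easy to check statements such as "the cerebellar component has no outflow". The sketch below is one reading of the text above, not a reproduction of Figure 2. The node labels, the `CAUSAL_LINKS` list, and the helper names are illustrative assumptions, and links whose target component is not named by IC number (for example, from the posterior default mode component to the visual network) are left out.

```python
from collections import defaultdict

# Directed (source, target) links transcribed from the prose of this section.
# Assumption: only links with both ends identifiable by IC number are listed;
# the auditory-insular link follows the paragraph that names IC 30 explicitly.
CAUSAL_LINKS = [
    ("IC20", "IC23"), ("IC23", "IC20"),  # bidirectional exchange in visual cortex
    ("IC29", "IC10"),                    # ventral visual pathway -> left fronto-parietal
    ("IC24", "IC10"), ("IC24", "IC19"),  # parietal node -> both fronto-parietal nodes
    ("IC02", "IC24"),                    # frontal default mode -> parietal node
    ("IC30", "IC19"),                    # auditory-insular -> right fronto-parietal
    ("IC19", "IC10"),                    # right -> left fronto-parietal
    ("IC10", "IC02"), ("IC10", "IC05"),  # left fronto-parietal -> default mode
    ("IC05", "IC30"),                    # posterior default mode -> auditory-insular
    ("IC02", "IC31"),                    # frontal default mode -> cerebellar
]


def build_adjacency(links):
    """Return (outgoing, incoming) maps from a component to a set of components."""
    outgoing, incoming = defaultdict(set), defaultdict(set)
    for source, target in links:
        outgoing[source].add(target)
        incoming[target].add(source)
    return outgoing, incoming


def components_without_outflow(links):
    """List components that never appear as the source of a link."""
    outgoing, _ = build_adjacency(links)
    nodes = {node for link in links for node in link}
    return sorted(node for node in nodes if node not in outgoing)
```

With these links, `components_without_outflow(CAUSAL_LINKS)` returns only the cerebellar component (IC 31), and the sole input to IC 24 is IC 02, matching the description above.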

Discussion

In this study, ICA and GCA were used to build a model of visual memory encoding based on fMRI data obtained from healthy subjects performing a visual scene-encoding task. Such a model for visual memory encoding based on human brain activity and functional connectivity during a scene-encoding task has not been developed to date. Building the groundwork that can be used as a baseline for future investigation of the effects of disease states on such a network is therefore essential. As depicted in Figure 1, several components partake in the process of encoding visually presented stimuli. The nodes responsible for this process in healthy subjects are parts of five different networks: the auditory, visual, default, attention, and cerebellar networks. Above, we discussed the relative contributions of the components of these networks and the integration of these seemingly unrelated components into an interactive network responsible for the complex task of visual memory encoding.

The final level of data analysis used in this study, Granger causality analysis, is a statistical method for assessing causal influences between two simultaneously recorded time series [105]. A caveat to the interpretation of the results must therefore be considered. It is important to note that this algorithm cannot by itself assess the existence of a cascade of events; any such cascade remains speculative. Also, GCA is very sensitive to BOLD fluctuations and to the fact that the BOLD response may differ between cortical areas [106]. However, inferences can be made about the temporality of multiple events based on our current knowledge of brain cognitive functions. Thus, while the above caveat puts the results of the study into perspective, the results may be interpreted as a series of events that need to occur in order for the cognitive process to be conducted efficiently. Moreover, the aim of the present paper is to build a model of visual memory that could potentially be used in further analyses and be compared to the results of analyses that use other data processing methods.
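To make the pairwise logic of Granger causality concrete, the following sketch compares how well the past of one time series predicts another beyond that series' own past. It is an illustration on synthetic data and not the analysis pipeline used in this study. The function names, the fixed model order of 1, and the simulated coupling are all assumptions made for the example.

```python
import numpy as np


def lagged_design(series, order):
    """Stack lags 1..order of a 1-D series as columns, aligned to series[order:]."""
    n = len(series)
    return np.column_stack([series[order - k : n - k] for k in range(1, order + 1)])


def residual_sum_of_squares(design, y):
    """Ordinary least-squares fit of y on design; return the residual sum of squares."""
    coefficients = np.linalg.lstsq(design, y, rcond=None)[0]
    return float(np.sum((y - design @ coefficients) ** 2))


def granger_strength(source, target, order=1):
    """Log ratio of residual sums of squares: target's own past vs. own past plus source's past."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    y = target[order:]
    intercept = np.ones((len(y), 1))
    own_past = lagged_design(target, order)
    source_past = lagged_design(source, order)
    restricted = np.hstack([intercept, own_past])
    full = np.hstack([intercept, own_past, source_past])
    return float(np.log(residual_sum_of_squares(restricted, y)
                        / residual_sum_of_squares(full, y)))


def simulate_pair(n=500, coupling=0.8, seed=0):
    """Synthetic example (assumption): x is white noise and drives y with a lag of one sample."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(n)
    y = np.zeros(n)
    for t in range(1, n):
        y[t] = coupling * x[t - 1] + 0.5 * rng.standard_normal()
    return x, y
```

For the simulated pair, `granger_strength(x, y)` is clearly larger than `granger_strength(y, x)`, which is the asymmetry interpreted as a direction of influence. Real BOLD time series would additionally require model-order selection and a statistical test, both of which this sketch omits.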

In summary, this study identified several components of the network responsible for scene encoding and evaluated the directionality of the information flow within the network in order to build a model for visual memory encoding. While not complete, the proposed model lays the groundwork for further exploration of the processes and connections that are important for the maintenance and correct functionality of this network and for the examination of effects of various disease processes that may affect the functionality of this network, e.g., epilepsy.

Acknowledgments

Christi Banks, CCRC and Kristina Bigras, MA helped with data collection. This study was presented in part at the American Epilepsy Society Meeting in Washington, DC.

Data Availability

The authors confirm that all data underlying the findings are fully available without restriction. The use of any human data is regulated by the Institutional Review Board (IRB). While the IRB at the University of Alabama at Birmingham has agreed to make the data available to the public, it requires us to monitor the distribution and use of the data. The data are therefore available upon request and requests may be sent to: Jerzy P. Szaflarski (szaflaj@uab.edu).

Funding Statement

This study was supported in part by The UC Neuroscience Institute (JPS) and in part by R01 NS048281 (JPS). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Tulving E, Markowitsch HJ (1998) Episodic and declarative memory: role of the hippocampus. Hippocampus 8: 198–204 Available: http://www.ncbi.nlm.nih.gov/pubmed/9662134 Accessed 23 November 2013.

- 2. Davachi L (2006) Item, context and relational episodic encoding in humans. Curr Opin Neurobiol 16: 693–700 Available: http://www.ncbi.nlm.nih.gov/pubmed/17097284 Accessed 11 November 2013.

- 3. Jonides J, Lewis RL, Nee DE, Lustig CA, Berman MG, et al. (2008) The mind and brain of short-term memory. Annu Rev Psychol 59: 193–224 Available: http://www.ncbi.nlm.nih.gov/pubmed/17854286.

- 4. Benson NC, Butt OH, Datta R, Radoeva PD, Brainard DH, et al. (2012) The retinotopic organization of striate cortex is well predicted by surface topology. Curr Biol 22: 2081–2085 Available: http://www.ncbi.nlm.nih.gov/pubmed/23041195 Accessed 1 February 2013.

- 5. Wandell B, Winawer J (2011) Imaging retinotopic maps in the human brain. Vision Res 51: 718–737 Available: http://www.sciencedirect.com/science/article/pii/S0042698910003780 Accessed 13 November 2013.

- 6. Kravitz DJ, Saleem KS, Baker CI, Ungerleider LG, Mishkin M (2013) The ventral visual pathway: an expanded neural framework for the processing of object quality. Trends Cogn Sci 17: 26–49 Available: http://www.ncbi.nlm.nih.gov/pubmed/23265839 Accessed 9 November 2013.

- 7. Norman-Haignere SV, McCarthy G, Chun MM, Turk-Browne NB (2012) Category-selective background connectivity in ventral visual cortex. Cereb Cortex 22: 391–402 10.1093/cercor/bhr118

- 8. Corbetta M, Shulman GL (2002) Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci 3: 201–215 Available: 10.1038/nrn755 Accessed 3 May 2011.

- 9. Kravitz DJ, Saleem KS, Baker CI, Mishkin M (2011) A new neural framework for visuospatial processing. Nat Rev Neurosci 12: 217–230 Available: http://www.ncbi.nlm.nih.gov/pubmed/21415848 Accessed 12 July 2011.

- 10. Luck SJ, Vogel EK (1997) The capacity of visual working memory for features and conjunctions. Nature 390: 279–281 Available: 10.1038/36846.

- 11. Posner MI, Petersen SE (1990) The attention system of the human brain. Annu Rev Neurosci 13: 25–42 Available: 10.1146/annurev.ne.13.030190.000325.

- 12. Carrasco M, Giordano AM, McElree B (2004) Temporal performance fields: visual and attentional factors. Vision Res 44: 1351–1365 Available: http://www.ncbi.nlm.nih.gov/pubmed/15066395 Accessed 13 December 2013.

- 13. Shiu LP, Pashler H (1995) Spatial attention and vernier acuity. Vision Res 35: 337–343 Available: http://www.ncbi.nlm.nih.gov/pubmed/7892729 Accessed 13 December 2013.

- 14. Carrasco M, Loula F, Ho Y-X (2006) How attention enhances spatial resolution: evidence from selective adaptation to spatial frequency. Percept Psychophys 68: 1004–1012 Available: http://www.ncbi.nlm.nih.gov/pubmed/17153194 Accessed 13 December 2013.

- 15. Bressler SL, Tang W, Sylvester CM, Shulman GL, Corbetta M (2008) Top-Down Control of Human Visual Cortex by Frontal and Parietal Cortex in Anticipatory Visual Spatial Attention. J Neurosci 28: 10056–10061 Available: http://www.jneurosci.org/content/28/40/10056.abstract Accessed 3 May 2011.

- 16. Wen X, Yao L, Liu Y, Ding M (2012) Causal Interactions in Attention Networks Predict Behavioral Performance. J Neurosci 32: 1284–1292 Available: http://www.jneurosci.org/content/32/4/1284.abstract.

- 17. Nenert R, Viswanathan S, Dubuc DM, Visscher KM (2012) Modulations of ongoing alpha oscillations predict successful short-term visual memory encoding. Front Hum Neurosci 6: 1–11 Available: http://www.frontiersin.org/Human_Neuroscience/10.3389/fnhum.2012.00127/abstract Accessed 8 May 2012.

- 18. Zanto TP, Rubens MT, Thangavel A, Gazzaley A (2011) Causal role of the prefrontal cortex in top-down modulation of visual processing and working memory. Nat Neurosci 14: 656–661 Available: http://www.ncbi.nlm.nih.gov/pubmed/21441920 Accessed 3 May 2011.

- 19. Capotosto P, Babiloni C, Romani GL, Corbetta M (2009) Frontoparietal Cortex Controls Spatial Attention through Modulation of Anticipatory Alpha Rhythms. J Neurosci 29: 5863–5872 Available: http://www.jneurosci.org/content/29/18/5863.abstract.

- 20. Poline J-BB, Brett M (2012) The general linear model and fMRI: Does love last forever? Neuroimage: 1–10. Available: http://www.ncbi.nlm.nih.gov/pubmed/22343127. Accessed 15 March 2012.

- 21. McIntosh AR (2000) Towards a network theory of cognition. Neural Netw 13: 861–870 Available: http://www.ncbi.nlm.nih.gov/pubmed/11156197 Accessed 13 December 2013.

- 22. Bigras C, Shear PK, Vannest J, Allendorfer JB, Szaflarski JP (2013) The effects of temporal lobe epilepsy on scene encoding. Epilepsy Behav 26: 11–21 Available: http://www.ncbi.nlm.nih.gov/pubmed/23207513 Accessed 1 May 2013.

- 23. Liu Z, Bai L, Dai R, Zhong C, Wang H, et al. (2012) Exploring the effective connectivity of resting state networks in mild cognitive impairment: an fMRI study combining ICA and multivariate Granger causality analysis. Conf Proc IEEE Eng Med Biol Soc 2012: 5454–5457 Available: http://www.ncbi.nlm.nih.gov/pubmed/23367163.

- 24. Londei A, D'Ausilio A, Basso D, Sestieri C, Del Gratta C, et al. (2007) Brain network for passive word listening as evaluated with ICA and Granger causality. Brain Res Bull 72: 284–292 Available: http://www.ncbi.nlm.nih.gov/pubmed/17452288 Accessed 26 September 2013.

- 25. Liao W, Mantini D, Zhang Z, Pan Z, Ding J, et al. (2010) Evaluating the effective connectivity of resting state networks using conditional Granger causality. Biol Cybern 102: 57–69 Available: http://www.ncbi.nlm.nih.gov/pubmed/19937337 Accessed 16 September 2013.

- 26. Demirci O, Stevens MC, Andreasen NC, Michael A, Liu J, et al. (2009) Investigation of relationships between fMRI brain networks in the spectral domain using ICA and Granger causality reveals distinct differences between schizophrenia patients and healthy controls. Neuroimage 46: 419–431 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=2713821&tool=pmcentrez&rendertype=abstract Accessed 18 October 2013.

- 27. Ding X, Lee S-W (2013) Changes of functional and effective connectivity in smoking replenishment on deprived heavy smokers: a resting-state FMRI study. PLoS One 8: e59331 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3602016&tool=pmcentrez&rendertype=abstract Accessed 6 October 2013.

- 28. Stevens MC, Pearlson GD, Calhoun VD (2009) Changes in the interaction of resting-state neural networks from adolescence to adulthood. Hum Brain Mapp 30: 2356–2366 Available: http://www.ncbi.nlm.nih.gov/pubmed/19172655 Accessed 18 October 2013.

- 29. Kim KK, Karunanayaka P, Privitera MD, Holland SK, Szaflarski JP (2011) Semantic association investigated with functional MRI and independent component analysis. Epilepsy Behav 20: 613–622 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3078943&tool=pmcentrez&rendertype=abstract Accessed 30 September 2013.

- 30. Karunanayaka PR, Holland SK, Schmithorst VJ, Solodkin A, Chen EE, et al. (2007) Age-related connectivity changes in fMRI data from children listening to stories. Neuroimage 34: 349–360 Available: http://www.ncbi.nlm.nih.gov/pubmed/17064940 Accessed 16 December 2013.

- 31. Karunanayaka P, Schmithorst VJ, Vannest J, Szaflarski JP, Plante E, et al. (2010) A group independent component analysis of covert verb generation in children: a functional magnetic resonance imaging study. Neuroimage 51: 472–487 Available: http://www.ncbi.nlm.nih.gov/pubmed/20056150 Accessed 16 December 2013.

- 32. Karunanayaka P, Kim KK, Holland SK, Szaflarski JP (2011) The effects of left or right hemispheric epilepsy on language networks investigated with semantic decision fMRI task and independent component analysis. Epilepsy Behav 20: 623–632 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3079068&tool=pmcentrez&rendertype=abstract Accessed 21 September 2013.

- 33. Vannest J, Szaflarski JP, Privitera MD, Schefft BK, Holland SK (2008) Medial temporal fMRI activation reflects memory lateralization and memory performance in patients with epilepsy. Epilepsy Behav 12: 410–418 Available: http://www.ncbi.nlm.nih.gov/pubmed/18162441 Accessed 9 October 2013.

- 34. Detre JA, Maccotta L, King D, Alsop DC, Glosser G, et al. (1998) Functional MRI lateralization of memory in temporal lobe epilepsy. Neurology 50: 926–932 Available: http://www.ncbi.nlm.nih.gov/pubmed/9566374.

- 35. Binder JR, Bellgowan PSF, Hammeke TA, Possing ET, Frost JA (2005) A comparison of two FMRI protocols for eliciting hippocampal activation. Epilepsia 46: 1061–1070 Available: http://www.ncbi.nlm.nih.gov/pubmed/16026558.

- 36. Macwhinney B, Cohen J, Provost J (1997) The PsyScope experiment-building system. Spat Vis 11: 99–101 Available: http://www.ingentaconnect.com/content/vsp/spv/1997/00000011/00000001/art00011 Accessed 18 October 2013.

- 37. Mechanic-Hamilton D, Korczykowski M, Yushkevich PA, Lawler K, Pluta J, et al. (2009) Hippocampal volumetry and functional MRI of memory in temporal lobe epilepsy. Epilepsy Behav 16: 128–138 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=2749903&tool=pmcentrez&rendertype=abstract Accessed 16 December 2013.

- 38. Calhoun VD, Adali T, Pearlson GD, Pekar JJ (2001) A Method for Making Group Inferences from Functional MRI Data Using Independent Component Analysis. 151: 140–151 10.1002/hbm

- 39. Bell AJ, Sejnowski TJ (1995) An information-maximization approach to blind separation and blind deconvolution. Neural Comput 7: 1129–1159 Available: http://www.ncbi.nlm.nih.gov/pubmed/7584893.

- 40. Li Y-O, Adali T, Calhoun VD (2007) Estimating the number of independent components for functional magnetic resonance imaging data. Hum Brain Mapp 28: 1251–1266 Available: http://www.ncbi.nlm.nih.gov/pubmed/17274023 Accessed 19 October 2013.

- 41. Sridharan D, Levitin DJ, Menon V (2008) A critical role for the right fronto-insular cortex in switching between central-executive and default-mode networks. Proc Natl Acad Sci U S A 105: 12569–12574 Available: http://www.pnas.org/content/105/34/12569.long Accessed 16 September 2013.

- 42. Jiao Q, Lu G, Zhang Z, Zhong Y, Wang Z, et al. (2011) Granger causal influence predicts BOLD activity levels in the default mode network. Hum Brain Mapp 32: 154–161 Available: http://www.ncbi.nlm.nih.gov/pubmed/21157880 Accessed 18 October 2013.

- 43. Himberg J, Hyvärinen A, Esposito F (2004) Validating the independent components of neuroimaging time series via clustering and visualization. Neuroimage 22: 1214–1222 Available: http://www.ncbi.nlm.nih.gov/pubmed/15219593 Accessed 18 October 2013.

- 44. Erhardt EB, Rachakonda S, Bedrick EJ, Allen EA, Adali T, et al. (2011) Comparison of multi-subject ICA methods for analysis of fMRI data. Hum Brain Mapp 32: 2075–2095 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3117074&tool=pmcentrez&rendertype=abstract Accessed 11 December 2013.

- 45. Beckmann CF (2012) Modelling with independent components. Neuroimage 62: 891–901 Available: http://www.ncbi.nlm.nih.gov/pubmed/22369997 Accessed 28 April 2014.

- 46. Granger CWJ (1969) Investigating Causal Relations by Econometric Models and Cross-spectral Methods. Econometrica 37: 424 Available: http://www.jstor.org/stable/1912791 Accessed 21 October 2013.

- 47. Wen X, Rangarajan G, Ding M (2013) Is Granger causality a viable technique for analyzing fMRI data? PLoS One 8: e67428 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3701552&tool=pmcentrez&rendertype=abstract Accessed 8 October 2013.

- 48. Seth AK, Chorley P, Barnett LC (2013) Granger causality analysis of fMRI BOLD signals is invariant to hemodynamic convolution but not downsampling. Neuroimage 65: 540–555 Available: http://www.ncbi.nlm.nih.gov/pubmed/23036449 Accessed 27 September 2013.

- 49. Szaflarski JP, DiFrancesco M, Hirschauer T, Banks C, Privitera MD, et al. (2010) Cortical and subcortical contributions to absence seizure onset examined with EEG/fMRI. Epilepsy Behav 18: 404–413 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=2922486&tool=pmcentrez&rendertype=abstract Accessed 11 December 2013.

- 50. Barnett L, Seth AK (2011) Behaviour of Granger causality under filtering: theoretical invariance and practical application. J Neurosci Methods 201: 404–419 Available: http://www.ncbi.nlm.nih.gov/pubmed/21864571 Accessed 6 March 2014.

- 51. Seth AK (2005) Causal connectivity of evolved neural networks during behavior. Network 16: 35–54 Available: http://www.ncbi.nlm.nih.gov/pubmed/16350433.

- 52. Schwarz G (1978) Estimating the Dimension of a Model. Ann Stat 6: 461–464 Available: http://projecteuclid.org/euclid.aos/1176344136 Accessed 21 October 2013.

- 53. Seth AK (2010) A MATLAB toolbox for Granger causal connectivity analysis. J Neurosci Methods 186: 262–273 Available: http://www.ncbi.nlm.nih.gov/pubmed/19961876 Accessed 21 October 2013.

- 54. Kay BP, Meng X, Difrancesco MW, Holland SK, Szaflarski JP (2012) Moderating effects of music on resting state networks. Brain Res 1447: 53–64 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3307876&tool=pmcentrez&rendertype=abstract Accessed 16 December 2013.

- 55. Pearson JM, Heilbronner SR, Barack DL, Hayden BY, Platt ML (2011) Posterior cingulate cortex: adapting behavior to a changing world. Trends Cogn Sci 15: 143–151 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3070780&tool=pmcentrez&rendertype=abstract Accessed 7 November 2013.

- 56. Leech R, Sharp DJ (2013) The role of the posterior cingulate cortex in cognition and disease. Brain. Available: http://www.ncbi.nlm.nih.gov/pubmed/23869106. Accessed 10 November 2013.

- 57. Gilbert SJ, Simons JS, Frith CD, Burgess PW (2006) Performance-related activity in medial rostral prefrontal cortex (area 10) during low-demand tasks. J Exp Psychol Hum Percept Perform 32: 45–58 Available: http://www.ncbi.nlm.nih.gov/pubmed/16478325 Accessed 13 November 2013.

- 58. Fletcher PC, Henson RN (2001) Frontal lobes and human memory: insights from functional neuroimaging. Brain 124: 849–881 Available: http://www.ncbi.nlm.nih.gov/pubmed/11335690.

- 59. Buckner RL, Andrews-Hanna JR, Schacter DL (2008) The brain's default network: anatomy, function, and relevance to disease. Ann N Y Acad Sci 1124: 1–38 Available: http://www.ncbi.nlm.nih.gov/pubmed/18400922 Accessed 7 November 2013.

- 60. Boly M, Moran R, Murphy M, Boveroux P, Bruno M-A, et al. (2012) Connectivity changes underlying spectral EEG changes during propofol-induced loss of consciousness. J Neurosci 32: 7082–7090 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3366913&tool=pmcentrez&rendertype=abstract Accessed 12 November 2013.

- 61. Laureys S, Owen AM, Schiff ND (2004) Brain function in coma, vegetative state, and related disorders. Lancet Neurol 3: 537–546 Available: http://www.ncbi.nlm.nih.gov/pubmed/15324722 Accessed 12 November 2013.

- 62. Weissman DH, Roberts KC, Visscher KM, Woldorff MG (2006) The neural bases of momentary lapses in attention. Nat Neurosci 9: 971–978 Available: 10.1038/nn1727.

- 63. Gilbert SJ, Dumontheil I, Simons JS, Frith CD, Burgess PW (2007) Comment on “Wandering minds: the default network and stimulus-independent thought”. Science 317: 43; author reply 43. Available: http://www.ncbi.nlm.nih.gov/pubmed/17615325. Accessed 8 November 2013.

- 64. Hahn B, Ross TJ, Stein EA (2007) Cingulate activation increases dynamically with response speed under stimulus unpredictability. Cereb Cortex 17: 1664–1671 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=2693262&tool=pmcentrez&rendertype=abstract Accessed 12 November 2013.

- 65. Duncan J (2013) The structure of cognition: attentional episodes in mind and brain. Neuron 80: 35–50 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3791406&tool=pmcentrez&rendertype=abstract Accessed 11 November 2013.

- 66. Tulving E, Kapur S, Craik FI, Moscovitch M, Houle S (1994) Hemispheric encoding/retrieval asymmetry in episodic memory: positron emission tomography findings. Proc Natl Acad Sci U S A 91: 2016–2020 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=43300&tool=pmcentrez&rendertype=abstract Accessed 16 December 2013.

- 67. Hampshire A, Chamberlain SR, Monti MM, Duncan J, Owen M (2010) The role of the right inferior frontal gyrus: Inhibition and attentional control. Neuroimage 50: 1313–1319.

- 68. Corbetta M, Patel G, Shulman G (2008) The Reorienting System of the Human Brain: From Environment to Theory of Mind. Neuron 58: 306–324 Available: http://www.sciencedirect.com/science/article/pii/S0896627308003693 Accessed 11 November 2013.

- 69. Shulman GL, Pope DLW, Astafiev SV, McAvoy MP, Snyder AZ, et al. (2010) Right hemisphere dominance during spatial selective attention and target detection occurs outside the dorsal frontoparietal network. J Neurosci 30: 3640–3651 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=2872555&tool=pmcentrez&rendertype=abstract Accessed 7 November 2013.

- 70. Chambers CD, Garavan H, Bellgrove MA (2009) Insights into the neural basis of response inhibition from cognitive and clinical neuroscience. Neurosci Biobehav Rev 33: 631–646 Available: http://www.ncbi.nlm.nih.gov/pubmed/18835296 Accessed 6 July 2011.

- 71. Chikazoe J, Jimura K, Asari T, Yamashita K, Morimoto H, et al. (2009) Functional dissociation in right inferior frontal cortex during performance of go/no-go task. Cereb Cortex 19: 146–152 Available: http://www.ncbi.nlm.nih.gov/pubmed/18445602 Accessed 25 August 2011. [DOI] [PubMed] [Google Scholar]

- 72. Menzies L, Achard S, Chamberlain SR, Fineberg N, Chen C-H, et al. (2007) Neurocognitive endophenotypes of obsessive-compulsive disorder. Brain 130: 3223–3236 Available: http://www.ncbi.nlm.nih.gov/pubmed/17855376 Accessed 24 July 2011. [DOI] [PubMed] [Google Scholar]

- 73. Barch DM, Braver TS, Carter CS, Poldrack RA, Robbins TW (2009) CNTRICS final task selection: executive control. Schizophr Bull 35: 115–135 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=2643948&tool=pmcentrez&rendertype=abstract Accessed 26 August 2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74. Li CR, Huang C, Constable RT, Sinha R (2006) Imaging response inhibition in a stop-signal task: neural correlates independent of signal monitoring and post-response processing. J Neurosci 26: 186–192 Available: http://www.ncbi.nlm.nih.gov/pubmed/16399686 Accessed 16 June 2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75. Mostofsky SH, Simmonds DJ (2008) Response inhibition and response selection: two sides of the same coin. J Cogn Neurosci 20: 751–761 Available: http://www.ncbi.nlm.nih.gov/pubmed/18201122. [DOI] [PubMed] [Google Scholar]

- 76. Sharp DJ, Bonnelle V, De Boissezon X, Beckmann CF, James SG, et al. (2010) Distinct frontal systems for response inhibition, attentional capture, and error processing. Proc Natl Acad Sci U S A 107: 6106–6111 Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=2851908&tool=pmcentrez&rendertype=abstract Accessed 15 June 2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77. Gazzaley A, Cooney J, McEvoy K, Knight RT, D'Esposito M (2005) Top-down enhancement and suppression of the magnitude and speed of neural activity. J Cogn 17: 507–517 Available: 10.1162/0898929053279522 Accessed 20 December 2011. [DOI] [PubMed] [Google Scholar]

- 78. Liu T, Slotnick SD, Serences JT, Yantis S (2003) Cortical mechanisms of feature-based attentional control. Cereb Cortex (New York, NY 1991) 13: 1334–1343 Available: http://www.ncbi.nlm.nih.gov/pubmed/14615298 Accessed 4 May 2011. [DOI] [PubMed] [Google Scholar]

- 79. Derrfuss J, Brass M, Neumann J, von Cramon DY (2005) Involvement of the inferior frontal junction in cognitive control: meta-analyses of switching and Stroop studies. Hum Brain Mapp 25: 22–34 Available: http://www.ncbi.nlm.nih.gov/pubmed/15846824 Accessed 4 May 2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80. Verbruggen F, Aron AR, Stevens MA, Chambers CD (2010) Theta burst stimulation dissociates attention and action updating in human inferior frontal cortex. Proc Natl Acad Sci U S A 107: 13966–13971. Available: http://www.pnas.org/content/early/2010/07/12/1001957107.abstract.

- 81. Corbetta M, Akbudak E, Conturo TE, Snyder AZ, Ollinger JM, et al. (1998) A common network of functional areas for attention and eye movements. Neuron 21: 761–773. Available: http://www.ncbi.nlm.nih.gov/pubmed/9808463. Accessed 16 November 2013.

- 82. Thiel CM, Zilles K, Fink GR (2004) Cerebral correlates of alerting, orienting and reorienting of visuospatial attention: an event-related fMRI study. Neuroimage 21: 318–328. Available: http://www.ncbi.nlm.nih.gov/pubmed/14741670. Accessed 16 November 2013.

- 83. Szaflarski JP, Holland SK, Schmithorst VJ, Dunn RS, Privitera MD (2004) High-resolution functional MRI at 3T in healthy and epilepsy subjects: hippocampal activation with picture encoding task. Epilepsy Behav 5: 244–252. Available: http://www.ncbi.nlm.nih.gov/pubmed/15123027. Accessed 16 December 2013.

- 84. Smith SM, Fox PT, Miller KL, Glahn DC, Fox PM, et al. (2009) Correspondence of the brain's functional architecture during activation and rest. Proc Natl Acad Sci U S A 106: 13040–13045. Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=2722273&tool=pmcentrez&rendertype=abstract. Accessed 13 November 2013.

- 85. Scherf KS, Luna B, Avidan G, Behrmann M (2011) “What” precedes “which”: developmental neural tuning in face- and place-related cortex. Cereb Cortex 21: 1963–1980. Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3202723&tool=pmcentrez&rendertype=abstract. Accessed 11 November 2013.

- 86. Kurth F, Eickhoff SB, Schleicher A, Hoemke L, Zilles K, et al. (2010) Cytoarchitecture and probabilistic maps of the human posterior insular cortex. Cereb Cortex 20: 1448–1461. Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=2871375&tool=pmcentrez&rendertype=abstract. Accessed 13 November 2013.

- 87. Chen LM (2007) Imaging of pain. Int Anesthesiol Clin 45: 39–57. Available: http://www.ncbi.nlm.nih.gov/pubmed/17426507. Accessed 18 November 2013.

- 88. Frot M, Magnin M, Mauguière F, Garcia-Larrea L (2007) Human SII and posterior insula differently encode thermal laser stimuli. Cereb Cortex 17: 610–620. Available: http://www.ncbi.nlm.nih.gov/pubmed/16614165. Accessed 15 November 2013.

- 89. Bamiou D-E, Musiek FE, Luxon LM (2003) The insula (Island of Reil) and its role in auditory processing. Literature review. Brain Res Brain Res Rev 42: 143–154. Available: http://www.ncbi.nlm.nih.gov/pubmed/12738055.

- 90. Kitada R, Hashimoto T, Kochiyama T, Kito T, Okada T, et al. (2005) Tactile estimation of the roughness of gratings yields a graded response in the human brain: an fMRI study. Neuroimage 25: 90–100. Available: http://www.ncbi.nlm.nih.gov/pubmed/15734346. Accessed 18 November 2013.

- 91. Johansen-Berg H, Matthews PM (2002) Attention to movement modulates activity in sensori-motor areas, including primary motor cortex. Exp Brain Res 142: 13–24. Available: http://www.ncbi.nlm.nih.gov/pubmed/11797080. Accessed 14 December 2013.

- 92. Ciccarelli O, Toosy AT, Marsden JF, Wheeler-Kingshott CM, Sahyoun C, et al. (2005) Identifying brain regions for integrative sensorimotor processing with ankle movements. Exp Brain Res 166: 31–42. Available: http://www.ncbi.nlm.nih.gov/pubmed/16034570. Accessed 14 December 2013.

- 93. Allendorfer JB, Kissela BM, Holland SK, Szaflarski JP (2012) Different patterns of language activation in post-stroke aphasia are detected by overt and covert versions of the verb generation fMRI task. Med Sci Monit 18: CR135–7. Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3319663&tool=pmcentrez&rendertype=abstract. Accessed 16 December 2013.

- 94. Tomlinson SP, Davis NJ, Morgan HM, Bracewell RM (2013) Cerebellar Contributions to Verbal Working Memory. Cerebellum. Available: http://link.springer.com/10.1007/s12311-013-0542-3. Accessed 16 December 2013.

- 95. Mariën P, Ackermann H, Adamaszek M, Barwood CHS, Beaton A, et al. (2013) Consensus Paper: Language and the Cerebellum: an Ongoing Enigma. Cerebellum. Available: http://www.ncbi.nlm.nih.gov/pubmed/24318484. Accessed 16 December 2013.

- 96. Koziol LF, Budding D, Andreasen N, D'Arrigo S, Bulgheroni S, et al. (2013) Consensus Paper: The Cerebellum's Role in Movement and Cognition. Cerebellum. Available: http://www.ncbi.nlm.nih.gov/pubmed/23996631. Accessed 11 December 2013.

- 97. Szaflarski JP, Allendorfer JB, Heyse H, Mendoza L, Szaflarski BA, et al. (2014) Functional MRI of facial emotion processing in left temporal lobe epilepsy. Epilepsy Behav 32: 92–99. Available: http://www.ncbi.nlm.nih.gov/pubmed/24530849. Accessed 2 June 2014.

- 98. Schutter DJLG, van Honk J (2009) The cerebellum in emotion regulation: a repetitive transcranial magnetic stimulation study. Cerebellum 8: 28–34. Available: http://www.ncbi.nlm.nih.gov/pubmed/18855096. Accessed 16 December 2013.

- 99. Stoodley CJ, Schmahmann JD (2009) Functional topography in the human cerebellum: a meta-analysis of neuroimaging studies. Neuroimage 44: 489–501. Available: http://www.ncbi.nlm.nih.gov/pubmed/18835452. Accessed 11 December 2013.

- 100. D'Esposito M, Aguirre G, Zarahn E, Ballard D (1998) Functional MRI studies of spatial and nonspatial working memory. Cogn Brain Res 7: 1–13. Available: http://www.ncbi.nlm.nih.gov/pubmed/9714705. Accessed 20 December 2011.

- 101. Dosenbach NUF, Fair DA, Miezin FM, Cohen AL, Wenger KK, et al. (2007) Distinct brain networks for adaptive and stable task control in humans. Proc Natl Acad Sci U S A 104: 11073–11078. Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=1904171&tool=pmcentrez&rendertype=abstract.

- 102. Szaflarski JP, Holland SK, Schmithorst VJ, Byars AW (2006) fMRI study of language lateralization in children and adults. Hum Brain Mapp 27: 202–212. Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=1464420&tool=pmcentrez&rendertype=abstract. Accessed 30 September 2013.

- 103. Donnelly KM, Allendorfer JB, Szaflarski JP (2011) Right hemispheric participation in semantic decision improves performance. Brain Res 1419: 105–116. Available: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3196305&tool=pmcentrez&rendertype=abstract. Accessed 10 April 2013.

- 104. Mengarelli F, Spoglianti S, Avenanti A, di Pellegrino G (2013) Cathodal tDCS Over the Left Prefrontal Cortex Diminishes Choice-Induced Preference Change. Cereb Cortex. Available: http://www.ncbi.nlm.nih.gov/pubmed/24275827. Accessed 12 December 2013.

- 105. Friston K, Moran R, Seth AK (2013) Analysing connectivity with Granger causality and dynamic causal modelling. Curr Opin Neurobiol 23: 172–178. Available: http://www.ncbi.nlm.nih.gov/pubmed/23265964. Accessed 25 September 2013.

- 106. Kim S, Ogawa S (2012) Biophysical and physiological origins of blood oxygenation level-dependent fMRI signals. J Cereb Blood Flow Metab 32: 1188–1206. Available: doi:10.1038/jcbfm.2012.23.

Data Availability Statement

The authors confirm that all data underlying the findings are fully available without restriction. The use of any human data is regulated by the Institutional Review Board (IRB). While the IRB at the University of Alabama at Birmingham has agreed to make the data available to the public, it requires us to monitor the distribution and use of the data. The data are therefore available upon request and requests may be sent to: Jerzy P. Szaflarski (szaflaj@uab.edu).