Abstract

Natural scientists from Climate Central and social scientists from Carnegie Mellon University collaborated to develop science communications aimed at presenting personalized coastal flood risk information to the public. We encountered four main challenges: agreeing on goals; balancing complexity and simplicity; relying on data, not intuition; and negotiating external pressures. Each challenge demanded its own approach. We reached agreement on goals through intensive internal communication early in the project. We balanced complexity and simplicity by evaluating communication materials for both user understanding and scientific content. Early user test results that overturned some of our intuitions strengthened our commitment to testing communication elements whenever possible. Finally, we did our best to negotiate external pressures through regular internal communication and a willingness to compromise.

Keywords: collaboration, informed decision-making

Overcoming the Challenges of Collaboration to Design Effective Science Communication

Science powers the technology behind modern society while also providing a window onto its risks. Individual and policy decisions commonly carry both benefits and costs that science can speak to; for example, burning fossil fuels helps energize our economies while also endangering them by contributing to climate change. When the benefits and costs of an action appear similar in magnitude, or are not broadly or well understood, it becomes harder to make well-reasoned decisions (1–4). Some grasp of the relevant science can help. Decision makers need not be experts, but understanding risks and benefits allows them to make informed decisions that reflect their values (5–8). For many science communications, an important aim is to achieve this end, with the goal not of agreement per se but of “fewer, better disagreements” (9).

One approach to science communication follows four interrelated steps: identifying relevant science, determining people’s informational needs, designing communications to fill those needs, and evaluating the adequacy of those communications, with refinements until adequacy is demonstrated (9). We believe that collaboration between natural and social scientists increases the chances of success with this approach. Social scientists need natural scientists’ topic-area expertise to ensure that communications remain true to the science, and natural scientists need social scientists’ expertise to ensure that communications are relevant and understandable to lay target audiences. However, natural and social scientists use different languages and methods (10), which can make it difficult to work together even when they share the goal of science communication. These challenges are surmountable, and overcoming them may yield both a productive collaborative relationship and stronger communications.

We are part of a team of natural scientists from Climate Central and social scientists from Carnegie Mellon University who have worked together on a communications research and design effort concerning coastal flood risk aggravated by sea level rise. This effort supported Climate Central’s development of an interactive, Web-based platform to share related information (Surging Seas Risk Finder, at http://sealevel.climatecentral.org/). Over the course of our collaboration, we had to address many challenges. Some stemmed from differences in disciplinary perspectives; others arose from forces outside the team’s control.

Overall, the challenges we encountered fell into four main categories: agreeing on goals; balancing complexity and simplicity; relying on data, not intuition; and negotiating external pressures.

The next sections explore each category in turn, beginning with a general discussion and then sharing anecdotes from our own experience.

Challenge 1: Agreeing on Goals

Collaborators who share clear goals and view them as attainable and important are more likely to achieve quality products and to be satisfied with the process (11, 12). One common barrier to establishing shared goals is simply a lack of time (13). Others include a lack of openness, patience, understanding, trust, and respect between collaborators; unspoken jealousies; and perceived threats to authority or power (14, 15). Individuals tend to conform to the perceived norms or values of the team (16) and may avoid conflict by not fully voicing their own opinions. Collective inexperience may also leave collaborators unaware of the importance of clearly defining goals (17). Conversely, effective internal communication can help a team work more efficiently, become more flexible, and become more resilient in the face of external pressures (15).

The Response: Coming to an Agreement on What We Meant By “Effective” Communication.

In our case, the critical issue for agreeing on common goals was how to define an effective communication of flood and sea level risk. Climate Central researchers invited this collaboration out of a sense that many Americans’ interest in and understanding of climate change (and, in this instance, sea level rise and coastal flood risk more specifically) were not commensurate with the risk. Carnegie Mellon University researchers questioned how anyone could decide what the “right” level of concern is for another person. After a series of conversations, we settled on the goal of providing lay audiences with the information they need to demonstrate knowledge, make logical inferences, and show consistency in preferences.

Knowledge can be assessed through information recall (e.g., “What is the likelihood of there being at least one flood between today and 2020 that is 3 feet?”) (18). The ability to make logical inferences can be assessed through problem-solving tasks (e.g., after showing people the likelihood of a 10-foot flood, asking, “What is the likelihood of an 11-foot flood?”) (19). Consistency can be measured by asking a question in several different ways (e.g., asking participants what level of flood risk they would tolerate and separately asking whether they would move to a place where risk exceeds this level, according to information presented earlier) (20).
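To make the inference and consistency criteria concrete, here is a minimal sketch of how answers to such items might be scored. It is a hypothetical illustration rather than the scoring procedure we used: the function names, the 0 to 1 probability scale, and the assumption that likelihoods refer to floods of at least a given height are ours. Under that assumption an 11-foot flood is also a 10-foot flood, so a logically consistent estimate for 11 feet cannot exceed the 10-foot likelihood shown.

```python
def passes_inference(p_10ft_shown: float, p_11ft_answer: float) -> bool:
    """Hypothetical inference item: estimate the 11-foot likelihood.

    Assumes probabilities refer to floods of at least a given height, so
    the answer must lie between 0 and the 10-foot likelihood shown.
    """
    return 0.0 <= p_11ft_answer <= p_10ft_shown


def passes_consistency(tolerated_risk: float, place_risk: float, would_move: bool) -> bool:
    """Hypothetical consistency item.

    Saying you would move to a place whose risk exceeds the level you
    said you would tolerate counts as an inconsistent pair of answers.
    """
    return not (would_move and place_risk > tolerated_risk)


if __name__ == "__main__":
    # Illustrative numbers only, not study data.
    print(passes_inference(p_10ft_shown=0.04, p_11ft_answer=0.03))  # True
    print(passes_inference(p_10ft_shown=0.04, p_11ft_answer=0.10))  # False
    print(passes_consistency(0.10, 0.25, would_move=True))  # False
    print(passes_consistency(0.10, 0.25, would_move=False))  # True
```

Knowledge items, by contrast, can be scored simply by comparing recalled values with the values the tool displayed.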

In other words, we reached a shared definition of “effective” communications; namely, ones that help people make informed decisions, reflective of their own unique situations and values, rather than communications that push people to make persuaded decisions, reflective of some outside vision of what the decision should be. Furthermore, this early work to define shared goals laid the groundwork for more effective and efficient team communication throughout the project.

Challenge 2: Balancing Complexity and Simplicity

A general challenge for science communications is to stay true to the science, and its complexity, while still allowing most lay audiences to understand it (21). The degree of simplification that can be required often dwarfs what scientists anticipate (22).

Understandability may be enhanced by following “best practices” of science communications (23). For example, to convey probabilistic risk information about sea level rise, communicators can turn to numeracy research. Research on people’s ability to understand and use numbers has shown that probabilistic information is better understood when expressed with base-10 denominators (e.g., “20 in 100 chance that a region will experience sea level rise of 3 feet between today and 2050”) than when the same information is presented in a 1-in-N format (e.g., “1 in 5 chance that a region will experience sea level rise of 3 feet between today and 2050”) (24). These differences in understanding can be attributed to the relative ease with which people translate base-10 values into percentages (25). Testing communications with lay target audiences (26) allows communicators to refine these initial drafts as needed and may further enhance them.
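The two formats compared in (24) can be generated from the same probability. The helper names below are hypothetical, and probabilities are assumed to lie in (0, 1]. The sketch also exposes one practical drawback of the 1-in-N format: with whole-number denominators it cannot express every probability, so 0.3 becomes “1 in 3” (about 33 in 100).

```python
def as_x_in_100(p: float) -> str:
    """Base-10 frequency format, e.g. 0.2 -> '20 in 100'."""
    if not 0 < p <= 1:
        raise ValueError("probability must be in (0, 1]")
    return f"{round(p * 100)} in 100"


def as_1_in_n(p: float) -> str:
    """1-in-N format, e.g. 0.2 -> '1 in 5' (N rounded to a whole number)."""
    if not 0 < p <= 1:
        raise ValueError("probability must be in (0, 1]")
    return f"1 in {round(1 / p)}"


if __name__ == "__main__":
    for p in (0.2, 0.3):
        print(f"{p}: {as_x_in_100(p)} vs {as_1_in_n(p)}")
```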

The Response: Understanding the Risk of Coastal Flooding Aggravated By Sea Level Rise.

Perhaps the biggest challenge of our project was how to present Climate Central’s extensive and complex set of forecast and exposure analyses in a digestible way that effectively informs decisions affected by future coastal flooding risk. Our practical objective was to inform the development of Climate Central’s interactive Web-based platform, the Surging Seas Risk Finder, which would be (and now is) freely available to the general public.

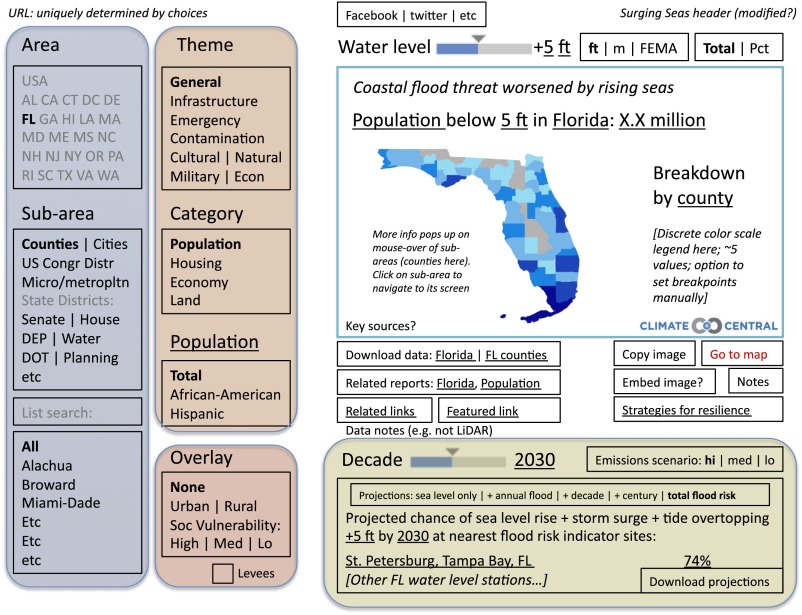

At the start of our work together, Climate Central shared with Carnegie Mellon University a sketch indicating the data that were available and that might be included in a Web platform (Fig. 1). Our joint project focused on forecasts. Climate Central had generated sea level and flood risk projections for 55 water level stations along US coasts from 2012 to 2100, at 10 different water levels ranging from 1 to 10 feet. The analysis assessed annual risk, cumulative risk, and how warming-driven sea level rise will multiply risk. In addition, Climate Central generated results conditional on carbon dioxide emissions scenarios (e.g., low, medium, or high) and on sea level rise models. We quickly judged that presenting all of this information at once would confuse nonexperts. After several team discussions, Climate Central decided to explore the design of a pared-down, simplified presentation. This version would show users a small number of basic options up front, which they could adjust on an advanced settings page to explore more complex and nuanced information.
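Cumulative risk builds on annual risk. The sketch below assumes, purely for illustration, that flood occurrences in different years are independent, in which case the chance of at least one flood over a period is 1 - (1 - p_1)(1 - p_2)...(1 - p_T) for annual probabilities p_1 through p_T. Climate Central’s analysis may compute cumulative risk differently, and the ratio used here to express how sea level rise “multiplies” risk is likewise our assumption. It requires Python 3.8 or later for `math.prod`.

```python
import math


def cumulative_risk(annual_probs):
    """Chance of at least one flood across the given years.

    Assumes years are independent: P = 1 - prod(1 - p_t).
    """
    return 1.0 - math.prod(1.0 - p for p in annual_probs)


def risk_multiplier(probs_with_rise, probs_without_rise):
    """Hypothetical measure: cumulative risk with sea level rise divided by
    cumulative risk without it, over the same years."""
    return cumulative_risk(probs_with_rise) / cumulative_risk(probs_without_rise)


if __name__ == "__main__":
    # Illustrative numbers only: a constant 5% annual chance over 10 years.
    print(round(cumulative_risk([0.05] * 10), 3))  # 0.401
```

A constant 5% annual chance thus compounds to about a 40% chance of at least one flood over a decade, which illustrates why cumulative figures for distant horizons look so much larger than annual ones.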

Fig. 1.

Original sketch of the intended science communication, showing the full range of science information about coastal flooding available and the many options for users to tailor the display to fit their specific needs. Pct, percentage; FEMA, Federal Emergency Management Agency.

To assess user understanding of the most basic elements, we developed a simplified experimental online tool following relevant best practices from the science communications literature (23). For example, we made the tool interactive (allowing users to adjust water level), consistent with evidence suggesting the power of experiential learning (27).

One design issue that warranted empirical evaluation was the effect of time frame. Focusing on a single time period would simplify the online presentation. Sea level rise and flood risk projections become much more dramatic toward the end of the century, suggesting that a longer time horizon would increase perceived risk. However, research on psychological distance finds that the farther in the future the consequences of a decision appear to be, the less relevant and urgent the decision feels (28). In turn, people are less likely to fully process and understand information relating to decisions they believe lack urgency (29, 30). To explore whether temporal distance affected understanding, we assessed users’ understanding across three time horizons: projections for 2020, 2050, and 2100 (31). Each user saw just a single time frame (Fig. 2). Of course, longer periods entail larger cumulative risks (32). Therefore, to the extent that our participants expressed differences in perceived risk, any observed differences in understanding could reflect both cumulative risk and temporal distance. We did not, however, find any differences in understanding among the three time frames.
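Showing each user only one time frame is a between-subjects design. The following sketch shows one common way to implement balanced random assignment. It is a hypothetical illustration, since the study’s actual assignment mechanism is not described here, and the function name and seed parameter are ours.

```python
import random

TIME_FRAMES = (2020, 2050, 2100)


def assign_time_frames(participant_ids, seed=None):
    """Randomly assign each participant exactly one time frame.

    Shuffling and then cycling through the conditions keeps group sizes
    within one of each other.
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: TIME_FRAMES[i % len(TIME_FRAMES)] for i, pid in enumerate(ids)}


if __name__ == "__main__":
    groups = assign_time_frames(range(149), seed=7)
    for year in TIME_FRAMES:
        print(year, sum(1 for v in groups.values() if v == year))
```

Shuffling first and then cycling, rather than drawing a condition independently for each person, avoids lopsided groups in small samples while still making each individual’s condition random.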

Fig. 2.

Simplified Risk Finder mockup tool showing risk profile information for the periods between today and 2020 (Left) and between today and 2100 (Right) for Baldwin County, Alabama.

We then piloted the tool by performing user testing, following the Think Aloud Protocol (33). We recruited 10 US adult participants through Carnegie Mellon University’s Center for Behavioral and Decision Research Participant Pool. The center maintains a pool of participants drawn from the City of Pittsburgh and the surrounding areas, representing a wide range of socioeconomic backgrounds. Half the participants were women, and most had at least some college education. Interviews were audiorecorded, transcribed, and analyzed for understanding of key terms and concepts (e.g., sea level rise), preferences for terms (e.g., slow sea level rise versus optimistic sea level rise), and preferences for layout.

Following this protocol, we asked participants what they were doing, thinking, and feeling as they interacted with the tool. Informed by these interviews, we were able to adjust ineffective design elements and to further test and refine the language and the presentation of quantitative information that participants did not understand well.

Finally, we evaluated the refined tool with a larger sample of participants. We recruited 149 adult members of the US general public through Amazon’s Mechanical Turk. Their average age was 36.1 years (standard deviation, 13.0 years); 47.7% were women, 79.2% identified as White or Caucasian, 48.4% had at least a bachelor’s degree, and 42.2% had household incomes of at least $51K. A slight majority identified as Democrats (51%), with 28.2% independents, 16.8% Republicans, and 4% other or “prefer not to answer.” Some reported having lived on the coast (37.6%), and most had vacationed there (71.8%). Some reported familiarity with the coastline (34.1%), having experienced a hurricane (44.3%), or having experienced a flood or knowing someone who had (36.9%). Responses to the three time frames were pooled except in the few cases in which they differed significantly. Responses were evaluated statistically against three criteria: knowledge, consistency, and active mastery. A manuscript describing this work is currently under review.
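Readers can sanity-check reported percentages by converting them back into approximate numbers of people. This is an illustrative check of our own, not part of the analysis; rounding to whole participants is an assumption, and the dictionary below simply restates the party percentages reported above.

```python
N = 149  # size of the Mechanical Turk sample reported above

PARTY_PCT = {
    "Democrat": 51.0,
    "Independent": 28.2,
    "Republican": 16.8,
    "Other or prefer not to answer": 4.0,
}


def approx_counts(percentages, n):
    """Convert reported percentages to the nearest whole number of people."""
    return {group: round(pct / 100 * n) for group, pct in percentages.items()}


if __name__ == "__main__":
    counts = approx_counts(PARTY_PCT, N)
    print(counts)
    print(sum(counts.values()))  # 149, so the categories cover the full sample
```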

After these participants interacted with the platform, we administered an assessment of their knowledge (e.g., “What is the likelihood of there being at least one flood between today and 2020 that is 3 feet?” requires participants to accurately recall information), ability to make logical inferences (e.g., “What is the likelihood of an 11-foot flood?” requires people to extrapolate beyond the tool maximum of 10 feet), and consistency in responses (e.g., asking participants what level of flood risk they would tolerate and separately asking whether they would move to a place where risk exceeds this level, according to information presented earlier).

Challenge 3: Relying on Data, Not Intuition

One common trap that natural and social scientists can fall into is to rely too heavily on their intuition in the design of science communication. Studies have shown that ill-designed science communications can have no effect or can even lead to misunderstandings, misperceptions, and mistrust (34, 35). Designers can forget that their audiences may not share the same scientific language, and therefore may misunderstand or tune out messages that include complex scientific language or visuals. Designers can also forget that people may not understand or respond to science information in the way they expect, which may seem irrational at face value (appearing as biased judgments or hyperbolic discounting), but which may make sense to the recipient, given their personal situations and values (36, 37). Thus, a major challenge facing collaborators is the natural inclination to rely on intuition rather than on data in the design of science communication (38–40).

Data-driven assessments can take many forms, including interviews, surveys, focus groups, and randomized controlled trials (23, 41). Interviews or focus groups can be performed with a small number of individuals from the lay target audience, providing insight into the understandability of science communications through their answers about what makes sense, what does not, and why. Informed by these results, surveys of a larger and more diverse sample of the lay target audience can be conducted to assess understanding more widely. Finally, randomized controlled trials can then be used to evaluate the effectiveness of different variants of the communication.
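As one illustration of how a randomized comparison might be analyzed, the sketch below applies a standard two-proportion z-test to the share of participants who answer a comprehension item correctly under two randomly assigned versions of a communication. The choice of test, the function name, and the counts are our assumptions rather than a prescribed method; the test relies on the normal approximation and so suits reasonably large samples.

```python
import math


def two_proportion_z_test(correct_a, n_a, correct_b, n_b):
    """Two-sided z-test for a difference in proportions correct.

    Returns (z, p_value). Uses the pooled proportion under the null
    hypothesis that both versions are equally understandable.
    """
    p_a, p_b = correct_a / n_a, correct_b / n_b
    pooled = (correct_a + correct_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # everyone right (or everyone wrong) in both groups
        return 0.0, 1.0
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 40 of 50 correct with version A, 25 of 50 with B.
    z, p = two_proportion_z_test(40, 50, 25, 50)
    print(f"z = {z:.2f}, p = {p:.4f}")
```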

The Response: Learning How to Let Go of Our Intuition and Listen to What the Data Tell Us.

One concept Climate Central wanted to convey through its final tool was the wide range of possible future sea level rise scenarios (42). Therefore, the team tested ways of presenting three different scenarios drawn from a recent report (43): 0.5, 1.2, or 2.0 m sea level rise by 2100.

On the basis of the risk communication literature on numeracy (44, 45), literacy (46, 47), and compelling presentation (48–50), the team created three terms we believed would help people quickly understand the underlying concept so that they could better interpret the risk information: optimistic (0.5 m), neutral (1.2 m), and pessimistic (2.0 m) sea level rise.

We then conducted semistructured interviews with members of Carnegie Mellon University’s Center for Behavioral and Decision Research Participant Pool. We matched the terms with basic descriptors (“optimistic” with “slow rise,” “neutral” with “medium rise,” and “pessimistic” with “fast rise”) and showed participants the matched sets in randomized order. After each term, we asked participants to tell us what came to mind and to provide a numerical estimate of the rate of sea level rise. At the end, we asked participants to rank their preference for the terms in each matched set (e.g., “optimistic” versus “slow rise”).
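Because the tool expresses flood heights in feet while the scenarios were specified in meters, it can help to see the three scenarios, and the two sets of terms the team compared, side by side in both units. The data structure and function name are ours; the only conversion fact used is that 1 foot equals exactly 0.3048 m.

```python
M_PER_FT = 0.3048  # exact definition of the international foot

# (emotional term, descriptive term, sea level rise by 2100 in meters)
SCENARIOS = [
    ("optimistic", "slow rise", 0.5),
    ("neutral", "medium rise", 1.2),
    ("pessimistic", "fast rise", 2.0),
]


def scenarios_in_feet(scenarios=SCENARIOS):
    """Return each scenario with its rise converted to feet (1 decimal)."""
    return [(emo, desc, round(m / M_PER_FT, 1)) for emo, desc, m in scenarios]


if __name__ == "__main__":
    for emo, desc, ft in scenarios_in_feet():
        print(f"{emo:>11} vs {desc:<11} {ft} ft by 2100")
```

The conversion yields roughly 1.6, 3.9, and 6.6 feet of rise by 2100 for the three scenarios.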

What we found contradicted our intuition. We thought that using an emotionally compelling word like “optimistic,” rather than “slow,” would help people understand the underlying concept of rate. Instead, people thought “slow” was more accurate than “optimistic,” which led them to think there would be no sea level rise at all. People understood both “fast” and “pessimistic,” and “pessimistic” made them feel more concerned. However, “fast” made them feel hopeful, as though something could still be done about sea level rise, whereas “pessimistic” made them feel like giving up because all hope was lost. Finally, people thought “medium” was more accurate than “neutral,” which they saw as a contradiction in terms because it implied that no sea level rise was taking place. In short, although the emotional terms were evocative, they seemed to get in the way of understanding the concept we were trying to convey.

Challenge 4: Negotiating External Pressures

Even collaborators who agree on an ideal process for design (i.e., identify relevant science, determine the audience’s informational needs, target those informational needs, and iteratively evaluate the adequacy of test communications) are subject to external forces that may make it hard for them to do so. Pressures can come from collaborators’ institutional homes, funding sources, or even world events (e.g., natural disasters such as Superstorm Sandy) (12, 13, 15).

Building resilience to external pressures can be difficult. These pressures may affect individual team members differently, which may lead to intragroup conflict about how the research should proceed and what the final product should look like. For example, if the scientific topic is relevant to major current events or decisions, there may be external pressure to disseminate the communication before the team has completed its research (e.g., testing for understanding). Team members may also disagree over the tradeoff between quality and speed, worried that delay may result in missed opportunities to inform consequential decisions. Although it is ideal to agree in advance on how the team will proceed in such cases, bringing in an outside mediator may sometimes be warranted. In transdisciplinary research, mediators have helped collaborators define what could and should be done (51) and note and voice points of consensus (52), thereby helping teams overcome intragroup conflict.

The Response: Forces Shaping the Design of the Sea Level Tool.

Of the external pressures facing our team, the most challenging was time. The official launch date for Climate Central’s tool was fall 2013, and we began our collaboration in January 2013. In addition, Climate Central was contracting in parallel with a Web design team, a necessity given the launch timeline and the complexity of the information to be presented.

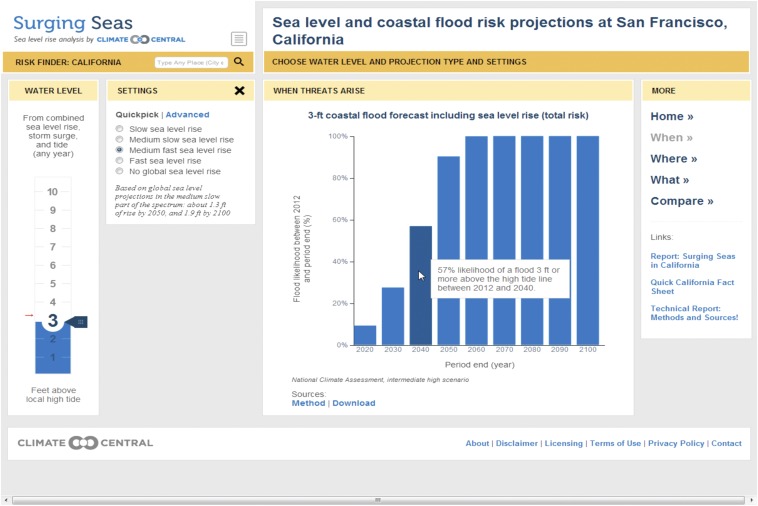

The results of the study described under Challenge 2: Balancing Complexity and Simplicity indicated that presenting the content in question with a 2050 time horizon is both compelling and understandable. One limitation of the study is that we showed risk information from only a single water level station, so we could not conclude that 2050 would be a “sweet spot” year for stations with different time patterns of risk. Given the tight timeline, we could not investigate further. Furthermore, Climate Central wanted to present risk information across a wide time horizon (by decade, from 2020 to 2100) to serve diverse user interests, whereas the Carnegie Mellon University researchers felt it was premature to present such granular information. Climate Central decided to present the fine-grained information but to accompany each time point with a simple descriptive sentence or phrase communicating more clearly how the risk differs (Fig. 3). We agreed that if future research were to indicate that the 2050 findings apply widely, Climate Central would look to emphasize this time frame in other content, such as press releases or fact sheets.
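To illustrate pairing each decade’s number with a plain-language phrase, the sketch below borrows, as a stand-in, the calibrated likelihood terms of the Intergovernmental Panel on Climate Change (IPCC) Fifth Assessment Report. This is our assumption for illustration only: it is not the wording Climate Central adopted, the probabilities in the example are invented, and the handling of boundaries where the IPCC ranges overlap (picking the narrowest matching term) is our choice.

```python
# Stand-in wording borrowed from IPCC AR5 likelihood terms (an assumption,
# not Climate Central's descriptors). Checked from the top down.
HIGH_TERMS = [
    (0.99, "virtually certain"),
    (0.90, "very likely"),
    (0.66, "likely"),
    (0.33, "about as likely as not"),
]
LOW_TERMS = [
    (0.01, "exceptionally unlikely"),
    (0.10, "very unlikely"),
]


def likelihood_term(p):
    """Map a probability to the narrowest matching stand-in term."""
    for lower_bound, term in HIGH_TERMS:
        if p >= lower_bound:
            return term
    for upper_bound, term in LOW_TERMS:
        if p <= upper_bound:
            return term
    return "unlikely"


def decade_sentences(probs_by_year, level_ft):
    """One descriptive sentence per time point, in chronological order."""
    return [
        f"Between today and {year}, a flood of at least {level_ft} feet is "
        f"{likelihood_term(p)} ({round(p * 100)} in 100 chance)."
        for year, p in sorted(probs_by_year.items())
    ]


if __name__ == "__main__":
    example = {2020: 0.05, 2050: 0.7, 2100: 0.99}  # invented values
    for line in decade_sentences(example, 3):
        print(line)
```

Generating the phrase from the same number that is displayed keeps the words and the figures from drifting apart as the underlying projections are updated.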

Fig. 3.

Draft Surging Seas page showing the total risk for a 3-foot coastal flood forecast, including sea level rise, with descriptors, by decade.

Conclusions

Effective science communication benefits when collaborators fulfill four interrelated tasks: identifying relevant science, determining people’s informational needs, designing communications to fill those needs, and evaluating the adequacy of those communications. A number of challenges may arise when natural and social scientists work together, even when both parties share the goal of effective science communication. Here we have presented the four main challenges we encountered during our collaboration: agreeing on goals; balancing complexity and simplicity; relying on data, not intuition; and negotiating external pressures. For each, we described its nature and the importance of addressing it, and reflected on our own experience.

In the process of our collaboration, we navigated agreement on goals through intensive within-team communication early in the project. We balanced complexity and simplicity through evaluation of the communication for understanding and scientific content. We addressed the challenge of relying on data, not intuition, by agreeing on the value of testing. Finally, we did our best to negotiate external pressures through communication and compromise. Inevitably, we were able to investigate only a fraction of the design elements needed for Climate Central’s full Web tool and communications needs, but our joint research identified and addressed problems that otherwise could have reduced the effectiveness of the website.

Collaborations between natural and social scientists face many challenges in achieving effective science communications, but the effort is rewarded. When contributors from different disciplines work together, they may grow to appreciate other ways of looking at the world and creating knowledge, perhaps leading to new or innovative research directions. More important, effective collaboration increases the chances of communicating effectively with the public, thereby helping people make more informed decisions that reflect their values, in the hope of “fewer, better disagreements” (9) about the challenging decisions they face in their personal lives, as well as those facing our society.

Acknowledgments

We thank Alex Engel and Tamar Krishnamurti for their helpful comments. The research described in this paper was supported by the Rockefeller Foundation and the National Science Foundation's Center for Climate and Energy Decision-Making [NSF 09-554].

Footnotes

The authors declare no conflict of interest.

This paper results from the Arthur M. Sackler Colloquium of the National Academy of Sciences, “The Science of Science Communication II,” held September 23–25, 2013, at the National Academy of Sciences in Washington, DC. The complete program and video recordings of most presentations are available on the NAS website at www.nasonline.org/science-communication-II.

This article is a PNAS Direct Submission. B.F. is a guest editor invited by the Editorial Board.

References

- 1. Morgan MG, Fischhoff B, Bostrom A, Atman CJ. Risk Communication: A Mental Models Approach. Cambridge, UK: Cambridge University Press; 2001.

- 2. Downs JS, et al. Interactive video behavioral intervention to reduce adolescent females’ STD risk: A randomized controlled trial. Soc Sci Med. 2004;59(8):1561–1572. doi: 10.1016/j.socscimed.2004.01.032.

- 3. Bostrom A, Fischhoff B, Morgan MG. Characterizing mental models of hazardous processes: A methodology and an application to radon. J Soc Issues. 1992;48(4):85–100.

- 4. Klima K, Morgan MG, Grossman I, Emanuel K. Does it make sense to modify tropical cyclones? A decision-analytic assessment. Environ Sci Technol. 2011;45(10):4242–4248. doi: 10.1021/es104336u.

- 5. Braddock CH, 3rd, Edwards KA, Hasenberg NM, Laidley TL, Levinson W. Informed decision making in outpatient practice: Time to get back to basics. JAMA. 1999;282(24):2313–2320. doi: 10.1001/jama.282.24.2313.

- 6. de Best-Waldhober M, Daamen D, Faaij A. Informed and uninformed public opinions on CO2 capture and storage technologies in the Netherlands. Int J Greenh Gas Control. 2009;3(3):322–332.

- 7. Lusardi A. Financial Literacy: An Essential Tool for Informed Consumer Choice? Working Paper No. w14084. Cambridge, MA: National Bureau of Economic Research; 2008.

- 8. Ver Steegh N. Yes, no, and maybe: Informed decision making about divorce mediation in the presence of domestic violence. Wm & Mary J Women & L. 2002;9:145.

- 9. Fischhoff B. The sciences of science communication. Proc Natl Acad Sci USA. 2013;110(Suppl 3):14033–14039. doi: 10.1073/pnas.1213273110.

- 10. Hara N, Solomon P, Kim SL, Sonnenwald DH. An emerging view of scientific collaboration: Scientists' perspectives on collaboration and factors that impact collaboration. J Am Soc Inf Sci Technol. 2003;54(10):952–965.

- 11. Barnes T, Pashby I, Gibbons A. Effective University-Industry interaction: A multi-case evaluation of collaborative R&D projects. Eur Manage J. 2002;20(3):272–285.

- 12. Stokols D. Toward a science of transdisciplinary action research. Am J Community Psychol. 2006;38(1-2):63–77. doi: 10.1007/s10464-006-9060-5.

- 13. Mâsse LC, et al. Measuring collaboration and transdisciplinary integration in team science. Am J Prev Med. 2008;35(2 Suppl):S151–S160. doi: 10.1016/j.amepre.2008.05.020.

- 14. Kelly MJ, Schaan JL, Joncas H. Managing alliance relationships: Key challenges in the early stages of collaboration. R & D Manag. 2002;32(1):11–22.

- 15. Naiman RJ. A perspective on interdisciplinary science. Ecosystems (N Y). 1999;2:292–295.

- 16. Cialdini RB, Trost MR. In: Gilbert DT, Fiske ST, Lindzey G, editors. The Handbook of Social Psychology. 4th Ed. Vol 1 and 2. New York: McGraw-Hill; 1998. pp. 151–192.

- 17. Stokols D, Misra S, Moser RP, Hall KL, Taylor BK. The ecology of team science: Understanding contextual influences on transdisciplinary collaboration. Am J Prev Med. 2008;35(2 Suppl):S96–S115. doi: 10.1016/j.amepre.2008.05.003.

- 18. Todd P, Benbasat I. The use of information in decision making: An experimental investigation of the impact of computer-based decision aids. MIS Q. 1992;16(3):373–393.

- 19. Anderson JR. Problem solving and learning. Am Psychol. 1993;48(1):35–44.

- 20. O’Connor AM, Légaré F, Stacey D. Risk communication in practice: The contribution of decision aids. BMJ. 2003;327(7417):736–740. doi: 10.1136/bmj.327.7417.736.

- 21. Weaver W. Science and complexity. Am Sci. 1948;36(4):536–544.

- 22. Treise D, Weigold MF. Advancing science communication: A survey of science communicators. Sci Commun. 2002;23(3):310–322.

- 23. Stocklmayer SM, Gore MM, Bryant CR, editors. Science Communication in Theory and Practice. Vol 14. Netherlands: Kluwer Academic Publishers; 2001.

- 24. Lipkus IM. Numeric, verbal, and visual formats of conveying health risks: Suggested best practices and future recommendations. Med Decis Making. 2007;27(5):696–713. doi: 10.1177/0272989X07307271.

- 25. Cuite CL, Weinstein ND, Emmons K, Colditz G. A test of numeric formats for communicating risk probabilities. Med Decis Making. 2008;28(3):377–384. doi: 10.1177/0272989X08315246.

- 26. Mertens DM. Research and Evaluation in Education and Psychology: Integrating Diversity with Quantitative, Qualitative, and Mixed Methods. Thousand Oaks, CA: Sage Publications; 2009.

- 27. Beard CM, Wilson JP. The Power of Experiential Learning. London: Kogan Page Publishers; 2002.

- 28. Trope Y, Liberman N. Construal-level theory of psychological distance. Psychol Rev. 2010;117(2):440–463. doi: 10.1037/a0018963.

- 29. Wade SE. Research on importance and interest: Implications for curriculum development and future research. Educ Psychol Rev. 2001;13(3):243–261.

- 30. Hidi S. Interest and its contribution as a mental resource for learning. Rev Educ Res. 1990;60(4):549–571.

- 31. Spence A, Poortinga W, Pidgeon N. The psychological distance of climate change. Risk Anal. 2012;32(6):957–972. doi: 10.1111/j.1539-6924.2011.01695.x.

- 32. Slovic P, Fischhoff B, Lichtenstein S. Accident probabilities and seat belt usage: A psychological perspective. Accid Anal Prev. 1978;10(4):281–285.

- 33. Ericsson KA, Simon HA. Verbal reports as data. Psychol Rev. 1980;87(3):215–251.

- 34. Friedman DB, Corwin SJ, Dominick GM, Rose ID. African American men’s understanding and perceptions about prostate cancer: Why multiple dimensions of health literacy are important in cancer communication. J Community Health. 2009;34(5):449–460. doi: 10.1007/s10900-009-9167-3.

- 35. Tan HT, Wang E, Zhou B. How Does Readability Influence Investors' Judgments? Consistency of Benchmark Performance Matters. Rochester, NY: Social Science Research Network; 2013.

- 36. Wynne B. Creating public alienation: Expert cultures of risk and ethics on GMOs. Sci Cult (Lond). 2001;10(4):445–481. doi: 10.1080/09505430120093586.

- 37. Marris C. Public views on GMOs: Deconstructing the myths. Stakeholders in the GMO debate often describe public opinion as irrational. But do they really understand the public? EMBO Rep. 2001;2(7):545–548. doi: 10.1093/embo-reports/kve142.

- 38. Cook G, Pieri E, Robbins PT. ‘The scientists think and the public feels’: Expert perceptions of the discourse of GM food. Discourse Soc. 2004;15(4):433–449.

- 39. Cosmides L, Tooby J. Beyond intuition and instinct blindness: Toward an evolutionarily rigorous cognitive science. Cognition. 1994;50(1-3):41–77. doi: 10.1016/0010-0277(94)90020-5.

- 40. Myers DG. Intuition: Its Powers and Perils. New Haven, CT: Yale University Press; 2004.

- 41. Merton RK. The focussed interview and focus groups: Continuities and discontinuities. Public Opin Q. 1987;51(4):550–566.

- 42. Meehl GA, et al. How much more global warming and sea level rise? Science. 2005;307(5716):1769–1772. doi: 10.1126/science.1106663.

- 43. Parris A, et al. Global Sea Level Rise Scenarios for the United States National Climate Assessment. NOAA Technical Report OAR CPO-1. Silver Spring, MD: National Oceanic and Atmospheric Administration; 2012.

- 44. Reyna VF, Nelson WL, Han PK, Dieckmann NF. How numeracy influences risk comprehension and medical decision making. Psychol Bull. 2009;135(6):943–973. doi: 10.1037/a0017327.

- 45. Peters E, Hart PS, Fraenkel L. Informing patients: The influence of numeracy, framing, and format of side effect information on risk perceptions. Med Decis Making. 2011;31(3):432–436. doi: 10.1177/0272989X10391672.

- 46. Davis TC, et al. Low literacy impairs comprehension of prescription drug warning labels. J Gen Intern Med. 2006;21(8):847–851. doi: 10.1111/j.1525-1497.2006.00529.x.

- 47. Fischhoff B, Brewer N, Downs JS. Communicating Risks and Benefits: An Evidence-Based User's Guide. Washington, DC: Food and Drug Administration; 2011.

- 48. Baldwin RS, Peleg-Bruckner Z, McClintock AH. Effects of topic interest and prior knowledge on reading comprehension. Read Res Q. 1985;20(4):497–504.

- 49. Ackerman PL. A theory of adult intellectual development: Process, personality, interests, and knowledge. Intelligence. 1996;22(2):227–257.

- 50. Wong-Parodi G, Dowlatabadi H, McDaniels T, Ray I. Influencing attitudes toward carbon capture and sequestration: A social marketing approach. Environ Sci Technol. 2011;45(16):6743–6751. doi: 10.1021/es201391g.

- 51. Klein JT. Prospects for transdisciplinarity. Futures. 2004;36(4):515–526.

- 52. Rasmussen B, Andersen PD, Borch K. Managing transdisciplinarity in strategic foresight. Creat Innov Manag. 2010;19(1):37–46.