Abstract

Scientific debates in modern societies often blur the lines between the science that is being debated and the political, moral, and legal implications that come with its societal applications. This manuscript traces the origins of this phenomenon to professional norms within the scientific discipline and to the nature and complexities of modern science, and offers an expanded model of science communication that takes into account the political contexts in which science communication takes place. In a second step, it explores what we know from empirical work in political communication, public opinion research, and communication research about the dynamics that determine how issues are debated and attitudes are formed in political environments. Finally, it discusses how and why it will be increasingly important for science communicators to draw from these different literatures to ensure that the voice of the scientific community is heard in the broader societal debates surrounding science.

Keywords: advocacy, medialization, public attitudes, deficit model, motivated reasoning

Some of the most polarizing topics in American politics are scientific ones. Even the existence of phenomena, such as global climate change and evolution, that are widely accepted in the scientific community is questioned by significant proportions of the US public (1, 2). In addition, the regulation and public funding of new technologies, such as stem-cell research, have become highly contested issues in national and local election campaigns (3).

The Blurry Lines Between Science and Politics

The explanations for the blurry boundaries between science and politics are multifaceted, and some are centuries old (4, 5). Indeed, the production of reliable knowledge about the natural world has always been a social and political endeavor (6). There are at least three explanations, however, that are particularly relevant when examining the challenges that science faces in modern democracies.

Scientists as Political Advocates.

First, in most democratic societies, scientists have long played advisory roles to a variety of political entities. In those roles they have shaped policy and regulatory frameworks as members of advisory panels, through expert testimony and as political appointees, and—as a result—have been the target of partisan criticism (7). In some instances, however, scientists have also interfaced with the political arena in roles even more explicitly focused on advocacy. These efforts have focused on both advocacy for specific investments in science and recommendations on specific applications of science in societal contexts.

One example is Albert Einstein’s letter to President Roosevelt in 1939, drafted by fellow physicist Leo Szilard, urging the US government to accelerate academic research on nuclear chain reactions and to maintain “permanent contact … between the Administration and the group of physicists working on chain reactions in America” (8). The letter ultimately led to the Manhattan Engineering District, also known as the Manhattan Project, a program designed to develop atomic weapons before Nazi Germany could. Six years later, Szilard (9) drafted a petition, this time to President Truman, which did not advocate for investments in science but directly addressed the moral and political implications of using the scientific work of the Manhattan Project for military purposes. In the petition, Szilard and 69 other Manhattan Project scientists urged Truman to use a nuclear bomb against Japan only under extreme circumstances and to consider “all the other moral responsibilities which are involved” (9).

In the mid-1990s, Rice University chemist Richard Smalley played a similarly instrumental role when he openly lobbied Congress and two White House administrations to establish and fund the National Nanotechnology Initiative (NNI), a multibillion-dollar program that today coordinates the efforts in nanoscale science, engineering, and technology for 25 different US federal agencies (10). After winning a Nobel Prize in Chemistry in 1996, Smalley engaged in advocacy efforts in the political arena that made him “the most visible champion of nanotechnology and its promise to lead to revolutionary sustainable technologies” (11) and that were instrumental in creating the NNI. Although efforts like Smalley's can be tremendously important in securing funding for particular areas of academic research, they also create perceived or real overlaps between the realms of science and politics.

Such overlaps are even more frequent for scientists who work as staff members, advisers, collaborators, or board members at think tanks or advocacy groups. In these roles, scientists often publish not just peer-reviewed work but also reports and other nonrefereed literature that draw on their own credibility as scientists to lend scientific credibility to the positions of the sponsoring organization. Roger Pielke, Jr., for example, critiques scientists for too often playing the role of “stealth advocates” who discuss only a subset of potential policy options for a problem their research has identified rather than presenting the tradeoffs and advantages of a broader, comprehensive portfolio of policy choices (12). This tendency to selectively highlight policy options might be motivated, at least in part, by scientists’ own political preferences. Surveys among leading scientists in nanotechnology, for instance, show that, after controlling for discipline, seniority, and scientific judgments about risks and benefits, scientists’ support for regulatory options was significantly correlated with their ideological stances, with liberal scientists being more likely to support regulations than conservative scientists (13).

The Media Orientation of the Scientific Profession.

Some of these overlaps are directly related to a second explanation for the blurring boundaries between science and politics, which has been described as the “medialization” of science (14). Medialization refers to the notion that science and media are increasingly linked: “With the growing importance of the media in shaping public opinion, conscience, and perception on the one hand and a growing dependence of science on scarce resources and thus on public acceptance on the other, science will become increasingly media-oriented” (14).

Medialization therefore assumes a reciprocal relationship between scientists and media. Media, on the one hand, rely on public scholars or celebrity scientists for newsworthy portrayals of scientific breakthroughs. Scientists, on the other hand, increasingly take advantage of traditional and online media to increase the impact of their research beyond the finite network of academic publishing and to advocate for more public investment in science. A survey comparing responses from scientists in France, Germany, Japan, the United Kingdom, and the United States, for example, showed that—across all five countries—85% of respondents saw the potential “influence on public debate” as a “very important” or “important” benefit of scientists engaging with journalists. Similarly, 95% of scientists answered that creating “a more positive public attitude towards research” was a very important or important benefit, and, for 77% of scientists, “increased visibility for sponsors and funding bodies” was a key benefit (15).

It is important to note, of course, that these results were based on samples of epidemiologists and stem cell researchers: i.e., scientists who work in areas of research that are likely to be of broader public interest than, say, mathematics or theoretical physics. Therefore, levels of medialization likely differ across, and probably even within, disciplines. In fact, previous research has shown that the amount of coverage that scientific issues receive depends—to some degree—on the nature of the societal debates surrounding them and that coverage increases dramatically if and when issues become engulfed in political or societal controversy (16, 17).

Regardless of these differences, an increasing orientation among some scientists toward media and public audiences to shape public attitudes or even attract funding to their research programs also creates explicit overlaps between science and other public and political spheres. Scientists communicating their work in these contexts engage in communication that is—intentionally or not—at least partly political.

The Nature of Modern Science.

A third reason for the blurring of boundaries between science and politics is the nature of modern science itself. Science is in the midst of a rapid emergence of interdisciplinary fields. This development includes what some have called a Nano-Bio-Info-Cogno (NBIC) convergence (18) of new interdisciplinary fields at various interfaces of nanotechnology, biotechnology, cognitive science, and information technology.

It has been argued that debates about whether modern science is increasingly interdisciplinary have been part of American science since at least World War II (19). NBIC technologies, however, severely exacerbate a host of existing challenges when it comes to communicating about science with lay audiences. As discussed elsewhere (4), these challenges include (i) the scientific complexity of emerging interdisciplinary fields of research, such as synthetic biology or neurobiology, (ii) the pace of innovation in some of those fields, and (iii) the nature of public debates that accompany different applications of NBIC technologies.

The uniquely high pace of innovation surrounding NBIC technologies (18) and the impact it would have on the science–public interface had already been anticipated by some members of the scientific community decades earlier. In a 1967 editorial in the journal Science, for example, geneticist and Nobel laureate Marshall Nirenberg singled out DNA research as one emerging field of science in which rapid breakthroughs would have far-reaching impacts on society: “New information is being obtained in the field of biochemical genetics at an extremely rapid rate. … [M]an may be able to program his own cells with synthetic information long before he will be able to assess adequately the long-term consequences of such alterations, long before he will be able to formulate goals, and long before he can resolve the ethical and moral problems which will be raised” (20).

Nirenberg’s predictions captured many of the unique types of ethical, legal, moral, and political debates that now accompany NBIC technologies and their applications, partly because of their rapid pace of development. In the early days of the NNI, ethicist George Khushf outlined some of these potential socio-political implications of NBIC technologies: “The more radical the technology, the more radical the ethical challenges, and there is every reason to expect that the kinds of advancements associated with the NBIC technologies will involve such radical ethical challenges. … My point, however, is not simply that we can expect many ethical issues to arise out of NBIC convergence. There is a deeper, more complex problem associated with the accelerating rate of development. We are already approaching a stage at which ethical issues are emerging, one upon another, at a rate that outstrips our capacity to think through and appropriately respond” (21).

In other words, NBIC technologies and modern science, more generally, pose ethical, legal, moral, and political challenges that democratic societies may be increasingly ill-equipped to resolve, especially given the accelerated rate with which they appear on the public agenda. This development is partly due to the fact that—although many of these challenges arise from scientific breakthroughs—they do not have scientific answers. Science can tell citizens how vaccines work, what their likely side effects are, and what the risks are for individuals and society if a certain percentage of the population ends up not getting vaccinated for various reasons. The vaccination issue, however, also raises a series of ethical and political questions: Should vaccinations be mandated? If yes, should there be exceptions based on religious concerns? What kinds of tradeoffs should societies allow between a person’s individual choice to not get vaccinated and the increased risks for all members of society if fewer people get vaccinated? And how can we harmonize regulatory frameworks across different political systems with different underlying value systems to minimize the likelihood of global epidemics? None of these questions have scientific answers: i.e., answers that are based on scientific facts or even accurate judgments of risks and benefits. Instead, the answers to these questions are moral, philosophical, and political in nature.

As a result, public communication about modern science is inherently political, whether we like it or not. Many research areas, such as the ones that developed out of the NBIC convergence discussed earlier (e.g., tissue engineering, nanomedicine, and synthetic biology), raise significant ethical, legal, and social questions with answers that are both scientific and political in nature. How can we ensure the privacy and safety of human genetic information and weigh commercial interests against the rights of individuals? Is it possible to ensure equal access to medical treatments or applications developed from this research, regardless of race, ethnicity, and socioeconomic status? And how can society come to an agreement about the right balance between the scientific importance of research on synthetic biology, for instance, and the ethical, moral, and religious concerns that might arise from that research among different public stakeholders?

The tension between what science can do and what might be ethically, legally, or socially acceptable has become particularly visible for NBIC technologies. When J. Craig Venter and his team transplanted a chemically synthesized genome into a bacterial cell in 2010 (22), the potential of their findings for creating “synthetic life” was immediately apparent. In fact, Venter himself referred to the team’s work as an “important step … both scientifically and philosophically” and described their work as “the first incidence in science where the extensive bioethical review took place before the experiments were done. It’s part of an ongoing process that we’ve been driving, trying to make sure that the science proceeds in an ethical fashion, that we’re being thoughtful about what we do and looking forward to the implications to the future” (23).

Indeed, political stakeholders have long claimed that modern NBIC-type science is inextricably linked to the need for political decision making. At a meeting in Pacific Grove, CA, in February 1975, an international group of scientists decided that strict controls should be placed on the use of recombinant DNA: i.e., transplanting genes from one organism into another (24). The warnings from this meeting, often referred to as the Asilomar Conference, were echoed in a report to the Subcommittee on Health and Scientific Research of the US Senate Committee on Human Resources (25), which argued that it was “increasingly important to society that the serious problems which arise at the interface between science and society be carefully identified, and that mechanisms and models be devised, for the solution of these problems” (25). For US Senator Jacob Javits, those solutions were inherently political ones because, as he put it in 1976, a “scientist is no more trained to decide finally the moral and political implications of his or her work than the public—and its elected representatives—is trained to decide finally on scientific methodologies” (26).

Communicating “Politicized” Science

Unfortunately, science has been slow in adjusting its models for communicating with lay audiences to these realities. Instead, most attempts by the scientific community to help the public work through the policy and regulatory difficulties surrounding modern science have continued to focus on closing informational deficits, either with respect to public understanding of new areas of science or to weighing the potential risks and benefits that emerging technologies bring with them.

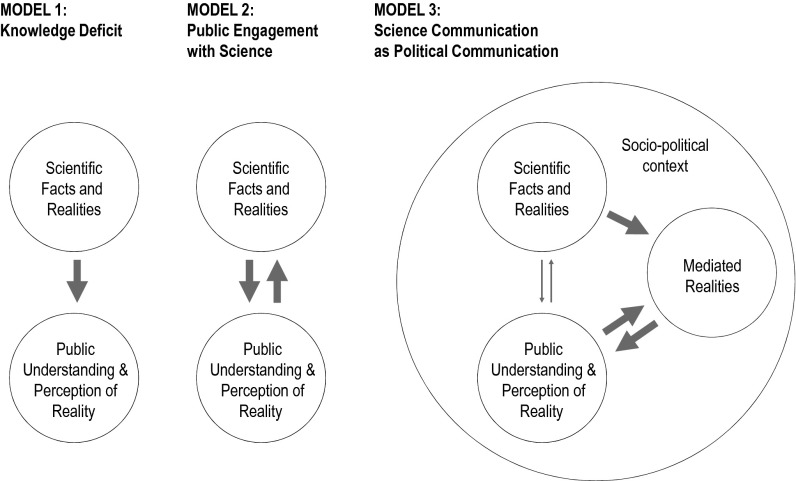

Model 1 in Fig. 1 outlines these so-called “knowledge deficit models” of science communication. They are built on the assumption that (i) higher levels of scientific literacy among the citizenry in specific scientific areas also correlate with increased public support for scientific research in those areas and that (ii) effective science communication, therefore, should be concerned with increasing levels of scientific understanding among various lay publics. The lack of consistent empirical support for this model across numerous studies has been well documented (4).

Fig. 1. Models of science communication: How views of the science–society interface among social scientists and practitioners have evolved over time.

In recent years, there has been a concerted effort from the leadership of a number of scientific bodies to replace ineffective knowledge-deficit models with efforts to engage with the public in a more bidirectional dialogue (27). This effort has gone hand in hand with a “growing political commitment at the highest levels to giving citizens more of a voice in the decisions that affect their lives, and to engaging citizens in making government more responsive and accountable” (28). This enthusiasm is also shared by some institutional stakeholders. In a letter to House Speaker Nancy Pelosi urging the passage of the 2008 National Nanotechnology Initiative Amendment Act, for example, Institute of Electrical and Electronics Engineers (IEEE) President Russell J. Lefevre emphasized the potential of public meetings and other outreach tools to “reach tens of thousands of people with information about nanoscience” (29).

The renewed attention to public meetings, town halls, science cafes, and other modes of citizen engagement is particularly pronounced for the emerging NBIC field. Public meetings as a tool for formal citizen engagement were an integral part both of a 2000 United Kingdom House of Lords report (30), which recommended making direct dialogue with the public a mandatory and integral part of policy processes, and of the 2003 US Nanotechnology Research and Development Act, which mandated “convening of regular and ongoing public discussions, through mechanisms such as citizens’ panels, consensus conferences, and educational events” (31).

Model 2 in Fig. 1 illustrates the basic mechanisms behind all these efforts—often summarized under the label “public engagement model” of science communication (32–35). As Fig. 1 shows, engagement models of science communication typically highlight the two-way nature of communication between the scientific community and various lay publics and break with the implicit one-directional idea of “spreading the word about science” or “building excitement about science” that had been the basis of knowledge-deficit models.

Engagement models also go beyond deficit models in their broader focus on what kinds of content are part of the two-way conversations between science and various publics, including debates about the scientific aspects of new technologies, but also about ethical, legal, and social issues associated with them.

In reality, however, scientists and various public stakeholders are just some of many voices in the political sphere, even for debates that explicitly focus on scientific breakthroughs or their applications. This is not to say that the two-way dialogue envisioned by engagement models is not a crucially important tool for connecting with highly interested publics that are most likely to participate in engagement exercises (36) or publics that are already predisposed positively toward science, based on their socioeconomic background (37). However, these direct forms of communication (through museum exhibits, science cafes, or other forms of engagement) cannot reach broad cross-sections of the citizenry (36). And even for those audiences who can be reached by public engagement efforts, any potential direct effects of scientist–public communication need to be understood in relation to the countless political or mediated messages related to science that citizens are exposed to every day.

Models 1 and 2, therefore, remain somewhat artificial because they both presuppose interactions between science and various publics that occur in a socio-political vacuum. As a result, they do not account for the larger political contexts in which science–public interactions take place: the way issues get portrayed in modern media environments, how different stakeholders compete for attention in the political sphere, or the ways in which citizens interact with the (often contradictory) streams of information they are constantly exposed to. Using model 3 as a blueprint, I will devote the following sections to a more granular overview of what we know from empirical social science about what happens when science enters the political marketplace.

Science as “Mediated” Reality—and Why It Matters

A first way in which model 3 expands on traditional engagement models is by acknowledging the fact that the majority of encounters that members of the nonscientific public have with scientific issues—outside of formal educational settings—do not involve any form of direct public engagement. Instead, most citizens hear about scientific issues from various online and offline media (38). Their exposure to science and scientists, in other words, is not a direct one, but indirect through mass or online media.

The relatively small impact of engagement efforts is illustrated in model 3 through the thin arrows connecting “scientific realities” and “public understanding/perceptions.” By contrast, model 3 shows much stronger links from “scientific realities” to “public understanding/perceptions” that run indirectly through “mediated realities.” In other words, even though lay audiences may never have heard a scientist talk about her work or read any primary literature on a scientific topic, they likely have read or heard about federal funding restrictions on stem-cell research, about efforts to promote the teaching of intelligent design in particular school districts, or about the latest consumer products using nanotechnology.

This role of mediated realities as a conduit between science and lay audiences is an important one for citizens. It is based on the idea that none of us can pay attention to everything that is going on in the world around us on a daily basis. An important function of any type of news organization, therefore, is to preselect relevant news for citizens to allow them to make informed personal and democratic decisions without having to sift through seemingly infinite amounts of information on a daily basis. As a result, mediated realities heavily influence both public perceptions of science more generally, fact-based or not, and public understanding of scientific topics.

Building Scientific Agendas.

Empirical communication research has been examining the idea of news selection, or “gatekeeping” as it is sometimes called, at least since the 1950s (39). This research has been partly concerned with identifying the professional norms that guide journalists’ work and how they impact editorial choices, using surveys of journalists or participant observation in newsrooms (40). However, a second focus of this body of research has been on the democratic functions that news media fulfill by selecting some issues over others.

Much of this research is driven by variants of two broad normative models of the role that media should play in democratic societies: Should media outlets in democratic societies simply mirror reality as closely as possible with little editorial influence over the prominence or frequency of coverage of particular issues? Or is it necessary for news outlets to fulfill a watchdog function (41) in some instances: i.e., to intentionally devote disproportionately more attention to an issue to alert society to the need for policy solutions? Climate change is a good example of this dilemma. Should media coverage, for example, give a voice to the small minority of climate scientists who question anthropogenic climate change to alert readers to “all sides” of the issue, or should coverage follow the consensus view held by the vast majority of climate scientists on the issue (42, 43)?

Although there may be disagreement on the normative goals of gatekeeping or news selection, there is consensus across most empirical work in this area that—by being able to cover only a very finite subset of events and issues—news media create a “mediated reality” that is different from, and potentially more impactful in the political arena than, objective reality. Sociologists Harvey Molotch and Marilyn Lester summarized the unique importance of mediated realities best when they wrote: “[W]hat is ‘really happening’ is identical with what people attend to” in news media (44).

Since the 1970s, a significant portion of the empirical work in this area has shifted to work on “agenda building” (45). Agenda building deals with the idea that the selection of news is not just a function of newsroom routines and professional norms among journalists, but is in fact an outcome of strategic efforts by many stakeholders in the policy arena who compete with one another for access to the news agenda—or to “build” the news agenda. Model 3 in Fig. 1 shows an arrow from “scientific realities” to “mediated realities” that represents agenda-building efforts by scientists or universities: i.e., attempts to attract news coverage on a particular study or scientific initiative, to steer public debate on a scientific issue, or to help change health-related behaviors. All of these agenda-building efforts, of course, take place in the larger political sphere shown in model 3, with fierce competition for access to the news agenda from interest groups, nonprofits, (scientific) associations, policy makers, corporations, and many other entities that all have stakes in communicating with different publics about scientific issues.

Three aspects of agenda building are particularly noteworthy when it comes to communicating science. First, news holes (i.e., the space available for content in news outlets) have been shrinking, especially for scientific issues (4). Shrinking news holes make the competition over access to this space even more pronounced. In addition, the fact that major news outlets employ fewer and fewer full-time science journalists (4, 46) further limits the likelihood of scientific issues making it onto the news agenda.

Second, research shows that science seldom gets covered for the sake of science alone. In an analysis of almost three decades of public debate around stem-cell research in the United States, for instance, communication researchers Matthew Nisbet, Dominique Brossard, and Adrienne Kroepsch examined the interplay among scientific publications on the topic, press releases from a variety of corporate, political, and academic stakeholders, Capitol Hill testimony, and news articles (16). Despite significant amounts of research activity (operationalized as the number of peer-reviewed journal publications on stem-cell research), media coverage of the issue remained at fairly low levels until the second half of the 1990s.

Beginning in the late 1990s, Nisbet et al.’s data show an increase in agenda-building efforts (measured through the number of press releases on the issue and also the number of Capitol Hill hearings). This increase also coincided with an increase in press coverage. Finally, new developments in embryonic stem cell research triggered a wave of press releases and congressional hearings on the topic that positioned the issue prominently on the public agenda in the early 2000s.

These findings confirm results from previous research that have shown that issues are much more likely to receive attention from news media once politicians and other public stakeholders become involved (47). This correlation creates an interesting dilemma for science. On the one hand, the scientific community can benefit greatly from partnerships with other stakeholders when trying to draw media attention to specific scientific initiatives or breakthroughs. Senator Orrin Hatch’s visible public support for federal funding of stem-cell research is a good example. On the other hand, stakeholders that can help increase the visibility of scientific issues in public discourse often have policy goals that differ from those of the academic community. The Nisbet et al. study, for instance, shows nicely that the same Capitol Hill testimonies and press releases that helped push stem-cell research onto the media agenda were also connected to the emerging political conflicts surrounding embryonic stem cell lines that eventually led to the restrictions on federal funding for stem-cell research implemented under the George W. Bush administration.

The third aspect of agenda building that is particularly relevant to science relates to the fact that lay publics who seek information about scientific topics increasingly turn to online sources (48, 49). However, web-based search engines and other automated tools for online information retrieval—which are now the most frequently used source by the public when seeking specific scientific information (38)—prioritize information very differently than professional news outlets do. In other words, the traditional notion of realities that are “mediated” by traditional news outlets is beginning to change.

Research has shown, for example, that the search rankings produced by Google’s automated algorithms, which draw on search traffic, user preferences, and a host of other factors, differ significantly from the types of content that are available online or the terms users initially searched for (50). Similarly, news outlets increasingly rely on algorithms and click rates to tailor news selection and placement based on the types of content that are most popular with users (51). In other words, we are moving toward information environments where issue priorities are built at least partly by search and news algorithms. Given that only 16% of the US public reports in surveys that they follow news about science “very closely” (down from 22% in 1998) (38), an emerging media landscape that further tailors searches and news toward these popular preferences rather than the types of (scientific) content that citizens need to make sound policy choices is at least somewhat disconcerting.

How Coverage Can Prime Attitudes.

The amount of scholarly attention that agenda building has received might—at first glance—be surprising. After all, agenda building is concerned only with which issues are being covered and tells us nothing about the tone of coverage or even factual accuracy of coverage.

Empirical work since the 1970s, however, has also examined how the emphasis or importance assigned to issues by mass media translates into perceptions of importance (or salience) of issues among audiences (52) and ultimately can alter attitudes on issues or political figures (53). The first step (i.e., the transfer of salience from news media to audiences) is called “agenda setting.” The second step (i.e., the influence that issues that are salient in people’s minds can have on public attitudes) is called “media priming.”

Media priming as a concept borrows from the more narrowly defined priming concept in cognitive psychology. It hypothesizes some of the same mechanisms and assumes that the more prominently an issue is being covered in mass media, the more likely it is to activate relevant areas in an audience member’s brain (54) and the easier it will be for the person to retrieve related considerations from memory when asked to form a judgment (55).

Applying this logic to media-effects research, previous empirical work was able to directly link the prominence with which particular issues were covered in news media to subsequent attitudinal judgments among audiences (53, 56–58). This work relies on memory-based models of information processing. Memory-based models assume that most attitudes we hold are not particularly stable, but instead are based on the considerations that are most easily retrievable from memory when we are asked to form a judgment (59). By increasing salience and retrievability, media can therefore play an important role in shaping subsequent judgments.

One of the more recent illustrations of the important role that salience and retrievability from memory can play in shaping science attitudes, in particular, comes from survey-based work on public attitudes toward climate change. Merging national survey data and location-based climate data on temperature anomalies, Hamilton and Stampone (60) examined the relationship between weather patterns at the place of the interview and beliefs in anthropogenic climate change. Their findings show that, for strong partisans at both ends of the political spectrum, real-world temperatures made little difference. Self-identified Democrats were likely to believe in climate change and Republicans were unlikely to do so. Among independents, however, salient considerations related to local temperatures on the day of the interview and the previous day were a significant predictor of attitudes toward climate change. The more unseasonably warm the temperature was at the location of the respondent, the more likely he or she was to believe in climate change, and vice versa. In other words, a simple priming effect based on short-term fluctuations in local weather patterns was enough to significantly alter views on climate change.

How We Talk About Science: The Framing of Scientific Debates

A second way in which model 3 in Fig. 1 expands on traditional engagement models is by modeling two-way interactions between various nonscientific publics and media. In fact, most current media-effects models are based on the idea that media effects are amplified or attenuated by particular audience characteristics that make it more or less likely for recipients to selectively process media messages (61) or for messages to resonate with long-term schemas held by audiences (57, 58).

Framing is one of the more prominent examples. It refers to the idea that the way a given piece of information is presented in media, either visually or textually, can significantly affect how audiences process the information. The mechanism behind media framing is known as an applicability effect: i.e., a message has a significantly stronger effect if it resonates with (or is applicable to) underlying audience schemas than if it does not (62). The importance of framing for science communication has been documented extensively elsewhere (4, 16, 63). However, two considerations related to framing are worth highlighting when it comes to communicating science in political arenas.

First, there is no such thing as an unframed message. Framing is a tool for conveying meaning by tying the content of communication to existing cognitive schemas and helping the recipient make sense of the message. Framing is therefore inextricably linked to any effective form of human communication. And scientific discourse is no exception. Grant proposals and submissions to scientific journals, such as PNAS, Nature, or Science, present (or frame) findings in ways that convey their novelty and transformative nature.

The challenge for science communication, therefore, is not to debate whether we should find better frames with which we can present science to the public (which would be more in line with outdated deficit models). Instead, we should focus on what types of frames allow us to (i) present science in a way that opens two-way communication channels with audiences that science typically does not connect with, by offering presentations of science in mediated and online settings that resonate with their existing cognitive schemas, and (ii) present issues in a way that "resonates" and is therefore accessible to different groups of nonscientific audiences, regardless of their prior scientific training or interest.

Second, the socio-political contexts highlighted in model 3 create an environment in which the frames offered by the scientific community when communicating its work and its societal impacts (e.g., the potential of nanotechnology to produce new and effective ways of treating cancer) (10) to a broader audience will be met with a wide variety of competing frames offered by other stakeholders. Greenpeace's "Frankenfood" reframing of genetically modified organisms (GMOs) is just one recent example. Similarly, the Discovery Institute has used the frames "teach the controversy" and "it's just a theory" in its antievolution campaign to undermine the perceived scientific consensus among lay audiences.

In fact, we know from previous research that most scientific issues go through a fairly predictable life cycle of frames in public discourse: they start with initial excitement about the promise of social progress and the economic potential of new technologies, then shift to scientific uncertainties, risks, and moral concerns, and finally move to framing the technology in terms of the societal controversies surrounding it (63, 64). Understanding and anticipating this framing life cycle is critical for scientists to meaningfully communicate their research when it first enters the public arena and to continue to have their voices heard in the larger political debates about emerging technologies and their applications over time.

Perceptions of Our Social Environment—and Why They Matter for How We Communicate Science

A third way in which model 3 expands on traditional engagement models is by emphasizing the importance of social environments and socio-political cues for any form of successful communication about science.

Social science has long understood the importance of social cues for how we make decisions. In his seminal experiments on conformity, psychologist Solomon Asch (65) asked participants to judge the relative length of a line compared with three alternatives, one of which had the same length. Each participant faced a majority of confederates who posed as participants and unanimously identified one of the incorrect lines as being of equal length. With some variations—based on how unanimous the majority groups were and how obvious their errors were in terms of length differences—Asch found systematically lower levels of willingness to pick the correct line for participants who faced public pressure to go with an incorrect judgment.

Asch's experiments provided some of the earliest empirical support for the idea that our social environment has powerful influences on our judgments and on the views we express publicly. Two aspects of his findings are particularly relevant to how we communicate science in social environments. First, Asch's experiments were based on the assumption that expressing views that are opposed by a large majority of people around us creates feelings of discomfort or even fear. In fact, Asch's team collected qualitative responses after the experimental debriefing. In their answers, many participants talked about how painful it was to go against the majority view and how they feared being singled out or even ostracized. Respondents referred to the "stigma of being a nonconformist" or feeling "like a black sheep" (65). Others talked about feeling "the need to conform. … Mob psychology builds up on you. It was more pleasant to agree than to disagree" (65).

Second, although the influence of social pressure might be less surprising for expressions of subjective opinions, Asch's experiments tested people's willingness to express views that they knew to be incorrect simply because they were facing social pressure to conform to incorrect majority views. In other words, participants knowingly identified incorrect facts as correct ones when faced with majority pressure. Some of the comments in the postexperimental debriefing highlight the tension some respondents felt between knowing the correct answer and not wanting to go against the majority view. As one participant put it: "I agreed less because they were right than because I wanted to agree with them. It takes a lot of nerve to go in opposition" (65).

Asch's findings were the foundation for a body of work in the second half of the 20th century examining the influences of social environments on individual judgments and behaviors. One of the most interesting models for science communication was put forth by communication researcher Elizabeth Noelle-Neumann (66) in her theory of the "spiral of silence." The spiral of silence provides a conceptual blueprint for how public opinion dynamics on controversial issues are shaped, at least partly, by social pressure. Like Asch, Noelle-Neumann assumes that most people are to some degree fearful of isolating themselves in social settings and that this "fear of isolation," as it is called in the spiral-of-silence model, makes them less likely to express unpopular opinions in public. In fact, Noelle-Neumann suggests that people's innate fear of isolation also makes them scan their social environment for cues about which viewpoints are shared by most people and which ones are not. As a result, people who see their own views in the minority are less likely to express them publicly, which in turn makes the minority less visible in public debate (or in the "climate of opinion," as it is called in the spiral-of-silence model). The climate of opinion, of course, is what both media and individuals use to judge which viewpoints are in the minority or majority (66).

Fig. 1 outlines the resulting spiraling process using the issue of GMOs as an example. Individuals who see themselves in the minority with their viewpoints on GMOs are more likely to fall silent. This reluctance to express their opinions means that their view on GMOs is featured less prominently in public debates, which, in turn, shapes other people's and mass media's perceptions of which views are in the minority and which are in the majority. Over time, this spiraling process establishes one opinion as the predominant one as the other falls increasingly silent. Spiraling processes can be attenuated or exacerbated by reference groups (66). These homogeneous social networks can accelerate spiraling processes when they are consistent with larger public opinion climates, or slow down or even counteract spiraling processes by slanting individuals' perceptions of larger opinion climates and shielding them from potential threats of isolation.

Different aspects of the spiral of silence have been tested in countless survey-based and experimental studies since the theory was first presented (67), and, despite both theoretical and operational critiques, its main predictions have been confirmed fairly consistently across political and scientific issues (68). Again using GMOs as a case study, more recent work has operationalized willingness to express opinions among college students by asking them whether they would participate in a separate follow-up study that required them to discuss the issue with fellow students who held different opinions (69). Controlling for various demographic factors, issue involvement, and knowledge about the issue, students with higher levels of fear of isolation, and those who perceived the overall opinion climate as incongruent with their own, were significantly less willing to engage in discussions with others on the topic of genetically modified organisms.

Science Communication as Political Communication: A Dual Use Technology?

Agenda building, priming, framing, and the spiral of silence are just a few concepts from different social scientific disciplines that have profound implications for how science gets communicated in democratic societies. Unfortunately, both bench scientists and social scientists have failed to ensure that empirical social science serves as the foundation of efforts to close science–public divides. On the bench-science side, many efforts to better communicate science have relied on hunches and intuition instead of building on insights from the social science research presented here. However, social scientists are equally at fault for not being as proactive in many cases as they could be in doing research that offers policy-relevant insights and for not actively seeking out audiences outside their discipline to inform public debates.

This lack of intellectual cross-fertilization between the bench and social sciences has also resulted in a relatively narrow focus on two primary outcomes of science communication or public engagement with science: (i) levels of information among various publics and (ii) perceptions of risks and benefits. Both of these outcomes are crucially important variables in judging the societal value of emerging technologies, of course. However, the multilayered public impacts that modern science brings with it require debates that go well beyond lay audiences' understanding of the science or of the risks and benefits of a technology. There is no scientific answer to the question of whether we as a society should, for example, try to create life in a scientific laboratory. Instead, the answer will require democratic decision making that draws on moral values, that weighs complex political options, and that includes debates about the ethical and legal aspects surrounding emerging technologies.

However, all of these debates should be based on the best scientific input available. Given this changing nature of scientific debates in the United States and elsewhere, science communication will therefore have to draw much more than in the past on theorizing and empirical work in political communication, public opinion research, and related fields. This reliance on empirical social science will be crucial to understand and participate in the processes that determine how science gets communicated and debated in real-world settings. Model 3 in Fig. 1 illustrates many of these complexities.

To a certain degree, the scientific community has no choice. Unless scientists want to increasingly have their voice drowned out by other stakeholders in the broader societal debates surrounding their work, they will have to realize that all of the theoretical models and findings outlined in this essay have dual uses. On the one hand, it may be disconcerting to some that public attitudes toward issues such as climate change are not always based on a comprehensive understanding of climate science and are highly susceptible to priming based on simple temperature anomalies. On the other hand, research insights from agenda building, priming, and framing also help us understand why it is so important for scientists to be involved in efforts to keep issues on the public agenda, to pay attention to the language we use when talking about science, or to provide nonexpert publics with visible illustrations of particular applications of new technologies.

As outlined earlier, priming research tells us that, when asked for their opinions, audiences will rely on the most easily retrievable considerations to form their judgments. Work by Cacciatore et al., for instance, shows that the impact that risk judgments related to nanotechnology had on overall attitudes toward nanotechnology differed significantly, depending on the types of applications that were top-of-mind when respondents were asked to make these judgments (70). In other words, respondents who had the same level of concern about potential risks translated those risk perceptions into different levels of support for more research or funding for nanotechnology, depending on which application of nanotechnology was most easily retrievable from memory when they were asked to make these judgments. Similar research in the area of biotechnology showed that judgments can also depend on specific characteristics of the application. Bauer, for example, showed that publics were more willing to accept medical applications of biotechnology than agricultural ones (71).

Models such as the spiral of silence have equally wide-ranging implications for how we think about science communication. At first glance, of course, the spiral-of-silence model suggests a rather gloomy outlook for science communication. Building on the spiral-of-silence model, it is reasonable to expect that, under some conditions, nonexpert audiences will hold on to and express incorrect views about science, even if the majority of the public disagrees with them. Opinion shifts may not occur, for instance, if the minority holding incorrect views, say about climate change, can take refuge in social environments or reference groups that provide enough reinforcement for their views. Asch's experimental work on conformity pressures, for instance, showed that the presence of even one additional person supporting the minority participant in the experiment "depleted the majority of much of its power" (72). This illustration of the power that reference groups can have in protecting individuals from larger opinion climates helps explain, at least partly, the persistent proportions of respondents in national surveys who believe President Obama is a Muslim or that climate change is a hoax.

The mechanisms outlined in the spiral of silence are also the basis of the communication strategies of many interest groups and nonprofits. Anti-GMO activists, for instance, often rely on activities with high public visibility, such as unregistered demonstrations that lead to arrests, to create media coverage. The hope is that the publicity that these activities create will lead to inflated public perceptions of how widespread the opposition is to a technology, such as GMOs, and potentially trigger spirals of silence in its wake. These mechanisms also highlight the need for scientists and universities to develop proactive communication strategies that accurately portray scientific consensus in public discourse (63). Such efforts will go a long way toward countering the development of spirals of silence based on misperceptions of public support or opposition.

In closing, however, one of the most important takeaways of this article is that many of the mechanisms outlined in model 3 in Fig. 1 are not inconsistent with the goals of models 1 or 2 (73). In fact, one of the most pronounced criticisms against knowledge-deficit models is the fact that empirical realities are often at odds with what they hope to achieve. As discussed earlier, we know from numerous studies that higher levels of knowledge do not necessarily translate into more positive attitudes toward science. In fact, research into motivated reasoning (74, 75) suggests that all of us process information in biased ways—based on preexisting religious views (3, 76), cultural values (77), or ideologies (78). Motivated reasoning is partly a function of confirmation and disconfirmation biases: i.e., a tendency to confirm existing viewpoints by selectively giving more weight to information that supports our initial view and to discount information that does not.

At first glance, information processing based on preexisting values and beliefs does not bode well for science communication because it means that any given fact can be interpreted very differently by different audiences. However, more recent work by psychologist Philip Tetlock (79) suggests that the very same mechanisms that make us reluctant to express unpopular viewpoints in public might also serve an important corrective function when it comes to motivated reasoning. His experimental work shows that the possibility of having one’s views challenged by others can significantly increase the cognitive effort that individuals invest in engaging with arguments on both sides and understanding the issue in all its complexity. Although this social accountability effect attenuates motivated reasoning only in some circumstances, it suggests that exposure to non–like-minded viewpoints (or just the anticipation of such encounters) ultimately promotes more rational decision making.

These findings are directly consistent with survey-based work in political communication showing that citizens with more heterogeneous networks (i.e., social environments that routinely exposed them to views different from their own) also tended to be better informed about politics and more participatory in the political process (80, 81). Most recently, experimental work by communication researcher Michael Xenos et al. (82) examined the potential influences of anticipated interactions on information seeking about nanotechnology. Participants were faced with the prospect of being matched up with others in discussion settings that exposed them to like-minded others, non–like-minded others, or unknown others and were then allowed to browse a gated online information environment with an equal number of articles from three genres: general news, science and medicine news, and editorial and opinion. Respondents in the non–like-minded condition were significantly more likely than those in any of the other experimental conditions and the control to go to editorial and opinion articles first, presumably to find arguments on both sides of the issue to use in subsequent discussions.

The findings of Xenos et al. (82) are directly consistent with Tetlock’s explanation for why the anticipation of social interactions triggers more accuracy-oriented motivations among participants: “They attempted to anticipate the counterarguments and objections that potential critics could raise to their positions. This cognitive reaction can be viewed as an adaptive strategy for maintaining both one's self-esteem and one's social image” (79). In other words, the same social pressures that might distort perceptions and expressions of public opinion can also serve an important corrective function for what would otherwise be biased information processing. Understanding these mechanisms empirically and capitalizing on them for building a better societal discourse about science will be crucial as we go into an era where science and politics will continue to be inextricably linked.

Acknowledgments

Preparation of this paper was supported by National Science Foundation Grants SES-0937591, DMR-0832760, and DRL-0940143.

Footnotes

The author declares no conflict of interest.

This paper results from the Arthur M. Sackler Colloquium of the National Academy of Sciences, “The Science of Science Communication II,” held September 23–25, 2013, at the National Academy of Sciences in Washington, DC. The complete program and video recordings of most presentations are available on the NAS website at www.nasonline.org/science-communication-II.

This article is a PNAS Direct Submission. B.F. is a guest editor invited by the Editorial Board.

References

- 1. Miller JD, Scott EC, Okamoto S. Science communication: Public acceptance of evolution. Science. 2006;313(5788):765–766. doi: 10.1126/science.1126746.

- 2. Nisbet MC, Myers T. The Polls—Trends: Twenty years of public opinion about global warming. Public Opin Q. 2007;71(3):444–470.

- 3. Ho SS, Brossard D, Scheufele DA. Effects of value predispositions, mass media use, and knowledge on public attitudes toward embryonic stem cell research. Int J Public Opin Res. 2008;20(2):171–192.

- 4. Scheufele DA. Communicating science in social settings. Proc Natl Acad Sci USA. 2013;110(Suppl 3):14040–14047. doi: 10.1073/pnas.1213275110.

- 5. Westfall RS. Science and patronage: Galileo and the telescope. Isis. 1985;76(1):11–30.

- 6. Jasanoff S. States of Knowledge: The Co-Production of Science and Social Order. New York: Routledge; 2004.

- 7. Jasanoff S. The Fifth Branch: Science Advisers as Policymakers. Cambridge, MA: Harvard Univ Press; 1990.

- 8. Einstein A. 1939 Letter to President Franklin D. Roosevelt. Available at www.ne.anl.gov/About/legacy/e-letter.shtml. Accessed October 30, 2013.

- 9. Axelrod A. The Real History of the Cold War: A New Look at the Past. New York: Sterling; 2009.

- 10. Halford B. The world according to Rick. Chem Eng News. 2006;84(41):13–19.

- 11. Kroto H. November 8, 2005. Richard Smalley: Chemistry's champion of nanotechnology, he shared a Nobel prize for discovering the Buckyball. The Guardian, p 36.

- 12. Pielke RA Jr. The Honest Broker: Making Sense of Science in Policy and Politics. New York: Cambridge Univ Press; 2007.

- 13. Corley EA, Scheufele DA, Hu Q. Of risks and regulations: How leading U.S. nanoscientists form policy stances about nanotechnology. J Nanopart Res. 2009;11(7):1573–1585. doi: 10.1007/s11051-009-9671-5.

- 14. Weingart P. Science and the media. Res Policy. 1998;27(8):869–879.

- 15. Peters HP, et al. Science communication: Interactions with the mass media. Science. 2008;321(5886):204–205. doi: 10.1126/science.1157780.

- 16. Nisbet MC, Brossard D, Kroepsch A. Framing science: The stem cell controversy in an age of press/politics. Harv Int J Press Polit. 2003;8(2):36–70.

- 17. Nisbet MC, Huge M. Attention cycles and frames in the plant biotechnology debate: Managing power and participation through the press/policy connection. Harv Int J Press Polit. 2006;11(2):3–40.

- 18.Roco MC, Bainbridge WS. Converging Technologies for Improving Human Performance. Dordrecht, The Netherlands: Kluwer Academic Publishers; 2003. [Google Scholar]

- 19.Kohlstedt SG, Sokal M, Lewenstein BV. The Establishment of Science in America: 150 Years of the American Association for the Advancement of Science. New Brunswick, NJ: Rutgers Univ Press; 1999. [Google Scholar]

- 20.Nirenberg MW. Will society be prepared? Science. 1967;157(3789):633. doi: 10.1126/science.157.3789.633. [DOI] [PubMed] [Google Scholar]

- 21.Khushf G. An ethic for enhancing human performance through integrative technologies. In: Bainbridge WS, Roco MC, editors. Managing Nano-Bio-Info-Cogno Innovations: Converging Technologies in Society. Dordrecht, The Netherlands: Springer; 2006. pp. 255–278. [Google Scholar]

- 22.Gibson DG, et al. Creation of a bacterial cell controlled by a chemically synthesized genome. Science. 2010;329(5987):52–56. doi: 10.1126/science.1190719. [DOI] [PubMed] [Google Scholar]

- 23.Wren K. 2010. Science: Researchers are the first to “boot up” a bacterial cell with a synthetic genome. AAAS News. Available at www.aaas.org/news/science-researchers-are-first-%E2%80%9Cboot-up%E2%80%9D-bacterial-cell-synthetic-genome. Accessed April 20, 2014.

- 24.Berg P, Baltimore D, Brenner S, Roblin RO, 3rd, Singer MF. Asilomar conference on recombinant DNA molecules. Science. 1975;188(4192):991–994. doi: 10.1126/science.1056638. [DOI] [PubMed] [Google Scholar]

- 25.Powledge TM, Dach L, editors. Biomedical Research and the Public: Report to the Subcommittee on Health and Scientific Research, Committee on Human Resources, U.S. Senate. Washington, DC: U.S. Government Printing Office; 1977. [Google Scholar]

- 26.Luria S. Reflections on democracy, science, and cancer. Bull Am Acad Arts Sci. 1977;30(5):20–32. [Google Scholar]

- 27.Cicerone RJ. Celebrating and rethinking science communication. Focus. 2006;6(3):3. [Google Scholar]

- 28.Cornwall A. Democratising Engagement: What the UK Can Learn from International Experience. London: Demos; 2008. [Google Scholar]

- 29.Lefevre RJ. Letter to House Speaker Nancy Pelosi. Washington, DC: 2008. Available at www.ieeeusa.org/policy/policy/2008/051908c.pdf. Accessed April 20, 2014. [Google Scholar]

- 30.UK House of Lords 2000. Select Committee on Science and Technology: Third Report. Available at www.publications.parliament.uk/pa/ld199900/ldselect/ldsctech/38/3801.htm. Accessed December 14, 2010.

- 31. 21st Century Nanotechnology Research and Development Act, 15 USC §7501 (2003)

- 32.Dietz T. Bringing values and deliberation to science communication. Proc Natl Acad Sci USA. 2013;110(Suppl 3):14081–14087. doi: 10.1073/pnas.1212740110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Kleinman DL, Delborne JA, Anderson AA. Engaging citizens. Public Underst Sci. 2011;20(2):221–240. [Google Scholar]

- 34.Kurath M, Gisler P. Informing, involving or engaging? Science communication, in the ages of atom-, bio- and nanotechnology. Public Underst Sci. 2009;18(5):559–573. doi: 10.1177/0963662509104723. [DOI] [PubMed] [Google Scholar]

- 35.Rowe G, Frewer LJ. A typology of public engagement mechanisms. Sci Technol Human Values. 2005;30(2):251–290. [Google Scholar]

- 36.Scheufele DA. 2011. Modern citizenship or policy dead end? Evaluating the need for public participation in science policy making, and why public meetings may not be the answer. Joan Shorenstein Center on the Press, Politics and Public Policy Research Paper Series (Harvard Univ Press, Cambridge, MA), paper R-34.

- 37.Corley EA, Scheufele DA. Outreach gone wrong? When we talk nano to the public, we are leaving behind key audiences. Scientist. 2010;24(1):22. [Google Scholar]

- 38.National Science Board 2014. Science and Engineering Indicators 2014 (National Science Foundation, Arlington, VA)

- 39.White DM. The 'gatekeeper': A case study in the selection of news. Journal Q. 1950;27(3):383–390. [Google Scholar]

- 40.Shoemaker PJ, Reese SD. Mediating the Message: Theories of Influences on Mass Media Content. 2nd Ed. White Plains, NY: Longman; 1996. [Google Scholar]

- 41.Siebert FS, Peterson T, Schramm W. Four Theories of the Press. Urbana, IL: Univ of Illinois Press; 1956. [Google Scholar]

- 42.Boykoff MT. Flogging a dead norm? Newspaper coverage of anthropogenic climate change in the United States and United Kingdom from 2003 to 2006. Area. 2007;39(2):470–481. [Google Scholar]

- 43.Boykoff MT, Boykoff JM. Balance as bias: Global warming and the US prestige press. Glob Environ Change. 2004;14(2):125–136. [Google Scholar]

- 44.Molotch H, Lester M. News as purposive behavior: On the strategic use of routine events, accidents, and scandals. Am Sociol Rev. 1974;39(1):101–112. [Google Scholar]

- 45.Cobb RW, Elder C. The politics of agenda-building: An alternative perspective for modern democratic theory. J Polit. 1971;33:892–915. [Google Scholar]

- 46.Dudo AD, Dunwoody S, Scheufele DA. The emergence of nano news: Tracking thematic trends and changes in U.S. newspaper coverage of nanotechnology. Journalism Mass Comm. 2011;88(1):55–75. [Google Scholar]

- 47.Berkowitz D. Who sets the media agenda? The ability of policymakers to determine news decisions. In: Kennamer JD, editor. Public Opinion, the Press, and Public Policy. Westport, CT: Praeger; 1992. pp. 81–102. [Google Scholar]

- 48.Brossard D. New media landscapes and the science information consumer. Proc Natl Acad Sci USA. 2013;110(Suppl 3):14096–14101. doi: 10.1073/pnas.1212744110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Brossard D, Scheufele DA. Social science: Science, new media, and the public. Science. 2013;339(6115):40–41. doi: 10.1126/science.1232329. [DOI] [PubMed] [Google Scholar]

- 50.Ladwig P, Anderson AA, Brossard D, Scheufele DA, Shaw B. Narrowing the nano discourse? Mater Today. 2010;13(5):52–54. [Google Scholar]

- 51.Peters JW. July 5, 2010. At Yahoo, using searches to steer news coverage. NY Times, p B1.

- 52.Kosicki GM. Problems and opportunities in agenda-setting research. J Commun. 1993;43(2):100–127. [Google Scholar]

- 53. Iyengar S, Kinder DR. News That Matters: Television and American Opinion. Chicago: Univ of Chicago Press; 1987.

- 54. Collins AM, Loftus EF. A spreading-activation theory of semantic processing. Psychol Rev. 1975;82:407–428.

- 55. Hastie R, Park B. The relationship between memory and judgment depends on whether the task is memory-based or on-line. Psychol Rev. 1986;93:258–268.

- 56. Scheufele DA. Agenda-setting, priming, and framing revisited: Another look at cognitive effects of political communication. Mass Commun Soc. 2000;3(2-3):297–316.

- 57. Scheufele DA, Tewksbury D. Framing, agenda setting, and priming: The evolution of three media effects models. J Commun. 2007;57(1):9–20.

- 58. Tewksbury D, Scheufele DA. News framing theory and research. In: Bryant J, Oliver MB, editors. Media Effects: Advances in Theory and Research. 3rd Ed. Hillsdale, NJ: Erlbaum; 2009. pp. 17–33.

- 59. Zaller J, Feldman S. A simple theory of survey response: Answering questions versus revealing preferences. Am J Pol Sci. 1992;36(3):579–616.

- 60. Hamilton LC, Stampone MD. Blowin’ in the wind: Short-term weather and belief in anthropogenic climate change. Weather Clim Soc. 2013;5(2):112–119.

- 61. Zaller J. The Nature and Origins of Mass Opinion. New York, NY: Cambridge Univ Press; 1992.

- 62. Price V, Tewksbury D. News values and public opinion: A theoretical account of media priming and framing. In: Barnett GA, Boster FJ, editors. Progress in Communication Sciences: Advances in Persuasion. Vol 13. Greenwich, CT: Ablex; 1997. pp. 173–212.

- 63. Nisbet MC, Scheufele DA. What’s next for science communication? Promising directions and lingering distractions. Am J Bot. 2009;96(10):1767–1778. doi: 10.3732/ajb.0900041.

- 64. Nisbet MC, Scheufele DA. The future of public engagement. Scientist. 2007;21(10):38–44.

- 65. Asch SE. Studies of independence and conformity. I. A minority of one against a unanimous majority. Psychol Monogr. 1956;70(9):1–70.

- 66. Noelle-Neumann E. The spiral of silence: A theory of public opinion. J Commun. 1974;24(2):43–51.

- 67. Scheufele DA, Moy P. Twenty-five years of the spiral of silence: A conceptual review and empirical outlook. Int J Public Opin Res. 2000;12(1):3–28.

- 68. Glynn CJ, Hayes AF, Shanahan J. Perceived support for one's opinions and willingness to speak out: A meta-analysis of survey studies on the "spiral of silence." Public Opin Q. 1997;61(3):452–463.

- 69. Scheufele DA, Shanahan J, Lee E. Real talk: Manipulating the dependent variable in spiral of silence research. Communic Res. 2001;28(3):304–324.

- 70. Cacciatore MA, Scheufele DA, Corley EA. From enabling technology to applications: The evolution of risk perceptions about nanotechnology. Public Underst Sci. 2011;20(3):385–404.

- 71. Bauer MW. Controversial medical and agri-food biotechnology: A cultivation analysis. Public Underst Sci. 2002;11(2):93–111. doi: 10.1088/0963-6625/11/2/301.

- 72. Asch SE. Opinions and social pressure. Sci Am. 1955;193(5):31–35.

- 73. Brossard D, Lewenstein B. A critical appraisal of models of public understanding of science: Using practice to inform theory. In: Kahlor L, Stout P, editors. Understanding Science: New Agendas in Science Communication. New York: Routledge; 2009. pp. 11–39.

- 74. Druckman JN. The politics of motivation. Crit Rev. 2012;24(2):199–216.

- 75. Kunda Z. The case for motivated reasoning. Psychol Bull. 1990;108(3):480–498. doi: 10.1037/0033-2909.108.3.480.

- 76. Brossard D, Scheufele DA, Kim E, Lewenstein BV. Religiosity as a perceptual filter: Examining processes of opinion formation about nanotechnology. Public Underst Sci. 2009;18(5):546–558.

- 77. Kahan DM, Braman D, Slovic P, Gastil J, Cohen G. Cultural cognition of the risks and benefits of nanotechnology. Nat Nanotechnol. 2009;4(2):87–90. doi: 10.1038/nnano.2008.341.

- 78. Cacciatore MA, Binder AR, Scheufele DA, Shaw BR. Public attitudes toward biofuels: Effects of knowledge, political partisanship, and media use. Politics Life Sci. 2012;31(1-2):36–51. doi: 10.2990/31_1-2_36.

- 79. Tetlock PE. Accountability and complexity of thought. J Pers Soc Psychol. 1983;45(1):74–83.

- 80. Scheufele DA, Hardy BW, Brossard D, Waismel-Manor IS, Nisbet E. Democracy based on difference: Examining the links between structural heterogeneity, heterogeneity of discussion networks, and democratic citizenship. J Commun. 2006;56(4):728–753.

- 81. Scheufele DA, Nisbet MC, Brossard D, Nisbet EC. Social structure and citizenship: Examining the impacts of social setting, network heterogeneity, and informational variables on political participation. Polit Commun. 2004;21(3):315–338.

- 82. Xenos MA, Becker AB, Anderson AA, Brossard D, Scheufele DA. Stimulating upstream engagement: An experimental study of nanotechnology information seeking. Soc Sci Q. 2011;92(5):1191–1214.