Abstract

Algorithms for Markov boundary discovery from data constitute an important recent development in machine learning, primarily because they offer a principled solution to the variable/feature selection problem and give insight on local causal structure. Over the last decade many sound algorithms have been proposed to identify a single Markov boundary of the response variable. Even though faithful distributions and, more broadly, distributions that satisfy the intersection property always have a single Markov boundary, other distributions/data sets may have multiple Markov boundaries of the response variable. The latter distributions/data sets are common in practical data-analytic applications, and there are several reasons why it is important to induce multiple Markov boundaries from such data. However, there are currently no sound and efficient algorithms that can accomplish this task. This paper describes a family of algorithms TIE* that can discover all Markov boundaries in a distribution. The broad applicability as well as efficiency of the new algorithmic family is demonstrated in an extensive benchmarking study that involved comparison with 26 state-of-the-art algorithms/variants in 15 data sets from a diversity of application domains.

Keywords: Markov boundary discovery, variable/feature selection, information equivalence, violations of faithfulness

1. Introduction

The problem of variable/feature selection is of fundamental importance in machine learning, especially when it comes to analysis, modeling, and discovery from high-dimensional data sets (Guyon and Elisseeff, 2003; Kohavi and John, 1997). In addition to the promise of cost effectiveness (as a result of reducing the number of observed variables), two major goals of variable selection are to improve the predictive performance of classification/regression models and to provide a better understanding of the data-generative process (Guyon and Elisseeff, 2003). An emerging class of filter algorithms proposes solution of the variable selection problem by identification of a Markov boundary of the response variable of interest (Aliferis et al., 2010a, 2003a; Mani and Cooper, 2004; Peña et al., 2007; Tsamardinos and Aliferis, 2003; Tsamardinos et al., 2003a,b). The Markov boundary M is a minimal set of variables conditioned on which all the remaining variables in the data set, excluding the response variable T, are rendered statistically independent of the response variable T. Under certain assumptions about the learner and the loss function, Markov boundary is the solution of the variable selection problem (Tsamardinos and Aliferis, 2003), that is, it is the minimal set of variables with optimal predictive performance for the current distribution and response variable. Furthermore, in faithful distributions, Markov boundary corresponds to a local causal neighborhood of the response variable and consists of all its direct causes, effects, and causes of the direct effects (Neapolitan, 2004; Tsamardinos and Aliferis, 2003).

An important theoretical result states that if the distribution satisfies the intersection property (which is defined in Section 2.2), then it is guaranteed to have a unique Markov boundary of the response variable (Pearl, 1988). Faithful distributions, which constitute a subclass of distributions that satisfy the intersection property, also have a unique Markov boundary (Neapolitan, 2004; Tsamardinos and Aliferis, 2003). However, some real-life distributions contain multiple Markov boundaries and thus violate the intersection property and faithfulness condition. For example, a phenomenon ubiquitous in analysis of high-throughput molecular data, known as the “multiplicity” of molecular signatures (i.e., different gene/biomarker sets perform equally well in terms of predictive accuracy of phenotypes) suggests existence of multiple Markov boundaries in these distributions (Dougherty and Brun, 2006; Somorjai et al., 2003; Aliferis et al., 2010a). Likewise, many engineering systems such as digital circuits and engines typically contain deterministic components and thus can lead to multiple Markov boundaries (Gopnik and Schulz, 2007; Lemeire, 2007).

Related to the above, a distinguished statistician, the late Professor Leo Breiman, in his seminal work (Breiman, 2001) coined the term “Rashomon effect” that describes the phenomenon of multiple different predictive models that fit the data equally well. Breiman emphasized that “multiplicity problem and its effect on conclusions drawn from models needs serious attention” (Breiman, 2001).

There are at least three practical benefits of algorithms that could systematically discover from data multiple Markov boundaries of the response variable of interest:

First, such algorithms would improve discovery of the underlying mechanisms by not missing causative variables. For example, if a causal Bayesian network with the graph X ← Y → T → Z is parameterized such that variables X and Y contain equivalent information about T (see section 2.3 and the work by Lemeire, 2007), then there are two Markov boundaries of T: {X, Z} and {Y, Z}. If an algorithm discovers only a single Markov boundary {X, Z}, then it would miss the directly causative variable Y.

Second, such algorithms can be useful in exploring alternative cost-effective but equally predictive solutions in cases where different variables may have different costs associated with their acquisition. For example, some variables may correspond to cheaper and safer medical tests, while other equally predictive variables may correspond to more expensive and/or potentially unsafe tests. The American College of Radiology maintains Appropriateness Criteria for Diagnostic Imaging (http://www.acr.org/Quality-Safety/Appropriateness-Criteria/) that list diagnostic protocols (sets of radiographic procedures/variables) with the same sensitivity and specificity (i.e., these protocols can be thought of Markov boundaries of the diagnostic response variable) but different cost and radiation exposure level. Algorithms for induction of multiple Markov boundaries can be helpful for de-novo identification of such protocols from patient data.

Third, such algorithms would shed light on the predictor multiplicity phenomenon and how it affects the reproducibility of predictors. For example, in the domain of high-throughput molecular analytics, induction of multiple Markov boundaries with subsequent validation in independent data would allow testing whether multiple and equally predictive molecular signatures are due to intrinsic information redundancy in the biological networks, small sample statistical indistinguishability of signatures, correlated measurement noise, normalization/data preprocessing steps, or other factors (Aliferis et al., 2010a).

Even though there are several well-developed algorithms for learning a single Markov boundary (Aliferis et al., 2010a, 2003a; Mani and Cooper, 2004; Peña et al., 2007; Tsamardinos and Aliferis, 2003; Tsamardinos et al., 2003a,b), little research has been done in development of algorithms for identification of multiple Markov boundaries. The most notable advances in the field are stochastic Markov boundary algorithms that involve running multiple times either a standard or approximate Markov boundary induction algorithm initialized with a random seed, for example, KIAMB (Peña et al., 2007), EGS-NCMIGS and EGS-CMIM (Liu et al., 2010b). Another approach exemplified in the EGSG algorithm (Liu et al., 2010) involves first grouping variables into multiple clusters such that each cluster (i) has variables that are similar to each other and (ii) contributes “unique” information about the response variable, and then randomly sampling a representative from each cluster for the output Markov boundaries. In genomics data analysis, researchers try to induce multiple variable sets (that sometimes approximate Markov boundaries) via application of a standard variable selection algorithm to resampled data, for example, bootstrap samples (Ein-Dor et al., 2005; Michiels et al., 2005; Roepman et al., 2006). Finally, other bioinformatics researchers proposed a multiple variable set selection algorithm that iteratively applies a standard variable selection algorithm after removing from the data all variables that participate in the previously discovered variable sets with optimal classification performance (Natsoulis et al., 2005). As we will see in Sections 3 and 5 of this paper, the above early approaches are either highly heuristic and/or cannot be practically used to induce multiple Markov boundaries in high-dimensional data sets with relatively small sample size.

To address the limitations of prior methods, this work presents an algorithmic family TIE* (which is an acronym for “Target Information Equivalence”) for multiple Markov boundary induction. TIE* is presented in the form of a generative algorithm and can be instantiated differently for different distributions. TIE* is sound and can be practically applied in typical data-analytic tasks. We have previously introduced in the bioinformatics domain a specific instantiation of TIE* for development of multiple molecular signatures of the phenotype using microarray gene expression data (Statnikov and Aliferis, 2010a). The current paper significantly extends the earlier work for general machine learning use. This includes a detailed description of the generative algorithm, expanded theoretical and complexity analyses, various instantiations of the generative algorithm and its implementation details, and an extensive benchmarking study in 15 data sets from a diversity of application domains.

The remainder of this paper is organized as follows. Section 2 provides general theory and background. Section 3 lists prior algorithms for induction of multiple Markov boundaries and variable sets. Section 4 describes the TIE* generative algorithm, traces its execution, presents specific instantiations, proves algorithm correctness, and analyzes its computational complexity. This section also introduces a simpler and faster algorithm iTIE* for special distributions. Section 5 describes empirical experiments with the TIE* algorithm and comparison with prior methods in simulated and real data. The paper concludes with Section 6 that summarizes main findings, reiterates key principles of TIE* efficiency, demonstrates how the generative algorithm TIE* can be configured for optimal results, presents limitations of this study, and outlines directions for future research. The paper includes several appendices with additional details about our work: Appendix A proves theorems and lemmas; Appendix B presents parameterizations of example structures; Appendix C describes and performs theoretical analysis of prior algorithms for induction of multiple Markov boundaries and variable sets; Appendix D provides details about the TIE* algorithm and its implementations; Appendix E provides additional details about experiments with simulated and real data.

2. Background and Theory

This section provides general theory and background.

2.1 Notation and Key Definitions

In this paper upper-case letters in italics denote random variables (e.g., A, B, C) and lower-case letters in italics denote their values (e.g., a, b, c). Upper-case bold letters in italics denote random variable sets (e.g., X, Y, Z) and lower-case bold letters in italics denote their values (e.g., x, y, z). The terms “variables” and “vertices” are used interchangeably. If a graph contains an edge X → Y, then X is a parent of Y and Y is a child of X. A vertex X is a spouse of Y if they share a common child vertex. An undirected edge X – Y denotes an adjacency relation between X and Y (i.e., presence of an edge directly connecting X and Y). A path p is a set of consecutive edges (independent of the direction) without visiting a vertex more than once. A directed path p from X to Y is a set of consecutive edges with direction “→” connecting X with Y, that is, X → … → Y. X is an ancestor of Y (and Y is a descendant of X) if there exists a directed path p from X to Y. A directed cycle is a nonempty directed path that starts and ends on the same vertex X. Three classes of graphs are considered in this work: (i) directed graphs: graphs where vertices are connected only with edges “→”; (ii) directed acyclic graphs (DAGs): graphs without directed cycles and where vertices are connected only with edges “→”; and (iii) ancestral graphs: graphs without directed cycles and where vertices are connected with edges “→” or “↔” (an edge X ↔ Y implies that X is not an ancestor of Y and Y is not an ancestor of X).

When the two sets of variables X and Y are conditionally independent given a set of variables Z in the joint probability distribution , we denote this as X ⊥ Y | Z. For notational convenience, conditional dependence is defined as absence of conditional independence and denoted as X

Y | Z . Two sets of variables X and Y are considered independent and denoted as X ⊥ Y , when X and Y are conditionally independent given an empty set of variables. Similarly, the dependence of X and Y is defined and denoted as X

Y | Z . Two sets of variables X and Y are considered independent and denoted as X ⊥ Y , when X and Y are conditionally independent given an empty set of variables. Similarly, the dependence of X and Y is defined and denoted as X

Y.

Y.

We further refer the readers to the work by Glymour and Copper (1991), Neapolitan (2004), Pearl (2009) and Spirtes et al. (2000) to review the standard definitions of collider, blocked path, d-separation, m-separation, Bayesian network, causation, direct/indirect causation, and causal Bayesian network that are used in this work. Below we state several essential definitions:

Definition 1 Local Markov condition: The joint probability distribution over variables V satisfies the local Markov condition for a directed acyclic graph (DAG) if and only if for each W in V, W is conditionally independent of all variables in V excluding descendants of W given parents of W (Richardson and Spirtes, 1999).

Definition 2 Global Markov condition: The joint probability distribution over variables V satisfies the global Markov condition for a directed graph (ancestral graph) if and only if for any three disjoint subsets of variables X, Y, Z from V, if X is d-separated (m-separated) from Y given Z in then X is independent of Y given Z in (Richardson and Spirtes, 1999, 2002).

It follows that if the underlying graph is a DAG, then the global Markov condition is equivalent to the local Markov condition (Richardson and Spirtes, 1999).

Finally, we provide several definitions of the faithfulness condition. This condition is fundamental for causal discovery and Markov boundary induction algorithms.

Definition 3 DAG-faithfulness: If all and only the conditional independence relations that are true in defined over variables V are entailed by the local Markov condition applied to a DAG , then and are DAG-faithful to one another (Spirtes et al., 2000).

The following definition extends DAG-faithfulness to any directed or ancestral graphs:

Definition 4 Graph-faithfulness: If all and only the conditional independence relations that are true in defined over variables V are entailed by the global Markov condition applied to a directed or ancestral graph , then and are graph-faithful to one another.

A relaxed version of the standard faithfulness assumption is given in the following definition:

Definition 5 Adjacency faithfulness: Given a directed or ancestral graph and a joint probability distribution defined over variables V, and are adjacency faithful to one another if every adjacency relation between X and Y in implies that X and Y are conditionally dependent given any subset of V \ {X, Y} in (Ramsey et al., 2006).

The adjacency faithfulness assumption can be relaxed to focus on the specific response variable of interest:

Definition 6 Local adjacency faithfulness: Given a directed or ancestral graph and a joint probability distribution defined over variables V, and are locally adjacency faithful with respect to T if every adjacency relation between T and X in implies that T and X are conditionally dependent given any subset of V \ {T, X} in

2.2 Basic Properties of Probability Distributions

The following theorem provides a set of useful tools for theoretical analysis of probability distributions and proofs of correctness of Markov boundary algorithms. It is stated similarly to the work by Peña et al. (2007) and its proof is given in the book by Pearl (1988).

Theorem 1 Let X, Y, Z, and W be any four subsets of variables from V.1 The following five properties hold in any joint probability distribution over variables V:

Symmetry: X ⊥ Y | Z ⇔ Y ⊥ X | Z,

Decomposition: X ⊥ (Y ∪ W) | Z ⇒ X ⊥ Y | Z and X ⊥ W | Z,

Weak union: X ⊥ (Y ∪ W) | Z ⇒ X ⊥ Y | (Z ∪ W),

Contraction: X ⊥ Y | Z and X ⊥ W | (Z ∪ Y) ⇒ X ⊥ (Y ∪ W) | Z,

Self-conditioning: X ⊥ Z | Z.

If is strictly positive, then in addition to the above five properties a sixth property holds:

Intersection: X ⊥ Y | (Z ∪ W) and X ⊥ W | (Z ∪ Y) ⇒ X ⊥ (Y ∪ W) | Z.

If is faithful to , then satisfies the above six properties and:

Composition: X ⊥ Y | Z and X ⊥ W | Z ⇒ X ⊥ (Y ∪ W) | Z.

The definition given below provides a relaxed version of the composition property that will be used later in the theoretical analysis of Markov boundary induction algorithms.

Definition 7 Local composition property: Let X, Y, Z be any three subsets of variables from V. The joint probability distribution over variables V satisfies the local composition property with respect to T if T ⊥ X|Z and T ⊥ Y |Z ⇒ T ⊥ (X ∪ Y)|Z.

2.3 Information Equivalence

In this subsection we review relevant information equivalence theory (Lemeire, 2007). We first formally define information equivalence that leads to violations of the intersection property and eliminates uniqueness of the Markov boundary (see next subsection). We then describe distributions that have information equivalence relations and point to a theoretical result that characterizes violations of the intersection property.

Definition 8 Equivalent information: Two subsets of variables X and Y from V contain equivalent information about a variable T iff the following conditions hold: T  X, T

X, T  Y, T ⊥ X | Y and T ⊥ Y | X.

Y, T ⊥ X | Y and T ⊥ Y | X.

It follows from the definition of equivalent information and the definition of mutual information (Cover and Thomas, 1991) that both X and Y contain the same information about T, that is, mutual information I(X, T) = I(Y,T) (Lemeire, 2007).

Information equivalences can result from deterministic relations. For example, if we consider a Bayesian network with the graph  that is parameterized such that X = AND(A, B) and T

that is parameterized such that X = AND(A, B) and T

X, then {X} and {A, B} contain equivalent information with respect to T according to the above definition. However, information equivalences follow from a broader class of relations than just deterministic ones (see Example 2 and Figure 1 in the next subsection). We thus define the notion of equivalent partition that was originally introduced in the work by Lemeire (2007). To do so we first provide the definition of T-partition:

X, then {X} and {A, B} contain equivalent information with respect to T according to the above definition. However, information equivalences follow from a broader class of relations than just deterministic ones (see Example 2 and Figure 1 in the next subsection). We thus define the notion of equivalent partition that was originally introduced in the work by Lemeire (2007). To do so we first provide the definition of T-partition:

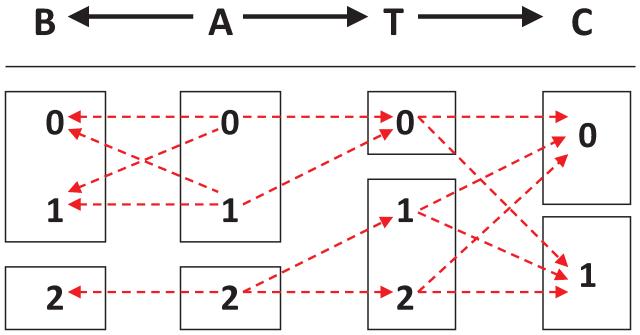

Figure 1.

Graph of a causal Bayesian network with four variables (top) and constraints on its parameterization (bottom). Variables A, B, T take three values {0,1,2}, and variable C takes two values {0,1}. Red dashed arrows denote non-zero conditional probabilities of each variable given its parents. For example, P(T = 0 | A = 1) ≠ 0, while P(T = 0 | A = 2) = 0.

Definition 9 T-partition: The domain of X, denoted by Xdom, can be partitioned into disjoint subsets for which P(T | x) is the same for all . We call this the T-partition of Xdom. We define κT(X) as the index of the subset of the partition.

Accordingly, the conditional distribution can be rewritten solely based on the index of T-partition, that is, P(T | X) = P(T | κT(X)).

Definition 10 Equivalent partition: A relation (where the “×” operator denotes the Cartesian product) defines an equivalent partition in Ydom to a partition of Xdom if:

for any x1 and x2 ∈ Xdom that do not belong to the same partition and for any y1 ∈ Ydom with , it must be that .

for all subsets of the partition, and ∃y1 ∈ Ydom such that .

In other words, for an equivalent partition, every partition corresponds to a partition . If an element of Ydom is related to an element of partition Xdom, then it is not related to an element of another partition, and each partition of Xdom has at least one element that is related to a partition of Ydom. An example of an equivalent partition is provided in Figure 1 in the next subsection.

In the following theorem the concept of equivalent partition is used to characterize violations of the intersection property; the proof of this theorem is given in the work by Lemeire (2007).

Theorem 2 If T

X and T ⊥ Y | X then T ⊥ X | Y if and only if the relation defined by P(x,y) > 0 with x∈ Xdom and y ∈ Ydom defines an equivalent partition in Ydom to the T-partition of Xdom.

X and T ⊥ Y | X then T ⊥ X | Y if and only if the relation defined by P(x,y) > 0 with x∈ Xdom and y ∈ Ydom defines an equivalent partition in Ydom to the T-partition of Xdom.

It is worthwhile to mention that the above definitions of T-partition, equivalent partition, and Theorem 2 can be trivially extended to sets of variables instead of individual variables X and Y.

Next we provide two more definitions of equivalent information that take into consideration values of other variables and also lead to violations of the intersection property.

Definition 11 Conditional equivalent information: Two subsets of variables X and Y from V contain equivalent information about a variable T conditioned on a non-empty subset of variables W iff the following conditions hold T

X | W, T

X | W, T

Y | W, T ⊥ X | (Y ∪ W), and T ⊥ Y | (X ∪ W).

Y | W, T ⊥ X | (Y ∪ W), and T ⊥ Y | (X ∪ W).

Definition 12 Context-independent equivalent information: Two subsets of variables X and Y from V contain context-independent equivalent information about a variable T iff X and Y contain equivalent information about T conditioned on any subset of variables V \(X ∪ Y ∪ {T}).

Finally, we point out that, in general, equivalent information does not always imply context-independent equivalent information. However, equivalent information due to deterministic relations always implies context-independent equivalent information.

2.4 Markov Boundary Theory

In this subsection we first define the concepts of Markov blanket and Markov boundary and theoretically characterize distributions with multiple Markov boundaries of the same response variable. Then we provide examples of such distributions and demonstrate that the number of Markov boundaries can even be exponential in the number of variables in the underlying network. We also state and prove theoretical results that connect the concepts of Markov blanket and Markov boundary with the data-generative graph. Finally, we define optimal predictor and prove a theorem that links the concept of Markov blanket with optimal predictor.

Definition 13 Markov blanket: A Markov blanket M of the response variable T ∈ V in the joint probability distribution over variables V is a set of variables conditioned on which all other variables are independent of T, that is, for every X ∈ (V \M\{T}), T ⊥ X | M.

Trivially, the set of all variables V excluding T is a Markov blanket of T. Also one can take a small Markov blanket and produce a larger one by adding arbitrary (predictively redundant or irrelevant) variables. Hence, only minimal Markov blankets are of interest.

Definition 14 Markov boundary: If no proper subset of M satisfies the definition of Markov blanket of T, then M is called a Markov boundary of T.

The following theorem states a sufficient assumption for the uniqueness of Markov boundaries and its proof is given in the book by Pearl (1988).

Theorem 3 If a joint probability distribution over variables V satisfies the intersection property, then for each X ∈ V, there exists a unique Markov boundary of X.

Since every joint probability distribution that is faithful to satisfies the intersection property (Theorem 1), then there is a unique Markov boundary in such distributions according to Theorem 3. However, Theorem 3 does not guarantee that Markov boundaries will be unique in distributions that do not satisfy the intersection property. In fact, as we will see below, Markov boundaries may not be unique in such distributions.

The following two lemmas allow us to explicitly construct and verify multiple Markov blankets and Markov boundaries when the distribution violates the intersection property (proofs are given in Appendix A).

Lemma 1 If M is a Markov blanket of T that contains a set Y, and there is a subset of variables Z such that Z and Y contain context-independent equivalent information about T, then Mnew = (M\Y)∪Z is also a Markov blanket of T.

Lemma 2 If M is a Markov blanket of T and there exists a subset of variables Mnew ⊆ V \{T} such that T ⊥ M | Mnew, then Mnew is also a Markov blanket of T.

The above lemmas also hold when M is a Markov boundary and immediately imply that Mnew is a Markov boundary assuming minimality of this subset.

The following three examples provide graphical structures and related probability distributions where multiple Markov boundaries exist. Notably, these examples also demonstrate that multiple Markov boundaries can exist even in large samples. Thus it is not an exclusively small-sample phenomenon, as it was postulated by earlier research (Ein-Dor et al., 2005, 2006).

Example 1 Consider a joint probability distribution described by a Bayesian network with graph A → B → T where A, B, and T are binary random variables that take values {0,1}. Given the local Markov condition, the joint probability distribution can be defined as follows: P(A = 0) = 0.3, P(B=0 |A=1)=1.0, P(B=1 |A=0)=1.0, P(T=0 | B=1)=0.2, P(T=0 | B=0)=0.4. Two Markov boundaries of T exist in this distribution: {A} and {B}.

Example 2 Figure 1 shows a graph of a causal Bayesian network and constraints on its parameterization.2 As can be seen, there is an equivalent partition in the domain of A to the T-partition of the domain of B. The following hold in any joint probability distribution of a causal Bayesian network that satisfies the constraints in the figure:

A and B are not deterministically related, yet they contain equivalent information about T;

There are two Markov boundaries of T ({A, C} and {B, C});

If an algorithm selects only one Markov boundary of T (e.g., {B, C}), then there is danger to miss causative variables (i.e., direct cause A) and focus instead on confounded ones (i.e., B);

The union of all Markov boundaries of T includes all variables that are adjacent with T ({A, C}).

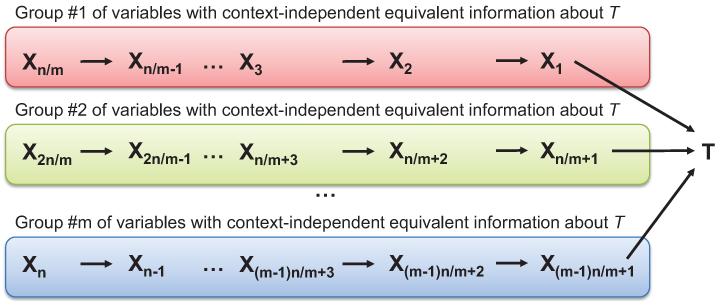

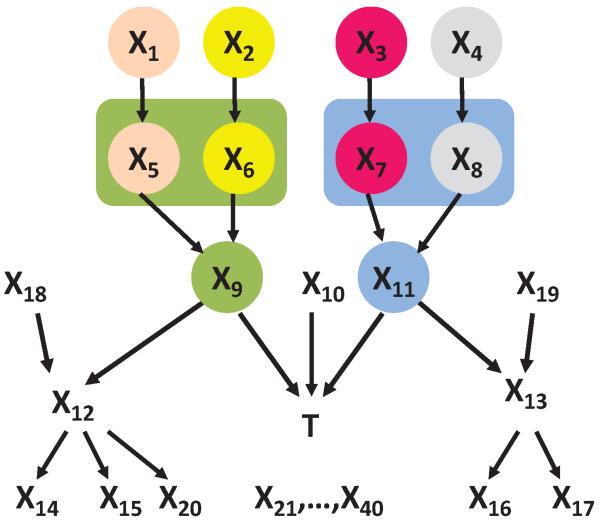

Example 3 Consider a Bayesian network shown in Figure 2. It involves n + 1 binary variables: X1,X2,…,Xn and a response variable T. Variables Xi can be divided into m groups such that any two variables in a group contain context-independent equivalent information about T. Assume that n is divisible by m. Since there are n/m variables in each group, the total number of Markov boundaries is (n/m)m. Now assume that k = n/m. Then the total number of Markov boundaries is km. Since k > 1 and m = O(n), it follows that the number of Markov boundaries grows exponentially in the number of variables in this example.

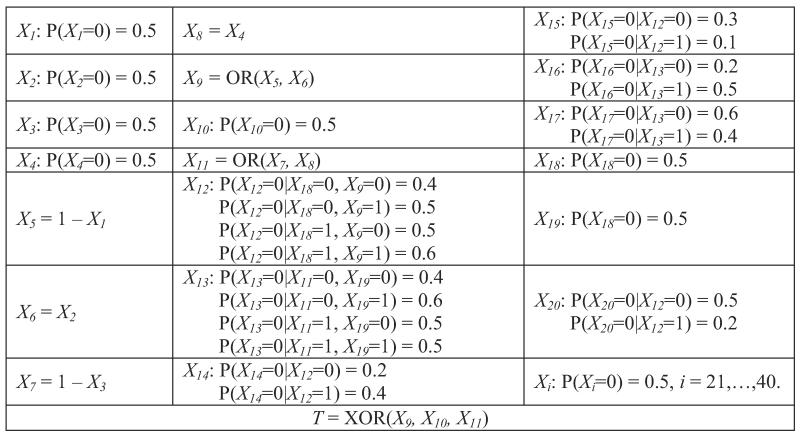

Figure 2.

Graph of a Bayesian network used to demonstrate that the number of Markov boundaries can be exponential in the number of variables in the network. The network parameterization is provided in Table 5 in Appendix B. The response variable is T. All variables take values {0,1}. All variables Xi in each group provide context-independent equivalent information about T.

Now we provide theoretical results that connect the concepts of Markov blanket and Markov boundary with the underlying causal graph. Theorem 4 was proved in the work by Neapolitan (2004) and Pearl (1988), Theorem 5 was proved in the work by Neapolitan (2004) and Tsamardinos and Aliferis (2003), and the proof of Theorem 6 is given in Appendix A.

Theorem 4 If a joint probability distribution satisfies the global Markov condition for directed graph , then the set of children, parents, and spouses of T is a Markov blanket of T.

Theorem 5 If a joint probability distribution is DAG-faithful to , then the set of children, parents, and spouses of T is a unique Markov boundary of T.

Theorem 6 If a joint probability distribution satisfies the global Markov condition for ancestral graph , then the set of children, parents, and spouses of T, and vertices connected with T or children of T by a bi-directed path (i.e., only with edges “↔”) and their respective parents is a Markov blanket of T.

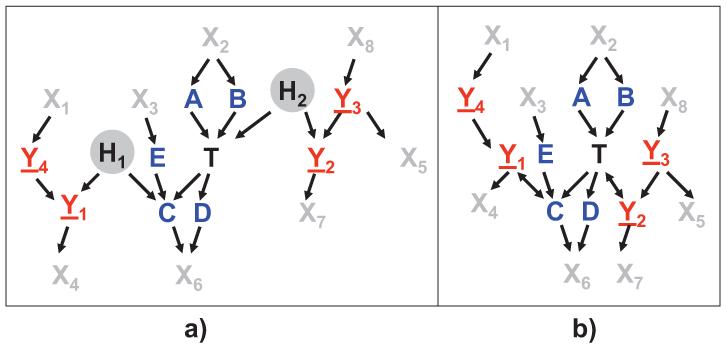

A graphical illustration of Theorem 6 is provided in Figure 3.

Figure 3.

Graphical illustration of a Markov blanket in an ancestral graph. a) Data-generative DAG, variables H1 and H2 are latent. b) Corresponding ancestral graph. The set of parents, children, and spouses of T are shown in blue. Vertices connected with T or children of T by a bi-directed path and their respective parents are shown in red and are underlined. If the global Markov condition holds for the graph and joint probability distribution, a Markov blanket of T consists of vertices shown in blue and red. All grey vertices will be then independent of T conditioned on the Markov blanket.

Definition 15 Optimal predictor: Given a data set (a sample from distribution ) for variables V, a learning algorithm , and a performance metric to assess learner's models, a variable set X ⊆ V \{T} is an optimal predictor of T if X maximizes the performance metric for predicting T using learner in the data set .

The following theorem links together the definitions of Markov blanket and optimal predictor, and its proof is given in Appendix A.

Theorem 7 If is a performance metric that is maximized only when P(T | V \{T}) is estimated accurately3 and is a learning algorithm that can approximate any conditional probability distribution,4 then M is a Markov blanket of T if and only if it is an optimal predictor of T.

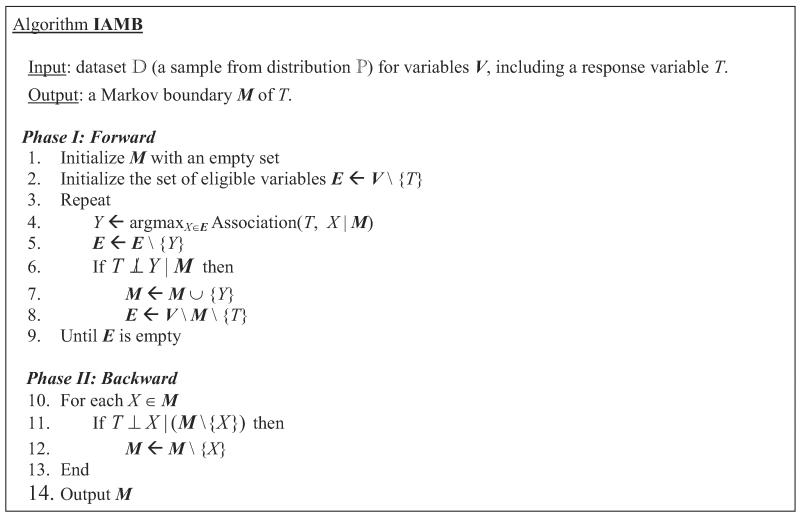

2.5 Prior Algorithms for Learning a Single Markov Boundary

The Markov boundary algorithm IAMB is described in Figure 4 (Tsamardinos and Aliferis, 2003; Tsamardinos et al., 2003a). Originally, this algorithm was proved to be correct (i.e., that it identifies a Markov boundary) if the joint probability distribution is DAG-faithful to . Then it was proved to be correct when the composition property holds (Peña et al., 2007). The following theorem further relaxes conditions sufficient for correctness of IAMB, requiring that only the local composition property holds; the proof is given in Appendix A.

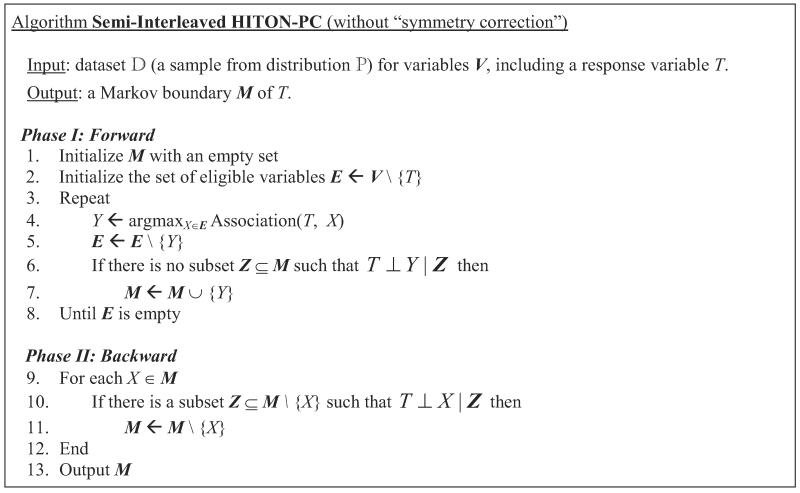

Figure 4.

IAMB algorithm.

Theorem 8 IAMB outputs a Markov boundary of T if the joint probability distribution satisfies the local composition property with respect to T.

Notice that IAMB identifies a Markov boundary of T by essentially implementing its definition and conditioning on the entire Markov boundary when testing variables for independence from the response T. Conditioning on the entire Markov boundary may become especially problematic in discrete data where the sample size required for high-confidence statistical tests of conditional independence grows exponentially in the size of the conditioning set. This in part motivated the development of the sample-efficient Markov boundary induction algorithmic family Generalized Local Learning, or GLL (Aliferis et al., 2010a). Figure 5 presents the Semi-Interleaved HITON-PC algorithm (Aliferis et al., 2010a), an instantiation of the GLL algorithmic family that we will use in the present paper. Originally, Semi-Interleaved HITON-PC was proved to correctly identify a set of parents and children of T in the Bayesian network if the joint probability distribution is DAG-faithful to and the so-called symmetry correction is not required (Aliferis et al., 2010a). The algorithm also retains its correctness for identification of a Markov boundary of T under more relaxed assumptions stated in Theorem 9 (proof is given in Appendix A).

Figure 5.

Semi-Interleaved HITON-PC algorithm (without “symmetry correction”), member of the Generalized Local Learning (GLL) algorithmic family. The algorithm is restated in a fashion similar to IAMB for ease of comparative understanding. Original pseudo-code is given in the work by Aliferis et al. (2010a).

Theorem 9 Semi-Interleaved HITON-PC outputs a Markov boundary of T if there is a Markov boundary of T in the joint probability distribution such that all its members are marginally dependent on T and are also conditionally dependent on T, except for violations of the intersection property that lead to context-independent information equivalence relations.

Theorem 9 can be also restated and proved using sufficient assumptions that are motivated by the common assumptions in the causal discovery literature: (i) the joint probability distribution and directed or ancestral graph are locally adjacency faithful with respect to T with the exception of violations of the intersection property that lead to context-independent information equivalence relations; (ii) satisfies the global Markov condition for ; (iii) the set of vertices adjacent with T in is a Markov blanket of T.

The proofs of correctness for the Markov boundary algorithms in Theorems 8 and 9 implicitly assume that the statistical decisions about dependence and independence are correct. This requirement is satisfied when the data set is a sufficiently large i.i.d. (independent and identically distributed) sample of the underlying probability distribution . When the sample size is small, the statistical tests of independence will incur type I and II errors. This may affect the correctness of the algorithms output Markov boundary.

In the empirical experiments of this work, we use Semi-Interleaved HITON-PC without “symmetry correction” as a primary method for Markov boundary induction because prior research has demonstrated empirical superiority of this algorithm compared to the version with the “symmetry correction”; the GLL-MB family of algorithms (including Semi-Interleaved HITON-MB) that can identify Markov boundary members that are non-adjacent spouses of T (and thus may be marginally independent with T); IAMB algorithms (Tsamardinos et al., 2003a); and other comparator Markov boundary induction methods (Aliferis et al., 2010a,b).

3. Prior Algorithms for Learning Multiple Markov Boundaries and Variable Sets

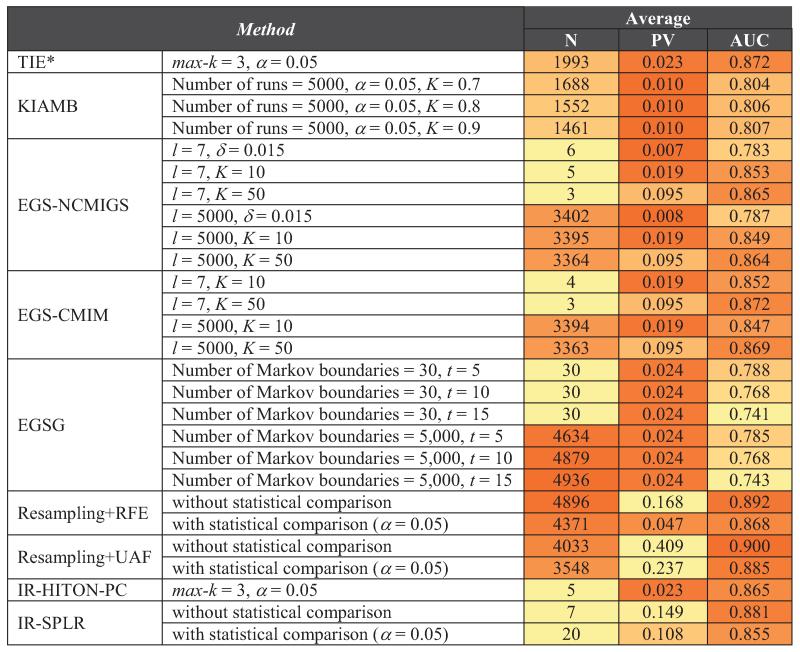

Table 1 summarizes the properties of prior algorithms for learning multiple Markov boundaries and variable sets, while a detailed description of the algorithms and their theoretical analysis is presented in Appendix C. As can be seen, there is no algorithm that is simultaneously theoretically correct, complete, computationally and sample efficient, and does not rely on extensive parameterization. This was our motivation for introducing the TIE* algorithmic family that is described in Section 4.

Table 1.

Prior algorithms for learning multiple Markov boundaries and variable sets. “+” means that the corresponding property is satisfied by a method, “−” means that the property is not satisfied, and “+/−” denotes cases where the property is satisfied under certain conditions.

| Markov boundary identification (assuming faithfulness except for violations of the intersection property) | Parameterization: does not require prior knowledge of | Computationally efficient | sample efficient | |||

|---|---|---|---|---|---|---|

| correct (identifies Markov boundaries) | complete (identifies all Markov boundaries) | the number of Markov boundaries/variable sets | the size of Markov boundaries/variable sets | |||

| KIAMB | + | + | − | + | − | − |

| EGS-CMIM | − | − | − | − | − | + |

| EGS-NCMIGS | − | − | − | +/− | − | + |

| EGSG | − | − | − | + | − | + |

| Resampling+RFE | − | − | − | + | − | + |

| Resampling+UAF | − | − | − | + | − | + |

| IR-HITON-PC | + | − | + | + | + | + |

| IR-SPLR | − | − | + | + | + | + |

We would like to note that not all algorithms listed in Table 1 are designed for identification of Markov boundaries; methods Resampling+RFE, Resampling+UAF, and IR-SPLR are designed for variable selection. However, sometimes variable sets output by these methods can approximate Markov boundaries, that is why we included these methods in our study (Aliferis et al., 2010a,b).

4. TIE*: A Family of Multiple Markov Boundary Induction Algorithms

In this section we present a generative anytime algorithm TIE* (which is an acronym for “Target Information Equivalence”) for learning from data all Markov boundaries of the response variable. This generative algorithm describes a family of related but not identical algorithms which can be seen as instantiations of the same broad algorithmic principles. We decided to state TIE* as a generative algorithm in order to facilitate a broader understanding of this methodology and devise formal conditions for correctness not only at the algorithm level but also at the level of algorithm family. The latter is achieved by specifying the general set of assumptions (admissibility rules) that apply to the generative algorithm and provide a set of flexible tools for constructing numerous algorithmic instantiations, each of which is guaranteed to be correct. This methodology thus significantly facilitates development of new correct algorithms for discovery of multiple Markov boundaries in various distributions.

4.1 Pseudo-Code and Trace

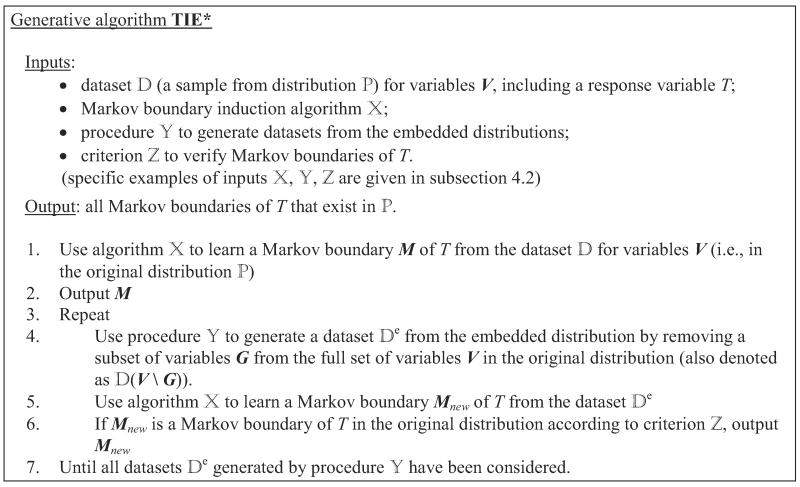

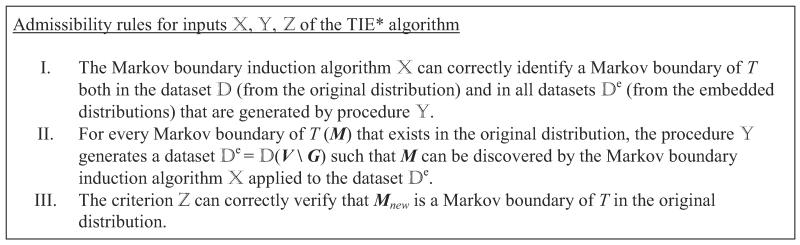

The pseudo-code of the TIE* generative algorithm is provided in Figure 6. On input TIE* receives (i) a data set (a sample from distribution ) for variables V, including a response variable T; (ii) a single Markov boundary induction algorithm ; (iii) a procedure to generate data sets from the so-called embedded distributions that are obtained by removing subsets of variables from the full set of variables V in the original distribution ; and (iv) a criterion to verify Markov boundaries of T. The inputs , , are selected to be suitable for the distribution at hand and should satisfy admissibility rules stated in Figure 7 for correctness of the algorithm (see next two subsections for details). The algorithm outputs all Markov boundaries of T that exist in the distribution .

Figure 6.

TIE* generative algorithm.

Figure 7.

Admissibility rules for inputs , , of the TIE* algorithm.

In step 1, TIE* uses a Markov boundary induction algorithm to learn a Markov boundary M of T from the data set for variables V (i.e., in the original distribution). Then M is output in step 2. In step 4, the algorithm uses a procedure to generate a data set that spans over a subset of variables that participate in . The motivation is that may lead to identification of a new Markov boundary of T that was previously “invisible” to a single Markov boundary induction algorithm because it was “masked” by another Markov boundary of T. Next, in step 5 the Markov boundary algorithm is applied to , resulting in a Markov boundary Mnew in the embedded distribution. If Mnew is also a Markov boundary of T in the original distribution according to criterion , then Mnew is output (step 6). The loop in steps 3–7 is repeated until all data sets generated by procedure have been considered.

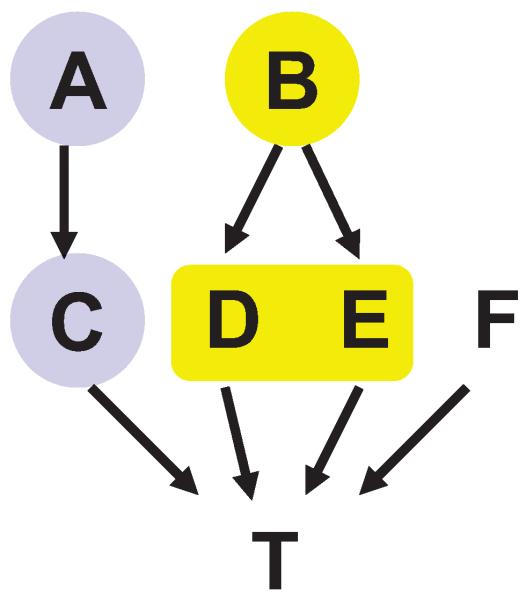

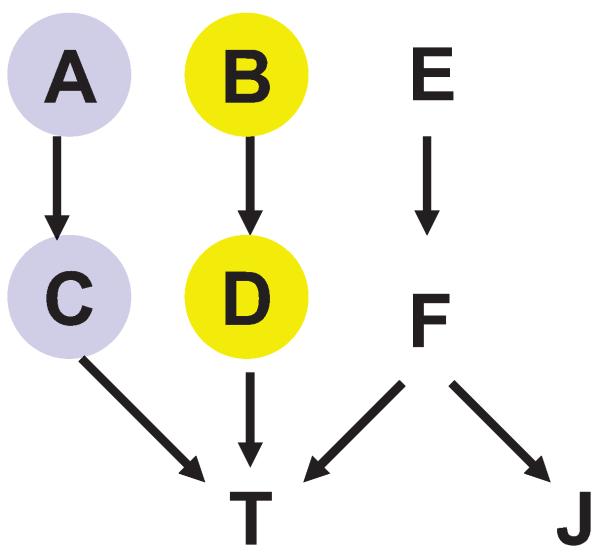

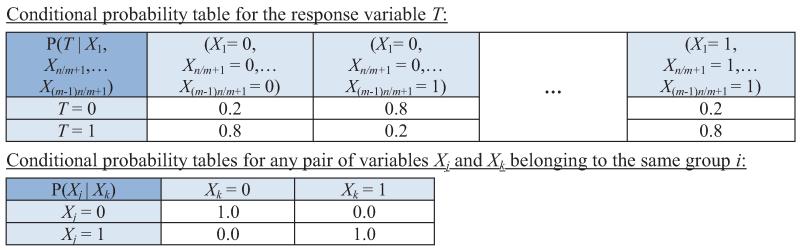

Next we provide a high-level trace of the algorithm. Consider running an instance of the TIE* algorithm with admissible inputs , , implemented by an oracle in the data set generated from the example causal Bayesian network shown in Figure 8.5 The response variable T is directly caused by C, D, E, and F. The underlying distribution is such that variables A and C contain equivalent information about T; likewise two variables {D,E} jointly and a single variable B contain equivalent information about T. In step 1 of TIE* (Figure 6), a Markov boundary induction algorithm is applied to learn a Markov boundary of T, resulting in M = {A,B,F}. Then M is output in step 2. In step 4, a procedure considers removing G = {F} and generates a data set for variables V \ G. Then in step 5 the Markov boundary induction algorithm is run on the data set . This yields a Markov boundary of T in the embedded distribution Mnew = {A,B}. The criterion in step 6 does not confirm that Mnew is also Markov boundary of T in the original distribution; thus Mnew is not output. The loop is run again. In step 4 the procedure considers removing G = {A} and generates a data set for variables V \ G. The Markov boundary induction algorithm in step 5 yields a Markov boundary of T in the embedded distribution Mnew = {C,B,F}. The criterion in step 6 confirms that Mnew is also a Markov boundary in the original distribution, thus it is returned. Similarly, when the Markov boundary induction algorithm is run on the data set where G = {B} or G = {A,B}, two additional Markov boundaries of T in the original distribution, {A,D,E,F} or {C,D,E,F}, respectively, are found and output. The algorithm terminates shortly. In total, four Markov boundaries of T are output by the algorithm: {A,B,F}, {C,B,F}, {A,D,E,F} and {C,D,E,F}. These are exactly all Markov boundaries of T that exist in the distribution.

Figure 8.

Graph of a causal Bayesian network used to trace the TIE* algorithm. The network parameterization is provided in Table 6 in Appendix B. The response variable is T. All variables take values {0,1} except for B that takes values {0,1,2,3}. Variables A and C contain equivalent information about T and are highlighted with the same color. Likewise, two variables {D,E} jointly and a single variable B contain equivalent information about T and thus are also highlighted with the same color.

4.2 Specific Instantiations

In this subsection we give several specific instantiations of the generative algorithm TIE* (Figure 6) and in the next subsection we prove their admissibility (i.e., that they satisfy rules stated in Figure 7). An instantiation of TIE* is specified by assigning its inputs , , to well-defined algorithms.

Input : This is a Markov boundary induction algorithm. For example, we can use IAMB (Figure 4) or Semi-Interleaved HITON-PC (Figure 5) algorithms that were described in Section 2.5. Other sound Markov boundary induction algorithms can be used as well (Aliferis et al., 2010a, 2003a; Mani and Cooper, 2004; Peña et al., 2007; Tsamardinos and Aliferis, 2003; Tsamardinos et al., 2003a,b).

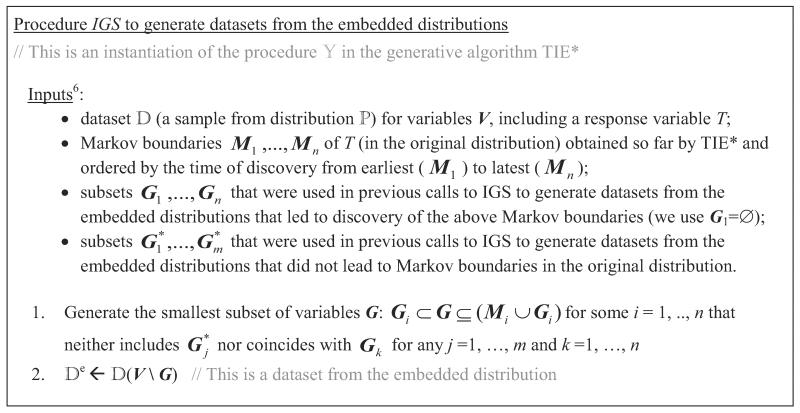

Input : This is a procedure to generate data sets from the embedded distributions that would allow identification of new Markov boundaries of T. Before we give specific examples of this procedure, it is worthwhile to understand its use in TIE*. The main principle of TIE* is to first identify a Markov boundary of T in the original distribution and then iteratively run a Markov boundary induction algorithm in data sets from the embedded distributions (that are obtained by removing subsets of variables from M) in order to identify new Markov boundaries in the original distribution. Generating such data sets from the embedded distributions is the purpose of procedure . The reason why we need to remove subsets of variables from the original data and rerun Markov boundary induction algorithm in the data set is because some variables “mask” Markov boundaries during operation of conventional single Markov boundary induction algorithms by rendering some of the Markov boundary members conditionally independent of T. One possible approach is to generate data sets by removing subsets of the original Markov boundary, or, more broadly, subsets from all currently identified Markov boundaries. The procedure termed IGS (which is an acronym for “Incremental Generation of Subsets”) implements the above stated approach and is described in Figure 9.6

Figure 9.

Procedure IGS () to generate data sets from the embedded distributions Note that IGS is a procedure (not a function), and we assume that and G are accessible in the scope of TIE*.

Below and in Table 2 we revisit the trace of TIE* that was given in the previous subsection, now focusing on the operation of the procedure IGS () from Figure 9. Recall that application of the Markov boundary induction algorithm in step 1 of TIE* resulted in M = {A,B,F}. In step 4 of TIE*, the procedure IGS can generate data sets from the embedded distributions by removing any of the three possible subsets G = {A} or {B} or {F} from V (it will not consider larger subsets because of the requirement of the smallest subset size in step 1 of IGS, see Figure 9). Recall that next we considered a data set obtained by removing G = {F} and identified via algorithm a Markov boundary in the embedded distribution Mnew ={A,B} that did not turn out to be a Markov boundary in the original distribution. When the procedure IGS is executed in the following iterations of steps 3–7, it will never generate data set without {F} because and we require that G does not include for j = 1,…,m. In the next iteration, IGS can generate two possible data sets by removing G = {A} or {B} from V. In order to be consistent with our previous trace, assume that the procedure IGS output a data set obtained by removing G = {A} which led to identification of a new Markov boundary both in the original and embedded distribution Mnew={C,B,F}. When the procedure IGS is executed in the next iteration, it will generate a data set by removing a subset G = {B} from V (all other subsets will have two or more variables and thus will not be considered). This would lead to identification of a new Markov boundary both in the original and embedded distribution Mnew ={A,D,E,F}. When the procedure IGS is executed in the next iteration, it can generate data sets by removing G ={A,B} or{A,C} or {B,D} or {B,E} from V. Assume that the procedure generated a data set by removing G ={A,B}, which would lead to identification of a new Markov boundary both in the original and embedded distribution Mnew ={C,D,E,F}. Several more iterations will follow, but no new Markov boundaries in the original distribution will be identified (see Table 2 for one more iteration), and TIE* will terminate.

Table 2.

Part of the trace of TIE*, focusing on operation of the procedure .

|

As it follows from the above example, we may have several possibilities for the subset G (and thus for defining a data set ) in the procedure IGS and we need to define rules in order to select a single subset. We therefore provide three specific implementations of the procedure IGS:

IGS-Lex (“Lex” stands for “lexicographical”): Procedure IGS from Figure 9 where one chooses a subset G with the smallest lexicographical order of its variables;

IGS-MinAssoc (“MinAssoc” stands for “minimal association”): Procedure IGS from Figure 9 where one chooses a subset G with the smallest association with the response variable T;

IGS-MaxAssoc (“MaxAssoc” stands for “maximal association”): Procedure IGS from Figure 9 where one chooses a subset G with the largest association with the response variable T.

The above three instantiations of the procedure IGS may lead to different traces of the TIE* algorithm, however the final output of the algorithm will be the same (it will discover all Markov boundaries of T).

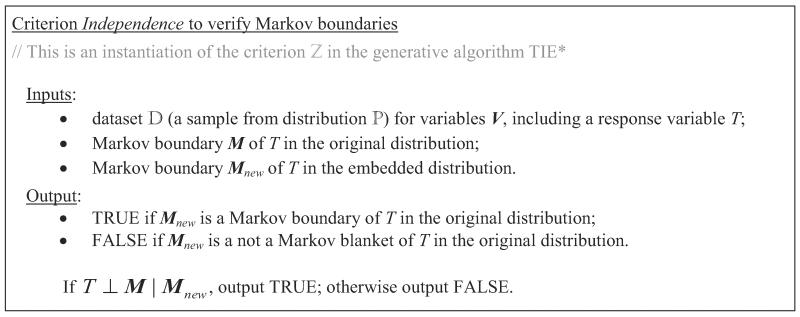

Input : This is a criterion that can verify whether Mnew, a Markov boundary in the embedded distribution (that was found by application of the Markov boundary induction algorithm in step 5 of TIE* to the data set ) is also a Markov boundary in the original distribution. In other words, it is a criterion to verify the Markov boundary property of Mnew in the original definition. For example, we can use the following two criteria given in Figures 10 and 11. Criterion Independence from Figure 10 is closely related to the definition of the Markov boundary, and essentially implies its verification. Criterion Predictivity from Figure 11 verifies Markov boundaries by assessing their predictive (classification or regression) performance using some learning algorithm and performance metric.

Figure 10.

Criterion Independence () to verify Markov boundaries.

Figure 11.

Criterion Predictivity () to verify Markov boundaries.

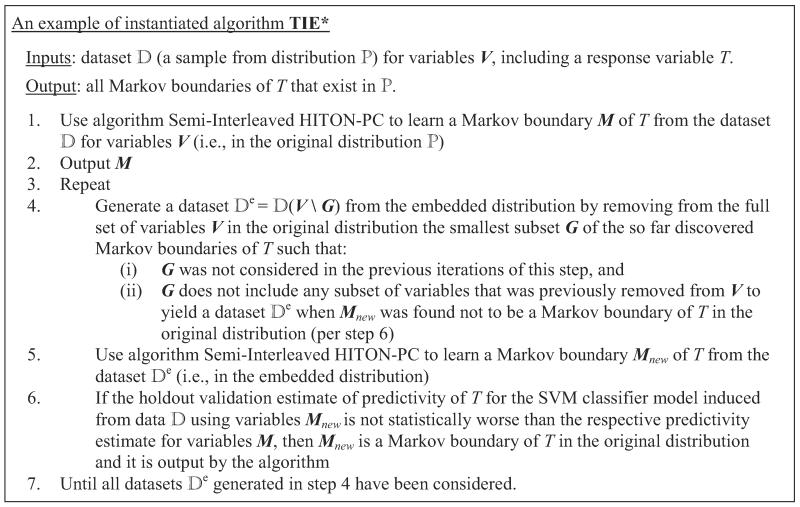

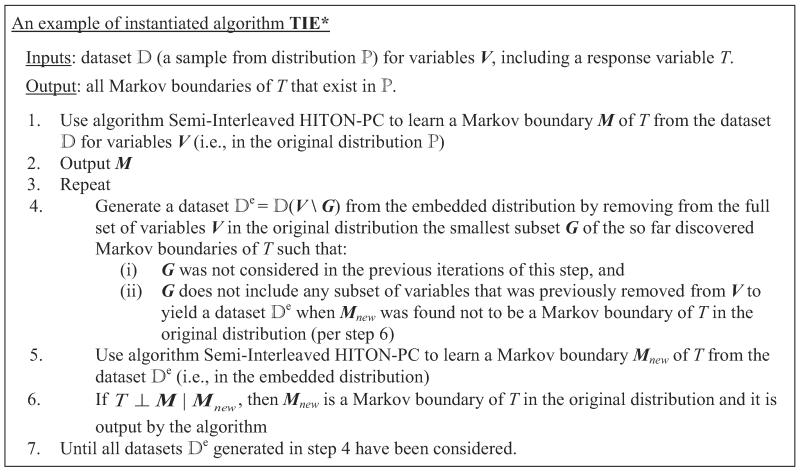

Appendix D provides two concrete admissible instantiations of the generative algorithm TIE*(admissibility follows from theoretical results presented in the next subsection). The instantiation in Figure 17 is obtained using = Semi-Interleaved HITON-PC, = IGS, = Predictivity. The instantiation in Figure 18 is obtained using = Semi-Interleaved HITON-PC, = IGS, = Independence. Appendix D also gives practical considerations for computer implementations of TIE*.

Figure 17.

An example of instantiated TIE* algorithm. This algorithm was used in experiments with real data in Section 5.2.

Figure 18.

An example of instantiated TIE* algorithm. This algorithm was used in experiments with simulated data in Section 5.1.

4.3 Analysis of the Algorithm Correctness

In this subsection we state theorems about correctness of TIE* and its specific instantiations that were described in the previous subsection and Appendix D. The proofs of all theorems are given in Appendix A.

First we show that the generative algorithm TIE* is sound and complete:

Theorem 10 The generative algorithm TIE* outputs all and only Markov boundaries of T that exist in the joint probability distribution if the inputs are admissible (i.e., satisfy admissibility rules in Figure 7).

Now we show that IAMB (Figure 4) and Semi-Interleaved HITON-PC (Figure 5) are admissible Markov boundary algorithms for TIE* under sufficient assumptions. In the case of the IAMB algorithm, the sufficient assumptions for TIE* admissibility are the same as sufficient assumptions for the general algorithm correctness (see Theorem 8). This leads to the following theorem.

Theorem 11 IAMB is an admissible Markov boundary induction algorithm for TIE* (input ) if the joint probability distribution satisfies the local composition property with respect to T.

However, the sufficient assumptions for the general correctness of Semi-Interleaved HITON-PC (Theorem 9) are not sufficient for TIE* admissibility and require further restriction. Specifically, we need to require that all members of all Markov boundaries retain marginal and conditional dependence on T, except for certain violations of the intersection property. This leads to the following theorem.

Theorem 12 Semi-Interleaved HITON-PC is an admissible Markov boundary induction algorithm for TIE* (input ) if all members of all Markov boundaries of T that exist in the joint probability distribution are marginally dependent on T and are also conditionally dependent on T, except for violations of the intersection property that lead to context-independent information equivalence relations.

The next theorem states that the procedure IGS (Figure 9) is admissible for TIE*:

Theorem 13 Procedure IGS to generate data sets from the embedded distributions (input ) is admissible for TIE*.

Finally we show that both criteria Independence (Figure 10) and Predictivity (Figure 11) for verification of Markov boundaries are admissible for TIE* and state sufficient assumptions for the latter criterion. The former criterion implicitly assumes correctness of statistical decisions, similarly to IAMB and Semi-Interleaved HITON-PC (see end of Section 2.5 for related discussion).

Theorem 14 Criterion Independence to verify Markov boundaries (input ) is admissible for TIE*

Theorem 15 Criterion Predictivity to verify Markov boundaries (input ) is admissible for TIE* if the following conditions hold: (i) the learning algorithm can accurately approximate any conditional probability distribution, and (ii) the performance metric is maximized only when P(T| V \ {T}) is estimated accurately.

As mentioned in the beginning of Section 4, the generative nature of TIE* facilitates design of new algorithms for discovery of multiple Markov boundaries by simply instantiating TIE* with input components , , . Furthermore, if , , { are admissible, then TIE* will be sound and complete according to Theorem 10, otherwise the algorithm will be heuristic. For example, one can take an established Markov boundary induction algorithm, prove its admissibility, and then plug it into TIE* with admissible components and (e.g., ones presented above). This will yield a new correct algorithm and significant economies in the proof of its correctness because one has only to prove admissibility of new input components.

4.4 Complexity Analysis

We first note that the computational complexity of TIE* depends on a specific instantiation of its input components (Markov boundary induction algorithm), (procedure for generating data sets from the embedded distributions) and (criterion for verifying Markov boundaries), and on the underlying joint probability distribution over a set variables V. In this subsection we will consider the complexity of the following two specific instantiations of TIE*: (= Semi-Interleaved HITON-PC, =IGS-Lex, =Independence) and (= IAMB, =IGS-Lex, =Independence).

Since in our experiments we found that Markov boundary induction (with input component ) was the most computationally expensive step in TIE* and accounted for > 99% of algorithm runtime, we will omit from consideration the complexity of components and , and will use the complexity of component to derive an estimate of the total computational complexity of TIE*. Following general practice in complexity analysis of Markov boundary and causal discovery algorithms, we measure computational complexity in terms of the number of statistical tests of conditional independence.7 For completeness we also note that there exist efficient implementations of the G2 test for discrete variables that can take only time nlog(n) in the number of training instances n. The time for computation of Fishers Z-test for continuous variables is also bounded by a low order polynomial in n because this test essentially involves solution of a linear system. See the work by Aliferis et al. (2010a) and Anderson (2003) for more details and discussion.

As with all sound and complete computational causal discovery algorithms, discovery of all Markov boundaries (and even one Markov boundary) is worst-case intractable. However we are interested in the average-case complexity of TIE* in real-life distributions that is more instructive to consider. Complexities of Markov boundary induction algorithms IAMB and Semi-Interleaved HITON-PC are O(|V||M|) and O(|V|2|M|), respectively, assuming that the size of the candidate Markov boundary M obtained in the Forward phase is close to the size of the true Markov boundary obtained after the Backward phase (see Figures 4 and 5), which is typically the case in practice (Aliferis et al., 2010a; Tsamardinos and Aliferis, 2003; Tsamardinos et al., 2003a). When TIE* is parameterized with the IGS procedure (as the component ) and there is only one Markov boundary M in the distribution, TIE* will invoke a Markov boundary induction algorithm , |M|+ 1 number of times. Thus, the total computational complexity of TIE* in this case becomes O(|V ||M|2) if and O(|V ||M| | 2|M|) if HITON-PC. When N Markov boundaries with the average size |M| are present in the distribution, TIE* with IGS procedure will invoke a Markov boundary induction algorithm no more than O(N2|M|) times. Therefore, the total complexity of TIE* with the IGS procedure is O(N2|M||V ||M|)) when and O(N|V |22|M|) when HITON-PC.

In practical applications of TIE* with Semi-Interleaved HITON-PC, we use an additional caching mechanism for conditional independence decisions, which alleviates the need to repeatedly conduct the same conditional independence tests during Markov boundary induction when we have only slightly altered the data set by removing a subset of variables G. In this case, induction of the first Markov boundary still takes O(|V |2|M|) independence tests, but all consecutive Markov boundaries typically require less than O(|V |) conditional independence tests. Thus, the overall complexity of TIE* with the IGS procedure and Semi-Interleaved HITON-PC becomes O(|V |2|M| + (N −1)|V |2|M|), or equivalently O(N|V |2|M|).

Finally, in practice we use parameters max-card for IGS procedure in TIE* and max-k for Semi-Interleaved HITON-PC to limit the number of conditional independence tests (see Appendix D). Thus, complexity of TIE* with the IGS procedure becomes O(N|V ||M|max-card+1) when and O(|V ||M|max-k + (N − 1)|V ||M|max-card) when HITON-PC.

4.5 A Simple and Fast Algorithm for Special Distributions

The TIE* algorithm allows to find all Markov boundaries when there are information equivalence relations between arbitrary sets of variables. A simpler and faster algorithm can be obtained by restricting consideration to distributions where all information equivalence relations follow from context-independent information equivalence relations between individual variables. The resulting algorithm is termed iTIE*(which is an acronym for “Individual Target Information Equivalence”) and is described in Figure 12. As can be seen, iTIE* can be described as a modification to Semi-Interleaved HITON-PC (or GLL-PC in general).

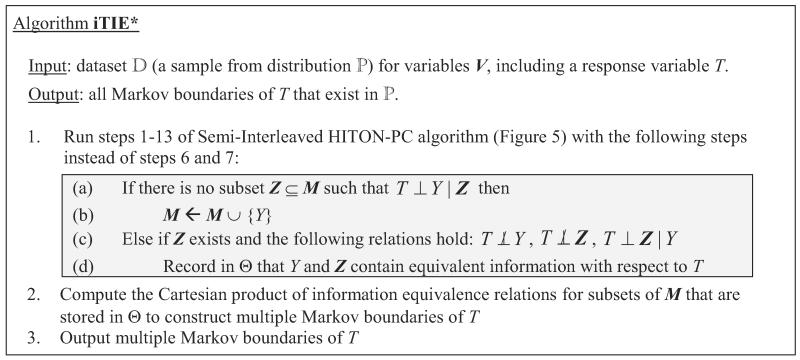

Figure 12.

iTIE* algorithm, presented as a modification of Semi-Interleaved HITON-PC. Similar algorithms may be obtained by modification of other members of the GLL-PC algorithmic family (Aliferis et al., 2010a).

Consider running the iTIE* algorithm on data generated from the example causal Bayesian network shown in Figure 13. The response variable T is directly caused by C, D, F. The underlying distribution is such that variables A and C contain equivalent information about T; likewise variables B and D contain equivalent information about T. iTIE* starts by executing Semi-Interleaved HITON-PC with the modified steps 6 and 7. Assume that we are running the loop in steps 3–8 of Semi-Interleaved HITON-PC and currently E = {C,D} and M = {A,B,F}; variables E and J were eliminated conditioned on F in previous iterations of the loop. In step 4 of Semi-Interleaved HITON-PC, the algorithm may select Y = C. Next the modified steps 6 and 7 of Semi-Interleaved HITON-PC proceed as described in Figure 12, namely: 1(a) we find that a subset Z={A} renders T independent of Y = C; 1(c) T is marginally dependent on Y = C,T is marginally dependent on Z = {A}, and Y = C renders T independent of Z = {A}, thus 1(d) we record in Θ that Y = C and Z = {A} contain equivalent information with respect to T. In the next iteration of the loop in steps 3– 8 of the modified Semi-Interleaved HITON-PC, we record in Θ that Y = D and Z = {B} contain equivalent information with respect to T. The Backward phase in steps 9–13 of Semi-Interleaved HITON-PC does not result in variable eliminations in this example, thus we have M = {A,B,F}. Finally, we build Cartesian product of information equivalence relations for subsets of M that are stored in Θ and obtain 4 Markov boundaries of T: {A,B,F},{A,D,F},{C,B,F}, and {C,D,F}.

Figure 13.

Graph of a causal Bayesian network used to trace the iTIE* algorithm. The network parameterization is provided in Table 7 in Appendix B. The response variable is T. All variables take values {0,1}. Variables A and C contain equivalent information about T and are highlighted with the same color. Likewise, variables B and D contain equivalent information about T and thus are also highlighted with the same color.

The iTIE* algorithm correctly identifies all Markov boundaries under the following sufficient assumptions: (a) all equivalence relations in the underlying distribution follow from context-independent equivalence relations of individual variables, and (b) the assumptions of Theorem 12 hold. The proof of correctness of iTIE* can be obtained from the proofs of Theorems 9 and 12 and Lemma 1.

It is also important to notice that in some cases iTIE* can identify all Markov boundaries even if the above stated sufficient assumption (a) is violated; that is why we do not exclude the possibility that Z can be a set of variables in steps 1(c,d) of iTIE*. Consider a Bayesian network with the graph  that is parameterized such that a variable C and the set of variables {A,B} jointly contain context-independent equivalent information about T, and T is marginally dependent on A,B,C. Thus, there are two Markov boundaries of T in the joint probability distribution: {C} and {A,B}. Now consider a situation when iTIE* first admits {A,B} to M during execution of the modified Semi-Interleaved HITON-PC or another instance of GLL-PC. Then the step 1(c) of iTIE* will reveal that while T ⊥ C | {A,B}, the following relations hold T

that is parameterized such that a variable C and the set of variables {A,B} jointly contain context-independent equivalent information about T, and T is marginally dependent on A,B,C. Thus, there are two Markov boundaries of T in the joint probability distribution: {C} and {A,B}. Now consider a situation when iTIE* first admits {A,B} to M during execution of the modified Semi-Interleaved HITON-PC or another instance of GLL-PC. Then the step 1(c) of iTIE* will reveal that while T ⊥ C | {A,B}, the following relations hold T

C, T

C, T

{A,B}, and T ⊥ {A,B} | C. Thus, the algorithm will identify that C and {A,B} contain equivalent information about T and will correctly find all Markov boundaries in the distribution. However, if iTIE* first admits C to M, then the algorithm will output only one Markov boundary of T that consists of a single variable C, because variables A and B, when considered separately, will be eliminated by conditioning on C and no equivalence relations will be found.

{A,B}, and T ⊥ {A,B} | C. Thus, the algorithm will identify that C and {A,B} contain equivalent information about T and will correctly find all Markov boundaries in the distribution. However, if iTIE* first admits C to M, then the algorithm will output only one Markov boundary of T that consists of a single variable C, because variables A and B, when considered separately, will be eliminated by conditioning on C and no equivalence relations will be found.

Notice that unlike TIE*, iTIE* does not rely on repeated invocation of a Markov boundary induction algorithm and instead extends Semi-Interleaved HITON-PC by potentially performing at most one additional independence test for each variable in V during the Forward phase, as shown in Figure 12.8 This allows iTIE* to maintain computational complexity of the same order as Semi-Interleaved HITON-PC, namely, O(|V |2|M|) conditional independence tests. As before, |M| denotes the average size of a Markov boundary and the above complexity bound assumes that the size of a candidate Markov boundary obtained in the Forward phase is close to the size of a true Markov boundary obtained at the end of the Backward phase (see Figure 5). In practical applications of iTIE*, we also use parameter max-k that limits the maximum size of a conditioning test, which brings complexity of iTIE* to O(|V ||M|max-k). Interestingly, iTIE* can efficiently identify all Markov boundaries in the distribution shown in Figure 2. This is due to the fact that the distribution in Figure 2 satisfies the assumption underlying iTIE* (i.e., that all information equivalences in a distribution follow from context-independent equivalences between individual variables) and thus allows it to capture all equivalence relationships between variables within groups in a single run of the Forward phase of the modified Semi-Interleaved HITON-PC. All Markov boundaries in the example in Figure 2 can then be reconstructed by taking the Cartesian product over sets of variables found to be equivalent with respect to T in step 2 of iTIE* (Figure 12).

For experiments reported in this work, we implemented and ran iTIE* based on the Causal Explorer code of Semi-Interleaved HITON-PC (Aliferis et al., 2003b; Statnikov et al., 2010) with values of parameters and statistical tests of independence that are described in Appendix D.

5. Empirical Experiments

In this section, we present experimental results obtained by applying methods for learning multiple Markov boundaries and variable sets on simulated and real data. The evaluated methods and their parameterizations are shown in Table 9 in Appendix E. These methods were chosen for our evaluation as they are the current state-of-the-art techniques for discovery of multiple Markov boundaries and variable sets. In order to study the behavior of these methods as a function of parameter settings, we considered several distinct parameterizations of each algorithm. In cases when parameter settings have been recommended by the authors of a method, we included these settings in our evaluation. A detailed description of parameters of prior methods for induction of multiple Markov boundaries and variable sets is provided in Appendix C.

Table 9.

Parameterizations of methods for discovery of multiple Markov boundaries and variable sets. Parameter settings that have been recommended by the authors of prior methods are underlined.

|

All experiments involving assessment of classification performance were executed by holdout validation or cross-validation (see below), whereby Markov boundaries and variable sets are discovered in a training subset of data samples (training set), classification models based on the above variables are also developed in the training set, and the reported performance of classification models is estimated in an independent testing set. Assessment of classification performance of the extracted Markov boundaries and variable sets was done using Support Vector Machines (SVMs) (Vapnik, 1998). We chose to use SVMs due to their excellent empirical performance across a wide range of application domains (especially with high-dimensional data and relatively small sample sizes), regularization capabilities, ability to learn both simple and complex classification functions, and tractable computational time (Cristianini and Shawe-Taylor, 2000; Schölkopf et al., 1999; Shawe-Taylor and Cristianini, 2004; Vapnik, 1998). When the response variable was multiclass, we applied SVMs in one-versus-rest fashion (Schölkopf et al., 1999). We used libSVM v.2.9.1 (http://www.csie.ntu.edu.tw/~cjlin/libsvm/) implementation of SVMs in all experiments (Fan et al., 2005). Polynomial kernels were used in SVMs as they have shown good classification performance across the data domains considered in this study. The degree d of the polynomial kernel and the penalty parameter C of SVM were optimized by cross-validation on the training data. Each variable in a data set was scaled to [0,1] range to facilitate SVM training. The scaling constants were computed on the training set of samples and then applied to the entire data set.

All experiments presented in this section were run on the Asclepius Compute Cluster at the Center for Health Informatics and Bioinformatics (CHIBI) at New York University Langone Medical Center (http://www.nyuinformatics.org) and the Advanced Computing Center for Research and Education (ACCRE) at Vanderbilt University (http://www.accre.vanderbilt.edu/). For comparative purposes all experiments used exclusively the latest generation of Intel Xeon Nehalem (x86) processors. Overall, it took >50 years of single CPU time to complete all reported experiments.

5.1 Experiments with Simulated Data

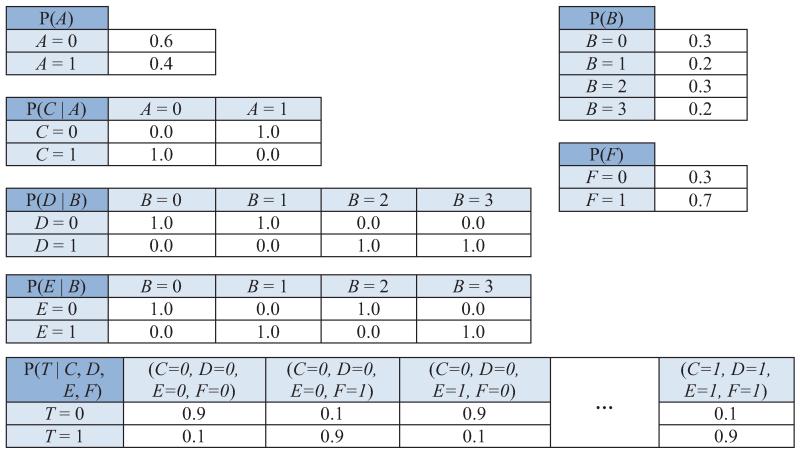

Below we present an evaluation of methods for extraction of multiple Markov boundaries and variable sets in simulated data. Simulated data allows us to evaluate methods in a controlled setting where the underlying causal process and all Markov boundaries of the response variable T are known exactly. Two data sets were used in this evaluation. One of these data sets, referred to as TIED, was previously used in an international causality challenge (Statnikov and Aliferis, 2010b). TIED contains 30 variables, including the response variable T. The underlying causal graph and its parameterization are given in the work by Statnikov and Aliferis (2010b). There are 72 distinct Markov boundaries of T. Each Markov boundary contains 5 variables: variable X10 and one variable from each of the four subsets {X1,X2,X3,X11}, {X5,X9}, {X12,X13,X14} and {X19,X20,X21}. Another simulated data set, referred to as TIED1000, contains 1,000 variables in total and was generated by the causal process of TIED augmented with an additional 970 variables that have no association with T. TIED1000 has the same set of Markov boundaries of T as TIED. TIED1000 allows us to study the behavior of different methods for learning multiple Markov boundaries and variable sets in an environment where the fraction of variables carrying relevant information about T is small.

For each of the two data sets, 750 observations were used for discovery of Markov boundaries/variable sets and training of the SVM classification models of the response variable T (with the goal to predict its values from the inferred Markov boundary variables), and an independent testing set of 3,000 observations was used for evaluation of the models' classification performance.

All methods for extracting multiple Markov boundaries and variable sets were assessed based on the following six performance criteria:

The number of distinct Markov boundaries/variable sets output by the method.

The average size of an output Markov boundary/variable set (number of variables).

The number of true Markov boundaries identified exactly, that is, without false positives and false negatives.9

The average Proportion of False Positives (PFP) in the output Markov boundaries/variable sets.10

The average False Negative Rate (FNR) in the output Markov boundaries/variable sets.11

The average classification performance (weighted accuracy) over all output Markov boundaries/variable sets.12 We also compared the average classification performance of the SVM models with the maximum a posteriori classifier in the true Bayesian network (denoted as MAP-BN) using the same data sample.

Technical details about computing performance criteria III–V are given in Appendix E.

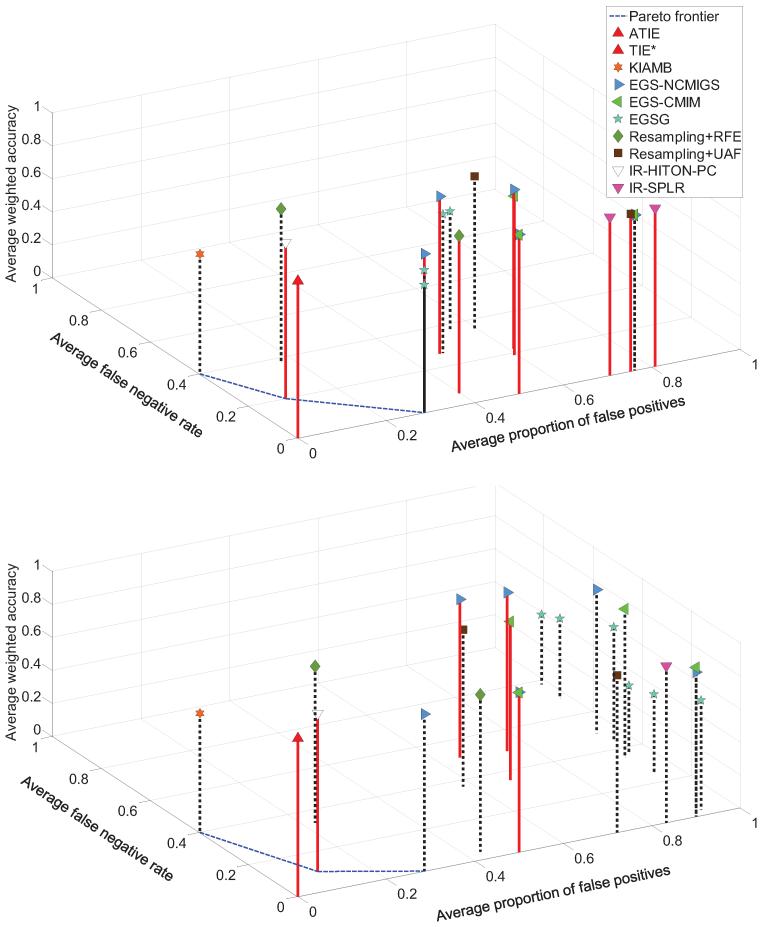

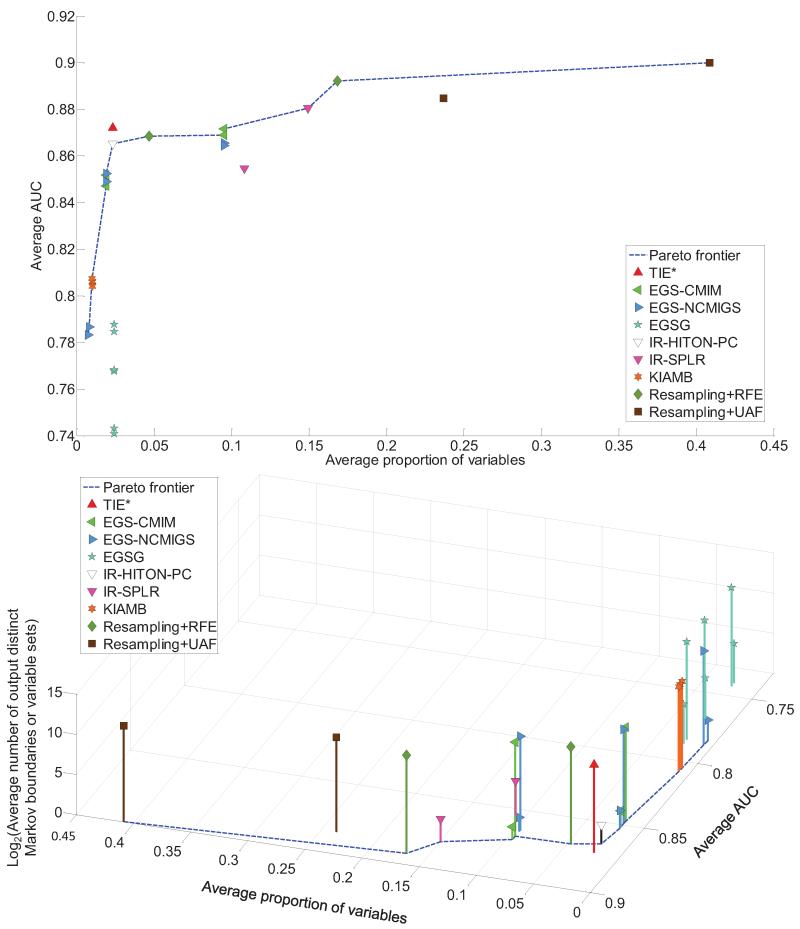

The results presented in Figure 14 in the manuscript, and Tables 10 and 11 and Figure 19 in Appendix E show that only TIE* and iTIE* identified exactly all and only true Markov boundaries of T in both simulated data sets, and their classification performance with the SVM classifier was statistically comparable to performance of the MAP-BN classifier. None of the comparator methods, regardless of the number of Markov boundaries/variable sets output, were able to identify exactly any of the 72 true Markov boundaries, except for Resampling+RFE (without statistical comparison) and IR-HITON-PC that identified exactly 1–2 out of 72 true Markov boundaries, depending on the data set. Overall prior methods had either large proportion of false positives or large false negative rate, and often their classification performance was significantly worse that the performance of the MAP-BN classifier. However, in some cases the classification performance of other methods was comparable to the MAP-BN classifier, regardless of the number of Markov boundaries identified exactly. This can be attributed to (i) the relative insensitivity of the SVM classifiers to false positives, (ii) connectivity in the underlying graph that compensates false negatives with other weakly relevant variables, and (iii) differences between the employed classification performance metric (weighted accuracy) and the metric which is maximized by the Markov boundary variables (that requires accurate estimation of P(T | V \{T}), which is a harder task than maximizing proportions of correct classification in the weighted accuracy metric). Thus, we remind the reader that a high classification performance is often a necessary but not sufficient condition for correct identification of Markov boundaries. Detailed discussion of the performance of comparator methods is given in Appendix E.

Figure 14.

Results for average classification performance (weighted accuracy), average false negative rate, and average proportion of false positives that were obtained in TIED (top figure) and TIED1000 (bottom figure) data sets. The style and color of a vertical line connecting each point with the plane shows whether the average SVM classification performance of a method is statistically comparable with the MAP-BN classifier in the same data sample (red solid line) or not (black dotted line). The Pareto frontier was constructed based on the average false negative rate and the average proportion of false positives over the comparator methods (i.e., non-TIE*). Results of TIE* and iTIE* were identical in both data sets.

Table 10.

Results obtained in simulated data set TIED. “MB” stands for “Markov boundary”, and “VS” stands for “variable set”. The 95% interval for weighted accuracy denotes the range in which weighted accuracies of 95% of the extracted Markov boundaries/variable sets fell. Classification performance of the MAP-BN classifier in the same data sample was 0.966 weighted accuracy. Highlighted in bold are results that are statistically comparable to the MAP-BN classification performance.

| Method | I. Number of distinct MBs or VSs | II. Average size of extracted distinct MBs or VSs | III. Number of true MBs identified exactly | IV. Average proportion of false positives | V. Average false negative rate | VI. Weighted accuracy over all extracted MBs or VSs | |||

|---|---|---|---|---|---|---|---|---|---|

| Average | 95% Interval | ||||||||

| TIE* | max-k = 3, α = 0.05 | 72 | 5.0 | 72 | 0.000 | 0.000 | 0.951 | 0.938 | 0.965 |

| iTIE* | max-k = 3, α = 0.05 | 72 | 5.0 | 72 | 0.000 | 0.000 | 0.951 | 0.938 | 0.965 |

| KIAMB | Number of runs = 5000, α = 0.05, K = 0.7 | 377 | 2.8 | 0 | 0.000 | 0.400 | 0.727 | 0.479 | 0.946 |

| Number of runs = 5000, α = 0.05, K = 0.8 | 377 | 2.8 | 0 | 0.000 | 0.400 | 0.727 | 0.479 | 0.946 | |

| Number of runs = 5000, α = 0.05, K = 0.9 | 377 | 2.8 | 0 | 0.000 | 0.400 | 0.727 | 0.479 | 0.946 | |

| EGS-NCMIGS | l = 7, δ = 0.015 | 6 | 7.0 | 0 | 0.286 | 0.000 | 0.964 | 0.963 | 0.965 |

| l = 7, K = 10 | 6 | 10.0 | 0 | 0.500 | 0.000 | 0.964 | 0.963 | 0.965 | |

| l = 7, K = 50 | 6 | 21.0 | 0 | 0.762 | 0.000 | 0.941 | 0.937 | 0.943 | |

| l = 5000, δ = 0.015 | 24 | 7.3 | 0 | 0.469 | 0.267 | 0.954 | 0.843 | 0.967 | |

| l = 5000, K = 10 | 20 | 10.0 | 0 | 0.610 | 0.220 | 0.964 | 0.954 | 0.970 | |

| l = 5000, K = 50 | 9 | 21.0 | 0 | 0.762 | 0.000 | 0.944 | 0.937 | 0.954 | |

| EGS-CMIM | l = 7, K = 10 | 6 | 10.0 | 0 | 0.500 | 0.000 | 0.963 | 0.963 | 0.965 |

| l = 7, K = 50 | 6 | 21.0 | 0 | 0.762 | 0.000 | 0.939 | 0.937 | 0.942 | |

| l = 5000, K = 10 | 20 | 10.0 | 0 | 0.595 | 0.190 | 0.963 | 0.951 | 0.969 | |

| l = 5000, K = 50 | 9 | 21.0 | 0 | 0.762 | 0.000 | 0.943 | 0.937 | 0.954 | |

| EGSG | Number of Markov boundaries = 30, t = 5 | 30 | 7.0 | 0 | 0.476 | 0.267 | 0.840 | 0.605 | 0.968 |

| Number of Markov boundaries = 30, t = 10 | 30 | 7.0 | 0 | 0.548 | 0.367 | 0.722 | 0.379 | 0.962 | |

| Number of Markov boundaries = 30, 1 = 15 | 30 | 7.0 | 0 | 0.548 | 0.367 | 0.722 | 0.379 | 0.962 | |

| Number of Markov boundaries = 5,000, t = 5 | 1,997 | 7.0 | 0 | 0.286 | 0.000 | 0.863 | 0.620 | 0.965 | |

| Number of Markov boundaries = 5,000, t = 10 | 3,027 | 7.0 | 0 | 0.286 | 0.000 | 0.774 | 0.500 | 0.965 | |

| Number of Markov boundaries = 5,000, t = 15 | 3,027 | 7.0 | 0 | 0.286 | 0.000 | 0.774 | 0.500 | 0.965 | |

| Resampling+RFE | without statistical comparison | 1,374 | 14.9 | 1 | 0.397 | 0.058 | 0.955 | 0.932 | 0.979 |

| with statistical comparison (α = 0.05) | 188 | 4.9 | 0 | 0.171 | 0.378 | 0.930 | 0.917 | 0.967 | |

| Resampling+UAF | without statistical comparison | 184 | 20.8 | 0 | 0.752 | 0.000 | 0.953 | 0.934 | 0.966 |

| with statistical comparison (α = 0.05) | 19 | 8.4 | 0 | 0.592 | 0.347 | 0.930 | 0.917 | 0.938 | |

| IR-HITON-PC | max-k = 3, α = 0.05 | 3 | 4.3 | 1 | 0.083 | 0.200 | 0.946 | 0.936 | 0.965 |

| IR-SPLR | without statistical comparison | 1 | 26.0 | 0 | 0.808 | 0.000 | 0.958 | 0.958 | 0.958 |

| with statistical comparison (α = 0.05) | 1 | 17.0 | 0 | 0.706 | 0.000 | 0.959 | 0.959 | 0.959 | |

Table 11.

Results obtained in simulated data set TIED1000. “MB” stands for “Markov boundary”, and “VS” stands for “variable set”. The 95% interval for weighted accuracy denotes the range in which weighted accuracies of 95% of the extracted Markov boundaries/variable sets fell. Classification performance of the MAP-BN classifier in the same data sample was 0.972 weighted accuracy. Highlighted in bold are results that are statistically comparable to the MAP-BN classification performance.

| Method | I. Number of distinct MBs or VSs | II. Average size of extracted distinct MBs or VSs | III. Number of true MBs identified exactly | IV. Average proportion of false positives | V. Average false negative rate | VI. Weighted accuracy over all extracted MBs or VSs | |||

|---|---|---|---|---|---|---|---|---|---|

| Average | 95% Interval | ||||||||

| TIE* | max-k = 3, α = 0.05 | 72 | 5.0 | 72 | 0.000 | 0.000 | 0.957 | 0.952 | 0.960 |

| iTIE* | max-k = 3, α = 0.05 | 72 | 5.0 | 72 | 0.000 | 0.000 | 0.957 | 0.952 | 0.960 |

| KIAMB | Number of runs = 5000, α = 0.05, K = 0.7 | 349 | 2.8 | 0 | 0.000 | 0.400 | 0.722 | 0.450 | 0.959 |

| Number of runs = 5000, α = 0.05, K = 0.8 | 349 | 2.8 | 0 | 0.000 | 0.400 | 0.722 | 0.450 | 0.959 | |

| Number of runs = 5000, α = 0.05, K = 0.9 | 349 | 2.8 | 0 | 0.000 | 0.400 | 0.722 | 0.450 | 0.959 | |

| EGS-NCMIGS | l = 7, δ = 0.015 | 6 | 7.0 | 0 | 0.286 | 0.000 | 0.953 | 0.952 | 0.956 |

| l = 7, K = 10 | 6 | 10.0 | 0 | 0.500 | 0.000 | 0.968 | 0.967 | 0.969 | |

| l = 7, K = 50 | 6 | 50.0 | 0 | 0.900 | 0.000 | 0.877 | 0.866 | 0.887 | |

| l = 5000, δ = 0.015 | 995 | 8.0 | 0 | 0.648 | 0.508 | 0.960 | 0.950 | 0.968 | |