Abstract

Tonal music is characterized by a continuous flow of tension and resolution. This flow of tension and resolution is closely related to processes of expectancy and prediction and is a key mediator of music-evoked emotions. However, the neural correlates of subjectively experienced tension and resolution have not yet been investigated. We acquired continuous ratings of musical tension for four piano pieces. In a subsequent functional magnetic resonance imaging experiment, we identified blood oxygen level-dependent signal increases related to musical tension in the left lateral orbitofrontal cortex (pars orbitalis of the inferior frontal gyrus). In addition, a region of interest analysis in bilateral amygdala showed activation in the right superficial amygdala during periods of increasing tension (compared with decreasing tension). This is the first neuroimaging study investigating the time-varying changes of the emotional experience of musical tension, revealing brain activity in key areas of affective processing.

Keywords: music, tension-resolution patterns, fMRI, emotion, expectancy

INTRODUCTION

The processing of music involves a complex machinery of cognitive and affective functions that rely on neurobiological processes of the human brain (Peretz and Zatorre, 2005; Koelsch, 2012; Pearce and Rohrmeier, 2012). The past years have seen a growing interest in using music as a tool to study these functions and their underlying neuronal mechanisms (Zatorre, 2005). Because of its power to evoke strong emotional experiences, music is particularly interesting for affective neuroscience, and an increasing number of neuroimaging studies use music to unveil the brain mechanisms underlying emotion (for reviews see Koelsch, 2010; Peretz, 2010). Corresponding research has shown that music-evoked emotions modulate neural responses in a variety of limbic and paralimbic brain areas that are also activated during emotional experiences in other contexts. For example, pleasant emotional responses to music are associated with the activation of the mesolimbic reward system (Blood and Zatorre, 2001; Menon and Levitin, 2005; Salimpoor et al., 2011) that also responds to stimuli of more direct biological reward value such as food or sex (Berridge, 2003). Music thus appears to recruit evolutionarily ancient brain circuits associated with fundamental aspects of affective behaviour, and research on music-evoked emotion is therefore not only relevant for music psychology but promises new insights into general mechanisms underlying emotion.

Furthermore, because music unfolds over time, it is especially well-suited to studying the dynamic, time-varying aspects of emotional experience. These continuous fluctuations of emotional experience have been largely neglected by neuroimaging research on emotions, which predominantly has focused on more stationary aspects of affective experience during short emotional episodes. Corresponding studies, for example, investigated discrete emotions (such as happiness, sadness, anger, or fear) or emotional valence (pleasant vs unpleasant) in response to static experimental stimuli such as facial expressions (Breiter et al., 1996; Morris et al., 1996; Blair et al., 1999), affective pictures (Liberzon et al., 2003; Taylor et al., 2003; Phan et al., 2004), or words (Maddock et al., 2003; Kuchinke et al., 2005). Likewise, the focus on static aspects of emotions is also reflected in neuroimaging studies on music-evoked emotions, which generally assume that an emotional response to a piece of music remains relatively constant over the entire duration of the piece. Neural correlates of music-evoked emotions have, for example, been investigated by contrasting consonant/dissonant (Blood et al., 1999), pleasant/unpleasant (Koelsch et al., 2006), or happy/sad (Khalfa et al., 2005; Mitterschiffthaler et al., 2007) music stimuli, or by comparing different emotion dimensions (e.g. nostalgia, power, or peacefulness) of short music excerpts (Trost et al., 2012). However, emotions are rarely static states, and their dynamic, time-varying nature is in fact one of their defining features (Scherer, 2005). These dynamic fluctuations of emotional experience are poorly captured by fixed emotion labels globally attributed to an experimental stimulus (like happy vs sad, or pleasant vs unpleasant). Using music to investigate the temporal fluctuations of affective experiences can, therefore, provide new insights into mechanisms of neuro-affective processing.

A few studies have begun to investigate dynamic changes of emotions and their underlying brain mechanisms by using continuous self-report methods (Nagel et al., 2007; Schubert, 2010), in which emotions are continuously tracked over time. Chapin et al. (2010), for example, investigated neural correlates of emotional arousal during a Chopin étude in a functional magnetic resonance imaging (fMRI) study, and Mikutta et al. (2012) used arousal ratings for Beethoven’s Fifth Symphony to investigate their relation to different frequency bands in the electroencephalogram. In the film domain, Goldin et al. (2005) used continuous ratings of emotional intensity to uncover brain activations in response to sad and amusing film clips that were not detected by conventional block contrasts. Similarly, Wallentin et al. (2011) identified brain activations in language and emotion areas [temporal cortices, left inferior frontal gyrus (IFG), amygdala and motor cortices] related to continuous ratings of emotional intensity and valence during story comprehension.

This study aims to extend previous research by using musical tension to investigate the dynamic change of emotional experience over the course of a musical piece. Musical tension refers to the continuous ebb and flow of tension and resolution that is usually experienced when listening to a piece of Western tonal music. In particular, tension is triggered by expectancies that are caused by implication relationships between musical events, which are implicitly acquired through exposure to a musical system (Rohrmeier and Rebuschat, 2012). For example, a musical event that sets up harmonic implications, such as an unstable dominant seventh chord, may build up tension, which is finally resolved by the occurrence of an implied event, such as a stable tonic chord. The implicative relationships can be local or non-local, i.e. the implicative and implied events do not necessarily have to follow each other directly but may stretch over long distances and can be recursively nested (cf. Lerdahl, 2004; Rohrmeier, 2011). Thus, prediction and expectancy, which have been proposed as one key mechanism of emotion elicitation in music (Meyer, 1956; Huron, 2006; Juslin and Västfjäll, 2008; Koelsch, 2012), are inherently linked to musical tension (other factors related to tension are, e.g. consonance/dissonance, stability/instability and suspense emerging from large-scale structures; for details see Lehne and Koelsch, in press). The pertinent expectancy and prediction processes are not only relevant for music processing (for reviews see Fitch et al., 2009; Pearce and Wiggins, 2012; Rohrmeier and Koelsch, 2012) but have been proposed as a fundamental mechanism underlying human cognition (Gregory, 1980; Dennett, 1996) and brain functioning (Bar, 2007; Bubic et al., 2010; Friston, 2010). 
Therefore, studying the neural bases of musical tension can shed light on general principles of predictive processing, predictive coding (Friston and Kiebel, 2009) and their connections to dynamic changes of affective experience.

In the music domain, neural processes related to expectancy violations have been studied using short chord sequences that either ended on expected or unexpected chords (e.g. Koelsch et al., 2005; Tillmann et al., 2006). The music-syntactic processing of such expectancy violations has mainly been associated with neural responses in Brodmann area 44 of the inferior fronto-lateral cortex (Koelsch et al., 2005). However, activations in areas related to emotive processing, such as the orbitofrontal cortex (OFC) (Koelsch et al., 2005; Tillmann et al., 2006) and the amygdala (Koelsch et al., 2008a) as well as increases in electrodermal activity (Steinbeis et al., 2006; Koelsch et al., 2008b) indicate that affective processes also play a role in the processing of syntactically irregular events. The affective responses to breaches of expectancy in music were investigated more closely in an fMRI study by Koelsch et al. (2008a) who reported increased activation of the amygdala (superficial group) in response to unexpected chord functions, thus corroborating the link between affective processes and breaches of expectancy.

In this study, we acquired continuous ratings of felt musical tension for a set of ecologically valid music stimuli (four piano pieces by Mendelssohn, Mozart, Schubert and Tchaikovsky), thus extending the more simplistic chord-sequence paradigms of previous studies (Koelsch et al., 2005, 2008a; Steinbeis et al., 2006; Tillmann et al., 2006) to real music. Subsequently, we recorded fMRI data (from the same participants) and used the tension ratings as a continuous regressor to identify brain areas related to the time-varying experience of musical tension. In addition, we compared stimulus epochs corresponding to increasing and decreasing tension. Based on the studies reported above (Koelsch et al., 2005, 2008a; Tillmann et al., 2006), we investigated whether musical tension modulates neuronal activity in limbic/paralimbic brain structures known to be involved in emotion processing. We also tested the more specific regional hypothesis that tension is related to activity changes in the amygdala, assuming that structural breaches of expectancy are key to the elicitation of tension (Meyer, 1956; Koelsch, 2012), and that such breaches of expectancy modulate amygdala activity as reported in the study by Koelsch et al. (2008a).

METHODS

Participants

Data from 25 right-handed participants (13 female, age range 19–29 years, M = 23.9, s.d. = 2.9) were included in the analysis (data from three additional participants were excluded because of excessive motion in the fMRI scanner or because they did not understand the experimental task correctly). None of the participants was a professional musician. Thirteen participants had received instrument lessons in addition to basic music education at school (instruments: piano, guitar and flute; range of training: 1–13 years, M = 6.4, s.d. = 3.6). All participants gave written consent and were compensated with 25 Euro or course credit. The study was approved by the ethics committee of the Freie Universität Berlin and conducted in accordance with the Declaration of Helsinki.

Stimuli

Four piano pieces were used as stimulus material: (i) Mendelssohn Bartholdy’s Venetian Boat Song (Op. 30, No. 6), (ii) the first 24 measures of the second movement of Mozart’s Piano Sonata KV 280, (iii) the first 18 measures of the second movement of Schubert’s Piano Sonata in B-flat major (D. 960), and (iv) the first 32 measures of Tchaikovsky’s Barcarolle from The Seasons (Op. 37). The pieces had been performed by a professional pianist on a Yamaha Clavinova CLP-130 (Yamaha Corporation, Hamamatsu, Japan) and recorded using the Musical Instrument Digital Interface (MIDI) protocol. From the MIDI data, audio files were generated (16 bit, 44.1 kHz sampling frequency) using an authentic grand piano sampler (The Grand in Cubase SL, Steinberg Media Technologies AG, Hamburg, Germany). Stimulus durations were 2:25 min (Mendelssohn), 1:59 min (Mozart), 1:34 min (Schubert) and 1:44 min (Tchaikovsky).

Because musical tension correlates to some degree with loudness of the music (Farbood, 2012; Lehne et al., in press), we also included versions without dynamics (i.e. without the loudness changes related to the expressive performance of the pianist) in the stimulus set to rule out the possibility that brain activations related to musical tension were simply due to these loudness changes (in addition, loudness was modelled as a separate regressor in the data analysis, see Image Processing and Statistical Analysis). Versions without loudness variations were created by setting all MIDI key-stroke velocity values to the average value of all pieces (42). In the following, we refer to the original unmodified versions as versions with dynamics whereas versions with equalized velocity values are referred to as versions without dynamics.
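The velocity equalization described above can be illustrated with a minimal sketch. It assumes notes are held as simple dictionaries with a `velocity` field and that the target is the rounded grand mean over all notes of all pieces; the data structure, the function name `equalize_velocities` and the toy values are hypothetical and are not taken from the study's MIDI files or tooling.

```python
from statistics import mean

def equalize_velocities(pieces):
    """Return copies of all pieces with every note velocity set to the grand mean.

    `pieces` maps a piece name to a list of note dicts (hypothetical layout).
    The input is left unmodified.
    """
    all_velocities = [note["velocity"] for notes in pieces.values() for note in notes]
    target = round(mean(all_velocities))  # one common value for all pieces
    equalized = {
        name: [{**note, "velocity": target} for note in notes]
        for name, notes in pieces.items()
    }
    return equalized, target

# Toy data (hypothetical values, not the study's recordings).
toy_pieces = {
    "piece_a": [{"pitch": 60, "velocity": 30}, {"pitch": 64, "velocity": 50}],
    "piece_b": [{"pitch": 67, "velocity": 40}, {"pitch": 72, "velocity": 48}],
}
flat_pieces, target_velocity = equalize_velocities(toy_pieces)
```

Pitches and note order are untouched in this sketch, so only the loudness changes carried by key-stroke velocity are removed.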

Experimental procedure

Behavioural session

From each participant, continuous ratings of musical tension for each piece were acquired in a separate session that preceded the functional neuroimaging session. Continuous tension ratings were obtained using a slider interface presented on a computer screen. The vertical position of the slider shown on the screen could be adjusted with the computer mouse according to the musical tension experienced by participants (with high positions of the slider corresponding to an experience of high tension and lower slider positions indicating low levels of experienced tension; for an illustration, see supplementary Figure S1). Participants were instructed to use the slider to continuously indicate musical tension as they subjectively experienced it while listening to the stimuli. (‘Please use the slider to indicate the amount of tension you feel during each moment of the music’.) It was emphasized that they should focus on their subjective experience of tension, and not on the tension they thought that the music was supposed to express. That is, ratings of felt musical tension (in contrast to perceived tension, cf. Gabrielsson, 2002) were acquired. As in previous studies of musical tension (Krumhansl, 1996, 1997; Lychner, 1998; Fredrickson, 2000; Vines et al., 2006; Farbood, 2012), in order not to bias participants’ understanding of the concept of musical tension towards one specific aspect of the tension experience (e.g. consonance/dissonance, stability/instability, local vs non-local implicative relationships, see Introduction), musical tension was not explicitly defined, and none of the participants reported difficulties with the task. To familiarize participants with the task, they completed a short practice trial before starting with the actual experiment (a 3-min excerpt from the second movement of Schubert’s Piano Sonata D. 960 was used for the practice trial). The vertical position of the slider (i.e. 
its y-coordinate on the screen) was recorded every 10 ms (however, to match the acquisition times of the functional imaging, tension ratings were subsequently downsampled, see Image Processing and Statistical Analysis). Each stimulus was presented twice, yielding a total of 16 stimulus presentations (four pieces with dynamics plus four pieces without dynamics, each repeated once). Before each stimulus presentation, the slider was reset to its lowermost position. Stimuli were presented via headphones at a comfortable volume level and in randomized order. The total duration of one behavioural session was approximately 45 min.

fMRI experiment

No more than 1 week after the behavioural session (M = 2.6 days, s.d. = 1.6 days), participants listened to the stimuli again while functional imaging data were acquired. Stimuli were presented via MRI-compatible headphones, and special care was taken that stimuli were clearly discernible from scanner noise and presented at a comfortable volume level (an optimal volume level was determined in a pilot test; however, to make the experiment as comfortable as possible, slight individual volume adjustments were made if requested by participants).

To make sure that participants focused their attention on the music, they were given the task to detect a short sequence of three sine wave tones (660 Hz, 230 ms duration) that was added randomly to 4 of the 16 stimulus presentations. Each stimulus presentation was followed by a 30 s rating period, during which participants indicated whether they had detected the sine wave tones using two buttons (yes or no) of an MRI-compatible response box. To assess the global emotional state of participants during each stimulus presentation, we also acquired valence and arousal ratings after each stimulus using the five-point self-assessment manikin scale by Lang (1980). Participants were instructed to listen to the stimuli with their eyes closed. After completion of each piece a short tone (440 Hz, 250 ms duration) signalled them to open their eyes, give their answer for the detection task, and provide the valence and arousal ratings for the preceding stimulus. The fMRI session comprised two runs with each run containing eight stimulus presentations (four pieces with dynamics and four pieces without dynamics) played in randomized order (duration per run: 19:34 min). To make participants familiar with the experiment and the sine wave detection task, they completed two practice trials inside the scanner before starting with the actual experiment.

No tension ratings were acquired during the functional imaging session to avoid contamination of fMRI data related to felt musical tension with those related to cognitive processes associated with the rating task (e.g. motor and monitoring processes). To assess the within-subject reliability of tension ratings, participants performed additional tension ratings immediately after fMRI scanning, which were compared with ratings from the behavioural session. To keep the duration of the experiment at a reasonable length, the post-scan ratings were not acquired for the complete stimulus set but only for two representative examples (the Mendelssohn and Mozart pieces played with dynamics).

Image acquisition

MRI data were acquired using a 3 Tesla Siemens Magnetom TrioTim MRI scanner (Siemens AG, Erlangen, Germany). Prior to functional scanning, a high-resolution (1 × 1 × 1 mm) T1-weighted anatomical reference image was obtained using a magnetization-prepared rapid acquisition gradient echo (MP-RAGE) sequence. For the functional session, a continuous echo planar imaging sequence was used (37 slices; slice thickness: 3 mm; interslice gap: 0.6 mm; TE: 30 ms; TR: 2 s; flip angle: 70°; 64 × 64 voxel matrix; field of view: 192 × 192 mm) with acquisition of slices interleaved within the TR interval. A total of 1174 images were acquired per participant (587 per run). To minimize artefacts in areas such as the OFC and the temporal lobes, the acquisition window was tilted at an angle of 30° to the intercommissural (AC–PC) line (Deichmann et al., 2003; Weiskopf et al., 2007).

Image processing and statistical analysis

Data were analysed using Matlab (MathWorks, Natick, USA) and SPM8 (Wellcome Trust Centre for Neuroimaging, London, UK). Prior to statistical analysis, functional images were realigned using a six-parameter rigid body transformation, co-registered to the anatomical reference image, normalized to standard Montreal Neurological Institute (MNI) stereotaxic space using a 12-parameter affine transformation, and spatially smoothed with a Gaussian kernel of 6 mm full-width at half-maximum. Images were masked to include only gray matter voxels in the analysis [voxels with a gray matter probability of <40% according to the ICBM tissue probabilistic atlases included in SPM8 were excluded]. Low-frequency noise and signal drifts were removed using a high-pass filter with a cut-off frequency of 1/128 Hz. An autoregressive AR(1) model was used to account for serial correlations between scans.

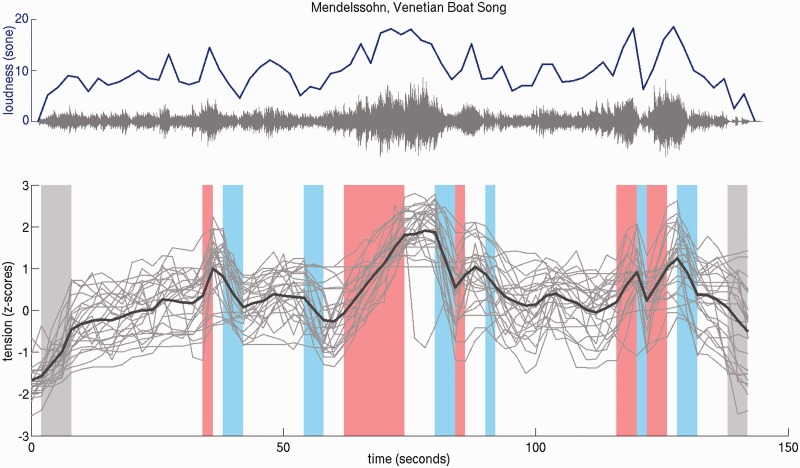

Statistical analysis was performed using a general linear model (GLM) with the following regressors: (1) music periods, (2) rating periods, (3) loudness, (4 + 5) tension (modelled separately for versions with and without dynamics), (6) tension increases and (7) tension decreases. Music and rating periods of the experiment were entered as block regressors into the model. Loudness changes of the music were modelled using a continuous regressor obtained from a Matlab implementation (http://www.genesis-acoustics.com/en/loudness_online-32.html) of the loudness model for time-varying sounds by Zwicker and Fastl (1999). (Note that even versions without dynamics, i.e. versions with equalized MIDI key-stroke velocity values, retained some loudness variations because of varying note density of the music, which made it necessary to include a loudness regressor in the GLM to avoid confounding tension with loudness.) Tension was modelled as a continuous regressor using z-normalized tension ratings averaged across participants and the two presentations of each stimulus during the behavioural session (Figure 1; using individual instead of averaged tension ratings yielded similar but statistically weaker results). Tension increases and decreases were modelled in a block-wise fashion based on the first derivative of the average tension rating: values more than 1 s.d. above the mean of the derivative were modelled as tension increases, whereas values more than 1 s.d. below the mean were modelled as tension decreases (Figure 1). Thus, these regressors distinguish between rising and falling tension, showing in which structures activity correlates with transient increases or decreases of tension, whereas the tension regressor indicates in which structures activity correlates with absolute tension values. (The regressors for increase and decrease of tension yielded similar but statistically stronger results compared with a continuous regressor of the tension derivative.) 
Estimates of the motion correction parameters obtained during the realignment were added as regressors of no interest to the model.
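The construction of the tension regressors described in this paragraph can be sketched as follows. This is an illustration under stated assumptions, not the analysis code of the study: each rating series is z-normalized before averaging (the order of normalization and averaging is not specified in the text), the population standard deviation is used, and the first derivative is approximated by the first difference between successive samples. All function names and the toy series are hypothetical.

```python
from statistics import mean, pstdev

def zscore(values):
    """Z-normalize one rating series (population s.d.; an assumption)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]

def average_rating(ratings):
    """Z-normalize each series, then average across series at every time point."""
    normalized = [zscore(r) for r in ratings]
    return [mean(column) for column in zip(*normalized)]

def label_tension_changes(avg, n_sd=1.0):
    """Label each step of the first difference of `avg`.

    Steps more than `n_sd` s.d. above the mean difference are 'increase',
    steps more than `n_sd` s.d. below it are 'decrease', all others None.
    Runs of identical labels would form the blocks of a block-wise regressor.
    """
    diff = [b - a for a, b in zip(avg, avg[1:])]
    m, s = mean(diff), pstdev(diff)
    labels = []
    for d in diff:
        if d > m + n_sd * s:
            labels.append("increase")
        elif d < m - n_sd * s:
            labels.append("decrease")
        else:
            labels.append(None)
    return labels

# Toy average tension profile (hypothetical): one rise followed by one fall.
toy_avg = [0, 0, 0, 3, 3, 3, 0, 0, 0]
toy_labels = label_tension_changes(toy_avg)
```

In the toy profile, only the single upward and the single downward step exceed the 1 s.d. threshold, so all plateau samples remain unlabelled.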

Fig. 1.

Top: Audio waveform (gray) and loudness (blue) of the Mendelssohn piece. Bottom: Individual (gray lines) and average (black line) tension ratings (N = 25). Shaded areas highlight time intervals that were modelled as increasing (red) and decreasing (blue) tension (gray shaded areas were excluded from data analysis because tension effects are confounded by music onset or ending). Loudness and tension ratings were downsampled to 1/2 Hz to match the time intervals of image acquisition. Note the similarity of the loudness and tension profiles; loudness was therefore controlled for by including it as a regressor in the model used for data evaluation.

To match the time points of image acquisition, continuous regressors (i.e. loudness and tension) were downsampled to 1/2 Hz using the Matlab function interp1.m. The hemodynamic response was modelled by convolving regressors with a canonical double-gamma hemodynamic response function. Model parameters were estimated using the restricted maximum-likelihood approach implemented in SPM8.
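The two steps described above (resampling a continuous regressor to one value per TR and convolving it with a haemodynamic response function) can be sketched in plain Python. The sketch substitutes simple linear interpolation for Matlab's `interp1` and uses a commonly cited double-gamma parameterization (response shape 6, undershoot shape 16, undershoot ratio 1/6, 32 s kernel); these parameter values and all function names are assumptions made for illustration and are not taken from the study or verified against the SPM8 source.

```python
import math

def linear_resample(times, values, new_times):
    """Linearly interpolate (times, values) at new_times.

    All time vectors must be ascending and new_times must lie within
    [times[0], times[-1]].
    """
    out, j = [], 0
    for t in new_times:
        while j < len(times) - 2 and times[j + 1] < t:
            j += 1
        t0, t1 = times[j], times[j + 1]
        w = (t - t0) / (t1 - t0)
        out.append(values[j] + w * (values[j + 1] - values[j]))
    return out

def double_gamma_hrf(tr, duration=32.0, peak=6.0, undershoot=16.0, ratio=1 / 6):
    """Double-gamma HRF sampled every `tr` seconds, scaled to sum to 1.

    Shape parameters are assumed defaults, not values reported in the text.
    """
    def gamma_pdf(t, shape):
        if t <= 0:
            return 0.0
        return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

    n = int(duration / tr) + 1
    h = [gamma_pdf(i * tr, peak) - ratio * gamma_pdf(i * tr, undershoot) for i in range(n)]
    total = sum(h)
    return [v / total for v in h]

def convolve(signal, kernel):
    """Causal discrete convolution, truncated to the length of `signal`."""
    out = [0.0] * len(signal)
    for i in range(len(signal)):
        for k in range(min(i + 1, len(kernel))):
            out[i] += signal[i - k] * kernel[k]
    return out

# Toy example (hypothetical): a ramp sampled every 0.5 s, resampled to TR = 2 s.
toy_times = [i * 0.5 for i in range(9)]          # 0.0 ... 4.0 s
toy_values = [2.0 * t for t in toy_times]
resampled = linear_resample(toy_times, toy_values, [0.0, 2.0, 4.0])
hrf = double_gamma_hrf(tr=2.0)
regressor = convolve(resampled, hrf)
```

Because the kernel is scaled to unit sum, the convolved regressor stays on the same scale as the resampled ratings; only its timing is shifted and smoothed.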

Whole-brain statistical parametric maps (SPMs) were calculated for the contrast music vs rating, the continuous regressors loudness and tension, and the contrast tension increase vs tension decrease. To compare tension-related brain activations between versions with and without dynamics (see Stimuli), we also contrasted tension for the different versions with each other, i.e. tension (versions with dynamics) vs tension (versions without dynamics). After calculating contrasts at the level of individual participants, a second-level random effects analysis using one-sample t-tests across contrast images of individual participants was performed. P-values smaller than 0.05 corrected for family-wise errors (FWE) were considered significant.
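For a single voxel, the second-level test described above reduces to a one-sample t-test of the participants' contrast values against zero. The following sketch shows only this statistic; it does not implement the FWE correction applied across voxels, and the function name and toy values are hypothetical.

```python
import math
from statistics import mean, stdev

def one_sample_t(values, popmean=0.0):
    """Return the t-statistic and degrees of freedom for H0: mean == popmean."""
    n = len(values)
    standard_error = stdev(values) / math.sqrt(n)  # sample s.d. (n - 1 denominator)
    return (mean(values) - popmean) / standard_error, n - 1

# Toy first-level contrast values for one voxel, one per participant (hypothetical).
toy_contrast_values = [1.0, 2.0, 3.0, 4.0, 5.0]
t_value, dof = one_sample_t(toy_contrast_values)
```

In a random effects analysis of this kind, the degrees of freedom depend on the number of participants rather than the number of scans, which is why the test generalizes to the population from which participants were drawn.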

In addition to the whole-brain analysis, we tested the regional hypothesis that tension is related to activity changes in the amygdala (see Introduction). For this, we tested the contrasts tension and tension increase vs tension decrease with bilateral amygdalae as regions of interest (ROI). The anatomical ROI was created using maximum probabilistic maps of the amygdala as implemented in the SPM anatomy toolbox (Amunts et al., 2005; Eickhoff et al., 2005). These maps were also used to assess the location of significant activations within the three subregions of the amygdala (i.e. laterobasal, centromedial and superficial groups). A statistical threshold of P < 0.05 (FWE-corrected) was used for the ROI analysis.

RESULTS

Behavioural data

Figure 1 shows individual and average tension ratings for the Mendelssohn piece (version with dynamics). Pearson product–moment correlation coefficients between individual and average tension ratings of the different stimuli were high (M = 0.70; s.d. = 0.20). Overall, average tension profiles thus were in good correspondence with individual tension ratings. Likewise, within-participant correlations assessed by comparing tension ratings before and after scanning were high (Mendelssohn: M = 0.76; s.d. = 0.12; Mozart: M = 0.62; s.d. = 0.28) indicating that the subjective experience of musical tension remained relatively stable over time.
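The agreement measure reported above can be sketched as a Pearson correlation between each individual rating series and the across-participant average. The sketch assumes that the average includes the individual being compared (the text does not state whether a leave-one-out average was used); function names and toy data are hypothetical.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def individual_vs_average(ratings):
    """Correlate every individual series with the point-wise average of all series."""
    average = [sum(column) / len(column) for column in zip(*ratings)]
    return [pearson_r(series, average) for series in ratings]

# Toy ratings (hypothetical): two rising profiles and one falling profile.
toy_ratings = [[1, 2, 3, 4], [2, 4, 6, 8], [4, 3, 2, 1]]
toy_correlations = individual_vs_average(toy_ratings)
```

The same `pearson_r` function could be applied to a participant's pre-scan and post-scan ratings of one piece to obtain the within-participant correlations.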

Mean valence ratings of the pieces (measured on a five-point scale) ranged between 3.47 and 4.38 (M = 4.00; s.d. = 0.34) showing that participants enjoyed listening to the pieces. Mean arousal ratings for the different pieces were moderate, ranging from 2.00 to 2.98 (M = 2.41, s.d. = 0.31).

Except for one participant who missed one of the four sine tones that had to be detected, all participants correctly detected the tones indicating that they attentively listened to the music stimuli during scanning.

fMRI data

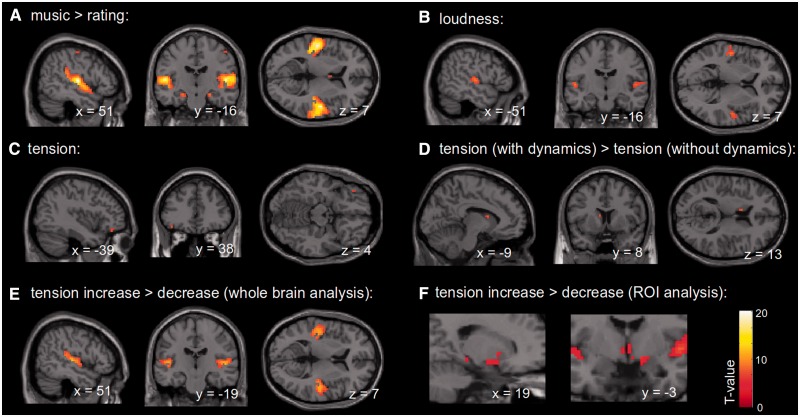

SPMs for the different contrasts and regressors are shown in Figure 2 (for details, see also Table 1). The contrast music > rating (Figure 2A) showed activation of bilateral supratemporal cortices. In each hemisphere, the maximum of this activation was located on Heschl’s gyrus in the primary auditory cortex (right: 90% probability for Te1.0, left: 60% probability for Te1.0 according to Morosan et al., 2001), extending posteriorly along the lateral fissure into the planum temporale as well as anteriorly into the planum polare. Moreover, this contrast showed bilateral activation of the cornu ammonis (CA) of the hippocampal formation (right: 80% probability for CA, left: 70% probability for CA according to Amunts et al., 2005). Smaller clusters of activation for this contrast were found in the right central sulcus, left subgenual cingulate cortex, left caudate and left superior temporal sulcus (STS).

Fig. 2.

SPMs of different contrasts and regressors. (A)–(E) Whole-brain analyses (P < 0.05, FWE-corrected) for the contrasts music > rating (A), loudness (B), tension (C), tension (versions with dynamics) > tension (versions without dynamics) (D), and tension increase > tension decrease (E). (F) ROI analysis for left and right amygdala for the contrast tension increase > tension decrease (P < 0.05, small-volume FWE-corrected). All images are shown in neurological convention.

Table 1.

Anatomical locations, MNI coordinates and t-values of significant local maxima for the different contrasts and regressors investigated in the whole-brain analysis

| Anatomical location | X (mm) | Y (mm) | Z (mm) | t-value | Cluster size |
|---|---|---|---|---|---|
| Music > rating | | | | | |
| Right primary auditory cortex | 51 | −13 | 7 | 19.67 | 768 |
| Left primary auditory cortex | −51 | −19 | 7 | 19.13 | 586 |
| Right hippocampus (CA) | 24 | −13 | −17 | 9.42 | 12 |
| Left hippocampus (CA) | −21 | −16 | −17 | 8.13 | 10 |
| Fornix | 0 | −1 | 13 | 8.60 | 20 |
| Left subgenual cingulate cortex | −3 | 26 | −5 | 6.81 | 2 |
| Left subgenual cingulate cortex | −6 | 29 | −8 | 6.28 | 1 |
| Right central sulcus | 51 | −16 | 55 | 6.63 | 3 |
| Left caudate nucleus | −6 | 11 | 1 | 6.40 | 1 |
| Left anterior STS | −42 | 5 | −23 | 6.23 | 3 |
| Loudness | | | | | |
| Left primary auditory cortex | −51 | −13 | 4 | 8.33 | 46 |
| Right primary auditory cortex | 51 | −4 | −2 | 8.21 | 44 |
| Tension | | | | | |
| Left pars orbitalis | −39 | 38 | −17 | 7.11 | 3 |
| Tension (versions with dynamics) > tension (versions without dynamics) | | | | | |
| Left caudate nucleus | −9 | 8 | 13 | 7.22 | 3 |
| Left caudate nucleus | −6 | 11 | 7 | 5.89 | 1 |
| Tension increase > tension decrease | | | | | |
| Right primary auditory cortex | 51 | −19 | 4 | 11.58 | 220 |
| Left primary auditory cortex | −39 | −28 | 10 | 10.66 | 217 |
| Right MFG | 42 | 47 | 13 | 9.47 | 12 |
| Right thalamus | 12 | −28 | −5 | 7.67 | 3 |
| Right precentral sulcus | 36 | 2 | 61 | 6.96 | 4 |
| Right cerebellum | 6 | −37 | −8 | 6.65 | 2 |
| Right cerebellum | 6 | −70 | −20 | 6.14 | 1 |

P < 0.05, FWE-corrected; cluster sizes in voxels; CA: cornu ammonis; MFG: middle frontal gyrus; STS: superior temporal sulcus.

Results of the loudness regressor (which, in contrast to the global music > rating contrast, specifically captured brain responses to loudness variations within music pieces) are shown in Figure 2B. This regressor revealed activations of Heschl’s gyrus bilaterally (primary auditory cortices, right: 80% probability for Te1.0, left: 50% probability for Te1.0 according to Morosan et al., 2001) extending into adjacent auditory association cortex.

Figure 2C shows results of the tension regressor (pooled over versions with and without dynamics), indicating structures in which activity is related to felt musical tension. (Note that loudness was controlled for by including the loudness regressor in the model, and that, therefore, loudness did not contribute to the results of this tension analysis.) The tension regressor indicated a positive correlation with blood oxygen level-dependent (BOLD) signal changes in the left pars orbitalis of the IFG. No significant negative correlations were observed. To test whether the activation of the tension regressor differed between versions with and without dynamics, we also contrasted the tension regressor of original versions with versions with equalized MIDI velocity values (see Methods). For this contrast, i.e. tension (versions with dynamics) > tension (versions without dynamics), activations were found in left caudate nucleus (Figure 2D). The ROI analysis for the tension regressor in left and right amygdalae (guided by our hypotheses, see Introduction) did not result in any significant activations.

To investigate structures in which activity correlates specifically with the rise and decline of tension, we also compared epochs of increasing and decreasing tension (see red and blue shaded areas in Figure 1, respectively). Figure 2E shows results of the contrast tension increase > tension decrease. This contrast yielded significant activations of Heschl’s gyrus bilaterally (primary auditory cortices, right: 70% Te1.0, left: 70% Te1.1) extending in both hemispheres posteriorly into the planum temporale. Smaller foci of activation for this contrast were also found in right anterior middle frontal gyrus (MFG), right thalamus, right precentral sulcus and right cerebellum. The opposite contrast (tension decrease > tension increase) did not reveal any significant activations. An ROI analysis for the contrast tension increase > tension decrease in left and right amygdala indicated a significant activation of the right amygdala (MNI peak coordinate: 21 −4 −11; 80% probability for superficial group according to Amunts et al., 2005; P < 0.05, FWE-corrected on the peak level; cluster extent: six voxels; Figure 2F).

Functional connectivity/psychophysiological interactions

To investigate whether there is a functional link between the brain regions associated with tension (in particular, the pars orbitalis and the amygdala), we also performed a post hoc functional connectivity/PPI analysis (Friston et al., 1997) in which we tested (i) which brain areas correlate to the activity in the peak voxels of the pars orbitalis and amygdala activations reported above (irrespective of subjectively experienced tension) and (ii) whether there is a psychophysiological interaction (PPI) with increasing or decreasing tension, i.e. in which brain areas the functional connectivity is modulated by tension (increasing vs decreasing).

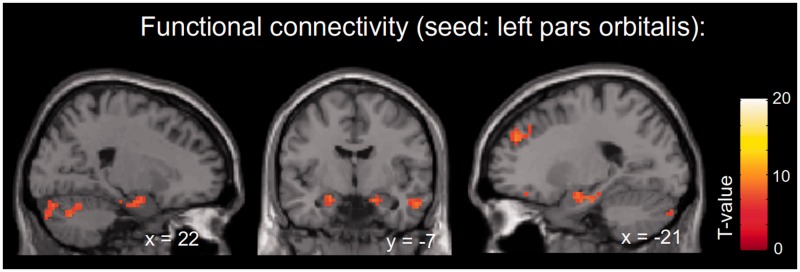

The seed voxel in the left pars orbitalis (MNI coordinate: −39 38 −17) showed, among others, a significant functional connectivity (P < 0.05, FWE-corrected) with bilateral hippocampal and amygdalar regions (Figure 3). Conversely, the seed voxel in the right amygdala (MNI coordinate: 21 −4 −11) was functionally connected to the left pars orbitalis (for a complete list of significant activations from the functional connectivity analysis, see supplementary Table S1). The PPI analysis investigating in which regions functional connectivity is modulated by tension did not yield any significant results.

Fig. 3.

SPMs (P < 0.05, FWE-corrected) for the functional connectivity analysis with the peak voxel of the left pars orbitalis used as seed region (MNI coordinate: −39 38 −17). The maps show functional connectivity with bilateral hippocampal and amygdalar regions.

DISCUSSION

The neural correlates of tension as subjectively experienced over the course of a music piece have not been previously investigated. In this study, we identified brain areas correlating to continuous tension ratings of ecologically valid music stimuli. We found that experienced tension is related to BOLD signal increases in the left pars orbitalis of the IFG. Moreover, an ROI analysis in bilateral amygdala showed signal increases in the right superficial amygdala during periods of tension increase (compared with periods of tension decrease). A connectivity analysis showed functional connections between the pars orbitalis and bilateral amygdalar regions.

In accordance with previous findings (Krumhansl, 1996; Fredrickson, 2000), our behavioural data show that the experience of musical tension was consistent both across and within participants. Correlations between tension ratings before and after scanning were high, indicating that the subjective experience of tension remained stable over time and that ratings acquired before the scanning session were in concordance with the tension experience during scanning. Likewise, the high correlations between individual and average tension ratings showed that the experience of musical tension of individual participants was well captured by the average tension rating which we used for the fMRI data analysis.
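The consistency measure described above, i.e. the correlation between each participant's rating and the group average, can be sketched as follows. The function names and the toy ratings are hypothetical, and the sketch assumes that all ratings have already been resampled to a common time grid.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)

def mean_rating(ratings):
    """Average rating across participants at every time point."""
    return [sum(column) / len(column) for column in zip(*ratings)]

def individual_vs_average(ratings):
    """Correlation of every participant's rating with the group average."""
    average = mean_rating(ratings)
    return [pearson(r, average) for r in ratings]

# Hypothetical toy ratings: three participants, four time points.
ratings = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.0],
    [1.0, 2.0, 3.0, 5.0],
]
correlations = individual_vs_average(ratings)
```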

For the fMRI data, contrasting music periods with rating periods (i.e. periods of silence) showed, as expected, activations in typical regions of auditory processing in primary auditory cortex and surrounding auditory areas. In addition, this contrast revealed bilateral activation of the hippocampus. Hippocampal activation has regularly been observed in previous neuroimaging experiments using music stimuli (e.g. Blood and Zatorre, 2001; Brown et al., 2004; Koelsch et al., 2006; Mueller et al., 2011) and is taken to reflect memory and emotional processes related to music listening (Koelsch, 2012). Loudness also correlated to primary auditory cortex activations, consistent with previous studies that report BOLD signal increases dependent on the sound pressure level of auditory stimuli (Hall et al., 2001; Langers et al., 2007).

For musical tension, we observed activation in the left pars orbitalis (Brodmann area 47). BA 47 is part of the five-layered orbitofrontal paleocortex, and cyto- as well as receptorarchitectonically only distantly related to neighbouring Brodmann areas 44 and 45 of the IFG (Petrides and Pandya, 2002; Amunts et al., 2010). BA 47 thus appears to be functionally more related to the OFC than to the IFG, suggesting a possible involvement in emotional processes. In the music domain, activation of orbito-frontolateral cortex has been related to emotive processing of unexpected harmonic functions (Koelsch et al., 2005; Tillmann et al., 2006). Similarly, Nobre et al. (1999) reported activations in orbito-frontolateral cortex for breaches of expectancy in the visual modality. In music, such breaches of expectancy are closely associated with the subjective experience of tension: unexpected events (for example, chords that are only distantly related to the current key context, thus creating an urge to return to the established tonal context) are usually accompanied by an experience of tension whereas expected events (e.g. a tonic chord after a dominant chord) tend to evoke feelings of relaxation or repose (Bigand et al., 1996). These musical expectations have been proposed to be the primary mechanism underlying music-evoked emotions (Meyer, 1956; Vuust and Frith, 2008), and psychologically, the subjective experience of tension may thus be taken to reflect the affective response to breaches of expectancy (but note that other phenomena can also give rise to musical tension, for reviews see Huron, 2006; Koelsch, 2012; Lehne and Koelsch, in press). In light of previous research that has proposed a functional role of OFC in representing affective value of sensory stimuli (Rolls and Grabenhorst, 2008) and linking sensory input to hedonic experience (Kringelbach, 2005), the pars orbitalis activation observed for musical tension may therefore reflect neural processes integrating expectancy and prediction processes with affective experience. This is supported by the results of our connectivity analysis, showing that the pars orbitalis is functionally connected to bilateral hippocampal and amygdalar regions (however, this functional connectivity was not modulated by tension). Given the link between tension and processes of expectancy and prediction, the pars orbitalis activation and its functional connections to bilateral regions of the amygdala further corroborate the role of expectancy as a mediator of music-evoked emotions (Meyer, 1956; Huron, 2006; Juslin and Västfjäll, 2008; Koelsch, 2012). Importantly, the activation for musical tension in the pars orbitalis did not depend on the dynamics (i.e. the varying degrees of loudness) of the pieces because no activation differences in this region were observed when comparing tension for versions with and without dynamics.

The ROI analysis for bilateral amygdalae revealed increased activations in the right (superficial) amygdala for periods of increasing tension compared with periods of decreasing tension. This extends previous findings by showing that the amygdala does not only respond to irregular chord functions in simple chord sequences (Koelsch et al., 2008a) but is also related to subjectively experienced tension increases in real music pieces. Note that the amygdala is not a single functional unit: different subregions of the amygdala show distinctive activation patterns for auditory stimuli (with predominantly positive signal changes in the laterobasal group and negative signal changes in the superficial and centromedial groups, see Ball et al., 2007), and core regions of the amygdala respond to both positive and negative emotional stimuli (Ball et al., 2009). Interestingly, in both the present study and the study by Koelsch et al. (2008a), which investigated brain activations in response to unexpected chords, signal differences were maximal in the superficial nuclei group of the amygdala (SF). Although not much is known about this nuclei group, connectivity studies have shown that the SF is functionally connected to limbic structures such as anterior cingulate cortex, ventral striatum and hippocampus, thus underscoring its relevance for affective processes (Roy et al., 2009; Bzdok et al., 2012). In neuroimaging studies using music, SF activations have been found for joyful music stimuli as opposed to experimentally manipulated dissonant counterparts (Mueller et al., 2011) and joyful as opposed to fearful music (Koelsch et al., 2013), suggesting a relation between SF and positive emotion. Considering a possible role of the SF in positive emotion and its functional connection to the nucleus accumbens (Bzdok et al., 2012), the SF activations reported here may also point to a possible relation between musical tension and so-called chill experiences (i.e. intensely pleasurable responses to music, see Panksepp, 1995; Grewe et al., 2009). Such chill experiences are typically evoked by new or unexpected harmonies (Sloboda, 1991), and have been found to be associated with dopaminergic activity in the ventral striatum (Salimpoor et al., 2011). The close relation between the amygdala and the reward processing of the striatum associated with musical pleasure gains further support from a recent fMRI study showing that the reward value of a music stimulus is predicted by the functional connectivity between the amygdala and the nucleus accumbens (Salimpoor et al., 2013). Thus, the SF may play a functional role in mediating pleasurable affective responses such as chills related to breaches of expectancy in music. Finally, a study by Goossens et al. (2009) suggests that the SF is particularly sensitive to social stimuli (in that study, SF responses were stronger in response to faces compared to houses), and a meta-analysis by Bzdok et al. (2011) revealed activation likelihood maxima in the SF for social judgments of trustworthiness and attractiveness, indicating a possible role in the processing of social signals. Thus, the present data perhaps reflect that irregular harmonies are taken by perceivers as significant communicative signals (see also Cross and Morley, 2008; Steinbeis and Koelsch, 2009), but this remains to be further specified.

Note that amygdala activations were only observed when comparing periods of increasing and decreasing tension; the continuous ratings of musical tension did not show amygdala activation. This points to an important limitation of one-dimensional tension measures (and continuous emotion measures in general) that do not distinguish between increases and decreases of rating values: although a point of rising tension can have the same absolute value as a point of falling tension, they probably reflect different cognitive and affective processes. Whereas the former is associated with a build-up of emotional intensity, the latter reflects a process of relaxation or resolution. In this study, we accounted for this by explicitly modelling tension increases and decreases in a block-wise fashion. For future research, it may be promising to break the phenomenon of musical tension even further down into different sub-processes like the build-up of a musical structure, breaches of expectancy, anticipation of resolution and resolution (see also Koelsch, 2012).
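The distinction between the absolute level of tension and its direction of change can be made concrete with a small sketch that segments a continuous rating into epochs of increasing and decreasing tension. This is an illustration only: the function name `label_epochs` and the minimum-length parameter `min_len` are hypothetical, and the criterion used to define epochs in this study is described in the Methods, not reproduced here.

```python
def label_epochs(rating, min_len=2):
    """Segment a rating time series into increase/decrease epochs.

    Returns a list of (label, start, end) tuples, where start and end are the
    indices of the first and last sample of the epoch. Runs of fewer than
    `min_len` consecutive steps and flat stretches are discarded.
    """
    # Sign of the change between neighbouring samples: +1, 0 or -1.
    signs = []
    for prev, cur in zip(rating, rating[1:]):
        diff = cur - prev
        signs.append((diff > 0) - (diff < 0))

    epochs = []
    start = 0
    for i in range(1, len(signs) + 1):
        # Close the current run at the end of the series or when the sign changes.
        if i == len(signs) or signs[i] != signs[start]:
            if signs[start] != 0 and i - start >= min_len:
                label = "increase" if signs[start] > 0 else "decrease"
                epochs.append((label, start, i))
            start = i
    return epochs

# Hypothetical toy rating: samples 2 and 5 share the same value (2) but fall
# into epochs of opposite direction.
toy_rating = [0, 1, 2, 3, 3, 2, 1, 2]
toy_epochs = label_epochs(toy_rating)
```

In the toy series, the value 2 occurs once on the way up and once on the way down, so a regressor based on the rating value alone treats both samples identically, whereas the epoch labels separate them.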

Finally, it has to be considered that musical tension involves both cognitive and affective processes, and brain activations related to musical tension can, in principle, be attributed to either of the two (or both). Although our results favour an affective interpretation (based on the observed brain regions as well as the nature of the experimental task, in which participants rated felt musical tension, not perceived tension), the contribution of affective vs purely cognitive processes of prediction and expectation to the observed activations remains to be more closely explored. For example, future research could disentangle cognitive and affective aspects of tension by comparing prediction and expectation processes in musical contexts that are largely devoid of emotional responses and are primarily based on the statistical properties of the stimulus (e.g. using computer-generated single-note sequences) with ones that involve emotion (such as the paradigm used in this study). Furthermore, it may be promising to investigate if theoretical models of musical tension (Lerdahl and Krumhansl, 2007; Farbood, 2012) can predict neural activations and how resulting activations compare to the ones related to subjective ratings of tension. Cognitive and affective processes might also be disentangled in time (using methods with high temporal resolution such as electro- or magnetoencephalography), assuming that brain areas reflecting cognitive processes of expectancy and anticipation precede the ones related to the emotional reaction.

CONCLUSION

The results of this music study show that the subjective experience of tension is related to neuronal activity in the pars orbitalis (BA 47) and the amygdala. Our results are the first to show that time-varying changes of emotional experience captured by musical tension involve brain activity in key areas of affective processing. The results also underscore the close connection between breaches of expectancy and emotive processing, thus pointing to a possible functional role of OFC and amygdala in linking processes of expectancy and prediction to affective experience.

SUPPLEMENTARY DATA

Supplementary data are available at SCAN online.

Acknowledgments

We thank Yu Fukuda and Donald Gollmann for helping with the selection and preparation of the music stimuli. We also thank John DePriest, Evan Gitterman and two anonymous reviewers for helpful comments and suggestions on earlier versions of the manuscript.

This research was supported by the Excellence Initiative of the German Federal Ministry of Education and Research.

REFERENCES

- Amunts K, Kedo O, Kindler M, et al. Cytoarchitectonic mapping of the human amygdala, hippocampal region and entorhinal cortex: intersubject variability and probability maps. Anatomy and Embryology. 2005;210(5–6):343–52. doi: 10.1007/s00429-005-0025-5.

- Amunts K, Lenzen M, Friederici AD, et al. Broca’s region: novel organizational principles and multiple receptor mapping. PLoS Biology. 2010;8(9):e1000489. doi: 10.1371/journal.pbio.1000489.

- Ball T, Derix J, Wentlandt J, et al. Anatomical specificity of functional amygdala imaging of responses to stimuli with positive and negative emotional valence. Journal of Neuroscience Methods. 2009;180:57–70. doi: 10.1016/j.jneumeth.2009.02.022.

- Ball T, Rahm B, Eickhoff SB, Schulze-Bonhage A, Speck O, Mutschler I. Response properties of human amygdala subregions: evidence based on functional MRI combined with probabilistic anatomical maps. PLoS One. 2007;2(3):e307. doi: 10.1371/journal.pone.0000307.

- Bar M. The proactive brain: using analogies and associations to generate predictions. Trends in Cognitive Sciences. 2007;11(7):280–9. doi: 10.1016/j.tics.2007.05.005.

- Berridge KC. Pleasures of the brain. Brain and Cognition. 2003;52:106–28. doi: 10.1016/s0278-2626(03)00014-9.

- Bigand E, Parncutt R, Lerdahl F. Perception of musical tension in short chord sequences: the influence of harmonic function, sensory dissonance, horizontal motion, and musical training. Perception & Psychophysics. 1996;58:124–41.

- Blair RJ, Morris JS, Frith CD, Perrett DI, Dolan RJ. Dissociable neural responses to facial expressions of sadness and anger. Brain. 1999;122(5):883–93. doi: 10.1093/brain/122.5.883.

- Blood AJ, Zatorre RJ. Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proceedings of the National Academy of Sciences of the United States of America. 2001;98(20):11818–23. doi: 10.1073/pnas.191355898.

- Blood AJ, Zatorre RJ, Bermudez P, Evans AC. Emotional responses to pleasant and unpleasant music correlate with activity in paralimbic brain regions. Nature Neuroscience. 1999;2(4):382–7. doi: 10.1038/7299.

- Breiter HC, Etcoff NL, Whalen PJ, et al. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17(5):875–87. doi: 10.1016/s0896-6273(00)80219-6.

- Brown S, Martinez MJ, Parsons LM. Passive music listening spontaneously engages limbic and paralimbic systems. Neuroreport. 2004;15(13):2033–7. doi: 10.1097/00001756-200409150-00008.

- Bubic A, von Cramon DY, Schubotz RI. Prediction, cognition and the brain. Frontiers in Human Neuroscience. 2010;4:25. doi: 10.3389/fnhum.2010.00025.

- Bzdok D, Langner R, Caspers S, et al. ALE meta-analysis on facial judgments of trustworthiness and attractiveness. Brain Structure and Function. 2011;215(3–4):209–23. doi: 10.1007/s00429-010-0287-4.

- Bzdok D, Laird AR, Zilles K, Fox PT, Eickhoff SB. An investigation of the structural, connectional, and functional subspecialization in the human amygdala. Human Brain Mapping. 2013;34(12):3247–66. doi: 10.1002/hbm.22138.

- Chapin H, Jantzen K, Kelso JA, Steinberg F, Large E. Dynamic emotional and neural responses to music depend on performance expression and listener experience. PLoS One. 2010;5(12):e13812. doi: 10.1371/journal.pone.0013812.

- Cross I, Morley I. The evolution of music: theories, definitions and the nature of the evidence. In: Malloch S, Trevarthen C, editors. Communicative Musicality. Oxford: Oxford University Press; 2008. pp. 61–82.

- Deichmann R, Gottfried JA, Hutton C, Turner R. Optimized EPI for fMRI studies of the orbitofrontal cortex. NeuroImage. 2003;19(2):430–41. doi: 10.1016/s1053-8119(03)00073-9.

- Dennett D. Kinds of Minds: Toward an Understanding of Consciousness. New York: Basic; 1996.

- Eickhoff SB, Stephan KE, Mohlberg H, et al. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage. 2005;25(4):1325–35. doi: 10.1016/j.neuroimage.2004.12.034.

- Farbood M. A parametric, temporal model of musical tension. Music Perception. 2012;29:387–428.

- Fitch WT, von Graevenitz A, Nicolas E. Bio-aesthetics, dynamics and the aesthetic trajectory: a cognitive and cultural perspective. In: Skov M, Vartanian O, editors. Neuroaesthetics. Amityville, NY: Baywood Publishing Company, Inc; 2009. pp. 59–102.

- Fredrickson WE. Perception of tension in music: musicians versus nonmusicians. Journal of Music Therapy. 2000;37:40–50. doi: 10.1093/jmt/37.1.40.

- Friston K. The free-energy principle: a unified brain theory? Nature Reviews. Neuroscience. 2010;11(2):127–38. doi: 10.1038/nrn2787.

- Friston K, Buechel C, Fink GR, Morris J, Rolls E, Dolan R. Psychophysiological and modulatory interactions in neuroimaging. NeuroImage. 1997;6(3):218–29. doi: 10.1006/nimg.1997.0291.

- Friston K, Kiebel S. Predictive coding under the free-energy principle. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2009;364(1521):1211–21. doi: 10.1098/rstb.2008.0300.

- Gabrielsson A. Emotion perceived and emotion felt: Same or different? Musicae Scientiae [Special issue 2001-2002] 2002:123–47.

- Goldin PR, Hutcherson CA, Ochsner KN, Glover GH, Gabrieli JD, Gross JJ. The neural bases of amusement and sadness: a comparison of block contrast and subject-specific emotion intensity regression approaches. NeuroImage. 2005;27(1):26–36. doi: 10.1016/j.neuroimage.2005.03.018.

- Goossens L, Kukolja J, Onur O, et al. Selective processing of social stimuli in the superficial amygdala. Human Brain Mapping. 2009;30:3332–8. doi: 10.1002/hbm.20755.

- Gregory RL. Perceptions as hypotheses. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 1980;290:181–97. doi: 10.1098/rstb.1980.0090.

- Grewe O, Kopiez R, Altenmüller E. Chills as an indicator of individual emotional peaks. Annals of the New York Academy of Sciences. 2009;1169:351–4. doi: 10.1111/j.1749-6632.2009.04783.x.

- Hall DA, Haggard MP, Summerfield AQ, Akeroyd MA, Palmer AR, Bowtell RW. Functional magnetic resonance imaging measurements of sound-level encoding in the absence of background scanner noise. Journal of the Acoustical Society of America. 2001;109(4):1559–70. doi: 10.1121/1.1345697.

- Huron D. Sweet Anticipation: Music and the Psychology of Expectation. Cambridge, MA: MIT Press; 2006.

- Juslin PN, Västfjäll D. Emotional responses to music: the need to consider underlying mechanisms. Behavioral and Brain Sciences. 2008;31:559–621. doi: 10.1017/S0140525X08005293.

- Khalfa S, Schon D, Anton JL, Liégeois-Chauvel C. Brain regions involved in the recognition of happiness and sadness in music. Neuroreport. 2005;16(18):1981–4. doi: 10.1097/00001756-200512190-00002.

- Koelsch S. Towards a neural basis of music-evoked emotions. Trends in Cognitive Sciences. 2010;14:131–7. doi: 10.1016/j.tics.2010.01.002.

- Koelsch S. Brain and Music. West Sussex, UK: John Wiley & Sons, Ltd; 2012.

- Koelsch S, Fritz T, Schlaug G. Amygdala activity can be modulated by unexpected chord functions during music listening. Neuroreport. 2008a;19:1815–9. doi: 10.1097/WNR.0b013e32831a8722.

- Koelsch S, Fritz T, Schulze K, Alsop D, Schlaug G. Adults and children processing music: an fMRI study. NeuroImage. 2005;25(4):1068–76. doi: 10.1016/j.neuroimage.2004.12.050.

- Koelsch S, Fritz T, von Cramon DY, Müller K, Friederici AD. Investigating emotion with music: an fMRI study. Human Brain Mapping. 2006;27(3):239–50. doi: 10.1002/hbm.20180.

- Koelsch S, Kilches S, Steinbeis N, Schelinski S. Effects of unexpected chords and of performer’s expression on brain responses and electrodermal activity. PLoS One. 2008b;3(7):e2631. doi: 10.1371/journal.pone.0002631.

- Koelsch S, Skouras S, Fritz T, Herrera P, Bonhage C, Küssner MB, Jacobs AM. The roles of superficial amygdala and auditory cortex in music-evoked fear and joy. NeuroImage. 2013;81:49–60. doi: 10.1016/j.neuroimage.2013.05.008.

- Kringelbach ML. The human orbitofrontal cortex: linking reward to hedonic experience. Nature Reviews. Neuroscience. 2005;6(9):691–702. doi: 10.1038/nrn1747.

- Krumhansl CL. A perceptual analysis of Mozart’s piano sonata K. 282: segmentation, tension, and musical ideas. Music Perception. 1996;13:401–32.

- Krumhansl CL. An exploratory study of musical emotions and psychophysiology. Canadian Journal of Experimental Psychology. 1997;51:336–53. doi: 10.1037/1196-1961.51.4.336.

- Kuchinke L, Jacobs AM, Grubich C, Võ ML, Conrad M, Herrmann M. Incidental effects of emotional valence in single word processing: an fMRI study. NeuroImage. 2005;28(4):1022–32. doi: 10.1016/j.neuroimage.2005.06.050.

- Lang PJ. Behavioral treatment and bio-behavioral assessment: computer applications. In: Sidowski JB, Johnson JH, Williams TA, editors. Technology in Mental Health Care Delivery Systems. Norwood, NJ: Ablex; 1980. pp. 119–37.

- Langers DR, van Dijk P, Schoenmaker ES, Backes WH. fMRI activation in relation to sound intensity and loudness. NeuroImage. 2007;35(2):709–18. doi: 10.1016/j.neuroimage.2006.12.013.

- Lehne M, Koelsch S. Tension-resolution patterns as a key element of aesthetic experience: psychological principles and underlying brain mechanisms. In: Nadal M, Huston JP, Agnati L, Mora F, Cela-Conde CJ, editors. Art, Aesthetics, and the Brain. Oxford: Oxford University Press; in press.

- Lehne M, Rohrmeier M, Gollmann D, Koelsch S. The influence of different structural features on felt musical tension in two piano pieces by Mendelssohn and Mozart. Music Perception. in press.

- Lerdahl F. Tonal Pitch Space. New York: Oxford University Press; 2004.

- Lerdahl F, Krumhansl CL. Modeling tonal tension. Music Perception. 2007;24(4):329–66.

- Liberzon I, Phan KL, Decker LR, Taylor SF. Extended amygdala and emotional salience: a PET activation study of positive and negative affect. Neuropsychopharmacology. 2003;28(4):726–33. doi: 10.1038/sj.npp.1300113.

- Lychner JA. An empirical study concerning terminology relating to aesthetic response to music. Journal of Research in Music Education. 1998;46(2):303–19.

- Maddock RJ, Garrett AS, Buonocore MH. Posterior cingulate cortex activation by emotional words: fMRI evidence from a valence decision task. Human Brain Mapping. 2003;18(1):30–41. doi: 10.1002/hbm.10075.

- Menon V, Levitin DJ. The rewards of music listening: response and physiological connectivity of the mesolimbic system. NeuroImage. 2005;28:175–84. doi: 10.1016/j.neuroimage.2005.05.053.

- Meyer LB. Emotion and Meaning in Music. Chicago: University of Chicago Press; 1956.

- Mikutta C, Altorfer A, Strik W, Koenig T. Emotions, arousal, and frontal alpha rhythm asymmetry during Beethoven’s 5th symphony. Brain Topography. 2012;25(4):423–30. doi: 10.1007/s10548-012-0227-0.

- Mitterschiffthaler MT, Fu CH, Dalton JA, Andrew CM, Williams SC. A functional MRI study of happy and sad affective states induced by classical music. Human Brain Mapping. 2007;28(11):1050–62. doi: 10.1002/hbm.20337.

- Morosan P, Rademacher J, Schleicher A, Amunts K, Schormann T, Zilles K. Human primary auditory cortex: cytoarchitectonic subdivisions and mapping into a spatial reference system. NeuroImage. 2001;13(4):684–701. doi: 10.1006/nimg.2000.0715.

- Morris JS, Frith CD, Perrett DI, et al. A differential neural response in the human amygdala to fearful and happy facial expressions. Nature. 1996;383:812–5. doi: 10.1038/383812a0.

- Mueller K, Mildner T, Fritz T, et al. Investigating brain response to music: a comparison of different fMRI acquisition schemes. NeuroImage. 2011;54(1):337–43. doi: 10.1016/j.neuroimage.2010.08.029.

- Nagel F, Kopiez R, Grewe O, Altenmüller E. EMuJoy: software for continuous measurement of perceived emotions in music. Behavior Research Methods. 2007;39(2):283–90. doi: 10.3758/bf03193159.

- Nobre AC, Coull JT, Frith CD, Mesulam MM. Orbitofrontal cortex is activated during breaches of expectation in tasks of visual attention. Nature Neuroscience. 1999;2(1):11–2. doi: 10.1038/4513.

- Panksepp J. The emotional sources of ‘chills’ induced by music. Music Perception. 1995;13(2):171–207.

- Pearce MT, Rohrmeier M. Music cognition and the cognitive sciences. Topics in Cognitive Science. 2012;4(4):468–84. doi: 10.1111/j.1756-8765.2012.01226.x.

- Pearce MT, Wiggins GA. Auditory expectation: the information dynamics of music perception and cognition. Topics in Cognitive Science. 2012;4(4):625–52. doi: 10.1111/j.1756-8765.2012.01214.x.

- Peretz I. Towards a neurobiology of musical emotions. In: Juslin P, Sloboda JA, editors. Handbook of Music and Emotion—Theory, Research, Applications. Oxford: Oxford University Press; 2010. pp. 99–126.

- Peretz I, Zatorre RJ. Brain organization for music processing. Annual Review of Psychology. 2005;56:89–114. doi: 10.1146/annurev.psych.56.091103.070225.

- Petrides M, Pandya DN. Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. European Journal of Neuroscience. 2002;16(2):291–310. doi: 10.1046/j.1460-9568.2001.02090.x.

- Phan KL, Taylor SF, Welsh RC, Ho SH, Britton JC, Liberzon I. Neural correlates of individual ratings of emotional salience: a trial-related fMRI study. NeuroImage. 2004;21(2):768–80. doi: 10.1016/j.neuroimage.2003.09.072.

- Rohrmeier M. Towards a generative syntax of tonal harmony. Journal of Mathematics and Music. 2011;5(1):35–53.

- Rohrmeier M, Koelsch S. Predictive information processing in music cognition. A critical review. International Journal of Psychophysiology. 2012;83:164–75. doi: 10.1016/j.ijpsycho.2011.12.010.

- Rohrmeier M, Rebuschat P. Implicit learning and acquisition of music. Topics in Cognitive Science. 2012;4(4):525–53. doi: 10.1111/j.1756-8765.2012.01223.x.

- Rolls ET, Grabenhorst F. The orbitofrontal cortex and beyond: from affect to decision-making. Progress in Neurobiology. 2008;86:216–44. doi: 10.1016/j.pneurobio.2008.09.001.

- Roy AK, Shehzad Z, Margulies DS, et al. Functional connectivity of the human amygdala using resting state fMRI. NeuroImage. 2009;45(2):614–26. doi: 10.1016/j.neuroimage.2008.11.030.

- Salimpoor VN, Benovoy M, Larcher K, Dagher A, Zatorre R. Anatomically distinct dopamine release during anticipation and experience of peak emotion to music. Nature Neuroscience. 2011;14:257–62. doi: 10.1038/nn.2726.

- Salimpoor VN, van den Bosch I, Kovacevic N, McIntosh AR, Dagher A, Zatorre R. Interactions between the nucleus accumbens and auditory cortices predict music reward value. Science. 2013;340(6129):216–9. doi: 10.1126/science.1231059.

- Scherer K. What are emotions? And how can they be measured? Social Science Information. 2005;44:695–729.

- Schubert E. Continuous self-report methods. In: Juslin P, Sloboda JA, editors. Handbook of Music and Emotion—Theory, Research, Applications. Oxford: Oxford University Press; 2010. pp. 223–53.

- Sloboda JA. Music structure and emotional response: some empirical findings. Psychology of Music. 1991;19:110–20.

- Steinbeis N, Koelsch S. Understanding the intentions behind man-made products elicits neural activity in areas dedicated to mental state attribution. Cerebral Cortex. 2009;19(3):619–23. doi: 10.1093/cercor/bhn110.

- Steinbeis N, Koelsch S, Sloboda JA. The role of harmonic expectancy violations in musical emotions: evidence from subjective, physiological, and neural responses. Journal of Cognitive Neuroscience. 2006;18(8):1380–93. doi: 10.1162/jocn.2006.18.8.1380.

- Taylor SF, Phan KL, Decker LR, Liberzon I. Subjective rating of emotionally salient stimuli modulates neural activity. NeuroImage. 2003;18(3):650–9. doi: 10.1016/s1053-8119(02)00051-4.

- Tillmann B, Koelsch S, Escoffier N, et al. Cognitive priming in sung and instrumental music: activation of inferior frontal cortex. NeuroImage. 2006;31(4):1771–82. doi: 10.1016/j.neuroimage.2006.02.028.

- Trost W, Ethofer T, Zentner M, Vuilleumier P. Mapping aesthetic musical emotions in the brain. Cerebral Cortex. 2012;22(12):2769–83. doi: 10.1093/cercor/bhr353.

- Vines BW, Krumhansl CL, Wanderley MM, Levitin DJ. Cross-modal interactions in the perception of musical performance. Cognition. 2006;101:80–113. doi: 10.1016/j.cognition.2005.09.003.

- Vuust P, Frith CD. Anticipation is the key to understanding music and the effects of music on emotion. Behavioral and Brain Sciences. 2008;31:599–600.

- Wallentin M, Nielsen AH, Vuust P, Dohn A, Roepstorff A, Lund TE. Amygdala and heart rate variability responses from listening to emotionally intense parts of a story. NeuroImage. 2011;58:963–73. doi: 10.1016/j.neuroimage.2011.06.077.

- Weiskopf N, Hutton C, Josephs O, Turner R, Deichmann R. Optimized EPI for fMRI studies of the orbitofrontal cortex: compensation of susceptibility-induced gradients in the readout direction. MAGMA. 2007;20(1):39–49. doi: 10.1007/s10334-006-0067-6.

- Zatorre R. Music, the food for neuroscience. Nature. 2005;434:312–5. doi: 10.1038/434312a.

- Zwicker E, Fastl H. Psychoacoustics—Facts and Models. Berlin: Springer; 1999.
