Abstract

A previous functional MRI adaptation study on trait inference indicated that a trait code is located in the ventral medial prefrontal cortex (vmPFC), but could not rule out that this adaptation effect is due to the trait’s underlying valence. To address this issue, we presented sentences describing positive and negative valences of either a human trait or an object characteristic, and manipulated whether the human trait or object characteristic was repeated or not, either with the same or the opposite valence. In two trait conditions, a behavioral trait-implying sentence was preceded by a prime sentence that implied the same or the opposite trait. The results confirmed the earlier finding of robust trait adaptation from prime to target in the vmPFC, and also revealed adaptation in the precuneus and right mid-occipital cortex. In contrast, no valence adaptation was found in two novel object conditions, in which the target sentence again implied a positive or negative trait, but was preceded by a prime sentence that described an object with the same or the opposite valence. Together with the previous study, this indicates that a specific trait code, but not a generalized valence code, is represented in the vmPFC.

Keywords: trait, valence, mPFC, fMRI adaptation

INTRODUCTION

Recent neuroimaging studies have investigated brain regions crucial for forming enduring trait impressions of others based on behavioral information. The primary region associated with such tasks is the medial prefrontal cortex (mPFC) (Mitchell et al., 2004, 2005, 2006; Harris et al., 2005; Schiller et al., 2009; Baron et al., 2011; Ma et al., 2011, 2012a,b; for a review, Van Overwalle, 2009). However, it is still not clear whether the mPFC only processes traits or whether it is also a repository of trait representations, and to what extent trait valence plays a role in this.

According to a simulation account, knowledge about the self is used as an anchor to mentally simulate the traits and preferences of other people (see Tamir and Mitchell, 2010). Support for this account comes from studies showing that the activity of the dorsal part of the mPFC increases linearly with the dissimilarity between self and other (Mitchell et al., 2006; Tamir and Mitchell, 2010). Other studies have demonstrated that when the self is judged first, a subsequent judgment of similar others on the same trait results in a reduction of the functional MRI (fMRI) signal (fMRI adaptation) as if judging the self and similar others rest on the same process. This was not the case for dissimilar others (Jenkins et al., 2008).

An alternative view is that brain activation in the mPFC reflects not only trait-relevant information processing, but also the storage of the neural representation, or memory code, of traits. In other words, the representational approach suggests that trait information is stored in the mPFC and represented by a distributed population of neurons, and investigates in which location this occurs. This idea is in line with the structured event complex framework by Krueger et al. (2009), who argued that the mPFC represents abstract dynamic summary representations that give rise to social event knowledge.

To investigate the existence of a memory code in the brain, neuroscientific researchers often apply an fMRI adaptation paradigm. Adaptation refers to the observation that repeated presentations of a stimulus consistently reduce fMRI responses relative to presentations of a novel stimulus or stimulus characteristics that are variable and irrelevant (Grill-Spector et al., 2006). The adaptation effect has been demonstrated in many perceptual domains, including the perception of colors, shapes and objects and occurs in both lower- and higher-level visual areas (Grill-Spector et al., 1999; Thompson-Schill et al., 1999; Kourtzi and Kanwisher, 2000; Engel and Furmanski, 2001; Grill-Spector and Malach, 2001; Krekelberg et al., 2006; Bedny et al., 2008; Devauchelle et al., 2009; Roggeman et al., 2011; Diana et al., 2012; Josse et al., 2012). Moreover, fMRI adaptation has also been found during action observation (Ramsey and Hamilton, 2010a,b) and trait inferences of similar others as described above (Jenkins et al., 2008).

Recently, Ma et al. (2013) applied an fMRI adaptation paradigm to explore whether the trait concept is represented in the mPFC or not. They presented two sentences in which different actors engaged in different behaviors that either implied the same trait, an opposite trait, or no trait at all in a given context. If fMRI adaptation is found in the mPFC for the non-presented, but implied trait, irrespective of variations in behaviors and actors, this would provide strong support for a trait code. And indeed, these researchers found robust suppression of activation in the ventral mPFC (vmPFC) when a critical trait-implying sentence was preceded by a sentence that implied the same or opposite trait, but not when the preceding sentence did not imply any trait. This suppression effect was found nowhere else in the brain. This seems to indicate that trait concepts are not only processed but also represented by an ensemble of neurons in the vmPFC.

However, this adaptation effect in the vmPFC might not be exclusively due to trait representation. An alternative explanation is that the stimuli used in this study included a set of social behaviors with positive, negative or neutral valence. Hence, the observed adaptation effect might possibly be due to the repetition of valences. In fact, human social behaviors are always highly intertwined with affective connotations and this study did not disentangle the contribution of specific traits vs their underlying valences on the adaptation effect in the mPFC. The aim of this study is to disentangle the contribution of trait and valence.

To verify whether the vmPFC represents a trait code or responds to the magnitude of valence, this study modified the previous fMRI adaptation paradigm for exploring trait codes (Ma et al., 2013). As in the earlier trait-adaptation study, a behavioral trait-implying sentence (the target) was preceded by another sentence (the prime). To dissociate the role of the mPFC in trait and valence processing, we manipulated positive and negative valence for a human trait, as in the previous study, and added a similar manipulation for an object’s characteristics. This resulted in four conditions overall. In the two trait conditions that replicated the previous study, the target sentence implied a positive or negative trait (e.g. ‘hugs her son’, implying friendly) and was preceded by a prime sentence that implied the same trait (Trait-Similar condition, e.g. ‘tells the kids a story’, implying friendly) or the opposite trait (Trait-Opposite condition, e.g. ‘shows her anger’, implying unfriendly). The previous study (Ma et al., 2013) found substantial adaptation from prime to target for these two trait conditions, in comparison with a no-trait control condition. In the two novel object conditions, the structure was identical. The target sentence again implied a positive or negative trait (e.g. friendly), but was now preceded by a prime sentence that described an object with the same valence (Object-Similar condition, e.g. ‘The photo is nice’) or the opposite valence (Object-Opposite condition, e.g. ‘The soup is sour’). Thus, in the trait conditions, two trait sentences follow each other, and potential adaptation is due to either the similarity in the specific trait content (e.g. friendliness) or the similarity in valence. In contrast, in the object conditions, a trait sentence is preceded by an object sentence and potential adaptation can only be due to the similarity in valence.

In line with the results from the previous fMRI adaptation study on trait inference (Ma et al., 2013), we expect a strong adaptation effect of trait implication in the vmPFC in the Trait-Similar and Trait-Opposite conditions. Moreover, if there is a strong adaptation effect of generalized valence irrespective of the source (trait or object), this might also be revealed in the vmPFC. It is possible that this valence code partly overlaps with the trait code (Ochsner, 2008; Fossati, 2012). This prediction is based on the observation that the ventral part of the mPFC is involved in regulating emotional responses (Quirk and Beer, 2006; Olsson and Ochsner, 2008; Roy et al., 2012) and affective mentalizing (Sebastian et al., 2012). Such an overlap between trait and valence codes would suggest a strong effect of valence on trait coding. Alternatively, it is possible that there is no overlap between trait and valence codes, indicating that valence and trait are represented distinctly in the human brain.

METHOD

Participants

Participants were all right-handed, 12 women and 5 men, with ages varying between 19 and 24. In exchange for their participation, they were paid 10 euro. Participants reported no abnormal neurological history and had normal or corrected-to-normal vision. Informed consent was obtained in a manner approved by the Medical Ethics Committee at the Hospital of University of Ghent (where the study was conducted) and the Free University Brussels (of the principal investigator F.V.O.).

Procedure and stimulus material

We created five conditions: Trait-Similar, Trait-Opposite, Object-Similar, Object-Opposite and a Singleton condition. In the two trait conditions (Trait-Similar and Trait-Opposite), participants read two sentences (a prime sentence followed by a target sentence) concerning different agents who were engaged in behaviors that implied positive or negative moral traits. The target sentence (e.g. ‘Tolvan gave her brother a compliment’ to induce the trait friendly) was preceded by a prime sentence that implied the same trait (Trait-Similar condition, e.g. ‘Calpo gave her sister a hug’) or the opposite trait (Trait-Opposite condition, e.g. ‘Angis gave her mother a slap’). In the two object conditions (Object-Similar and Object-Opposite), participants read a prime sentence involving an object followed by a target sentence involving an agent’s trait-implying behavior that had the same or the opposite valence. The target sentence (e.g. ‘Jun gave his brother a hug’ to induce a trait with positive valence) was preceded by a prime sentence that described an object with the same valence (Object-Similar condition, e.g. ‘The photo is nice’) or the opposite valence (Object-Opposite condition, e.g. ‘The soup is sour’). The positive or negative trait or object sentences were counterbalanced across participants, so that each set of prime and target sentences was used in different conditions for different participants. To prevent participants from ignoring the (first) prime sentence and attending only to the (second) target sentence, we added a Singleton condition consisting of a single trait-implying behavioral sentence, immediately followed by a trait question. There were 16 trials in each condition.

The trait sentences were borrowed from earlier studies on trait inference using fMRI (Ma et al., 2011, 2012) and event-related potential (ERP, by Van Duynslaeger et al., 2007), while the object sentences were created anew. All the trait sentences were originally created in Dutch and consisted of six words (except 10 sentences with seven words) and most of the object sentences consisted of four words (except three sentences with five words and one sentence with six words). Importantly, a pilot study (n = 55) showed that the mean valence of the trait sentences (M = 6.04 and M = 1.97 for positive and negative traits, respectively) was equivalent to the mean valence of the object sentences (M = 6.01 and M = 2.15, respectively; ts(15) < 1, ps > 0.49).

Participants were instructed to infer the agent’s trait from the target sentence and indicated by button press after each trial whether or not a given trait applied to the target description. To avoid associations with a familiar and/or existing name, fictitious ‘Star Trek’-like names were used (Ma et al., 2011, 2012a,b). To exclude any possible adaptation effect resulting from the agent, the agents’ names differed in all the trait-implied sentences. All the sentences were presented at once in the middle of the screen for a duration of 5.5 s. To optimize estimation of the event-related fMRI response, each prime and target sentence was separated by a variable interstimulus interval of 2.5–4.5 s randomly drawn from a uniform distribution, during which participants passively viewed a fixation crosshair. We presented one of four pseudo-randomized versions of the material, counterbalanced between conditions and participants.
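The jittered timing described above can be illustrated with a short sketch. It assumes that the interstimulus interval runs from prime offset to target onset; the function name `draw_isis` and the random seed are hypothetical and are not taken from the original E-Prime script.

```python
import random

SENTENCE_DURATION = 5.5  # seconds each sentence stays on screen (from the text)

def draw_isis(n_trials, low=2.5, high=4.5, seed=None):
    """Draw one prime-to-target interstimulus interval (seconds) per trial."""
    rng = random.Random(seed)
    # Uniform sampling over the 2.5-4.5 s range reported in the text.
    return [rng.uniform(low, high) for _ in range(n_trials)]

# 16 trials per condition (from the text); the seed is arbitrary.
isis = draw_isis(n_trials=16, seed=0)

# Target onset relative to prime onset, assuming the interval starts at prime offset.
target_offsets = [SENTENCE_DURATION + isi for isi in isis]
```

Under this assumption, target onsets fall between 8.0 and 10.0 s after prime onset, which decorrelates the prime and target responses in the event-related design.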

Imaging procedure

Images were collected with a 3 Tesla Magnetom Trio MRI scanner system (Siemens Medical Systems, Erlangen, Germany), using an eight-channel radiofrequency head coil. Stimuli were projected onto a screen at the end of the magnet bore that participants viewed by way of a mirror mounted on the head coil. Stimulus presentation was controlled by E-Prime 2.0 (www.pstnet.com/eprime; Psychology Software Tools) under Windows XP. Immediately prior to the experiment, participants completed a brief practice session. Foam cushions were placed within the head coil to minimize head movements. We first collected a high-resolution T1-weighted structural scan (MP-RAGE) followed by one functional run (30 axial slices; 4 mm thick; 1 mm skip). Functional scanning used a gradient-echo echoplanar pulse sequence (TR = 2 s; TE = 33 ms; 3.5 × 3.5 × 4.0 mm in-plane resolution).

Image processing and statistical analysis

The fMRI data were preprocessed and analyzed using SPM8 (Wellcome Department of Cognitive Neurology, London, UK). For each functional run, data were preprocessed to remove sources of noise and artifacts. Functional data were corrected for differences in acquisition time between slices for each whole-brain volume, realigned within and across runs to correct for head movement, and coregistered with each participant’s anatomical data. Functional data were then transformed into a standard anatomical space (2 mm isotropic voxels) based on the ICBM 152 brain template [Montreal Neurological Institute (MNI)], which approximates Talairach and Tournoux atlas space. Normalized data were then spatially smoothed (6 mm full-width-at-half-maximum) using a Gaussian kernel. Afterwards, realigned data were examined, using the Artifact Detection Tool software package (ART; http://web.mit.edu/swg/art/art.pdf; http://www.nitrc.org/projects/artifact_detect), for excessive motion artifacts and for correlations between motion and experimental design and between global mean signal and the experimental design. Outliers were identified in temporal difference series by assessing between-scan differences (Z-threshold: 3.0; scan-to-scan movement threshold: 0.45 mm; rotation threshold: 0.02 radians). These outliers were omitted from the analysis by including a single regressor for each outlier (bad scan). The ART software did not detect substantial correlations between motion and experimental design or global signal and experimental design.
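The thresholding logic for outlier scans can be sketched as follows. This is a simplified illustration and not ART's actual implementation: it assumes that a scan is flagged when the z-scored change in global signal, or the largest single-axis change in translation or rotation relative to the previous scan, exceeds the thresholds quoted above. The function name and the example data are hypothetical.

```python
from statistics import mean, pstdev

def flag_outlier_scans(global_signal, translations_mm, rotations_rad,
                       z_thresh=3.0, move_thresh=0.45, rot_thresh=0.02):
    """Return indices of scans whose change from the previous scan exceeds a threshold."""
    # Scan-to-scan differences in global mean signal, later converted to z-scores.
    diffs = [b - a for a, b in zip(global_signal, global_signal[1:])]
    mu, sd = mean(diffs), pstdev(diffs)
    flagged = set()
    for i, d in enumerate(diffs, start=1):
        z = (d - mu) / sd if sd > 0 else 0.0
        # Largest absolute change across the three translation and rotation axes.
        move = max(abs(b - a) for a, b in zip(translations_mm[i - 1], translations_mm[i]))
        rot = max(abs(b - a) for a, b in zip(rotations_rad[i - 1], rotations_rad[i]))
        if abs(z) > z_thresh or move > move_thresh or rot > rot_thresh:
            flagged.add(i)
    return sorted(flagged)

# Hypothetical run of 30 scans: a signal spike at scan 10 and a 0.6 mm shift at scan 20.
signal = [100.0] * 30
signal[10] = 130.0
translations = [(0.0, 0.0, 0.0)] * 20 + [(0.6, 0.0, 0.0)] * 10
rotations = [(0.0, 0.0, 0.0)] * 30

bad_scans = flag_outlier_scans(signal, translations, rotations)
```

Each flagged index would then receive its own nuisance regressor (a column that is 1 at that scan and 0 elsewhere), which removes the scan's influence on the parameter estimates.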

Next, single-participant (first-level) analyses were conducted. Statistical analyses were performed using the general linear model in SPM8. The event-related design was modeled with one regressor for each condition, time-locked to the presentation of the prime and target sentences and convolved with a canonical hemodynamic response function (with event duration assumed to be 0 for all conditions). Six motion parameters from the realignment as well as outlier time points (identified by ART) were included as nuisance regressors. The response of the participants was not modeled. We used a default high-pass filter of 128 s, and serial correlations were accounted for by the default autoregressive AR(1) model.
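To make the regressor construction concrete, the sketch below convolves zero-duration events with a double-gamma response function and samples the result once per TR (2 s, as reported above). The shape parameters (response peak shape 6, undershoot shape 16, ratio 1/6) are an assumption based on commonly cited defaults for a canonical double-gamma function; the function names and onsets are hypothetical, and the code does not reproduce SPM8's internal implementation.

```python
import math

def canonical_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma response at time t (s): a response gamma minus a scaled undershoot."""
    if t <= 0:
        return 0.0

    def gamma_pdf(x, shape):
        return x ** (shape - 1) * math.exp(-x) / math.gamma(shape)

    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, under_shape)

def build_regressor(onsets, n_scans, tr=2.0, dt=0.1, hrf_length=32.0):
    """Convolve zero-duration events with the response function, sampled once per TR."""
    n_fine = int(round(n_scans * tr / dt))
    sticks = [0.0] * n_fine
    for onset in onsets:
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += 1.0  # zero-duration event modeled as a unit impulse
    kernel = [canonical_hrf(k * dt) for k in range(int(round(hrf_length / dt)))]
    fine = [0.0] * n_fine
    for i, s in enumerate(sticks):
        if s:
            for k, h in enumerate(kernel):
                if i + k < n_fine:
                    fine[i + k] += s * h
    step = int(round(tr / dt))
    return fine[::step]  # one value per acquired volume

# Hypothetical onsets (s) for one condition in a 40-volume run.
regressor = build_regressor(onsets=[10.0, 40.0], n_scans=40)
```

With these parameters the modeled response peaks roughly 5 s after each event, so a sentence shown at 10 s contributes most to the volumes acquired around 14 to 16 s.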

For the group (second level) analyses, we conducted a whole-brain analysis with a voxel-based statistical threshold of P ≤ 0.001 (uncorrected) with a minimum cluster extent of 10 voxels. Statistical comparisons between conditions were conducted using t-tests on the parameter estimates associated with each trial type for each subject, P < 0.05 (FWE corrected). We defined adaptation as the contrast (i.e. decrease in activation) between prime and target sentences (i.e. prime > target). This adaptation contrast was further analyzed in conjunction analyses (combining all trait or object conditions) to identify the brain areas commonly involved in the processes of trait inference or evaluation, respectively. More critically, an interaction analysis of the adaptation effect with a trait > objects contrast was conducted to isolate the brain areas involved in a trait code. Likewise, an interaction analysis of the adaptation effect with an object > trait contrast was conducted to isolate the brain areas involved in a non-social valence code. To further verify that the brain areas identified in the previous analysis showed the hypothesized adaptation pattern, we computed the percentage signal change. This was done in two steps. First, we identified a region of interest (ROI) as a sphere of 8 mm around the peak coordinates from the whole-brain interaction as described above. Second, we extracted the percentage signal change in this ROI from each participant using the MarsBar toolbox (http://marsbar.sourceforge.net). We also calculated an adaptation index as the percentage signal change of prime minus target condition. These data were analyzed using ANOVA and t-tests with a threshold of P < 0.05. The same strategy was applied for a possible valence code as well.
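The adaptation index and its comparison between stimulus types can be sketched in a few lines. The percentage signal change values below are invented for illustration (five hypothetical participants, not data from this study), and the helper names are assumptions; the index itself follows the definition above (prime minus target).

```python
import math
from statistics import mean, stdev

def adaptation_index(prime_psc, target_psc):
    """Per-participant adaptation index: prime minus target percent signal change."""
    return [p - t for p, t in zip(prime_psc, target_psc)]

def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom for two equal-length lists."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1

# Hypothetical ROI values (% signal change), one entry per participant.
trait_prime = [0.30, 0.25, 0.40, 0.35, 0.28]
trait_target = [0.10, 0.05, 0.15, 0.20, 0.08]
object_prime = [0.12, 0.10, 0.15, 0.11, 0.09]
object_target = [0.10, 0.11, 0.13, 0.10, 0.10]

trait_index = adaptation_index(trait_prime, trait_target)
object_index = adaptation_index(object_prime, object_target)

# A trait code predicts a larger index for traits than for objects (positive t).
t_value, df = paired_t(trait_index, object_index)
```

A positive index indicates suppression from prime to target; the comparison of interest is whether that suppression is reliably larger for trait primes than for object primes.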

RESULTS

Behavioral results

A repeated-measures ANOVA was conducted on the reaction times (RTs) and accuracy rates from the five conditions (Table 1). The RT data revealed a significant effect, F(1, 16) = 36.46, P < 0.001. Participants responded almost equally fast in the four experimental conditions, and much faster than in the Singleton condition. The faster RTs in all four experimental conditions reflect a significant amount of priming across the experimental trials, and so provide behavioral confirmation of the priming manipulation that produces the fMRI adaptation effect. The accuracy rate data revealed significant differences among conditions, F(1, 16) = 6.60, P < 0.001. Participants responded with higher accuracy in the Trait-Similar and Object-Opposite conditions than in the Trait-Opposite, Object-Similar and Singleton conditions.

Table 1.

RT and accuracy rate from behavioral performance

| Condition | Trait-similar | Trait-opposite | Object-similar | Object-opposite | Singleton |
|---|---|---|---|---|---|
| RT (ms) | 1287a | 1252a | 1286a | 1249a | 1486b |
| Accuracy rate (%) | 97.8a | 95.8b | 96.2b | 98.5a | 95.1b |

Means in a row sharing the same subscript do not differ significantly from each other according to a Fisher LSD test, P < 0.05.

fMRI results

Our analytic strategy for detecting an adaptation effect during trait and valence processing was based on a similar strategy by Ma et al. (2013). First, to identify a common process of trait inference and object–trait evaluation across the Similar and Opposite conditions, we conducted a whole-brain, random-effects analysis contrasting prime > target trials in the Similar and Opposite conditions for traits and objects, followed by a conjunction analysis. Next, and more importantly, we conducted a number of prime > target interactions to isolate the specific areas involved in trait and valence codes, respectively (Table 2). Finally, to verify that the areas isolating the trait and valence code showed the hypothesized adaptation pattern, we defined ROIs centered on the peak values from the whole-brain interaction and extracted the percentage signal change. From this, we calculated an adaptation index, which was submitted to a significance test.
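As an illustration of the ROI definition, the sketch below enumerates voxel centres within a sphere around a peak coordinate. It assumes that the 8 mm refers to the sphere's radius and that voxels lie on the 2 mm isotropic grid of the normalized images; the function name is hypothetical, and the actual extraction in this study was done with the MarsBar toolbox. The example centre is the vmPFC peak reported in Fig. 1.

```python
def sphere_roi(center_mm, radius_mm=8.0, voxel_mm=2.0):
    """List MNI coordinates (mm) of voxel centres within radius_mm of center_mm."""
    cx, cy, cz = center_mm
    steps = int(radius_mm // voxel_mm)  # voxels to scan in each direction
    coords = []
    for i in range(-steps, steps + 1):
        for j in range(-steps, steps + 1):
            for k in range(-steps, steps + 1):
                dx, dy, dz = i * voxel_mm, j * voxel_mm, k * voxel_mm
                # Keep the voxel if its centre lies inside (or on) the sphere.
                if dx * dx + dy * dy + dz * dz <= radius_mm ** 2:
                    coords.append((cx + dx, cy + dy, cz + dz))
    return coords

# vmPFC peak from the interaction analysis (MNI coordinates, see Fig. 1).
vmpfc_roi = sphere_roi((-6, 48, -4))
```

Averaging the signal over all voxels in such a sphere gives one value per participant and condition, which is less sensitive to the exact peak location than a single-voxel readout.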

Table 2.

Adaptation (prime > target contrast) effects from the whole brain analysis

| Anatomical label | x | y | z | Voxels | Max t | x | y | z | Voxels | Max t | x | y | z | Voxels | Max t |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Similar traits | | | | | Opposite traits | | | | | Conjunction: similar and opposite traits | | | | |
| | [1 −1 0 0 0 0 0 0] | | | | | [0 0 1 −1 0 0 0 0] | | | | | | | | | |
| Prime > target contrasts for traits | | | | | | | | | | | | | | | |
| vmPFC | 6 | 30 | −12 | 1956 | 5.39** | −4 | 48 | −4 | 2149 | 6.44*** | 8 | 32 | −10 | 1591 | 5.08* |
| L insula | −38 | −12 | 4 | 354 | 5.03* | | | | | | | | | | |
| R inferior parietal | 60 | −26 | 34 | 668 | 5.13* | | | | | | | | | | |
| L inferior parietal | −58 | −32 | 28 | 395 | 5.14* | | | | | | | | | | |
| R cingulate | 16 | −32 | 46 | 943 | 4.91* | | | | | | | | | | |
| L fusiform | −28 | −36 | −18 | 576 | 6.14*** | −26 | −36 | −16 | 324 | 6.17*** | −28 | −36 | −18 | 312 | 6.05*** |
| R fusiform | 32 | −42 | −10 | 422 | 5.90** | 30 | −38 | −12 | 250 | 5.11* | 30 | −38 | −12 | 222 | 5.11* |
| R precuneus | 18 | −54 | 18 | 454 | 5.00* | 18 | −54 | 18 | 361 | 5.00* | | | | | |
| L precuneus | −14 | −56 | 20 | 670 | 6.20*** | −12 | −54 | 20 | 1791 | 6.99*** | −14 | −56 | 20 | 518 | 6.20*** |
| L mid-occipital | −42 | −76 | 32 | 467 | 5.45** | | | | | | | | | | |
| R mid-occipital | 44 | −76 | 30 | 659 | 5.86** | 44 | −72 | 32 | 297 | 5.08* | 44 | −72 | 32 | 297 | 5.08* |
| | Object–trait pairs with similar valence | | | | | Object–trait pairs with opposite valence | | | | | Conjunction | | | | |
| | [0 0 0 0 1 −1 0 0] | | | | | [0 0 0 0 0 0 1 −1] | | | | | | | | | |
| Prime > target contrasts for object–trait pairs | | | | | | | | | | | | | | | |
| R fusiform | 32 | −34 | −16 | 190 | 5.02* | | | | | | | | | | |
| L fusiform | −30 | −40 | −12 | 281 | 5.87** | −28 | −40 | −12 | 321 | 6.01*** | −30 | −40 | −12 | 242 | 5.72** |
| L mid-occipital | −40 | −78 | 34 | 241 | 5.63** | −38 | −80 | 42 | 222 | 5.54** | −40 | −78 | 38 | 179 | 5.35** |
| | For similar traits only | | | | | For opposite traits only | | | | | For similar > opposite traits only | | | | |
| | [1 −3 0 0 1 1 0 0] | | | | | [0 0 1 −3 0 0 1 1] | | | | | [1 −4 1 −2 1 1 1 1] | | | | |
| Trait adaptation: interaction of prime > target contrast with traits only (not objects) | | | | | | | | | | | | | | | |
| vmPFCᵃ | −6 | 46 | −2 | 2392 | 5.69** | −6 | 48 | −4 | 3219 | 6.64*** | −6 | 48 | −4 | 3235 | 6.65*** |
| L insula | −38 | −10 | −6 | 504 | 5.02* | | | | | | | | | | |
| R postcentral | 62 | −26 | 36 | 927 | 6.28*** | 62 | −26 | 36 | 887 | 6.12*** | | | | | |
| L inferior parietal | −62 | −30 | 28 | 474 | 5.98*** | −62 | −30 | 28 | 430 | 5.92** | | | | | |
| Cingulate | −8 | −32 | 46 | 2044 | 6.04*** | −12 | −32 | 44 | 464 | 5.16* | −8 | −32 | 46 | 1527 | 6.16*** |
| R fusiform | 30 | −34 | −20 | 575 | 7.44*** | 30 | −38 | −14 | 442 | 6.10*** | 32 | −34 | −18 | 582 | 7.19*** |
| L fusiform | −28 | −40 | −14 | 793 | 8.31*** | −28 | −34 | −18 | 523 | 6.92*** | −28 | −36 | −18 | 730 | 8.39*** |
| R pSTS | 56 | −56 | 4 | 196 | 4.91* | | | | | | | | | | |
| L pSTS | −54 | −58 | 2 | 220 | 5.08* | −54 | −58 | 2 | 173 | 4.86* | | | | | |
| R precuneusᵃ | 20 | −54 | 20 | 347 | 5.45** | 18 | −54 | 20 | 401 | 5.55** | | | | | |
| L precuneusᵃ | −14 | −56 | 20 | 736 | 7.30*** | −12 | −54 | 20 | 684 | 6.65*** | −12 | −54 | 20 | 818 | 7.78*** |
| R mid-occipitalᵃ | 44 | −76 | 30 | 647 | 5.83** | 44 | −76 | 30 | 561 | 5.52** | | | | | |
| L mid-occipital | −42 | −76 | 32 | 795 | 7.39*** | −42 | −76 | 32 | 658 | 6.81*** | | | | | |
| | For similar > opposite traits | | | | | For similar > opposite object–trait pairs | | | | | For similar > opposite valence | | | | |
| | [1 −3 1 1 0 0 0 0] | | | | | [0 0 0 0 1 −3 1 1] | | | | | [1 −3 1 1 1 −3 1 1] | | | | |
| Valence adaptation: interaction of prime > target contrast with similar > opposite valence contrast | | | | | | | | | | | | | | | |
| R fusiform | 32 | −34 | −18 | 152 | 4.86* | | | | | | | | | | |
| L fusiform | −28 | −40 | −14 | 291 | 5.29* | −30 | −40 | −12 | 237 | 5.11* | | | | | |
| L precuneus | −12 | −56 | 20 | 434 | 5.88** | −12 | −56 | 20 | 174 | 5.13* | | | | | |
| R mid-occipital | 44 | −72 | 30 | 468 | 4.95* | | | | | | | | | | |
| L mid-occipital | −42 | −78 | 30 | 332 | 5.42** | −42 | −78 | 32 | 242 | 5.40** | | | | | |

Coordinates refer to the MNI stereotaxic space. All clusters thresholded at P < 0.001 with at least 10 voxels. Only significant clusters are listed. The contrasts in brackets refer to the prime and target in the Trait-Similar, Trait-Opposite, Object-Similar and Object-Opposite conditions, respectively. ᵃ Predicted trait-adaptation pattern in a % signal change analysis was significant according to a t-test (P < 0.05); the predicted valence adaptation pattern was nowhere significant.

*P < 0.05, **P < 0.01, ***P < 0.001 (FWE corrected).

We begin with the trait conditions to isolate a trait code. The whole-brain analysis of the prime > target adaptation contrast revealed significant adaptation effects (P < 0.05, FWE corrected) in the mPFC for the Trait-Similar and Trait-Opposite conditions. This adaptation effect was also observed in other brain areas in the Trait-Similar condition, including the left insula, bilateral inferior parietal cortex, right cingulate, bilateral fusiform and bilateral mid-occipital cortex; some of these areas were also significant in the Trait-Opposite condition (Table 2). The conjunction analysis of the Trait-Similar and Trait-Opposite conditions confirmed a common adaptation effect in the vmPFC, bilateral precuneus, bilateral fusiform and right mid-occipital cortex, irrespective of valence.

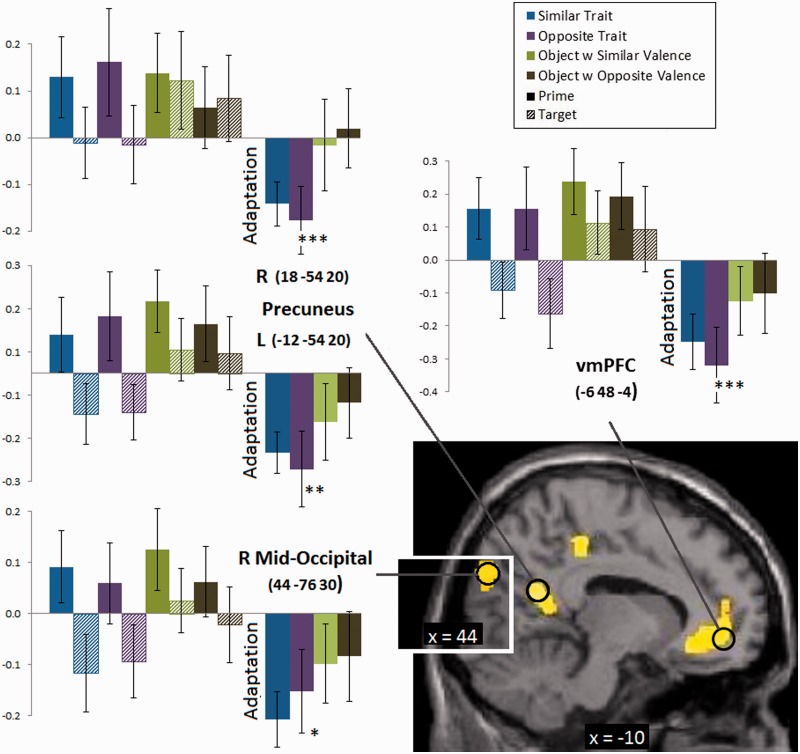

To identify the brain areas involved in the trait code, we conducted a number of whole-brain interaction analyses with the prime > target adaptation contrast. Specifically, we explored interactions where this prime > target adaptation contrast was present for traits, but absent for objects (see Table 2 for the contrast weights, where object conditions receive a uniform ‘1’ weight indicating that this adaptation contrast is absent). We conducted these adaptation contrasts for the Trait-Similar condition, for the Trait-Opposite condition, and finally for the theoretically most interesting Trait-Similar > Trait-Opposite contrast. This latter contrast gives somewhat more weight to adaptation for similar than for opposite traits. These three interactions showed a consistent pattern of active brain areas that included the vmPFC, left insula, right postcentral cortex, left inferior parietal cortex, cingulate, bilateral fusiform, bilateral pSTS, bilateral precuneus and bilateral mid-occipital cortex. However, it is possible that the results of these interactions are driven by some difference between trait and object conditions, but not necessarily by the predicted adaptation pattern. To verify which of these brain areas would reveal the predicted adaptation effect for traits but not for objects, we defined ROIs centered at the peak value of the clusters identified by the latter whole-brain interaction (i.e. prime > target contrast in interaction with Trait-Similar > Trait-Opposite; Table 2). We then calculated an adaptation index by subtracting the percentage signal change in the target sentence from that in the prime sentence (Figure 1). Statistically, a trait code would be revealed when adaptation is present for traits, and less so for objects, that is, when the adaptation index is significantly larger for traits than for objects. This difference was largest for the vmPFC (P < 0.001) as predicted, followed by the bilateral precuneus (P < 0.01) and right mid-occipital cortex (P < 0.05; all df = 17). No other brain area showed this predicted adaptation pattern.
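The logic of the interaction weights in Table 2 can be checked with a small sketch. The weight vectors are copied from Table 2 and follow its ordering (prime and target for Trait-Similar, Trait-Opposite, Object-Similar and Object-Opposite); the condition labels, the helper name and the parameter estimates are hypothetical and serve only to show how the weights behave.

```python
# Regressor order assumed from the Table 2 note: prime, target per condition.
CONDITIONS = [
    "TraitSimilar_prime", "TraitSimilar_target",
    "TraitOpposite_prime", "TraitOpposite_target",
    "ObjectSimilar_prime", "ObjectSimilar_target",
    "ObjectOpposite_prime", "ObjectOpposite_target",
]

# Weight vectors as listed in Table 2.
CONTRASTS = {
    "trait_similar_prime_gt_target": [1, -1, 0, 0, 0, 0, 0, 0],
    "trait_adaptation_similar_only": [1, -3, 0, 0, 1, 1, 0, 0],
    "trait_adaptation_opposite_only": [0, 0, 1, -3, 0, 0, 1, 1],
    "trait_adaptation_similar_gt_opposite": [1, -4, 1, -2, 1, 1, 1, 1],
}

def contrast_value(weights, betas):
    """Weighted sum of parameter estimates for one contrast."""
    return sum(w * b for w, b in zip(weights, betas))

# Hypothetical estimates: no adaptation anywhere vs. suppression on trait targets only.
flat_betas = [1.0] * 8
trait_adapted_betas = [1.0, 0.2, 1.0, 0.2, 1.0, 1.0, 1.0, 1.0]

results = {
    name: (contrast_value(w, flat_betas), contrast_value(w, trait_adapted_betas))
    for name, w in CONTRASTS.items()
}
```

Because every vector sums to zero, a region that responds equally to all sentences yields a contrast value of zero, whereas a region whose response drops only for trait targets yields a positive value. This is also why a positive whole-brain result alone does not guarantee the predicted pattern, and why the ROI-based adaptation index is used as a follow-up check.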

Fig. 1.

Percent signal change in the ventral medial prefrontal cortex (vmPFC), bilateral precuneus and right mid-occipital cortex for the prime and target sentences in all conditions, and for the adaptation index (prime minus target) based on four ROIs centered around the peak values in the interaction analysis (with MNI coordinates, vmPFC: −6 48 −4; left precuneus: −12 −54 20; right precuneus: 18 −54 20; right mid-occipital: 44 −76 30).

To ensure that these areas (including the vmPFC) were involved only in adaptation (i.e. a decrease and not an increase of activation), we also conducted a whole-brain analysis of the reverse target > prime contrast in the Trait-Similar and Trait-Opposite conditions (Table 3). The results revealed a series of brain areas that were more strongly recruited during presentation of the target sentence, including the left mid-orbital frontal cortex, right insula, supplementary motor cortex, bilateral inferior parietal cortex and lingual gyrus. Importantly, there was no activation of the mPFC, precuneus or mid-occipital cortex.

Table 3.

The target > prime contrast from the whole brain analysis

| Anatomical label | x | y | z | Voxels | Max t | x | y | z | Voxels | Max t | x | y | z | Voxels | Max t |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Similar traits | | | | | Opposite traits | | | | | Conjunction: similar and opposite traits | | | | |
| | [−1 1 0 0 0 0 0 0] | | | | | [0 0 −1 1 0 0 0 0] | | | | | | | | | |
| Target > prime contrasts for traits | | | | | | | | | | | | | | | |
| L mid-orbital frontal | −42 | 48 | −4 | 872 | 5.96** | −42 | 48 | −2 | 795 | 5.95** | | | | | |
| R insula | 34 | 22 | −6 | 3949 | 8.05*** | 32 | 24 | −4 | 3857 | 8.03*** | | | | | |
| Supplementary motor | −4 | 18 | 48 | 7009 | 8.21*** | −4 | 18 | 48 | 6537 | 8.21*** | | | | | |
| R parahippocampal | 12 | 2 | −8 | 340 | 5.20* | | | | | | | | | | |
| L inferior parietal | −32 | −50 | 48 | 2407 | 7.90*** | −32 | −52 | 50 | 30014 | 9.98*** | −32 | −50 | 48 | 2293 | 7.90*** |
| R inferior parietal | 36 | −50 | 44 | 1696 | 6.69*** | 36 | −50 | 44 | 1579 | 6.69*** | | | | | |
| R fusiform | 30 | −60 | −32 | 611 | 6.01*** | | | | | | | | | | |
| L fusiform | −38 | −62 | −30 | 617 | 5.61** | | | | | | | | | | |
| Lingual gyrus | −6 | −74 | −24 | 937 | 5.83** | −8 | −76 | −26 | 1206 | 6.39*** | −6 | −74 | −24 | 850 | 5.83** |
| | Object–trait pairs with similar valence | | | | | Object–trait pairs with opposite valence | | | | | Conjunction | | | | |
| | [0 0 0 0 −1 1 0 0] | | | | | [0 0 0 0 0 0 −1 1] | | | | | | | | | |
| Target > prime contrasts for object–trait pairs | | | | | | | | | | | | | | | |
| R insula | 32 | 26 | 4 | 1802 | 7.51*** | 30 | 26 | 2 | 1664 | 6.99*** | 30 | 26 | 2 | 1212 | 6.99*** |
| Supplementary motor | −4 | 6 | 58 | 2081 | 11.08*** | −4 | 6 | 58 | 2081 | 11.08*** | | | | | |
| L precentral | −42 | 0 | 56 | 5252 | 8.69*** | −44 | −2 | 56 | 4327 | 8.49*** | | | | | |
| R parahippocampal | 20 | −24 | −8 | 64 | 5.16* | 22 | −22 | −8 | 129 | 6.08*** | 20 | −24 | −8 | 64 | 5.16* |
| L hippocampus | −22 | −24 | −10 | 81 | 5.68** | −22 | −24 | −10 | 191 | 6.95*** | −22 | −24 | −10 | 81 | 5.68** |
| L mid-temporal | −60 | −32 | 4 | 1935 | 9.04*** | −60 | −32 | 4 | 2167 | 9.16*** | −60 | −32 | 4 | 1811 | 9.04*** |
| R superior temporal | 44 | −34 | 4 | 258 | 5.19* | | | | | | | | | | |
| L fusiform | 28 | −58 | −50 | 41 | 4.91* | | | | | | | | | | |
| L calcarine | −10 | −86 | 2 | 33783 | 11.59*** | −10 | −86 | 2 | 34418 | 12.55*** | −10 | −86 | 2 | 26629 | 11.59*** |

Coordinates refer to the MNI stereotaxic space. All clusters thresholded at P < 0.001 with at least 10 voxels. Only significant clusters are listed. The contrasts in brackets refer to the prime and target in the Trait-Similar, Trait-Opposite, Object-Similar and Object-Opposite conditions, respectively. *P < 0.05, **P < 0.01, ***P < 0.001 (FWE corrected).

We applied a similar set of analyses using the same logic to detect a potential adaptation effect for valence. The whole-brain analysis of the prime > target adaptation contrast for object conditions (i.e. object–trait pairs with similar or opposite valence) revealed significant adaptation effects (P < 0.05, FWE corrected) in the fusiform and left mid-occipital areas for the Object-Similar and Object-Opposite conditions (Table 2). The left fusiform and left mid-occipital cortex were also revealed in a conjunction analysis. We then tested for a stronger (prime > target) adaptation effect for similarly as compared to oppositely valenced stimuli. Specifically, we conducted a whole-brain interaction analysis of the prime > target adaptation contrast with the Similar > Opposite valence contrast. This analysis revealed a valence adaptation effect for traits, but not for object–trait pairs (Table 2), which runs against the idea of a generalized valence effect irrespective of stimulus type. Moreover, the adaptation index for the brain areas (also computed from ROIs centered around the whole-brain peak values in the same manner as before) failed to show significant differences between Similar and Opposite valences for object–trait pairs. Because these differences are essential for a valence interpretation, this null result argues strongly against that hypothesis. The whole-brain analysis of the reverse target > prime contrast in the Object-Similar and Object-Opposite conditions revealed several brain areas (Table 3), but no mPFC activation.

DISCUSSION

Numerous neuroimaging studies have revealed that the mPFC is involved in inferring traits of others or the self (Ma et al., 2012b; for a review, see Van Overwalle, 2009). Traits are not only processed but also represented in the vmPFC irrespective of specific behaviors, as revealed in a novel adaptation study by Ma et al. (2013). This is an important finding, because it sheds light on how exactly traits are activated, interpreted and processed in the brain, and how neural dysfunctions such as lesions might disrupt this process. However, because human social behaviors and traits are highly intertwined with affective meaning and feelings, and because the vmPFC is proposed to be critical in the generation of affective meaning (e.g. Roy et al., 2012; Sebastian et al., 2012) and in regulating emotional responses (Quirk and Beer, 2006; Olsson and Ochsner, 2008; Roy et al., 2012), it is necessary to disentangle the role of valence in trait representation. This was the aim of this study.

Trait code only in the vmPFC

The results confirmed the finding of the previous study (Ma et al., 2013) that a trait code is represented in a distinct location of the vmPFC, and showed that only the trait code is represented there: there is no coding of general valence. This conclusion was made possible by including two novel object conditions in this study, with a valence similar or opposite to that of the traits. We found that adaptation of general valence from object prime sentences to trait target sentences was absent. In contrast, adaptation from prime to target sentences for traits was robust, and was revealed in the same location as in the earlier trait-adaptation study (Ma et al., 2013). This was further confirmed by the adaptation index, which showed substantial adaptation for similar and opposite traits, but not for object–trait pairs. Interestingly, the finding that similar and opposite traits show approximately the same amount of adaptation was also reported in the earlier Ma et al. (2013) study, and suggests that a trait and its opposite are represented by a highly similar and overlapping neural population in the mPFC. Presumably, it is mainly the trait content itself that is represented in the trait code, and less so the extent to which the trait is expressed. For instance, stating that a person is not romantic often makes one think of romantic behaviors and then negate them. This is in line with the schema-plus-tag model, in which a negated trait is represented as the original (true) trait with a negation tag (Mayo et al., 2004).

Our finding that the vmPFC houses the neural substrate of a trait code is consistent with the claim that one of the primary functions of the PFC is the representation of action and guidance of behavior (Barbey et al., 2009; Forbes and Grafman, 2010). According to these authors, a series of events forms a script that represents a set of goal-oriented actions, which is sequentially ordered and guides perceptions and behavior. These scripts (also referred to as structured event complexes) are represented as memory codes in the brain and, upon activation, can guide the interpretation of observed behavior and the execution of behaviors (Grafman, 2002; Wood and Grafman, 2003; Barbey et al., 2009). This is in line with the social psychology literature that conceives traits as abstracted instances of goal-directed behaviors (see also Read, 1987; Read et al., 1990; Reeder et al., 2004; Reeder, 2009). Recent behavioral and neural evidence confirms that goals are primary inferences (e.g. an aggressive act), whereas traits are secondary inferences (e.g. an aggressive agent) which follow from these goal interpretations (Van Duynslaeger et al., 2007; Van der Cruyssen et al., 2009; Ma et al., 2012b; Malle and Holbrook, 2012; Van Overwalle et al., 2012). Neuroimaging studies confirm that trait inferences involve a high-level form of abstraction of agent characteristics on the basis of lower-level actions (Spunt et al., 2010; Baetens et al., 2013; Gilead and Liberman, 2013). Therefore, actions, intentions and traits in a social context can be ordered hierarchically according to their level of abstractness, with traits at the highest level of abstraction in this context.

The crucial role of the mPFC in social reasoning is attested by lesion studies showing that damage to the mPFC impairs social mentalizing (i.e. theory of mind, false beliefs, moral judgments; see recent findings by Geraci et al., 2010; Martín-Rodríguez and León-Carrión, 2010; Young et al., 2010; Roca et al., 2011; for an exception, Bird et al., 2004). More to the point, it has been found that although patients with vmPFC lesions can explicitly articulate traits based on learned social rules, they appear impaired at accessing trait information automatically (Milne and Grafman, 2001). Together with our results, this suggests that they lack the ability to make sensitive and appropriate use of trait information in everyday life. This is certainly an area for further research.

We found no adaptation for our valence manipulation across objects and traits, although a great number of neuroimaging studies revealed that the vmPFC is recruited during emotion regulation (Quirk and Beer, 2006; Olsson and Ochsner, 2008; Etkin et al., 2011; Roy et al., 2012), affective mentalizing (Sebastian et al., 2012) and reward-related processing (Van Den Bos et al., 2007). This suggests that a generalized valence coding across objects and humans is not represented in the brain, at least not as investigated in this study. Recall that participants read behavioral sentences that implied a trait, after they had read sentences that described a quality of an object with the same or the opposite valence. The results did not reveal any significant adaptation of valence from object to trait (as would have been indicated by a significant adaptation index), neither in the mPFC nor in any other brain area. This confirms the finding of the previous trait-adaptation study (Ma et al., 2013) that a trait code is represented in the mPFC, and extends that work to show that this code is independent of valence.

While this study established that the mPFC houses a trait code, other research has investigated how this kind of abstract information is acquired and encoded in the cortex in the first place. Functional neuroimaging and lesion studies in humans, as well as computational models, provide converging evidence for the hypothesis that all memories initially implicate the hippocampus and are consolidated and transformed into cortical representations after some delay (McClelland et al., 1995; Winocur et al., 2007; Wang and Morris, 2010; McKenzie and Eichenbaum, 2011). That is, as time passes, memories in the hippocampus are transformed from representations that code the context-dependent features of the event to neocortical representations of its abstract features that are independent of context. We presume that a similar learning process of consolidation and transformation of episodic behavioral information into abstract trait information takes place during childhood. Our study and the previous study by Ma et al. (2013) showed that this consolidated information can be re-activated, which allows access to this stored information for further processing and interpretation of the behavior and traits of others.

A role for the precuneus?

This study also revealed that the bilateral precuneus was involved in trait adaptation. At first sight, this might seem to confirm findings from the previous fMRI adaptation study (Ma et al., 2013). That study found that the precuneus was involved in adaptation of trait-relevant sentences (Trait-Similar and Trait-Opposite conditions) as well as trait-irrelevant sentences. However, the precuneus showed no adaptation when the trait-irrelevant condition served as control (i.e. trait-relevant > trait-irrelevant contrast). Ma et al. (2013) explained the activation of the precuneus by assuming that some minimal amount of a trait inference process takes place even for irrelevant control sentences, due to the explicit instructions to infer a trait. Thus, participants might have attempted to infer a trait even when the behavior was non-diagnostic. This study adds another explanation. It starts from the observation that the trait-irrelevant sentences which served as control in the previous study (Ma et al., 2013) were similar to the trait-relevant sentences in all respects, except for the fact that a trait was not implied. In this study, however, the object sentences which served as control differed in more respects. Object sentences did not involve two interacting agents but only an object, and the verb did not represent an intentional action but rather an unintentional state or observation (e.g. ‘is’, ‘smells’), hence rendering the overall scene completely different. Given that the precuneus is strongly involved in scene construction (Hassabis and Maguire, 2007; Speer et al., 2007), we presume that its activation in this study is due to these larger differences between the trait sentences and the object sentences. That is, the larger difference between the trait and the object conditions may have increased the perceived similarity within the trait conditions, increasing adaptation not only for traits but also for scenes, and so may have activated the precuneus.

We found additional activation in the occipital cortex that survived the significance test of the adaptation index, and which, using the same logic, may be related to the greater complexity of, and consequently increased attention to, the trait sentences in comparison with the object control sentences.

Nevertheless, it is possible that the precuneus serves a role in trait representation, albeit at a more indirect level. In support of this idea, it has been suggested that the precuneus may be associated with mentalizing to some extent (see meta-analyses by Carrington and Bailey, 2009; Mar, 2011; Schilbach et al., 2012), with the situational structure and context or construction of a scene, which includes the integration of relevant behavioral information into a coherent spatial context (Hassabis and Maguire, 2007; Speer et al., 2007), and with the retrieval of episodic context information including autobiographic memory (Spreng et al., 2009). This may indicate that the precuneus reflects episodic information, such as a scene or situational background with prospective social intention, linked to the abstract trait concept represented in the vmPFC. This is also consistent with the simulation account proposed by Mitchell (2009; see also Tamir and Mitchell, 2010), which states that perceivers attribute mental states to another person by using their own mind as a model of the other mind. Mitchell (2009) suggested that individuals can use their previous knowledge and experience (by retrieving episodic or autobiographic memory, which recruits the precuneus, e.g. Spreng et al., 2009) or their own mental traits (self-referential processing, which recruits the mPFC) as proxies for understanding other minds (Mitchell et al., 2005; Jenkins et al., 2008). Nevertheless, several studies have so far failed to find functional connectivity between the vmPFC and the precuneus during trait processing of others (Lombardo et al., 2010) and self-processing (Schmitz and Johnson, 2006; Sajonz et al., 2010; Van Buuren et al., 2010; Whitfield-Gabrieli et al., 2011). Future studies should further investigate this functional connectivity during trait judgments of others.

Limitations

This study attempted to dissociate trait and valence representations, building on the previous fMRI study on trait adaptation (Ma et al., 2013). To address this issue, we replaced the trait-irrelevant condition of the previous study with two object conditions. To control the total scanning time and to keep the participants attentive, we decreased the number of trials to 16 for each condition. This lowered the statistical power and reliability of this study (Button et al., 2013). Moreover, the creation of two new object conditions may have introduced confounding factors, such as imagining the objects described in the sentences, which activated the fusiform and other brain areas (Simons et al., 2003; Grill-Spector et al., 2004). Nevertheless, even under these less than ideal circumstances, the peak coordinates in the vmPFC (−6 48 −4) of this study were very close to the previous fMRI findings on trait adaptation (−6 42 −14; Ma et al., 2013). This suggests that the current findings are quite robust, and confirms that a trait code is represented in the vmPFC.
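One way to make the proximity of the two vmPFC peaks concrete is the Euclidean distance between their MNI coordinates. The snippet below is only an illustrative sketch: the function name is hypothetical, and whether a given distance counts as "close" depends on factors such as smoothing and ROI size that are not restated here.

```python
import math


def peak_distance_mm(peak_a, peak_b):
    """Euclidean distance between two MNI coordinates (x, y, z), in mm."""
    return math.dist(peak_a, peak_b)


# Peak coordinates as reported in the text above.
current_peak = (-6, 48, -4)    # this study
previous_peak = (-6, 42, -14)  # Ma et al. (2013)

# sqrt(0^2 + 6^2 + 10^2) = sqrt(136), i.e. roughly 11.7 mm.
print(round(peak_distance_mm(current_peak, previous_peak), 1))
```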

CONCLUSION

We demonstrate here that the vmPFC houses a trait code, independently of the valence of this trait. This study was set up to detect a valence code, but we found no adaptation effect for it, only for traits. Although this finding awaits further confirmation, it is important because it indicates that the full meaning and valence of a trait are achieved after the appropriate trait code is activated upon receiving a stimulus input, which then allows further processing in the brain. Whether valence is first extracted upon activating the trait code, followed by a fuller trait meaning, or vice versa, is not clear from the present findings. In general, the results are in line with the social brain hypothesis (Dunbar, 1992, 2009), which states that during human evolution, the frontal brain expanded greatly in humans compared with non-human primates because of evolutionary pressures to live and collaborate in increasingly larger groups, and to store interpersonal trait knowledge in the brain. The present results also suggest that neural dysfunction in this particular area of the vmPFC may severely disrupt trait identification and processing, as patients lose the capacity to form a deep trait meaning, rendering any interpretation of human action superficial and incapable of integration with concrete behavior (Milne and Grafman, 2001; Young et al., 2010). We suggest that they lack an essential key to do so fluently and adequately: the trait code.

Acknowledgments

This research was supported by an OZR Grant of the Vrije Universiteit Brussel to F.V.O. and conducted at GIfMI (Ghent Institute for Functional and Metabolic Imaging).

REFERENCES

- Baetens K, Ma N, Steen J, Van Overwalle F. Involvement of the mentalizing network in social and non-social high construal. Social Cognitive and Affective Neuroscience. 2014;9(6):817–24. doi: 10.1093/scan/nst048.

- Barbey AK, Krueger F, Grafman J. Structured event complexes in the medial prefrontal cortex support counterfactual representations for future planning. Philosophical Transactions of the Royal Society B: Biological Sciences. 2009;364:1291–300. doi: 10.1098/rstb.2008.0315.

- Baron SG, Gobbini MI, Engell AD, Todorov A. Amygdala and dorsomedial prefrontal cortex responses to appearance-based and behavior-based person impressions. Social Cognitive and Affective Neuroscience. 2011;6:572–81. doi: 10.1093/scan/nsq086.

- Bedny M, McGill M, Thompson-Schill SL. Semantic adaptation and competition during word comprehension. Cerebral Cortex. 2008;18:2574–85. doi: 10.1093/cercor/bhn018.

- Bird CM, Castelli F, Malik O, Frith U, Husain M. The impact of extensive medial frontal lobe damage on ‘Theory of Mind’ and cognition. Brain. 2004;127:914–28. doi: 10.1093/brain/awh108.

- Button KS, Ioannidis JPA, Mokrysz C, et al. Power failure: why small sample size undermines the reliability of neuroscience. Nature Reviews Neuroscience. 2013;14:365–76. doi: 10.1038/nrn3475.

- Carrington SJ, Bailey AJ. Are there theory of mind regions in the brain? A review of the neuroimaging literature. Human Brain Mapping. 2009;30(8):2313–35. doi: 10.1002/hbm.20671.

- Devauchelle A, Oppenheim C, Rizzi L, Dehaene S, Pallier C. Sentence syntax and content in the human temporal lobe: an fMRI adaptation study in auditory and visual modalities. Journal of Cognitive Neuroscience. 2009;21:1000–12. doi: 10.1162/jocn.2009.21070.

- Diana RA, Yonelinas AP, Ranganath C. Adaptation to cognitive context and item information in the medial temporal lobes. Neuropsychologia. 2012;50:3062–9. doi: 10.1016/j.neuropsychologia.2012.07.035.

- Dunbar RIM. Neocortex size as a constraint on group size in primates. Journal of Human Evolution. 1992;20:469–93.

- Dunbar RIM. The social brain hypothesis and its implications for social evolution. Annals of Human Biology. 2009;36:562–72. doi: 10.1080/03014460902960289.

- Engel SA, Furmanski CA. Selective adaptation to color contrast in human primary visual cortex. Journal of Neuroscience. 2001;21:3949–54. doi: 10.1523/JNEUROSCI.21-11-03949.2001.

- Etkin A, Egner T, Kalisch R. Emotional processing in anterior cingulate and medial prefrontal cortex. Trends in Cognitive Sciences. 2011;15:85–93. doi: 10.1016/j.tics.2010.11.004.

- Forbes CE, Grafman J. The role of the human Prefrontal Cortex in social cognition and moral judgment. Annual Review of Neuroscience. 2010;33:299–324. doi: 10.1146/annurev-neuro-060909-153230.

- Fossati P. Neural correlates of emotion processing: from emotional to social brain. European Neuropsychopharmacology. 2012;22:487–91. doi: 10.1016/j.euroneuro.2012.07.008.

- Geraci A, Surian L, Ferraro M, Cantagallo A. Theory of Mind in patients with ventromedial or dorsolateral prefrontal lesions following traumatic brain injury. Brain Injury. 2010;24:978–87. doi: 10.3109/02699052.2010.487477.

- Gilead M, Liberman N, Maril A. From mind to matter: neural correlates of abstract and concrete mindsets. Social Cognitive and Affective Neuroscience. 2014;9(5):638–45. doi: 10.1093/scan/nst031.

- Grafman J. The structured event complex and the human prefrontal cortex. In: Stuss DTH, Knight RT, editors. Principles of Frontal Lobe Function. Oxford/New York: Oxford University Press; 2002. p. 616.

- Grill-Spector K, Henson R, Martin A. Repetition and the brain: neural models of stimulus specific effects. Trends in Cognitive Sciences. 2006;10:14–23. doi: 10.1016/j.tics.2005.11.006.

- Grill-Spector K, Knouf T, Kanwisher N. The fusiform face area subserves face perception, not generic within-category identification. Nature Neuroscience. 2004;7:555–62. doi: 10.1038/nn1224.

- Grill-Spector K, Kushnir T, Edelman S, Avidan G, Itzchak Y, Malach R. Differential processing of objects under various viewing conditions in the human lateral occipital complex. Neuron. 1999;24:187–203. doi: 10.1016/s0896-6273(00)80832-6.

- Grill-Spector K, Malach R. fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychologica. 2001;107:293–321. doi: 10.1016/s0001-6918(01)00019-1.

- Harris LT, Todorov A, Fiske ST. Attributions on the brain: neuro-imaging dispositional inferences, beyond theory of mind. NeuroImage. 2005;28:763–9. doi: 10.1016/j.neuroimage.2005.05.021.

- Hassabis D, Maguire EA. Deconstructing episodic memory with construction. Trends in Cognitive Sciences. 2007;11:299–306. doi: 10.1016/j.tics.2007.05.001.

- Jenkins AC, Macrae CN, Mitchell JP. Repetition suppression of ventromedial prefrontal activity during judgments of self and others. Proceedings of the National Academy of Sciences of the United States of America. 2008;105:4507–12. doi: 10.1073/pnas.0708785105.

- Josse G, Joseph S, Bertasi E, Giraud A-L. The brain’s dorsal route for speech represents word meaning: evidence from gesture. PLoS One. 2012;7(9):e46108. doi: 10.1371/journal.pone.0046108.

- Kourtzi Z, Kanwisher N. Cortical regions involved in perceiving object shape. Journal of Neuroscience. 2000;20:3310–8. doi: 10.1523/JNEUROSCI.20-09-03310.2000.

- Krekelberg B, Boynton GM, van Wezel RJA. Adaptation: from single cells to BOLD signals. Trends in Neurosciences. 2006;29:250–6. doi: 10.1016/j.tins.2006.02.008.

- Krueger F, Barbey AK, Grafman J. The medial prefrontal cortex mediates social event knowledge. Trends in Cognitive Sciences. 2009;13:103–9. doi: 10.1016/j.tics.2008.12.005.

- Lombardo MV, Chakrabarti B, Bullmore ET, et al. Shared neural circuits for mentalizing about the self and others. Journal of Cognitive Neuroscience. 2010;22:1623–35. doi: 10.1162/jocn.2009.21287.

- Ma N, Baetens K, Vandekerckhove M, Kestemont J, Fias W, Van Overwalle F. Traits are represented in the medial Prefrontal Cortex: an fMRI adaptation study. Social Cognitive and Affective Neuroscience. 2014;9(8):1185–92. doi: 10.1093/scan/nst098.

- Ma N, Vandekerckhove M, Baetens K, Van Overwalle F, Seurinck R, Fias W. Inconsistencies in spontaneous and intentional trait inferences. Social Cognitive and Affective Neuroscience. 2012a;7:937–50. doi: 10.1093/scan/nsr064.

- Ma N, Vandekerckhove M, Van Hoeck N, Van Overwalle F. Distinct recruitment of temporo-parietal junction and medial prefrontal cortex in behavior understanding and trait identification. Social Neuroscience. 2012b;7:591–605. doi: 10.1080/17470919.2012.686925.

- Ma N, Vandekerckhove M, Van Overwalle F, Seurinck R, Fias W. Spontaneous and intentional trait inferences recruit a common mentalizing network to a different degree: spontaneous inferences activate only its core areas. Social Neuroscience. 2011;6:123–38. doi: 10.1080/17470919.2010.485884.

- Malle BF, Holbrook J. Is there a hierarchy of social inferences? The likelihood and speed of inferring intentionality, mind, and personality. Journal of Personality and Social Psychology. 2012;102:661–84. doi: 10.1037/a0026790.

- Mar RA. The neural bases of social cognition and story comprehension. Annual Review of Psychology. 2011;62:103–34. doi: 10.1146/annurev-psych-120709-145406.

- Martín-Rodríguez JF, León-Carrión J. Theory of mind deficits in patients with acquired brain injury: a quantitative review. Neuropsychologia. 2010;48:1181–91. doi: 10.1016/j.neuropsychologia.2010.02.009.

- Mayo R, Schul Y, Burnstein E. “I am not guilty” vs “I am innocent”: successful negation may depend on the schema used for its encoding. Journal of Experimental Social Psychology. 2004;40:433–49.

- McClelland JL, McNaughton B, O’Reilly R. Why there are complementary learning systems in the hippocampus and neocortex: insights from the successes and the failures of connectionist models of learning and memory. Psychological Review. 1995;102:419–57. doi: 10.1037/0033-295X.102.3.419.

- McKenzie S, Eichenbaum H. Consolidation and reconsolidation: two lives of memories? Neuron. 2011;71:224–33. doi: 10.1016/j.neuron.2011.06.037.

- Milne E, Grafman J. Ventromedial prefrontal cortex lesions in humans eliminate implicit gender stereotyping. Journal of Neuroscience. 2001;21(12):RC150. doi: 10.1523/JNEUROSCI.21-12-j0001.2001.

- Mitchell JP. Inferences about other minds. Philosophical Transactions of the Royal Society B: Biological Sciences. 2009;364:1309–16. doi: 10.1098/rstb.2008.0318.

- Mitchell JP, Banaji MR, Macrae CN. The link between social cognition and self-referential thought in the medial prefrontal cortex. Journal of Cognitive Neuroscience. 2005;17:1306–15. doi: 10.1162/0898929055002418.

- Mitchell JP, Cloutier J, Banaji MR, Macrae CN. Medial prefrontal dissociations during processing of trait diagnostic and nondiagnostic person information. Social Cognitive and Affective Neuroscience. 2006;1:49–55. doi: 10.1093/scan/nsl007.

- Mitchell JP, Macrae CN, Banaji MR. Encoding-specific effects of social cognition on the neural correlates of subsequent memory. Journal of Neuroscience. 2004;24:4912–7. doi: 10.1523/JNEUROSCI.0481-04.2004.

- Mitchell J, Macrae C, Banaji M. Dissociable medial prefrontal contributions to judgments of similar and dissimilar others. Neuron. 2006;50:655–63. doi: 10.1016/j.neuron.2006.03.040.

- Ochsner KN. The social-emotional processing stream: five core constructs and their translational potential for schizophrenia and beyond. Biological Psychiatry. 2008;64:48–61. doi: 10.1016/j.biopsych.2008.04.024.

- Olsson A, Ochsner KN. The role of social cognition in emotion. Trends in Cognitive Sciences. 2008;12:65–71. doi: 10.1016/j.tics.2007.11.010.

- Quirk GJ, Beer JS. Prefrontal involvement in the regulation of emotion: convergence of rat and human studies. Current Opinion in Neurobiology. 2006;16:723–7. doi: 10.1016/j.conb.2006.07.004.

- Ramsey R, Hamilton FC. Triangles have goals too: understanding action representation in left aIPS. Neuropsychologia. 2010a;48:2773–6. doi: 10.1016/j.neuropsychologia.2010.04.028.

- Ramsey R, Hamilton FC. Understanding actors and object-goals in the human brain. NeuroImage. 2010b;50:1142–7. doi: 10.1016/j.neuroimage.2009.12.124.

- Read SJ. Constructing causal scenarios: a knowledge structure approach to causal reasoning. Journal of Personality and Social Psychology. 1987;52:288–302. doi: 10.1037//0022-3514.52.2.288.

- Read SJ, Jones DK, Miller LC. Traits as goal-based categories: the importance of goals in the coherence of dispositional categories. Journal of Personality and Social Psychology. 1990;58:1048–61.

- Reeder GD. Mindreading: judgments about intentionality and motives in dispositional inference. Psychological Inquiry. 2009;20:1–18.

- Reeder GD, Vonk R, Ronk MJ, Ham J, Lawrence M. Dispositional attribution: multiple inferences about motive-related traits. Journal of Personality and Social Psychology. 2004;86:530–44. doi: 10.1037/0022-3514.86.4.530.

- Roca M, Torralva T, Gleichgerrcht E, et al. The role of area 10 (BA10) in human multitasking and in social cognition: a lesion study. Neuropsychologia. 2011;49:3525–31. doi: 10.1016/j.neuropsychologia.2011.09.003.

- Roggeman C, Santens S, Fias W, Verguts T. Stages of non-symbolic number processing in occipito-parietal cortex disentangled by fMRI-adaptation. Journal of Neuroscience. 2011;31:7168–73. doi: 10.1523/JNEUROSCI.4503-10.2011.

- Roy M, Shohamy D, Wager TD. Ventromedial prefrontal-subcortical systems and the generation of affective meaning. Trends in Cognitive Sciences. 2012;16:147–56. doi: 10.1016/j.tics.2012.01.005.

- Sajonz B, Kahnt T, Margulies DS, et al. Delineating self-referential processing from episodic memory retrieval: common and dissociable networks. NeuroImage. 2010;50(4):1606–17. doi: 10.1016/j.neuroimage.2010.01.087.

- Schilbach L, Bzdok D, Timmermans B, et al. Introspective minds: using ALE meta-analyses to study commonalities in the neural correlates of emotional processing, social & unconstrained cognition. PLoS One. 2012;7(2):e30920. doi: 10.1371/journal.pone.0030920.

- Schiller D, Freeman JB, Mitchell JP, Uleman JS, Phelps EA. A neural mechanism of first impressions. Nature Neuroscience. 2009;12:508–14. doi: 10.1038/nn.2278.

- Schmitz TW, Johnson SC. Self-appraisal decisions evoke dissociated dorsal-ventral aMPFC networks. NeuroImage. 2006;30:1050–8. doi: 10.1016/j.neuroimage.2005.10.030.

- Sebastian CL, Fontaine MG, Bird G, et al. Neural processing associated with cognitive and affective theory of mind in adolescents and adults. Social Cognitive and Affective Neuroscience. 2012;7:53–63. doi: 10.1093/scan/nsr023.

- Simons JS, Koutstaal W, Prince S, et al. Neural mechanisms of visual object priming: evidence for perceptual and semantic distinctions in fusiform cortex. NeuroImage. 2003;19:613–26. doi: 10.1016/s1053-8119(03)00096-x.

- Speer N, Zacks J, Reynolds J. Human brain activity time-locked to narrative event boundaries. Psychological Science. 2007;18:449–55. doi: 10.1111/j.1467-9280.2007.01920.x.

- Spreng RN, Mar RA, Kim AS. The common neural basis of autobiographical memory, prospection, navigation, theory of mind, and the default mode: a quantitative meta-analysis. Journal of Cognitive Neuroscience. 2009;21:489–510. doi: 10.1162/jocn.2008.21029.

- Spunt RP, Falk EB, Lieberman MD. Dissociable neural systems support retrieval of how and why action knowledge. Psychological Science. 2010;21(11):1593–8. doi: 10.1177/0956797610386618.

- Tamir DI, Mitchell JP. Neural correlates of anchoring-and-adjustment during mentalizing. Proceedings of the National Academy of Sciences of the United States of America. 2010;107:10827–32. doi: 10.1073/pnas.1003242107.

- Thompson-Schill SL, D’Esposito M, Kan IP. Effects of repetition and competition on activity in left prefrontal cortex during word generation. Neuron. 1999;23:513–22. doi: 10.1016/s0896-6273(00)80804-1.

- Van Buuren M, Gladwin TE, Zandbelt BB, Kahn RS, Vink M. Reduced functional coupling in the default-mode network during self-referential processing. Human Brain Mapping. 2010;31:1117–27. doi: 10.1002/hbm.20920.

- Van Den Bos W, McClure SM, Harris LT, Fiske ST, Cohen JD. Dissociating affective evaluation and social cognitive processes in the ventral medial prefrontal cortex. Cognitive, Affective, and Behavioral Neuroscience. 2007;7:337–46. doi: 10.3758/cabn.7.4.337.

- Van der Cruyssen L, Van Duynslaeger M, Cortoos A, Van Overwalle F. ERP time course and brain areas of spontaneous and intentional goal inferences. Social Neuroscience. 2009;4:165–84. doi: 10.1080/17470910802253836.

- Van Duynslaeger M, Van Overwalle F, Verstraeten E. Electrophysiological time course and brain areas of spontaneous and intentional trait inferences. Social Cognitive and Affective Neuroscience. 2007;2:174–88. doi: 10.1093/scan/nsm016.

- Van Overwalle F. Social cognition and the brain: a meta-analysis. Human Brain Mapping. 2009;30:829–58. doi: 10.1002/hbm.20547.

- Van Overwalle F, Baetens K. Understanding others' actions and goals by mirror and mentalizing systems: a meta-analysis. NeuroImage. 2009;48:564–84. doi: 10.1016/j.neuroimage.2009.06.009.

- Van Overwalle F, Van Duynslaeger M, Coomans C, Timmermans B. Spontaneous goal inferences are often inferred faster than spontaneous trait inferences. Journal of Experimental Social Psychology. 2012;48:13–18.

- Wang SH, Morris RGM. Hippocampal-neocortical interactions in memory formation, consolidation, and reconsolidation. Annual Review of Psychology. 2010;61:49–79. doi: 10.1146/annurev.psych.093008.100523.

- Whitfield-Gabrieli S, Moran JM, Nieto-Castanon A, Triantafyllou C, Saxe R, Gabrieli J. Associations and dissociations between default and self-reference networks in the human brain. NeuroImage. 2011;55:225–32. doi: 10.1016/j.neuroimage.2010.11.048.

- Winocur G, Moscovitch M, Sekeres M. Memory consolidation or transformation: context manipulation and hippocampal representations of memory. Nature Neuroscience. 2007;10:555–7. doi: 10.1038/nn1880.

- Wood JN, Grafman J. Human prefrontal cortex: processing and representational perspectives. Nature Reviews Neuroscience. 2003;4:139–47. doi: 10.1038/nrn1033.

- Young L, Bechara A, Tranel D, Damasio H, Hauser M, Damasio A. Damage to ventromedial prefrontal cortex impairs judgment of harmful intent. Neuron. 2010;65:845–51. doi: 10.1016/j.neuron.2010.03.003.