Abstract

As an alternative to standard quality improvement approaches and to commonly used after action report/improvement plans, we developed and tested a peer assessment approach for learning from singular public health emergencies. In this approach, health departments engage peers to analyze critical incidents, with the goal of aiding organizational learning within and across public health emergency preparedness systems. We systematically reviewed the literature in this area, formed a practitioner advisory panel to help translate these methods into a protocol, applied it retrospectively to case studies, and later field-tested the protocol in two locations. These field tests and the views of the health professionals who participated in them suggest that this peer-assessment approach is feasible and leads to a more in-depth analysis than standard methods. Engaging people involved in operating emergency health systems capitalizes on their professional expertise and provides an opportunity to identify transferable best practices.

In the last decade, organizations involved in public health emergency preparedness (PHEP) have worked to innovate and improve their systems, but most have not systematically analyzed why innovations do or do not work, nor have they developed frameworks for disseminating lessons learned from their experiences. As a result, lessons from public health emergencies often remain underused, leading to avoidable morbidity and deaths or, at best, inefficient uses of resources in future events.1

One reason for the challenges in learning from public health emergencies is that public health emergencies are singular events—i.e., they are relatively rare and generally not repeated in the same manner and context. Unlike routine health-care services that can be studied and improved with statistical process and outcome measures, system improvement for rare events requires the in-depth study of individual cases.2 Ensuring objective, systematic, and reliable analyses of such cases, namely “critical incidents,” can be difficult, especially if health officials are evaluating their own system's response.

To address the challenge of systematic learning from the response to actual public health emergencies, we present a peer assessment approach to support collaborative efforts to learn from singular events. This process is designed to engage public health and other professionals in the analysis of a public health system's response to an emergency. By identifying root causes of successes and failures, it highlights lessons that can be institutionalized to improve future responses. This approach seeks to (1) improve future responses for the public health system that responded to the incident and (2) identify best practices for other public health systems that will respond to similar incidents in the future. Rigorous analytical methods such as root cause analysis (RCA)—which seeks to move from partial, proximate causes to system-level root causes by repeatedly asking why each identified cause occurred3—and a facilitated look-back meeting4 are two strategies employed in this approach.

We describe the peer assessment process that we have developed through work with a practitioner advisory panel, a detailed literature review, retrospective case analyses, and field testing. The process and the results are illustrated by a case study of the 2012 West Nile virus (WNV) outbreak in the Dallas-Fort Worth Metroplex in Texas. Finally, we examine the implications of using this approach in public health practice.

BACKGROUND

Effectively responding to health emergencies, including disasters, disease outbreaks, and humanitarian emergencies, requires the concerted and coordinated effort of complex people-centered health systems that include public health agencies, health-care delivery organizations, public- and private-sector entities responsible for public safety and education, employers and other organizations, and individuals and families. The complexity of these systems, coupled with the singular nature of health emergencies (i.e., each differs in the nature of the threat, the capabilities of the responding agencies, and the context in which it occurs), creates challenges for determining which approaches are most effective and, more generally, for organizational learning. In 2008, for instance, Nelson and colleagues noted that, despite efforts aimed at standardizing formats, the structure of after action reports (AARs) is almost as varied as the individuals who produce them.5 More recently, Savoia and colleagues examined AARs that described the response to the 2009 H1N1 pandemic, as well as to Hurricanes Katrina, Gustav, and Ike, drawn from the U.S. Department of Homeland Security (DHS) Lessons Learned Information Sharing system. The researchers found that these reports varied widely in their intended uses and users, scope, timing, and format.6 The DHS Homeland Security Exercise Evaluation Program (HSEEP) format that is commonly used in these reports permits but does not require RCAs, and RCAs are not common in practice.7 Singleton and colleagues are more sanguine about these reports but still report significant difficulties in applying the HSEEP approach, especially in identifying root causes.8

Given these circumstances, new methods are needed to generate systematic and rigorous knowledge to improve the quality of the health sector response to emergencies. To guide our research, we started with three qualitative methods that draw on the expertise of the people involved in leading the response: peer assessment, facilitated look-backs, and RCA.

QUALITATIVE METHODS

Peer assessment

Ensuring objective, systematic, and reliable analyses of critical incidents can be challenging if health officials are evaluating their own response.1 As an alternative, evaluation by peers in similar jurisdictions offers the potential for objective analyses by professionals with experience in PHEP and knowledge of the particularities of the system being assessed. It seeks to improve future responses for the public health agencies that responded to the incident, as well as to identify best practices for other health departments that will respond to similar incidents.

Research and experience suggest that peer assessments can be reliable and objective, and that the peer assessment approach enables health departments to collaborate in their efforts to learn from rare and seemingly unique incidents.9 For example, the Health Officers Association of California10 conducted in-depth emergency preparedness assessments in 51 of the state's 61 local health departments (LHDs) to (1) assess PHEP in each LHD relative to specific federal and state funding guidance and (2) identify areas needing improvement. A structured assessment instrument, keyed to the Centers for Disease Control and Prevention (CDC) and Health Resources and Services Administration guidance, was used to examine the extent of LHD capacity and progress in preparedness based on interviews with multiple levels of LHD staff, a review of preparedness-related documents, and direct observation. The instrument included performance indicators and a four-point scoring rubric (from 1 = minimally prepared to 4 = well prepared) to quantify the results. Teams of three to four consultants from a small corps of expert public health professionals made two-day site visits to the LHDs that agreed to participate in the assessment and prepared an LHD-specific written report of findings and recommendations.

Facilitated look-backs

The facilitated look-back is a validated method for examining public health systems' emergency response capabilities and conducting a systems-level analysis. It uses a neutral facilitator and a no-fault approach to probe the nuances of past decision making through moderated discussions. The facilitator guides the discussion by reviewing a brief chronology of the incident and asks probing questions about (1) key issues regarding what happened at various points during the response, (2) key decisions that were made by various stakeholders, and (3) how decisions were perceived and acted upon by others. The result is to elicit lessons learned.4

Root cause analysis

Many strategies have been described for deep, probing analyses about what caused a negative outcome or engendered a positive one. RCA is familiar to many in the health-care sector because both the Joint Commission and Department of Veterans Affairs require RCAs for certain clinical events.11 The general goal of RCA is to move from superficial, proximate causes to system-level root causes by repeatedly asking why each identified cause occurred.3 In principle, RCAs should facilitate significantly better learning from a single incident, but they sometimes fail to do so due to tendencies to (1) simplify explanations about critical incidents, either by discounting information that does not conform to preexisting beliefs or by failing to examine a problem from multiple perspectives;12 and (2) blame failures on situational factors instead of identifying opportunities for systems improvement.13 While these problems are not inevitable, they do highlight the need for tools and processes intended to be responsive to these common challenges when conducting RCAs and the persistent issues that arise from a lack of training in retrospective analysis. Therefore, we have identified a course of action that mixes these methodologies to isolate root causes in this setting to improve organizational learning from adverse events.

METHODS

The peer assessment process can be initiated after an agency (or group of agencies) responds to an incident that stresses a public health system and overwhelms its routine capabilities. Representatives of the jurisdiction that responded to the incident initiate the process. The primary players involved are the requestor (i.e., the public health practitioner or group of practitioners representing the jurisdictions that responded to the incident) and the assessment team or assessors (i.e., the peer public health practitioners who review the incident response).

The peer assessment process begins with a review of preliminary reports and other incident documents and may include interviews with key players to identify critical response issues. The assessment team then visits the requesting jurisdiction and conducts a facilitated look-back meeting (or uses a similar approach) to further discuss the issues with representatives of the organizations that were involved in the response. Together, the requestor and the assessors conduct an RCA to identify factors that contributed to positive and negative aspects of the response and that should be addressed to improve future responses. Following the conclusion of the meeting, the parties involved write an analysis report, or an existing AAR is revised.

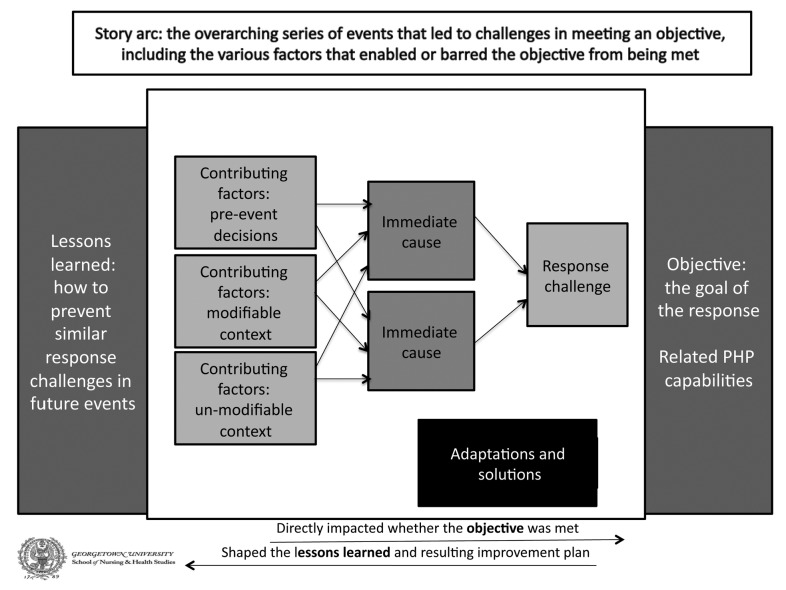

One approach to RCA that the group could use is the tool we developed and tested for PHEP through a retrospective application to three 2009 H1N1 case studies (in Martha's Vineyard, Massachusetts; Emilia Romagna, Italy; and Los Angeles, California).9,14,15 This tool, which is illustrated in Figure 1, takes the user through a multistep process that can be visualized by filling in the various boxes on the page. The user moves from right to left, identifying the following: (1) the story arc (i.e., the context in which the incident occurred and its basic features); (2) the response challenge; (3) the objective (i.e., what the responding jurisdiction was trying to achieve in this element of the response); (4) the immediate causes of the challenge; (5) the contributing factors related to each immediate cause, both those that the jurisdiction can modify and those that it cannot; (6) adaptations and solutions that were implemented on the ground; and (7) lessons learned through this part of the response.

Figure 1. Peer assessment root cause analysis process diagram

PHP = public health preparedness
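For teams that record their RCA worksheets electronically, the seven elements listed above can be represented as a simple structured record. The following is a minimal sketch, not part of the published toolkit: the class names, field names, and example text are all hypothetical and are chosen only to mirror the elements described in the text.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class ContributingFactor:
    description: str
    modifiable: bool  # True if the jurisdiction could change this factor


@dataclass
class ImmediateCause:
    description: str
    contributing_factors: List[ContributingFactor] = field(default_factory=list)


@dataclass
class RootCauseAnalysis:
    """One worksheet per response challenge (hypothetical layout)."""
    story_arc: str = ""
    response_challenge: str = ""
    objective: str = ""
    immediate_causes: List[ImmediateCause] = field(default_factory=list)
    adaptations: List[str] = field(default_factory=list)
    lessons_learned: List[str] = field(default_factory=list)

    def modifiable_factors(self) -> List[str]:
        """Contributing factors the jurisdiction could act on."""
        return [
            factor.description
            for cause in self.immediate_causes
            for factor in cause.contributing_factors
            if factor.modifiable
        ]

    def missing_elements(self) -> List[str]:
        """Names of worksheet elements that have not been filled in yet."""
        missing = [f.name for f in fields(self) if not getattr(self, f.name)]
        if not any(c.contributing_factors for c in self.immediate_causes):
            missing.append("contributing_factors")
        return missing


# Invented example content, for illustration only.
worksheet = RootCauseAnalysis(
    story_arc="Hypothetical outbreak affecting several neighboring counties",
    response_challenge="Case reports reached the health department late",
    objective="Confirm cases quickly enough to guide control measures",
    immediate_causes=[
        ImmediateCause(
            "Laboratory results were delayed",
            [
                ContributingFactor("No agreed protocol for prioritizing specimens", True),
                ContributingFactor("Unusually high seasonal case volume", False),
            ],
        )
    ],
    adaptations=["Temporary courier runs to the reference laboratory"],
)

print(worksheet.modifiable_factors())
print(worksheet.missing_elements())
```

Separating modifiable from unmodifiable factors in the record makes it straightforward to pull out the items that belong in an improvement plan, and the completeness check can prompt a facilitator to return to elements the group has not yet discussed.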

Peer assessment process example

We field-tested this process in two jurisdictions after a Salmonella outbreak in Alamosa County, Colorado, and a major WNV outbreak in the Dallas-Fort Worth Metroplex in Texas. We conducted a site visit for each incident. During each visit, a peer assessment team consisting of a public health practitioner assisted by our research team engaged the practitioners from the responding jurisdiction through a review of documents related to the incident and a facilitated look-back meeting to perform an RCA of major challenges experienced during the response to the incident. The peer assessment and RCA process are described in more detail, along with job action sheets and templates, in the toolkit we have developed.9 The toolkit contains both the Salmonella and WNV cases. The WNV outbreak peer assessment is described hereafter.

In the summer of 2012, the Dallas-Fort Worth Metroplex experienced a severe WNV outbreak in which more than 1,868 confirmed cases of WNV disease and 89 WNV-related deaths were reported. The incident stressed a number of public health preparedness capabilities and involved multiple counties. As part of the peer assessment process, a facilitated look-back meeting was conducted in Arlington, Texas, on May 13, 2013, to review the public health system response to the incident. Representatives from the Texas Department of State Health Services (DSHS) and each of the three county health departments joined the meeting, along with the Dallas county judge, who is the county's chief executive officer. A peer assessor facilitated the meeting with the assistance of research staff from Georgetown University. The peer assessor was a practitioner from the Houston area who knew about the incident but had not been directly involved in the response. An AAR had already been drafted at the time the peer assessment process was initiated, so it served as (1) the foundation for the issues to be discussed at the meeting and (2) an informative document on the chronology of the incident and on key personnel who responded to the incident.

Two weeks prior to the site visit, the peer assessor met via teleconference with the state health officials who had requested the assessment. The teleconference consisted of a brief planning discussion to establish the key issues to discuss with meeting participants and to plan the visit. Meeting attendees were invited via e-mail by the state officials who requested the assessment.

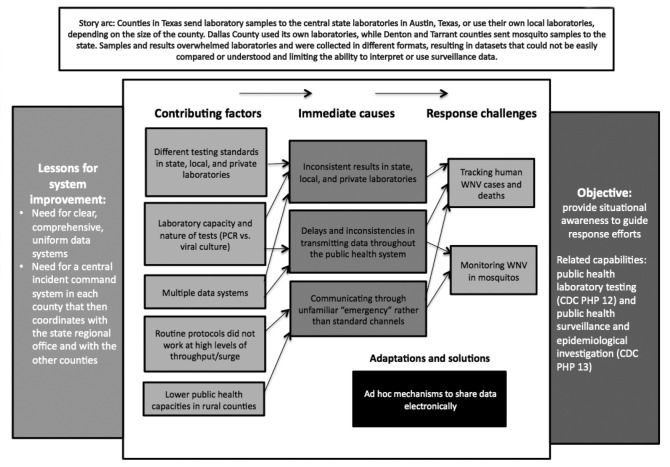

The facilitated look-back process was the primary data-gathering method used during the meeting. The facilitator guided meeting participants through three separate RCA discussions, one for each of the three previously identified major response challenges experienced during the incident: surveillance, mitigation, and communication with the public. An example of this process as it pertains to surveillance is shown in Figure 2. Throughout the identification of response challenges, immediate causes, contributing factors, and lessons learned, a variety of participants engaged in discussion and shared views not previously included in the drafted AAR.

Figure 2. Root cause analysis process conducted through peer assessment for challenges around surveillance in the 2012 WNV outbreak in the Dallas Metroplex area in Texas

WNV = West Nile virus; CDC = Centers for Disease Control and Prevention; PCR = polymerase chain reaction; PHP = public health preparedness

After the meeting, the peer assessor and state health officials met for approximately one hour to discuss how the meeting went, what could have gone better, and how the information gathered during the meeting could be included in the AAR. The peer assessors shared their RCA diagrams and corresponding summary with the requesting practitioners. The requestors then commented on the diagrams and made a few substantive suggestions based on their perception of the meeting. The assessors considered these changes and, after discussing them via teleconference with the requestors, made the appropriate adjustments to reconcile the AAR.

OUTCOMES

Our field tests and the views of the public health professionals who participated in the peer assessment process suggest that our approach is applicable and leads to more in-depth analyses than some other current methods. It is particularly useful to have participants who experienced the incident from multiple perspectives together at the same table, as some participants had not been aware of the others' experiences and perspectives. In comparison with another field trial in which the state health department was not involved, having representatives of the DSHS present was also helpful to each of the parties in conducting their ongoing review process. These trials and the experience of the authors also suggest that there is inherent value to practitioners in learning from one another, especially when analyzing singular events, and indeed DSHS revised its AAR as a result of the peer assessment. Therefore, we believe the peer assessment approach can serve as a means to bring these practitioners together and offer a viable, flexible method for conducting context-based, rigorous analysis of these events to improve organizational learning.

This process not only provides direct benefits to the requestor, which will have the assistance of a peer assessment team in the after action review process, but also fosters communication and collaboration across jurisdictions, allowing requestors to engage with each other and with their assessment team. The assessment team indirectly benefits as well by learning from the public health response of the requesting jurisdiction.

LESSONS LEARNED

Our results demonstrate how peer assessment for public health emergency incidents (1) enables practitioners to learn from experience, in the spirit of quality improvement (QI) recommended by the National Health Security Strategy,16 and (2) aligns PHEP QI efforts with the Public Health Accreditation Board's national accreditation process. Through the peer assessment process, public health practitioners help their peers assess PHEP responses and work with them to solve identified challenges. Research has shown that standard QI methods (e.g., learning collaboratives) may not be appropriate in the context of PHEP because of (1) a lack of evidence-based and accepted performance measures, (2) the difficulty of carrying out rapid plan-do-study-act cycles, and (3) challenges with measuring processes and results after rare events.17 The peer assessment process is designed to ascertain the root causes of response successes and failures and to develop thoughtful lessons learned and improvement strategies.

The incident report resulting from the peer assessment process can serve as a supplement to a standard AAR. In addition, the report can be shared with others through a critical incident registry (CIR) for PHEP. A PHEP CIR is intended to provide a database of incident reports, allowing for both sharing with others in similar contexts and facilitating cross-case analysis. The success of CIRs in other fields suggests that a properly designed PHEP CIR could support broader analysis of critical public health incidents, facilitate both deeper analysis of particular incidents and stronger improvement plans, and help to support a culture of systems improvement.
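To illustrate what cross-case analysis in such a registry might look like, the sketch below counts how many incident reports share the same root-cause theme. It is a hypothetical illustration only: the record layout, the tag vocabulary, and the example entries are invented and do not describe an existing PHEP CIR.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class IncidentReport:
    incident_id: str                  # hypothetical registry identifier
    hazard: str                       # e.g., "vector-borne", "foodborne"
    challenge_area: str               # e.g., "surveillance"
    root_cause_tags: Tuple[str, ...]  # themes assigned during the RCA


def recurring_root_causes(
    reports: Iterable[IncidentReport], min_incidents: int = 2
) -> Dict[str, int]:
    """Return root-cause themes that appear in at least `min_incidents` reports."""
    counts: Counter = Counter()
    for report in reports:
        # Count each theme once per report, even if it was tagged repeatedly.
        counts.update(set(report.root_cause_tags))
    return {tag: n for tag, n in counts.items() if n >= min_incidents}


# Invented entries, for illustration only.
registry = [
    IncidentReport("A-001", "vector-borne", "surveillance",
                   ("unclear reporting roles", "laboratory capacity")),
    IncidentReport("B-002", "foodborne", "communication",
                   ("unclear reporting roles",)),
    IncidentReport("C-003", "foodborne", "mitigation",
                   ("supply shortages",)),
]

recurring = recurring_root_causes(registry)
print(recurring)
```

A tally of this kind would depend on reports using a shared vocabulary for root causes, which is one reason a registry would need a consistent reporting template.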

In particular, by encouraging RCAs and sharing the results of those analyses with others through a database, a PHEP CIR could be a valuable approach for systems improvement.1 Drawing on the social sciences literature on qualitative analysis of public health systems,15 the peer assessment process described in this article was designed to improve the analysis of actual public health emergency events by addressing two inherent challenges in the analysis of singular or rare events not repeated in the same manner and context. First, by involving practitioners who were involved in the response to the event and permitting—indeed encouraging—them to speculate on other participants' perspectives and alternative paths, the process provides an opportunity to learn from the practical wisdom of experienced public health and other professionals. By focusing on in-depth analysis, the peer assessment approach counters tendencies to (1) simplify explanations about critical incidents, either by discounting information that does not conform to preexisting beliefs or by failing to examine a problem from multiple perspectives;12 and (2) blame failures on situational factors instead of identifying opportunities for systems improvement.13

Second, including peers from other jurisdictions helps improve the objectivity of the analysis, which can be difficult to ensure if health officials are evaluating their own system's response. Participation of peers from similar jurisdictions offers the potential for objective analyses by professionals who have both experience in PHEP and knowledge of the particularities of the systems being assessed. At the same time, it can be an effective way to share best practices to support and amplify technical assistance provided by CDC.

Footnotes

This article benefited from the contributions of a practitioner advisory panel, practitioners at two field-testing sites, and others. The authors thank Jesse Bump and Elizabeth Lee of Georgetown University for their contributions.

This article was developed with funding support awarded to the Harvard School of Public Health under a supplement to a cooperative agreement with the Centers for Disease Control and Prevention (CDC) (#5P01TP000307-05). The content of this publication as well as the views and discussions expressed in this article are solely those of the authors and do not necessarily represent the views of any partner organizations, CDC, or the U.S. Department of Health and Human Services.

REFERENCES

- 1. Piltch-Loeb R, Kraemer JD, Stoto MA. Synopsis of a public health emergency preparedness critical incident registry. J Public Health Manag Pract. 2013;19(Suppl 2):S93–4. doi: 10.1097/PHH.0b013e31828bf5b3.

- 2. Berwick DM, James B, Coye MJ. Connections between quality measurement and improvement. Med Care. 2003;41(1 Suppl):I30–8. doi: 10.1097/00005650-200301001-00004.

- 3. Croteau RJ, editor. Root cause analysis in health care: tools and techniques. Oakbrook Terrace (IL): Joint Commission on Accreditation of Healthcare Organizations; 2010.

- 4. Aledort JE, Lurie N, Ricci KA, Dausey DJ, Stern S. Facilitated look-backs: a new quality improvement tool for management of routine annual and pandemic influenza. Santa Monica (CA): RAND Corporation; 2006. Report No.: RAND TR-320. Also available from: URL: http://www.rand.org/pubs/technical_reports/TR320.html [cited 2014 Apr 7].

- 5. Nelson CD, Beckjord EB, Dausey DJ, Chan E, Lotstein D, Lurie N. How can we strengthen the evidence base in public health preparedness? Disaster Med Public Health Prep. 2008;2:247–50. doi: 10.1097/DMP.0b013e31818d84ea.

- 6. Savoia E, Agboola F, Biddinger PD. Use of after action reports (AARs) to promote organizational and systems learning in emergency preparedness. Int J Environ Res Public Health. 2012;9:2949–63. doi: 10.3390/ijerph9082949.

- 7. Stoto MA, Nelson C, Higdon MA, Kraemer J, Singleton CM. Learning about after action reporting from the 2009 H1N1 pandemic: a workshop summary. J Public Health Manag Pract. 2013;19:420–7. doi: 10.1097/PHH.0b013e3182751d57.

- 8. Singleton CM, DeBastiani S, Rose D, Kahn EB. An analysis of root cause identification and continuous quality improvement in public health H1N1 after-action reports. J Public Health Manag Pract. 2014;20:197–204. doi: 10.1097/PHH.0b013e31829ddd21.

- 9. Piltch-Loeb R, Nelson C, Kraemer JD, Savoia E, Stoto MA. Peer assessment of public health emergency response toolkit [cited 2014 Apr 7]. Available from: URL: http://www.hsph.harvard.edu/h-perlc/files/2014/01/Peer-Assessment-toolkit-12-15-13.pdf.

- 10. Health Officers Association of California. Emergency preparedness in California's local health departments: final assessment report. Sacramento (CA): California Department of Health Services; 2007.

- 11. Wu AW, Lipshutz AK, Pronovost PJ. Effectiveness and efficiency of root cause analysis in medicine. JAMA. 2008;299:685–7. doi: 10.1001/jama.299.6.685.

- 12. Weick KE, Sutcliffe KM. Managing the unexpected: assuring high performance in an age of complexity. San Francisco: Jossey-Bass; 2001.

- 13. Edmondson AC. Strategies for learning from failure. Harvard Business Rev. 2011 Apr:48–55.

- 14. Stoto MA, Nelson CD, Klaiman T. Getting from what to why: using qualitative research to conduct public health systems research [cited 2014 Apr 7]. Available from: URL: http://www.academyhealth.org/files/publications/QMforPH.pdf.

- 15. Higdon MA, Stoto MA. The Martha's Vineyard public health system responds to 2009 H1N1. Int Public Health J. 2013;5:175–86.

- 16. Department of Health and Human Services (US). National health security strategy of the United States of America [cited 2014 Apr 7]. Available from: URL: http://www.phe.gov/Preparedness/planning/authority/nhss/strategy/Documents/nhss-final.pdf.

- 17. Stoto MA, Cox H, Higdon MA, Dunnell K, Goldmann D. Using learning collaboratives to improve public health emergency preparedness systems. Front Public Health Serv Syst Res. 2013;2 Article 3. Also available from: URL: http://uknowledge.uky.edu/frontiersinphssr/vol2/iss2/3 [cited 2014 Apr 7].