Abstract

We applied emerging evidence in simulation science to create a curriculum in emergency response for health science students and professionals. Our research project was designed to (1) test the effectiveness of specific immersive simulations, (2) create reliable assessment tools for emergency response and team communication skills, and (3) assess participants' retention and transfer of skills over time. We collected both quantitative and qualitative data about individual and team knowledge, skills, and attitudes. Content experts designed and pilot-tested scaled quantitative tools. Qualitative evaluations administered immediately after simulations and longitudinal surveys administered 6–12 months later measured student participants' individual perceptions of their confidence, readiness for emergency response, and transfer of skills to their day-to-day experience. Results from 312 participants enrolled in nine workshops during a 24-month period indicated that the 10-hour curriculum is efficient (compared with larger-scale or longer training programs) and effective in improving skills. The curriculum may be useful for public health practitioners interested in addressing public health emergency preparedness competencies and Institute of Medicine research priority areas.

To date, public health, health-care, and academic institutions have created predominantly postgraduate training opportunities to enhance disaster preparedness among practicing health professionals.1 These efforts have been largely limited to individual specialties or targeted professions.2 While many specialty accreditation bodies have developed core disaster response competencies for health professionals,3 this body of knowledge is not introduced at the pre-graduate or pre-licensure levels, missing an important opportunity for integration and early application. Starting in 2008, we conducted a study called “Disaster 101: Effectiveness of Simulated Disaster Response Scenarios” (D101) at the University of Minnesota, which sought to meet the need for this kind of training by designing and testing an educational intervention based on best-evidence practices in immersive simulation (i.e., a simulation that produces a state of being deeply engaged, a suspension of disbelief, and active involvement).4

This project aimed to (1) test the effectiveness of specific immersive simulations, (2) create reliable assessment tools for emergency response and team communication skills, and (3) assess participants' retention and transfer of skills over time. Most importantly, we were committed to creating and testing emergency preparedness training that had the potential to significantly improve the readiness of the tens of thousands of new health-care providers who graduate and are licensed each year in the United States. As other studies have noted, academic organizations, accrediting bodies, and federal agencies have called for consistent and sustained training in emergency preparedness in the pre-licensure health-care curriculum.5,6 We sought to provide a replicable, sustainable model that addressed the Institute of Medicine (IOM) research priorities.7 As the project evolved, it became clear that D101 addressed the Public Health Preparedness and Response Core Competencies to improve the system readiness of the health-care workforce.8,9

PURPOSE

While our primary purpose was to assess the efficiency and effectiveness of this particular educational intervention using best practices in immersive simulation, both the curriculum and the research design were intended to address limitations documented in the research literature in both emergency preparedness and interprofessional education (i.e., “learning about, from, and with two or more health-care professions”).10 Both sets of literature repeatedly note the limited size of the datasets, the inconsistency of assessment tools, the short-term assessment of impact (i.e., limited almost exclusively to pre- or posttest methods immediately following the intervention), and an overemphasis on knowledge-based assessments that do not capture attitudinal, skill, or behavioral outcomes.5,11 As Donahue and Tuohy note, “Our experience suggests that purported lessons learned are not really learned; many problems and mistakes are repeated in subsequent events…. Reports and lessons are often ignored, and even when they are not, lessons are too often isolated and perishable, rather than generalized and institutionalized.”12 We intended to test evidence-based educational practices and their institutionalization to make this kind of training and its impact less “isolated and perishable.”

In light of these observations, we sought to employ best-evidence practices in professional education and simulation by using diverse educational tools and instructional methods. These methods included repetitive practice of specific skills; multiple opportunities for feedback; variability in task difficulty; simulations in a controlled, standardized environment; multiple learning strategies; and content integrated with existing curriculum.4 While a few other examples of educational interventions have been described in the literature since our research began,6,13,14 they do not apply the full range of strategies employed in our study, have limited numbers of subjects (ranging from six to 79 participants), and do not include assessments of long-term impact.

The accepted competencies and priorities from three significant sources—the public health emergency preparedness (PHEP) capabilities, the IOM priorities, and the Interprofessional Education Collaborative (IPEC) competencies—aligned neatly with the goals of D101.15 The skills taught and assessed by the research team and content experts in the University of Minnesota's D101 curriculum specifically addressed several competencies from each of these sources, including the PHEP capability of Mass Care, the IPEC competency of Interprofessional Communication, and the IOM priority of Improving Communications.

METHODS

We focused on designing and testing this emergency preparedness intervention for both effectiveness of learning and efficient use of participant time. As a result, the total time for student training was limited to 10 hours: two hours for an introductory online module and eight hours for face-to-face workshops and simulation. We used multiple strategies (i.e., multiple-choice question tests, performance checklists, and pre- and posttest attitudinal surveys) to assess knowledge, skill, attitudinal, and behavioral outcomes at multiple points throughout the training as well as 6–12 months after completing the curriculum, according to the National Institutes of Health Office of Behavioral and Social Sciences Research guidelines on mixed-methods research.16 Specifically, the training included:

- A two-hour online module designed to deliver foundational content on emergency preparedness and teamwork and to assess learners' knowledge and attitudes before the face-to-face workshop. Topics included the Incident Command System, the simple triage and rapid treatment (START) method, the emergency medical system (EMS), and potential roles for health-care professionals in emergency response.

- Three 45-minute hands-on workshops at the University of Minnesota, led by first-responder content experts, on triage, scene safety and situational awareness, and interprofessional communication under response conditions.

- Two immersive simulation scenarios, a bomb blast and a structural collapse, which served as the vehicles for training. These scenarios were of comparable complexity and employed realistic atmospheric effects, simulated victims with realistic moulage (i.e., mock) injuries, and mannequins with injuries. Student teams experienced each scenario twice in succession; half of the teams experienced the bomb blast first and half experienced the structural collapse first.

- Repetitive practice, giving teams the opportunity to repeat their responses in standardized simulations after formative feedback from trained facilitators.

- Advocacy-inquiry debriefing in small- and large-group settings to maximize learning outcomes and transfer of skills.

- Pilot-tested standardized assessment tools, focused on leadership, teamwork, and emergency response skills, used by trained observers.

- A follow-up survey administered 6–12 months after completion of the one-day workshop to assess retention of knowledge, attitudes toward emergency response, and participants' reflections on how they had actually applied the training.
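Because each team ran each scenario twice and half of the teams started with the other scenario, every team produced four scored performances. The short Python sketch below is offered only as an illustration of that schedule and of the performance numbering used in the Table 2 footnotes and the Outcomes section. The alternating-by-index rule for scenario order, the `Performance` record, and `schedule_for_team` are hypothetical; the article states only that half of the teams started with each scenario, not how order was assigned.

```python
from dataclasses import dataclass
from typing import List, Tuple

SCENARIOS: Tuple[str, str] = ("bomb blast", "structural collapse")


@dataclass(frozen=True)
class Performance:
    number: int    # 1-4, the "performance" index used in the Outcomes section
    scenario: str  # which simulated disaster the team is responding to
    event: int     # 1 = first simulated disaster event, 2 = second
    trial: int     # 1 = first attempt, 2 = repeat after facilitator feedback


def schedule_for_team(team_index: int) -> List[Performance]:
    """Return one team's four scored performances in the order they occur.

    Hypothetical rule: even-numbered teams start with the bomb blast and
    odd-numbered teams with the structural collapse, so half of the teams
    see each scenario first.
    """
    if team_index % 2 == 0:
        first, second = SCENARIOS
    else:
        second, first = SCENARIOS
    plan: List[Performance] = []
    number = 1
    for event, scenario in enumerate((first, second), start=1):
        for trial in (1, 2):  # each scenario is run twice in succession
            plan.append(Performance(number, scenario, event, trial))
            number += 1
    return plan


# Comparisons discussed in the Outcomes section, as (later, earlier) pairs.
CONTRASTS: List[Tuple[int, int]] = [(2, 1), (4, 3), (3, 2), (3, 1), (4, 1)]

if __name__ == "__main__":
    for team in range(2):
        for p in schedule_for_team(team):
            print(f"team {team}: performance {p.number} = {p.scenario}, "
                  f"event {p.event}, trial {p.trial}")
```

Counterbalancing the order this way keeps any difference in difficulty between the two scenarios from being confounded with the learning effect measured across performances 1 through 4.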

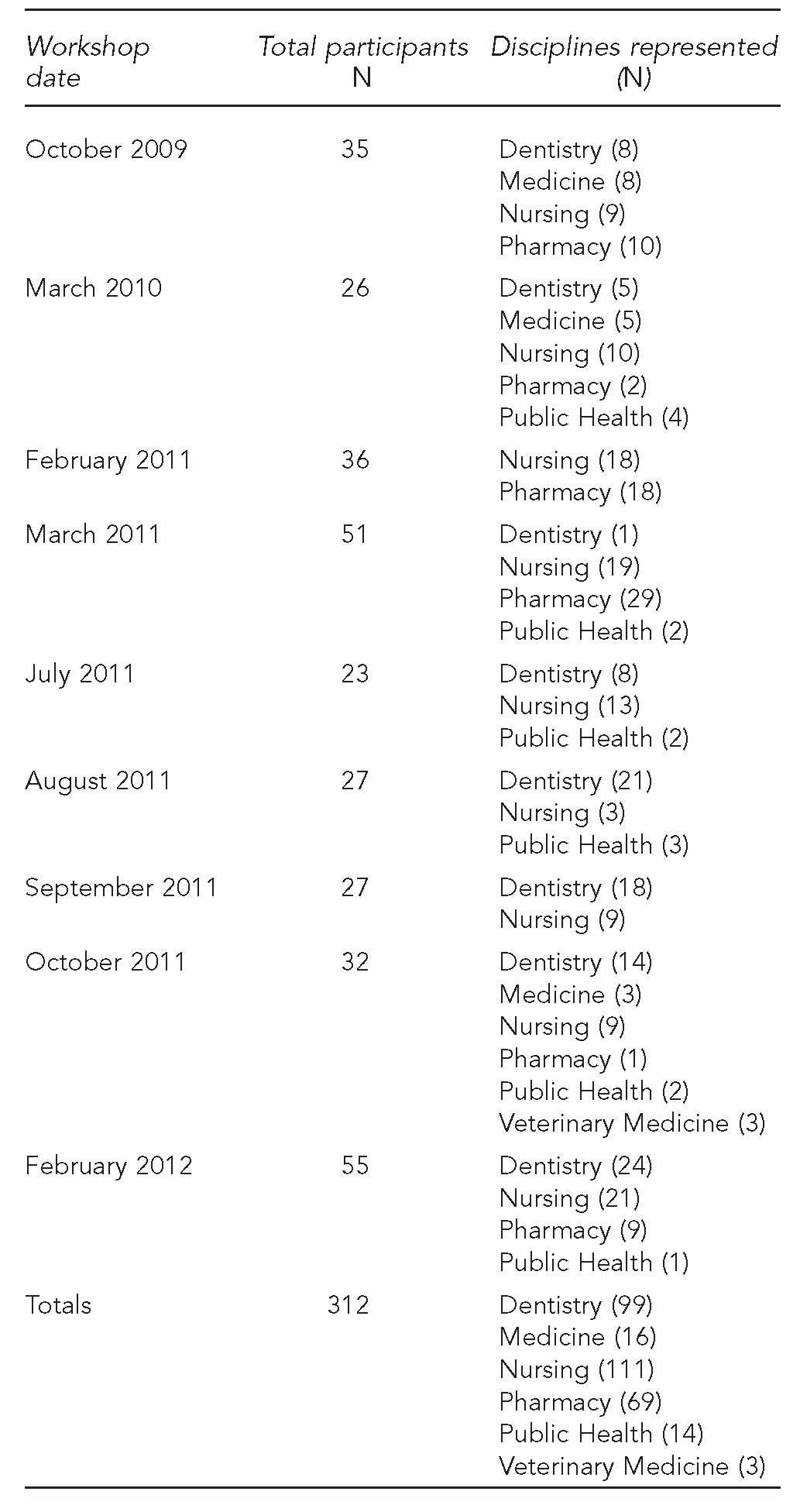

Participants were assigned to interprofessional teams of 6–12 members representing at least two health science professions. Student registrants came from the disciplines of nursing (undergraduate and graduate), pharmacy, dentistry, medicine, veterinary medicine, and public health at the University of Minnesota (Table 1). Interprofessional teams of emergency preparedness content experts recruited from the Twin Cities emergency response community guided student teams and evaluated their communication and teamwork performance. Professions represented on facilitator teams included EMS, fire, police, emergency medicine, nursing, and public health. Student teams were not assigned roles based on their professional education programs. Unlike some other emergency preparedness training programs, the simulations were specifically designed to emphasize skills and roles regardless of professional identity.

Table 1.

Student participants in the Disaster 101 workshop, by professional school: University of Minnesota (Twin Cities campus), 2009–2012

To evaluate performance, trained content experts (including some of the same professionals who led the workshops) completed quantitative assessments of student teams' response skills and teamwork/communication skills after each iteration of a simulated disaster. The original tool evaluated both individual student and team performance using the Homeland Security Exercise and Evaluation Program Exercise Evaluation Guides, particularly Triage and Pre-Hospital Treatment, Medical Surge, and Responder Safety and Health. After field-testing the tools in our pilot workshop in fall 2009, 16 trained evaluators (e.g., physicians, nurses, pharmacists, public health professionals, and first responders) met to revise the tools using a modified Delphi technique to improve usability. The revised assessment tools allowed us to document changes in skills during the course of the workshop, before and after students had received targeted feedback.

Participants' self-reported confidence in their knowledge and skills related to emergency response was measured immediately before and after the workshop and again 6–12 months after workshop completion. Participants also completed detailed workshop evaluations that included open-ended questions about what they found most useful and what could be improved for future participants. Finally, an online survey administered 6–12 months after the workshop assessed the workshop's impact on participants' knowledge and attitudes related to emergency preparedness and response, as well as on their involvement in additional emergency response educational experiences. The workshop was delivered nine times in 24 months, with a different mix of professions and programs represented each time. The number of participants varied by workshop, for a total of 312 participants during the study period from October 2009 through February 2012. University of Minnesota faculty recruited students from relevant courses already offered in each professional school's curriculum, and peers recruited others through student interest groups.

OUTCOMES

On knowledge items alone, students demonstrated a 31.9% improvement over pretest scores following completion of the online mini-course. When their knowledge level was measured again 6–12 months after course completion, the greatest decay was noted in the cohort with the longest lag time between intervention and longitudinal survey. However, none of the cohorts experienced a return to pre-intervention levels (data not shown). These findings are consistent with existing literature on decay of knowledge and skills following educational interventions.17

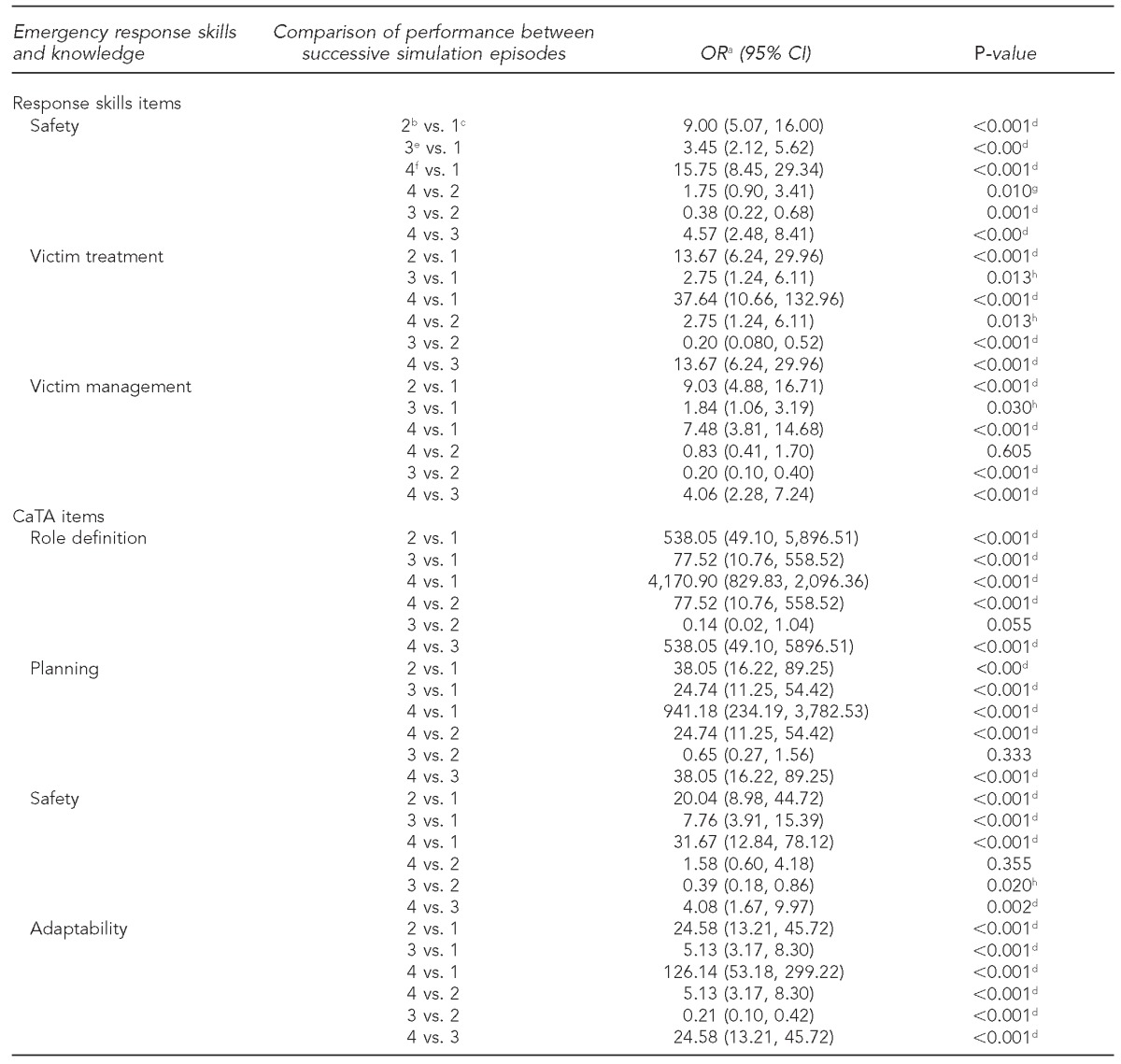

Students also showed significant improvement in their observed team and emergency response skills (Table 2). To improve the statistical analysis, the 10 communication and teamwork assessment (CaTA) skill items assessed during the simulation were aggregated into four areas that align with the PHEP capabilities and are characteristic of high-performing teams: role definition, planning, adaptability, and safety awareness. Specifically, the 10 team skills were: identified an effective leader; effectively assigned roles to all team members; made a clear plan and verbalized it so all team members were aware of it; developed a shared “mental model”; regularly conducted visual scans of the emergency area to reassess needs and/or hazards; proposed changes to their plan of action as the emergency evolved; asked for assistance from team members as needed; were receptive to recommendations and new ideas from other team members; responded effectively to support fellow team members as conditions changed and/or worsened; and used closed-loop communication when specific actions were called for (e.g., calling 9-1-1).

Table 2.

Training impact on performance during successive simulation experiences in the Disaster 101 workshop: University of Minnesota (Twin Cities campus), 2009–2012

ᵃ ORs were calculated to measure the association between the exposure to training (i.e., realistic simulated scenarios with focused feedback from interprofessional facilitator teams) and improved team performance.

ᵇ First simulated disaster event, second trial

ᶜ First simulated disaster event, first trial

ᵈ Significant at p<0.001

ᵉ Second simulated disaster event, first trial

ᶠ Second simulated disaster event, second trial

ᵍ Significant at p<0.01

ʰ Significant at p<0.05

OR = odds ratio

CI = confidence interval

CaTA = communication and teamwork assessment

Similarly, the 12 response skill items were aggregated into three areas—victim triage and treatment, responder safety, and victim management—and included: conducted an initial scene assessment for safety; attempted to identify any additional threats (e.g., secondary devices or imminent structural collapse); called 9-1-1; identified an evacuation area for mobile victims and victims who could be moved safely; enlisted the help of mobile victims appropriately; used the START triage protocol (i.e., respiration, perfusion, and mental status); overall, categorized victims correctly; used triage tags correctly to categorize victims; provided appropriate treatment overall, given victims' injuries; improvised medical supplies with onsite resources as needed; kept an accurate count of victims by level of severity; and shared relevant information with EMS.
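The article names the START protocol (respiration, perfusion, and mental status) among the skills taught in the online module and scored during the simulations, but does not reproduce its decision rules. As an illustration only, the Python sketch below encodes the commonly published adult START sequence: walking wounded are tagged minor; a victim who does not breathe even after airway repositioning is tagged expectant; a victim who starts breathing after repositioning, has a respiratory rate above 30 per minute, has no radial pulse or a capillary refill longer than 2 seconds, or cannot follow simple commands is tagged immediate; everyone else is delayed. These thresholds are the conventional published ones, not values taken from the D101 materials; the `Victim` record and the function names are hypothetical; and this is a teaching sketch, not a clinical tool. The `count_by_category` helper mirrors the assessed item "kept an accurate count of victims by level of severity."

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Iterable


class Category(Enum):
    MINOR = "green"
    DELAYED = "yellow"
    IMMEDIATE = "red"
    EXPECTANT = "black"  # deceased or not expected to survive


@dataclass(frozen=True)
class Victim:
    """Hypothetical record of what a responder observes during START."""
    can_walk: bool
    breathing: bool
    breathes_after_airway_repositioned: bool  # consulted only if not breathing
    respiratory_rate: int      # breaths per minute (0 if not breathing)
    radial_pulse: bool
    capillary_refill_s: float  # seconds
    follows_commands: bool


def start_triage(v: Victim) -> Category:
    """Assign a START category: walk test first, then R, P, M in order."""
    if v.can_walk:  # walking wounded are directed to the evacuation area
        return Category.MINOR
    if not v.breathing:  # Respiration: reposition the airway once
        if v.breathes_after_airway_repositioned:
            return Category.IMMEDIATE
        return Category.EXPECTANT
    if v.respiratory_rate > 30:
        return Category.IMMEDIATE
    if not v.radial_pulse or v.capillary_refill_s > 2.0:  # Perfusion
        return Category.IMMEDIATE
    if not v.follows_commands:  # Mental status
        return Category.IMMEDIATE
    return Category.DELAYED


def count_by_category(victims: Iterable[Victim]) -> Counter:
    """Tally of victims by triage level, e.g., for hand-off to EMS."""
    return Counter(start_triage(v) for v in victims)
```

For example, a victim who cannot walk, breathes 20 times per minute, has a radial pulse with 1.5-second capillary refill, and follows commands falls through every check and is tagged delayed.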

Each group of items was analyzed using a generalized linear mixed model with a cumulative logit link and an assumed multinomial distribution. Odds ratios were calculated to measure the association between the exposure to training (i.e., realistic simulated scenarios with focused feedback from interprofessional facilitator teams) and improved team performance. The results support the following conclusions:
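A cumulative logit link models each ordinal rating through the cumulative probabilities P(Y ≤ j), and under the proportional-odds assumption one coefficient β shifts all of them at once, so a single odds ratio, exp(β), describes the training effect at every cut point of the rating scale. The Python sketch below illustrates only that fixed-effect logic: it omits the random effects (for example, team-level terms) that a generalized linear mixed model would include, and the cut points and β are hypothetical values, not estimates from this study. The parameterization logit P(Y ≤ j | x) = θ_j − βx is an assumption chosen so that positive β means higher ratings; some software uses the opposite sign.

```python
import math
from typing import List, Sequence


def logistic(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def category_probs(thresholds: Sequence[float], beta: float, x: float) -> List[float]:
    """P(Y = k), k = 1..K, under logit P(Y <= j | x) = theta_j - beta * x.

    `thresholds` are increasing cut points theta_1 < ... < theta_{K-1};
    x = 1 for a trained performance and 0 for the baseline.
    """
    cumulative = [logistic(t - beta * x) for t in thresholds] + [1.0]
    return [cumulative[0]] + [
        cumulative[k] - cumulative[k - 1] for k in range(1, len(cumulative))
    ]


def odds_above(thresholds: Sequence[float], beta: float, x: float, j: int) -> float:
    """Odds that a rating falls above cut point j (0-based index)."""
    p_at_or_below = sum(category_probs(thresholds, beta, x)[: j + 1])
    return (1.0 - p_at_or_below) / p_at_or_below


def cumulative_odds_ratios(thresholds: Sequence[float], beta: float) -> List[float]:
    """Trained v. baseline odds ratio at each cut point; each equals exp(beta)."""
    return [
        odds_above(thresholds, beta, 1.0, j) / odds_above(thresholds, beta, 0.0, j)
        for j in range(len(thresholds))
    ]


if __name__ == "__main__":
    theta = [-1.0, 0.5, 2.0]  # hypothetical cut points for a 4-level rating
    beta = 1.2                # hypothetical training effect on the logit scale
    print(cumulative_odds_ratios(theta, beta), math.exp(beta))
```

Because the odds of scoring above cut point j equal exp(−(θ_j − βx)), the θ_j cancel in the trained-to-baseline ratio, which is why the ratios printed for each cut point all match exp(β).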

Student teams scored significantly better with repeated performance (i.e., the second time through a scenario, after receiving focused feedback from trained facilitators). This trend continued through the second scenario (i.e., performance 2 v. performance 1, performance 4 v. performance 3).

Student performance usually regresses significantly during the first attempt at the second scenario of the workshop (i.e., performance 3 v. performance 2). However, we found in our study that students consistently performed better on their first attempt at the second scenario than on their first attempt at the first scenario (performance 3 v. performance 1). The comparison of the fourth performance with the first (performance 4 v. performance 1) was statistically significant in every case. These findings underscore the value of repetition in anchoring specific behaviors in simulation.

Additional data gathered in the longitudinal survey administered 6–12 months following workshop completion provided compelling evidence of the D101 intervention's effectiveness. All respondents indicated that their skills had improved in at least one of eight target skill areas relevant to the IOM priorities, PHEP capabilities, and IPEC competencies. Specifically, students were prompted, “Please indicate the skills you feel were improved as a result of your participation in Disaster 101 (check all that apply).” In all, 79%–92% of respondents indicated improved confidence in five areas: crisis communication (91.7%), situational awareness (85.7%), maintaining safety in an emergency (85.2%), triage (85.2%), and crisis leadership (79.2%). For the three remaining skill areas—working within an incident command framework (64.9%), ad hoc teamwork (53.6%), and hand-off to another team (25.6%)—≤65% of respondents indicated improved confidence (data not shown).

LESSONS LEARNED

Outcomes were consistent with other published research using a range of active learning training strategies.18 However, our longitudinal data highlight the value of well-designed, well-executed operational simulations for maximizing retention and transfer of skills to participants' day-to-day practice. These findings are consistent with multiple studies in aviation and the military19 as well as models of experiential learning. Students were not expected to attain or practice skills at the level of a professional first responder. However, the training was designed to improve understanding of incident command and emergency response in a way that would allow learners to induce, generalize, deduce, and apply team and response skills in their ongoing clinical education.20 As results emerged, D101 was also offered as a continuing education experience, through the University of Minnesota's Public Health Institute and through a multi-week course on providing health care in underresourced systems globally. (Results from these experiences were not included in the analysis reported in this article.) These iterations of the workshop included practicing professionals in emergency response, public health, medicine, nursing, dentistry, and veterinary medicine. Outcomes immediately following workshop completion were comparable with outcomes from the student version of D101. These results demonstrated the flexibility of the delivery platform and the effectiveness of the simulation strategies—particularly repetitive practice and formative feedback—described in the literature.4

Perhaps even more importantly, the results of our research on D101 indicate the value of the training for a wide range of health-care professionals at different points in their professional development. The structure of the training as a whole and the dynamism of the simulations in particular allowed participants to learn and practice skills at a professionally appropriate level, and to notice different levels of complexity based on their preparation and experience. Established professionals indicated that they were surprised by both the realism and applicability of the simulations as they experienced them. Students—who had little previous experience with crisis management, either inside or outside of clinical settings—demonstrated that they could quickly attain emergency response and team skills.

Limitations

While this study was built on existing literature in emergency preparedness simulation, the flexibility required for implementation created inconsistencies in the intervention. Specifically, participants were recruited in multiple ways, the number of participants varied from 26 to 55 per workshop, and the health-care professions represented changed with each workshop. This variation created challenges for the comparability of results across cohorts. Also, the wide range of performances sometimes produced large confidence intervals (Table 2). Although some of these estimates were very highly significant, the width of these intervals calls into question the precision (though not the accuracy) of particular measures.
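Why sparse or highly variable data widen an interval without moving the point estimate can be seen in the simplest case. The Python sketch below is a simplified illustration, not the generalized linear mixed model used in this study: it computes an odds ratio and its Wald 95% confidence interval from a single hypothetical 2×2 table, where the standard error of log(OR) is √(1/a + 1/b + 1/c + 1/d). The function name and all counts are hypothetical.

```python
import math
from typing import Tuple


def odds_ratio_wald_ci(a: int, b: int, c: int, d: int,
                       z: float = 1.96) -> Tuple[float, float, float]:
    """Odds ratio and Wald CI from a 2x2 table.

    a, b: exposed (e.g., after feedback) with / without the outcome
    c, d: unexposed (e.g., before feedback) with / without the outcome
    """
    if min(a, b, c, d) == 0:
        raise ValueError("zero cell: Wald CI undefined without a continuity correction")
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


if __name__ == "__main__":
    # Same odds ratio (4.0), but the second table has ten times the counts.
    print(odds_ratio_wald_ci(8, 2, 4, 4))
    print(odds_ratio_wald_ci(80, 20, 40, 40))
```

Both hypothetical tables yield an odds ratio of 4.0, but the small table's interval is several times wider and crosses 1, while the larger table's interval does not: the estimate is the same, only its precision differs.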

CONCLUSIONS

D101 efficiently and effectively meets several needs in emergency preparedness training: it addresses all four IOM research priorities, meets PHEP capabilities and IPEC competencies, and responds to shortcomings identified in systematic reviews. As Williams et al. noted, knowing whether or not an intervention is effective for more than one group, being able to reproduce it, using common evaluation criteria, and incorporating multiple types of health-care providers into training are all important aspects of disaster preparedness research and system readiness.5 As both health-care and emergency response systems continue to face challenges in meeting normal operational needs, let alone disaster-related demands, programs such as D101 can provide an important resource in expanding the capabilities of emerging and current health-care providers to improve system readiness.

Footnotes

The authors thank Robert Leduc, PhD, and Scott Lunos, MS, of the University of Minnesota Biostatistical Design and Analysis Center for their statistical design and analysis services.

This project was part of University of Minnesota: Simulations, Exercises, and Effective Education Preparedness and Emergency Response Research Center and was supported, in part, through the Centers for Disease Control and Prevention (CDC) grant #5P01TP000301-03. The views and opinions expressed in this article are those of the authors and do not necessarily represent the views of CDC.

REFERENCES

- 1. Markenson D, DiMaggio C, Redlener I. Preparing health professions students for terrorism, disaster, and public health emergencies: core competencies. Acad Med. 2005;80:517–26. doi: 10.1097/00001888-200506000-00002.

- 2. Subbarao I, Lyznicki JM, Hsu EB, Gebbie KM, Markenson D, Barzansky B, et al. A consensus-based educational framework and competency set for the discipline of disaster medicine and public health preparedness. Disaster Med Public Health Prep. 2008;2:57–68. doi: 10.1097/DMP.0b013e31816564af.

- 3. Stanley JM. Disaster competency development and integration in nursing education. Nurs Clin North Am. 2005;40:453–67. doi: 10.1016/j.cnur.2005.04.009.

- 4. Issenberg SB, McGaghie WC, Petrusa ER, Gordon DL, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27:10–28. doi: 10.1080/01421590500046924.

- 5. Williams J, Nocera M, Casteel C. The effectiveness of disaster training for health care workers: a systematic review. Ann Emerg Med. 2008;52:211–22. doi: 10.1016/j.annemergmed.2007.09.030.

- 6. Morrison AM, Catanzaro AM. High-fidelity simulation and emergency preparedness. Public Health Nurs. 2010;27:164–73. doi: 10.1111/j.1525-1446.2010.00838.x.

- 7. Altevogt BM, Pope AM, Hill MN, editors. Research priorities in emergency preparedness and response for public health systems: a letter report. Washington: National Academies Press; 2008.

- 8. Centers for Disease Control and Prevention (US). Public health preparedness and response core competency model, version 1.0 [cited 2014 Jan 6]. Available from: URL: www.cdc.gov/phpr/documents/perlcPDFS/PreparednessCompetencyModelWorkforce-Version1_0.pdf

- 9. Khan AS, Kosmos C, Singleton CM; Division of State and Local Readiness in the Office of Public Health Preparedness and Response. Public health preparedness capabilities: national standards for state and local planning. Atlanta: Centers for Disease Control and Prevention (US); 2011. Also available from: URL: www.cdc.gov/phpr/capabilities [cited 2014 Jan 6]

- 10. Hopkins D, editor. Framework for action on interprofessional education & collaborative practice. Geneva: World Health Organization; 2010.

- 11. Reeves S, Perrier L, Goldman J, Freeth D, Zwarenstein M. Interprofessional education: effects on professional practice and healthcare outcomes (update). Cochrane Database Syst Rev. 2013;3:CD002213. doi: 10.1002/14651858.CD002213.pub3.

- 12. Donahue A, Tuohy R. Lessons we don't learn: a study of the lessons of disasters, why we repeat them, and how we can learn them. Homeland Security Aff. 2006;2:1–28. Also available from: URL: http://www.hsaj.org/?article=2.2.4 [cited 2014 Jan 6]

- 13. Kaji AH, Coates W, Fung CC. A disaster medicine curriculum for medical students. Teach Learn Med. 2010;22:116–22. doi: 10.1080/10401331003656561.

- 14. Scott LA, Swartzentruber DA, Davis CA, Maddux PT, Schnellman J, Wahlquist AE. Competency in chaos: lifesaving performance of care providers utilizing a competency-based, multi-actor emergency preparedness training curriculum. Prehosp Disaster Med. 2013;28:322–33. doi: 10.1017/S1049023X13000368.

- 15. Interprofessional Education Collaborative. Core competencies for interprofessional collaborative practice: report of an expert panel. Washington: Interprofessional Education Collaborative; 2011. Also available from: URL: https://ipecollaborative.org/uploads/IPEC-Core-Competencies.pdf [cited 2014 Jan 6]

- 16. Creswell JW, Klassen AC, Plano Clark VL, Smith KC. Best practices for mixed methods research in the health sciences. Bethesda (MD): National Institutes of Health (US); 2011. Also available from: URL: http://obssr.od.nih.gov/mixed_methods_research [cited 2014 Mar 21]

- 17. Arthur W Jr, Bennett W Jr, Stanush PL, McNelly TL. Factors that influence skill decay and retention: a quantitative review and analysis. Hum Perf. 1998;11:57–101.

- 18. Biddinger PD, Savoia E, Massin-Short SB, Preston J, Stoto MA. Public health emergency preparedness exercises: lessons learned. Public Health Rep. 2010;125(Suppl 5):100–6. doi: 10.1177/00333549101250S514.

- 19. Waag WL, Bell HH. Estimating the effectiveness of interactive air combat simulation. Fort Belvoir (VA): Defense Technical Information Center; 1997. Report No.: AL/HR-TP-1996-0039.

- 20. Priest S, Gass MA. Effective leadership in adventure programming. Champaign (IL): Human Kinetics; 1997.