Abstract

Background

Dementia includes a group of neurodegenerative disorders characterized by progressive loss of cognitive function and a decrease in the ability to perform activities of daily living. Systematic reviews of diagnostic test accuracy (DTA) focus on how well the index test detects patients with the disease in terms of figures such as sensitivity and specificity. Although DTA reviews about dementia are essential, at present there is no information about their quantity and quality.

Methods

We searched for DTA reviews in MEDLINE (1966–2013), EMBASE (1980–2013), The Cochrane Library (from its inception until December 2013) and the Database of Abstracts of Reviews of Effects (DARE). Two reviewers independently assessed the methodological quality of the reviews using the AMSTAR measurement tool, and the quality of the reporting using the PRISMA checklist. We describe the main characteristics of these reviews, including basic characteristics, type of dementia, and diagnostic test evaluated, and we summarize the AMSTAR and PRISMA scores.

Results

We selected 24 DTA systematic reviews. Only 10 reviews (41.6%) assessed the bias of included studies, and few (33%) used this information to report the review results or to develop their conclusions. Only one review (4%) reported all methodological items suggested by the PRISMA tool. Assessing methodological quality by means of the AMSTAR tool, we found that six DTA reviews (25%) pooled primary data with methods that are used for intervention reviews, such as Mantel-Haenszel and separate random-effects models, while five reviews (20.8%) assessed publication bias by means of funnel plots and/or Egger’s test.

Conclusions

Our assessment of these DTA reviews reveals that their quality, both in terms of methodology and reporting, is far from optimal. Assessing the quality of diagnostic evidence is fundamental to determining the validity of the operating characteristics of the index test and its usefulness in specific settings. The development of high quality DTA systematic reviews about dementia continues to be a challenge.

Electronic supplementary material

The online version of this article (doi:10.1186/s12883-014-0183-2) contains supplementary material, which is available to authorized users.

Keywords: Diagnosis, Dementia, Alzheimer’s disease dementia, Systematic review, PRISMA checklist, AMSTAR tool

Background

Population ageing is generating a considerable increase in chronic and neurodegenerative diseases, as well as severe consequences for global public health [1,2]. Dementia includes a group of neurodegenerative disorders characterized by progressive loss of cognitive function as well as the ability to perform activities of daily living, sometimes accompanied by neuropsychiatric symptoms [3]. Criteria for dementia diagnosis include a deficit in one or more cognitive domains that is severe enough to impair functional activities, and is progressive over a period of at least six months and not attributable to any other brain disease [4,5]. The presence of cognitive impairment, a fundamental part of the dementia profile, could be detected through a combination of history, clinical examination, and objective cognitive assessment such as a brief mental assessment or comprehensive neuropsychological testing [6,7]. At present, there is a trend towards incorporating biomarker tests into dementia diagnosis criteria, such as amyloid-β protein accumulation, neuronal injury, synaptic dysfunction, and neuronal degeneration [8-10].

Systematic reviews of diagnostic test accuracy (DTA) focus on how well an index test detects patients with the disease in terms of figures such as sensitivity and specificity. DTA reviews present summarized information to consumers (such as clinicians, stakeholders, guideline developers and patients) about which test should be used over another as the initial step in a diagnostic pathway or as an add-on element to confirm the presence of the target disease. Although the methodology for performing DTA reviews is constantly evolving, organizations such as the Cochrane Collaboration have published methodological guidance with basic requirements to develop these kinds of reviews [11].
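As a minimal illustration of the accuracy figures mentioned above, the following sketch computes sensitivity and specificity from the 2×2 cross-classification of an index test against a reference standard. The class name, function names, and counts are hypothetical and are not taken from any of the reviews assessed here.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Counts from cross-classifying an index test against a reference standard."""
    tp: int  # index test positive, target condition present
    fp: int  # index test positive, target condition absent
    fn: int  # index test negative, target condition present
    tn: int  # index test negative, target condition absent


def sensitivity(t: TwoByTwo) -> float:
    """Proportion of people with the target condition whom the index test detects."""
    return t.tp / (t.tp + t.fn)


def specificity(t: TwoByTwo) -> float:
    """Proportion of people without the target condition whom the index test clears."""
    return t.tn / (t.tn + t.fp)


# Hypothetical counts for a single primary study (illustration only).
study = TwoByTwo(tp=80, fp=30, fn=20, tn=170)
print(round(sensitivity(study), 2))  # 0.8
print(round(specificity(study), 2))  # 0.85
```

Both figures depend on the threshold used to call the index test positive, which is one reason why the two are usually reported and interpreted together.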

Recently, we evaluated the quality of clinical practice guidelines for diagnosing dementia and found a wide variety in terms of quality of evidence as well as the strength of the recommendations provided [12]. Although DTA reviews are an essential part of any clinical guidelines, at present there is no information about the quantity and quality of dementia DTA reviews. This information could help clinicians and stakeholders provide adequate management and appropriate care for these patients, in line with the rise in dementia and its expected burden on health systems.

The objective of this study was to evaluate the quality (in terms of rigor in conduct and reporting) of DTA systematic reviews related to diagnostic tools for Alzheimer’s disease dementia (ADD) and other dementias. These tools included brief cognitive tests, biomarkers, and neuropsychological assessment, and they were assessed by means of standardized tools, as well as by describing the tests evaluated and their main characteristics.

Methods

We produced a protocol for the review (available from the authors on request) detailing the proposed review methods. We searched MEDLINE (1966–2013), EMBASE (1980–2013), The Cochrane Library (from its inception until December 2013) and the Database of Abstracts of Reviews of Effects (DARE), by means of a predesigned search strategy adapted to each database (Additional file 1), in order to identify diagnostic systematic reviews focused on the test accuracy of diagnostic tools for dementia, ADD or other dementias (e.g. vascular dementia, frontotemporal dementia, dementia with Lewy bodies, and Parkinson’s disease dementia). We checked the reference lists of the selected studies for additional references, and excluded congress abstracts and references with insufficient information.

Two reviewers independently assessed the eligibility of the results and extracted data from the selected studies. In this overview we included systematic reviews of diagnostic studies that focused on the accuracy of tests for dementia. Only reviews that used a systematic approach, included adult patients aged over 50 suspected of having dementia, and estimated the accuracy of the assessed test (i.e. providing sensitivity and specificity figures) were considered. We used a predefined data extraction form to extract descriptive information including year of publication, type of studies included, and clinical reference standard, and whether a checklist was used to evaluate the methodological quality of primary studies (such as the Quality Assessment of Diagnostic Accuracy Studies, QUADAS [13,14]).

After this was done, two reviewers independently assessed the methodological quality of the selected reviews using the Assessment of Multiple Systematic Reviews (AMSTAR) measurement tool [15], tailored to the characteristics of DTA systematic reviews (Additional file 1). They also assessed the quality of the reporting using the Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) checklist [16]. We resolved disagreements through discussion. In this article we describe the main characteristics of the selected reviews, including basic characteristics (e.g. reference standard used and diagnostic bias reported). We also describe the type of dementia and index test evaluated, as well as AMSTAR and PRISMA scores by item.

Results

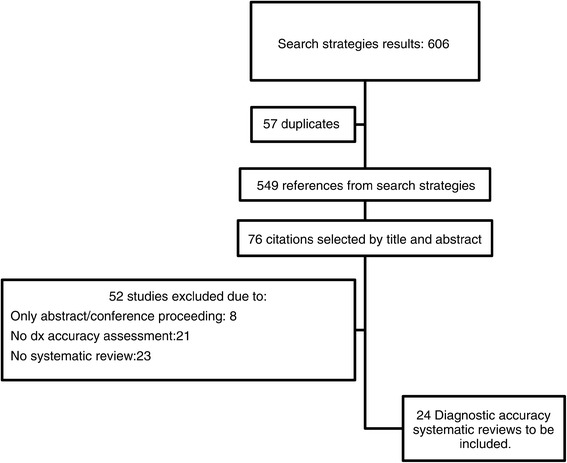

We retrieved a total of 549 citations after excluding duplicates and initially selected a total of 76 references for full review. We excluded 52 articles because they did not provide diagnostic accuracy information, presented a narrative overview about dementia, or did not have enough information to evaluate their quality (i.e. congress abstracts) (Additional file 1). Finally, we selected 24 DTA systematic reviews [17-40], with a median sample size of 2,190 patients (range from 160 to 26,019) (Figure 1 and Additional file 1).

Figure 1.

Flowchart of the systematic search.

Ten reviews (41.6%) focused on mild cognitive impairment (MCI), an early stage of dementia, either for detection or conversion to full dementia, while nine reviews (37.5%) focused on ADD, and eight on dementia in general. Eight out of 24 DTA reviews (33%) included more than one subtype of dementia. Seven reviews (29%) included fewer than 1,000 patients, and a similar number did not report the total number of patients derived from primary studies, while nine (37.5%) of the reviews included fewer than 10 studies (Additional file 1).

The reviews selected included mostly cross-sectional and cohort studies, with a median of 19.5 primary studies included (range from two to 233 primary studies). The index tests most frequently evaluated were cognitive tests (nine DTA reviews), followed by PET/SPECT and serum levels of Total Tau and P-Tau (six DTA reviews each). Several reference standards were used to validate dementia diagnoses, with NINCDS-ADRDA and DSM-IV being the most common (11 and nine reviews, respectively). Four DTA reviews did not indicate the reference standard used to evaluate the validity of dementia diagnoses. Table 1 shows the selected reviews by type of dementia and diagnostic tool evaluated.

Table 1.

DTA systematic reviews about dementia by type of dementia and diagnostic tool evaluated

| MMSE | Other cognitive tests | PET/ SPECT | CSF Aβ 42 | P –Tau/T-Tau | FDG/PIB uptake on PET | MRI/ CT | Other diagnostic tools | |

|---|---|---|---|---|---|---|---|---|

| ADD | Bloudek [19] | Bloudek [19] | Bloudek [19] | Bloudek [19] | Bloudek [19] | |||

| Dougall [22] | Mitchell [28] | Matchar [27] | ||||||

| Ferrante [24] | Patwardhan [34] | |||||||

| DLB | Yeo [40] | Van Harten [36] | Papathanasiou [33] | |||||

| Treglia [35] | ||||||||

| Yeo [40] | ||||||||

| VaD | Dougall [22] | Van Harten [36] | Beynon [18] | Yeo [40] | ||||

| Yeo [40] | ||||||||

| FTD | Dougall [22] | Van Harten [36] | Yeo [40] | |||||

| Yeo [40] | ||||||||

| Dementia in general | Mitchell [29] | Appels [17] | Ferrante [24] | Matchar [27] | ||||

| Carnero [20] | ||||||||

| Crawford [21] | ||||||||

| Mitchell [30] | ||||||||

| Mitchell [31] | ||||||||

| MCI | Lischka [25] | Ehreke [23] | Yuan [38] | Monge [32] | Mitchell [28] | Zhang [39] | Yuan [38] | |

| Lonie [26] | Lischka [25] | van Rossum [37] | Monge [32] | |||||

| Mitchell [29] | Lonie [26] | van Rossum [37] |

Abbreviations: Aβ 42, 42-amino-acid form of amyloid-β; ADD, Alzheimer’s disease dementia; CT, computed tomography; DLB, dementia with Lewy bodies; FDG-PET, PET using 2-fluoro-deoxy-D-glucose; FTD, fronto-temporal dementia; MCI, mild cognitive impairment; MMSE, Mini-Mental State Examination; MRI, magnetic resonance imaging; PIB-PET, 11C-Pittsburgh Compound B positron emission tomography; Ptau, phosphorylated tau; SPECT, single photon emission computed tomography; Ttau, total tau; VaD, vascular dementia.

Only 10 reviews (41.6%) assessed the methodological quality of primary studies, with QUADAS-I being the most commonly used tool for assessing risk of bias of primary studies (six studies, 60%). Patient spectrum and incorporation bias were the most frequent biases reported by review authors. Four reviews assessed the methodological quality of primary studies by means of the STARD tool (16%), which is intended to assess reporting quality. None of the DTA reviews reported results related to inconclusive results, adverse events, or the use of resources related to index tests in an explicit way. Eleven reviews (45.8%) reported the sources of funding or support to perform the DTA review with most of them being government sources.

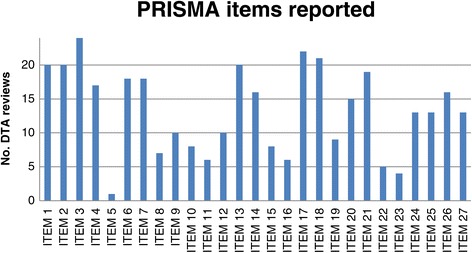

With regards to the PRISMA checklist, all selected reviews (100%) described the rationale for the review (Item 3), and 20 (83.3%) identified themselves as systematic reviews (Item 1). Twenty-two reviews (91.6%) reported the number of studies screened, assessed for eligibility, and included in the review, by means of a flow chart (Item 17) and 21 (87.5%) presented characteristics of studies and provided citations (Item 18). However, only one study (4.1%) reported a review protocol (Item 5), four (16.6%) reported results of additional analysis (Item 23), and five (20.8%) presented results of risk of bias assessment across studies as publication bias or selective reporting within studies (Item 22). Only one review reported all methodological items suggested by the PRISMA tool (Items 5 to 16) (Figure 2).

Figure 2.

PRISMA items reported by DTA reviews about dementia. Notes: Item 1 = Identify the report as a systematic review; Item 2 = Provide a structured summary; Item 3 = Describe the rationale of the review; Item 4 = Provide an explicit statement of questions being addressed; Item 5 = Indicate if a review protocol exists; Item 6 = Specify study characteristics; Item 7 = Describe all information sources in the search and date last searched; Item 8 = Present full electronic search strategy; Item 9 = State the process for selecting studies; Item 10 = Describe method of data extraction; Item 11 = List and define all variables for which data were sought; Item 12 = Describe methods used for assessing risk of bias of individual studies; Item 13 = State the principal summary measures; Item 14 = Describe the methods of handling data and combining results; Item 15 = Specify any assessment of risk of bias that may affect the evidence; Item 16 = Describe methods of additional analyses; Item 17 = Give numbers of studies screened, assessed for eligibility, and included in the review; Item 18 = For each study, present characteristics for which data were extracted; Item 19 = Present data on risk of bias of each study; Item 20 = For all outcomes present simple summary data, effect estimates and confidence intervals; Item 21 = Present the main results of the review; Item 22 = Present results of any assessment of risk of bias across studies; Item 23 = Provide results of additional analyses; Item 24 = Summarize the main findings; Item 25 = Discuss limitations at study and outcome level and at review-level; Item 26 = Provide a general interpretation of the results and implications for future research; Item 27 = Describe sources of funding for the systematic review.

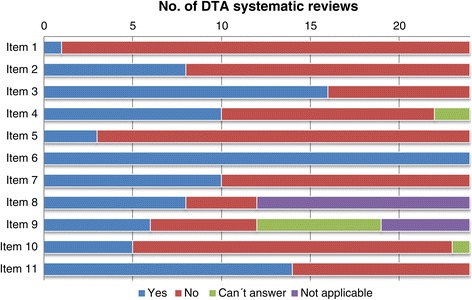

With respect to the quality of conduct in terms of the AMSTAR tool, 21 reviews (87.5%) did not provide a list of included/excluded studies and 16 (66.6%) did not report duplicate study selection/data extraction (Figure 3). All reviews reported the characteristics of included studies (100%). Six DTA reviews (25%) pooled primary data by means of methods that are used for intervention reviews such as Mantel-Haenszel and separate random-effects models, while in seven reviews (29%) it was not possible to determine which methods were used to combine the numerical results. Five reviews (20.8%) assessed publication bias by means of funnel plots and/or Egger’s Test. Fourteen of these DTA reviews (58.3%) reported possible conflicts of interest. As mentioned above, only 10 reviews (41.6%) assessed the bias of included studies, and only eight (33%) used this information to report the review results or reach their conclusions.

Figure 3.

Results of the AMSTAR assessment of DTA systematic reviews about dementia. Notes: Item 1 = A priori design; Item 2 = Duplicate study selection/data extraction; Item 3 = Comprehensive literature search; Item 4 = Status of publication used as an inclusion criterion; Item 5 = List of studies provided; Item 6 = Characteristics of the included studies provided; Item 7 = Scientific quality of the included studies assessed and documented; Item 8 = Scientific quality of the included studies used in formulating conclusions; Item 9 = Methods used to combine the findings of studies appropriate; Item 10 = Publication bias assessed; Item 11 = Conflict of interest stated.

Discussion

Our review of DTA systematic reviews about dementia shows several areas for improvement. First, we had to exclude a significant number of reviews that focused not on the accuracy of the test (i.e. sensitivity and specificity figures), but on average differences between case and control groups. In these reviews, the authors gathered information about Phase I diagnostic studies, evaluating the differences (for example, in terms of difference of means) between a group of subjects with the disease and healthy controls [41]. These studies are essential for an adequate and full assessment of any diagnostic tool, but cannot show if the test distinguishes between those with and without the target condition. Authors of future reviews should be careful in appraising these studies due to their higher risk of bias (for instance, the wide use of case-control designs) and their limitations in decision-making processes.

In relation to the basic characteristics of dementia DTA systematic reviews, we noticed that a significant number of reviews were focused on mild cognitive impairment (MCI). Identification of early forms of dementia has become an important topic because some interventions have been claimed to be effective in slowing or stopping the cognitive decline when they are administered in earlier stages of dementia, but these findings are still being investigated [42-45]. Similarly, it is interesting that a significant number of reviews focused on cognitive tests, which are the first line of detection for cognitive impairment in dementia. At present it is unclear which cognitive test should be the instrument of choice for initial dementia screening in population-based, primary and secondary settings, due to rising criticism of the role of traditional tests such as Mini-Mental State examination (MMSE) [12].

Our assessment of these DTA reviews reveals that their quality, in terms of both methodology and reporting, is far from optimal. We found that more than half of the included reviews did not provide a quality assessment of the primary studies, and therefore information of an unknown quality was gathered and even numerical pooled results were provided. Assessing the quality of diagnostic evidence is fundamental to determining the validity of the operating characteristics of the index test, and its usefulness in specific settings [46]. Four reviews (16%) did not report the reference standards they used to evaluate the accuracy of the different tests appraised, while others reported STARD scores as an evaluation of methodological quality. Only 13 of the 24 reviews (54%) described the limitations of the information gathered, and in only eight cases (33%) was the quality of this information considered in the conclusions. Likewise, AMSTAR and PRISMA items in conjunction showed an almost complete absence of a priori protocols presenting pre-specified methodological plans. The importance of pre-specified protocols has been established in intervention reviews as well as in clinical trials of pharmacological interventions. Diagnostic tests for dementia, such as FDG-PET and Tau-AB42, can be understood as medical technologies that can be affected by conflicts of interest. The availability of protocols at the beginning of any study not only ensures rigor in development, but also avoids conducting unnecessary research [47].

In our study we also identified drawbacks in developing DTA systematic reviews related to the application of statistical methods generally used in intervention systematic reviews. For example, when the methods used for pooling numerical information were assessed, we identified three reviews that used DerSimonian-Laird random-effects models, instead of the methods recommended in these cases, such as bivariate models [48]. Some authors have asserted that the use of inadequate statistical techniques to deal with diagnostic information could lead to failures in managing the combined results of sensitivity and specificity [48-51]. Similarly, some reviews used the I² statistic to illustrate the heterogeneity between the analyzed studies. Heterogeneity is a common issue in accuracy reviews, due to factors such as the threshold used, the prevalence of the target condition in the sample selected, and the settings of test evaluation [52], but at present there are no defined standards for how diagnostic heterogeneity should be measured and managed in DTA reviews [53].
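To make the concern concrete, the sketch below shows what a separate (univariate) DerSimonian-Laird pooling of sensitivity looks like, together with the I² statistic derived from Cochran's Q. It is an illustration under stated assumptions, not the procedure of any specific review: the logit transformation, the 0.5 continuity correction, the function names, and the study counts are all hypothetical choices made here. Note that specificity never enters the calculation, so any relationship between sensitivity and specificity across studies (for instance, one induced by different thresholds) is ignored; this is the limitation that bivariate models are intended to address.

```python
import math


def logit_with_variance(events: int, total: int) -> tuple[float, float]:
    """Logit of a proportion and its approximate variance (0.5 continuity correction)."""
    a = events + 0.5
    b = (total - events) + 0.5
    return math.log(a / b), 1.0 / a + 1.0 / b


def dersimonian_laird(effects, variances):
    """Return (pooled effect, tau^2, I^2 in percent) for a single outcome."""
    weights = [1.0 / v for v in variances]
    sum_w = sum(weights)
    # Fixed-effect (inverse-variance) estimate, needed for Cochran's Q.
    fixed = sum(w * y for w, y in zip(weights, effects)) / sum_w
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    c = sum_w - sum(w * w for w in weights) / sum_w
    # Method-of-moments between-study variance, truncated at zero.
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    re_weights = [1.0 / (v + tau2) for v in variances]
    pooled = sum(w * y for w, y in zip(re_weights, effects)) / sum(re_weights)
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return pooled, tau2, i2


def inverse_logit(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical (true positives, patients with the target condition) per study.
studies = [(45, 50), (30, 40), (60, 90), (18, 20)]
pairs = [logit_with_variance(tp, n) for tp, n in studies]
pooled_logit, tau2, i2 = dersimonian_laird(
    [p[0] for p in pairs], [p[1] for p in pairs]
)
pooled_sensitivity = inverse_logit(pooled_logit)
print(f"pooled sensitivity={pooled_sensitivity:.3f}, tau^2={tau2:.3f}, I^2={i2:.1f}%")
```

Repeating the same calculation for specificity would yield a second, independent summary, which is what "separate random-effects models" amounts to in practice.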

In the same way, we identified that evaluation of publication bias remains a problem in dementia DTA reviews. In our study, 18 reviews (75%) did not provide information about this bias, but it is unclear if the authors simply omitted this evaluation or if they decided not to assess this topic due to lack of suitable analysis methods. Three additional cases (12.5%) used funnel-plot figures or statistical tests (for instance, Egger’s test). While these methods are highly useful in intervention systematic reviews, several research studies have shown that their use in the field of DTA reviews, usually by means of diagnostic odds ratios (DOR), can generate misleading results [54].
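For readers unfamiliar with the quantity involved, the following sketch computes the diagnostic odds ratio (DOR) of a single study and the standard error of its natural logarithm, which are the values typically placed on the axes of a funnel plot in this setting. The function name and counts are hypothetical, and the 0.5 correction for empty cells is an assumption of this sketch. It only shows how the plotted values arise and does not resolve the problems with funnel-plot asymmetry tests described above.

```python
import math


def diagnostic_odds_ratio(tp: float, fp: float, fn: float, tn: float,
                          correction: float = 0.5) -> tuple[float, float]:
    """Return (DOR, standard error of ln DOR) for one 2x2 table."""
    # Assumption of this sketch: add a continuity correction to every cell
    # only when at least one cell is empty, to avoid division by zero.
    if 0 in (tp, fp, fn, tn):
        tp, fp, fn, tn = (x + correction for x in (tp, fp, fn, tn))
    dor = (tp * tn) / (fp * fn)
    se_log_dor = math.sqrt(1.0 / tp + 1.0 / fp + 1.0 / fn + 1.0 / tn)
    return dor, se_log_dor


# Hypothetical counts for a single primary study (illustration only).
dor, se = diagnostic_odds_ratio(tp=80, fp=30, fn=20, tn=170)
print(f"DOR={dor:.2f}, ln(DOR)={math.log(dor):.2f}, SE={se:.3f}")
```

Because the DOR collapses sensitivity and specificity into one number, two studies with very different accuracy profiles can share the same DOR.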

Our study has some limitations. One of these is related to the tools used to evaluate systematic reviews (such as PRISMA and AMSTAR), focused mainly on intervention reviews. In order to correctly use the AMSTAR tool we developed tailored definitions to adequately reflect the most accepted methodology of DTA systematic reviews. However, it is important to encourage discussion about how current tools and methodologies (for example, overview methodology) can be applied or adapted to developing DTA studies. A second difficulty that we found was the large number of diagnostic reviews reported only in abstract form, which had to be excluded because of the absence of information to allow for a full assessment of their elements. We believe that these “ongoing” studies reflect the growing interest in the diagnosis of dementia, as well as the need for comprehensive discussion about dementia diagnosis tools. Finally, our search strategy was specific and did not include terms related to MCI. Our findings related to this early stage of dementia might be incidental and not reflect all possible DTA reviews in this area.

Conclusions

Development of systematic reviews of diagnostic test accuracy for dementia remains a difficult task. However, an increasing number of health professionals require information about the quality of diagnostic technologies due to their role in detecting, staging and monitoring. We believe that some recent initiatives might help improve methodology and reporting quality in DTA reviews on dementia [11,48,55]. In the near future, high quality DTA reviews could play an important role in helping clinicians, policy-makers and even patients to make informed decisions for the diagnosis of this prevalent disease.

Acknowledgments

The work was supported by the Fundacion Universitaria de Ciencias de la Salud, Bogota, Colombia. Ingrid Arevalo-Rodriguez is a PhD student at the Department of Pediatrics, Obstetrics and Gynecology, and Preventive Medicine of the Universitat Autònoma de Barcelona.

Abbreviations

- 7MS

Seven-minute screen

- 99mTc-HMPAO

99m-Technetium-hexamethyl-propylenamine oxime

- Aβ 1–42

42 aminoacid form of amyloid-β

- ACE

Addenbrooke’s cognitive examination

- ADAS-CoG

Alzheimer’s disease assessment scale- cognitive

- ADD

Alzheimer’s disease dementia

- ADDTC

State of California AD diagnostic and treatment centre criteria

- BKSCA-R

Brief Kingston standardized cognitive assessment- revised

- BVRT

Benton’s visual retention test

- CAMCI

Chinese abbreviated mild cognitive impairment test

- CAMCOG

Cambridge cognitive examination

- CASI

Cognitive abilities screening instrument

- CAST

Cognitive assessment screening test

- CCCE

Cross-cultural cognitive examination

- CCSE

Cognitive capacity screening examination

- CERAD

Consortium to Establish a Registry for Alzheimer’s Disease

- CSF

Cerebrospinal fluid

- CSI-D

Community screening instrument for dementia

- CT

Computed tomography

- DaTSCAN

DaT, 123I-FP-CIT

- DLB

Dementia with Lewy Bodies

- DSM

Diagnostic and statistical manual of mental disorders

- DTA

Diagnostic test accuracy

- FDG-PET

PET using 2-fluoro-deoxy-D-glucose

- FTD

Fronto-temporal dementia

- ICD-10

International statistical classification of diseases and related health problems, 10th revision

- IQCODE

Informant questionnaire on cognitive decline in the elderly

- IST

Isaacs set test

- M@T

Memory alteration test

- MCI

Mild cognitive impairment

- MDRS

Mattis dementia rating scale

- MFI

Mental function index

- MIBG

123 I-metaiodobenzylguanidine scintigraphy

- MIS

Memory impairment screen

- MMSE

Mini-mental state examination

- MOCA

Montreal cognitive assessment

- MRI

Magnetic resonance imaging

- NCSE

Neurobehavioral cognitive screening examination

- NINCDS-ADRDA

National Institute of Neurological and Communicative Disorders and Stroke and the Alzheimer’s Disease and Related Disorders Association

- NINDS-AIREN

National Institute of Neurological Disorders and Stroke and Association Internationale pour la Recherche et l’Enseignement en Neurosciences

- NUCOG

Neuropsychiatry unit cognitive assessment tool

- PIB-PET

11 C-Pittsburgh Compound B- positron emission tomography

- Ptau

Phosphorylated tau

- RBANS

Repeatable battery for the assessment of neuropsychological status

- RUDAS

Rowland universal dementia assessment scale

- SPECT

Single photon emission computed tomography

- STMS

Short test of mental status

- TMT B

Trail making Test Part B

- Ttau

Total tau

- VaD

Vascular dementia

Additional file

This file includes the following information: 1. Search strategy used on MEDLINE. 2. Operational definitions of AMSTAR’s Items for DTA reviews about dementia. 3. DTA systematic reviews about dementia- Descriptive information. 4. DTA systematic reviews about dementia- AMSTAR items. 5. DTA systematic reviews about dementia- PRISMA items. 6. List of excluded studies.

Footnotes

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

IAR conceived the review, participated in its design, development and coordination, and helped draft the manuscript. OS participated in the assessment of DTA reviews. IS designed the search strategies and helped draft the manuscript. XB participated in the design of this study and helped draft the manuscript. ES conceived the study, and helped draft the manuscript. PA conceived the review, participated in its design, development and coordination, and helped draft the manuscript. All authors read and approved the final manuscript.

Contributor Information

Ingrid Arevalo-Rodriguez, Email: iarevalo@fucsalud.edu.co.

Omar Segura, Email: odsegura@fucsalud.edu.co.

Ivan Solà, Email: isola@santpau.cat.

Xavier Bonfill, Email: xbonfill@santpau.cat.

Erick Sanchez, Email: ersanche88@gmail.com.

Pablo Alonso-Coello, Email: palonso@santpau.cat.

References

- 1.Prince M, Bryce R, Albanese E, Wimo A, Ribeiro W, Ferri CP. The global prevalence of dementia: a systematic review and metaanalysis. Alzheimers Dement. 2013;9(1):e62–e75. doi: 10.1016/j.jalz.2012.11.007. [DOI] [PubMed] [Google Scholar]

- 2.Wimo A, Winblad B, Jonsson L. The worldwide societal costs of dementia: Estimates for 2009. Alzheimers Dement. 2010;6(2):98–103. doi: 10.1016/j.jalz.2010.01.010. [DOI] [PubMed] [Google Scholar]

- 3.Davis Daniel HJ, Creavin Sam T, Noel-Storr A, Quinn Terry J, Smailagic N, Hyde C, Brayne C, McShane R, Cullum S. Neuropsychological tests for the diagnosis of Alzheimer’s disease dementia and other dementias: a generic protocol for cross-sectional and delayed-verification studies. Cochrane Database Syst Rev. 2013;3:CD010460. doi: 10.1002/14651858.CD010460. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.American Psychiatric Association . Diagnostic and statistical manual of mental disorders (DSM-IV) Washington DC: American Psychiatric Association; 1994. [Google Scholar]

- 5.Jack CR, Jr, Albert MS, Knopman DS, McKhann GM, Sperling RA, Carrillo MC, Thies B, Phelps CH. Introduction to the recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement. 2011;7(3):257–262. doi: 10.1016/j.jalz.2011.03.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.McKhann GM, Knopman DS, Chertkow H, Hyman BT, Jack CR, Jr, Kawas CH, Klunk WE, Koroshetz WJ, Manly JJ, Mayeux R, Mohs RC, Morris JC, Rossor MN, Scheltens P, Carrillo MC, Thies B, Weintraub S, Phelps CH. The diagnosis of dementia due to Alzheimer’s disease: recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement. 2011;7(3):263–269. doi: 10.1016/j.jalz.2011.03.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Feldman HH, Jacova C, Robillard A, Garcia A, Chow T, Borrie M, Schipper HM, Blair M, Kertesz A, Chertkow H. Diagnosis and treatment of dementia: 2. Diagnosis. CMAJ. 2008;178(7):825–836. doi: 10.1503/cmaj.070798. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Dubois B, Feldman HH, Jacova C, Cummings JL, Dekosky ST, Barberger-Gateau P, Delacourte A, Frisoni G, Fox NC, Galasko D, Gauthier S, Hampel H, Jicha GA, Meguro K, O'Brien J, Pasquier F, Robert P, Rossor M, Salloway S, Sarazin M, de Souza LC, Stern Y, Visser PJ, Scheltens P. Revising the definition of Alzheimer’s disease: a new lexicon. Lancet Neurol. 2010;9(11):1118–1127. doi: 10.1016/S1474-4422(10)70223-4. [DOI] [PubMed] [Google Scholar]

- 9.Mak E, Su L, Williams GB, O'Brien JT. Neuroimaging characteristics of dementia with Lewy bodies. Alzheimers Res Ther. 2014;6(2):18. doi: 10.1186/alzrt248. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Risacher SL, Saykin AJ. Neuroimaging biomarkers of neurodegenerative diseases and dementia. Semin Neurol. 2013;33(4):386–416. doi: 10.1055/s-0033-1359312. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Reitsma JR AWS, Whiting P, Vlassov VV, Leeflang MMG, Deeks JJ: Chapter 9: Assessing Methodological Quality. In Cochrane Handbook for Systematic Reviews of Diagnostic Test Accuracy Version 1.0. Edited by Deeks JB PM, Gatsonis C.: The Cochrane Collaboration; 2009:1–27. Available from: http://srdta.cochrane.org/.

- 12.Arevalo-Rodriguez I, Pedraza OL, Rodriguez A, Sanchez E, Gich I, Sola I, Bonfill X, Alonso-Coello P. Alzheimer’s disease dementia guidelines for diagnostic testing: a systematic review. Am J Alzheimers Dis Other Demen. 2013;28(2):111–119. doi: 10.1177/1533317512470209. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Whiting P, Rutjes AW, Reitsma JB, Bossuyt PM, Kleijnen J. The development of QUADAS: a tool for the quality assessment of studies of diagnostic accuracy included in systematic reviews. BMC Med Res Methodol. 2003;3:25. doi: 10.1186/1471-2288-3-25. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Whiting PF, Rutjes AW, Westwood ME, Mallett S, Deeks JJ, Reitsma JB, Leeflang MM, Sterne JA, Bossuyt PM. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med. 2011;155(8):529–536. doi: 10.7326/0003-4819-155-8-201110180-00009. [DOI] [PubMed] [Google Scholar]

- 15.Shea BJ GJ, Wells GA, Boers M, Andersson N, Hamel C, Porter A, Tugwell P, Moher D, Bouter L. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007;7:10. doi: 10.1186/1471-2288-7-10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP, Clarke M, Devereaux PJ, Kleijnen J, Moher D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ (Clinical Research Ed) 2009;339:b2700. doi: 10.1136/bmj.b2700. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Appels BA, Scherder E. The diagnostic accuracy of dementia-screening instruments with an administration time of 10 to 45 minutes for use in secondary care: a systematic review. Am J Alzheimers Dis Other Demen. 2010;25(4):301–316. doi: 10.1177/1533317510367485.

- 18. Beynon R, Sterne JA, Wilcock G, Likeman M, Harbord RM, Astin M, Burke M, Bessell A, Ben-Shlomo Y, Hawkins J, Hollingworth W, Whiting P. Is MRI better than CT for detecting a vascular component to dementia? A systematic review and meta-analysis. BMC Neurol. 2012;12:33. doi: 10.1186/1471-2377-12-33.

- 19. Bloudek LM, Spackman DE, Blankenburg M, Sullivan SD. Review and meta-analysis of biomarkers and diagnostic imaging in Alzheimer’s disease. J Alzheimers Dis. 2011;26(4):627–645. doi: 10.3233/JAD-2011-110458.

- 20. Carnero-Pardo C. Systematic review of the value of positron emission tomography in the diagnosis of Alzheimer’s disease. Rev Neurol. 2003;37(9):860–870.

- 21. Crawford S, Whitnall L, Robertson J, Evans JJ. A systematic review of the accuracy and clinical utility of the Addenbrooke’s Cognitive Examination and the Addenbrooke’s Cognitive Examination-Revised in the diagnosis of dementia. Int J Geriatr Psychiatry. 2012;27(7):659–669. doi: 10.1002/gps.2771.

- 22. Dougall NJ, Bruggink S, Ebmeier KP. Systematic review of the diagnostic accuracy of 99mTc-HMPAO-SPECT in dementia. Am J Geriatr Psychiatry. 2004;12(6):554–570. doi: 10.1176/appi.ajgp.12.6.554.

- 23. Ehreke L, Luppa M, Konig HH, Riedel-Heller SG. Is the clock drawing test a screening tool for the diagnosis of mild cognitive impairment? A systematic review. Int Psychogeriatr. 2010;22(1):56–63. doi: 10.1017/S1041610209990676.

- 24. Ferrante D. SPECT for the Diagnosis and Assessment of Dementia and Alzheimer’s Disease. Ciudad de Buenos Aires: Institute for Clinical Effectiveness and Health Policy (IECS); 2004.

- 25. Lischka AR, Mendelsohn M, Overend T, Forbes D. A systematic review of screening tools for predicting the development of dementia. Can J Aging. 2012;31(3):295–311. doi: 10.1017/S0714980812000220.

- 26. Lonie JA, Tierney KM, Ebmeier KP. Screening for mild cognitive impairment: a systematic review. Int J Geriatr Psychiatry. 2009;24(9):902–915. doi: 10.1002/gps.2208.

- 27. Matchar DB, Kulasingam SL, McCrory DC, Patwardhan MB, Rutschmann OT, Samsa GP, Schmechel DE. Use of positron emission tomography and other neuroimaging techniques in the diagnosis and management of Alzheimer’s disease and dementia. Rockville: Agency for Healthcare Research and Quality (AHRQ); 2001.

- 28. Mitchell AJ. CSF phosphorylated tau in the diagnosis and prognosis of mild cognitive impairment and Alzheimer’s disease: a meta-analysis of 51 studies. J Neurol Neurosurg Psychiatry. 2009;80(9):966–975. doi: 10.1136/jnnp.2008.167791.

- 29. Mitchell AJ. A meta-analysis of the accuracy of the mini-mental state examination in the detection of dementia and mild cognitive impairment. J Psychiatr Res. 2009;43(4):411–431. doi: 10.1016/j.jpsychires.2008.04.014.

- 30. Mitchell AJ, Malladi S. Screening and case finding tools for the detection of dementia. Part I: evidence-based meta-analysis of multidomain tests. Am J Geriatr Psychiatry. 2010;18(9):759–782. doi: 10.1097/JGP.0b013e3181cdecb8.

- 31. Mitchell AJ, Malladi S. Screening and case-finding tools for the detection of dementia. Part II: evidence-based meta-analysis of single-domain tests. Am J Geriatr Psychiatry. 2010;18(9):783–800. doi: 10.1097/JGP.0b013e3181cdecd6.

- 32. Monge-Argiles JA, Sanchez-Paya J, Munoz-Ruiz C, Pampliega-Perez A, Montoya-Gutierrez J, Leiva-Santana C. Biomarkers in the cerebrospinal fluid of patients with mild cognitive impairment: a meta-analysis of their predictive capacity for the diagnosis of Alzheimer’s disease. Rev Neurol. 2010;50(4):193–200.

- 33. Papathanasiou ND, Boutsiadis A, Dickson J, Bomanji JB. Diagnostic accuracy of 123I-FP-CIT (DaTSCAN) in dementia with Lewy bodies: a meta-analysis of published studies. Parkinsonism Relat Disord. 2012;18(3):225–229. doi: 10.1016/j.parkreldis.2011.09.015.

- 34. Patwardhan MB, McCrory DC, Matchar DB, Samsa GP, Rutschmann OT. Alzheimer disease: operating characteristics of PET–a meta-analysis. Radiology. 2004;231(1):73–80. doi: 10.1148/radiol.2311021620.

- 35. Treglia G, Cason E, Giordano A. Diagnostic performance of myocardial innervation imaging using MIBG scintigraphy in differential diagnosis between dementia with Lewy bodies and other dementias: a systematic review and a meta-analysis. J Neuroimaging. 2012;22(2):111–117. doi: 10.1111/j.1552-6569.2010.00532.x.

- 36. van Harten AC, Kester MI, Visser PJ, Blankenstein MA, Pijnenburg YA, van der Flier WM, Scheltens P. Tau and p-tau as CSF biomarkers in dementia: a meta-analysis. Clin Chem Lab Med. 2011;49(3):353–366. doi: 10.1515/CCLM.2011.086.

- 37. van Rossum IA, Vos S, Handels R, Visser PJ. Biomarkers as predictors for conversion from mild cognitive impairment to Alzheimer-type dementia: implications for trial design. J Alzheimers Dis. 2010;20(3):881–891. doi: 10.3233/JAD-2010-091606.

- 38. Yuan Y, Gu ZX, Wei WS. Fluorodeoxyglucose-positron-emission tomography, single-photon emission tomography, and structural MR imaging for prediction of rapid conversion to Alzheimer disease in patients with mild cognitive impairment: a meta-analysis. AJNR Am J Neuroradiol. 2009;30(2):404–410. doi: 10.3174/ajnr.A1357.

- 39. Zhang S, Han D, Tan X, Feng J, Guo Y, Ding Y. Diagnostic accuracy of 18F-FDG and 11C-PIB-PET for prediction of short-term conversion to Alzheimer’s disease in subjects with mild cognitive impairment. Int J Clin Pract. 2012;66(2):185–198. doi: 10.1111/j.1742-1241.2011.02845.x.

- 40. Yeo JM, Lim X, Khan Z, Pal S. Systematic review of the diagnostic utility of SPECT imaging in dementia. Eur Arch Psychiatry Clin Neurosci. 2013;263(7):539–552. doi: 10.1007/s00406-013-0426-z.

- 41. Sackett D, Haynes RB. The Architecture of Diagnostic Research. In: Knottnerus J, editor. The Evidence Base of Clinical Diagnosis. London: BMJ Publisher; 2002.

- 42. Birks J, Flicker L. Donepezil for mild cognitive impairment. Cochrane Database Syst Rev. 2006;3:CD006104. doi: 10.1002/14651858.CD006104.

- 43. Martin M, Clare L, Altgassen AM, Cameron MH, Zehnder F. Cognition-based interventions for healthy older people and people with mild cognitive impairment. Cochrane Database Syst Rev. 2011;1:CD006220. doi: 10.1002/14651858.CD006220.pub2.

- 44. Russ TC, Morling JR. Cholinesterase inhibitors for mild cognitive impairment. Cochrane Database Syst Rev. 2012;9:CD009132. doi: 10.1002/14651858.CD009132.pub2.

- 45. Yue J, Dong BR, Lin X, Yang M, Wu HM, Wu T. Huperzine A for mild cognitive impairment. Cochrane Database Syst Rev. 2012;12:CD008827. doi: 10.1002/14651858.CD008827.pub2.

- 46. Tatsioni A, Zarin DA, Aronson N, Samson DJ, Flamm CR, Schmid C, Lau J. Challenges in systematic reviews of diagnostic technologies. Ann Intern Med. 2005;142(12 Pt 2):1048–1055. doi: 10.7326/0003-4819-142-12_Part_2-200506211-00004.

- 47. Chalmers I, Bracken MB, Djulbegovic B, Garattini S, Grant J, Gülmezoglu AM, Howells DW, Ioannidis JPA, Oliver S. How to increase value and reduce waste when research priorities are set. Lancet. 2014;383(9912):156–165. doi: 10.1016/S0140-6736(13)62229-1.

- 48. Macaskill P, Gatsonis C, Deeks JJ, Harbord RM, Takwoingi Y. Chapter 10: Analysing and presenting results. In: Deeks JJ, Bossuyt PM, Gatsonis C, editors. Cochrane Handbook for Systematic Reviews of Diagnostic Test Accuracy Version 1.0. The Cochrane Collaboration; 2010:1–61. Available from: http://srdta.cochrane.org/.

- 49. Reitsma JB, Glas AS, Rutjes AW, Scholten RJ, Bossuyt PM, Zwinderman AH. Bivariate analysis of sensitivity and specificity produces informative summary measures in diagnostic reviews. J Clin Epidemiol. 2005;58(10):982–990. doi: 10.1016/j.jclinepi.2005.02.022.

- 50. Harbord RM, Whiting P, Sterne JA, Egger M, Deeks JJ, Shang A, Bachmann LM. An empirical comparison of methods for meta-analysis of diagnostic accuracy showed hierarchical models are necessary. J Clin Epidemiol. 2008;61(11):1095–1103. doi: 10.1016/j.jclinepi.2007.09.013.

- 51. Hayen A, Macaskill P, Irwig L, Bossuyt P. Appropriate statistical methods are required to assess diagnostic tests for replacement, add-on, and triage. J Clin Epidemiol. 2010;63(8):883–891. doi: 10.1016/j.jclinepi.2009.08.024.

- 52. Whiting P, Rutjes AWS, Reitsma JB, Glas AS, Bossuyt PMM, Kleijnen J. Sources of variation and bias in studies of diagnostic accuracy: a systematic review. Ann Intern Med. 2004;140(3):189–202. doi: 10.7326/0003-4819-140-3-200402030-00010.

- 53. Leeflang MM, Deeks JJ, Rutjes AW, Reitsma JB, Bossuyt PM. Bivariate meta-analysis of predictive values of diagnostic tests can be an alternative to bivariate meta-analysis of sensitivity and specificity. J Clin Epidemiol. 2012;65(10):1088–1097. doi: 10.1016/j.jclinepi.2012.03.006.

- 54. Deeks JJ, Macaskill P, Irwig L. The performance of tests of publication bias and other sample size effects in systematic reviews of diagnostic test accuracy was assessed. J Clin Epidemiol. 2005;58(9):882–893. doi: 10.1016/j.jclinepi.2005.01.016.

- 55. Noel-Storr AH, McCleery JM, Richard E, Ritchie CW, Flicker L, Cullum SJ, Davis D, Quinn TJ, Hyde C, Rutjes AW, Smailagic N, Marcus S, Black S, Blennow K, Brayne C, Fiorivanti M, Johnson JK, Kopke S, Schneider LS, Simmons A, Mattsson N, Zetterberg H, Bossuyt PM, Wilcock G, McShane R. Reporting standards for studies of diagnostic test accuracy in dementia: The STARDdem Initiative. Neurology. 2014;83(4):364–373. doi: 10.1212/WNL.0000000000000621.