Abstract

This chapter examines brain mechanisms of reward utility operating at particular decision moments in life—moments such as when one encounters an image, sound, scent, or other cue associated in the past with a particular reward or perhaps just when one vividly imagines that cue. Such a cue can often trigger a sudden motivational urge to pursue its reward and sometimes a decision to do so. Drawing on a utility taxonomy that distinguishes among subtypes of reward utility—predicted utility, decision utility, experienced utility, and remembered utility—it is shown how cue-triggered cravings, such as an addict’s surrender to relapse, can hang on special transformations by brain mesolimbic systems of one utility subtype, namely, decision utility. The chapter focuses on a particular form of decision utility called incentive salience, a type of “wanting” for rewards that is amplified by brain mesolimbic systems. Sudden peaks of intensity of incentive salience, caused by neurobiological mechanisms, can elevate the decision utility of a particular reward at the moment its cue occurs. An understanding of what happens at such moments leads to a better understanding of the mechanisms at work in decision making in general.

Keywords: decisions, reward utility, decision-making, mesolimbic system, predicted utility, decision utility, experienced utility, remembered utility, incentive salience

This is good news and bad news for utilitarians: the limbic system reward pathways seem to correspond to a utility pump, but specialized brain circuitry processes experience in ways that are not necessarily consistent with relentless maximization of hedonic experience.

—Daniel McFadden, Frisch Lecture, Econometric Society World Congress, London, August 20, 2005 (McFadden, 2005)

How do brain representations of the utility of a reward guide decisions about whether to pursue it? Our focus here is on brain mechanisms of reward utility operating at particular decision moments in life—moments such as when you encounter an image, sound, scent, or other cue associated in your past with a particular reward or perhaps just vividly imagine that cue. Such a cue can often trigger a sudden motivational urge to pursue its reward and sometimes a decision to do so. In drug addicts trying to quit, a cue for the addicted drug might trigger urges that rise to compulsive levels of intensity despite prior commitments to abstain, leading to the decision to relapse into taking the drug again. Normal or addicted, the urge and decision may well have been lacking immediately before the cue was encountered. The decision to pursue the cued reward might never have happened if the cue had not been encountered. Why can such cues momentarily dominate decision making?

This question has both psychological and neural answers, and it may be useful to consider them together. In particular, we think that a full psychological answer involves a particular subtype of reward utility. To help make this answer clear, we draw on a utility taxonomy that distinguishes among subtypes of reward utility: predicted utility, decision utility, experienced utility, and remembered utility (Kahneman, Wakker, & Sarin, 1997). We show how cue-triggered cravings, such as the addict’s surrender to relapse, can hang on special transformations by brain mesolimbic systems of one utility subtype, namely, decision utility. The particular form of decision utility we focus on here is called incentive salience, a type of “wanting” for rewards that is amplified by brain mesolimbic systems. Sudden peaks of intensity of incentive salience, caused by neurobiological mechanisms to be described, can elevate the decision utility of a particular reward at the moment its cue occurs. An understanding of what happens at such moments will lead to a better understanding of the mechanisms at work in decision making in general.

Decisions and Reward Utility Types

When making decisions on hedonic grounds, a good decision is to choose and pursue the outcome, from among all available options, that will be liked best when it is gained. That is, a good decision maximizes reward utility. However, reward utility is not all of one type. To identify the types of reward utility involved in cue-triggered decisions, we draw here on a four-type utility framework proposed by Daniel Kahneman and colleagues: predicted utility, decision utility, experienced utility, and remembered utility (Kahneman et al., 1997).

Predicted utility is the expectation of how much a future reward will be liked. It is based on cognitive or associative prediction of the rewarding value an outcome will have when it is gained in the future.

Decision utility is the subtype of reward utility most directly connected to an actual decision (although most difficult to isolate in psychological terms from other subtypes, especially from predicted utility). As the name suggests, decision utility is the essence of an actual decision at the moment it is made, the valuation of the outcome manifest in choice and pursuit. Most typically, decision utility is revealed by what we decide actually to do.

Experienced utility is what most people think of when they hear the term “reward.” It is the hedonic impact of the reward that is actually experienced when it is finally gained. It is the affective pleasure component of reward utility. For many, experienced utility is the essence of what reward is all about.

Remembered utility is the memory of how good a previous reward was in the past. It is the reconstructed representation of the hedonic impact carried by the remembered reward. Whenever we decide about outcomes we have previously experienced in our past, remembered utility is perhaps the chief factor that determines predicted utility: We generally expect future rewards to be about as good as they have been in the past.

Ordinarily in optimal decisions, all these subtypes of reward utility may be maximized together. But sometimes a decision is less than optimal, and then subtypes of utility may diverge from each other. A major contribution of Kahneman’s utility taxonomy has been to identify cases where predicted or remembered utility diverges from actual experienced utility (Gilbert & Wilson, 2000; Kahneman, Fredrickson, Schreiber, & Redelmeier, 1993; Kahneman et al., 1997). Such divergence can lead to bad decisions on the basis of wrong expectations, called “miswanting” by Gilbert and Wilson (2000; Morewedge, Gilbert, & Wilson, 2005). If one has a distorted remembered utility because of memory illusions of various sorts, one will have a distorted predicted utility. Decisions made on the basis of false predicted utility are likely to turn out to fail to maximize eventual experienced utility. Or if predicted utility is distorted for reasons other than faulty memory, such as by inappropriate cognitive theories about what rewards will be like in the future, then decisions will again turn out wrong. In either case, predicted utility will fail to match actual experienced utility, and the decision is liable to be wrong.

Thus, if decisions are guided principally by predictions about future reward (if decision utility equals predicted utility), then faulty predictions mean that wrong decisions will be made (decision utility does not equal experienced utility). We may thus choose outcomes that we turn out not to like when our predictions about them are wrong. We choose them because we wrongly expect to like them in such cases (and perhaps because we wrongly remember having liked them in the past)—but then we turn out not to like them after all.

The previously described mismatch captures much of what is discussed under the label of miswanting and decisions that fail to maximize utility. But Kahneman’s taxonomy has a further use for an even more intriguing form of miswanting that we exploit here. This might be called irrational miswanting because it can lead to an outcome being wanted even when that outcome’s value is correctly predicted to be less than desirable. In this case, we suggest that decision utility may fail to match predicted utility (Berridge, 1999, 2003a; Robinson & Berridge, 1993). If decision utility exists as a distinct psychological variable (with a somewhat separate neurobiological mechanism), it might sometimes dissociate from predicted utility, just as decision utility (together with predicted utility) sometimes dissociates from experienced utility (Berridge, 1999, 2003b; Robinson & Berridge, 1993). If at any time decision utility could grow above predicted utility, that could mean choosing an outcome that we actually expected not to like at the moment of decision (and that we not only expected not to like but also turned out not to like in the end).

Rational Decisions Versus Irrational Decisions

This brings us squarely to the topic of decision rationality. Decision rationality has been defined in various ways, so we wish to be clear about our own definition. First, unlike some, we do not demand consistency of preference. For psychologists and neuroscientists, there are many good reasons why individual preferences will change from time to time, so we would not call irrational any mere inconsistency of preference. Second, we would also suggest that the rationality of a decision has nothing at all to do with whether an impartial judge or the majority of other people would like the same outcome. Individual tastes are idiosyncratic (as the adage goes, de gustibus non est disputandum: there is no use disputing about individual differences in tastes). For the purpose of decision rationality, we simply accept individual tastes for what they are—differences in individual characteristics of experienced utility that make different things liked by different people (or even by the same person at different times).

Further, the rationality of a decision does not even depend on whether deciders themselves end up eventually liking their chosen outcome. Deciders can be mistaken about whether they will like an outcome they choose, as in mispredicted miswanting mentioned previously. People often choose an outcome they expect to like but then are disappointed to find they actually do not like it. That is not irrational—in those cases choosers may have done the best they can—they were simply wrong in their expectations of predicted utility. Reasons for being wrong about the predicted utility of an outcome can include ignorance for never-experienced outcomes, incorrect theories about the goodness of a hypothetical outcome or about one’s own hedonic tastes, and mistaken memories about having liked something in the past (incorrect remembered utility) (Gilbert & Wilson, 2000; Kahneman et al., 1997). All these can make a decision mistaken, wrong, bad, and regrettable—even stupid. But by themselves, they do not make a decision irrational, however wrong the decision turns out to be.

We suggest that a decision remains rational as long as one chooses what one expects to like best, that is, as long as decision utility equals predicted utility. If predicted utility of an outcome is high, then choosing that outcome is rational by definition. If you believe that you will like an outcome, you are rational to choose it, to want it, and to pursue it actively—you should pursue it precisely to the degree that you expect to like it. If you turn out not to like the outcome after all, well, blame your theories, memories, or understanding of the world. But decision rationality cannot be held responsible for the eventual unhappy experienced utility because rationality in this sense cannot be held accountable for the accuracy of your predictions—only for the consistency with which you act on them.

An irrational decision is to choose what you expect not to like. That is, a decision is irrational when its decision utility does not equal predicted utility. When decision utility is greater than predicted utility, if that can happen, then one might be said to choose what one does not expect to like (not only what one mistakenly expects to like). To choose what one does not expect to like is to choose in a way that is strongly irrational, as we define irrationality. For the purpose of identifying irrational decision mechanisms in the experiments here, this is the definition we will rely on: that one chooses disproportionately to expectation of liking so that decision utility is greater than predicted utility. Here we describe a mechanism that under specific conditions produces irrational decisions, even by our restrictive definition of irrationality, though we believe it evolved to motivate good decisions in normal life.
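The definitions above can be made concrete with a small sketch. It assumes, purely for illustration, that each utility subtype can be placed on a common numeric scale; the class name `Utilities`, the function `classify_decision`, the label strings, and the tolerance `tol` are hypothetical and are not part of the chapter's framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Utilities:
    """Hypothetical numeric stand-ins for three utility subtypes."""
    predicted: float    # how much the outcome is expected to be liked
    decision: float     # how strongly the outcome is chosen and pursued
    experienced: float  # how much the outcome is liked once it is gained


def classify_decision(u: Utilities, tol: float = 0.1) -> str:
    """Label a decision using the chapter's definition of rationality.

    `tol` is an arbitrary threshold (an assumption) for treating two
    utilities as equal.
    """
    if u.decision - u.predicted > tol:
        # Chosen out of proportion to the expectation of liking.
        return "strongly irrational"
    if u.predicted - u.decision > tol:
        # Decision utility also fails to equal predicted utility here.
        return "irrational (decision below prediction)"
    if abs(u.predicted - u.experienced) > tol:
        # Acted consistently on a prediction that turned out to be wrong.
        return "rational but mistaken"
    return "rational and accurate"


print(classify_decision(Utilities(predicted=0.2, decision=0.9, experienced=0.2)))
print(classify_decision(Utilities(predicted=0.8, decision=0.8, experienced=0.1)))
```

The first call corresponds to "wanting" what is not expected to be liked; the second corresponds to mispredicted miswanting, which is wrong in outcome but still rational under this definition.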

Brain Mechanisms of Reward Utility

Insights into rewards and decisions would be enhanced by an understanding of their brain mechanisms. Affective neuroscience studies of reward have shown that many brain structures are activated by reward utilities (Berridge, 2003b; Davidson, Shackman, & Maxwell, 2004; Kringelbach, 2004; Montague, Hyman, & Cohen, 2004; Schultz, 2006; Shizgal, 1999; Shizgal, Fulton, & Woodside, 2001). These include regions of the neocortex, especially the prefrontal cortex (ventromedial, orbitofrontal, and anterior cingulate areas), the insular cortex (which includes taste sensory representations), and the amygdala.

But which brain mechanisms actually generate reward utility? So far, the most potent causal demonstrations have come chiefly from manipulations of brain structures below the cortex: subcortical limbic structures (Berridge, 2003b; Shizgal, 1999). We focus our analysis of experienced utility and decision utility generation on these subcortical structures, such as mesolimbic dopamine systems, nucleus accumbens, and ventral pallidum.

Before we focus on details, we must emphasize that neither cortical nor subcortical regions operate on their own and that massive reciprocal projections link them together. Connections from subcortical to cortical regions are undoubtedly required for translation of “liking” and other basic utilities generated in subcortical limbic structures into consciousness and cognitive representations. In return, descending projections from the cortex to subcortical limbic structures permit cognitive appraisals or voluntary intentions to modulate basic emotional reactions (Davidson, Jackson, & Kalin, 2000). Still, if one has to choose just a few causal generators of reward utility, the best candidates come mostly from below the cortex.

Brain Mesolimbic Utility Generator

Perhaps the most famous subcortical reward generating substrate has been the mesolimbic dopamine system that sends its dopamine-containing fibers up from midbrain to the nucleus accumbens and related structures, passing through the lateral hypothalamus on the way. The nucleus accumbens in turn projects heavily downward, most densely above all to the ventral pallidum, a relatively little known but highly intriguing limbic structure that sits just in front of the lateral hypothalamus near the bottom of the forebrain. The ventral pallidum projects back upward into thalamocortical circuits that reach the orbitofrontal cortex, the cingulate cortex, and the insular cortex as well as downward to deeper brain structures. This looping mesolimbic dopamine–accumbens–pallidum–cortical system is a useful brain circuit to turn to in order to tease apart reward utility types. We take examples from studies of both humans and animals. Humans provide the most vivid insights into psychological dissociations, while animal studies give the clearest revelation of underlying mechanisms.

Do Strongly Irrational Decisions Exist? (Decision Utility Is Greater Than Predicted Utility)

The point of our subcortical focus here is to show how rational and irrational decision utility, especially as cue-triggered “wanting,” might be generated by brain systems in particular circumstances. To start with, you might well wonder, are there really any cases where people irrationally want what they neither like nor expect to like? We think there may be some cases generated by subcortical manipulations, though these cases have not always been recognized for what they are. A good example might be false “pleasure electrodes,” perhaps a case of neuroscientific mistaken identity.

False Pleasure Electrodes—Decision Utility Without Experienced Utility?

Pleasure electrodes have been famous since the 1950s but may generally turn out not to live up to their name. These are stimulation electrodes in the subcortical forebrain that rats and people would work to stimulate, pressing a lever or button thousands of times in a few hours to activate (Heath, 1972; Olds & Milner, 1954; Shizgal, 1999). What intense pleasure (experienced utility) and expectations of pleasure (predicted utility) must occur in order to motivate such intense wanting to activate the electrode (decision utility)—or so you might think.

But maybe most “pleasure electrodes” are not so pleasurable after all. For example, one of the most famous cases ever was “B-19,” a young man implanted with stimulation electrodes by Heath and colleagues in the 1960s (Heath, 1972). B-19 voraciously self-stimulated his electrode and protested when the stimulation button was taken away. In addition, his electrode caused “feelings of pleasure, alertness, and warmth (goodwill); he had feelings of sexual arousal and described a compulsion to masturbate” (Heath, 1972, p. 6). Still, did B-19’s electrode really cause an intense pleasure sensation? The answer seems to be no. B-19 was never quoted as saying that the sensation was pleasurable in the papers and books written by Heath, not even an exclamation or anything like “Oh wow, that feels nice!”

Rather than simple pleasure, stimulation of B-19’s electrode evoked the desire to stimulate again and again, along with strong sexual arousal. It never produced actual sexual orgasm or clear evidence of actual pleasure sensation. Clearly, the stimulation did not serve as a substitute for sexual acts.

Decades later, another patient showed similar findings, this time a woman with an electrode implanted in deep subcortical forebrain (Portenoy et al., 1986). She stimulated her electrode at home compulsively to the extent that “at its most frequent, the patient self-stimulated throughout the day, neglecting personal hygiene and family commitments” (Portenoy et al., 1986, p. 279). When her electrode was stimulated in the clinic, it produced a strong desire to drink liquids, some erotic feelings, and a continuing desire to stimulate again. Notably, records indicate that “though sexual arousal was prominent, no orgasm occurred” (Portenoy et al., 1986, p. 279). “She described erotic sensations often intermixed with an undercurrent of anxiety. She also noted extreme thirst, drinking copiously during the session, and alternating generalized hot and cold sensations” (Portenoy et al., 1986, p. 279). Clearly, this woman felt a mixture of subjective feelings, but the description’s emphasis is on aversive thirst and anxiety. As with patient B-19, there is no evidence of distinct pleasure sensations. Although stimulation made B-19 want to perform sexual acts and the woman had erotic thoughts, neither patient had orgasmic sensations from his or her electrode. (In contrast to the failure of these forebrain-stimulating electrodes, spinal cord stimulation has been suggested to actually improve sexual function by enhancing orgasmic performance; Meloy & Southern, 2006.)

What could brain stimulation be doing, if not inducing pleasure? This helps pinpoint the idea of incentive salience, a psychological process of reward “wanting” that is a form of decision utility (Berridge, 2003a; Berridge & Robinson, 1998; Robinson & Berridge, 1993). Incentive salience is different from reward “liking” or pleasurable hedonic impact that corresponds to experienced utility. We suggest that brain stimulation in these patients evoked only intense “wanting”—but not “liking.” Brain stimulation may have caused incentive salience to be attributed to stimuli perceived at surrounding moments, including people (who became more interesting and appealing), the room (which became attractive and “brightened up”), and most especially the button and the act of pushing it (which became irresistible to do again). The button itself is most closely paired with electrode activation and so becomes a conditioned stimulus attributed most with incentive salience. If brain stimulation elevated “wanting” attribution to the button as a form of decision utility without a corresponding increase in experienced utility, a person might well “want” to activate their electrode again and again, even if it produced no pleasure sensation. That would be mere incentive salience “wanting”—without hedonic “liking.”

Does the electrode hijack decision utility alone as we suggest? Or does it hijack predicted utility as well as decision utility, causing false expectations of future reward? That is, the electrodes might produce a false declarative expectation that the activation will produce an intensely liked pleasure even though the last one never did. If so, then both predicted utility and decision utility would exceed the eventually experienced utility (that is, the lack of pleasure actually received). We return to this issue in the animal affective neuroscience experiments later in this chapter.

One would like to know more about the experience, expectations, and motives of these people with brain self-stimulation. The information available from past studies of patients is frustratingly sparse and crude. It is possible that better information might be gathered in future, now that a revival of deep brain stimulation and electrode implantation appears to be under way (e.g., as an experimental treatment for Parkinson’s disease). Better information is something to be hoped for.

Animal Affective Neuroscience Experiments: Isolating Decision Utility

Some better information can be gained from affective neuroscience experiments with animals because in them one can use painless brain manipulations to better tease apart “wanting” from “liking.” Our own analyses of reward utility types began over a decade ago in part with an animal equivalent of the electrode patients. In rats as well as people, “rewarding” brain electrodes typically turn on motivations to eat, drink, have sex, and so on if the electrode stimulation is given freely. Why do rats eat, say, when a reward electrode is turned on? Early hypotheses suggested that they ate more food because stimulation made them like food more (Hoebel, 1988). But an early study in our lab with Elliot Valenstein led to the different conclusion that the electrode increased the incentive salience or decision utility of food, causing rats to “want” to eat it without increasing “liking” for its hedonic impact or experienced utility (Berridge & Valenstein, 1991).

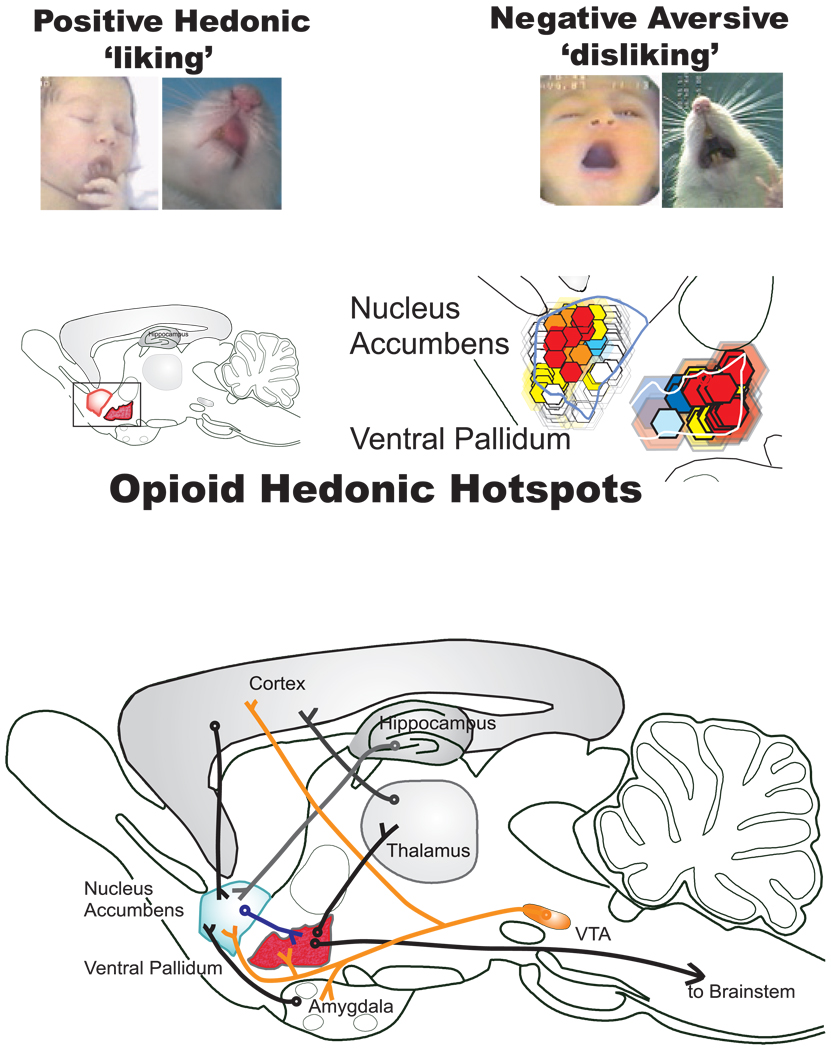

How can “wanting” and “liking” possibly be told apart in rats? We have tackled this by assessing affective reactions that are very specific to hedonic impact “liking” (Figure 24.1). They are not influenced by independent changes in “wanting.” The affective reactions are “liking” facial expressions that are elicited by sweet tastes; several of these expressions (e.g., tongue protrusions) are homologous in human infants and many animals, including apes, monkeys, and rats. By contrast, nasty, bitter tastes elicit “disliking” expressions (e.g., gapes). Such affective “liking”/“disliking” reactions provide windows into brain systems that paint a pleasure gloss onto sweet and related taste sensations because the expressions change when brain manipulations alter the pleasant hedonic impact of those tastes.

Fig. 24.1.

“Liking” reactions and brain hedonic hot spots. Top: Positive hedonic “liking” reactions are elicited by sucrose taste from human infant and adult rat (e.g., rhythmic tongue protrusion). By contrast, negative aversive “disliking” reactions are elicited by bitter quinine taste. Below: Forebrain hedonic hot spots in limbic structures where mu opioid activation causes a brighter pleasure gloss to be painted on sweet sensation. Red/yellow shows hot spots in nucleus accumbens and ventral pallidum where opioid microinjections caused the biggest increases in the number of sweet-elicited “liking” reactions. Based on Peciña and Berridge (2005), Smith and Berridge (2005), and Peciña, Smith, and Berridge (2006).

Brain Limbic Hedonic Hot Spots Generate Experienced Utility (“Liking”)

Using this approach, recent studies by Susana Peciña, Kyle Smith, Stephen Mahler, Sheila Reynolds and others in our laboratory have begun to map neural substrates that generate a basic experienced utility for sweetness hedonic impact (Mahler, Smith, & Berridge, 2007; Peciña & Berridge, 2000, 2005; Peciña, Smith, & Berridge, 2006; Reynolds & Berridge, 2002; Smith & Berridge, 2005; Tindell, Smith, Peciña, Berridge, & Aldridge, 2006). These studies have identified a number of hedonic hot spots: neuroanatomical sites where specific neurochemical signals are able to cause increases in the hedonic impact of sweetness “liking” (Figure 24.1). Such experiments use painless microinjections, delivered by previously implanted brain cannulae, to activate a brain substrate. Tiny droplets of morphine-type drugs (called opiate drugs because they activate opioid brain chemicals) are delivered to a hot spot in a brain structure such as the nucleus accumbens, where they activate the opioid circuits and cause increased hedonic “liking” reactions to the sweet taste of sugar. By moving microinjections to different locations in the structure, we can map the boundaries of the hedonic hot spot, and by varying the drug content, we can identify the neurochemical systems that paint the pleasure gloss of this basic experienced utility onto sweet sensation (Peciña et al., 2006; Tindell et al., 2006).

Hedonic hot spots, each about a cubic millimeter in size in rats (in humans, hot spots might be closer to a cubic centimeter if proportional to overall brain size), exist in subcortical limbic structures such as the nucleus accumbens and the ventral pallidum (Peciña et al., 2006; Tindell et al., 2006). In these hot spots, microinjection of the drug DAMGO activates mu opioid receptors on neurons and causes sweet tastes to elicit double or triple the number of positive hedonic “liking” reactions they normally would. In other words, DAMGO in these hot spots activates an experienced utility mechanism that magnifies the pleasure impact of sweet tastes to make them more “liked.” At the same time, the microinjections that cause “liking” (experienced utility) also cause greater “wanting” (decision utility): The rats seek out food and eat three times as much as normal. Accordingly, neurons in a hedonic hot spot appear to code both “liking” and “wanting” by their firing rates (Tindell, Berridge, Zhang, Peciña, & Aldridge, 2005; Tindell et al., 2006).

Mesolimbic Dopamine Generates “Wanting” but Not “Liking”

In contrast with opioid microinjections that induce both “liking” and “wanting” for rewards, deep brain electrode stimulation that makes rats eat more nonetheless fails to increase “liking” reactions to sweetness (Berridge & Valenstein, 1991). If anything, the electrode caused more “disliking” reactions to be elicited by sugar taste (as if making it more similar to a bitter taste). In other words, the rats do not seem to eat more because they “like” food more. Instead, rats eat more despite not “liking” it more or even in some instances actually “disliking” food more. This seems to be a brain-based separation among utility types for food reward: increased decision utility (“wanting” and food consumption) without increased experienced utility (“liking” reactions to sugar).

We have observed a number of other similar brain manipulations that caused increases in motivational “wanting” but failed to increase pleasure (“liking”) for the same reward (Reynolds & Berridge, 2002; Robinson & Berridge, 2003; Wyvell & Berridge, 2000, 2001). Many of these brain manipulations that dissociated decision utility from experienced utility have involved the brain’s mesolimbic dopamine system, which was once thought to cause sensory pleasure. Our work, combined with other neuroscience evidence, has led to the contrary conclusion that dopamine fails to live up to its pleasure neurotransmitter label. Dopamine systems simply seem unable to cause pleasure, as assessed by “liking” reactions, unless accompanied by other neural events, even though dopamine activation can induce powerful motivation to acquire food and other rewards in animals and humans. We have tried both activating and suppressing dopamine in several ways, but it never alters pleasure reactions (Berridge, 2007; Berridge & Robinson, 1998; Tindell et al., 2005).

So if dopamine is a faux pleasure, what is its real psychological role? Our studies led us to suggest that modulating reward “wanting” rather than “liking” best captures what dopamine does. In particular, by “wanting,” we mean the attribution of incentive salience to reward stimuli, which causes them to be perceived as attractive incentives (Berridge, 2007; Berridge & Robinson, 1998; Tindell et al., 2005). For most of us in our everyday experience, “liking” and “wanting” usually go together for pleasant rewards, as two sides of the same psychological coin. But “wanting” may be separable in the brain from “liking,” and mesolimbic dopamine systems mediate only “wanting.” We and our colleagues coined the phrase incentive salience for the particular psychological form of “wanting” that we think is mediated by brain dopamine systems.

What Is “Incentive Salience”?

“Wanting” is not “liking.” “Wanting” is not a sensory pleasure in any sense. And “wanting” cannot increase positive facial reactions to sweet taste or the hedonic impact of any sensory pleasure. Indeed, incentive salience is essentially nonhedonic in nature, even though it is important to the larger composite of processes that motivate us for reward. Faced with a number of goals (e.g., thirst versus hunger), “wanting” evolved to serve as a means to make decisions among different types of rewards (e.g., water versus food). Thus, “wanting” may provide a common neural currency or a comparison yardstick for decision utility in evaluating multiple choices (Shizgal, 1997). Usually “liking” and “wanting” for pleasant incentives do go together, but specific manipulations of dopamine-related brain mechanisms may sometimes pull them apart.

We believe that brain dopamine systems especially attribute incentive salience to reward representations at moments when a cue is encountered that has been associated with the reward in the past (or perhaps even vividly imagined). Incentive salience is attributed to Pavlovian cues following what have been called Bindra–Toates rules of learned incentive motivation (Berridge, 2001; Bindra, 1978; Toates, 1986). When a cue is attributed with incentive salience by mesolimbic brain systems, it causes both that cue and its reward to become momentarily more intensely attractive and sought. The cue actually takes on “motivational magnet” properties of its reward: It can become almost ingestible if it is a cue for food reward, drinkable if a cue for water reward, attractive in a drug-related way for cues for drug reward, and so on (and animals have been known to try to eat or drink their incentive cues in studies of what is called Pavlovian “autoshaping”). Related conditioned stimulus (CS) effects may be visible in human crack cocaine addicts who “chase ghosts” and visible CSs, scrabbling on the kitchen floor after white crumbs resembling crack crystals even if they know the crumbs are only sugar. The cue also is able to trigger increased “wanting” for its actual reward, priming the motivational desire in cue-triggered “wanting”—such as when a cue reminds you that it is lunchtime and you suddenly feel hungry.

Physiological drive states such as hunger or thirst directly modulate the incentive salience attributed to cues relevant to their particular reward. They also modulate the hedonic impact of the rewards themselves. For example, hunger makes food taste better than usual, whereas physiological sodium appetite makes salty tastes “liked” more, and these physiological states also make learned cues for those rewards instantly attractive and “wanted.” Multiplicative interactions between reward cues and relevant physiological appetite states are a defining feature of incentive salience “wanting.”

“Wanting” Versus Ordinary Wanting

The quotation marks around the term “wanting” serve as a caveat to acknowledge that incentive salience means something different from the ordinary common language sense of the word wanting. For one thing, “wanting” in the incentive salience sense need not have a conscious goal or declarative target. Wanting in the ordinary sense, on the other hand, nearly always means a conscious desire for an explicitly expected outcome. In the ordinary sense, we consciously and rationally want those things we expect to like. Conscious wanting and core “wanting” differ psychologically and probably also in their brain substrates, with cognitive wanting mediated by cortical structures and incentive salience “wanting” mediated more by subcortical systems.

Reward “wanting” or incentive salience is thus just one type of decision utility. It is only decision utility—not experienced utility (which is more similar to “liking”) or predicted utility (prediction or expectation of future reward). And it is not even all of decision utility—it can leave out the cognitive beliefs and resolutions that constitute more cognitive wants. If we are correct in our hypothesis, then this specificity of “wanting” means that selective activation of mesolimbic dopamine systems can produce truly irrational decisions. Activation of brain mesolimbic mechanisms for incentive salience can lead to “wanting” what is neither “liked” nor even expected to be liked sufficiently to rationally justify the decision to pursue (and thus sometimes not even wanted in a more abstract cognitive sense: irrational “wanting” impulses that occur despite not cognitively wanting).

Cue-Triggered “Wanting” as a Special Subtype of Decision Utility

Reward cues are often potent triggers for urges and decisions to pursue and consume those rewards. Why are cues so motivationally potent? The incentive salience hypothesis offers a specific answer because it posits that reward cues are attributed with dopamine-driven incentive salience by mesolimbic circuits.

These conclusions come largely from animal experiments on cue-triggered decision utility, which we describe now. Such experiments have sometimes used a procedure called Pavlovian instrumental transfer, which for our purposes can be thought of as a way of isolating incentive salience as cue-triggered “wanting.” In those studies, the rats are first trained to work (press a lever) for the real rewards. Since rewards come only every so often, animals learn to persist in working to earn reward even when sparse. In a separate training session, rats are presented with rewards under conditions where they do not have to work. Besides not having to work for the reward, the significant change here is that each reward is associated with an auditory tone cue 10 to 30 seconds long. Just as with Pavlov’s dogs, the cues come to signify reward for the animals, becoming Pavlovian conditioned stimuli (CS+). With these two steps, training is complete.

Testing begins after the training is completed. A special experimental feature is employed, namely, extinction tests. Rats are tested for their willingness to work for rewards later under extinction conditions, so called because the rewards are no longer delivered at all. Since there are no real rewards any longer, the rats have only their expectations of reward to guide them. Naturally, without real rewards to sustain efforts, performance in the extinction test gradually falls. But since the rats originally learned that perseverance pays off, they persist for quite some time in working based largely on their ordinary wanting for reward. The amount of work (number of lever presses) the animals are willing to perform under these conditions of no reward delivery is the measure of “wanting.” Since no actual rewards are delivered (i.e., extinction), the analysis is not confounded by consumption of rewards.

The crux of the matter to reveal cue-triggered “wanting” is to test the effects of Pavlovian cues, the tones formerly presented in association with the rewards, in various states of brain mesolimbic activation. These cues are presented once in a while as the rats continue to work—or not, as the case may be. During this extinction test, cues come and go while the rats work in order to get reward that is never delivered. Finally, brain mesolimbic activation is manipulated by varying whether the rats receive a drug microinjection that causes increases in dopamine release.

Dopamine Magnifies Cue-Triggered “Wanting”

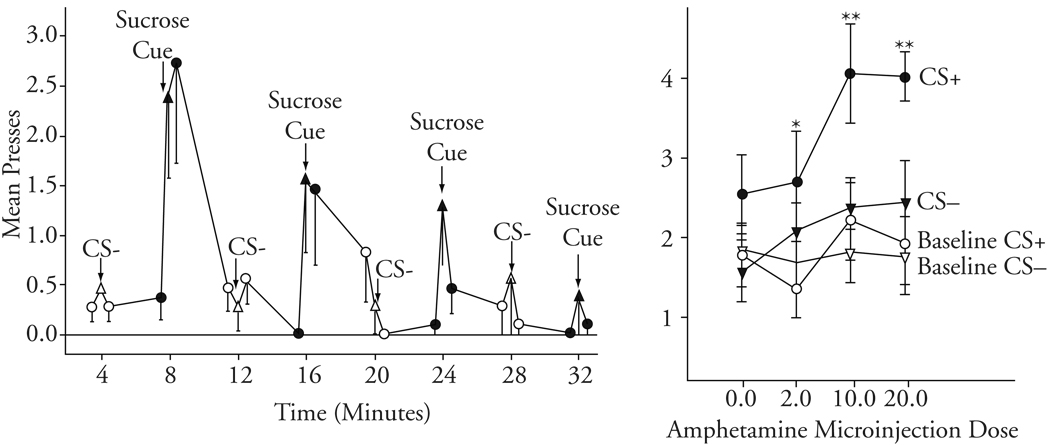

Cindy Wyvell used this test in our laboratory and found a form of truly irrational choice that depended on mesolimbic (dopamine) activation (Wyvell & Berridge, 2000, 2001). She used amphetamine microinjections into the brain nucleus accumbens to activate mesolimbic dopamine systems. Amphetamine causes dopamine neurons to release their dopamine into synapses so that it can reach other neurons. Wyvell found that dopamine activation caused a transient but intense form of irrational pursuit linked to incentive salience (Figure 24.2). One group of rats received amphetamine microinjections before their behavioral test, while another group received saline. During this test, their baseline performance could be guided only by their expectation of the cognitively wanted sugar because they received no real sugar rewards. And while they pursued their expected reward, the Pavlovian reward cue (light or sound for 30 seconds) was occasionally presented to them over the course of the half-hour session.

Fig. 24.2.

Irrational cue-triggered “wanting.” Transient irrational “wanting” comes and goes with the cue (left). Amphetamine microinjection in nucleus accumbens magnifies “wanting” for sugar reward—but only in presence of reward cue (CS+). Cognitive expectations and ordinary wanting are not altered (reflected in baseline lever pressing in absence of cue and during irrelevant cue, CS−) (right). Based on Wyvell and Berridge (2000).

Wyvell’s findings were consistent and clear. Amphetamine microinjection enhanced “wanting” for sugar. Animals worked for the rewards, and during the presentation of the Pavlovian cue, they showed peaks of dramatically harder work; that is, their level of “wanting” increased. Amphetamine in their brains selectively raised the height of those “wanting” peaks without changing the baseline plateau on which the peaks sat or anything else. It should be noted that there are two types of wanting assessed here: (a) ordinary wanting, where the rat is guided primarily by its cognitive expectation that it will like the worked-for sugar reward, and (b) cue-triggered “wanting,” or incentive salience attributed by mesolimbic systems to the representation of sugar reward that is activated by the cue. Dopamine activation selectively quadrupled cue-triggered “wanting,” causing a specific elevation in this particular form of decision utility. A similar specificity, in reverse, has been found for suppressing effects of dopamine-blocking drugs on cue-triggered “wanting” (Dickinson, Smith, & Mirenowicz, 2000).

Even though the dopamine rise in Wyvell’s experiments was relatively constant over the half-hour test, the elevation in “wanting” was not. It required two conditions simultaneously: dopamine activation plus the presence of the cue previously associated with reward. Thus, the “wanting” peak was repeatedly reversible, even over the short span of a half-hour test session, coming and going with the 30-second cue (Wyvell & Berridge, 2000). This exaggeration of a “cue-triggered wanting” phenomenon caused by activating mesolimbic dopamine demonstrated by Wyvell was both irrational (detaching from stable expectations of reward value expressed by lever pressing for reward between cues) and transient (always decaying within a minute of the cue’s end).

In a related experiment, Wyvell tested the effect of amphetamine microinjections on the experienced utility of real sugar by measuring positive hedonic “liking” reactions of rats as they received an infusion of sugar solution into their mouths. The amphetamine never increased rats’ positive facial reactions elicited by the taste of real sugar, indicating once again that dopamine did not increase “liking” for the sugar reward. Thus, Wyvell found that activation of dopamine neurotransmission in the accumbens did not change ordinary wanting based on cognitive expectation of liking (measured by baseline performance on the lever), nor did it alter “liking.”

In an elevated dopamine state, hyper-“wanting” is triggered by an encounter with reward cues, and at that moment it exerts its irrational effect, disproportionate to the cognitively expected hedonic value of the reward. In other words, we suggest that at the moment of a reward cue, decision utility diverges from predicted utility if the brain is dopamine stimulated by amphetamine. One moment, the dopamine-activated brain of the rat simply wants sugar in the ordinary sense, although the decision is tempered by the fact that there is no reward presented during extinction. The next moment, when the cue comes, the dopamine-activated brain both wants sugar and “wants” sugar to an exaggerated degree, according to the incentive salience hypothesis (Figure 24.2). A few moments after the cue ends, it has returned to its rational level of wanting appropriate to its expectation of reward. Moments later still, the cue is reencountered, and excessive and irrational “wanting” again takes control.

The irrational level of pursuit thus has two sources that determine its occurrence and duration: a physiological factor (brain mesolimbic activation) and a psychological factor (reward cue activation). It seems unlikely that mesolimbic activation altered rats’ cognitive expectation of how much they would like sugar (which might have rationally increased desire even though their expectation would be mistaken). That is because amphetamine was present in the nucleus accumbens throughout the entire session, but the intense enhancement of pursuit lasted only while the cue stimulus was actually present.

Human Drug Addiction as Sensitized “Wanting”

Human drug addiction may be a special illustration of irrational “wanting” driven by mesolimbic brain systems (Robinson & Berridge, 1993, 2003). Addictive drugs not only activate brain dopamine systems when the drug is taken but also may sensitize them afterward. Neural sensitization means that the brain’s mesolimbic system is hyperreactive and therefore more easily activated by drugs or related cues for a long time and maybe even permanently. The mesolimbic system reacts more strongly than normal if the drug is taken again. This state of hyperactive reactivity is gated by associative cues and contexts that predict the drug. Neural sensitization occurs to different degrees in different individuals. Some individuals are susceptible to sensitization, but others are not, depending on many factors, ranging from genes to prior experiences as well as on the drug itself, the dose, and so on (Robinson & Berridge, 1993, 2003).

Efforts to apply these insights gave rise to the incentive-sensitization theory of addiction, developed primarily by Terry Robinson, which specifies the role sensitization of incentive salience may play in driving addicts to compulsively take drugs (Robinson & Berridge, 1993, 2003). This theory suggests that if the mesolimbic system of addicts becomes sensitized after taking drugs, they may irrationally “want” to take drugs again—even if they have fully emerged from withdrawal by the time they relapse and even if they decide they don’t “like” the drugs very much (or at least like them less than they like the lifestyle they will lose by taking them). This incentive-sensitization theory of addiction thus accounts for why addictive relapse is so often precipitated by encounters with drug cues that trigger excessive “wanting” for drugs. In a sensitized mesolimbic state, the reward cues trigger a momentary rise in decision utility that far outstrips any predicted or experienced utility of the drugs. Drug cues are attributed with more incentive salience than other cues because they are associatively paired with strong drugs. Drug cues could trigger irrational “wanting” in an addict whose brain was sensitized even long after withdrawal was over (because sensitization lasts longer) and regardless of expectations of “liking.”

Actual evidence that sensitization does indeed cause irrational cue-triggered “wanting” was recently found by Cindy Wyvell in an affective neuroscience animal study of mesolimbic sensitization by drugs similar to the study described previously (Wyvell & Berridge, 2001). Rats that had been previously sensitized by amphetamine responded to a sugar cue with excessive “wanting” despite not having had any drug for 10 days. Even though the rats were drug free at the time of testing, sensitization (i.e., the brain in a state of permanent mesolimbic excitability) caused excessively high cue-triggered “wanting” for their reward. For sensitized rats, irrational “wanting” for sugar came and went transiently with the Pavlovian cue associated with the sugar reward, just as if they had received a brain microinjection of drug to immediately activate the mesolimbic system—but they had not. Their persisting pattern of cue-triggered irrationality seems consistent with the incentive-sensitization theory of human drug addiction (Robinson & Berridge, 1993, 2003). Similarly, neural sensitization by drugs has been found to increase other cue and motivation effects, such as conditioned reinforcement, and the persistence of motivated performance on second-order schedules and instrumental breakpoint in animals (Vanderschuren & Everitt, 2005; Vezina, 2004).

Separating Neural Codes for Predicted Utility From Decision Utility

A crucial question about enhancements of cue-triggered “wanting” discussed previously is whether the mesolimbic increase applies to predicted utility as well or just decision utility. It is clear that decision utility was elevated in the previously mentioned experiments by prior sensitization or direct amphetamine effects. But did dopamine magnify decision utility purely and alone? Or could dopamine elevation also have raised predicted utility too? If so, a sensitized individual might hold mistakenly exaggerated expectations for future reward, expecting eventual experienced utility to be higher than it really will be. If that happened, then decision utility would also elevate and passively trail after predicted utility. After all, if one mistakenly expects a reward to be better than it will be, then one may choose to pursue it more than one otherwise would.

Contemporary Dopamine Models of Predicted Utility

A prediction error interpretation (mistakenly elevated expectation of reward) is highlighted by recent intriguing hypotheses about dopamine and predictive reward learning in computational neuroscience. These have suggested that dopamine neurons may help mediate the associations and predictions involved in reward learning, either via stamping in associations to an unconditioned stimulus (UCS) prediction error or by modulating the strength of learned predictions or learned habits elicited by a CS (Dayan & Balleine, 2002; McClure, Berns, & Montague, 2003; Montague et al., 2004; O’Doherty, Dayan, Friston, Critchley, & Dolan, 2003; Schultz, 2002, 2006; Schultz, Dayan, & Montague, 1997).

Elegant and influential studies by Wolfram Schultz and colleagues, for example, have led to suggestions that the firing of dopamine neurons may code the predicted utility of a CS cue that predicts reward (and the prediction error of a surprising UCS reward itself). For example, dopamine firing has been suggested by learning theorists to approximate the Rescorla–Wagner model of Pavlovian conditioning, that is, $\Delta V = \alpha\beta(\lambda - V)$, and the temporal difference model of gradual associative learning, that is, $V(s_t) = \langle \sum_{i=0} \gamma^{i} r_{t+i} \rangle$ (Montague et al., 1996; Rescorla & Wagner, 1972; Schultz, 2002). In those dopamine-learning models, firing by dopamine neurons to a CS cue that predicts reward encodes its predicted utility ($V$). If a UCS reward has failed to be predicted and thus is surprising, dopamine neurons may fire again, suggested by these models to encode the prediction error: $(\lambda - V)$ or $\delta(t)$.
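As a minimal illustration of the Rescorla–Wagner update just quoted, the following Python sketch iterates the rule over repeated CS–UCS pairings. The function name, the parameter values (a learning rate of 0.3, a UCS salience of 1.0, an asymptote of 1.0), and the number of trials are illustrative assumptions, not values taken from the studies cited. The sketch shows only that predicted utility V climbs toward its asymptote gradually, one trial at a time.

```python
def rescorla_wagner(n_trials, alpha=0.3, beta=1.0, asymptote=1.0, v0=0.0):
    """Return V before the first trial and after each of n_trials pairings.

    alpha, beta: learning-rate parameters for the CS and UCS (assumed values).
    asymptote:   maximum associative strength the UCS can support (assumed).
    """
    v = v0
    history = [v]
    for _ in range(n_trials):
        prediction_error = asymptote - v       # surprise on this trial
        v += alpha * beta * prediction_error   # incremental update of V
        history.append(v)
    return history


values = rescorla_wagner(20)
for trial in (0, 1, 5, 20):
    print(f"after {trial:2d} trials: V = {values[trial]:.4f}")
```

Under these assumed parameters the prediction error shrinks on every trial, so each pairing adds less to V than the one before.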

The crucial feature of such learning models, when applied to predicted utility of reward and to mesolimbic dopamine function, is that dopamine elevation can generate new learning only by creating a UCS prediction error if the experienced utility of UCS is greater than CS expected. This feature results from the fact that previously learned values are “cached” and can be changed only incrementally and only by having further opportunities to learn a changed new relationship between CS and UCS.

Teasing Apart Predicted Utility and Decision Utility

For example, if one elevates learning parameters in the models, as a dopamine rise might do (according to these dopamine-prediction models), the predicted utility V carried by a CS+ does not immediately change. Instead, in the next learning trial, the UCS will cause a larger prediction error or faster learning rate, which will be saved until the next trial. Evidence for new learning is postponed until it can be demonstrated in subsequent trials. The new learning is then reflected in a gradual increase in predicted utility generated the next times the CS is encountered.
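The point about cached values can be made concrete with a small Python sketch. It assumes a Rescorla–Wagner style update and a hypothetical doubling of the learning rate to stand in for a dopamine rise; both the function name and all numbers are illustrative assumptions. What it shows is that boosting the learning parameter leaves the prediction evoked by the very next cue untouched, and that a change appears only after a further CS–UCS pairing.

```python
def rw_step(v, alpha, beta=1.0, asymptote=1.0):
    """One Rescorla-Wagner style update of the cached value V (assumed form)."""
    return v + alpha * beta * (asymptote - v)


# Training phase with an ordinary learning rate (assumed value).
v = 0.0
for _ in range(5):
    v = rw_step(v, alpha=0.2)
cached_before = v

# Hypothetical "dopamine rise": the learning rate is doubled after training.
boosted_alpha = 0.4

# The next cue presentation can only read out the cached value,
# because no new CS-UCS pairing has happened yet.
value_at_next_cue = v

# Only a further pairing, using the boosted rate, changes the cached value.
value_after_one_more_trial = rw_step(v, alpha=boosted_alpha)

print(cached_before, value_at_next_cue, value_after_one_more_trial)
```

In this kind of model, any immediate change in responding to an already-learned cue therefore has to come from somewhere other than the cached prediction itself.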

Thus, a startling feature of Wyvell’s behavioral effects of amphetamine and sensitization on cue-triggered “wanting” described previously (and the neural recording experiment described in the next section) is that mesolimbic activation occurred after all learning trials were completed. It did not need to be relearned. It was immediate on the first cue presentations in the activated mesolimbic state. Even on the very first trial, the next time the cue was encountered, CS+ decision utility was elevated. Dopamine activation did not occur before training, so there was no possibility that learning could have enhanced subsequent prediction errors (increased predicted utility). In the Wyvell experiment, mesolimbic activation was delayed until after learning, when it was too late to be able to promote predicted utility via boosting the association between CS+ and UCS. Mesolimbic activation still increased cue-triggered “wanting,” indicating that it could not have generated increased predicted utility as suggested by these temporal difference–based computational models.

But perhaps mesolimbic dopamine activation could be reinterpreted as having caused excessive general or cue-triggered predicted utility, expressed as overoptimistic expectations about the quality or quantity of upcoming rewards. In plain language, what if dopamine caused a cue to carry higher predicted utility than it ordinarily would as well as higher decision utility? If so, the elevation of predicted utility by amphetamine or sensitization would become similar to the standard types of wrong decisions or miswanting identified by Kahneman and colleagues, Gilbert and colleagues, and others (Gilbert & Wilson, 2000; Kahneman et al., 1997; Loewenstein & Schkade, 1999). Those wrong choices are based on wrong expectations. That means that they need not be irrational by the criteria we have adopted—wrong as the decisions remain—as long as the choice’s decision utility matches predicted utility.

We believe we can rule out such a possibility for dopamine-based irrational “wanting” described previously. But we have to turn to inside the brain in order to do it. What happens to utility from the brain’s point of view when dopamine is released?

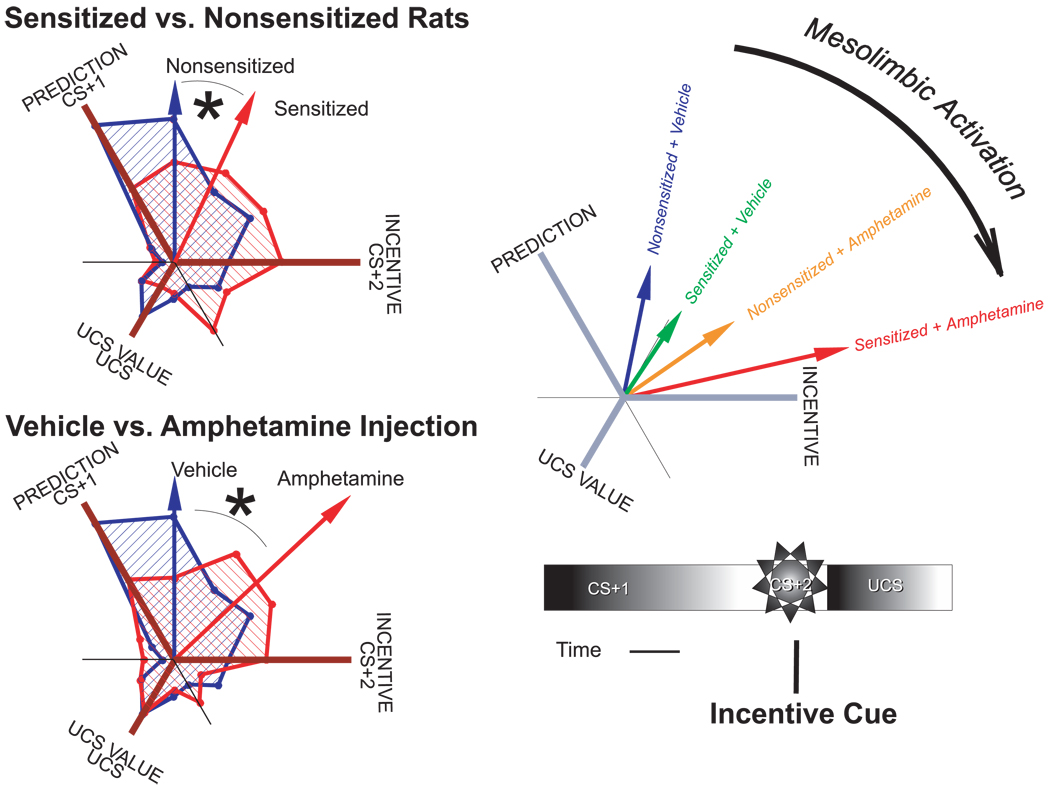

Neuronal Coding of Predicted Utility and Decision Utility in Limbic Ventral Pallidum

A recent study of neural coding in our laboratory examined changes in predicted utility versus decision utility caused by mesolimbic dopamine activation (Tindell et al., 2005). This dissertation study was conducted primarily by Amy Tindell in the Aldridge laboratory and focused on the ventral pallidum, a mesolimbic output structure. Tindell used recording electrodes to study the firing patterns of neurons that receive the impact of dopamine elevation and the relationship of neuronal firing to predicted utility, decision utility, and experienced utility of sugar rewards and their Pavlovian cues.

We focused on the ventral pallidum for neural coding of reward utility because it is a “limbic final common path” for reward signals in mesocorticolimbic circuits. The ventral pallidum integrates reward-related information from the nucleus accumbens (compressed as much as 29 to 1) with other structures (Kalivas & Nakamura, 1999; Oorschot, 1996; Zahm, 2000). It especially integrates dopamine influences with reward signals because the ventral pallidum receives the heaviest projections sent from the nucleus accumbens neurons that most famously get mesolimbic dopamine and also receives direct mesolimbic dopamine inputs itself. The output of the ventral pallidum is directed back to cortex through the thalamus and also onward to brain stem nuclei.

Serial Cues Uncouple Predicted Versus Decision Utilities

In order to tease apart predicted utility from decision utility, we used two different cues in series to predict the sugar reward (Tindell et al., 2005). A 10-second auditory tone cue (CS+1) was followed by a 1-second auditory click cue (CS+2), which finally was followed immediately by a sugar pellet (UCS). The two different CSs have very different ratios of predicted utility to decision utility.

The first tone cue has the most predicted utility because it predicts everything that follows: It predicts the click cue 10 seconds later and the sugar pellet 1 second after that. Once a rat learns this relationship, which usually takes only a few dozen presentations of the series, the CS+1 tone cue tells the animal everything there is to know about upcoming signals and rewards for the immediate future. By contrast, the second click cue is completely redundant as a predictor. It adds no new information. Rats can easily keep track of the 10- to 11-second interval between first tone and sugar; they do not need the second cue to tell them sugar is coming. In fact, they begin to hover around the sugar dish a few seconds before it arrives. Thus, the CS+1 tone cue carries greatest predicted utility. It sets all expectations for the future. That means that if mesolimbic activation can raise predicted utility, it should best be evident in changes in neuronal firing elicited by the CS+1 tone cue.

But the second click cue still has something the first tone cue does not: the greatest decision utility. The CS+2 click carries the greatest incentive salience. It occurs at the moment of highest incentive motivation or “wanting” for sugar, reflected in part by the rat’s eager hovering around the dish at that moment. If mesolimbic activation causes increases in decision utility that occur without any matching increase in predicted utility, then this should be most evident in amplification of neuronal firing elicited by the CS+2 click cue. And it should occur even if there is no change in firing to the CS+1 tone. That profile of activation would indicate that decision utility is greater than predicted utility at the moment of the CS+2 cue, setting the stage for the possibility of strongly irrational choice.

Finally, the sugar reward UCS that comes last carries the greatest experienced utility. The sweet sugary pellet is the event that is actually “liked” best. It is also the teaching signal event, the reward value that “stamps in” an association or that instructs a predictive actor in an actor–critic model that a reward event has occurred. One should expect a change in sugar-elicited neuronal firing if mesolimbic activation causes elevations in either hedonic impact, associative stamping in, or UCS prediction errors generated as teaching signals. And just to double-check if hedonic impact “liking” is enhanced by mesolimbic activation, we also examined whether amphetamine or drug sensitization caused any elevation in “liking” reactions of rats to the taste of sugar.

Dopamine and Sensitization Specifically Elevate Coded Signal for Decision Utility

Rats were trained for 2 weeks, and then some were sensitized while others were treated with a saline placebo over another 2-week period. Sensitization leaves mesolimbic neurons structurally changed and ready to release more dopamine than normal when stimulated by drugs or certain other events (Robinson & Kolb, 2004). Then all the rats were implanted with recording electrodes in their ventral pallidum and allowed to recover for another 2 weeks. To decode reward utilities in neuronal firing patterns, we used a novel computational technique, “profile analysis,” developed by our colleague Jun Zhang, that compares firing of neurons to different stimuli, asking whether the greatest firing is elicited by the CS+1, the CS+2, or the UCS to identify the maximal stimulus for each neuron. This technique allows us to identify how mesolimbic activation changes the stimulus preference “profile” of ventral pallidal (VP) neurons.
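The core question behind this kind of analysis, which stimulus drives each neuron hardest, can be sketched in a few lines of Python. This is a deliberately simplified stand-in and not the published profile analysis method: it merely labels each neuron by its maximal stimulus and tallies the proportions. The function names and the firing rates below are invented for illustration.

```python
from collections import Counter

STIMULI = ("CS+1", "CS+2", "UCS")


def preferred_stimulus(rates):
    """Return the stimulus that elicits the highest firing rate in one neuron.

    rates: dict mapping each stimulus label to a firing rate (spikes/s).
    Ties go to the stimulus listed first in STIMULI.
    """
    return max(STIMULI, key=lambda s: rates[s])


def preference_profile(population):
    """Fraction of neurons whose maximal response is to each stimulus."""
    counts = Counter(preferred_stimulus(rates) for rates in population)
    total = len(population)
    return {s: counts[s] / total for s in STIMULI}


# Made-up firing rates for four hypothetical neurons (not real data).
population = [
    {"CS+1": 12.0, "CS+2": 8.0, "UCS": 5.0},
    {"CS+1": 9.0, "CS+2": 14.0, "UCS": 6.0},
    {"CS+1": 11.0, "CS+2": 7.0, "UCS": 4.0},
    {"CS+1": 3.0, "CS+2": 6.0, "UCS": 10.0},
]

profile = preference_profile(population)
print(profile)
```

Comparing such a tally between treatment groups would indicate whether the population's preferred stimulus shifts, which is the kind of change the analysis is meant to detect.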

We found that individual VP neurons usually fire to all three stimuli but not equally to all (Figure 24.3). Ordinarily, predicted utility seems to dominate neuronal coding in VP in the sense that the neurons fire most to the CS+1 (next to the CS+2 and only moderately to the sugar). But decision utility was purely and specifically elevated by mesolimbic activation, including dopamine elevation, caused by either prior drug-induced neural sensitization or simply injecting the rats with amphetamine just before test (to make mesolimbic neurons release extra dopamine). Dopamine-related brain activation shifted the profiles of VP neural activation toward incentive coding of decision utility at the expense of prediction coding of predicted utility (Figure 24.3). The elevations in decision utility appeared to add together across amphetamine and sensitization treatments, combining to produce an even greater enhancement of decision utility than either treatment alone. This may model the special vulnerability of a sensitized addict at a moment of trying to take “just one hit” again who thus maximally boosts the drug’s decision utility and so precipitates a previously unintended binge of relapse and drug consumption.

Fig. 24.3.

Mesolimbic activation magnifies decision utility coding by neuron firing in ventral pallidum. Population profile vector shifts toward incentive coding with mesolimbic activation. Profile analysis shows stimulus preference coded in firing for all 524 ventral pallidum neurons. Ordinarily, neurons prefer to code predicted utility (firing maximally to CS+1 tone). Sensitization and amphetamine administration each shift neuronal coding preference toward decision utility (firing maximally to the CS+2 click) and away from predicted utility of CS+1 (without altering signal for experienced utility of the sugar UCS). Combination of sensitization with amphetamine shifts ventral pallidum coding profiles even further toward the signal for pure decision utility or incentive salience. Thus, as mesolimbic activation increases, ventral pallidum neurons increasingly carry coded signals for decision utility (relative to predicted utility and experienced utility signals). Entire populations are shown by shaded areas. Arrows show the maximal averaged response of the population under each treatment. Based on Tindell, Berridge, Zhang, Peciña, and Aldridge (2005, pp. 2628 and 2629, figs. 6 and 7).

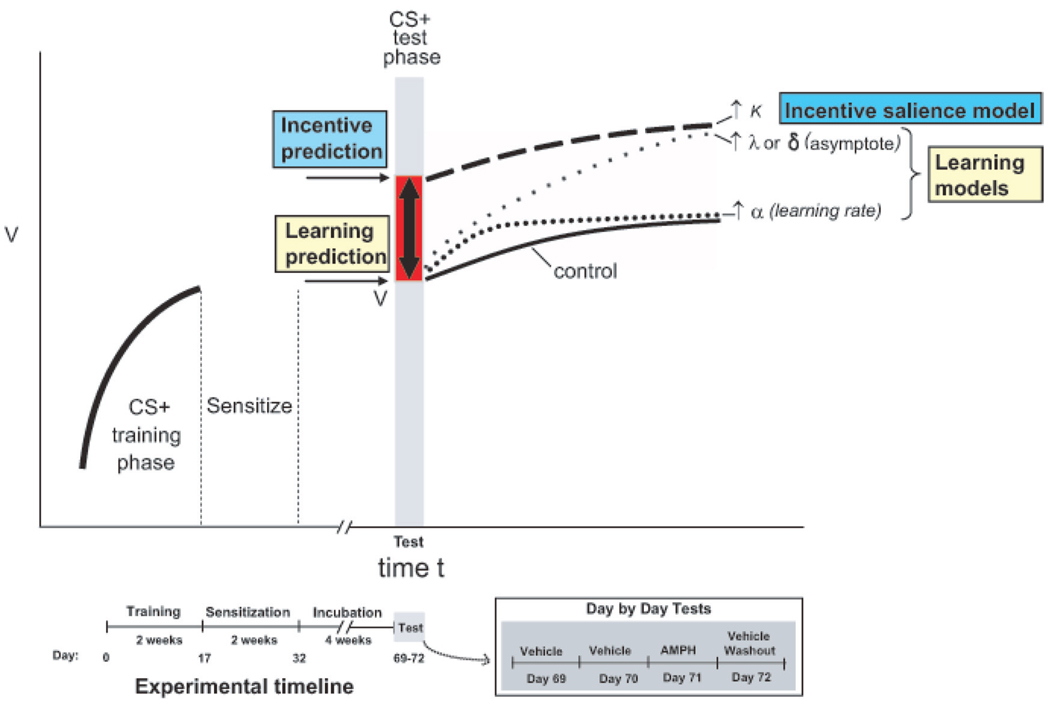

It is noteworthy that the shift toward neuronal incentive coding was immediate on the first test trials and did not require any relearning (Figure 24.4). That immediate change supports the incentive-sensitization hypothesis and stands in contrast to the alternative dopamine-learning hypotheses that require further training trials for the boosted prediction error of an increased reward, for example, δ(t), to magnify relearned predictions (V). It appears that in a dopamine-activated or sensitized state, incentive coding by VP neurons might mediate increased cue-triggered “wanting” and could lead to the compulsive relapse of addiction, especially for drug cues that occur close in time to their reward. And strengthening of the decision utility signal occurred at the expense of relative weakening of the predicted utility signal after drug and sensitization dopamine activations.

Fig. 24.4.

Decision utility increment happens too fast for relearning. Time line and alternative outcomes for neuronal firing coding of reward cue after mesolimbic activation of sensitization and/or amphetamine in ventral pallidum recording experiment (Tindell, Berridge, Zhang, Peciña, & Aldridge, 2005). The incentive salience model predicts that mesolimbic activation dynamically increases the decision utility of a previously learned CS+. The increased incentive salience coding is visible the first time the already-learned cue is presented in the activated mesolimbic state. Learning models by contrast require relearning to elevate learned predicted utilities. They predict merely gradual acceleration if mesolimbic activation increases rate parameters of learning and gradual acceleration plus asymptote elevation if mesolimbic activation increases prediction errors. Actual data support the incentive salience model. Based on data from Tindell et al. (2005).

Finally, these shifts toward VP incentive coding were not due to enhanced UCS hedonic impact (“liking”). Behavioral hedonic “liking” reactions to sucrose taste remained constant or even diminished slightly with sensitization and amphetamine administration. In other words, mesolimbic activation caused increases in cue-triggered “wanting” as coded by VP neurons when encountering a CS+ for sugar reward without any increase in experienced utility or “liking” for sugar itself.

In normal life, such enhancement of incentive salience might occur during normal appetite states, such as hunger. Incentive salience or cue-triggered decision utility normally depends on integrating two separate factors: (a) current physiological/neurobiological state and (b) previously learned associations about CS+ (Berridge, 2004; Toates, 1986). Integrating current physiological state with learned cues allows behavior to be guided dynamically by appetite-appropriate stimuli without need of further learning (e.g., Pavlovian cues associated with food are immediately more attractive to a hungry animal). Drug sensitization or acute amphetamine may each “short-circuit” this neurobiological system and directly increase the incentive value attributed to particular conditioned stimuli, triggering greater “wanting” and pursuit of their reward (Robinson & Berridge, 2003; Tindell et al., 2005).
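One hedged way to picture this integration is as a multiplicative gain applied to an already-learned cue value at the moment the cue appears. The Python sketch below is only an illustration of that idea: the function name, the `state_gain` parameter, and the numbers are assumptions introduced here, not quantities measured in the experiments described. It shows that changing the state changes the cue-triggered output immediately, with no change to the learned value, and that nothing is amplified when the cue is absent.

```python
def cue_triggered_wanting(learned_value, state_gain, cue_present=True):
    """Hypothetical incentive salience evoked by a cue.

    learned_value: previously learned association for the CS+ (unchanged here).
    state_gain:    current physiological/neurobiological state (assumed scalar;
                   1.0 stands for a baseline state).
    cue_present:   the gain has nothing to act on unless the cue is encountered.
    """
    if not cue_present:
        return 0.0
    return state_gain * learned_value


learned_value = 1.0  # fixed after training; never updated below

baseline_peak = cue_triggered_wanting(learned_value, state_gain=1.0)
activated_peak = cue_triggered_wanting(learned_value, state_gain=3.0)
activated_no_cue = cue_triggered_wanting(learned_value, state_gain=3.0,
                                         cue_present=False)

print(baseline_peak, activated_peak, activated_no_cue)
```

The design choice worth noting is that the state enters as a multiplier at readout rather than as a change to the stored association, which is what would let hunger, sensitization, or amphetamine act on the first cue presentation.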

Explanation for Cued Hyperbolic Temporal Discounting?

The shift toward incentive coding suggests how sensitization and addictive drugs may prime motivational behavioral responses of addicts to drug-related stimuli by amplifying the incentive impact of encountering a UCS-proximal drug CS+. Finally, it suggests a mechanism to help explain hyperbolic temporal discounting, at least in cue-triggered decisions. Temporal discounting is well recognized in addicts (Ainslie, 1992), and neuroimaging has shown that mesolimbic systems code immediate rewards (McClure, Laibson, Loewenstein, & Cohen, 2004). But temporal discounting is usually just described and accepted as a given. Although it is sometimes posited as a mechanism that drives choices, little is known about the explanatory mechanism for hyperbolic discounting itself. Part of the explanation may be that limbic activation causes circuits involving the ventral pallidum to fire more to cues for a temporally close reward and therefore selectively enhance their incentive salience, causing excessive cue-triggered “wanting” for the close reward. This also may be why “visceral states” sometimes exacerbate temporal discounting effects (Loewenstein & Schkade, 1999).
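For readers unfamiliar with why the hyperbolic shape matters, the following Python sketch contrasts a commonly used hyperbolic form, value = amount / (1 + k × delay), with exponential discounting. The reward amounts, delays, and discount parameters are arbitrary assumptions chosen for illustration. The sketch shows the signature of hyperbolic discounting: preference between a smaller-sooner and a larger-later reward reverses as both draw near, whereas exponential discounting yields the same preference at both distances.

```python
import math


def hyperbolic_value(amount, delay, k=1.0):
    """Hyperbolically discounted value (k is an assumed discount parameter)."""
    return amount / (1.0 + k * delay)


def exponential_value(amount, delay, rate=0.2):
    """Exponentially discounted value (rate is an assumed parameter)."""
    return amount * math.exp(-rate * delay)


def prefers_sooner(discount, wait):
    """True if a smaller reward at `wait` beats a larger one 5 units later."""
    sooner = discount(5.0, wait)         # smaller, sooner reward
    later = discount(10.0, wait + 5.0)   # larger, later reward
    return sooner > later


# Evaluate the choice when the sooner reward is immediate and when it is distant.
hyperbolic_choices = [prefers_sooner(hyperbolic_value, w) for w in (0.0, 10.0)]
exponential_choices = [prefers_sooner(exponential_value, w) for w in (0.0, 10.0)]

print("hyperbolic :", hyperbolic_choices)
print("exponential:", exponential_choices)
```

With these assumed numbers the hyperbolic chooser picks the larger-later reward from a distance but switches to the smaller reward once it is immediately available, which is the pattern a cue for a temporally close reward could exaggerate.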

Irrational Decision Utility From Mesolimbic Activation

We suggest that both the Wyvell and Tindell experiments described previously are examples of decision utility being greater than predicted utility at the same moment. Thus, both are examples of irrational “wanting,” defined as “wanting” something more than one expects to like it. In the Wyvell cue-triggered “wanting” experiments, the elevated decision utility appears as a peak of frenzied pursuit of the sugar reward, at least for a while. The reward cue causes a momentary irrational desire, during which decision utility is greater than predicted utility (and also greater than experienced utility). In the Tindell neuronal firing experiments, the magnified firing bursts of VP neurons at the moment of the cue with the most incentive salience reflect a neural mechanism that may drive irrational “wanting.” Irrational “wanting” occurs most readily when a reward cue coincides with mesolimbic activation, especially dopamine-related activation. Individuals may then “want” what they do not want cognitively. The high incentive salience type of “wanting” exerts its power independent of more cognitive wants. For example, a recovering brain-sensitized addict may sincerely want in every cognitive way to remain abstinent from drugs but may nonetheless be precipitated by a chance encounter with drug cues into intense “wanting” despite those cognitive desires and thus relapse into taking drugs again. Further, addicts may not predict associatively in a manner that would justify their “want.” The addict may accurately predict that drug pleasure will not be enough to offset the adverse consequences of taking the drug yet will still “want” to take it. The decision utility is irrational in the sense that the immediate “want” exceeds what the addict cognitively expects to like (or, at least, the addict will not “like” the drug in proportion to the excessive “want”).

Importantly, incentive salience attributions are encapsulated and modular in the sense that people may not have direct conscious access to them and may find them difficult to control cognitively (Robinson & Berridge, 1993, 2003; Winkielman & Berridge, 2004). Cue-triggered “wanting” belongs to the class of automatic reactions that operate by their own rules under the surface of direct awareness (see Chapters 5 and 23; Bargh & Ferguson, 2000; Bargh, Gollwitzer, Lee-Chai, Barndollar, & Trötschel, 2001; Dijksterhuis, Bos, Nordgren, & van Baaren, 2006; Gilbert & Wilson, 2000; Wilson, Lindsey, & Schooler, 2000; Zajonc, 2000). People are sometimes aware of incentive salience as a product but never of the underlying process. And without an extra cognitive monitoring step, they may not always be aware even of the product. Sometimes incentive salience can be triggered and can control behavior with very little awareness of what has happened. For example, subliminal exposures to happy or angry facial expressions, too brief to see consciously, can cause people later to consume more or less of a beverage, without being at all aware that their “wanting” has been manipulated (Winkielman & Berridge, 2004). Additional monitoring by brain systems of conscious awareness, likely cortical structures, is required to bring a basic “want” into a subjective feeling of wanting.

Applications to Human Decision Making

Although our experiments used drugs and sensitization to manipulate brain dopamine systems in rats, people have brain dopamine systems too, which are likely to respond in similar ways. Human mesolimbic systems can be equally activated by drugs and addiction. And perhaps more relevant to everyday decisions, the same dopamine brain systems are also spontaneously activated by natural appetite states and in many emotional situations.

As a result of all this, an irrational “want” for something can occur despite cognitively not wanting it, cognitively wanting not to “want,” or cognitively wanting something else. An irrational cue-triggered “want” may even surprise the person who has it by its power, suddenness, and autonomy. This may explain why some long-term drug addicts can proclaim (perhaps even truthfully) to not enjoy their drug as they once did while at the same time they may take part in criminal activity in order to acquire the drug.

Both rewarding and stressful situations activate brain mesolimbic dopamine systems. This raises the possibility of decision utility elevations when reward cues coincide with such brain activation at moments requiring a choice. If a person’s brain dopamine system were highly activated and the person encountered a reward cue at that moment, then the person might irrationally elevate the decision utility of the cued outcome over and above both its experienced utility and its predicted utility. That person would be under the control of a decision utility peak. The person might “want” the cued reward just like the rat, even if the person cognitively expected not to like it very much.

Of course, for general psychologists the primary value of the issues discussed here may be better insight into the mechanisms that underlie more normal decision making. Most decisions in ordinary life are rational, choosing and wanting what one expects to like best (Higgins, 2006). In those cases, there is no divergence between underlying brain mechanisms of incentive salience and reward prediction. Instead, these mesolimbic dopamine mechanisms of decision utility simply provide motivational oomph to help power behavioral choices that were guided by more cognitive, cortical mechanisms based on the predicted utility of potential outcomes. When choice is optimized, the multiplicity of underlying mechanisms may be seamlessly papered over, and only a psychologist would know that there are multiple mechanisms beneath the surface.

But the underlying multiplicity remains whether a given decision is rational or not. And the potential remains for irrational dissociation at future moments. Such phenomena might not be restricted to basic consumption behavior but could extend to interact with more abstract and even economic decisions too (Bernheim & Rangel, 2004; Camerer & Fehr, 2006). That hijacked decision utility and irrational “wanting” might actually play this role in ordinary human lives and decisions seems an intriguing possibility that may deserve further consideration from psychologists who study decisions in action.

Acknowledgments

This chapter is based on an article for Social Cognition. Research described here was supported by grants from the NIH to KCB and to JWA (DA015188 and MH63649, DA017752), and writing of the original draft was supported by a fellowship from the John Simon Guggenheim Memorial Foundation. We are grateful to the editors for helpful comments on an earlier version.

References

- Ainslie G. Picoeconomics. Cambridge: Cambridge University Press; 1992.

- Bargh JA, Ferguson MJ. Beyond behaviorism: On the automaticity of higher mental processes. Psychological Bulletin. 2000;126:925–945. doi: 10.1037/0033-2909.126.6.925.

- Bargh JA, Gollwitzer PM, Lee-Chai A, Barndollar K, Trötschel R. The automated will: Nonconscious activation and pursuit of behavioral goals. Journal of Personality and Social Psychology. 2001;81:1014–1027.

- Bernheim BD, Rangel A. Addiction and cue-triggered decision processes. American Economic Review. 2004;94:1558–1590. doi: 10.1257/0002828043052222.

- Berridge KC. Pleasure, pain, desire, and dread: Hidden core processes of emotion. In: Kahneman D, Diener E, Schwarz N, editors. Well-being: The foundations of hedonic psychology. New York: Russell Sage Foundation; 1999. pp. 525–557.

- Berridge KC. Reward learning: Reinforcement, incentives, and expectations. In: Medin DL, editor. The psychology of learning and motivation. Vol. 40. New York: Academic Press; 2001. pp. 223–278.

- Berridge KC. Irrational pursuits: Hyper-incentives from a visceral brain. In: Brocas I, Carrillo J, editors. Psychology and economics. Vol. 1. Oxford: Oxford University Press; 2003a. pp. 14–40.

- Berridge KC. Pleasures of the brain. Brain and Cognition. 2003b;52:106–128. doi: 10.1016/s0278-2626(03)00014-9.

- Berridge KC. Motivation concepts in behavioral neuroscience. Physiology and Behavior. 2004;81:179–209. doi: 10.1016/j.physbeh.2004.02.004.

- Berridge KC. The debate over dopamine’s role in reward: The case for incentive salience. Psychopharmacology (Berl). 2007;191(3):391–431. doi: 10.1007/s00213-006-0578-x.

- Berridge KC, Robinson TE. What is the role of dopamine in reward: Hedonic impact, reward learning, or incentive salience? Brain Research Reviews. 1998;28:309–369. doi: 10.1016/s0165-0173(98)00019-8.

- Berridge KC, Valenstein ES. What psychological process mediates feeding evoked by electrical stimulation of the lateral hypothalamus? Behavioral Neuroscience. 1991;105:3–14. doi: 10.1037//0735-7044.105.1.3.

- Bindra D. How adaptive behavior is produced: A perceptual-motivation alternative to response reinforcement. Behavioral and Brain Sciences. 1978;1:41–91.

- Camerer CF, Fehr E. When does “economic man” dominate social behavior? Science. 2006;311:47–52. doi: 10.1126/science.1110600.

- Davidson RJ, Shackman AJ, Maxwell JS. Asymmetries in face and brain related to emotion. Trends in Cognitive Sciences. 2004;8:389–391. doi: 10.1016/j.tics.2004.07.006.

- Davidson RJ, Jackson DC, Kalin NH. Emotion, plasticity, context, and regulation: Perspectives from affective neuroscience. Psychological Bulletin. 2000;126(6):890–909. doi: 10.1037/0033-2909.126.6.890.

- Dayan P, Balleine BW. Reward, motivation, and reinforcement learning. Neuron. 2002;36:285–298. doi: 10.1016/s0896-6273(02)00963-7.

- Dickinson A, Smith J, Mirenowicz J. Dissociation of Pavlovian and instrumental incentive learning under dopamine antagonists. Behavioral Neuroscience. 2000;114:468–483. doi: 10.1037//0735-7044.114.3.468.

- Dijksterhuis A, Bos MW, Nordgren LF, van Baaren RB. On making the right choice: The deliberation-without-attention effect. Science. 2006;311:1005–1007. doi: 10.1126/science.1121629.

- Gilbert DT, Wilson TD. Miswanting: Some problems in forecasting future affective states. In: Forgas J, editor. Feeling and thinking: The role of affect in social cognition. Cambridge: Cambridge University Press; 2000. pp. 178–197.

- Heath RG. Pleasure and brain activity in man: Deep and surface electroencephalograms during orgasm. Journal of Nervous and Mental Disease. 1972;154:3–18. doi: 10.1097/00005053-197201000-00002.

- Higgins ET. Value from hedonic experience and engagement. Psychological Review. 2006;113:439–460. doi: 10.1037/0033-295X.113.3.439.

- Hoebel BG. Neuroscience and motivation: Pathways and peptides that define motivational systems. In: Atkinson RC, Herrnstein RJ, Lindzey G, Luce RD, editors. Stevens’ handbook of experimental psychology. Vol. 1. New York: John Wiley & Sons; 1988. pp. 547–626.

- Kahneman D, Fredrickson BL, Schreiber CA, Redelmeier DA. When more pain is preferred to less: Adding a better end. Psychological Science. 1993;4:401–405.

- Kahneman D, Wakker PP, Sarin R. Back to Bentham? Explorations of experienced utility. Quarterly Journal of Economics. 1997;112:375–405.

- Kalivas PW, Nakamura M. Neural systems for behavioral activation and reward. Current Opinion in Neurobiology. 1999;9:223–227. doi: 10.1016/s0959-4388(99)80031-2.

- Kringelbach ML. Food for thought: Hedonic experience beyond homeostasis in the human brain. Neuroscience. 2004;126:807–819. doi: 10.1016/j.neuroscience.2004.04.035.

- Loewenstein G, Schkade D. Wouldn’t it be nice? Predicting future feelings. In: Kahneman D, Diener E, Schwarz N, editors. Well-being: The foundations of hedonic psychology. New York: Russell Sage Foundation; 1999. pp. 85–105.

- Mahler SV, Smith KS, Berridge KC. Endocannabinoid hedonic hotspot for sensory pleasure: Anandamide in nucleus accumbens shell enhances liking of a sweet reward. Neuropsychopharmacology. 2007;32(11):2267–2278. doi: 10.1038/sj.npp.1301376.

- McClure SM, Berns GS, Montague PR. Temporal prediction errors in a passive learning task activate human striatum. Neuron. 2003;38:339–346. doi: 10.1016/s0896-6273(03)00154-5.

- McClure SM, Laibson DI, Loewenstein G, Cohen JD. Separate neural systems value immediate and delayed monetary rewards. Science. 2004;306(5695):503–507. doi: 10.1126/science.1100907.

- McFadden D. The new science of pleasure (Frisch Lecture). Econometric Society World Congress; London. 2005. Retrieved from http://www.econ.berkeley.edu/wp/mcfadden0105/ScienceofPleasure.pdf.

- Meloy TS, Southern JP. Neurally augmented sexual function in human females: A preliminary investigation. Neuromodulation. 2006;9:34–40. doi: 10.1111/j.1525-1403.2006.00040.x.

- Montague PR, Hyman SE, Cohen JD. Computational roles for dopamine in behavioural control. Nature. 2004;431:760–767. doi: 10.1038/nature03015.

- Morewedge CK, Gilbert DT, Wilson TD. The least likely of times: How remembering the past biases forecasts of the future. Psychological Science. 2005;16:626–630. doi: 10.1111/j.1467-9280.2005.01585.x.

- O’Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38:329–337. doi: 10.1016/s0896-6273(03)00169-7.

- Olds J, Milner P. Positive reinforcement produced by electrical stimulation of septal area and other regions of rat brain. Journal of Comparative and Physiological Psychology. 1954;47:419–427. doi: 10.1037/h0058775.