Abstract

This article describes a preliminary study of the effects of State rate-setting programs on volumes of hospital services, specifically admission rates, occupancy levels, and average lengths of stay. A volume response to rate-setting may be anticipated as a result of program effects on hospital costs or charges as well as on hospitals' behavioral incentives. We analyzed data for samples of hospitals and counties in States with and without rate-setting programs for the 10-year period 1969 to 1978. The results suggested that rate regulation has brought about, in some States, an increase in hospital occupancy by increasing patients' lengths of stay. Few programs have had a measurable effect on the admission rate. Programs that regulate per diem rates seem to produce more consistent and predictable volume effects than those controlling charges. The findings were generally consistent with prior hypotheses and partially account for earlier findings regarding the effects of rate-setting programs on hospital costs.

Introduction

State hospital rate-setting programs may have wide-ranging effects on hospital behavior through their influence on the cost and/or charge structures of the regulated facilities. Although their specific objectives vary almost by State, all rate-setting programs impose limits on the reimbursement hospitals will receive for the services provided to patients. To the extent that their revenues are constrained, therefore, hospitals may have cause to re-evaluate their capacity, service capabilities and staffing, and utilization patterns. The effects of these programs will be determined not only by their objectives and structure, but also by the vigor with which their objectives are pursued.

This study was designed to explore, in a preliminary fashion, the effects of rate-setting on volumes of hospital services. The analyses focused on three interrelated measures: admission rates, occupancy levels, and average length of stay. This preliminary analysis is one of a series that has been prepared as part of the National Hospital Rate-Setting Study (NHRS), a large-scale evaluation of rate-setting programs funded by the Health Care Financing Administration (HCFA). The NHRS was funded to evaluate the effects of such programs in nine States: Arizona, Connecticut, Maryland, Massachusetts, Minnesota, New Jersey, New York, Western Pennsylvania, and Washington.

This study addressed two broad questions. The first asks whether there has been a volume response to rate-setting programs because of, or in addition to, program effects on costs/charges. Since total hospital expenditures represent the product of unit cost and volume of services, changes in either unit cost or quantity could produce changes in total outlays. The close link between quantity and unit cost suggests that a separate investigation of program impact on volumes of service should supplement any findings with respect to cost.
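The accounting identity behind this first question can be made concrete with a small sketch. The figures below are hypothetical and are not drawn from the NHRS data, and the function name is illustrative only. Because total expenditures equal unit cost times volume, growth in the total (measured in logarithms) splits exactly into a unit-cost part and a volume part.

```python
import math

def decompose_growth(unit_cost_0, volume_0, unit_cost_1, volume_1):
    """Split log growth in total expenditures into unit-cost and volume parts."""
    total_0 = unit_cost_0 * volume_0
    total_1 = unit_cost_1 * volume_1
    return {
        "total": math.log(total_1 / total_0),
        "unit_cost": math.log(unit_cost_1 / unit_cost_0),
        "volume": math.log(volume_1 / volume_0),
    }

# Hypothetical hospital: cost per admission rises 5 percent,
# admissions rise 4 percent between two years.
growth = decompose_growth(1000.0, 5000, 1050.0, 5200)

# The unit-cost and volume parts add up to total growth.
print(growth)
```

A program that slows growth in cost per admission can therefore leave total outlays rising if admissions grow at the same time, which is why the volume response is examined separately here.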

In an earlier paper in this series, for instance, Coelen and Sullivan (1981) concluded that rate-setting programs have restrained hospital expenditures per patient day and per admission and, to a lesser extent, per capita. The analyses reported in this paper supplement those findings by providing evidence about the presence and nature of hospitals' quantity responses to rate-setting. Was the growth in cost per admission slowed, for example, because admissions increased more rapidly after rate-setting was instituted? Were the estimated effects on expenditures per capita tied to program-induced cutbacks in volume?

The second question this study investigates asks whether rate-setting programs alter incentives for hospitals with respect to volumes of service and, more importantly, whether hospitals respond to those incentives. The individual programs vary along a number of dimensions, including objectives, unit of payment regulated, and the methods used to establish limits. Given these and many other differences, it is likely that each program creates somewhat different incentives for hospitals. In accordance with the specific incentives, therefore, different responses on the part of hospitals would be anticipated.

Evidence that different responses do occur can be derived from several evaluations conducted during the mid-1970s. While by no means conclusive, the results of these assessments suggested that control of unit costs or charges may have promoted an increase in the volume of services provided. The types of services for which increases were observed by earlier evaluators seemed to vary depending on the unit of payment employed by the program, with systems based on per diem affecting length of stay and systems based on charges affecting service intensity (that is, the quantity of resources applied to a day in the hospital). The evaluation results also suggest that the impact of rate-setting programs on volumes may strengthen after the program has been in effect for several years and hospitals have become familiar with the nature of the incentives. These earlier findings were derived from programs that have since been modified, in some cases radically, but they can be considered as hypotheses in need of further testing.

The remainder of this paper is divided into several sections. The following section contains a review of the key features of rate-setting programs that are likely to influence volumes of service and develops a classification of the State programs and associated research hypotheses. Next, we describe the statistical methods used to measure program effects. Finally, we present analytic results and discuss their implications.

Relevant Features of the Study Programs

The nine States that are the focus of the NHRS represent a range of alternative approaches to hospital rate regulation. The key inter-program similarities and differences have been summarized in Hamilton et al. (1980). We used that summary, along with additional program-specific data obtained during the first year of the NHRS, to analyze potential program effects on volume and to develop preliminary hypotheses to be tested empirically.

The impact of rate-setting programs on volumes of service is likely to depend upon both the overall scope and stringency of the program and the particular program features that will create the most direct incentives for hospitals. With respect to the former, it seems reasonable to assume that the greater its scope of coverage and the more it is perceived by hospitals to be stringent or binding, the greater the program's impact will be. Programs that are limited in scope and/or not viewed as constraining by hospitals are less likely to significantly affect hospital behavior. Scope and stringency can be measured by a vector of program characteristics, including: 1) extent of legal authority; 2) payers covered; 3) scope of analysis during review process; 4) compliance mechanisms; and 5) coordination with the planning program. Data concerning each of these five characteristics are displayed on Table 1 for the nine programs. 1

Table 1. Characteristics Determining Program Scope and Stringency.

| Program | Extent of Authority | Payers Covered | Scope of Analysis During Review | Compliance Mechanisms | Coordination with Planning Program |

|---|---|---|---|---|---|

| Arizona | Least extensive | Blue Cross; Commercial insurers; Self pay | Subjective review | Voluntary (public disclosure) | Informal |

| Connecticut | Moderate | Commercial insurers; Self pay | Sequential screens applied to budget, clusters of departments, and departments | Mandatory | Formal |

| Maryland | Extensive | All payers | Department-level screens and adjustments | Mandatory (cumulative) | Counter |

| Massachusetts Medicaid | Extensive | Medicaid | Formula-based adjustments of past adjusted expenses | Mandatory (cumulative) | Informal |

| Charge-control3 | Moderate | Commercial insurers; Self pay | Review of overall budget | Mandatory but limited | Informal |

| Minnesota | Moderate | Blue Cross; Commercial insurers; Self pay | Screens applied to selected functional categories | Mandatory | Minimal |

| New Jersey | Extensive | Medicaid; Blue Cross | Sequential screens applied to departmental clusters, then individual departments | Mandatory (cumulative) | Formal |

| New York | Most extensive | Medicaid; Blue Cross; Commercial insurers; Self pay | Screens applied to routine expenses per day and ancillary expenses per admission | Mandatory | Formal |

| Western Pennsylvania | Moderate | Blue Cross; Medicare; Medicaid | Screens applied to overall expenses and subjective analysis used for departmental costs | Mandatory | Minimal |

| Washington | Extensive | All payers | Sequential screens applied to overall unit costs, natural expense categories, and departments | Mandatory | Formal |

Characterization based on program's ranking within various dimensions of authority, as identified in the First Annual Report of the National Hospital Rate Setting Study (Hamilton and Walter, 1980).

Types of coordination are defined as follows: formal involves well-defined, structured planning-regulatory interaction; informal involves less structured interagency contact and sharing of data and other input; counter coordination occurs when the two groups frequently work at cross purposes; and minimal coordination occurs when virtually no linkages exist.

Term refers to charge-paying individuals or agencies.

With respect to the incentives created by the program, three categories of program features are likely to contain the most direct, volume-related incentives. These include the following:

Unit of Payment Controlled

The payment unit established by the rate-setting program creates the dominant, volume-related incentives for hospitals. Two units of payment are currently in use: per diem rates and charges per unit of service. Table 2 shows the units used in each of the study programs. The incentives created by each payment unit—and hence, the impact of the associated programs—would be expected to differ.

Table 2. Unit of Payment Controlled by the Rate-Setting Program.

| Program | Unit of Payment |

|---|---|

| Arizona | Charges |

| Connecticut | Charges |

| Maryland | Charges |

| Massachusetts Medicaid | Per diem rate |

| Charge-control | Charges |

| Minnesota | Charges |

| New Jersey | Per diem rate |

| New York Medicaid/Blue Cross | Per diem rate |

| Charge-control | Charges |

| Western Pennsylvania | Per diem rate |

| Washington | Varies by hospital payment group: |

Per diem rates constitute all-inclusive amounts paid to hospitals for each day patients within regulated payer classes spend in inpatient facilities. These rates usually reflect average allowable costs per patient day. Because the first days of a patient's stay are often more resource-intensive (and thus more costly) than later days, per diem rates create incentives for hospitals to keep patients in the hospital longer, since these added days will tend to be profitable. In addition, because the rates they are paid do not vary by patient diagnosis, hospitals have an incentive to admit more patients who are less seriously ill and hence less expensive to treat. As a result of these incentives, one might expect admissions, length of stay, and occupancy all to increase under systems based on per diem.
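The length-of-stay incentive can be illustrated with a minimal sketch. The daily cost profile below is entirely hypothetical, chosen only so that early days cost more than later ones, and the per diem rate is assumed to equal the average cost per day of the stay, as the text describes.

```python
def stay_margin(daily_costs, per_diem_rate):
    """Return the margin (payment minus cost) for each day of a stay."""
    return [per_diem_rate - cost for cost in daily_costs]

# Hypothetical 5-day stay: early days are resource-intensive, later days cheaper.
daily_costs = [400.0, 300.0, 200.0, 150.0, 150.0]

# Per diem rate assumed equal to average allowable cost per patient day.
per_diem_rate = sum(daily_costs) / len(daily_costs)
margins = stay_margin(daily_costs, per_diem_rate)

# Assumed cost of one additional (sixth) day, similar to the late days of the stay.
extra_day_cost = 150.0
extra_day_margin = per_diem_rate - extra_day_cost

print(per_diem_rate, margins, extra_day_margin)
```

Under these assumed numbers the hospital loses money on the first two days, breaks even over the stay as a whole, and earns a positive margin on any added day whose cost is below the average rate.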

Systems basing reimbursement on charges per unit of service create somewhat different incentives for hospitals. Analysis of the nature of these incentives is complicated by the diversity of the rate-setting process across programs. For example, somewhat different results might be anticipated when the program requires that costs and charges be aligned within cost centers, compared to those where such alignments are not required. In general, however, the use of charges, rather than per diem rates, should create an identifiable set of incentives that are discernibly different from those associated with per diem-based reimbursement.

Under charge-based systems, for example, there should be less of an incentive for hospitals to increase admissions or lengths of stay, although a positive effect would still be anticipated. The most direct incentive may be to increase service intensity, and particularly the volume of ancillary services. This incentive arises for two reasons. First, if allowable charges were set equal to average costs (assuming a certain budgeted volume of services), then by increasing the volume beyond budgeted levels, the marginal cost of producing additional services could well be less than the allowed charge, in which event the hospital would profit. This incentive would be eliminated only if the program reduced the rate paid for those additional services to an amount at or below the marginal cost of producing them. (Treatment of volume increases by prospective reimbursement programs is described in the next section.) Second, if the system is primarily concerned with unit costs, there will be an incentive to increase the number of units, since this will cause average cost per unit to fall.

Review and Rate-Setting Procedures

Volume-related incentives are imbedded in the procedures by which budgets are reviewed and/or rates are set. Screens of unit costs during budget review, for example, would seem to provide an incentive to increase volumes in order to reduce average costs. Many programs, however, have developed methodologies that address volume changes directly in an attempt to discourage unjustified increases.

Typically, rate-setting programs do not allow hospitals to receive the full approved rate for units of service provided in excess of budgeted volume. Rather, they allow only a specified proportion of that rate to be paid, which is designed to reflect the marginal cost of providing those added units. Another way of viewing this is in terms of fixed versus variable costs. When a rate is set, it is assumed to adequately reimburse hospitals for the fixed and variable costs incurred in providing the budgeted volume of services. When volume increases beyond the quantity budgeted, fixed costs are assumed to remain unchanged, necessitating only the reimbursement of the variable costs incurred in providing the extra units. Conversely, when actual volume is below the quantity budgeted, hospitals are allowed full compensation for their fixed costs. Usually, rate adjustments induced by volume changes are made at the end of the year, so that they actually affect the following year's rates. There is thus a lag between the volume change and the associated rate adjustment.

The extent to which the adjustments just described influence volume-related behavior depends upon the fixed/variable proportions employed and the design and timing of the adjustments. The key to the profitability of volume increases for hospitals lies in the congruence between the proportion of the rate adjudged to be variable and actual variable costs. The greater the margin between the payment rate and actual costs for the incremental units of service, the greater will be the incentive to increase volumes. That incentive may also be reinforced by other program features. For example, if actual volumes produced in the current year automatically, and without penalty, become the base for future calculations, then there may be added incentive to increase volumes during the current year. The time lag between the actual increase in volume and the volume adjustments also reinforces the incentive. Since volume adjustments applicable to the current year's performance do not take effect until the following year, hospitals can profit, in the short run, from receiving the full rate for all units of service produced, including the volume increase.
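The adjustment mechanism described above can be sketched as follows. This is a simplified illustration under stated assumptions, not any State's actual formula: a single rate, a single fixed/variable split, and hypothetical dollar figures and function names.

```python
def revenue_with_volume_adjustment(rate, budgeted_units, actual_units, variable_share):
    """Revenue when units above or below budget are valued at the variable share of the rate.

    A shortfall below budget removes only the variable portion, so the fixed
    portion of the budgeted amount is still reimbursed in full.
    """
    extra_units = actual_units - budgeted_units
    return rate * budgeted_units + variable_share * rate * extra_units

def incremental_margin(rate, variable_share, actual_variable_cost_per_unit):
    """Margin per extra unit: adjusted payment minus the hospital's true variable cost."""
    return variable_share * rate - actual_variable_cost_per_unit

# Hypothetical example with a 60/40 fixed/variable split (variable share 0.40).
rate = 100.0
revenue = revenue_with_volume_adjustment(rate, budgeted_units=1000,
                                         actual_units=1100, variable_share=0.40)

# If the hospital's true variable cost is 30 per unit, each extra unit is profitable;
# if it is 50 per unit, each extra unit loses money.
margin_low_cost = incremental_margin(rate, 0.40, 30.0)
margin_high_cost = incremental_margin(rate, 0.40, 50.0)

print(revenue, margin_low_cost, margin_high_cost)
```

The sign of the incremental margin depends on how closely the assumed variable share tracks actual variable costs. The sketch ignores the one-year lag, which would add a temporary gain from being paid the full rate on all units until the adjustment takes effect.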

Most State systems treat volume changes straightforwardly, applying designated fixed/variable proportions to the appropriate rates in accordance with volume changes. In a few systems, such as Maryland's, where volumes have been a particular concern, elaborate methodologies have been developed which involve differential treatment of volume changes by the type of service (for example, routine versus ancillary) and by the magnitude of the change. These procedures have been designed to discourage hospitals from increasing volumes and to encourage them to adjust behavior in desired ways.

Table 3 summarizes the approaches employed by the nine systems in reviewing costs and setting rates. The table indicates that about half of the programs have concentrated on restraining volumes, and several of these have begun only recently. Maryland, Massachusetts (charge-control), and New Jersey have exhibited the most long-standing concern with volume changes. Of these three programs, Maryland's appears to have the most carefully structured and stringent provisions. These methodologies aimed at volume changes are likely to discourage hospitals from increasing volumes, thus depressing relative rates of increase in admissions, occupancy, and length of stay.

Table 3. Methodologies Employed to Address Volume Changes.

| Program | Fixed/Variable Ratio | Other Provisions |

|---|---|---|

| Arizona | None | Program does not specifically address volume changes |

| Connecticut | 50/50 (as of FY 1979) Adjustment made to adjusted budget base | None |

| Maryland | Generally, 40/60 for routine centers and 60/40 for ancillary services. Adjustment mechanism is complicated and depends on actual percent change, the change in revenues and adjusted admissions, and whether the adjustment applies to retrospective or prospective volume changes. Adjustments are computed for hospital overall but are applied departmentally. | Intensity increases are addressed via a separate system (Table 4) |

| Massachusetts Medicaid | None | No analysis of volume changes |

| Charge-control | 60/40; 40% of base year costs allowed for volume increases (or decreases) in revenue-producing centers beyond acceptable corridors | Projected volume assessed for reasonableness |

| Minnesota | None | During budget review, hospital's length of stay is evaluated |

| New Jersey | Generally, 50/50 for personnel and 100% variable for supplies; applied to relevant costs in non-overhead cost centers | Only “exceptional” volume increases are scrutinized |

| New York | None | Volume changes are not evaluated per se, but utilization is an important system target (Table 4) |

| Western Pennsylvania | None | Volume changes are generally considered beyond control of hospital administrator. Beginning in 1977, retroactive adjustments could be obtained to compensate for decrease in length of stay. |

| Washington | As of 1978, ratios established for each peer group (80/20, 70/30, 60/40); applied to incremental revenue resulting from volume changes | Trends in various volume measures are examined for reasonableness. |

Explicit Incentives or Penalties Directed at Volumes

Some systems incorporate in their review or rate-setting procedures explicit penalties or incentives directed at particular volume measures. Most often, these penalties apply to occupancy and length of stay. Occupancy penalties, which impose minimum occupancy standards by service,2 are imposed when hospitals fall below the established standards. Similarly, length of stay penalties are imposed when mean length of stay exceeds the standards set for it. New York, which has both occupancy and length of stay penalties, has the most extensive penalty system, although the Massachusetts Medicaid program employs occupancy standards as well.

Other programs have developed somewhat different approaches to the outright regulation of volumes, and some actually encourage growth in certain hospitals. In New Jersey, for example, low-cost hospitals are provided 1 to 3 percent beyond actual costs (depending on their standing within their peer group) as an intensity allowance. In Maryland, the rate-setting system was augmented with a procedure to stem the observed increase in volumes, particularly of ancillary services. Termed the Guaranteed Inpatient Revenue (GIR) system, this methodology calculates reimbursement to participating hospitals on the basis of cost per case, by diagnosis. Hospitals are rewarded for not increasing cost per case beyond the overall inflation rate (plus 1 percent) and penalized for increasing per case costs beyond that limit.

Table 4 describes any additional volume-related penalties or incentives in the nine States, and it reveals that four of the nine programs have adopted such provisions. Among these, the program features in three States—Maryland, Massachusetts (Medicaid), and New York—seem likely to most affect the incentives for hospitals. New Jersey's intensity allowance, which is not a key feature of the system, is less likely to have a significant effect. The New York program can be viewed as the most stringent, in that it incorporates both occupancy and length of stay penalties. These penalties apply pressure in opposite directions, encouraging hospitals to keep occupancy rates up, but not by increasing length of stay. Faced with these penalties, underutilized hospitals appear to have three options: 1) increase use by increasing the number of admissions; 2) face financial losses; or 3) close beds.

Table 4. Additional, Volume-Related Penalties or Incentives.

| Program | Penalties or Incentives |

|---|---|

| Arizona | None |

| Connecticut | None |

| Maryland | For a sample of hospitals (including all large hospitals), a Guaranteed Inpatient Revenue (GIR) amount is calculated for each hospital, based on its own diagnostic distribution and average charges by diagnosis (adjusted for inflation plus 1 percent for growth and technology). Hospitals are then rewarded when total revenues are below the GIR and penalized when total revenues are higher. |

| Massachusetts Medicaid | An occupancy penalty is incorporated in rate calculation, whereby allowable costs are divided by base year patient days or, if higher, the number of patient days that would have been experienced if minimum occupancy standards had been met. |

| Charge-control | None |

| Minnesota | None |

| New Jersey | Budget review system sometimes provides intensity allowances, depending on a hospital's standing within its peer group. |

| New York | An occupancy penalty is incorporated in rate calculation, whereby additional patient days are imputed to increase the denominator, and hence lower the per diem rate, if hospital occupancy falls below minimum standards. A length of stay penalty is the basis for computation of disallowances, if a hospital's length of stay is above the group mean plus ½ day. Disallowance consists of “excess” days multiplied by the lower of the group's or the hospital's routine per diem. |

| Western Pennsylvania | None |

| Washington | None |

Massachusetts Medicaid imposes an occupancy penalty which is similar to New York's. Since it is not accompanied by a length of stay penalty, it may increase admissions and/or length of stay in underutilized hospitals. Given the more limited scope of the program, however, the impact of the penalty should only be measurable in hospitals with large Medicaid patient loads.

The Maryland GIR system must be viewed somewhat differently. Those hospitals to which the system applies (about one-third of those in the State, including all large facilities) are provided with explicit incentives not to increase intensity of service, as measured by the diagnosis-specific cost per case. The GIR system directly encourages hospitals to constrain average length of stay as well as the number of ancillary procedures performed. The likely effect of the system on admissions is unclear, leaving the anticipated GIR effect on occupancy in doubt.
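A rough sketch of the GIR calculation, as summarized in Table 4, is given below. Several details are assumptions made for illustration: the diagnosis labels, case counts, and charges are hypothetical, the inflation and 1 percent allowances are combined additively, and the size of the resulting reward or penalty is not modeled because the source does not specify it.

```python
def guaranteed_inpatient_revenue(cases_by_dx, base_charge_by_dx, inflation):
    """GIR: the hospital's own case mix priced at base-year average charges by
    diagnosis, raised by inflation plus 1 percent (combined additively here,
    which is an assumption)."""
    allowance = 1.0 + inflation + 0.01
    return sum(cases_by_dx[dx] * base_charge_by_dx[dx] * allowance
               for dx in cases_by_dx)

def gir_position(actual_revenue, gir):
    """Positive when revenue is below the GIR (rewarded), negative when above (penalized)."""
    return gir - actual_revenue

# Hypothetical case mix and base-year average charges per case.
cases = {"dx_a": 100, "dx_b": 50}
base_charges = {"dx_a": 1000.0, "dx_b": 2000.0}

gir = guaranteed_inpatient_revenue(cases, base_charges, inflation=0.09)
under = gir_position(210000.0, gir)   # revenue below GIR
over = gir_position(230000.0, gir)    # revenue above GIR

print(gir, under, over)
```

Because the guarantee is tied to cost per case by diagnosis, added days or added ancillary procedures for a given case raise revenue relative to the GIR without raising the GIR itself.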

Given the number of relevant provisions within each program, and the sometimes conflicting incentives that they would appear to create, it is very difficult to gauge in advance what the net effect of the program on admissions, length of stay, and occupancy will be. The New York program clearly illustrates this problem. Its per diem-based system, together with the occupancy penalty, would appear to provide strong incentives for hospitals to increase average length of stay. To counter that “perverse incentive,” however, a length of stay penalty was added to the system. Which incentive actually prevails is an empirical question, not only in New York but in other States where different provisions appear to work in different directions.
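The two New York penalties summarized in Table 4 can be sketched to show how they pull in opposite directions. The figures are hypothetical. The definition of "excess" days used here (days above the threshold per admission, times admissions) is an assumption, since the source does not spell it out.

```python
def per_diem_with_occupancy_penalty(allowable_costs, patient_days, beds, min_occupancy):
    """Divide allowable costs by actual days or, if higher, the days implied
    by the minimum occupancy standard."""
    imputed_days = min_occupancy * beds * 365
    return allowable_costs / max(patient_days, imputed_days)

def length_of_stay_disallowance(hospital_los, group_mean_los, admissions,
                                hospital_routine_per_diem, group_routine_per_diem):
    """Disallow 'excess' days when length of stay exceeds the group mean plus half a day."""
    threshold = group_mean_los + 0.5
    if hospital_los <= threshold:
        return 0.0
    # Assumption: excess days = (LOS above threshold) x admissions.
    excess_days = (hospital_los - threshold) * admissions
    return excess_days * min(hospital_routine_per_diem, group_routine_per_diem)

# Hypothetical 100-bed hospital at 70 percent occupancy, with an 80 percent standard.
unpenalized = 5110000.0 / 25550
penalized = per_diem_with_occupancy_penalty(5110000.0, 25550, beds=100, min_occupancy=0.80)

# Hypothetical hospital with stays one full day longer than its group mean.
disallowance = length_of_stay_disallowance(9.0, 8.0, 3000, 120.0, 110.0)

print(unpenalized, penalized, disallowance)
```

Raising patient days by lengthening stays would lift the first hospital back toward the unpenalized rate, but it would also move it toward the length of stay threshold, which is the tension the text describes.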

If volume-related provisions are considered in isolation, then it seems reasonable to predict that unit of payment will provide the dominant incentive for hospitals, unless there are other offsetting program features. The net effect of rate-setting on volumes of service, however, will also be heavily influenced by the program's overall stringency and scope of authority.

Table 1 listed characteristics influencing stringency and authority. Based on those characteristics, and consistent with the conclusions of Coelen and Sullivan (1981), four categories of programs can be defined, ranging from the most to least stringent. The New York program appears to be most stringent on all criteria. The second category includes the programs in Maryland, New Jersey, and Washington. Connecticut and Massachusetts rank third, and the systems in Arizona, Minnesota, and Western Pennsylvania are the least stringent. One might thus expect that the strength of any program effect, and hence the significance of any findings, will vary as a function of program stringency and authority. Finally, the impact of rate-setting will doubtless be affected by hospitals' perceptions and attitudes about the program, the ability of individual facilities to change their behavior, and the degree of sophistication the industry brings to the regulatory process.

The remainder of this paper is devoted to the empirical analysis of the effects of rate regulation on volumes of hospital services. The analysis has been designed to provide at least preliminary answers to the questions that were raised at the outset. The results of the various analyses follow a brief discussion of the research methodology and data sources used to generate the results presented.

Statistical Methodology

This preliminary analysis evaluates program effects on three measures: admission rate, average length of stay, and percent occupancy. Each of these reflects a somewhat different aspect of hospital utilization and is influenced by a number of factors, including incidence and prevalence of particular illnesses, supply of hospital services, style of medical practice, socioeconomic characteristics of the population, and availability of alternative sources of care. Rate-setting programs seem likely to exert the most influence on the second of these factors, the supply of hospital services.
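The three measures are linked by an accounting identity, which the following sketch makes explicit using the definitions given in Table 5 (ADMBED, OCC, and LOS). The hospital figures are hypothetical and the function name is illustrative.

```python
def utilization_measures(admissions, inpatient_days, beds):
    """Compute admissions per bed, occupancy, and average length of stay."""
    admbed = admissions / beds               # ADMBED
    occ = inpatient_days / (beds * 365)      # OCC
    los = inpatient_days / admissions        # LOS
    return admbed, occ, los

# Hypothetical 100-bed hospital for one year.
admbed, occ, los = utilization_measures(admissions=3650, inpatient_days=29200, beds=100)

# Identity: occupancy equals admissions per bed times length of stay, divided by 365.
implied_occ = admbed * los / 365

print(admbed, occ, los, implied_occ)
```

Because of this identity, a program effect on occupancy must arise through admissions per bed, length of stay, or both, which is why the three equations are interpreted together.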

The methods used in this paper are relatively simple. They have been designed to assess, in a preliminary fashion, whether rate-setting programs have affected hospital utilization. Regression analysis is the primary evaluation method employed in this investigation, and we estimated reduced form equations. These reduced form equations evaluate changes in hospital use as a function only of variables that are not likely to be affected by rate-setting programs, at least in the short run. These variables, which are defined in Table 5, have been selected to measure important dimensions within the categories of potential influences listed above. They include selected measures of “need” (such as birth rates), socioeconomic status of the population (such as age, income, and education), the availability of alternatives to hospitalization (such as the supply of physicians and nursing home beds), and the presence of other regulatory programs (such as Certificate of Need). Dummy variables have been used to measure each program, and each major version of the program is measured by a separate dummy variable.

Table 5. Variable Definitions Used in Hospital Level Analysis.

| Variable | Definition |

|---|---|

| ADMBED | Total admissions/total beds, short-term hospitals |

| OCC | Total inpatient days/(total beds x 365), short-term hospitals |

| LOS | Total inpatient days/total admissions, short-term hospitals |

| AFDC | Percent of population on AFDC in county (X̄ =4.13) |

| BIRTH | Births per 10,000 population in county (X̄ =1.56) |

| COMMINS | Percent of population covered by commercial insurance (including Blue Cross) in State (X̄ =79.39) |

| CRIME | Crimes per 100,000 population in county in 1975 (X̄ =4131.81) |

| DSMSA | County located in an SMSA (X̄ =.54) |

| EDUC | Average years of educational attainment for county population (X̄ =11.63) |

| GOV | Hospital operated by non-Federal government agency (X̄ =.24) |

| INCOME | Personal income per capita in county (X̄ =4322.88) |

| MDPOP | Active physicians per capita in county (X̄ =.001) |

| MEDSCHL | Number of years as a teaching hospital (X̄ =1.21) |

| NHBPC | Nursing home beds per 1,000 persons in county (X̄ =.008) |

| POPDENS | Population (100s) per square mile in county (X̄ =22.06) |

| POPT18 | Percent of population in Part A Medicare (X̄ =11.35) |

| PROF | For-profit hospital (X̄ =.09) |

| SPMD | Percent of physicians in county who are specialty physicians (X̄ =48.41) |

| UNEMRT | Proportion of labor force in county unemployed (X̄ =.06) |

| WHITE | Proportion of population composed of whites in county (X̄ =92.06) |

| CON1 | Certificate of need index (measuring activism of the State program) (X̄ =.25) |

| CON2 | Certificate of need index (measuring limitations of State program) (X̄ =.01) |

| DPSRO | Dummy variable: equals 1.0 if hospital ever covered by binding review (X̄ =.59) |

| D70-78 | Dummy variables: equal 1.0 in year indicated by the two digits (for example, 1970 for D70) and all later years (through 1978); equal 0.0 for earlier years |

| Dss | Dummy variable: equals 1.0 for all years if hospital is in State ss; 0.0 otherwise (ss indicates the two-letter abbreviation of the State) |

| Dssyy | Dummy variable: equals 1.0 for hospital in State ss in year yy and later; 0.0 otherwise (ss is two-letter abbreviation for a State; yy indicates the first fiscal year during which rate-setting [or a version of rate-setting] was in place) |

Coelen and Sullivan (1981) described in detail the evaluation methodology used to assess rate-setting effects in the first paper of this series. To summarize briefly, the equations included a series of dummy variables that measured independent State- and time-specific effects exclusive of rate-setting programs. The third set of dummy variables, described earlier, were included to indicate the presence of some form of rate regulation.
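The three sets of dummy variables defined in Table 5 can be constructed as in the sketch below. The State code `XX` and the program start years are hypothetical placeholders, not actual program dates, and the function name is illustrative. Estimating the regression itself is omitted.

```python
def build_dummies(state, year, program_start_years, first_year=1969, last_year=1978):
    """Build time, State, and program dummies for one hospital-year observation.

    program_start_years maps a two-letter State code to the first fiscal years
    of each major version of that State's rate-setting program.
    """
    row = {}
    # Time dummies D70..D78: 1.0 in the indicated year and all later years.
    for y in range(first_year + 1, last_year + 1):
        row[f"D{y % 100}"] = 1.0 if year >= y else 0.0
    for ss, starts in program_start_years.items():
        # State dummy Dss: 1.0 in all years for hospitals in State ss.
        row[f"D{ss}"] = 1.0 if state == ss else 0.0
        # Program dummies Dssyy: 1.0 in State ss from year yy onward.
        for yy in starts:
            row[f"D{ss}{yy % 100}"] = 1.0 if (state == ss and year >= yy) else 0.0
    return row

# Hypothetical State "XX" with program versions beginning in 1972 and 1976.
programs = {"XX": [1972, 1976]}
row = build_dummies("XX", 1974, programs)
control_row = build_dummies("ZZ", 1974, programs)

print(row)
```

With this coding, the coefficient on a program dummy measures the shift in the utilization measure after that version of the program takes effect, net of the State's own level and of the year-to-year shifts common to all hospitals.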

The units of analysis used to assess program effects on hospital use included both individual hospitals and counties. We performed hospital-level analysis to evaluate institutional responses to rate-setting, taking into account facility characteristics (such as ownership) that might influence the type of response. We describe results of this analysis in detail. We also performed county-level analysis to determine whether rate-setting has affected the overall rates at which the population uses hospital services and at which area facilities as a whole are utilized. We summarize these results more briefly.

Sources of Data

The NHRS hospital file was derived from a sample of 2,693 hospitals, constituting a 25 percent random sample of the more than 8,000 hospitals reporting annually to the American Hospital Association,3 supplemented by the remaining hospitals in the eight study States and Western Pennsylvania. A full census of hospitals was also obtained for six other States that were judged to have mature, statewide rate-setting programs: Colorado, Indiana, Kentucky, Nebraska, Rhode Island, and Wisconsin. Data were obtained for the sample hospitals for the 10-year period 1969 to 1978.

The hospital sample was also the basis for constructing the county file. The counties that were included in the file consisted of all those containing at least one sample hospital. Once the counties were identified, data were aggregated for all short-term hospitals within each of them. A total of 1,317 counties (out of a grand total of 3,049 in the country) were included. This sample of counties contains two-thirds of all U.S. hospitals as well as 90 percent of the population.

While the data reported by hospitals to the AHA constitute the best available source of information concerning the nation's hospitals, they are flawed. The data are self-reported and unaudited, and the accuracy of the numbers reported cannot be ensured. While the most serious problems with reliability, validity, and consistency over time are likely to be associated with the financial data, there are undoubtedly inaccuracies within the utilization data as well. The quality of the data used for this analysis will be further investigated when data become available from an additional source, the Medicare Cost Reports, later in the course of this study.

Hospital Utilization Trends

During 1969-1978, a period in which community hospital costs were rising rapidly, relative hospital use actually decreased. Admissions per capita remained virtually unchanged, lengths of stay became shorter, and hospitals used consistently less of their available capacity. Declines in all three measures were registered during the study period. Only admissions per bed increased during this period, and that increase was only slight.

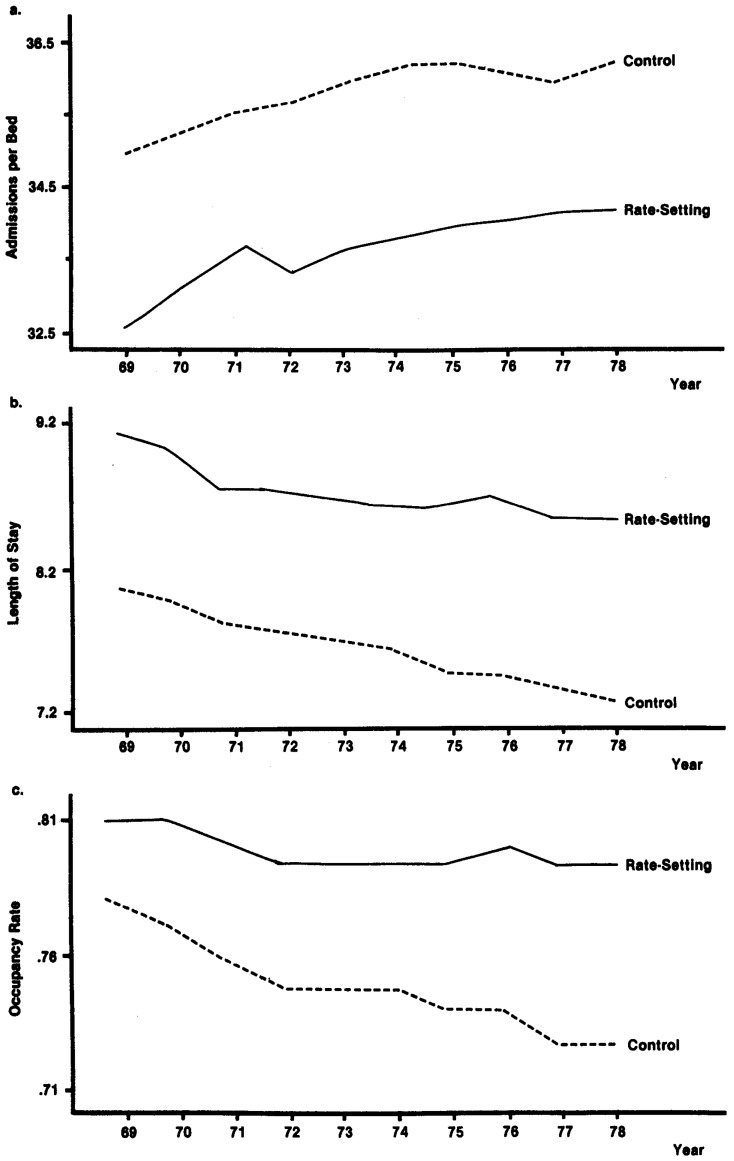

Figure 1 illustrates relative levels and rates of change in occupancy, length of stay, and admissions per bed in States with and without rate-setting programs. The trend lines suggest that there are some basic differences in utilization patterns in the two groups of States. The level of admissions per bed, for example, has always been lower for hospitals in States with prospective reimbursement than for “control” hospitals.

Figure 1. Comparative Trends in Rate-Setting and Control States.

In addition, while there was a decreasing trend in average length of stay within each of the two groups, that measure averaged about one day longer in the rate-setting States throughout the 1969 to 1978 period. However, the decline in length of stay was somewhat less sharp in the rate-setting States—suggesting that the programs may have worked against the trend—and the difference between the two groups had increased slightly by the end of the period.

Finally, as Figure 1 reveals, occupancy in both groups of States declined during the study period. Neither rates of decline nor absolute levels, however, were identical for the two groups of States. Occupancy rates for the study group averaged about 5 percentage points higher during the first half of the period and 6 percentage points higher for the remaining half. Moreover, during the second half of the period when hospitals in the control group used even less of their capacity, those in the study States maintained their occupancy at roughly 79 percent. This indicates that occupancy practices were different for the two groups and suggests that rate regulation may have caused increases in capacity utilization.

The preliminary review of utilization patterns indicates that there are clear differences between the States with rate-setting programs and those without such programs. Such differences may stem from any number of factors, including relative availability of services, economic environment, and style of medical practice, as well as the presence or absence of rate-setting programs. While in several cases they suggest that the programs might be having an effect, the trend lines plotted on each of the three figures reflect the net effect of the combined influences. Therefore, while an awareness of relative differences is useful background and facilitates interpretation of analytic results, it is impossible to draw any conclusions from these figures about program effects on hospital use. Such conclusions can be reached with more confidence if they are based on a multivariate analysis that takes potential confounding factors into account. The results of such an analysis are presented in the following section.

Econometric Results

Rate-setting programs are most likely to affect hospital utilization in two ways: 1) by increasing the level of utilization and 2) by influencing the annual rate of change in service use. Tighter budget constraints imposed by rate-setting programs that tie hospital revenue to units of service may give hospitals an incentive to increase the number of units provided. This may take the form of longer stays or the admission of more patients. As a result of these actions, the downward trends in hospital use described earlier may decelerate, if not reverse.

We assessed the effects of rate regulation on volumes of service by evaluating the variation over time and across hospitals and counties in levels of hospital use. Each dependent variable (occupancy rate, admission rate, and average length of stay) was expressed either in its original units or as a natural logarithm. We also used percentage changes as dependent variables, but there was little variation in these measures overall and, hence, little to explain. The difficulty arises because the utilization measures exhibit strong trends over time that translate into nearly constant percentage changes. Thus the results were not instructive.

The focus of our analysis was therefore upon the variation in hospital use rates within rate-setting and control States/areas. The evaluation methodology involved the direct estimation of rate-setting effects, as captured by the coefficients of the program dummy variables. The equations also included a series of variables measuring important influences on hospital utilization that are not likely to be affected by rate-setting programs. In addition, we included State dummy variables to control for differences among the States and time variables to account for changes in any unmeasured variables over time. When the full set of control variables is included, the coefficients of the program dummy variables represent direct estimates of the difference between the before/after change in the value of the intercept for the rate-setting States and the before/after change that occurs for the control areas.
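The interpretation of the program dummy coefficient as a difference between before/after changes can be illustrated with a small sketch. The occupancy figures, variable names, and helper functions below are hypothetical and are not taken from the NHRS files; the example uses one rate-setting area and one control area, with none of the environmental covariates that the actual equations include.

```python
def solve(a, b):
    """Solve the square linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        # Partial pivoting: bring the largest remaining entry into the pivot row.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * p for x, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    return solve(xtx, xty)


# Hypothetical observations: (occupancy, rate-setting area?, after program start?)
observations = [
    (0.77, 1, 0), (0.79, 1, 0),   # rate-setting area, before
    (0.78, 1, 1), (0.80, 1, 1),   # rate-setting area, after
    (0.73, 0, 0), (0.75, 0, 0),   # control area, before
    (0.71, 0, 1), (0.73, 0, 1),   # control area, after
]

y = [occ for occ, _, _ in observations]
# Columns: intercept, area dummy, time dummy, program dummy (area x time).
X = [[1.0, s, t, s * t] for _, s, t in observations]
intercept, area_effect, time_effect, program_effect = ols(X, y)


def cell_mean(area, after):
    values = [occ for occ, s, t in observations if s == area and t == after]
    return sum(values) / len(values)


# Before/after change in the rate-setting area minus the change in the control area.
diff_in_diff = (cell_mean(1, 1) - cell_mean(1, 0)) - (cell_mean(0, 1) - cell_mean(0, 0))

print(round(program_effect, 4), round(diff_in_diff, 4))
```

In this saturated two-by-two setup the program coefficient reproduces the difference in before/after changes exactly. With additional covariates, as in the equations reported here, the coefficient is the same comparison net of the measured influences.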

Hospital-Level Analysis

Tables 6 through 8 show the results of the hospital-level analyses of the three types of utilization measures. Tables 6 and 8 present the results for Models I (admissions per bed) and III (occupancy rate), respectively. These models express all of the variables in their original units. In Model II, the results of which are shown in Table 7, the dependent variable is length of stay, which is expressed in (natural) logarithms. A logarithmic transformation of the length of stay measure improved the fit of the model over that obtained when the data were not transformed.

Table 6. Regression Results for Model I, Admissions per Bed (Hospital-Level Analysis).

Dependent Variable: ADMBED; R² = .1611; F = 41.56; N = 22,079

| Explanatory Variable | Estimated Coefficient | t-ratio |
|---|---|---|
| Intercept | 39.07 | 16.58 |
| DSMSA | −1.00 | −5.28¹ |
| SPMD | .05 | 12.18¹ |
| COMMINS | .01 | .65 |
| MDPOP | 86.52 | .89 |
| NHBPC | −34.16 | −2.46¹ |
| GOV | −1.46 | −8.78¹ |
| POPDENS | −.006 | −4.04¹ |
| AFDC | .05 | 1.33 |
| CRIME | .0001 | 3.63¹ |
| WHITE | .06 | 5.86¹ |
| BIRTH | .83 | 2.85¹ |
| INCOME | .00004 | .42 |
| EDUC | −.82 | −7.51¹ |
| PROF | .28 | 1.16 |
| UNEMRT | −20.44 | −4.06¹ |
| MEDSCHL | −.13 | −5.41¹ |
| POPT18 | −.49 | −16.92¹ |
| CON1 | −.03 | −.16 |
| CON2 | −.15 | −.87 |
| DPSRO | .90 | 5.29¹ |
| DAZ74 | 1.15 | 1.37 |
| DCO72 | −.11 | −.14 |
| DCOA75 | 5.84 | 3.45¹ |
| DCT72 | 1.05 | .82 |
| DCT75 | .76 | .64 |
| DKY75 | −.81 | −1.29 |
| DMA75 | 1.30 | 1.32 |
| DMA76 | −.63 | −.60 |
| DMDA75 | −.76 | −.35 |
| DMDA76 | .70 | .27 |
| DMDB76 | .31 | .23 |
| DNBA73 | 3.34 | 2.62¹ |
| DRI71 | 1.84 | .77 |
| DRI75 | 3.07 | 1.66² |
| DMNA75 | −.20 | −.34 |
| DNJV | 1.00 | 1.25 |
| DNJ75 | −.16 | −.19 |
| DNJ77 | .28 | .30 |
| DNYD71 | 2.36 | 3.59¹ |
| DNYD76 | .18 | .30 |
| DNYU71 | .35 | .49 |
| DNYU76 | .98 | 1.56 |
| DWA76 | −.75 | −1.12 |
| DWI73 | −.97 | −1.75² |
| DWPVPR | −.42 | −.60 |
| DAL | 2.59 | 3.16¹ |
| DAR | 7.74 | 8.28¹ |
| DAZ | .29 | .32 |
| DCA | 4.93 | 8.34¹ |
| DCO | 2.81 | 3.29¹ |
| DCT | 2.50 | 2.39¹ |
| DDC | 1.77 | .78 |
| DDE | −1.66 | −.81 |
| DFL | 4.63 | 5.89¹ |
| DGA | 5.06 | 6.36¹ |
| DIA | −1.77 | −2.46¹ |
| DIL | .09 | .15 |
| DID | 2.35 | 1.98¹ |
| DIN | 3.08 | 5.74¹ |
| DKS | −1.63 | −2.14¹ |
| DKY | 5.67 | 8.32¹ |
| DLA | 6.68 | 8.03¹ |
| DMA | −.74 | −1.12 |
| DMD | 1.42 | 1.77² |
| DME | .37 | .40 |
| DMI | −.42 | −.62 |
| DMN | −1.47 | −2.50¹ |
| DMO | −1.06 | −1.43 |
| DMS | 6.73 | 7.32¹ |
| DMT | −2.80 | −2.48¹ |
| DNB | .16 | .26 |
| DNC | 1.62 | 2.10¹ |
| DND | −.81 | −.80 |
| DNH | −.55 | −.44 |
| DNJ | .67 | .87 |
| DNM | 5.70 | 5.33¹ |
| DNV | −2.71 | −1.93² |
| DNYD | .18 | .21 |
| DNYU | −.90 | −1.04 |
| DOK | 3.92 | 4.64¹ |
| DOR | 3.57 | 4.02¹ |
| DPA | −.97 | −1.42 |
| DRI | −1.41 | −.70 |
| DSC | 5.05 | 4.80¹ |
| DSD | −.48 | −.47 |
| DTN | 1.09 | 1.35 |
| DTX | 4.73 | 7.42¹ |
| DUT | 5.55 | 4.42¹ |
| DVA | −.52 | −.54 |
| DVT | 1.58 | 1.05 |
| DWA | 8.92 | 13.82¹ |
| DWI | −.29 | −.44 |
| DWP | .07 | .12 |
| DWV | 1.15 | 1.24 |
| DWY | 1.55 | 1.43 |
| D70 | .53 | 1.81² |
| D71 | .24 | .82 |
| D72 | −.09 | −.31 |
| D73 | .22 | .81 |
| D74 | .39 | 1.37 |
| D75 | .27 | .87 |
| D76 | −.21 | −.75 |
| D77 | −.26 | −.93 |
| D78 | −.03 | −.11 |

¹ Indicates significant at 5 percent level.

² Indicates significant at 10 percent level.

Table 8. Regression Results for Model III, Occupancy Rate (Hospital-Level Analysis).

Dependent Variable: OCC; R² = .3731; F = 128.75; N = 22,079

| Explanatory Variable | Estimated Coefficient | t-ratio |
|---|---|---|
| Intercept | .83 | 26.38 |
| DSMSA | .04 | 14.35¹ |
| SPMD | .0009 | 18.96¹ |
| COMMINS | .0003 | 1.52 |
| MDPOP | 2.77 | 2.13¹ |
| NHBPC | −1.09 | −5.85¹ |
| GOV | −.03 | −13.03¹ |
| POPDENS | −.00004 | −2.41¹ |
| AFDC | .002 | 4.96¹ |
| CRIME | .000005 | 9.82¹ |
| WHITE | .0001 | 1.14 |
| BIRTH | −.03 | −8.02¹ |
| INCOME | .0000006 | .45 |
| EDUC | −.01 | −5.90¹ |
| PROF | −.05 | −14.07¹ |
| UNEMRT | .15 | 2.30¹ |
| MEDSCHL | .01 | 18.34¹ |
| POPT18 | .0004 | 1.11 |
| CON1 | .003 | 1.18 |
| CON2 | .001 | .53 |
| DPSRO | .02 | 6.57¹ |
| DAZ74 | .03 | 2.53¹ |
| DCO72 | .001 | .10 |
| DCOA75 | .07 | 3.17¹ |
| DCT72 | .01 | .62 |
| DCT75 | .01 | .88 |
| DKY75 | .02 | 1.79² |
| DMA75 | .007 | .52 |
| DMA76 | .01 | .85 |
| DMDA75 | .03 | 1.04 |
| DMDA76 | .01 | .41 |
| DMDB76 | .06 | 3.55¹ |
| DNBA73 | .03 | 1.95² |
| DRI71 | .05 | 1.66² |
| DRI75 | .02 | .62 |
| DMNA75 | −.001 | −.18 |
| DNJV | .05 | 4.28¹ |
| DNJ75 | .004 | .32 |
| DNJ77 | .02 | 1.40 |
| DNYD71 | .05 | 5.53¹ |
| DNYD76 | .04 | 4.90¹ |
| DNYU71 | .03 | 3.63¹ |
| DNYU76 | .04 | 4.36¹ |
| DWA76 | −.001 | −.13 |
| DWI73 | .008 | 1.03 |
| DWPVPR | .007 | .71 |
| DAL | −.02 | −2.16¹ |
| DAR | −.03 | −2.10¹ |
| DAZ | −.14 | −11.35¹ |
| DCA | −.15 | −19.27¹ |
| DCO | −.13 | −11.02¹ |
| DCT | −.08 | −5.46¹ |
| DDC | −.04 | −1.27 |
| DDE | −.05 | −1.70² |
| DFL | −.07 | −6.25¹ |
| DGA | −.05 | −4.40¹ |
| DIA | −.11 | −11.59¹ |
| DIL | −.04 | −4.79¹ |
| DID | −.08 | −5.15¹ |
| DIN | −.009 | −1.23 |
| DKS | −.07 | −6.56¹ |
| DKY | −.02 | −1.76² |
| DLA | −.08 | −7.03¹ |
| DMA | −.07 | −7.75¹ |
| DMD | −.02 | −2.16¹ |
| DME | −.13 | −10.34¹ |
| DMI | −.06 | −6.61¹ |
| DMN | −.09 | −11.80¹ |
| DMO | −.06 | −5.88¹ |
| DMS | .04 | 2.86¹ |
| DMT | −.16 | −10.91¹ |
| DNB | −.10 | −12.10¹ |
| DNC | −.03 | −3.16¹ |
| DND | −.11 | −8.19¹ |
| DNH | −.17 | −10.18¹ |
| DNJ | −.05 | −4.71¹ |
| DNM | −.11 | −8.03¹ |
| DNV | −.16 | −8.59¹ |
| DNYD | −.01 | −.87 |
| DNYU | −.02 | −1.93² |
| DOK | −.10 | −9.18¹ |
| DOR | −.15 | −12.97¹ |
| DPA | −.05 | −5.46¹ |
| DRI | −.09 | −3.01¹ |
| DSC | −.01 | −.92 |
| DSD | −.17 | −12.32¹ |
| DTN | −.06 | −5.26¹ |
| DTX | −.07 | −8.75¹ |
| DUT | −.14 | −8.21¹ |
| DVA | .01 | .71 |
| DVT | −.03 | −1.54 |
| DWA | −.15 | −17.63¹ |
| DWI | −.09 | −10.05¹ |
| DWP | .006 | .74 |
| DWV | −.03 | −2.34¹ |
| DWY | −.15 | −10.60¹ |
| D70 | −.02 | −4.83¹ |
| D71 | −.03 | −8.07¹ |
| D72 | −.02 | −4.89¹ |
| D73 | −.006 | −1.74² |
| D74 | .0008 | .20 |
| D75 | −.02 | −4.03¹ |
| D76 | −.006 | −1.66² |
| D77 | −.009 | −2.35¹ |
| D78 | .003 | .82 |

¹ Indicates significant at 5 percent level.

² Indicates significant at 10 percent level.

Table 7. Regression Results for Model II, Average Length of Stay (Hospital-Level Analysis).

Dependent Variable: ℓn(LOS); R² = .3420; F = 112.48; N = 22,079

| Explanatory Variable | Estimated Coefficient | t-ratio |
|---|---|---|
| Intercept | 2.08 | 33.98 |
| DSMSA | .08 | 16.73¹ |
| SPMD | .0002 | 1.87² |
| COMMINS | .0004 | .89 |
| MDPOP | 2.87 | 1.14 |
| NHBPC | −.71 | −1.96² |
| GOV | −.001 | −.33 |
| POPDENS | .0002 | 5.08¹ |
| AFDC | .002 | 2.09¹ |
| CRIME | .000004 | 4.35¹ |
| WHITE | −.001 | −4.41¹ |
| BIRTH | −.08 | −10.83¹ |
| INCOME | −.000004 | −1.36 |
| EDUC | .009 | 3.22¹ |
| PROF | −.07 | −11.91¹ |
| UNEMRT | .86 | 6.60¹ |
| MEDSCHL | .01 | 17.60¹ |
| POPT18 | .01 | 18.50¹ |
| CON1 | .006 | 1.22 |
| CON2 | .004 | .96 |
| DPSRO | −.01 | −2.70¹ |
| DAZ74 | .01 | .36 |
| DCO72 | .02 | .89 |
| DCOA75 | −.05 | −1.15 |
| DCT72 | −.006 | −.19 |
| DCT75 | .006 | .18 |
| DKY75 | .05 | 3.13¹ |
| DMA75 | −.02 | −.97 |
| DMA76 | .04 | 1.34 |
| DMDA75 | .08 | 1.35 |
| DMDA76 | −.009 | −.14 |
| DMDB76 | .08 | 2.44¹ |
| DNBA73 | −.07 | −1.99¹ |
| DRI71 | .02 | .41 |
| DRI75 | −.06 | −1.26 |
| DMNA75 | −.007 | −.47 |
| DNJV | .04 | 1.92² |
| DNJ75 | .01 | .46 |
| DNJ77 | .02 | .73 |
| DNYD71 | .01 | .70 |
| DNYD76 | .04 | 2.69¹ |
| DNYU71 | .06 | 2.97¹ |
| DNYU76 | .03 | 1.69² |
| DWA76 | .01 | .66 |
| DWI73 | .05 | 3.20¹ |
| DWPVPR | .03 | 1.87² |
| DAL | −.09 | −4.07¹ |
| DAR | −.24 | −9.72¹ |
| DAZ | −.21 | −9.19¹ |
| DCA | −.35 | −22.61¹ |
| DCO | −.25 | −11.35¹ |
| DCT | −.19 | −6.90¹ |
| DDC | −.06 | −1.08 |
| DDE | −.009 | −.18 |
| DFL | −.22 | −10.85¹ |
| DGA | −.19 | −9.43¹ |
| DIA | −.08 | −4.26¹ |
| DIL | −.06 | −3.54¹ |
| DID | −.09 | −2.90¹ |
| DIN | −.09 | −6.62¹ |
| DKS | −.03 | −1.34 |
| DKY | −.15 | −8.75¹ |
| DLA | −.27 | −12.57¹ |
| DMA | −.08 | −4.53¹ |
| DMD | −.05 | −2.62¹ |
| DME | −.20 | −8.35¹ |
| DMI | −.08 | −4.33¹ |
| DMN | −.07 | −4.55¹ |
| DMO | −.03 | −1.73² |
| DMS | −.11 | −4.60¹ |
| DMT | −.15 | −5.06¹ |
| DNB | −.12 | −7.31¹ |
| DNC | −.09 | −4.33¹ |
| DND | −.12 | −4.41¹ |
| DNH | −.25 | −7.78¹ |
| DNJ | −.10 | −5.10¹ |
| DNM | −.29 | −10.30¹ |
| DNV | −.13 | −3.46¹ |
| DNYD | −.03 | −1.31 |
| DNYU | −.02 | −.77 |
| DOK | −.26 | −11.77¹ |
| DOR | −.30 | −13.08¹ |
| DPA | −.04 | −2.00¹ |
| DRI | −.09 | −1.71² |
| DSC | −.10 | −3.60¹ |
| DSD | −.24 | −9.03¹ |
| DTN | −.10 | −4.84¹ |
| DTX | −.22 | −13.36¹ |
| DUT | −.35 | −10.71¹ |
| DVA | .05 | 2.07¹ |
| DVT | −.09 | −2.36¹ |
| DWA | −.44 | −26.51¹ |
| DWI | −.10 | −5.89¹ |
| DWP | −.003 | −.19 |
| DWV | −.03 | −1.13 |
| DWY | −.28 | −9.85¹ |
| D70 | −.04 | −5.38¹ |
| D71 | −.06 | −7.49¹ |
| D72 | −.03 | −3.67¹ |
| D73 | −.02 | −2.43¹ |
| D74 | −.007 | −1.00 |
| D75 | −.04 | −4.70¹ |
| D76 | .00004 | .01 |
| D77 | −.004 | −.50 |
| D78 | .01 | 1.53 |

¹ Indicates significant at 5 percent level.

² Indicates significant at 10 percent level.

We derived the results from ordinary least squares (OLS) procedures, which include all the explanatory variables simultaneously. We also considered using stepwise regression procedures. Stepwise procedures are very useful when the number of explanatory variables is large, but the results are also highly sensitive to intercorrelations among the variables.

The equations for occupancy and length of stay had considerably greater explanatory power than that for admissions per bed. Explanatory power is measured by the R² statistic, which indicates the proportion of the overall variation in the dependent variable that can be explained by all four sets of independent variables. The value of R² was .37 for occupancy and .34 for length of stay, compared to .16 for admissions per bed. In each equation, variables from all four sets contributed to explaining the observed variation in usage. The estimated coefficients, however, suggest that hospital use is largely explained by characteristics of the hospital's market environment, such as the social and economic characteristics of the population and the availability of physicians.
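As a reminder of what the statistic measures, the following minimal sketch computes the proportion of variation explained from a set of observed and fitted values. The numbers are hypothetical and chosen only to make the arithmetic easy to follow; they are not drawn from the study data.

```python
def r_squared(actual, fitted):
    """Share of the total variation in `actual` accounted for by `fitted`."""
    mean = sum(actual) / len(actual)
    ss_total = sum((a - mean) ** 2 for a in actual)          # total variation
    ss_resid = sum((a - f) ** 2 for a, f in zip(actual, fitted))  # unexplained part
    return 1.0 - ss_resid / ss_total


# Hypothetical occupancy rates and the values predicted by some fitted equation.
actual = [0.70, 0.74, 0.78, 0.82]
fitted = [0.72, 0.74, 0.78, 0.80]

r2 = r_squared(actual, fitted)
print(round(r2, 2))
```

An R² of .16, as in the admissions per bed equation, therefore means that the unexplained sum of squares is still 84 percent of the total.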

Environmental variables contributed substantially toward explaining the overall variation in admissions per bed, although about one-fifth of the rate-setting programs studied also had an effect. The programs identified as having a significant positive effect were the small, voluntary program in Colorado (1975 and after) and the programs in Nebraska, downstate New York (1971 and after), and Rhode Island (1975 and after). Only one of the programs studied, that in Wisconsin, had a significant negative effect on rates of admission (Table 6). Although the explanatory power of the equation was low, the validity of the program-specific coefficients should not be jeopardized as long as the omitted variables are unrelated to rate-setting activities.

We used a semi-log equation to isolate program effects on average length of stay. This equation, which is displayed in Table 7, has twice the explanatory power of the admissions per bed equation (R² = .34). Length of stay, like admission rates, seems to be predicted best by population and environmental characteristics. The pronounced downward time trend described in the previous section was captured by the time dummy variables. However, after these factors, as well as individual State effects, had been accounted for, there remained evidence that rate-setting has affected length of stay. In many areas, the effects were as anticipated. The per diem-based systems in New York, New Jersey (voluntary program), and Western Pennsylvania all seem to have induced increases in average length of stay. We also observed effects for several other programs. Rate-setting increased length of stay in Kentucky, Wisconsin, and a cohort of hospitals in Maryland⁴ and decreased it in Nebraska.
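Because the dependent variable in Model II is a natural logarithm, a program dummy coefficient b corresponds to a proportional change of exp(b) − 1 in length of stay. The sketch below applies that standard conversion to four of the rounded coefficients shown in Table 7. The percentages are illustrative only: they are not reported in the study, and they inherit the rounding of the published coefficients.

```python
import math


def percent_effect(coefficient):
    """Percent change in the outcome implied by a dummy coefficient in a semi-log model."""
    return (math.exp(coefficient) - 1.0) * 100.0


# Rounded program coefficients as they appear in Table 7.
table7_coefficients = {
    "DKY75": 0.05,    # Kentucky
    "DWI73": 0.05,    # Wisconsin
    "DMDB76": 0.08,   # Maryland cohort
    "DNBA73": -0.07,  # Nebraska
}

effects = {name: percent_effect(b) for name, b in table7_coefficients.items()}

for name, pct in effects.items():
    print(f"{name}: {pct:+.1f} percent")
```

For coefficients this small the percentage effect is close to 100 times the coefficient itself, so a coefficient of .05 can be read as a lengthening of stays by roughly 5 percent.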

Finally, rate-setting programs have had a positive effect on hospital occupancy rates, resulting in the increased use of bed capacity. These findings were also generally consistent with our earlier hypotheses. All but two of the individual program coefficients were positive, and neither of the two negative coefficients was statistically significant. Positive effects were significant for almost half of the programs studied, including those in New York, New Jersey, Maryland (one cohort only), Colorado (1975 and after), Rhode Island (1971 and after), Arizona, Nebraska, and Kentucky. The positive effects registered by the other programs were too weak to be considered conclusive.

Given a certain hospital capacity, the occupancy rate is determined by the rate at which patients are admitted and the length of time they stay in the hospital. The implication of this relationship for this analysis is that there should be some consistency between program effects on occupancy and its determinants: admission rate and length of stay. That is, if rate-setting is found to increase both the admission rate and length of stay, it should also yield increased occupancy. Of course, if only one of the two components was affected, then the net impact on occupancy will depend upon the magnitude of the effect on the individual component. If a small positive effect was registered for the admission rate, for example, it may have been offset by a concomitant—though insignificant—decrease in length of stay.
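The relationship described above can be made concrete with the usual accounting identity: patient days equal admissions times average length of stay, so the occupancy rate equals admissions per bed times average length of stay divided by the days in the year. The sketch below assumes a 365-day year and a fixed bed count, and the input values are hypothetical rather than taken from the study files.

```python
DAYS_PER_YEAR = 365


def occupancy_rate(admissions_per_bed, avg_length_of_stay):
    """Occupancy implied by annual admissions per bed and average stay in days."""
    return admissions_per_bed * avg_length_of_stay / DAYS_PER_YEAR


# Hypothetical baseline hospital: 36.5 admissions per bed, 7.6-day average stay.
baseline = occupancy_rate(36.5, 7.6)

# Admissions rise by one per bed with stays unchanged: occupancy rises.
more_admissions = occupancy_rate(37.5, 7.6)

# The same rise in admissions, offset by a 0.2-day drop in average stay.
offsetting = occupancy_rate(37.5, 7.4)

print(round(baseline, 4), round(more_admissions, 4), round(offsetting, 4))
```

The third case shows how a modest increase in the admission rate can leave occupancy essentially unchanged when it is accompanied by a small decline in length of stay.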

Our analysis generated consistent results. Table 9 summarizes the apparent mechanisms by which occupancy was increased in the States or areas for which we found a significant program effect on occupancy. Most of the occupancy rises apparently resulted from either an increased admission rate or lengthened stays. In two States—Rhode Island and Arizona—occupancy was significantly affected by rate-setting, though neither component was. Since the program effect on both admission rate and length of stay was weakly positive in both of these States, the slight effects on both components most likely produced the increase in occupancy.

Table 9. Mechanism for Occupancy Increases Induced by Prospective Reimbursement.

| Reason(s) for Increased Occupancy | Prospective Reimbursement Program |
|---|---|
| Increased rate of admission | Downstate New York (1971 and after); Colorado (1975 and after); Nebraska |
| Increased length of stay | Downstate New York (1976 and after); Upstate New York (1971 and after); Upstate New York (1976 and after); Maryland (second cohort); New Jersey (voluntary program); Kentucky |
| Unclear | Rhode Island (1971 and after); Arizona |

Three programs that are not shown in Table 9 affected some measure(s) of utilization, though their effects on occupancy were not significant. In each of the three States/areas governed by these programs (Wisconsin, Rhode Island, and Western Pennsylvania), rate-setting had offsetting effects, increasing the admission rate while decreasing length of stay, or vice versa. In Rhode Island (1975 and after), the admission rate increased while length of stay decreased, both possibly as a result of the rate-setting program, while the converse occurred in Wisconsin and Western Pennsylvania. In Wisconsin, both effects were statistically significant.

Two interesting observations can be made about Table 9. The first is the presence of two of the three per diem-based systems (New York and New Jersey). Under both, rate regulation was associated with an increase in the utilization measures, consistent with our a priori hypotheses. Second, the results provide some evidence that the occupancy penalty in New York has had the desired effect, but also that the imposition of a length of stay penalty in New York may not have been effective. All four versions of the New York program were found to increase relative occupancy rates, which is convincing evidence that the combination of incentives contained in the New York program has produced occupancy increases. However, it seems that hospitals achieved these increases, at least in part, by raising length of stay. In the upstate regions of the State, program-induced length of stay increases were observed after 1971 and again after 1976. Such an effect on length of stay was found for the downstate area (dominated by New York City) only after 1976—and after the length of stay penalty was imposed.

Overall, the results of this analysis suggest that rate-setting programs have affected all three volume measures, especially occupancy. On average, the occupancy rate in hospitals governed by rate-setting programs was more than two percentage points higher than that in control hospitals. Rate regulation also had a similar effect in the secondary study group. Since these results take into account relevant environmental factors, State differences, and time trends, we can reasonably conclude that the programs have led to different patterns of bed usage within the hospitals under their jurisdiction.

Finally, while they were not the central focus of this study, the effects of other regulatory efforts are worthy of mention, particularly in that there are often direct interactions among the programs within States. The regression results indicate that Certificate of Need programs do not have a significant impact on any of the three utilization measures studied. Such an impact—though indirect—might have been anticipated because of the program's jurisdiction over the hospital bed supply. In contrast, the findings for the PSRO program were strongly significant. The existence of binding review in a hospital appears to lead to increased admissions per bed and a reduced length of stay, with a net positive effect on occupancy. This implies that PSROs encourage higher patient turnover, by cutting back on extra days of stay and substituting more admissions. Along with the effects of rate-setting, the effects of these companion programs will be further investigated during the next phase of the NHRS.

County-Level Analysis

Models similar to those presented in Tables 6 through 8 were also estimated with counties, rather than individual hospitals, as the units of analysis. In theory, analyzing areawide effects will enable a better assessment of the influence rate-setting programs have had on the overall use of hospitals by the population in States/areas that have implemented such programs. In practice, however, the county-level analysis is likely to be flawed for several reasons. One reason is that county-level data on hospital utilization must be aggregated from individual hospitals' responses to the AHA annual survey, and because of missing data over the course of the study period, the county data include fluctuations that reflect inconsistent reporting rather than actual trends. The county-level aggregation means that the same amount of missing data will affect a higher proportion of observations in the county file than it will in the hospital-level file.

A second potential problem relates to using counties as units of analysis. In doing so, it is assumed that counties approximate market areas—an assumption that is commonly used in empirical work. Specifically, in this case, it is necessary to assume that the hospital use measured in all hospitals located within a particular county approximates the utilization patterns of county residents. This assumption will not always hold. Violations of this assumption will jeopardize the results of the analysis if they are not consistent over time, particularly if shifts that are unrelated to rate-setting coincide with its implementation. County-level data that reflected patient origin, rather than hospital location, would have been much more appropriate for this analysis. Since such data were not available, however, the county file described earlier was used to evaluate program effects, and the results should be considered tentative.

We analyzed four types of utilization measures using the county file. Admissions per bed, average length of stay, and occupancy rate were again included, although at the county rather than the hospital level of aggregation. We added admissions per capita to the county-level dependent variables. Table 10 presents a summary of the results obtained from our regression equations. (Detailed results are available from the authors upon request.)

Table 10. Summary of Prospective Reimbursement Effects as Derived from County-Level Analysis.

| Dependent Variable | Significant¹ Program Coefficients | | | | | |
|---|---|---|---|---|---|---|
| TADMBED | DKY75 | DWPVPR | | | | |
| | −1.41 | −2.94 | | | | |
| | (−1.66³) | (−2.65²) | | | | |
| ADMPC | DNB73 | | | | | |
| | 14.49 | | | | | |
| | (2.22²) | | | | | |
| (ℓn)ALOS | DKY75 | DNB73 | DNYU71 | DNYU76 | DWA76 | DWPVPR |
| | .05 | .08 | .09 | .08 | −.06 | .08 |
| | (2.05²) | (3.27²) | (3.00²) | (2.02²) | (−2.03²) | (2.51²) |
| OCCRATE | DCOA75 | DMD76 | DNB73 | DNYD71 | DNYU71 | DNYU76 |
| | .05 | .06 | .03 | .06 | .03 | .05 |
| | (1.66³) | (2.18²) | (2.34²) | (2.53²) | (2.01²) | (4.30²) |

¹ Coefficients among the prospective reimbursement dummy variables that achieved a 90 percent or greater level of significance. Figures in parentheses are t-statistics.

² Indicates significant at .05 level.

³ Indicates significant at .10 level.

In the county-level equations, rate-setting was again found to have the least effect on the admission rate. The included variables explained 24 percent of the overall variation in admissions per bed and 47 percent of the variation in admissions per capita. Environmental variables, such as the demographic and economic characteristics of the county population and the availability of physicians and alternative facilities, have the most impact on admission rates. Very few rate-setting programs have significant effects. We found significant downward effects on admissions per bed for Kentucky and Western Pennsylvania. In both cases, the direction of effect was the same as that estimated at the hospital level, but the latter results had not been significant. None of the programs found to be significant in the earlier analysis proved significant according to the county-level equations.

The ratio of admissions per bed will, of course, measure changes in beds as well as admissions, thus confusing its interpretation somewhat. The ratio of admissions to population was therefore included among the dependent variables to measure the number of admissions within a county relative to the size of its population. While the resulting equation had the highest explanatory power of any we estimated (R² = .47), rate regulation did not prove to influence per capita admissions significantly. Only the Nebraska program had an effect.

We found substantially greater program effects for average length of stay and occupancy. The semi-log equation used to determine the effects of rate-setting on the county-wide average length of stay explained about 29 percent of the overall variation. A number of programs appeared to have an impact on this measure. We found upward effects on length of stay for several of the per diem-based systems—New York (upstate only) and Western Pennsylvania—and also for Kentucky. For some programs, however, there were some apparent inconsistencies between the hospital- and county-level results. The direction of effect for the Nebraska program, for example, changed for the county-level analysis, from negative to positive, and we found a significant downward effect for the Washington program.

Apparent inconsistencies between the hospital- and county-level results can be accounted for in several ways. One possibility, of course, is that the reporting and aggregation problems described previously have distorted the county-level finding. Another, perhaps more likely, explanation is that county-level aggregations will tend to be dominated by the larger hospitals within the counties, whereas in the hospital-level analysis, each hospital had essentially equal weight. To the extent that effects in larger hospitals differ from those in smaller facilities, the two sets of results might also be expected to differ. This hypothesis will be tested during the forthcoming round of analysis.
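The weighting argument can be seen in a small sketch. The county below is hypothetical: one large hospital with long stays and two small hospitals with shorter stays. A county-level aggregate pools patient days and admissions, while a hospital-level analysis gives each facility equal weight.

```python
# Hypothetical county: (annual admissions, annual patient days) for each hospital.
hospitals = [
    (20000, 180000),  # large hospital, 9.0-day average stay
    (1500, 9000),     # small hospital, 6.0-day average stay
    (1000, 6000),     # small hospital, 6.0-day average stay
]


def pooled_length_of_stay(records):
    """County-level figure: total patient days divided by total admissions."""
    total_admissions = sum(adm for adm, _ in records)
    total_days = sum(days for _, days in records)
    return total_days / total_admissions


def mean_hospital_length_of_stay(records):
    """Hospital-level figure: each hospital's average stay counts equally."""
    return sum(days / adm for adm, days in records) / len(records)


county_los = pooled_length_of_stay(hospitals)
equal_weight_los = mean_hospital_length_of_stay(hospitals)

print(round(county_los, 2), round(equal_weight_los, 2))
```

The pooled figure sits close to the large hospital's value, so a program effect confined to large hospitals would show up more strongly in county-level results than in hospital-level results, and conversely for an effect confined to small facilities.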

Finally, the county-level equation to determine rate-setting effects on occupancy, which explained about 42 percent of the overall variation, reinforced the general conclusion reached earlier that rate regulation increased occupancy levels. While fewer programs demonstrated significant effects in the county-level equations, all of those found to be significant had also shown strong positive effects in the hospital-level analysis. This latter group included Colorado (1975 and after), Maryland (1976 and after),⁵ Nebraska, and New York.

Summary and Conclusions

The results presented in this paper have been derived from an initial, preliminary investigation of the effects of State rate-setting programs on three measures of hospital utilization: admission rates (per bed and per capita), average length of stay, and occupancy levels. The results of our analyses suggest that the dominant influences on utilization include market area characteristics, such as age, income and health status of the population, availability of nursing home beds and physicians, as well as other aspects of the county and State environment that were not directly measurable. However, the results also suggest that rate regulation has affected certain volume measures. The principal conclusion supported by the evidence is that rate-setting programs have brought about an increase in hospital occupancy by increasing the average length of stay of patients once they are in the hospital rather than by increasing the number of patients admitted. A corollary is that few programs have had a measurable effect on admissions, measured either per bed or per capita.

The results supported many, though not all, of our a priori hypotheses. Most strongly supported were our hypotheses about the effects of per diem-based systems. All three of the systems based on per diem produced an increase in average length of stay, and occupancy increased significantly in two of the three. There was far less evidence that the programs influenced the admission rate.

Hospitals' responses to rate-setting in the States with charge-based systems conformed less well to our a priori predictions, although since there were relatively few significant findings among these programs it is difficult to assess the extent of conformity. The predominance of null findings for this group of programs may be a function of their lack of stringency, since most of the charge-based systems were earlier classified into the less stringent categories. However, the absence of findings may support our hypothesis that the most pronounced effects of charge-based systems will be on intensity of care, rather than the more aggregate measures explored in this analysis. It is not possible, given available data, to evaluate that hypothesis.

The largest discrepancy between predicted and actual effects occurred for the Maryland program, for which decreases in length of stay and occupancy had been predicted but increases in both were found. The findings again strongly suggest that the unit of payment (in this case, the charge-based rate structure) dominates the incentives facing hospitals and that additional penalties or incentives do not offset it. A similar conclusion may be drawn from the results for New York. Both sets of findings are discussed further below.

The results also indicate that rate-setting does not have much effect on the rate at which people are hospitalized. This finding is not inconsistent with our prior expectations, which were that admission rates would either not change or that the net effect of the program could not be predicted in advance of the analysis. If admission policies have changed as a result of rate-setting, the changes have been too small to be measured by this analysis.

Finally, our results supply preliminary answers to the two broad questions the analyses were designed to address. The first of these related to the interaction between unit costs and volumes of services. Coelen and Sullivan's findings (1981) about the impact of rate-setting on hospital costs per adjusted patient day, per adjusted admission, and per capita can now be partially explained by our results.

The effectiveness in slowing the growth in average cost per day that has been attributed to rate-setting may have resulted in part from program-induced increases in occupancy and length of stay. If, as is commonly assumed, the additional days were relatively low in cost, the overall effect would have been a deceleration in average costs per day. This could account for the program effects found by Coelen and Sullivan for Arizona, Kentucky, Maryland, New York, and possibly New Jersey. In the remaining States where cost per day effects were identified (Connecticut, Indiana, Massachusetts, and Minnesota), no significant effects of rate-setting on volumes of service were found, indicating that other factors must account for the findings.
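The arithmetic behind this argument can be sketched briefly. The day counts and dollar amounts below are invented, and the function name is hypothetical; the only assumption carried over from the text is that the additional days cost less than the average day.

```python
# Hypothetical illustration: day counts and dollar amounts are invented.

def average_cost_per_day(base_days, base_cost_per_day, extra_days, extra_cost_per_day):
    """Average cost per day after adding extra days at a different daily cost."""
    total_cost = base_days * base_cost_per_day + extra_days * extra_cost_per_day
    return total_cost / (base_days + extra_days)


# 50,000 days at $200 each, plus 5,000 additional low-cost days at $90 each.
without_extra = average_cost_per_day(50_000, 200.0, 0, 0.0)
with_extra = average_cost_per_day(50_000, 200.0, 5_000, 90.0)

print(without_extra, with_extra)
```

In this example the average falls from $200 to $190 per day even though total cost rises, so a lower average cost per day need not reflect any reduction in total spending.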

Similar findings with respect to cost per admission were not as readily explained by our results. In three of the programs with a significant downward effect on cost per admission (New York, New Jersey, and Maryland), rate regulation was associated with a significantly increased length of stay. For the remaining programs, our findings were not statistically significant. In order for the programs to have had the downward effect on costs found by Coelen and Sullivan, therefore, there must have been significant changes in inputs per stay in the hospital. Intensity of service, for example, may have declined as a result of the financial incentives created by the programs. Such a decline in intensity is, however, consistent with our a priori hypotheses concerning systems based on per diem. The cost effects in Maryland, a charge-based system in which a positive program effect on length of stay was also found, may in part be explained by the GIR system imposed on a portion of Maryland hospitals to control intensity increases.
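The reasoning here rests on the identity that cost per admission equals cost per day times length of stay. The sketch below assumes that identity holds exactly, which is a simplification: the cost measures cited are per adjusted patient day and per adjusted admission, so the relationship is only approximate in practice. The function name and the 5 percent figure are hypothetical.

```python
# Hypothetical illustration; assumes cost per admission = cost per day * length of stay.

def max_cost_per_day_ratio(los_growth):
    """Largest new/old cost-per-day ratio at which cost per admission does not rise.

    los_growth is the proportional increase in length of stay (0.05 = 5 percent).
    """
    return 1.0 / (1.0 + los_growth)


# Invented example: average length of stay rises by 5 percent.
ratio = max_cost_per_day_ratio(0.05)
required_decline = 1.0 - ratio  # about 4.8 percent

print(round(required_decline, 4))
```

Under these assumptions, a 5 percent increase in length of stay must be accompanied by a decline of more than about 4.8 percent in cost per day before cost per admission can fall, which is why a reduction in inputs per stay is needed to reconcile the two sets of findings.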

Coelen and Sullivan's results with respect to cost per capita revealed less of a program effect, with significant effects found only for New York (1976 and after) and Washington. Our county-level findings similarly showed less effect. While rate-setting was mostly associated with lower admissions per capita in our analysis, the effects were almost uniformly insignificant. For both New York and Washington, the program coefficients were negative, but not significant. The only relatively strong program effect measured at the county level was an increase in county-wide bed occupancy. Such an increase would not necessarily lead to a deceleration in expense per capita; in fact, it might be expected to increase per capita expenses. Our analysis, therefore, does not provide an explanation for Coelen and Sullivan's findings with respect to per capita costs. Since both analyses were weakened by problems with data availability and aggregation, however, it would be premature to draw definitive conclusions from a comparison of the two sets of results.

The second question to be investigated was whether rate-setting might affect hospitals differently, depending upon the specific incentives created by individual programs. Of particular interest was the unit of payment employed by the system (that is, per diem rates or charges per unit of service) and the specific, volume-related incentives arrayed in Tables 3 and 4. Several preliminary answers to this question can be derived from our results. First, per diem-based systems seem to produce more consistent and predictable effects than do charge-based systems. The results for New York, New Jersey, and, to a lesser extent, Western Pennsylvania strongly suggest that payment on a per diem basis fosters an increase in length of stay and, as a consequence, occupancy rate. Charge-based systems also promote such increases, but less consistently.

Second, our results provide insufficient basis for evaluating the effectiveness of volume adjustments (used during rate review). In two States, Connecticut and Washington, the introduction of such adjustments was too recent for their effects to be measured by our data. Elsewhere (that is, in the Massachusetts Medicaid program), their application was probably too limited to be detected in aggregate data. These adjustments have been in place long enough, and on a large enough scale, only in New Jersey, Maryland, and Rhode Island. Since the effects of the post-1974 New Jersey programs were statistically insignificant, it appears that the adjustment has not had a discernible effect. For Maryland and Rhode Island, the results for the most part suggest that volumes have not decreased, but have increased under the program. While these findings certainly do not suggest that the adjustment has been effective, they are also, to some extent, confounded by other program characteristics.

Lastly, the results provide preliminary evidence that supplementary volume-related penalties or incentives are not effective in offsetting the “perverse incentives” created by the unit of payment. The results for New York and Maryland provide most of this evidence. In both States, the measured program effects on length of stay are counter to those that would have been anticipated if the relevant penalties had worked.

As of 1977, the three major components of the New York system (Medicaid, Upstate Blue Cross, and Downstate Blue Cross) instituted additional penalties for excessive length of stay relative to each hospital's peer group average. If this penalty had been successful, a negative (or at least a neutral) effect of the New York program during the later years of the study should have been uncovered. In fact, in both the downstate and upstate regions, the effect of the program on length of stay was strongly positive throughout the study period. This leads to the conclusion that the length of stay has been dominated by other aspects of the system, most likely the per diem rate structure. The results thus imply that hospitals gain more from increasing length of stay than they lose because of the penalty. It should be noted, however, that the availability of data only through 1978 limited the amount of experience with the length of stay penalty that could be observed. Later analyses should therefore be more instructive.

In contrast, the occupancy penalty that has long been a feature of the New York system seems to be effective, although it is impossible to separate the effects of the payment unit and occupancy penalty since both would cause hospitals to respond in the same way. Since the inception of rate-setting in the State, the program has significantly increased occupancy in both the upstate and downstate regions.

In the mid-1970s, the Maryland program implemented on a small scale a supplementary system to penalize hospitals that increased length of stay and intensity within a diagnostic category (and to reward those that decreased them). Had this incentive system (the GIR) been successful, a negative (or neutral) effect on length of stay should have been uncovered. In fact, for one cohort of Maryland hospitals, the overall effect of the rate-setting program was to lengthen stays. For the remaining cohorts, which contain the majority of hospitals participating in the GIR system, no significant program effect was observed. This may, of course, indicate that the GIR provisions exactly offset the other incentives contained in the Maryland program. The results may also suggest that, if the GIR is working, its effects have been to constrain measures of intensity other than length of stay.

This analysis, it should be emphasized, must be considered preliminary and exploratory. Future analyses of the effects of rate-setting programs on volumes of service will include both methodological refinements and more reliable data. In these analyses, program effects on additional aspects of hospital utilization, including use of outpatient services and rates of surgery, will also be investigated. These later analyses will support sounder conclusions about program effects. The results of this preliminary evaluation provided the basis for an initial assessment, but the development of a more complete understanding of hospital rate-setting programs must await further exploration and more reliable information.

Acknowledgments

The authors wish to acknowledge the capable research assistance of Kathe Kneeland. Craig Coelen and an anonymous reviewer provided helpful and instructive comments on an earlier version of the paper.

Footnotes

This article was prepared as part of the National Hospital Rate-Setting Study, Contract No. HCFA 500-78-0036.

Throughout this discussion, the New York program will be treated as a single system, although it in fact has four component parts: Medicaid, Upstate Blue Cross, Downstate Blue Cross, and commercial insurance/self-pay patients. While the components have historically differed, these differences are not significant enough to cause us to predict different directions of effect. In our empirical analyses, however, the upstate and downstate regions of New York will be separated to test the hypothesis that different effects might have occurred.

Occupancy penalties employ minimum standards for occupancy in a particular service (for example, 80 percent in the adult medical-surgical service). If actual occupancy is lower than the standard (70 percent, for example), hospitals will be reimbursed as if occupancy were at the standard—the additional patient days would be imputed. Thus, hospitals would be paid less per unit than they would need to meet their costs, given the low occupancy.
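The imputation described in this footnote can be sketched as follows. The sketch is a simplification under stated assumptions: it applies the standard to a whole hospital rather than to a particular service, it treats the rate as allowed cost divided by the larger of actual and standard days, and the function name, bed count, and dollar amounts are all hypothetical. Actual program formulas may differ.

```python
# Hypothetical illustration of an occupancy penalty; names and numbers are invented.

def per_diem_rate(allowed_cost, actual_days, beds, occupancy_standard, days_in_period=365):
    """Rate per day when patient days are imputed up to the occupancy standard."""
    standard_days = occupancy_standard * beds * days_in_period
    # The divisor is never smaller than the days implied by the standard.
    imputed_days = max(actual_days, standard_days)
    return allowed_cost / imputed_days


beds = 100
actual_days = 25_550          # 70 percent occupancy of 100 beds over 365 days
allowed_cost = 5_110_000.0    # implies $200 per actual patient day

rate = per_diem_rate(allowed_cost, actual_days, beds, occupancy_standard=0.80)
revenue = rate * actual_days
shortfall = allowed_cost - revenue

print(round(rate, 2), round(shortfall, 2))
```

In this invented case the hospital needs $200 per day to cover its costs but is paid $175, because the rate is computed as if 29,200 days (80 percent occupancy) had been provided. The resulting shortfall gives the hospital a reason to raise occupancy toward the standard.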

A more detailed discussion of the sample selection procedure, data collection, and screening processes is contained in Coelen and Sullivan (1981).

The program-specific dummy variables for Maryland were applied to two cohorts of hospitals, depending upon the period during which the initial budget review was performed. The first cohort (A) includes those hospitals for which budget review was completed during 1975 (in time for the establishment of fiscal year 1976 rates). The second cohort (B) includes the remaining hospitals. The year contained in the variable name reflects the time period to which the dummy variable applied.
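A cohort-specific dummy variable of this kind might be constructed as sketched below. The function and argument names are hypothetical, and the "year and after" form is an assumption based on the way the variables are labeled in the text (for example, "Maryland (1976 and after)"); the study's actual variable definitions are not reproduced here.

```python
# Hypothetical illustration; names and the "year and after" form are assumptions.

def program_dummy(state, cohort, year, *, program_state, program_cohort, first_year):
    """Return 1 if the observation falls under the cohort's program period, else 0."""
    in_program = (
        state == program_state
        and cohort == program_cohort
        and year >= first_year
    )
    return int(in_program)


# Cohort A: budget review completed in 1975, so rates applied from fiscal year 1976.
md_a_1975 = program_dummy("MD", "A", 1975, program_state="MD", program_cohort="A", first_year=1976)
md_a_1976 = program_dummy("MD", "A", 1976, program_state="MD", program_cohort="A", first_year=1976)
md_b_1976 = program_dummy("MD", "B", 1976, program_state="MD", program_cohort="A", first_year=1976)

print(md_a_1975, md_a_1976, md_b_1976)
```

Separate variables of this form for each cohort allow the estimated program effect to differ according to when a hospital first came under budget review.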