Abstract

In 1983, the Health Care Financing Administration funded a multiyear evaluation of Medicaid demonstrations in six States. The alternative delivery systems represented by the demonstrations contained a number of innovative features, most notably capitation, case management, limitations on provider choice, and provider competition. Implementation and operation issues as well as demonstration effects on utilization and cost of care, administrative costs, rate setting, biased selection, quality of care, and access and satisfaction were evaluated. Both primary and secondary data sources were used in the evaluation. This article contains an overview and summary of evaluation findings on the effects of the demonstrations.

Introduction

This article contains a detailed overview and summary of the activities and findings of the Nationwide Evaluation of Medicaid Competition Demonstrations1. Six States (California, Florida, Minnesota, Missouri, New Jersey, and New York) applied for and received waivers of regulations from the Health Care Financing Administration (HCFA) in 1982 and 1983 for demonstration projects to implement and test alternative strategies for the delivery and financing of health and medical services to Medicaid beneficiaries.2 The goal of the evaluation was to provide a comprehensive understanding and assessment of the following:

Implementation and operation issues.3

Utilization and costs of care.

Administrative costs.

Rate setting.

Biased selection and Medicare-Medicaid dual eligibles.

Quality of care and access and satisfaction.

The evaluation was intended to inform both researchers and policymakers at State and Federal levels about the impact of capitation and case management on the delivery of Medicaid services4.

The Medicaid Competition Demonstrations were initiated against a backdrop of a rapidly changing health policy environment (Freund and Neuschler, 1986). After a decade of sharp health care cost escalation and soaring Medicare and Medicaid outlays, decisionmakers in the public and private sectors were experimenting with new cost-management techniques. The competition demonstration projects examined under this evaluation were part of a wave of public sector reforms that occurred during this period as Government officials tried to control costs without slashing benefits to the poor and disabled.

The waiver of beneficiary freedom of choice of provider permitted under the demonstrations was critical because freedom of choice had been a hallmark of Medicaid since its enactment in 1965. Freedom of choice under the original legislation was intended to provide open access to the mainstream medical system. Although intended to avoid a “two-tier” system of care, the freedom-of-choice provision was also a deterrent to public authorities who tried to steer recipients toward more cost-conscious doctors, hospitals, and other providers of care, and away from providers judged to be less conservative in the use of resources. The freedom-of-choice waivers allowed States to try to reduce the amount of “doctor shopping” believed to characterize the patterns of service use by some Medicaid recipients.

The demonstrations examined during the evaluation were designed to address fundamental problems in the Medicaid program. One objective, for example, was to establish realistic payment schedules that would both encourage providers to treat Medicaid patients and, at the same time, distribute the risks and rewards of future cost trends between Government and providers. By pilot testing concepts (such as the primary care network) in which groups of primary care doctors entered into risk-sharing financial arrangements with payers, the demonstrations sought to determine whether the potential promise of these new incentives could be realized in practice. The demonstration programs in most sites were designed to enhance the access of Medicaid beneficiaries to mainstream providers who had not customarily participated in the Medicaid programs. These providers included existing prepaid health plans and primary care networks, as well as private physicians (Freund, 1987).

Although the original concept was to test alternatives to the traditional Medicaid system based on the principles of competition, the actual designs of the demonstrations did not approach a full competitive model. For example, States generally did not allow health plans to market to beneficiaries based on more attractive benefit packages or through special media campaigns. In many respects, the demonstrations were more a test of the feasibility and impact of a managed-care system, relative to a traditional, fee-for-service, open-ended system, than they were a test of competitive models for the delivery of Medicaid services. Further, the program designs placed real limits on the potential for cost savings as the demonstrations typically only encompassed a portion of eligible groups and covered services, and they usually omitted altogether such high-cost groups as the institutionalized population and the disabled.

Description of demonstrations

The various program characteristics are summarized in Table 1; more complete descriptions are contained in Hurley (1986) and Heinen, Fox, and Anderson (to be published).

Table 1. Selected demonstration characteristics, by demonstration site: Medicaid competition demonstrations.

| Demonstration site | Date of implementation | Type of enrollment | Estimated maximum enrollment | Organizational structure | Eligible population | Participating providers | Provider payment |
|---|---|---|---|---|---|---|---|
| California | | | | | | | |
| Monterey County¹ | June 1983 | Mandatory enrollment; choice of provider | 26,000 | Risk-assuming intermediaries that contract with primary care organizations and individuals and other providers of Medi-Cal covered services | Categorically eligible and medically needy | Case managers are primary care providers, including physicians, clinics, and hospitals | Intermediary capitated; fee-for-service plus fee to providers |
| Santa Barbara County | September 1983 | Mandatory enrollment; choice of provider | 21,000 | Risk-assuming intermediaries that contract with primary care organizations and individuals and other providers of Medi-Cal covered services | Categorically eligible and medically needy | Case managers are primary care providers, including physicians, clinics, and hospitals | Intermediary capitated; capitation and negotiated fees to providers |
| Florida² | September 1987 | Voluntary enrollment | NA | State contracts with prepaid plan | Supplemental Security Income frail elderly | Hospital | Capitation |
| Minnesota | | | | | | | |
| Hennepin and Dakota Counties | December 1985 | Mandatory⁴ enrollment; choice of provider | 23,750 | State contracts with prepaid health plans | Aid to Families with Dependent Children, aged, blind, disabled | Prepaid health plans | Capitation for plans |
| Itasca County | August 1985 | Mandatory enrollment; choice of provider | 3,441 | State contracts with county | Aid to Families with Dependent Children, aged, blind, disabled | Prepaid health plans | Capitation for county |
| Missouri | | | | | | | |
| Jackson County | November 1983 | Mandatory enrollment; choice of provider | 24,000 | State contracts with prepaid health plans and individual physicians | Aid to Families with Dependent Children | Plans include hospitals, individual practice association, neighborhood health centers, and individual physicians | Capitation for prepaid health plans; fee-for-service with case-management fee for physicians |
| New Jersey | June 1983 | Voluntary enrollment | 8,400 | State contracts with primary care organizations and individual physicians | Categorically eligible | Case manager must be primary care provider; includes health centers and physicians | Capitation |
| New York | | | | | | | |
| Monroe County³ | June 1985 | Mandatory enrollment; choice of provider | 41,300 | Intermediary that contracts with prepaid health plans | Aid to Families with Dependent Children, home relief, medically needy | Prepaid health plans | Capitation |

¹Terminated March 1985.

²Three of four proposed modules never implemented as demonstrations.

³Terminated December 1987.

⁴Random assignment employed in Hennepin County.

SOURCE: Research Triangle Institute: Nationwide Evaluation of Medicaid Competition Demonstrations Final Report, Volume 1, Dec. 1988.

Each of the programs that was implemented had some elements of capitation and/or case management, either between the State agency and providers (Missouri and New Jersey) or between the State agency and local risk-bearing entities (the California demonstrations and New York). All of the programs incorporated limitations on freedom of choice of provider, which required waiver authorization from HCFA. Within these limits, enrollees were permitted, and in fact encouraged, to select their own “gatekeeping” personal provider or plan.

Virtually all of the programs embraced case management as a central feature of utilization coordination and control. In some instances (e.g., Missouri and Minnesota), however, formal mechanisms, such as requiring assignment of enrollees to a specific case-managing physician, were left to the discretion of the participating prepaid health plan. Competition was an elusive goal in all of the demonstration programs. Multiple providers participated in each site except New York, which had only a single network-model health maintenance organization (HMO), and the single demonstration module implemented in Florida. There was little evidence of efforts to actively recruit enrollees or to offer expanded or differentiated service packages.

In contrast to these commonalities, a number of differences also existed. Participation among eligible beneficiary groups ranged from only those in the Aid to Families with Dependent Children (AFDC) program in Missouri to virtually all Medicaid-eligible persons in California and Minnesota. The New York demonstration intended to phase in all eligibility groups but, in practice, never got beyond AFDC and Home Relief. Participation was voluntary in New Jersey and Florida but mandatory for covered eligibles in all other sites, though several programs exempted such high-cost groups as the disabled. Case managers ranged from individual physicians to institutional providers such as hospitals and health centers. Only Missouri, New York, and Minnesota engaged existing HMOs in their demonstrations. The others, in effect, discouraged broader provider participation by capitating only primary care physicians (Santa Barbara and New Jersey) or by offering only a fee-for-service program with case management (Monterey). Florida proposed a variety of demonstration modules, some involving HMOs; however, the reimbursement levels of the Florida Medicaid program, as well as other factors, prevented implementation of all but one module. Hurley and Freund (1988a) proposed a typology for Medicaid managed care that encompasses the various characteristics described earlier.

Evaluation strategy and data sources

During the preparation of the evaluation plan, the critical research areas previously listed in the “Introduction” were identified and developed into a comprehensive evaluation strategy utilizing multiple data sources and analytical approaches4. Both primary and secondary data sources were employed to explore these issues and annual indepth case studies were planned at each demonstration site. Table 2 contains a summary of the principal research issues and the related data sources used to explore these issues.

Table 2. Research issues and data sources: Medicaid competition demonstrations.

| Outcome analysis areas | Selected research issues | Medicaid data files | Other secondary data | Actuarial consultant | Consumer survey | Medical chart review | Site case studies |
|---|---|---|---|---|---|---|---|
| Cost of care | Program cost changes, by source | • | • | • | | | |
| | Selection bias | • | • | • | | | |
| | Adequacy of rates | • | • | • | | | |
| Utilization | Use by patient and site characteristics | • | • | • | | | |
| | Effects of gatekeeping | • | • | • | | | |
| | Substitution effects | • | • | | | | |
| Quality of care | Differential outcomes for inpatients | • | | | | | |
| | Differential outcomes for outpatients | • | | | | | |
| | Effectiveness of plan-based quality control | • | • | | | | |
| | Health status and health habits | • | | | | | |
| Access | Convenience | • | • | | | | |
| | Travel and wait times | • | • | | | | |
| | Symptom-response and use-disability ratios | • | • | | | | |
| Enrollment and disenrollment | Plan choice | • | • | | | | |
| | Reasons for disenrollment | • | • | • | | | |
| | Grievances | • | • | | | | |
| Provider information | Physician decisions to join or quit | • | | | | | |
| | Physician characteristics | • | • | | | | |
| | Physician satisfaction with program | • | | | | | |
| Satisfaction | Perceived quality | • | | | | | |
| | Demographic correlates | • | • | | | | |
| | Effects on use | • | • | | | | |

SOURCE: Research Triangle Institute: Nationwide Evaluation of Medicaid Competition Demonstrations Final Report, Volume 1, Dec. 1988.

The research issues associated with utilization and cost of services and with quality of care were formulated as testable hypotheses related to the characteristics of the demonstration program designs. The issues were examined using both cross-sectional and pre-post research designs. Comparison groups for the demonstration sites were chosen to permit appropriate contrasts.

Primary data collected for the evaluation included a survey of Medicaid beneficiaries, medical chart abstractions, and case study information. Secondary sources of data included Medicaid Management Information System (MMIS) eligibility and claims data maintained by the States or private contractors, administrative cost reports, and reviews of rate-setting methodologies. Brief descriptions of these sources are presented in the following sections.

Medicaid consumer survey

The Medicaid consumer survey was the major primary data collection activity for the evaluation. Its principal objective was to obtain information on health status, health care use, satisfaction, health habits, and background characteristics of respondents. The survey was conducted in late spring 1986 through personal interviews with stratified random samples of AFDC enrollees and nonenrollees in Missouri, California, and Minnesota. Information was collected for the sample adult and for one randomly selected child. Supplemental Security Income aged recipients were also surveyed in California. The overall response rate, based on the number of completed cases that could be used in statistical analyses, was 86.8 percent. The number of completed interviews ranged from 189 to 264 per plan or per demonstration and comparison site, for a total of approximately 2,700 adult respondents. Consumer survey data were used in the analyses of service use, quality of care, and beneficiary access and satisfaction.

Secondary claims and eligibility data

Secondary data from State-level and plan-level MMISs were essential for the analysis of service utilization and cost effects. Eligibility and claims files were requested from the States, from the plans, or, in the case of California, from the HCFA-funded Tape-to-Tape project. The Research Triangle Institute (RTI) built the secondary data obtained by the evaluation into uniform service and eligibility files, initially modeled on the Tape-to-Tape approach but subsequently adapted to the evaluation's particular needs and circumstances. Event-level files were then constructed for medical (ambulatory), inpatient, and drug (prescription) events, and these were combined with eligibility information to build person-level files for each site. These person-level files provided the basis for the bivariate and multivariate use and cost analyses.
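The step of accumulating event-level records onto person-level records can be illustrated with a minimal Python sketch. It assumes a hypothetical, highly simplified record layout (a person identifier, an event type coded as medical, inpatient, or drug, and a paid amount) and an eligibility file reduced to months of eligibility per person; the names `Event`, `PersonRecord`, and `build_person_file` are illustrative only, and the actual RTI file structures were considerably more complex. Events with no matching eligibility record are counted rather than silently dropped, since reconciling claims with differing State eligibility systems was one of the difficulties the evaluation faced.

```python
from dataclasses import dataclass, field

# Hypothetical event categories mirroring the three event-level files in the text.
EVENT_TYPES = ("medical", "inpatient", "drug")


@dataclass
class Event:
    """One claim-derived event (hypothetical, simplified layout)."""
    person_id: str
    event_type: str  # one of EVENT_TYPES
    paid_amount: float


@dataclass
class PersonRecord:
    """One row of a person-level analysis file (hypothetical layout)."""
    person_id: str
    months_eligible: int
    counts: dict = field(default_factory=lambda: {t: 0 for t in EVENT_TYPES})
    total_paid: float = 0.0


def build_person_file(eligibility, events):
    """Accumulate event-level records onto person-level records.

    eligibility: dict mapping person_id -> months eligible during the year.
    Returns (dict of PersonRecord keyed by person_id, count of unmatched events).
    """
    people = {pid: PersonRecord(pid, months) for pid, months in eligibility.items()}
    unmatched = 0
    for ev in events:
        if ev.event_type not in EVENT_TYPES:
            raise ValueError(f"unknown event type: {ev.event_type}")
        rec = people.get(ev.person_id)
        if rec is None:
            # Event with no eligibility record: flag it instead of dropping it silently.
            unmatched += 1
            continue
        rec.counts[ev.event_type] += 1
        rec.total_paid += ev.paid_amount
    return people, unmatched


# Illustrative input only; these are not evaluation data.
eligibility = {"A": 12, "B": 6}
events = [
    Event("A", "medical", 30.0),
    Event("A", "drug", 12.5),
    Event("B", "inpatient", 900.0),
    Event("C", "medical", 20.0),  # person C is absent from the eligibility file
]
people, unmatched = build_person_file(eligibility, events)
print(people["A"].counts, people["A"].total_paid, unmatched)
# prints {'medical': 1, 'inpatient': 0, 'drug': 1} 42.5 1
```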

Numerous critical issues had to be resolved in designing and constructing the secondary data analysis files. These included differing sources and formats of the data received; inadequate or incomplete documentation; the need to define events properly from line-item claims; incomplete files resulting from the failure of capitated programs to obtain pseudo or dummy claims for medical encounters; and the complexity of differing State eligibility systems, which had to be understood in order to build person-level files. Secondary data sample sizes were approximately 3,100 individuals per site per year, with oversampling in Missouri to permit analysis by plan type.

Secondary data files from the demonstrations were limited in two important ways. First, because several of the demonstrations were slow to become operational, and because time was needed for claims files to become complete (9 to 12 months was allowed), only first-year demonstration data were available for each site and used in the analysis. Examination of second-year and subsequent data, which might have reflected “learning-curve” effects and more closely approximated the “steady state” cost and utilization experience of the demonstrations, was not possible. Second, the case studies conducted as part of the evaluation documented underreporting of primary care encounters in the demonstrations. The extent of this underreporting was investigated and estimated as part of the utilization analyses.

Case studies

Indepth case studies were conducted approximately annually for all of the demonstration programs. These case studies involved extensive site visits and included interviews with demonstration officials, participating plans and providers, beneficiaries and beneficiary groups, government officials at various levels, and others. Drafts of each year's case studies were reviewed by the officials in charge of the demonstrations, the evaluation staff, and HCFA before completion. The case studies provided detailed information on implementation and operational issues, as well as background information for the utilization, cost, quality, and access analyses. In addition to the site-specific case studies, three annual overviews were prepared. See Health Care Financing Administration (1986), Hurley (1986), and Heinen, Fox, and Anderson (to be published).

Medical record abstraction

The demonstration programs offered providers incentives to alter their practice behavior. Because these incentives could have adverse consequences for enrollees, it was necessary to examine whether the programs had detectable effects on the quality of medical care delivered to enrolled beneficiaries. Samples of adults and children diagnosed and treated for specified tracer conditions of relatively high prevalence in the target populations were selected for onsite (e.g., in-office and in-hospital) medical record abstraction. Adult inpatient, adult outpatient, and child outpatient samples were drawn from claims files for Santa Barbara and Ventura Counties, California, and for Jackson County and St. Louis City, Missouri. Data on the treatment of the tracer conditions were collected and analyzed to assess whether differences in clinical practice were evident. Provider participation in the chart abstraction exceeded 90 percent in three of the four sites and was 73 percent in the fourth. Medical record abstractions were completed for 430 to 1,381 patients, depending on the stratum.5

Administrative cost studies

Studies of the administrative costs of developing and managing the demonstration programs were conducted in every site except Florida. Administrative cost data were compiled separately so that administrative costs could be analyzed apart from service costs. Examining administrative costs makes it possible to estimate the resources required to start and to maintain these new programs, and examining them together with service delivery costs gives a more complete picture of the costs of the demonstrations. To facilitate comparison across program designs, sites were asked to report administrative information in a series of prespecified categories.

Ratesetting studies

Within each site except Florida, a study was also conducted of the ratesetting methodology the State used to pay the risk-sharing intermediary or the participating plans and providers. Data for the ratesetting studies came from a variety of sources, including formal ratesetting documentation from the States; informal working papers used in the ratesetting process; interviews and telephone conversations with State and demonstration officials; and interviews and telephone conversations with ratesetting consultants where they were used. These studies examined the ratesetting methodologies, including the assumptions program administrators used in setting rates initially and, where applicable, in adjusting them later. In two sites, a more detailed actuarial review was also performed. The studies were designed to assess the approaches used and the adequacy of the ratesetting process.

Evaluation findings

Utilization and cost of care

Among the most important issues for the demonstrations was whether they lowered costs. However, the cost-saving question can be interpreted differently depending on whether one takes a Federal, State, or provider viewpoint. By placing plans and providers at risk, Federal and State governments effectively limit their own risk for program costs. Thus, one could argue that appropriately set risk-based payments should automatically produce cost savings for Federal and State governments. If, however, payment rates are set too low and efficiencies in service provision are not attained, providers may view the question of cost savings quite differently. Moreover, Federal and State governments may not realize net savings if lower service delivery costs are offset by higher administrative costs. The economic issues are therefore complex and depend on one's viewpoint. The summary discussion that follows emphasizes the perspective of Federal and State governments rather than that of the service-providing plans.

Service use was analyzed to determine whether the demonstrations changed utilization patterns and which types of services were most affected. Although fee-for-service costs are determined primarily by fee-for-service use rates, the same is not true for the demonstration programs: their service delivery costs to the State Medicaid program depend on the capitation payment rates established, not on actual service use. Extensive claims data were analyzed to understand what impact capitation may have had on reported service use patterns. The demonstrations could change utilization, yet if capitation rates were set inaccurately, there would be no net savings from the demonstration or, conversely, the plans and providers might suffer losses. In fact, service delivery cost savings for the demonstrations were minimal, even though reductions in service use were achieved in the expected areas. Administrative costs were somewhat higher in most sites, as expected given the demonstrations' emphasis on case management and consumer choice.

Utilization results

The individual site analyses indicated a substantial number of significant program effects on utilization6. Program effects were tested by contrasting the pre-year and demonstration-year utilization of a sample of enrollees in each demonstration site with that of nonenrollees from comparison samples; these samples are described in more detail in the Evaluation Final Report, available through NTIS, and in the appendix to Hurley and Freund (1989). The tests were conducted in multivariate models of use that controlled for differences among individual recipients in several characteristics expected to affect use. Because samples from each site were included for both the pre-year and the demonstration year, program effects could also be examined while controlling for changes over time unrelated to the introduction of the demonstration programs. Several measures of use were examined, as shown in Table 3. The impacts shown represent changes in use from the pre-year to the demonstration year in the demonstration site that were statistically different from the corresponding change in the comparison site.
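The contrast described above, the change in the demonstration site minus the corresponding change in the comparison site, is a difference-in-differences design. The minimal Python sketch below, assuming a simple linear probability model and entirely hypothetical data, shows two forms of the contrast: the unadjusted difference of cell means, and a regression with a site-by-period interaction term to which covariates can be added, in the spirit of the multivariate models described. The function names are illustrative; the evaluation's actual covariates, model forms, and significance tests are not reproduced, and the sketch does not compute the percentage figures reported in Table 3.

```python
import numpy as np


def did_from_means(y, demo, post):
    """Unadjusted difference-in-differences of cell means:
    (demo post - demo pre) - (comparison post - comparison pre)."""
    y, demo, post = (np.asarray(a, dtype=float) for a in (y, demo, post))

    def cell_mean(d, p):
        return y[(demo == d) & (post == p)].mean()

    return (cell_mean(1, 1) - cell_mean(1, 0)) - (cell_mean(0, 1) - cell_mean(0, 0))


def did_regression(y, demo, post, covariates=None):
    """OLS of y on site, period, site x period, and optional covariates.
    The site x period coefficient is the (covariate-adjusted) DiD estimate."""
    y, demo, post = (np.asarray(a, dtype=float) for a in (y, demo, post))
    columns = [np.ones_like(y), demo, post, demo * post]
    if covariates is not None:
        # 1-D covariates become one column; 2-D inputs keep their columns.
        columns.append(np.asarray(covariates, dtype=float).reshape(len(y), -1))
    X = np.column_stack(columns)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta[3]


# Hypothetical person-level indicator: 1 if the person had any emergency room visit.
demo = [1] * 8 + [0] * 8            # demonstration site, then comparison site
post = ([0] * 4 + [1] * 4) * 2      # pre-year, then demonstration year, within each site
y = [1, 1, 0, 0, 1, 0, 0, 0,        # demonstration site: 0.50 -> 0.25
     1, 0, 0, 0, 1, 0, 0, 0]        # comparison site:    0.25 -> 0.25
print(did_from_means(y, demo, post))   # -0.25
print(did_regression(y, demo, post))   # approximately -0.25
```

Without covariates the interaction model is saturated, so it reproduces the cell-mean contrast exactly (up to floating-point rounding); adding covariates adjusts the estimate for differences in sample composition.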

Table 3. Utilization measures and summary of results, by demonstration program: Medicaid competition demonstrations.

| Utilization measure | Monterey: child | Monterey: adult | Santa Barbara: child | Santa Barbara: adult | Missouri: child | Missouri: adult | New Jersey: child | New Jersey: adult |
|---|---|---|---|---|---|---|---|---|
| Inpatient | | | | | | | | |
| Percent with stay | — | — | −32.1 | — | −43.7 | — | — | — |
| Days per 1,000 | −17.4 | — | — | — | — | −12.3 | — | — |
| Emergency use | | | | | | | | |
| Percent with visit | −35.6 | −38.6 | −27.5 | −30.6 | −34.9 | −44.1 | −36.7 | −44.3 |
| Visits per user | −11.9 | — | −13.4 | — | — | — | −12.1 | — |
| Physician use | | | | | | | | |
| Percent with visit | −14.7 | −2.0 | −20.1 | −14.6 | −11.5 | — | −1.3 | −9.5 |
| Visits per user | — | — | −13.4 | −34.0 | — | −28.8 | −15.0 | −20.9 |
| Ancillary use | | | | | | | | |
| Percent with visit | −28.8 | — | −38.8 | −12.0 | −34.7 | −23.7 | −51.5 | −41.8 |
| Visits per user | — | — | −17.1 | — | — | −18.8 | — | −20.4 |
| Specialist use | | | | | | | | |
| Percent with visit | −54.7 | −32.5 | −67.2 | −64.7 | — | — | −36.7 | −41.1 |
| Visits per user | — | — | — | — | — | −35.1 | — | — |
| Primary care use | | | | | | | | |
| Percent with visit | — | +8.2 | −35.8 | −34.8 | — | — | −5.7 | −5.5 |
| Visits per user | — | +21.1 | −29.4 | — | −18.5 | — | −7.6 | — |
| Number of providers seen | −12.0 | — | −18.0 | −22.0 | −24.6 | — | −24.7 | −16.9 |

NOTE: Results shown represent statistically significant (p < 0.05) changes in use from the pre-year to the demonstration year in the demonstration site as compared with the corresponding change in the comparison site.

SOURCE: Research Triangle Institute: Nationwide Evaluation of Medicaid Competition Demonstrations Final Report, Volume 1, Dec. 1988.

Monterey County, California

The Monterey program showed several indications of having affected enrollees' patterns of utilization, even though it ultimately went bankrupt and was considered a failure by most analysts. The Monterey model of gatekeeping without financial risk appears to have reduced referrals, improved continuity of care, and limited emergency room use through better coordination of care. However, the program did not reduce utilization or costs enough to compensate for an apparently inappropriate payment methodology for participating providers, and for this and other reasons the demonstration failed financially.

Santa Barbara County, California

The Santa Barbara County program has customarily been viewed as differing from Monterey's in its use of financial risk sharing for the case manager. As such, it may be viewed as intensifying the gatekeeper's responsibility. Case management plus financial risk bearing (the Santa Barbara model) appears to produce more extensive effects than case management alone, although these effects represent rather marginal cost reductions because inpatient use is still not substantially affected. These findings lend some support to the view that Santa Barbara's program has endured more because of its management and relatively conservative payment amounts than because of markedly strong utilization effects. Finally, utilization effects in Santa Barbara remained even after estimates of encounter underreporting were fully factored in.

Jackson County, Missouri

Summary comments about the Missouri program, especially relative to the other programs analyzed, require three caveats. First, this program included two different designs (capitation and fee-for-service case management) and four different provider types: hospital plans, neighborhood health centers, an individual practice association (IPA), and a “Physician Sponsor Plan” (PSP). The aggregated Jackson County findings therefore obscure substantial variation in program effects7. Second, structural differences in the health care delivery systems of the demonstration area (Jackson County) and the comparison area (St. Louis City) are significant and could account for some of the observed differences, although the populations examined were demographically quite similar. Third, the Missouri program capitated participating plans (except the PSP) for virtually all Medicaid services, rather than only for primary care services as in Santa Barbara and Monterey; program effects therefore may not be interpreted equivalently across sites.

The Missouri program showed evidence of inpatient reductions for both children and adults. These effects remain apparent even after taking into account the St. Louis City comparison group, which also saw sharp reductions in inpatient use between the pre-year and the demonstration year. This finding suggests that including inpatient care in the scope of services covered by capitation can reduce use of this costly service. It might further suggest that capitated plans were substituting less costly forms of care for inpatient care, although this was not specifically tested in the evaluation.

As in the California programs, reductions in the probability of an emergency room visit were noted for both child and adult demonstration enrollees. The interplan contrasts indicated that this effect was evident for all plan types except the hospital plans. Because the hospital plans accounted for approximately 40 percent of all enrollees in the aggregate analysis, the effects would have been even more pronounced had the hospital plans been omitted.

Program effects in Missouri for physician services, including specialist and primary care providers, were limited, but this probably reflects the fact that the prepaid plan encounter data were not detailed enough to identify the actual providers of services. These differences suggest that the measures used in other sites do not necessarily capture the effects of the total Missouri program adequately. The dynamics associated with participation by the individual plans are so markedly different that aggregating across the entire demonstration county yields results that are neither easily interpretable nor necessarily meaningful. The reductions in inpatient and emergency room use, however, were notable and remained even after possible underreporting of encounters was taken into account.

New Jersey

Although it was the only voluntary program among the demonstrations studied, the New Jersey program design was broadly comparable to the Santa Barbara primary care capitation design, and the results were very similar to those seen in Santa Barbara. There was no evidence that New Jersey enrollees experienced reduced use of inpatient care, for which case managers were not at financial risk. On the other hand, emergency room use was again sharply lower for both adult and child enrollees. Physician visits were lower in all categories, although this could have resulted from underreporting. Specialty use, however, was lower for adults and children, and it would not have been affected by underreporting because specialists were paid on a fee-for-service basis. The increase in the percent of adults with a primary care visit is surprising in light of the overall reductions in reported physician visits, though it could have resulted from the larger number of adults receiving obstetrical care in the enrollee population. Increased concentration of care, consistent with gatekeeping under primary care case management, is evident for both adults and children in New Jersey.

Cost results

Cost impacts were examined by comparing the capitation payments for a sample of enrollees in each demonstration site with the fee-for-service cost experience of nonenrollees from comparison samples. Tests were conducted in multivariate models of costs that controlled for differences among the sample members on available sociodemographic and other characteristics. The samples from each site for pre-year and demonstration years were pooled to test for changes in costs across years and between sites.

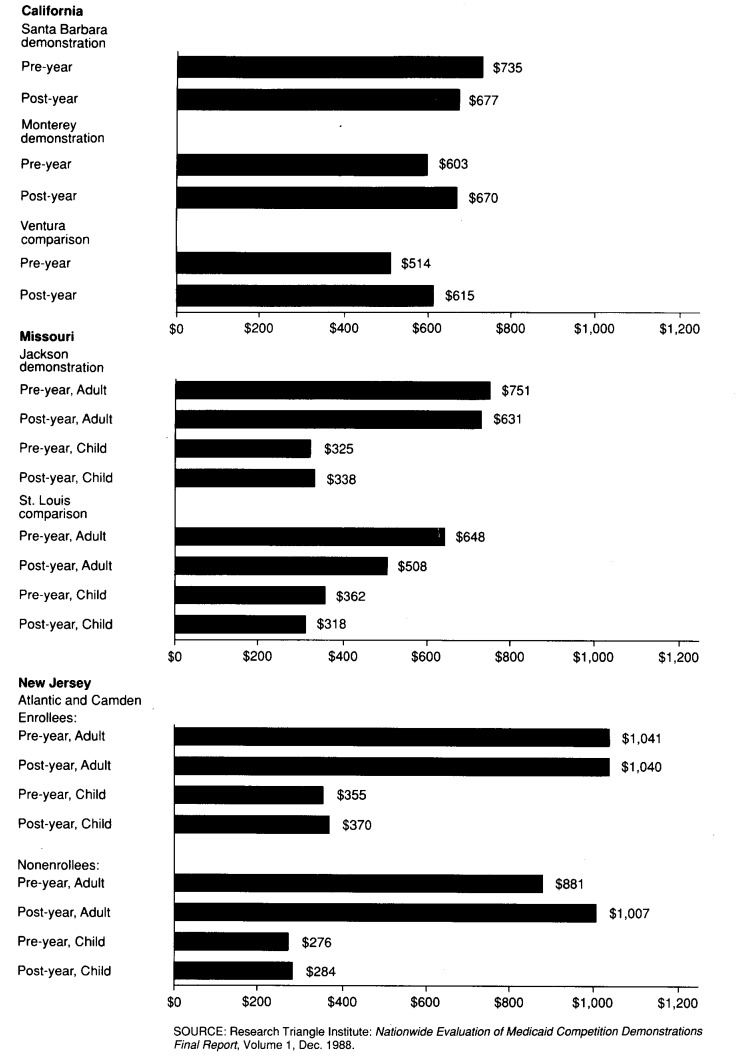

Actual service delivery expenditures for each of the demonstrations are shown in Figure 1. Because of differences in covered benefits, cost sharing, and population characteristics, Medicaid program costs varied substantially across the demonstration sites, and dollar figures across sites are not directly comparable. To control for differences in programs, we compared the demonstration results with those of the selected comparison site. To control for differences in population characteristics, we pooled samples to estimate a regression equation predicting what fee-for-service costs would have been in the absence of the demonstration, given the characteristics of the demonstration sample.

Figure 1. Service delivery expenditures, by demonstration and comparison sites: Medicaid Competition Demonstrations, 1983.

In Monterey, demonstration costs were higher than both prior-year fee-for-service costs and comparison-site costs. In Santa Barbara, capitation costs were lower in the demonstration year, but they remained above the fee-for-service amount for the Ventura County comparison site. In Missouri, costs rose minimally for children and fell minimally for adults with the introduction of the demonstration, but comparison-site costs fell sharply during the same period because of other, unrelated cost-containment measures instituted by the State. This result suggests that, had the changes seen in the comparison site also occurred in the demonstration site, costs would have been lower under continued fee-for-service payment than under capitation. Apparently the ratesetting methodology in Missouri did not fully anticipate the large utilization changes that occurred in the St. Louis City comparison site. Finally, in New Jersey, costs for children changed minimally over time for both enrollees and nonenrollees (demonstration and comparison groups), and costs increased only for the adult comparison group.

Multivariate analyses comparing capitation payments with predicted fee-for-service costs supported the conclusion drawn from the actual service expenditure analyses: the demonstrations did not lower costs during their first demonstration year. Multivariate analysis revealed no cost impact in Santa Barbara or New Jersey. In Monterey and Missouri, on the other hand, demonstration service costs actually appear to have been higher than they would have been in the absence of the demonstration.
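The counterfactual comparison described above can be sketched as follows, assuming ordinary least squares fitted to a single fee-for-service sample. The function names, the single adult/child covariate, and the toy numbers are hypothetical; the evaluation's actual model specification (covariates, functional form, pooling across years and sites) is not given here, so this shows only the general idea of comparing capitation payments with regression-predicted fee-for-service costs.

```python
from typing import List, Sequence


def ols_fit(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Return OLS coefficients [intercept, b1, ...] by solving the normal equations."""
    rows = [[1.0, *x] for x in X]  # prepend an intercept column
    k = len(rows[0])
    # Augmented matrix [X'X | X'y]
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        if p == 0:
            raise ValueError("singular design matrix")
        aug[col] = [v / p for v in aug[col]]
        for r in range(k):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * c for a, c in zip(aug[r], aug[col])]
    return [aug[i][k] for i in range(k)]


def predict(beta: Sequence[float], x: Sequence[float]) -> float:
    return beta[0] + sum(b * xi for b, xi in zip(beta[1:], x))


def capitation_minus_predicted_ffs(ffs_X, ffs_cost, demo_X, demo_capitation) -> float:
    """Mean capitation payment minus mean predicted fee-for-service cost.

    Positive values mean capitation cost more than fee-for-service would be
    predicted to cost for people with the demonstration sample's traits.
    """
    beta = ols_fit(ffs_X, ffs_cost)
    predicted = [predict(beta, x) for x in demo_X]
    return sum(demo_capitation) / len(demo_capitation) - sum(predicted) / len(predicted)


# Hypothetical toy data: one covariate (1 = adult, 0 = child), annual dollars.
ffs_X = [[0], [0], [1], [1]]
ffs_cost = [100.0, 100.0, 150.0, 150.0]
print(capitation_minus_predicted_ffs(ffs_X, ffs_cost, [[0], [1]], [110.0, 160.0]))  # prints 10.0
```

A positive result, as in this toy case, corresponds to the pattern reported for Monterey and Missouri; a result near zero corresponds to the "no cost impact" finding.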

Summary

The demonstrations lowered utilization in a number of ways consistent with the variations in program design, particularly in emergency room use8. Further, self-reported utilization from the Medicaid Consumer Survey was highly consistent with the claims data results. These findings suggest that participating plans and providers changed delivery patterns in response to program incentives. However, because many of the programs paid providers capitation rates based on prior-year fee-for-service levels, these utilization changes did not translate directly into program savings.

Even though service use impacts are apparent, there is clearly no overall pattern of cost impacts across the four demonstration sites. Prepaid case management did not appear to provide large cost savings for the States or for the Federal Government, but it did represent a mechanism that could potentially be used to contain large future cost increases. Capitation does, however, encourage providers to change use patterns; decreases in emergency room use, specialist use, or both were typical. The ratesetting process and the changes occurring in the fee-for-service sector are important determinants of whether prepaid case management will yield cost savings. If rates are set inaccurately, or set only to equal expected fee-for-service experience, costs might not change. The findings reported here suggest that the rates established in several of the demonstration sites in the years examined were not accurate enough to yield the expected overall savings of 5 to 10 percent.

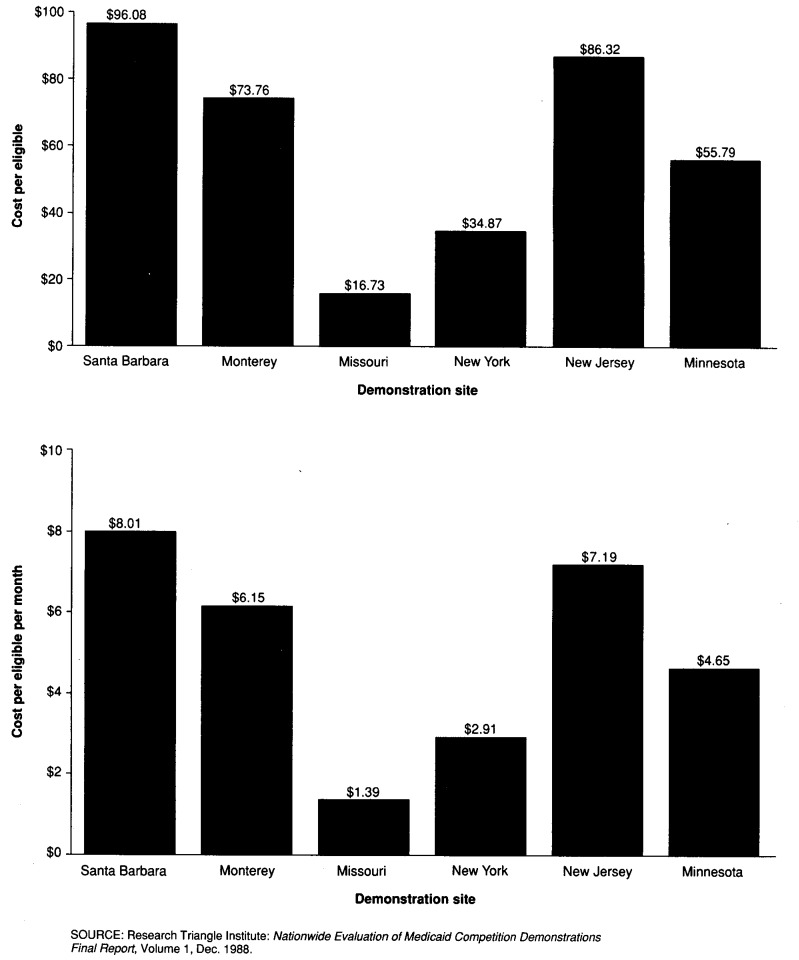

Administrative costs

The foregoing analysis of service delivery expenditures included administrative costs for providers and health plans, because these were included, either explicitly or implicitly, in the reimbursement from the State to the plans. State-level administrative costs relating to the demonstrations were not included. The administrative cost comparisons that follow are better interpreted as reflecting design factors inherent in each demonstration than efficiency factors under operational control.

Prepaid case management attracts the States and the Federal Government because it offers greater control of patient behavior, as well as incentives that encourage cost-conscious provider behavior by rewarding coordination instead of fragmentation. However, operating management control and incentive systems is not costless. Thus, although prepaid case management has potential benefits, it may also require new utilization review systems, new and different enrollment systems for plans that rely on patient choice of case manager, and monitoring functions that identify and financially reward favorable provider behavior. The differing design features of the demonstrations bear directly on administrative costs. Data on the distribution of costs suggest that the shares of costs going to salaries and benefits and to contractual services vary with the type of prepaid case-management program adopted; models that do not contract with existing alternative health plans devote a substantially larger share of overall costs to contract services.

As expected, the cost per eligible enrollee and per eligible enrollee per month varied with the model type adopted, as well as with the number of enrollees, as shown in Figure 2. Establishing the Santa Barbara or Monterey models required higher administrative costs than establishing models that contracted with existing HMOs. Santa Barbara County, for example, had costs of $96 per eligible enrollee and $8 per eligible enrollee per month, and the figures for Monterey County were similar. Missouri and New York, on the other hand, had costs per eligible of $16.73 and $34.87, respectively, in large part because their program designs transferred much of the administrative cost to existing plans.

Figure 2. First-year administrative costs per eligible and per eligible per month, by demonstration sites: Medicaid Competition Demonstrations, 1983.

Ratesetting

Among the most problematic issues for the demonstrations was the process for establishing prepaid capitation payments. Not only were payment rates a key source of dispute in several of the demonstrations, but the data and actuarial methods used to derive the rates also came under scrutiny and criticism from plans and providers who felt the payment rates were inadequate. Rate setting is a particular problem for the Medicaid program because capitation payments have traditionally been based on Medicaid fee-for-service experience, and fee-for-service Medicaid is among the lowest paying third-party payers. A ratesetting methodology that relies on fee-for-service experience might be satisfactory if it were further developed using competitive principles and if payments were set accurately. If payment rates are set too high, the program will not realize savings even when utilization changes. If payment rates are set too low, providers will not be willing to participate or could experience financial difficulty.

Five of the six demonstrations developed a payment rate, paid either to plans and providers or to intermediaries, that included all Medicaid-covered services except a few. Only New Jersey capitated primary care separately and created a different mechanism for paying for inpatient care. Of the five demonstrations with a comprehensive capitation payment, four made provisions for State-sponsored stop-loss coverage. All the stop-loss coverage was at the individual level, with trigger levels ranging from $15,000 to $30,000; no aggregate stop-loss coverage was provided, except during the first 2 years of the Minnesota program. In each case except New Jersey, the capitation payment rates were based on fee-for-service claims experience from a prior year, trended forward in some cases for inflation only and in other cases for inflation and known changes in the service base. All sites set the payment rate as a percent of fee-for-service cost, so that payment would be no more than 95 percent of fee-for-service primary care cost experience. New Jersey and Missouri made special allowances in their capitation for pregnancy-related services.
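As a rough illustration of the arithmetic described above, a single rate cell's payment can be computed by trending a prior-year fee-for-service base forward and applying the percent-of-fee-for-service factor. The function and parameter names are hypothetical, the 95 percent ceiling is taken from the text, and compounding the inflation trend annually is an assumption; the demonstrations' actual trend factors and adjustments varied by site.

```python
def capitation_rate(
    base_ffs_pmpm: float,
    annual_inflation: float,
    years_forward: int,
    service_base_adjustment: float = 1.0,
    pct_of_ffs: float = 0.95,
) -> float:
    """Monthly capitation for one rate cell, derived from prior-year FFS cost.

    base_ffs_pmpm: prior-year fee-for-service cost per member per month.
    annual_inflation: assumed inflation trend per year (0.05 = 5 percent),
        compounded annually (an assumption of this sketch).
    service_base_adjustment: multiplier for known changes in covered services
        (1.0 = inflation-only trending).
    pct_of_ffs: payment as a share of projected FFS cost, at most 95 percent.
    """
    if not 0.0 < pct_of_ffs <= 0.95:
        raise ValueError("payment may not exceed 95 percent of projected FFS cost")
    projected_ffs = (
        base_ffs_pmpm * (1.0 + annual_inflation) ** years_forward * service_base_adjustment
    )
    return projected_ffs * pct_of_ffs


# Hypothetical example: $100 PMPM base, 5 percent trend, 2 years forward.
print(round(capitation_rate(100.0, 0.05, 2), 2))
```

Keeping inflation and service-base changes as separate multipliers mirrors the two trending variants the text describes.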

All the demonstrations made payments monthly, based on monthly determination of Medicaid eligibility. In Santa Barbara, special classes of enrollees were identified and excluded from case management: pregnant women, spend-down eligibles, and long-term care recipients.

In all of the demonstrations except New Jersey, the ratesetting process passed on most of the risk for covered services from the State to another entity. In California, risk was borne by the Health Initiatives, except in Monterey, where some of the risk was ultimately borne by providers who did not receive all the reimbursement they were owed because of the Initiatives' bankruptcy. In Missouri, New York, and Minnesota, contracting HMO plans were put at risk. New Jersey bore the risk for most inpatient services and passed on to providers only the risk for primary care services.

The data used to establish payment rates were based either on statewide fee-for-service Medicaid experience adjusted for area differences or on the experience of the demonstration area itself. The payment rate cells were relatively simple, ranging from a minimum of two cells in Missouri to a maximum of 38 (noninstitutionalized) cells in Minnesota. In four sites, category of eligibility and age were used to differentiate payment rates, and sex was used in two sites. The number of age categories used to distinguish rates ranged from 2 (child and adult) to 11. Four States set their own capitation rates using State actuaries, and two States relied on private consultants. A number of demonstrations made special adjustments reflecting current areas of concern in Medicaid ratesetting. These special adjustments to the capitation payment included interest offset, increased incidence of human immunodeficiency virus infection, administrative costs, coordination of private health insurance benefits, claims incurred but not reported, local county-option paid services (transportation and housekeeping), utilization review activities, graduate medical education, and changes in fees for pharmacy and immunizations.
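A rate-cell structure of this kind amounts to a lookup table keyed by eligibility category, age band, and, in some sites, sex. The sketch below is hypothetical throughout: the age-band breakpoints, the table contents, and the dollar amounts are invented for illustration and do not reproduce any demonstration's actual cells. Falling back to a sex-neutral cell is one assumed way to let sites that did not rate by sex share the same code.

```python
from bisect import bisect_right
from typing import Dict, Optional, Tuple

# Hypothetical age-band breakpoints: <1, 1-5, 6-20, 21-44, 45+ years.
AGE_BREAKS = [1, 6, 21, 45]

# (eligibility category, age band index, sex or None for a sex-neutral cell)
Cell = Tuple[str, int, Optional[str]]


def age_band(age: int) -> int:
    """Index of the age band containing `age` (0 = under 1 year)."""
    return bisect_right(AGE_BREAKS, age)


def monthly_rate(table: Dict[Cell, float], category: str, age: int, sex: str) -> float:
    """Look up the capitation rate, preferring a sex-specific cell if one exists."""
    band = age_band(age)
    for key in ((category, band, sex), (category, band, None)):
        if key in table:
            return table[key]
    raise KeyError(f"no rate cell for {category}, age band {band}, sex {sex}")


# Hypothetical rate table (dollars per member per month).
RATES: Dict[Cell, float] = {
    ("AFDC", 1, None): 60.0,
    ("AFDC", 3, "F"): 120.0,
    ("AFDC", 3, None): 100.0,
}
```

With this table, a 30-year-old AFDC woman maps to the sex-specific cell, while a 30-year-old man falls back to the sex-neutral cell for the same age band.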

In every demonstration, subsequent year ratesetting methods and inflation adjustment factors became a focal point of scrutiny and disagreement between the State and the providers. Year-to-year trend factors ranged from a simple percent increase based on statewide fee-for-service experience to more complex regression adjustment mechanisms based on experience.

Provider selection and selection bias

In all the demonstrations, enrolled beneficiaries were required to select their provider and were normally “locked-in” with that provider for some period of time. Those beneficiaries who failed to select a provider despite repeated requests and attempts at providing information to facilitate the selection were assigned one. A separately reported paper describes the issues and results relating to provider selection or assignment (Hurley and Freund, 1988b).

Studies of selection bias were undertaken in New Jersey and Jackson County, Missouri, using claims data in both sites plus consumer survey data in Jackson County. In New Jersey, no evidence was detected that persons voluntarily enrolling differed significantly from those opting to remain in traditional Medicaid, as measured by their prior use of services. The Jackson County data were examined to determine whether any particular plans were adversely or favorably affected by beneficiaries choosing them over their competitors. Both prior-use techniques and more complex econometric techniques failed to uncover bias in beneficiaries' plan selection in the Jackson County demonstration.
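The prior-use approach compares how much care enrollees and nonenrollees used before the demonstration. One simple way to quantify that comparison, assumed here rather than taken from the evaluation, is a Welch two-sample t statistic on a prior-year utilization measure. The function name is hypothetical, and the more complex econometric techniques mentioned above are not shown.

```python
from math import sqrt
from statistics import mean, variance
from typing import Sequence


def prior_use_t_statistic(enrollees: Sequence[float], nonenrollees: Sequence[float]) -> float:
    """Welch two-sample t statistic for the difference in mean prior-year use.

    Each input holds one prior-year utilization measure per person (for
    example, visits or dollars). Values far from zero suggest the groups
    already differed before enrollment, i.e., possible selection bias.
    """
    if len(enrollees) < 2 or len(nonenrollees) < 2:
        raise ValueError("each group needs at least two observations")
    se = sqrt(
        variance(enrollees) / len(enrollees) + variance(nonenrollees) / len(nonenrollees)
    )
    if se == 0:
        raise ValueError("no variation in either group")
    return (mean(enrollees) - mean(nonenrollees)) / se
```

With large samples, an absolute value above roughly 1.96 would conventionally be read as a significant difference at the 5 percent level; the evaluation's own significance criteria are not stated in this section.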

Medicaid-Medicare dual eligibles

A separate study was undertaken for the Santa Barbara demonstration in order to examine whether the demonstration program affected utilization patterns of the dually eligible populations, and whether there was any indication that the program design contributed to shifting of care from Medicaid-capitated ambulatory care sources to Medicare-reimbursed inpatient sources. This study (Wan, to be published) used demonstration and comparison county data from the Medicaid Consumer Survey, because available secondary data files were not suitable for this analysis. It was concluded that no significant effects on utilization were apparent and that there was no evidence of care-shifting behavior on the part of capitated providers that would result in cost shifting.

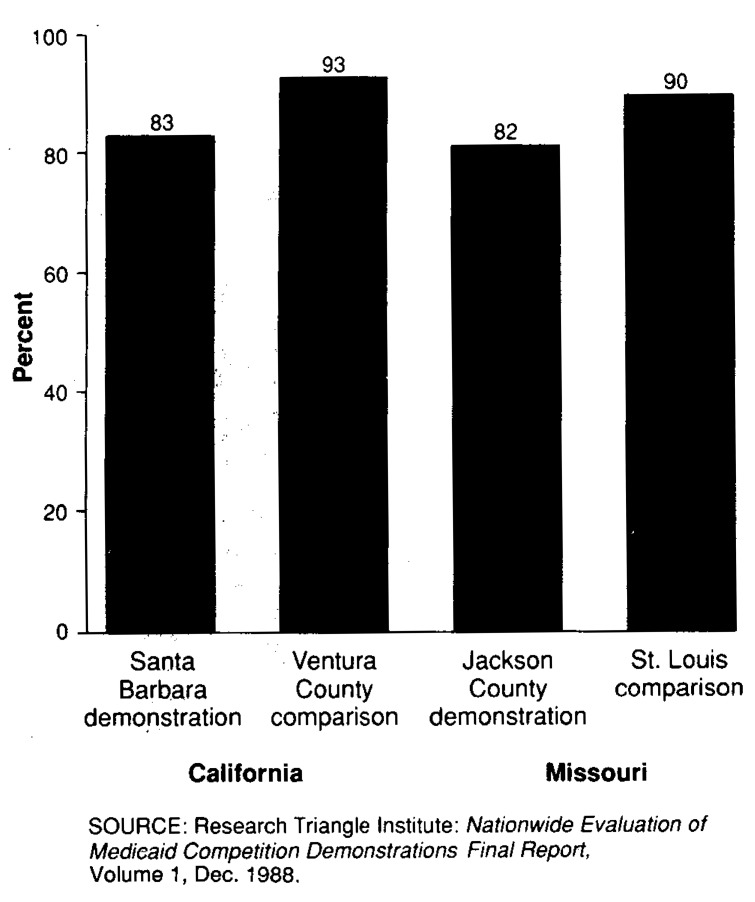

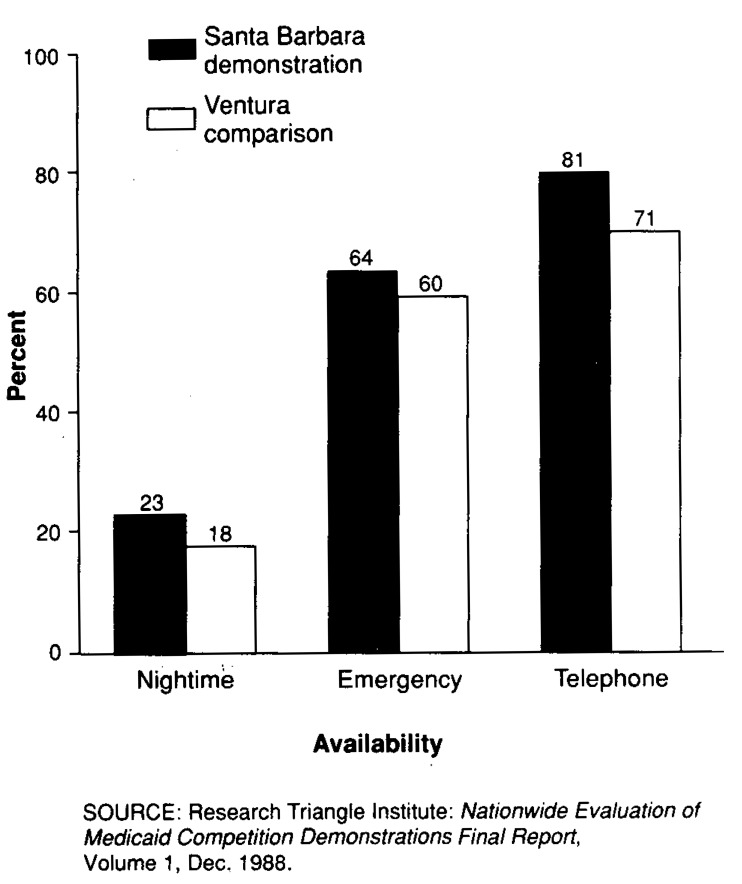

Access and satisfaction

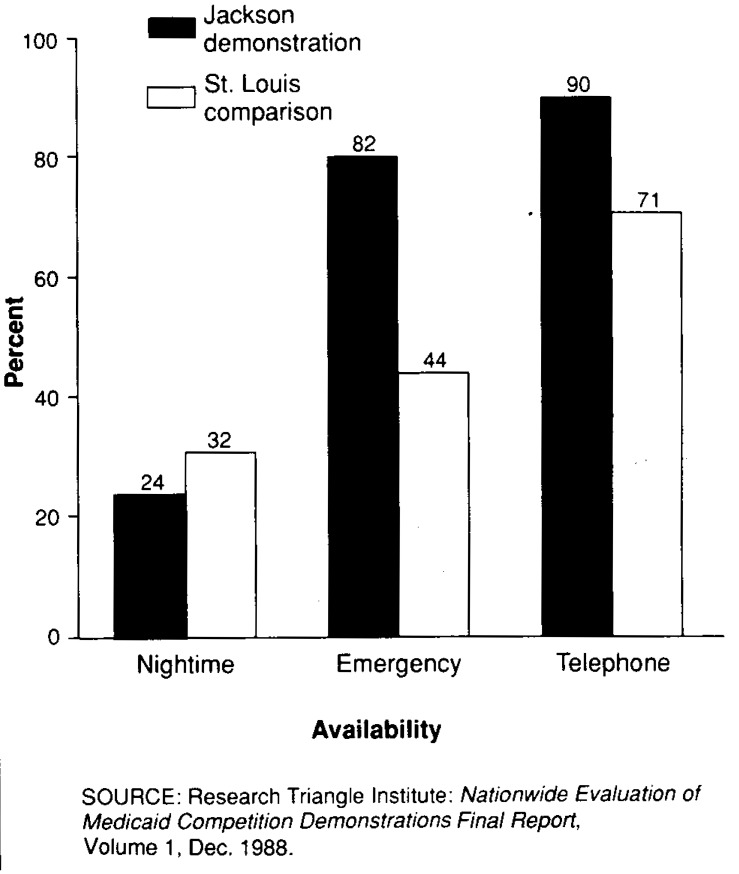

Access to and satisfaction with care were also assessed using the Medicaid Consumer Survey. The survey results indicated that AFDC enrollees' satisfaction with their health care providers was significantly lower in the demonstration sites than in the respective comparison sites. Most respondents at all four sites, however, were satisfied with their care (Figure 3). Reported (perceived) access to care for demonstration enrollees in California and Missouri was generally greater than that of fee-for-service Medicaid eligibles in the comparison sites (Figures 4 and 5). Objective measures of access (waiting times for appointments, travel time, and office wait time), however, were mixed for the Santa Barbara demonstration and equivalent for the demonstration and comparison sites in Missouri.

Figure 3. Percent of respondents expressing satisfaction with their current care, by demonstration and comparison sites in California and Missouri: Medicaid Competition Demonstrations, 1983.

Figure 4. Percent of respondents reporting perception of off-hour availability, by demonstration and comparison sites in California: Medicaid Competition Demonstrations, 1983.

Figure 5. Percent of respondents reporting perception of off-hour availability, by demonstration and comparison sites in Missouri: Medicaid Competition Demonstrations, 1983.

Data from the Medicaid Consumer Survey yielded no significant evidence of program effects on self-reported health status, health habits, or use of preventive services. There was evidence, however, that patients in Santa Barbara County (demonstration) were less likely to seek physician care for a given symptom when compared with patients in Ventura County (comparison).

Quality of care

Information regarding the process and outcomes of medical care in the Medicaid demonstrations was collected from two sources: from the patients themselves through the Medicaid Consumer Survey and from medical record abstraction of the care they received. These two methods of measuring access, satisfaction, and quality of care are complementary. Taken together, they give an overall portrait of the care provided in the demonstrations compared with the fee-for-service comparison sites.

Although the quality of care study included the largest chart abstraction ever undertaken for Medicaid, the design had several intrinsic limitations. Only two of the six demonstrations were subjected to the detailed quality measurements of both the consumer survey and the chart abstraction. These two demonstration sites (Santa Barbara County, California, and Jackson County, Missouri) were quite dissimilar in patient demographics, in the structure of medical care, and in the nature of the capitated intervention. Therefore, differences found between States could be related to structural factors rather than to an effect of the demonstration. In addition, the study used a cross-sectional comparison of similar populations. Because no predemonstration-year data were available for these segments of the evaluation, there was no way to rule out preexisting factors as the source of some of the differences found. However, the demographics (age, race, and income) of the demonstration and comparison populations within each State were quite similar, and it is unlikely that these factors alone had a major effect on the study findings.

The structure of medical care in the California demonstration site (Santa Barbara County) and comparison site (Ventura County) was similar, but the medical care system in the Missouri demonstration site (Jackson County) was substantially more centralized and coordinated than that in the comparison site (St. Louis City). Some of the apparently better quality of care found in Jackson County may have resulted from structural factors, such as greater reliance on institutional providers of care. The study conclusions can be generalized only to populations similar to those studied: women and children insured under AFDC Medicaid programs. These results should not be generalized to other Medicaid populations, such as the disabled or the aged.

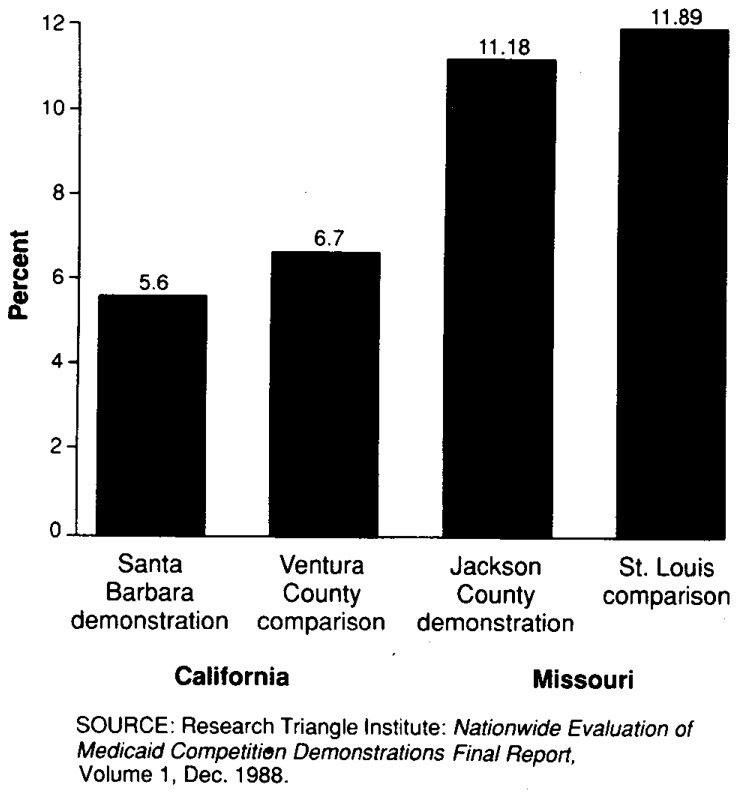

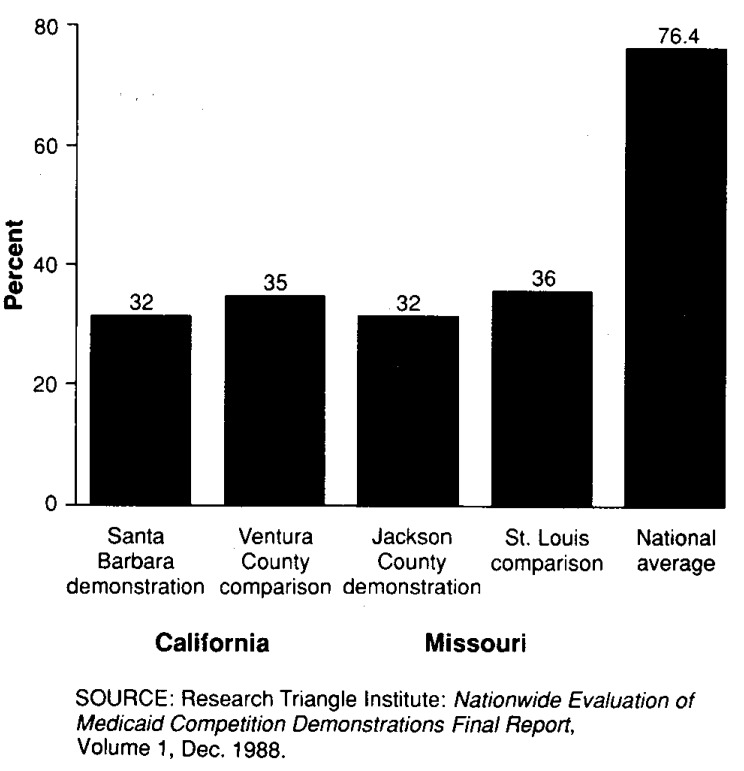

Adult inpatient care

Inpatient care was abstracted in detail for the major health event in the AFDC population, childbirth. No clinically significant program effect was noted in mean birthweight or in the clinically important proportion of infants born at low birthweight (less than 2,500 grams), as shown in Figure 6. No difference was found in rates of cesarean section or of complications of delivery. However, the proportion of low-birthweight infants born at both Missouri sites (comparison and demonstration) was quite high, and the amount and timeliness of prenatal care were inadequate across all sites (Figure 7). Most women did not receive any prenatal care until the second trimester of pregnancy, and the average number of prenatal visits at all sites was fewer than 8, far below the 12 prenatal visits recommended by the American College of Obstetricians and Gynecologists. Although the demonstrations appear to have had no effect, either increasing or decreasing, on the timing and amount of prenatal care, the data point to underutilization of prenatal care by the Medicaid population in general.

Figure 6. Percent of deliveries with birth weights of less than 2,500 grams, by demonstration and comparison sites in California and Missouri: Medicaid Competition Demonstrations, 1983.

Figure 7. Percent of deliveries with prenatal care in first trimester of pregnancy, by demonstration and comparison sites in California and Missouri: Medicaid Competition Demonstrations, 1983.

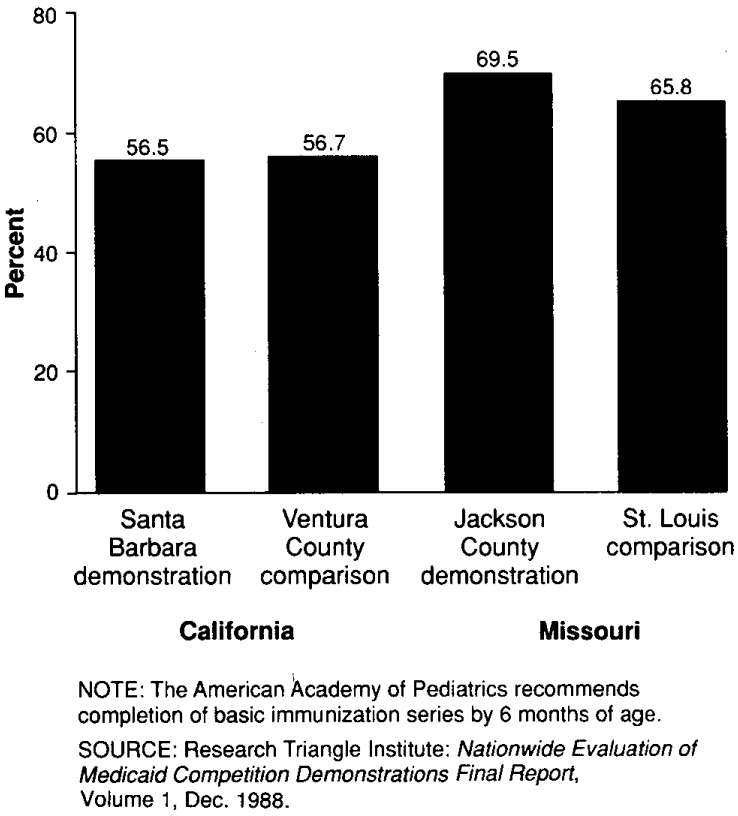

Ambulatory care for children

Review of ambulatory care provided to children presented a mixed picture. The number of immunizations provided to children indicated that those in the demonstration sites were slightly more likely to have completed basic childhood immunizations, but all sites fell far short of ensuring adequate immunization status for all children, as shown in Figure 8. Care for anemia and otitis media was somewhat worse in the Santa Barbara County demonstration site than in Ventura County, but it was equivalent in the Missouri sites.

Figure 8. Percent of children with basic immunization series at 1 year of age, by demonstration and comparison sites in California and Missouri: Medicaid Competition Demonstrations, 1983.

Adult female ambulatory care

Multiple acute and chronic conditions were surveyed in the adult ambulatory chart abstraction. Care was equivalent between demonstration and comparison sites for many conditions. Where differences did exist, care was often worse in the St. Louis City comparison group than in the Jackson County demonstration. The Santa Barbara County demonstration had superior care for vaginitis; was deficient in some aspects of prenatal care and of care for hypertensive patients; and was similar to the Ventura County comparison group for urinary tract infection, pelvic inflammatory disease, and adult preventive care.

Summary

Overall, the effects on the quality of care can be summarized as follows. Enrollees' self-assessed health status did not seem to be adversely affected by the demonstrations, although enrollees were less likely to seek care for a given symptom. Whether this decreased physician-seeking behavior would diminish health status over a longer period of observation cannot be assessed. Chart abstraction showed no differences in the process and outcomes of care for births and delivery complications, although major deficiencies in the provision of prenatal care existed across all sites. The other diagnoses studied presented a mixed picture: equivalent or slightly worse care in the Santa Barbara County demonstration and equivalent or somewhat better care in the Jackson County demonstration.

Problems with quality were identified at all sites. These problems may be generic to the population served and to Medicaid programs in general, regardless of capitation or case management. Although the capitated, case-managed programs did not appear to significantly harm the health status of the individuals enrolled, these broader problems in the provision of medical care to the Medicaid population should continue to be addressed by policymakers and service delivery professionals.

Successful elements of case management

The results of the evaluation provide a comprehensive set of parameters within which the demonstrations can be examined. The lessons in this section draw on results that appeared across multiple demonstration sites, or that have been reported elsewhere in the literature and were supported by our analyses. Conclusions are offered at both the State program level and the plan level; in some cases the issues and lessons are the same at both levels, and in others they differ.

Risk sharing and ratesetting9

One of the key considerations for State program administrators concerns the type and extent of risk sharing to introduce into any prepaid Medicaid initiative. States must consider how to apportion savings and losses between the State and each health plan, whether the health plan is a county intermediary (such as in Monterey or Santa Barbara Counties, California, or Monroe County, New York) or whether the State is contracting directly with service-delivery entities (such as in Missouri and New Jersey). This issue is most prominent when initially considering how to set the capitation rates and, thus, how to share expected savings and losses from the original fee-for-service base.

As described earlier, ratesetting was a contentious issue for almost all the demonstrations. The States had to balance a guarantee of up-front savings against the risk that too few plans or providers would agree to participate. In the demonstrations with intermediary plans (California and New York), ratesetting negotiations were further complicated because they had to occur at two levels. Finally, actual rate decreases over time occurred in several demonstration sites; in one case, the demonstration shut down after such a decrease.

Limitations on risk bearing

Another important issue at the State level is how to share the risk for high-cost cases and, in particular, how the State should provide stop-loss reinsurance to each health plan for catastrophic health care. All States did so; only one site found the arrangement problematic. Most of the participating providers felt the stop-loss arrangements were important enticements to participation. None of the States mandated particular types of risk sharing among providers at the plan level. However, the way plans shared risk with providers, within applicable Federal regulations, is an important determinant of each plan's viability and cost-saving potential. States must decide to what degree they will mandate risk arrangements and to what degree they will leave these arrangements to the plans' discretion.
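Individual-level stop-loss coverage of the kind described under ratesetting can be sketched as a per-enrollee calculation. Two assumptions not stated in the source: costs are accumulated per enrollee over a year, and the State reimburses a fixed share (100 percent by default) of each enrollee's costs above the trigger. The $15,000 to $30,000 trigger range comes from the text; the function name and sample figures are hypothetical.

```python
from typing import Dict, Tuple


def stop_loss_split(
    annual_cost_by_enrollee: Dict[str, float],
    trigger: float,
    state_share_above_trigger: float = 1.0,
) -> Tuple[float, float]:
    """Split total covered costs between the plan and State stop-loss coverage.

    Individual level only: each enrollee's cost above `trigger` is reimbursed
    at `state_share_above_trigger`; there is no aggregate (plan-wide) limit.
    Returns (plan_retained, state_reimbursed).
    """
    if trigger < 0 or not 0.0 <= state_share_above_trigger <= 1.0:
        raise ValueError("invalid trigger or share")
    state = sum(
        state_share_above_trigger * max(0.0, cost - trigger)
        for cost in annual_cost_by_enrollee.values()
    )
    total = sum(annual_cost_by_enrollee.values())
    return total - state, state


# Hypothetical annual costs for three enrollees, with a $30,000 trigger.
print(stop_loss_split({"a": 10000.0, "b": 40000.0, "c": 25000.0}, 30000.0))
```

Lowering the trigger toward $15,000 shifts more catastrophic-case risk to the State, which is one reason participating providers valued these arrangements.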

Expanded provider participation

Prepaid contracting as a means for States to obtain an expanded group of health care plans and providers for Medicaid beneficiaries has been another important issue. Ideally, provider networks should be well dispersed and should provide an expanded set of options to enrollees for a price the State can afford. Many States, however, have found that increasing the number of providers beyond those traditionally associated with Medicaid has been difficult. Successful strategies included one or more of the following:

Recruiting newer, younger groups of physicians who did not already have substantial private practices and were therefore interested in new business.

Planning convincing media presentations that showed how judicious management of capitation funds could be advantageous to the primary care physician in comparison with traditional fee-for-service Medicaid.

Developing advisory panels of well-known community physician leaders to encourage participation.

Designing the program so that any physician wishing to have any part of the Medicaid business would have to participate.

Eligibility and enrollment

After the States have established contracts with the plans or providers and rates have been agreed on, the next critical issue involves establishing a system to track program eligibility and enrollment in each of the participating plans. The linkage between eligibility and enrollment is critical, and it was a problem in each of the demonstration States. To pay capitation correctly, States must know at any given time who is enrolled in which plan. Similarly, each plan needs an accurate list of current enrollees so that it can protect itself against retroactive determinations of Medicaid ineligibility. To deal with this problem, States must be able to integrate computer programs relating to eligibility (often maintained by departments of welfare or social services) with computer programs tracking enrollment (usually maintained by the department of health or other administering agency). These programs must be thoroughly debugged in advance, and a realistic planning horizon must be established to do so (Freund, 1986).
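The eligibility-enrollment linkage can be sketched as a monthly match between the two files, since capitation was paid monthly on the basis of monthly eligibility determinations. This is a simplified illustration under stated assumptions: beneficiaries carry a shared ID in both systems, a single flat monthly rate applies (rather than rate cells), and the function and field names are hypothetical. The two mismatch sets correspond to the problems described above: plans carrying enrollees whose eligibility has lapsed, and eligible beneficiaries not yet enrolled in any plan.

```python
from typing import Dict, List, Set, Tuple


def reconcile_month(
    eligible: Set[str],
    enrollment: Dict[str, str],
    monthly_rate: float,
) -> Tuple[Dict[str, float], Set[str], Set[str]]:
    """Link one month's eligibility file to the plan enrollment file.

    eligible: beneficiary IDs determined Medicaid-eligible for the month.
    enrollment: beneficiary ID -> plan ID from the enrollment system.
    Returns (capitation owed per plan, enrolled-but-ineligible IDs,
    eligible-but-unenrolled IDs).
    """
    members: Dict[str, List[str]] = {}
    for person, plan in enrollment.items():
        if person in eligible:  # pay only for people eligible this month
            members.setdefault(plan, []).append(person)
    owed = {plan: len(ids) * monthly_rate for plan, ids in members.items()}
    enrolled_not_eligible = set(enrollment) - eligible
    eligible_not_enrolled = eligible - set(enrollment)
    return owed, enrolled_not_eligible, eligible_not_enrolled
```

In practice the eligibility and enrollment files would come from different agencies, so most of the real work lies in agreeing on identifiers and update timing rather than in the match itself.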

Quality assessment systems

States are also responsible for ensuring the quality of health care provided to Medicaid beneficiaries. None of the demonstration programs had formalized quality assurance programs during the first several years of the demonstration, perhaps an indication that cost containment took priority over quality. Although methodologies for monitoring quality are admittedly limited, more can be done: surveillance and utilization review systems can be modified, plans can be required to report quality-related data, and outside audits can be carried out. Several States are now undertaking such quality of care programs.

Management information systems

To monitor financial viability and enrollee quality of care, a timely, complete, and ongoing management information system (MIS) needs to be in place at both the plan level and the Medicaid agency level. As an example of the importance of an MIS, one entire demonstration failed largely because debts incurred but not reported were not being tracked. All of the demonstrations and plans have struggled to establish and operate an adequate MIS. IPA-type plans that pay physicians on a fee-for-service basis need an MIS that operates at the encounter level, whereas aggregate-level statistics may suffice for other plan types. Plans should not begin operation without an MIS, and the long lead times needed to develop such systems (often 1 year or more) must be recognized. States may wish to subsidize the development of such systems, both to protect their own financial interests and to help ensure compatibility with the State system.
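One common actuarial way to track liabilities that have been incurred but not yet reported, not described in the source and shown here only as an illustration, is the completion-factor method: claims paid so far for each month of service are scaled up by the share of claims historically paid after the same lag. The completion factors and names below are hypothetical; a real plan would estimate them from its own claims-lag history.

```python
from typing import Dict, List, Tuple


def ibnr_reserve(
    paid_by_month: List[Tuple[float, int]],
    completion_factor_by_lag: Dict[int, float],
) -> float:
    """Estimate claims incurred but not reported (IBNR) with completion factors.

    paid_by_month: (claims paid to date, months elapsed since incurral)
        for each month in which services were incurred.
    completion_factor_by_lag: share of ultimate claims expected to have been
        paid after a given number of months (1.0 = fully paid).
    """
    reserve = 0.0
    for paid, lag in paid_by_month:
        factor = completion_factor_by_lag.get(lag, 1.0)  # older months assumed complete
        if not 0.0 < factor <= 1.0:
            raise ValueError(f"bad completion factor for lag {lag}")
        ultimate = paid / factor  # projected total cost for that month
        reserve += ultimate - paid
    return reserve


# Hypothetical: the current month is 50 percent complete, last month 80 percent.
print(ibnr_reserve([(50.0, 0), (80.0, 1), (100.0, 2)], {0: 0.5, 1: 0.8, 2: 1.0}))
```

The estimate is only as good as the lag history behind it, which is another reason a plan needs a working MIS before it begins operation.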

Monitoring of service use

All demonstrations began with the assumption that submission of dummy claims, or pseudo-claims, by the plans would be an integral part of reporting to the States. Intended uses for such encounter data included ratesetting, assessment of under- or overutilization, and triggering of reinsurance. Complying with this reporting requirement proved expensive and frustrating for the plans, as well as for the State analysts who were to receive the data. Significant problems centered on noncompliance with encounter reporting at the provider level and on incompatible data organization methods and computer systems. Alternative forms of utilization review, or reporting of aggregate statistics, should perhaps be implemented instead, because failing to monitor utilization patterns in a population at risk for the consequences of underservice may have significant health effects. Given the difficulties of providing prenatal care to the AFDC population, surveillance of the provision of this (and other) care is important. Aggregate data must, however, include information on the health status of the population so that the potential for selection bias can be explored.
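As an example of the kind of aggregate statistic that could support surveillance of prenatal care, per-delivery visit records can be reduced to the two indicators used in the quality of care findings: the percent of deliveries with prenatal care beginning in the first trimester and the average number of prenatal visits, compared against the 12 recommended visits cited in those findings. The record layout, the week-13 cutoff for the first trimester, and the names are assumptions for illustration.

```python
from typing import Dict, List


def prenatal_care_summary(
    visit_weeks_by_delivery: Dict[str, List[int]],
    first_trimester_last_week: int = 13,
    recommended_visits: int = 12,
) -> Dict[str, float]:
    """Aggregate prenatal-care indicators from encounter-level visit records.

    visit_weeks_by_delivery: delivery ID -> gestational week of each
    prenatal visit reported in encounter data (empty list = no visits).
    """
    n = len(visit_weeks_by_delivery)
    if n == 0:
        raise ValueError("no deliveries")
    early = sum(
        1
        for weeks in visit_weeks_by_delivery.values()
        if weeks and min(weeks) <= first_trimester_last_week
    )
    mean_visits = sum(len(w) for w in visit_weeks_by_delivery.values()) / n
    return {
        "pct_first_trimester": 100.0 * early / n,
        "mean_visits": mean_visits,
        "visit_shortfall": max(0.0, recommended_visits - mean_visits),
    }
```

Because these indicators depend on complete encounter reporting, underreporting would bias both of them downward, which is the same limitation noted for the demonstrations' encounter data.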

Conclusion

The evaluation of the demonstrations provided a detailed understanding of the implementation and operation of the programs, as well as a comprehensive assessment of program features of case management, capitation, limitation on freedom of choice, and competition on several outcome measures across several program designs. Although much has changed in the Medicaid program since these demonstrations were conceived in the early 1980s, a number of important findings with policy and managerial significance were revealed.

Primary care case-management responsibility produced significant effects on service delivery patterns, in particular emergency room use, for virtually all of the programs. The findings are particularly notable because they were detected in the first operational year of each demonstration. Capitation payments appear to have intensified utilization effects resulting from case management.

Despite the reductions in utilization, however, first-year program expenditures were not substantially reduced for any of the demonstrations. This lack of cost savings in the first demonstration year is primarily the result of very limited reductions in inpatient use and of the basing of capitation rates on prior-year use levels. In some sites, use of capitation appears to have prevented the demonstrations from benefiting from secular declines in costs associated with other program reforms or changing market conditions. Conversely, however, capitation would have provided protection against large cost increases in the fee-for-service sector had these occurred during the same period. In sum, capitation provides the opportunity to develop greater predictability and control in program expenditures and provides a buffer against rapid cost changes in the future.

The limitation on freedom of choice of provider that accompanied case management and capitation did not have an adverse effect on quality of care, as measured through in-office medical record abstraction of tracer conditions indicative of process and outcome. Overall levels of quality indicators for both demonstration enrollees and fee-for-service comparison group Medicaid beneficiaries were, however, disappointing relative to national standards of care. The demonstration programs seem to result in slightly lower levels of beneficiary satisfaction, but availability of care is as good in the demonstration counties as in the comparison counties with traditional fee-for-service Medicaid, if not better.

Although some enrollees in every demonstration failed to choose a plan or provider and therefore had to be “auto-assigned,” the demonstrations encountered no insurmountable problems in achieving full enrollment of Medicaid beneficiaries. It appears practical to expect that the Medicaid population will enroll in prepaid plans.

The implementation and operational experience of the programs was mixed. Two of the demonstrations failed after implementation, and one essentially never progressed past the planning stage. The successful program designs were feasible but challenging to carry out and, in some instances, involved highly contentious negotiations. More than any other issue, rate setting was the crucial problem that program developers had to resolve to initiate and maintain their demonstrations. Administrative costs over the first 2 years of the demonstrations varied widely, reflecting differences in program designs and in the manner in which the programs were organized and staffed.

The Medicaid population is often reported to have difficulty gaining access to what appears to be a fragmented fee-for-service system. The results from this evaluation suggest that prepaid case management holds some promise to better organize caregiving, to reduce unnecessary service use, and to match the quality of care obtained in traditional fee-for-service Medicaid.

In conclusion, these programs represent workable and reasonable reforms to traditional fee-for-service Medicaid. They offer enhanced predictability and control of program expenditures and, perhaps, opportunities for modest cost savings. Because they can provide these benefits without significant adverse effects on quality, access, or satisfaction, they merit serious consideration by both policymakers and program managers.

Acknowledgments

The authors wish to thank their colleagues and collaborators in the Nationwide Evaluation of Medicaid Competition Demonstrations for their assistance with early versions of this article. Additionally, the consistent help and cooperation of Spike Duzor, Project Officer, Health Care Financing Administration (HCFA), and his colleagues in the Office of Demonstrations and Evaluations are gratefully acknowledged.

The Nationwide Evaluation of Medicaid Competition Demonstrations was funded by the Health Care Financing Administration (Contract No. 500-83-0050).

Footnotes

The evaluation was initiated in 1983 by Research Triangle Institute (RTI) under contract with HCFA. Subcontractors to RTI for the evaluation included the University of North Carolina at Chapel Hill; Medical College of Virginia; New Directions for Policy; Lewin/ICF; and Tillinghast, Nelson, and Warren, consulting actuaries.

For an overview of the demonstration program origins and designs, see Freund and Neuschler (1986) and Freund and Hurley (1987).

For detailed discussions, see Hurley (1986); Anderson and Fox (1987); and Heinen, Fox, and Anderson (to be published).

Reprint requests: John E. Paul, Ph.D., Center for Policy Studies, Research Triangle Institute, P. O. Box 12194, Research Triangle Park, North Carolina 27709.

The evaluation activities and design, the analytical approach, and the findings are described in detail elsewhere in the published literature and in an eight-volume set, Nationwide Evaluation of Medicaid Competition Demonstrations Final Report, and its appendixes, which are available from the National Technical Information Service (NTIS), 5285 Royal Road, Springfield, Va. 22161.

See Weis and Carey (1988) for additional details regarding the approach taken in the quality of care component of the evaluation.

See Freund et al. (1989); Hurley, Paul, and Freund (1989); and Hurley and Freund (1989) for details on differing aspects of effects on utilization as a result of the demonstrations.

An analysis examining interplan differences in Missouri that disaggregates program effects by plan type is presented in Volume 3 and Appendix 3-3 of the Evaluation Final Report cited previously.

See Freund (1986) for an earlier discussion of operational issues.

References

- Anderson MD, Fox PD. Lessons learned from Medicaid managed care approaches. Health Affairs. 1987 Spring;6(1):71–86. doi: 10.1377/hlthaff.6.1.71.

- Freund DA. Private delivery of Medicaid services: Lessons for administrators, providers, and policy makers. Journal of Ambulatory Care Management. 1986 May;9(2):54–65. doi: 10.1097/00004479-198605000-00006.

- Freund DA. Competitive health plans and alternative payment arrangements for physicians in the United States: Public sector examples. Health Policy. 1987;7:163–173. doi: 10.1016/0168-8510(87)90029-7.

- Freund DA, Hurley RE. Managed care in Medicaid: Selected issues in program origins, design, and research. Annual Review of Public Health. 1987;8:137–163. doi: 10.1146/annurev.pu.08.050187.001033.

- Freund DA, Hurley RE, Paul JE, et al. Interim findings from the Medicaid Competition Demonstrations. In: Scheffler RM, Rossiter LF, editors. Advances in Health Economics Research. Vol. 10. Greenwich, Conn.: JAI Press, Inc.; 1989.

- Freund DA, Neuschler E. Overview of Medicaid capitation and case-management initiatives. Health Care Financing Review, 1986 Annual Supplement. HCFA Pub. No. 03225. Office of Research and Demonstrations, Health Care Financing Administration. Washington: U.S. Government Printing Office; Dec. 1986.

- Health Care Financing Administration. Nationwide Evaluation of Medicaid Competition Demonstrations. Office of Research and Demonstrations Extramural Report; Sept. 1986. HCFA Pub. No. 03236.

- Heinen L, Fox PD, Anderson MD. Findings from the Medicaid Competition Demonstrations: A guide for States. Health Care Financing Review. 1990 Summer; to be published.

- Hurley RE. Status of the Medicaid competition demonstrations. Health Care Financing Review. Vol. 8, No. 2. HCFA Pub. No. 03226. Office of Research and Demonstrations, Health Care Financing Administration. Washington: U.S. Government Printing Office; Winter 1986.

- Hurley RE, Freund DA. A typology of Medicaid managed care. Medical Care. 1988a Aug;26(8):764–774. doi: 10.1097/00005650-198808000-00003.

- Hurley RE, Freund DA. Emergency room use and primary care case management: Evidence from four Medicaid demonstration programs. American Journal of Public Health. 1989 Jul;79(7):843–846. doi: 10.2105/ajph.79.7.843.

- Hurley RE, Freund DA. Provider selection or assignment in a mandatory case management program: Determinants and implications for utilization. Inquiry. 1988b Fall;25(3):402–440.

- Hurley RE, Freund DA, Taylor DE. Gatekeeping the emergency room: Impact of a Medicaid primary care case management program. Health Care Management Review. 1989 Spring;14(2):63–71. doi: 10.1097/00004010-198901420-00008.

- Hurley RE, Paul JE, Freund DA. Going into gatekeeping: An empirical assessment. Quality Review Bulletin. 1989 Oct. doi: 10.1016/s0097-5990(16)30308-6.

- Wan TTH. The effect of managed care on health services use by dually eligible elders. Medical Care. To be published. doi: 10.1097/00005650-198911000-00001.

- Weis KA, Carey TS. Quality of Care Component: National Medicaid Competition Demonstration Evaluation. In: Group Health Proceedings: 1987. Washington, D.C.: Group Health Association of America; 1988.