Abstract

In this article we describe and evaluate quality monitoring and improvement activities conducted by Massachusetts Medicaid for its primary care case management program, the Primary Care Clinician (PCC) plan. Emulating managed care organization (MCO) practices, the State uses claims to analyze and report service delivery rates at the practice level and then works directly with individual medical practices on quality improvement (QI) activities. We discuss the value and limitations of claims-based data for profiling, report provider perspectives, and identify challenges in evaluating the impact of these activities. We also provide lessons learned that may be useful to other States considering similar activities.

Introduction

Quality monitoring and improvement are important activities in MCOs, intended to promote medical care consistent with clinical guidelines or to address consumer satisfaction issues. While it is common for MCOs to use a variety of approaches to quality monitoring and improvement, the use of these techniques in a Medicaid primary care case management program is unusual. Since 1995, the Massachusetts Medicaid Program, MassHealth (administered by the Massachusetts Division of Medical Assistance [DMA]), has implemented policies and procedures in its PCC plan that emulate MCO quality monitoring and improvement practices, including profiling individual primary care practices. Given the problems States have had attracting or retaining MCOs in the Medicaid market, it is valuable to understand what may be transferable from this program to other States, as well as the challenges and limitations associated with the Massachusetts approach. In this article we summarize issues regarding the use of physician profiling as a quality monitoring and quality improvement (QM/QI) technique, describe key aspects of the MassHealth primary care profiling activities, and report on provider perspectives. We also discuss the limitations associated with the use of claims-based data for profiling and the implications for the appropriate use of these data.

Background

Issues in QM/QI

The literature regarding QM/QI in health care focuses on several themes, including defining aspects of quality, the relative value and availability of process and outcome measures of quality, and approaches to changing physician behavior, which is the crux of improving quality of care. The Institute of Medicine defines quality of care as “…the degree to which health services for individuals and populations increase the likelihood of desired health outcomes and are consistent with current professional knowledge.” Deficient aspects of care are typically the reason for monitoring physicians' practice patterns. Three classes of process measures are typically reported in the medical literature: (1) patients not receiving beneficial care, (2) receipt of unnecessary treatments, and (3) poorly performed interventions (Becher and Chassin, 2001).

Quality of care can be measured either by looking at the process of care (i.e., the delivery of recommended procedures) or at health outcomes (i.e., morbidity and mortality rates). While positive health outcomes are the ultimate goal of care, outcome measures are difficult to develop and interpret, and can be affected by exogenous factors such as the short eligibility periods common in Medicaid programs. Process measures may be more useful and attainable for several reasons. Process measures clearly indicate which processes a clinician did or did not follow, in realms in which clinicians feel accountable. The information from process measures is “actionable,” i.e., the provider can do something to improve processes of care (Rubin, Pronovost, and Diette, 2001). Case-mix adjustment, which can be challenging, is less relevant for process measures than for outcome measures. Indeed, differences in the delivery of preventive or screening services by patient characteristics (e.g., age, sex, or comorbidities) are relevant information that should not be adjusted out of a process analysis. While there may be some technical challenges in defining the eligible population for process measurement, they are not as great as those of the case-mix adjustment necessary for meaningful health outcome measurement (Mant, 2001). Furthermore, measurable processes of care occur more frequently (e.g., annual rates of immunization) than the specific health outcomes that might result from them (e.g., cases of whooping cough resulting from missed immunizations), and they are “immediate, controllable, and rarely confounded by other factors” (Eddy, 1998).

Improving quality of care boils down to changing physician behavior. In a summary of the literature on changing physician behavior, Bauchner, Simpson, and Chessare (2001) identify effective and ineffective strategies. They report little or no impact on physician behavior from didactic continuing medical education presentations, passive distribution of information (e.g., mailings), or audit and feedback approaches. However, small group discussions or case studies, manual and electronic reminder systems, educational outreach, and a combination of auditing and reporting with specific recommendations and financial incentives have been effective in changing behavior associated with improved quality of care. Clemmer et al. (1998) offer recommendations for promoting cooperation in health care quality improvement, including developing a shared purpose; creating an open, safe environment; encouraging diverse viewpoints; and negotiating agreements.

Physician Profiling

Physician profiling is one means of generating information about processes of care, generally by linking claims data to enrollment or eligibility data to calculate rates of service delivery. For example, claims may be used to evaluate mammography rates overall and for subgroups defined by age, race, or ethnicity. Claims are readily available for systematically evaluating patterns of care, can support analysis of treatment patterns for an entire enrolled population, and are much less costly than medical record reviews. However, claims also have distinct limitations in the types of treatment that can be observed, as well as problems with data accuracy (Hofer et al., 1999; Richman and Lancaster, 2000).
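A minimal sketch of this kind of rate calculation in Python. Everything in it is hypothetical: the `Enrollee` and `Claim` records, the `MAMMO` label standing in for real billing codes, the eligibility rule (women aged 40 to 64), and the `age_band` subgroups are illustrative assumptions, not MassHealth's specifications. It shows only that the numerator comes from claims, the denominator comes from enrollment data, and subgroup rates are the same calculation restricted to a subset.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical label standing in for the actual mammography billing codes.
MAMMOGRAPHY = "MAMMO"


@dataclass(frozen=True)
class Enrollee:
    member_id: str
    sex: str
    age: int


@dataclass(frozen=True)
class Claim:
    member_id: str
    service: str


def age_band(age: int) -> str:
    """Hypothetical subgroup definition used for the breakdown."""
    return "40-49" if age < 50 else "50-64"


def mammography_rates(enrollees, claims):
    """Return {group: (numerator, denominator, rate)} overall and by age band."""
    # Numerator source: members with at least one mammography claim.
    screened = {c.member_id for c in claims if c.service == MAMMOGRAPHY}
    counts = defaultdict(lambda: [0, 0])  # group -> [numerator, denominator]
    for e in enrollees:
        # Denominator: the assumed eligible population (women aged 40-64).
        if e.sex != "F" or not 40 <= e.age <= 64:
            continue
        for group in ("overall", age_band(e.age)):
            counts[group][1] += 1
            if e.member_id in screened:
                counts[group][0] += 1
    return {g: (n, d, n / d) for g, (n, d) in counts.items()}


enrollees = [
    Enrollee("a", "F", 45), Enrollee("b", "F", 52), Enrollee("c", "F", 58),
    Enrollee("d", "M", 55), Enrollee("e", "F", 30),
]
claims = [Claim("a", MAMMOGRAPHY), Claim("b", MAMMOGRAPHY), Claim("c", "OFFICE_VISIT")]
print(mammography_rates(enrollees, claims))
```

In the example, three women fall in the assumed eligible range and two have a mammography claim, so the overall rate is two of three; the man and the 30-year-old never enter the denominator.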

Rates generated from claims are subject to error in both the numerator (which may be too small if services delivered are not captured) and the denominator (which may be too large or too small, depending on how eligible patients are specified for inclusion). These inaccuracies can lead to reported rates of compliance with recommended treatment guidelines that are lower than the actual rates. As a result, profiles may provide general information about trends, about providers whose performance is exceptionally strong or exceptionally weak, or about population groups that are systematically undertreated. However, profiles do not always provide precise information about the performance of individual practices or physicians, because claims-based systems do not always capture all of the services delivered.

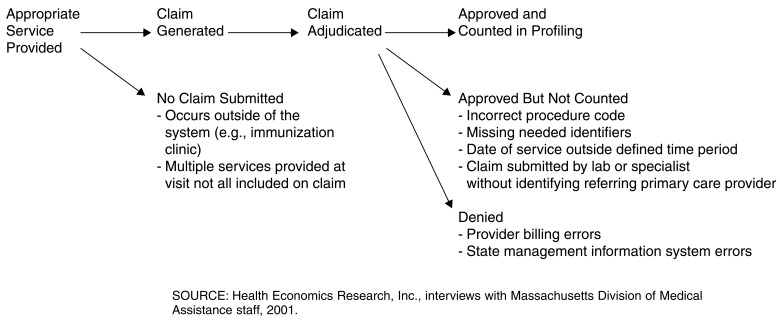

In Figure 1, we show the many ways a service actually delivered could be missed in a claims-based profiling system, i.e., potential problems with the numerator in generating the profiles. First, an appropriate service could have been delivered outside the system. For example, immunizations are often provided at public clinics, and hence no claim is submitted. Even if the physician is appropriately evaluating whether patients have received necessary services (e.g., by conducting a medical assessment or record reviews), there is no way to indicate this through a claims-based system. Alternatively, there may be no claim when a service was actually provided if the physician referenced only one service on a claim for a visit in which multiple services were delivered. Even when an actual claim for the specified service is generated, there are several ways it may not make it into the rate calculation. Claims submitted for appropriate services may be denied in the adjudication process due to provider billing errors or to problems with the payer's management information system. Claims which make it through the adjudication process may still not make it into the rate calculation if the provider used the wrong billing code, left out or incorrectly entered needed identifiers, provided the service outside the cutoff dates for the rate calculation, or if the claim was submitted by a lab or specialist without including information about the referring primary care provider (if the system uses provider identification numbers to link claims rather than patient identification numbers).

Figure 1. Potential Sources of Error in Claims-Based Profiling.
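The claims-level filters in Figure 1 can be mimicked with a small sketch. The record layout, the `PAP` service label, the practice identifier `P1`, and the `link_by` switch are hypothetical; each `continue` corresponds to one way a delivered service drops out of the numerator. In the example, all four panel members received the service, yet the computed rate is 50 percent when claims are linked by patient and 25 percent when they must carry the referring PCC's identifier.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Claim:
    member_id: str
    service: str
    service_date: date
    status: str                   # "paid" or "denied" after adjudication
    referring_pcc: Optional[str]  # None if a lab or specialist omitted it


def counted_members(claims, service, pcc_id, panel, start, end, link_by="patient"):
    """Members whose claim for `service` survives each (hypothetical) filter."""
    hits = set()
    for c in claims:
        if c.service != service:
            continue  # wrong or missing billing code
        if c.status != "paid":
            continue  # denied in adjudication
        if not start <= c.service_date <= end:
            continue  # outside the cutoff dates for the rate calculation
        if link_by == "provider" and c.referring_pcc != pcc_id:
            continue  # cannot be attributed to this PCC by provider number
        if c.member_id not in panel:
            continue  # not on this PCC's panel
        hits.add(c.member_id)
    return hits


panel = {"m1", "m2", "m3", "m4"}
start, end = date(2000, 1, 1), date(2000, 12, 31)
claims = [
    Claim("m1", "PAP", date(2000, 3, 1), "paid", "P1"),
    Claim("m2", "PAP", date(2000, 5, 1), "paid", None),     # lab claim, no PCC id
    Claim("m3", "PAP", date(2000, 6, 1), "denied", "P1"),   # billing error
    Claim("m4", "PAP", date(2001, 1, 15), "paid", "P1"),    # after the cutoff
]
by_patient = counted_members(claims, "PAP", "P1", panel, start, end)
by_provider = counted_members(claims, "PAP", "P1", panel, start, end, link_by="provider")
print(len(by_patient) / len(panel), len(by_provider) / len(panel))  # 0.5 0.25
```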

The combination of possible errors in claims-based performance measures can lead to sizable discrepancies between actual service delivery and practice profile rates. For example, in comparing claims-based immunization data with medical records, Richman and Lancaster (2001) found that claims identified only 29 percent of children under the age of 2 as fully immunized, less than one-half of the rate documented in the medical records (70 percent). Feedback provided to the physicians in that study yielded a 16 percent increase in the immunizations appearing in claims, which still substantially underreported the services delivered. In addition, the increase could have been achieved solely by correcting billing practices, without actually improving quality of care (i.e., ensuring that the remaining 30 percent of children receive the recommended immunizations).
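Using only the two rates quoted above, the gap between claims and medical records can be decomposed as follows (a worked restatement of the reported figures, not an additional analysis from that study):

```latex
\begin{aligned}
\text{share of record-documented immunization visible in claims} &= \frac{29\%}{70\%} \approx 0.41 \\
\text{fully immunized per records but not per claims} &= 70\% - 29\% = 41 \text{ percentage points} \\
\text{not fully immunized per records} &= 100\% - 70\% = 30 \text{ percentage points}
\end{aligned}
```

Only the last line represents a quality-of-care gap; the 41-point difference is a measurement gap that better billing could close without any change in care.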

Denominator problems result if patient panels are not correctly identified or eligible patients are not correctly specified. Unless the denominator is limited to patients continuously enrolled for an appropriate period of time, the measures may be inappropriate. For example, a measure based on annual treatment guidelines is not appropriately applied to patients enrolled for less than 1 year. The denominator can also be inaccurate if patients are misassigned to a particular primary care provider in the enrollment or eligibility files; in other words, patients can be wrongly counted as being on a physician's panel.
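A continuous-enrollment rule for the denominator might be sketched as below. The span format, the `max_gap_days` tolerance, and the member records are hypothetical; actual measure specifications define their own gap rules. Note that the code can only apply the panel assignment it is given, so a misassigned member would still be counted.

```python
from datetime import date, timedelta


def continuously_enrolled(spans, start, end, max_gap_days=0):
    """True if enrollment spans cover [start, end] with no gap over max_gap_days.

    spans: list of (begin, finish) date pairs, both inclusive.
    """
    covered_until = start - timedelta(days=1)  # last day known to be covered
    for begin, finish in sorted(spans):
        if finish < start:
            continue  # span ends before the measurement period
        if begin > end:
            break  # remaining spans start after the period
        gap = (begin - covered_until).days - 1  # uncovered days before this span
        if gap > max_gap_days:
            return False
        covered_until = max(covered_until, finish)
    # Any uncovered days left at the end of the period also count as a gap.
    return (end - covered_until).days <= max_gap_days


def eligible_denominator(members, pcc_id, start, end, max_gap_days=0):
    """members: {member_id: (assigned_pcc, spans)} -> ids counted in the denominator."""
    return {
        member_id
        for member_id, (assigned_pcc, spans) in members.items()
        if assigned_pcc == pcc_id
        and continuously_enrolled(spans, start, end, max_gap_days)
    }


members = {
    "m1": ("P1", [(date(1999, 6, 1), date(2001, 1, 31))]),
    "m2": ("P1", [(date(2000, 3, 1), date(2000, 12, 31))]),   # joined in March
    "m3": ("P1", [(date(2000, 1, 1), date(2000, 5, 31)),
                  (date(2000, 6, 20), date(2000, 12, 31))]),  # 19-day gap
    "m4": ("P2", [(date(2000, 1, 1), date(2000, 12, 31))]),   # other practice
}
year_start, year_end = date(2000, 1, 1), date(2000, 12, 31)
print(sorted(eligible_denominator(members, "P1", year_start, year_end)))  # ['m1']
print(sorted(eligible_denominator(members, "P1", year_start, year_end, max_gap_days=30)))  # ['m1', 'm3']
```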

Finally, some argue that small sample sizes at the individual provider level are another reason for cautious interpretation of profile reports, and may require case-mix adjustment (Zaslavsky, 2001). In a study of diabetes care, little of the overall variance observed was attributable to differences among providers (Hofer et al., 1999). Given this small effect, these researchers calculated that each provider would need at least 100 diabetic patients to yield valid results, whereas in their sample the mean number of patients with diabetes per provider was 61. Alternatively, one could argue that the statistical precision of an individual provider's rate is inconsequential, because the goal should be care according to clinical standards for every patient.
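Why panel size matters can be illustrated with the Spearman-Brown relation, one standard way to express how the reliability of a provider-level average grows with the number of patients. This is an illustration only: the text does not say which formula Hofer et al. used, and the intraclass correlation (`icc`, the share of variance attributable to providers) of 0.04 and the target reliability of 0.8 are hypothetical values.

```python
import math


def panel_reliability(n_patients: int, icc: float) -> float:
    """Spearman-Brown reliability of a provider-level mean based on n patients.

    icc: share of total variance attributable to providers (hypothetical here).
    """
    return n_patients * icc / (1 + (n_patients - 1) * icc)


def patients_needed(target_reliability: float, icc: float) -> int:
    """Smallest panel size reaching the target reliability (inverse of the above)."""
    n = target_reliability * (1 - icc) / (icc * (1 - target_reliability))
    return math.ceil(n - 1e-9)  # small tolerance guards against float round-off


print(round(panel_reliability(61, 0.04), 2))  # 0.72
print(patients_needed(0.8, 0.04))             # 96
```

The smaller the provider share of variance, the larger the panel needed; with these assumed values a 61-patient panel falls short of the 0.8 target.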

Methods

This article summarizes information we gathered about practice profiling in the PCC plan. The data were collected by three methods: (1) interviews with administrators from DMA and from the vendor that conducts many of the QI activities; (2) review of QM/QI materials and reports provided by DMA; and (3) interviews with practice managers or physicians representing 13 PCC practices that participate in the PCC Plan Profiling Activities.¹ In addition to describing the profiling initiative, we sought to evaluate whether the Massachusetts profiling initiative affects physician behavior and has any measurable impact on the desired outcomes, and to understand the degree of burden it imposes on participating medical practices. The practice managers, clinical coordinators, and physicians were not randomly selected; hence their feedback may not be representative. They were selected from a list provided by the vendor, and the selection was designed to include a range of practice types (solo practitioners, group practices, community health centers, and outpatient departments) and informants who would be willing to engage in discussion with us. The practices whose staff we interviewed serve almost 30,000 PCC plan enrollees, about 8 percent of PCC plan enrollees statewide. We also reviewed sample profile reports and a 1999 evaluation of the PCC Profile Improvement Project (Primary Care Clinician Plan Network Management Services Program, 1999), and discussed with DMA the changes made in response to that evaluation. Interviews with DMA and vendor staff occurred in several phases between 2000 and 2002, and the interviews with PCC practice staff took place between November 2001 and February 2002.

Findings

MassHealth's PCC Plan

MassHealth serves its beneficiaries through a combination of standard fee-for-service (FFS) and two types of managed care arrangements: (1) contracted MCOs (referred to jointly as the MCO plan and paid on a capitated basis), and (2) the PCC plan, paid on an FFS basis. Of the three mechanisms, the PCC plan is the dominant delivery model. In fiscal year 2000, 46 percent of MassHealth beneficiaries were enrolled in the PCC plan, compared with only 15 percent in any of the contracted MCOs (Table 1). An additional 35 percent of beneficiaries were not eligible for managed care enrollment (PCC or MCO), including Medicare/Medicaid dually eligible beneficiaries, others with significant third-party resources, and institutionalized beneficiaries. In any given month, another 4 percent were eligible for enrollment in either an MCO or the PCC plan, but not yet assigned.

Table 1. MassHealth Enrollments: Fiscal Year 2000.

| Enrollment Category | Number of Beneficiaries | Percent of Beneficiaries | Expenditures | Percent of Expenditures |
|---|---|---|---|---|
| Total | 922,436 | 100 | $2,891,000,000 | 100 |
| Enrolled in PCC Plan | 428,727 | 46 | $1,214,000,000 | 42 |
| Unenrolled PCC Plan Eligibles | 38,024 | 4 | $191,000,000 | 7 |
| Enrolled in Managed Care | 136,181 | 15 | $312,000,000 | 11 |
| Subtotal | 602,932 | 65 | $1,717,000,000 | 59 |
| All Other Beneficiaries¹ | 319,504 | 35 | $1,174,000,000 | 41 |

¹ Includes Medicare/Medicaid dually eligible beneficiaries, individuals under age 65 with third-party resources, and others not considered eligible for managed care enrollment.

NOTE: PCC is primary care clinician.

SOURCE: Massachusetts Division of Medical Assistance, 2001.

As of August 2001, 1,250 practices were participating in the PCC plan, including solo practitioners, group practices, community health centers, and hospital outpatient departments. These practices were spread across 1,750 clinical sites and included about 3,000 individual physicians in total. These PCCs, like primary care case managers in other States, are expected to coordinate care for their patients and serve as gatekeepers for other services. However, unlike other States with primary care case management programs, the PCC plan does not provide a per member per month capitation payment. Instead, PCCs receive a higher per visit rate for preventive care than for sick visits and, as with all MassHealth providers, an enhanced rate for providing after-hours urgent care. In addition, PCCs with 200 or more PCC plan enrollees are expected to participate in quality improvement activities, as are the MCOs contracting with the State. Of the 1,250 PCC practices, 385 had at least 200 PCC plan enrollees, and together these practices served about 85 percent of all PCC plan enrollees. Thus, the QI activities directed to these 385 PCCs target about 40 percent of all MassHealth beneficiaries.
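As a rough check on the 40 percent figure, one can combine the Table 1 enrollment counts (fiscal year 2000) with the 85 percent share reported for August 2001. Because the two figures come from different periods, the result is only approximate:

```latex
0.85 \times \frac{428{,}727}{922{,}436} \approx 0.85 \times 0.465 \approx 0.395 \approx 40\%
```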

QI in the PCC Plan

DMA's QI activities are varied and include systemwide goals applicable to both the MCOs and the PCC plan, work with hospitals, production of beneficiary education materials, beneficiary surveys, and primary care practice profiling. In this article, after a brief overview of the range of QM/QI activities, we focus on the profiling activities within the PCC plan as a unique feature of Massachusetts' Medicaid Program.

DMA staff, in conjunction with a contracted vendor, the Massachusetts Behavioral Health Partnership (the Partnership), manage the QI activities for the PCC plan. DMA QM/QI activities include complying with Health Plan Employer Data and Information Set® (HEDIS®) requirements, conducting beneficiary surveys, and developing agencywide QI projects. DMA staff also create provider and beneficiary education materials. The Partnership, which also manages the behavioral health carve-out for PCC plan and FFS MassHealth beneficiaries, has a subcomponent focused on QM/QI activities in the PCC plan, Performance Improvement Management Services (PIMS). PIMS is responsible for the day-to-day management of PCC profiling activities, maintaining a hotline for PCC provider questions, monitoring and verifying provider telephone availability 24 hours a day, 7 days a week, and creating and implementing small education sessions targeted to specific provider types or communities. For example, PIMS has recently designed forums for behavioral health providers and for providers who serve a large number of homeless patients, as well as a forum focused on managing large numbers of patient no-shows.

PCC plan and PIMS staff have implemented a number of quality monitoring and improvement activities. Some, like HEDIS® reporting and enrollee surveys, are conducted jointly with the MCO and Behavioral Health components of MassHealth. Others are planwide projects that take a multifaceted approach to changing practice patterns and educating beneficiaries. For example, the goals of the Perinatal Care Quality Improvement Project are to increase prenatal and postpartum care service delivery and to ensure that perinatal care services are delivered in accordance with clinical guidelines. This extensive project involves staff from a number of DMA units, including the PCC plan, delivery systems, member services, and the Office of Clinical Affairs, as well as representatives from the MCOs and from the Department of Public Health. The resulting team meets regularly to develop strategies and implement projects designed to encourage and facilitate early access to prenatal care, reinforce the importance of regular prenatal care, encourage women to make and keep their postpartum visits, and encourage pregnant women to choose a pediatrician for their child. These workgroups have produced multiple educational materials for both providers and members.

Profiling and Action Plan

PCC profiling (of each PCC practice, not of individual physicians), onsite meetings with PCC staff, and the development of practice-based improvement activities based on the profiling data are the central quality monitoring and improvement activities conducted at the practice level. The components of this process that we describe are: (1) measure selection by DMA, (2) claims data analysis, (3) creation and dissemination of provider profile and reminder reports, (4) twice-yearly meetings with the practice managers or clinicians from each participating practice, and (5) the development and implementation of action plans to address QI opportunities. The MassHealth QI activities are extensive and resource intensive. In addition to DMA's 4.36 full-time equivalent staff positions for QM/QI in the PCC plan, computer resources, and printing costs for education materials ($48,000 was spent between July 2000 and June 2001 on program support materials), the current PIMS contract is for $1.1 million.

Twice a year, participating PCCs (i.e., those with at least 200 PCC plan enrollees) receive their practice-specific profile reports during meetings with PIMS staff. The PCC profile report includes data on the PCC-specific panel and comparison information on the entire PCC plan. Measures are selected for the profile report when HEDIS® results or other quality monitoring activities suggest opportunities for improvement. Data for the profile report are compiled from paid claims and are generally 12-18 months old by the time each PCC receives its report. The lag reflects the combined effect of waiting 6 months after the reporting period for claims to be filed and adjudicated, and a data analysis and report production process lasting several months.
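The timeline can be worked through at month granularity. In this sketch, `production_months=4` is a hypothetical stand-in for "several months," and measuring data age from the midpoint of the reporting period is one possible way to reconcile the calculation with the 12-18 month figure; neither is stated in the source. A calendar-year 2000 reporting period then yields a report in October 2001.

```python
from typing import Tuple


def to_index(year: int, month: int) -> int:
    """Months since year 0, so month arithmetic becomes integer arithmetic."""
    return year * 12 + (month - 1)


def from_index(i: int) -> Tuple[int, int]:
    year, m = divmod(i, 12)
    return year, m + 1


def report_lag(period_start, period_end, runout_months=6, production_months=4):
    """Delivery month and data age for a reporting period given as (year, month)."""
    start, end = to_index(*period_start), to_index(*period_end)
    # Claims run-out after the period, then analysis and report production.
    delivered = end + runout_months + production_months
    return {
        "delivered": from_index(delivered),
        "age_from_start": delivered - start,
        "age_from_midpoint": delivered - (start + end) / 2,
        "age_from_end": delivered - end,
    }


print(report_lag((2000, 1), (2000, 12)))
```

Under these assumptions the oldest claims in the report are 21 months old, the newest 10 months, and the middle of the period about 15 months.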

The report includes the following sections:

PCC panel characteristics, summarizing panel enrollment by age, sex, and disability status.

PCC quartile rankings for performance measures as compared with all other PCC practices that have at least 200 beneficiaries in their panel. At the providers' request, rates are now broken out by site within the PCC practice to help the PCC better understand site performance.

PCC and PCC plan performance in specific review periods for the following measures:

Percentage of children receiving the expected number of well child visits in accordance with age-specific Massachusetts Early and Periodic Screening, Diagnosis, and Treatment (EPSDT) schedule.

Emergency room (ER) visit rates.

Percentage of eligible females receiving cervical cancer screening.

Percentage of eligible females receiving breast cancer screening.

Percentage of enrollees with asthma using the ER or observation beds, or requiring hospitalization.

Percentage of members with diabetes receiving biannual and quarterly HbA1c testing, and annual retinal exams.
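The quartile rankings described in the list above can be sketched as follows. The function name, the practice identifiers, and the tie handling (ties keep their input order) are hypothetical; the 200-enrollee threshold comes from the text. For measures where a lower rate is better, such as ER visit rates, the ordering is reversed so that quartile 4 always means the strongest performance.

```python
def quartile_ranks(rates, panel_sizes, min_panel=200, higher_is_better=True):
    """Assign quartile 1 (weakest) to 4 (strongest) among eligible practices.

    rates: {practice: rate}; panel_sizes: {practice: PCC plan enrollees}.
    """
    eligible = {p: r for p, r in rates.items() if panel_sizes[p] >= min_panel}
    # Order from weakest to strongest performance.
    ordered = sorted(eligible, key=eligible.get, reverse=not higher_is_better)
    n = len(ordered)
    return {p: 1 + (4 * i) // n for i, p in enumerate(ordered)}


rates = {"A": 0.55, "B": 0.70, "C": 0.40, "D": 0.62, "E": 0.90}
sizes = {"A": 250, "B": 400, "C": 210, "D": 300, "E": 150}  # E is below 200
print(quartile_ranks(rates, sizes))
print(quartile_ranks(rates, sizes, higher_is_better=False))
```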

For each measure, back-up detail derived from Medicaid administrative data is provided about each person whose care might indicate a need for followup, e.g., someone who missed a recommended service or was seen in the ER. These data include the date the person enrolled with the PCC practice and the date the person was last seen by the PCC in the last calendar year. For ER visits, data are also provided on office visits around the date of the ER visit, to identify whether the beneficiary was sent to the ER after seeing the physician and whether there was any followup after the ER visit. This information is intended to assist providers in their outreach efforts and to identify the root causes of any barriers to care.
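The ER back-up detail amounts to a date-window lookup around each ER visit. In this minimal sketch, the window lengths (`days_before=3`, `days_after=14`) and the rule that a same-day office visit counts as "before" are hypothetical choices; the source does not state the windows DMA uses.

```python
from datetime import date, timedelta


def er_visit_context(er_dates, office_dates, days_before=3, days_after=14):
    """For each ER visit, list PCC office visits shortly before and after it.

    A same-day office visit counts as "before" (the patient may have been
    sent to the ER by the practice).
    """
    context = {}
    for er in sorted(er_dates):
        before = sorted(d for d in office_dates
                        if er - timedelta(days=days_before) <= d <= er)
        after = sorted(d for d in office_dates
                       if er < d <= er + timedelta(days=days_after))
        context[er] = {"seen_before": before, "followed_up": after}
    return context


er_dates = [date(2000, 3, 10), date(2000, 8, 1)]
office_dates = [date(2000, 3, 10), date(2000, 3, 20), date(2000, 6, 1)]
for er, detail in er_visit_context(er_dates, office_dates).items():
    print(er, detail)
```

In the example, the March ER visit was preceded by a same-day office visit and followed up 10 days later, while the August visit has no nearby office contact and would be a natural target for outreach.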

However, given the lag between the measurement period and the dissemination of the profile reports, much of this detail is out of date by the time it arrives. Some patients have since received the recommended services or are no longer on the PCC's panel. While the information is still useful for monitoring trends or identifying patterns of care, it is less useful for identifying specific beneficiaries in need of services. To address this issue, DMA introduced the reminder report, which includes more current member detail about needed services for panel members. Mailed out every 6 months, the reminder report includes data from all submitted claims (i.e., it is not limited to paid, adjudicated claims, as the profile reports are), and the data are only about 6 weeks old when the PCCs receive them. PCCs are encouraged to use the reminder report as an outreach tool to track and contact patients in need of services.
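The reminder report logic can be sketched as a set difference between the services each panel member is due for and the services already appearing on any submitted claim. The data shapes and service labels (`WELL_CHILD`, `PAP`, `MAMMO`) are hypothetical. Because all submitted claims are used, a service whose claim is still pending adjudication clears the reminder.

```python
def reminder_report(panel, services_due, submitted_claims):
    """Return {member_id: [services still due]} for panel members.

    services_due: {member_id: set of services the member should receive}
    submitted_claims: (member_id, service) pairs from all submitted claims,
    adjudicated or not.
    """
    done = set(submitted_claims)
    report = {}
    for member in sorted(panel):
        outstanding = sorted(s for s in services_due.get(member, set())
                             if (member, s) not in done)
        if outstanding:
            report[member] = outstanding
    return report


panel = {"m1", "m2", "m3"}
services_due = {"m1": {"WELL_CHILD"}, "m2": {"PAP", "MAMMO"}, "m3": {"PAP"}}
submitted = [("m1", "WELL_CHILD"), ("m2", "PAP")]
print(reminder_report(panel, services_due, submitted))
```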

Educational Materials

Each version of the PCC profile report is accompanied by a variety of physician or member education materials to assist PCCs in specific clinical performance areas or in their outreach efforts. These materials, developed internally or obtained from another State agency or private affiliation, are available in several languages. Many of these materials have been developed from PCC plan or agencywide QI projects. The materials are frequently updated.

Site Visits

The PCC plan links the distribution of the profile reports with in-person meetings between PIMS staff and the practice manager, medical director, or another representative of each PCC practice. These site visits are a core component of the PCC plan profiling activities and serve several purposes. In the course of these meetings, PIMS staff review the profile report, answer questions, review improvements since the last profile report, and initiate discussion of areas for improvement. DMA views the meetings as an opportunity to get to know the practice and to collect the information needed to develop and implement an action plan. The PIMS staff conducting these meetings include registered nurses with experience in primary care and former practice managers. Clinical backup is provided by medical directors who can address clinical issues and questions raised by participating physicians about the measures, clinical guidelines, or interpretation of the profile report data.

The primary goal of the meetings between the PIMS staff and the practice staff is to work collaboratively to identify appropriate areas for improvement and an action plan related to each selected area. Implementation of action plans is a requirement for practices participating in the PCC plan. These action plans may include administrative or operational practice improvements, such as improved communication with hospital emergency departments to improve care coordination and minimize ER utilization, or implementation of a recall and reminder system for preventive care. As part of the discussion, the PIMS staff share information with the PCC providers about how comparable practices are addressing the same problems.

Action plans are developed over the course of at least two site visits, allowing the PCCs and PIMS staff time to “look behind,” or investigate, problems suggested by the profile reports. Practice staff check whether low rates of recommended care observed in the report reflect billing errors or other measurement issues rather than non-compliance with clinical guidelines or practice standards. This investigation period is also an important opportunity for the practice to identify operational factors that contributed to the rates observed in the profile reports. For example, one practice noticed that the percentage of children receiving the recommended well child visits had decreased and found that the office staff had stopped sending out reminder postcards.

This staged process has several positive effects. It ensures that action plans target actual problems, not artifacts of data collection problems, and that the action plans are designed effectively for the individual practice. It also provides PIMS and DMA the opportunity to learn the extent of problems related to faulty enrollment data or claims-processing problems.

Impact on Physician Behavior

The State commissioned an evaluation of the PCC profiling activities in 1999, including a survey of PCCs and a review of profile data at several points in time. The authors were unable to identify any impact on physician behavior as measured by trends in the profile reports (Primary Care Clinician Plan Network Management Services Program, 1999). We also reviewed profile reports at two points in time and found no significant change at the plan or practice level. DMA staff attribute the lack of measurable impact on the rates to several factors. First, the measures have been refined over time. These refinements include changes in the claims specifications to improve the measures (e.g., to avoid counting trauma-related ER visits), changes to the defined data collection periods, and changes resulting from new clinical standards, such as revisions to the EPSDT schedule. These refinements improve the measures for the future, at the cost of short-run comparability across profile reports. Lack of visible progress is also attributed to short beneficiary enrollment periods; to movement of practices in and out of the PCC plan in response to changes in the managed care market (e.g., when an MCO exited the market and physicians joined the PCC plan to continue to serve their Medicaid patients); and to cultural barriers to patient compliance with some of the recommended services. Finally, the measurement and improvement cycle is long: data collection, claims analysis, report production, review with the PCCs, action plan selection, development and implementation, and followup profiling span several years. Thus, although the PCC plan first implemented profiling in 1995, it may still be too early to have achieved a measurable impact on specific service rates.

While the profile reports do not show much change, several of the practices we interviewed described ways in which the profiling activities and action plan process had affected their operations. More than one-half had redesigned aspects of their practice activities as a result of the PCC plan profile and action plan activities. Most commonly, they had implemented recall and reminder systems for the first time in their practice, and used the education materials with their patients. Almost all of them reported using the information to help them track patients in need of particular services. Finding ways to systematically increase delivery of needed care is challenging to these providers, especially those lacking computerized systems to identify individuals due for specific services. Practices had designed special forms or added components to routine assessment forms to flag the records of patients needing services. However, some of the changes target improving billing accuracy (i.e., ensuring that services delivered are indicated on submitted claims), thereby increasing the profile rates, but not necessarily increasing service delivery rates. Other changes aim to decrease the burden on the practices of investigating whether individuals who were flagged by the profile report actually need services. For example, one practice placed stickers on the medical records to indicate a service had been delivered so that the medical record need not be reviewed for that service.

While few clinicians or practice managers attend the quarterly PCC plan regional meetings (recently replaced with smaller, more targeted quality forums), the site visits from the PIMS staff may achieve the same goals in a way that is more targeted to the needs of individual practices and less time consuming. In addition, DMA expects better attendance at their newly designed quality forums that will focus on specific service delivery issues.

Provider Perspective

The practice staff we interviewed spoke highly of the site visit process and had mixed views regarding the profile reports and action plans. All expressed appreciation for the communication and negotiation skills of the PIMS staff and for their understanding of practice operations, the population served, and the limitations of the profile data they were presenting. Most were also very pleased with the educational materials provided by DMA and respected DMA's clear commitment to quality health care for their patients.

Provider viewpoints regarding the value of the profile reports varied by the size and resources of the practices. Smaller practices, with little or no ability to generate reports of their own, found the profiling activities especially valuable. In contrast, hospital outpatient departments, with substantial resources for tracking patient care, did not find the profiling reports as valuable. Those with a large number of PCC plan members on their panels invest substantial time tracking down individual records to distinguish those who did indeed receive services, but did not appear in the profile rates, from those who actually did not receive the recommended care. To the extent this activity uncovers individuals in need of service, providers feel the time is well spent. However, providers report that a substantial amount of this tracking only turns up cases where the service was indeed delivered. Some of the providers who conducted extensive tracking efforts were motivated to identify every possible need for followup with their patients and willing to accept that the profile data would include people who had already received care. For others, the inaccuracies led to a general disregard for the value of the measures even as a starting point for discussion.

PCCs are expected to contact beneficiaries who have not received needed services. DMA provides beneficiary contact information to support this activity and the new, more timely, reminder reports are a very well-received enhancement to this process. However, the contact information in DMA's database is often incomplete or inaccurate, and is a source of great frustration to the PCCs. It is not clear why these data are inaccurate. DMA staff attribute the inaccuracies to frequent changes in address or telephone number in their beneficiary population that are not reported to DMA.

The lack of accurate contact information from DMA is only one aspect of a larger disagreement between the State and the practices about who should be included in the denominator for service rate calculations. All PCC plan members either choose or are assigned to a PCC provider, yet not all come in to be seen. The PCC plan requires providers to contact new patients to schedule an initial visit, or to follow up as needed; however, the addresses and telephone numbers provided by DMA are often incorrect. From the physicians' perspective, individuals who did not choose the provider (e.g., the 20 percent who are assigned by an automatic process), who cannot be contacted, or who do not respond to outreach efforts are not their patients. The physicians would like to see these beneficiaries removed from their panels and hence from the denominator in rate calculations. DMA acknowledges that there are problems with the accuracy of the contact information that providers receive and that outreach is challenging. However, the State considers these beneficiaries part of the overall PCC plan panel and considers their assignments to individual practices meaningful. From the State's perspective, it is important to include these beneficiaries in the rate calculations, while recognizing that the practices cannot be held responsible for outreach to patients who cannot be located.
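To make the denominator disagreement concrete, the following minimal sketch computes one practice's service rate under the two definitions described above. The `PanelMember` fields, the `service_rate` helper, and the ten-member panel are hypothetical, invented only for illustration; they do not represent DMA's measure specifications or any actual profile data.

```python
from dataclasses import dataclass


@dataclass
class PanelMember:
    # Hypothetical fields for illustration only; not DMA's data layout.
    member_id: str
    received_service: bool  # a qualifying claim appears in the profile period
    reachable: bool         # contact succeeded or the member responded to outreach


def service_rate(panel, exclude_unreachable=False):
    """Share of panel members with a qualifying claim.

    State view (default): every assigned member stays in the denominator.
    Practice view: members who could not be reached are dropped.
    """
    denominator = [m for m in panel if m.reachable or not exclude_unreachable]
    if not denominator:
        return 0.0
    numerator = sum(1 for m in denominator if m.received_service)
    return numerator / len(denominator)


# Hypothetical panel of 10: members 0-5 received the service, member 6 was
# reachable but unserved, and members 7-9 could not be reached.
panel = [
    PanelMember(f"m{i}", received_service=i < 6, reachable=i < 7)
    for i in range(10)
]

print(service_rate(panel))                                     # 0.6
print(round(service_rate(panel, exclude_unreachable=True), 3))  # 0.857
```

The same six services yield a rate of 60 percent under the State's definition and about 86 percent under the practices' preferred definition, which is why the choice of denominator matters so much to providers.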

The lag between the profile periods and dissemination of the profile reports is also a sticking point for some PCCs. As we have discussed, claims-based approaches to monitoring quality are subject to substantial lags: claims may trickle in over several months, the adjudication process can be slow, State information systems may have trouble handling the volume, and key departments may be understaffed. For example, the PCC profile reports received in fall 2001 reflect activity from calendar year 2000. From a State perspective, this may be acceptable, as the goal is to look at patterns across providers or for the total enrollment, and a delay of a year is not problematic. However, providers are less interested in their past performance, especially if they already consider the data inaccurate, and are most interested in information that can help them reach individuals in need of service now. In addition, as they check back through patient records, providers often find that the missed service has since been delivered, albeit outside the recommended time period. While the time period may be important for some services, for others a delay of several months may be trivial, or the service may have occurred within days of the measurement cut-off. This reinforces some providers' view that the information does not reflect the needs of their patients or accurately represent their present performance.
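The two ways a delivered service can still appear as a "miss" (falling just outside the measurement window, or being adjudicated after the data are pulled) can be illustrated with a small sketch. The counting rule, the `counted_in_profile` function, and the extraction date below are hypothetical assumptions for illustration; only the calendar-year-2000 measurement period comes from the text.

```python
from datetime import date


def counted_in_profile(service_date, paid_date, window_start, window_end, extract_date):
    """Return True if a claim would count toward the profile rate.

    Hypothetical rule for illustration: the service must fall inside the
    measurement window, and the claim must have been adjudicated (paid)
    on or before the date the profile data were extracted.
    """
    in_window = window_start <= service_date <= window_end
    adjudicated_in_time = paid_date <= extract_date
    return in_window and adjudicated_in_time


WINDOW = (date(2000, 1, 1), date(2000, 12, 31))  # calendar year 2000, as in the text
EXTRACT = date(2001, 6, 30)                      # hypothetical extraction date

# Delivered inside the window and paid promptly: counted.
print(counted_in_profile(date(2000, 11, 15), date(2001, 1, 20), *WINDOW, EXTRACT))  # True
# Delivered five days after the cut-off: a "miss" even though care was given.
print(counted_in_profile(date(2001, 1, 5), date(2001, 2, 10), *WINDOW, EXTRACT))    # False
# Delivered in the window, but the claim was paid after extraction: also a miss.
print(counted_in_profile(date(2000, 12, 20), date(2001, 8, 1), *WINDOW, EXTRACT))   # False
```

Under a rule of this kind, the records review that providers describe will keep turning up care that was in fact delivered but could not be credited in the profile.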

Our informants also had mixed views regarding the burden of participating in the PCC plan QI activities. In most cases, a practice manager or administrator, rather than a physician, participated in the PIMS site visits and was responsible for follow-through. Smaller practices reported that the follow-through, including checking on cases that might need outreach and devising and implementing action plans, was not burdensome and was worthwhile. These practices reported incorporating the followup tasks into their ongoing office procedures, and so could not estimate the time spent. Some of the larger practices, however, found the process more burdensome, as more staff (sometimes across multiple sites) needed to be involved after each site visit to review the materials, organize followup activities, and participate in action plan development and implementation. One informant expressed frustration with the administrative burden, given the Medicaid payment rates and the need to meet the varying QI requirements of multiple payers.

In summary, as shown in Table 2, our informants identified challenges to QI at the practice, State, and beneficiary levels. Medical practices need designated staff responsible for implementing change, and the infrastructure and information systems to support new approaches to care. Larger provider groups may be contracting with several MCOs, each with different QI requirements. In addition, large medical practices with multiple sites may have to address differences in procedures and culture across sites. At the State level, the inherent limits of claims-based profiling decrease the salience and credibility of the data to some providers, in turn creating resistance to participation in QI activities, and providers cannot conduct effective outreach if the State cannot provide up-to-date contact information for beneficiaries. Finally, there are beneficiary-level challenges to improving processes of care. Short eligibility periods give physicians little time to deliver needed services to individual patients, and for some beneficiaries there are cultural barriers to the use of recommended services.

Table 2. Challenges to Quality Improvement.

| Practice-Level Challenges | State-Level Challenges | Beneficiary-Level Challenges |
|---|---|---|

SOURCE: Health Economics Research, Inc., interviews with Primary Care Clinician plan providers, the Massachusetts Division of Medical Assistance, and the Behavioral Health Partnership, 2001.

Role of Rewards and Sanctions

Massachusetts is very cautious about tying performance incentives to the profiling activities. DMA staff clearly understand that claims-based data are not a complete source of information about services provided, and they approach working with their providers with this understanding. To date, DMA has not tied financial incentives to the profile results, and it is cautious in considering any sanctions, such as closing practices to new enrollments. Given the potential inaccuracies of the data, DMA is considering sanctions only for low-performing providers who do not meet with the PIMS staff or who do not follow through with their action plans. However, even this may not be implemented, as policymakers are concerned about a possible negative impact on access to care.

Conclusions and Recommendations

Massachusetts is successfully incorporating managed care practices into a PCC plan. However, changes in processes of care are not yet evident in the profile reports. The lack of observable change is at least partly a function of the lengthy QI cycle, changes in measure specifications that do not permit meaningful comparisons across years, relatively short beneficiary eligibility spells, and problems inherent in claims-based performance measurement. The changes some providers report in their practices as a result of the QI efforts, such as implementing recall and reminder systems, suggest that improvements should become observable over time.

It is clear that DMA's program has real strengths, as well as problems that other Medicaid agencies should consider in approaching similar QI strategies. The strengths include: use of process measures that are credible to providers and that they can address; a well-developed system of working with individual medical practices and tailoring quality improvement plans to each practice; network management staff who work effectively with the medical practices; provision of useful beneficiary education materials; and redesign of DMA's own procedures in response to feedback from the PCC plan providers. Clinical involvement in the selection and development of the measures and in working with the practices contributes to the appropriateness of the activities and the positive response from many providers. The collaborative approach taken with practices, to understand what factors contribute to the reported service rates and to develop action plans, is consistent with quality improvement principles, including creating an open, safe environment, encouraging diverse viewpoints, and negotiating agreements. Providers are very pleased with the recently revised and much more timely reminder reports that provide the names of patients who may be in need of followup. Perhaps most important, DMA understands the limitations of the profile data and uses these data as a starting point for dialog with individual medical practices, not as the basis for rewards or sanctions.

Weaknesses or limitations include those that all States or payers face in the use of claims-based profiling and some specific to Massachusetts. The limitations to the accuracy of claims-based profiling are clear, and many are unavoidable. The lack of up-to-date addresses and telephone numbers for beneficiaries who may be in need of followup is the single most frustrating issue for PCC plan providers trying to conduct effective outreach. There is a substantial lag between the periods of performance and dissemination of the profiles, decreasing the salience of the information to providers. While the more timely reminder reports address many of the providers' concerns about data lags for individual patients who may need followup, the timeline for the routine profiling reports could perhaps be shortened if resources were available (e.g., if DMA staff were available to analyze the data more quickly, and the PIMS staff were able to deliver the profiling reports over a shorter time period). In addition, by approaching all practices with the same level of intensity, regardless of size, performance, or access to other ways of analyzing their own performance, DMA may not be targeting its efforts most effectively. As a result, State resources may not be used most efficiently, and large practices with internal QM/QI procedures feel that time spent on PCC plan activities is redundant.

States must recognize that claims-based data are not a complete record of the services provided and must work with their providers on that basis. Because of these limitations, States should approach incentives or rewards tied to performance very cautiously.

Finally, Massachusetts is investing more resources in these activities than are available in many other States. Indeed, whether Massachusetts can sustain the current level of investment given recent budget pressures remains to be seen. States with fewer resources to draw on should consider developing fewer measures, focusing perhaps on well-child visits and cervical cancer screening for adults, and consider more targeted approaches to onsite work with individual practices. Appropriate targets would include specific provider types, such as solo practitioners with a high proportion of Medicaid beneficiaries in their panels, who would benefit most from the opportunity to better understand their patient panels. Alternatively, a State could focus on practices whose profiles suggest poor performance.

Acknowledgments

The authors wish to express their appreciation to the many staff members of the Massachusetts Division of Medical Assistance and Massachusetts Behavioral Health Partnership, and the PCC providers who all generously contributed time and information for this study. We also would like to acknowledge the input of Carol Magee and Renee Mentnech and of the anonymous reviewers who provided valuable comments on an earlier draft of this article, and the capable assistance of Anita Bracero and Michelle Klosterman.

Footnotes

Edith G. Walsh, Deborah S. Osber, and C. Ariel Nason are with Research Triangle Institute, Inc. Marjorie A. Porell is with JEN Associates, Inc. Anthony J. Asciutto is with The Medstat Group. The research in this article was funded by the Centers for Medicare & Medicaid Services (CMS) under Contract Number 500-95-0058 (TO#9). The views expressed in this article are those of the authors and do not necessarily reflect the views of Health Economics Research, Inc., JEN Associates, Inc., The Medstat Group, or CMS.

Additional information about the informants, interview protocols, and documents reviewed is available from the authors.

Reprint Requests: Edith G. Walsh, Ph.D., Research Triangle Institute, Inc., 411 Waverley Oaks Road, Waltham, MA 02452-8414. E-mail: ewalsh@rti.org

References

- Bauchner H, Simpson L, Chessare J. Changing Physician Behavior. Archives of Disease in Childhood. 2001;84(6):459–462. doi: 10.1136/adc.84.6.459.

- Becher EC, Chassin MR. Improving the Quality of Health Care: Who Will Lead? Health Affairs. 2001 Oct;20(5):164–179. doi: 10.1377/hlthaff.20.5.164.

- Clemmer TP, Spuhler VJ, Berwick DM, et al. Cooperation: The Foundation of Improvement. Annals of Internal Medicine. 1998;128(12):1004–1009. doi: 10.7326/0003-4819-128-12_part_1-199806150-00008.

- Eddy DM. Performance Measurement: Problems and Solutions. Health Affairs. 1998 Jul-Aug;17(4):7–25. doi: 10.1377/hlthaff.17.4.7.

- Hofer TP, Hayward RA, Greenfield S, et al. The Unreliability of Individual Physician “Report Cards” for Assessing the Costs and Quality of Care of a Chronic Disease. The Journal of the American Medical Association. 1999;281(22):2098–2105. doi: 10.1001/jama.281.22.2098.

- Mant J. Process Versus Outcome Indicators in the Assessment of Quality of Health Care. International Journal for Quality in Health Care. 2001;13(6):475–480. doi: 10.1093/intqhc/13.6.475.

- Primary Care Clinician Plan Network Management Services Program. PCC Plan Profile Improvement Project. Jul, 1999. Final Report submitted to the Massachusetts Division of Medical Assistance.

- Richman R, Lancaster DR. The Clinical Guideline Process Within a Managed Care Organization. International Journal of Technology Assessment in Health Care. 2000;16(4):1061–1076. doi: 10.1017/s0266462300103125.

- Rubin HR, Pronovost P, Diette GB. The Advantages and Disadvantages of Process-Based Measures of Health Care Quality. International Journal for Quality in Health Care. 2001;13(6):469–474. doi: 10.1093/intqhc/13.6.469.

- Zaslavsky AM. Statistical Issues in Reporting Quality Data: Small Samples and Casemix Variation. International Journal for Quality in Health Care. 2001;13(6):481–488. doi: 10.1093/intqhc/13.6.481.