Abstract

We developed a new framework for combining 17 Health Plan Employer Data and Information Set (HEDIS®) indicators into a single composite score. The resultant scale was highly reliable (coefficient alpha =0.88). A principal components analysis yielded three components to the scale: effectiveness of disease management, access to preventive and followup care, and achieving medication compliance in treating depression. This framework for reporting could improve the interpretation of HEDIS® performance data and is an important step for CMS as it moves towards a Medicare managed care (MMC) performance assessment program focused on outcomes-based measurement.

Introduction

The growth of managed care has resulted in increased concerns about the quality of health care services. These concerns have led to the development of a myriad of performance measures. However, in our opinion, performance measures have not always been used appropriately by the public, the organizations being measured, and those tracking the results of these measures, such as private accrediting bodies, regulators, and health care purchasers. CMS, which oversees the largest health care system in the country in its Medicare and Medicaid Programs, continues to analyze ways to improve the use of performance measures.

According to Maxwell et al. (1998), many public employers and State agencies today consider themselves value-based purchasers of health care services by way of managed care health service delivery systems. Several years ago the U.S. Department of Health and Human Services Office of Inspector General and the U.S. General Accounting Office (1995, 1999a, 1999b) produced reports critical of CMS' Medicare managed care performance assessment efforts. The Office of the Inspector General (1997a), along with Bailit (1997a, b), also produced reports encouraging CMS to adopt an outcomes-based performance assessment model that emphasizes use of outcomes-oriented performance data. This emphasis is consistent with the goals of value-based purchasing, which emphasizes strategies that improve quality, encourage the efficient use of resources, and provide information to assist those making choices about health care.

CMS has made progress in becoming a value-based purchaser of health care by pursuing high quality care for beneficiaries at a reasonable cost (Sheingold and Lied, 2001). CMS now requires managed care plans to submit clinical effectiveness and other performance measures in order to determine if purchasing dollars are being appropriately spent. Nevertheless, additional work remains for CMS as it continues to pursue outcomes-based performance assessment in the MMC program.

Starting in 1997, CMS required that managed care organizations (MCOs) participating in Medicare report data from two measurement sets: HEDIS® and the Consumer Assessment of Health Plans Study (CAHPS®). CAHPS® is a survey measuring enrollees' self-reported experience with their health plan. By 1998, the Medicare Health Outcomes Survey (HOS), a self-reported measure of functional status, was also being used as a performance measure. HEDIS®, CAHPS®, and HOS are well-researched tools that assess a number of dimensions of plan performance, including effectiveness of care, access to care, enrollee experience with their health plan, and enrollee physical and mental health status.

While there is optimism about the potential for performance measures such as HEDIS®, CAHPS®, and HOS to improve quality of care, there is evidence that performance data are frequently not well reported or presented and not effectively used for performance assessment purposes. Epstein (1998) suggests that, whereas several years earlier the most important impediments to quality reporting were the unavailability of good indicators and standardized data, the main impediments now lie in how data are reported and used. The U.S. General Accounting Office (1999a, b), the Office of the Inspector General (1997a, b), and Bailit (1997a, b) point to CMS' limited historical use of plan performance data as a major weakness in CMS' efforts to oversee the performance of MMC contractors. CMS has been examining these issues both from the perspective of the purchaser (Zema and Rogers, 2001; Ginsberg and Sheridan, 2001) and from the viewpoint of Medicare beneficiaries (Goldstein, 2001; McCormack et al., 2001).

In this article, we present a potential framework for combining HEDIS® indicators into a scale that can provide a global view of important aspects of an MCO's performance. Forming this scale could be an interim step toward a score card that managed care plans themselves, and those providing plan oversight, could use to assess health plan performance. We also examine the reliability and factorial validity of this scale. The composite score on this scale is a direct measure of 17 health care processes or outcomes in combination, rather than an indirect measure of plan performance based on a survey of beneficiary perceptions. To our knowledge, this is the first study reported in the peer-reviewed literature to examine the results of combining HEDIS® indicators into a composite score, although related work to develop a composite diabetes measure is being done by the Geriatric Measurement Advisory Panel, supported by the National Committee for Quality Assurance under CMS contract. Also, Cleary and Ginsberg (2001), as part of a CMS-funded study, explored the feasibility of combining health plan indicators from HEDIS® and CAHPS® into composite measures.

Background

The Balanced Budget Act of 1997 substantially affected Federal funding of health care. Among its requirements, the Act mandated that CMS establish quality requirements for health plans enrolling Medicare and Medicaid beneficiaries. This legislation has had a significant impact on people with Medicare: as of October 1, 2001,¹ the MMC program served approximately 5.6 million people, about 14 percent of the total Medicare population.

MCOs that participate in Medicare and Medicaid are now required to show evidence of improvement in the services they provide. CMS requires that Medicare MCOs annually conduct a quality assessment and performance improvement project. At the point of remeasurement, following interventions, the project must result in demonstrable and sustained improvement.

Recent evidence suggests that providing performance data to purchasers, regulators, providers, and consumers improves outcomes. Some believe this is the litmus test of performance measurement. Kazandjian and Lied (1998) found that continuous participation in a performance measurement project was associated with significantly lower cesarean section rates among a cohort of 110 hospitals between 1991 and 1996. Lied and Sheingold (2001) analyzed performance trends between 1996 and 1998 for MCOs in the MMC program by considering four measures from the National Committee for Quality Assurance's HEDIS®. Using a cohort analysis at the health plan level, they observed statistically significant improvements in performance rates for all measures. One interpretation of these results is that MCOs and providers are responding positively to information on quality. Reporting performance measures might also be a good business strategy for some organizations. For example, there is some evidence that reporting outcomes data can lead to increased market share and higher charges for high-performing providers. Mukamel and Mushlin (1998) tested the hypothesis that hospitals and surgeons with better outcomes in coronary artery bypass graft (CABG) surgery, as reported in the New York State Cardiac Surgery Reports (Hannan et al., 1994), experience a relative increase in their market share and prices. They found that hospitals and physicians with better outcomes experienced higher rates of growth in market share, and that physicians with better outcomes had higher rates of growth in charges for the CABG procedure. The authors concluded that patients and referring physicians appear to respond to quality information about hospitals and surgeons.

Conceptual Framework

Regulators and others providing health plan oversight often struggle to make sense of performance measures. This limits the utility of the measures for those providing regulatory oversight of MCOs. We believe that this struggle has been due, in large part, to the nearly exclusive focus on individual performance measures and individual statutory or regulatory provisions, creating a somewhat myopic view that has obscured the big picture. We furthermore believe that a more global and comprehensive view might be needed to identify poorly performing health plans as well as health plans performing at high levels.

Some States such as California, Maryland, New Jersey, New York, and Pennsylvania have produced health plan ranking reports, and Newsweek magazine has published annual surveys ranking the Nation's 100 largest health maintenance organizations. However, these published rankings have rarely provided evidence of the scientifically established reliability and validity of their rankings. A CMS-sponsored study by RAND and authored by McGlynn et al. (1999) attempted to assist CMS in designing report cards for Medicare beneficiaries to aid in their choice of managed care plans. RAND looked at various reporting frameworks and recommended that CMS report summary scales in all written materials. They also recommended that CMS use a national benchmark based on optimal national performance. Their recommendations may be worth considering in designing a report card for purchasers since many of the problems of data interpretation and use apply to purchasers as well as consumers.

Currently, CMS conducts oversight and performance evaluation by assessing a contracting health plan's compliance with specific statutory, regulatory, and policy provisions. This oversight methodology is process-oriented and audit-based; it requires that organizations demonstrate through interviews, written documentation, and submission (and subsequent analysis by CMS auditors) of limited process-oriented operations data, such as claims payment and appeals data, that applicable Medicare compliance provisions are met. This process assumes that by meeting specific requirements the company ensures access to quality health care services for its enrolled Medicare beneficiaries and safeguards the Medicare Program from fraud and abuse.

The use of composite scores, derived directly from a number of health care indicators, might improve the assessment of overall MCO performance, especially in terms of the value of the payment dollar and the level of clinical services to the member. The development of composite scores based on outcomes-oriented data sources, such as HEDIS® or CAHPS®, could be an important part of CMS' efforts to move towards an MMC performance assessment program focused on outcomes-based measurement rather than on process-oriented measurement. Having a composite score for each health plan also permits the ranking of organizations for the purpose of deciding the level below which regulatory intervention is warranted. This could be important in an environment where staff, time, and financial resources are limited. By comparing the performance of health plans, a process that can be improved by the development of scales and composite scores, reviewers might have more productive discussions with organizations and could focus on specific areas of performance to learn the basis for reported indicator scores and the process the MCO is using to improve performance. This approach to performance assessment might be less invasive and burdensome for health organizations, and appears consistent with CMS' ongoing efforts to base MMC performance assessment on outcomes-oriented data rather than on process-oriented measurement.

Methodology

Participants

A total of 160 plans out of 179 coordinated care plans as of October 1, 2001, reported HEDIS® 2001 data (for calendar year 2000). These plans enrolled 5,125,702 beneficiaries for calendar year 2000. The mean enrollment per plan was 32,036 beneficiaries with a minimum of 1,061 and a maximum of 411,553 beneficiaries. Only 64 plans (40 percent) reported on all 17 HEDIS® indicators. The mean number of indicators reported per plan was 13.58 and the standard deviation was 3.66. A total of 148 plans (92.5 percent) reported on 9 or more indicators.

We reported at the plan contract level except that in some highly populated areas, contracts were subdivided for reporting purposes into multiple, geographically defined reporting units. In these cases, the HEDIS® data for the multiple reporting units were aggregated to the contract level so that the 160 plans actually represent 160 managed care contracts. The vast majority of these contracts involved MCOs participating in Medicare+Choice.

Measures

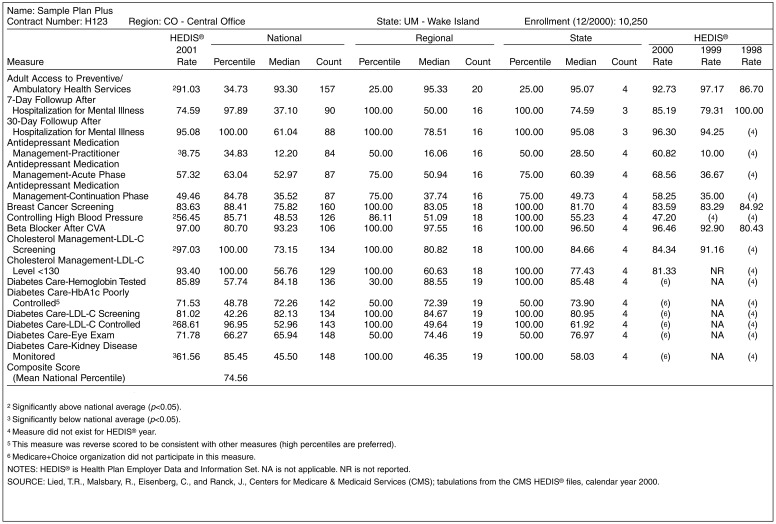

This study used 17 HEDIS® indicators within 8 measures, including both process and outcome measures, from the CMS HEDIS® files (Table 1). Additional HEDIS® indicators are available, but they reflect other domains, such as use of services, that were outside the scope of this study. All of the process and outcome indicators measure the domain of effectiveness of care, with the exception of adult access to preventive/ambulatory health services, which measures access and availability of care. Many of the effectiveness of care indicators appear to capture aspects of effective preventive health care use. The mock HEDIS® score card that we developed (Figure 1) illustrates how these 17 HEDIS® indicators might be used. This score card allows for national, regional, and State comparisons, permits tracking trends within the health plan, and contains overall composite scores.

Table 1. HEDIS® Indicators Descriptive Information: Medicare Managed Care Plans: Calendar Year 2000.

| Indicator | National Reporting Plans | National Mean Rate (Percent) | Standard Deviation | Low-High Rate (Percent) | Correlation with Composite Score |
|---|---|---|---|---|---|
| Adult Access to Preventive/Ambulatory Health Services | 155 | 91.36 | 6.45 | 60.30-100.00 | 0.43 |
| 7-Day Followup for Hospitalization for Mental Illness | 90 | 37.93 | 16.98 | 8.33-88.10 | 0.56 |
| 30-Day Followup for Hospitalization for Mental Illness | 91 | 60.25 | 18.20 | 8.16-95.08 | 0.62 |
| Antidepressant Medication Management-Optimal Contacts | 87 | 12.96 | 7.64 | 1.41-38.64 | 0.14 |
| Effective Acute Phase Treatment of Depression | 90 | 54.15 | 10.72 | 29.49-81.27 | 0.23 |
| Effective Chronic Phase Treatment of Depression | 90 | 37.55 | 11.97 | 6.25-75.76 | 0.38 |
| Breast Cancer Screening | 154 | 74.62 | 9.26 | 30.30-90.83 | 0.72 |
| Controlling High Blood Pressure | 151 | 47.64 | 9.02 | 24.01-70.82 | 0.47 |
| Beta Blocker after Heart Attack | 106 | 90.23 | 10.16 | 39.73-100.00 | 0.64 |
| Cholesterol Management-LDL-C Screening | 124 | 70.88 | 13.30 | 9.09-97.03 | 0.74 |
| Cholesterol Management-LDL-C Level <130 | 120 | 53.71 | 16.83 | 3.03-93.40 | 0.82 |
| Diabetes Care-HbA1C Tested | 153 | 83.40 | 9.58 | 34.66-97.15 | 0.78 |
| Diabetes Care-HbA1C Poorly Controlled¹ | 151 | 67.53 | 17.82 | 1.92-91.97 | 0.78 |
| Diabetes Care-LDL-C Screening | 153 | 80.90 | 10.63 | 25.00-96.59 | 0.58 |
| Diabetes Care-LDL-C Controlled | 151 | 51.87 | 12.29 | 6.45-80.57 | 0.76 |
| Diabetes Care-Eye Exam | 153 | 64.33 | 15.23 | 20.47-92.94 | 0.62 |
| Diabetes Care-Kidney Disease Monitored | 150 | 46.38 | 15.84 | 17.22-94.65 | 0.67 |

¹Item is reverse-scored.

NOTES: HEDIS® is Health Plan Employer Data and Information Set. Except for Antidepressant Medication Management-Optimal Contacts, which was not statistically significant, correlations are significant at the 0.01 level, two-tailed test. Correlations have been corrected for the spurious effects of individual indicators on the composite score.

SOURCE: Lied, T.R., Malsbary, R., Eisenberg, C., and Ranck, J., Centers for Medicare & Medicaid Services (CMS). Authors' tabulations from the CMS HEDIS® files, calendar year 2000.

Figure 1. Mock HEDIS® Score Card.¹

¹This card was created by the author.

Procedures

In developing a scale of HEDIS® indicators, we borrowed from the methods that have been used for decades by many educators and social scientists in constructing composite scores from test batteries and developing a basis for comparing these composite scores with a standard or norm. In our case, data were aggregated for comparison purposes with State, regional, and national averages or “norms.”

For each of the reporting health plans, we converted each of the 17 HEDIS® rates into a State, regional, or national percentile. These converted rates were percentile ranks, theoretically varying from 1 to 100, depending on how a given HEDIS® rate for a particular plan fared against the State, regional, or national averages for that HEDIS® rate. The theoretical average percentile rank was 50. To develop a plan composite score, we averaged the percentile ranks, based on the national sample of plans, for each HEDIS® indicator that a plan reported. We did not apply weights to the indicators, so each indicator was, in effect, self-weighted; thus, those indicators with the greatest variation had the most weighting.
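
The conversion and averaging steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' SPSS procedure: the percentile-rank convention (plans below plus half of those tied, scaled to 0-100), the data layout, the indicator keys, and the function names `percentile_ranks` and `composite_scores` are all hypothetical. Unreported indicators are represented as `None` and skipped, so each plan's composite is the unweighted mean of the indicators it reported, ranked against a single (national) comparison group.

```python
from statistics import mean


def percentile_ranks(rates):
    """Convert {plan: rate or None} into {plan: percentile rank on a 0-100 scale}.

    Assumed convention (hypothetical): 100 * (plans below + 0.5 * plans tied) / n,
    computed only over plans that reported the indicator.
    """
    reported = [r for r in rates.values() if r is not None]
    n = len(reported)
    ranks = {}
    for plan, rate in rates.items():
        if rate is None:
            continue  # plan did not report this indicator
        below = sum(v < rate for v in reported)
        tied = sum(v == rate for v in reported)  # includes the plan itself
        ranks[plan] = 100.0 * (below + 0.5 * tied) / n
    return ranks


def composite_scores(indicator_rates, reverse_scored=()):
    """Map {indicator: {plan: rate or None}} to {plan: unweighted composite}.

    Indicators in reverse_scored (e.g. a "poorly controlled" rate, where lower
    is better) are flipped as 100 - rate before ranking.
    """
    per_plan = {}
    for indicator, rates in indicator_rates.items():
        if indicator in reverse_scored:
            rates = {p: None if r is None else 100.0 - r for p, r in rates.items()}
        for plan, rank in percentile_ranks(rates).items():
            per_plan.setdefault(plan, []).append(rank)
    # Unweighted mean over whatever indicators each plan reported
    return {plan: mean(ranks) for plan, ranks in per_plan.items()}


# Hypothetical rates for three plans; plan B did not report the second indicator
data = {
    "breast_cancer_screening": {"A": 60.0, "B": 75.0, "C": 85.0},
    "hba1c_poorly_controlled": {"A": 20.0, "B": None, "C": 40.0},
}
print(composite_scores(data, reverse_scored={"hba1c_poorly_controlled"}))
```

Under this convention the ranks for any one indicator average exactly 50 across the plans that report it, in line with the theoretical average described above. State or regional norms would require grouping plans before ranking.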

The mean national composite score was 49.36, differing only slightly from the theoretical mean value of 50 due to rounding. Plans markedly deviating from this mean could be considered to be either high overall HEDIS® performers (e.g., those with composite scores above 70) or low overall HEDIS® performers (e.g., those with composite scores below 30) based on the national comparison group.
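
A hypothetical helper for flagging plans might look like the following. The cutoffs of 70 and 30 are the illustrative values given above, and the `min_indicators=9` caution flag reflects the recommendation in the Discussion that composites based on fewer than about 9 or 10 reported indicators be compared with caution. The function name, parameter names, and labels are assumptions for illustration only.

```python
def classify_plan(composite, n_reported, high=70.0, low=30.0, min_indicators=9):
    """Label a plan's composite score relative to the national comparison group.

    Returns (label, caution); caution is True when the composite rests on
    fewer reported indicators than min_indicators.
    """
    if composite > high:
        label = "high performer"
    elif composite < low:
        label = "low performer"
    else:
        label = "typical"
    caution = n_reported < min_indicators
    return label, caution


print(classify_plan(82.5, 17))  # ('high performer', False)
print(classify_plan(82.5, 4))   # ('high performer', True)
print(classify_plan(25.0, 12))  # ('low performer', False)
```

Keeping the cutoffs as parameters reflects that 70 and 30 are examples rather than fixed standards.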

We conducted our statistical analysis using SPSS™ 10.00. We computed descriptive statistics for the indicators and the composite score. We also computed item-total correlations between the indicators and the composite score, adjusting the correlations to remove the effects of individual indicators on the composite score (Henrysson, 1963). Only one indicator, Diabetes Care-HbA1c Poorly Controlled, had a potentially problematic negative correlation, and we dealt with this problem by reverse scoring the item.

The component structure of the scale was analyzed using a principal components analysis with promax rotation, an oblique rotation that allows components to be correlated; we chose it because there were theoretical reasons and supporting literature to posit that various quality components might be related (Lied and Sheingold, 2001). A scree plot was used to help determine the number of components that would provide the most appropriate solution. All 160 reporting plans were included in the analysis. An examination of the scree plot and component matrix suggested that a three-component solution would be the most interpretable. We reran the principal components analysis, setting the maximum number of components at three. We also conducted an analysis of the internal consistency reliability of the scale.

Results

Descriptive and Correlational Analysis

Table 1 contains the number of reporting plans, mean, standard deviation, and minimum and maximum values of the rates (expressed as percentages) for each of the 17 HEDIS® indicators. The adult access to preventive/ambulatory health services indicator had the highest mean national rate, at 91.36 percent. Optimal practitioner contacts for antidepressant medication management had the lowest mean national rate, at 12.96 percent.

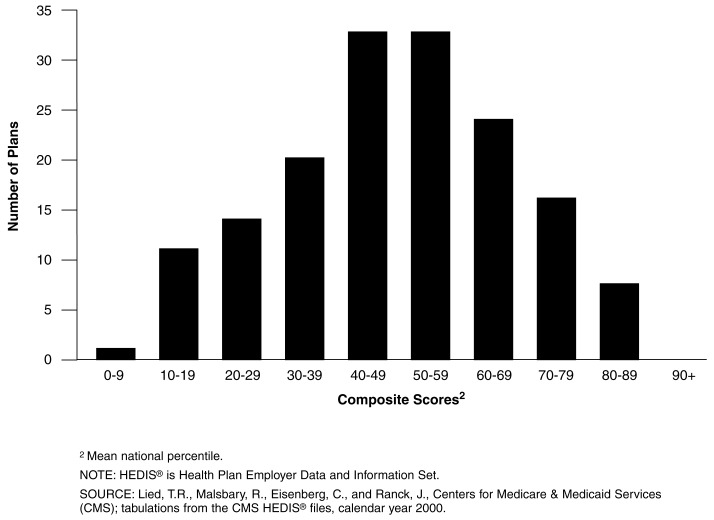

Figure 2 presents the distribution of the composite scores for these 160 plans. The mean composite score was 49.36, and the standard deviation was 18.23. The composite scores were widely dispersed and closely approximated a normal distribution. Twenty-six plans had composite scores below 30, and 22 plans had composite scores above 70. No plan had a composite score above 89.
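
As a rough check on the normality statement, the tail counts can be compared with what a normal curve with the reported mean and standard deviation would predict. This sketch uses only the summary statistics above; treating the composite as exactly normal is an assumption, since the scores are bounded and the counts are small.

```python
from statistics import NormalDist

# Summary statistics reported above for the 160 plans
n_plans, mean_score, sd_score = 160, 49.36, 18.23
dist = NormalDist(mu=mean_score, sigma=sd_score)

# Expected number of plans in each tail if scores were exactly normal
expected_below_30 = n_plans * dist.cdf(30)
expected_above_70 = n_plans * (1 - dist.cdf(70))
print(f"expected below 30: {expected_below_30:.1f} (observed 26)")
print(f"expected above 70: {expected_above_70:.1f} (observed 22)")
```

Under these assumptions the normal curve predicts about 23 plans below 30 and about 21 above 70, slightly fewer than observed in each tail.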

Figure 2. Distribution of HEDIS® Composite Scores of Medicare Managed Care Plans: Calendar Year 2000.¹

¹N = 160.

Table 1 shows the results of our correlational analysis relating HEDIS® indicator results to the scale composite score. Moderate or high correlations between the converted plan indicator rates (each plan's HEDIS® rates were converted to percentiles based on national rankings) and the composite score supported including an indicator in the scale. Adjusted item-scale correlations between HEDIS® indicators and the composite score ranged from a low of 0.14 to a high of 0.82. All correlations except the lowest were statistically significant, and most were moderate to high, supporting the indicators' inclusion in the composite score. The notable exceptions were the three antidepressant medication management indicators, although two of them were significantly related to the composite score.

Internal Consistency Reliability Analysis

Cronbach's coefficient alpha was used to assess internal consistency reliability. Alpha was 0.88 for the 64 plans that reported on all 17 indicators, indicating high internal consistency reliability of the scale.
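
Coefficient alpha compares the summed item variances with the variance of the total score: alpha = k / (k - 1) × (1 - sum of item variances / variance of the total). The sketch below is illustrative only; the data are hypothetical, and dropping plans with any unreported indicator is an assumption chosen to mirror the restriction to the 64 plans that reported all 17 indicators.

```python
from statistics import pvariance


def cronbach_alpha(rows):
    """Coefficient alpha for rows of item scores (one row per plan).

    Rows containing None (an unreported indicator) are dropped, mirroring the
    restriction to plans that reported every indicator.
    """
    complete = [row for row in rows if all(x is not None for x in row)]
    k = len(complete[0])
    item_vars = [pvariance([row[j] for row in complete]) for j in range(k)]
    total_var = pvariance([sum(row) for row in complete])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical percentile ranks; the last plan skipped one indicator
rows = [
    [20, 30, 25],
    [45, 50, 60],
    [55, 40, 50],
    [70, 80, 65],
    [90, 75, 85],
    [10, None, 40],
]
print(round(cronbach_alpha(rows), 3))
```

Any consistent variance estimator works here, because the same divisor appears in the numerator and the denominator of the variance ratio.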

Principal Components Analysis

Using a three-component solution, the three components together explained 59.2 percent of the total scale variance, based on their initial eigenvalues (Table 3). The first component explained 38.34 percent of the variance; the second, 11.20 percent; and the third, 9.69 percent.
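
Because a principal components analysis of p standardized indicators distributes a total variance of p across the components, each component's share is its initial eigenvalue divided by the number of indicators. A worked check using the rounded eigenvalues in Table 3 (p = 17) comes close to the reported percentages; the small differences presumably arise because the eigenvalues are shown to only two decimals:

$$
\begin{aligned}
\text{Percent explained}_k &= 100 \times \frac{\lambda_k}{p}, \qquad p = 17 \\
100 \times 6.52 / 17 &\approx 38.35 \\
100 \times 1.90 / 17 &\approx 11.18 \\
100 \times 1.65 / 17 &\approx 9.71 \\
\text{Cumulative} &\approx 38.35 + 11.18 + 9.71 = 59.24
\end{aligned}
$$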

Table 2 shows the principal components structure matrix. Eight indicators (controlling high blood pressure, cholesterol screening and management, and all five diabetes care indicators) loaded substantially on the first component. We interpreted this component as measuring effective disease management. The adult access indicator, the two indicators for followup after hospitalization for mental illness, breast cancer screening, and, as noted, eye exams for people with diabetes loaded substantially on the second component, although the latter two indicators also loaded on the first component. We interpreted the second component as measuring access to preventive and followup care. The effective acute and chronic phase treatment indicators for depression loaded substantially on the third component, which we called achieving medication compliance in treating depression. The only indicator that did not load substantially on any of the three components was optimal practitioner contacts for medication management of depression. This indicator also had the lowest mean rate of all the indicators (12.96 percent), suggesting several possibilities, including underreporting or a prevalence of inadequate clinical management of new treatment episodes of depression. Table 3 lists the three scale components along with their interpretations, initial eigenvalues, and percentages of explained variance.

Table 2. HEDIS® Indicators Principal Components Structure Matrix: Calendar Year 2000.

| HEDIS® Indicator | Component 1 Loading | Component 2 Loading | Component 3 Loading |
|---|---|---|---|
| Adult Access to Preventive/Ambulatory Health Services | 0.34 | 0.55 | 0.03 |
| 7-Day Followup for Hospitalization for Mental Illness | 0.32 | 0.84 | 0.10 |
| 30-Day Followup for Hospitalization for Mental Illness | 0.39 | 0.89 | 0.15 |
| Antidepressant Medication Management-Optimal Contacts | 0.03 | 0.16 | 0.05 |
| Effective Acute Phase Treatment of Depression | 0.06 | 0.05 | 0.91 |
| Effective Chronic Phase Treatment of Depression | 0.19 | 0.22 | 0.93 |
| Breast Cancer Screening | 0.62 | 0.71 | 0.24 |
| Controlling High Blood Pressure | 0.53 | 0.23 | -0.10 |
| Beta Blocker after Heart Attack | 0.59 | 0.60 | 0.16 |
| Cholesterol Management-LDL-C Screening | 0.84 | 0.38 | 0.09 |
| Cholesterol Management-LDL-C Level <130 | 0.87 | 0.50 | 0.25 |
| Diabetes Care-HbA1C Tested | 0.79 | 0.52 | 0.21 |
| Diabetes Care-HbA1c Poorly Controlled¹ | 0.82 | 0.40 | 0.37 |
| Diabetes Care-LDL-C Screening | 0.71 | 0.17 | -0.05 |
| Diabetes Care-LDL-C Controlled | 0.80 | 0.41 | 0.41 |
| Diabetes Care-Eye Exam | 0.51 | 0.67 | 0.29 |
| Diabetes Care-Kidney Disease Monitored | 0.69 | 0.46 | 0.07 |

¹Indicator is reverse-scored.

NOTES: Components are: (1) effective disease management, (2) access to preventive and followup care, and (3) achieving medication compliance in treating depression. HEDIS® is Health Plan Employer Data and Information Set. Extraction method: Principal component analysis. Rotation method: Promax with Kaiser normalization.

SOURCE: Lied, T.R., Malsbary, R., Eisenberg, C., and Ranck, J., Centers for Medicare & Medicaid Services (CMS); tabulations from the CMS HEDIS® files, calendar year 2000.

Table 3. Total Scale Variance Explained, by Scale Components: Calendar Year 2000.

| Component | Interpretation of Component | Initial Eigenvalue | Variance Explained (Percent) | Cumulative Variance Explained (Percent) |
|---|---|---|---|---|
| 1 | Effective Disease Management | 6.52 | 38.34 | 38.34 |
| 2 | Access to Preventive and Followup Care | 1.90 | 11.20 | 49.54 |
| 3 | Achieving Medication Compliance in Treating Depression | 1.65 | 9.69 | 59.23 |

NOTES: HEDIS® is Health Plan Employer Data and Information Set. Extraction method: Principal components analysis. Rotation method: Promax with Kaiser normalization.

SOURCE: Lied, T.R., Malsbary, R., Eisenberg, C., and Ranck, J., Centers for Medicare & Medicaid Services (CMS); tabulations from the CMS HEDIS® files, calendar year 2000.

Discussion

While CMS has been reporting HEDIS® rates for its managed care program since 1997, it has not developed a score card or any other tool that allows plans to be assessed in a comprehensive, comparative manner using process or outcomes data. CAHPS® has several composite scores that improve its usefulness as a measure of consumer perceptions, and it is significantly related to some HEDIS® indicators (Schneider et al., 2001), but it does not directly measure processes or outcomes of care as HEDIS® does.

Until recently, process and outcomes indicators like those contained in HEDIS® have rarely been considered amenable to being combined into a scale, even though combining indicators could allow for a more comprehensive approach to measurement. Our study suggests that a number of HEDIS® indicators can be combined to form a scale that is reliable (internally consistent) and provides some evidence of factorial validity. The Cronbach coefficient alpha, a measure of internal consistency reliability, was 0.88 for the 17-item scale, suggesting that the scale was highly internally consistent. Composite scores of health plans on this scale are approximately normally distributed, and there is considerable variability among health plans, both in the individual indicators and in the composite scores. This suggests that the scale has statistical properties that may make it useful as a measure of interplan variability in HEDIS® performance. In the principal components analysis, we found that the three-component solution was the most readily interpreted. We called the first component effective disease management; 8 of the 17 HEDIS® indicators loaded substantially on this component. Five indicators loaded substantially on the second component, which we termed access to preventive and followup care, but two of those indicators, breast cancer screening and beta blocker treatment after heart attack, also loaded on the first component. Two indicators loaded substantially on the third component, which we called achieving medication compliance in treating depression.

An argument could have been made for a single-component solution based on the variance explained by the first component (38 percent) compared with the variance explained by the other two components together (21 percent). The three-component solution, however, seemed to fit the data better than a solution accounting for only 38 percent of the scale variance. By choosing a three-component solution, we are suggesting that it may be useful to consider developing three composite scores rather than a single composite score for these HEDIS® indicators. Alternatively, future versions of this scale might be purer if some of the indicators were dropped. One indicator that we would recommend dropping from the scale as it currently exists is Antidepressant Medication Management-Optimal Contacts. This indicator was not significantly related to the composite score and had low loadings on all three components.

Our first attempt at developing and validating a new measurement approach for HEDIS® indicators has several limitations. First, only 64 out of 160 health plans reported on all 17 HEDIS® indicators, although 148 plans (92.5 percent) reported on 9 or more indicators. These missing data could limit the utility of the composite score for interplan comparisons, even though the composite score was an average, not a sum, of the reported indicators. For example, if a plan reports on only a few indicators, high performance on one or two indicators can mask poor performance on other indicators. We, therefore, recommend that interplan comparisons be conducted with caution if composite scores are used and the number of reported indicators for a given plan is less than 9 or 10. Second, we did not attempt to determine whether our scale could be improved by weighting the indicators, which could increase the scale's validity. Weighting indicators was beyond the scope of this first study, and is a topic that is sufficiently complex to merit a separate research study. A third limitation is that, except for our principal components analysis, we did not investigate construct validity.

We anticipate that future efforts will examine the relationships between the HEDIS® composite score and other performance measures to provide evidence for or against construct validity. Future research should also look at improving the validity of the composite score by excluding indicators with low correlations with the composite score and, perhaps, by including additional indicators. In particular, future research should examine the relationship between HEDIS® composite scores and other performance measures such as CAHPS® composite scores and overall ratings, voluntary disenrollment rates, ambulatory care sensitive condition indices, and, perhaps, HOS, risk-adjustment scores, and appeals data.

This study was not designed to answer policy questions but rather to begin the steps of providing a valid tool that can serve both regulators and care providers. We believe that this reporting framework for HEDIS® process and outcomes measurement could have a positive impact on MCOs' ability to evaluate their own performance. By comparing their composite scores against those of other managed care plans, and by drilling down to compare themselves with others on individual indicators, health care organizations can better assess how they compare with a national sample of their peers. Comparative data allow organizations to establish benchmarks for improvement, not only in specific processes and outcomes but also in an overall sense. A HEDIS® composite score could also make these organizations better informed and more aware of whether their targeted improvement efforts are truly effective or are leaving non-targeted areas more vulnerable to performance declines. Ultimately, this approach could reduce burden for MCOs and improve quality of care.

Acknowledgments

The authors would like to express their appreciation to Cynthia Tudor and Robert Donnelly for their helpful comments on this article.

Footnotes

The authors are with the Centers for Medicare & Medicaid Services (CMS). The views expressed in this article are those of the authors and do not necessarily reflect the views of CMS.

¹MMC contract report, Internet address: http://www.hcfa.gov/stats/monthly.htm

Reprint Requests: Terry R. Lied, Ph.D., Centers for Medicare & Medicaid Services, C4-13-01, 7500 Security Boulevard, Baltimore, MD 21244-1850. E-mail: tlied@cms.hhs.gov

References

- Bailit Health Purchasing, LLC. Assessment of the Medicare Managed Care Compliance Monitoring Program. Nov 4, 1997a. Report 1. Unpublished report.

- Bailit Health Purchasing, LLC. Review of the Managed Care Contractor Compliance Monitoring Programs of Major U.S. Health Care Purchasers. Nov 12, 1997b. Unpublished report.

- Cleary PD, Ginsberg C. Research on Methods to Combine Performance Indicators Into Simpler Composite Measures. Centers for Medicare and Medicaid Services; Baltimore, MD: Sep 15, 2001. Final Report.

- Epstein AM. Rolling Down the Runway: The Challenges Ahead for Quality Report Cards. Journal of the American Medical Association. 1998 Jun;279(21):1691–1696. doi: 10.1001/jama.279.21.1691.

- Ginsberg C, Sheridan S. Limitations of and Barriers to Using Performance Measurement: Purchaser's Perspectives. Health Care Financing Review. 2001 Spring;22(3):49–57.

- Goldstein E. CMS's Consumer Information Efforts. Health Care Financing Review. 2001 Fall;23(1):1–4.

- Hannan EL, Kumar D, Racz M, et al. New York State's Cardiac Surgery Reporting System: Four Years Later. Annals of Thoracic Surgery. 1994;58(6):1852–1857. doi: 10.1016/0003-4975(94)91726-4.

- Henrysson S. Correction of Item-Total Correlations in Item Analysis. Psychometrika. 1963;28:211–218.

- Kazandjian VA, Lied TR. Cesarean Section Rates: Effects of Participation in a Performance Measurement Project. The Joint Commission Journal on Quality Improvement. 1998 Apr;24(4):187–196. doi: 10.1016/s1070-3241(16)30371-6.

- Lied TR, Sheingold S. HEDIS® Performance Trends in Medicare Managed Care. Health Care Financing Review. 2001 Fall;23(1):149–160.

- Maxwell J, Briscoe F, Davidson S, et al. Managed Competition in Practice: Value Purchasing by Fourteen Employers. Health Affairs. 1998 May-Jun;17(3):216–226. doi: 10.1377/hlthaff.17.3.216.

- McCormack LA, Garfinkel SA, Hibbard JH, et al. Beneficiary Survey-Based Feedback on New Medicare Information Materials. Health Care Financing Review. 2001 Fall;23(1):21–35.

- McGlynn EA, Adams J, Hicks J, Klein D. Developing Health Plan Performance Reports: Responding to the BBA. Aug 1999. RAND DRU-2122-HCFA.

- Mukamel DB, Mushlin AI. Quality of Care Information: An Analysis of Market Share and Price Changes after Publication of the New York State Cardiac Surgery Reports. Medical Care. 1998 Jul;36(7):945–954. doi: 10.1097/00005650-199807000-00002.

- Office of the Inspector General. Medicare's Oversight of Managed Care: Implications for Regional Staffing. U.S. Department of Health and Human Services; Washington, DC: 1997a. Report OEI-01-96-00191.

- Office of the Inspector General. Medicare's Oversight of Managed Care: Monitoring Plan Performance. U.S. Department of Health and Human Services; Washington, DC: 1997b. Report OEI-01-96-00190.

- Schneider EC, Zaslavsky AM, Landon BE, et al. National Monitoring of Medicare Health Plans: The Relationship Between Enrollees' Reports and Quality of Clinical Care. Medical Care. 2001 Dec;39(12):1313–1325. doi: 10.1097/00005650-200112000-00007.

- Sheingold S, Lied TR. An Overview: The Future of Plan Performance Measurement. Health Care Financing Review. 2001 Spring;22(3):1–5.

- U.S. General Accounting Office. Medicare: Increased HMO Oversight Could Improve Quality and Access to Care. U.S. General Accounting Office; Washington, DC: Aug 1995. GAO/HEHS-95-229.

- U.S. General Accounting Office. Medicare Contractors: Despite Its Efforts, HCFA Cannot Ensure Their Effectiveness or Integrity. U.S. General Accounting Office; Washington, DC: Jul 1999a. GAO/HEHS-99-115.

- U.S. General Accounting Office. Medicare Managed Care: Greater Oversight Needed to Protect Beneficiary Rights. U.S. General Accounting Office; Washington, DC: Apr 1999b. GAO/HEHS-99-68.

- Zema CL, Rogers L. Evidence of Innovative Uses of Performance Measures Among Purchasers. Health Care Financing Review. 2001 Spring;22(3):35–47.