Abstract

Senior hospital executives responding to a 2005 national telephone survey conducted for the Centers for Medicare & Medicaid Services (CMS) report that Hospital Compare and other public reports on hospital quality measures have helped to focus hospital leadership attention on quality matters. They also report increased investment in quality improvement (QI) projects and in the people and systems needed to improve documentation of care. In addition, hospitals are giving more consideration to best practice guidelines and sharing quality measure results more widely among hospital staff. Large hospitals accredited by the Joint Commission on Accreditation of Healthcare Organizations (JCAHO) appear to be responding to public reporting efforts more consistently than small, non-JCAHO accredited hospitals.

Introduction

The development of standard health care quality measures, and of systems for reporting them to the public, is rooted in two factors: (1) growing awareness of recent research indicating that patients receive only about one-half of the diagnostic tests and treatments they should receive according to professional guidelines (McGlynn et al., 2003) and (2) the loss of at least 44,000 lives each year to health care errors (Institute of Medicine, 2000). Many public reporting systems have been developed exclusively for hospitals; the Delmarva Foundation for Medical Care and JCAHO recently reviewed 51 such systems (Shearer and Cronin, 2005). The State and Federal agencies, business coalitions, accrediting agencies, and health care provider organizations that created the earliest measures and reporting systems assumed that, rather than taking a heavy-handed regulatory approach to motivate providers to improve care, they could improve health care quality by relying on three forces: market pressure from individual consumers and other purchasers of health care, providers' own interest in upholding their reputations, and providers' desire to avoid the legal exposure that low quality scores could create (Devers, Pham, and Liu, 2004).

Studies on hospital quality reporting from the late 1980s through the mid-1990s, however, suggested that public reporting of hospital mortality or complication rates was not especially effective in motivating providers to implement QI programs or in encouraging consumers to seek out higher-quality providers (Luce et al., 1996; Berwick and Wald, 1990; Vladeck et al., 1988). More recent evidence from State- and local-level initiatives and from the Community Tracking Study (CTS)1 is beginning to suggest that the subsequent generation of quality reports, which often include process measures, has been more useful to hospitals in shaping QI programs, although these reports still do not appear to affect hospital market shares to any large degree. Studies of public reporting of surgical care quality indices in New York and California noted that, while some hospital administrators were critical of the timeliness of the reporting and of the indicators reported, most believed that the reporting systems were generally accurate in describing their hospital's performance and were useful in shaping their QI efforts (Romano, Rainwater, and Antonius, 1999; Chassin, 2002). A Pennsylvania program to publicize quality and cost indices for cardiac surgeons and hospitals prompted positive changes in patient care and physician recruiting practices (Bentley and Nash, 1998). In Madison, Wisconsin, hospitals scoring low in a public report on hospital quality implemented a larger number of internal QI activities, though their market share did not significantly decrease after publication of the negative findings (Hibbard, Stockard, and Tusler, 2005). The CTS qualitatively assessed hospital responses to quality reporting programs in the largest hospitals in a small number of urban communities and found responses similar to those reported in this article (Pham, Coughlan, and O'Malley, 2006).
Our study supplements this body of research by providing the first nationally representative information about hospitals' operational responses to public reporting in general, and to CMS' Hospital Compare public report in particular.

On April 1, 2005, CMS and the Hospital Quality Alliance, led by the American Hospital Association, the Association of American Medical Colleges, and the Federation of American Hospitals, launched the Hospital Compare Web site for consumers. Hospital Compare is by far the largest hospital public reporting system. It provides information on aspects of quality of care for approximately 4,200 short-term acute care hospitals that voluntarily report their scores on some or all of the system's quality measures.2 About 90 percent of the reporting facilities are acute care hospitals and 10 percent are critical access hospitals (CAHs). The quality measures in Hospital Compare cover four clinical areas: heart attack, congestive heart failure, pneumonia, and surgical infection prevention.

The study documented in this article draws on a recent national survey of hospital leadership staff that examined how hospitals have responded in general to the call to publicly report on the quality of care they deliver and, in particular, to the new Hospital Compare Web site. This article explains how participation in public reporting programs has helped to spur changes in four areas: the attention that management gives to quality; internal QI programs and documentation efforts; the level and type of staff effort devoted to QI; and quality scores.

Data and Methods

The data for the study come from a national telephone survey of senior hospital executives (typically the vice president of medical affairs or the chief medical officer) and directors of hospital QI departments, administered by Mathematica Policy Research, Inc. (MPR), in summer 2005. The initial survey sample of 800 hospitals was a stratified national probability sample of short-term acute-care general hospitals and CAHs in the 50 States and the District of Columbia that submitted quality data to Hospital Compare in 2005. The sampling frame was constructed by merging the American Hospital Association's 2003 Annual Survey database with CMS' 2005 Hospital Compare database to identify a total of 3,856 relevant U.S. hospitals for the survey. This sampling frame represents approximately 87 percent of all acute care hospitals and CAHs.3

The sampling process ensured that the sample was representative of all such hospitals on three dimensions: number of beds (1-99, 100-299, or 300 or more), JCAHO accreditation status, and participation in the Premier Hospital Quality Incentive Demonstration (HQID).4 Within each stratum defined by these three variables, hospitals were selected with equal probability. In some strata all hospitals were selected, and in others as few as 10 percent were selected. Hospital bed size was chosen as a stratification variable because of policy interest in both ends of the size spectrum: small hospitals are more likely to be rural hospitals, CAHs, and sole community hospitals, whereas large hospitals are more likely to be academic medical centers and to treat a large proportion of Medicare beneficiaries. Because Hospital Compare measures are aligned with JCAHO and HQID program measures, accredited or HQID-participating hospitals were expected to face less additional burden from Hospital Compare data submission, since they were already reporting similar measures for the JCAHO and HQID programs.
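Because hospitals were selected with equal probability within each stratum, every sampled hospital in a stratum carries the same selection (base) weight, the inverse of its selection probability. The minimal sketch below illustrates that relationship. The stratum labels and counts are hypothetical placeholders (the article does not report the actual allocation), and the class name `Stratum` is ours, not the study's.

```python
from dataclasses import dataclass


@dataclass
class Stratum:
    """One hypothetical sampling stratum (a bed size x JCAHO x HQID cell)."""
    name: str
    frame_count: int   # hospitals in the sampling frame for this stratum (N_h)
    sample_count: int  # hospitals selected from this stratum (n_h)

    def selection_probability(self) -> float:
        # Equal-probability selection within the stratum: n_h / N_h
        return self.sample_count / self.frame_count

    def base_weight(self) -> float:
        # Each selected hospital stands in for N_h / n_h frame hospitals
        return self.frame_count / self.sample_count


# Hypothetical strata for illustration only (not the study's counts)
strata = [
    Stratum("300+ beds, JCAHO, HQID", frame_count=40, sample_count=40),          # take-all stratum
    Stratum("1-99 beds, non-JCAHO, no HQID", frame_count=900, sample_count=90),  # 10 percent sampled
]

for s in strata:
    print(f"{s.name}: p={s.selection_probability():.2f}, weight={s.base_weight():.1f}")
```

In a take-all stratum the weight is 1, while a stratum sampled at 10 percent gives each selected hospital a weight of 10; summing the weights within a stratum recovers its frame count.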

MPR's telephone survey center administered separate surveys to hospital QI directors and to senior hospital executives. Most interviews were conducted by computer-assisted telephone interviewing, with mail followup for respondents who preferred to complete a hard-copy questionnaire. Interviews with QI directors lasted approximately 40 minutes, and interviews with senior executives lasted about 30 minutes.

Of the 800 hospitals selected for the survey, 664 QI directors and 650 senior executives provided complete interview data. For QI directors, this represented an unweighted response rate of 98 percent and a weighted response rate of 95 percent (using the sample selection weight); for senior executives, this represented a 96 percent unweighted response rate and an 89 percent weighted response rate.5 There was substantial overlap in hospital affiliation between the QI director and senior executive interviews. The high response rates for both types of respondents, and the general consistency of their responses to similar questions in the two surveys, increase our confidence in the reliability of the survey findings. Before the interview, each respondent was assured that his or her responses would remain strictly confidential and that only statistical totals would be reported. No remuneration was provided to respondents.
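The gap between unweighted and weighted response rates arises because nonresponding hospitals do not all carry the same selection weight. The article does not state its exact response-rate formula; the sketch below assumes the simple convention of dividing completes by all sampled cases, either counting cases or summing their selection weights, and uses hypothetical data in which one hospital from a sparsely sampled stratum does not respond.

```python
def response_rates(cases):
    """Return (unweighted, weighted) response rates.

    cases: list of (selection_weight, responded) pairs, one per sampled hospital.
    Assumed convention: completes divided by all sampled cases; the weighted
    version sums selection weights instead of counting cases.
    """
    if not cases:
        raise ValueError("no sampled cases")
    unweighted = sum(1 for _, responded in cases if responded) / len(cases)
    total_weight = sum(weight for weight, _ in cases)
    responding_weight = sum(weight for weight, responded in cases if responded)
    return unweighted, responding_weight / total_weight


# Hypothetical sample: 18 take-all hospitals (weight 1) that all respond,
# plus 2 hospitals from a 10-percent stratum (weight 10), one of which does not.
sample = [(1.0, True)] * 18 + [(10.0, True), (10.0, False)]
unweighted, weighted = response_rates(sample)
print(f"unweighted={unweighted:.3f}, weighted={weighted:.3f}")  # prints: unweighted=0.950, weighted=0.737
```

A single nonresponse in a heavily weighted stratum lowers the weighted rate far more than the unweighted one, which is one way weighted rates can end up below unweighted rates.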

Unless noted otherwise, all descriptive statistics presented in this article are based on weighted survey responses and represent all acute care hospitals and CAHs nationally. Selected point estimates of population proportions (those that are very low and may not differ from zero, and those based on a small subgroup of respondents) are followed by the 95 percent confidence interval in parentheses. For proportions far from 50 percent, these confidence intervals are not expected to be symmetric around the point estimate.
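The asymmetry is easy to see with a standard interval for a binomial proportion. The article does not say which interval method it used, and its intervals presumably also reflect the survey weights and stratified design; the sketch below instead uses the Wilson score interval under simple random sampling, purely to illustrate why an interval around a small proportion stretches farther above the estimate than below it. The counts are hypothetical.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.959964) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (assumes simple random sampling).

    z = 1.959964 gives a two-sided 95 percent interval.
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p_hat = successes / n
    denom = 1 + z**2 / n
    # The interval's center is pulled from p_hat toward 0.5, which creates the asymmetry
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2))
    return center - half_width, center + half_width


# Hypothetical: 2 of 100 respondents answer "yes" (point estimate 2 percent)
low, high = wilson_interval(2, 100)
print(f"2.0 percent ({100 * low:.1f}-{100 * high:.1f} percent)")
```

For this example the upper limit lies more than three times as far from the estimate as the lower limit does, whereas at 50 percent the Wilson interval is symmetric.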

In addition to reporting overall results, we used descriptive statistics to compare responses from three groups: hospitals that are large (300 or more beds) and JCAHO accredited; hospitals that are small (1-99 beds) and non-JCAHO accredited; and other hospitals that fall into neither group (most have 100-299 beds). Because hospital size and JCAHO accreditation are likely to affect hospitals' views of, and resources devoted to, quality reporting and QI efforts, we expected to see the widest differences in survey responses between the first two subgroups. Ideally, the effects of bed size and JCAHO accreditation, which are highly correlated, would be disentangled through multivariate analysis, but such analysis was beyond the scope of our study.6 Large, JCAHO accredited hospitals accounted for 26 percent of both the senior executive and QI director final samples, with 171 completed senior executive interviews (a 97 percent response rate) and 172 completed QI director interviews (a 98 percent response rate). Small, non-JCAHO accredited hospitals accounted for 21 percent of both final samples, with 133 completed senior executive interviews (a 96 percent response rate) and 140 completed QI director interviews (a 97 percent response rate). The remaining hospitals accounted for 53 percent of both final samples, with 334 completed senior executive interviews (a 95 percent response rate) and 341 completed QI director interviews (a 98 percent response rate). Where differences among these hospital subgroups are described, chi-square tests or t-tests, as appropriate, indicated a statistically significant difference at the 95 percent confidence level unless otherwise noted.
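For a yes/no survey item compared across the three hospital subgroups, the chi-square test is a test of independence on a 2 x 3 table. The sketch below computes the unweighted Pearson statistic on hypothetical counts. It ignores the survey weights and stratified design, which a design-based variant (for example, a Rao-Scott adjustment) would take into account, and the article does not specify which variant was used. With (2 - 1)(3 - 1) = 2 degrees of freedom, the chi-square tail probability has the closed form exp(-x/2), so no statistics library is needed.

```python
import math


def chi_square_2x3(yes: list[int], no: list[int]) -> tuple[float, float]:
    """Pearson chi-square test of independence for a 2 x 3 table.

    yes[j], no[j]: counts of "yes" and "no" answers in subgroup j.
    With 2 rows and 3 columns, df = 2, and the chi-square survival
    function for 2 df is exactly exp(-x / 2).
    """
    if len(yes) != 3 or len(no) != 3:
        raise ValueError("expected exactly three subgroups")
    col_totals = [y + n for y, n in zip(yes, no)]
    row_totals = [sum(yes), sum(no)]
    grand_total = sum(col_totals)
    stat = 0.0
    for row_total, observed_row in zip(row_totals, (yes, no)):
        for col_total, observed in zip(col_totals, observed_row):
            # Expected count if the answer were independent of subgroup
            expected = row_total * col_total / grand_total
            stat += (observed - expected) ** 2 / expected
    p_value = math.exp(-stat / 2)
    return stat, p_value


# Hypothetical yes/no counts for (large JCAHO, small non-JCAHO, other) hospitals
stat, p = chi_square_2x3(yes=[80, 50, 160], no=[20, 30, 40])
print(f"chi-square = {stat:.2f}, df = 2, p = {p:.4f}")
```

A p-value below 0.05 corresponds to the "statistically significant at the 95 percent level" criterion used in the text.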

Because hospitals could qualify for our survey by submitting any data to Hospital Compare, additional information about their level of involvement is needed to interpret the survey results. According to the survey, almost all QI directors (95 percent) worked in hospitals that voluntarily submit data for all 10 measures in the starter set, which covers the combined clinical areas of heart attack, heart failure, and pneumonia. Hospitals that submit data for these 10 measures receive the full annual Medicare payment update.7 Forty-four percent (39-49 percent) of QI directors were in hospitals that voluntarily submit data on additional Hospital Compare measures in these three areas, and 25 percent (18-32 percent) were in hospitals that submit data in the fourth measurement area, surgical infection prevention.

Our study's main limitation is its reliance on hospital executives' self-reports to document the impacts of public reporting on hospitals. Aware that CMS was sponsoring the study, respondents may have either exaggerated or tempered some of their answers. To mitigate this problem, the survey questions were designed to probe changes in hospital activities in some detail, getting at the components of an organization's response in multiple ways rather than relying on general questions that are more vulnerable to exaggerated responses. Also, as previously noted, our survey had very high response rates, mostly in the mid-to-upper 90-percent range. Hospital leaders were clearly eager to talk about their experience with public reporting and pleased that CMS was seeking their feedback through a survey; this eagerness, reflected in the high response rates, may well stem from the intensive efforts they report. Further, our results are qualitatively consistent with findings from the CTS based on in-person interviews with executives from selected large hospitals (Pham, Coughlan, and O'Malley, 2006). Responses to several questions that were fairly critical of aspects of Hospital Compare lend further evidence that respondents were not limiting themselves to positive feedback for CMS. For example, QI directors were quite willing to describe navigation of the QualityNet Web site as difficult (32 percent),8 and one-third (33 percent) felt that the overall process of collecting and submitting Hospital Compare data to CMS was somewhat or very difficult. Additionally, 72 percent of QI directors and 86 percent of senior executives believed that Hospital Compare data represent the hospital's quality performance for the measured conditions only somewhat accurately or not at all accurately.
Given this level of open criticism of the survey's sponsor, there seems to be less reason to suspect the responses to other portions of the survey.

Findings

Public Reaction to Hospital Reporting

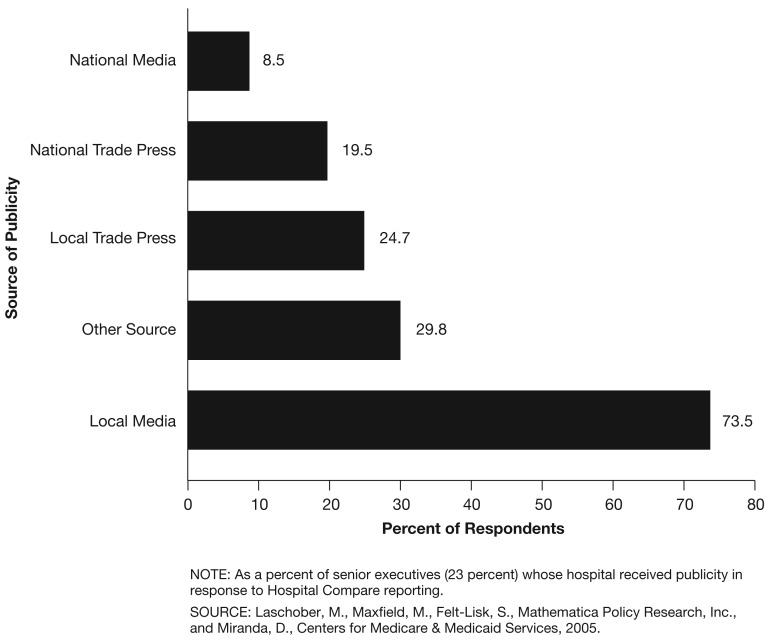

Hospital Compare appears to have received little public attention beyond hospitals themselves. Fewer than one-quarter of senior executives (23 percent) and QI directors (22 percent) were in hospitals that received any external publicity or other attention as a result of the quality data published on Hospital Compare. Among QI directors in hospitals that received such publicity, fewer than one-half (44 percent [34-55 percent]) perceived it as positive, while 30 percent (21-41 percent) considered it neutral and 26 percent (16-39 percent) perceived it as negative. According to senior executives, by far the most common source of publicity was local, rather than national, print or broadcast media: of those in hospitals that received publicity related to Hospital Compare, 74 percent (59-84 percent) received it from local media, while only 20 percent (13-28 percent) and 9 percent (5-15 percent) received it from the national trade press and the national media, respectively (Figure 1).

Figure 1. Source of Publicity or Attention Related to Hospital Compare Data.

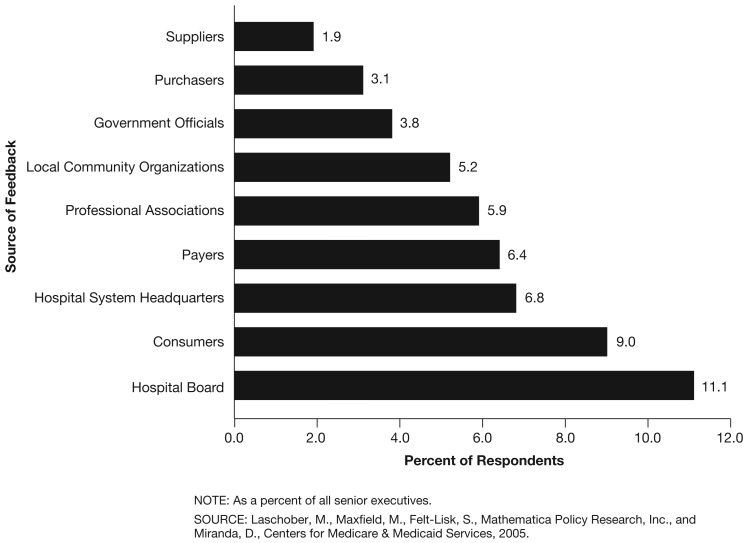

Approximately 15 percent (12-18 percent) of senior executives received feedback other than publicity as a result of the publication of their hospital's quality data (Figure 2). Only 9 percent of all senior executives received comments on their Hospital Compare data from individual consumers. A higher percentage, however, received feedback from sources inside their hospital: 18 percent heard from their board of directors or from senior staff in their hospital system's corporate office. Hospitals heard comments on Hospital Compare even less often from organizations outside the hospital, such as local community organizations (5 percent), professional provider associations (6 percent), or third-party payers (6 percent).

Figure 2. Sources of Feedback Other Than Publicity on Hospital Compare Reports.

Internal Reaction to Public Reporting

Internal Distribution of Hospital Compare Data

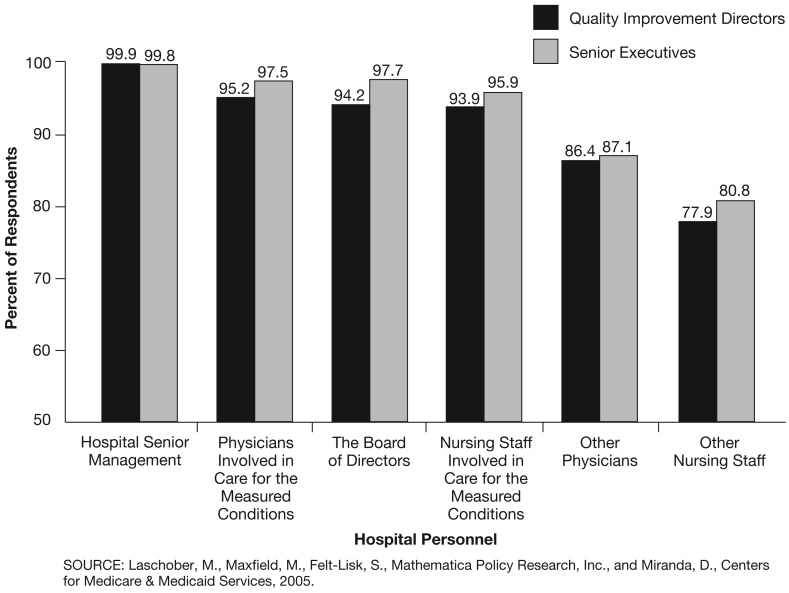

In contrast to the limited external reaction to Hospital Compare reporting, internal use of publicly reported data was much more prevalent. Beyond feedback from internal hospital leadership, an important indicator of the potential impact of Hospital Compare data is how widely the data are distributed among hospital management and staff. Nearly all senior executives (96 percent) and QI directors (94 percent) work in hospitals that routinely share these data internally. Virtually all of these hospitals share the data quarterly with senior management, and nearly all share it with the physicians and nursing staff who care for patients with the measured conditions and with the hospital's board (Figure 3). Slightly lower percentages share the data with other physicians and nursing staff (Figure 3). About one-fifth of hospitals (19 percent of senior executives and 20 percent of QI directors) also routinely distribute the data to regular and ancillary staff (e.g., laboratory and radiology).

Figure 3. Frequency of Sharing of Hospital Compare Data with Hospital Management and Staff.

Senior executives believe that routinely distributing Hospital Compare data among management and staff has a number of desirable effects. Nearly all work in hospitals where staff are more aware of best practice guidelines (97 percent) and more inclined to comply with them (96 percent), where hospital processes better support staff in meeting these guidelines (95 percent), and where care overall is better documented (89 percent) (Table 1). Fifty-nine percent of QI directors are in hospitals that go a step further and generate physician-level data from the Hospital Compare measures; virtually all of these QI directors consider this a very important step (80 percent) or a somewhat important step (20 percent) toward better hospital performance. However, 56 percent (50-62 percent) of these QI directors said that the practice constitutes a major burden for their hospitals. Small, non-JCAHO accredited hospitals were less likely than others to generate physician-level data (47 percent, compared with 68 percent of large, JCAHO accredited hospitals and 65 percent of other hospitals).

Table 1. Impacts of Routine Sharing of Hospital Compare Data with Hospital Management and Staff.

| Impact of Sharing Hospital Compare Data | Percent of Senior Executives Reporting Impact |
|---|---|
| Heightened Awareness of Guidelines Among Staff | 97.2 |
| Improved Hospital Processes to Create Better Support for Meeting Guidelines (e.g., Patient Chart Reminders) | 94.8 |
| Improved Staff Documentation of Procedures | 88.9 |
| Staff Practices that are More Consistent with Guidelines | 96.2 |

NOTE: As a percent of senior executives (95 percent) whose hospital regularly shares Hospital Compare data with management and staff.

SOURCE: Laschober, M., Maxfield, M., Felt-Lisk, S., Mathematica Policy Research, Inc., and Miranda, D., Centers for Medicare & Medicaid Services, 2005.

Impact on Hospital Leadership's Attention to Quality

The presentation of Hospital Compare and other publicly reported hospital measures to the hospital's senior management and staff has clearly directed more of their attention to quality of care issues. A large portion of senior executives (87 percent) and an even larger portion of QI directors (93 percent) worked in hospitals where CEOs and other top leaders were paying more attention to hospital quality or understanding more about quality performance over the past 2 years. Extremely few worked in hospitals where leadership's attention to these issues had declined over the 2-year period (1.8 percent [0.3-10.4 percent] of senior executives; 0.7 percent [0.3-1.8 percent] of QI directors). This finding did not vary significantly by hospital size or JCAHO accreditation status. Many hospital executives asserted that their hospital's participation in Hospital Compare in particular played a major role in drawing more leadership attention to quality (62 percent of senior executives; 55 percent of QI directors).

Several indicators signal a rise in attention to quality. For instance, a very high percentage of senior executives (86 percent) and QI directors (82 percent) worked in hospitals where staff requested quality performance information more often than in the past. These hospitals also reported more discussion of quality performance in strategic planning meetings than in the past, and a larger group of hospital staff devoting attention to QI efforts (Table 2). Although QI directors and senior executives were interviewed separately at most hospitals, their responses to these questions, as to most others in the survey, were remarkably similar.

Table 2. Indicators of Increased Attention/Knowledge of Quality Among Hospital's Senior Management and Staff.

| Quality Attention Indicator (Percent Responding Yes) | Senior Executives | QI Directors: All | QI Directors: Large, JCAHO Accredited | QI Directors: Small, Non-JCAHO Accredited | QI Directors: Others |
|---|---|---|---|---|---|
| More Frequent Internal Requests for Information about Quality Performance¹ | 85.8 | 82.2 | 87.2 | 70.3 | 83.7 |
| Hospital Management with More Frequent Requests: | | | | | |
| Medical Staff Leadership¹ | 88.2 | 88.1 | 91.9 | 75.7 | 89.5 |
| Other Physicians¹ | 77.6 | 74.5 | 82.6 | 56.7 | 75.8 |
| Board Members¹ | 84.9 | 81.0 | 84.7 | 69.0 | 82.3 |
| Senior Executives¹ | 96.8 | 98.2 | 100.0 | 91.4 | 99.1 |
| More Discussion of Quality Performance in Hospital's Strategic Planning Process | 93.6 | 91.2 | 89.6 | 90.1 | 91.9 |
| Heightened Attention to Improving Quality by a Larger Group of Hospital Staff | 96.5 | 95.8 | 93.4 | 94.5 | 96.8 |

¹ Chi-square test for different responses among the hospital subgroups (large, JCAHO accredited; small, non-JCAHO accredited; and other hospitals) is statistically significant at the 95 percent confidence level.

NOTES: As a percent of quality improvement (QI) directors (93 percent) and senior executives (87 percent) who reported increased hospital leadership attention to quality over the past 2 years. JCAHO is Joint Commission on Accreditation of Healthcare Organizations.

SOURCE: Laschober, M., Maxfield, M., Felt-Lisk, S., Mathematica Policy Research, Inc., and Miranda, D., Centers for Medicare & Medicaid Services, 2005.

We found a weaker trend in increased internal requests for quality information in small, non-JCAHO accredited hospitals relative to other hospitals, with 70 percent of QI directors in these hospitals reporting such an increase versus 87 percent for large, accredited hospitals and 84 percent for others. Medical staff leadership, other physicians, and board members were all less likely in the small hospitals to be requesting more information compared to 2 years ago (Table 2).

In addition to hospital staff, hospital boards were more attentive to quality issues. For example, a full 85 percent of senior executives worked in hospitals where their board of directors paid more attention to quality matters than it did 2 years prior. Although the estimated percentage was slightly lower for small, non-accredited hospitals (78 percent) than for large, accredited hospitals (88 percent), the difference was not statistically significant. Virtually no hospitals experienced a decline in their board's attention to quality (0.3 percent [0.1-1.2 percent]). Moreover, nearly all of these hospitals' boards had become more familiar not only with quality issues in general (98 percent), but also with their hospital's performance vis-à-vis quality measures (99 percent). About three-fourths of senior executives (76 percent) were in hospitals where their board played a larger role in quality oversight activities than it did 2 years ago. Nearly the same percentage (74 percent) gave at least partial credit to Hospital Compare for the rise in their board's attention to quality.

New or Enhanced QI Initiatives

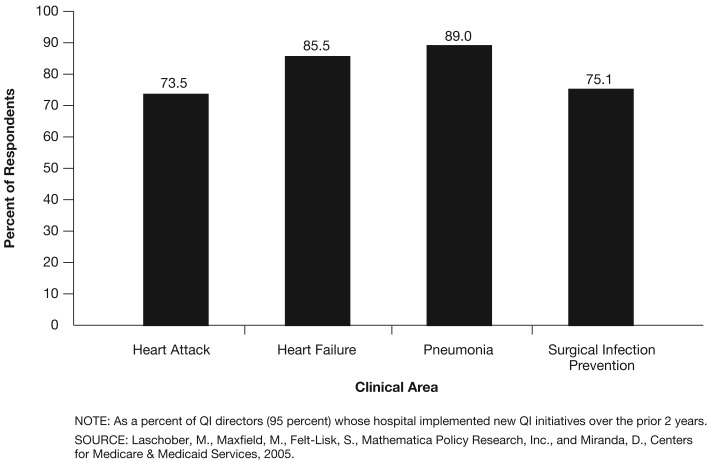

Almost all QI directors (95 percent) worked in hospitals that had launched either new or enhanced QI initiatives over the prior 2 years. These initiatives typically focused on care for pneumonia (89 percent of QI directors) and chronic heart failure (86 percent), but many were designed either to prevent infections related to surgery (75 percent) or to improve care for heart attack patients (74 percent) (Figure 4).

Figure 4. Clinical Areas for New or Enhanced Quality Improvement (QI) Initiatives.

According to a number of QI directors, public reporting has also drawn attention to clinical areas not listed in the survey, including the quality of intensive care unit (ICU) care (32 percent [28-36 percent]), patient safety (14 percent [12-18 percent]), and clinical areas involved in the Institute for Healthcare Improvement's 100,000 Lives Campaign (17 percent [14-22 percent]).9 Among hospitals with an increased focus on the listed and unlisted clinical areas, 86 percent reported that Hospital Compare reporting had influenced this focus, playing a major role in 49 percent of these hospitals and a minor role in 37 percent. Public reporting in general (not only Hospital Compare) played a similar role in increasing QI activities in the same clinical areas (a major role in 50 percent of these hospitals and a minor role in 37 percent).

New or Enhanced Data-Collection Initiatives

A large portion of QI directors (85 percent) worked in hospitals that over the previous 2 years had begun to gather previously uncollected data that could be used to measure quality. Large, JCAHO accredited hospitals were much more likely to be collecting or abstracting new data than small, non-JCAHO accredited hospitals (93 versus 67 percent, respectively), but were little different from other hospitals (87 percent).

Among the clinical areas included in the survey, hospitals focused most heavily on collecting data that would allow them to better document preventive care for surgery-related infections (78 percent) (Table 3). Among clinical areas not listed in the survey, 25 percent (21-30 percent) of hospitals had begun to collect data that would allow them to assess ICU care.

Table 3. New Data Collection or Abstraction Activities for Quality Measurement.

| Clinical Area (Percent) | New Data Collection or Abstraction: Of Hospitals That Initiated These New Activities¹ | New Data Collection or Abstraction: Large, JCAHO Accredited | New Data Collection or Abstraction: Small, Non-JCAHO Accredited | New Data Collection or Abstraction: Others | New Efforts to Improve Documentation of Care: Of All Hospitals | New Efforts to Improve Documentation of Care: Large, JCAHO Accredited | New Efforts to Improve Documentation of Care: Small, Non-JCAHO Accredited | New Efforts to Improve Documentation of Care: Others |
|---|---|---|---|---|---|---|---|---|
| Heart Attacks²,³ | 66.6 | 72.9 | 57.7 | 66.7 | 90.0 | 98.4 | 81.0 | 89.9 |
| Heart Failure³ | 67.3 | 69.3 | 64.8 | 67.2 | 94.5 | 100.0 | 90.0 | 94.1 |
| Pneumonia³ | 69.0 | 72.2 | 67.9 | 68.2 | 93.5 | 97.3 | 88.8 | 93.6 |
| Surgical Infection Prevention³,⁴ | 78.0 | 82.0 | 46.7 | 82.9 | 74.3 | 88.8 | 51.0 | 76.3 |
| ICU³,⁴ | 25.2 | 36.8 | 15.7 | 23.5 | 10.4 | 21.7 | 7.2 | 8.0 |
| Stroke (CVA)³,⁴ | 9.3 | 26.0 | 3.6 | 5.4 | 7.4 | 10.8 | 1.8 | 4.0 |
| All Clinical Areas⁴ | 7.3 | 6.6 | 9.0 | 7.3 | 12.6 | 22.6 | 5.0 | 11.8 |

¹ Of all hospitals, 85 percent initiated new data collection or abstraction activities, as did 93 percent of all large, JCAHO accredited hospitals, 67 percent of small, non-JCAHO accredited hospitals, and 87 percent of other hospitals.

² Chi-square test significant, p<0.10, for significantly different responses among the hospital subgroups (large, JCAHO accredited; small, non-JCAHO accredited; and others) as to whether they initiated new data collection or abstraction activities for this condition.

³ Chi-square test significant, p<0.05, for significantly different responses among the hospital subgroups (large, JCAHO accredited; small, non-JCAHO accredited; and others) as to whether they initiated new efforts to improve documentation of care for this condition.

⁴ Chi-square test significant, p<0.05, for significantly different responses among the hospital subgroups (large, JCAHO accredited; small, non-JCAHO accredited; and others) as to whether they initiated new data collection or abstraction activities for this condition.

NOTES: As a percent of all QI directors. JCAHO is Joint Commission on Accreditation of Healthcare Organizations. ICU is intensive care unit. CVA is cerebrovascular accident.

SOURCE: Laschober, M., Maxfield, M., Felt-Lisk, S., Mathematica Policy Research, Inc., and Miranda, D., Centers for Medicare & Medicaid Services, 2005.

We found differences by hospital size and JCAHO accreditation in the clinical areas targeted for new data collection that likely reflect differences in service mix. For example, large, JCAHO accredited hospitals were far more likely than small, non-JCAHO accredited hospitals to focus on obtaining new data on surgical infection prevention, probably in part because many of the small hospitals may not offer surgery (Table 3).

According to over one-half of QI directors (56 percent), these new data collection efforts were prompted at least in part by participation in Hospital Compare. However, the other 44 percent of QI directors believed that Hospital Compare had no influence on their decision to initiate new data collection activities for quality measurement, presumably because such activities were underway for other reasons.

Although only 56 percent of QI directors saw their hospital's participation in Hospital Compare as at least partly prompting new data collection efforts, nearly three-fourths felt that participation did improve the thoroughness of care documentation in many clinical areas. For instance, most QI directors were in hospitals that had launched efforts to document treatment more thoroughly for heart attack (90 percent), heart failure (95 percent), pneumonia (94 percent), and, to a lesser extent, prevention of surgery-related infection (74 percent) (Table 3). Some hospitals also improved their documentation of care in areas not explicitly listed in the survey, including all clinical areas (13 percent [10-16 percent]), stroke care (7 percent [6-10 percent]), and ICU care (10 percent [8-13 percent]).

QI and Reporting

Slightly more than one-half of the senior executives (53 percent) worked in hospitals where the number of staff dedicated to QI and public reporting had recently increased. This is not surprising in light of the numerous QI programs and enhanced documentation efforts underway at many hospitals. According to QI directors, the mean number of staff devoted to QI projects increased from 4.4 in 2003 to 4.8 in 2005, and the mean number of staff devoted to the collection and reporting of quality data increased from 1.9 in 2003 to 2.5 in 2005.
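Expressed as relative changes, simple arithmetic on the 2003 and 2005 means reported by QI directors shows that staff devoted to collecting and reporting quality data grew considerably faster than staff devoted to QI projects. Both figures are changes in means, so they do not show how many individual hospitals added staff:

$$
\frac{4.8 - 4.4}{4.4} \approx 0.09 \;(9\text{ percent}), \qquad \frac{2.5 - 1.9}{1.9} \approx 0.32 \;(32\text{ percent})
$$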

Although about one-half of hospitals did not increase the number of staff devoted to these quality-related activities, most (96 percent) had increased the workload of staff already involved in such activities over the past 2 years. These numbers can be expected to keep rising as QI programs and quality-related data collection efforts grow in response to greater hospital leadership attention to quality.

According to QI directors, Hospital Compare had a fairly strong impact on investment in hospital staff dedicated to QI. In hospitals where more staff had begun to collect data on care quality, two-thirds (66 percent) of QI directors cited the Hospital Compare program as a major reason for this trend. This percentage did not vary significantly by hospital size and accreditation. Other public reporting requirements also contributed to the increase in quality of care data collection activities in 67 percent of hospitals. Fewer QI directors, though still one-half of them (51 percent), perceived Hospital Compare as a major reason for the increase in the number of staff devoted to QI activities.

The survey further distinguished between staff effort devoted to quality-related activities in clinical areas covered by public reporting efforts (including Hospital Compare) and effort in clinical areas in which the hospital was not doing any public reporting at the time of the survey. A large majority of QI directors reported that staff effort had increased substantially over the past 2 years in both types of clinical areas, although more so in publicly reported areas than in non-reported ones (93 versus 78 percent). Very few worked in hospitals in which staff effort in either area had declined over the same period (0.7 percent [0.3-1.5 percent] and 5 percent [4-7 percent], respectively).

Reasons for Improvement in Performance

It is clear that hospital executives in general are now paying more attention to quality. A remaining question is whether this new awareness has manifested itself in hospital performance. Based on self-reported improvement in Hospital Compare scores, the answer appears to be yes (footnote 10). Seventy-five percent of QI directors and 81 percent of senior executives worked in hospitals in which one or more Hospital Compare scores had improved significantly over the previous reporting period.

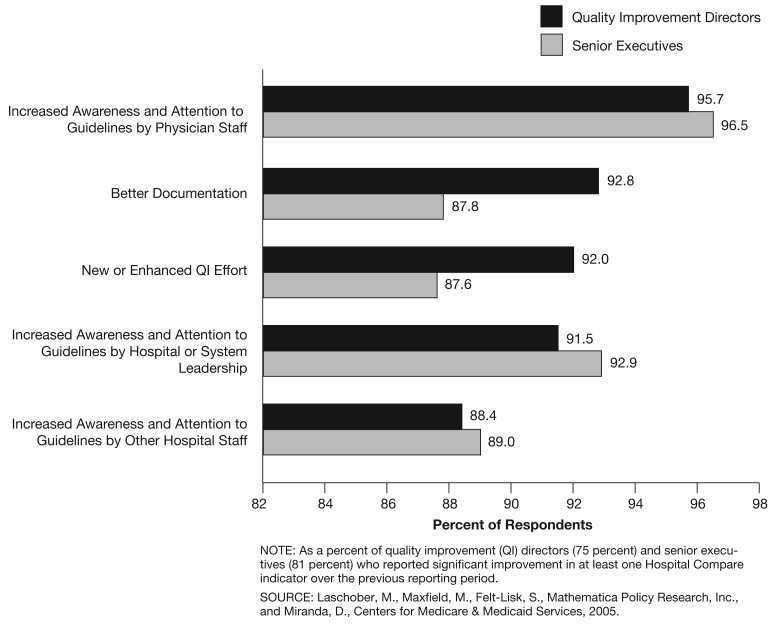

Approximately 90 percent of both QI directors and senior executives attributed the improvement to the five quality-related factors covered in the survey (Figure 5):

Figure 5. Reasons for Hospital Compare Measure Improvements.

Increased awareness and attention to guidelines by physician staff.

Increased awareness and attention to guidelines by hospital or system leadership.

Better documentation of care.

New or enhanced QI efforts.

Increased awareness and attention to guidelines by other hospital staff.

Very few hospital executives attributed the improvement to factors other than these.

Discussion

The survey findings on the relationship between public reporting and hospital performance support previous regional research, described in this article, that points to the value of making information on hospital performance available to the public, particularly with respect to its impact on internal hospital QI efforts. Our survey results also suggest that public reporting may be substantially affecting hospital QI and reporting efforts. More specifically, the clear story from hospital executives is that Hospital Compare and other public reporting have changed the way that hospital staff, leadership, and boards view quality in the current context, which includes the future prospect of pay-for-performance. While many hospitals attributed the improvement in leadership attention to quality directly to participation in Hospital Compare, the survey was not designed to tease out the role of hospitals' widespread expectation that CMS is moving toward pay-for-performance, or of the fact that Hospital Compare measures represent logical targets for inclusion in such a program. We suspect that this general expectation (evidenced, for example, by the increase in pay-for-performance conferences designed for health care executives) contributes to, but may not fully explain, the hospital behavioral changes that were reported.

The findings also point to the critical role played by physicians in hospital performance. Ninety-six percent of QI directors and senior executives at hospitals that did better on at least one Hospital Compare measure attributed the improvement to physicians being more aware of and paying more attention to best practice guidelines. At the same time, although 80 percent of hospital executives whose hospitals produced physician-level data from Hospital Compare indicators believed such data were very important to hospital performance, over one-half also felt that the process of generating the data was especially burdensome. This information complements data from the same survey, reported elsewhere, which found that 76 percent of senior executives and 83 percent of QI directors working in hospitals with significant room for improvement on one or more Hospital Compare scores perceived a lack of physician involvement in the hospital's QI efforts as a barrier to improvement (Laschober, 2006). This barrier ranked second only to documentation of care as an obstacle to higher scores. In terms of health care policy, this finding suggests that much could be gained by bringing physicians into QI initiatives early in the process and by better aligning physician and hospital incentives to improve care.

Better Hospital Compare scores can indicate improvements in care or in the documentation of that care. Our findings indicate that hospitals have been giving more attention to documentation, and when hospitals have improved their scores, 90 percent of hospital executives credited better documentation as one reason for the increase. But hospital executives also believe that greater awareness of and attention to best practice guidelines is a key to improvement, as are new or enhanced QI efforts. In the end, it may be impossible to separate the role played by efforts to improve documentation from the role played by efforts to improve care through clinical guidelines because, in practice, steps to improve documentation often support guideline implementation. For example, using physician-level data to question physicians about specific cases that appear not to have met a guideline will inevitably highlight the guideline in physicians' minds, in addition to reminding them of the importance of documentation.

We found some signs that small, non-JCAHO accredited hospitals may be less engaged than other hospitals in enhancing their QI programs. A smaller majority of these hospitals had begun collecting or abstracting new data in the past 2 years, and fewer produced physician-level data from Hospital Compare data, a step that hospitals taking it consider important for improvement. Because the service mix of small hospitals differs from that of larger ones, many Hospital Compare measures may not be relevant to them, which could help explain this finding. Because these small hospitals are often critical access points for rural populations, a failure to keep pace with other hospitals could lead to disparities between urban and rural populations. Finding more applicable measures to fully engage small hospitals in improvement efforts is therefore an important item for the policy agenda.

Finally, the study's findings point to two other important factors for policymakers to consider as they seek to use the power of public reporting to encourage high-quality care. First, although publicity resulting from Hospital Compare scores is currently the exception rather than the rule, local media appear to be the most active external group responding to the data at present. Sensitive to the potential importance of the media, CMS launched Hospital Compare at a conference for journalists. Our findings suggest that continued efforts to educate journalists about reporting these data could be fruitful.

Second, public reporting has clearly motivated hospitals to invest in the human and systems resources that will lead to better reporting. Although this investment is a necessary part of improvement, our finding that staff effort devoted to quality-related activities increased more in publicly reported clinical areas than in non-reported ones places a responsibility on those who craft and endorse specific hospital quality measures: they must ensure that the chosen measures are the ones that will most improve the care and health of our Nation's population.

Acknowledgments

The authors gratefully recognize Martha Kovac for her oversight of the survey reported here, Frank Potter for his statistical expertise, Katherine Bencio for programming assistance, and Meredith Lee and Melissa Neuman for research assistance.

Footnotes

Mary Laschober, Myles Maxfield, and Suzanne Felt-Lisk are with Mathematica Policy Research, Inc. David Miranda is with CMS. The research in this article was supported by CMS under Contract Number 500-02-MD02. The statements expressed in this article are those of the authors and do not necessarily reflect the views or policies of Mathematica Policy Research, Inc., or CMS.

For information about the CTS study, which is administered by the Center for Studying Health System Change, refer to: http://www.hschange.com/index.cgiPdata-01 (Accessed 2007.)

These hospitals represent approximately 94 percent of the 4,450 acute care hospitals receiving payment under CMS' prospective payment system, plus CAHs that existed in 2004 (Medicare Payment Advisory Commission, 2006).

The denominator for this percentage is the 4,450 acute care hospitals receiving payment under CMS' prospective payment system plus CAHs that existed in 2004 (Medicare Payment Advisory Commission, 2006).

The sampling strata, frame counts, and sizes are available from the authors on request.

Response rates were computed using a variation of the American Association for Public Opinion Research's definition number 3 and accounted for the subsampling of the hospitals after release of the initial sample (The American Association for Public Opinion Research, 2006).

Other interesting analyses would include differences in response for rural versus urban hospitals, hospitals with high versus low Hospital Compare scores, network/system versus non-network/freestanding hospitals, and HQID participants versus non-participants.

In December 2003, section 501(b) of the 2003 Medicare Prescription Drug, Improvement, and Modernization Act stipulated that CMS would reduce by 0.4 percentage points the annual percentage increase in Medicare reimbursement rates for acute care hospitals that do not submit the Hospital Quality Alliance 10-measure starter set of hospital quality data to CMS.

Hospitals use QualityNet Exchange, a CMS-approved secure communications Web site, to submit Hospital Compare measure data to CMS.

The Institute for Healthcare Improvement's campaign enlisted hospitals to implement six changes in care, encompassing 26 intervention-level process measures, that have been proven to prevent avoidable deaths. For the list of measures, refer to Institute for Healthcare Improvement, 2007.

Assessing actual measured improvement in scores was beyond the scope of the study, but represents an important area for future research.

Reprint Requests: Mary Laschober, Ph.D., Mathematica Policy Research, Inc., 600 Maryland Ave., SW, Suite 550, Washington, DC 20024-2512. E-mail: mlaschober@mathematica-mpr.com

References

- Bentley J, Nash D. How Pennsylvania Hospitals Have Responded to Publicly Released Reports on Coronary Artery Bypass Surgery. The Joint Commission Journal on Quality Improvement. 1998 Jan;24(1):50–51. doi: 10.1016/s1070-3241(16)30358-3.

- Berwick D, Wald D. Hospital Leaders' Opinions of the HCFA Mortality Data. Journal of the American Medical Association. 1990 Jan 12;263(2):247–249.

- Chassin M. Achieving and Sustaining Improved Quality: Lessons from New York State and Cardiac Surgery. Health Affairs. 2002 Jul-Aug;21(4):40–51. doi: 10.1377/hlthaff.21.4.40.

- Devers K, Pham H, Liu G. What Is Driving Hospitals' Patient-Safety Efforts? Health Affairs. 2004 Mar-Apr;23(2):103–115. doi: 10.1377/hlthaff.23.2.103.

- Hibbard J, Stockard J, Tusler M. Hospital Performance Reports: Impact on Quality, Market Share, and Reputation. Health Affairs. 2005 Jul-Aug;24(4):1150–1160. doi: 10.1377/hlthaff.24.4.1150.

- Institute of Medicine; Kohn L, Corrigan J, Donaldson M, editors. To Err Is Human. Washington, DC: National Academy Press; 2000.

- Institute for Healthcare Improvement. Data Submission and Measurement. Internet address: http://www.ihi.org/IHI/Programs/Campaign/Campaign.htm?TabId=2#DataSubmissionandMeasurement (Accessed 2007.)

- Laschober M. Hospital Compare Highlights Potential Challenges in Public Reporting for Hospitals. Trends in Health Care Quality, Issue Brief #2. Mathematica Policy Research; Mar, 2006. Internet address: http://www.mathematica-mpr.com/publications/PDFs/hospcompare.pdf (Accessed 2007.)

- Luce J, Thiel G, Holland M, et al. Use of Risk-Adjusted Outcome Data for Quality Improvement by Public Hospitals. Western Journal of Medicine. 1996 May;164(5):410–414.

- McGlynn E, Asch S, Adams J, et al. The Quality of Health Care Delivered to Adults in the United States. The New England Journal of Medicine. 2003 Jun 26;348(26):2635–2645. doi: 10.1056/NEJMsa022615.

- Medicare Payment Advisory Commission. A Data Book: Healthcare Spending and the Medicare Program. Jun, 2006.

- Pham H, Coughlan J, O'Malley A. The Impact of Quality-Reporting Programs on Hospital Operations. Health Affairs. 2006 Sep-Oct;25(5):1412–1422. doi: 10.1377/hlthaff.25.5.1412.

- Romano P, Rainwater J, Antonius D. Grading the Graders: How Hospitals in California and New York Perceive and Interpret Their Report Cards. Medical Care. 1999 Mar;37(3):295–305. doi: 10.1097/00005650-199903000-00009.

- Shearer A, Cronin C. The State-of-the-Art of Online Hospital Public Reporting: A Review of Fifty-One Websites. 2005 Jul. Internet address: http://www.delmarvafoundation.org/newsAndPublications/reports/index.html (Accessed 2007.)

- The American Association for Public Opinion Research. Standard Definitions: Final Dispositions of Case Codes and Outcome Rates for Surveys. 4th edition. Lenexa, Kansas: 2006. Internet address: http://www.aapor.org/pdfs/standarddefs_4.pdf (Accessed 2007.)

- Vladeck B, Goodwin E, Myers L, et al. Consumers and Hospital Use: The HCFA "Death List." Health Affairs. 1988 Spring;7(1):122–125. doi: 10.1377/hlthaff.7.1.122.