Abstract

As competition, cost control, and new modes of delivery emerge in health care, there is a need to reexamine both the traditional definitions of health care quality and the methods by which it is measured.

Industries other than health care have much to teach regarding the methods for obtaining, analyzing, and displaying data; techniques for problem identification, problem solving, and reassessment; and ideas about organizational factors that produce a high quality product or service. The Quality-of-Care Measurement Department at the Harvard Community Health Plan has built a program that draws from a distinguished health care quality assurance tradition and incorporates techniques that have been successful in other industries.

Introduction

Quality assurance in health care is an enterprise strangely disconnected from the object of its study. Despite a distinguished intellectual tradition now decades old, the routine assessment of quality is rarely linked with the day-to-day management of health care systems or with the decisions made by individual and aggregate purchasers of health care. In other industries, it would be unacceptable for such a situation to exist. It would be as if neither the producers nor the buyers of automobiles regularly assessed, for purposes of making their decisions, whether the cars they made and bought ran reliably and well.

New directions in the delivery of health care are pressing the industry to reevaluate how quality is assessed, to consider how information about the quality of care delivered may ultimately be used, and to challenge existing notions of the definitions of quality. Health care in managed care systems, competition, regulation of cost, resource constraints, and new regulations are changing how care is provided. We are reducing the length and frequency of hospital stays, cutting capital and operating margins, and forcing economies in ambulatory care. Corporate producers of health care, profit and not for profit, seem somehow threatening to the traditional model of the individual doctor serving the individual patient. In the course of things, we are unmasking enormous variation in the patterns of care from region to region and from doctor to doctor, and there is the temptation to conclude that the lowest resource users provide a goal towards which all should strive.

Important questions have been raised demanding thoughtful answers. Physicians and patients ask about the sanctity of the doctor-patient relationship; patient advocates fear that needed care may be withheld if there is money to be saved; patients ask if the lowest cost care is really the best; and the purchasers of care for groups wonder which is the best choice for their clients. The current state of the art in health care quality assessment does not offer tools to answer these concerns. We cannot currently tell wise decisions from faulted ones; we cannot choose those economies that leave patients safe and satisfied and avoid those which unacceptably damage care. The development of a quality measurement system is now an urgent agenda in health care. Meeting the need for managerially relevant quality assessment in health care requires new perspectives and new tools. The field to date has built a respectable array of resources, some of which can be incorporated into future designs, but the resources do not yet form a pattern.

To build the future, it is useful both to review the past experience in health care quality assurance and also to look to other industries that have long histories in the assessment of quality.

History

Modern quality assurance in health care has two central strands of inquiry. One seeks definition of the object of scrutiny: What exactly is to be studied? The other seeks methods: How to study quality. The dominant figure in the first area is Avedis Donabedian, considered by many the father of the academic enterprise of quality assessment in health, and the author of a recently completed three-volume summary of the field as of the early 1980s (Donabedian, 1980, 1982, and 1985). Donabedian, among others, offered the categories of "structure," "process," and "outcome" as the three classes of potential objects of investigation (Donabedian, 1966). "Structure" is a general term for the nature of the resources that, assembled, provide health care, including, for example, the mix of manpower, the credentials of the providers, the facilities, and the rules of procedure. "Process" refers to intermediate products of care, such as patterns of diagnostic evaluation, access to care, rate of utilization, and choice of therapies. "Outcomes" are end products of care: the health status, longevity, comfort, and, perhaps, the satisfaction of its clients.

Traditionally, structure, process, and outcome are, in that order, increasingly difficult to study and increasingly important. Indeed, many investigators in the field have assumed, tacitly or explicitly, that the first two—structure and process—are appropriate objects of scrutiny only to the extent that they are demonstrably related to valued outcomes. Through this logic, quality measurement has been closely associated with such other domains of research as technology assessment, clinical evaluation, and randomized clinical trials, enterprises that seek to prove that certain processes (or resources) yield particular outcomes.

The second major theme in the quality assessment literature deals with methods of investigation: Having chosen an object of scrutiny, how good is the performance? Three general methods have been explored over time: implicit review (Hulka, Romm, Parkerson, et al., 1979; Moorehead et al., 1964, 1967), explicit review (Brook and Appel, 1973; Brook, 1973; Lembcke, 1956; Fitzpatrick, Riedel, and Payne, 1962; Payne, Lyons, Dwarshius et al., 1976; Williamson, 1971), and the use of sentinels (Lembcke, 1967; Sheps, 1955; Ciocco et al., 1950; Ciocco, 1960; Rutstein, Berenberg, Chalmers, et al., 1976). Implicit review processes use experts who are able to recognize good care (structure, process, or outcome) when it occurs, or, in some cases, groups whose joint knowledge or judgment is thought better than any individual's. Implicit review procedures may assign scores to records of care or otherwise judge in global terms how well a system or provider dealt with individual cases or groups of patients. Both explicit and implicit reviews may involve sophisticated group techniques for selecting problems for review and for forming consensus on the quality of care.

Explicit review involves specifying criteria for care and then reviewing records or observations to check the degree to which what happens conforms to these prior criteria. By its nature, explicit review is better suited than implicit review to using nonprofessional staff to conduct the actual reviews of care. Professionals (often using group discussion or implicit techniques) write the standards and train nonprofessionals to rate the care. Explicit review has the advantage of clarity, compared with implicit review, but the disadvantage of oversimplification and, in the worst examples, clinical irrelevance. Recent researchers have attempted to modify review procedures to incorporate clinical algorithms and branching logic into criteria maps (Greenfield, Lewis, Kaplan, et al., 1975), as distinguished from simple criteria lists (Payne, Lyons, Dwarshius, et al., 1976), in the hope that the more complex rules for rating care may produce results that are clinically more plausible and useful. In one report (Greenfield et al., 1981), criteria maps were, indeed, shown to be superior to lists in predicting which clinicians made correct decisions in sorting chest pain patients who required hospital admission from those who did not.

Implicit and explicit review have remained largely separate streams partly because they seem to produce different results. In direct head-to-head comparisons, explicit and implicit reviews of the same cases have yielded some significant discrepancies in ratings (Hulka, Romm, Parkerson, et al., 1979). Which is superior is a matter of continuing debate, with practicing physicians, in general, favoring implicit processes and managers and regulators favoring explicit criteria and scoring systems.

A third, somewhat different, school of method proposes the use of sentinels as the major form of quality review. The advocates of this technique attempt to define classes of unacceptable or red-flag events and then to perform detailed investigations of the events, using implicit or explicit methods. Such case reviews can range freely among issues of structure, process, and outcome; and they can address problems at individual or systemic levels. Perhaps the clearest such model is the morbidity and mortality rounds held in many departments of surgery, but other schemes have been proposed at larger scales. To some extent, the review techniques developed and recommended by Wennberg and his colleagues (Wennberg and Gittelsohn, 1982), who search particularly for statistical outliers in rates of utilization, are connected with this notion of surveillance for sentinel events. Sentinel events can also be used directly as indicators of quality when they are judged to be outcomes that ought to be avoided by a sound health care system.

Donabedian's three volumes (Donabedian, 1980, 1982, and 1985), in which the field of quality assessment in health care is summarized, provide an impressive pedigree. Why then is quality assessment not yet in the life's blood of health care? Why, if we ask whether cost containment is hurting quality, or whether health maintenance organization "A" is better than health maintenance organization "B," must our answers be so impoverished, or at least so anecdotal? What should happen next in the field if the measurers are to become more useful to those who manage health care—for example, physicians and other health professionals; clinical department managers and chiefs of service; medical and administrative facilities managers; regulators; purchasers of care; and patients who more and more will wish to exercise choice in managing their own health care? A logical next step for health care assessment is to look to other industries to learn from their experience, their successes, their techniques, and perhaps their failures.

Learning from other industries

Other industries have a long history of assessing quality and use measurement more self-consciously and systematically than health care does. Industrial quality measurement techniques have benefited from nearly five decades of trial, development, evaluation, and reevaluation on the way to their current state of sophistication.

The discipline of industrial quality control has drawn energy from the pressures of the marketplace and the demand of consumers that products and services be continually improved. Similar pressures now confront health care providers, and important lessons lie in the extensive experience of other industries in the applied technology of quality control and assurance (Crosby, 1979; Juran, 1964; Deming, 1982).

Lesson I: Importance of design

In the history of industrial quality assessment and control, the first quality assessor was the consumer. A product was usually distributed without being subjected to a quality control process, and the only mechanism for knowing if the product was unacceptable was if the consumer complained or abandoned it. In time, industry's response was to institute systems that would attempt to intercept substandard products before they were distributed in order to prevent abrasion of consumers and loss of market share.

As health care delivery has become more organized and more competitive over the past decade, consumers of health care now have the opportunity to select among care delivery systems to find one that best meets their needs. Health care is learning, as other industries have learned, that to use consumer dissatisfaction as an index of quality is both hazardous and costly.

Early internal quality control mechanisms placed an inspector at the end of a production line to weed out those items that did not meet a specific set of criteria. Completed products that might in some way harm the consumer or cause dissatisfaction were discarded before they could reach the marketplace. Although this system may prevent the consumer from receiving faulty goods, it is a costly form of quality control. It offers some protection for the consumer, but, because unacceptable products are still being produced, risks are high. In addition, such inspection systems do little to correct inefficiencies throughout the line of production, may displace responsibility for meeting criteria to a separate entity remote from the production line, and require that the ultimate cost of the product be higher than necessary.

Recent advances in industrial quality assessment and control concepts have moved responsibility for identifying and fixing problems further and further up the production line. Quality control mechanisms are more and more being placed at interim stages in the production process. Modern quality control engineers now try to control quality in the actual design of the product. Corporate mottos such as "Do it Right the First Time" or "Quality by Design" are not just slogans for boosting morale; they also reflect modern notions of the most efficient strategies for quality assurance. High quality design is more efficient in the long run than thorough inspection at the end of the production line is.

Lesson II: Multidimensionality of quality

As industrial quality control has come to understand the importance of higher quality in design, so also has it come to regard the quality of a product or service as a fundamentally multidimensional concept. Here, too, health care can learn from other industries.

Over the past few decades, quality assurance in health care has invested heavily in efforts to demonstrate the relationships between the elements of care—structure and process—and the outcomes of care. The assumption that better health care produces better health outcomes has been the foundation of much of the search for operational definitions of quality. According to this view, the measurement of process is actually a surrogate for the measurement of the real goals of health care: improved health status, function, and comfort.

Making health status outcome so central to the definition of quality has at least three serious limitations. First, it burdens the exploration of quality with the agenda of virtually all clinical and health services research. If assuring quality means assuring that what we do really works, then we can only assess quality when the production functions are known. As Donabedian (1980) has pointed out, if we know the relationship between, say, process and outcome, then measuring process is quite acceptable as a surrogate for measuring outcomes. If we do not know the relationship between process and outcome, then measuring outcome is not a useful indicator of system quality, because we cannot use the information to determine which aspects of process to preserve and which to change. On reflection, making an understanding of effectiveness a prerequisite for measuring quality is probably a formula for paralysis. We simply know too little today about what in health care actually does produce health.

Second, there is good reason to believe that a great proportion of health care probably does very little to alter the course of illness or to preserve life or function. In the average ambulatory encounter, at least, the physician is usually dealing with acute, self-limited diseases, for which definitive treatments do not exist and for which a wide variety of diagnostic and therapeutic strategies are equally innocuous. For many encounters, the patient's major objective is to gain reassurance, to feel cared about or listened to, or to undergo a nearly ritualistic ruling out of unlikely major diseases. Unless we define outcome very broadly, to include many elements of the patient's feelings, attitudes, and satisfaction, then the tyranny of outcome in defining quality risks calling much of the activity in health care wasteful, useless, and scientifically unsupported. But doctors and patients know better; they know that, often, what health care delivers is not outcome—in the sense of improved longevity or function—but rather process, itself.

In this regard, health care is like other important consumer goods. The quality of an airplane flight lies foremost in a safe conclusion, but it also connotes the ability to obtain a reservation at a time when it is needed, the courtesy of the staff at each contact, the ease of making connecting flights, and the quality of the food. Such a broader notion of quality, incorporating many different and potentially independent dimensions, raises the important possibility that reasonable and fully informed people may disagree about the quality of a health care provider and goes far beyond the simpler notion that the quality of a health system is coextensive with its safety or ability to produce longer life.

Lesson III: Importance of organizational culture

Quality assurance in other industries has now moved back through the production or service line into the very structure of the organization. Industry has learned that, in order for quality assessment to be effective and efficient, it is not enough to develop new techniques of measurement and control to be implemented at various points of manufacture; there also must be an investment in a corporate culture geared towards producing a high quality product. Industries have silenced arguments against the cost of quality assurance mechanisms as they have learned that, although such systems do require an investment, they return high dividends through reduced customer attrition, through fewer losses from unusable products and less need for rework, and through greater efficiency in the production process. Commitment from senior managers to invest in producing a high quality product is one key to successful quality control. Managers must commit to assisting every employee to improve quality continually. Resources must be available to develop ways of identifying and fixing problems. Goals must be clearly stated, with mechanisms to assure that they are understood by all. Employees must have the means to meet these goals and adequate training to enable them to do their jobs effectively. Objective measurement is necessary to know whether goals are being met and where improvement is most feasible. Feedback of the results of measurement must be prompt and must reach those managers, supervisors, and other staff who are responsible for production. Reports must appear in a clear, useful, and usable format.

Health care can also learn lessons from other industries about the ways in which measurement data are collected and about the statistical techniques used for analysis. Traditionally in health care quality assurance, the primary source of data for the assessment has been the medical record. Although rich in information, the medical record as an assessment source is widely acknowledged as being flawed by differences in record keeping systems and variations in recording practices. With health care now expanding its view of what constitutes care in an organized delivery system, it can draw upon methods for collecting data such as those used in service industries (banks, hotels, or airlines). Service industries use three techniques, in particular, that deserve increased exploration in health care: surveys, observations, and simulations.

Perhaps the greatest achievements of industrial quality assurance lie in the practical statistical methods that have been developed for sampling and for modeling performance. Like health care, for example, many industries face the challenge of finding flaws that are extremely rare. Industrial quality control engineers use well-developed techniques for calculation of failure time or of the probability of deficiency, yet these same techniques are rarely used in the assessment of health care. Industrial quality assurance pays strict attention not only to average performance but to variance and loss functions which include terms for reliability. Health care, too, ought to be sensitive, not just to the average performance level of a person or system but also to the variability in that performance.
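The two statistical ideas in this paragraph, sampling for rare flaws and attention to variability as well as to averages, can be illustrated with a short sketch. It is offered only as an illustration under stated assumptions: the function names are hypothetical, cases are assumed to be flawed independently with a fixed probability, and the quadratic loss used here is one common industrial choice rather than a method the source specifies.

```python
import math
from statistics import mean, pvariance


def prob_at_least_one(p, n):
    """Chance that a random sample of n cases contains at least one flaw,
    assuming each case is flawed independently with probability p."""
    return 1.0 - (1.0 - p) ** n


def sample_size_for_detection(p, confidence):
    """Smallest sample size n for which prob_at_least_one(p, n) >= confidence."""
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p))


def expected_quadratic_loss(values, target, k=1.0):
    """Average of k * (x - target)**2 over the observed values.

    Algebraically this equals k * (variance + (mean - target)**2), so it
    penalizes both a poor average and an unreliable (highly variable) one.
    """
    m = mean(values)
    return k * (pvariance(values) + (m - target) ** 2)


# Illustrative (made-up) performance measurements for two units with the
# same average but very different variability.
steady_unit = [20, 20, 20, 20]
erratic_unit = [5, 35, 5, 35]

loss_steady = expected_quadratic_loss(steady_unit, target=15)
loss_erratic = expected_quadratic_loss(erratic_unit, target=15)
```

In this made-up example both units average 20, yet the erratic unit incurs a much larger loss, which is the sense in which a loss function "includes terms for reliability."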

The time is ripe for some new directions in quality measurement in health care. The next decade of development should not only draw on the distinguished intellectual achievements of the field but should seek, as well, to make the measurement enterprise more useful to the managers and decisionmakers whose choices and strategies will shape the system we shall pass on to the 21st century. Other industries have much to teach us, if we will think inventively about analogies and similarities. To fail to develop ways to measure and publish wisely the performance of our health care systems will place us at the mercy of ill-informed choices, and may, in the end, cause sacrifice of the legacy of caring that we should seek to preserve.

Harvard Community Health Plan

At the Harvard Community Health Plan (HCHP), a 300,000-member staff-model health maintenance organization (HMO) located in the greater Boston area, the Quality of Care Measurement Program has begun to supplement traditional concepts and methods in health care quality assessment with new approaches suited to the new models and management needs of health care delivery systems. Using concepts and techniques from a variety of industrial settings, the quality assessment function has been relocated in the corporate structure, has expanded its view of the very definition of quality, and is now developing new technical methods for obtaining data, conducting analyses, and producing reports.

HCHP's Quality of Care Measurement (QCM) Program has a reporting relationship to the highest internal policymaking levels. This position declares and emphasizes that the commitment to providing high quality care is a serious endeavor valued by the corporation as a total system for delivery of care. Further, renaming the former quality assurance program "Quality of Care Measurement" emphasizes the distinction between assurance and measurement. The assurers of care are the providers of care, the physicians, nurses, technicians, and support staff who, on a day-to-day basis, manage the care and service provided to patients, along with the other staff and managers who create the environment in which sound care is possible. The measurers of care provide objective information that is useful to the clinicians as an aid to practice management.

HCHP has embraced a multidimensional view of quality defined from multiple perspectives, including that of the consumer. In the Quality-of-Care Measurement Department, new techniques of gathering data, such as observation, simulation, and patient survey, are being rigorously designed and tested to develop new methodologies that will supplement traditional ones.

Redesign of the quality measurement program at Harvard Community Health Plan began with a specific conceptual framework for the definition of health care quality and a conviction that any system of assessment should focus on those attributes of care that the Harvard Community Health Plan values for its members and should integrate both professional and lay views of the elements of high quality.

The primary components of the definition of quality remain excellent technical care and favorable health status outcomes. However, other areas of performance, such as providing access to care, receive high weight as additional important aspects of quality. The multidimensional view of quality at Harvard Community Health Plan includes, at a minimum, measures of technical process, health status outcome, access, coordination of care, ambiance, interpersonal relationships and satisfaction, support staff training, and staff morale and satisfaction.

Because the Harvard Community Health Plan offers many different products of care (encounters with well patients, care of those with symptoms, care for emergency problems, hospitalizations, psychotherapy, perinatal care, and encounters in such intermediate care areas as laboratories, pharmacies, and radiology suites), the Quality of Care measurement program may measure performance in each type of encounter with respect to any of the relevant attributes of care. For each type of encounter, different attributes may deserve different weights: technical process is more important than interpersonal demeanor in a cardiac arrest, but interpersonal qualities may be much more highly valued in a well-child care visit, where the risks of faulty process are much lower and reassurance is often a key goal. Each system (indeed, each patient) may attach different weights to these desired attributes, and intelligent, completely informed consumers may rationally disagree about which of two systems demonstrates better overall quality.
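The point that different weights can lead informed people to different overall rankings can be made concrete with a small sketch. Everything in it is hypothetical: the dimension names, the scores for the two systems, and the weights are invented for illustration and are not HCHP measurements, and a simple weighted average is only one of many possible ways to combine dimensions.

```python
def weighted_quality(scores, weights):
    """Weighted average of dimension scores; weights need not sum to 1."""
    total_weight = sum(weights.values())
    return sum(scores[dim] * w for dim, w in weights.items()) / total_weight


# Hypothetical scores (0 to 10) for two delivery systems.
system_a = {"technical_process": 9, "access": 5, "interpersonal": 6}
system_b = {"technical_process": 7, "access": 8, "interpersonal": 9}

# Hypothetical weights for two types of encounter.
cardiac_arrest_weights = {"technical_process": 0.8, "access": 0.1, "interpersonal": 0.1}
well_child_weights = {"technical_process": 0.2, "access": 0.3, "interpersonal": 0.5}

a_cardiac = weighted_quality(system_a, cardiac_arrest_weights)
b_cardiac = weighted_quality(system_b, cardiac_arrest_weights)
a_well_child = weighted_quality(system_a, well_child_weights)
b_well_child = weighted_quality(system_b, well_child_weights)
```

With these invented numbers, system A scores higher when technical process dominates, while system B scores higher when interpersonal qualities dominate, so neither system is "better" independent of the weights.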

This multidimensional view of the quality of care raises important issues of measurement. Medical records are the traditional source documents for the assessment of outcomes and technical process, but they are not useful for the assessment of such other dimensions as access, interpersonal care, or ambiance. New dimensions require new measurement strategies.

Primary among these strategies and tools are surveys to obtain information about patients' perceptions of care received. Building on work done by other health service researchers, the Harvard Community Health Plan is developing and using scaled questionnaires that can help consumers of health care tell about the care they received. Items on these questionnaires ask about appointment access, waiting time in the waiting room, ambiance, support staff warmth, provider warmth, provider skill, continuity of care, the patients' sense of sharing in the control of care, and a summative satisfaction rating. In addition, members are asked about their intention to keep the same health care provider and the intention to remain an HMO member.

The Quality of Care measurement program currently uses two modes for administering these questionnaires. One type of instrument is administered onsite in clinical units as patients exit from their visits; the intent is to gather immediate impressions of the encounter and to focus on the single care event. Collection occurs at regular cycles throughout the year, and attempts are made to collect a completed survey from each visitor. Response rates using this technique range from 75 percent to 90 percent. All Harvard Community Health Plan sites are surveyed during the same time period to assure comparability and reduce confounding effects of events outside of the HMO's control—such as bad weather. Once collected, the data from several thousand surveys are entered into a microcomputer system and analyzed using standard statistical packages.

The other form of survey administration is through the mail. Harvard Community Health Plan uses mailed questionnaires primarily for evaluating services that do not occur onsite, such as hospitalizations. Specialized surveys have, so far, been developed for medical and obstetrical hospitalizations. Hospital surveys have a broader focus than the visit surveys; during the obstetrical survey, for example, patients are asked to rate their prenatal care, initial labor contact, hospital admission process, labor and delivery care, postpartum care, hospital ambiance, patient education, and length of stay. In addition, patients rate the various clinicians they see, such as obstetricians during pregnancy, nurses in labor and delivery, and pediatricians in the hospital.

All surveys include demographic information such as age, gender, length of HMO membership, race, socioeconomic status, and whether the HMO visit was for well or sick care. Where appropriate, these data are used as covariates in stratified analyses.
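A stratified analysis of this kind can be sketched as follows. The field names, sites, and ratings are hypothetical and chosen only to show why stratification matters; the source does not describe the specific statistical procedures used.

```python
from collections import defaultdict
from statistics import mean


def stratified_means(responses, group_key, stratum_key, value_key):
    """Mean of value_key for each (stratum, group) cell."""
    cells = defaultdict(list)
    for r in responses:
        cells[(r[stratum_key], r[group_key])].append(r[value_key])
    return {cell: mean(values) for cell, values in cells.items()}


# Made-up survey responses: site X sees mostly sick visits, site Y mostly well.
responses = [
    {"site": "X", "visit_type": "sick", "satisfaction": 3},
    {"site": "X", "visit_type": "sick", "satisfaction": 3},
    {"site": "X", "visit_type": "sick", "satisfaction": 3},
    {"site": "X", "visit_type": "well", "satisfaction": 5},
    {"site": "Y", "visit_type": "sick", "satisfaction": 3},
    {"site": "Y", "visit_type": "well", "satisfaction": 5},
    {"site": "Y", "visit_type": "well", "satisfaction": 5},
    {"site": "Y", "visit_type": "well", "satisfaction": 5},
]

# Crude comparison ignores visit type and makes site Y look better.
crude_x = mean(r["satisfaction"] for r in responses if r["site"] == "X")
crude_y = mean(r["satisfaction"] for r in responses if r["site"] == "Y")

# Within each visit type the two sites are rated identically.
by_stratum = stratified_means(responses, "site", "visit_type", "satisfaction")
```

In this invented data set the crude site averages differ only because the sites see a different mix of well and sick visits; within each stratum the ratings are the same.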

Once survey data are collected, the turnaround time for data entry, analysis, and report generation is less than 4 weeks. The goal of most Quality of Care Measurement work is to give information to involved managers and clinicians in a time frame consistent with their management needs. Survey reports go first to the chiefs and supervisors of involved services and thereafter to the medical directors of facilities and to other senior level managers.

Direct observation as a data collection technique has been little explored in health care quality assurance, though observation is a common method of assessment of service in the hotel and airline industries, in which trained observers sample actual transactions and rate them according to previously established criteria. At the Harvard Community Health Plan, observations have been used to rate the quality of the ambiance of the facilities. Observers use rating forms (modeled on those used by the hotel industry) to gather observations about cleanliness of public areas, noise levels, protection of patient privacy, and clarity of signs. Observations of service behaviors among support staff have also begun.

Simulations pose much greater difficulties but also hold greater promise. They are used routinely in some service industries where trained individuals pose as users and report on what customers actually experience. Harvard Community Health Plan has proceeded cautiously in the use of simulated clinical events. Initial efforts have included attempts to schedule simulated appointments for a variety of types of visit. Quality-of-Care Measurement staff are trained in the use of written scenarios that request appointments for routine or urgent visits. Appointments are made and then immediately cancelled. Such simulations offer a realistic view of a member's attempt to get an appointment and afford a better estimate of true access than do more traditional nonintrusive methods.

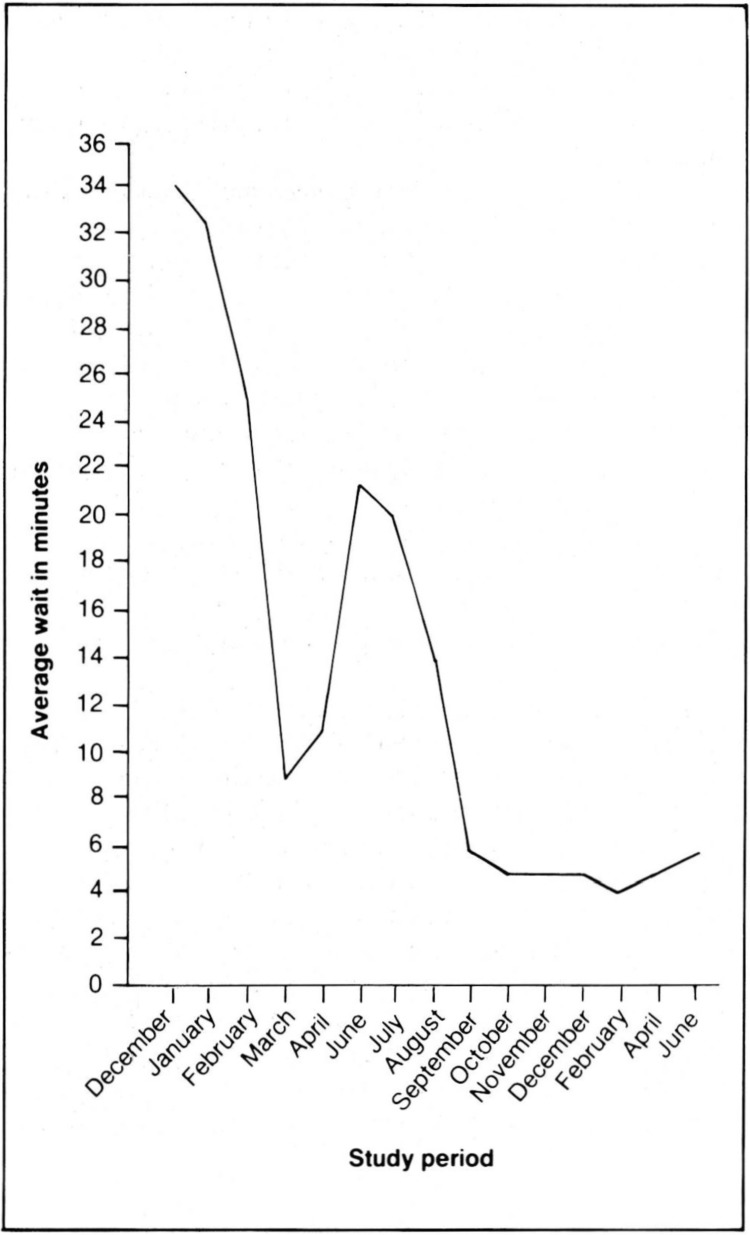

Displaying information in a digestible format, one that is easily grasped in one glance, has been an important goal of the QCM Program, which continues to experiment with various display methods. Figure 1 shows data collected on waiting times in an HCHP unit.

Figure 1. Average patient waiting time in the same care delivery unit for selected months: 1985-87.

In December 1985, QCM data and member comments indicated a problem with extended waiting times. Once the problem was clearly documented, managers attempted to determine the probable cause of the problem and to recommend a solution, which required restructuring the workflow during the winter of 1986. During February, the waiting times dropped from an average of 34 minutes to 10 minutes. During the spring and summer, installation of a new computer system caused a rise again in waiting times, but by September the new systems were in place. Waiting times remained constant thereafter at an acceptable level, and member complaints ceased. Regular measurements using standardized procedures displayed in simple formats have been standards in industry for decades. In many instances, as in this example, these techniques are easily transferred to a health care setting. They can help managers identify problems, measure trends, and demonstrate improved performance.
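The kind of regular measurement and simple display described in this example might be sketched as below. The individual visit records and the 15-minute threshold are invented for illustration; only the monthly averages of 34 and 10 minutes echo figures reported in the text, and the function names are hypothetical.

```python
from statistics import mean


def monthly_averages(visits):
    """Average waiting time per month from (month_label, wait_minutes) pairs."""
    by_month = {}
    for month, wait in visits:
        by_month.setdefault(month, []).append(wait)
    return {month: mean(waits) for month, waits in by_month.items()}


def flag_months(averages, threshold_minutes):
    """Months whose average wait exceeds the chosen threshold."""
    return [m for m, avg in averages.items() if avg > threshold_minutes]


def text_chart(averages, minutes_per_mark=2):
    """One-glance text bar chart: one '#' per minutes_per_mark minutes."""
    lines = []
    for month, avg in averages.items():
        bar = "#" * round(avg / minutes_per_mark)
        lines.append(f"{month} {bar} {avg:.0f} min")
    return "\n".join(lines)


# Made-up visit-level waits chosen so the monthly averages are 34 and 10.
visits = [("1986-01", 30), ("1986-01", 38), ("1986-02", 8), ("1986-02", 12)]

averages = monthly_averages(visits)
problem_months = flag_months(averages, threshold_minutes=15)
chart = text_chart(averages)
```

The design choice here is deliberate simplicity: the same standardized calculation is repeated each period, and the display is plain enough for a manager to read at a glance.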

Measures of technical process and outcome, still the most important dimensions of quality, are being developed to ensure the capture of accurate, consistent data. Working closely with senior medical managers and clinician groups to formulate criteria and standards of care, QCM designs mechanisms to measure performance against such criteria. Currently, clinical data are collected in a number of routine ways, some involving searches through Harvard Community Health Plan's automated medical record systems and some involving detailed, traditional explicit manual reviews. Computer programs have been written for the automated medical record to identify categories of patients whose records are then scanned to determine if appropriate management has occurred. For example, software in the automated record system identifies women with abnormal pap smears and automatically notifies clinicians if appropriate followup has not occurred in a time interval specified by Harvard Community Health Plan clinical staff. Similar systems exist for following up negative rubella screens, diabetic patients, patients on lithium, patients on Coumadin, and children with urinary tract infections. These reminders to the physicians have been designed primarily as a tool for physicians to use in managing patients, but the program also collects data on the proportion of patients whose care meets the established criteria.
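The logic of such a reminder system, which both prompts clinicians and yields a rate of criterion-conforming care, can be sketched roughly as follows. This is not HCHP's software: the record fields, the 60-day interval, the status names, and the sample patients are all hypothetical, and an actual interval would be set by clinical staff.

```python
from datetime import date, timedelta


def classify(patient, max_days, today):
    """Status of follow-up for one patient with an abnormal result."""
    deadline = patient["abnormal_result_date"] + timedelta(days=max_days)
    followup = patient.get("followup_date")
    if followup is not None:
        return "met" if followup <= deadline else "late"
    return "overdue" if today > deadline else "pending"


def reminders(patients, max_days, today):
    """Ids of patients whose clinicians should be notified (no follow-up yet)."""
    return [p["id"] for p in patients if classify(p, max_days, today) == "overdue"]


def proportion_meeting_criterion(patients, max_days, today):
    """Share of evaluable patients followed up in time; pending cases excluded."""
    statuses = [classify(p, max_days, today) for p in patients]
    evaluable = [s for s in statuses if s != "pending"]
    if not evaluable:
        return None
    return evaluable.count("met") / len(evaluable)


# Made-up records for illustration only.
patients = [
    {"id": "p1", "abnormal_result_date": date(1987, 1, 10), "followup_date": date(1987, 2, 1)},
    {"id": "p2", "abnormal_result_date": date(1987, 2, 1), "followup_date": None},
    {"id": "p3", "abnormal_result_date": date(1987, 5, 15), "followup_date": None},
    {"id": "p4", "abnormal_result_date": date(1987, 1, 5), "followup_date": date(1987, 4, 20)},
]

today = date(1987, 6, 1)
to_notify = reminders(patients, max_days=60, today=today)
rate = proportion_meeting_criterion(patients, max_days=60, today=today)
```

Here one pass over the records serves both purposes named in the text: `reminders` supports patient management, and `proportion_meeting_criterion` supplies the measurement.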

A database system built on a microcomputer now includes information on all recent Harvard Community Health Plan deliveries, approximately 2,000 births so far. Reports are generated from this system to display prenatal care patterns, Cesarean section rates, complication rates, rates of high-risk pregnancies, birth weights, and neonatal death rates.
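As a rough illustration of the kind of report such a delivery database might produce, the sketch below computes three summary rates from a handful of made-up records. The field names and functions are hypothetical, every record is assumed to be a live birth, and the 2,500-gram threshold is the conventional definition of low birth weight rather than a figure from the article.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Delivery:
    """One delivery record (hypothetical fields; assumed to be a live birth)."""
    cesarean: bool
    birth_weight_g: int
    neonatal_death: bool


def rate(records: List[Delivery], predicate: Callable[[Delivery], bool],
         per: float) -> float:
    """Number of records satisfying `predicate`, expressed per `per` records."""
    if not records:
        raise ValueError("cannot compute a rate from zero records")
    return per * sum(1 for r in records if predicate(r)) / len(records)


def delivery_report(records: List[Delivery]) -> Dict[str, float]:
    """Summary rates of the kind a periodic obstetric report might display."""
    return {
        "cesarean_per_100": rate(records, lambda r: r.cesarean, 100.0),
        "low_birth_weight_per_100": rate(
            records, lambda r: r.birth_weight_g < 2500, 100.0),
        "neonatal_deaths_per_1000": rate(
            records, lambda r: r.neonatal_death, 1000.0),
    }


# Made-up records for illustration only; not data from the article.
SAMPLE_DELIVERIES = [
    Delivery(cesarean=True, birth_weight_g=3400, neonatal_death=False),
    Delivery(cesarean=False, birth_weight_g=2300, neonatal_death=False),
    Delivery(cesarean=False, birth_weight_g=3100, neonatal_death=False),
    Delivery(cesarean=True, birth_weight_g=2900, neonatal_death=False),
]

report = delivery_report(SAMPLE_DELIVERIES)
```

Keeping each measure as a predicate over a record makes it straightforward to add further rates, such as complications or high-risk pregnancies, without changing the reporting code.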

The examples mentioned here provide an overview of the type of work underway at HCHP. Goals in the short term are to develop measurements in all of the identified dimensions of quality, test them, assess their value, eliminate those not viewed as useful, and, ultimately, weight the dimensions according to their importance to provider and patient populations.

In addition to its general measurement activities, the Quality-of-Care Measurement Department also organizes case reviews of significant incidents. These in-depth studies have proven very useful in identifying broad inefficiencies in systems or areas of potential hazard. Although much more subjective in nature, the historical reconstruction of a failure reveals rich information about how, when, and where problems are most likely to arise. Quality-of-Care Measurement staff also initiate and assist in the design and implementation of special projects, serve on organization-wide task forces, and consult with HMO staff in a wide variety of situations, often with committees of managers and clinicians organized at local care sites.

The Quality-of-Care Measurement effort at Harvard Community Health Plan, though innovative in some respects, has taken only the first steps towards a truly applied technology for quality assessment and control in health care. In full flower, such a technology would offer a wide range of tools, tested for validity and reliability, to assess quality in its several dimensions and to display results in formats familiar and friendly to purchasers and producers of care. It would, further, fit comfortably into an overall organizational strategy for quality control, in which measurement is not an end but a means for continual improvement of health care and of the systems which produce that care.

Acknowledgments

The authors are grateful to Ms. Deborah Alessi for her assistance in preparation of this article.

References

- Brook RH, Appel FA. Quality-of-care assessment: Choosing a method for peer review. New England Journal of Medicine. 1973;288:1323–1329. doi: 10.1056/NEJM197306212882504. [DOI] [PubMed] [Google Scholar]

- Brook RH. Critical issues in the assessment of quality-of-care and their relationship to HMO's. Journal of Medical Education. 1973;48:114–134. doi: 10.1097/00001888-197304000-00028. [DOI] [PubMed] [Google Scholar]

- Ciocco A, et al. Statistics on clinical services to new patients in medical groups. Public Health Reports. 1950 Jan.65:99–115. [Google Scholar]

- Ciocco A. On indices for the appraisal of health departmental activities. Journal of Chronic Diseases. 1960 May;11:509–522. doi: 10.1016/0021-9681(60)90015-1. [DOI] [PubMed] [Google Scholar]

- Crosby PP. Quality is Free: The Art of Making Quality Certain. New York: McGraw-Hill Book Co.; 1979. [Google Scholar]

- Deming WE. Quality, Productivity and Competitive Position. Cambridge, Mass.: MIT Center for Advanced Engineering Study; 1982. [Google Scholar]

- Donabedian A. Evaluating the quality of medical care. Milbank Memorial Fund Quarterly. 1966 Jul;44(Part 2) [PubMed] [Google Scholar]

- Donabedian A. Explorations in Quality Assessment and Monitoring, Volume I, The Definition of Quality and Approaches to its Assessment. Ann Arbor, Mich.: Health Administration Press; 1980. [Google Scholar]

- Donabedian A. Explorations in Quality Assessment and Monitoring, Volume II, The Criteria and Standards of Quality. Ann Arbor, Mich.: Health Administration Press; 1982. [Google Scholar]

- Donabedian A. The Methods and Findings of Quality Assessment and Monitoring, An Illustrated Analysis. Ann Arbor, Mich.: Health Administration Press; 1985. [Google Scholar]

- Fitzpatrick TB, Riedel DC, Payne BC. Criteria of effectiveness of hospital use. In: McNerney WJ, Study Staff, editor. Hospital and Medical Economics: Services, Costs, Methods of Payment and Controls. I. Chicago: Hospital Research and Educational Trust; 1962. [Google Scholar]

- Greenfield S, Cretin S, Worthman L, et al. Comparison of a criteria map to a criteria list in quality-of-care assessment for patients with chest pain: The relation of each to outcome. Medical Care. 1981 Mar.19:255–272. doi: 10.1097/00005650-198103000-00002. [DOI] [PubMed] [Google Scholar]

- Greenfield S, Lewis CE, Kaplan SH, et al. Peer review by criteria mapping: Criteria for diabetes mellitus: The use of decision making in chart audit. Annals of Internal Medicine. 1975;83:761–770. doi: 10.7326/0003-4819-83-6-761. [DOI] [PubMed] [Google Scholar]

- Hulka BS, Roram FJ, Parkerson GR, Jr, et al. Peer review in ambulatory care: Use of explicit criteria and implicit judgements. Medical Care. 1979;17(Supplement):1–73. [PubMed] [Google Scholar]

- Juran J. Managerial Breakthrough. New York: McGraw-Hill Book Co.; 1964. [Google Scholar]

- Lembcke PA. Evolution of medical audit. Journal of the American Medical Association. 1967 Feb.199:543–550. [PubMed] [Google Scholar]

- Lembcke PA. Medical auditing by scientific methods: Illustrated by major female pelvic surgery. New England Journal of Medicine. 1956 Oct.162:646–655. doi: 10.1001/jama.1956.72970240010009. [DOI] [PubMed] [Google Scholar]

- Morehead MA, Donaldson RS, et al. A Study of the Quality of Hospital Care Secured by a Sample of Teamster Family Members in New York City. New York: Columbia University, School of Public Health and Administrative Medicine; 1964. [Google Scholar]

- Morehead MA. The medical audit as an operational tool. American Journal of Public Health. 1967 Sept.57:1643–1656. doi: 10.2105/ajph.57.9.1643. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Payne B, Lyons T, Dwarshius L, et al. The Quality of Medical Care: Evaluation and Improvement. Chicago: Hospital Research and Educational Trust; 1976. [Google Scholar]

- Sheps MC. Approaches to the quality of hospital care. Public Health Reports. 1955 Sept.70:877–886. [PMC free article] [PubMed] [Google Scholar]

- Rutstein DD, Berenberg W, Chalmers TC, et al. Measuring the quality of medical care: A clinical method. New England Journal of Medicine. 1976;294:582–588. doi: 10.1056/NEJM197603112941104. [DOI] [PubMed] [Google Scholar]

- Wennberg JE, Gittlesohn A. Variations in medical care among small areas. Scientific American. 1982;246:120–134. doi: 10.1038/scientificamerican0482-120. [DOI] [PubMed] [Google Scholar]

- Williamson JW. Evaluating quality of patient care: A strategy relating outcome and process assessment. Journal of the American Medical Association. 1971 Oct.218:564–569. [PubMed] [Google Scholar]