Abstract

Contemporary theories of the medial temporal lobe (MTL) suggest that there are functional differences between the MTL cortex and the hippocampus. High-resolution functional magnetic resonance imaging and multivariate pattern analysis were used to test whether categories of images could be classified from patterns of activity in MTL subregions, with the hypothesis that the hippocampus would be less representationally categorical than the MTL cortex. Results revealed significant classification accuracy for faces versus objects and faces versus scenes in MTL cortical regions (parahippocampal cortex and perirhinal cortex), with little evidence for category discrimination in the hippocampus. MTL cortical regions showed significantly greater classification accuracy than the hippocampus. The hippocampus showed significant classification accuracy for images compared to a non-mnemonic baseline task, suggesting that it responded to the images. Classification accuracy in a region of interest encompassing retrosplenial cortex and the posterior cingulate cortex posterior to retrosplenial cortex (RSC/PCC) showed a similar pattern of results to parahippocampal cortex, supporting the hypothesis that these regions are functionally related. The results suggest that parahippocampal cortex, perirhinal cortex, and RSC/PCC are representationally categorical and the hippocampus is more representationally agnostic, which is concordant with the hypothesis of the role of the hippocampus in pattern separation.

Keywords: fMRI, MVPA, Parahippocampal Cortex, Perirhinal Cortex, Pattern Separation

Introduction

The Complementary Learning Systems (CLS) model posits that fundamental computational trade-offs have led the brain to develop multiple memory systems. In particular, it advances the notion that one system—or set of systems—is specialized for extracting statistical regularities of the world through gradual, interleaved learning and another is specialized for rapid, arbitrary associative learning via pattern separation (McClelland et al., 1995; O’Reilly and Rudy, 2000; O’Reilly and Norman, 2002; Norman and O’Reilly, 2003; Norman, 2010). Pattern separation transforms overlapping input patterns into more dissimilar patterns, and hence could theoretically allow rapid learning of novel information without high levels of interference to existing, potentially similar memories. Using the CLS framework, Norman (2010) posited that patterns of activity in the MTL cortex (MTLC) allow computation of “summed similarity” (i.e., similar stimuli elicit similar patterns of activity) while “the hippocampus cannot compute summed similarity” due to its pattern-separated representations (i.e., similar stimuli elicit unique patterns of activity). Neither form of learning or representation alone would provide an adaptive memory system, leading to the need for multiple memory systems operating under different computational principles.

Rodent studies provided evidence supporting the hippocampus’ role in pattern separation (Gilbert et al., 1998; Leutgeb et al., 2005; Leutgeb et al., 2007; Leutgeb and Leutgeb, 2007; Potvin et al., 2009; Talpos et al., 2010; Morris et al., 2012). Supporting the CLS model’s predictions of behavioral changes caused by hippocampal lesions (Norman and O’Reilly, 2003; Norman, 2010), investigations of patients with selective hippocampal damage revealed deficits in behavioral pattern separation tasks, with relative sparing of traditional recognition memory (Holdstock et al., 2002, 2005; Mayes et al., 2002; Duff et al., 2012; Kirwan et al., 2012; but see Reed and Squire, 1997; Stark and Squire, 2003; Bayley et al., 2008). Additionally, recent functional magnetic resonance imaging (fMRI) studies have shown activity signals consistent with pattern-separation in the human hippocampus (e.g., Bakker et al., 2008; Lacy et al., 2011; Motley and Kirwan, 2013; for across-species review of pattern separation see Yassa and Stark, 2011). These studies leveraged the repetition-suppression effect, in which different levels of brain activity are observed to a first presentation of a stimulus compared to a repeated presentation of a stimulus, to assess whether activity in different regions was consistent with pattern separation. The hippocampus showed activity to related lure images that was more similar to first presentations than it was to repeat presentations, hence supporting the hypothesized role of the human hippocampus in pattern separation.

Multivariate pattern analysis (MVPA; for reviews see Haynes and Rees, 2006; Norman et al., 2006) investigates patterns of activity, and can be used to more directly test the aforementioned hypothesis that the MTLC can compute summed similarity while the hippocampus cannot. We refer to brain regions that show significant classification accuracy between categories of images as being representationally categorical, and we coin the term “representationally agnostic” to refer to brain regions that are not representationally categorical. Providing evidence that the MTLC is representationally categorical would support its hypothesized ability to compute summed similarity because it would suggest that similar items (items within a category) are represented similarly (with distinct representations across categories), while providing evidence that the hippocampus is far more representationally agnostic (i.e., category classification accuracy that is consistently at or near chance) would support its hypothesized role in pattern separation.

Previous studies suggested that the MTLC is more representationally categorical than the hippocampus (Diana et al., 2008; Liang et al., 2013; LaRocque et al., 2013). Two of these studies used blocked designs (Diana et al., 2008; Liang et al., 2013), thus precluding the study of representations of individual stimuli. LaRocque et al. (2013) used a rapid event-related design, presenting the same stimuli in each run, thus allowing use of the general linear model to extract stimulus-specific estimates of activity. Here we report the results of two experiments in which we used a slow event-related design to allow for the extraction of patterns of activity at the level of individual trials. Using MVPA, we aimed to replicate previous results using individual trial patterns of activity and to rule out the possibility that the hippocampus is more representationally agnostic than the MTLC due to: 1) lack of responsiveness to the stimuli, 2) differing numbers of voxels across the MTLC and the hippocampus, or 3) noisier data.

In addition to testing this central hypothesis, we examined category representation within a region of interest encompassing retrosplenial cortex (RSC; traditionally defined as Brodmann areas 29 and 30; Vann et al., 2009) and the posterior cingulate cortex (PCC) posterior to RSC. Recent accounts have suggested that RSC should be considered as part of the network of regions involved in memory (Vann et al., 2009; Ranganath and Ritchey, 2012). Specifically, Ranganath and Ritchey (2012) posited that there are two cortical systems involved in memory: one in the anterior temporal lobe that includes PRC, and another in the posterior cortex that includes PHC, RSC, and PCC. Several recent accounts have suggested that PHC, RSC, and PCC are anatomically situated to be involved in representing contextual information, including scenes or spatial information (Buffalo et al., 2006; Vann et al., 2009; Wixted and Squire, 2011; Ranganath and Ritchey, 2012). We predicted that RSC/PCC would show a similar pattern of classification results to those in PHC. Finally, we performed a variant of informational connectivity (Coutanche and Thompson-Schill, 2012) in which we investigated the correlation between the trial-by-trial multivariate pattern discriminability in PHC and RSC/PCC. Given the predicted role of these regions in scene and spatial representation, we hypothesized that the multivariate pattern discriminability in these regions would be correlated during an experiment that contained images of scenes.

Methods and Materials

Participants

A total of 29 participants (12 males, 17 females) underwent MRI scanning. Three were excluded because half or fewer of the total functional runs were obtained, one was excluded for sleeping during functional scans, and one was excluded due to excessive motion; therefore, 24 participants were used for analysis, with 12 in each of two experiments. Participants consented to the procedures in accordance with the Institutional Review Board of the University of California, Irvine and received $25 for their first hour of participation and $5 for each additional 20 minutes.

Stimuli

In Experiment 1, stimuli consisted of face and object images (12 each per run, totaling 192 each over 16 runs). In Experiment 2, stimuli consisted of face and scene images (8 each per run, totaling 128 each over 16 runs). Face images in both experiments included the hair and neck of the depicted individual. Half of the stimuli in each run were novel images, one quarter were repeated images, and the remaining quarter were related lure images. The novel versus repeated status of images was irrelevant for the present experiment. Participants viewed images and were asked to indicate, using an MRI-compatible button box, whether they found the picture to be “pleasant” or “unpleasant.” Each picture was displayed for 4 seconds, followed by an 8-second interstimulus interval (ISI). To discourage mind wandering during the ISI, subjects performed an engaging perceptual but non-mnemonic “arrows” task (Stark and Squire, 2001), in which they indicated the direction (left or right) in which arrows were pointing on the screen (6 trials per ISI; the ISI was modeled after Kuhl et al., 2011, 2012).

In Experiment 2, subjects also performed an engaging, but non-mnemonic perceptual baseline (PB) task (Law et al., 2005; Mattfeld et al., 2010, 2011; Hargreaves et al., 2012; 8 per run, totaling 128 over 16 runs). In the current version, subjects made two successive judgments (totaling 4 seconds) about which of two boxes was brighter (on a random static background), followed by the arrows task during the 8-second ISI. To keep the perceptual baseline task challenging, the difference in brightness of the boxes was continuously titrated to keep subjects between 50 and 70 percent accurate.

Image Acquisition

Data were collected using a 3.0-T Philips scanner using a sensitivity encoding (SENSE) head coil at the Research Imaging Center at University of California, Irvine. A whole-brain 1.0 mm isotropic 3D magnetization-prepared rapid gradient echo (MP-RAGE) structural scan was collected. Functional data were acquired using a T2*-weighted echo planar imaging sequence (TE=26 ms, TR=2400 ms, 32 slices, 1.5 mm isotropic voxels). To allow for magnetic field stabilization, the first 5 TRs of each run were immediately discarded. One hundred and twenty volumes were collected in each functional run. The full experiment consisted of 16 runs; however, for technical reasons data were obtained (or processed) for fewer than 16 runs for 4 subjects (mean number of runs=15.8, minimum=14).

Preprocessing

Functional data were aligned to the subject’s MP-RAGE using AFNI’s align_epi_anat.py script (Saad et al., 2009). Data were quadratically detrended, high-pass filtered (f > 0.01 Hz), and minimally smoothed by a 2 mm FWHM Gaussian kernel, using AFNI (Cox, 1996). Data were then z-scored and three TRs (beginning at 2.4, 4.8, and 7.2 seconds after stimulus onset) were averaged to form individual trial activity patterns, using PyMVPA (Hanke et al., 2009).
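The construction of individual trial patterns described above can be illustrated with a minimal sketch. It is not the PyMVPA code used in the study: the function names are hypothetical, the data are plain Python lists, and it assumes that each voxel is z-scored over the whole run using the population standard deviation and that stimulus onsets coincide with the start of a TR, so that the TRs beginning 2.4, 4.8, and 7.2 seconds after onset are the next three volumes.

```python
from statistics import mean, pstdev

TR_SECONDS = 2.4  # repetition time reported under Image Acquisition


def zscore(series):
    """Z-score one voxel's time series (assumption: population SD, per run)."""
    mu, sd = mean(series), pstdev(series)
    return [(x - mu) / sd for x in series]


def trial_pattern(run_data, onset_tr, offsets=(1, 2, 3)):
    """Return one value per voxel: the mean of the z-scored TRs that begin
    2.4, 4.8 and 7.2 s after stimulus onset.

    run_data: list of voxel time series (one list of floats per voxel).
    onset_tr: index of the TR at which the stimulus appeared
              (assumption: onsets are aligned to the start of a TR).
    """
    z = [zscore(voxel) for voxel in run_data]
    return [mean(voxel[onset_tr + k] for k in offsets) for voxel in z]
```

With a 12-second trial (4-second picture plus 8-second ISI) and a 2.4-second TR, each trial spans five volumes, so under these assumptions the three averaged volumes fall within the trial that elicited them.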

Regions of Interest

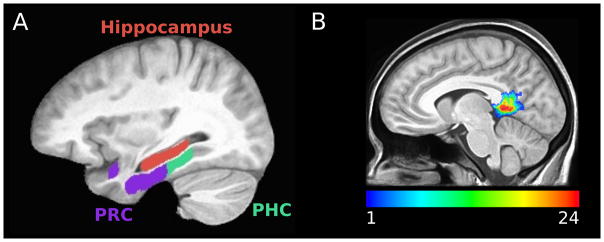

Anatomical regions of interest (ROI) for hippocampus, parahippocampal cortex (PHC), and perirhinal cortex (PRC) were manually segmented on a model template (see Figure 1A). PRC was labeled according to landmarks described by Insausti et al. (1998), and PHC was defined as the portion of the parahippocampal gyrus caudal to the perirhinal cortex and rostral to the splenium of the corpus callosum, as in our previous research (Stark and Okado, 2003; Kirwan and Stark, 2004; Law et al., 2005; Okado and Stark, 2005). Each participant’s MP-RAGE was aligned to the model template, using Advanced Normalization Tools (ANTs; Avants et al., 2008), allowing the model template ROIs to be warped to each subject’s original space using the inverse warp vectors. We utilized Freesurfer’s isthmus cingulate label (Desikan et al., 2006)—which encompasses RSC (traditionally defined as Brodmann’s areas 29 and 30; Vann et al., 2009) and PCC posterior to RSC—for our RSC/PCC ROI. The data were collected using a partial brain coverage acquisition box, resulting in slightly limited coverage of the superior aspect of RSC/PCC (see Figure 1B). Each participant’s ROIs were resampled to functional resolution and masked to contain only completely sampled voxels (mean number of voxels: hippocampus=1803, PHC=1270, PRC=1888, RSC/PCC = 655).

Figure 1.

ROI locations. (A) Hippocampus (red), parahippocampal cortex (green), and perirhinal cortex (purple) within model space. (B) For visualization, RSC/PCC participant masks were warped to template space to create an overlap map of the ROI across participants (24 participants total).

MVPA Analysis

Classification analysis was performed using PyMVPA (Hanke et al., 2009) and custom-written Python code (python.org), using the NeuroDebian package repository for Linux (Hanke and Halchenko, 2012). Linear support vector machine (SVM) analysis was carried out in each subject’s original space, using individual trial patterns of activity within each ROI (see Figure 1). The classification accuracy across each N-fold-leave-one-run-out cross validation was averaged to generate an average classification accuracy for each participant for each ROI. Two-tailed one sample t-tests of group data were used to assess significance of classification accuracy (compared to chance) and two-tailed paired t-tests were used to assess classification accuracy differences between ROIs. Resultant p-values were Bonferroni corrected for six comparisons (three ROIs and three comparisons between ROIs). Welch’s t-tests, which can account for unequal variance between samples, were used to assess significance of differences between experiments. R (cran.r-project.org) was used to perform all t-tests and to plot figures.
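The leave-one-run-out scheme and the Bonferroni correction can be sketched as follows. This is an illustration under stated assumptions rather than the analysis code: all function names are hypothetical, and a nearest-centroid rule stands in for the linear SVM purely so that the example runs with the Python standard library alone.

```python
def leave_one_run_out(runs):
    """Yield (held_out_run, train_indices, test_indices), one fold per run."""
    for held_out in sorted(set(runs)):
        train = [i for i, r in enumerate(runs) if r != held_out]
        test = [i for i, r in enumerate(runs) if r == held_out]
        yield held_out, train, test


def nearest_centroid_predict(train_X, train_y, test_X):
    """Stand-in classifier (NOT the linear SVM used in the study)."""
    centroids = {}
    for label in sorted(set(train_y)):
        rows = [x for x, y in zip(train_X, train_y) if y == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def sq_dist(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))

    return [min(centroids, key=lambda c: sq_dist(x, centroids[c]))
            for x in test_X]


def cross_validated_accuracy(X, y, runs, predict=nearest_centroid_predict):
    """Average the per-fold accuracies, holding out one run per fold."""
    fold_accuracies = []
    for _, train, test in leave_one_run_out(runs):
        predictions = predict([X[i] for i in train],
                              [y[i] for i in train],
                              [X[i] for i in test])
        hits = sum(p == y[i] for p, i in zip(predictions, test))
        fold_accuracies.append(hits / len(test))
    return sum(fold_accuracies) / len(fold_accuracies)


def bonferroni(p_values, n_comparisons):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    return [min(1.0, p * n_comparisons) for p in p_values]
```

Holding out whole runs keeps training and test trials in separate scanning runs, and passing `n_comparisons=6` mirrors the six comparisons described above.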

To test the hypothesis that RSC/PCC was representationally similar to PHC, we performed a variant of informational connectivity (Coutanche and Thompson-Schill, 2012). The original instantiation of informational connectivity utilized a correlational classifier to calculate the within minus between category similarity to create a vector of multivariate pattern discriminability on a TR-by-TR basis. Then the Spearman rank correlation between multivariate pattern discriminability in different regions (in their case a seed region and a spherical searchlight region) was calculated. Here we utilized SVM decision values (referred to as “estimates” in PyMVPA) on a trial-by-trial basis to test whether the multivariate pattern discriminability was correlated between PHC and RSC/PCC. To maximize similarity to the previously described method (Coutanche and Thompson-Schill, 2012), the value of multivariate pattern discriminability was set to be greater than zero when the classifier’s prediction was correct and less than zero when the classifier’s prediction was incorrect. Spearman’s rank correlation was calculated between the multivariate pattern discriminability vectors in PHC and RSC/PCC. Resultant values were Fisher’s r to z transformed.
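A minimal sketch of this variant of informational connectivity is given below. The function names are hypothetical, and one detail is an assumption: the text specifies only the sign of the discriminability value, so the sketch uses the magnitude of the SVM decision value, made positive for correct predictions and negative for incorrect ones.

```python
import math


def signed_discriminability(decision_values, correct):
    """Assumption: |decision value|, positive if the prediction was correct
    and negative if it was incorrect."""
    return [abs(d) if ok else -abs(d)
            for d, ok in zip(decision_values, correct)]


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = rank
        i = j + 1
    return ranks


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def spearman(a, b):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(a), average_ranks(b))


def fisher_z(r):
    """Fisher's r-to-z transform (undefined for |r| = 1)."""
    return math.atanh(r)
```

For one participant, `fisher_z(spearman(phc_vector, rsc_pcc_vector))` would give the value entered into the group-level t-tests, where the two vectors are the trial-by-trial outputs of `signed_discriminability` for each ROI.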

Results

Linear SVM classification analysis was performed using individual trial patterns of activity from all of the voxels within each ROI. The stimulus set contained novel as well as non-novel images within each run (see Methods). The same pattern of results was obtained when using the full stimulus set as when using the novel-only stimulus set, and paired t-tests revealed no significant differences between the two stimulus sets (all MTLC and hippocampal t(11) < 1.4, uncorrected p > 0.19); therefore, we limit our discussion to the results obtained from the full stimulus set because it contained more stimuli.

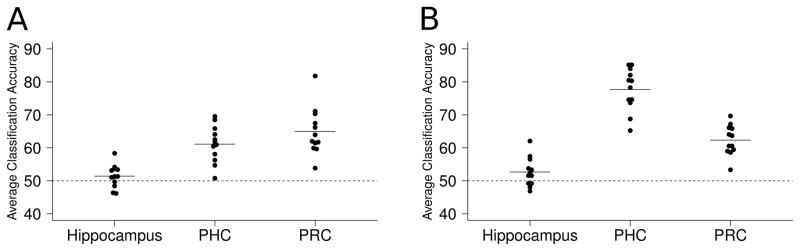

In Experiment 1, one sample t-tests revealed significant classification accuracy for faces versus objects in PHC and PRC (mean classification accuracy: PHC = 61.1%, PRC = 64.9%; both t(11) > 6.9, corrected p < 0.001) but not in the hippocampus (mean classification accuracy = 51.4%; t(11) = 1.41, uncorrected p = 0.19, see Figure 2A). Paired t-tests revealed significantly greater classification accuracy for faces versus objects in PHC and PRC than in the hippocampus (both t(11) > 4.9, corrected p < 0.005), with no significant difference between PHC and PRC (t(11) = 1.86, uncorrected p = 0.09, corrected p = 0.53).

Figure 2.

Linear SVM classification results. Each dot represents the average classification accuracy for one participant and each line represents the group ROI mean. (A) Experiment 1—faces versus objects. (B) Experiment 2—faces versus scenes. Classification accuracy was significantly greater than chance in MTLC but not in hippocampus, and was significantly greater in MTLC than in hippocampus. Classification accuracy for faces versus scenes was greater in PHC than PRC, and PHC classification accuracy was greater for faces versus scenes than faces versus objects.

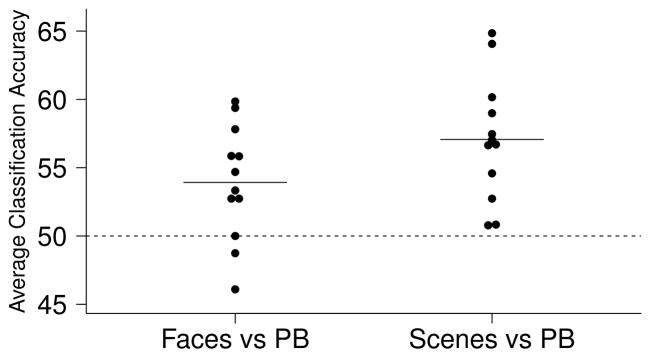

In Experiment 2, one sample t-tests revealed significant classification accuracy for faces versus scenes in PHC and PRC (mean classification accuracy: PHC = 77.7%, PRC = 62.3%; both t(11) > 9, corrected p < 0.001) with little evidence for above-chance category discrimination in the hippocampus (mean classification accuracy = 52.7%; t(11) = 2.11, uncorrected p = 0.06, corrected p = 0.35, see Figure 2B). Paired t-tests revealed significantly greater classification accuracy for faces versus scenes in PHC and PRC than in the hippocampus (both t(11) > 7, corrected p < 0.005) and in PHC than PRC (t(11) = 5.8, corrected p < 0.001). In contrast to classification results between the image categories, one-sample t-tests revealed significant classification accuracy for images versus the perceptual baseline task in the hippocampus (mean classification accuracy: faces vs PB = 53.9%, scenes vs PB = 57.1%; both t(11) > 3, p < 0.05, corrected for 6 comparisons for consistency with the previous analyses; see Figure 3).

Figure 3.

Linear SVM classification results of images versus the perceptual baseline task (Experiment 2). The hippocampus significantly classified faces versus perceptual baseline and scenes versus perceptual baseline.

Bonferroni corrected (for 3 comparisons), two-tailed Welch’s two sample t-tests were used to compare the classification accuracy results obtained from Experiment 1 and 2. This revealed significantly greater classification accuracy in PHC for faces versus scenes than faces versus objects (t = 6.75, corrected p < 0.001). Classification accuracy within PRC and within the hippocampus was not significantly different between Experiment 1 and 2 (both t < 1.1, uncorrected p > 0.3).
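For reference, the Welch statistic used for these between-experiment comparisons can be sketched as follows. The tests in the study were run in R; this standard-library illustration uses a hypothetical function name and returns only the t statistic and the Welch-Satterthwaite degrees of freedom, because the standard library has no t-distribution from which to obtain a p-value.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of
    freedom; the two samples may differ in size and in variance."""
    va = variance(a) / len(a)  # squared standard error of the mean of a
    vb = variance(b) / len(b)  # squared standard error of the mean of b
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

Because the degrees of freedom depend on the sample variances, they are generally not whole numbers, which is why no single df is reported alongside the between-experiment t values.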

In order to rule out the possibility that the previous results were driven by differing numbers of voxels across ROIs (or due to a differing number of noisy voxels), voxel selection was performed. The 200 voxels with the largest ANOVA F-scores of activation differences between the two stimulus categories were selected from the training data prior to classification analysis. A similar pattern of results emerged as the full-ROI method—paired t-tests revealed no significant differences between the results obtained from full-ROI and voxel selection methods (all t(11) < 1.1, uncorrected p > 0.3). In Experiment 1, one sample t-tests revealed significant classification accuracy for faces versus objects in PHC and PRC (mean classification accuracy: PHC = 59.1%, PRC = 64.9%; both t(11) > 7, corrected p < 0.001) but not in the hippocampus (mean classification accuracy = 50.2%, t(11) = 0.16, uncorrected p = 0.88). Paired t-tests revealed significantly greater classification accuracy for faces versus objects in PHC and PRC than the hippocampus (both t(11) > 5, corrected p < 0.005). In contrast to the full-ROI method, a paired t-test revealed marginally significantly greater classification accuracy for faces versus objects in PRC than PHC (t(11) = 3.14, corrected p = 0.056). In Experiment 2, one sample t-tests revealed significant classification accuracy for faces versus scenes in PHC and PRC (mean classification accuracy: PHC=78.1%; PRC=61.6%; both t(11) > 8.5, corrected p < 0.001) with little evidence for category discrimination in the hippocampus (mean classification accuracy=52.0%, t(11) = 2.17, uncorrected p = 0.053, corrected p = 0.32). Paired t-tests revealed significantly greater classification accuracy for faces versus scenes in PHC and PRC than the hippocampus (both t(11) > 5.5, corrected p < 0.005) and in PHC than PRC (t(11) = 6.58, corrected p < 0.001).
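The voxel selection step can be sketched as below, with hypothetical function names and plain Python lists. The important property is that F-scores are computed from the training trials of each cross-validation fold only, so that the held-out run does not influence which voxels are kept.

```python
def anova_f(groups):
    """One-way ANOVA F statistic for a list of groups of values."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    ss_within = sum((v - m) ** 2
                    for g, m in zip(groups, group_means) for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


def select_voxels(train_X, train_y, n_keep=200):
    """Return the indices of the n_keep voxels with the largest F-scores.

    train_X: training trial patterns only (one list of voxel values per
             trial); test trials must not be passed in.
    train_y: category label of each training trial.
    """
    labels = sorted(set(train_y))
    scores = []
    for v in range(len(train_X[0])):
        groups = [[row[v] for row, y in zip(train_X, train_y) if y == label]
                  for label in labels]
        scores.append(anova_f(groups))
    ranked = sorted(range(len(scores)), key=lambda v: scores[v], reverse=True)
    return sorted(ranked[:n_keep])
```

Fixing `n_keep` at 200 for every ROI equates the number of features given to the classifier, which is what allows this analysis to address differences in ROI size.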

In order to rule out the possibility that the hippocampus was representationally categorical but was not showing significant classification accuracy due to noisier individual trial patterns of activity compared to MTLC, we generated run averaged patterns of activity by averaging all of the individual trial patterns of activity within a category for each run. The results obtained from this analysis can be thought of as being similar to those obtained from a block design. A similar pattern of results was observed, with significantly greater classification accuracy in the MTLC using run averaged patterns of activity compared to individual trial patterns of activity for faces versus objects (both t(11) > 8, corrected p < 0.001; but not in the hippocampus, t(11) = 0.16, uncorrected p = 0.87) and for faces versus scenes (both t(11) > 8, corrected p < 0.001; but not in the hippocampus, t(11) = 1.26, uncorrected p = 0.23). In Experiment 1, one sample t-tests revealed significant classification accuracy of faces versus objects in PHC and PRC (mean classification accuracy: PHC = 82.0%, PRC = 91.4%; both t(11) > 8.5, corrected p < 0.001) but not in the hippocampus (mean classification accuracy = 52.1%, t(11) = 0.47, uncorrected p = 0.65). Paired t-tests revealed significantly greater classification accuracy for faces versus objects in PHC and PRC than the hippocampus (both t(11) > 4.5, corrected p < 0.005). In contrast to the full-ROI method using individual trial patterns of activity (but consistent with the voxel selection method using individual trial patterns of activity), a paired t-test revealed marginally significantly greater classification accuracy for faces versus objects in PRC than PHC (t(11) = 3.17, corrected p = 0.053).
In Experiment 2, one sample t-tests revealed significant classification accuracy for faces versus scenes in PHC and PRC (mean classification accuracy PHC = 97.1%, PRC = 81.1%; both t(11) > 15, corrected p < 0.001) with little evidence for category discrimination in the hippocampus (mean classification accuracy = 56.3%, t(11) = 2.13, uncorrected p = 0.06, corrected p = 0.34). Paired t-tests revealed significantly greater classification accuracy for faces versus scenes in PHC and PRC than the hippocampus (both t(11) > 6.5, corrected p < 0.001) and significantly greater classification accuracy in PHC than PRC (t(11) = 7.11, corrected p < 0.001).
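The run averaging used in this control analysis can be sketched as follows. The function name is hypothetical; the sketch simply averages, voxel by voxel, all trial patterns that share a run and a category, yielding one pattern per category per run.

```python
from collections import defaultdict


def run_average(X, y, runs):
    """Average the trial patterns within each (run, category) pair.

    X: list of trial patterns (one list of voxel values per trial).
    y: category label of each trial.
    runs: run label of each trial.
    Returns the averaged patterns with their category and run labels.
    """
    grouped = defaultdict(list)
    for pattern, label, run in zip(X, y, runs):
        grouped[(run, label)].append(pattern)

    avg_X, avg_y, avg_runs = [], [], []
    for run, label in sorted(grouped):
        rows = grouped[(run, label)]
        avg_X.append([sum(col) / len(rows) for col in zip(*rows)])
        avg_y.append(label)
        avg_runs.append(run)
    return avg_X, avg_y, avg_runs
```

The averaged patterns keep their run labels, so they can be passed to the same leave-one-run-out classification as the individual trial patterns, with two test patterns per fold.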

To investigate whether there were differences between anterior and posterior hippocampus that might have been obscured by the overall poor classification performance, separate analyses were run on each region. The most posterior slice of anterior hippocampus was labeled as the most posterior coronal slice in which the uncal apex was visible (Poppenk and Moscovitch, 2011). One-sample t-tests revealed that classification accuracy for faces versus objects was not significantly different from chance in either region (average classification accuracy: anterior hippocampus = 49.3%, t(11) = 0.85, uncorrected p = 0.42; posterior hippocampus = 51.4%, t(11) = 1.26, uncorrected p = 0.23) and a paired t-test revealed that classification accuracy in posterior and anterior hippocampus was not significantly different (t(11) = 1.42, uncorrected p = 0.18). One-sample t-tests revealed that classification accuracy for faces versus scenes was not significantly different from chance in either region (average classification accuracy: anterior hippocampus = 51.8%, t(11) = 1.08, uncorrected p = 0.30; posterior hippocampus = 51.5%, t(11) = 1.05, uncorrected p = 0.31) and a paired t-test revealed that classification accuracy in posterior and anterior hippocampus was not significantly different (t(11) = 0.13, uncorrected p = 0.90).

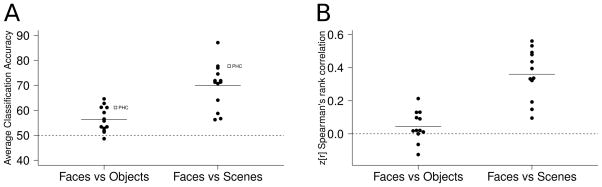

To test the hypothesis that RSC/PCC was representationally similar to PHC, we performed classification analysis as well as a variant of informational connectivity. In Experiment 1, a one-sample t-test revealed significant classification accuracy for faces versus objects in RSC/PCC (mean classification accuracy = 55.9%, t(11) > 4, p < 0.005, see Figure 4A). In Experiment 2, a one-sample t-test revealed significant classification accuracy for faces versus scenes in RSC/PCC (mean classification accuracy = 69.3%, t(11) > 7, p < 0.001, see Figure 4A). Similar to the results in PHC, a two-tailed Welch’s two sample t-test revealed significantly greater classification accuracy for faces versus scenes than faces versus objects (t > 4, p < 0.001). We used trial-by-trial variability in the SVM decision value to investigate the correlation between multivariate pattern discriminability in PHC and RSC/PCC (see Methods). Two-tailed one sample t-tests revealed a significant Fisher’s r to z transformed Spearman’s rank correlation between the multivariate pattern discriminability in these regions for faces versus scenes (mean = 0.36, t(11) = 8.2, p < 0.001, see Figure 4B) but not for faces versus objects (mean Spearman’s rank correlation = 0.045, t(11) = 1.7, p = 0.12). A two-tailed Welch’s two sample t-test revealed significantly greater Fisher’s r to z transformed Spearman’s rank correlation between PHC and RSC/PCC for faces versus scenes than for faces versus objects (t = 6.13, p < 0.001).

Figure 4.

(A) Linear SVM classification results in RSC/PCC revealed a similar pattern of results to PHC: significant classification accuracy for both faces versus objects and faces versus scenes, with greater accuracy for faces versus scenes. The white squares represent mean classification results in PHC. (B) Significant informational connectivity between RSC/PCC and PHC was observed in Experiment 2 (faces versus scenes) but not Experiment 1 (faces versus objects).

Discussion

Using biologically-motivated computational models of the MTL, Norman (2010) suggested that the MTLC can compute summed similarity while the hippocampus cannot—a pattern of results caused by differences in the degree of pattern separation in the MTLC and the hippocampus. To test the summed similarity hypothesis, we used high resolution fMRI and MVPA to study whether the MTLC was more representationally categorical than the hippocampus. If the MTLC was more representationally categorical than the hippocampus, it would provide support for the hypothesis that the MTLC is more functionally specialized for computing summed similarity than the hippocampus. Consistent with the hypothesis, linear SVM analysis revealed significant classification accuracy of faces versus objects and faces versus scenes in PHC and PRC with limited evidence at best for category discrimination in the hippocampus. Additionally, greater classification accuracy was observed in PHC and PRC than the hippocampus. These results support the hypothesis that MTLC can compute summed similarity because stimuli within a category (i.e., similar stimuli) elicited similar patterns of activity (with distinct patterns of activity across image categories), while suggesting that the hippocampus cannot.

The fact that the hippocampus showed little evidence for classification accuracy for faces versus objects and faces versus scenes suggests that the hippocampus is not representationally categorical for these categories. As suggested by Diana et al. (2008), the lack of evidence for category representation in the hippocampus does not rule out the possibility that it is representationally categorical at a more fine-grained scale than our fMRI resolution. However, the fact that we observed significant classification accuracy for images (faces and scenes) compared to perceptual baseline suggests that the hippocampus responded to the images, and therefore the more representationally agnostic results observed in the hippocampus than in the other regions are consistent with our hypothesis.

We utilized voxel selection to control for differing numbers of voxels across ROIs, as well as differing numbers of noisy voxels across ROIs. A similar pattern of results emerged, suggesting that the results were not driven by differing numbers of voxels between MTLC and hippocampus. We used run averaged patterns of activity to control for differences in the noisiness of single trial patterns of activity across ROIs. Once again, a similar pattern of results emerged, suggesting that the results were not driven by noisier single trial patterns of activity within the hippocampus. Taken together, our results provide novel evidence that the hippocampus is more representationally agnostic than MTLC, a pattern of results that is not completely driven by non-responsiveness, number of voxels, or noisier individual trial patterns of activity. These results are concordant with the hypothesis that the hippocampus is involved in pattern separation (McClelland et al., 1995; O’Reilly and Rudy, 2000; O’Reilly and Norman, 2002; Norman and O’Reilly, 2003; Norman, 2010) as well as theories that suggest that the hippocampus plays a domain general role in memory formation (Eichenbaum and Cohen, 2001; Davachi, 2006; Azab et al., in press).

Our results are in agreement with recent studies using MVPA to investigate representations in PHC, PRC, and the hippocampus (Diana et al., 2008; Liang et al., 2013; LaRocque et al., 2013), which all found greater category representation in the MTLC than the hippocampus. As mentioned in the Introduction, we hypothesize that a core function of the hippocampus is to perform pattern separation and amplify the dissimilarity across representations, which would result in more representationally agnostic patterns of activity than the MTLC. We expect that under certain conditions, above chance classification accuracy for categories could be observed in the hippocampus; however, we posit that even under these conditions the hippocampus would be more representationally agnostic than the MTLC. In fact, Liang et al. (2013) found evidence that posterior hippocampus was representationally categorical for scenes, while anterior hippocampus was more representationally agnostic. We did not observe significant classification accuracy in posterior (or anterior) hippocampus for faces versus scenes, suggesting that our hippocampal results were not driven by differences between these subregions. Overall, however, the results of Liang et al. (2013) support our conclusions because they found that the hippocampus (including posterior hippocampus) was more representationally agnostic than the MTLC.

Two of the previously mentioned studies also found differences in category representation between PHC and PRC (Liang et al., 2013; LaRocque et al., 2013). Consistent with previous results, in Experiment 2, greater classification accuracy for faces versus scenes was observed in PHC than PRC. Comparing the results from Experiment 1 and 2, PHC showed greater classification accuracy for faces versus scenes than faces versus objects. Taken together, these results suggest that PHC may be preferentially tuned for representation of scenes; however, the fact that PHC showed significant classification accuracy for faces versus objects suggests that it is not involved solely in representation of scenes. We found marginally significantly greater classification accuracy in PRC than PHC for faces versus objects when using voxel selection of individual trial patterns of activity and when using run averaged patterns of activity, suggesting that PRC may be relatively specialized for representing object or face stimuli. Taken together, our findings support the “binding of item and context” model (Eichenbaum et al., 2007; Diana et al., 2007), which posits that PHC is relatively specialized for representing contexts (including spatial and non-spatial contextual information, as well as scenes, based on anatomical input from neocortical structures in the “where” pathway) and PRC is relatively specialized for representing items (e.g., objects, based on anatomical input from neocortical structures in the “what” pathway). Furthermore, our findings add to studies implicating PHC’s role in scene representation (Litman et al., 2009; Liang et al., 2013; see Davachi, 2006, for a review of studies using univariate analysis of fMRI to investigate domain specificity in PHC, PRC, and the hippocampus).

Recent theories suggest that RSC should be included as part of a larger MTL-cortical memory network (Vann et al., 2009; Ranganath and Ritchey, 2012). Expanding on the “binding of item and context” model, Ranganath and Ritchey (2012) hypothesized that PHC, RSC, and PCC are part of a posterior memory network involved in contextual representation. Consistent with our hypothesis that PHC and RSC/PCC are representationally similar, we observed a pattern of results in RSC/PCC similar to that in PHC: significant classification of faces versus objects and faces versus scenes, with significantly greater classification for faces versus scenes than for faces versus objects. As with PHC, these findings suggest that RSC/PCC might be preferentially tuned for scene or spatial representation; however, the fact that it showed significant classification for faces versus objects suggests that it is not tuned solely for the representation of scenes or spatial information. The fact that RSC/PCC showed significant classification in both experiments further highlights the distinctness of the representationally agnostic results observed in the hippocampus.

A variant of informational connectivity was utilized to investigate the correlation between trial-by-trial multivariate pattern discriminability in PHC and RSC/PCC. As discussed previously (Coutanche and Thompson-Schill, 2012), informational connectivity provides novel insight into the functional synchrony between brain regions by measuring the relatedness in multivariate pattern discriminability over time. Consistent with the hypothesis that PHC and RSC/PCC are functionally related and involved in representing scenes or spatial information, we observed a significant correlation between the multivariate pattern discriminability on a trial-by-trial basis in these regions for an experiment with face and scene stimuli but not for an experiment with face and object stimuli. These findings suggest that there may be a stimulus-dependent modulation in the correlation between multivariate pattern discriminability across these regions.
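The idea of correlating trial-by-trial pattern discriminability across two regions can be illustrated with a minimal sketch. It is not the analysis code used in this study: the function names, the toy voxel values, the leave-one-trial-out category means, and the use of a Pearson correlation (a rank correlation could be substituted) are all assumptions made for illustration.

```python
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def class_means(patterns, labels, skip):
    """Mean pattern per category, leaving out the trial at index `skip`."""
    means = {}
    for category in set(labels):
        rows = [p for i, (p, lab) in enumerate(zip(patterns, labels))
                if lab == category and i != skip]
        means[category] = [mean(voxel) for voxel in zip(*rows)]
    return means


def discriminability(patterns, labels):
    """Per-trial score: similarity to own category minus best other category."""
    scores = []
    for i, (pattern, label) in enumerate(zip(patterns, labels)):
        means = class_means(patterns, labels, skip=i)
        own = pearson(pattern, means[label])
        other = max(pearson(pattern, m)
                    for lab, m in means.items() if lab != label)
        scores.append(own - other)
    return scores


def informational_connectivity(roi_a, roi_b, labels):
    """Correlate the two regions' trial-by-trial discriminability scores."""
    return pearson(discriminability(roi_a, labels),
                   discriminability(roi_b, labels))


# Hypothetical toy data: 6 trials x 3 voxels per region (not real fMRI values).
labels = ["face", "scene", "face", "scene", "face", "scene"]
roi_a = [[1.0, 0.2, 0.1], [0.1, 0.3, 1.0], [0.9, 0.1, 0.3],
         [0.2, 0.1, 0.8], [0.5, 0.6, 0.4], [0.3, 0.2, 0.9]]
roi_b = [[0.8, 0.3, 0.2], [0.2, 0.4, 0.9], [0.7, 0.2, 0.1],
         [0.1, 0.3, 0.7], [0.4, 0.5, 0.6], [0.2, 0.1, 1.0]]

scores_a = discriminability(roi_a, labels)
ic_ab = informational_connectivity(roi_a, roi_b, labels)
ic_aa = informational_connectivity(roi_a, roi_a, labels)
print("per-trial discriminability (region A):", scores_a)
print("informational connectivity A-B:", ic_ab)
```

In this sketch a high value of `ic_ab` means that trials on which the category is easy to read out from one region also tend to be easy to read out from the other, which is the sense of "functional synchrony" described above.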

In contrast to the relative lack of evidence of category representation in the human hippocampus (Diana et al., 2008; LaRocque et al., 2013; but see Liang et al., 2013), recent evidence using linear SVM analysis showed successful classification between distinct locations in a virtual environment (Hassabis et al., 2009), between memories of highly overlapping video clips (Chadwick et al., 2010, 2011), and between highly similar scene images (Bonnici et al., 2012). Additionally, these studies observed significantly higher classification accuracy in the hippocampus than in the MTLC. These results provide evidence for the role of the human hippocampus in pattern separation and suggest that the hippocampus may exhibit unique patterns of activity in response to each individual stimulus, which would result in a lack of category representation (Chadwick et al., 2012). Furthermore, LaRocque et al. (2013) provided support for the behavioral relevance of the differences in representations between the MTLC and the hippocampus, showing a positive relationship between subsequent memory and within-category similarity in PHC and PRC and a negative relationship between subsequent memory and within-category similarity in the hippocampus. Taken together, these findings suggest that there may be a double dissociation between the MTLC and the hippocampus, such that the MTLC is specialized for computing summed similarity and the hippocampus is specialized for pattern separation, consistent with Norman (2010).
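The contrast between category-level similarity and pattern-separated representations can be made concrete with a toy calculation. The sketch below is only an illustration under stated assumptions: the two sets of patterns are invented (one in which same-category trials share a template, one in which every trial has its own pattern), and the function names are hypothetical. It compares mean within-category and between-category pattern correlations.

```python
from itertools import combinations
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def category_similarity(patterns, labels):
    """Return (mean within-category r, mean between-category r)."""
    within, between = [], []
    for (i, p), (j, q) in combinations(enumerate(patterns), 2):
        target = within if labels[i] == labels[j] else between
        target.append(pearson(p, q))
    return mean(within), mean(between)


# Hypothetical toy data: 4 trials x 4 voxels (not real fMRI values).
labels = ["face", "face", "scene", "scene"]

# Same-category trials share a template (category information is present).
cortex_like = [[1.0, 0.9, 0.1, 0.2], [0.9, 1.0, 0.2, 0.1],
               [0.1, 0.2, 1.0, 0.9], [0.2, 0.1, 0.9, 1.0]]

# Every trial has its own pattern (no shared category template).
separated_like = [[1.0, 0.1, 0.1, 0.1], [0.1, 1.0, 0.1, 0.1],
                  [0.1, 0.1, 1.0, 0.1], [0.1, 0.1, 0.1, 1.0]]

cortex_within, cortex_between = category_similarity(cortex_like, labels)
sep_within, sep_between = category_similarity(separated_like, labels)
print("template-sharing region:", cortex_within, cortex_between)
print("pattern-separated region:", sep_within, sep_between)
```

In the template-sharing case, within-category similarity exceeds between-category similarity, so a classifier has category structure to exploit; in the pattern-separated case the two are equal, so individual stimuli remain distinct but category membership is not recoverable from similarity alone.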

Acknowledgments

Grant sponsor: NIA; Grant number: R01 AG034613 and Grant sponsor: NIH; Grant number: R01 MH085828. We would like to thank Shauna Stark and Samantha Rutledge for their assistance with data collection.

References

- Avants B, Duda JT, Kim J, Zhang HY, Pluta J, Gee JC, Whyte J. Multivariate analysis of structural and diffusion imaging in traumatic brain injury. Academic Radiology. 2008;15:1360–1375. doi: 10.1016/j.acra.2008.07.007.

- Azab M, Stark SM, Stark CEL. Contributions of human hippocampal subfields to spatial and temporal pattern separation. Hippocampus. In press. doi: 10.1002/hipo.22223.

- Bakker A, Kirwan CB, Miller MI, Stark CEL. Pattern separation in the human hippocampal CA3 and dentate gyrus. Science. 2008;319:1640–1642. doi: 10.1126/science.1152882.

- Bayley PJ, Wixted JT, Hopkins RO, Squire LR. Yes/no recognition, forced-choice recognition, and the human hippocampus. The Journal of Cognitive Neuroscience. 2008;20:505–512. doi: 10.1162/jocn.2008.20038.

- Bonnici HM, Kumaran D, Chadwick MJ, Weiskopf N, Hassabis D, Maguire EA. Decoding representations of scenes in the medial temporal lobes. Hippocampus. 2012;22:1143–1153. doi: 10.1002/hipo.20960.

- Buffalo EA, Bellgowan PSF, Martin A. Distinct roles for medial temporal lobe structures in memory for objects and their locations. Learn Mem. 2006;13:638–643. doi: 10.1101/lm.251906.

- Chadwick MJ, Hassabis D, Weiskopf N, Maguire EA. Decoding individual episodic memory traces in the human hippocampus. Current Biology. 2010;20:544–547. doi: 10.1016/j.cub.2010.01.053.

- Chadwick MJ, Hassabis D, Maguire EA. Decoding overlapping memories in the medial temporal lobes using high-resolution fMRI. Learn Mem. 2011;18:742–746. doi: 10.1101/lm.023671.111.

- Chadwick MJ, Bonnici H, Maguire EA. Decoding information in the human hippocampus: a user’s guide. Neuropsychologia. 2012;50:3107–3121. doi: 10.1016/j.neuropsychologia.2012.07.007.

- Coutanche MN, Thompson-Schill SL. Informational connectivity: identifying synchronized discriminability of multi-voxel patterns across the brain. Front Hum Neurosci. 2012;7(15):1–14. doi: 10.3389/fnhum.2013.00015.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29(3):162–173. doi: 10.1006/cbmr.1996.0014.

- Davachi L. Item, context and relational episodic encoding in humans. Current Opinion in Neurobiology. 2006;16:693–700. doi: 10.1016/j.conb.2006.10.012.

- Desikan RS, Ségonne F, Fischl B, Quinn BT, Dickerson BC, Blacker D, Buckner RL, Dale AM, Maguire RP, Hyman BT, Albert MS, Killiany RJ. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. NeuroImage. 2006;31:968–980. doi: 10.1016/j.neuroimage.2006.01.021.

- Diana RA, Yonelinas AP, Ranganath C. Imaging recollection and familiarity in the medial temporal lobe: A three-component model. Trends in Cognitive Sciences. 2007;11(9):379–386. doi: 10.1016/j.tics.2007.08.001.

- Diana RA, Yonelinas AP, Ranganath C. High-resolution multi-voxel pattern analysis of category selectivity in the medial temporal lobes. Hippocampus. 2008;18:536–541. doi: 10.1002/hipo.20433.

- Duff MC, Warren DE, Gupta R, Vidal JP, Tranel D, Cohen NJ. Teasing apart tangrams: testing hippocampal pattern separation with a collaborative referencing paradigm. Hippocampus. 2012;22:1087–1091. doi: 10.1002/hipo.20967.

- Eichenbaum H, Cohen NJ. From conditioning to conscious recollection: memory systems in the brain. Upper Saddle River, NJ: Oxford University Press; 2001. p. 583.

- Eichenbaum H, Yonelinas AP, Ranganath C. The medial temporal lobe and recognition memory. Annu Rev Neurosci. 2007;30:123–152. doi: 10.1146/annurev.neuro.30.051606.094328.

- Gilbert PE, Kesner RP, DeCoteau WE. Memory for spatial location: role of the hippocampus in mediating spatial pattern separation. J Neurosci. 1998;18(2):804–810. doi: 10.1523/JNEUROSCI.18-02-00804.1998.

- Hanke M, Halchenko YO, Sederberg PB, Hanson SJ, Haxby JV, Pollmann S. PyMVPA: a python toolbox for multivariate pattern analysis of fMRI data. Neuroinformatics. 2009;7(1):37–53. doi: 10.1007/s12021-008-9041-y.

- Hanke M, Halchenko YO. Neuroscience runs on GNU/Linux. Front Neuroinform. 2012 Jul 7;5:8. doi: 10.3389/fninf.2011.00008.

- Hargreaves EL, Mattfeld AT, Stark CEL, Suzuki WA. Conserved fMRI and LFP signals during new associative learning in the human and macaque monkey medial temporal lobe. Neuron. 2012;74:743–752. doi: 10.1016/j.neuron.2012.03.029.

- Hassabis D, Chu C, Rees G, Weiskopf N, Molyneux PD, Maguire EA. Decoding neuronal ensembles in the human hippocampus. Current Biology. 2009;19:546–554. doi: 10.1016/j.cub.2009.02.033.

- Haynes JD, Rees G. Decoding mental states from brain activity in humans. Nat Rev Neurosci. 2006;7(7):523–534. doi: 10.1038/nrn1931.

- Holdstock JS, Mayes AR, Roberts N, Cezayirli E, Isaac CL, O’Reilly RC, et al. Under what conditions is recognition spared relative to recall after selective hippocampal damage in humans? Hippocampus. 2002;12:341–351. doi: 10.1002/hipo.10011.

- Holdstock JS, Mayes AR, Gong QY, Roberts N, Kapur N. Item recognition is less impaired than recall and associative recognition in patients with selective hippocampal damage. Hippocampus. 2005;15(2):203–215. doi: 10.1002/hipo.20046.

- Insausti R, Juottonen K, Soininen H, Insausti AM, Partanen K, Vainio P, Laakso MP, Pitkänen A. MR Volumetric analysis of the human entorhinal, perirhinal, and temporopolar cortices. Am J Neuroradiol. 1998;19:659–671.

- Kirwan CB, Hartshorn A, Stark SM, Goodrich-Hunsaker NJ, Hopkins RO, Stark CEL. Pattern separation deficits following damage to the hippocampus. Neuropsychologia. 2012;50:2408–2414. doi: 10.1016/j.neuropsychologia.2012.06.011.

- Kirwan CB, Stark CEL. Medial temporal lobe activation during encoding and retrieval of novel face-name pairs. Hippocampus. 2004;14:919–930. doi: 10.1002/hipo.20014.

- Kuhl BA, Rissman J, Chun MM, Wagner AD. Fidelity of neural reactivation reveals competition between memories. Proceedings of the National Academy of Sciences. 2011;108(14):5903–5908. doi: 10.1073/pnas.1016939108.

- Kuhl BA, Rissman J, Wagner AD. Multi-voxel patterns of visual category representation during episodic encoding are predictive of subsequent memory. Neuropsychologia. 2012;50(4):458–469. doi: 10.1016/j.neuropsychologia.2011.09.002.

- Lacy JW, Yassa MA, Stark SM, Muftuler LT, Stark CE. Distinct pattern separation related transfer functions in human CA3/dentate and CA1 revealed using high-resolution fMRI and variable mnemonic similarity. Learn Mem. 2011;18(1):15–18. doi: 10.1101/lm.1971111.

- LaRocque KF, Smith ME, Carr VA, Witthoft N, Grill-Spector K, Wagner AD. Global similarity and pattern separation in the human medial temporal lobe predict subsequent memory. J Neurosci. 2013;33:5466–5474. doi: 10.1523/JNEUROSCI.4293-12.2013.

- Law JR, Flanery MA, Wirth SW, Yanike M, Smith AC, Frank LM, Suzuki WA, Brown EN, Stark CEL. Functional magnetic resonance imaging activity during the gradual acquisition and expression of paired-associate memory. J Neurosci. 2005;25(24):5720–5729. doi: 10.1523/JNEUROSCI.4935-04.2005.

- Leutgeb JK, Leutgeb S, Treves A, Meyer R, Barnes CA, McNaughton BL, Moser MB, Moser EI. Progressive transformation of hippocampal neuronal representations in “morphed” environments. Neuron. 2005;48(2):345–58. doi: 10.1016/j.neuron.2005.09.007.

- Leutgeb JK, Leutgeb S, Moser MB, Moser EI. Pattern separation in the dentate gyrus and CA3 of the hippocampus. Science. 2007;315(5814):961–966. doi: 10.1126/science.1135801.

- Leutgeb S, Leutgeb JK. Pattern separation, pattern completion, and new neuronal codes within a continuous CA3 map. Learn Mem. 2007;14(11):745–757. doi: 10.1101/lm.703907.

- Liang JC, Wagner AD, Preston AR. Content Representation in the Human Medial Temporal Lobe. Cerebral Cortex. 2013;23:80–96. doi: 10.1093/cercor/bhr379.

- Litman L, Awipi A, Davachi L. Category-specificity in the human medial temporal lobe cortex. Hippocampus. 2009;19:308–319. doi: 10.1002/hipo.20515.

- Mattfeld AT, Stark CEL. Striatal and medial temporal lobe functional interactions during visuomotor associative learning. Cerebral Cortex. 2010;21(3):647–658. doi: 10.1093/cercor/bhq144.

- Mattfeld AT, Gluck MA, Stark CEL. Functional specialization within the striatum along both the dorsal/ventral and anterior/posterior axes during associative learning via reward and punishment. Learn Mem. 2011;18:703–711. doi: 10.1101/lm.022889.111.

- Mayes AR, Holdstock JS, Isaac CL, Hunkin NM, Roberts N. Relative sparing of item recognition memory in a patient with adult-onset damage limited to the hippocampus. Hippocampus. 2002;12:325–340. doi: 10.1002/hipo.1111.

- McClelland JL, McNaughton BL, O’Reilly RC. Why there are complementary learning systems in the hippocampus and neocortex: insights from the successes and failures of connectionist models of learning and memory. Psychological Review. 1995;102:419–457. doi: 10.1037/0033-295X.102.3.419.

- Morris AM, Churchwell JC, Kesner RP, Gilbert PE. Selective lesions of the dentate gyrus produce disruptions in place learning for adjacent spatial locations. Neurobiol Learn Mem. 2012;97(3):326–331. doi: 10.1016/j.nlm.2012.02.005.

- Motley SE, Kirwan CB. A parametric investigation of pattern separation processes in the medial temporal lobe. J Neurosci. 2013;32(38):13076–85. doi: 10.1523/JNEUROSCI.5920-11.2012.

- Norman KA, O’Reilly RC. Modeling hippocampal and neocortical contributions to recognition memory: a complementary learning systems approach. Psychological Review. 2003;110:611–646. doi: 10.1037/0033-295X.110.4.611.

- Norman KA, Polyn SM, Detre GJ, Haxby JV. Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends in Cognitive Sciences. 2006;10(9):424–430. doi: 10.1016/j.tics.2006.07.005.

- Norman KA. How hippocampus and cortex contribute to recognition memory: Revisiting the Complementary Learning Systems model. Hippocampus. 2010;20(11):1217–1227. doi: 10.1002/hipo.20855.

- Okado Y, Stark CEL. Neural activity during encoding predicts false memories created by misinformation. Learn Mem. 2005;12:3–11. doi: 10.1101/lm.87605.

- O’Reilly RC, Rudy JW. Computational principles of learning in the neocortex and hippocampus. Hippocampus. 2000;10:389–397. doi: 10.1002/1098-1063(2000)10:4<389::AID-HIPO5>3.0.CO;2-P.

- O’Reilly RC, Norman KA. Hippocampal and neocortical contributions to memory: Advances in the complementary learning systems framework. Trends in Cognitive Sciences. 2002;6(12):505–510. doi: 10.1016/s1364-6613(02)02005-3.

- Poppenk J, Moscovitch M. A hippocampal marker of recollection memory ability among healthy young adults: contributions of posterior and anterior segments. Neuron. 2011;72:931–937. doi: 10.1016/j.neuron.2011.10.014.

- Potvin O, Doré FY, Goulet S. Lesions of the dorsal subiculum and the dorsal hippocampus impaired pattern separation in a task using distinct and overlapping visual stimuli. Neurobiol Learn Mem. 2009;91:287–297. doi: 10.1016/j.nlm.2008.10.003.

- Ranganath C, Ritchey M. Two cortical systems for memory-guided behavior. Nat Rev Neurosci. 2012;13:713–726. doi: 10.1038/nrn3338.

- Reed JM, Squire LR. Impaired recognition memory in patients with lesions limited to the hippocampal formation. Behav Neurosci. 1997;111:667–675. doi: 10.1037//0735-7044.111.4.667.

- Saad ZS, Glen DR, Chen G, Beauchamp MS, Desai R, Cox RW. A new method for improving functional-to-structural alignment using local Pearson correlation. NeuroImage. 2009;44:839–848. doi: 10.1016/j.neuroimage.2008.09.037.

- Stark CEL, Okado Y. Making memories without trying: medial temporal lobe activity associated with incidental memory formation during recognition. J Neurosci. 2003;23(17):6748–6753. doi: 10.1523/JNEUROSCI.23-17-06748.2003.

- Stark CEL, Squire LR. When zero is not zero: The problem of ambiguous baseline conditions in fMRI. Proceedings of the National Academy of Sciences. 2001;98(22):12760–12766. doi: 10.1073/pnas.221462998.

- Stark CEL, Squire LR. Hippocampal damage equally impairs memory for single items and memory for conjunctions. Hippocampus. 2003;13:281–292. doi: 10.1002/hipo.10085.

- Talpos JC, McTighe SM, Dias R, Saksida LM, Bussey TJ. Trial-unique, delayed nonmatching-to-location (TUNL): A novel, highly hippocampus-dependent automated touchscreen test of location memory and pattern separation. Neurobiol Learn Mem. 2010;94:341–352. doi: 10.1016/j.nlm.2010.07.006.

- Vann SD, Aggleton JP, Maguire EA. What does the retrosplenial cortex do? Nat Rev Neurosci. 2009;10:792–802. doi: 10.1038/nrn2733.

- Wixted JT, Squire LR. The medial temporal lobe and the attributes of memory. Trends in Cognitive Sciences. 2011;15(5):210–217. doi: 10.1016/j.tics.2011.03.005.

- Yassa MA, Stark CEL. Pattern separation in the hippocampus. Trends Neurosci. 2011;34(10):515–525. doi: 10.1016/j.tins.2011.06.006.