Abstract

The sensorimotor transformations necessary for generating appropriate motor commands depend on both current and previously acquired sensory information. To investigate the relative impact (or weighting) of visual and haptic information about object size during grasping movements, we let normal subjects perform a task in which, unbeknownst to the subjects, the object seen (visual object) and the object grasped (haptic object) were never the same physically. When the haptic object abruptly became larger or smaller than the visual object, subjects in the following trials automatically adapted their maximum grip aperture when reaching for the object. This adaptation was not dependent on conscious processes. We analyzed how visual and haptic information were weighted during the course of sensorimotor adaptation. The adaptation process was quicker and relied more on haptic information when the haptic objects increased in size than when they decreased in size. As such, sensory weighting seemed to be molded to avoid prehension error. We conclude from these results that the impact of a specific source of sensory information on the sensorimotor transformation is regulated to satisfy task requirements.

Sensorimotor transformations are processes whereby sensory information is used to generate motor commands. In many behavioral situations, these transformations depend on feedforward mechanisms that use currently available sensory information and previously acquired sensorimotor memories (Iberall et al. 1986; Sainburg et al. 1993; Gentilucci et al. 1995; Jenmalm and Johansson 1997; Johansson 1998). Consider, for instance, the familiar task of repeatedly grasping a glass of water. In this situation, the parameterization of the motor behavior is based on current visual information as well as visual and haptic information obtained during previous lifts (Jenmalm and Johansson 1997; Johansson 1998).

The relative impact of visual and haptic information on the sensorimotor transformation during grasping has never been quantified. Moreover, the principles regulating the relative impact of different sensory sources on motor behavior are not well understood. To address these issues, we designed an experimental paradigm, in which the relationship between visual and haptic information about object size was manipulated in a systematic fashion. Subjects performed a grasping task, in which the object seen (the visible object) and the object grasped (the haptic object) were never the same physical object, while the positions of the tip of the index finger and the thumb were recorded. Without subjects' being aware of it, the haptic object was sometimes larger (increased-size condition) and sometimes smaller (decreased-size condition).

In numerous studies in which conflicts between the senses have been induced, adaptation occurs, a process that serves to reduce the discrepancy between the modalities and reflects how sensory information is integrated (Welch 1978). As suggested by van Beers et al. (2002), the inverse of the adaptation can be interpreted as the relative weight of a sensory source, as the modality weighted most heavily will adapt least.

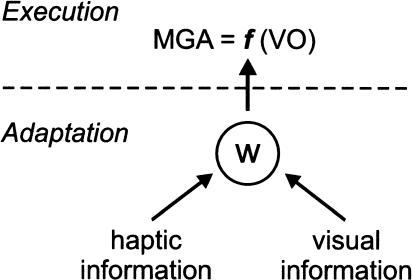

The maximum amplitude of the grip aperture during prehension is a clearly identifiable event that occurs well before contact with the object and covaries linearly with object size (Jeannerod 1984; Marteniuk et al. 1990). We assume that adaptation of the maximum grip aperture (MGA) in our paradigm would reflect changes in the weighting of haptic and visual information, that is, how sensory information about object size is dynamically integrated for behavioral purposes (Fig. 1). Visual information is the only sensory source that drives the behavior during the execution of the grasping task, that is, MGA is a function of the object's size as assessed by vision (function f in Fig. 1). Under natural circumstances, the sensorimotor transformation (f) depends, of course, on numerous factors (e.g., movement speed, Wing et al. 1986; and lighting conditions, Jakobson and Goodale 1991), but we designed our experiment so that changes in the transformation would depend only on the subjects' evaluation of visual and haptic information acquired during the course of an experimental series.

Figure 1.

Execution and adaptation. During execution of normal grasping movements, the sensorimotor transformation (f) determines the relation between the visual size of the object (VO) and the maximum grip aperture (MGA). The behavior is presumed to be continually monitored by visual and haptic information. When visual and haptic information about object size are dissociated, any change in f would reflect how the evaluated sensory feedback is weighted. Because of the functional requirements of the task, we expected haptic information to be more heavily weighted when the haptic size of the object increased as compared with when it decreased.

The functional implications of changing the relationship between visual and haptic objects were asymmetrical between conditions, because there was a risk of collision between the object and the fingers only when the haptic object was increased compared with the visual object. Attending to haptic information was therefore functionally more important for a successful execution of the grasp during the increased-size condition than during the decreased-size condition. Specifically, two predictions were made about the adaptation process. First, we predicted that haptic information should be weighted more heavily during the increased-size condition than during the decreased-size condition, when the subjects were fully adapted to the size discrepancy. Second, we expected the adaptation process to be completed faster during the increased-size condition than during the decreased-size condition.

RESULTS

General Grasping Behavior During Equal Visual and Haptic Object Size

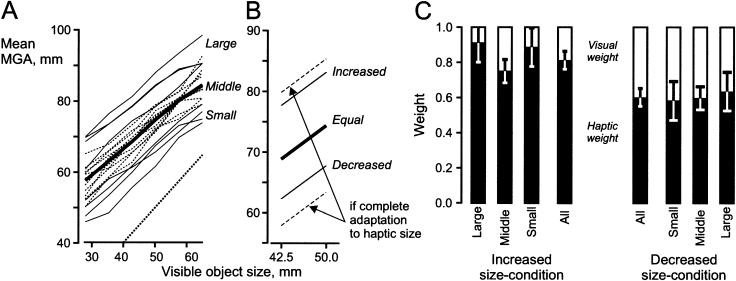

All subjects adjusted the maximum grip aperture (MGA) during the reaching phase to the visual size of the object (Fig. 3A, below). The relationship between the size of the visible object and the MGA was nearly linear with a subject-specific slope (across all subjects 0.73 ± 0.10, mean ± SD) and offset (37.7 ± 9.2). As expected, subjects with the smallest offset tended to have the largest slopes and vice versa (coefficient of determination, r² = 0.52). The size of the object in the immediately preceding trial had no effect on the MGA [F(5, 216) = 0.164; no interaction: F(5, 216) = 0.585].

Figure 3.

Adapted behavior during equal-, increased-, and decreased-size conditions. (A) The thick solid line represents the mean across all 19 subjects. The thin solid lines represent subjects with large and small clearance from the 1:1 line (broken line at bottom, right); the dotted lines represent subjects with intermediate clearance. (B) Solid lines represent the mean MGA across all subjects during the equal-, increased-, and decreased-size conditions. The broken lines represent the expected behavior had subjects completely adapted to the haptic size of the objects [i.e., f(VO+15) and f(VO-15), respectively]. (C) All subjects, whether they showed a large, small, or intermediate clearance, weighted haptic and visual information similarly, i.e., the haptic information was weighted considerably more during the increased-size than during the decreased-size condition. The ratio between haptic and visual weights across all subjects was 0.81:0.19 in the increased-size and 0.60:0.40 in the decreased-size condition. Error bars, 0.95 confidence intervals.

The difference between the MGA and the size of the actual object represents a clearance against failing to grasp the object during the task [= (offset + slope · object size) - object size]. This clearance varied considerably between subjects during the equal-size condition (13-38 mm for objects of sizes 42.5 and 50 mm; Fig. 3A, below). The source of this variability remains unknown. Specifically, we could not explain the intersubject differences in clearance by the individual subjects' variability in achieving a certain MGA given a specific size of the visible object (Spearman rank correlation coefficient, rs = 0.09). Nor was there a significant correlation between the subjects' hand size, assessed by the maximal achievable aperture (168 ± 17 mm, mean ± SD), and the clearance (Spearman rank correlation coefficient, rs = 0.31).
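The clearance expression above can be evaluated directly. The following is a minimal sketch that plugs in the group-mean slope (0.73) and offset (37.7) reported in the text; treating the offset as millimetres and the function names are assumptions made for illustration only.

```python
def predicted_mga(object_size_mm, slope=0.73, offset_mm=37.7):
    """Linear MGA model: offset + slope * object size.

    Defaults are the group means reported in the text; the offset is
    assumed to be in millimetres.
    """
    return offset_mm + slope * object_size_mm


def clearance(object_size_mm, slope=0.73, offset_mm=37.7):
    """Clearance = (offset + slope * object size) - object size."""
    return predicted_mga(object_size_mm, slope, offset_mm) - object_size_mm


if __name__ == "__main__":
    for size in (42.5, 50.0):
        print(f"{size:.1f} mm object: clearance {clearance(size):.1f} mm")
```

With the group means, the clearance for both object sizes falls inside the 13-38 mm range reported across subjects. Because the slope is below 1, the clearance shrinks as the object gets larger.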

The Relative Weighting of Visual and Haptic Information When Fully Adapted to a Size Discrepancy

The relationship between the size of the visible and the haptic object abruptly changed at the tenth trial in each series; either the haptic object became 15 mm larger (increased-size condition) or 15 mm smaller (decreased-size condition) than the visible object (cf. Fig. 2D). The subjects gradually adapted the MGA to this change and adopted an apparently stable new relationship between the visible object size and the MGA. The last four trials of the exposure period were chosen to reflect the fully adapted behavior (as no further adaptation occurs beyond ∼20 trials, see Materials and Methods). To avoid extrapolations outside of the range where the relationship between the MGA and object size was known for both increased- and decreased-size conditions (Fig. 3A), the analysis of the adapted behavior was limited to visible objects of sizes 42.5 and 50 mm (solid lines in Fig. 3B). The broken lines in Figure 3B represent the expected MGA had the subjects completely adapted to the haptic size of the objects during the increased- and decreased-size conditions, respectively (i.e., a visual relative weight of 0.0 and a haptic weight of 1.0; see Materials and Methods). There was a significant difference between the increased- and decreased-size conditions across all subjects (Fig. 3C); the haptic weight was considerably larger for the increased-size than for the decreased-size condition [F(1,18) = 8.376, P < 0.01]. The ratio between haptic and visual weights was 0.81:0.19 in the increased-size and 0.60:0.40 in the decreased-size condition. The average slope during the increased- and decreased-size conditions was the same as during the equal-size condition (i.e., 0.73 for all three size conditions, Fig. 3B). Interestingly, subjects with small, intermediate, and large clearance weighted haptic and visual information in a similar manner (Fig. 3C; small and large clearance were each represented by four extreme subjects; the rest of the subjects were assigned to the intermediate group). In conclusion and in accordance with our first prediction, when subjects were fully adapted to a size discrepancy, haptic information was weighted more heavily during the increased-size condition than during the decreased-size condition.
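To make the weighting measure concrete, the sketch below expresses the haptic weight as the fraction of the full-adaptation shift, f(VO±15) - f(VO), that the observed MGA actually covered. This is an assumption based on the description of the broken lines in Figure 3B, not a reproduction of the calculation in Materials and Methods. The MGA values in the example are hypothetical numbers chosen to be consistent with the reported 0.81 and 0.60 haptic weights.

```python
def haptic_weight(mga_adapted_mm, mga_equal_mm, slope=0.73, size_change_mm=15.0):
    """Fraction of the full-adaptation shift reached by the adapted MGA.

    Assumes a linear f, so that f(VO + change) - f(VO) = slope * change.
    A value of 1.0 means full adaptation to the haptic size; 0.0 means
    the MGA still follows the visual size only.
    """
    full_shift = slope * size_change_mm
    return (mga_adapted_mm - mga_equal_mm) / full_shift


def visual_weight(mga_adapted_mm, mga_equal_mm, slope=0.73, size_change_mm=15.0):
    """Visual weight, taken as the complement of the haptic weight."""
    return 1.0 - haptic_weight(mga_adapted_mm, mga_equal_mm, slope, size_change_mm)


if __name__ == "__main__":
    mga_equal = 74.2  # hypothetical equal-size MGA for a 50 mm visible object
    # Hypothetical adapted MGAs for the two conditions.
    w_inc = haptic_weight(83.07, mga_equal, size_change_mm=+15.0)
    w_dec = haptic_weight(67.63, mga_equal, size_change_mm=-15.0)
    print(f"increased-size: haptic {w_inc:.2f}, visual {1 - w_inc:.2f}")
    print(f"decreased-size: haptic {w_dec:.2f}, visual {1 - w_dec:.2f}")
```

Under this reading, a full-adaptation shift is 0.73 × 15 ≈ 11 mm of aperture, so the reported weights correspond to shifts of roughly 9 mm (increased-size) and 6.5 mm (decreased-size).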

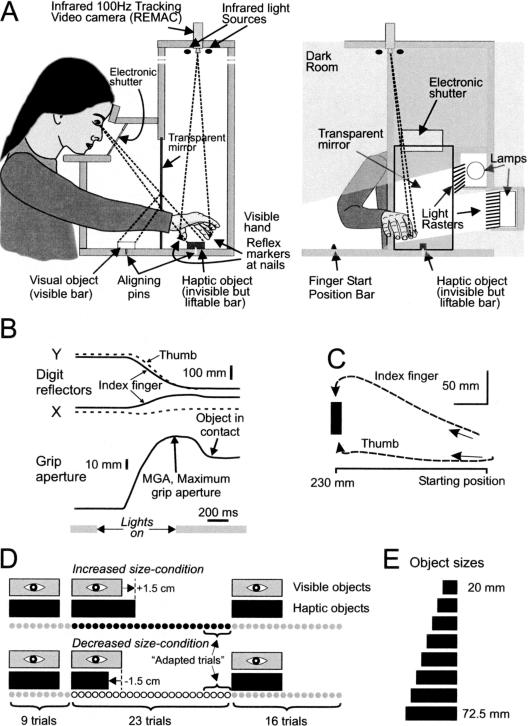

Figure 2.

Materials and methods. (A) A transparent mirror divided the experimental apparatus into two compartments, one with a bar visible to the subject (visible object), and one with an invisible bar that could be reached by the subject's right hand (haptic object). The haptic object was painted black and never illuminated. (B,C) Single trial showing the coordinates of the reflex markers, the calculated grip aperture, and the XY plot of the digit movements. (D) All experimental series began with nine trials with equal visual and haptic size, followed by 23 trials with haptic size of either 15 mm larger or smaller than the visual size; each series ended with 16 trials with equal visual and haptic size. (E) Visual and haptic objects drawn to scale.

The Time-Course of the Adaptation Process

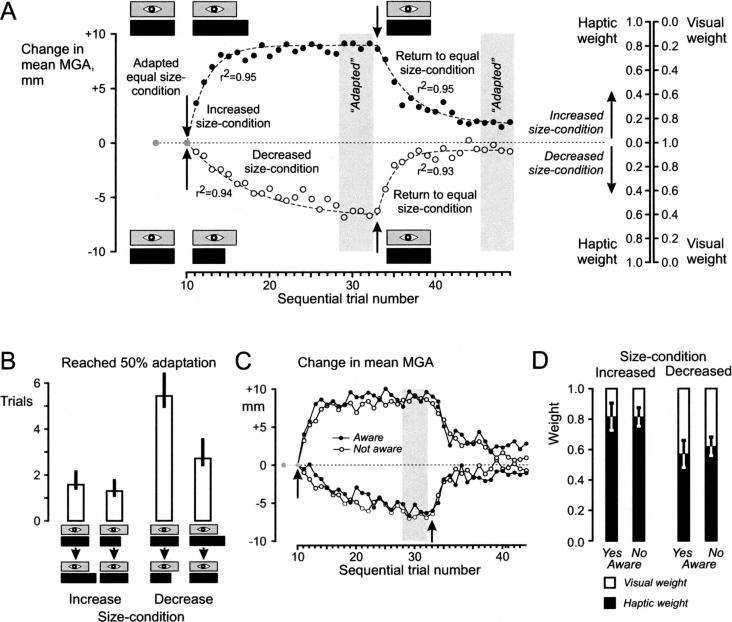

Figure 4A illustrates how the mean MGA and the weighting of sensory information across all subjects changed during the course of adaptation to a new size relationship. Subjects, on average, reached the fully adapted state faster during the increased-size than during the decreased-size condition. The number of trials required to reach 50% of the maximal observed adaptation was, on average, 1.7 trials during the increased-size and 5.4 trials during the decreased-size condition (Fig. 4B). These values differed significantly from each other [F(1,18) = 12.60, P < 0.01]. Subjects also readapted faster when they returned to the equal-size condition when the size of the haptic object was increased (that is, returning from the decreased-size condition) than when it was decreased. In conclusion and in accordance with our second prediction, the adaptation process was completed more quickly when the size of the haptic object was increased compared with the previous trials than when it was decreased in size.
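The 50% measure can be related to an exponential time course such as the one used to fit the data in Figure 4A. The sketch below assumes a single-exponential approach to the adapted state, with the adapted fraction after n trials equal to 1 - exp(-n/τ); whether the reported 50% values were derived from such fits is not stated here, so the conversion is illustrative only.

```python
import math


def adapted_fraction(n_trials, tau):
    """Fraction of the final adaptation reached after n_trials,
    assuming a single-exponential time course with time constant tau."""
    return 1.0 - math.exp(-n_trials / tau)


def trials_to_half(tau):
    """Trials needed to reach 50% of the final adaptation: tau * ln 2."""
    return tau * math.log(2)


def tau_from_half(n_half):
    """Time constant implied by a given 50% trial count."""
    return n_half / math.log(2)


if __name__ == "__main__":
    # 50% trial counts reported in the text for the two conditions.
    for label, n_half in (("increased-size", 1.7), ("decreased-size", 5.4)):
        tau = tau_from_half(n_half)
        frac_5 = adapted_fraction(5, tau)
        print(f"{label}: tau = {tau:.2f} trials, "
              f"fraction adapted after 5 trials = {frac_5:.2f}")
```

Under this assumption, the roughly threefold difference in the 50% trial count corresponds to the same threefold difference in time constant.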

Figure 4.

Time course of adaptation process. (A) The increased- and decreased-size conditions induced systematic changes in the mean MGA adopted by the subjects (the figure illustrates averages across subjects). The adaptation process was well fitted with exponential functions (broken lines, r² values refer to fit of the four segments). Using the MGA expected if the subjects had completely adapted to the haptic size of the objects (cf. Fig. 3B), the haptic and visual weights for the increased- and decreased-size conditions were calculated (scales to the right). (B) Not only did subjects on average weight haptic information more in the increased-size condition, they also adapted significantly faster than during the decreased-size condition. (C,D) Irrespective of subjects' awareness of any mismatch between the size of the haptic and the visual objects, the adaptation proceeded with the same speed and to the same extent. Specifically, the haptic weight after adapting to the increased- and decreased-size conditions was the same for both groups. Error bars, 0.95 confidence intervals.

The Effect of Subjects' Awareness of Size Discrepancies

The majority of the subjects (13/19) were unaware of any conflict between visual and haptic information and reacted with surprise when the experimental paradigm was explained to them. The remaining 6/19 subjects reported that the visible object sometimes appeared to differ in size from the manipulated object; most of them discovered this early in the experiment. Surprisingly, awareness of a changing relationship between the visible and haptic size of the object did not influence the subjects' behavior. Both groups adapted in a similar fashion to a new size relationship (Fig. 4C) and displayed similar haptic and visual weights once they had reached the fully adapted state (Fig. 4D).

DISCUSSION

We have quantified the relative impact (or weighting) of visual and haptic information on the sensorimotor transformation during grasping movements in two different experimental conditions. In accordance with our predictions, haptic information was weighted more during the increased-size condition than during the decreased-size condition when subjects were fully adapted to a size discrepancy (Fig. 3C). Moreover, the adaptation process was completed faster when the size of the haptic object had increased compared with when it had decreased (Fig. 4A,B). This indicates that haptic information was weighted more heavily when it was functionally more important for a successful execution of the grasp. These measures were not influenced by whether or not subjects were aware of any size discrepancy (Fig. 4C,D). We conclude from these results that the impact of a specific source of sensory information on the sensorimotor transformation is regulated to satisfy task requirements.

When using the concept 'sensory integration', we refer to the weighting process in which different modalities providing information about the same object property both influence the sensorimotor transformation. Our measure of sensory weighting describes what impact the two sensory sources actually had on motor behavior. Therefore, the terms 'weighting' and 'impact' (of sensory sources) are used interchangeably throughout the study. A 1:0 weighting of visual and haptic information would have implied that the subjects grasped the object as if haptic information were ignored, whereas the opposite weighting would imply that subjects completely adapted their aperture to the haptic size of the object. This approach is not dependent on any elaborate hypothesis about how the weighting process may have been accomplished, for instance, at the stage of motor planning, through sensory realignment, by cue combination, or by some combination of these suggestions.

After considering the validity of the experimental paradigm, we will discuss the principles that may regulate the observed adaptation and, finally, potential mechanisms of sensory encoding of unexpected changes in object size.

Validity of the Experimental Paradigm

In accord with numerous previous studies (e.g., Marteniuk et al. 1990), the MGA varied linearly with object size (see Fig. 3A). The same linear relation remained when changing the relation between visual and haptic information, and the slope was the same during all conditions (Fig. 3B). Notably, MGA is not a direct measure of the perceived size of the object as a subject would report it in a perceptual task. Rather, it reflects how the action system uses information about object sizes to accommodate motor behavior (Aglioti et al. 1995; Hu and Goodale 2000).

The visual and haptic information about object properties was not temporally coincident in our experiment, because visual information was shut off slightly before the digits came in contact with the object. This lack of absolute temporal simultaneity, however, is not relevant, because both sources yielded useful information about the object. Naturally, haptic information about the current object could not be used to scale the MGA, as the MGA occurs prior to contact with the object. However, the adaptation we observed shows that previously acquired information was used to guide motor behavior. It is unlikely that visual information about the digits while they approached the target object provided visual cues about the haptic size of the object, because subjects are known to fixate their gaze on the target object and not their hands when they make grasping movements (Johansson et al. 2001; Land and Hayhoe 2001). Also, given that most subjects were unaware of the discrepancies between visual and haptic information, it seems implausible that they would have used visual information about the fingers to deduce information about the size of the haptic object.

A relatively large discrepancy (15 mm) was introduced between the visible and the haptic objects, yet the majority of our subjects did not verbally report any change in the size relationship. Gentilucci et al. (1995) have reported that subjects remained unaware of incongruities between visual and haptic information during grasping unless they voluntarily directed their attention to it. When subjects direct their attention to incongruities between visual and haptic information, their discrimination threshold is reported to be about 5 mm (Hillis et al. 2002). Therefore, it seems likely that our subjects could have consciously discriminated the size difference had they directed their attention to it during the experiments.

One could argue that the final weighting of sensory information might have been more similar between conditions if we had investigated a longer adaptation period. This, however, seems unlikely given the results obtained in our pilot experiments, where much longer adaptation periods were used (see Materials and Methods). Moreover, our measure of the time course of the adaptation process (trials needed to reach 50% of the fully adapted state) would still have reflected the difference between conditions, even if the final weighting of sensory information after a larger number of trials would have been more similar.

Principles Regulating the Observed Adaptation

Our conclusion regarding the principles regulating sensory integration is that haptic information was more heavily weighted when it was functionally more important for a successful execution of the grasp. It seems obvious that conscious perception of size discrepancies cannot explain the adaptation observed, as the adaptation pattern was similar in consciously aware and unaware subjects (Fig. 4C,D).

Gentilucci et al. (1995) have proposed that motor programs are updated to preserve the temporal structure of the movement (e.g., keeping the total grasp time constant) when visual and haptic information are incongruent. That such a mechanism may have resulted in an adaptation of the MGA in our experiments is compatible with the fact that subjects with different MGA magnitudes in our study seemed to adapt in a similar way (Fig. 3C). With this explanation, it seems impossible, however, to account for the asymmetries observed between the increased- and decreased-size conditions (Figs. 3 and 4).

A partial explanation of our results can be given from the finding made in previous prism adaptation studies (e.g., Fernández-Ruiz and Díaz 1999) that the magnitude of aftereffects is correlated with the magnitude of the adaptation, that is, low levels of adaptation are followed by smaller and shorter aftereffects. A similar effect might have contributed to the more rapid adaptation when the condition changed from both increased- and decreased-size conditions back to the equal-size condition as compared with when shifting from the equal-size condition to either the increased- or decreased-size condition (see Fig. 4A). Moreover, this might explain why the readaptation from the decreased-size condition was quicker than from the increased-size condition, because in the former case, the adaptation was less pronounced. Nevertheless, this suggestion is only partially satisfactory, because it fails to explain why the adaptation was faster and more prominent during the increased-size condition than during the decreased-size condition.

Several recent studies suggest that the important factor regulating sensory integration is the precision of information. The central nervous system seems to integrate visual and haptic information by weighting each source by its precision and thus minimizing the uncertainty in the final estimates (Ernst and Banks 2002). Similarly, visual and proprioceptive information about upper-limb position is weighted in accordance with their respective accuracy (van Beers et al. 1999, 2002). Other studies have demonstrated dynamic interactions between vision and haptics when altering the availability of sensory information; noninformative vision can improve both tactile two-point discrimination (Kennett et al. 2001) and the proprioceptive accuracy of limb position information (Newport et al. 2001, 2002), and furthermore, haptic feedback can alter the visual perception of slant (Ernst et al. 2000). These studies demonstrate that the precision and availability of sensory information are factors that influence the integration process. Our approach to sensory integration differs from these experiments on two important points. First, we measured changes in a motor parameter related to an object property instead of a perceptual estimate of that object property. Second, the integration of information occurred during the course of several sensorimotor interactions, whereas the studies cited above concern the integration of simultaneous sensory information about an object property. Therefore, it is somewhat problematic to apply the conclusions from these studies to our experiment. Nevertheless, both the precision and availability of sensory information were constant in our experiment (we did not alter the precision or availability of sensory information between conditions). The differences observed between conditions must therefore depend on factors other than sensory precision or availability.
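The argument that constant precision cannot account for an asymmetry between conditions can be illustrated with the standard precision-weighted (inverse-variance) combination rule for two cues. The sketch below is a generic illustration of that rule, not an analysis from this study; the standard deviations and size values are hypothetical.

```python
def precision_weights(sigma_visual, sigma_haptic):
    """Inverse-variance weights for two cues; the weights sum to 1."""
    p_visual = 1.0 / sigma_visual ** 2
    p_haptic = 1.0 / sigma_haptic ** 2
    total = p_visual + p_haptic
    return p_visual / total, p_haptic / total


def combined_estimate(x_visual, sigma_visual, x_haptic, sigma_haptic):
    """Precision-weighted size estimate and its standard deviation."""
    w_visual, w_haptic = precision_weights(sigma_visual, sigma_haptic)
    estimate = w_visual * x_visual + w_haptic * x_haptic
    sigma = (1.0 / (1.0 / sigma_visual ** 2 + 1.0 / sigma_haptic ** 2)) ** 0.5
    return estimate, sigma


if __name__ == "__main__":
    sigma_v, sigma_h = 2.0, 4.0  # hypothetical cue noise (mm)
    visual_size = 50.0           # hypothetical visible object size (mm)
    for label, haptic_size in (("increased-size", 65.0), ("decreased-size", 35.0)):
        weights = precision_weights(sigma_v, sigma_h)
        est, _ = combined_estimate(visual_size, sigma_v, haptic_size, sigma_h)
        print(f"{label}: weights (visual, haptic) = {weights}, estimate = {est:.1f} mm")
```

Because the weights depend only on the two noise levels, they come out identical for the increased- and decreased-size cases whenever precision is held constant, which is why a purely precision-based rule predicts no difference between the conditions.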

The optimal integration models for sensory integration that have recently received much attention derive from Bayes' rule, and their implementation typically includes a cost function (Clark and Yuille 1990). However, in several of the studies in which the results have been interpreted within the framework of optimal integration models, the quantification of the experimental situation has been simplified by not rewarding correct or incorrect answers, thereby obviating the need for a cost function (van Beers et al. 1999, 2002; Ernst and Banks 2002; Newport et al. 2002). In contrast, in our experimental paradigm, there is an implicit cost function: 'there is a larger risk that the task fails with a too small MGA than with a too large MGA'. Therefore, one interpretation of our results is that in situations in which feedback occurs, sensory integration depends on the cost of making different kinds of errors.
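One way to make the notion of an implicit, asymmetric cost function concrete is a toy decision problem in which an aperture that is too small is penalized more heavily than one that is too large. The sketch below is purely illustrative and is not a model fitted to the data: the piecewise-linear cost, the Gaussian uncertainty, and all parameter values are hypothetical assumptions.

```python
import math


def expected_cost(margin_mm, sigma_mm, cost_small, cost_large, n=801, span=6.0):
    """Expected cost of an aperture margin under Gaussian size uncertainty.

    error = margin + noise, with noise ~ N(0, sigma). A negative error
    (aperture too small) costs cost_small per mm; a positive error
    (aperture too large) costs cost_large per mm. The expectation is
    approximated on a regular grid of z-scores.
    """
    total, weight_sum = 0.0, 0.0
    for i in range(n):
        z = -span + 2.0 * span * i / (n - 1)
        w = math.exp(-0.5 * z * z)
        err = margin_mm + sigma_mm * z
        cost = cost_large * err if err >= 0 else cost_small * (-err)
        total += w * cost
        weight_sum += w
    return total / weight_sum


def best_margin(sigma_mm, cost_small, cost_large):
    """Grid search (0.5 mm steps, -20 to 20 mm) for the lowest expected cost."""
    candidates = [m / 2.0 for m in range(-40, 41)]
    return min(candidates,
               key=lambda m: expected_cost(m, sigma_mm, cost_small, cost_large))


if __name__ == "__main__":
    print("symmetric cost :", best_margin(5.0, 1.0, 1.0), "mm")
    print("asymmetric cost:", best_margin(5.0, 4.0, 1.0), "mm")
```

With symmetric costs the best margin is zero, whereas penalizing too-small apertures more heavily pushes the best margin toward larger apertures. This mirrors, in a simplified form, the idea that evidence of a larger-than-expected object should carry more weight than evidence of a smaller one.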

Precision of sensory information is what one might call an intrinsic aspect of sensory information. Our data indicate that the contextual aspect of sensory information, that is, what it signals in relation to functional task requirements, influenced the integration process. It has been demonstrated previously that the CNS monitors specific sensory events to regulate motor behavior during manipulative actions (Johansson 1998; Flanagan and Johansson 2003). Motor reactions to such events depend highly on the context in which the sensory event takes place. Moreover, the CNS has an ability to distinguish functionally important sensory events from nonimportant sensory events, and to release adequate motor programs in response only to the former. When making grasping movements, information indicating a sudden decrease in the clearance might be of particular importance for avoiding prehension errors, and may therefore be more heavily weighted than information indicating an increased clearance. It seems unlikely that collisions with the objects per se caused the adaptation, as subjects with small aperture magnitudes did not display more weighting of haptic information, despite the fact that they were more likely to collide with the objects (Fig. 3C). However, regardless of the initial magnitude of the clearance, a sudden decrease can be interpreted as indicating an increased risk of colliding with the object, compared with when using the initial clearance. Moreover, a decrease in the clearance makes the angle of approach more oblique when the fingers contact the object, and this increases the potential contact surface area between the finger and the object, making the grasping movement less accurate (Smeets and Brenner 2001).

Encoding Unexpected Changes in Object Size

We do not see how the clearance as such could be represented in sensory signals, but we can identify several theoretical ways to signal a sudden change in the clearance, that is, an unexpected object size. First, the larger-than-expected objects during the increased-size condition may have been contacted with a larger-than-planned velocity, and therefore, increased contact forces. Second, the larger-than-expected objects would alter the angle of approach when the digits contact the object, giving rise to increased tangential forces at the fingertip. Both of these suggestions seem realistic, given that both the amplitude and the direction of forces applied on the fingertips are effectively encoded by cutaneous afferents (Birznieks et al. 2001), and that fingertip forces are known to update motor behavior (Lackner and Dizio 1994; Rabin et al. 1999). A third way to signal a sudden change in the clearance depends on an appropriate internal representation of the fingertip trajectories and a prediction of the moment of contact with the object; if the contact occurs too early, the object is larger than expected and vice versa. A more complex variant of the last suggestion would be that the CNS is able to assess the joint configurations at the moment of object contact, and from this information, deduce whether the size of the object was different than predicted.

Additional experiments are required to elucidate how unexpected changes in object size are encoded as sensory signals. In particular, there is a distinct possibility that the differences in sensory weighting observed between the increased- and decreased-size conditions (the ability to satisfy the functional requirements of the task) can be explained by asymmetrical changes in the afferent inputs evoked under these conditions.

MATERIALS AND METHODS

Subjects

A total of 19 right-handed subjects (11 males and 8 females, aged 21-32 yr), naive to the purpose of the study and with normal or corrected-to-normal vision, participated in the study. They had no impairments in their motor functioning. The experimental procedure was approved by the local Ethics Committee of the Faculty of Medicine and Odontology, Umeå University. All subjects gave their informed, written consent prior to the experiment in accordance with the declaration of Helsinki.

Apparatus and Movement Recording

The subjects sat in a dark room on a height-adjustable chair in front of the experimental apparatus (Fig. 2A). A transparent mirror divided the apparatus into two compartments, one with a bar visible to the subject (visible object), and one with an invisible bar that could be reached by the subject's right hand (haptic object; eye-object distance, 46 cm). The visible object created a visual illusion of a bar located in spatial correspondence with the haptic object, making the subjects falsely believe they were seeing and lifting the same object. The objects were made of wood and shaped as bars with height and width of 19 mm and lengths between 20 and 72.5 mm, in steps of 7.5 mm. The visibility of the visual object was regulated by lamps (switch-off time to 10% of light was 50 msec) and an electronic shutter (closing time to 1% transmission was 1.3 msec; Speedglas from Hörnell International AB). The haptic objects were painted black and never illuminated, to ensure that the subjects would not be able to see them through the semitransparent mirror. Reflex markers were attached with double-sided sticky tape to the nails of the thumb and index finger. The movement of the markers was recorded by an infrared 100 Hz tracking video camera located 115 cm above the working plane (REMAC; Sandström et al. 1996). The X/Y coordinates of the reflex markers were fed to a digital sampling system (SC/ZOOM) for off-line processing and analysis (Fig. 2B,C).

Experimental Procedure

During the experiment, subjects positioned their heads in a head-and-chin rest and used their right hands to make the prehension movements. Between trials, the subjects kept their hands at a fixed starting position that they gripped with their thumbs and index fingers, with the fingertips 23 cm from the haptic object. Between trials, the shutter was closed and the lamps switched off.

The trial started when the shutter opened and the lamps simultaneously switched on (Fig. 2B). The subjects were instructed to grip the object across its long axis with their thumbs and index fingers when the object became visible, and after lifting it, to put it down and return to the starting position. No explicit speed or accuracy instruction was given. The subject's hand was illuminated during the transport of the hand toward the haptic object, but when the distance between the fingertips and the long axis of the object became <20 mm (i.e., 10 mm from the edge of the object and ∼250 msec before object contact, Fig. 2B) the light switched off, and the electronic shutter glass closed to ensure zero visibility of the hand and the lifted object. No visual information was provided to the subjects while lifting the object, as that would have dispelled the illusion of a single object. The experimenter changed objects between trials and started a new trial by pressing a button.

Immediately after the experiment, the subjects were explicitly asked if they ever had felt anything unusual while grasping the objects, or whether occasionally there had been anything peculiar with the objects. If the subject claimed to have become aware of a conflict between the visual appearance of the object and its haptic properties, additional questions were asked, that is, whether the manipulated object had appeared to be of different size than its visual appearance and when they became aware of this.

Experimental Design

Six different visible objects and eight different haptic objects were presented to the subjects during the experimental series (cf. Fig. 2E). They were presented in a fixed order that was unpredictable to the subjects.

Three different size relationships were used between the visual and haptic objects: the haptic object was either of the same size as the visible object (equal-size condition), 15 mm larger (increased-size condition), or 15 mm smaller than the visible object (decreased-size condition). All subjects performed the same set of trials, and thus participated in all three conditions.

Two different series of trials were constructed. One of the series included an increased-size condition part and the other a decreased-size condition part (Fig. 2D). Both series started with nine trials under the equal-size condition. The main objective of this initial part was to provide a reference against which the adaptation process could be described.

The second part of the series consisted of 23 trials under the increased- or decreased-size condition, that is, the haptic object became 15 mm larger or smaller than the visible object. The two main objectives of these trials were to (1) examine the time-course of the adaptation process, and (2) establish the fully adapted relationship between MGA and the visible object when the visual and haptic objects differed in size. The number of trials needed to reach a fully adapted state was determined in pilot experiments in which longer adaptation periods (>50 trials, 6 subjects) were used. The average MGA in trials 21-30, 31-40, and 41-50 was 73.4, 72.7, and 72.8 mm, respectively, in the decreased-size condition, and 83.6, 83.0, and 84.4 mm, respectively, in the increased-size condition. These differences within conditions were not significant [F(2, 176) = 0.054 in the decreased-size condition and F(2, 177) = 0.204 in the increased-size condition]. Thus, no significant further adaptation occurred beyond ∼20 trials. During the last four trials, only two different visual objects were presented (42.5 and 50.0 mm), as we wanted to avoid extrapolations outside of the range in which the relationship between the MGA and object size was known (visual objects 27.5-65.0 mm, Fig. 3A) when we calculated the weight of each modality during the fully adapted behavior (Fig. 3B).

The last 16 trials in all series were again under the equal-size condition. The main objective of these trials was to let the subjects readapt to the equal-size condition before the next series.

Each of the two series thus consisted of 48 trials and was run six times, making the total number of trials in one experimental session 2 series × 48 trials × 6 runs = 576. Increased- and decreased-size condition series were presented in a mixed order. The total time for one experimental session was approximately 2 h.
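The trial counts above can be checked with a short sketch. The condition labels and function name are illustrative only and are not taken from the original experimental software.

```python
# Trial structure of one series as described in the text.
# Labels ("equal", "increased", "decreased") are hypothetical names.
def build_series(changed_condition):
    """Return the per-trial condition labels for one 48-trial series."""
    return (["equal"] * 9                 # reference part
            + [changed_condition] * 23    # adaptation part
            + ["equal"] * 16)             # readaptation part

increased = build_series("increased")
decreased = build_series("decreased")

RUNS_PER_SERIES = 6
total_trials = (len(increased) + len(decreased)) * RUNS_PER_SERIES
print(len(increased), total_trials)  # 48 576
```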

Measurements, Data Processing, and Statistical Analysis

In addition to the MGA, other parameters of the prehensile movement were measured: the tangential velocity for an imaginary point centered between the digits at the time of MGA, the distance from this point to the center of the object at the moment of MGA, the time from the movement start until MGA, and the time from MGA to contact with the object. The analyses of these parameters did not contribute any results of interest for the topics addressed in this study. The maximum achievable grip aperture, that is, the maximum distance between the index finger and the thumb, was measured in all subjects. The MGA was recalculated to represent the maximum distance between the fingerpads, not the distance between the reflectors.

In a small number of trials (0.48% of 10,944 trials), it was not possible to identify a clear peak in the distance between the index finger and the thumb (cf. Fig. 2B). These trials were excluded from the analyses.
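As a minimal sketch of how an MGA could be extracted from the recorded marker coordinates, the following computes the thumb-index distance for each sample and takes its maximum. The sample data are invented, and the rule used here to flag a trial without a clear peak (maximum on the first or last sample) is an illustrative assumption, not the criterion used in the study. The correction from marker distance to fingerpad distance is not included.

```python
import math

def grip_aperture(thumb_xy, index_xy):
    """Distance between the two markers for every sample (same units as input)."""
    return [math.hypot(tx - ix, ty - iy)
            for (tx, ty), (ix, iy) in zip(thumb_xy, index_xy)]

def maximum_grip_aperture(aperture):
    """Return (sample index, value) of the largest aperture, or None when the
    maximum lies on the first or last sample (assumed 'no clear peak' rule)."""
    peak = max(range(len(aperture)), key=aperture.__getitem__)
    if peak == 0 or peak == len(aperture) - 1:
        return None
    return peak, aperture[peak]

# Hypothetical marker positions in mm, one tuple per 10-msec sample (100 Hz)
thumb = [(0.0, 0.0), (10.0, -5.0), (20.0, -20.0), (30.0, -30.0), (40.0, -25.0)]
index = [(0.0, 20.0), (10.0, 35.0), (20.0, 50.0), (30.0, 50.0), (40.0, 30.0)]

aperture = grip_aperture(thumb, index)
peak = maximum_grip_aperture(aperture)
if peak is not None:
    sample, mga = peak
    print(f"MGA = {mga:.1f} mm at {sample * 10} msec")
```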

The effect of the haptic object size in the immediately preceding trial on the MGA in the subsequent trial was analyzed by a repeated measures ANOVA: 2 (size of current haptic object: 42.5 and 50.0 mm) × 6 (previous haptic object: 27.5, 35.0, 42.5, 50.0, 57.5, and 65.0 mm). This analysis only included trials from the first part (first nine trials) of the experimental series.

When calculating the relative weight or impact of each sensory modality in determining MGA, we assumed that the haptic and visual information were congruent when the subjects experienced an equal size of the haptic and visual objects in the first part of the series (e.g., Fig. 3A). The relationship between MGA and the size of the visual object (VO) was expressed in a linear regression equation as MGA = f(VO) (= a + b · VO; a and b are constants). If the subjects ignored haptic information, the relationship should remain MGA = f(VO), even if the relative size of the haptic object increased. In contrast, if the relative size of the haptic object increased 15 mm and the subject completely adapted his or her MGA to the size of the haptic object, the relationship should change to MGA = f(VO + 15) (cf. Fig. 3B). We expected, of course, the averaged MGA observed to be somewhere between these extremes, that is, f(VO) ≤ MGAobserved ≤ f(VO + 15) and f(VO - 15) ≤ MGAobserved ≤ f(VO) for the increased- and decreased-size condition, respectively. The weight of the haptic information, WHaptic was calculated as

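$$W_{\mathrm{Haptic}} = \frac{\mathrm{MGA}_{\mathrm{observed}} - f(\mathrm{VO})}{f(\mathrm{VO} \pm 15) - f(\mathrm{VO})} \tag{1}$$

and the weight of the visual information, WVisual was calculated as

$$W_{\mathrm{Visual}} = \frac{f(\mathrm{VO} \pm 15) - \mathrm{MGA}_{\mathrm{observed}}}{f(\mathrm{VO} \pm 15) - f(\mathrm{VO})} \tag{2}$$

where f(VO ± 15) denotes f(VO + 15) in the increased-size condition and f(VO - 15) in the decreased-size condition.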

Accordingly, WVisual + WHaptic = 1 and each weight can be interpreted as the relative impact of the sensory source on the change in the subjects' behavior. The difference in haptic weight when fully adapted was analyzed in a single-factor repeated measures ANOVA.
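The weighting calculation can be sketched as follows. The sketch assumes that the haptic weight is the fraction of the interval between f(VO) and f(VO ± 15) that the observed MGA has covered, which is one reading consistent with the bounds stated above and with WVisual + WHaptic = 1. All data values and function names are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = a + b*x; returns (a, b)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx
    return mean_y - b * mean_x, b

def sensory_weights(mga_observed, vo, size_change, a, b):
    """Return (w_haptic, w_visual) under the assumed definition.

    size_change is +15 (increased-size) or -15 (decreased-size), in mm.
    """
    def f(size):
        return a + b * size  # equal-size relationship MGA = f(VO)
    w_haptic = (mga_observed - f(vo)) / (f(vo + size_change) - f(vo))
    return w_haptic, 1.0 - w_haptic

# Hypothetical equal-size data: visible object size (mm) and MGA (mm)
vo_sizes = [27.5, 35.0, 42.5, 50.0, 57.5, 65.0]
mga_equal = [72.0, 78.0, 84.0, 90.0, 96.0, 102.0]
a, b = fit_line(vo_sizes, mga_equal)

# Hypothetical fully adapted MGA for a 42.5-mm visible object
w_h_inc, w_v_inc = sensory_weights(93.0, 42.5, +15.0, a, b)
w_h_dec, w_v_dec = sensory_weights(78.0, 42.5, -15.0, a, b)
print(round(w_h_inc, 2), round(w_h_dec, 2))
```

In this made-up example the haptic weight comes out higher in the increased-size case than in the decreased-size case only because the input numbers were chosen that way; the sketch illustrates the arithmetic, not the study's results.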

An exponential function was fitted by nonlinear regression to the measured MGA during the increased- and decreased-size conditions, as well as when subjects readapted to the equal-size condition:

$$\mathrm{MGA}(T) = a - b\,e^{-cT} \tag{3}$$

a and a-b, respectively, represent the MGA after and before adaptation to a change in size condition, c is a constant, and T is the trial number with T = 0 corresponding to the first trial with a change in size condition (cf. Fig. 4A). The number of trials required to reach 50% adaptation, T50%, was calculated as

$$T_{50\%} = \frac{\ln 2}{c} \tag{4}$$

and the difference in T50% between increased- and decreased-size conditions was analyzed in a single-factor repeated measures ANOVA.
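The time-course analysis can be illustrated with a small sketch. It assumes the exponential takes the form MGA(T) = a - b·exp(-c·T), so that MGA equals a - b at T = 0 and approaches a with further trials, and that 50% adaptation is therefore reached at T = ln 2 / c. Instead of a general nonlinear regression routine, the sketch uses a swapped-in technique: a grid search over c combined with a closed-form least-squares solution for a and b. The data and the grid of candidate rates are hypothetical.

```python
import math

def fit_exponential(trials, mga, rates):
    """Fit mga = a - b*exp(-c*T) by trying each candidate rate c and solving
    the remaining linear least-squares problem for a and b in closed form."""
    best = None
    n = len(trials)
    for c in rates:
        e = [math.exp(-c * t) for t in trials]
        # With c fixed, the model is linear: mga = a + beta*e, with beta = -b
        se = sum(e)
        see = sum(v * v for v in e)
        sy = sum(mga)
        sye = sum(y * v for y, v in zip(mga, e))
        det = n * see - se * se
        if abs(det) < 1e-12:
            continue  # skip degenerate candidates
        a = (sy * see - se * sye) / det
        b = (se * sy - n * sye) / det
        sse = sum((y - (a - b * v)) ** 2 for y, v in zip(mga, e))
        if best is None or sse < best[0]:
            best = (sse, a, b, c)
    return best[1], best[2], best[3]

def trials_to_half_adaptation(c):
    """T at which a - b*exp(-c*T) is halfway between a - b and a."""
    return math.log(2) / c

# Hypothetical noise-free data for the 23 trials of a changed-size part
trials = list(range(23))
mga = [84.0 - 10.0 * math.exp(-0.25 * t) for t in trials]

candidate_rates = [0.01 * k for k in range(1, 301)]  # c from 0.01 to 3.00
a_fit, b_fit, c_fit = fit_exponential(trials, mga, candidate_rates)
t50 = trials_to_half_adaptation(c_fit)
print(f"a={a_fit:.2f} b={b_fit:.2f} c={c_fit:.2f} T50={t50:.2f}")
```

A grid search keeps the example free of external dependencies; with real, noisy data a dedicated nonlinear least-squares routine would normally be preferable.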

A significance level of P < 0.05 was chosen for all ANOVA analyses described above.

Acknowledgments

This work was supported by grants from the Swedish Medical Research Council (project 08667) and the 5th Framework Program of EU (projects: QLG3-CT-1999-00448 and IST-2001-33073). We thank Göran Westling for constructing the experimental apparatus and Anders Bäckström for computer support.

The publication costs of this article were defrayed in part by payment of page charges. This article must therefore be hereby marked “advertisement” in accordance with 18 USC section 1734 solely to indicate this fact.

Article and publication are at http://www.learnmem.org/cgi/doi/10.1101/lm.71804.

References

- Aglioti, S., DeSouza, J.F., and Goodale, M.A. 1995. Size-contrast illusions deceive the eye but not the hand. Curr. Biol. 5: 679-685.

- Birznieks, I., Jenmalm, P., Goodwin, A.W., and Johansson, R.S. 2001. Encoding of direction of fingertip forces by human tactile afferents. J. Neurosci. 21: 8222-8237.

- Clark, J.J. and Yuille, A.L. 1990. Data fusion for sensory information processing systems. Kluwer Academic, Boston, MA.

- Ernst, M.O. and Banks, M.S. 2002. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415: 429-433.

- Ernst, M.O., Banks, M.S., and Bülthoff, H.H. 2000. Touch can change visual slant perception. Nat. Neurosci. 3: 69-73.

- Fernàndez-Ruiz, J. and Díaz, R. 1999. Prism adaptation and aftereffect: Specifying the properties of a procedural memory system. Learn. Mem. 6: 47-53.

- Flanagan, J.R. and Johansson, R.S. 2003. Action plans used in action observation. Nature 424: 769-771.

- Gentilucci, M., Daprati, E., Toni, I., Chieffi, S., and Saetti, M.C. 1995. Unconscious updating of grasp motor program. Exp. Brain Res. 105: 291-303.

- Hillis, J.M., Ernst, M.O., Banks, M.S., and Landy, M.S. 2002. Combining sensory information: Mandatory fusion within, but not between, senses. Science 298: 1627-1630.

- Hu, Y. and Goodale, M.A. 2000. Grasping after a delay shifts size-scaling from absolute to relative metrics. J. Cogn. Neurosci. 12: 856-868.

- Iberall, T., Bingham, G., and Arbib, M. 1986. Opposition space as a structuring concept for the analysis of skilled hand movements. In Generation and modulation of action patterns. (eds. H. Heuer and C. Fromm), pp. 158-173. Springer-Verlag, Berlin, Germany.

- Jakobson, L.S. and Goodale, M.A. 1991. Factors affecting higher-order movement planning: A kinematic analysis of human prehension. Exp. Brain Res. 86: 199-208.

- Jeannerod, M. 1984. The timing of natural prehension movements. J. Mot. Behav. 16: 235-254.

- Jenmalm, P. and Johansson, R.S. 1997. Visual and somatosensory information about object shape control manipulative fingertip forces. J. Neurosci. 17: 4486-4499.

- Johansson, R.S. 1998. Sensory input and control of grip. Novartis Foundation Symp. 218: 45-59.

- Johansson, R.S., Westling, G., Backstrom, A., and Flanagan, J.R. 2001. Eye-hand coordination in object manipulation. J. Neurosci. 21: 6917-6932.

- Kennett, S., Taylor-Clarke, M., and Haggard, P. 2001. Noninformative vision improves the spatial resolution of touch in humans. Curr. Biol. 11: 1188-1191.

- Lackner, J.R. and Dizio, P. 1994. Rapid adaptation to Coriolis force perturbations of arm trajectory. J. Neurophysiol. 72: 299-313.

- Land, M.F. and Hayhoe, M. 2001. In what ways do eye movements contribute to everyday activities? Vision Res. 41: 3559-3565.

- Marteniuk, R.G., Leavitt, J.L., MacKenzie, C.L., and Athènes, S. 1990. Functional relationships between grasp and transport components in a prehension task. Hum. Mov. Sci. 9: 149-176.

- Newport, R., Hindle, J.V., and Jackson, S.R. 2001. Links between vision and somatosensation. Vision can improve the felt position of the unseen hand. Curr. Biol. 11: 975-980.

- Newport, R., Rabb, B., and Jackson, S.R. 2002. Noninformative vision improves haptic spatial perception. Curr. Biol. 12: 1661-1664.

- Rabin, E., Bortolami, S.B., DiZio, P., and Lackner, J.R. 1999. Haptic stabilization of posture: Changes in arm proprioception and cutaneous feedback for different arm orientations. J. Neurophysiol. 82: 3541-3549.

- Sainburg, R.L., Poizner, H., and Ghez, C. 1993. Loss of proprioception produces deficits in interjoint coordination. J. Neurophysiol. 70: 2136-2147.

- Sandström, G., Bäckström, A., and Olsson, K.Å. 1996. REMAC: A video-based motion analyser interfacing to an existing flexible sampling system. J. Neurosci. Meth. 69: 205-211.

- Smeets, J.B. and Brenner, E. 2001. Independent movements of the digits in grasping. Exp. Brain Res. 139: 92-100.

- van Beers, R.J., Sittig, A.C., and Gon, J.J. 1999. Integration of proprioceptive and visual position-information: An experimentally supported model. J. Neurophysiol. 81: 1355-1364.

- van Beers, R.J., Wolpert, D.M., and Haggard, P. 2002. When feeling is more important than seeing in sensorimotor adaptation. Curr. Biol. 12: 834-837.

- Welch, R.B. 1978. Perceptual modification. Academic Press, New York.

- Wing, A.M., Turton, A., and Fraser, C. 1986. Grasp size and accuracy of approach in reaching. J. Mot. Behav. 18: 245-260.