Several previous studies have evaluated the peer review process for journals or grant agencies. However, the lack of formal investigations evaluating the internal review processes performed within academic organizations prompted the authors of this questionnaire-based study to assess the perceptions of authors and reviewers regarding the review of grants and manuscripts in their organization, with the aim of informing future changes and improvements to the process.

Keywords: Authors, Peer review, Research consortium, Reviewers, Survey

Abstract

RATIONALE AND OBJECTIVES:

All grants and manuscripts bearing the Canadian Critical Care Trials Group name undergo internal peer review before submission to a granting agency or journal. The authors sought to formally evaluate authors’ and reviewers’ perceptions of this process.

METHODS:

The authors developed, tested and administered two electronic nine-item questionnaires for authors and two electronic 13-item questionnaires for reviewers. Likert scale, multiple choice and free-text responses were used.

RESULTS:

Twenty-one of 29 (72%) grant authors and 16 of 22 (73%) manuscript authors responded. Most author respondents were somewhat or very satisfied with the turnaround time, quality of the review and the review process. Two-thirds of grant (13 of 20 [65%]) and manuscript authors (11 of 16 [69%]) reported one or more successful submissions after review. Changes made to grants based on reviews were predominantly editorial and involved the background, rationale, significance/relevance and the methods/protocol sections. Twenty-one of 47 (45%) grant reviewers and 32 of 44 (73%) manuscript reviewers responded. Most reviewer respondents reported a good to excellent overall impression of the review process, a good fit between their expertise and interests and the documents they reviewed, and ample time to review. Although most respondents agreed with the current nonblinded review process, more grant than manuscript reviewers preferred a structured review format.

CONCLUSIONS:

The authors report a highly favourable evaluation of an existing internal review process. The present evaluation has assisted in understanding and improving the current internal review process.

Abstract

BACKGROUND AND OBJECTIVES:

Grants and manuscripts endorsed by the Canadian Critical Care Trials Group undergo internal peer review before they are submitted. The authors sought to formally evaluate authors’ and reviewers’ perceptions of this process.

METHODS:

The authors developed, tested and distributed two electronic nine-item questionnaires to authors and two electronic 13-item questionnaires to reviewers. Likert-scale, multiple-choice and open-ended responses were used.

RESULTS:

Twenty-one of 29 grant authors (72%) and 16 of 22 manuscript authors (73%) responded. Most authors were somewhat or very satisfied with the turnaround time, the quality of the review and the review process. Two-thirds of grant authors (13 of 20 [65%]) and manuscript authors (11 of 16 [69%]) reported at least one successful submission after the review. Changes made to grant applications in response to the reviews were mostly editorial and involved the background, objectives, significance and relevance, and methodology and protocol. Twenty-one of 47 grant reviewers (45%) and 32 of 44 manuscript reviewers (73%) responded. Most reported a good to excellent overall impression of the review process, a good match between their expertise and interests and the grants they reviewed, and ample time to review. Although most respondents approved of the current nonblinded review process, more grant reviewers than manuscript reviewers preferred a structured review format.

CONCLUSIONS:

The authors report a highly favourable evaluation of an existing internal review process. The present evaluation has helped in understanding and improving this process.

Internal review of research grant applications and manuscripts to be submitted for publication is an informal process at many institutions. The Canadian Critical Care Trials Group (CCCTG) is an investigator-funded consortium of critical care researchers in Canada whose vision is to foster investigator-initiated, collaborative, multidisciplinary clinical research; to advance critical care research methodology; to mentor highly qualified investigators; and to promote a culture of inquiry into the practice of critical care. Under explicit terms of reference, all grant applications and manuscripts bearing the name of the CCCTG are submitted to the CCCTG Grants and Manuscripts Committee before submission to the granting agency or journal. Each document is sent to at least two internal reviewers (members of the CCCTG), where possible one content expert and one methodology expert. The review is open: authors know who is reviewing their document and reviewers know the identity of the authors. However, reviewers are not coauthors or coinvestigators of the documents they review. Furthermore, the review is voluntary and unstructured. Reviewers are asked to return their comments to the authors within two weeks. Although this process has been in place for several years, it has not yet been formally evaluated.

There are several published evaluations of peer review (1–7), but all of them concern reviews performed for journals or granting agencies. We are not aware of any evaluations of internal reviews performed within academic organizations. Therefore, the purpose of the present study was to evaluate the internal review process for grants and manuscripts in the CCCTG from the perspectives of both the authors and the reviewers of these documents.

METHODS

Questionnaire development, testing and formatting

To evaluate the processes and outcomes of the internal review process, two nine-item questionnaires were developed, tested and administered for authors (one survey for authors of grants, and one survey for authors of manuscripts) and two 13-item questionnaires for reviewers (one survey for reviewers of grants, and one survey for reviewers of manuscripts). The present study was approved by the University of British Columbia/Providence Health Care Research Ethics Board (Vancouver, British Columbia). Two authors (PD, KB) generated and reduced items for possible inclusion in the questionnaires. Likert scale (8), multiple choice and free-text response formats were used. All surveys were published for completion on SurveyMonkey (Online Appendixes, go to www.pulsus.com).

Each pair of questionnaires (for grants and manuscripts), administered to authors (first round) and to reviewers (second round), was pretested (9). Twelve CCCTG members (eight for the authors’ questionnaire and four for the reviewers’ questionnaire) assisted with questionnaire pretesting.

Questionnaire administration

Authors of grants (n=29) and manuscripts (n=22) that were submitted for internal review between January 2009 and September 2011 were first invited to complete a nine-item questionnaire addressing authors’ perspectives on the peer review process. Subsequently, reviewers of grants (n=47) and manuscripts (n=44) over the same time period were invited to complete a 13-item questionnaire addressing reviewers’ perspectives on the review process. Authors or reviewers who had submitted or reviewed more than one grant or manuscript were asked to consider all of these reviews in their responses to each questionnaire. Both author and reviewer questionnaires were accompanied by a cover letter with separate links to the grant and manuscript questionnaires; the author and reviewer letters were sent by e-mail approximately six months apart. A single e-mail reminder was sent to authors and reviewers approximately one month after the initial invitation.

Descriptive statistics and free-text comments were tabulated from the aggregate responses to all questionnaires.
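The article does not describe how its percentages were computed. The following is a minimal sketch that assumes simple proportions rounded half-up to whole percentages. The rounding rule and the software are assumptions, not stated in the source, and the function names `percent` and `tabulate` are hypothetical. Under these assumptions, the sketch reproduces the response rates reported in the Results.

```python
"""Sketch: tabulating survey response rates as whole-number percentages.

Assumption: proportions are rounded half-up to the nearest whole percent;
the article does not state its rounding rule or the software it used.
"""


def percent(responded: int, invited: int) -> int:
    """Return 100 * responded / invited, rounded half-up, using integer math.

    Integer arithmetic avoids Python's round-half-to-even behaviour and
    floating-point error at exact .5 boundaries.
    """
    if invited <= 0:
        raise ValueError("invited must be positive")
    if not 0 <= responded <= invited:
        raise ValueError("responded must lie between 0 and invited")
    return (200 * responded + invited) // (2 * invited)


# (responded, invited) counts as reported in the Results section.
RESPONSES = {
    "grant authors": (21, 29),
    "manuscript authors": (16, 22),
    "grant reviewers": (21, 47),
    "manuscript reviewers": (32, 44),
}


def tabulate(counts: dict[str, tuple[int, int]]) -> dict[str, int]:
    """Map each respondent group to its response rate in percent."""
    return {group: percent(k, n) for group, (k, n) in counts.items()}


if __name__ == "__main__":
    for group, rate in tabulate(RESPONSES).items():
        k, n = RESPONSES[group]
        print(f"{group:<22} {k:>3} of {n:<3} ({rate}%)")
```

The same arithmetic applies to the reported success rates: `percent(13, 20)` gives 65 and `percent(11, 16)` gives 69. The sketch uses integer arithmetic instead of Python's built-in `round`, which rounds exact halves to the nearest even number. For example, `round(12.5)` returns 12, whereas `percent(1, 8)` returns 13.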

RESULTS

Authors of grants and manuscripts

Twenty-one of 29 (72%) grant authors and 16 of 22 (73%) manuscript authors responded. Most respondents were somewhat or very satisfied with the turnaround time and quality of the review, and with the review process overall (Table 1). Where institutional internal peer review was required, these internal reviews by members of the CCCTG replaced it for 33% of grant authors and 75% of manuscript authors. Twenty-eight percent of grant authors and 48% of manuscript authors reported that reviewers for granting agencies or journals identified new scientific issues that the CCCTG review process had not identified. Of 20 grant authors who answered a question about grant success, 13 (65%) reported that one or more of the reviewed grants were funded. Of 16 manuscript authors who answered a parallel question about manuscript success, 11 (69%) reported that one or more of the reviewed manuscripts were accepted for publication.

TABLE 1.

Authors’ satisfaction with the review process

| Item | Grants (%) | Manuscripts (%) |
|---|---|---|
| Somewhat or very satisfied with turnaround time of review | 95 | 94 |
| Somewhat or very satisfied with quality of review | 95 | 94 |
| Somewhat or very satisfied overall with review process | 95 | 100 |
| Review supplanted institutional internal review (if applicable) | 33 | 75 |

Data presented as % of respondents

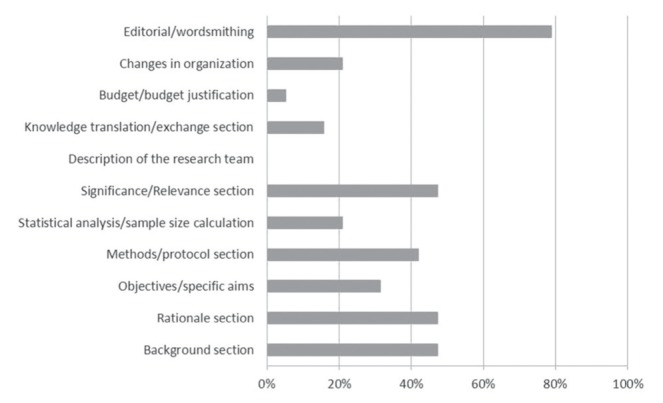

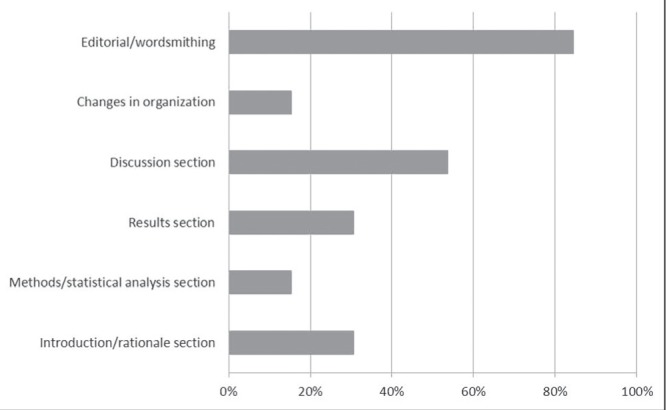

Changes made to grant applications based on these reviews were predominantly editorial and involved the background, rationale, significance/relevance and methods/protocol sections. Less frequent changes to grant applications involved the budget/budget justification, knowledge translation/exchange, organization, statistical analysis/sample size calculation and objectives, but never the description of the research team (Figure 1). Changes made to manuscripts based on these reviews were primarily editorial and most frequently addressed the discussion section. Less frequent changes to manuscripts involved the introduction/rationale, results and methods/statistical analysis sections, and overall organization (Figure 2).

Figure 1. Changes made to grants by authors based on the internal reviews (percent of respondents). Responses to the question, “Which changes did you make to your grant(s) based on the reviews you received? (Please check ALL that apply)”

Figure 2. Changes made to manuscripts by authors based on the internal reviews (percent of respondents). Responses to the question, “Which changes did you make to your manuscript(s) based on the reviews you received? (Please check ALL that apply)”

In response to open-ended questions about what worked well and what could be improved in the review process, authors of grants and manuscripts praised the rapid turnaround time, the experience and expertise of the reviewers, and the thoroughness of the reviews. Some respondents also suggested pairing a senior reviewer with a junior reviewer, and posing specific questions to reviewers or using an explicit review template (data not shown).

Reviewers of grants and manuscripts

Twenty-one of 47 (45%) grant reviewers and 32 of 44 (73%) manuscript reviewers responded. Approximately one-half (11 of 21 [52%]) of the grant reviewers reported reviewing one to three grants per year for other agencies. In contrast, one-half of the manuscript reviewers reported reviewing either nine to 12 (10 of 32 [31%]) or more than 12 (six of 32 [19%]) manuscripts per year for journals. Most respondents reported a good to excellent overall impression of the grant and manuscript review process, a good fit between their expertise/interests and the documents they were asked to review, and ample time to review grants and manuscripts (Table 2). Although most respondents agreed with the current ‘open’ or nonblinded review process, more grant than manuscript reviewers preferred a structured review format. Most reviewers would have liked to see their co-reviewers’ reviews and to receive constructive, written feedback on the quality of their reviews from the Grants and Manuscripts Committee. Of reviewers who answered a question about preferences, most grant (10 of 15 [75%]) and nearly one-half of manuscript (13 of 27 [48%]) reviewers did not express a preference to review only grants and manuscripts on topics within their areas of interest and expertise.

TABLE 2.

Reviewers’ satisfaction with the review process (percent of respondents)

| Item | Grants (%) | Manuscripts (%) |
|---|---|---|
| Overall impression of process, good or excellent | 95 | 94 |
| ‘Good fit’ between your expertise and documents you reviewed, agree or strongly agree | 100 | 78 |
| Ample time to review, yes | 70 | 88 |
| Agree with ‘open’ review process, yes | 90 | 88 |
| Prefer structured or unstructured format for review, structured | 76 | 44 |
| Would you like to see your co-reviewer’s review, yes | 71 | 75 |
| Would you like feedback about the quality of your review from the CCCTG, yes | 62 | 56 |

Data presented as % of respondents. CCCTG: Canadian Critical Care Trials Group.

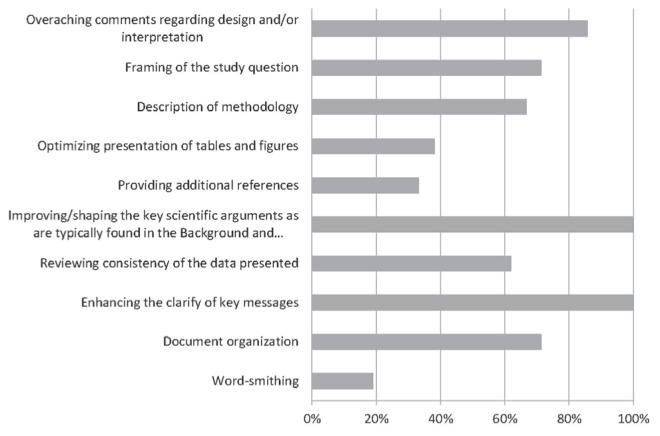

Most grant reviewers believed that their role was to enhance the clarity of key messages, improve or shape key scientific arguments, and make overarching comments regarding design and/or interpretation. In addition, some grant reviewers believed that their role was to frame the study question, to review the consistency of the data presented, and to aid in document organization and description of methodology. Only a few grant reviewers believed that their role was to provide additional references, optimize presentation of tables and figures, or wordsmith. Two additional grant reviewers provided other responses highlighting a sense of altruism in helping in whatever manner possible, and a desire to replicate the grant or manuscript review process in other contexts (Figure 3).

Figure 3. Perceived roles according to grant reviewers (percent of respondents). Responses to the question, “Which of the following do you believe are the roles of the Canadian Critical Care Trials Group grant reviewers? (Please check ALL that apply)”

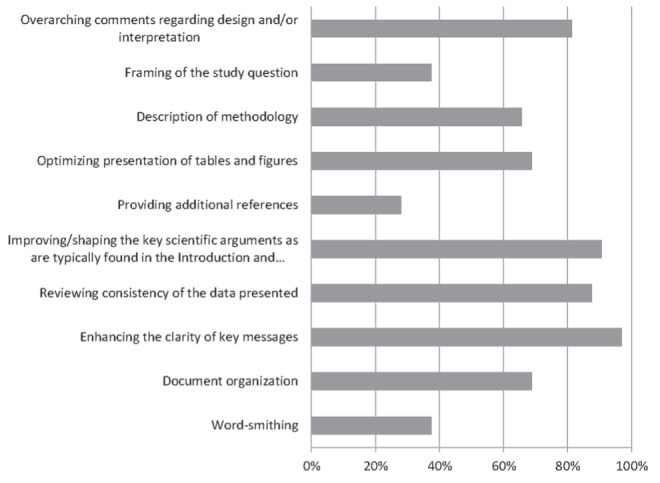

Similar to grant reviewers, most manuscript reviewers believed that their role was to enhance the clarity of key messages, improve or shape the key scientific arguments, review the consistency of the data presented, and make overarching comments regarding design and/or interpretation. Some manuscript reviewers believed that their role was to help optimize the presentation of tables and figures, document organization and the description of methodology. Only a few manuscript reviewers believed that their role was to aid in framing the study question, to wordsmith or to provide additional references. Other responses emphasized altruism in the review process and highlighted the importance of identifying grammatical and typographical errors and of editing (Figure 4).

Figure 4. Perceived roles according to manuscript reviewers (percent of respondents). Responses to the question, “Which of the following do you believe are the roles of the Canadian Critical Care Trials Group manuscript reviewers? (Please check ALL that apply)”

In response to open-ended questions about what worked well and what could be improved in the review process, reviewers praised the collegial and timely process but favoured a structured review template that included specific questions from authors to reviewers. They also recommended a ‘gratitude’ report to reviewers indicating how many reviews each individual had performed (data not shown).

DISCUSSION

The grant and manuscript review process of the CCCTG is highly regarded by authors and reviewers and is useful in modifying grants and manuscripts, and authors reported good success rates after review. Most author respondents were somewhat or very satisfied with the turnaround time, the quality of the review and the review process overall. Changes made to grant applications based on the review were predominantly editorial and involved the background, rationale, significance/relevance and methods/protocol sections. Most reviewer respondents reported a good to excellent overall impression of the grant and manuscript review process, and a good fit between their expertise/interests and the documents they were asked to review. Most reviewer respondents agreed with the current ‘open’ or nonblinded review process, although more grant reviewers than manuscript reviewers preferred a structured review format. Most reviewers would have liked to see their co-reviewers’ reviews and to receive constructive written feedback about the quality of their own reviews. Most grant reviewers reported that their role was to enhance the clarity of key messages, improve or shape key scientific arguments, and provide overarching comments regarding design and interpretation. Similarly, most manuscript reviewers reported that their role was to enhance the clarity of key messages, shape the key scientific arguments, review the consistency of the data presented, and make overarching comments regarding design and interpretation. Opportunities for improvement include a more systematic and explicit process in which authors pose specific questions to the reviewers, use of a structured review format, feedback to reviewers on the quality of their reviews, and access to co-reviewers’ comments.

Peer review of grants and manuscripts is used extensively in science; however, the process has several limitations. For example, manuscripts are more likely to be recommended for publication if they describe a positive outcome than if they report no difference between experimental groups (1). In addition, inter-rater reliability among reviewers is low (2,3). Use of reporting guidelines in the review of manuscripts is associated with improvement of manuscripts, but authors adhere more closely to suggestions from conventional reviews than to reviews based on these guidelines (4). One approach to reducing inter-reviewer differences in grant review is to use a panel score; however, panel discussion does not improve the reliability of panel scores (6). Although authors and reviewers differ on the value of blinding (authors prefer unblinded reviews and reviewers prefer blinded reviews [7]), the quality of reviews and manuscript scores are independent of whether the review is blinded (5,7). Alternatives to traditional peer review have been proposed as potential solutions to some of these limitations (10). These alternatives include ranking instead of reviewing, bidding to review instead of being asked to review, and peer evaluation through social media.

We are not aware of any published evaluations of an internal process such as the one used in the CCCTG. This process was designed to help authors strengthen their grants and manuscripts and thereby improve their success rates in funding and publication, respectively. Although we have no data from the period that preceded this process, the strongly positive feedback from this evaluation suggests that the process is working well. Nevertheless, we learned that the process could be improved in two ways. First, junior reviewers could be paired with senior investigators, and content experts with methodologists. Second, the review process could be made more systematic and explicit. In our survey, both authors and reviewers suggested that authors pose specific questions to reviewers so that reviewers can focus on what the authors need most. This proposed improvement resembles the research studio model (11), in which an investigator presents a proposal and specific questions to an in-person panel of experts. The studio process has also been rated very favourably (11).

Strengths of the present study include the explicit approach to survey development, the inclusion of all authors and reviewers over a two- to three-year period as potential respondents, and high response rates for three of the four questionnaires. Limitations include the relatively small pool of potential respondents and the fact that some individuals completed questionnaires both as authors and as reviewers. Our findings may not be generalizable to other research consortia with different organizational cultures. In addition, we did not use a previously developed review quality instrument (12) because we intentionally developed our survey items based on input from members of the CCCTG. Coincidentally, many of the items that we developed were similar to the items on the review quality instrument (12).

SUMMARY

We report a highly favourable evaluation of an existing internal review process for grants and manuscripts. The responses and constructive feedback have enabled authors to pose questions to reviewers, fostered development of structured templates for future grant and manuscript reviews, and are expected to inform additional changes to the review process in the near future.

Acknowledgments

All three authors fulfilled all of the following three criteria: substantial contributions to research design, or the acquisition, analysis or interpretation of data; drafting the manuscript or revising it critically; and approval of the submitted and final versions. The authors express their gratitude to the members of the CCCTG for their assistance in designing, testing and completing the questionnaires.

APPENDIX

REFERENCES

1. Emerson GB, Warme WJ, Wolf FM, Heckman JD, Brand RA, Leopold SS. Testing for the presence of positive-outcome bias in peer review: A randomized controlled trial. Arch Intern Med. 2010;170:1934–9. doi: 10.1001/archinternmed.2010.406.

2. Bornmann L, Mutz R, Daniel H-D. A reliability-generalization study of journal peer reviews: A multilevel meta-analysis of inter-rater reliability and its determinants. PLoS ONE. 2010;5:e14331. doi: 10.1371/journal.pone.0014331.

3. Jackson JL, Srinivasan M, Rea J, Fletcher KE, Kravitz RL. The validity of peer review in a general medicine journal. PLoS ONE. 2011;6:e22475. doi: 10.1371/journal.pone.0022475.

4. Cobo E, Cortés J, Ribera JM, et al. Effect of using reporting guidelines during peer review on quality of final manuscripts submitted to a biomedical journal: Masked randomised trial. BMJ. 2011;343:d6783. doi: 10.1136/bmj.d6783.

5. Alam M, Kim NA, Havey J, et al. Blinded vs. unblinded peer review of manuscripts submitted to a dermatology journal: A randomized multi-rater study. Br J Dermatol. 2011;165:563–7. doi: 10.1111/j.1365-2133.2011.10432.x.

6. Fogelholm M, Leppinen S, Auvinen A, Raitanen J, Nuutinen A, Vaananen K. Panel discussion does not improve reliability of peer review for medical research grant proposals. J Clin Epidemiol. 2012;65:47–52. doi: 10.1016/j.jclinepi.2011.05.001.

7. Vinther S, Haagen Nielsen O, Rosenberg J, Keiding N, Schroeder TV. Same review quality in open versus blinded peer review in “Ugeskrift for Læger”. Dan Med J. 2012;59:A4479–A83.

8. Elder JP, Artz LM, Beaudin P, et al. Multivariate evaluation of health attitudes and behaviors: Development and validation of a method for health promotion research. Prev Med. 1985;14:34–54. doi: 10.1016/0091-7435(85)90019-2.

9. Burns KE, Duffett M, Kho ME, et al; ACCADEMY Group. A guide for the design and conduct of self-administered surveys of clinicians. CMAJ. 2008;179:245–52. doi: 10.1503/cmaj.080372.

10. Birukou A, Wakeling JR, Bartolini C, et al. Alternatives to peer review: Novel approaches for research evaluation. Front Comput Neurosci. 2011;5:56. doi: 10.3389/fncom.2011.00056.

11. Byrne DW, Biaggioni I, Bernard GR, et al. Clinical and translational research studios: A multidisciplinary internal support program. Acad Med. 2012;87:1052–9. doi: 10.1097/ACM.0b013e31825d29d4.

12. van Rooyen S, Black N, Godlee F. Development of the Review Quality Instrument (RQI) for assessing peer reviews of manuscripts. J Clin Epidemiol. 1999;52:625–9. doi: 10.1016/s0895-4356(99)00047-5.