Abstract

A goal of many health studies is to determine the causal effect of a treatment or intervention on health outcomes. Often, it is not ethically or practically possible to conduct a perfectly randomized experiment and instead an observational study must be used. A major challenge to the validity of observational studies is the possibility of unmeasured confounding (i.e., unmeasured ways in which the treatment and control groups differ before treatment administration which also affect the outcome). Instrumental variables analysis is a method for controlling for unmeasured confounding. This type of analysis requires the measurement of a valid instrumental variable, which is a variable that (i) is independent of the unmeasured confounding; (ii) affects the treatment; and (iii) affects the outcome only indirectly through its effect on the treatment. This tutorial discusses the types of causal effects that can be estimated by instrumental variables analysis; the assumptions needed for instrumental variables analysis to provide valid estimates of causal effects and sensitivity analysis for those assumptions; methods of estimation of causal effects using instrumental variables; and sources of instrumental variables in health studies.

Keywords: instrumental variables, observational study, confounding, comparative effectiveness

1. Introduction

The goal of many medical studies is to estimate the causal effect of one treatment vs. another, i.e., to compare the effectiveness of giving patients one treatment vs. another. To compare the effects of treatments, randomized controlled studies are the gold standard in medicine. Unfortunately, randomized controlled studies cannot answer many comparative effectiveness questions because of cost or ethical constraints. Observational studies offer an alternative source of data for developing evidence regarding the comparative effectiveness of different treatments. However, a major challenge for observational studies is confounders – pre-treatment variables that affect the outcome and differ in distribution between the group of patients who receive one treatment vs. the group of patients who receive another treatment.

The impact of confounders on the estimation of a causal treatment effect can be mitigated by methods such as propensity scores, regression and matching[1–3]. However, these methods only control for measured confounders and do not control for unmeasured confounders.

The instrumental variable (IV) method was developed to control for unmeasured confounders. The basic idea of the IV method is to 1) find a variable that influences which treatment subjects receive, is independent of unmeasured confounders, and affects the outcome only through its effect on the treatment; 2) use this variable to extract variation in the treatment that is free of the unmeasured confounders; and 3) use this confounder-free variation in the treatment to estimate the causal effect of the treatment. In this sense, the IV method seeks to find a randomized experiment embedded in an observational study and to use this embedded randomized experiment to estimate the treatment effect.
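The three steps above can be illustrated with a small simulation. This is a minimal sketch assuming a linear outcome model with a constant treatment effect and a single binary IV; the data, effect sizes and the helper `two_stage_least_squares` are hypothetical. Step 2 corresponds to the first-stage regression of the treatment on the IV (its fitted values carry only IV-driven variation), and step 3 to regressing the outcome on those fitted values, i.e., two-stage least squares.

```python
import numpy as np


def two_stage_least_squares(y, d, z):
    """Just-identified two-stage least squares with an intercept (one treatment, one IV)."""
    ones = np.ones(len(z))
    # Stage 1: regress the treatment on the IV; the fitted values contain only
    # the variation in D that is driven by Z, and hence not by U.
    Z = np.column_stack([ones, z])
    gamma, *_ = np.linalg.lstsq(Z, d, rcond=None)
    d_hat = Z @ gamma
    # Stage 2: regress the outcome on the fitted (confounder-free) treatment.
    X_hat = np.column_stack([ones, d_hat])
    beta, *_ = np.linalg.lstsq(X_hat, y, rcond=None)
    return beta[1]


# Hypothetical simulated data (not the NICU data).
rng = np.random.default_rng(0)
n = 200_000
u = rng.normal(size=n)                           # unmeasured confounder (e.g., severity)
z = rng.binomial(1, 0.5, size=n).astype(float)   # IV, generated independently of u
# Sicker subjects (larger u) and encouraged subjects (z = 1) are more likely to be treated.
d = (1.5 * z + u + rng.normal(size=n) > 0.5).astype(float)
true_effect = -1.0
y = true_effect * d + 2.0 * u + rng.normal(size=n)  # u also raises the outcome

naive = y[d == 1].mean() - y[d == 0].mean()
iv_estimate = two_stage_least_squares(y, d, z)
print(f"naive difference: {naive:.2f}  2SLS estimate: {iv_estimate:.2f}")
```

In this simulation the naive treated vs. untreated difference is pulled upward by U, while the 2SLS estimate should land near the true effect of -1. Computing the point estimate by hand like this is fine, but the standard errors from a hand-run second-stage regression are not correct, which is one reason to use dedicated IV software.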

1.1. Tutorial Aims and Outline

IV methods have long been used in economics and are increasingly being used to compare treatments in health studies. There have been many important contributions to IV methods in recent years. The goal of this tutorial is to bring together this literature to provide a practical guide on how to use IV methods to compare treatments in a health study. We focus on several important practical issues in using IVs: (1) when an IV analysis is needed and when it is feasible; (2) what the sources of IVs for health studies are; (3) how to use the IV method to estimate treatment effects, including how to use currently available software; (4) for what population the IV estimates the treatment effect; (5) how to assess whether a proposed IV satisfies the assumptions for an IV to be valid; (6) how to carry out sensitivity analysis for violations of IV assumptions; and (7) how the strength of a potential IV affects its usefulness for a study.

In the rest of this section, we present an example of using the IV method that we will use throughout the paper. In Section 2, we discuss situations when one should consider using the IV method. In Section 3, we discuss common sources of IVs for health studies. In Section 4, we discuss IV assumptions and estimation for a binary IV and binary treatment. In Section 5, we discuss the treatment effect that the IV method estimates. In Section 6, we provide a framework for assessing IV assumptions and sensitivity analysis for violations of assumptions. In Section 7, we demonstrate the consequences of weak instruments. In Section 8, we discuss power and sample size calculations for IV studies. In Section 9, we present techniques for analyzing outcomes that are not continuous, such as binary, survival and multinomial outcomes. In Section 10, we discuss multi-valued and continuous IVs. In Section 11, we discuss using multiple IVs. In Section 12, we present IV methods for multi-valued and continuously valued treatments. In Section 13, we suggest a framework for reporting IV analyses. In Section 14, we provide examples of using software for IV analysis.

If you are just beginning to familiarize yourself with IVs, we recommend focusing on Sections 1-4, 5.1-5.2, 6-8 and 13-14, while skipping Sections 5.3-5.5 and 9-12. Sections 5.3-5.5 and 9-12 contain interesting, cutting-edge and more specialized applications of IVs that beginners may want to return to later. We include these sections for advanced readers and for those interested in more specialized applications.

Table 1 is a table of notation that will be used throughout the paper.

Table 1.

Table of notation.

| Notation | Meaning |
|---|---|
| Y | observed outcome |
| Y1, Y0 | potential outcome if treatment is 1, potential outcome if treatment is 0 |
| D | observed treatment |
| D1, D0 | potential treatment if instrumental variable (IV) is 1, potential treatment if IV is 0 |
| Z | observed IV |
| X | measured confounders |
| C | compliance class |
| U | unmeasured confounder |
| CACE | Complier Average Causal Effect, the average effect of treatment for the compliers (C = co) |

1.2. Example: Effectiveness of high level neonatal intensive care units

As an example where the IV method is useful, consider comparing the effectiveness of delivering premature babies in high volume, high technology neonatal intensive care units (high level NICUs) vs. local hospitals (low level NICUs), where a high level NICU is defined as a NICU that has the capacity for sustained mechanically assisted ventilation and delivers at least 50 premature infants per year. [4] used data from birth and death certificates and the UB-92 form that hospitals use for billing purposes to study premature babies delivered in Pennsylvania. The data set covered the years 1995-2005 (192,078 premature babies). For evaluating the effect of NICU level on baby outcomes, a baby's health status before delivery is an important confounder. Table 2 shows that babies delivered at high level NICUs tend to have lower birthweights and to be more premature, and their mothers tend to have more problems during pregnancy. Although the available confounders, which include those in Table 2 and several other variables that are given in [4], describe certain aspects of a baby's health prior to delivery, the data set is missing several important confounding variables such as fetal heart tracing results, the severity of maternal problems during pregnancy (e.g., we only know whether the mother had pregnancy induced hypertension, not its severity) and the mother's adherence to prenatal care guidelines.

Table 2.

Imbalance of measured covariates between babies delivered at high level NICUs vs. low level NICUs. The standardized difference is the difference in means between the two groups in units of the pooled within-group standard deviation, i.e., for a binary characteristic X, where D = 1 or 0 according to whether the baby was delivered at a high or low level NICU, the standardized difference is $\dfrac{P(X=1 \mid D=1) - P(X=1 \mid D=0)}{\sqrt{\{P(X=1 \mid D=1)[1 - P(X=1 \mid D=1)] + P(X=1 \mid D=0)[1 - P(X=1 \mid D=0)]\}/2}}$.

| Characteristic X | P(X|High Level NICU) | P(X|Low Level NICU) | Standardized Difference |
|---|---|---|---|
| Birthweight < 1500g | 0.12 | 0.05 | 0.28 |
| Gestational Age <= 32 weeks | 0.18 | 0.07 | 0.34 |
| Mother College Graduate | 0.28 | 0.23 | 0.12 |
| African American | 0.22 | 0.09 | 0.36 |
| Gestational Diabetes | 0.05 | 0.05 | 0.03 |
| Diabetes Mellitus | 0.02 | 0.01 | 0.06 |
| Pregnancy Induced Hypertension | 0.12 | 0.08 | 0.13 |
| Chronic Hypertension | 0.02 | 0.01 | 0.07 |
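As a check on the formula in the Table 2 caption, the sketch below recomputes standardized differences from the proportions in the table. The published values were presumably computed from unrounded proportions, so recomputing from the rounded two-decimal proportions reproduces only some rows exactly (for example, gestational age and pregnancy induced hypertension); the function name is hypothetical.

```python
import math


def standardized_difference(p_treated: float, p_control: float) -> float:
    """Difference in proportions of a binary covariate, in units of the pooled
    within-group standard deviation sqrt((p1(1 - p1) + p0(1 - p0)) / 2)."""
    pooled_sd = math.sqrt((p_treated * (1 - p_treated) + p_control * (1 - p_control)) / 2)
    return (p_treated - p_control) / pooled_sd


# (P(X | high level NICU), P(X | low level NICU)) taken from Table 2
rows = {
    "Gestational Age <= 32 weeks": (0.18, 0.07),
    "Pregnancy Induced Hypertension": (0.12, 0.08),
}
for name, (p_high, p_low) in rows.items():
    print(f"{name}: {standardized_difference(p_high, p_low):.2f}")
```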

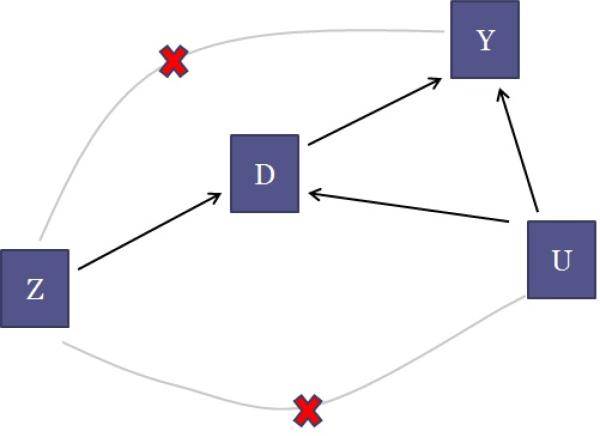

Figure 1, which is an example of a directed acyclic graph [5], illustrates the difficulty with estimating a causal effect in this situation. The arrows denote causal relationships. Read the arrow between the treatment D and outcome Y as follows: changing the value of D causes Y to change. In our example, Y represents in-hospital mortality, and D indicates whether or not a baby was delivered at a high level NICU. Our goal is to understand the arrow connecting D to Y, that is, the effect on in-hospital mortality of delivery at a high level NICU compared to delivery at a low level NICU. Assume that Figure 1 shows relationships within a stratum of the observed covariates X; e.g., Figure 1 represents the relationships only for babies with a gestational age of 33 weeks whose mothers had pregnancy induced hypertension. The U variable causes concern because it represents the unobserved severity of the preemie's condition and is causally linked both to mortality (sicker babies are more likely to die) and to which treatment the preemie receives (sicker babies are more likely to be delivered in high level NICUs). Because U is not recorded in the data set, it cannot be precisely adjusted for using statistical methods such as propensity scores or regression. If the story stopped with just D, Y and U, then the effect of D on Y could not be estimated.

Figure 1.

Directed acyclic graph for the relationship between an instrumental variable Z, a treatment D, unmeasured confounders U and an outcome Y.

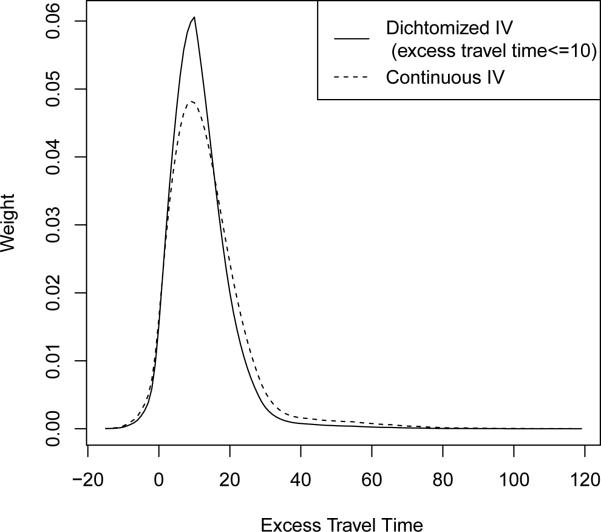

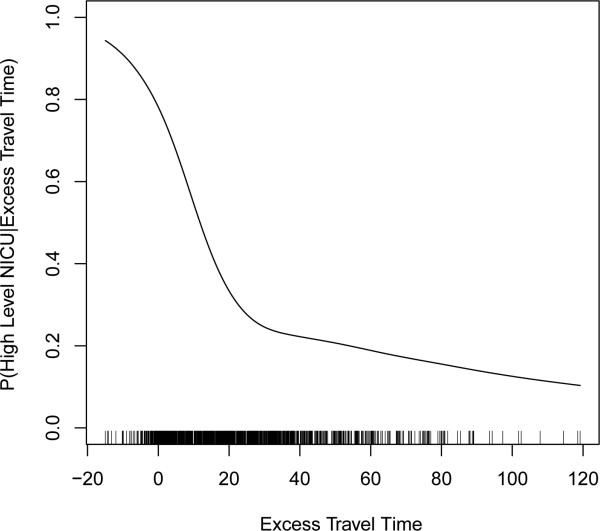

IV estimation makes use of a form of variation in the system that is free of the unmeasured confounding. What is needed is a variable, called an IV (represented by Z in Figure 1), that has very special characteristics. In this example we consider excess travel time as a possible IV. Excess travel time is defined as the time it takes to travel from the mother's residence to the nearest high level NICU minus the time it takes to travel to the nearest low level NICU. We write Z = 1 if the excess travel time is less than or equal to 10 minutes (so that the mother is encouraged by the IV to go to a high level NICU) and Z = 0 if the excess travel time is greater than 10 minutes. (We dichotomize the instrument here for simplicity of discussion.)
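The definition of Z can be written out directly. A minimal sketch, assuming travel times in minutes have already been computed for each mother's residence (how they are computed is not specified here); the function names are hypothetical:

```python
def excess_travel_time(minutes_to_high: float, minutes_to_low: float) -> float:
    """Travel time to the nearest high level NICU minus travel time to the nearest low level NICU."""
    return minutes_to_high - minutes_to_low


def travel_time_iv(minutes_to_high: float, minutes_to_low: float, cutoff: float = 10.0) -> int:
    """Z = 1 if the excess travel time is at most `cutoff` minutes (encouraged toward
    a high level NICU), otherwise Z = 0."""
    return int(excess_travel_time(minutes_to_high, minutes_to_low) <= cutoff)


print(travel_time_iv(25, 20))  # excess travel time 5 minutes  -> 1
print(travel_time_iv(45, 10))  # excess travel time 35 minutes -> 0
```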

There are three key features a variable must have in order to qualify as an IV (see Section 4 for mathematical details on these features and additional assumptions for IV methods). The first feature (represented by the directed arrow from Z to D in Figure 1) is that the IV causes a change in the treatment assignment. When a woman becomes pregnant, she has a high probability of establishing a relationship with the proximal NICU, regardless of the level, because she is not anticipating having a preemie. Proximity as a leading determinant in choosing a facility has been discussed in [6]. By selecting where to live, mothers assign themselves to be more or less likely to deliver in a high level NICU. The fact that changes in the IV are associated with changes in the treatment is verifiable from the data.
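Because the first feature is checkable, a natural first step is to compare the proportion of babies delivered at a high level NICU between Z = 1 and Z = 0. A minimal sketch with hypothetical toy data, using a normal-approximation 95% confidence interval (which is crude for samples this small); the function name is an assumption:

```python
import numpy as np


def first_stage_difference(d, z):
    """Estimate P(D=1 | Z=1) - P(D=1 | Z=0) with a normal-approximation 95% CI."""
    d = np.asarray(d, dtype=float)
    z = np.asarray(z)
    d1, d0 = d[z == 1], d[z == 0]
    p1, p0 = d1.mean(), d0.mean()
    se = np.sqrt(p1 * (1 - p1) / len(d1) + p0 * (1 - p0) / len(d0))
    diff = p1 - p0
    return diff, (diff - 1.96 * se, diff + 1.96 * se)


# Hypothetical toy data: Z = 1 means excess travel time <= 10 minutes,
# D = 1 means delivery at a high level NICU.
z = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
d = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]
diff, (lower, upper) = first_stage_difference(d, z)
print(f"difference in treatment rates: {diff:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```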

The second feature (represented by the crossed out arrow from Z to U) is that the IV is not associated with variation in unobserved variables U that also affect the outcome. That is, Z is not connected to the unobserved confounding that was a worry to begin with. In our example, this would mean unobserved severity is not associated with variation in geography. Since high level NICUs tend to be in urban areas and low level NICUs tend to be the only type in rural areas, this assumption would be dubious if there were high levels of pollutants in urban areas (think of Manchester, England circa the Industrial Revolution) or if there were more pollutants in the drinking water in rural areas than in urban areas. These hypothetical pollutants may have an impact on the unobserved levels of severity. The assumption that the IV is not associated with variation in the unobserved variables, while certainly an assumption, can at least be corroborated by examining the values of variables that are perhaps related to the unobserved variables of concern (see Section 6.1).

The third feature (represented by the crossed out line from Z to Y in Figure 1) is that the IV does not cause the outcome variable to change directly. That is, it is only through its impact on the treatment that the IV affects the outcome. This is often referred to as the exclusion restriction assumption. In our case, the exclusion restriction assumption seems reasonable as presumably a nearby hospital with a high level NICU affects a baby's mortality only if the baby receives care at that hospital. That is, proximity to a high level NICU in and of itself does not change the probability of death for a preemie, except through the increased probability of the preemie being delivered at the high level NICU. See Section 6.2.2 for further discussion about the exclusion restriction in the NICU study.

2. Evaluating the Need for and Feasibility of an IV Analysis

As discussed above, IV methods provide a way to control for unmeasured confounding in comparative effectiveness studies. Although this is a valuable feature of IV methods relative to regression, matching and propensity score methods, which do not control for unmeasured confounding, IV methods have some less attractive features, such as increased variance. When considering whether or not to include an IV analysis in an evaluation of a treatment effect, the first question one should ask is whether an IV analysis is needed. The second question is whether an IV analysis is feasible, in the sense that there is an IV that is close enough to being valid and has a strong enough effect on the treatment to provide useful information about the treatment effect. In this section, we discuss how to think about these two questions.

2.1 Is an IV analysis needed?

The key consideration in whether an IV analysis is needed is how much unmeasured confounding there is. It is useful to evaluate this using both scientific considerations and statistical considerations.

Scientific Consideration

Whether or not there is any unmeasured confounding should first be considered from a scientific point of view. In the NICU example discussed in Section 1.2, mothers (as advised by doctors) who choose to deliver in a faraway high level NICU rather than a nearby low level NICU often do so because they think their baby may be at high risk of having a problem and that delivery at a high level NICU will reduce this risk. Investigators know that many variables, such as those indicating a baby's health prior to delivery, can be confounders (i.e., associated both with delivery at a high level NICU and with in-hospital mortality). They also know that the data set available for analysis is missing several important confounding variables such as fetal heart tracing results, the severity of maternal problems during pregnancy and the mother's adherence to prenatal care guidelines. When unmeasured confounding is a major concern, as in the NICU study, IV analyses are desirable and helpful for better understanding the treatment effect.

Unmeasured confounders are particularly likely to be present when the treatment is intended to help the patient (as compared to unintended exposures)[7]. When two patients who have the same measured covariates are given different treatments, there are often rational but unrecorded reasons. In particular, administrative data often does not contain measurements of important prognostic variables that affect both treatment decisions and outcomes such as lab values (e.g., serum cholesterol levels), clinical variables (e.g., blood pressure and fetal heart tracing results), aspects of lifestyle (e.g., smoking status, eating habits) and measures of cognitive and physical functioning[8, 9].

Common sources of IVs for health studies are discussed in Section 3. When IV analyses are used, the assumptions required for a variable to be a valid IV are usually at best plausible, but not certain. If the assumptions are merely plausible, are IV analyses still useful? Imbens and Rosenbaum [10] provide a helpful discussion of two settings in which IV analyses with plausible but not certain IVs are useful: 1) When there is any concern about unmeasured confounding, IV methods replace the implausible assumption of no unmeasured confounding with an assumption that is plausible, although not certain; 2) When there is concern about unmeasured confounding, IV analyses play an important role in replicating an observational study. Consider two sequences of studies: in the first, each study only adjusts for measured confounders; in the second, each study uses a different IV (one of the studies in this second sequence could also involve only adjusting for measured confounders). Throughout the first sequence of studies, the comparison is likely to be biased in the same way. For example, repeatedly finding that people with more education are healthier, using different survey data sets that do not contain information about genetic endowments or early life experiences, does little to address the concern of unmeasured confounding from these two variables. However, if different IVs are used for education, e.g., a lottery that affects education[11], a regional discontinuity in educational policy[12], a temporal discontinuity in educational policy[13] and distance lived from a college when growing up[14], and if each IV is plausibly, but not certainly, valid, then there may be no reason why these different IVs should provide estimates that are biased in the same direction. If studies with these different IVs all provide evidence that education affects health in the same direction, this would strengthen the evidence for this finding[15]. (When different IVs are used, each IV identifies the average treatment effect for a different subgroup, so we would only expect the findings from the different IVs to agree in direction if the average treatment effects for the different subgroups have the same direction; see Sections 5.5 and 11 for discussion.)

In summary, when unmeasured confounding is a big concern in a study based on one's understanding of the problem and data, investigators should consider IV methods in their analyses. At the same time, if investigators only expect a small amount of unmeasured confounding in their study, [16] suggest that investigators may not want to use IV methods for the primary analysis but may want to consider IV methods for a secondary analysis.

Statistical Tests

In some situations in practice, especially in exploratory studies, investigators may not have enough scientific information to determine whether or not there is unmeasured confounding. In such cases, statistical tests can provide additional insight. The Durbin-Wu-Hausman test is widely used to test whether there is unmeasured confounding[17–19]. The test requires a valid IV. It compares the ordinary least squares estimate and the IV estimate of the treatment effect; a large difference between the two estimates indicates the potential presence of unmeasured confounding.
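One common way to carry out the Durbin-Wu-Hausman comparison is its regression-based (control function) form: regress the treatment on the IV, add the first-stage residual to the outcome regression, and test the residual's coefficient. The sketch below assumes a single treatment, a single valid IV, a linear outcome model and classical (homoskedastic) standard errors; the simulated data and function names are hypothetical, and in practice heteroskedasticity-robust standard errors are often preferred.

```python
import numpy as np


def ols(X, y):
    """OLS coefficients and classical (homoskedastic) standard errors."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    n, k = X.shape
    sigma2 = resid @ resid / (n - k)
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return beta, np.sqrt(np.diag(cov))


def dwh_control_function_t(y, d, z):
    """t statistic on the first-stage residual; a large |t| suggests unmeasured
    confounding, assuming z is a valid IV."""
    ones = np.ones(len(y))
    Z = np.column_stack([ones, z])
    gamma, _ = ols(Z, d)
    v_hat = d - Z @ gamma  # part of D not explained by the IV
    X = np.column_stack([ones, d, v_hat])
    beta, se = ols(X, y)
    return beta[2] / se[2]


rng = np.random.default_rng(1)
n = 50_000
u = rng.normal(size=n)                          # unmeasured confounder
z = rng.binomial(1, 0.5, size=n).astype(float)  # valid IV
d = (1.5 * z + u + rng.normal(size=n) > 0.5).astype(float)
y_confounded = -1.0 * d + 2.0 * u + rng.normal(size=n)  # u affects the outcome
y_unconfounded = -1.0 * d + rng.normal(size=n)          # u does not affect the outcome
t_confounded = dwh_control_function_t(y_confounded, d, z)
t_unconfounded = dwh_control_function_t(y_unconfounded, d, z)
print(f"t (confounded): {t_confounded:.1f}; t (no confounding): {t_unconfounded:.1f}")
```

Consistent with the caveat in the next paragraph, this form of the test is built on a constant-effect model and cannot by itself separate unmeasured confounding from treatment effect heterogeneity.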

The Durbin-Wu-Hausman test assumes homogeneous treatment effects, meaning that the treatment effect is the same at different levels of covariates. The test cannot distinguish between unmeasured confounding and treatment effect heterogeneity[16, 20]. As an alternative approach, [20] developed a test that can detect unmeasured confounding as distinct from treatment effect heterogeneity in the context of the model described below in Section 4.1.

2.2. Valid IVs

As discussed in Section 1, a variable must have three key features to qualify as an IV: 1) Relevance: the IV causes a change in the treatment received; 2) Effective random assignment: conditional on covariates, the IV is independent of unmeasured confounding, as if it were randomly assigned; 3) Exclusion restriction: the IV does not have a direct effect on outcomes, i.e., it only affects outcomes through the treatment. Section 4 includes mathematical details on these features and assumptions. To use IV methods in a real study, investigators need to evaluate whether any variable satisfies the three features and qualifies as a good IV based on both scientific understanding and statistical evidence. See Section 3 below for sources of IVs in health studies. Note that not all of the features/assumptions can be completely tested, but methods have been proposed to test certain parts of them. See Section 6 for a discussion of how to evaluate whether a variable satisfies the features/assumptions needed to be a valid IV.

We would also like to point out that even if there is no variable which is a perfectly valid IV, an IV analysis may still provide helpful information about the treatment effect. As discussed above, when there is unmeasured confounding, a repeated finding from a sequence of analyses with different IVs (even though none of the IVs is perfect) will provide very helpful evidence on the treatment effect [10]. Also, sensitivity analyses can be performed to assess the evidence provided by an IV analysis allowing for the IV not being perfectly valid; see Section 6.2.

2.3. Strength of IVs

An IV is considered to be a strong IV if it has a strong impact on the choice of different treatments and a weak IV if it only has a slight impact. When the IV is weak, even if it is a valid IV, treatment effect estimates based on IV methods have some limitations, such as large variance even with large samples. Then investigators face a trade-off between an IV estimate with a large variance and a conventional estimate with possible bias [16]. Additionally, the estimate with a weak IV will be sensitive to a slight departure from being a valid IV. Please see Section 7 for more detailed discussion on the problems when only weak IVs are available in a study.
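A common summary of IV strength is the first-stage F statistic from regressing the treatment on the IV; with a single IV it equals the squared t statistic of the IV's coefficient. A widely used rule of thumb treats an F statistic below about 10 as a warning sign of a weak IV. The sketch below compares a strong and a weak IV in hypothetical simulated data, using classical standard errors; the function name and parameter values are assumptions.

```python
import numpy as np


def first_stage_f(d, z):
    """First-stage F statistic for one IV: squared t statistic of Z in the OLS fit D ~ 1 + Z."""
    d = np.asarray(d, dtype=float)
    z = np.asarray(z, dtype=float)
    X = np.column_stack([np.ones(len(z)), z])
    beta, *_ = np.linalg.lstsq(X, d, rcond=None)
    resid = d - X @ beta
    n, k = X.shape
    sigma2 = resid @ resid / (n - k)
    var_beta = sigma2 * np.linalg.inv(X.T @ X)
    return beta[1] ** 2 / var_beta[1, 1]


rng = np.random.default_rng(3)
n = 5_000
z = rng.binomial(1, 0.5, size=n)
latent = rng.normal(size=n) + rng.normal(size=n)  # confounder plus noise
d_strong = (1.5 * z + latent > 0.5).astype(float)  # IV shifts treatment a lot
d_weak = (0.02 * z + latent > 0.5).astype(float)   # IV barely shifts treatment
f_strong = first_stage_f(d_strong, z)
f_weak = first_stage_f(d_weak, z)
print(f"first-stage F, strong IV: {f_strong:.1f}; weak IV: {f_weak:.1f}")
```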

In summary, whether or not an IV analysis will be helpful for a study depends on whether unmeasured confounding is a major concern, whether there is any IV that is plausibly close to valid, and whether the IV is strong enough. For studies with treatments that are intentionally chosen by physicians and patients, there is often substantial unmeasured confounding from unmeasured indications or severity[7, 10, 16]. Therefore, an IV analysis can be most helpful for those studies. When an IV is available, even if it is not perfectly valid, an IV analysis or a sequence of IV analyses with different IVs can provide very helpful information about the treatment effect. For studies in which unmeasured confounding is not a big concern and no strong IV is available, we suggest that investigators consider IV analyses as secondary or sensitivity analyses.

3. Sources of Instruments in Health Studies

The biggest challenge in using IV methods is finding a good IV. There are several common sources of IVs for health studies.

Randomized Encouragement Trials

One way to study the effect of a treatment when that treatment cannot be controlled is to conduct a randomized encouragement trial. In such a trial, some subjects are randomly chosen to get extra encouragement to take the treatment and the rest of the subjects receive no extra encouragement[21]. For example, [22] studied the effect of maternal smoking during pregnancy on an infant's birthweight using a randomized encouragement trial in which some mothers received extra encouragement to stop smoking through a master's level staff person providing information, support, practical guidance and behavioral strategies [23]. For a randomized encouragement trial, the randomized encouragement assignment (1 if encouraged, 0 if not encouraged) is a potential IV. The randomized encouragement is independent of unmeasured confounders because it is randomly assigned by the investigators and will be associated with the treatment if the encouragement is effective. The only potential concern with the randomized encouragement being a valid IV is that the randomized encouragement might have a direct effect on the outcome not through the treatment. For example, in the smoking example above, the encouragement could have a direct effect if the staff person providing the encouragement also encouraged expectant mothers to stop drinking alcohol during pregnancy. To minimize a potential direct effect of the encouragement, [23] asked the staff person providing encouragement to avoid recommendations or information concerning other habits that might affect birthweight such as alcohol or caffeine consumption and also prohibited discussion of maternal nutrition or weight gain. A special case of a randomized encouragement trial is a usual randomized trial in which the intent is for everybody to take their assigned treatment, but in fact some people do not adhere to their assigned treatment so that assignment to treatment is in fact just an encouragement to treatment. 
For such randomized trials with non-adherence, random assignment can be used as an IV to estimate the effect of receiving the treatment vs. receiving the control provided that random assignment does not have a direct effect (not through the treatment); see Section 4.7 for further discussion and an example.
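With random assignment as the IV, the IV estimate takes a simple ratio form (the Wald estimator): the intention-to-treat (ITT) effect of assignment on the outcome divided by the ITT effect of assignment on the treatment actually received. Its interpretation as the complier average causal effect (CACE in Table 1) relies on the assumptions developed in Section 4. A minimal sketch with hypothetical toy data; the function name is an assumption:

```python
import numpy as np


def wald_estimate(y, d, z):
    """ITT effect on the outcome divided by ITT effect on treatment received."""
    y, d, z = (np.asarray(a, dtype=float) for a in (y, d, z))
    itt_y = y[z == 1].mean() - y[z == 0].mean()
    itt_d = d[z == 1].mean() - d[z == 0].mean()
    if itt_d == 0:
        raise ValueError("assignment does not change treatment received; the ratio is undefined")
    return itt_y / itt_d


# Hypothetical trial: z = randomized assignment, d = treatment actually taken, y = outcome
z = [1, 1, 1, 1, 0, 0, 0, 0]
d = [1, 1, 1, 0, 0, 0, 0, 1]
y = [1, 1, 0, 0, 1, 0, 0, 0]
print(wald_estimate(y, d, z))  # ITT on outcome 0.25 / ITT on treatment 0.5
```

Here adherence is imperfect in both arms (one assigned subject does not take the treatment, one control subject does), so the ITT effect of 0.25 understates the effect of receiving the treatment among those whose treatment follows their assignment.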

Distance to Specialty Care Provider

When comparing two treatments, one of which is only provided by specialty care providers and one of which is provided by more general providers, the distance a person lives from the nearest specialty care provider has often been used as an IV. For emergent conditions, proximity to a specialty care provider particularly enhances the chance of being treated by the specialty care provider. For less acute conditions, patients/providers have more time to decide and plan where to be treated, and proximity may have less of an influence on treatment selection, while for treatments that are stigmatized (e.g., substance abuse treatment), proximity could have a negative effect on the chance of being treated. A classic example of using distance as an IV in studying treatment of an emergent condition is McClellan et al.'s study of the effect of cardiac catheterization for patients suffering a heart attack[24]. The IV used in the study was the differential distance, i.e., the distance from the patient's home to the nearest hospital that performs cardiac catheterization minus the distance to the nearest hospital that does not perform cardiac catheterization. Another example is the study of the effect of high level vs. low level NICUs [4] that was discussed in Section 1.2. Because distance to a specialty care provider is often associated with socioeconomic characteristics, it will typically be necessary to control for socioeconomic characteristics in order for distance to potentially be independent of unmeasured confounders. The possibility that distance might have a direct effect because the time it takes to receive treatment affects outcomes needs to be considered in assessing whether distance is a valid IV.

Preference-Based IVs

A general strategy for finding an IV for comparing two treatments A and B is to look for naturally occurring variation in medical practice patterns at the level of geographic region, hospital or individual physician, and then use whether the region/hospital/individual physician has a high or low use of treatment A (compared to treatment B) as the IV. [9] termed these IVs “preference-based instruments” because they assume that different providers or groups of providers have different preferences or treatment algorithms dictating how medications or medical procedures are used. Examples of studies using preference-based IVs are [25], which studied the effect of surgery plus irradiation vs. mastectomy for breast cancer patients using geographic region as the IV, [26], which studied the effect of surgery vs. endovascular therapy for patients with a ruptured cerebral aneurysm using hospital as the IV, and [27], which studied the benefits and risks of selective cyclooxygenase-2 inhibitors vs. nonselective nonsteroidal anti-inflammatory drugs for treating gastrointestinal problems using individual physician as the IV. For proposed preference-based IVs, it is important to consider that the patient mix may differ between the different groups of providers with different preferences, which would make the preference-based IV invalid unless patient mix is fully controlled for. It is useful to look at whether measured patient risk factors differ between groups of providers with different preferences. If there are measured differences, there are likely to be unmeasured differences as well; see Section 6.1 for further discussion. Also, for proposed preference-based IVs, it is important to consider whether the IV has a direct effect (not through the treatment); a direct effect could arise if the group of providers that prefers treatment A treats patients differently in ways other than the treatment under study compared to the providers who prefer treatment B.
For example, [28] studied the efficacy of phototherapy for newborns with hyperbilirubinemia and considered the frequency of phototherapy use at the newborn's birth hospital as an IV. However, chart reviews revealed that hospitals that use more phototherapy also have a greater use of infant formula; use of infant formula is also thought to be an effective treatment for hyperbilirubinemia. Consequently, the proposed preference-based IV has a direct effect (going to a hospital with higher use of phototherapy also means a newborn is more likely to receive infant formula even if the newborn does not receive phototherapy) and is not valid. The issue of whether a proposed preference-based IV has a direct effect can be studied by looking at whether the IV is associated with concomitant treatments like use of infant formula [9]. A related way in which a proposed preference-based IV can have a direct effect is that the group of providers who prefer treatment A may have more skill than the group of providers who prefer treatment B. Also, providers who prefer treatment A may deliver treatment A better than those providers who prefer treatment B because they have more practice with it, e.g., doctors who perform surgery more often may perform better surgeries. [29] discuss a way to assess whether there are provider skill effects by collecting data from providers on whether or not they would have treated a different provider's patient with treatment A or B based on the patient's pretreatment records.
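A physician-level preference-based IV is often built from each provider's observed use of treatment A. One design choice, shown in the sketch below, is to exclude the patient's own treatment when computing the provider's rate (a leave-one-out rate), so that a patient's IV value does not mechanically include that patient's own treatment. This choice, the data layout and the function name are assumptions for illustration; the resulting rate could then be dichotomized (e.g., at its median) to give a binary high-use vs. low-use IV.

```python
from collections import defaultdict


def leave_one_out_preference(provider_ids, treatments):
    """For each patient, the proportion of the *other* patients of the same provider
    who received treatment A (coded 1). Returns None when the provider has no other patients."""
    n_treated = defaultdict(int)
    n_patients = defaultdict(int)
    for provider, treated in zip(provider_ids, treatments):
        n_treated[provider] += treated
        n_patients[provider] += 1
    rates = []
    for provider, treated in zip(provider_ids, treatments):
        others = n_patients[provider] - 1
        rates.append((n_treated[provider] - treated) / others if others > 0 else None)
    return rates


# Hypothetical data: provider "c" has a single patient, so no preference can be estimated.
providers = ["a", "a", "a", "b", "b", "b", "c"]
treatments = [1, 1, 0, 0, 0, 1, 1]
print(leave_one_out_preference(providers, treatments))
```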

Calendar Time

Variations in the use of one treatment vs. another over time could result from changes in guidelines; changes in formularies or reimbursement policies; changes in physician preference (e.g., due to marketing activities by drug makers); release of new effectiveness or safety information; or the arrival of new treatments to the market [16]. For example, [30] studied the effect of hormone replacement therapy (HRT) on cardiovascular health among postmenopausal women using calendar time as an IV. HRT was widely used among postmenopausal women until 2002; observational studies had suggested that HRT reduced cardiovascular risk, but the Women’s Health Initiative randomized trial reported opposite results in 2002, which caused HRT use to drop sharply. A proposed IV based on calendar time could violate the assumption of being independent of unmeasured confounders by being associated with unmeasured confounders that change over time, such as the characteristics of patients who enter the cohort, changes in other medical practices and changes in medical coding systems [16]. The most compelling type of IV based on calendar time is one where a dramatic change in practice occurs in a relatively short period of time [16].
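Operationally, a calendar-time IV can be coded by comparing patients who entered shortly before a practice change with those who entered shortly after it; restricting to a narrow window around the change is one way to focus on the dramatic, short-period setting described above and to limit drift in patient mix and coding. A minimal sketch; the cutoff date, the window width and the function name are hypothetical parameters, not values taken from [30]:

```python
from datetime import date


def calendar_time_iv(entry_dates, change_date, window_days):
    """Z = 1 if the patient entered before the practice change, Z = 0 if on or after it.
    Patients more than `window_days` from the change are excluded (None)."""
    z = []
    for entry_date in entry_dates:
        offset = (entry_date - change_date).days
        if abs(offset) > window_days:
            z.append(None)
        else:
            z.append(1 if offset < 0 else 0)
    return z


change = date(2002, 7, 1)  # hypothetical cutoff for illustration
entries = [date(2002, 6, 1), date(2002, 7, 15), date(2001, 1, 1), date(2002, 12, 31)]
print(calendar_time_iv(entries, change, window_days=180))  # [1, 0, None, None]
```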

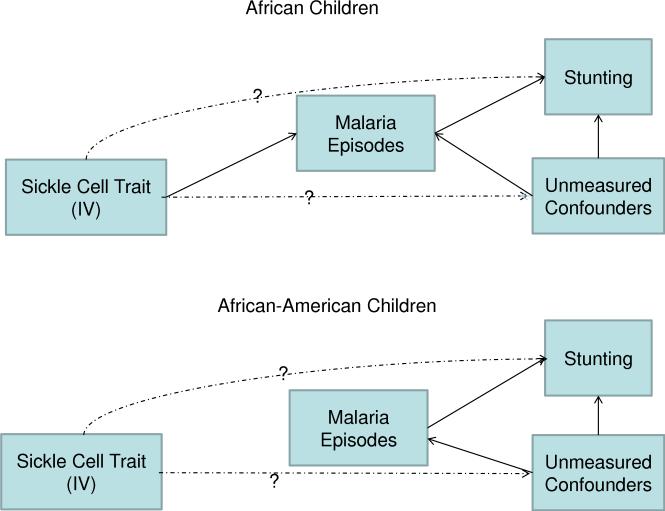

Genes as IVs

Another general source of potential IVs is genetic variants that affect treatment variables. For example, [31] studied the effect of HDL cholesterol on myocardial infarction using as an IV the genetic variant LIPG 396Ser allele, for which carriers have higher levels of HDL cholesterol but similar levels of other lipid and non-lipid risk factors compared with noncarriers. As another example, [32] studied the effect of maternal smoking on orofacial clefts in babies using as IVs genetic variants that increase the probability that a mother smokes. The approach of using genetic variants as an IV is called Mendelian randomization because it makes use of the random assignment of genetic variants conditional on parents’ genes, as discovered by Mendel. Although genetic variants are randomly assigned conditional on parents’ genes, genetic variants need to satisfy additional assumptions to be valid IVs, including the following:

Not associated with unmeasured confounders through population stratification. Most Mendelian randomization analyses do not condition on parents’ genes, creating the potential for the proposed genetic variant IV to be associated with unmeasured confounders through population stratification. Population stratification is a condition in which there are subpopulations, some of which are more likely to have the genetic variant and some of which are more likely to have the outcome through mechanisms other than the treatment being studied. For example, consider studying the effect of alcohol consumption on hypertension, using as an IV the ALDH2 null variant, which is associated with alcohol consumption (individuals who are homozygous for the ALDH2 null variant have severe adverse reactions to alcohol consumption and tend to drink very little [33]). The ALDH2 null variant is much more common in people with Asian ancestry than in people with other ancestries [34]. Suppose ancestry was not fully measured. If ancestry is associated with hypertension through mechanisms other than differences in the ALDH2 null variant (e.g., because different ancestries tend to have different diets), then ALDH2 would not be a valid IV because it would be associated with an unmeasured confounder.

Not associated with unmeasured confounders through genetic linkage. Genetic linkage is the tendency of genes that are located near each other on a chromosome to be inherited together, because the genes are unlikely to be separated during the crossing over of the mother's and father's DNA [35]. Consider using a gene A as an IV, where gene A is genetically linked to a gene B that has a causal effect on the outcome through a pathway other than the treatment being studied. If gene B is not measured and controlled for, then gene A is not a valid IV because it is associated with the unmeasured confounder gene B.

No direct effect through pleiotropy. Pleiotropy refers to a gene having multiple functions. If the genetic variant being used as an IV affects the outcome through a function other than affecting the treatment being studied, this would mean the genetic variant has a direct effect. For example, consider the use of the APOE genotype as an IV for studying the causal effect of low-density lipoprotein cholesterol (LDLc) on myocardial infarction (MI) risk. The ∊2 variant of the APOE gene is associated with lower levels of LDLc but is also associated with higher levels of high-density lipoprotein cholesterol, less efficient transfer of very low density lipoproteins and chylomicrons from the blood to the liver, greater postprandial lipaemia and an increased risk of Type III hyperlipoproteinaemia (the last three of which are thought to increase MI risk)[33]. Thus, the gene APOE is pleiotropic, affecting myocardial infarction risk through different pathways, making it unsuitable as an IV to examine the causal effect of any one of these pathways on MI risk.
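The population stratification problem can be illustrated with a small simulation. The sketch below is purely hypothetical (made-up ancestry groups, allele frequencies and effect sizes, not data on ALDH2): an unmeasured ancestry indicator changes both the chance of carrying the variant and the outcome, so the Wald ratio that ignores ancestry lands far from the true effect of 1.0, while the same ratio computed within each ancestry group recovers it.

```python
import random


def wald(y, z, d):
    """Wald IV estimate: difference in mean Y by IV level over difference in mean D."""
    def diff(v):
        ones = [vi for vi, zi in zip(v, z) if zi == 1]
        zeros = [vi for vi, zi in zip(v, z) if zi == 0]
        return sum(ones) / len(ones) - sum(zeros) / len(zeros)
    return diff(y) / diff(d)


def simulate(n=200_000, seed=1):
    """Hypothetical population with two ancestry groups (all numbers made up)."""
    rng = random.Random(seed)
    anc, gene, treat, out = [], [], [], []
    for _ in range(n):
        u = int(rng.random() < 0.5)                  # ancestry, treated as unmeasured
        g = int(rng.random() < (0.6 if u else 0.1))  # variant is more common in group 1
        d = int(rng.random() < 0.2 + 0.5 * g)        # variant shifts the treatment
        y = 1.0 * d + 2.0 * u + rng.gauss(0, 1)      # true effect of d is 1.0; ancestry also shifts y
        anc.append(u)
        gene.append(g)
        treat.append(d)
        out.append(y)
    return anc, gene, treat, out


anc, gene, treat, out = simulate()

# Ignoring ancestry: the variant is associated with the confounder, so the IV is invalid.
naive = wald(out, gene, treat)

# Within each ancestry group the variant is as good as randomly assigned.
by_group = []
for level in (0, 1):
    idx = [i for i, a in enumerate(anc) if a == level]
    by_group.append(wald([out[i] for i in idx], [gene[i] for i in idx], [treat[i] for i in idx]))
stratified = sum(by_group) / len(by_group)  # groups are about equal in size

print(f"ignoring ancestry: {naive:.2f}, within ancestry groups: {stratified:.2f}")
```

Stratifying on a measured ancestry variable is only one simple way to remove this bias; it works here because, by construction, ancestry is the only path linking the variant to the outcome other than the treatment.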

[36] and [33] provide good reviews of Mendelian randomization methods.

Timing of Admission

Another source of IVs for health studies is timing of admission variables. For example, [37] used day of the week of hospital admission as an IV for waiting time for surgery to study the effects of waiting time on length of stay and inpatient mortality among patients admitted to the hospital with a hip fracture. Day of the week of admission is associated with waiting time for surgery because many surgeons only do non-emergency operations on weekdays, and therefore patients admitted on weekends may have to wait longer for surgery. In order for weekday vs. weekend admission to be a valid IV, patients admitted on weekdays vs. weekends must not differ on unmeasured characteristics (i.e., the IV must be independent of unmeasured confounders) and other aspects of hospital care that affect the patients’ outcomes besides surgery must be comparable on weekdays vs. weekends (i.e., the IV has no direct effect). Another example of a timing of admission variable used as an IV is hour of birth as an IV for a newborn's length of stay in the hospital [38, 39].

Insurance Plan

Insurance plans vary in the amount of reimbursement they provide for different treatments. For example, [40] used drug co-payment amount as an IV to study the effect of β-blocker adherence on clinical outcomes and health care expenditures after a hospitalization for heart failure. In order for variations in insurance plan like drug co-payment amount to be a valid IV, insurance plans must have comparable patients after controlling for measured confounders (i.e., the IV is independent of unmeasured confounders) and insurance plans must not have an effect on the outcome of interest other than through influencing the treatment being studied (i.e., the IV has no direct effect).

We have discussed several common sources of IVs for health studies and considerations to think about in deciding whether potential IVs from these sources satisfy the assumptions to be a valid IV. A detailed understanding of how treatments are chosen in a particular setting may yield additional, creative ideas for potential IVs. In Section 4, we will formally state the assumptions for an IV to be valid and discuss how to use a valid IV to estimate the causal effects of a treatment.

4. IV Assumptions and Estimation for Binary IV and Binary Treatment

In this section, we consider the simplest setting for an instrumental variable design, when both the instrument and treatment are binary. The main ideas in IV methods are most easily understood in this setting and the ideas will be expanded to more complicated settings in later sections.

4.1 Framework and Notation

The Neyman-Rubin potential outcomes framework [41, 42] will be used to describe causal effects and formulate IV assumptions. The classic econometric formulation of instrumental variables is in terms of structural equations and assumptions about the IV being uncorrelated with structural error terms; the potential outcomes formulation described here clarifies which effects are being estimated when treatment effects are heterogeneous and provides a firm foundation for nonlinear as well as linear outcome models [21, 43]. Suppose there are N subjects. Let $\mathbf{Z}$ denote the N-dimensional vector of IV assignments with individual elements $Z_i$, $i = 1, \ldots, N$, where $Z_i = 0$ or 1. Level 1 of the IV is assumed to mean the subject was encouraged to take level 1 of the treatment, where the treatment has levels 0 and 1. Let $\mathbf{D}^{\mathbf{z}}$ be the N-dimensional vector of potential treatments under IV assignment $\mathbf{z}$, with elements $D_i^{\mathbf{z}}$, $i = 1, \ldots, N$, where $D_i^{\mathbf{z}} = 1$ or 0 according to whether person i would receive treatment level 1 or 0 under IV assignment $\mathbf{z}$. Let $\mathbf{Y}^{\mathbf{z},\mathbf{d}}$ be the N-dimensional vector of potential outcomes under IV assignment $\mathbf{z}$ and treatment assignment $\mathbf{d}$, where $Y_i^{\mathbf{z},\mathbf{d}}$ is the outcome subject i would have under IV assignment $\mathbf{z}$ and treatment assignment $\mathbf{d}$. The observed treatment for subject i is $D_i = D_i^{\mathbf{Z}}$ and the observed outcome for subject i is $Y_i = Y_i^{\mathbf{Z},\mathbf{D}^{\mathbf{Z}}}$. Let $X_i$ denote observed covariates for subject i. When we write expressions like E(Y), we mean the expected value of Y for a randomly sampled subject from the population.

[43] considered an IV to be a variable satisfying five assumptions – the stable unit treatment value assumption, the IV is positively correlated with treatment assumption, the IV is independent of unmeasured confounders assumption, the exclusion restriction assumption and the monotonicity assumption. We will describe the first four of these assumptions and then describe the need for the fifth assumption or some substitute to obtain point identification. The first four assumptions are

IV-A1 Stable Unit Treatment Value Assumption (SUTVA). If $z_i = z_i'$, then $D_i^{\mathbf{z}} = D_i^{\mathbf{z}'}$, and if $z_i = z_i'$ and $d_i = d_i'$, then $Y_i^{\mathbf{z},\mathbf{d}} = Y_i^{\mathbf{z}',\mathbf{d}'}$. In words, this assumption says that the treatment affects only the subject taking the treatment and that there are not different versions of the treatment which have different effects (see [43, 44] for details). The stable unit treatment value assumption allows us to write $D_i^{\mathbf{z}}$ as $D_i^{z}$, where z here denotes subject i having IV assignment z, and $Y_i^{\mathbf{z},\mathbf{d}}$ as $Y_i^{z,d}$, where z and d here denote subject i having IV assignment z and treatment d.

IV-A2 IV is positively correlated with treatment received. E(D1|X) > E(D0|X) (Note that we have assumed that level 1 of the IV means that the subject was encouraged to take level 1 of the treatment).

IV-A3 IV is independent of unmeasured confounders (conditional on covariates X).

IV-A4 Exclusion restriction (ER). This assumption says that the IV affects outcomes only through its effect on treatment received: $Y_i^{z,d} = Y_i^{z',d}$ for all $z, z', d$ and for all i. Under the ER, we can write $Y_i^{d} \equiv Y_i^{z,d}$ for any z, i.e., $Y_i^{1}$ is the potential outcome for subject i if she were to receive level 1 of the treatment (regardless of her level of the IV) and $Y_i^{0}$ is the potential outcome if she were to receive level 0 of the treatment. The exclusion restriction assumption is also called the no direct effect assumption.

Assumptions IV-A2, IV-A3 and IV-A4 are the assumptions depicted in Figure 1. These assumptions are the “core” IV assumptions that essentially all IV approaches make; assumption IV-A1 is typically made implicitly as well. The core IV assumptions enable bounds on treatment effects to be identified but do not point identify a treatment effect [45].

To see why the core IV assumptions alone do not point identify a treatment effect and to understand what additional assumptions would identify a treatment effect, it is helpful to introduce the idea of compliance classes [43]. A subject in a study with binary IV and treatment can be classified into one of four latent compliance classes based on the joint values of potential treatment received [43]. The four compliance classes are referred to as never-takers, always-takers, defiers and compliers. We denote subject i's compliance class as $C_i$, defined as follows: $C_i$ = never-taker (nt) if $(D_i^0, D_i^1) = (0, 0)$; complier (co) if $(D_i^0, D_i^1) = (0, 1)$; always-taker (at) if $(D_i^0, D_i^1) = (1, 1)$; and defier (de) if $(D_i^0, D_i^1) = (1, 0)$. Note that there being four compliance classes is not an assumption but an exhaustive list of the possible types of compliance. Note also that a subject's compliance class is relative to a particular IV. For example, in studying the effect of regular exercise on the lung function of patients with chronic obstructive pulmonary disease, a person might be a complier if Z = 1 means the person will receive 1,000 dollars if she exercises regularly vs. Z = 0 means she will receive no extra payment, but the same person might be a never-taker if Z = 1 means she will receive only 100 dollars if she exercises regularly. Table 3 shows the relationship between the latent compliance classes and the observed groups.

Table 3.

The relation between observed groups and latent compliance classes

| $Z_i$ | $D_i$ | $C_i$ |
|---|---|---|
| 1 | 1 | Complier or Always-taker |
| 1 | 0 | Never-taker or Defier |
| 0 | 0 | Never-taker or Complier |
| 0 | 1 | Always-taker or Defier |
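Table 3 can be reproduced mechanically: enumerate the four possible pairs of potential treatments $(D_i^0, D_i^1)$ and keep those whose observed treatment $D_i^{Z_i}$ matches the observed cell. The function names below are illustrative only.

```python
from itertools import product


def compliance_class(d0, d1):
    """Map potential treatments (D^0, D^1) to a latent compliance class."""
    return {(0, 0): "never-taker", (0, 1): "complier",
            (1, 1): "always-taker", (1, 0): "defier"}[(d0, d1)]


def classes_consistent_with(z, d):
    """Latent classes that could produce the observed pair (Z, D)."""
    out = []
    for d0, d1 in product((0, 1), repeat=2):
        observed_d = d1 if z == 1 else d0  # the observed treatment is D^Z
        if observed_d == d:
            out.append(compliance_class(d0, d1))
    return sorted(out)


for z, d in [(1, 1), (1, 0), (0, 0), (0, 1)]:
    print(z, d, classes_consistent_with(z, d))
```

Each observed cell is compatible with exactly two latent classes, which is why the classes cannot be read off the data for individual subjects.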

Suppose the outcome is binary so that the observed data (Y, D, Z) is a multinomial random variable with 2 × 2 × 2 = 8 categories. Under Assumptions (IV-A1)-(IV-A4), there are ten free unknown parameters: P(Z = 1), P(Y1 = 1|C = at), P(Y1 = 1|C = co), P(Y0 = 1|C = co), P(Y0 = 1|C = nt), P(Y1 = 1|C = de), P(Y0 = 1|C = de), P(C = at), P(C = co) and P(C = nt) (note that P(C = de) is determined by P(C = co) + P(C = at) + P(C = nt) + P(C = de) = 1). Since there are ten free parameters but the observed data multinomial random variable has only 8 categories (so 7 free probabilities), the model is not identified. Two types of additional assumptions have been considered that reduce the number of free parameters: (i) an assumption about the process of selecting a treatment based on the IV that restricts the number of compliance classes by ruling out defiers; (ii) assumptions that restrict the heterogeneity of treatment effects among the different compliance classes.

We first consider the approach (i) to point identification of restricting the number of compliance classes. The assumption made by [43] rules out defiers:

IV-A5 Monotonicity assumption. This assumption says that there are no subjects who are “defiers,” who would take level 1 of the treatment only if not encouraged to do so, i.e., there is no subject i with $D_i^1 = 0$ and $D_i^0 = 1$.

Monotonicity is automatically satisfied for single-consent randomized encouragement designs, in which only the subjects encouraged to receive the treatment are able to receive it [46] (for this design, there are only compliers and never-takers). Monotonicity is also plausible in many applications in which the encouragement (Z = 1) provides a clear incentive and no disincentive to take the treatment. For the setting of a binary outcome, (IV-A5) reduces the number of free parameters to seven, enabling identification since there are seven free probabilities for the observed data. However, the model identifies only the average treatment effect for compliers. The never-takers and always-takers do not change their treatment status when the instrument changes, so under the ER assumption, their potential treatment and potential outcome are the same under either level of the IV (Zi = 1 or 0). Consequently, the IV is not helpful for learning about the treatment effect for always-takers or never-takers. Compliers are subjects who change their treatment status with the IV; that is, they would take the treatment if encouraged to take it by the IV but would not take it otherwise. Because these subjects change their treatment with the level of the IV, the IV is helpful for learning about their treatment effects. The average causal effect for this subgroup, $E(Y^1 - Y^0 \mid C = co)$, is called the complier average causal effect (CACE) or the local average treatment effect (LATE); it is the average causal effect of receiving the treatment among compliers.
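Under monotonicity and an IV that is independent of compliance class, the observed first-stage rates pin down the shares of the three remaining classes: only always-takers are treated when Z = 0, only never-takers are untreated when Z = 1, and compliers make up the difference. A minimal sketch, with hypothetical first-stage rates of 0.7 and 0.2:

```python
def compliance_shares(p_treated_z1, p_treated_z0):
    """Compliance-class shares implied by P(D=1|Z=1) and P(D=1|Z=0),
    assuming monotonicity (no defiers) and an IV independent of class."""
    if p_treated_z1 <= p_treated_z0:
        raise ValueError("IV-A2 requires P(D=1|Z=1) > P(D=1|Z=0)")
    return {
        "always-taker": p_treated_z0,             # treated even when Z = 0
        "never-taker": 1.0 - p_treated_z1,        # untreated even when Z = 1
        "complier": p_treated_z1 - p_treated_z0,  # treated only when Z = 1
    }


shares = compliance_shares(0.7, 0.2)  # hypothetical first-stage rates
print({k: round(v, 3) for k, v in shares.items()})
```

The complier share computed here is the same quantity that appears as the denominator of the Wald estimator.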

Approach (ii) to making assumptions about IVs to enable point identification of a treatment effect keeps Assumptions (IV-A1)-(IV-A4) but does not make Assumption (IV-A5) (Monotonicity); instead, it makes an assumption that restricts the heterogeneity of treatment effects among the compliance classes. The strongest such assumption is that the average effect of the treatment is the same for all the compliance classes, E(Y1 – Y0|C = co) = E(Y1 – Y0|C = at) = E(Y1 – Y0|C = nt) = E(Y1 – Y0|C = de). This assumption identifies the average treatment effect for the whole population (this can be derived by using the same reasoning as in the derivation of (2)). A weaker restriction on the heterogeneity of treatment effects among the compliance classes is the no current treatment value interaction assumption[47]:

$$E(Y^1 - Y^0 \mid D = 1, Z = 1, X) = E(Y^1 - Y^0 \mid D = 1, Z = 0, X) \qquad (1)$$

Assumption (1) combined with (IV-A1)-(IV-A4) identifies the average effect of treatment among the treated, E(Y1 – Y0|D = 1). Under Assumptions (IV-A1)-(IV-A5), Assumption (1) says that the average treatment effect is the same among always takers and compliers conditional on X, since the left hand side of (1) is the average effect of treatment among compliers and always takers and the right hand side is the average effect among always takers (all conditional on X). For further information on approach (ii), see [48, 49].

We are going to focus on approach (i) to the IV assumptions (i.e., Assumptions (IV-A1)-(IV-A5)) for the rest of this tutorial. An attractive feature of this approach is that the monotonicity assumption (IV-A5) is reasonable in many applications (e.g., when the encouragement level of the IV provides a clear incentive to take treatment and no disincentive); see also Section 5.3 for discussion of a weaker assumption than monotonicity which has similar consequences. Although the effect identified by (IV-A1)-(IV-A5), the CACE, is only the effect for the subpopulation of compliers, the data are in general not informative about average effects for other subpopulations without extrapolation, just as a randomized experiment conducted on men is not informative about average effects for women without extrapolation [50]. By focusing on estimating the CACE, the researcher sharply separates exploration of the information in the data from extrapolation to the (sub)population of interest [50]. See Sections 5.1-5.2 for discussion of extrapolation of the CACE to other (sub)populations.

[51] showed that Assumptions (IV-A1)-(IV-A5) are equivalent to a version of a common approach to IV assumptions in economics. In economics, selection of treatment A vs. B is often modeled by a latent index crossing a threshold, where the latent index is interpreted as the expected net utility of choosing treatment A vs. B. For example,

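$$D_i^* = \alpha_0 + \alpha_1 Z_i + \epsilon_{i1}, \qquad D_i = 1 \text{ if } D_i^* > 0 \text{ and } D_i = 0 \text{ otherwise}, \qquad Y_i = \beta_0 + \beta_1 D_i + \epsilon_{i2},$$

where $Z_i$ is independent of $\epsilon_{i1}$ and $\epsilon_{i2}$.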

[51] showed that a nonparametric version of the latent index model is equivalent to Assumptions (IV-A1)-(IV-A5) above, which [43] use to define an IV.
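One direction of this equivalence is easy to see numerically: in a threshold-crossing model $D_i = 1\{\alpha_0 + \alpha_1 Z_i + \epsilon_{i1} > 0\}$ with a common $\alpha_1 > 0$, the index can only rise when $Z_i$ goes from 0 to 1, so no subject is a defier. The sketch assumes standard normal errors and illustrative values $\alpha_0 = -0.5$, $\alpha_1 = 1$. The second scenario, with a subject-specific $\alpha_1$ that can be negative, is a hypothetical contrast (not part of the model above) showing how defiers can arise once the common-coefficient structure is dropped.

```python
import random


def potential_treatments(alpha0, alpha1, eps):
    """(D^0, D^1) from a threshold-crossing model: D^z = 1 if alpha0 + alpha1*z + eps > 0."""
    d0 = int(alpha0 + eps > 0)
    d1 = int(alpha0 + alpha1 + eps > 0)
    return d0, d1


def count_defiers(pairs):
    return sum(1 for d0, d1 in pairs if d0 == 1 and d1 == 0)


rng = random.Random(0)
eps = [rng.gauss(0, 1) for _ in range(10_000)]  # epsilon_i1, assumed standard normal

# Common alpha1 > 0: the index rises with z for everyone, so there are no defiers.
common = [potential_treatments(-0.5, 1.0, e) for e in eps]

# Hypothetical contrast: a subject-specific alpha1 that is sometimes negative.
varying = [potential_treatments(-0.5, rng.gauss(0.5, 1.0), e) for e in eps]

print(count_defiers(common), count_defiers(varying))
```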

4.2 Two stage least squares (Wald) estimator

Let us first consider IV estimation when there are no observed covariates X. For binary IV and treatment variable, [43] show that under Assumptions (IV-A1)-(IV-A5), the CACE is nonparametrically identified by

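$$
\begin{aligned}
\frac{E(Y \mid Z=1) - E(Y \mid Z=0)}{E(D \mid Z=1) - E(D \mid Z=0)}
&= \frac{\sum_{c \in \{at, co, nt, de\}} \left[ E(Y \mid Z=1, C=c)\, P(C=c \mid Z=1) - E(Y \mid Z=0, C=c)\, P(C=c \mid Z=0) \right]}{P(C=at \mid Z=1) + P(C=co \mid Z=1) - P(C=at \mid Z=0) - P(C=de \mid Z=0)} \\
&= \frac{\sum_{c \in \{at, co, nt\}} \left[ E(Y \mid Z=1, C=c)\, P(C=c \mid Z=1) - E(Y \mid Z=0, C=c)\, P(C=c \mid Z=0) \right]}{P(C=at \mid Z=1) + P(C=co \mid Z=1) - P(C=at \mid Z=0)} \\
&= \frac{\left[E(Y^{1,1} \mid C=co) - E(Y^{0,0} \mid C=co)\right] P(C=co) + \left[E(Y^{1,1} \mid C=at) - E(Y^{0,1} \mid C=at)\right] P(C=at) + \left[E(Y^{1,0} \mid C=nt) - E(Y^{0,0} \mid C=nt)\right] P(C=nt)}{P(C=co)} \\
&= \frac{E(Y^{1} - Y^{0} \mid C=co)\, P(C=co)}{P(C=co)} \\
&= E(Y^{1} - Y^{0} \mid C=co) \qquad (2)
\end{aligned}
$$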

where the second equality follows from the monotonicity assumption (Assumption IV-A5), the third equality follows from the IV is independent of unmeasured confounders assumption (Assumption IV-A3), the fourth equality follows from the exclusion restriction assumption (Assumption IV-A4) and the fifth equality follows from the IV is correlated with treatment received assumption (Assumption IV-A2).
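The chain of equalities in (2) can be checked by simulation. In the hypothetical population below (class shares, baselines and effects are made up), always-takers and never-takers have different baseline outcomes and different treatment effects from compliers, yet the Wald ratio of the IV's effect on Y to its effect on D converges to the complier effect of 1.0 rather than to the population average effect of 0.5.

```python
import random

# Hypothetical classes: (name, share, mean baseline Y^0, effect Y^1 - Y^0)
CLASSES = [("always-taker", 0.2, 2.0, 3.0),
           ("complier", 0.5, 0.0, 1.0),
           ("never-taker", 0.3, -1.0, -2.0)]


def draw_class(rng):
    r, cum = rng.random(), 0.0
    for name, share, base, effect in CLASSES:
        cum += share
        if r < cum:
            return name, base, effect
    return name, base, effect  # guards against floating-point shortfall in cum


def simulate(n=200_000, seed=2):
    rng = random.Random(seed)
    z_all, d_all, y_all = [], [], []
    for _ in range(n):
        name, base, effect = draw_class(rng)
        z = int(rng.random() < 0.5)                                     # randomized IV
        d = {"always-taker": 1, "never-taker": 0, "complier": z}[name]  # no defiers
        y = base + rng.gauss(0, 1) + effect * d                         # Z affects Y only through D
        z_all.append(z)
        d_all.append(d)
        y_all.append(y)
    return z_all, d_all, y_all


def mean_by(v, z, level):
    vals = [vi for vi, zi in zip(v, z) if zi == level]
    return sum(vals) / len(vals)


z, d, y = simulate()
wald = (mean_by(y, z, 1) - mean_by(y, z, 0)) / (mean_by(d, z, 1) - mean_by(d, z, 0))
ate = sum(share * effect for _, share, _, effect in CLASSES)  # population average effect
print(f"Wald: {wald:.2f}  CACE: 1.00  ATE: {ate:.2f}")
```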

The standard IV estimator for a binary IV and a binary treatment is the sample analogue of the first expression in (2),

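$$\widehat{CACE} = \frac{\hat{E}(Y \mid Z=1) - \hat{E}(Y \mid Z=0)}{\hat{E}(D \mid Z=1) - \hat{E}(D \mid Z=0)} \qquad (3)$$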

where Ê denotes the sample mean. The standard IV estimator is called the Wald estimator after [52].

The standard IV estimator is also called the two stage least squares (2SLS) estimator because it can be obtained from the following two stage least squares procedure: (i) regress D on Z by least squares to obtain Ê(D|Z); (ii) regress Y on Ê(D|Z). The coefficient on Ê(D|Z) from regression (ii) equals (3). Since (3) can be obtained by the two stage least squares procedure, we denote (3) by $\widehat{CACE}_{2SLS}$,
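As a check on this equivalence, the sketch below computes the Wald ratio, the coefficient from the two-stage regression procedure, and the ratio of sample covariances of Y and D with Z on a tiny made-up data set; all three equal 2.5 up to floating point. Only the point estimate is reproduced; the standard error printed by an ordinary second-stage regression would not be the correct 2SLS standard error.

```python
def mean(v):
    return sum(v) / len(v)


def cov(a, b):
    ma, mb = mean(a), mean(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / len(a)


def ols(x, y):
    """Least squares fit of y on an intercept and x; returns (intercept, slope)."""
    slope = cov(x, y) / cov(x, x)
    return mean(y) - slope * mean(x), slope


def wald(y, d, z):
    """Sample analogue of the ratio of IV effects on Y and on D."""
    def m(v, level):
        return mean([vi for vi, zi in zip(v, z) if zi == level])
    return (m(y, 1) - m(y, 0)) / (m(d, 1) - m(d, 0))


def two_stage(y, d, z):
    a, b = ols(z, d)                  # stage (i): regress D on Z
    d_hat = [a + b * zi for zi in z]  # fitted values Ê(D|Z)
    return ols(d_hat, y)[1]           # stage (ii): coefficient on Ê(D|Z)


# Tiny made-up data set: IV, treatment received, outcome
z = [1, 1, 1, 1, 0, 0, 0, 0]
d = [1, 1, 1, 0, 0, 0, 1, 0]
y = [5, 6, 4, 2, 3, 2, 6, 1]

print(wald(y, d, z), two_stage(y, d, z), cov(y, z) / cov(d, z))
```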

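$$\widehat{CACE}_{2SLS} = \frac{\hat{E}(Y \mid Z=1) - \hat{E}(Y \mid Z=0)}{\hat{E}(D \mid Z=1) - \hat{E}(D \mid Z=0)} \qquad (4)$$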

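To see why two stage least squares provides a consistent estimate of the CACE, write Y = α + CACE × D + u, where α is chosen so that E(u) = 0. Then, under the monotonicity and exclusion restriction assumptions, $D = 1\{C = at\} + 1\{C = co\}\,Z$ and $Y = Y^0 + (Y^1 - Y^0)\,D$, so that

$$u = Y^{0} - \alpha + (Y^{1} - Y^{0} - CACE)\,1\{C = at\} + (Y^{1} - Y^{0} - CACE)\,1\{C = co\}\,Z,$$

where $E[(Y^{1} - Y^{0} - CACE)\,1\{C = co\}] = 0$ by the definition of the CACE.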

Thus, under the IV independent of unmeasured confounders assumption, E(Y|Z) = α + CACE × E(D|Z), and an unbiased estimate of the CACE can be obtained from regressing Y on E(D|Z). We do not know E(D|Z) but can replace it by the consistent estimate Ê(D|Z) from regressing D on Z, and then regress Y on Ê(D|Z); this is the two stage least squares procedure. The standard error for $\widehat{CACE}_{2SLS}$ is not the standard error from the second stage regression but needs to account for the sampling uncertainty in using Ê(D|Z) as an estimate of E(D|Z); see [53–55] and [56], Chapter 9.8. Specifically, the asymptotic standard error for $\widehat{CACE}_{2SLS}$ is given in [55], Theorem 3. The 2SLS estimator can also be written as a ratio of sample covariances, $\widehat{CACE}_{2SLS} = \widehat{\mathrm{Cov}}(Y, Z)/\widehat{\mathrm{Cov}}(D, Z)$ [54].

The 2SLS estimator does not take into full account the structure in Table 3 that the observed outcomes are mixtures of outcomes from different compliance classes. [57–60] develop approaches to estimating the CACE that use the mixture structure to improve efficiency. For example, [59] develops an empirical likelihood approach that is consistent under the same assumptions as 2SLS but that provides substantial efficiency gains in some finite sample settings.

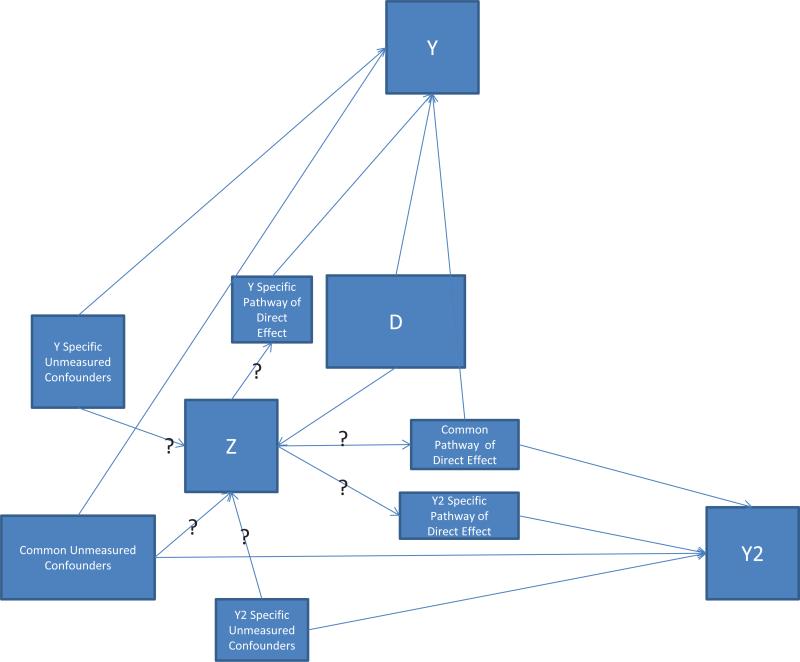

4.3 Estimation with Observed Covariates

As discussed above, various methods have been proposed that use IVs to overcome the problem of unmeasured confounders in estimating the effect of a treatment on outcomes without covariates. However, in practice, IVs may be valid only after conditioning on covariates. For example, in the NICU study of Section 1.2, race is associated with the proposed IV excess travel time, and race is also thought to be associated with infant complications through mechanisms other than level of NICU delivery, such as maternal age, previous Caesarean section, inadequate prenatal care and chronic maternal medical conditions [61]. Consequently, in order for excess travel time to be independent of unmeasured confounders conditional on measured covariates, it is important that race be included as a measured covariate. To incorporate covariates into the two-stage least squares estimator, regress $D_i$ on $X_i$ and $Z_i$ in the first stage to obtain $\hat{D}_i$, and then regress $Y_i$ on $\hat{D}_i$ and $X_i$ in the second stage. Denote the coefficient on $\hat{D}_i$ in the second stage regression by $\hat{\lambda}_{2SLS}$. As we now discuss, $\hat{\lambda}_{2SLS}$ estimates a covariate-averaged CACE [62]. Let $(\lambda, \phi)$ be the minimum mean squared error linear approximation to the average response function for compliers, $E(Y \mid X, D, C = co)$, i.e., $(\lambda, \phi) = \arg\min_{\lambda^*, \phi^*} E[(Y - \phi^{*T} X - \lambda^* D)^2 \mid C = co]$ (where X is assumed to contain the intercept). The estimator $\hat{\lambda}_{2SLS}$ is a consistent (i.e., asymptotically unbiased) estimator of λ. In particular, if the complier average causal effect given X is the same for all X and the effect of X on the outcomes for compliers is linear (i.e., $E(Y \mid X, D, C = co) = \phi^T X + \lambda D$), then λ equals the CACE, so $\hat{\lambda}_{2SLS}$ is a consistent estimator of the CACE.

As discussed in Section 4.2, the standard error for $\hat{\lambda}_{2SLS}$ is not the standard error from the second stage regression but needs to account for the sampling uncertainty in using $\hat{D}_i$ as an estimate of $E(D_i \mid X_i, Z_i)$; see [53–55] and [56], Chapter 9.8. Other methods besides two-stage least squares for incorporating measured covariates into the IV model are discussed in [59, 63–69], among others. [63] and [64] introduce covariates in the IV model of [55] with distributional assumptions and functional form restrictions. [65] consider settings under fully saturated specifications with discrete covariates. Without distributional assumptions or functional form restrictions, [66] develops closed forms for average potential outcomes for compliers under treatment and control with covariates. [59] discusses incorporating covariates with an empirical likelihood approach.
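A minimal NumPy sketch of the covariate-adjusted two-stage procedure described above, assuming NumPy is available. The function `tsls` and the simulated data are hypothetical: a measured covariate x (playing a role like race in the NICU example) affects both the IV and the outcome, and an unmeasured u confounds treatment and outcome. The treatment effect is constant (2.0) and the outcome is linear in x, so λ equals the CACE; adjusting for x recovers it, while omitting x does not. Standard errors are deliberately not computed, since the second-stage regression's standard errors are not valid.

```python
import numpy as np


def tsls(y, d, z, X=None):
    """Coefficient on D-hat from two-stage least squares.
    y, d, z: 1-D arrays; X: optional (n, p) array of measured covariates
    (an intercept is added here). Standard errors are not computed."""
    n = len(y)
    base = np.ones((n, 1)) if X is None else np.column_stack([np.ones(n), X])
    first = np.column_stack([base, z])       # stage 1: D on X and Z
    gamma, *_ = np.linalg.lstsq(first, d, rcond=None)
    d_hat = first @ gamma
    second = np.column_stack([base, d_hat])  # stage 2: Y on X and D-hat
    beta, *_ = np.linalg.lstsq(second, y, rcond=None)
    return beta[-1]


# Hypothetical simulation (all coefficients made up)
rng = np.random.default_rng(0)
n = 100_000
x = rng.normal(size=n)                          # measured covariate
z = (x + rng.normal(size=n) > 0).astype(float)  # IV depends on x
u = rng.normal(size=n)                          # unmeasured confounder
d = (2.0 * z + u + rng.normal(size=n) > 1.0).astype(float)
y = 2.0 * d + 1.5 * x + u + rng.normal(size=n)  # true effect of d is 2.0

with_x = tsls(y, d, z, x.reshape(-1, 1))
without_x = tsls(y, d, z)
print(f"adjusting for x: {with_x:.2f}, ignoring x: {without_x:.2f}")
```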

4.4. Robust Standard Errors for 2SLS

When there is clustering in the data, standard errors that are robust to clustering should be computed. For 2SLS, this can be done by using robust Huber-White standard errors[70]. Code for computing robust Huber-White standard errors for IV analysis with clustering in R is given in Section 14. For the NICU study, there is clustering by hospital.

Even when there is no clustering, we recommend always using robust Huber-White standard errors for 2SLS, because the non-robust standard error is correct only under additional strong assumptions (about homoskedasticity and about the relationships between the outcome distributions of the different compliance classes), whereas the robust standard error does not require these assumptions; see Theorem 3 in [55] and Section 4.2.1 of [62]. Code for computing robust Huber-White standard errors without clustering in R is given in Section 14.
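The sketch below shows one common form of the Huber-White (HC0) sandwich variance for 2SLS, with an optional cluster-robust version that sums scores within clusters (e.g., hospitals). It is written with NumPy and is not the R code of Section 14; it applies no small-sample correction, so results can differ slightly from software that applies one. Two details matter: the residuals are computed with the observed treatment D rather than the first-stage fitted values, and the "bread" of the sandwich uses the projected regressors. The data and the `hospital` labels are simulated and hypothetical.

```python
import numpy as np


def tsls_robust(y, X, W, clusters=None):
    """2SLS coefficients with HC0 or cluster-robust standard errors.
    X: (n, k) regressors [intercept, covariates, D]; W: (n, k) instruments
    [intercept, covariates, Z]. No small-sample correction is applied."""
    X_hat = W @ np.linalg.solve(W.T @ W, W.T @ X)  # regressors projected on instruments
    bread = np.linalg.inv(X_hat.T @ X_hat)
    beta = bread @ (X_hat.T @ y)
    resid = y - X @ beta                           # residuals use observed D, not D-hat
    if clusters is None:
        meat = (X_hat * resid[:, None] ** 2).T @ X_hat
    else:
        meat = np.zeros((X.shape[1], X.shape[1]))
        for g in np.unique(clusters):
            score = X_hat[clusters == g].T @ resid[clusters == g]  # summed within cluster
            meat += np.outer(score, score)
    vcov = bread @ meat @ bread
    return beta, np.sqrt(np.diag(vcov))


# Hypothetical data with 50 "hospitals" as cluster labels
rng = np.random.default_rng(1)
n = 2_000
z = rng.integers(0, 2, size=n).astype(float)
u = rng.normal(size=n)
d = (z + u + rng.normal(size=n) > 0.5).astype(float)
y = 1.0 * d + u + rng.normal(size=n)
hospital = rng.integers(0, 50, size=n)
X = np.column_stack([np.ones(n), d])
W = np.column_stack([np.ones(n), z])

beta, se_hc0 = tsls_robust(y, X, W)
_, se_cluster = tsls_robust(y, X, W, clusters=hospital)
print(beta[1], se_hc0[1], se_cluster[1])
```

With one observation per cluster, the cluster-robust formula reduces to the HC0 formula, which is a convenient sanity check.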

4.5. Two Sample IV

The 2SLS estimator (4) can be used even when Y, Z, D and X are not all available in a single data set, provided one data set has D, Z and X and another data set has Y, Z and X. One can estimate the regression function Ê(D|Z, X) from the first data set, compute Ê(D|Zi, Xi) for the subjects i in the second data set, and then regress Yi on Ê(D|Zi, Xi) and Xi in the second data set. This is called two-sample two-stage least squares [71, 72]; see [72] for how to compute standard errors. An example of using two-sample two-stage least squares is [73], which studied the effect of food stamps on body mass index (BMI) in immigrant families, using as an IV differences in state responses to a change in federal laws on immigrant eligibility for the food stamp program. The National Health Interview Survey was used to estimate the effect of state of residence on BMI and the Current Population Survey was used to estimate the effect of state of residence on food stamp program participation, because neither data set contained all three variables.
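A two-sample sketch, assuming NumPy and assuming that both samples are random draws from the same population (which two-sample 2SLS requires): the first stage is fitted in the sample that has D, and its coefficients are applied to Z and X in the sample that has Y. Function names and the simulated data are hypothetical, and standard errors, which need the corrections discussed in [72], are not computed.

```python
import numpy as np


def two_sample_tsls(d_a, z_a, x_a, y_b, z_b, x_b):
    """Two-sample 2SLS point estimate (sketch).
    Sample A supplies (D, Z, X); sample B supplies (Y, Z, X)."""
    design_a = np.column_stack([np.ones(len(d_a)), x_a, z_a])
    gamma, *_ = np.linalg.lstsq(design_a, d_a, rcond=None)  # first stage in sample A
    design_b = np.column_stack([np.ones(len(y_b)), x_b, z_b])
    d_hat_b = design_b @ gamma                              # predicted D in sample B
    second = np.column_stack([np.ones(len(y_b)), x_b, d_hat_b])
    beta, *_ = np.linalg.lstsq(second, y_b, rcond=None)     # second stage in sample B
    return beta[-1]


def draw(n, rng):
    """Hypothetical population; the true effect of d on y is 2.0."""
    x = rng.normal(size=n)
    z = rng.integers(0, 2, size=n).astype(float)
    u = rng.normal(size=n)  # unmeasured confounder
    d = (2.0 * z + 0.3 * x + u + rng.normal(size=n) > 1.0).astype(float)
    y = 2.0 * d + x + u + rng.normal(size=n)
    return x, z, d, y


rng = np.random.default_rng(4)
x_a, z_a, d_a, _ = draw(100_000, rng)  # sample A: outcome not recorded
x_b, z_b, _, y_b = draw(100_000, rng)  # sample B: treatment not recorded
estimate = two_sample_tsls(d_a, z_a, x_a, y_b, z_b, x_b)
print(round(estimate, 2))
```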

4.6. Example 1: Analysis of NICU study

For the NICU study, Table 4 shows the two stage least squares estimate of the effect of high level NICUs using excess travel time as an IV, and compares it to the estimate that does not adjust for any confounders and to the multiple regression estimate that adjusts only for the measured confounders (those in Table 4 plus several other variables described in [4]). The unadjusted estimate is that high level NICUs increase the death rate, causing 10.9 more deaths per 1000 deliveries; this estimate is probably strongly affected by selection bias, because doctors and mothers are more likely to insist on delivery at a high level NICU if the baby is at high risk of mortality. The regression estimate that adjusts for measured confounders is that high level NICUs save 4.2 babies per 1000 deliveries. The two stage least squares estimate, which adjusts for measured and unmeasured confounders, is that high level NICUs save even more babies, 5.9 babies per 1000 deliveries.

Table 4.

Risk Difference Estimates for Mortality Per 1000 Premature Births in High Level NICUs vs. Low Level NICUs. The confidence intervals account for clustering by hospital through the use of Huber-White robust standard errors.

| Estimator | Risk Difference | Confidence Interval |
|---|---|---|
| Unadjusted | 10.9 | (6.6, 15.3) |
| Multiple Regression, Adjusted for Measured Confounders | −4.2 | (−6.8, −1.5) |
| Two Stage Least Squares, Adjusted for Measured and Unmeasured Confounders | −5.9 | (−9.6, −2.2) |

As illustrated by Table 4, the multiple regression estimate of the causal effect will generally have a narrower confidence interval than the 2SLS estimate. However, when the IV is valid and there is unmeasured confounding, the multiple regression estimate will be asymptotically biased, whereas the 2SLS estimate will be asymptotically unbiased. Thus, there is a bias-variance tradeoff between multiple regression and 2SLS (IV estimation). When the IV is not perfectly valid, the 2SLS estimator will be asymptotically biased, but the bias-variance tradeoff may still favor 2SLS. [74] develops a diagnostic tool for deciding whether to use multiple regression or 2SLS.
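The bias-variance tradeoff can be seen in a small hypothetical simulation with an unmeasured confounder u and a valid binary IV z. There are no measured covariates, so the regression estimate reduces to an OLS slope of Y on D. Across 200 replications, the regression estimate clusters tightly around a value well above the true effect of 1.0, while the IV estimate is centered near 1.0 but is more variable. NumPy is assumed.

```python
import numpy as np


def ols_slope(d, y):
    """Regression of Y on D (with intercept): ignores the unmeasured confounder."""
    return np.cov(d, y)[0, 1] / np.var(d, ddof=1)


def iv_slope(z, d, y):
    """Wald / 2SLS estimate with a single binary IV."""
    return np.cov(z, y)[0, 1] / np.cov(z, d)[0, 1]


rng = np.random.default_rng(3)
true_effect, n, reps = 1.0, 2_000, 200
ols_list, iv_list = [], []
for _ in range(reps):
    z = rng.integers(0, 2, size=n).astype(float)  # valid IV: independent of u
    u = rng.normal(size=n)                        # unmeasured confounder
    d = (1.5 * z + u + rng.normal(size=n) > 0.75).astype(float)
    y = true_effect * d + u + rng.normal(size=n)
    ols_list.append(ols_slope(d, y))
    iv_list.append(iv_slope(z, d, y))
ols_est, iv_est = np.array(ols_list), np.array(iv_list)

print(f"regression: mean {ols_est.mean():.2f}, sd {ols_est.std():.3f}")
print(f"IV (2SLS):  mean {iv_est.mean():.2f}, sd {iv_est.std():.3f}")
```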

4.7. Example 2: The Effect of Receiving Treatment in a Randomized Trial with Nonadherence

An important application of IV methods is estimating the effect of receiving treatment in randomized trials with nonadherence. When some subjects do not adhere to their assigned treatments in a randomized trial, the intention to treat (ITT) effect is often estimated by Ê(Y|Z = 1) – Ê(Y|Z = 0); the ITT effect is the effect of being assigned the active treatment compared to being assigned the control (e.g., a placebo or usual care). When there is nonadherence, the ITT effect differs from the effect of receiving the treatment vs. the control. Both of these effects are valuable to know. One situation in which knowing the effect of receiving the treatment vs. the control is particularly valuable is when the pattern of treatment nonadherence is expected to differ between the study sample and the target population [75–77]. In this situation, the ITT estimate may be biased for estimating the effect of offering the treatment to the target population [75, 76], and a key quantity needed to accurately predict the effect of offering the treatment to the target population is the effect of actually receiving the treatment [76, 77]. For example, [75] discuss a trial of vitamin A supplementation to reduce child mortality. In the trial, the Vitamin A supplementation was implemented by having children take pills, and some children who were randomized to treatment did not take the pills. The ITT effect is the effect of making Vitamin A pills available to children. However, if the trial showed that taking the pills was efficacious, Vitamin A supplementation would likely be implemented not by providing pills but by fortifying a daily food item such as monosodium glutamate or salt [75]. By knowing the effect of receiving Vitamin A supplementation (the biologic efficacy) and the rate at which Vitamin A supplementation would be successfully delivered under a fortification program, we can estimate the effectiveness of a fortification program.

A second situation in which knowing the effect of receiving treatment vs. control is particularly valuable is when patients who intend to adhere fully to a treatment are deciding whether to take it [76, 78]. For example, [78] mention a couple deciding whether to use a certain contraception method: they may want to know the failure rate if they use the method as indicated, rather than the failure rate in a population that includes a substantial proportion of non-adherers.

A standard estimate of the effect of receiving the treatment vs. the control is the as treated estimate, Ê(Y|D = 1) – Ê(Y|D = 0), which compares the outcomes of subjects who received the treatment vs. the control regardless of the subjects’ assigned treatment. The as treated estimate may be biased for estimating the effect of receiving the treatment because of unmeasured confounding, e.g., individuals with a better diet may be more likely both to adhere to treatment and to have better outcomes regardless of treatment. Another standard estimate is the per protocol estimate, Ê(Y|Z = 1, D = 1) – Ê(Y|Z = 0, D = 0), which compares the outcomes of subjects who were assigned to the treatment and followed the treatment protocol to those of subjects who were assigned the control and followed the control protocol. Like the as treated estimate, the per protocol estimate may be biased for estimating the effect of receiving the treatment because of unmeasured confounding, e.g., individuals with a better diet may be more likely to adhere to treatment, whereas all subjects may adhere to the control if the control is usual care. The IV method can potentially overcome bias from unmeasured confounding in estimating the effect of receiving the treatment. Consider the randomly assigned treatment Z as a possible IV. The randomly assigned treatment satisfies the assumption that the IV is independent of unmeasured confounders (IV-A3) because of the randomization, and it will usually make receiving treatment more likely, thus satisfying (IV-A2), that the IV is positively correlated with treatment received. Whether the randomly assigned treatment satisfies the exclusion restriction (IV-A4) and monotonicity (IV-A5) assumptions needs to be considered for each specific trial. In the Vitamin A pill trial mentioned above, the treatment was only available to those assigned to treatment, so monotonicity was automatically satisfied.
[75] argue that the exclusion restriction is also likely satisfied for the Vitamin A trial because, for never-takers, being assigned to the treatment group vs. the control group is unlikely to affect mortality (there was no placebo in the trial). In contrast, [79] raise concerns about the exclusion restriction holding in certain mental health randomized trials. If the randomly assigned treatment does satisfy all the IV assumptions (IV-A1)-(IV-A5) for a trial, then the two stage least squares (Wald) estimator (4) is a consistent estimate of the effect of actually receiving treatment for compliers. The numerator of (4) is equal to the intention to treat estimate and the denominator of (4) is an estimate of the proportion of compliers.

Table 5 shows the mortality rates in the Vitamin A trial, stratified by assigned treatment and received treatment [75]. The ITT estimate of the effect on the mortality rate per 1000 children is 3.8 – 6.4 = -2.6, the as-treated estimate is 1.2 – 7.7 = -6.5, the per-protocol estimate is 1.2 – 6.4 = -5.2, and the IV estimate is the ITT estimate divided by the estimated proportion of compliers (since no child assigned to control received the pills, this is just the uptake in the treatment arm, 9,675/12,094 ≈ 0.80): -2.6/0.80 ≈ -3.3. As discussed above, the assumptions for randomized assignment to be a valid IV are plausible for the Vitamin A trial, and the IV estimate says that taking the Vitamin A pills prevents an estimated 3.3 deaths per 1000 children among those children who would take the Vitamin A pills if offered them (the compliers in this trial; note that there are no always-takers in this trial).

Table 5.

Mortality rates in the Vitamin A trial, stratified by assigned treatment and received treatment. The first three rows show the three observed strata and the last two rows show certain collapsed strata.

| Randomization Assignment | Treatment Received | # of Children | Deaths | Mortality Rate (per 1000) |
|---|---|---|---|---|
| Control | Control | 11,588 | 74 | 6.4 |
| Treatment | Control | 2,419 | 34 | 14.1 |
| Treatment | Treatment | 9,675 | 12 | 1.2 |
| Treatment | Control or Treatment | 2,419+9,675=12,094 | 34+12 | 3.8 |
| Control or Treatment | Control | 11,588+2,419 | 74+34 | 7.7 |
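The four estimates compared above can be reproduced from Table 5 in a few lines. This is a minimal sketch: it uses the rounded per-1000 mortality rates printed in the table (as the text does), together with the counts of children for the proportion of compliers; the constant and function names are ours. Because pills were available only in the treatment arm, P(D = 1|Z = 0) = 0 and the Wald denominator reduces to the uptake among children assigned to treatment.

```python
# Table 5: number of children in each (assignment, receipt) stratum.
N_CTRL = 11_588      # assigned control, received control
N_TRT_CTRL = 2_419   # assigned treatment, received control (never-takers)
N_TRT_TRT = 9_675    # assigned treatment, received treatment

# Rounded mortality rates per 1000 children, as printed in Table 5.
RATE_Z0 = 6.4        # assigned control
RATE_Z1 = 3.8        # assigned treatment (both receipt strata)
RATE_Z1_D1 = 1.2     # assigned and received treatment
RATE_D0 = 7.7        # received control (both assignment strata)


def vitamin_a_estimates():
    """Return the ITT, as-treated, per-protocol and IV (Wald) estimates."""
    itt = RATE_Z1 - RATE_Z0
    # Only children assigned to treatment could receive it, so the
    # D = 1 group is exactly the (Z = 1, D = 1) stratum.
    as_treated = RATE_Z1_D1 - RATE_D0
    # Every child assigned to control followed the control protocol.
    per_protocol = RATE_Z1_D1 - RATE_Z0
    # Wald denominator: P(D=1 | Z=1) - P(D=1 | Z=0), the second term being 0.
    p_compliers = N_TRT_TRT / (N_TRT_CTRL + N_TRT_TRT) - 0.0
    iv = itt / p_compliers
    return itt, as_treated, per_protocol, iv


itt, as_treated, per_protocol, iv = vitamin_a_estimates()
print(f"ITT {itt:.1f}, as-treated {as_treated:.1f}, "
      f"per-protocol {per_protocol:.1f}, IV {iv:.2f}")
```

Working from the raw death counts instead (46 of 12,094 and 74 of 11,588) gives an IV estimate of about -3.2 per 1000; the small difference from the figure in the text comes from rounding of the printed rates.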

5. Understanding the Treatment Effect that IV Estimates

As discussed in Section 4, the IV method estimates the CACE, the average treatment effect for the compliers (i.e., E[Y1 – Y0|C = co]), which might not equal the average treatment effect for the whole population. Although we might ideally want to know the average treatment effect for the whole population, the average treatment effect for compliers often provides useful information about the average treatment effect for the whole population and may also be of interest in its own right. In Section 5.1, we discuss how to relate the average treatment effect for compliers to the average treatment effect for the whole population, and in Section 5.2, we discuss how to learn more about who the compliers are, which is helpful for interpreting the average treatment effect for compliers in its own right. In Sections 5.3-5.5, we discuss additional issues related to understanding the treatment effect that IV estimates. In particular, in Section 5.3, we discuss interpreting the treatment effect when compliance class is not deterministic; in Section 5.4, we discuss interpreting the treatment effect when there are different versions of the treatment; and in Section 5.5, we discuss interpretation issues when there is heterogeneity in response.

5.1 Relationship between Average Treatment Effect for Compliers and Average Treatment Effect for the Whole Population

As discussed in Section 4, the IV method estimates the CACE, the average treatment effect for the compliers (i.e., E[Y1 – Y0|C = co]). Under the monotonicity assumption there are no defiers, so the average treatment effect in the population is a weighted average of the average treatment effect for the compliers, the average treatment effect for the never-takers and the average treatment effect for the always-takers:

$$E[Y_1 - Y_0] = P(C = co)\,E[Y_1 - Y_0 \mid C = co] + P(C = nt)\,E[Y_1 - Y_0 \mid C = nt] + P(C = at)\,E[Y_1 - Y_0 \mid C = at].$$

The IV method provides no direct information on the average treatment effect for always-takers (i.e., E[Y1 – Y0|C = at]) or the average treatment effect for never-takers (i.e., E[Y1 – Y0|C = nt]). However, the IV method can provide useful bounds on the average treatment effect for the whole population if a researcher is able to bound the differences between the average treatment effect for compliers and the average treatment effects for never-takers and always-takers based on subject-matter knowledge. For example, suppose a researcher is willing to assume that each of these differences is at most b in absolute value. Then

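$$E[Y_1 - Y_0 \mid C = co] - b\,\{1 - P(C = co)\} \;\le\; E[Y_1 - Y_0] \;\le\; E[Y_1 - Y_0 \mid C = co] + b\,\{1 - P(C = co)\}, \tag{5}$$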

where the quantities on the left and right hand sides of (5) other than b can be estimated as discussed in Section 4 and [58, 60, 64]. For binary or other bounded outcomes, the boundedness of the outcomes can be used to tighten bounds on the average treatment effect for the whole population or other treatment effects [45, 80]. Qualitative assumptions, such as that the average treatment effect is larger for always-takers than for compliers, can also be used to tighten the bounds, e.g., [80–82].
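The bound in (5) follows directly from the population decomposition: non-compliers make up 1 – P(C = co) of the population under monotonicity, and each of their average effects can differ from the CACE by at most b. A minimal sketch in Python follows; the function name is ours, and the value b = 2 deaths per 1000 used in the illustration is purely hypothetical, not taken from the source. It does not implement the tighter bounds for bounded outcomes cited above.

```python
def ate_bounds(cace: float, p_complier: float, b: float) -> tuple[float, float]:
    """Bounds on the population average treatment effect, as in (5).

    Assumes monotonicity (no defiers) and that the average effects for
    never-takers and always-takers each differ from the CACE by at most b.
    """
    if not 0.0 <= p_complier <= 1.0:
        raise ValueError("p_complier must lie in [0, 1]")
    if b < 0.0:
        raise ValueError("b must be non-negative")
    # Non-compliers are a fraction 1 - P(C = co) of the population; each of
    # their average effects can shift the population average by at most b.
    slack = b * (1.0 - p_complier)
    return cace - slack, cace + slack


# Illustration with the Vitamin A trial CACE (about -3.25 per 1000),
# complier proportion 0.80, and a hypothetical b of 2 deaths per 1000.
lower, upper = ate_bounds(cace=-3.25, p_complier=0.80, b=2.0)
print(f"population ATE between {lower:.2f} and {upper:.2f} per 1000")
```

With 80% compliers, even a generous b widens the interval only by 0.2·b on each side, which is why a high complier proportion makes the CACE informative about the whole population.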

In thinking about extrapolating the CACE to the full population, it is useful to consider how compliers’ outcomes compare to always-takers’ and never-takers’ outcomes [50]. The data provide some information about this. Specifically, we can compare

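$$E(Y_0 \mid C = nt) \ \text{vs.}\ E(Y_0 \mid C = co) \qquad \text{and} \qquad E(Y_1 \mid C = at) \ \text{vs.}\ E(Y_1 \mid C = co). \tag{6}$$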

Under (IV-A1)-(IV-A5), [83] shows that the following are consistent estimates of the quantities in (6) when there are no covariates: Ê(Y0|C = nt) = Ê(Y|D = 0, Z = 1), Ê(Y0|C = co) = [Ê(Y|D = 0, Z = 0)P̂(D = 0|Z = 0) – Ê(Y|D = 0, Z = 1)P̂(D = 0|Z = 1)]/[P̂(D = 0|Z = 0) – P̂(D = 0|Z = 1)], Ê(Y1|C = at) = Ê(Y|D = 1, Z = 0) and Ê(Y1|C = co) = [Ê(Y|D = 1, Z = 1)P̂(D = 1|Z = 1) – Ê(Y|D = 1, Z = 0)P̂(D = 1|Z = 0)]/[P̂(D = 1|Z = 1) – P̂(D = 1|Z = 0)]; when there are covariates, the methods of [66] or [64] can be used to estimate the quantities in (6). If compliers, never-takers and always-takers are found to differ substantially in outcome levels, as evidenced by a substantial difference between E(Y0|C = nt) and E(Y0|C = co) and/or between E(Y1|C = at) and E(Y1|C = co), then it is much less plausible that the average effect for compliers is indicative of the average effects for the other compliance types. On the other hand, if the potential outcomes under control are similar for never-takers and compliers, and the potential outcomes under treatment are similar for compliers and always-takers, it is more plausible that the average treatment effects for the groups are also comparable [50]. For example, in the Vitamin A trial described in Table 5, the mortality rate for never-takers is estimated to be 14.1 per 1000 children and for compliers under control is estimated to be about 4.5 per 1000, i.e., (6.4 – 0.20 × 14.1)/0.80 (note that there are no always-takers in the Vitamin A trial). This substantial difference in estimated mortality rates between the compliance classes suggests that we should be cautious in extrapolating the CACE to the full population.
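The no-covariate estimators above exploit the fact that, under monotonicity, the (Z = 1, D = 0) cell contains only never-takers and the (Z = 0, D = 1) cell only always-takers, while the (Z = 0, D = 0) and (Z = 1, D = 1) cells mix compliers with never-takers and always-takers, respectively; the complier means are obtained by subtracting off the non-complier share. A minimal Python sketch, with a function name of our choosing, applied to the rounded rates in Table 5:

```python
def compliance_class_means(ybar, p_d1_z1, p_d1_z0):
    """Mean outcomes by compliance class under (IV-A1)-(IV-A5), no covariates.

    ybar maps (z, d) to the observed mean outcome in that cell.
    """
    p_co = p_d1_z1 - p_d1_z0              # proportion of compliers
    p_d0_z0, p_d0_z1 = 1.0 - p_d1_z0, 1.0 - p_d1_z1
    out = {"Y0|nt": ybar[(1, 0)]}         # (Z=1, D=0) holds only never-takers
    # (Z=0, D=0) mixes compliers and never-takers; remove the never-takers.
    out["Y0|co"] = (ybar[(0, 0)] * p_d0_z0 - ybar[(1, 0)] * p_d0_z1) / p_co
    if p_d1_z0 > 0:
        out["Y1|at"] = ybar[(0, 1)]       # (Z=0, D=1) holds only always-takers
        at_part = ybar[(0, 1)] * p_d1_z0
    else:
        at_part = 0.0                     # no always-takers in the population
    # (Z=1, D=1) mixes compliers and always-takers; remove the always-takers.
    out["Y1|co"] = (ybar[(1, 1)] * p_d1_z1 - at_part) / p_co
    return out


# Vitamin A trial (Table 5): rounded mortality per 1000; pills were only
# available in the treatment arm, so P(D=1 | Z=0) = 0.
means = compliance_class_means(
    ybar={(0, 0): 6.4, (1, 0): 14.1, (1, 1): 1.2},
    p_d1_z1=9_675 / 12_094,
    p_d1_z0=0.0,
)
for name, value in means.items():
    print(f"E({name}) = {value:.1f}")
```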

5.2 Characterizing the Compliers

The IV method estimates the average treatment effect for the subpopulation of compliers. In most situations, it is impossible to identify which subjects in the data set are “compliers,” because we observe a subject's treatment selection under only one level of the instrument, Z = 1 or Z = 0, and so cannot tell whether the subject would have complied under the unobserved level. So who are these compliers, and how do they compare to noncompliers? To understand this better, it is useful to characterize the compliers in terms of the distribution of their observed covariates [9, 62]. Under the IV assumptions (IV-A1)-(IV-A5) from Section 4.1, the mean of a covariate X among the compliers is the following, where f represents the probability mass function or probability density function,

| (7) |

where

| (8) |

where $\mathbf{X}$ denotes the vector of measured covariates that need to be conditioned on for (IV-A3) to hold and $X$ denotes the covariate whose distribution among the compliers we are examining (note that $X$ need not be included in $\mathbf{X}$). An alternative representation of E(X|C = co), from [66], is $E(X \mid C = co) = E[\kappa_i X_i]/E[\kappa_i]$, where $\kappa_i = 1 - D_i(1 - Z_i)/[1 - P(Z_i = 1 \mid \mathbf{X}_i)] - (1 - D_i)Z_i/P(Z_i = 1 \mid \mathbf{X}_i)$. We can estimate E(X|C = co) by using logistic regression to estimate $P(Z_i = 1 \mid \mathbf{X}_i)$, plugging these estimates into the formula for $\kappa_i$ to obtain $\hat\kappa_i$, and then dividing the sample mean of $\hat\kappa_i X_i$ by the sample mean of $\hat\kappa_i$. The prevalence ratio of a binary characteristic X among compliers compared to the full population is $P(X = 1 \mid C = co)/P(X = 1)$.
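A minimal sketch of the κ-weighting estimator just described, in Python with NumPy. Several assumptions are ours: the hand-rolled Newton-Raphson fit (`fit_propensity`) stands in for any standard logistic regression routine and has no safeguards against separation or non-convergence; the toy population built by the hypothetical helper `make_stratum` is constructed so that the true complier mean is known (40 of the 50 compliers have X = 0 and 10 have X = 1); and, for simplicity, the same covariate plays the role of both X and the conditioning vector.

```python
import numpy as np


def fit_propensity(W, z, n_iter=25):
    """Estimate P(Z = 1 | W) by logistic regression via Newton-Raphson."""
    A = np.column_stack([np.ones(len(z)), W])        # prepend an intercept
    beta = np.zeros(A.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-A @ beta))
        grad = A.T @ (z - p)                         # score vector
        hess = A.T @ (A * (p * (1.0 - p))[:, None])  # Fisher information
        beta = beta + np.linalg.solve(hess, grad)
    return 1.0 / (1.0 + np.exp(-A @ beta))


def complier_mean(x, d, z, pz):
    """kappa-weighted mean of x among compliers, given P(Z=1 | covariates)."""
    kappa = 1.0 - d * (1.0 - z) / (1.0 - pz) - (1.0 - d) * z / pz
    return np.mean(kappa * x) / np.mean(kappa)


def make_stratum(x_val, n_co, n_at, n_nt):
    """Hypothetical units with covariate x_val; Z alternates within each type."""
    rows = []
    for ctype, n in (("co", n_co), ("at", n_at), ("nt", n_nt)):
        for i in range(n):
            z = i % 2
            d = {"co": z, "at": 1, "nt": 0}[ctype]   # treatment received
            rows.append((x_val, z, d))
    return rows


rows = make_stratum(0, 40, 10, 10) + make_stratum(1, 10, 20, 10)
x, z, d = (np.array(col, dtype=float) for col in zip(*rows))
pz = fit_propensity(x, z)
mu_co = complier_mean(x, d, z, pz)
print(mu_co, mu_co / x.mean())  # complier mean of X and prevalence ratio
```

Because Z is exactly balanced within every (X, compliance type) cell, the fitted propensity is 0.5 for every unit, the κ weights of always-takers and never-takers (+1 and -1) cancel, and the estimate recovers the complier prevalence of 0.2 and a prevalence ratio of 0.5 (against a population prevalence of 0.4). Note that individual κ weights can be negative; only their averages have a probabilistic interpretation.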

Table 6 shows the mean (prevalence) of various binary characteristics X among compliers vs. the full population in the NICU study, together with the prevalence ratio. Babies whose mothers are college graduates are slightly underrepresented (prevalence ratio = 0.87) and African Americans are slightly overrepresented (prevalence ratio = 1.14) among compliers. Very low birthweight (< 1500 g) and very premature babies (gestational age ≤ 32 weeks) are substantially underrepresented among compliers, with prevalence ratios around one-third; these babies are more likely to be always-takers, i.e., delivered at high level NICUs regardless of the mother's travel time. Babies whose mothers have comorbidities such as diabetes or hypertension are slightly underrepresented among compliers. Overall, Table 6 suggests that higher risk babies are underrepresented among the compliers. If the effect of high level NICUs is greater for higher risk babies, then the IV estimate will underestimate the average effect of high level NICUs for the whole population.

Table 6.

Complier characteristics for the NICU study. The second column shows the estimated prevalence of characteristic X among compliers, the third column shows the estimated prevalence of X in the full population, and the fourth column shows the estimated ratio of the prevalence of X among compliers to its prevalence in the full population.

| Characteristic X | Prevalence of X among compliers | Prevalence of X in full population | Prevalence Ratio of X among compliers to full population |
|---|---|---|---|
| Birthweight < 1500g | 0.03 | 0.09 | 0.33 |
| Gestational Age <= 32 weeks | 0.04 | 0.13 | 0.34 |
| Mother College Graduate | 0.23 | 0.26 | 0.87 |
| African American | 0.17 | 0.15 | 1.14 |
| Gestational Diabetes | 0.05 | 0.05 | 0.91 |
| Diabetes Mellitus | 0.02 | 0.02 | 0.77 |
| Pregnancy Induced Hypertension | 0.08 | 0.10 | 0.82 |
| Chronic Hypertension | 0.02 | 0.02 | 0.89 |

5.3 Understanding the IV Estimate When Compliance Status Is Not Deterministic

For an encouragement that is uniformly delivered, such as a letter sent to patients who have made an appointment at a psychiatric outpatient clinic encouraging them to attend the appointment [84], it is clear that a subject is either a complier, always-taker, never-taker or defier with respect to the encouragement. However, encouragements that are not uniformly delivered are sometimes used as IVs. For example, in the NICU study, consider the IV of whether the mother's excess travel time to the nearest high level NICU is more than 10 minutes. If a mother whose excess travel time to the nearest high level NICU was more than 10 minutes moved to a new home with an excess travel time of less than 10 minutes, whether she would deliver her baby at a high level NICU might depend on additional aspects of the move, such as the location and availability of public transportation at her new home [85] and the exact travel time to the nearest high level NICU from her new home. Consequently, a mother may not be deterministically classifiable as a complier or a noncomplier; she may be a complier with respect to certain moves but not others. Another example of nondeterministic compliance arises when physician preference for one drug vs. another is used as the IV (e.g., Z = 1 if a patient's physician prescribes drug A more often than drug B): whether a patient receives drug A may depend on how strongly the physician prefers drug A [9, 86]. Nondeterministic compliance status can also arise when the IV is not itself an encouragement intervention but a proxy for one. Consider the case of Mendelian randomization, in which the IV is often a single nucleotide polymorphism (SNP). Changes in the SNP itself may not affect the exposure D. Instead, genetic variation at another location on the same chromosome as the SNP, call it L, might affect D. The SNP might just be a marker for the subject's genetic code at location L.
The encouragement intervention is having a certain genetic code at L and the SNP is just a proxy for this encouragement. Consequently, even if a subject's exposure level would change as a result of a change in the genetic code at location L, whether the subject is a complier with respect to a change in the SNP depends on whether the change in the SNP leads to a change in the genetic code at location L, which is randomly determined through the process of recombination[85].

Brookhart and Schneeweiss [9] provide a framework for interpreting the IV estimate when compliance status is not deterministic. Suppose that the study population can be decomposed into κ + 1 mutually exclusive groups of patients based on clinical, lifestyle and other characteristics such that, within each group, whether a subject receives treatment is independent of the effect of the treatment. All of the common causes of the potential treatments received D1, D0 and the potential outcomes Y1, Y0 should be included in the characteristics used to define these groups. For example, if there are L binary common causes of (D1, D0, Y1, Y0), then the subgroups can be the κ + 1 = 2^L possible combinations of values of these common causes. Denote patient membership in these groups by the set of indicators S = {S1, S2, . . ., Sκ}, with the remaining group (all Sj = 0) serving as the reference group. Consider the following model for the expected potential outcome:

$$E(Y_d \mid S) = \alpha_0 + \alpha_1 d + \sum_{j=1}^{\kappa} \alpha_{2,j} S_j + \sum_{j=1}^{\kappa} \alpha_{3,j}\, d\, S_j, \qquad d \in \{0, 1\}.$$

The average effect of treatment in the population is $\alpha_1 + \sum_{j=1}^{\kappa} \alpha_{3,j} P(S_j = 1)$ and the average effect of treatment in subgroup j is α1 + α3,j. Under the IV assumptions (IV-A1)-(IV-A4) in Section 4.1, i.e., all the assumptions except monotonicity, the 2SLS estimator (4) converges in probability to the following quantity:

| (9) |

where