Abstract

The aim of this project was to determine, for bimodal cochlear implant (CI) patients, i.e., patients with low-frequency hearing in the ear contralateral to the implant, how speech understanding varies as a function of the difference in level between the CI signal and the acoustic signal. The data suggest that (i) acoustic signals perceived as significantly softer than a CI signal can contribute to speech understanding in the bimodal condition, (ii) acoustic signals that are slightly softer than, or balanced with, a CI signal provide the largest benefit to speech understanding and (iii) acoustic signals presented at MCL provide nearly as much benefit as signals that have been balanced with a CI signal.

Bimodal cochlear implant patients, i.e., patients who have an implant in one ear and low-frequency hearing in the contralateral ear, generally achieve higher scores on tests of speech understanding than patients who receive stimulation from a cochlear implant only (e.g., Shallop et al., 1992, Armstrong et al., 1997, Tyler et al., 2002, Ching et al., 2004, Kong et al., 2005, Mok et al., 2006, Dorman et al., 2010). At issue in this paper is the level of the acoustic signal relative to the CI signal that will produce the greatest benefit from low-frequency hearing.

The standard procedure for setting the level of the acoustic signal is to balance or match, in some fashion, the loudness relative to the CI signal. It is assumed that similar loudness of the acoustic and electric portions of the signal will produce the best results (e.g., Blamey et al., 2000; Ching et al., 2004; Keilmann et al., 2009). We examine that assumption by presenting the acoustic signal at levels ranging from just over detection threshold to above the level of the CI signal and asking how performance varies as a function of the difference in level between the CI signal and the acoustic signal.

Methods

Subjects

Five postlingually deafened, bimodal CI listeners were invited to participate in this project based on evidence of bimodal benefit in a standard clinical test environment. All provided signed informed consent forms as per institutional guidelines at Arizona State University. All subjects (i) used a Cochlear Corporation signal processor, (ii) had at least 24 months of experience with electric stimulation, (iii) had at least 20 years of experience with amplification prior to implantation and (iv) were known, from previous testing, to have bimodal benefit. No patient had residual hearing in the operated ear. Biographical data are presented in Table 1. All were paid an hourly wage for their participation. The audiogram for each patient's non-implanted ear is shown in Figure 1.

Table 1.

Biographical data for patients. Duration of significant hearing loss was calculated from the time patients started to have difficulty using the telephone. CNC = single word recognition. CI = cochlear implant. HA = hearing aid.

| Listener | Age (yr) | Etiology | Duration of loss, HA ear (yr) | Duration of loss, CI ear (yr) | CI experience (yr) | CNC score, CI alone (% correct) | CNC score, HA alone (% correct) |
|---|---|---|---|---|---|---|---|
| S1 | 70 | Familial | 28 | 28 | 2 | 74 | 58 |
| S2 | 54 | Unknown | 24 | 39 | 10 | 52 | 46 |
| S3 | 74 | Unknown | 22 | 28 | 6 | 66 | 12 |
| S4 | 76 | Noise | 56 | 56 | 4 | 72 | 6 |
| S5 | 37 | Unknown | 37 | 37 | 4 | 72 | 36 |

Figure 1.

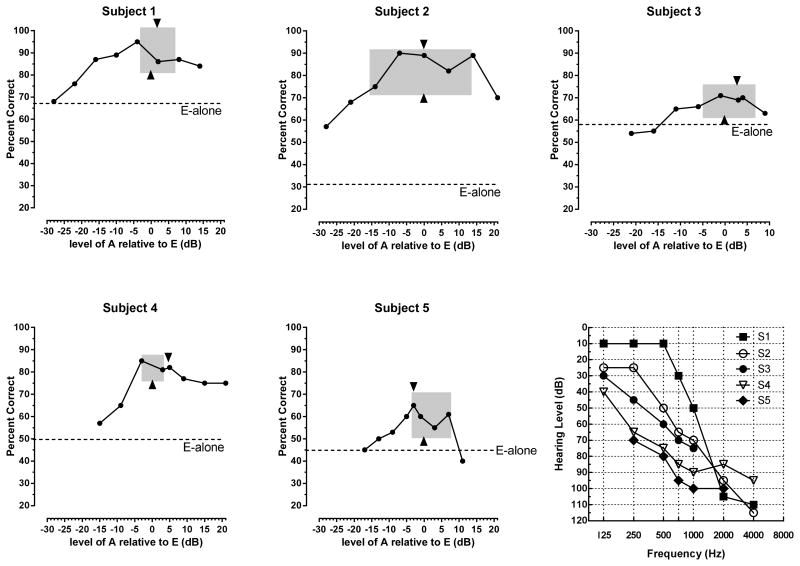

Percent correct sentence understanding as a function of the level of the acoustic signal. E = CI stimulation. A = low-frequency acoustic stimulation. Left edge of grey box = acoustic stimulation level just softer than the CI level. Right edge of grey box = acoustic stimulation level just louder than the CI level. Arrow at bottom of box = balance point for acoustic and electric stimulation. Arrow at top of box = MCL for acoustic stimulation. Bottom right figure shows audiograms of the acoustically stimulated ear.

Signal Processing

Signal processing was implemented on a Personal Digital Assistant (PDA)-based signal processor (Ali et al., 2011; 2013). This cell-phone-sized research device functions as the external signal processor for a Cochlear Corporation implant. The device was used in an ‘offline’ mode only, i.e., speech signals were processed offline based on each patient's MAP, stimulation parameters for electric stimulation were computed using the ACE algorithm, and the resulting parameters were passed directly to the internal receiver. Direct stimulation removed the need for a sound booth or a quiet room and loudspeakers to deliver speech signals to the patient.

The PDA also functioned as a hearing aid and synchronously provided (i) parameters for electrical stimulation and (ii) an acoustic signal to the contralateral ear, delivered via an insert receiver, processed in the manner of a hearing aid. To accommodate the degree of hearing loss for each patient, acoustic signals were amplified and subjected to the frequency-gain characteristic prescribed by the National Acoustic Laboratories (NAL-RP) prescription method (Byrne & Dillon 1986; Byrne et al. 1990) using MATLAB.

The threshold-equalizing noise (TEN) test (Moore et al., 2000; 2004) and a fast method for measuring psychophysical tuning curves (PTCs) (Sęk & Moore, 2011) were used to detect the presence of dead regions along the cochlea with residual acoustic hearing. This information was used to further shape the amplification prescribed by the NAL-RP formula, i.e., amplification was not provided at and above a dead region. All processing was done offline in MATLAB. For S1, S2, S3, S4 and S5, cochlear dead regions started at edge frequencies (fe) of 1500 Hz, 1000 Hz, 1500 Hz, 4000 Hz and 750 Hz, respectively, and extended upwards from the fe.
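The dead-region rule described above can be illustrated with a short sketch. It is written in Python purely for illustration (the original processing was done in MATLAB) and makes two assumptions that are not in the text: "no amplification" is modelled as 0 dB gain, and the gain table holds made-up values rather than NAL-RP output. The function name `limit_gain_to_live_region` is hypothetical; only the edge frequencies come from the paper.

```python
# Edge frequencies (Hz) of the cochlear dead regions reported in the text.
EDGE_FREQ_HZ = {"S1": 1500, "S2": 1000, "S3": 1500, "S4": 4000, "S5": 750}


def limit_gain_to_live_region(gain_db_by_freq, edge_freq_hz):
    """Return a copy of a frequency -> gain (dB) table with no gain at or
    above the dead-region edge frequency.

    Assumption: 'amplification was not provided' is modelled as 0 dB gain.
    The original processing may instead have removed the band altogether.
    """
    return {
        freq: (gain if freq < edge_freq_hz else 0.0)
        for freq, gain in gain_db_by_freq.items()
    }


# Hypothetical prescription, for illustration only (NOT NAL-RP output).
example_gain_db = {250: 10.0, 500: 20.0, 750: 25.0, 1000: 30.0,
                   1500: 32.0, 2000: 35.0, 4000: 30.0}

# S2's dead region starts at 1000 Hz, so gain is kept only below 1000 Hz.
shaped = limit_gain_to_live_region(example_gain_db, EDGE_FREQ_HZ["S2"])
print(shaped)
```

For S5, whose edge frequency is 750 Hz, the same call would leave gain only at 250 and 500 Hz in this example table.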

Stimuli

The speech stimuli were sentences from the AzBio sentence lists (Spahr et al., 2012). The noise signal was the multitalker babble from Spahr et al. (2012).

Procedures

The overall logic of the experiment was as follows: (i) present the electric signal in sufficient noise to drive performance near 60% correct and then (ii) add (to the electric signal) the acoustic signal at levels ranging from just above detection threshold to above the level of the CI signal.

To accomplish this goal, for each patient the following steps were implemented using sentences from the AzBio sentence corpus as test material:

1. Determine the most comfortable presentation level for the CI signal, using the Advanced Bionics loudness scale (see below) and an ascending/descending method of stimulus presentation, and then determine a signal-to-noise ratio (SNR) that drives CI-alone performance to near 60% correct. The signal level was fixed and the noise level was decreased to achieve the desired SNR.

2. Find the signal levels that range from just noticeable (level 1) to the upper limit of loudness (level 9) for signals delivered to the non-implanted ear, using an 11-point discrete loudness scale (Advanced Bionics loudness scale, where 0 = off, 1 = just noticeable, 2 = very soft, 3 = soft, 4 = comfortable but too soft, 5 = comfortable but soft, 6 = most comfortable, 7 = loud but comfortable, 8 = loud, 9 = upper loudness limit and 10 = too loud).

3. Find, using an ascending/descending procedure, the most comfortable level (6 on the Advanced Bionics loudness scale) for signals directed to the non-implanted ear (with the implant switched off).

4. Find the ‘balance point’ for electric and acoustic stimulation by varying the level of the acoustic signal relative to the CI signal. This was achieved using a picture of a head with an arc in front of it and asking the subject to indicate when the acoustic and electric stimulation produced the sensation that the stimulus was balanced directly in front of the head. For some patients this procedure was easier than judgments of relative loudness, although both procedures likely accessed the same underlying perceptual information. The signals were repeated, using an ascending/descending method, until the patient reported that the signals were balanced.

5. Find the level of the acoustic signal that was (i) just noticeably softer than the CI (the auditory image shifted towards the CI side of the head) and (ii) just noticeably louder than the CI (the auditory image shifted towards the hearing-aid side of the head). This was accomplished by changing the level of the acoustic stimulus in 1 dB steps until the patient indicated it was either softer than or louder than the CI stimulus (or until the two types of stimulation were ‘unbalanced’).
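The 1 dB stepping search for the ‘just softer’ and ‘just louder’ levels can be sketched as follows. This is a simplified illustration, not the procedure as run in the laboratory: the listener is replaced by a hypothetical callback, the balance point and the levels at which the simulated listener reports an imbalance are invented, and the function name `find_edge` is an assumption.

```python
def find_edge(balance_db, step_db, reports_unbalanced, max_steps=60):
    """Step the acoustic level away from the balance point in fixed steps
    until the listener reports that the image is no longer balanced.

    step_db is -1 to search for the 'just softer' edge and +1 for the
    'just louder' edge. Returns the first level judged unbalanced, or None
    if no imbalance is reported within max_steps.
    """
    level = balance_db
    for _ in range(max_steps):
        level += step_db
        if reports_unbalanced(level):
            return level
    return None


# Hypothetical listener: all levels below are invented for illustration.
BALANCE_DB = 60


def hears_softer(level_db):
    return level_db <= 55   # image shifts towards the CI side


def hears_louder(level_db):
    return level_db >= 63   # image shifts towards the hearing-aid side


softer_edge = find_edge(BALANCE_DB, -1, hears_softer)
louder_edge = find_edge(BALANCE_DB, +1, hears_louder)
box_width_db = louder_edge - softer_edge
print(softer_edge, louder_edge, box_width_db)
```

The difference between the two edges corresponds to the width of the grey boxes in Figure 1.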

The speech stimuli used for determining the loudness levels described above were AzBio sentences that were not used in the following speech-recognition tests. The procedure of determining loudness levels was repeated until 2-3 consistent results were achieved.
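The first step of the procedure holds the speech level fixed and adjusts only the noise level to reach a given SNR. A minimal sketch of that scaling is shown below, assuming the SNR is defined on RMS levels (the text does not state the definition). The helper names `rms` and `scale_noise_for_snr` are hypothetical, and the sine wave and random noise merely stand in for the AzBio sentences and multitalker babble.

```python
import math
import random


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))


def scale_noise_for_snr(signal, noise, snr_db):
    """Rescale the noise so that 20*log10(rms(signal) / rms(noise)) equals
    snr_db. The signal itself is left untouched, i.e. its level is fixed."""
    target_noise_rms = rms(signal) / (10 ** (snr_db / 20))
    gain = target_noise_rms / rms(noise)
    return [gain * v for v in noise]


# Stand-in waveforms (assumptions, not the actual test material).
fs = 16000
signal = [math.sin(2 * math.pi * 440 * n / fs) for n in range(fs)]
rng = random.Random(0)
noise = [rng.uniform(-1.0, 1.0) for _ in range(fs)]

scaled_noise = scale_noise_for_snr(signal, noise, snr_db=7.0)
achieved_snr_db = 20 * math.log10(rms(signal) / rms(scaled_noise))
print(round(achieved_snr_db, 3))
```

Lowering the noise gain raises the SNR, so a listener who needs a more favourable condition to reach the target score is given a larger `snr_db`.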

To create the acoustic signals for the experiment, the dynamic range of acoustic hearing, i.e., the dB range in acoustic stimulation from lower to upper limit (as determined in #2 above), was divided into 7 steps. The first step was ‘just noticeable’ stimulation and the last step was equal to or less than the ‘upper limit of loudness’. An additional acoustic signal was created at the level judged to be ‘most comfortable loudness’ (see #3 above). The acoustic signals were combined with the CI signal to produce eight test conditions. Twenty AzBio sentences were presented in each test condition. The order of conditions was fixed – from softest acoustic signal plus CI to loudest acoustic signal plus CI. There were five practice trials of AzBio sentences before each test condition.
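The construction of the eight acoustic levels can be sketched as below. The sketch assumes equally spaced steps in dB with the top step placed exactly at the upper limit; the text only says the last step was equal to or less than that limit, so the real spacing may have differed. The function name `acoustic_levels` and all dB values are hypothetical.

```python
def acoustic_levels(lower_db, upper_db, mcl_db, n_steps=7):
    """Divide the acoustic dynamic range into n_steps equally spaced levels
    (assumed spacing), add the MCL level, and return all levels ordered
    from softest to loudest, matching the fixed presentation order."""
    step = (upper_db - lower_db) / (n_steps - 1)
    levels = [lower_db + i * step for i in range(n_steps)]
    return sorted(levels + [mcl_db])


# Hypothetical listener: just noticeable at 40 dB, upper limit at 82 dB,
# most comfortable level at 65 dB (illustrative values only).
levels = acoustic_levels(lower_db=40, upper_db=82, mcl_db=65)
print(levels)
```

Each of the resulting levels is then paired with the CI signal to form one test condition.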

Results

The results for each patient are shown in Figure 1. In each panel the level of performance with the implant alone is indicated by a dotted line and labeled E-alone. We succeeded in driving performance to near 60 percent correct for subjects 3, 4 and 5 by adding noise (using signal-to-noise ratios of +7 dB, +13 dB and +2 dB, respectively). Subjects 1 and 2 were tested in quiet – noise was not necessary to reach our criterion.

All patients benefited from acoustic stimulation – the mean was 29 percentage points [minimum = 13 percentage points (S3); maximum = 49 percentage points (S2)]. Benefit was an inverted U function, with minimum or no improvement at the lowest level of acoustic stimulation (with one exception), the most improvement near the balance or the acoustic MCL point, and a lower level of performance at the highest levels of acoustic stimulation.

In Figure 1 the grey boxes indicate the range of acoustic stimulus levels for signals reported to be ‘just softer’ than the CI stimulus (the left edge of the box) and just louder than the CI stimulus (the right edge of the box). Box width was for S1 = 7 dB, for S2 = 30 dB, for S3 = 12 dB, for S4 = 6 dB and for S5 = 11 dB.

The point of balance between the electric and acoustic stimulus is indicated by the upward-pointing triangle at the bottom of each box. The MCL point for the acoustic stimulus alone is indicated by the downward-pointing triangle at the top of the box. The balance and MCL points were within, on average, 2.6 dB (S1 = 2 dB; S2 = 0 dB; S3 = 3 dB; S4 = 5 dB; S5 = 3 dB).

Performance in the condition that produced the best performance overall was little different from performance in the condition in which the acoustic signal was presented at MCL. The mean difference between the two conditions was -4.2 percentage points (S1 = -10 percentage points; S2 = -1 percentage point; S3 = -2 percentage points; S4 = -3 percentage points; S5 = -5 percentage points). Although all difference scores are in the same direction, none of the difference scores exceeds the expected variance of the AzBio test material (Spahr et al., 2012).
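The two group means reported above can be checked directly from the per-subject values given in the text. The short sketch below does only that arithmetic; the variable names are illustrative.

```python
from statistics import mean

# Per-subject values as reported in the Results section.
balance_mcl_gap_db = {"S1": 2, "S2": 0, "S3": 3, "S4": 5, "S5": 3}
mcl_minus_best_pts = {"S1": -10, "S2": -1, "S3": -2, "S4": -3, "S5": -5}

# Mean separation between the balance point and the acoustic MCL (dB).
mean_gap_db = mean(list(balance_mcl_gap_db.values()))

# Mean score difference, MCL condition minus best condition (percentage points).
mean_diff_pts = mean(list(mcl_minus_best_pts.values()))

print(mean_gap_db, mean_diff_pts)
```

Both results agree with the reported means of 2.6 dB and -4.2 percentage points.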

Discussion

Our aim in this project was to determine, for bimodal CI patients, how performance varies as a function of the difference in level between the CI signal and the acoustic signal. We find that the benefit of adding the low-frequency signal to the CI signal is an inverted U-shaped function of the level of the acoustic signal. Very soft acoustic signals (as expected) produce very little benefit – the first data point in each panel is near the level of performance with the CI alone (with one exception – see discussion below). Signals presented near the balance point between acoustic and electric stimulation always provided the highest levels of performance. And, for all subjects, performance fell for acoustic signals that were judged to be much higher in level than the CI stimulus. This performance decrease could be due to a shift in attention to the loudest signal and away from the CI signal, or due to central masking of the electric signal by the acoustic signal (James et al., 2001; Van Hoesel and Clark, 1997). Our data do not speak to these possibilities.

Inspection of Figure 1 suggests that for three of the five subjects (S1, S4 and S5) an acoustic stimulus that is judged as slightly softer, or slightly unbalanced towards the CI ear, provides the most benefit when added to the CI signal. Thus, a clinical recommendation to set the acoustic signal balanced with, or slightly softer than, the CI signal is supported by the data. Moreover, the data suggest that balance does not need to be determined with a high degree of precision.

Acoustic signals set to MCL, without reference to the CI signal, also support high levels of benefit. When the acoustic signals were set to MCL, scores, on average, were within 4 percentage points of the best score. Thus, balancing is not necessary to achieve a high level of benefit from the acoustic signal. This could be a useful datum for setting the level of the auditory signal for patients who have difficulty understanding CI vs. acoustic signal balancing procedures.

For all subjects, some signals labeled as softer than the CI signal provided benefit to intelligibility. This suggests that patients who cannot achieve normal loudness growth may still benefit from acoustic stimulation. For Subject 2, even the acoustic signal labeled as ‘just detectable’ provided a very large gain in intelligibility. We have no account for this but note that this patient also had an extremely large range when judging signals to be just softer than or just louder than the CI stimulus. This patient might have had difficulty judging loudness. It is also the case that this was one of two listeners for whom noise was not necessary to depress performance to our target range. It is possible that the absence of noise facilitated the access to the information in the low-level acoustic signal.

Summary

The data suggest that (i) acoustic signals perceived as significantly softer than a CI signal can contribute to speech understanding in the bimodal condition, (ii) acoustic signals that are slightly softer than, or balanced with, a CI signal provide the largest benefit to speech understanding and (iii) acoustic signals presented at MCL provide nearly as much benefit as signals that have been balanced with a CI signal. Studies involving a larger number of patients and other kinds of test materials are necessary to assess the generality of these results.

Acknowledgments

This work was supported by a subcontract to the first author from NIH grant R01 DC010494 to Philip Loizou for research on bimodal stimulation using a PDA-based signal processor. Professor Loizou passed away on July 22, 2012.

References

- Ali H, Lobo A, Loizou P. A PDA Platform for Offline Processing and Streaming of Stimuli for Cochlear Implant Research. Conf Proc IEEE Eng Med Biol Soc. 2011:1045–8. doi: 10.1109/IEMBS.2011.6090243.

- Ali H, Lobo A, Loizou P. Design and evaluation of a personal digital assistant-based research platform for cochlear implants. IEEE Trans Biomed Eng. 2013;60(11):3060–3073. doi: 10.1109/TBME.2013.2262712.

- Armstrong M, Pegg P, James C, Blamey P. Speech perception in noise with implant and hearing aid. Am J Otol. 1997;18:S140–S141.

- Blamey P, Dooley G, James C, Parisi E. Monaural and binaural loudness measures in cochlear implant users with contralateral residual hearing. Ear Hear. 2000;21(1):6–17. doi: 10.1097/00003446-200002000-00004.

- Byrne D, Dillon H. The National Acoustic Laboratories' (NAL) new procedure for selecting the gain and frequency response of a hearing aid. Ear Hear. 1986;7:257–265. doi: 10.1097/00003446-198608000-00007.

- Byrne D, Parkinson A, Newall P. Hearing aid gain and frequency response requirements for the severely/profoundly hearing impaired. Ear Hear. 1990;11:40–49. doi: 10.1097/00003446-199002000-00009.

- Ching T, Incerti P, Hill M. Binaural benefits for adults who use hearing aids and cochlear implants in opposite ears. Ear Hear. 2004;25:9–21. doi: 10.1097/01.AUD.0000111261.84611.C8.

- Dorman M, Gifford R. Combining acoustic and electric stimulation in the service of speech recognition. I J Audiology. 2010 Dec;49(12):912–919. doi: 10.3109/14992027.2010.509113.

- James C, Blamey P, Shallop JK, Incerti PV, Nicholas AM. Contralateral masking in cochlear implant users with residual hearing in the non-implanted ear. Audio Neuro-otol. 2001;6:87–97. doi: 10.1159/000046814.

- Keilmann A, Bohnert A, Gosepath J, Mann W. Cochlear implant and hearing aid: A new approach to optimizing the fitting in this bimodal situation. Eur Arch Otorhinolaryngol. 2009;266:1879–1884. doi: 10.1007/s00405-009-0993-9.

- Kong Y, Stickney G, Zeng FG. Speech and melody recognition in binaurally combined acoustic and electric hearing. J Acoust Soc Am. 2005;117:1351–61. doi: 10.1121/1.1857526.

- Mok M, Grayden D, Dowell R, Lawrence D. Speech perception for adults who use hearing aids in conjunction with cochlear implants in opposite ears. J Speech Lang Hear Res. 2006;49:338–51. doi: 10.1044/1092-4388(2006/027).

- Moore B, Glasberg B, Stone M. New version of the TEN test with calibrations in dB HL. Ear Hear. 2004;25:478–487. doi: 10.1097/01.aud.0000145992.31135.89.

- Moore B, Huss M, Vickers D, et al. A test for the diagnosis of dead regions in the cochlea. Br J Audiol. 2000;34:205–224. doi: 10.3109/03005364000000131.

- Moore B. Dead regions in the cochlea: Diagnosis, perceptual consequences, and implications for the fitting of hearing aids. Trends Amplif. 2001;5:1–34. doi: 10.1177/108471380100500102.

- Tyler R, Parkinson A, Wilson B, Witt S, Preece J, Noble W. Patients utilizing a hearing aid and a cochlear implant: speech perception and localization. Ear Hear. 2002;23:98–105. doi: 10.1097/00003446-200204000-00003.

- Sęk A, Moore B. Implementation of a fast method for measuring psychophysical tuning curves. Int J Audiol. 2011;50(4):237–42. doi: 10.3109/14992027.2010.550636.

- Shallop J, Arndt P, Turnacliff K. Expanded indications for cochlear implantation: Perceptual results in seven adults with residual hearing. J Speech-Lang Path & Appl Behav Analy. 1992;16:141–148.

- Spahr A, Dorman M, Litvak L, van Wie S, Gifford R, Loiselle L, Oakes T, Cook S. Development and validation of the AzBio sentence lists. Ear Hear. 2012;33(1):112–117. doi: 10.1097/AUD.0b013e31822c2549.

- Van Hoesel RJM, Clark GM. Psychophysical studies with two binaural cochlear implant subjects. J Acoust Soc Am. 1997;102:495–507. doi: 10.1121/1.419611.