Abstract

The brain improves speech processing through the integration of audiovisual (AV) signals. Situations involving AV speech integration may be crudely dichotomized into those where auditory and visual inputs contain (1) equivalent, complementary signals (validating AV speech) or (2) inconsistent, different signals (conflicting AV speech). This simple framework may allow the systematic examination of broad commonalities and differences between AV neural processes engaged by various experimental paradigms frequently used to study AV speech integration. We conducted an activation likelihood estimation meta‐analysis of 22 functional imaging studies comprising 33 experiments, 311 subjects, and 347 foci examining “conflicting” versus “validating” AV speech. Experimental paradigms included content congruency, timing synchrony, and perceptual measures, such as the McGurk effect or synchrony judgments, across AV speech stimulus types (sublexical to sentence). Colocalization of conflicting AV speech experiments revealed consistency across at least two contrast types (e.g., synchrony and congruency) in a network of dorsal stream regions in the frontal, parietal, and temporal lobes. There was consistency across all contrast types (synchrony, congruency, and percept) in the bilateral posterior superior/middle temporal cortex. Although fewer studies were available, validating AV speech experiments were localized to other regions, such as ventral stream visual areas in the occipital and inferior temporal cortex. These results suggest that while equivalent, complementary AV speech signals may evoke activity in regions related to the corroboration of sensory input, conflicting AV speech signals recruit widespread dorsal stream areas likely involved in the resolution of conflicting sensory signals. Hum Brain Mapp 35:5587–5605, 2014. © 2014 Wiley Periodicals, Inc.

Keywords: cross‐modal, language, superior temporal sulcus, activation likelihood estimation, multisensory, auditory dorsal stream, inferior frontal gyrus, asynchronous, incongruent

INTRODUCTION

During speech processing, the brain enhances comprehension by incorporating both auditory and visual sensory signals, that is, through audiovisual (AV) integration. In most natural speech settings, auditory and visual inputs are equivalent in content and timing, so integrating these complementary cues can validate the sensory information. In other instances, auditory and visual inputs may carry inconsistent speech signals that conflict in content and/or timing, in which case neural processes must resolve the discrepancy for the speech to be understood. Common everyday examples include trying to hold a conversation in a noisy setting [Nath and Beauchamp, 2011; Sumby and Pollack, 1954] or watching a dubbed foreign‐language film or a poorly downloaded or synchronized video. Deficits and differences in AV speech integration are associated with several disorders, such as schizophrenia [Ross et al., 2007; Szycik et al., 2009a], Alzheimer's disease [Delbeuck et al., 2007], autism spectrum disorders [Irwin et al., 2011; Smith and Bennetto, 2007; Woynaroski et al., 2013], dyslexia [Blau et al., 2009, 2010; Pekkola et al., 2006], and other learning disabilities [Hayes et al., 2003], and have been found in some cases of focal brain injury [Baum et al., 2012; Hamilton et al., 2006]. Thus, understanding the normal processes and brain regions consistently related to AV speech processing may provide insight into the biological substrates underlying these disorders.

AV speech integration can be examined in detail by manipulating the content and timing of auditory and visual signals relative to each other. Such stimulus manipulations are commonly reported in the multisensory literature [Beauchamp, 2005; Hocking and Price, 2008]. Many functional neuroimaging studies, in several languages, have used different types of speech signals (e.g., sublexical units, words, and sentences), different manipulations of the AV sensory signals, and different measurements of the perceived signals (see Table 1 for example studies). Manipulations of stimulus characteristics have often included content congruency (e.g., presenting different auditory and visual signals) and timing synchrony (e.g., shifting the onset of the auditory signal relative to the visual signal). AV speech integration can also be assessed based on the perceived signal, which may differ from both the auditory and the visual signals presented, as in the McGurk effect [McGurk and MacDonald, 1976]. The McGurk effect occurs when an entirely new, merged speech percept (e.g., “da”), called the McGurk percept, arises from the resolution of conflicting auditory (e.g., “ba”) and visual (e.g., “ga”) cues. Other percepts, called non‐McGurk percepts, can typically be described as the perception of the speech sound (e.g., “ba”) or of the visual facial movements alone (e.g., “ga”), although other AV combinations have been reported [McGurk and MacDonald, 1976]. Similarly, judging whether AV sensory events are fused in time is another perceptual measure, examined by varying the onset timing of the auditory and visual stimuli [Lee and Noppeney, 2011; Miller and D'Esposito, 2005; Noesselt et al., 2012]. The fusion percept is the perception of only one sensory event in time and occurs during synchronous or near‐synchronous AV speech, whereas increasingly asynchronous AV speech can lead to the perception of two distinct sensory events, much as when viewing a poorly synchronized video in which the lips appear to move separately from the speech sounds.

Table 1.

Studies, contrasts, foci, and categorized comparisons included in the ALE analysis

| Study # | Reference | N | Subjects' language | AV stimulus | Task | Conflicting AV speech: contrast | Conflicting AV speech: # of foci | Conflicting AV speech: source | Validating AV speech: contrast | Validating AV speech: # of foci | Validating AV speech: source |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Balk et al. [2010] | 14 | Finnish | Vowels | Target Detection | Async > Sync | 1 | Author Email | Sync > Async | 6 | Author Email |
| 2 | Benoit et al. [2010] | 15 | English | McGurk Syllables | Congruency Discrimination | Incong > Cong | 40 | Table 2 | None | 0 | None |
| 3 | Bishop and Miller [2009] | 25 | English | VCV + babble | Speech Identification | None | 0 | None | Sync > Async | 22 | Table 1 |
| 4 | Fairhall and Macaluso [2009] | 12 | Italian | Story | Selective Attention Target Detection | None | 0 | None | Cong > Incong | 6 | Table 1 |
| 5 | Jones and Callan [2003] | 12 | English | McGurk VCV | Consonant Discrimination | Incong > Cong | 3 | Results text | Non‐McG > McG | 1 | Results text |
| 6 | Lee and Noppeney [2003] | 37 | German | Short sentences | a) Passive viewing/listening (fMRI); b) Synchrony judgments | a) Async > Sync; b) Non‐Fus > Fus | 22 | a) Table S1; b) Table 2 | None | 0 | None |
| 7ᵃ | Macaluso et al. [2010] | 8 | English | Nouns | Target Detection | None | 0 | None | Sync > Async | 8 | Table 1 |
| 8 | Miller and D'Esposito [2005] | 11 | English | VCV | Synchrony Judgments | a) Async > Sync; b) Non‐Fus > Fus | 15 | Table 1 | Fus > Non‐Fus | 2 | Table 1 |
| 9 | Murase et al. [2008] | 28 | Japanese | Vowels | Vowel Discrimination | Incong > Cong | 3 | Figure 4 (caption) | None | 0 | None |
| 10ᵇ | Nath et al. [2011] | 17 | English | McGurk Syllables | Target Detection | McG > Non‐McG | 3 | Table 2 | Non‐McG > McG | 7 | Table 2 |
| 11 | Noesselt et al. [2005] | 11 | German | Sentences | Synchrony Judgments | a) Async > Sync; b) Non‐Fus > Fus | 42 | a) Table 2; b) Tables 1, 3 | a) Sync > Async; b) Fus > Non‐Fus | 12 | a) Table 2; b) Tables 1, 3 |
| 12 | Ojanen et al. [2012] | 10 | Finnish | Vowels | Stimulus Change Detection | Incong > Cong | 4 | Table 1 | None | 0 | None |
| 13 | Olson et al. [2002] | 10 | English | McGurk Words | Passive viewing/listening; button press at end of block | McG > Non‐McG | 2 | Table 1 | None | 0 | None |
| 14ᶜ | Pekkola et al. [2010] | 10 | Finnish | Vowels | Stimulus Change Detection | Incong > Cong | 2 | Table 3 | None | 0 | None |
| 15 | Skipper et al. [2008] | 13 | English | McGurk Syllables | Passive viewing/listening | Incong > Cong | 30 | Table 3 | Cong > Incong | 57 | Table 4 |
| 16 | Stevenson et al. [2007] | 8 | English | Monosyllabic Nouns | Semantic Categorization | None | 0 | None | Sync > Async | 8 | Table 2 |
| 17 | Szycik et al. [2009] | 12 | German | Disyllabic Words | Target Detection | None | 0 | None | Cong > Incong | 1 | Table 2 |
| 18 | Szycik et al. [2008] | 8 | German | Disyllabic Nouns | Target Detection | Incong > Cong | 9 | Table 1 | None | 0 | None |
| 19ᵈ | Szycik et al. [2008] | 7 | German | McGurk Syllables | Syllable Discrimination | a) Incong > Cong; b) McG > Non‐McG | 23 | a) Table 2; b) Tables 2, 3 | None | 0 | None |
| 20 | van Atteveldt et al. [2012] | 13 | Dutch | Phonemesᵉ | Congruency Discrimination | Incong > Cong | 7 | Table 4 | None | 0 | None |
| 21 | van Atteveldt et al. [2009a] | 16 | Dutch | Phonemesᵉ | Target Detection | Incong > Cong | 4 | Table 1 | None | 0 | None |
| 22 | Wiersinga‐Post et al. [2010] | 14 | Dutch | McGurk VCV | Syllable Discrimination | None | 0 | None | Non‐McG > McG | 7 | Table 1 |
| Total | | 311 | | | | 20 experiments | 210 | | 13 experiments | 137 | |

“a” and “b” designate separate contrast types from the same study and distinct group of subjects. Note that the references for the studies included in the ALE analysis are provided as a Supporting Information section.

Async, asynchronous; Cong, congruent; Fus, fusion percept; Incong, incongruent; McG, McGurk percept; Non‐McG, non‐McGurk percept; Non‐Fus, non‐fusion percept; Sync, synchronous; VCV, vowel–consonant–vowel token.

ᵃ PET study.

ᵇ Subjects were children.

ᶜ Only foci from controls were included.

ᵈ While two of the included foci were from contrasts with n = 12, n = 7 was used for all foci for simplicity.

ᵉ Phoneme speech sounds were paired with visual text of letters (only two studies, #20 and #21).

The goal of the current study was to evaluate the neuroimaging literature on AV speech integration by examining the brain activity patterns associated with commonly used paradigms, including AV stimulus manipulations and percept measurements. Although many approaches have been used to assess AV speech integration, their results have not, taken as a whole, been systematically and quantitatively compared. Formal comparisons could reveal commonalities or differences in results that would suggest either common or discrete types of AV speech computations associated with different types of AV conflict. Because of the variety of specific experimental manipulations used within the common paradigms (i.e., content, timing, and percept reports), a simplified framework was needed to systematically examine the colocalization of activity across broadly similar studies.

Although the different manipulations and measurements used to examine AV speech in neuroimaging experiments certainly involve different specific processes in AV integration, in broad terms these experiments can be thought of as stressing, to varying degrees, two general and fundamental types of operations that are in direct opposition to each other: resolution of conflict between discrepant AV sensory signals versus validation of equivalent, complementary AV sensory signals. When the content or timing of the stimulus is equivalent (e.g., the sound “ba” is presented synchronously with the visual articulation of “ba”), neural processes related to sensory validation are stressed, since there is no conflict between the AV signals. By contrast, when the content or timing of the stimulus is inconsistent (e.g., the sound “hotel” is paired with the visual articulation of “island” [Szycik et al., 2012], or the sound “tree” is presented 240 ms before the visual articulation of “tree” [see Macaluso et al., 2004]), neural operations related to processing conflicting auditory and visual inputs are likely stressed more strongly than when the auditory and visual cues are congruent and synchronous.

In experiments examining the perceived AV signal, both conflict resolution and validation processes are potentially stressed, but the relative stress on each likely differs depending on the percept. Both the McGurk and non‐McGurk percepts occur during conflicting AV stimulation, but we suggest that, in general, the McGurk percept may serve as a behavioral outcome indicating more stress on neural systems responsible for AV conflict resolution, represented by the merging of disparate sensory inputs, and less stress on reinforcing one sensory signal or the other. Conversely, the non‐McGurk percept (relative to the McGurk percept) may indicate less stress on resolving the conflict between the sensory signals and more bias toward bolstering one sensory modality, typically resulting in the perception of either the speech sound or the facial movements. Similarly, within the conflicting versus validating framework, the fusion percept may reflect relatively more validation of sensory cues than conflict, whereas the non‐fusion percept of asynchronous sensory input may reflect relatively more conflict between auditory and visual input.

Whether AV speech integration is examined based on the sensory stimulus presented or the percept reported, the neural computations of commonly used contrasts across studies can be broadly considered within the conflicting versus validating framework. Admittedly, this meta‐analytic framework groups together several specific computation types present in the AV speech literature (synchrony, congruency, and percept). However, it may still provide an acceptable scheme for integrating findings, and it allows a critical evaluation of the degree of overlap versus difference among distinct contrast and AV stimulus types across a variety of experimental paradigms in the field. Despite the frequent use of these approaches, previous studies have mainly focused on specific contrasts and have not typically asked whether there may be more general processing demands inherent to AV speech integration, regardless of the specific stimulus or contrast type. Thus, using the proposed conflicting versus validating framework to categorize experiments for meta‐analysis is not only useful but also novel. The framework has the potential to inform hypotheses regarding the types of neural operations performed in these brain regions, influence existing models of speech processing [Hickok, 2012; Hickok and Poeppel, 2007; Rauschecker, 2011; Rauschecker and Scott, 2009; Skipper et al., 2007], and allow a broad‐view quantitative examination of the neural systems involved in AV speech integration, which, to the best of our knowledge, is lacking in the current literature.

Many brain regions are involved in processing AV speech signals, including areas within the auditory dorsal and ventral streams [Hickok and Poeppel, 2007; Rauschecker, 2011; Rauschecker and Scott, 2009; Rauschecker and Tian, 2000], such as the posterior superior temporal sulcus [STS; Beauchamp et al., 2004a, 2010; Calvert et al., 2000; Hein and Knight, 2008; Raij et al., 2000], the frontal motor areas [Skipper et al., 2005, 2007], and the inferior frontal gyrus [Ojanen et al., 2005; Sekiyama et al., 2003]. Even relatively early sensory areas have demonstrated multimodal speech processing [Bavelier and Neville, 2002; Calvert et al., 1997; Driver and Noesselt, 2008; Hackett and Schroeder, 2009; Pekkola et al., 2005; Sams et al., 1991]. However, the extent and limits of the types of AV computation occurring within these regions have not been fully examined. Thus, a systematic and quantitative evaluation of the common experimental paradigms within the AV speech literature is needed.

We first hypothesized that, across experiments, the conflicting versus validating framework would capture two general computational characteristics of AV speech integration, which should be reflected in consistent patterns of activity within each type of contrast and in different patterns for conflict versus validation. We further hypothesized that AV speech integration contrasts that stress conflict over validation would require the involvement of multisensory regions, such as the posterior STS [Beauchamp et al., 2004b, 2010; Man et al., 2012; Watson et al., 2014], and of a larger network of regions proposed to support speech‐related feedback/error processing, such as auditory dorsal stream areas [Hickok, 2012; Hickok and Poeppel, 2007; Rauschecker, 2011; Rauschecker and Scott, 2009], or domain‐general conflict resolution and response selection, such as the inferior frontal cortex [Novick et al., 2005, 2010]. In contrast, we hypothesized that experiments emphasizing validation of AV input over conflict would consistently recruit regions closer to sensory cortex, that is, nearer to A1 or V1 than the frontal and parietal regions hypothesized to process conflicting AV speech. This hypothesis was motivated by previous studies that have shown increased activity for congruent AV speech in auditory areas [Okada et al., 2013; van Atteveldt et al., 2004, 2007], and increased activity in visual areas for non‐native, second‐language processing of congruent AV speech [Barros‐Loscertales et al., 2013].

To test these hypotheses, we conducted an activation likelihood estimation [ALE; Turkeltaub et al., 2002] meta‐analysis of 22 functional imaging studies comprising 33 experiments, 311 subjects, and 347 activation foci. These experiments examined conflicting versus validating AV speech using paradigms of content congruency, timing synchrony, and perceptual measures, such as the McGurk effect and other perceptual fusions related to synchrony judgments, with AV speech stimuli ranging from sublexical units to sentences in various languages. Specifically, across experiments, we distinguished the brain areas more consistently active when sensory signals were discrepant (conflicting AV speech) from those more consistently active when sensory signals agreed (validating AV speech). We then examined the specific experiments driving the ALE activation patterns to determine the degree to which various manipulations of content, timing, and perception overlap in their processing and the degree to which they differ. Finally, we assessed the specificity of each ALE cluster for conflicting or validating AV speech based on the proximity of foci from validating experiments to conflicting AV speech ALE peaks, and vice versa.

MATERIALS AND METHODS

Literature Search

Studies published through September 2013 were identified through online searches of the PubMed (using EndNote software, http://endnote.com) and Google Scholar databases for functional magnetic resonance imaging (fMRI) and positron emission tomography (PET) studies, using the following keywords in various combinations: “speech,” “audiovisual,” “auditory,” “visual,” “integration,” “cross modal,” “crossmodal,” “McGurk,” and “multisensory.” The reference lists of the identified studies and of review articles were also checked for additional publications.

Inclusion Criteria

Studies were included with the following criteria: (1) conducted experiments using fMRI or PET imaging modalities; (2) subjects were normal, healthy participants; (3) stimuli consisted of AV speech, that is, speech sounds consisting of either sublexical parts of speech (e.g., phonemes, syllables, vowel–consonant–vowel [VCV] tokens, and so forth), or words, or sentences, paired with visual stimuli consisting of either video of a speaker or text (e.g., letters; only studies #20, #21); (4) contrasts could be classified to identify activity for conflicting AV stimuli, validating AV stimuli, or differences between them; (5) AV stimuli could be classified as conflicting or validating based on content (incongruent versus congruent) or timing (asynchronous versus synchronous); (6) perceptual measures that could be classified included the McGurk percept, or other perceptual fusions associated with judgments of AV synchrony (e.g., perception of one sensory event or two sensory events close in time); (7) results reported foci in a stereotactic/standard three‐dimensional (3‐D) coordinate system (Talairach or MNI) or foci coordinates were provided by the author (only one study, #1); and (8) experiments examined the whole brain, or used large slabs covering frontal, temporal, parietal, and occipital cortex (only studies #13, #19, #21), or included functional localizers that were not anatomically restricted to a specific brain region and allowed for the possibility of activity to be found across the whole brain. Among the included studies that reported handedness, all subjects were right‐handed with the exception of study #9, where two of the 28 subjects were left‐handed. Three studies (#6, #11, and #22) did not report handedness. All included experiments used univariate designs. All included studies are listed in Table 1 with study characteristics noted.

Exclusion Criteria

Single‐subject reports, experiments that assessed non‐native/second language processing, and experiments that appeared to report foci within anatomically restricted brain regions were excluded from the meta‐analysis. Studies that met all inclusion criteria, but did not report results in the form of 3‐D stereotactic coordinates (Talairach or MNI) were also excluded.

Experiment Classification

Based on the framework described in the Introduction, each experiment that met the inclusion criteria was broadly classified as contrasting conflict over validation, or validation over conflict, in AV signals. A study was defined as a distinct set of subjects. An experiment was defined as a distinct set of subjects tested on a specific AV contrast type; a single set of subjects could therefore contribute more than one experiment (e.g., study #11). AV contrast types were classified into eight categories, corresponding to four contrast pairs each taken in both directions: stimulus contrasts (i.e., incongruent versus congruent and asynchronous versus synchronous) and percept contrasts (i.e., McGurk versus non‐McGurk percept and non‐fusion versus fusion percept).

Focusing on stimulus contrast types, conflicting AV speech was defined as discordant AV speech stimuli, that is, auditory and visual speech signals that differed in content (incongruent) and/or were offset in timing (asynchronous). Conflicting AV speech experiments were therefore contrasts that assessed neural activity for incongruent > congruent or asynchronous > synchronous AV speech stimuli. In contrast, validating AV speech was defined as equivalent auditory and visual speech signals, that is, signals congruent in content and/or presented synchronously. Validating AV speech experiments were therefore contrasts that assessed neural activity when the auditory and visual speech stimuli matched compared to when they were inconsistent, that is, congruent > incongruent and synchronous > asynchronous.

For perceptual measures, the contrast McGurk > non‐McGurk percept was classified as conflicting AV speech, and non‐McGurk > McGurk percept as validating AV speech. The same classification was applied to correlations of activity with the number of McGurk responses: positive correlations were classified as McGurk > non‐McGurk percept, and negative correlations as non‐McGurk > McGurk percept. Although both McGurk percept contrasts involve some conflict inherent in the AV stimuli, which are designed to elicit the McGurk percept, we suggest that there may be more conflict processing when the McGurk percept is reported, leading to a merged resolution of the AV signals. In contrast, we suggest that the non‐McGurk percept may involve more processing related to the perception of a particular AV signal (typically either the sound or the visual input) and less conflict processing related to the integration of disparate AV signals. For timing synchrony paradigms, non‐fusion > fusion percept was classified as conflicting AV speech, and fusion > non‐fusion percept as validating AV speech. Here, the fusion percept was defined as the perception of one sensory event, and the non‐fusion percept as the perception of two sensory events in succession. In parallel with McGurk percept processing, we suggest that, regardless of the stimulus characteristics of the AV signal, the perception of one sensory event indicates relatively more validation than conflict processing related to the timing of the AV signal, whereas the perception of two sensory events in time may reflect more conflict between the AV signals. Importantly, because the perceptual contrasts (McGurk versus non‐McGurk percept and non‐fusion versus fusion percept) fit less clearly within the conflicting versus validating framework, supplementary ALE analyses were conducted excluding the percept contrasts.
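The classification rules above amount to a lookup from contrast label to category. The Python sketch below is illustrative only: the function names are hypothetical, the labels are ASCII versions of the Table 1 abbreviations, and the correlation rule assumes that the sign of a correlation with the number of McGurk responses is the only information used.

```python
# Illustrative lookup for the eight AV contrast types (ASCII versions of the
# Table 1 abbreviations). Names are hypothetical, not taken from the study.
CONFLICTING_TYPES = {
    "Incong > Cong",   # content conflict
    "Async > Sync",    # timing conflict
    "McG > Non-McG",   # percept: merged resolution of conflicting cues
    "Non-Fus > Fus",   # percept: two sensory events perceived in time
}
VALIDATING_TYPES = {
    "Cong > Incong",   # content agreement
    "Sync > Async",    # timing agreement
    "Non-McG > McG",   # percept: bias toward one modality
    "Fus > Non-Fus",   # percept: one sensory event perceived
}


def classify(contrast: str) -> str:
    """Return 'conflicting' or 'validating' for one of the eight contrast types."""
    if contrast in CONFLICTING_TYPES:
        return "conflicting"
    if contrast in VALIDATING_TYPES:
        return "validating"
    raise ValueError(f"unclassified contrast: {contrast!r}")


def classify_mcgurk_correlation(r: float) -> str:
    """Correlation of activity with the number of McGurk responses:
    positive -> McG > Non-McG, negative -> Non-McG > McG."""
    if r == 0:
        raise ValueError("a zero correlation has no direction")
    return classify("McG > Non-McG" if r > 0 else "Non-McG > McG")


print(classify("Sync > Async"))          # validating
print(classify_mcgurk_correlation(0.4))  # conflicting
```

Keeping the two sets disjoint makes the eight categories explicit: each of the four contrast pairs contributes one label to each side.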

ALE Methods

ALE is a quantitative meta‐analysis technique that assesses the colocalization of activity across neuroimaging (fMRI and PET) studies using the coordinates of activation foci reported in the literature [Turkeltaub et al., 2002, 2012]. In brief, ALE treats the reported location of each focus in standardized stereotaxic space (Talairach or MNI) as uncertain. For each experiment, with foci organized by distinct subject group, ALE creates a whole‐brain map of localization probabilities by modeling each focus as a 3‐D Gaussian probability distribution. These maps are then combined voxelwise across experiments to generate an overall ALE map. The voxelwise ALE value equals the probability that at least one study reported activity at that location [Turkeltaub et al., 2012]; the larger the ALE value, the higher the probability of activity being reported there. Significance is assessed with a random‐effects test against the null hypothesis that the locations of activity are independent across studies. Detailed methodological descriptions of the ALE equations and algorithms have been published elsewhere [Eickhoff et al., 2009, 2012; Turkeltaub et al., 2002, 2012].
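As a rough illustration of the computation just described, the Python sketch below builds a modeled activation (MA) map for each experiment and combines the maps as ALE(v) = 1 − ∏ᵢ (1 − MAᵢ(v)). It assumes a fixed, isotropic Gaussian width (sigma, in mm), whereas GingerALE derives the width from each experiment's sample size, and it follows the Turkeltaub et al. [2012] convention of taking the voxelwise maximum, rather than the sum, over one experiment's foci. The grid, foci, and function names are hypothetical; this is not the GingerALE implementation, and it omits the null distribution used for significance testing.

```python
import math
from itertools import product


def focus_probability(voxel, focus, sigma, voxel_vol):
    """Approximate probability that `focus` truly lies in `voxel`: the isotropic
    3-D Gaussian density at the voxel centre multiplied by the voxel volume (mm^3)."""
    d2 = sum((v - f) ** 2 for v, f in zip(voxel, focus))
    density = math.exp(-d2 / (2.0 * sigma ** 2)) / ((2.0 * math.pi) ** 1.5 * sigma ** 3)
    return density * voxel_vol


def ma_map(foci, grid, sigma, voxel_vol):
    """Modeled activation map for one experiment: voxelwise maximum over its foci,
    so nearby foci from the same subject group do not accumulate."""
    return {v: max(focus_probability(v, f, sigma, voxel_vol) for f in foci) for v in grid}


def ale_map(experiments, grid, sigma=4.0, voxel_vol=8.0):
    """ALE(v) = 1 - prod_i (1 - MA_i(v)): the probability that at least one
    experiment's modeled activation falls in voxel v."""
    none_active = {v: 1.0 for v in grid}  # running product of (1 - MA_i(v))
    for foci in experiments:
        ma = ma_map(foci, grid, sigma, voxel_vol)
        for v in grid:
            none_active[v] *= 1.0 - ma[v]
    return {v: 1.0 - p for v, p in none_active.items()}


# Toy 2-mm grid (MNI-like coordinates in mm) and two hypothetical experiments.
grid = list(product(range(-62, -46, 2), range(-52, -36, 2), range(0, 16, 2)))
experiments = [[(-54, -44, 8)], [(-52, -44, 8), (-56, -40, 10)]]
ale = ale_map(experiments, grid)
```

The union form means an ALE value grows with the number of experiments reporting nearby foci, while the per‐experiment maximum keeps one subject group from inflating the map on its own.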

We localized conflicting and validating AV speech in the brain with two separate ALE analyses, one for each set of experiments. Because every experiment included in this meta‐analysis contrasted two AV conditions that differed in their degree of conflict versus validation, each ALE analysis represents a contrast between conflict and validation processes in AV integration. ALE analyses were performed using GingerALE 2.1 (http://www.brainmap.org). Coordinates of foci reported in Talairach space were transformed to MNI space within GingerALE 2.1, using the tal2icbm transform [Laird et al., 2010; Lancaster et al., 2007], or the Brett tal2mni transform if the coordinates appeared to have been previously transformed with that method. GingerALE provides coordinate conversions between Talairach and MNI stereotactic space in both directions. Coordinates of foci were organized by subject group to eliminate false positives due to within‐group effects, as described in Turkeltaub et al. [2012]. To reduce false positives (type I errors), significant activation likelihood clusters had to meet the following criteria: (1) a false discovery rate (FDR) of q < 0.01, (2) contributions from at least two experiments, and (3) a cluster extent > 100 mm³. This cluster extent threshold is commonly used in the ALE literature and has previously been shown to provide good sensitivity while reducing false positives [Turkeltaub et al., 2012]. Experiments reporting foci within three standard deviations of the calculated localization uncertainty from a peak in the ALE map were considered contributors to that peak [see Turkeltaub et al., 2011].
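The voxelwise and cluster‐extent criteria can be sketched as follows, assuming voxelwise p values are already available (in GingerALE they are derived from the null distribution of ALE values, which is not reproduced here). The sketch uses the Benjamini–Hochberg procedure at q = 0.01 as a stand‐in for GingerALE's FDR option, groups surviving voxels by face (6‐)connectivity (an assumption; other neighborhood definitions are possible), and keeps clusters larger than 100 mm³ for a hypothetical 2 × 2 × 2 mm voxel. The two‐experiment criterion is not included, and all names are illustrative.

```python
from collections import deque


def bh_cutoff(pvals, q=0.01):
    """Benjamini-Hochberg FDR: the largest p_(k) with p_(k) <= (k / m) * q,
    or None if no voxel survives."""
    ps = sorted(pvals)
    m = len(ps)
    cutoff = None
    for k, p in enumerate(ps, start=1):
        if p <= k / m * q:
            cutoff = p
    return cutoff


def face_connected_clusters(voxels):
    """Group voxels (integer grid indices) into clusters sharing a face (6-connectivity)."""
    remaining = set(voxels)
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    clusters = []
    while remaining:
        seed = remaining.pop()
        cluster, queue = [seed], deque([seed])
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in steps:
                nb = (x + dx, y + dy, z + dz)
                if nb in remaining:
                    remaining.remove(nb)
                    cluster.append(nb)
                    queue.append(nb)
        clusters.append(cluster)
    return clusters


def significant_clusters(pmap, q=0.01, voxel_vol=8.0, min_mm3=100.0):
    """Voxelwise FDR threshold, then keep clusters whose volume exceeds `min_mm3`."""
    cutoff = bh_cutoff(pmap.values(), q)
    if cutoff is None:
        return []
    supra = [v for v, p in pmap.items() if p <= cutoff]
    return [c for c in face_connected_clusters(supra) if len(c) * voxel_vol > min_mm3]


# Hypothetical p map: a 13-voxel run (104 mm^3), a 12-voxel run (96 mm^3),
# and 975 null voxels; only the first run survives the extent threshold.
pmap = {(x, 0, 0): 1e-6 for x in range(13)}
pmap.update({(x, 10, 0): 1e-6 for x in range(12)})
pmap.update({(100 + i, 0, 0): 0.5 for i in range(975)})
kept = significant_clusters(pmap)
print(len(kept), len(kept[0]))  # 1 13
```

The example shows why the extent threshold matters: both runs pass the voxelwise FDR step, but only the larger one exceeds 100 mm³.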

To confirm the specificity of these clusters for conflicting or validating AV speech, we examined whether experiments in the opposite category (validating or conflicting AV speech, respectively) also reported foci within three standard deviations of the calculated localization uncertainty from each ALE peak. Validating AV speech experiments reporting foci within three standard deviations of the calculated localization uncertainty from a conflicting AV speech ALE peak were reported as “Nearby Validating Experiments”; conversely, conflicting AV speech experiments with foci within three standard deviations of a validating AV speech ALE peak were reported as “Nearby Conflicting Experiments.”
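Both the contributor criterion and this specificity check reduce to a distance test against an ALE peak. A minimal Python sketch, assuming a single localization uncertainty sigma (in mm) shared by all experiments (in GingerALE it varies with sample size) and using made‐up foci and experiment labels: the same hypothetical function finds contributors when applied to experiments of the peak's own category and nearby experiments when applied to the opposite category.

```python
import math


def experiments_near_peak(peak, experiments, sigma):
    """IDs of experiments with at least one focus within three standard deviations
    (3 * sigma, in mm) of `peak`, in the order the experiments were given.

    `experiments` maps an experiment ID to a list of (x, y, z) foci in mm.
    """
    radius = 3.0 * sigma
    return [eid for eid, foci in experiments.items()
            if any(math.dist(focus, peak) <= radius for focus in foci)]


# Made-up foci around a conflicting AV speech peak at (-54, -44, 8);
# with sigma = 4 mm the search radius is 12 mm.
peak = (-54, -44, 8)
conflicting = {"A": [(-56, -46, 10)], "B": [(-50, -40, 6)], "C": [(-44, 16, 28)]}
validating = {"D": [(-50, -40, 10)], "E": [(-30, 20, 0)]}

contributors = experiments_near_peak(peak, conflicting, sigma=4.0)      # ['A', 'B']
nearby_validating = experiments_near_peak(peak, validating, sigma=4.0)  # ['D']
```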

Two supplementary ALE analyses were also conducted, excluding the percept contrasts from (1) the conflicting and (2) the validating AV speech experiments. The supplementary findings are reported with an FDR q < 0.01 and a cluster extent threshold > 100 mm³.
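The experiment selection for these supplementary analyses can be reproduced from Table 1. The Python sketch below transcribes each experiment's ID and contrast type using ASCII labels; IDs for studies with two contrasts follow the a/b suffixes used in Table 2, while the label "8" for the single validating contrast of study #8 is our own. Dropping the percept contrasts leaves 14 conflicting and 8 validating experiments. Variable and function names are illustrative.

```python
from collections import Counter

# (experiment ID, contrast type) pairs transcribed from Table 1.
CONFLICTING = [
    ("1", "Async > Sync"), ("2", "Incong > Cong"), ("5", "Incong > Cong"),
    ("6a", "Async > Sync"), ("6b", "Non-Fus > Fus"), ("8a", "Async > Sync"),
    ("8b", "Non-Fus > Fus"), ("9", "Incong > Cong"), ("10", "McG > Non-McG"),
    ("11a", "Async > Sync"), ("11b", "Non-Fus > Fus"), ("12", "Incong > Cong"),
    ("13", "McG > Non-McG"), ("14", "Incong > Cong"), ("15", "Incong > Cong"),
    ("18", "Incong > Cong"), ("19a", "Incong > Cong"), ("19b", "McG > Non-McG"),
    ("20", "Incong > Cong"), ("21", "Incong > Cong"),
]
VALIDATING = [
    ("1", "Sync > Async"), ("3", "Sync > Async"), ("4", "Cong > Incong"),
    ("5", "Non-McG > McG"), ("7", "Sync > Async"), ("8", "Fus > Non-Fus"),
    ("10", "Non-McG > McG"), ("11a", "Sync > Async"), ("11b", "Fus > Non-Fus"),
    ("15", "Cong > Incong"), ("16", "Sync > Async"), ("17", "Cong > Incong"),
    ("22", "Non-McG > McG"),
]

# The four percept contrast types (McGurk and fusion judgments, both directions).
PERCEPT = {"McG > Non-McG", "Non-McG > McG", "Non-Fus > Fus", "Fus > Non-Fus"}


def stimulus_only(experiments):
    """Drop percept contrasts, keeping content (congruency) and timing (synchrony) contrasts."""
    return [(eid, contrast) for eid, contrast in experiments if contrast not in PERCEPT]


conflicting_counts = Counter(contrast for _, contrast in CONFLICTING)
print(len(CONFLICTING), len(VALIDATING))                                # 20 13
print(len(stimulus_only(CONFLICTING)), len(stimulus_only(VALIDATING)))  # 14 8
```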

All cluster anatomical locations were verified through a combination of the automated anatomical labeling atlas and the Colin27 brain anatomy in MRIcron (http://www.mccauslandcenter.sc.edu/mricro/index.html). Results are displayed on surface renderings and slices of the Colin27 brain using MRIcron.

RESULTS

We classified 33 fMRI and PET experiments that met our inclusion criteria. These experiments derived from 22 imaging studies comprising a total of 311 subjects and 347 foci (Table 1). The fMRI/PET experimental designs included block, event‐related, and adaptation designs. Of the 33 experiments, 21 used sublexical (e.g., phonemes, vowels, syllables, and so forth), five used word‐level, and seven used sentence‐level AV speech stimuli. These studies used active and passive tasks that assessed conflicting versus validating AV speech by manipulating sensory stimulus characteristics, either content congruency (incongruent versus congruent) or timing synchrony (asynchronous versus synchronous), or through perceptual measures (e.g., the McGurk percept or judgments of the AV fusion percept of sensory events in time). Detailed study characteristics are listed in Table 1.

Localization of Conflicting AV Speech

The ALE analysis of conflicting AV speech included 210 foci from 20 experiments: ten incongruent > congruent contrasts, four asynchronous > synchronous contrasts, three McGurk > non‐McGurk percept contrasts, and three non‐fusion > fusion percept contrasts. The ALE analysis resulted in nine clusters of significant activation likelihood in areas of the frontal, temporal, and parietal lobes (Table 2; Fig. 1). These ALE findings were consistent whether or not the percept contrasts were included (Supporting Information Fig. 1). All 17 conflicting AV speech ALE peaks received contributions from both content and timing conflicts: 15 from incongruent > congruent and asynchronous > synchronous contrasts, and two from incongruent > congruent and non‐fusion > fusion percept contrasts.

Table 2.

Conflicting AV speech ALE analysis results

| Brain region | Volume (mm³) | ALE value | MNI x | MNI y | MNI z | Conflicting contributing experiments | Stimulus contrast: Async > Sync | Stimulus contrast: Incong > Cong | Percept contrast: Non‐Fus > Fus | Percept contrast: McG > Non‐McG | AV stimulus: Sublexical | AV stimulus: Word | AV stimulus: Sentence | Nearby validating experiments |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Left STG/STS/MTG | 2,008 | 0.0256 | −54 | −44 | 8 | 6a, 9, 10, 11a, 11b, 18, 19b | 2 | 2 | 1 | 2 | 3 | 1 | 3 | 11b |
| | | 0.0176 | −52 | −54 | 8 | 6a, 9, 10, 11a, 11b, 18 | 2 | 2 | 1 | 1 | 2 | 1 | 3 | 11b, 15 |
| | | 0.0113 | −56 | −34 | 14 | 11a, 11b, 19a, 19b, 21 | 1 | 2 | 1 | 1 | 3 | | 2 | None |
| Left STG/STS/MTG | 1,424 | 0.0153 | −62 | −26 | 2 | 1, 2, 6a, 9, 11a, 18, 19a, 19b | 3 | 4 | | 1 | 5 | 1 | 2 | 4, 11b |
| | | 0.0144 | −64 | −34 | −4 | 2, 6a, 11b, 18, 19a, 19b | 1 | 3 | 1 | 1 | 3 | 1 | 2 | 4, 11b |
| Left IFG/MFG | 1,104 | 0.0169 | −44 | 16 | 28 | 2, 11a, 11b, 12, 14, 20 | 1 | 4 | 1 | | 4 | | 2 | None |
| | | 0.0141 | −42 | 10 | 36 | 2, 11b, 14, 15, 20 | | 4 | 1 | | 4 | | 1 | None |
| | | 0.0140 | −46 | 22 | 24 | 11a, 11b, 12, 14, 18, 20 | 1 | 4 | 1 | | 3 | 1 | 2 | None |
| Right STG/STS/MTG | 960 | 0.0160 | 54 | −40 | 6 | 2, 6a, 8b, 9, 11a, 19a | 2 | 3 | 1 | | 4 | | 2 | 11a, 11b, 16 |
| | | 0.0142 | 58 | −28 | 2 | 2, 6a, 9, 19a, 19b | 1 | 3 | | 1 | 4 | | 1 | 11b |
| Right IFG | 656 | 0.0143 | 48 | 20 | 22 | 11a, 11b, 18, 19a, 20 | 1 | 3 | 1 | | 2 | 1 | 2 | None |
| | | 0.0130 | 46 | 12 | 24 | 2, 8a, 11a, 18, 19a, 20 | 2 | 4 | | | 4 | 1 | 1 | None |
| SMA | 632 | 0.0155 | 0 | 24 | 48 | 2, 8a, 14, 18, 20 | 1 | 4 | | | 4 | 1 | | None |
| | | 0.0139 | 10 | 18 | 46 | 2, 8a, 14, 20 | 1 | 3 | | | 4 | | | None |
| Right Precentral Gyrus | 240 | 0.0145 | 40 | 8 | 46 | 2, 11a, 11b, 20 | 1 | 2 | 1 | | 2 | | 2 | None |
| Left IPL | 192 | 0.0135 | −44 | −56 | 52 | 5, 8a, 15, 18 | 1 | 3 | | | 3 | 1 | | None |
| Left IFG | 160 | 0.0130 | −30 | 26 | −10 | 2, 11b, 18 | | 2 | 1 | | 1 | 1 | 1 | None |

Peak MNI coordinates are reported for clusters significant at FDR q < 0.01 with a cluster size > 100 mm³. Contributing experiments are those that reported foci within three standard deviations of the calculated localization uncertainty from a peak (see Materials and Methods section for details). We also determined whether any foci from validating AV speech experiments fell within three standard deviations of the calculated localization uncertainty of each conflicting AV speech ALE peak (Nearby validating experiments). Study numbers and abbreviations match those designated in Table 1.

IFG, inferior frontal gyrus; IPL, inferior parietal lobule; MFG, middle frontal gyrus; MTG, middle temporal gyrus; SMA, supplementary motor area; STG, superior temporal gyrus; STS, superior temporal sulcus.

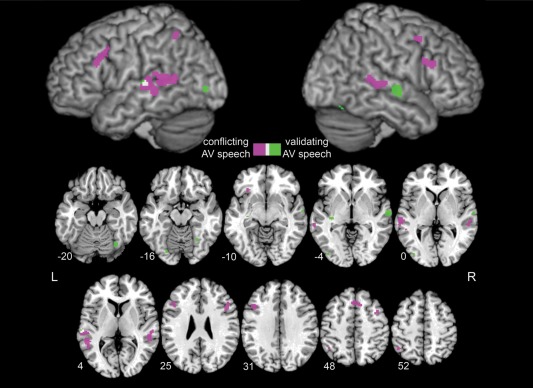

Figure 1.

Significant ALE clusters for conflicting and validating AV speech. Conflicting AV speech recruited primarily dorsal stream regions, such as bilateral posterior STG/STS/MTG, bilateral dorsal IFG, left IPL, and SMA, shown in purple (Table 2). In contrast, validating AV speech generally localized to ventral stream visual areas in the occipital and inferior temporal cortex, such as bilateral FFG and left inferior occipital lobe, as well as to other regions, such as bilateral mid‐STG, shown in green (Table 3). Overlap between conflicting and validating AV speech is shown in white. One left STG validating cluster was not significant when percept contrasts were excluded (Supporting Information Fig. 1). Results are displayed on the Colin27 brain with surface renderings of the left and right hemispheres and are significant at FDR q < 0.01 with a cluster size > 100 mm³. Axial slices are presented in neurological convention with the corresponding MNI z coordinate. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]
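The threshold used for the ALE maps (FDR q < 0.01, cluster size > 100 mm³) combines a voxel‐wise false discovery rate cutoff with a minimum cluster volume. The Python sketch below illustrates that two‐step logic under stated assumptions: it starts from a precomputed voxel‐wise p‐value map (the ALE null‐distribution step is not shown), applies the Benjamini‐Hochberg procedure as the FDR method, assumes 2 × 2 × 2 mm voxels (8 mm³ each), and defines clusters by face (6‐neighbor) connectivity. These choices and the function names (`bh_threshold`, `label_clusters`, `threshold_map`) are illustrative assumptions, not the exact settings of the ALE software used in the study.

```python
from collections import deque

import numpy as np


def bh_threshold(p_values, q=0.01):
    """Benjamini-Hochberg cutoff: the largest p-value p_(k) with p_(k) <= q * k / m.

    Returns None when no voxel survives.
    """
    p = np.sort(np.asarray(p_values, dtype=float).ravel())
    m = p.size
    passes = p <= q * np.arange(1, m + 1) / m
    if not passes.any():
        return None
    return p[np.nonzero(passes)[0].max()]


def label_clusters(mask):
    """Label face-connected (6-neighbor) clusters of True voxels in a 3D boolean array."""
    labels = np.zeros(mask.shape, dtype=int)
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    n_clusters = 0
    for seed in zip(*np.nonzero(mask)):
        if labels[seed]:
            continue  # voxel already belongs to a labeled cluster
        n_clusters += 1
        labels[seed] = n_clusters
        queue = deque([seed])
        while queue:  # breadth-first flood fill from the seed voxel
            x, y, z = queue.popleft()
            for dx, dy, dz in offsets:
                nb = (x + dx, y + dy, z + dz)
                inside = all(0 <= nb[i] < mask.shape[i] for i in range(3))
                if inside and mask[nb] and not labels[nb]:
                    labels[nb] = n_clusters
                    queue.append(nb)
    return labels, n_clusters


def threshold_map(p_map, q=0.01, min_volume_mm3=100.0, voxel_volume_mm3=8.0):
    """Keep voxels that pass BH-FDR at level q AND lie in clusters larger than min_volume_mm3."""
    cutoff = bh_threshold(p_map, q)
    keep = np.zeros(p_map.shape, dtype=bool)
    if cutoff is None:
        return keep
    labels, n_clusters = label_clusters(p_map <= cutoff)
    for k in range(1, n_clusters + 1):
        cluster = labels == k
        if cluster.sum() * voxel_volume_mm3 > min_volume_mm3:  # strict ">" as in the legend
            keep |= cluster
    return keep
```

Under the assumed 8 mm³ voxels, the "> 100 mm³" criterion retains only clusters of at least 13 contiguous suprathreshold voxels (13 × 8 = 104 mm³).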

Two large clusters were identified in the left posterior superior/middle temporal cortex, spanning the superior temporal gyrus (STG) through the STS to the middle temporal gyrus (MTG), with peak ALE values of 0.0256 and 0.0153 at y coordinates of −44 and −26, respectively. These clusters were derived from all contrast types (stimulus and percept contrasts; Table 2) and from a wide range of AV speech stimulus types, from sublexical to sentence. In total, nine different experiments contributed to the larger left posterior STG/STS/MTG cluster (2,008 mm³). Of its three peaks, the highest ALE peak was derived from seven experiments: two each of the incongruent > congruent, asynchronous > synchronous, and McGurk > non‐McGurk percept contrast types, and one non‐fusion > fusion percept contrast. Two of the three McGurk > non‐McGurk percept experiments included in the meta‐analysis reported foci at this peak. Nine experiments also contributed to the smaller left posterior STG/STS/MTG cluster (1,424 mm³), most of them incongruent > congruent (four experiments) or asynchronous > synchronous (three experiments) contrasts. Three of the four asynchronous > synchronous contrasts in the conflicting AV speech ALE localized to this cluster (#1, #6a, #11a). Like the left posterior STG/STS/MTG clusters, the right posterior STG/STS/MTG cluster was derived from all contrast types, most frequently incongruent > congruent (three of seven experiments) and asynchronous > synchronous (two of seven experiments), using both sublexical and sentence AV stimulus types.

Outside the temporal lobe, the supplementary motor area (SMA) cluster was derived mainly from incongruent > congruent experiments (four of five contributing experiments), which mostly used sublexical AV speech stimuli; only one experiment used a word‐level stimulus (disyllabic nouns). Bilateral dorsal inferior frontal gyrus (IFG) clusters and one smaller ventromedial left IFG cluster were also identified. The left dorsal IFG cluster (1,104 mm³) was most frequently derived from incongruent > congruent experiments (six of eight contributing experiments), all of which used sublexical AV speech stimuli except one that used disyllabic nouns. One study (#11) contributed foci to this cluster from both asynchronous > synchronous and non‐fusion > fusion percept contrasts using sentence stimuli. The right dorsal IFG cluster showed a similar pattern of contributing experiments and AV stimulus types, with four of seven contributing experiments classified as incongruent > congruent. Finally, the left inferior parietal lobule (IPL) cluster was derived from four experiments, three classified as incongruent > congruent and one as asynchronous > synchronous.

Localization of Validating AV Speech

A smaller ALE analysis of validating AV speech included 137 foci from 13 experiments: three congruent > incongruent contrasts, five synchronous > asynchronous contrasts, three non‐McGurk > McGurk percept contrasts, and two fusion > non‐fusion percept contrasts. The analysis revealed six clusters of significant activation likelihood (Table 3; Fig. 1) in sensory areas, including bilateral fusiform gyrus (FFG), left inferior occipital lobe, and bilateral middle superior temporal gyrus (mid‐STG). These findings remained largely the same whether or not percept contrasts were included, with some exceptions described below (Supporting Information Fig. 1).

Table 3.

Validating AV speech ALE analysis results

| Validating contributing experiments | Contrast Type | AV Stimulus Type | Nearby conflicting experiments | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Brain region | Volume (mm³) | ALE value | MNI | Stimulus contrast | Percept contrast | Sublexical | Word | Sentence | ||||||

| x | y | z | Sync > Async | Cong > Incong | Fus > Non‐Fus | Non‐McG > McG | ||||||||

| Right STG | 656 | 0.0176 | 64 | −14 | −4 | 4, 11b, 17 | 2 | 1 | 1 | 2 | 11a, 21 | |||

| Right FFG | 344 | 0.0117 | 34 | −68 | −20 | 7, 10, 16, 22 | 2 | 2 | 2 | 2 | None | |||

| 0.0107 | 28 | −60 | −18 | 1, 7, 10, 16 | 3 | 1 | 2 | 2 | None | |||||

| Left STG/inferior white matter | 192 | 0.0124 | −34 | −22 | −6 | 3, 4, 10 | 1 | 1 | 1 | 2 | 1 | 13 | ||

| Left STG | 168 | 0.0127 | −62 | −26 | 4 | 4, 11b | 1 | 1 | 2 | 1, 2, 6a, 9, 11a, 18, 19a, 19b | ||||

| Left FFG | 152 | 0.0111 | −24 | −80 | −16 | 1, 10, 15, 22 | 1 | 1 | 2 | 4 | None | |||

| Left inferior occipital lobe | 128 | 0.0113 | −40 | −84 | −2 | 3, 7, 15 | 2 | 1 | 2 | 1 | 2 | |||

We determined whether any foci from conflicting AV speech experiments were within three standard deviations of the calculated localization uncertainty for each validating AV speech ALE peak (Nearby Conflicting Experiments). All other details match those listed in the Table 2 legend.

FFG, fusiform gyrus.

Validating AV speech brain areas were identified in bilateral posterior FFG. The right posterior FFG cluster was derived from activity reported in five experiments using sublexical and word‐level AV speech stimuli and two contrast types: synchronous > asynchronous and non‐McGurk > McGurk percept. Two of the three non‐McGurk > McGurk percept experiments in the validating AV speech dataset reported activity here. The left posterior FFG cluster was derived from similar contrast types, including two of the three non‐McGurk > McGurk percept contrasts in the validating AV speech analysis, as well as congruent > incongruent contrasts, all using sublexical AV speech stimuli.

The largest cluster (656 mm³) was located in the right mid‐STG (ALE value 0.0176) and was derived from three experiments comprising congruent > incongruent and fusion > non‐fusion percept contrasts, using word and sentence AV speech stimulus types. Similarly, a smaller cluster was found in the left mid‐STG (ALE value 0.0127), derived from two experiments, also comprising congruent > incongruent and fusion > non‐fusion percept contrasts, that used sentence AV speech stimuli. This cluster overlapped with the anterior edge of the left STG cluster in the conflicting AV speech ALE map (Fig. 1) and was no longer significant when percept contrasts were excluded (Supporting Information Fig. 1). One other, more medial cluster was found in the left mid‐STG region, extending deep into inferior white matter; it was derived from three experiments using three different contrast types (congruent > incongruent, synchronous > asynchronous, and non‐McGurk > McGurk percept) and both sublexical and sentence AV stimulus types.

Specificity for AV Speech Conflict and Validation

The ALE analyses above revealed largely nonoverlapping networks for conflicting and validating AV speech processing. The only overlap was in the left mid‐STG, where a small cluster in the validating AV speech ALE map overlapped with the anterior edge of a cluster in the conflicting AV speech ALE map. Despite these apparent differences, validating AV speech experiments may have reported activity in the areas identified as involved in AV conflict that failed to reach significance because the validating AV speech ALE analysis had lower power. More generally, threshold effects in ALE analyses can create a false impression of specificity. One way to address this issue is an ALE subtraction analysis that directly compares the two datasets. However, the current datasets are underpowered for a direct ALE subtraction, and even a subtraction might not give a full picture of the degree of specificity across brain areas. Therefore, to assess the specificity of the ALE results for conflicting and validating AV speech, we examined each ALE peak in both maps and identified “nearby experiments” from the other dataset. “Nearby” was defined by the same criterion used to determine whether experiments in each dataset contributed to their own ALE maps (Materials and Methods). In other words, we asked, “If this validating AV experiment had been included in the conflicting AV dataset, would it have contributed to this ALE result?” and, conversely, “If this conflicting AV experiment had been included in the validating AV dataset, would it have contributed to this ALE result?”
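The “nearby experiments” criterion reduces to a distance test between each ALE peak and the foci of experiments in the other dataset; applied to a dataset's own experiments, the same test identifies the contributing experiments listed in Tables 2 and 3. The Python sketch below illustrates it under stated assumptions: distance is Euclidean in MNI space (mm), and each experiment's standard deviation of localization uncertainty (`sigma_mm`) has already been computed as described in Materials and Methods, which is not reproduced here. The `Experiment` class, the `nearby_experiments` function, the experiment labels, and their σ values and foci are hypothetical; only the peak coordinate is taken from Table 2.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Experiment:
    """Hypothetical container for one experiment in a meta-analytic dataset."""
    label: str                                  # study/experiment identifier
    sigma_mm: float                             # SD of modeled localization uncertainty (assumed precomputed)
    foci: list = field(default_factory=list)    # reported (x, y, z) MNI coordinates in mm


def nearby_experiments(peak, experiments, n_sd=3.0):
    """Labels of experiments with at least one focus within n_sd * sigma_mm of `peak`."""
    hits = []
    for exp in experiments:
        radius = n_sd * exp.sigma_mm
        if any(math.dist(peak, focus) <= radius for focus in exp.foci):
            hits.append(exp.label)
    return hits


# Usage with made-up experiments; the peak is the left posterior STG/STS/MTG peak from Table 2.
peak = (-54, -44, 8)
candidates = [
    Experiment("A", sigma_mm=4.0, foci=[(-54, -34, 8)]),  # 10 mm from peak, radius 12 mm
    Experiment("B", sigma_mm=4.0, foci=[(-54, -24, 8)]),  # 20 mm from peak, radius 12 mm
]
print(nearby_experiments(peak, candidates))  # ['A']
```

Because the search radius scales with each experiment's own σ under this assumption, the same peak can have a different effective radius for each candidate experiment.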

For the conflicting AV speech map, this specificity analysis showed that all clusters outside the temporal lobes were specific to AV conflict; that is, no validating AV speech experiments reported activity near any of the conflicting AV speech ALE peaks in the parietal or frontal lobes (Table 2). In the mid‐posterior superior temporal lobe of both hemispheres, a few validating AV speech experiments reported foci near most of the conflicting AV speech ALE peaks (range, 0–3 nearby validating experiments per peak). One validating AV speech experiment using a fusion > non‐fusion percept contrast (#11b) accounted for much of this overlap. Notably, substantially more conflicting than validating AV speech experiments reported foci in these temporal lobe regions (Table 2).

Paralleling the findings for the conflicting AV speech map, the validating AV speech map showed no specificity for validating AV speech in the mid‐STG ALE clusters. Eight nearby conflicting AV speech experiments were identified for one left mid‐STG ALE peak, one for the other, more medial left mid‐STG ALE peak, and two for the right mid‐STG ALE peak (Table 3). These findings suggest that the mid‐STG may not be involved in processes exclusive to conflicting or validating AV speech. The left inferior occipital lobe ALE cluster had one nearby conflicting AV speech experiment. In contrast, no foci from conflicting AV speech experiments were found near the left or right FFG clusters identified in the validating AV speech ALE analysis, suggesting that these areas may be engaged in processes specific to AV speech validation (Table 3).

Complementary Findings with the Removal of Percept Contrast Types

Although our results indicate that the conflicting versus validating dichotomy captures key processing differences, there are gray areas around the boundary between the categories. These gray areas relate particularly to the classification of percept contrasts, although only a small number of percept contrasts were included in each analysis. For example, some conflict‐related activity might be expected in a non‐McGurk > McGurk percept contrast, even though the experiment was classified as validating AV speech. In general, such misclassification should have diluted our findings by creating apparent overlap between processes related to AV conflict and validation. This was not the case: there was not sufficient overlap to warrant excluding percept studies altogether. Nevertheless, to address this potential shortcoming, we conducted an additional ALE analysis excluding percept contrasts (Supporting Information Fig. 1). Excluding percept contrasts did not substantially alter the main findings, suggesting that the overall patterns do not critically depend on relatively subjective decisions, such as the assignment of percept contrasts within the conflicting versus validating framework. As noted above, the main difference was that the left mid‐STG validating AV speech cluster, which overlapped with the anterior edge of a conflicting AV speech cluster, was not identified when percept contrasts were excluded. This result is unsurprising, since the cluster was derived from only two experiments, one of which was a percept contrast.

DISCUSSION

Using the ALE meta‐analysis technique, we identified distinct brain regions that were consistently more active during the resolution of discrepancies in sensory input (conflicting AV speech) or the reinforcement of complementary sensory input (validating AV speech) across a large number of neuroimaging studies in several languages. The conflicting versus validating framework allowed us to evaluate localization overlap among the different contrast and AV stimulus types represented in the AV literature. Overall, localization was consistent within each of these groups of experiments, more convincingly for conflicting AV speech, despite wide variation in experimental methods (e.g., task and design) and kinds of AV stimulation (e.g., manipulations of timing versus content, and sublexical versus sentence stimuli). These findings remained largely the same whether or not percept contrasts were included in the meta‐analysis (Supporting Information Fig. 1). In general, these results indicate a partial dichotomy between AV processes that resolve conflict between discrepant AV signals and those that validate equivalent AV signals, reflected in their reliance on distinct brain regions. Within these broad networks, specific types of speech signals or contrasts (e.g., synchrony versus congruency and conflict versus validation) were more likely to activate some regions than others. These differences may inform the specific roles of these regions in AV integration beyond the simple conflict versus validation dichotomy. These findings are relevant to current sensorimotor speech models [Hickok, 2012; Hickok and Poeppel, 2007; Rauschecker, 2011; Rauschecker and Scott, 2009; Skipper et al., 2007] and indicate that the auditory dorsal stream may be important for processing AV speech conflict.

Recruitment of Bilateral Posterior Temporal Areas for Processing of Conflicting AV Speech Integration

Every contrast type in this analysis, whether it examined conflicts in the content or timing of AV signals or used perceptual measures, and across AV speech stimulus types ranging from sublexical to sentence level, consistently activated the same regions of the bilateral posterior STG/STS/MTG, which generally lay lateral and posterior to the validating AV speech clusters. Although a few validating AV speech experiments reported foci near the posterior temporal clusters, most experiments reporting foci there used conflicting AV speech contrasts. These results provide preliminary evidence that the posterior STG/STS region may be involved in general AV sensory integration processes that are stressed by conflict between auditory and visual signals.

The conflict‐related activation likelihood in the posterior STS was left‐lateralized in terms of both peak ALE values and the total volume of significant ALE clusters. The left, and to a lesser degree the right, posterior STG/STS has been argued to store and provide access to phonological representations of speech [Hickok and Poeppel, 2007, but see DeWitt and Rauschecker, 2012] and is activated in auditory speech studies without visual input [Turkeltaub and Coslett, 2010]. It could therefore be argued that the posterior STS plays no role in AV integration per se; instead, conflicting AV signals might induce competing coactivation of multiple phonemic or lexical representations, stressing the left posterior STG/STS storage/access system and increasing activity in this area. However, the stimuli included here represent an array of speech signals, and recent meta‐analytic evidence suggests that auditory speech representations reside farther anterior, with sublexical units, words, and phrases arrayed hierarchically along a gradient from the mid to the anterior STG/STS [DeWitt and Rauschecker, 2012]. Moreover, competing coactivation of speech representations could theoretically decrease rather than increase activity in these storage/access areas, if the conflict results in mutual inhibition of the two competing representations.

If posterior temporal areas serve a different purpose in speech processing, it remains possible that conflicting AV signals strain more general speech‐related processes supported by the posterior STG/STS, such as phonological working memory [Leff et al., 2009], resulting in greater activity in this area for conflicting AV speech signals. Validating AV contrasts might engage these general processes as well, albeit to a lesser degree, which would account for the inconsistent posterior STG/STS activity for validating AV speech observed here. However, the posterior STS has also been implicated in AV integration of nonspeech signals [Beauchamp et al., 2004a, b; Man et al., 2012], making this account unlikely.

Rather, the consistency of activity in the bilateral posterior STS across all contrast types, particularly those that stress AV conflict, suggests that this region likely plays a direct role in comparing auditory and visual inputs, a role not specific to speech stimuli. This comparison may produce greater fMRI signal when the inputs are discrepant, possibly reflecting the recruitment of additional neural processes that respond to the differing auditory signal, visual signal, or both [see Hocking and Price, 2008]. This account is supported by previous work demonstrating connections between the STS and auditory/visual areas [Beer et al., 2011; Falchier et al., 2002; Rockland and Pandya, 1981] and showing that the STS has a “patchy” organization containing both AV and unisensory areas [Beauchamp et al., 2004a; Dahl et al., 2009]. Other neuroimaging studies provide further support. A multivariate pattern analysis of the posterior STS identified similar neural patterns for both the sound and the video of particular objects [Man et al., 2012]. One study of nonspeech AV stimuli showed changes in effective connectivity between the posterior STS and auditory/visual areas after AV synchrony discrimination training [Powers et al., 2012], suggesting that the STS may help to discriminate the timing of AV sensory events. The reliability of sensory signals may also shape STS connectivity: in one study, greater reliability of speech sounds relative to visual speech movements was associated with increased functional connectivity between the posterior STS and auditory cortex [Nath and Beauchamp, 2011], indicating that the posterior STS may evaluate which sensory input is more likely to be accurate. In another study, the left posterior STS was recruited when noise was added to conflicting AV speech stimuli [Sekiyama et al., 2003].
Finally, a transcranial magnetic stimulation (TMS) study [Beauchamp et al., 2010] and a case study of a patient with damage to the left STS [Baum et al., 2012] provide further evidence for bilateral STS involvement in AV conflict processing. Beauchamp et al. [2010] found that inhibitory TMS of the left posterior STS, applied within a specific time window, greatly reduced the number of “fused” McGurk percept reports. Baum et al. [2012] reported a patient with left STS damage and an intact right STS who could still perceive the McGurk effect and who showed greater right STS activity than healthy controls during presentation of McGurk stimuli. These findings [Baum et al., 2012; Beauchamp et al., 2010] suggest that the left and right STS may have complementary functions in processing AV conflicts. Overall, previous studies suggest that the neural computations performed by the STS are necessary for interpreting, and in some cases resolving, AV sensory inconsistencies.

The posterior STS was identified in the meta‐analysis through the overlap of mostly different conflicting AV speech contrast and stimulus types; however, a few validating AV speech experiments reported foci nearby. Thus, the posterior STS may be involved in more general sensory processes that are neither specific to conflict between AV signals nor restricted to multisensory AV inputs. The STS of both the left and right hemispheres may contribute to numerous types of computations [Hein and Knight, 2008], both unimodal and multimodal [Allison et al., 2000; Beauchamp et al., 2004a, b, 2008; Bidet‐Caulet et al., 2005; Calvert et al., 2000; Giese and Poggio, 2003; Grossman and Blake, 2002; Lahnakoski et al., 2012; Man et al., 2012; Noesselt et al., 2007; Peelen et al., 2010; Pelphrey et al., 2003, 2004; Raij et al., 2000; Redcay, 2008; Watson et al., 2014], suggesting that the STS may merge different kinds of sensory information and, possibly, allow the identification of general sensory discrepancies. Future experiments are needed to test whether these potential conflict detection/resolution processes are domain‐general and extend beyond speech, perhaps to sensorimotor actions [Rauschecker, 2011; Rauschecker and Scott, 2009]. It also remains possible that the posterior STS computes comparisons between conflicting stimuli and that the neuronal populations receiving different types of signals (synchrony versus congruency, or conflict versus validation) are intermingled or spatially segregated. Some studies have begun to distinguish discrete processing regions in the superior temporal cortex and STS [Beauchamp et al., 2004a; Noesselt et al., 2012; Stevenson et al., 2010, 2011; Stevenson and James, 2009; van Atteveldt et al., 2010].
However, the spatial resolution of the current ALE study was not high enough to reliably identify small differences in the localization of activity for different types of signal (e.g., synchrony versus congruency or conflict versus validation). Future studies using more precise parcellation methods, attending to the specific localization of unimodal and cross‐modal computations within individual subjects [Beauchamp et al., 2010; Nath and Beauchamp, 2012], are needed to further investigate the specific functions and organization of the STG/STS.

Dorsal Stream Structures Involved in Conflicting AV Speech Integration

In addition to the posterior STG/STS regions, conflicting AV speech consistently activated frontal and parietal regions within the dorsal “how/where” auditory stream [Hickok, 2012; Hickok and Poeppel, 2007; Rauschecker, 2011; Rauschecker and Scott, 2009; Rauschecker and Tian, 2000], including the left IPL, SMA, right precentral gyrus, and bilateral dorsal IFG. This network of dorsal stream regions identified outside of the temporal lobe may be specific to AV conflict, since no foci from validating AV speech experiments were identified near these conflicting AV speech ALE peaks.

The auditory/language dorsal stream may constitute a sensorimotor feedback system, whereas the ventral stream may process inputs related to object recognition and comprehension [Hickok and Poeppel, 2007; Rauschecker, 2011; Rauschecker and Scott, 2009]. A central sensorimotor mechanism of the dorsal stream includes an error detection process [Rauschecker, 2011; Rauschecker and Scott, 2009], which suggests that the dorsal stream may be well suited to contribute to conflict resolution. Conflict in the AV signal likely stresses these sensorimotor feedback systems, because the auditory and visual signals carry different information, and sensorimotor interactions in the dorsal stream may help to resolve the discrepancy [Hickok, 2012; Hickok and Poeppel, 2007; Rauschecker, 2011; Rauschecker and Scott, 2009]. For these reasons, dorsal stream regions may be linked to the interpretation of ambiguous or inconsistent sensory input. For example, one auditory dorsal stream model suggests that the IFG, premotor areas, IPL, and posterior superior temporal regions contribute to these sensorimotor feedback mechanisms, minimizing error and helping with the “disambiguation” of phonological input [Rauschecker, 2011; Rauschecker and Scott, 2009], a function likely to be especially important when sensory inputs disagree.

These dorsal stream areas may not be specific to speech processing but may extend to “doable” actions [Rauschecker, 2011; Rauschecker and Scott, 2009] and may be involved in comparisons between other classes of sensory stimuli. The left posterior STG and IPL have been recruited not only during comparisons of speech sounds [Turkeltaub and Coslett, 2010] but also during perceptual color discrimination tasks [Tan et al., 2008], and they have been implicated in stimulus change detection that is not exclusive to speech [Zevin et al., 2010]. The IPL has been associated with visual‐tactile integration [Pasalar et al., 2010] and with the detection of conflicting sensorimotor input, including increased activation when motor actions conflict with visual feedback related to “agency” perception [Farrer et al., 2003]. Similarly, beyond its classical role in speech output, the IFG has been implicated in processing visual “symbolic gestures” [Xu et al., 2009] and in conflict resolution for response selection among competing options [January et al., 2009; Novick et al., 2005, 2010]. A previous meta‐analysis of “interference resolution” in other types of conflict‐related tasks, such as the Stroop task, showed recruitment of some similar regions, for example, the IPL and IFG [Nee et al., 2007]. Within speech processing, the IFG and pre‐SMA have also been implicated in categorical processing of phonemes [Lee et al., 2012], as has the premotor cortex [Chevillet et al., 2013]. Notably, the experiments in this meta‐analysis that activated the IFG and SMA for AV conflict processing most frequently used sublexical AV stimuli, which might suggest that these areas become involved in resolving conflict in AV speech signals because of their role in discriminating between sublexical speech units.
Overall, this network of mostly dorsal stream regions [Hickok, 2012; Hickok and Poeppel, 2007; Rauschecker, 2011; Rauschecker and Scott, 2009] was colocalized across studies during the processing of conflicting AV speech and showed selectivity for conflicting AV signals. We suggest that these dorsal stream regions may be involved in detecting and resolving sensory discrepancies in multimodal processing and in selecting a single response among multiple viable options.

Different types of conflicting AV speech contrasts colocalized across the dorsal stream network, although certain brain areas showed a degree of selectivity. The activation likelihood in frontal and parietal areas was derived mainly from experiments comparing incongruent and congruent content, likely reflecting the large number of these experiments in this analysis (10 of 20). For example, the left IPL and SMA were activated predominantly by incongruent > congruent experiments (three experiments for the left IPL peak, and four and three experiments for the two SMA peaks), with only one asynchronous > synchronous experiment (#8a) identified for each ALE peak. The most likely explanation for the strong influence of incongruent > congruent contrasts on the conflicting AV speech ALE findings is that the greatest degree of conflict between auditory and visual signals occurs when their content conflicts, and this conflict drives activity in parietal and frontal dorsal stream areas. However, as noted above, other AV contrast types (asynchrony and percept) did identify activity in the bilateral STG/STS, and overall, 15 of the 17 conflicting AV speech ALE peaks were derived from both incongruent > congruent and asynchronous > synchronous contrast types. As discussed elsewhere, percept comparisons involve relatively subtle differences in AV conflict (e.g., McGurk versus non‐McGurk percept) that may be sufficient to activate posterior STG/STS regions specifically involved in AV integration but may evoke less robust activity in dorsal stream areas involved in domain‐general conflict processing and response selection.

Sensory Areas in Validating AV Speech Integration

Compared to the widespread network of brain regions recruited in processing conflicting AV speech, including frontal and parietal areas, brain areas involved in the processing of validating AV speech were localized to more proximal auditory and visual areas of the temporal and occipital cortex, including bilateral FFG, left inferior occipital lobe, and to a lesser degree bilateral mid‐STG. In general, while these activation likelihoods were derived from a small number of contributing experiments, they still preliminarily establish coherence among the literature and suggest that validating compared to conflicting AV sensory inputs may generate more activity in auditory and ventral stream visual areas of the temporal lobe, including the FFG. It is possible that consistent visual speech paired with auditory speech may create a more explicit, unambiguous signal in these areas. In other words, complementary, redundant speech information contributed by each sensory input may help to boost the most accurate signal and lead to reinforcement of the correct perception [see Ghazanfar and Schroeder, 2006]. General mechanisms of AV validation could include increased bottom‐up activity in neurons receiving the same speech information from separate sensory sources, or top‐down tuning in the form of inhibition of similar, yet incorrect signals [see other AV integration model in van Atteveldt et al., 2009 or multisensory models in Driver and Noesselt, 2008]. Others have proposed that many more sensory areas than previously assumed may have multimodal properties [Driver and Noesselt, 2008; Ghazanfar and Schroeder, 2006; Hackett and Schroeder, 2009], and previous studies have shown plasticity of sensory areas in blind or deaf individuals [Amedi et al., 2003, 2007; Bavelier and Neville, 2002; Bedny et al., 2011; Finney et al., 2001; Rauschecker, 1995; Renier et al., 2010; Striem‐Amit and Amedi, 2014; Striem‐Amit et al., 2012; Weeks et al., 2000]. 
A recent study of non‐native, second‐language processing reported recruitment of bilateral occipital cortex for congruent versus incongruent AV sentences [Barros‐Loscertales et al., 2013]. Other studies have shown FFG activation in voice/speaker recognition tasks using auditory‐only speech [von Kriegstein et al., 2005] and FFG recruitment during face processing [Haxby et al., 2000; Hoffman and Haxby, 2000].

While bilateral mid‐STG was recruited for validating AV speech, these ALE peaks were less conclusive, because conflicting AV speech experiments also reported activity near them. One left mid‐STG ALE peak overlapped with the anterior portion of a conflicting AV speech cluster and was not identified when percept contrasts were excluded. These findings indicate that this mid‐STG region may not be exclusive to processing a specific type of AV signal. DeWitt and Rauschecker [2012] have proposed that the mid‐STG may correspond to the auditory lateral belt in non‐human primates, and Ghazanfar and Schroeder [2006] have suggested that auditory core and lateral belt are multisensory, responding to auditory, visual, and somatosensory input. Some previous experiments indicate that classical auditory areas in the STG may be involved in processing congruent AV speech signals. For example, previous studies have shown modulation of auditory cortex during lip‐reading [Calvert et al., 1997; Calvert and Campbell, 2003; Kauramäki et al., 2010; Pekkola et al., 2005], increased auditory cortex activity for congruent compared to incongruent pairings of phoneme sounds with visual letters [van Atteveldt et al., 2004, 2007], increased auditory cortex activity for congruent AV syllables compared to sounds only [Okada et al., 2013], and face/voice integration in auditory cortex in non‐human primates [Ghazanfar et al., 2008]. While this analysis may provide preliminary evidence for cross‐modal validation of AV speech in regions more proximal to sensory areas, as compared to the frontal and parietal regions found for conflicting AV speech, further studies are needed to examine the interaction of different types of sensory inputs in human sensory regions, particularly the mid‐STG.

Limitations

While we acknowledge that the conflicting versus validating framework may not capture all nuances of the processes involved in AV speech integration, this framework did allow for a broad quantitative examination of AV speech imaging experiments. The conflicting AV speech analysis, which included 20 experiments, yielded more robust findings and, perhaps as a result, a higher degree of colocalization across experiments. This analysis included two experiments (#20, #21) using stimuli that paired speech sounds with letters, and we recognize that neural processing likely differs between moving and static/orthographic visual signals, particularly with respect to attention effects and temporal components. However, both experiments contributed to the activation likelihoods found for conflicting AV speech, indicating that despite computational differences these AV integration processes may still localize to similar brain regions. The validating AV speech analysis showed relatively less colocalization across experiments and less overall specificity to validating AV speech. Thus, the validating AV speech findings should be interpreted with caution pending further research in this area.

Subanalyses isolating specialized areas for different types of computations (synchrony versus congruency versus percept; moving versus static/orthographic visual signals) were not possible because relatively few studies reported foci for each contrast type. Because of this limitation, and because we acknowledge that the computations required for comparisons of timing and content must differ, we have provided detailed information on which specific contrast types contributed to each ALE cluster, showing where these experiments colocalized and where they did not (Tables 2 and 3). Notably, all 17 ALE peaks identified in the conflicting AV speech analysis were recruited by both content and timing contrast types (incongruent > congruent, and either asynchronous > synchronous or non‐fusion > fusion percept), suggesting that there may be broad colocalization of content and timing processes within those brain regions. It is also important to note that ALE operates at a relatively low spatial resolution (roughly similar to PET resolution), and that colocalization of activity from experiments testing different types of conflict or validation (e.g., content versus timing) does not necessarily indicate that these processes rely on the same neuronal populations. The general location of the activity is the same, but more specialized subregions within these broader areas may specifically process one type of input or another. Using these findings as a springboard, future studies with more precise methods, ideally using within‐subject comparisons, can further examine these possibilities.
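The resolution caveat can be illustrated with a minimal one‐dimensional sketch of the ALE union rule described by Eickhoff et al. [2009]: each experiment's foci are blurred into a modeled activation (MA) map, and the ALE value at a location is 1 minus the product of (1 − MA) over experiments. The kernel width (10 mm FWHM), the peak MA value of 0.1, the 1‐mm grid, the function names, and the choice to take the maximum over an experiment's own foci are all illustrative assumptions rather than the parameters of the present meta‐analysis, and the sketch omits the permutation‐based thresholding that a real ALE analysis requires.

```python
import math

# Illustrative assumptions (not the parameters used in this meta-analysis):
FWHM_MM = 10.0   # hypothetical Gaussian kernel width; real ALE scales it with sample size
SIGMA_MM = FWHM_MM / (2.0 * math.sqrt(2.0 * math.log(2.0)))
PEAK_MA = 0.1    # hypothetical modeled-activation value at a reported focus


def modeled_activation(x, foci):
    """MA value of one experiment at position x (mm).

    Each focus is blurred by a Gaussian; taking the maximum over the
    experiment's own foci keeps nearby foci from one experiment from summing.
    """
    return max(
        PEAK_MA * math.exp(-((x - f) ** 2) / (2.0 * SIGMA_MM ** 2)) for f in foci
    )


def ale(x, experiments):
    """ALE at x: 1 - product over experiments of (1 - MA), i.e. the
    probability that at least one experiment activates position x."""
    prod = 1.0
    for foci in experiments:
        prod *= 1.0 - modeled_activation(x, foci)
    return 1.0 - prod


def local_maxima(experiments, lo=-20, hi=40):
    """Grid positions (1 mm steps) where the ALE value exceeds both neighbours."""
    grid = list(range(lo, hi + 1))
    values = [ale(x, experiments) for x in grid]
    return [
        grid[i]
        for i in range(1, len(grid) - 1)
        if values[i] > values[i - 1] and values[i] > values[i + 1]
    ]


# Two hypothetical experiments with one focus each (positions in mm along one axis).
print(local_maxima([[0.0], [6.0]]))   # [3]: one merged peak between the two foci
print(local_maxima([[0.0], [20.0]]))  # [0, 20]: two separate peaks
```

Under these hypothetical settings, foci 6 mm apart from two different contrast types (for example, one content and one timing contrast) merge into a single ALE peak midway between them, whereas foci 20 mm apart remain separate peaks. A single colocalized ALE peak therefore cannot, by itself, distinguish shared from merely neighboring neuronal populations.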

CONCLUSIONS

In this ALE meta‐analysis of 33 experiments, 311 subjects, and 347 foci, we identified distinct brain regions involved in the integration of conflicting versus validating AV speech, suggesting that different neural computations are likely responsible for the detection and resolution of inconsistent AV speech versus the validation of equivalent, complementary AV signals. Conflicting AV speech integration revealed a network of primarily dorsal stream regions involved in the resolution of inconsistent sensory input. In contrast, validating AV speech integration was localized to ventral stream visual areas of the occipital and inferior temporal cortex, suggesting functional properties related to the validation of complementary AV input. Future studies can assess whether these networks translate to other communication domains, such as face/voice integration, other sensorimotor functions, biological motion, or social‐related processes. Additionally, localization of AV speech integration networks in a normal, healthy population provides the foundation for future studies in populations where this network may be altered.

Supporting information

Supplementary Information Figure 1.

ACKNOWLEDGMENTS

The authors would also like to thank the reviewers for their valuable comments and contributions.

REFERENCES

- Allison T, Puce A, McCarthy G (2000): Social perception from visual cues: role of the STS region. Trends Cogn Sci 4:267–278.

- Amedi A, Raz N, Pianka P, Malach R, Zohary E (2003): Early ‘visual’ cortex activation correlates with superior verbal memory performance in the blind. Nat Neurosci 6:758–766.

- Amedi A, Stern WM, Camprodon JA, Bermpohl F, Merabet L, Rotman S, Hemond C, Meijer P, Pascual‐Leone A (2007): Shape conveyed by visual‐to‐auditory sensory substitution activates the lateral occipital complex. Nat Neurosci 10:687–689.

- Balk MH, Ojanen V, Pekkola J, Autti T, Sams M, Jääskeläinen IP (2010): Synchrony of audio‐visual speech stimuli modulates left superior temporal sulcus. Neuroreport 21:822–826.

- Barros‐Loscertales A, Ventura‐Campos N, Visser M, Alsius A, Pallier C, Avila Rivera C, Soto‐Faraco S (2013): Neural correlates of audiovisual speech processing in a second language. Brain Lang 126:253–262.

- Baum SH, Martin RC, Hamilton AC, Beauchamp MS (2012): Multisensory speech perception without the left superior temporal sulcus. Neuroimage 62:1825–1832.

- Bavelier D, Neville HJ (2002): Cross‐modal plasticity: Where and how? Nat Rev Neurosci 3:443–452.

- Beauchamp MS (2005): See me, hear me, touch me: Multisensory integration in lateral occipital‐temporal cortex. Curr Opin Neurobiol 15:145–153.

- Beauchamp MS, Argall BD, Bodurka J, Duyn JH, Martin A (2004a): Unraveling multisensory integration: Patchy organization within human STS multisensory cortex. Nat Neurosci 7:1190–1192.

- Beauchamp MS, Lee KE, Argall BD, Martin A (2004b): Integration of auditory and visual information about objects in superior temporal sulcus. Neuron 41:809–823.

- Beauchamp MS, Yasar NE, Frye RE, Ro T (2008): Touch, sound and vision in human superior temporal sulcus. Neuroimage 41:1011–1020.

- Beauchamp MS, Nath AR, Pasalar S (2010): fMRI‐Guided transcranial magnetic stimulation reveals that the superior temporal sulcus is a cortical locus of the McGurk effect. J Neurosci 30:2414–2417.

- Bedny M, Pascual‐Leone A, Dodell‐Feder D, Fedorenko E, Saxe R (2011): Language processing in the occipital cortex of congenitally blind adults. Proc Natl Acad Sci USA 108:4429–4434.

- Beer AL, Plank T, Greenlee MW (2011): Diffusion tensor imaging shows white matter tracts between human auditory and visual cortex. Exp Brain Res 213:299–308.

- Benoit MM, Raij T, Lin FH, Jääskeläinen IP, Stufflebeam S (2010): Primary and multisensory cortical activity is correlated with audiovisual percepts. Hum Brain Mapp 31:526–538.

- Bidet‐Caulet A, Voisin J, Bertrand O, Fonlupt P (2005): Listening to a walking human activates the temporal biological motion area. Neuroimage 28:132–139.

- Bishop CW, Miller LM (2009): A multisensory cortical network for understanding speech in noise. J Cogn Neurosci 21:1790–1805.

- Blau V, van Atteveldt N, Ekkebus M, Goebel R, Blomert L (2009): Reduced neural integration of letters and speech sounds links phonological and reading deficits in adult dyslexia. Curr Biol 19:503–508.

- Blau V, Reithler J, van Atteveldt N, Seitz J, Gerretsen P, Goebel R, Blomert L (2010): Deviant processing of letters and speech sounds as proximate cause of reading failure: a functional magnetic resonance imaging study of dyslexic children. Brain 133(Pt 3):868–879.

- Calvert GA, Campbell R (2003): Reading speech from still and moving faces: the neural substrates of visible speech. J Cogn Neurosci 15:57–70.

- Calvert GA, Bullmore ET, Brammer MJ, Campbell R, Williams SC, McGuire PK, Woodruff PW, Iversen SD, David AS (1997): Activation of auditory cortex during silent lipreading. Science 276:593–596.

- Calvert GA, Campbell R, Brammer MJ (2000): Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Curr Biol 10:649–657.

- Chevillet MA, Jiang X, Rauschecker JP, Riesenhuber M (2013): Automatic phoneme category selectivity in the dorsal auditory stream. J Neurosci 33:5208–5215.

- Dahl CD, Logothetis NK, Kayser C (2009): Spatial organization of multisensory responses in temporal association cortex. J Neurosci 29:11924–11932.

- Delbeuck X, Collette F, Van der Linden M (2007): Is Alzheimer's disease a disconnection syndrome? Evidence from a crossmodal audio‐visual illusory experiment. Neuropsychologia 45:3315–3323.

- DeWitt I, Rauschecker JP (2012): Phoneme and word recognition in the auditory ventral stream. Proc Natl Acad Sci USA 109:E505–E514.

- Driver J, Noesselt T (2008): Multisensory interplay reveals crossmodal influences on ‘sensory‐specific’ brain regions, neural responses, and judgments. Neuron 57:11–23.

- Eickhoff SB, Laird AR, Grefkes C, Wang LE, Zilles K, Fox PT (2009): Coordinate‐based activation likelihood estimation meta‐analysis of neuroimaging data: a random‐effects approach based on empirical estimates of spatial uncertainty. Hum Brain Mapp 30:2907–2926.

- Eickhoff SB, Bzdok D, Laird AR, Kurth F, Fox PT (2012): Activation likelihood estimation meta‐analysis revisited. Neuroimage 59:2349–2361.

- Fairhall SL, Macaluso E (2009): Spatial attention can modulate audiovisual integration at multiple cortical and subcortical sites. Eur J Neurosci 29:1247–1257.

- Falchier A, Clavagnier S, Barone P, Kennedy H (2002): Anatomical evidence of multimodal integration in primate striate cortex. J Neurosci 22:5749–5759.

- Farrer C, Franck N, Georgieff N, Frith CD, Decety J, Jeannerod M (2003): Modulating the experience of agency: a positron emission tomography study. Neuroimage 18:324–333.

- Finney EM, Fine I, Dobkins KR (2001): Visual stimuli activate auditory cortex in the deaf. Nat Neurosci 4:1171–1173.

- Ghazanfar AA, Schroeder CE (2006): Is neocortex essentially multisensory? Trends Cogn Sci 10:278–285.