Abstract

Purpose

Gatekeeper-training programs (GKTs) are an increasingly popular approach to addressing access to mental health care in adolescent and young adult populations. This study evaluates the effectiveness of a widely used GKT program, Mental Health First Aid (MHFA), in college student populations.

Methods

A randomized controlled trial was conducted at 32 colleges and universities between 2009 and 2011. Campus residence halls were assigned to the intervention (MHFA plus pre-existing trainings) or control condition (pre-existing trainings only) using matched pair randomization. The trainings were delivered to resident advisors (RAs). Outcome measures include service utilization, knowledge and attitudes about services, self-efficacy, intervention behaviors, and mental health symptoms. Data come from two sources: (1) surveys completed by the students (RAs and residents) (N=2,543), 2-3 months pre- and post-intervention; and (2) utilization records from campus mental health centers, aggregated by residence.

Results

The training increases trainees’ self-perceived knowledge (regression-adjusted effect size (ES)=0.38, p<0.001), self-perceived ability to identify students in distress (ES=0.19, p=0.01), and confidence to help (ES=0.17, p=0.04). There are no apparent effects, however, on utilization of mental health care in the student communities in which the trainees live.

Conclusions

Although GKTs are widely used to increase access to mental health care, these programs may require modifications in order to achieve their objectives.

Keywords: gatekeeper-training, mental health, college students

INTRODUCTION

The majority of people with mental disorders receive treatment only after a delay of several years, if at all.1 Access to mental health care is especially important in young adult populations, because nearly three-quarters of all lifetime mental disorders have first onset by the mid-20s.2 Among college students, the prevalence of mental health problems appears to be increasing,3 and over half of students with apparent disorders are untreated.4-6 With over 20 million students enrolled in U.S. postsecondary education,7 population-level interventions to increase access to mental health care could make a significant societal impact.

Gatekeeper-trainings (GKTs)

GKTs target individuals (“gatekeepers”) who are in frequent contact with others in their communities. The trainings equip nonprofessionals with the skills and knowledge to recognize, intervene with, and link distressed individuals to appropriate mental health resources. There are many different GKT programs, which have been used on hundreds of college campuses. Most programs focus on suicide prevention, but many also address common issues such as depression and anxiety.

Borrowing from attachment theory, the gatekeeper model posits that individuals may find comfort sharing their feelings with acquaintances.9 The conceptual model is also guided by the public health principle of mass saturation of awareness,10 whereby the likelihood of community members intervening in a crisis increases with the proportion of capable gatekeepers.11 Peers may be especially influential for key factors that determine help-seeking, such as attitudes and knowledge.12

Despite the popularity of GKTs, there have been no large-scale studies on college campuses evaluating their effectiveness in increasing service utilization and improving mental health. In the college setting, GKTs typically target residential life staff, specifically resident advisors (RAs) trained to serve as gatekeepers for their residents. A gap also exists more generally in the literature on peer-based GKTs across settings: most studies have measured effects for trainees’ self-reported knowledge and skills, without measuring actual helping behavior and population-level service utilization and wellbeing.13 The present study reports findings from the first large-scale, multi-site study of GKTs for college students and one of the first studies of a peer-based GKT in any setting to estimate population-level effects. The study design and scope enable one of the most comprehensive evaluations of a GKT program to date.

Hypotheses

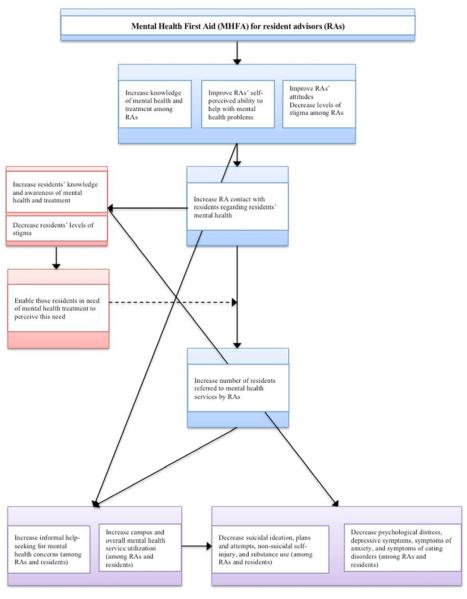

The hypotheses are based on the intended process and outcomes of GKTs, as depicted in Figure 1 and described in the Surgeon General’s 2012 National Strategy for Suicide Prevention.14 RAs trained as gatekeepers are hypothesized to have improved attitudes and increased knowledge and self-efficacy to respond to mental health issues in their residential communities (H1). This should lead to more contact with residents about mental health concerns (H2), resulting in enhanced knowledge and attitudes at the population-level (H3). Ultimately, training is hypothesized to increase residents’ service utilization (H4), thus improving mental health (H5).

Figure 1.

Intended Process of GKT Intervention

METHODS

Intervention

This study evaluates the impact of one widely used GKT program, Mental Health First Aid (MHFA). Developed in 2001, the version of MHFA evaluated here is a 12-hour course composed of five modules, covering depression, anxiety, psychosis, substance abuse, and eating disorders. Each module includes information about signs and symptoms, appropriate responses, and interactive activities.

A cornerstone of MHFA is the five-step gatekeeper action plan, represented by the acronym ALGEE: (1) Assess risk, particularly for suicidality; (2) Listen nonjudgmentally to the individual and discuss how s/he feels; (3) Give information (e.g., about effectiveness of available treatments); (4) Encourage self-management; and (5) Encourage help-seeking by providing referral information. MHFA is careful to emphasize that self-help is not a substitute for professional care in potential crises.

The evidence base for MHFA comes primarily from Australia, where the training was originally developed by Betty Kitchener, a health education nurse, and Anthony Jorm, a mental health literacy professor. There have been at least three evaluation studies of MHFA: an uncontrolled trial with the public,15 a wait-list randomized control trial in the workplace,16 and an effectiveness trial using a cluster randomized design with the public.17 Collectively, these studies indicate that MHFA has positive effects on knowledge, attitudes, self-efficacy, helping behavior, and trainees’ own mental health.18 The program has been implemented in 14 countries but has not been evaluated in the U.S. college setting and, like many other GKTs, has not been fully evaluated at the population level.

In the present study, MHFA was delivered by instructors certified by the National Council on Behavioral Healthcare (only the National Council can certify trainers). The majority of instructors (10 of 14) were behavioral health clinicians. All instructors used the same slides, demonstrations, and examples.

Study sites

Campuses were recruited in 2009 via announcements to email lists for campus mental health administrators. Recruitment was compressed because the project was funded by an NIH “Challenge Grant” under the American Recovery and Reinvestment Act, with a rapid start-up and two-year maximum study period. To ensure coordination, the study was limited to campuses that volunteered to participate and had clear administrative support. All participating campuses offered free mental health services, including some form of treatment for at least a few sessions. Campuses also had an effective triaging system, in case demand increased due to the intervention. A total of 32 campuses enrolled in the study at no cost to the institutions. Although this is essentially a convenience sample of institutions, they are diverse along several dimensions, including type, size, and location. The schools are located in 19 states representing all four census regions in the U.S. The Montana State University Institutional Review Board (IRB) served as the central IRB, and approval was obtained on other campuses as needed. The trial is publicly registered through ClinicalTrials.gov.

Participants

To be included, RAs (2nd-year and higher undergraduates) and residents had to be enrolled at a participating institution and at least 18 years old. There were no other inclusion or exclusion criteria. Residences were randomized to intervention and control conditions, as detailed below. In intervention residences, all RAs were invited to participate in MHFA, which took place in the middle of the academic year. In both intervention and control residences, all RAs and a random subset of residents were recruited for surveys, which occurred 2-3 months before and after MHFA. Residents were selected for the survey with probability proportional to the population of their residence.

RAs and residents were introduced to the study by a letter sent via regular mail, and then recruited for web surveys via email. Recruitment communication came from the campus coordinator (in most cases a director of housing or counseling). The initial mailing also contained a “pre-incentive” (kept regardless of participation): in year 1 $10 for both the pre- and post-surveys, in year 2 $5 for the pre-survey and $10 for the post-survey. A random drawing for cash prizes was also conducted during each round of data collection; regardless of participation, everyone in the invited samples was eligible for one of five $500 prizes. RAs in the intervention condition were also given a $100 participation stipend.

RAs in the intervention condition voluntarily participated in MHFA in addition to pre-existing mental health training provided by their schools. RAs in the control condition participated only in pre-existing trainings. The study therefore measures the incremental benefit of the more intensive and standardized MHFA program over those pre-existing trainings.

The primary analyses focus on 19 campuses with both intervention and control residences. The other 13 campuses were used as a supplemental sample; residences at these campuses were purely in the intervention or control condition. This supplemental sample is intended to measure potential “spillover” effects, whereby effects might spill over from intervention to control residences on the same campus. (Sensitivity analyses were conducted using the full sample (977 RAs, 3,947 residents) for all primary outcomes. Findings remained consistent in magnitude, significance, and direction with those in Table 3.)

Table 3.

Primary Outcomes: Regression-adjusted Intervention Effects

| Outcome | Model | RAs: N | RAs: Intervention effect (SE) | RAs: p | Residents: N | Residents: Intervention effect (SE) | Residents: p |
|---|---|---|---|---|---|---|---|
| MH knowledge | OLS | 549 | 0.31 (0.06) | <0.001 | 1965 | 0.02 (0.03) | 0.56 |
| Personal stigma | OLS | 549 | −0.05 (0.04) | 0.20 | 1962 | −0.02 (0.02) | 0.21 |
| Self-efficacy | OLS | 539 | 0.07 (0.05) | 0.16 | ---- | ---- | ---- |
| Service knowledge | OLS | 539 | 0.06 (0.06) | 0.36 | ---- | ---- | ---- |
| Recognize | OLS | 539 | 0.07 (0.08) | 0.38 | ---- | ---- | ---- |
| Approach | OLS | 539 | 0.0001 (0.07) | 1.0 | ---- | ---- | ---- |
| Confidence | OLS | 539 | 0.14 (0.06) | 0.04 | ---- | ---- | ---- |
| Identify | OLS | 539 | 0.13 (0.05) | 0.01 | ---- | ---- | ---- |
| Connect | OLS | 539 | 0.09 (0.07) | 0.20 | ---- | ---- | ---- |
| Contact w/ resident | OLR | 539 | 0.77 (0.11) | 0.08 | ---- | ---- | ---- |
| MH tx, past 2 months | Logit | ---- | ---- | ---- | 1967 | 0.87 (0.12) | 0.32 |
| Distress score (K6) | OLS | 549 | −0.11 (0.26) | 0.67 | 1963 | −0.17 (0.11) | 0.13 |

Notes: OLR=ordinal logistic regression. Models control for age, racial/ethnic minority status, treatment assignment, and baseline response to the dependent variable. For RAs, models also control for whether the RA was a first-time or returning RA. Standard errors are clustered by campus and reported in parentheses.

Random assignment

In the primary campus sample, residences were assigned to conditions using matched pair randomization. Residences were matched into pairs based on three characteristics: number of undergraduates, number of RAs, and percentage of first-year students. Values for these characteristics were standardized (subtracting the mean and dividing by the standard deviation). Two residences at a time were randomly sampled without replacement, the sum of squared differences on each characteristic was calculated, and the process was repeated with additional residence pairs until the set of residences was exhausted. This process was repeated 10,000 times and the set with the lowest match discrepancy (sum of squared differences) was selected.
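The matching procedure described above can be sketched in a few lines of Python. This is an illustrative reconstruction under stated assumptions, not the study's actual code: the function names, the input layout (a dict from residence name to the three characteristics), the use of the sample standard deviation, and the handling of an odd leftover residence (left unpaired) are all assumptions.

```python
import random
import statistics


def standardize(values):
    """Subtract the mean and divide by the (sample) standard deviation."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    if sd == 0:
        # A constant characteristic carries no matching information.
        return [0.0 for _ in values]
    return [(v - mean) / sd for v in values]


def pair_discrepancy(a, b):
    """Sum of squared differences across the standardized characteristics."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def best_matched_pairs(residences, n_draws=10_000, seed=0):
    """residences: dict name -> (n_undergraduates, n_ras, pct_first_year).

    Repeatedly draws a random pairing and keeps the pairing with the
    lowest total discrepancy. With an odd number of residences, the
    last one drawn is left unpaired (an assumption of this sketch).
    """
    names = list(residences)
    columns = list(zip(*(residences[name] for name in names)))
    z_columns = [standardize(col) for col in columns]
    z = {name: tuple(col[i] for col in z_columns) for i, name in enumerate(names)}

    rng = random.Random(seed)
    best_pairs, best_total = None, float("inf")
    for _ in range(n_draws):
        order = names[:]
        rng.shuffle(order)  # sampling two at a time without replacement
        pairs = [(order[i], order[i + 1]) for i in range(0, len(order) - 1, 2)]
        total = sum(pair_discrepancy(z[a], z[b]) for a, b in pairs)
        if total < best_total:
            best_pairs, best_total = pairs, total
    return best_pairs, best_total
```

Random search of this kind does not guarantee the globally optimal pairing, but with many draws it usually lands on a pairing with a low total discrepancy, which is the criterion the text describes.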

Within residence pairs, one was randomly assigned to the intervention condition and the other to control, using a numeric randomization function in Microsoft Excel. Beginning with the pairs with the lowest matched discrepancy, residences were included in the sample until the total number of RAs assigned to the intervention was no more than 30 per campus (the maximum that could be accommodated per training).
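As a rough illustration of the within-pair assignment and the 30-RA cap, the sketch below uses Python's random module in place of the Excel randomization function. The function name, the input format, and the rule of stopping at the first pair that would exceed the cap are assumptions; the text does not say whether later, smaller pairs could still be added.

```python
import random


def assign_within_pairs(pairs, ra_counts, max_intervention_ras=30, seed=0):
    """pairs: list of (discrepancy, residence_a, residence_b).
    ra_counts: dict residence -> number of RAs.

    Returns a dict residence -> "intervention" or "control" for the
    residences included in the sample.
    """
    rng = random.Random(seed)
    assignment = {}
    intervention_ras = 0
    # Start with the best-matched pairs (lowest discrepancy).
    for _, res_a, res_b in sorted(pairs, key=lambda p: p[0]):
        # Coin flip decides which member of the pair is trained.
        treated, control = (res_a, res_b) if rng.random() < 0.5 else (res_b, res_a)
        if intervention_ras + ra_counts[treated] > max_intervention_ras:
            break  # assumption: stop once the training capacity would be exceeded
        assignment[treated] = "intervention"
        assignment[control] = "control"
        intervention_ras += ra_counts[treated]
    return assignment
```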

Sample size and power

Power analyses were conducted under conservative assumptions about the intraclass correlation coefficients to account for the clustered sample design. The survey design was powered at 0.80 (1−β=0.80, α=0.05) to detect standardized effect sizes as small as 0.20 and odds ratios (OR) of 1.5. The availability of aggregate counseling center utilization data further enhanced the potential to detect even small effects on service use.
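To make the power calculation concrete, the sketch below applies the standard normal-approximation formula for comparing two means and inflates the result by a design effect, 1 + (m − 1) × ICC, for clustering. It is not the authors' calculation: the formula choice, the function names, and the cluster size and ICC in the usage note are hypothetical, since the text does not report them.

```python
import math
from statistics import NormalDist


def design_effect(cluster_size, icc):
    """Variance inflation from clustering: 1 + (m - 1) * ICC."""
    return 1 + (cluster_size - 1) * icc


def n_per_arm(effect_size, alpha=0.05, power=0.80, deff=1.0):
    """Respondents needed per arm for a two-sided test of a standardized
    mean difference, using the normal approximation."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    n_simple = 2 * (z_alpha + z_power) ** 2 / effect_size ** 2
    return math.ceil(n_simple * deff)
```

Under these assumptions, `n_per_arm(0.20)` gives 393 respondents per arm before any clustering adjustment; with a hypothetical 10 respondents per cluster and an ICC of 0.02, `n_per_arm(0.20, deff=design_effect(10, 0.02))` gives 464.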

Measures

Outcomes are categorized into five domains: Knowledge, Attitudes/Health Beliefs, Self-Efficacy, Help-Seeking, and Mental Health/Health Behaviors. The specific wording for these outcomes is shown in Appendix A.

The primary Knowledge outcome is self-perceived mental health knowledge, measured by a question developed by the researchers: “Relative to the average person, how knowledgeable are you about mental illnesses and their treatments?” (response categories: “well above average”, “above average”, “average”, “below average”, “well below average”). For Attitudes/Health Beliefs, the primary outcome is a three-item measure of personal stigma adapted from the Discrimination-Devaluation Scale19 and previously used in national surveys of college students20; the measure is operationalized as a composite score ranging from 0-5, where higher values indicate higher levels of stigma. Self-Efficacy is measured only for RAs and is computed as an average score from six items related to self-perceived gatekeeper skills. For Help-Seeking, the primary outcome for RAs is a categorical measure of the number of residents with whom they discussed mental health issues (0, 1, 2-3, or 4+); for residents, the primary outcome is a dichotomous measure of treatment utilization (any/no medication and/or therapy) in the past 2 months. Help-seeking is measured through the survey data (individual self-reported treatment utilization) and the counseling center utilization data (residence hall-level observed utilization). The primary outcome in the Mental Health/Health Behaviors domain is a continuous measure of psychological distress, the K6.21 Scores range from 0 to 24, with higher scores indicating higher distress.

Analysis

Survey data are analyzed at the individual level. Separate intention-to-treat analyses are conducted for RAs and residents. Binary logistic regression is used to estimate effects for dichotomous outcomes such as mental health treatment; ordinal logistic regression is used to estimate effects for categorical outcomes such as amount of contact between RAs and residents; and ordinary least squares (OLS) is used for continuous outcomes such as K6 score. All regressions control for baseline outcomes, age, race (white versus non-white), and parental education (whether at least one parent had a bachelor’s degree). (Sex was not included due to missingness for 26.0% of residents and 26.9% of RAs. In sensitivity analyses controlling for sex using a restricted sample with this variable, the main pattern of findings in Table 3 remains the same.) In regressions for RAs, a binary measure of experience (first-time vs. returning) is also included as a covariate. In all analyses, robust standard errors were estimated, accounting for clustering of students within campuses.22

Sensitivity analyses were conducted using multi-level models with random effects at the residence and campus levels. With this approach, results remain consistent in magnitude, significance, and direction for all primary outcomes.

Campus-reported treatment utilization data are analyzed at the aggregated residence level, because obtaining individual-level client data was not feasible due to human subjects concerns. Utilization data were collected only in year 2 and are available for 18 of 19 campuses in the primary sample, including 194 residence halls (97 intervention and 97 control residences). The number of mental health visits in fall 2010 serves as the baseline and the number of visits in winter/spring 2011 as the outcome. Winter/spring utilization was regressed on a dummy for intervention/control condition, fall utilization, and two residence characteristics: number of first-year students and total number of students.

Regression-adjusted effect sizes (ES) are included for all continuous outcomes; these were calculated by dividing the regression coefficient by the standard deviation for the variable. All analyses were conducted using Stata 12.1.
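The effect size calculation is a single division, sketched below. The function name is illustrative, and the standard deviation of 0.81 is an assumed value for the example: the text does not state which standard deviation (baseline, follow-up, or pooled) was used.

```python
def effect_size(coefficient, outcome_sd):
    """Regression-adjusted effect size: coefficient divided by the outcome SD."""
    if outcome_sd <= 0:
        raise ValueError("outcome_sd must be positive")
    return coefficient / outcome_sd


# Coefficient for RAs' self-perceived knowledge from Table 3;
# the SD of 0.81 is an assumption made for this illustration.
print(round(effect_size(0.31, 0.81), 2))  # prints 0.38
```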

RESULTS

Participant flow

A total of 7,138 students (1,042 RAs, 6,096 residents) were recruited in the fall term. The baseline surveys were completed by 4,104 students (810 RAs, 3,294 residents), for a 57% completion rate (78% for RAs, 54% for residents). Students who completed the baseline survey were recruited for the follow-up survey in the winter/spring term, which was completed by 2,726 students (618 RAs, 2,108 residents), for a 70% completion rate (68% for RAs, 70% for residents). In the primary sample, all 535 RAs from intervention residences were invited to MHFA trainings. Of these, 376 (70.3%) attended; 359 (95.5%) completed the full 12 hours and 17 (4.5%) completed less than 12 hours.
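The baseline completion rates and the training attendance figures follow directly from the reported counts. A small sketch of that arithmetic (the helper name is illustrative):

```python
def rate(numerator, denominator):
    """Percentage of the denominator represented by the numerator."""
    return 100 * numerator / denominator


# Baseline survey: (completed, recruited), counts as reported in the text.
baseline = {"all": (4104, 7138), "RAs": (810, 1042), "residents": (3294, 6096)}
for group, (completed, recruited) in baseline.items():
    print(group, round(rate(completed, recruited)))  # 57, 78, 54

# MHFA attendance among RAs invited in intervention residences.
invited, attended, full_course = 535, 376, 359
print(round(rate(attended, invited), 1))                  # 70.3
print(round(rate(full_course, attended), 1))              # 95.5
print(round(rate(attended - full_course, attended), 1))   # 4.5
```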

Bivariate analyses

The primary analytic sample (N=2,543) comprises 553 RAs and 1,990 residents. (It is smaller than the full follow-up sample (N=2,726) due to item missingness and a small number of students (N=46) who were excluded because they switched from treatment to control residences or vice versa.) At baseline, RAs and residents in the intervention condition are similar to their control counterparts across all demographic characteristics (Table 1) and primary outcomes.

Table 1.

Participant Characteristics at Baseline by Intervention Condition (N=2543)

| Characteristic | RAs (n=553): Control (n=262) | RAs: MHFA (n=291) | RAs: p | Residents (n=1990): Control (n=1002) | Residents: MHFA (n=988) | Residents: p |
|---|---|---|---|---|---|---|
| Age, mean (SD) | 20.3 (1.2) | 20.4 (1.3) | 0.49 | 19.1 (1.6) | 19.1 (1.5) | 0.99 |
| Gender (% women) | 56.4 | 58.4 | 0.69 | 62.5 | 65.3 | 0.28 |
| Race (% white) | 72.5 | 76.6 | 0.27 | 72.5 | 70.6 | 0.35 |
| Parental education (% BA) | 61.3 | 55.4 | 0.16 | 57.5 | 60.6 | 0.17 |
| RA experience (% first time) | 57.7 | 53.6 | 0.44 | ---- | ---- | ---- |

Notes: Gender is missing for 26.0% of residents and 26.9% of RAs.

For residents, there are no significant differences for outcomes by condition at follow-up, indicating no intervention effects (Table 2a). At follow-up, RAs trained in MHFA have significantly higher self-perceived knowledge and self-efficacy (Table 2b). For gatekeeper behaviors, however, there are no significant differences: 66.7% of RAs in control halls and 62.3% of RAs in intervention halls report contact with at least one resident about mental health issues in the past two months, with the majority reporting contact with only one or two residents. Approximately two-thirds of RAs report providing support to students (control: 64.3%, intervention: 61.3%), while a smaller proportion report helping students in crisis (control: 17.1%, intervention: 14.1%). Many RAs report referring students to professional counseling (control: 41.9%, intervention: 37.7%), while a smaller proportion report referring to campus administrators (control: 22.5%, intervention: 19.7%). Roughly one-third of RAs report having at least one student who received services as a result of their referrals (control: 37.7%, intervention: 32.0%).

Table 2a.

Residents: Means and Proportions for Primary Outcomes by Condition

| Outcome | Range | Baseline: Control | Baseline: MHFA | Baseline: p | Follow-up: Control | Follow-up: MHFA | Follow-up: p |
|---|---|---|---|---|---|---|---|
| MH knowledge | (1-5) | 3.42 (0.83) | 3.41 (0.82) | 0.80 | 3.42 (0.82) | 3.43 (0.82) | 0.62 |
| Personal stigma | (0-5) | 0.62 (0.55) | 0.63 (0.55) | 0.51 | 0.62 (0.55) | 0.61 (0.55) | 0.69 |
| MH tx, past 2 months | (0/1) | 15.8% | 13.6% | 0.15 | 17.1% | 14.7% | 0.15 |
| Distress score (K6) | (0-24) | 5.76 (4.31) | 5.80 (4.34) | 0.82 | 5.69 (4.38) | 5.60 (4.42) | 0.64 |

Notes: Percentages and chi-squared test results are reported for dichotomous outcomes and means, standard deviations, and t-test results for continuous outcomes.

Table 2b.

RAs: Means and Proportions for Primary Outcomes by Condition

| Outcome | Range / category | Baseline: Control | Baseline: MHFA | Baseline: p | Follow-up: Control | Follow-up: MHFA | Follow-up: p |
|---|---|---|---|---|---|---|---|
| MH knowledge | (1-5) | 3.61 (0.80) | 3.65 (0.75) | 0.48 | 3.69 (0.77) | 4.01 (0.81) | <0.001 |
| Personal stigma | (0-5) | 0.61 (0.57) | 0.56 (0.53) | 0.25 | 0.55 (0.57) | 0.47 (0.49) | 0.07 |
| Self-efficacy (total) | (1-5) | 3.92 (0.45) | 3.98 (0.46) | 0.10 | 3.95 (0.49) | 4.05 (0.50) | 0.01 |
| Service knowledge | (1-5) | 4.57 (0.63) | 4.62 (0.53) | 0.29 | 4.53 (0.63) | 4.61 (0.56) | 0.11 |
| Recognize | (1-5) | 4.02 (0.64) | 4.07 (0.68) | 0.41 | 4.09 (0.62) | 4.16 (0.68) | 0.17 |
| Approach | (1-5) | 4.13 (0.69) | 4.22 (0.69) | 0.11 | 4.10 (0.70) | 4.13 (0.71) | 0.72 |
| Confidence | (1-5) | 3.78 (0.82) | 3.80 (0.85) | 0.77 | 3.78 (0.87) | 3.94 (0.79) | 0.03 |
| Identify | (1-5) | 3.45 (0.66) | 3.57 (0.68) | 0.03 | 3.52 (0.70) | 3.70 (0.66) | 0.002 |
| Connect | (1-5) | 3.57 (0.65) | 3.62 (0.69) | 0.40 | 3.66 (0.73) | 3.77 (0.74) | 0.08 |
| Contact w/resident | None | 34.0% | 32.4% | 0.40 | 33.3% | 37.7% | 0.64 |
| | 1 | 33.6% | 32.4% | | 31.8% | 31.0% | |
| | 2 | 23.7% | 30.0% | | 29.8% | 27.8% | |
| | 3+ | 5.8% | 5.2% | | 5.0% | 3.5% | |
| Distress score (K6) | (0-24) | 4.74 (3.29) | 4.97 (3.85) | 0.46 | 4.64 (3.72) | 4.69 (3.81) | 0.88 |

Notes: Percentages and chi-squared test results are reported for dichotomous outcomes and means, standard deviations, and t-test results for continuous outcomes.

Regression-adjusted intervention effects (primary outcomes, Table 3)

As in the bivariate analyses, there are no significant effects for residents for any of the primary outcomes. For RAs, being trained in MHFA increases self-perceived knowledge (ES=0.38, p<0.001), self-perceived ability to identify students in distress (ES=0.19, p=0.01), and confidence to help (ES=0.17, p=0.04).

Regression-adjusted intervention effects (secondary outcomes, Appendix A, Table A4)

For residents, the majority of secondary outcomes are also not statistically significant. One exception is that MHFA is associated with lower odds of reporting mental health impairment (OR=0.87, p=0.04). The intervention is also associated with two effects in an unexpected direction: lower odds of treatment (OR=0.64, p=0.004) and of receiving informal support (OR=0.81, p=0.05).

At follow-up, RAs trained in MHFA have 1.70 times higher odds of receiving therapy over the past two months (p=0.03). For RAs, training is also associated with higher positive affect (ES=0.15, p=0.05), more positive beliefs about the effectiveness of therapy (ES=0.12, p=0.008) and medication (ES=0.12, p=0.05), and lower odds of binge drinking (OR=0.56, p=0.008). Other secondary outcomes are not statistically significant for RAs, including objective measures of gatekeeper knowledge (based on a brief multiple choice quiz).

Heterogeneous effects

Additional analyses investigate whether certain RAs (by gender, prior experience, baseline symptoms and knowledge) exhibit different intervention effects (individual heterogeneity) and whether effects vary based on how much mental health training was already provided by institutions (relative dose effects). Overall, these analyses provide little evidence of heterogeneity. As described in Appendix B, RAs with higher baseline knowledge exhibit larger benefits. Also, MHFA, in the presence of higher levels of pre-existing training, has a larger positive effect on RAs’ confidence to help students. Most notably, the intervention did not significantly increase service use among students with apparent baseline need.

Campus-reported service utilization

In the pre-intervention period (fall 2010) the average number of weekly visits per residence is nearly identical between the intervention (mean=0.44, SD=0.43) and control (mean=0.41, SD=0.38) groups. This translates to 0.003 weekly visits per student for both conditions at baseline. Both groups had small decreases post-intervention (winter/spring 2011): intervention residences averaged 0.40 (SD=0.40) weekly visits and control residences 0.37 (SD=0.37). As with the survey results, the estimated intervention effect on residences’ campus service utilization is not statistically significant (ES=0.05, p=0.56).

Spillover effects

To estimate potential spillovers, the supplemental campus sample is included and outcomes are compared for two types of controls: control residences on “mixed” campuses (which include intervention residences), and “pure” control campuses (with only control residences). Across all primary outcomes for both RAs and residents, there is evidence of spillover for only one outcome, and in an unexpected direction: being a resident in a control hall on a “mixed” campus is associated with slightly increased stigma (ES=0.07, p=0.02), relative to being a resident on a “pure” control campus.

DISCUSSION

GKTs have been implemented at hundreds of colleges, and in many other settings. The present study is the largest campus-based evaluation of GKTs to date, and one of the first in any setting to measure outcomes at the population level for a peer-delivered mental health intervention.

The findings suggest that MHFA had no effect on the broader student community. There was no apparent impact on residents’ help-seeking despite availability of free mental health services. MHFA did, however, have some effect on trainees. Training was effective in enhancing RAs’ self-perceived knowledge and self-efficacy. RAs were more likely to seek professional mental health services for themselves, a finding consistent with at least one other recent study.23 For some outcomes, the effect of MHFA was stronger among RAs with higher baseline knowledge and on campuses with more intensive pre-existing trainings. This suggests that trainees might be more receptive to MHFA if they have had a certain amount of previous experience.

Limitations

Alongside the strengths of the study, there are important limitations. First, campuses were selected using a convenience sampling approach. It is possible that these campuses constitute a stronger than average control condition. The analysis of heterogeneous effects by intensity of pre-existing trainings indicates, however, that MHFA might have been more effective when supplementing higher levels of pre-existing training. This would imply that effects might have actually been even weaker in a more representative campus sample. On the other hand, it is possible that some campuses chose to participate because of a need for better trainings, in which case their pre-existing trainings might be less intensive than average.

A second limitation, as in most GKT studies, is that the time period of post-training data collection might be insufficient to capture effects on certain outcomes, such as service utilization and mental health symptoms. Still, one might expect to observe at least some evidence of increased helping by RAs, as a precursor to increased service utilization and wellbeing among residents during the study period.

Third, it is important to acknowledge that professional treatment is not necessarily the most appropriate option for all students. This study used brief screens, which are not equivalent to clinical diagnoses. That said, MHFA did not increase service use even among students with apparent mental health problems at baseline.

Fourth, RAs’ participation in MHFA was far from perfect (70.3%). The present study can be thought of as approximating a real-world effectiveness trial for settings in which perfect attendance cannot be enforced. In analyses not shown, the main pattern of results remained the same when the sample was limited to campuses with perfect attendance (n=12). Relatedly, survey non-response might have made it harder to detect effects. This concern is partially alleviated, however, by the corroborating evidence from counseling center utilization, which represented complete censuses and also yielded null results.

Finally, many interrelated hypotheses were tested. The overarching question of whether MHFA was effective overall is highly susceptible to type I error without appropriate adjustments. Using a false discovery rate adjustment,24 effects for RAs’ self-perceived mental health knowledge and self-efficacy to identify students in distress remain statistically significant (at p<0.05), indicating that MHFA did have a positive impact on at least a small number of outcomes. The analyses of secondary outcomes and heterogeneous effects should be viewed as exploratory.
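For readers unfamiliar with false discovery rate control, the sketch below implements the Benjamini-Hochberg step-up procedure, one common approach. Whether this is the exact procedure used in the study, and which family of tests it was applied to, is not stated in the text; the function name and the example p-values in the usage note are hypothetical.

```python
def benjamini_hochberg(p_values, alpha=0.05):
    """Return a list of booleans marking which hypotheses are rejected
    while controlling the false discovery rate at level alpha."""
    m = len(p_values)
    # Indices of the p-values, ordered from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    largest_k = 0
    for rank, idx in enumerate(order, start=1):
        # Step-up rule: find the largest rank k with p_(k) <= (k / m) * alpha.
        if p_values[idx] <= rank / m * alpha:
            largest_k = rank
    rejected = [False] * m
    for idx in order[:largest_k]:
        rejected[idx] = True
    return rejected
```

For example, with the hypothetical p-values `[0.02, 0.03, 0.035, 0.9]`, the first three hypotheses are rejected: the third-smallest value falls under its threshold of 0.0375, and the step-up rule then also rejects every smaller p-value.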

Implications

The findings from this study provide a mixed answer about how well MHFA works in college settings and, more generally, how effectively GKTs increase access to mental health services. There is no evidence for effects on the overall student communities. Whether these null effects are attributable to MHFA as a program, the choice of RAs as gatekeepers, or both is an important but unanswered question. Despite no apparent impact for residents, RAs appear to benefit personally. This evidence is on par with the limited evidence for other GKTs. Therefore, campuses may want to deliver MHFA if they prefer a more comprehensive and intensive training. Since the time of this study, MHFA has been shortened from 12 to eight hours, while still retaining the breadth of content.

The overall weakness of the results suggests that new GKT models might be necessary. Trainees may need a more sustained learning process, with repeated opportunities to practice skills and discuss gatekeeping experiences. It is also important for campus practitioners to consider other trainees. Although RAs are peers in the sense of being fellow students, they have a degree of authority that might impede sensitive discussions. Peers without any official role might be more effective gatekeepers.

Conclusion

The present study suggests that GKTs may not be fully achieving their ultimate objectives. Self-reported knowledge and self-efficacy appear insufficient for promoting intervention behaviors among gatekeepers or help-seeking and wellbeing in student communities. GKTs may need to be revised, and entirely new strategies may need to be considered.

Acknowledgements

The study was funded by an NIH “Challenge Grant” under the American Recovery and Reinvestment Act (NIMH 1RC1MH089757-01). We report no conflicts of interest. The authors would like to thank the National Institutes of Health and the Western Interstate Commission for Higher Education (WICHE) for their support of this project. Scott Crawford and his colleagues at the Survey Sciences Group (SSG) administered the web survey data collection on behalf of our research team. This work has been presented in oral presentations at the NIMH Mental Health Services Research (MHSR) conference (July 2011) and the Annual Research Meeting of AcademyHealth (June 2013). All individuals who contributed significantly to this work are acknowledged here.

Footnotes

Conflict of Interest/Disclosure Statement: The authors report no potential, perceived, or real conflicts of interest. The study sponsor (The National Institutes of Health) had no role in any of the following aspects of this project: the study design; collection, analysis, and interpretation of data; the writing of the report; and the decision to submit the manuscript for publication. The first draft of this manuscript was written by Sarah Ketchen Lipson, who was funded by a graduate student research assistantship through the University of Michigan.

Clinical Trials Registration: ClinicalTrials.gov Identifier: NCT02021344

Implications and Contributions: A large-scale, multi-site study of gatekeeper training programs (GKTs) to increase utilization of mental health services among college students showed mixed results. Trainees experienced improved outcomes, but utilization of mental health services in student communities did not increase.

Contributor Information

Sarah Ketchen Lipson, University of Michigan School of Public Health, Department of Health Management & Policy; University of Michigan School of Education, Center for the Study of Higher and Postsecondary Education.

Nicole Speer, Intermountain Neuroimaging Consortium.

Steven Brunwasser, Vanderbilt University Kennedy Center.

Elisabeth Hahn, Harvard Graduate School of Education.

Daniel Eisenberg, University of Michigan School of Public Health, Department of Health Management & Policy, 1415 Washington Heights, M3517 SPH II, Ann Arbor, MI 48109-2029.

REFERENCES

- 1. Wang PS, Berglund P, Olfson M, Pincus HA, Wells KB, Kessler RC. Failure and delay in initial treatment contact after first onset of mental disorders in the National Comorbidity Survey Replication. Archives of General Psychiatry. 2005;62(6):603. doi: 10.1001/archpsyc.62.6.603.

- 2. Kessler R, Amminger G, Aguilar-Gaxiola S, Alonso J, Lee S, Ustun T. Age of onset of mental disorders: A review of recent literature. Current Opinion in Psychiatry. 2007;20(4):359–364. doi: 10.1097/YCO.0b013e32816ebc8c.

- 3. Twenge JM, Gentile B, DeWall CN, Ma D, Lacefield K, Schurtz DR. Birth cohort increases in psychopathology among young Americans, 1938–2007: A cross-temporal meta-analysis of the MMPI. Clinical Psychology Review. 2010;30(2):145–154. doi: 10.1016/j.cpr.2009.10.005.

- 4. Hunt J, Eisenberg D. Mental health problems and help-seeking behavior among college students. Journal of Adolescent Health. 2010;46(1):3–10. doi: 10.1016/j.jadohealth.2009.08.008.

- 5. Eisenberg D, Nicklett E, Roeder K, Kirz N. Eating disorder symptoms among college students: Prevalence, persistence, correlates, and treatment-seeking. Journal of American College Health. 2011;59(8):700–707. doi: 10.1080/07448481.2010.546461.

- 6. Blanco C, Okuda M, Wright C, Hasin D. Mental health of college students and their non–college-attending peers: Results from the National Epidemiologic Study on Alcohol and Related Conditions. Archives of General Psychiatry. 2008;65(12):1429. doi: 10.1001/archpsyc.65.12.1429.

- 7. U.S. Department of Education Institute of Education Sciences, National Center for Education Statistics. Integrated Postsecondary Education Data System (IPEDS), “Fall Enrollment Survey” (IPEDS-EF:90-99), and Spring 2001 through Spring 2011, Enrollment component. 2012.

- 8. Eisenberg D, Hunt J, Speer N. Help seeking for mental health on college campuses: Review of evidence and next steps for research and practice. Harvard Review of Psychiatry. 2012;20(4):222–232. doi: 10.3109/10673229.2012.712839.

- 9. Anderson K, Maile J, Fisher L. The healing tonic: Pilot study of the perceived ability and potential of bartenders. Journal of Military and Veterans’ Health. 2010;18(4):17–24.

- 10. Quinnett P. QPR gatekeeper training for suicide prevention: The model, rationale and theory. 2007. Retrieved from http://www.qprinstitute.com/pdfs/QPR%20Theory%20Paper.pdf.

- 11. Brown C, Wyman P, Guo J, Pena J. Dynamic wait-listed designs for randomized trials: New designs for prevention of youth suicide. Clinical Trials. 2006;3(3):259–271. doi: 10.1191/1740774506cn152oa.

- 12. Andersen R. Revisiting the behavioral model and access to medical care: Does it matter? Journal of Health and Social Behavior. 1995:1–10.

- 13. Lipson SK. A comprehensive review of mental health gatekeeper-trainings for adolescents and young adults. International Journal of Adolescent Medicine and Health. 2013:1–12. doi: 10.1515/ijamh-2013-0320.

- 14. U.S. Department of Health and Human Services (HHS) Office of the Surgeon General and National Action Alliance for Suicide Prevention. National Strategy for Suicide Prevention: Goals and Objectives for Action. Washington, DC: HHS; September 2012.

- 15. Kitchener BA, Jorm AF. Mental health first aid training for the public: Evaluation of effects on knowledge, attitudes and helping behavior. BMC Psychiatry. 2002;2(1):10. doi: 10.1186/1471-244X-2-10.

- 16. Kitchener B, Jorm A. Mental health first aid training in a workplace setting: A randomized controlled trial. BMC Psychiatry. 2004;4(1):23. doi: 10.1186/1471-244X-4-23.

- 17. Jorm A, Kitchener B, O’Kearney R, Dear K. Mental health first aid training of the public in a rural area: A cluster randomized trial. BMC Psychiatry. 2004;4(1):33. doi: 10.1186/1471-244X-4-33.

- 18. Kitchener BA, Jorm AF. Mental health first aid training: Review of evaluation studies. Australian and New Zealand Journal of Psychiatry. 2006;40(1):6–8. doi: 10.1080/j.1440-1614.2006.01735.x.

- 19. Link BG. Understanding labeling effects in the area of mental disorders: An assessment of the effects of expectations of rejection. American Sociological Review. 1987:96–112.

- 20. Eisenberg D, Downs MF, Golberstein E, Zivin K. Stigma and help seeking for mental health among college students. Medical Care Research and Review. 2009;66(5):522–541. doi: 10.1177/1077558709335173.

- 21. Kessler R, Andrews G, Colpe L, Hiripi E, Mroczek D, Normand S, Walters E, Zaslavsky A. Short screening scales to monitor population prevalences and trends in non-specific psychological distress. Psychological Medicine. 2002;32(6):959–976. doi: 10.1017/s0033291702006074.

- 22. Nichols A, Schaffer M. Clustered errors in Stata. United Kingdom Stata Users’ Group Meeting. September 2007.

- 23. Drum D, Brownston C, Denmark A, Smith S. New data on the nature of suicidal crises in college students: Shifting the paradigm. Professional Psychology, Research and Practice. 2009;40(3):213–222.

- 24. Benjamini Y, Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society B. 1995;57:289–300.
