Significance

Sensitivity to fine timing cues in speech is thought to play a key role in language learning, facilitating the development of phonological processing. In fact, a link between beat synchronization, which requires fine auditory–motor synchrony, and language skills has been found in school-aged children, as well as adults. Here, we show this relationship between beat entrainment and language metrics in preschoolers and use beat synchronization ability to predict the precision of neural encoding of speech syllables in these emergent readers. By establishing links between beat keeping, neural precision, and reading readiness, our results provide an integrated framework that offers insights into the preparative biology of reading.

Keywords: auditory processing, temporal processing, rhythm, cABR, speech envelope

Abstract

Temporal cues are important for discerning word boundaries and syllable segments in speech; their perception facilitates language acquisition and development. Beat synchronization and neural encoding of speech reflect precision in processing temporal cues and have been linked to reading skills. In poor readers, diminished neural precision may contribute to rhythmic and phonological deficits. Here we establish links between beat synchronization and speech processing in children who have not yet begun to read: preschoolers who can entrain to an external beat have more faithful neural encoding of temporal modulations in speech and score higher on tests of early language skills. In summary, we propose precise neural encoding of temporal modulations as a key mechanism underlying reading acquisition. Because beat synchronization abilities emerge at an early age, these findings may inform strategies for early detection of and intervention for language-based learning disabilities.

Literacy skills are critical for school success, employment, and general well-being (1), but reading disorders plague a significant portion (5–10%) of the population (2). Although we can characterize the perceptual and physiological deficits generally observed in reading-impaired individuals, each child is unique, challenging both diagnosis and intervention. Developmentally, speech rhythm is one of the earliest cues used by infants to segment speech and discern phonemes (3, 4), and parents naturally use emphatic stress and exaggerated rhythmic patterns to teach children language (5). Children and adults with dyslexia struggle to pick up on these rhythmic patterns (6), and this struggle may reflect a temporal encoding deficit underlying reading disabilities (5, 7). Furthermore, many reading-impaired children have pronounced problems with phonological awareness (i.e., the knowledge of which acoustic distinctions in speech are meaningful) that stem, at least in part, from deficient speech-sound processing (8–12). Therefore, we posit that sensitivity to temporal modulations in speech influences the neural processing of discrete speech components and that a breakdown of the temporal encoding of speech segments may impede the development of phonological skills critical for language learning.

Beat synchronization (a task necessitating precise integration of auditory perception and motor production) has offered an intriguing window into the biology of reading ability and its substrate skills. Converging lines of evidence indicate that children and adults who struggle to synchronize to a beat also struggle to read and have deficient neural encoding of sound (13–16). The preschool years constitute a sensitive period for phonological development, a time when experience with language and its internalization lay the foundation for reading acquisition (17). Here, we examined preschoolers’ ability to synchronize their drumming to that of an experimenter (using drumming rates that approximated phonemic rates), language skills, and neural encoding of temporal modulations within speech syllables. Characterizing phonological skills in children before they begin explicit reading instruction offers insights into the preparative biology of reading. We predicted that poor auditory–motor timing, reflected by poor beat synchronization, relates to less precise neural representation of temporal amplitude modulations in speech and inferior perception of language primitives that pave the way for reading development (i.e., phonological processing, short-term memory, and rapid naming). If so, beat synchronization ability and neural auditory processing might serve as objective early markers for reading readiness, allowing clinicians to identify children at risk for language-learning difficulties and provide remediation before they fall behind their peers in reading achievement.

Results

Drumming Consistency.

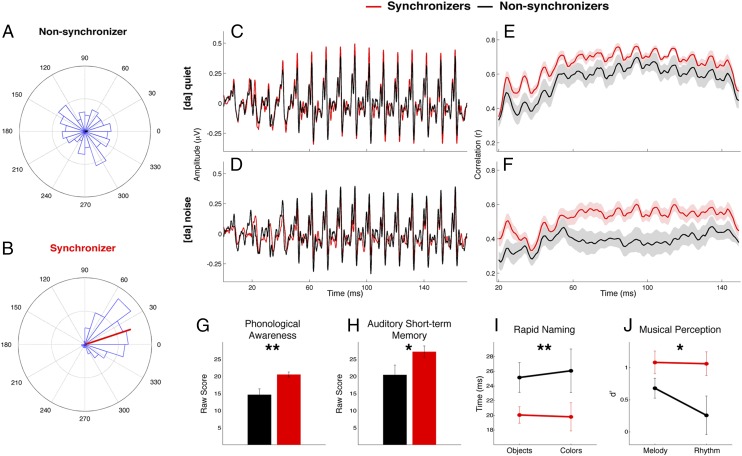

Based on whether they could synchronize to an acoustic beat at two speeds that overlapped with the stressed syllable rate of speech, children were grouped as “Synchronizers” (Rayleigh’s test P < 0.05, n = 22, 15 females) or “Non-synchronizers” (Rayleigh’s test P > 0.05, n = 13, 3 females) (see Fig. 1 A and B for drumming performance of representative participants in each group). Groups did not differ in age, verbal or nonverbal intelligence, or receptive vocabulary. Groups differed in sex ratio, with a greater proportion of males than females failing to synchronize; this observation may reflect the elevated rate of developmental reading disabilities in males typical of an at-risk population (18) or a maturational difference in motor development between the sexes. Because covarying for sex did not change our results, we report statistics without this covariate (see SI Analysis of Covariance for ANCOVA results). Group differences could not be attributed to peripheral auditory function: all participants passed otoscopy, tympanometry, and otoacoustic emissions screenings and had clinically normal click-evoked wave V auditory brainstem responses (ABRs). Summary statistics for the two groups are presented in Table S1.

Fig. 1.

The ability to synchronize to a beat relates to neural encoding of speech and prereading language metrics. Data for Synchronizers are shown in red, data for Non-synchronizers in black. (A and B) Phase histograms (blue) of representative participants’ drum hits across a drumming session relative to stimulus (0°). (A) The Non-synchronizer’s drum hits are distributed randomly throughout the stimulus-phase cycle, with a negligible phase vector (black). (B) The Synchronizer’s drum hits cluster around a time region just before the stimulus, indicating the child is predicting the beat. The length of the mean phase vector (red) corresponds to the consistency of the relationship between the time of the drum hit and the time of onset of the stimulus. (C and D) Synchronizers (red) and Non-synchronizers (black) did not differ in broadband subcortical encoding of the speech syllable [da] in (C) quiet or (D) background noise. (E and F) Synchronizers show selectively enhanced precision of envelope encoding, as evinced by higher stimulus-to-response envelope correlation values. (G–J) Synchronizers also performed better than Non-synchronizers on behavioral reading-related tasks measuring (G) phonological processing, (H) auditory short-term memory, (I) rapid naming, and (J) musical perception. *P < 0.05, **P < 0.01.

Language Metrics.

Synchronizers had better perceptual and cognitive language skills than Non-synchronizers (Fig. 1), as observed through tests of phonological awareness [only normed for and administered to 4-y-olds; n = 18 Synchronizers, n = 10 Non-synchronizers, F(1,26) = 13.378, P = 0.001, Cohen’s d = 1.33] and auditory short-term memory [F(1,32) = 4.885, P = 0.034, Cohen’s d = 0.74]. Synchronizers also were faster at naming objects and colors [F(1,32) = 6.794, P = 0.014, Cohen’s ds = 0.77 and 0.52, respectively]. In a test of musical perception, Synchronizers performed better at both melody and rhythm discrimination [F(1,32) = 5.423, P = 0.026, Cohen’s ds = 0.57 and 0.82, respectively], further substantiating our claim that Synchronizers are better tuned in to timing cues than Non-synchronizers and that Non-synchronizers’ inability to synchronize cannot necessarily be explained by an attentional or social disconnect or motoric challenges. Group means are presented in Table S2 and illustrated in Fig. 1 G–J.

Speech Syllable Envelope Encoding.

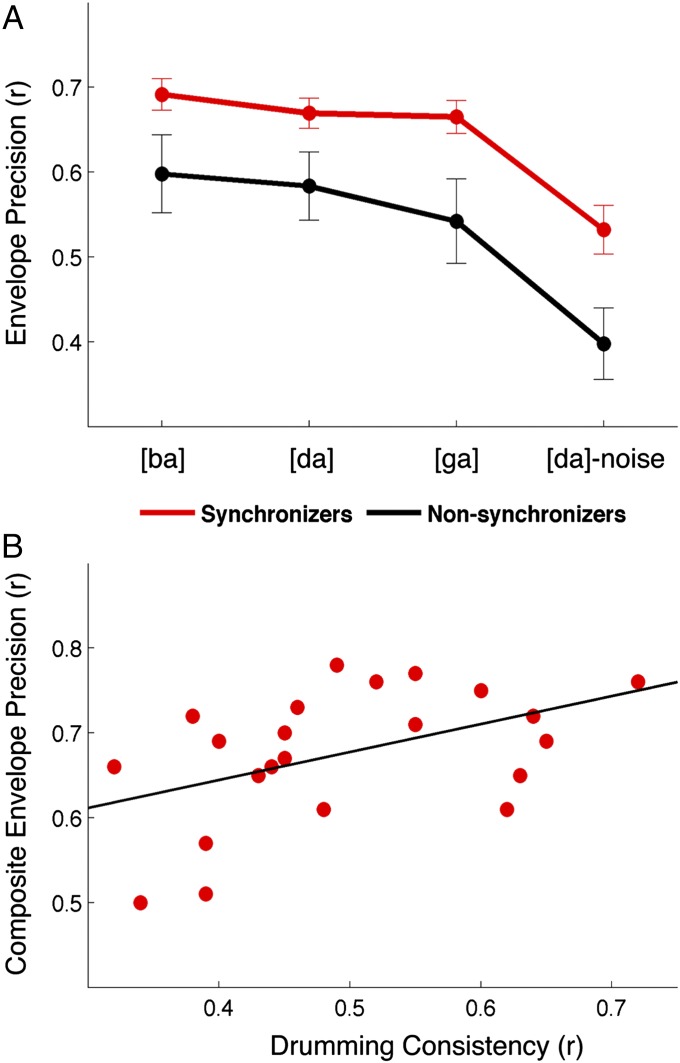

We elicited ABRs to complex sounds (cABRs) to the consonant-vowel syllables [ba], [da], and [ga] in quiet and to [da] in background noise ([da]-noise) to examine the neural encoding of speech syllables. We extracted and cross-correlated the envelopes of the responses with the envelopes of the evoking stimuli to determine the precision of neural encoding of the slowly modulating temporal features. This analysis revealed that Synchronizers had more precise neural encoding of the speech syllable envelope for all speech stimuli [repeated measures ANOVA (RMANOVA): F(1,33) = 7.173, P = 0.011, Cohen’s d of averaged stimuli = 0.89; Fig. 2A]. Envelope encoding differed between stimuli in quiet and noise [F(1,31) = 28.025, P < 0.001], with decreased neural response precision in noise. However, there was no stimulus × group interaction [F(1,31) = 0.492, P = 0.691], indicating that Synchronizers had better neural encoding of the speech envelope regardless of stimulus ([ba], [da], or [ga]) or condition (quiet or noise). This group difference could not be attributed to differences in the magnitude of the response [F(1,33) = 0.559, P = 0.460] or magnitude of spectral energy at the fundamental frequency of the response [F0; F(1,33) = 2.286, P = 0.140]. Within the Synchronizers, we found a systematic relationship between drumming consistency and neural precision: those who drummed more consistently had more precise encoding of the syllable envelopes in quiet (composite of [ba], [da], and [ga]: r(22) = 0.467, P = 0.029; Fig. 2B).

Fig. 2.

The relationship between beat synchronization and neural envelope encoding generalizes across a range of stimuli, and envelope precision correlates with drumming consistency. (A) Synchronizers (red) demonstrated more precise neural encoding of the speech envelope than Non-synchronizers (black) as evinced by greater correlations between stimulus and brainstem response envelopes across stimuli in both quiet and noise conditions. (B) Individual synchronization ability within the Synchronizers is correlated with more precise envelope encoding (a composite of [ba], [da], and [ga]).

Having established relationships between beat synchronization and prereading language skills on the one hand, and between beat synchronization and neural encoding of the speech syllable envelope on the other, we asked how beat synchronization and language skills combine to account for variance in the precision of neural encoding of sounds. We performed a hierarchical linear regression on the Synchronizer group, with stimulus-to-response envelope correlation as the dependent variable. On the first step, the independent variables sex, age, intelligence, and vocabulary did not significantly predict variance in neural encoding [R2 = 0.288, F(5,14) = 1.134, P = 0.388]. On the second step, we added the independent variables rapid naming time and auditory short-term memory. This step did not significantly improve the model [∆R2 = 0.216, F(2,12) = 2.622, P = 0.114] over and above age, sex, intelligence quotient (IQ), and vocabulary. On the third step, we added the independent variables drumming consistency and rhythmic perception and found that these rhythm metrics significantly improved the model, explaining an additional 30.1% of the variance in neural envelope encoding precision [F(2,10) = 7.719, P = 0.009] over and above demographic factors and language scores. Our overall model accounts for 80.5% [F(9,10) = 4.595, P = 0.013] of the variance in neural envelope encoding precision. See Table S3 for a full presentation of the regression results.
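Because each step's F statistic follows from the R² values already reported, the regression can be checked by hand. The sketch below applies the standard F-change formula, F = (ΔR²/k_added)/((1 − R²_full)/(n − k_full − 1)), assuming n = 20 Synchronizers with complete data, a value we infer from the first step's error df (14 = 20 − 5 − 1, with five predictors on that step). It does not refit the model, and the variable names are ours.

```python
# Recompute the hierarchical-regression F statistics from the reported R^2 values.
# Assumption: n = 20, inferred from F(5,14) on step 1 (5 predictors, error df 14).


def f_change(r2_reduced: float, r2_full: float, k_added: int, n: int, k_full: int) -> float:
    """F for the increase in R^2 when k_added predictors are added to a model,
    giving a full model with k_full predictors fitted to n cases."""
    df_error = n - k_full - 1
    return ((r2_full - r2_reduced) / k_added) / ((1.0 - r2_full) / df_error)


N = 20
STEPS = [
    # (predictors entered on this step, R^2 added by the step, number of predictors)
    ("sex, age, IQ, vocabulary", 0.288, 5),
    ("rapid naming, short-term memory", 0.216, 2),
    ("drumming consistency, rhythm perception", 0.301, 2),
]

r2_cumulative = 0.0
k_cumulative = 0
results = []  # (label, df1, df2, F)
for label, delta_r2, k_added in STEPS:
    r2_new = r2_cumulative + delta_r2
    k_cumulative += k_added
    f = f_change(r2_cumulative, r2_new, k_added, N, k_cumulative)
    results.append((label, k_added, N - k_cumulative - 1, f))
    r2_cumulative = r2_new

for label, df1, df2, f in results:
    print(f"{label}: F({df1},{df2}) = {f:.3f}")

# Overall model: all nine predictors against an intercept-only model.
overall_f = f_change(0.0, r2_cumulative, k_cumulative, N, k_cumulative)
print(f"overall: R^2 = {r2_cumulative:.3f}, F({k_cumulative},{N - k_cumulative - 1}) = {overall_f:.3f}")
```

The recomputed values (about 1.13, 2.61, 7.72, and 4.59) agree with the reported F statistics to within the rounding of the published R² values, and the three increments sum to the reported 80.5%.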

Discussion

We demonstrate that preschoolers unable to synchronize their motor output with a phonemic-paced external auditory beat have poorer prereading skills (phonological processing, auditory short-term memory, rapid naming) and music perception than their synchronizing peers. These children also demonstrate less precise neural encoding of the speech syllable envelope. Taken together, these results show that beat synchronization, the precision of neural encoding, and language skills are interrelated in preschoolers, and they suggest that auditory–motor synchrony underlies both beat-keeping and language development. These findings are consistent with previous studies suggesting that sensitivity to temporal modulations in speech is a key mechanism of reading development (15, 19), and we provide the first (to our knowledge) biological evidence of this phenomenon in preschoolers.

Across languages, reading is recognized as a difficult but imperative task for successful communication and education. Reading began so recently in human evolutionary history that reading skills must piggyback on brain circuits that evolved for other purposes, such as those for the acquisition of spoken language and attention; integration of these systems, together with the visual system, is necessary for fluency and automaticity in reading (20). Multiple theories have evolved to delineate the underlying impairment(s) responsible when this integration goes awry. Perceptual impairments of basic sensory or sensorimotor processing have been observed in many poor readers. These impairments have been investigated using tasks measuring visual (21, 22) or auditory processing (11, 23–25) across time scales, with dyslexics struggling to process fast temporal information in both visual and auditory domains.

A number of neurophysiological investigations have shaped what is known about the biology of reading, and a recurring theme is the importance of synchronous neural activity in subcortical and cortical structures. Poor readers have increased variability (i.e., less consistency) in their neural responses to speech (26, 27), a phenomenon also observed in a rat model of dyslexia (28). Additionally, poor readers have difficulty encoding rapidly changing frequency content in speech such as formant transitions in consonant-vowel syllables (12, 29, 30), inadequate timing of subcortical auditory encoding (31), and impoverished representation of speech harmonics (31). Germane to the current study, previous investigations have linked poor reading to deficient tracking of the temporal envelope of speech (5, 32–35). Here, we establish relationships between the precision of this temporal encoding, motor synchronization, and prereading skills. We suggest that auditory–motor synchronization and reading abilities rely on shared neural resources for temporal precision, which may be reflected by synchronous neural activity in the auditory midbrain (16).

The dyslexic brain has been described as “in tune but out of time” (36), suggesting that reading difficulties may be rooted in deficient tracking of speech rhythms manifested through envelope modulations, with reduced temporal sensitivity impeding phonological development. The Temporal Sampling Framework (TSF) elucidates how these auditory deficits come together to explain what may be at fault when learning to read proves challenging (7). The TSF resolves many discrepancies between competing theories of dyslexia, contending that substandard phonological development is rooted in deficient tracking of low-frequency modulations (i.e., 4–10 Hz) such as the amplitude envelope onset (rise-time) of sounds, which reduces temporal sensitivity and impedes phonological discrimination.

Our findings support this theory by explicitly demonstrating the importance of temporal sensitivity for language learning in prereaders. The significant differences we observed for neural envelope encoding between our preschool Synchronizers and Non-synchronizers are broadly consistent with previous findings that poor readers have impoverished encoding of acoustic envelope modulations (5, 33, 35). The gamut of theories implicating impaired neural encoding of acoustic signals allows us to postulate that children with developmental dyslexia struggle to make sound-to-meaning connections because of poor neural synchrony throughout the auditory system. Additionally, we provide further evidence that beat detection relates to individual differences in phonological processing and in sensitivity to fine-grained amplitude modulations in children too young to have attained reading competence. This result demonstrates that synchronous temporal precision is detectable across both auditory and motor domains and that assessing a child’s ability to entrain to a beat could be used as a behavioral marker of neural synchrony, providing an estimate of neural tracking of speech modulations and of the skills necessary for learning and manipulating the building blocks of language.

In fact, all children in this sample passed the criterion scores for each of the prereading tests and can be classified as performing within the “normal” range, indicating that this task captures variance in auditory and language processing across typically developing children rather than serving only as a means to detect disordered systems. Future work should evaluate how motor system development fits into this framework of auditory–motor integration, neural synchrony, and emergent language skills. Notably, Thomson and Goswami (13) assessed manual dexterity using the Purdue pegboard battery (37) and did not find a difference in motor skills between dyslexics and typically developing 10-y-olds.

The prominence and early emergence of beat-entrainment abilities could allow parents, educators, and clinicians to effectively target prereaders who may fall behind their peers in reading achievement. Auditory training has been shown to improve the precision and discrimination of acoustic components of speech sounds (38–40); therefore, anticipatory interventions based on music, particularly rhythm (41–44), might benefit children with developmental language disorders via subcortical motor structures (45, 46). More general attention and working memory training also might boost temporal processing via a prefrontal cognitive control system (47).

The preschool age is a necessary starting point for examining the developmental trajectory of reading aptitude: these children have not yet attained literacy competence, and at this age they have not yet undergone formal reading instruction. The present findings provide a window into language processing in children for whom we can begin to trace the maturation of language primitives that facilitate more complex language-based tasks such as reading. We report empirical evidence that auditory–motor synchrony may reflect biological processes related to the precision of neural temporal coding that underlie a child’s rhythmic development, sound processing, and language learning. Children who struggle to move synchronously to a beat may have poorer neural representation of sounds, and additional longitudinal research may show that this lack of neural synchrony could predict future struggles in learning to read or could put such children at risk for developing auditory processing disorders.

Materials and Methods

Participants.

Thirty-five children (18 females) between the ages of 3 and 4 y (Mean = 4.37, SD = 0.51) were recruited from the Chicago area. No child had a history of a neurologic condition, a diagnosis of autism spectrum disorder, or second language exposure. Parents completed a questionnaire about family history of learning disabilities. All children passed a screening of peripheral auditory function (normal otoscopy, tympanometry, and distortion product otoacoustic emissions at least 6 dB above the noise floor) and had normal click-evoked ABRs [identifiable wave V latency of <5.84 ms in response to a 100-μs square-wave click stimulus presented at 80 dB sound pressure level (SPL) in rarefaction at a rate of 31/s]. Informed consent and assent were obtained from legal guardians and children, respectively, in accordance with procedures approved by the Northwestern University Institutional Review Board, and children were monetarily compensated for their participation.

All behavioral and neurophysiological tests were presented to participants in randomized order over two to three sessions.

Behavioral Measures: Beat Synchronization.

Our drumming task was based on the social drumming paradigm of Kirschner and Tomasello (48), who found that preschoolers’ beat synchronization abilities are best evaluated in a social, joint-attention drumming condition. Two identical conga drums were placed adjacent to one another, with a DR-1 drum trigger (Pulse Percussion) attached to the underside of each drum head to record the drum hits of the experimenter and participant. The experimenter covertly listened and drummed to an isochronous pacing beat presented through in-ear headphones and encouraged the child to imitate and drum along with the experimenter. The auditory stimuli and the drum hits of both experimenter and participant were captured as separate channels of two stereo recordings made simultaneously on two different computers in Audacity version 2.0.5 (audacity.sourceforge.net). Before testing began, we confirmed that all experimenters were able to produce a steady beat (mean SD of interdrum intervals of 25 ms or less at each of the two experimental rates). After a brief practice session, four trials were performed: two at 2.5 Hz followed by two at 1.67 Hz. Each 2.5-Hz trial presented 50 isochronous drum sounds and each 1.67-Hz trial presented 33, so that each trial lasted about 20 s. Using two rates allowed us to assess general synchronization ability rather than the ability to synchronize to a specific rate, and it eliminated the potential bias of an individual’s preferred tempo for isochronous drumming.
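The trial arithmetic and the experimenter screening criterion above can be sketched in Python as follows. This is an illustrative sketch, not the laboratory's software: the helper names (`pacing_onsets`, `interval_sd_ms`, `experimenter_is_steady`) are hypothetical, and we assume the criterion means the per-trial sample SD of interdrum intervals, averaged across trials at each rate.

```python
import statistics

# Rates and beats per trial as stated in the text: 50 beats at 2.5 Hz, 33 at 1.67 Hz.
TRIALS = {2.5: 50, 1.67: 33}


def pacing_onsets(rate_hz: float, n_beats: int) -> list[float]:
    """Onset times (s) of an isochronous pacing beat starting at t = 0."""
    period_s = 1.0 / rate_hz
    return [i * period_s for i in range(n_beats)]


def interval_sd_ms(onsets_s: list[float]) -> float:
    """Sample SD (ms) of the intervals between successive drum hits."""
    intervals = [b - a for a, b in zip(onsets_s, onsets_s[1:])]
    return statistics.stdev(intervals) * 1000.0


def experimenter_is_steady(trials_by_rate: dict[float, list[list[float]]],
                           criterion_ms: float = 25.0) -> bool:
    """True if, at every rate, the mean interdrum-interval SD across trials is
    at or below the criterion (hypothetical reading of the screening rule)."""
    return all(
        statistics.mean(interval_sd_ms(trial) for trial in trials) <= criterion_ms
        for trials in trials_by_rate.values()
    )


for rate, n_beats in TRIALS.items():
    # n beats at this rate span n periods, i.e. roughly 20 s per trial.
    print(f"{rate} Hz: {n_beats} beats, {1000 / rate:.0f}-ms period, ~{n_beats / rate:.1f} s")
```

At 1.67 Hz, 33 beats span about 19.8 s, which the text rounds to 20 s.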

Data processing.

Data were processed using software developed in house in MATLAB. First, for each trial, drum hits for both experimenter and participant and pacing stimulus onsets were detected by setting an amplitude threshold and a refractory time on a subject-by-subject basis. Starting at the beginning of the track, the first point at which the signal exceeded the amplitude threshold was marked as an onset. To ensure that multiple onsets were not marked for each drum or stimulus hit, each onset was followed by a refractory period during which the program did not mark onsets, regardless of amplitude. Given the high degree of intersubject variability in the strength of drum hits and the rapidity of the drumming, amplitude thresholds and refractory times were selected manually for each participant, and the accuracy of onset marking was checked manually. When synchronizing to a metronome, drummers tend to anticipate the beat (49), so the experimenter’s drumming, which was the stimulus the participants were actually synchronizing to, tended to occur slightly earlier than the pacing track itself. Therefore, the average offset between the experimenter’s drum hits and the pacing stimulus onsets was subtracted from the time of each drum hit in the participant’s data.
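A minimal Python sketch of the threshold-plus-refractory onset marking and the offset correction described above. The function names are hypothetical; we assume the signal is a list of samples, that a crossing means the absolute sample value exceeds the threshold, and that each experimenter hit is paired with its nearest pacing onset. In the study itself, thresholds and refractory times were chosen by hand for each participant and the marked onsets were checked manually.

```python
import statistics


def detect_onsets(signal: list[float], fs: float, threshold: float,
                  refractory_s: float) -> list[float]:
    """Mark an onset at the first sample whose magnitude exceeds `threshold`,
    then ignore all samples for `refractory_s` seconds, regardless of amplitude."""
    refractory = max(1, int(round(refractory_s * fs)))  # at least one sample
    onsets, i = [], 0
    while i < len(signal):
        if abs(signal[i]) > threshold:
            onsets.append(i / fs)
            i += refractory  # skip the refractory window
        else:
            i += 1
    return onsets


def correct_participant_hits(participant_s: list[float], experimenter_s: list[float],
                             pacing_s: list[float]) -> list[float]:
    """Subtract the experimenter's mean offset from the pacing onsets (negative
    when the experimenter anticipates) from every participant hit time."""
    offsets = [e - min(pacing_s, key=lambda p: abs(p - e)) for e in experimenter_s]
    mean_offset = statistics.mean(offsets)
    return [t - mean_offset for t in participant_s]
```

For example, if the experimenter hits 20 ms ahead of the pacing onsets on average, every participant hit is shifted 20 ms later, so phases are effectively measured relative to the experimenter's drumming rather than the hidden pacing track.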

Data analysis.

Synchronization performance commonly is assessed by examining the variability of the intertap intervals produced. However, this procedure relies on participants producing roughly one drum hit per metronome tick and is therefore unsuitable for younger populations, whose performance is inherently more variable. We therefore measured synchronization ability using circular statistics (48). We assigned each drum hit a phase angle in degrees by subtracting the onset time of the drum hit from the onset of the stimulus hit nearest in time, dividing the result by the interstimulus interval, and multiplying by 360. We then represented each drum hit as a unit vector at its phase angle, summed all the vectors, and divided the result by the number of drum hits produced, resulting in a mean vector R (see two representative participants in Fig. 1 A and B). The angle of this vector represents the extent to which the subject tended to lead or follow the stimulus hits, and the length of the vector measures the extent to which participants maintained a constant temporal relationship between their drum hits and the stimulus hits (i.e., the extent to which they synchronized). The length of vector R (described in Results as “drumming consistency”) was computed for each trial and averaged across the two trials at each of the two drumming rates. Rayleigh’s test, which tests the consistency in the phase of the responses against a uniform distribution around the circle, was applied to the set of all of the vectors produced in the two trials for a given rate to determine whether a participant was significantly synchronizing to a stimulus; that is, the two trials at each rate were combined to compute one Rayleigh’s P value per rate. If a child’s Rayleigh’s test resulted in a P value of less than 0.05 at both rates, the child was deemed a Synchronizer. If the P value was greater than 0.05 at both rates, the child was categorized as a Non-synchronizer.
These P value thresholds were motivated by prior work by Kirschner and Tomasello (48) for determining synchronization in young children. P values for individuals at each rate are detailed in Fig. S1.
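The phase assignment, mean vector, and Rayleigh-based grouping above can be sketched as follows. The text does not say how Rayleigh's P was computed; this sketch assumes Zar's widely used approximation to the Rayleigh P value. All function names are hypothetical, and returning `None` for a child who is significant at only one rate is our assumption, since the text describes only the two unanimous cases.

```python
import math


def hit_phases_deg(hits_s, stimulus_s, isi_s):
    """Phase (deg) of each hit: (nearest stimulus onset - hit onset) / ISI * 360."""
    phases = []
    for hit in hits_s:
        nearest = min(stimulus_s, key=lambda s: abs(s - hit))
        phases.append((nearest - hit) / isi_s * 360.0)
    return phases


def mean_vector(phases_deg):
    """Mean of unit vectors at the given angles -> (length R, angle in degrees)."""
    n = len(phases_deg)
    x = sum(math.cos(math.radians(p)) for p in phases_deg) / n
    y = sum(math.sin(math.radians(p)) for p in phases_deg) / n
    return math.hypot(x, y), math.degrees(math.atan2(y, x))


def rayleigh_p(phases_deg):
    """Rayleigh test of circular uniformity; P from Zar's approximation."""
    n = len(phases_deg)
    r, _ = mean_vector(phases_deg)
    rn = r * n  # length of the summed (not averaged) resultant vector
    p = math.exp(math.sqrt(1 + 4 * n + 4 * (n * n - rn * rn)) - (1 + 2 * n))
    return min(p, 1.0)


def drumming_consistency(trial_phases):
    """Vector length R per trial, averaged over all trials at both rates."""
    return sum(mean_vector(t)[0] for t in trial_phases) / len(trial_phases)


def classify(p_by_rate, alpha=0.05):
    """Synchronizer if P < alpha at every rate; Non-synchronizer if P > alpha at
    every rate; None otherwise (a case the text does not describe)."""
    ps = list(p_by_rate.values())
    if all(p < alpha for p in ps):
        return "Synchronizer"
    if all(p > alpha for p in ps):
        return "Non-synchronizer"
    return None
```

Following the text, the phases from the two trials at a rate are pooled before testing, e.g. `rayleigh_p(trial1 + trial2)` for each rate, and the resulting pair of P values is passed to `classify`.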

Behavioral Measures: IQ and Vocabulary.

Verbal and nonverbal IQ scores were estimated with the Wechsler Preschool and Primary Scale of Intelligence, third edition (Pearson/PsychCorp), which is designed to measure cognitive development in preschoolers. We administered the information subtest to assess verbal IQ and the object assembly (3-y-olds) or matrix reasoning (4-y-olds) subtest to measure nonverbal IQ. Receptive vocabulary was assessed using the National Institutes of Health’s Toolbox for Assessment of Neurological and Behavioral Function Picture Vocabulary Test (50). Scaled scores were computed to compare group performance across age groups.

Behavioral Measures: Language Assessment: Phonological Awareness and Verbal Memory.

Language abilities were measured with the Phonological Awareness (4-y-olds) and Recalling Sentences (3- and 4-y-olds) subtests of the Clinical Evaluation of Language Fundamentals Preschool, second edition (Pearson/PsychCorp) (51). The Phonological Awareness subtest assesses the child’s knowledge of the sound structure of language and ability to manipulate sounds through compound word and syllable blending, sentence and syllable segmentation, and rhyme awareness and production. Raw scores were computed and used for analyses, with higher scores indicating better performance. This subtest was administered only to 4-y-olds (n = 10 Non-synchronizers; 18 Synchronizers). The Recalling Sentences subtest, a measure of auditory short-term memory, evaluates the child’s ability to repeat sentences of varying length and complexity without changing any word meanings or structure. This subtest measures a skill needed for learning beyond simply following directions, because the child’s response indicates whether critical meaning and structural features have been internalized for recall. Raw scores were computed for each child for comparisons between synchronization groups.

Behavioral Measures: Rapid Automatized Naming.

Rapid automatized naming (RAN) was assessed with the Prekindergarten RAN test (Pro-Ed, Inc.). This test is designed to estimate a child’s ability to recognize and name visual symbols rapidly and accurately (52). We used the colors and objects subtests, each of which comprised five high-frequency stimuli that were repeated randomly for a total of 15 stimulus items. Each test was prefaced with a familiarization task to ensure the child knew the names of each item. During the test, the participant was asked to name each item as quickly as possible without making mistakes. Scores reported are the amount of time required to name all of the items on each test. Two children were outliers on the color naming subtest (scores >2 SDs above the mean), and their scores were corrected to mean + 2 SD. One child did not know the colors and was subsequently excluded from RAN analyses.
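The outlier correction amounts to a one-sided cap at the mean plus 2 SD. In this hedged sketch we assume the mean and sample SD were computed over all children's color-naming times, outliers included, which the text does not specify; the function name is hypothetical.

```python
import statistics


def cap_high_outliers(scores, n_sd=2.0):
    """Replace scores more than n_sd SDs above the mean with mean + n_sd * SD.

    Assumption: mean and (sample) SD are computed from all scores, outliers included.
    Only the upper tail is capped, because longer naming times are the slow outliers.
    """
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)
    ceiling = mean + n_sd * sd
    return [min(score, ceiling) for score in scores]
```

Capping rather than dropping keeps both children in the analysis while limiting how far their times can pull the group means.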

Behavioral Measures: Music Perception.

Gordon’s AUDIE (53) musical perception assessment for 3- and 4-y-olds comprises two subtests designed to assess tonal (AUDIE-Melody) and rhythmic (AUDIE-Rhythm) aptitude. Each subtest contains 10 items. In the recorded directions at the beginning of each subtest, the child is taught “Audie’s special song,” a three-note phrase that the experimenter pretended was sung by a plush animal. For the melody subtest, children listen to similar short phrases and are asked to state whether each is or is not Audie’s special song. If a phrase differs from Audie’s song, it is because the melody is different; all elements of the song other than pitch are held constant. In the rhythm subtest, children perform the same 10-item task while listening for rhythmic similarities and differences. AUDIE was administered by cassette tape, with the experimenter providing encouragement between trials. Sensitivity (d′) scores were computed according to signal detection theory for group comparisons of performance on each subtest.
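The d′ computation can be sketched with the standard normal quantile function from Python's `statistics.NormalDist`. We assume that "different" items are the signal trials (a hit is answering "not Audie's song" to a changed phrase; a false alarm is giving that answer to the special song itself) and apply a log-linear correction so that perfect scores stay finite; neither choice is stated in the text, and the item split in the example is hypothetical.

```python
from statistics import NormalDist


def d_prime(hits, n_signal, false_alarms, n_noise):
    """Sensitivity d' = z(hit rate) - z(false-alarm rate).

    A log-linear correction (add 0.5 to each count and 1 to each trial total)
    keeps rates of 0 or 1, common with 10-item tests, from giving infinite z.
    """
    z = NormalDist().inv_cdf  # standard normal quantile function
    hit_rate = (hits + 0.5) / (n_signal + 1)
    fa_rate = (false_alarms + 0.5) / (n_noise + 1)
    return z(hit_rate) - z(fa_rate)


# Hypothetical child: 5 "different" and 5 "same" items (the real split is not given).
print(round(d_prime(hits=4, n_signal=5, false_alarms=1, n_noise=5), 2))
```

Unlike percent correct, d′ separates a child's sensitivity to melodic or rhythmic change from a bias toward answering "different" (or "same").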

Electrophysiological Recordings.

Stimuli.

cABRs were elicited to 170-ms six-formant stop consonant-vowel speech syllables, [ba], [da], and [ga], at 80 dB SPL at a presentation rate of 4.35 Hz. These syllables were synthesized at 20 kHz using a Klatt-based formant synthesizer (54), with voicing onset at 5 ms, a 50-ms formant transition, and a 120-ms steady-state vowel. Stimuli differed only in the onset frequency of the second formant (F2) during the 50-ms formant transition period (F2 [ba] = 900 Hz, F2 [da] = 1,700 Hz, F2 [ga] = 2,480 Hz), transitioning to 1,240 Hz during the steady-state vowel portion. Over the transition period for all stimuli, the first and third formants were dynamic (F1 from 400–720 Hz, F3 from 2,580–2,500 Hz), whereas the fundamental frequency and the fourth, fifth, and sixth formants were constant (F0 = 100, F4 = 3,300, F5 = 3,750, and F6 = 4,900 Hz). The stimulus [da] was also presented in a “noise” condition: the previously described [da] in the context of multitalker background babble, [da]-noise, adapted from Van Engen and Bradlow (55). The looped babble track (45-s duration) was presented at a signal-to-noise ratio of +10 dB relative to the signal ([da]) based on the root mean square amplitude of the entire noise track. All stimuli were presented in alternating polarities (stimulus waveform was inverted 180°) with an 81-ms interstimulus interval controlled by E-Prime version 2.0 (Psychology Software Tools, Inc.). For each stimulus, 4,200 artifact-free sweeps (amplitudes ±35 μV rejected as artifact) were presented, and the presentation order was randomized for each subject. These stimuli have been used in previous work from our laboratory (see ref. 28).
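Setting the babble level for the [da]-noise condition amounts to rescaling the noise so that the RMS ratio corresponds to +10 dB. The sketch below assumes SNR = 20·log10(RMS_signal/RMS_noise), with the signal RMS taken over the whole [da] token and the noise RMS over the entire babble track, as stated; the function names are hypothetical.

```python
import math


def rms(x):
    """Root mean square amplitude of a sequence of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def scale_noise_to_snr(signal, noise, snr_db=10.0):
    """Return `noise` rescaled so that 20*log10(rms(signal) / rms(noise)) == snr_db.

    The noise RMS is measured over the entire (looped) babble track.
    """
    target_noise_rms = rms(signal) / 10 ** (snr_db / 20.0)
    gain = target_noise_rms / rms(noise)
    return [gain * v for v in noise]


def measured_snr_db(signal, noise):
    """SNR in dB computed from the two RMS amplitudes."""
    return 20.0 * math.log10(rms(signal) / rms(noise))
```

At +10 dB the babble RMS is about 0.32 times the [da] RMS, since 10^(−10/20) ≈ 0.316.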

Recording parameters.

The cABR is an automatic response that can be recorded under passive listening conditions (56). To decrease myogenic noise, participants sat comfortably while watching a movie of their choice during each recording session in an electrically shielded sound-proof booth. Stimuli were presented monaurally to the right ear through an insert earphone (ER-3, Etymotic Research). The left ear remained unblocked so that the soundtrack of the movie (<40 dB SPL) was audible but not loud enough to mask the presented stimuli. cABRs were collected using a BioSEMI Active2 recording system with an ActiABR module recorded into LabView 2.0 (National Instruments). Responses were digitized at 16.384 kHz and collected with an online bandpass filter from 100–3,000 Hz (20 dB per decade roll-off). The active electrode was placed at the vertex (Cz), with references at each earlobe. Grounding electrodes Common Mode Sense (CMS) and Driven Right Leg (DRL) were placed on the left and right sides of the forehead at Fp1 and Fp2. Only ipsilateral (Cz-Right earlobe) responses were used in analysis. Offset voltage was <50 mV for all electrodes. Because of the challenges inherent in obtaining neurophysiological recordings from small children who find it difficult to sit still through long testing sessions, data were collected over two or three sessions within a period of 1 mo.

Data reduction and processing.

Responses were amplified offline in the frequency domain by 20 dB per decade for three decades below 100 Hz. The amplified responses were then bandpass filtered from 70 to 2,000 Hz (12 dB per octave roll-off), segmented into epochs from −40 to 213 ms (stimulus onset at 0 ms), and baseline-corrected to the prestimulus period. Epochs exceeding ±35 µV were rejected as artifacts, and the remaining sweeps were averaged. The final response to each syllable comprised 2,000 artifact-free sweeps of each polarity; responses to the two polarities were added to limit the influence of the cochlear microphonic and stimulus artifact (57) and to emphasize the temporal envelope component of the cABR (58). All data reduction was performed using custom routines in MATLAB (MathWorks, Inc.).
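
The epoching, artifact-rejection, and polarity-addition steps can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the custom MATLAB routines used in the study: the 70–2,000 Hz filter is approximated by a zero-phase, second-order Butterworth magnitude response applied in the frequency domain (second order giving a 12 dB per octave roll-off), the offline low-frequency amplification step is omitted, and the function names and synthetic recording used to exercise the code are hypothetical.

```python
import numpy as np

FS = 16_384.0  # Hz, digitization rate reported above


def bandpass_fft(x, fs, low_hz=70.0, high_hz=2000.0, order=2):
    """Zero-phase band-pass: scale the spectrum by Butterworth high- and low-pass magnitudes."""
    f = np.fft.rfftfreq(x.size, d=1.0 / fs)
    gain = np.zeros_like(f)
    nz = f > 0  # the high-pass term removes DC entirely
    gain[nz] = 1.0 / np.sqrt(1.0 + (low_hz / f[nz]) ** (2 * order))
    gain *= 1.0 / np.sqrt(1.0 + (f / high_hz) ** (2 * order))
    return np.fft.irfft(np.fft.rfft(x) * gain, n=x.size)


def reduce_responses(raw_uv, onsets, polarities, fs=FS, t_min=-0.040, t_max=0.213,
                     reject_uv=35.0, max_per_polarity=2000):
    """Filter, epoch, baseline-correct, reject artifacts, average per polarity, then add.

    raw_uv: continuous single-channel recording in microvolts.
    onsets: sample index of each stimulus onset; polarities: +1 or -1 per onset.
    Returns the added response and the number of sweeps kept for each polarity.
    """
    filtered = bandpass_fft(np.asarray(raw_uv, dtype=float), fs)
    pre, post = int(round(-t_min * fs)), int(round(t_max * fs))
    kept = {1: [], -1: []}
    for onset, pol in zip(onsets, polarities):
        if onset - pre < 0 or onset + post > filtered.size:
            continue  # epoch would run past the edge of the recording
        if len(kept[pol]) >= max_per_polarity:
            continue  # already have enough artifact-free sweeps of this polarity
        epoch = filtered[onset - pre:onset + post].copy()
        epoch -= epoch[:pre].mean()  # baseline = prestimulus period
        if np.max(np.abs(epoch)) > reject_uv:
            continue  # reject as artifact
        kept[pol].append(epoch)
    if not kept[1] or not kept[-1]:
        raise ValueError("no artifact-free sweeps for at least one polarity")
    # Adding the two polarity averages cancels components that invert with the
    # stimulus (e.g., cochlear microphonic, stimulus artifact) and emphasizes the envelope.
    added = np.mean(kept[1], axis=0) + np.mean(kept[-1], axis=0)
    return added, len(kept[1]), len(kept[-1])
```

Rejection is applied after filtering and baseline correction so that the ±35 µV criterion refers to the same waveform that enters the average.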

Data analysis.

To analyze how faithfully each participant’s brainstem response represented the evoking stimulus, the stimulus was first bandpass filtered to match the response characteristics (70–2,000 Hz, 12 dB per octave roll-off). We then applied a Hilbert transform to the stimulus and response waveforms, low-pass filtered the results at 200 Hz, and rectified them to obtain the broadband amplitude envelopes of the stimulus and response (Fig. S2). To quantify the precision of each participant’s neural encoding of the envelope, the stimulus envelope was cross-correlated with the response envelope by shifting the stimulus relative to the response over lags from 5 to 12 ms, and the maximum correlation within this window is reported. This range of lags was chosen because it encompasses the neural transmission delay between the cochlea and the rostral brainstem. All data analyses were performed in MATLAB.
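
The envelope-extraction and lagged cross-correlation steps can be sketched as below. This is a hedged NumPy illustration, not the study’s MATLAB code. It assumes that the stimulus and response have already been resampled to a common rate (the stimulus was synthesized at 20 kHz, whereas responses were digitized at 16.384 kHz); it approximates every filter with a zero-phase Butterworth magnitude response applied in the frequency domain, using a second-order low-pass at 200 Hz because the slope of that filter is not stated; and it computes the Hilbert transform with the standard FFT construction of the analytic signal. All function names are hypothetical.

```python
import numpy as np


def fft_filter(x, fs, low_hz=None, high_hz=None, order=2):
    """Zero-phase filter: scale the spectrum by Butterworth magnitude responses.

    order=2 gives a 12 dB per octave roll-off; low_hz sets a high-pass edge and
    high_hz a low-pass edge (either may be None).
    """
    f = np.fft.rfftfreq(x.size, d=1.0 / fs)
    gain = np.ones_like(f)
    if low_hz is not None:
        hp = np.zeros_like(f)
        nz = f > 0
        hp[nz] = 1.0 / np.sqrt(1.0 + (low_hz / f[nz]) ** (2 * order))
        gain *= hp
    if high_hz is not None:
        gain *= 1.0 / np.sqrt(1.0 + (f / high_hz) ** (2 * order))
    return np.fft.irfft(np.fft.rfft(x) * gain, n=x.size)


def analytic_signal(x):
    """Analytic signal via the FFT (the construction used by scipy.signal.hilbert)."""
    n = x.size
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)


def broadband_envelope(x, fs, lowpass_hz=200.0):
    """Hilbert magnitude, low-pass filtered at 200 Hz, then rectified."""
    env = np.abs(analytic_signal(x))
    return np.abs(fft_filter(env, fs, high_hz=lowpass_hz))


def max_lagged_correlation(stim_env, resp_env, fs, min_lag_s=0.005, max_lag_s=0.012):
    """Largest Pearson r when the response trails the stimulus by 5-12 ms.

    At lag L samples, stimulus sample t is paired with response sample t + L.
    Returns (r, lag in seconds).
    """
    n = min(stim_env.size, resp_env.size)
    best_r, best_lag = -np.inf, 0
    for lag in range(int(round(min_lag_s * fs)), int(round(max_lag_s * fs)) + 1):
        r = np.corrcoef(stim_env[:n - lag], resp_env[lag:n])[0, 1]
        if r > best_r:
            best_r, best_lag = r, lag
    return best_r, best_lag / fs
```

In use, the stimulus would pass through `fft_filter` with the 70 and 2,000 Hz edges before `broadband_envelope`, the already-filtered response would go straight to `broadband_envelope`, and the two envelopes would then be handed to `max_lagged_correlation`, whose returned r is the envelope-precision score.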

Statistical Analysis.

A mixed-model repeated-measures ANOVA (RMANOVA) was used to compare neural encoding of the envelope across the four stimuli ([ba]/[da]/[ga]/[da]-noise) between the two groups, with stimulus as a within-subjects factor and group (Synchronizers/Non-synchronizers) as a between-subjects factor. Post hoc paired and independent-samples t tests were performed to characterize the observed effects. Additional ANOVAs compared behavioral performance between the two groups. All reported P values are two-tailed. Pearson correlations were also computed between variables, and a hierarchical three-step linear regression was used to determine how much variance in neural encoding was predicted by the behavioral measures. Dependent variables met the assumptions of the linear model (normality and sphericity). Statistics were computed using SPSS (SPSS, Inc.).
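
The logic of a hierarchical three-step regression, in which predictor blocks are entered cumulatively and the change in explained variance (ΔR²) is examined at each step, can be sketched with ordinary least squares in NumPy. The study used SPSS, and the predictors entered at each step are not described in this section, so the three predictor blocks below are hypothetical placeholders; significance tests for each ΔR² are omitted.

```python
import numpy as np


def r_squared(X, y):
    """R^2 of an ordinary least-squares fit of y on the columns of X plus an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ beta
    return 1.0 - residuals @ residuals / np.sum((y - y.mean()) ** 2)


def hierarchical_regression(blocks, y):
    """Enter predictor blocks cumulatively; return (R^2, delta R^2) for each step."""
    X = np.empty((len(y), 0))
    previous_r2, steps = 0.0, []
    for block in blocks:
        X = np.column_stack([X, block])  # add this step's predictors to the model
        r2 = r_squared(X, y)
        steps.append((r2, r2 - previous_r2))
        previous_r2 = r2
    return steps


# Hypothetical data: three single-predictor blocks and a simulated outcome.
rng = np.random.default_rng(3)
step1, step2, step3 = rng.standard_normal((3, 200))
outcome = 2.0 * step1 + 1.0 * step2 + 0.1 * rng.standard_normal(200)
for i, (r2, delta) in enumerate(hierarchical_regression([step1, step2, step3], outcome), 1):
    print(f"step {i}: R^2 = {r2:.3f}, delta R^2 = {delta:.3f}")
```

Because each model nests the previous one, ΔR² cannot be negative, and the ΔR² values sum to the R² of the final model.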

Acknowledgments

We thank members of the Auditory Neuroscience Laboratory for their assistance with data collection, as well as Trent Nicol, Jessica Slater, and Erika Skoe for comments on an earlier draft of the manuscript. We also thank Steven Zecker and Casey Lew-Williams for valuable insight and analysis suggestions. This work was supported by National Institutes of Health Grants R01 HD069414 (to N.K.) and T32 DC009399 (to K.W.C.) and by the Knowles Hearing Center of Northwestern University (N.K.).

Footnotes

The authors declare no conflict of interest.

*This Direct Submission article had a prearranged editor.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1406219111/-/DCSupplemental.

References

- 1. Felsenfeld S, Broen PA, McGue M. A 28-year follow-up of adults with a history of moderate phonological disorder: Educational and occupational results. J Speech Hear Res. 1994;37(6):1341–1353. doi: 10.1044/jshr.3706.1341.

- 2. Démonet J-F, Taylor MJ, Chaix Y. Developmental dyslexia. Lancet. 2004;363(9419):1451–1460. doi: 10.1016/S0140-6736(04)16106-0.

- 3. Ramus F, Hauser MD, Miller C, Morris D, Mehler J. Language discrimination by human newborns and by cotton-top tamarin monkeys. Science. 2000;288(5464):349–351. doi: 10.1126/science.288.5464.349.

- 4. Eimas PD, Siqueland ER, Jusczyk P, Vigorito J. Speech perception in infants. Science. 1971;171(3968):303–306. doi: 10.1126/science.171.3968.303.

- 5. Goswami U, et al. Amplitude envelope onsets and developmental dyslexia: A new hypothesis. Proc Natl Acad Sci USA. 2002;99(16):10911–10916. doi: 10.1073/pnas.122368599.

- 6. Huss M, Verney JP, Fosker T, Mead N, Goswami U. Music, rhythm, rise time perception and developmental dyslexia: Perception of musical meter predicts reading and phonology. Cortex. 2011;47(6):674–689. doi: 10.1016/j.cortex.2010.07.010.

- 7. Goswami U. A temporal sampling framework for developmental dyslexia. Trends Cogn Sci. 2011;15(1):3–10. doi: 10.1016/j.tics.2010.10.001.

- 8. Liberman IY, Shankweiler D, Fischer FW, Carter B. Explicit syllable and phoneme segmentation in the young child. J Exp Child Psychol. 1974;18(2):201–212.

- 9. Pugh KR, et al. The relationship between phonological and auditory processing and brain organization in beginning readers. Brain Lang. 2013;125(2):173–183. doi: 10.1016/j.bandl.2012.04.004.

- 10. Ramus F, Marshall CR, Rosen S, van der Lely HKJ. Phonological deficits in specific language impairment and developmental dyslexia: Towards a multidimensional model. Brain. 2013;136(Pt 2):630–645. doi: 10.1093/brain/aws356.

- 11. Tallal P. Auditory temporal perception, phonics, and reading disabilities in children. Brain Lang. 1980;9(2):182–198. doi: 10.1016/0093-934x(80)90139-x.

- 12. Hornickel J, Skoe E, Nicol T, Zecker S, Kraus N. Subcortical differentiation of stop consonants relates to reading and speech-in-noise perception. Proc Natl Acad Sci USA. 2009;106(31):13022–13027. doi: 10.1073/pnas.0901123106.

- 13. Thomson JM, Goswami U. Rhythmic processing in children with developmental dyslexia: Auditory and motor rhythms link to reading and spelling. J Physiol Paris. 2008;102(1-3):120–129. doi: 10.1016/j.jphysparis.2008.03.007.

- 14. Thomson JM, Fryer B, Maltby J, Goswami U. Auditory and motor rhythm awareness in adults with dyslexia. J Res Read. 2006;29(3):334–348.

- 15. Tierney AT, Kraus N. The ability to tap to a beat relates to cognitive, linguistic, and perceptual skills. Brain Lang. 2013;124(3):225–231. doi: 10.1016/j.bandl.2012.12.014.

- 16. Tierney A, Kraus N. The ability to move to a beat is linked to the consistency of neural responses to sound. J Neurosci. 2013;33(38):14981–14988. doi: 10.1523/JNEUROSCI.0612-13.2013.

- 17. Ruben RJ. A time frame of critical/sensitive periods of language development. Acta Otolaryngol. 1997;117(2):202–205. doi: 10.3109/00016489709117769.

- 18. Rutter M, et al. Sex differences in developmental reading disability: New findings from 4 epidemiological studies. JAMA. 2004;291(16):2007–2012. doi: 10.1001/jama.291.16.2007.

- 19. Goswami U, Gerson D, Astruc L. Amplitude envelope perception, phonology and prosodic sensitivity in children with developmental dyslexia. Read Writ. 2009;23(8):995–1019.

- 20. Dehaene S. Reading in the Brain: The Science and Evolution of a Human Invention. Penguin; New York: 2009.

- 21. Stein J, Walsh V. To see but not to read; the magnocellular theory of dyslexia. Trends Neurosci. 1997;20(4):147–152. doi: 10.1016/s0166-2236(96)01005-3.

- 22. Skottun BC. The magnocellular deficit theory of dyslexia: The evidence from contrast sensitivity. Vision Res. 2000;40(1):111–127. doi: 10.1016/s0042-6989(99)00170-4.

- 23. Ahissar M, Hochstein S. The reverse hierarchy theory of visual perceptual learning. Trends Cogn Sci. 2004;8(10):457–464. doi: 10.1016/j.tics.2004.08.011.

- 24. Corriveau KH, Goswami U, Thomson JM. Auditory processing and early literacy skills in a preschool and kindergarten population. J Learn Disabil. 2010;43(4):369–382. doi: 10.1177/0022219410369071.

- 25. Heim S, Friedman JT, Keil A, Benasich AA. Reduced sensory oscillatory activity during rapid auditory processing as a correlate of language-learning impairment. J Neurolinguist. 2011;24(5):539–555. doi: 10.1016/j.jneuroling.2010.09.006.

- 26. Hornickel J, Kraus N. Unstable representation of sound: A biological marker of dyslexia. J Neurosci. 2013;33(8):3500–3504. doi: 10.1523/JNEUROSCI.4205-12.2013.

- 27. Wible B, Nicol T, Kraus N. Correlation between brainstem and cortical auditory processes in normal and language-impaired children. Brain. 2005;128(Pt 2):417–423. doi: 10.1093/brain/awh367.

- 28. Centanni TM, et al. Knockdown of the dyslexia-associated gene Kiaa0319 impairs temporal responses to speech stimuli in rat primary auditory cortex. Cereb Cortex. 2013;24(7):1753–1766. doi: 10.1093/cercor/bht028.

- 29. Kraus N, et al. Auditory neurophysiologic responses and discrimination deficits in children with learning problems. Science. 1996;273(5277):971–973. doi: 10.1126/science.273.5277.971.

- 30. White-Schwoch T, Kraus N. Physiologic discrimination of stop consonants relates to phonological skills in pre-readers: A biomarker for subsequent reading ability? Front Hum Neurosci. 2013;7:899. doi: 10.3389/fnhum.2013.00899.

- 31. Banai K, et al. Reading and subcortical auditory function. Cereb Cortex. 2009;19(11):2699–2707. doi: 10.1093/cercor/bhp024.

- 32. Pasquini ES, Corriveau KH, Goswami U. Auditory processing of amplitude envelope rise time in adults diagnosed with developmental dyslexia. Sci Stud Read. 2007;11(3):259–286.

- 33. Abrams DA, Nicol T, Zecker S, Kraus N. Abnormal cortical processing of the syllable rate of speech in poor readers. J Neurosci. 2009;29(24):7686–7693. doi: 10.1523/JNEUROSCI.5242-08.2009.

- 34. Witton C, et al. Sensitivity to dynamic auditory and visual stimuli predicts nonword reading ability in both dyslexic and normal readers. Curr Biol. 1998;8(14):791–797. doi: 10.1016/s0960-9822(98)70320-3.

- 35. Lehongre K, Ramus F, Villiermet N, Schwartz D, Giraud A-L. Altered low-γ sampling in auditory cortex accounts for the three main facets of dyslexia. Neuron. 2011;72(6):1080–1090. doi: 10.1016/j.neuron.2011.11.002.

- 36. Corriveau KH, Goswami U. Rhythmic motor entrainment in children with speech and language impairments: Tapping to the beat. Cortex. 2009;45(1):119–130. doi: 10.1016/j.cortex.2007.09.008.

- 37. Tiffin J. The Purdue Pegboard Battery. Lafayette Instrument Company; Lafayette, IN: 1999.

- 38. Tallal P, et al. Language comprehension in language-learning impaired children improved with acoustically modified speech. Science. 1996;271(5245):81–84. doi: 10.1126/science.271.5245.81.

- 39. Strait DL, Chan K, Ashley R, Kraus N. Specialization among the specialized: Auditory brainstem function is tuned in to timbre. Cortex. 2012;48(3):360–362. doi: 10.1016/j.cortex.2011.03.015.

- 40. Zuk J, et al. Enhanced syllable discrimination thresholds in musicians. PLoS ONE. 2013;8(12):e80546. doi: 10.1371/journal.pone.0080546.

- 41. Bhide A, Power A, Goswami U. A rhythmic musical intervention for poor readers: A comparison of efficacy with a letter-based intervention. Mind Brain Educ. 2013;7(2):113–123.

- 42. Peretz I, Coltheart M. Modularity of music processing. Nat Neurosci. 2003;6(7):688–691. doi: 10.1038/nn1083.

- 43. Patel AD. Why would musical training benefit the neural encoding of speech? The OPERA Hypothesis. Front Psychol. 2011;2:142. doi: 10.3389/fpsyg.2011.00142.

- 44. Iversen JR, Patel AD, Ohgushi K. Perception of rhythmic grouping depends on auditory experience. J Acoust Soc Am. 2008;124(4):2263–2271. doi: 10.1121/1.2973189.

- 45. Kotz SA, Schwartze M. Cortical speech processing unplugged: A timely subcortico-cortical framework. Trends Cogn Sci. 2010;14(9):392–399. doi: 10.1016/j.tics.2010.06.005.

- 46. Chen JL, Penhune VB, Zatorre RJ. Moving on time: Brain network for auditory-motor synchronization is modulated by rhythm complexity and musical training. J Cogn Neurosci. 2008;20(2):226–239. doi: 10.1162/jocn.2008.20018.

- 47. Anguera JA, et al. Video game training enhances cognitive control in older adults. Nature. 2013;501(7465):97–101. doi: 10.1038/nature12486.

- 48. Kirschner S, Tomasello M. Joint drumming: Social context facilitates synchronization in preschool children. J Exp Child Psychol. 2009;102(3):299–314. doi: 10.1016/j.jecp.2008.07.005.

- 49. Aschersleben G. Temporal control of movements in sensorimotor synchronization. Brain Cogn. 2002;48(1):66–79. doi: 10.1006/brcg.2001.1304.

- 50. Hodes RJ, Insel TR, Landis SC; NIH Blueprint for Neuroscience Research. The NIH toolbox: Setting a standard for biomedical research. Neurology. 2013;80(11 Suppl 3):S1–S92. doi: 10.1212/WNL.0b013e3182872e90.

- 51. Wiig E, Secord W, Semel E. Clinical Evaluation of Language Fundamentals – Preschool 2. Pearson/PsychCorp; San Antonio: 2004.

- 52. Norton ES, Wolf M. Rapid automatized naming (RAN) and reading fluency: Implications for understanding and treatment of reading disabilities. Annu Rev Psychol. 2012;63(1):427–452. doi: 10.1146/annurev-psych-120710-100431.

- 53. Gordon E. Audie: A Game for Understanding and Analyzing Your Child’s Music Potential. GIA Publications; Chicago: 1989.

- 54. Klatt D. Software for a cascade/parallel formant synthesizer. J Acoust Soc Am. 1980;67:971–995.

- 55. Van Engen KJ, Bradlow AR. Sentence recognition in native- and foreign-language multi-talker background noise. J Acoust Soc Am. 2007;121(1):519–526. doi: 10.1121/1.2400666.

- 56. Skoe E, Kraus N. Auditory brain stem response to complex sounds: A tutorial. Ear Hear. 2010;31(3):302–324. doi: 10.1097/AUD.0b013e3181cdb272.

- 57. Campbell T, Kerlin JR, Bishop CW, Miller LM. Methods to eliminate stimulus transduction artifact from insert earphones during electroencephalography. Ear Hear. 2012;33(1):144–150. doi: 10.1097/AUD.0b013e3182280353.

- 58. Aiken SJ, Picton TW. Envelope and spectral frequency-following responses to vowel sounds. Hear Res. 2008;245(1-2):35–47. doi: 10.1016/j.heares.2008.08.004.
