Abstract

The scientific community has become very concerned about inappropriate image manipulation. In journals that check figures after acceptance, 20–25% of the papers contained at least one figure that did not comply with the journal’s instructions to authors. The scientific press continues to report a small, but steady stream of cases of fraudulent image manipulation. Inappropriate image manipulation taints the scientific record, damages trust within science, and degrades science’s reputation with the general public. Scientists can learn from historians and photojournalists, who have provided a number of examples of attempts to alter or misrepresent the historical record. Scientists must remember that digital images are numerically sampled data that represent the state of a specific sample when examined with a specific instrument. These data should be carefully managed. Changes made to the original data need to be tracked like the protocols used for other experimental procedures. To avoid pitfalls, unexpected artifacts, and unintentional misrepresentation of the image data, a number of image processing guidelines are offered.

Keywords: Digital image, Ethics, Manipulation, Image processing, Microscopy

1. Introduction

For over a decade, Dr. Michael Rossner has been a voice crying in the wilderness (1-4). The editor of the Journal of Cell Biology (JCB) has called on the scientific publishing world to be proactive in monitoring the poorly addressed issue of the inappropriate manipulation of scientific digital images. Publishers have resisted, in part to avoid the extra expense of screening images (5), and journal editors have assumed that their reviewers would catch outright falsifications (6, 7). All the while the evidence for, and concern about, the problem of inappropriate or outright falsified images has continued to grow (2, 8-24). In many ways, the turning point may have been the fraudulent stem cell paper (Hwang et al. 25) in Science. Screening before publication could have caught the falsified images in this paper and saved Science, and the scientific community, from major embarrassment (3).

The US Department of Health & Human Services’ Office of Research Integrity (ORI) defines misconduct as “fabrication, falsification, or plagiarism in proposing, performing, or reviewing research, or in reporting research results” and specifically adds that it “does not include honest error or differences of opinion” (26, 27). Misconduct injures the scientific community in a number of ways. The biggest loss is that of trust (28). A good reputation with the general public is important for the credibility of scientific research and scientific researchers, something they do not want to lose. Just as important, scientists need to be able to trust one another’s publications and data to move their own research forward and avoid wasting time and resources on erroneous research ideas.

2. How Bad Is the Problem of Inappropriate Image Manipulation?

Biological science began to make the transition to digital images in the 1990s. Images captured on film have their own technical issues, but the expense and expertise that were required to create a quality publication photo were usually sufficient to protect against amateurish manipulations and fraud (29). With the arrival of commercial image editing software such as Adobe Photoshop® (1990) and Corel Photopaint® (1992), it became much easier for users to manipulate their images. Unlike the darkroom, where experience was often passed down, computer manipulation became the domain of the younger members of the lab (29, 30). Experience was more likely to come from trial and error, rather than from formal education in image analysis or manipulation. In addition, these commercial programs were designed primarily for the graphic arts community, and as a result many standard manipulation functions included in the software are wholly inappropriate for use on scientific images.

While a number of individual cases of image manipulation fraud have been reported in the literature, there have not been extensive studies of the problem. Based on the experience of the JCB (3), the American Journal of Respiratory and Critical Care Medicine (31), and Blood (32), it appears that, even with explicit instructions to authors, about 20–25% of reviewed and accepted articles at these journals had at least one figure that needed to be remade due to failure to comply with the instructions to authors. In approximately 1% of the instances, the JCB found that the manipulations caused sufficient concern that the author’s institution was contacted (3). The ORI has also seen a growing number of image manipulation issues in the cases that it investigates for misconduct accusations (23).

There are still a significant number of journals that have not made much effort in dealing with the problem. In a presentation given to the 2011 Council of Science Editors meeting, Caelleigh and Miles surveyed 446 journals and found that 2% had no formal guidelines, 48% had guidelines that referred to digital images as “art” or “illustrations,” 40% had general guidelines for digital images, and only 10% had explicit guidelines along the lines of those found at the JCB (33). Since there are hints of how bad the problem is at the journals that review images from peer reviewed and accepted articles, one has to wonder how much is being missed at journals that do not provide specific guidelines or check the images submitted. One troubling possibility is that people who know their data is suspect may purposefully select journals that do not check the integrity of the data.

3. Learning from the Past

Historians and photojournalists have worried about inappropriate image manipulation for far longer than scientists. The issues raised in these fields suggest important questions that scientists should be pondering. Due to issues with copyright, including a refusal to allow an image to be used, readers are encouraged to visit “Photo Tampering throughout History” where the images mentioned here, and many other examples can be seen (http://www.fourandsix.com/photo-tampering-history/) (34).

3.1. Manipulated Images Lead to an Inaccurate Historical Record

There are numerous examples of image manipulation as a means of trying to rewrite history. During his nearly 30-year reign, Joseph Stalin (USSR) routinely had images altered to remove the faces of people who had fallen out of favor (e.g., Leon Trotsky) (35). In the 1950s and 1960s, images of the Soviet Cosmonaut program were heavily edited to de-emphasize the military nature of the program and to minimize the loss of personnel due to accidents (36). Other ideologies have influenced the manipulation of historical images; for example, in 2011 US Secretary of State Hillary Clinton was erased from a widely circulated news photograph of a group of high US government officials gathered in the White House’s “situation room” by a Hassidic Jewish newspaper because of the paper’s policy of not running images of females (37).

“The past was erased, the erasure was forgotten, the lie became truth.” George Orwell, Part 1, Chapter 7 Nineteen eighty-four (published in 1949) (38).

Issues: The historical records in science are lab books, electronic data archives, and journal articles. If these are misleading, scientists could use the altered image information found in these sources to guide the direction of their research, possibly embarking on fruitless studies, wasting time and money. What is our responsibility to our coworkers and professional colleagues?

3.2. The Failure to Capture a Representative Sampling of Images of the Subject Being Studied Could Lead Others to Misinterpret the Data

Franklin D. Roosevelt (FDR) served as the President of the United States of America from 1933 to 1945 (39). Over the course of his long political career (which began in 1910), FDR was photographed thousands of times and frequently appeared in short news-reels shown in movie theaters. By carefully staging these media opportunities, FDR was able to impart an image as a strong and resolute President during a time of economic depression and World War II. The general public was not aware that FDR was paralyzed from the waist down, that he had great difficulty walking any more than short distances, and that he often wore iron braces under his clothing. Most pictures of FDR show him seated, or if he is standing he can be seen holding on to something or someone to maintain his balance. Only three pictures are known to exist showing FDR in the wheelchair that he frequently used to get around when he was out of the public eye (see Fig. 1).

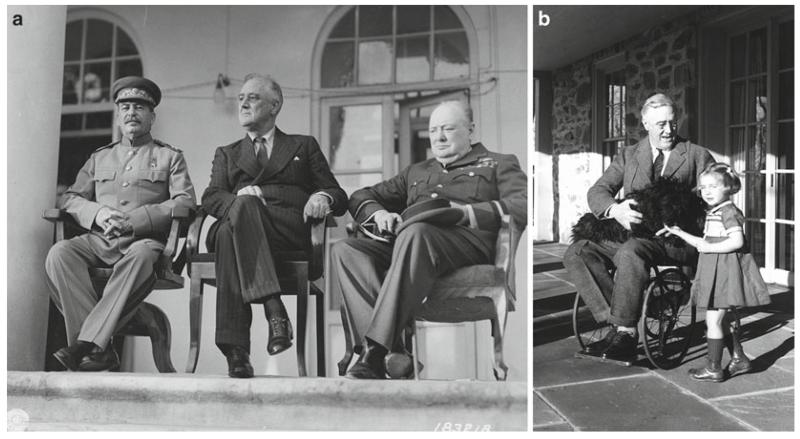

Fig. 1.

US President Franklin D. Roosevelt was photographed thousands of times during his long political career. Most of the photographs show him seated, or if he was shown standing he was frequently holding onto someone or something. According to the FDR Library (112), Roosevelt’s paralysis was concealed (with the cooperation of the press) for political reasons, since at the time disabled persons were not considered able to perform the demanding responsibilities of elected Office. Because the images the public routinely saw of Roosevelt did not hint of a physical limitation, the vast majority of the public was unaware that Roosevelt was paralyzed from the waist down (see Subheading 3.2). (a) Joseph Stalin (USSR), Franklin D. Roosevelt (USA), and Winston Churchill (UK) at the Tehran Conference, Teheran, Iran. November 29, 1943 (courtesy of the Franklin Delano Roosevelt Library website, Library ID 48-22 3715-107, Public Domain image). (b) Franklin D. Roosevelt in a wheelchair with his dog Fala in his lap, also pictured is family friend Ruthie Bie. Hyde Park, NY, February 1941 (courtesy of the Franklin Delano Roosevelt Library website, Library ID 73-113 61, Photographer: Margaret Suckley. Public Domain image).

Issues: Selectively presenting images that only tell one side of “the story” may be an engrained part of politics, but the expectation for scientists is that we will be unbiased and truthful. If we only show other people the pictures we want them to see, then the viewers will most likely reach the interpretation that we want them to have. What if our interpretation is wrong? It may be a poorly kept secret that most published images are somewhat less than “representative,” but is this right? What would happen to the quality of science if we only showed our most compelling images to our project leaders, lab group, or collaborators?

3.3. “Artistic” Changes to an Image Can Unintentionally Alter the Factual Content and/or a Viewer’s Interpretation of the Image

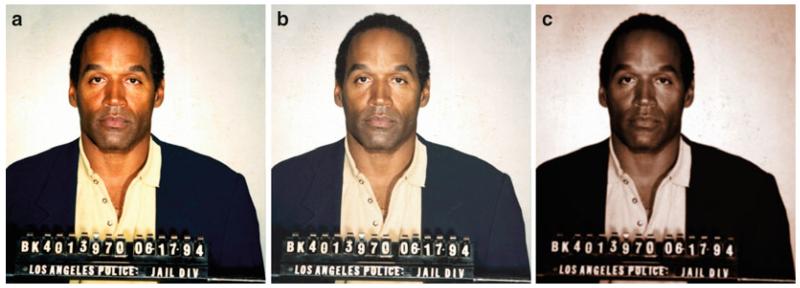

In 1994, television sports commentator and former American football player OJ Simpson was accused of, among other things, murdering his wife. He was later acquitted of the charges. Shortly after Mr. Simpson’s arrest, the Los Angeles Police Department provided a booking photograph to the media. On June 27, 1994 Newsweek ran the image almost unchanged as its cover photo, but Time magazine’s graphic artist significantly darkened the image to make it “more artful, more compelling” (40). The African-American community expressed outrage (41), because they perceived that Time had made Mr. Simpson look more sinister (42). To underscore the power of the images, few people complained about Newsweek’s bold headline “Trail of Blood,” while Time’s less prejudicial headline “An American Tragedy” was ignored (see Fig. 2).

Fig. 2.

US sports and television personality OJ Simpson was arrested and accused of murdering his wife in June 1994. He was later acquitted of the charges. Newsweek and Time magazines both used the same Los Angeles Police Department booking photograph on the covers of the June 27, 1994 issues of their respective magazines. The heavily manipulated Time magazine cover (c) was seen by many as making Mr. Simpson appear more “sinister” (42) (see Subheading 3.3). (a) Original public domain image as found at the Washington Post website (113). (b) Color adjusted to more closely resemble the Newsweek cover. (c) Multiple image manipulations were performed to have the image more closely resemble the Time cover. To color match the images, regions were sampled from an image of the respective magazine covers (not shown) and the original image (a) was adjusted to match using the hue/saturation tool in Adobe Photoshop® CS3 to create the derivative images (b, c). The right image (c) was then colorized using the hue/saturation tool, the curves tool was used to darken the image, then a Gaussian blur (radius = 2.0) was applied, followed by setting the overall gamma to 0.85 in the levels tool to darken the image further, then selecting the background and lightening it using a gamma of 1.5. Photograph provided to the press on June 17, 1994 by the Los Angeles Police Department, Los Angeles, CA. Time magazine’s legal department would not grant permission for the magazine cover to be reproduced here.

Issues: We need to be very cautious about manipulating an image so that it will better convey the message that we want to present. How should we make clear to readers that the manipulations performed on digital images are appropriate and scientific?

3.4. Photo-Illustrations That “Look” Real Are Misleading

Composite images are a staple of celebrity magazine covers. Occasionally news magazines have used realistic looking composites, with tiny disclaimers inside the magazine. For example, US media personality Martha Stewart spent 5 months in Federal prison on an insider stock trading charge. In March 2005, Newsweek magazine portrayed Ms. Stewart on its cover even before she was released from prison by digitally pasting an earlier image of Ms. Stewart’s head onto another woman’s body (43). The composite was briefly described on page 3 of the magazine. The National Press Photographers Association called the Newsweek cover a major ethical breach (44).

Governments with something to prove have sometimes used composite images. In July of 2008 the Iranian government provided an image to the press that gave the impression of a successful launch of four Shahab-3 medium range ballistic missiles. The New York Times quickly determined that the image provided by Iran had been manipulated using “cloning” to give the impression that all four missiles had successfully launched. The analysis by The New York Times was confirmed by the discovery later of another image taken at the launch that showed only three missiles were successfully launched (45).

Even prestigious scientific journals have occasionally stumbled when using composite images. A letter to the editor of the scientific journal Nature accused the publisher of a “deceptive” composite image that was featured on the cover of the August 2, 2007 issue (46). The Editor later apologized, stating “The cover caption should have made it clear that this was a montage” (47).

Issues: The public “rolls their eyes” at manipulated images in tabloid newspapers and celebrity magazines, but they have higher expectations for scientists. Are composite images with disclaimers appropriate in science?

4. Before the Image Is Captured: Appropriate Image Acquisition Strategies

Bias is a far greater problem in science than is often acknowledged (48, 49). If images will be analyzed to create numerical data (size, shape, count, etc.), they need to be acquired in a systematic and well-defined manner (50). Systematic would include sufficient numbers of images, since there is always a certain amount of error in image analysis and analysis of enough structures will ensure that the error is small. Well-defined simply means that the images should be of the structures being measured, but selected in a manner that reduces the operator’s own bias. Images are data and should, therefore, be subject to the same statistical treatment as all other scientific experiments.
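One way to select fields without relying on the operator’s eye is systematic uniform random sampling: a random starting offset followed by fixed steps across the sample. The Python sketch below is only an illustration of that idea under stated assumptions; the function name, the 1,000 × 1,000 μm sample area, and the 250 μm step are hypothetical and are not taken from the cited references.

```python
import random

def systematic_fields(width_um, height_um, step_um, seed=None):
    """Return (x, y) stage positions on a regular grid with a random start.

    Hypothetical helper: the random offset is drawn once, so the operator
    does not choose fields by eye; every later field follows from the step.
    """
    rng = random.Random(seed)
    x0 = rng.uniform(0, step_um)   # random start within the first step
    y0 = rng.uniform(0, step_um)
    positions = []
    y = y0
    while y < height_um:
        x = x0
        while x < width_um:
            positions.append((round(x, 1), round(y, 1)))
            x += step_um
        y += step_um
    return positions

# Assumed sample area and step size, for illustration only
fields = systematic_fields(1000, 1000, 250, seed=1)
print(len(fields), "fields, first at", fields[0])
```

Recording the seed alongside the images would let someone else reproduce the same field positions.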

If the images will be used primarily for illustrative purposes, it is still best to take a number of images (51). Users should study the sample meticulously to allow the sample to provide the answer to the research question, rather than searching for an image field that best documents their hypothesis. The captured images can be examined later in the lab and it may be that further examination will provide a different interpretation of the data. The only way to accurately describe the differences seen in images of different treatment groups is to be very familiar with the appearance of the normal, untreated samples.

To acquire appropriate and high-quality images it is important to make sure that the instrument used for the acquisition has been calibrated and properly aligned (52, 53). In addition, users need to understand the instrument’s capabilities, as well as its limitations. If images were acquired with different acquisition settings, it is important to communicate this to all the members of the lab, since changes in settings can affect the appearance of the image and possibly its interpretation.

5. How Digital Image Data Are Stored

5.1. Scientific Digital Images Are Data That Can Be Compromised by Inappropriate Manipulations

The underlying premise of image publication and ethics guidelines is that a digital image is data and that the data should not be manipulated inappropriately. Image data can represent intensity data acquired from a microscope CCD camera, or complex pre-processed information that comes from an Atomic Force Microscope or a Magnetic Resonance Imaging (MRI) scanner.

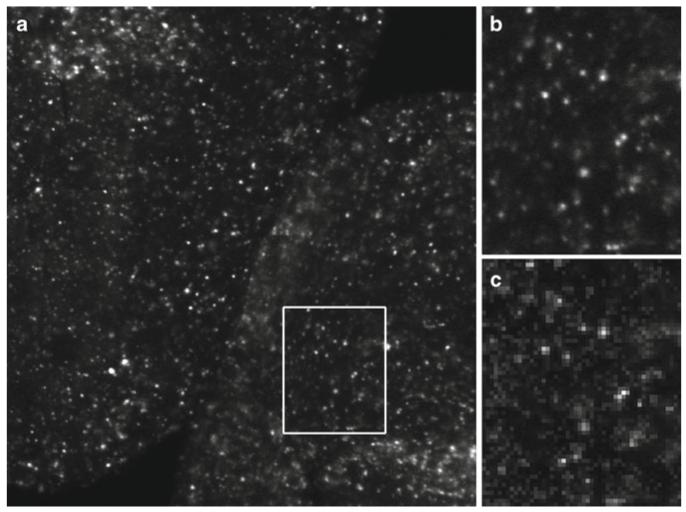

Photographic film is an analog form of image capture, in that the information in the image is continuously variable. Digital imaging is a technique that samples the incoming data into discrete units called picture elements (pixels) that are presented as part of an array or grid. The scale of the pixels needs to be well matched to the resolution of the image capture instrument to ensure correct sampling and to avoid artifacts (see Fig. 3).

Fig. 3.

Digital images are a representative sampling of real life at discrete points (pixels). To ensure that the image correctly captures all the smallest details in the specimen, the Nyquist/Shannon theories suggest a minimum of 2× oversampling of the smallest resolvable element, with 2.4–2.8× oversampling suggested by some (55). Failure to adequately oversample can cause aliasing artifacts. Image (b) shows correct sampling and image (c) shows the same field undersampled. Note that in image (c) it is no longer possible to accurately count the number of visible in situ hybridization spots (see Subheadings 5.2, 5.3, 6.9, and 6.10). (a) Image captured at 2,048 × 2,048 pixels representing a field of view of 225 by 225 μm. The image was cropped to fit the page. (b) 2× enlargement of the field represented by the white box. Enlarged using Adobe Photoshop® CS3’s nearest neighbor resampling algorithm. (c) 2× enlargement of the same area, but taken from an image (not shown) that was captured at 512 × 512 pixels to represent the same field of view. Enlarged in the same manner as (b). Ciona intestinalis embryos, in situ hybridization stain, image captured with a Zeiss LSM 510 confocal microscope. These images are used by permission of Ella Starobinska and Dr. Bradley Davidson, University of Arizona.

The pixel in a digital image contains or represents a good deal of information. Each pixel has:

XY positional information relative to the rest of the grid that makes up the image.

Intensity information, presented as a numerical value within a range described as bit depth (e.g., 8 bit grayscale = 256 shades, 24 bit color = 16.7 M colors). This numerical information can represent data other than the amount of light being gathered by a detector. Intensity information may be used to represent forces, height, wavelength, etc.

A voxel (volume element) is a pixel with a z dimension, representing a volume. Information defining the voxel is usually stored in the metadata.
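The bit depth figures quoted above follow directly from powers of two. As a brief illustration (the function name is hypothetical):

```python
def gray_levels(bits):
    """Number of distinct intensity values for a given bit depth."""
    return 2 ** bits

for bits in (8, 12, 16):
    levels = gray_levels(bits)
    print(f"{bits}-bit: {levels} levels, maximum value {levels - 1}")

# 24-bit color is three 8-bit channels (red, green, blue)
print("24-bit color:", gray_levels(8) ** 3, "colors")
```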

Many scientific images have associated metadata (data about data) that can store additional information. If the instrument does not capture these data automatically, they should be recorded manually. These data could include:

Information about the spatial, temporal, or spectral scale that was used to record each pixel. It is from this information that scale bars or other scalar references can be created. For many types of acquisitions, this includes a z dimension.

Acquisition settings used by the instrument to record the data (objective lens, magnification information, xyz stepper motor positions, filter wheel positions, illumination source, gain settings, etc.).

The date and/or time that the data was acquired.

The operator who captured the image data.
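When an instrument does not record these items itself, one option is to keep them in a small text file stored next to the image. The sketch below assumes a JSON “sidecar” file; the field names, file name, operator, and settings are all placeholders invented for illustration, not a standard or a format prescribed by any instrument vendor.

```python
import json

def build_sidecar(image_name, operator, acquired, pixel_size_um, settings):
    """Assemble manually recorded acquisition metadata (hypothetical layout)."""
    return {
        "image_file": image_name,
        "operator": operator,
        "acquired": acquired,             # ISO 8601 date/time string
        "pixel_size_um": pixel_size_um,   # spatial scale, needed for scale bars
        "acquisition_settings": settings,
    }

record = build_sidecar(
    "embryo_01.tif",                      # placeholder file name
    "A. Researcher",                      # placeholder operator
    "2012-03-14T10:30:00",                # placeholder date/time
    {"x": 0.11, "y": 0.11, "z": 0.5},     # placeholder scale values
    {"objective": "40x/1.3 oil", "gain": 600, "filter": "FITC"},
)
sidecar_text = json.dumps(record, indent=2, sort_keys=True)
print(sidecar_text)
```

Writing `sidecar_text` to a file with the same base name as the image keeps the record and the data together when files are moved or archived.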

Many scientific instruments save data in proprietary image file formats, with the Laboratory for Optical and Computational Instrumentation (LOCI, University of Wisconsin) Bio-Formats project supporting a growing list of 115 different formats (54). The proliferation of file formats has two main causes, which may act together: most image formats were not designed to store complex metadata, and a format was often needed that was capable of storing complex multi-dimensional data (xyztλ).

The correct acquisition of a digital image involves a number of considerations.

5.2. Sampling (See Subheadings 6.9 and 6.10)

Because digital images are a product of sampling, at least two to three times oversampling (e.g., Nyquist/Shannon) of the smallest resolvable elements in the image is required to avoid the possibility of artifacts (55, 56) (see Fig. 3). With some imaging techniques, this may include oversampling in the z dimension, as well as the x and y dimensions. Higher levels of oversampling have a benefit if the imaging technique has high light levels, but in low light situations higher levels of oversampling may reduce contrast and lower the S/N ratio to the point where the ability to resolve structures (e.g., Rayleigh criterion) is impaired (57).
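The sampling check can be worked through with the acquisition described in Fig. 3 (a 225 μm field captured at 2,048 or at 512 pixels across). The sketch below is a rough illustration only: the optical resolution of 0.25 μm is an assumed value chosen for the example, since the text does not state the resolution of the instrument, and the function name is hypothetical.

```python
def sampling_factor(field_um, pixels, resolution_um):
    """Pixels per smallest resolvable element along one axis."""
    pixel_size_um = field_um / pixels
    return resolution_um / pixel_size_um

ASSUMED_RESOLUTION_UM = 0.25  # hypothetical optical resolution, not from the text

for pixels in (2048, 512):
    factor = sampling_factor(225, pixels, ASSUMED_RESOLUTION_UM)
    verdict = "meets" if factor >= 2 else "fails"
    print(f"{pixels} px: {225 / pixels:.3f} um/pixel, "
          f"{factor:.2f}x sampling, {verdict} the 2x minimum")
```

Under this assumption the 2,048-pixel acquisition samples each resolvable element slightly more than twice, while the 512-pixel acquisition falls well short of the 2× minimum.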

If the image acquisition has a time dimension, temporal oversampling is also important. The “wagon wheel effect” (a form of temporal aliasing) has been known since the earliest days of movie making as a mismatch between the frames/s of the film and the rotational speed of a vehicle’s wheels (58, 59) (see Fig. 4).
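The arithmetic behind the wagon wheel effect can be sketched in a few lines. The example assumes a wheel with a single distinguishable mark (identical spokes would alias at smaller angles) and uses the 340° per frame rotation from Fig. 4; the function name is hypothetical.

```python
def apparent_step_deg(true_step_deg):
    """Wrap the true rotation per frame into the range (-180, 180].

    A viewer cannot distinguish a step of 340 degrees from one of -20
    degrees, so the smallest equivalent step is what appears on playback.
    """
    wrapped = true_step_deg % 360
    if wrapped > 180:
        wrapped -= 360
    return wrapped

for step in (20, 170, 340, 360):
    print(f"true {step} deg/frame -> appears as {apparent_step_deg(step)} deg/frame")
```

A negative result means the wheel appears to turn backwards, and a result of zero means it appears stationary. Keeping the true step per frame below 180° (at least two frames per half turn) avoids the ambiguity.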

In many cases, sampling at a higher bit depth can be beneficial. However, much like over-magnifying an image (which adds no additional resolution information), some noisy image acquisition techniques (e.g., confocal microscopy) do not warrant high bit depth images.

Wavelength (spectral) scanning should be correctly sampled using at least 2× the smallest resolvable element in the spectra. In practical application this can be difficult, especially in low light applications like fluorescence imaging.

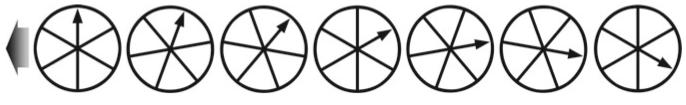

Fig. 4.

Temporal aliasing is a mismatch between the speed of the object and the speed of the camera (incorrect temporal sampling). The “wagon wheel effect” (also known as the “stroboscopic effect”) became familiar to viewers of Western movies as far back as the silent movie era (58). In the above illustration, the timing of each image is such that the wagon wheel has only rotated 94.5% of a turn (340°) per frame. When the video is played back, the wheel will appear to rotate in a clockwise direction, when in reality it is rotating counterclockwise (based on the indicated direction of travel, large arrow). Exploiting this artifact, a video could be created that showed an automobile obviously moving forward while the wheels appeared to be stationary, or a helicopter flying without the rotor turning (http://www.youtube.com/watch?v=Xh-sf6vwSMc). Given the misleading possibilities of this artifact, it is important to know how quickly things are changing in a sample and to oversample correctly (see Subheadings 5.2 and 6.9). Dr. David Elliott, University of Arizona, provided technical assistance with this figure.

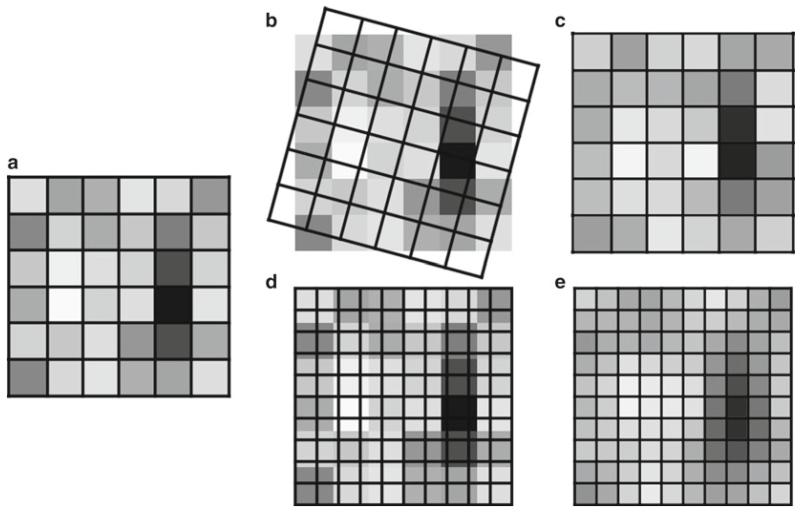

5.3. Digital Image Artifacts

Aliasing is the error that occurs when analog data is sampled incorrectly, or as a result of the interpolation that comes from resizing or rotating a digital image (see Figs. 3 and 5). James Pawley, editor of the Handbook of Biological Confocal Microscopy, notes that aliasing may cause features to appear larger, smaller, or in different locations than they should be (60). An example would be the jagged line that appears when sampling a complex curved edge. Color aliasing artifacts can occur as well, particularly when sampling complex structures using a single chip color camera, since the color of any one pixel is based on the interpolation of color data from neighboring pixels.

Moiré is an image artifact produced when a repeated pattern is sampled at less than the Nyquist frequency, particularly when the sampling is performed with a pattern (e.g., the grid pattern of pixels on a CCD chip) (24, 61).

Bit depth saturation is often an operator-caused artifact, where the signal is truncated at the brightest or darkest ends of the intensity range (see Fig. 6). Any differences in the data that would have occurred outside of the range of the detector are lost in the truncated areas. This form of overly zealous adjustment is typically made during the image acquisition to suppress background or make the image appear more striking. Aggressive post-processing of images can also create this effect. A spike at the darkest or brightest values of the image’s intensity histogram (which maps the frequency distribution of the pixels across the entire bit depth range) is an indication that some of the pixels in the image have been truncated (62).
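A histogram spike at either extreme can be checked numerically by counting the pixels that sit exactly at the minimum or maximum value. The following sketch uses a made-up row of 8-bit values and an arbitrary linear adjustment; the function names and numbers are illustrative assumptions, not the method of any particular software package.

```python
from collections import Counter

def clipped_fraction(pixels, bit_depth=8):
    """Return the fractions of pixels at the minimum and maximum values."""
    max_value = 2 ** bit_depth - 1
    counts = Counter(pixels)
    total = len(pixels)
    return counts[0] / total, counts[max_value] / total

def adjust(pixels, gain, offset, bit_depth=8):
    """Linear brightness/contrast change, truncated to the valid range."""
    max_value = 2 ** bit_depth - 1
    return [min(max_value, max(0, round(p * gain + offset))) for p in pixels]

original = [12, 40, 75, 110, 150, 190, 220, 245]   # made-up 8-bit row
stretched = adjust(original, gain=1.6, offset=-40)  # arbitrary adjustment

print("original :", original, clipped_fraction(original))
print("stretched:", stretched, clipped_fraction(stretched))
```

After the adjustment, the three brightest pixels all share the maximum value, so the differences that originally existed between them can no longer be recovered from the adjusted image.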

Noise is present in most image acquisition systems. Some noise is sample-related, but the electronics of the image capture device also contribute to the overall noise levels (e.g., thermal noise, shot noise). Ideally, images should be acquired with a high signal-to-noise ratio, so that the effects of noise are minimized. In some instances (e.g., fluorescence imaging of live cells), that may not be possible.

Fig. 5.

Rotating, as well as enlarging or reducing the image size (total number of pixels), causes the intensity values in an image to be resampled using interpolation (Merriam-Webster “to estimate values of (data or a function) between two known values” (114)). Rotating and/or resizing an image may be necessary for reporting the image data in a publication; however, this interpolation of the data should only be performed once on an image to avoid the compounding of interpolation artifacts (see Subheading 6.10). It is important to be very careful when using Adobe Photoshop®’s powerful image size dialog box, since it is very easy to accidentally resample an image with this tool (93). (a) A 6 × 6 pixel array. (b) A 15° rotation overlaid on the original image. (c) The result of the 15° rotation. (d) A 10 × 10 array is overlaid on the original image prior to enlarging the image. (e) The result of the 10 × 10 enlargement. Fifteen degree rotation performed using the Adobe Photoshop® CS3 Edit | Transform | Rotation tool. Enlargement performed using the Adobe Photoshop® CS3 Image Size dialog box, using the “bicubic smoother (better for enlargement)” resizing algorithm.

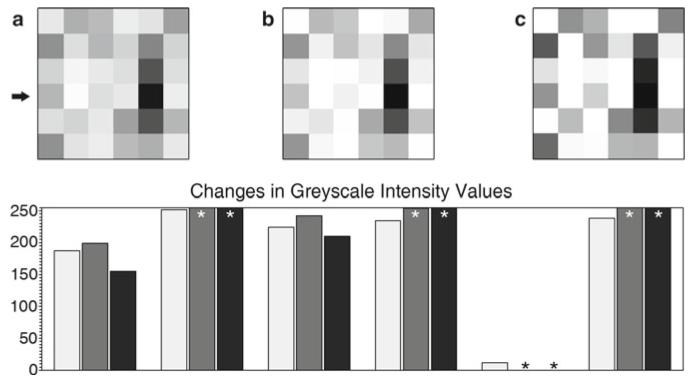

Fig. 6.

(a) Six by six pixel array (see Fig. 5a). Pixels have discrete intensity values, positional information within the array, and may imply a three-dimensional voxel (volume element). (b) The same array as (a) after a moderately aggressive brightness and contrast adjustment using ImageJ 1.43m (115). (c) The same array as (a) after applying the Adobe Photoshop® CS3 sharpen filter. Graph: (key: light grey = (a), medium grey = (b), black = (c)). The graph shows the intensity values of the fourth row of pixels (arrow) in the images. The original image contains values that fit within the range of 0–255 (black-white). After the brightness and contrast adjustment, or the sharpening filter, a number of the intensity values have been truncated (asterisk) since they exceeded the 0–255 range, leaving just the maximum or minimum values. The relationship of these truncated values to those of the neighboring pixels has been lost (see Subheadings 6.1 and 6.5).

5.4. Data Management

The US National Science Foundation is now requiring a two-page data management plan for all new grant applications (63, 64). It would not be surprising to see other US granting agencies follow suit. This raises the important issue of correctly storing the original data in its native file format. There are initiatives to develop open source software that will assist in organizing, tagging, storing, translating file formats, pre-configured analysis routines, and secure sharing of data (65, 66). These programs require appropriate information technology resources and support. For the lab that does its own IT support, there needs to be a plan for organized storage of the original data and regular backups. Many labs have used CDs or DVDs as “read only” backups of their data, and while this has some appeal, optical media can be damaged and writable optical media’s use as reliable long-term storage is doubtful (estimated at 2–5 years) (67). Magnetic tape is estimated to have a 10–30-year lifespan, but even tape backups should be periodically tested (67).

There are several good reasons for retaining the original data. Most of these fall under the heading of data provenance, which has been defined as “Information about the source and history of particular data items or sets, which is generally necessary to ensure their integrity, currency, and reliability” (68).

Any metadata stored in the original file format is retained.

Investigators can compare the final image used in a figure with the original image data to ensure that any manipulations performed were appropriate (69).

In the event that there is a question from a journal or granting agency about the image manipulations, the original data can be produced (70). It is important to note that online images (published or supplementary data) can be analyzed many years after publication (71).

The original data may be needed to satisfy regulatory requirements (e.g., 21 CFR Part 11 (72, 73), HIPAA (74), forensics (75, 76)) for maintaining the data.

Because the software to open older file formats can become obsolete or will no longer run on modern operating systems, exporting the data to a more standard format is often a wise precaution. Some software can export to the Open Microscopy Environment’s OME-TIFF specification (77); however, if this format is not available, the more common TIFF file format is recommended by the Microscopy Society of America (78). Other file formats lack the bit depth for scientific images, or saving into the format performs a lossy type of compression on the image. TIFF is a quasi-standard file format (79) that supports large bit depths in grayscale and color and does not alter the exported bitmap data (see Subheadings 5.5 and 6.8). Scientists should not use the JPEG file format for image data, as this format’s lossy file compression alters the positional and intensity information of every pixel (see Subheading 6.8).

5.5. Manipulation of Digital Images Should Only Be Performed on a Copy of the Unprocessed Image Data File

When you begin your image manipulations, it is crucial to always use a copy of the original image. In most software it is far too easy to overwrite the original file with a manipulated image. Overwriting the original data with commercial image editing software (e.g., Adobe Photoshop®) can cause embedded metadata (e.g., nonstandard TIFF tags) to be lost or changed. The best practice is to open the file and immediately save a copy of the image, close the original file, and open the copy image.
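The copy-first habit can also be scripted so that it does not depend on remembering to use “Save As.” The sketch below is one possible approach under stated assumptions: the `_working` suffix, the function names, and the stand-in file are hypothetical, and the read-only flag only discourages accidental overwrites rather than preventing deliberate ones.

```python
import hashlib
import os
import shutil
import stat
import tempfile

def sha256_of(path):
    """Checksum used to confirm later that the original is unchanged."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()

def make_working_copy(original_path):
    """Copy the original, mark the original read-only, return copy path and checksum."""
    root, ext = os.path.splitext(original_path)
    copy_path = f"{root}_working{ext}"       # hypothetical naming convention
    shutil.copy2(original_path, copy_path)   # byte-for-byte copy, keeps timestamps
    os.chmod(original_path, stat.S_IREAD)    # discourage accidental overwrite
    return copy_path, sha256_of(original_path)

# Demonstration with a stand-in file; a real acquisition file would be used instead.
demo_dir = tempfile.mkdtemp()
demo_original = os.path.join(demo_dir, "sample.tif")
with open(demo_original, "wb") as handle:
    handle.write(b"stand-in image bytes")

working_path, original_hash = make_working_copy(demo_original)
print(working_path, original_hash[:12])
```

Because the copy is made at the file level rather than by re-saving from an image editor, any embedded metadata in the file is carried over unchanged. Storing the checksum in the lab notebook allows the original to be verified later.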

In recent years the issue of “self-plagiarism” has been raised when authors have reused image data that was previously published (80-82). Another issue of data provenance is tracking how a particular image was used in the past, so that reuse of the image does not occur without the appropriate permissions from the publisher or copyright holder of the original image. Good data management practices will go a long way to prevent this from occurring.

6. Post-processing

Image processing (editing, manipulation) is a form of communication. As scientists, we need to be careful that our manipulations are helpful and do not hurt the communication of what is true in the image (see Subheading 3.3). Just because something can be done via image processing does not mean that it should be done. When editing an image, it is good to remember that serendipity has a wonderful role in science (83) and that a published image may mean something entirely different to a reader from outside of our specific field of expertise.

To be clear, image processing is not a form of artistic expression; it is the mathematical manipulation of the underlying numbers in a digital image. Resist the urge to beautify scientific images (84), and remember that artistic changes can alter how others will interpret the image (see Subheading 3.3).

Hard and fast rules that apply to every image-forming discipline are difficult to create (the National Academy of Sciences found this out (71, 85) when they were unable to agree on guidelines). The following guidelines are based on several decades of experience with microscopy and digital images (the number preceding each guideline refers to the section of the text where it is discussed).

Ethical guidelines for the appropriate use and manipulation of scientific digital images (24, 86, 87)

5.1 Scientific digital images are data that can be compromised by inappropriate manipulations.

5.5 Manipulation of digital images should only be performed on a copy of the unprocessed image data file (Always keep the original data file safe and unchanged!).

6.1 Simple adjustments to the entire image are usually acceptable.

6.2 Cropping an image is usually acceptable.

6.3 Digital images that will be compared to one another should be acquired under identical conditions, and any post-acquisition image processing should also be identical.

6.4 Manipulations that are specific to one area of an image and are not performed on other areas are questionable.

6.5 Use of software filters to improve image quality is usually not recommended for biological images.

6.6 Cloning or copying objects into a digital image, from other parts of the same image or from a different image, is very questionable.

6.7 Intensity measurements should be performed on uniformly processed image data, and the data should be calibrated to a known standard.

6.8 Avoid the use of lossy compression.

6.9 Magnification and resolution are important.

6.10 Be careful when changing the size (in pixels) of a digital image.

6.1. Simple Adjustments Performed on the Entire Image Are Usually Considered an Acceptable Practice

While the reporting of certain basic image manipulations is not required, a better practice is to record the entire protocol of how the image was changed. Some software (e.g., ImageJ, Adobe Photoshop®, ImagePro®) has the ability to create an audit trail of image manipulations, but the function must be enabled by the user (88). The alternative is to manually document the protocol in a lab notebook.
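Where the software offers no audit trail, a manual record can be kept in a simple structured file alongside the notebook entry. The sketch below is one possible layout, assuming a JSON record; the field names, the file name, the operation names, and the version string are hypothetical placeholders rather than any journal's or vendor's format.

```python
import json
from datetime import datetime, timezone


def new_log(original_file, software, version):
    """Start a processing record for one image (field names are illustrative)."""
    return {
        "original_file": original_file,
        "software": software,
        "software_version": version,
        "steps": [],
    }


def record_step(log, operation, **settings):
    """Append one manipulation, in the order it was performed, with its settings."""
    log["steps"].append({
        "order": len(log["steps"]) + 1,
        "operation": operation,
        "settings": settings,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    })
    return log


# Hypothetical example: the file name, version, and values are placeholders.
log = new_log("cells_working.tif", "ImageJ", "version-as-reported-by-software")
record_step(log, "crop", x=100, y=80, width=512, height=512)
record_step(log, "linear histogram stretch", black_point=12, white_point=250)

print(json.dumps(log, indent=2))
```

The record deliberately includes steps that would not normally need to be reported, so that the full protocol can be reconstructed later.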

Careful adjustments of image brightness and contrast or a histogram stretch (e.g., levels tool) are usually considered non-reportable. Adjustment of image gamma, a non-linear operation, must be reported for some journals but not for others (24, 89-91). Be aware that aggressive manipulation of images can over/under saturate the image, truncating the intensity data (see Subheading 5.3, Fig. 6), and may cause the apparent size of objects to change due to aliasing artifacts.
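The truncation caused by an aggressive adjustment can be seen with a few numbers. This is a minimal sketch, assuming an 8-bit grayscale image represented as a plain list of pixel values; the function names and the sample values are illustrative.

```python
def stretch(pixels, black, white, max_value=255):
    """Linearly map [black, white] onto [0, max_value], clipping values outside."""
    scale = max_value / (white - black)
    out = []
    for p in pixels:
        value = round((p - black) * scale)
        out.append(min(max(value, 0), max_value))
    return out


def saturated_fraction(pixels, max_value=255):
    """Fraction of pixels sitting at pure black or pure white."""
    clipped = sum(1 for p in pixels if p == 0 or p == max_value)
    return clipped / len(pixels)


pixels = [12, 40, 80, 120, 160, 200, 230, 250]  # made-up intensity values

gentle = stretch(pixels, black=12, white=250)      # uses the full data range
aggressive = stretch(pixels, black=80, white=200)  # discards both tails

print(gentle, saturated_fraction(gentle))
print(aggressive, saturated_fraction(aggressive))
```

In the aggressive case, the three darkest values all become 0 and the three brightest all become 255, so differences that existed in the original data can no longer be recovered from the adjusted image.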

In labs that are sharing images among many computers, with different monitors (of varying age) and different operating systems (92), it is a worthwhile investment to purchase a monitor calibration device (approximately $200–300 USD) to ensure that everyone will be seeing the image with the correct image brightness and/or color. It should be noted that laptop computers running on battery power often dim the screen as a means of prolonging battery life. This change in screen brightness can affect how detail in the image is seen. Ambient lighting (e.g., exterior windows) can affect image brightness and color perception as well. Monitor calibration, in addition to attention to lighting, will ensure that adjustments made on one computer will appear the same on all the lab’s computers, and should improve the reproduction of the images in print.

6.2. Cropping an Image Is Usually Considered an Acceptable Form of Image Manipulation

Image cropping typically removes pixels from the outside edges of the image as a means to center an object of interest, or to permit the image to fit a defined space. It is important to consider the motivation for cropping. Will the cropped image improve the communication of unbiased scientific information, or will it change how the image is perceived by the viewer? Will the crop remove something that disagrees with the hypothesis being presented, or is the crop being performed to hide something in the image that cannot be explained?

Be careful about how aggressively an image is cropped. To reproduce a bitmapped image in print, most journals require 300 pixels per inch. For example, a 512 × 512 pixel image at 300 dpi is only 1.7 × 1.7 in. in size, and in many cases this may not be a large enough figure to show all the detail. Increasing the total number of pixels in the image using software (e.g., Photoshop’s dangerous “image size” dialog box (93)) usually gives the image an indistinct, or less crisp look, due to aliasing artifacts (see Subheading 6.10).
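The arithmetic behind the example above is simply pixels divided by pixels per inch. A short sketch, with hypothetical function names and a hypothetical 3.5 in. figure width, shows both directions of the calculation:

```python
import math


def print_size_inches(width_px, height_px, ppi=300):
    """Printed size of a bitmapped image at a given pixels-per-inch value."""
    return width_px / ppi, height_px / ppi


def pixels_needed(width_in, height_in, ppi=300):
    """Minimum pixel dimensions needed to fill a printed area without resampling."""
    return math.ceil(width_in * ppi), math.ceil(height_in * ppi)


width_in, height_in = print_size_inches(512, 512)
print(f"512 x 512 px at 300 ppi prints at {width_in:.1f} x {height_in:.1f} in.")

# A hypothetical 3.5 in. square figure panel:
print(pixels_needed(3.5, 3.5))
```

Checking the required pixel dimensions before cropping avoids the temptation to enlarge the cropped image afterwards.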

6.3. Digital Images That Will Be Compared to One Another Should Be Acquired Under Identical Conditions, and Any Post-acquisition Image Processing Should Also Be Identical

This would include images intended for computerized analysis or images being assembled in a combined publication figure.

A protocol for identical conditions would include:

- Similar/identical sample preparation techniques.
  - Ideally the sample preparation would occur at the same time; however, in many studies this is not possible.
  - Be very careful with different lots of reagents (e.g., polyclonal antibodies).
- The same instrument.
  - Two different instruments with the same hardware do not always acquire identical images.
- The acquisition conditions (settings) should be identical.
- Post-processing of the images should be uniform (e.g., background subtractions, white level balancing).

In many core facilities, it is the students and staff that acquire the bulk of the images. If someone who is unfamiliar with the instrument (e.g., post-doc, PI) will be interpreting the images, it is crucial that the person who acquired the data tells the interpreter about any differences in the acquisition settings. For example, small changes to the gain (signal amplification) and offset (black level) in a confocal microscope can greatly affect the final image data.
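One way to make such differences hard to overlook is to compare the recorded acquisition settings of the images before they are placed in the same figure. The sketch below assumes that the settings have already been transcribed into dictionaries; the keys and values shown are hypothetical and do not correspond to any particular vendor's metadata.

```python
def settings_differences(reference, other):
    """Return {setting: (reference_value, other_value)} for every setting that differs."""
    keys = sorted(set(reference) | set(other))
    return {
        key: (reference.get(key), other.get(key))
        for key in keys
        if reference.get(key) != other.get(key)
    }


# Hypothetical confocal settings for two images destined for the same figure.
control = {
    "objective": "63x/1.4 oil",
    "laser_power_pct": 2.0,
    "gain": 650,
    "offset": -3,
    "pinhole_airy": 1.0,
}
treated = dict(control, gain=700)  # someone raised the gain for the dimmer sample

for name, (ref_value, new_value) in settings_differences(control, treated).items():
    print(f"{name}: control={ref_value} treated={new_value}")
```

An empty result does not prove that two images are comparable, since sample preparation and instrument condition are not captured here, but a non-empty result is a clear prompt for a conversation.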

Much like other composite images (see Subheading 3.4), if the images in a figure are presented as means of conveying similarities and differences in the outcomes of treatment, the assumption is made by the reader that they were acquired under similar conditions.

6.4. Manipulations That Are Specific to One Area of an Image and Are Not Performed on Other Areas Are Questionable

While dodging and burning of photographic prints was certainly done in the past, enhancing only selected areas in a scientific digital image is generally not allowed today. While rare, there may still be an appropriate rationale for enhancement of specific areas of an image in special cases, but it is mandatory that the manipulations be declared.

High dynamic range (94) or extended depth of focus (95) images are useful techniques to overcome physical limitations in instrumentation (inability to capture the brightest and dimmest parts of a field of view in the same image, and inability to capture an in-focus image through the entire depth of a large three-dimensional object, respectively). These are computational techniques that combine data from multiple images into a single image. With appropriate explanation, these are acceptable techniques for scientific data.

6.5. Use of Software Filters to Improve Image Quality Is Usually Not Recommended for Biological Images

Software filters are typically implemented as a convolution kernel, a small array of mathematical functions that is applied to each pixel in turn. The resulting change in the central pixel of the array is based on the kernel function and the values in the neighboring pixels of the image. Since the values in the neighboring pixels will be different all throughout the image, the change created by the filter will not be the same in all areas (96). This is how a sharpening filter detects edges: it responds to localized changes in pixel intensity, with the effect being most pronounced in the edge area and less noticeable in areas where the intensity does not change significantly (see Fig. 6).
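A small numerical example makes the uneven effect of a filter concrete. The sketch below applies a commonly used 3 × 3 sharpening kernel to a tiny synthetic image containing one vertical edge. The kernel values and the image are illustrative assumptions and are not taken from any particular software package; because the kernel is symmetric, the sliding-window sum used here gives the same result as a true convolution.

```python
# A common 3x3 sharpening kernel (its weights sum to 1, so flat areas are unchanged).
SHARPEN = [
    [0, -1, 0],
    [-1, 5, -1],
    [0, -1, 0],
]


def apply_kernel(image, kernel, max_value=255):
    """Apply a 3x3 kernel to interior pixels; border pixels are copied unchanged."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            total = 0
            for ky in range(3):
                for kx in range(3):
                    total += kernel[ky][kx] * image[y + ky - 1][x + kx - 1]
            out[y][x] = min(max(total, 0), max_value)  # clip to the valid range
    return out


# Synthetic image: dark region (50) on the left, bright region (150) on the right.
image = [[50, 50, 50, 150, 150, 150] for _ in range(5)]
sharpened = apply_kernel(image, SHARPEN)

print("before:", image[2])
print("after: ", sharpened[2])
```

Pixels in the flat regions keep their original values, while the two pixels on either side of the edge are pushed to 0 and 250. Any intensity or size measurement made near that edge would now be measuring the filter, not the specimen.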

Commercial image editing software (e.g., Adobe Photoshop®) does not provide access to the mathematical functions used in software filters. If commercial software is used, the software version number, filter name, and any additional settings should be carefully recorded. Although scientific image processing software may provide more information about the kernel used, or even allow the user to design their own kernel, these steps should also be carefully documented.

6.6. Cloning or Copying Objects into a Digital Image, from Other Parts of the Same Image or from a Different Image, Is Very Questionable

Composite images can be very misleading (see Subheading 3.4), even when they are explained in the figure legend. If two parts are added together, a white or black line should indicate the added piece. Adding an insert that shows a portion of the field at a higher magnification is appropriate, but it should be set off in a way that clearly shows that it is a different image.

Use of cloning and copying to “clean up” or beautify an image is an invitation to trouble. These sorts of changes to an image are fairly easy to detect and they should always be declared in the figure legend. Failing to declare these sorts of changes may lead to the publication being rejected and could prompt further investigation of the author’s previously published papers.

Commercial image editing software provides many options for retouching images. Almost all of these tools, in particular “auto” manipulation tools, should be avoided by scientists. If these tools are used, they should be documented as discussed previously (see Subheading 6.5).

6.7. Intensity Measurements Should Be Performed on Uniformly Processed Image Data, and the Data Should Be Calibrated to a Known Standard

Intensity measurements, particularly fluorescence intensities, are very difficult to do well (97). This is due, in part, to the difficulty in creating reproducible calibration standards (53). In addition, many instruments have known shortcomings that make the collection of reproducible data difficult (98). The data acquisition parameters and post-processing techniques need to be standardized, or the data are worthless. Mathematical analysis of images with pixels that have reached bit depth saturation (see Subheading 5.3) should be avoided to ensure accurate results (99).

Densitometry of colorimetric stains (e.g., DAB, HRP) is easier to perform than fluorescence intensity measurements. Care should be taken with densitometry, since staining is not linear and the curve tends to flatten out at high staining densities (100, 101).

Users should be careful about post-processing of images. Image filtering can introduce artifacts that may affect intensity and size measurements in unexpected ways (see Fig. 6).

6.8. Avoid the Use of Lossy Compression

There are several types of file size reduction schemas used with image files. TIFF images can use a form of the loss-less LZW (Lempel-Ziv-Welch (61)) compression, which does not change the image data. It should be noted that LZW-TIFF files are not universally supported by image processing software. GIF images reduce file size by using a limited color palette (256 maximum), which can reduce the color or intensity information in the image. JPEG images reduce the file size by using the lossy discrete cosine transform to reduce the high frequency information in the image.

JPEG compression is particularly problematic, since it was designed to create changes that were not readily perceptible to the human eye (48). JPEG compression makes subtle changes in the color, intensity, and the location of the intensity, making it unsuitable for use with scientific images (48) (see Fig. 7). If JPEG must be used (e.g., web pages, required by journal), the compression should only be performed one time on any image as the final step, and only with the highest quality setting (minimal artifacts).
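The effect of discarding high frequency information can be sketched in one dimension. The code below is a deliberately simplified stand-in for JPEG, not the JPEG algorithm itself: it applies a discrete cosine transform to a single row of eight pixels and sets the highest-frequency coefficients to zero, whereas real JPEG works on 8 × 8 blocks and quantizes coefficients rather than simply dropping them. The function names and pixel values are illustrative.

```python
import math


def dct(signal):
    """Orthonormal DCT-II of a 1D list of samples."""
    n = len(signal)
    coeffs = []
    for k in range(n):
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        total = sum(
            x * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
            for i, x in enumerate(signal)
        )
        coeffs.append(scale * total)
    return coeffs


def idct(coeffs):
    """Inverse of dct() (orthonormal DCT-III)."""
    n = len(coeffs)
    samples = []
    for i in range(n):
        total = 0.0
        for k, c in enumerate(coeffs):
            scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
            total += scale * c * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
        samples.append(total)
    return samples


def lossy_roundtrip(signal, keep):
    """Keep only the `keep` lowest-frequency coefficients, then reconstruct."""
    coeffs = dct(signal)
    truncated = coeffs[:keep] + [0.0] * (len(coeffs) - keep)
    return [round(v) for v in idct(truncated)]


row = [10, 10, 10, 10, 200, 200, 200, 200]  # a sharp edge in one image row

lossless = [round(v) for v in idct(dct(row))]
lossy = lossy_roundtrip(row, keep=4)

print("original:", row)
print("lossless:", lossless)
print("lossy:   ", lossy)
```

With all coefficients retained, the row is recovered exactly. Once the high frequency coefficients are discarded, the pixel values on both sides of the edge change, which is the kind of intensity shift that makes lossy formats unsuitable for image data that will be measured.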

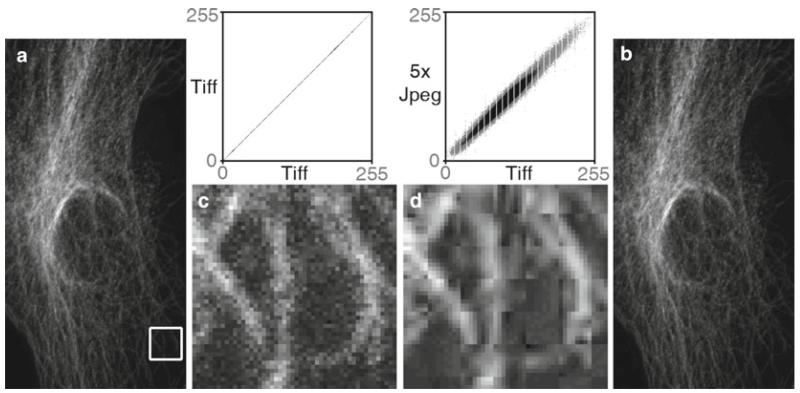

Fig. 7.

The dangers of the JPEG file format. A TIFF image (a) was opened and saved as a JPEG file, then the JPEG was opened and saved as a JPEG a total of five times in a row (Adobe Photoshop® CS3’s “medium” JPEG setting, 5). Each time a JPEG file is saved, the compression algorithm is performed. The white box in the TIFF (a) image and the corresponding area in the JPEG image (b) were enlarged seven times using Adobe Photoshop’s® nearest neighbor resampling algorithm to show the compression artifacts (with a histogram stretch and gamma set to 1.5). Image (c) is the enlarged TIFF, and image (d) is the enlarged JPEG. Comparing image (a, b), there appears to be no visible change, but at the pixel level ((c) vs. (d)), the changes are very noticeable. The scatter plots illustrate the differences between the files. The plot compares the grey values of each pixel in both images. The TIFF images are identical, hence the straight line. The intensity values are quite different in the JPEG image. The gaps in the plots are due to an initial histogram stretch of the original TIFF image. The original TIFF file size is 204 kB, the first JPEG save is 36 kB and JPEG files 2–5 are all 35 kB. The Pearson’s correlation of TIFF vs. TIFF is 1.0, the correlation of TIFF vs. the first JPEG save is 0.991, with the correlation between subsequent JPEG saves being 0.99. The first JPEG save causes the biggest changes in the image and saves the most file space, and after that the subsequent saves degrade the image slightly each time. Saving the TIFF file one time at the highest JPEG quality factor (12, Adobe Photoshop®) reduced the file size to 123 kB and yielded a tighter, but not perfectly linear, scatterplot (data not shown). If JPEG is required, saving once at the highest quality factor makes the smallest change in the image (see Subheading 6.8). 
Confocal microscope image of cellular cytoskeleton (this figure was inspired by colleague Charles “Chip” Hedgcock, University of Arizona, and the colocalization technique was suggested by Dr. John Krueger, ORI).

Be careful using JPEG, because every time the file is saved in this format the compression algorithm is performed. With each iteration of the file save, the JPEG artifacts compound one another, further degrading the image. On careful examination, lower quality factor JPEG images typically have an artifact of 8 × 8 pixel squares (96), also known as a macroblock (see Fig. 7d). In many cases, a more suitable alternative to JPEG is the loss-less PNG file format, although PNG does not support conversion to CMYK for printing (102). PNG is greatly preferred over JPEG when compressing figures that have small text or fine lines, since it does not blur the edges of these small items.

Occasionally image data with a time or z dimension are converted to a video format. To keep the file size manageable, most video file formats perform some form of compression. Many of the video formats use a variation of the discrete cosine transform that is used in JPEG images. While it is generally understood that video files use lossy compression, it is still important to carefully check the video to ensure that the software codec has not introduced misleading artifacts into the data. The codec name and the conversion settings used to create the video should be documented.

Scientists should avoid using presentation software (e.g., Microsoft’s PowerPoint®) for assembling publication figures. The software may be familiar and available, but these programs are designed for images that will be shown on a projection screen at low resolution, not the 300 dpi needed to print bitmapped images or the 1,200 dpi needed for line art. Resizing images in PowerPoint® brings up the interpolation issues discussed below (see Subheading 6.10) and running the program’s file compression routine to reduce file size will perform some form of lossy compression (suspected to be JPEG) on every image in the file. The JCB will not accept images that have been manipulated by PowerPoint® (103).

6.9. Magnification and Resolution Are Important

Digital images are a product of sampling the data at discrete intervals. This sampling should be performed in a way that satisfies the Nyquist/Shannon criterion. Undersampling can cause objects to appear at an incorrect size, or very small elements to be missed entirely; these are aliasing artifacts, a category that also includes moiré (spatial aliasing) and temporal aliasing. Oversampling can more closely approximate the information in the images, but concerns such as cost, low signal-to-noise in the image, and optical issues can limit the ability to sample at high levels of oversampling.
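Aliasing from undersampling can be demonstrated with a simple periodic pattern. In the sketch below, a pattern with 9 cycles per unit length is sampled only 10 times per unit length, well below the roughly 2 samples per cycle that the sampling criterion calls for. The function and parameter names, and the choice of a sine pattern, are illustrative assumptions.

```python
import math


def sample_pattern(cycles_per_unit, samples_per_unit, n_samples):
    """Sample a sinusoidal intensity pattern at evenly spaced positions."""
    return [
        math.sin(2 * math.pi * cycles_per_unit * i / samples_per_unit)
        for i in range(n_samples)
    ]


# Undersampled: 9 cycles per unit, sampled at only 10 samples per unit.
fine_undersampled = sample_pattern(9, 10, 20)
coarse = sample_pattern(1, 10, 20)

# The fine pattern's samples equal those of a 1-cycle pattern (with inverted
# sign), so the recorded data cannot show that the structure was finer.
worst_mismatch = max(abs(f + c) for f, c in zip(fine_undersampled, coarse))
print(f"largest difference from the inverted coarse pattern: {worst_mismatch:.2e}")

# Adequately sampled: at 100 samples per unit the two patterns are clearly distinct.
fine_ok = sample_pattern(9, 100, 200)
coarse_ok = sample_pattern(1, 100, 200)
print(max(abs(f - c) for f, c in zip(fine_ok, coarse_ok)) > 0.5)
```

Once the data have been undersampled, no amount of post-processing can recover the true spacing, because the samples themselves are consistent with the wrong answer.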

Sampled images are acquired with information about the scale or size of each pixel in spatial (xyz), as well as temporal (T) and sometimes spectral (λ) dimensions. This information needs to be conveyed to the reader through the use of spatial scale bars, time stamps, or other scalar information. Any image manipulation that might change this important information must be done with care.

Spatial scale bars are preferred over stating the calculated magnification in a figure legend because journals occasionally resize images in the process of publishing. The scale bar will resize proportionally with the image. While common in some fields, the practice of simply stating the microscope objective used to acquire the image does not factor in several other magnification factors that can be different with each microscope and is very imprecise. Correct magnifications can be calculated by taking and measuring images of a stage micrometer or other calibration standards.
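The calibration described above reduces to a ratio between a known distance and its measured length in pixels. The following sketch uses made-up measurements (a 100 µm span of a stage micrometer measured as 620 pixels) and hypothetical function names.

```python
def micrometers_per_pixel(known_length_um, measured_length_px):
    """Pixel size derived from an image of a stage micrometer."""
    return known_length_um / measured_length_px


def scale_bar_pixels(bar_length_um, um_per_px):
    """Length in pixels of a scale bar representing bar_length_um."""
    return round(bar_length_um / um_per_px)


# Hypothetical measurement: 100 um on the micrometer spans 620 pixels.
um_per_px = micrometers_per_pixel(100.0, 620.0)
print(f"{um_per_px:.4f} um per pixel")
print(f"a 20 um scale bar is {scale_bar_pixels(20, um_per_px)} pixels long")
```

The calibration has to be repeated for each objective and each instrument configuration, since the intermediate magnification factors differ between microscopes. The scale bar should be drawn before any resizing so that it scales with the image.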

If structures are detected whose size is less than that of a microscope’s resolution limit, the structure’s apparent size will match the resolution limit (104). This is an artifact of the optics. Any measurements taken of these structures should keep this artifact in mind.

6.10. Be Careful When Changing the Size (in Pixels) of a Digital Image

If the digital image captured by a microscope is a sampled image, then changing the total number of pixels in an image or reorienting the sample grid is a form of resampling the image (see Fig. 5). If an image is rotated in intervals of 90°, then the pixels are simply remapped. If the image size is changed by a power of two, the resampling mathematics is a fairly simple interpolation. If the image is resized or rotated at any other interval, a more significant form of interpolation must be performed in the software to estimate what the pixels would look like if the sampling grid had been that size or oriented at that angle. While rotating and/or resizing an image may be necessary for reporting the data in a publication, this interpolation of the data should only be performed once on an image to avoid the compounding of interpolation artifacts (analysis should only be performed on the un-interpolated image). This is usually not considered a reportable image manipulation, but it should be documented. Enlarging the image does not increase the resolution (Rayleigh criterion) in the image; in fact this may make formerly crisp edges seem fuzzier due to aliasing artifacts that are introduced by the interpolation algorithm.
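The difference between remapping and interpolating can be shown with a few pixels. In the sketch below, a 90° rotation only moves pixel values to new positions, whereas resizing a row from four to six pixels with linear interpolation creates intensity values that were never measured. The function names are illustrative, and linear interpolation is only one of several algorithms that software may use.

```python
def rotate90(image):
    """Rotate a 2D list of pixels 90 degrees clockwise by remapping positions."""
    return [list(row) for row in zip(*image[::-1])]


def resample_linear(row, new_length):
    """Resize a 1D row of pixels to new_length using linear interpolation."""
    old_last = len(row) - 1
    out = []
    for i in range(new_length):
        position = i * old_last / (new_length - 1)  # location on the old grid
        left = int(position)
        right = min(left + 1, old_last)
        fraction = position - left
        out.append(round(row[left] * (1 - fraction) + row[right] * fraction))
    return out


image = [[1, 2, 3], [4, 5, 6]]
rotated = rotate90(image)
print(rotated)  # same six values, new positions

edge = [0, 0, 255, 255]  # a crisp edge
enlarged = resample_linear(edge, 6)
print(enlarged)  # contains intermediate values that were never acquired
```

Four successive 90° rotations return the original image exactly. The enlarged row, in contrast, now has a two-pixel ramp where the crisp edge used to be, which is why analysis should be performed on the un-interpolated data.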

6.11. Reviewing the Processed Image

As mentioned earlier, the final product should be compared to the original image(s) to ensure that any manipulations performed were appropriate and do not alter the data. Senior authors have a particular responsibility for the work coming out of their lab (69).

If there are suspicions that an image has been manipulated inappropriately, how can we tell? The JCB uses an experienced human editor to examine images, while other journals have used sophisticated image screening software (4, 105, 106). The only readily accessible resources for performing image forensics have been provided by the ORI (107). The ORI has several tools for examining suspect images that work in Adobe Photoshop® (see http://ori.hhs.gov/tools/). On a simpler level, users can examine the image’s intensity histogram for hints. Original, unprocessed images tend to have continuous histograms. Processed images frequently show common artifacts (gaps, spikes) that can suggest if the image is truly an original or if it has received some post-processing (62).
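The histogram check mentioned above can be approximated in a few lines. This sketch assumes an 8-bit grayscale image held as a list of integer pixel values, and it uses synthetic data; the function names and the idea of counting empty bins between the darkest and brightest occupied levels are illustrative, and the count is a hint rather than a forensic test.

```python
def histogram(pixels, levels=256):
    """Count how many pixels fall at each intensity level."""
    counts = [0] * levels
    for p in pixels:
        counts[p] += 1
    return counts


def empty_bins_inside_range(counts):
    """Count empty bins between the lowest and highest occupied intensity levels."""
    occupied = [level for level, count in enumerate(counts) if count > 0]
    if not occupied:
        return 0
    return sum(
        1 for level in range(occupied[0], occupied[-1] + 1) if counts[level] == 0
    )


# Synthetic "original": every level from 60 to 159 is present (continuous histogram).
original = [v for v in range(60, 160) for _ in range(3)]

# The same data after a linear histogram stretch to the full 0-255 range.
stretched = [round((v - 60) * 255 / 99) for v in original]

print(empty_bins_inside_range(histogram(original)))
print(empty_bins_inside_range(histogram(stretched)))
```

The stretched data can only occupy as many levels as the original did, so spreading them over a wider range leaves regularly spaced gaps. Some acquisition systems produce gaps of their own, so a gapped histogram is a reason to ask questions, not proof of manipulation.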

7. Going to Press

As was mentioned earlier (see Subheading 2), some journals do not have a firm grasp on digital image requirements and their instructions to authors read like they were written by a graphic designer. Dealing with journals can lead to additional issues for authors.

7.1. High Bit Depth Images

High bit depth images (>8-bit grayscale, or >24-bit color) cannot be reproduced in print. The bit depth must be reduced before these images can be published, and this reduction should be documented.
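A linear rescale is one simple way to reduce bit depth, and a small example shows what is lost. The sketch below assumes 12-bit integer pixel values and a straightforward linear mapping to 8 bits; the function name and sample values are illustrative, and other mappings (for example, one that first selects a display range) are equally possible and should be documented in the same way.

```python
def to_8bit(pixels, source_bits):
    """Linearly rescale integer pixel values from source_bits down to 8 bits."""
    source_max = (1 << source_bits) - 1
    return [round(p * 255 / source_max) for p in pixels]


twelve_bit = [0, 7, 8, 15, 2048, 4095]  # made-up 12-bit intensities
eight_bit = to_8bit(twelve_bit, source_bits=12)

print(twelve_bit)
print(eight_bit)
```

Roughly sixteen neighboring 12-bit levels collapse into each 8-bit level, so values such as 0, 7, and 8 become indistinguishable. Quantitative analysis should therefore be carried out on the high bit depth data before conversion.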

7.2. Line Art

Many journals request that line art (graphs, charts) be submitted as vector graphic files. Vector files use mathematical formulas to describe the lines and do not have pixels, so they can be printed at any size without aliasing artifacts. Line art that is not in vector file format is typically requested at 1,200 dpi so that the very fine lines print smoothly. This is 4× the linear resolution, and therefore 16 times the total number of pixels, of the 300 dpi normally required to nicely reproduce a raster image. If your lab lacks the software or expertise to work with vector files, it is possible to zoom in on a chart (e.g., from Microsoft Excel®) and perform a series of screen captures on a high resolution monitor and then stitch the multiple images together in Photoshop.

7.3. RGB and CMYK

The process of printing takes a digital image that was usually captured and manipulated as grayscale or RGB color and converts it to a very different color space (often CMYK). The printing process cannot reproduce all the tones that are present in a digital image, particularly if the image is in color and the image uses the brightest reds or blues, and, to a lesser extent, green colors. The reproduction of color in print is highly dependent on the number and type of inks used by the printing press, as well as the type of paper used. It is baffling why journals expect scientists with little expertise in this highly technical area to optimally convert their images from the RGB color space to CMYK prior to submission. Fortunately, a number of journals have stepped away from color space conversion and now accept images in RGB (103, 108, 109).

7.4. File Compression Issues

Journals sometimes still ask for the digital image files to be sent as JPEG files. Inquire if they will take a TIFF image or a PNG file. If the journal insists on JPEG, perform this conversion as the very last item before submitting the image and do the conversion only at the highest possible quality factor to keep the artifacts to a minimum. Bitmapped images with fine lines or small text do not convert well to JPEG format. The lines and text lose their crispness. PNG is a better compressed image file format choice for images with fine lines and small text.

It is also a good idea to check the file size of the proof PDF sent to you by the journal. The default image compression used by Adobe Acrobat is JPEG. Use the zoom features in your PDF viewer to examine the images (figures and line art) carefully. If you send the journal a document and image files totaling 8–10 MB, your PDF galley proof should not be a file that is less than 1 MB in size. Suggest that the journal change their PDF output settings from screen to print. This PDF conversion will still perform a JPEG compression; however it will be much less aggressive. If the journal agrees to make this change, your replacement PDF proof will be a larger file size and better quality.

8. Conclusions

Once upon a time the darkroom technician acted as a “gate keeper” of image processing technology, but the photographic enlarger has been replaced by Adobe Photoshop® and other commercial image editing software. The tricky darkroom procedures that could be done by a few have now been replaced by digital tricks that can be performed by almost anyone. Along the way we seem to have lost the distinction between appropriate and inappropriate image manipulations. While misconduct (fraud) is a concern in science (see Subheading 1), the bigger issue seems to be related to the lack of understanding of how to correctly manipulate digital images. Combine the excitement for a person’s research with a lack of understanding and one can see why the journals are still finding over-manipulated images (see Subheading 2) in spite of their detailed instructions to authors. One editor went as far as to admonish people to “stop misbehaving” (110).

The first thing that needs to change is our mindset. We still tend to think of digital images as “pictures,” when in reality they are data. Pictures are artwork that can be changed to suit our desire for how they are presented to others, while image data are numerical and must be carefully manipulated in a way that does not alter their meaning. We need to ignore the pressure (from our peers and from ourselves) to beautify (19, 111) our images (see Subheading 3.3). Accurately communicating the truth is a fundamental issue in science (see Subheadings 3.1, 3.2, 3.3, and 3.4).

Secondly, we need to develop the discipline of documenting all the manipulation steps performed on an image, as well as the software and version number used to perform the manipulations. This would include steps that ordinarily do not have to be reported. Other types of scientific experiments are documented in great detail in our notebooks. Shouldn’t our image manipulations be as well? If an “image is worth a thousand words” shouldn’t we be more careful with these data? Providing adequate information about the image manipulations performed is a protection against accusations of fraud. If a reviewer does not like a manipulation that was performed, the discussion now becomes a “difference of scientific opinion,” not an accusation. It is important to remember that online images and supplemental data can be checked for inappropriate manipulations many years after publication.

Lastly, we count on our colleagues to be fully truthful in reporting their results. Shouldn’t we return the favor? We also need to teach our colleagues and mentor the next generation of scientists in how to correctly work with digital images. These guidelines are a starting point for discussion and training, but when it comes to submitting your publications, follow the journal’s instructions to authors. If those are short on detail, refer to those found at the JCB.

Acknowledgements

This work was supported in part by the Southwest Environmental Health Sciences Center (NIEHS ES006694); the Division of Biotechnology, Arizona Research Labs at the University of Arizona; the Arizona Cancer Center (NCI P30-CA23074); and the University of Arizona Department of Cellular and Molecular Medicine. The views, opinions, and conclusions of this chapter are not necessarily those of the SWEHSC, NIEHS, AZCC, NCI, or the University of Arizona.

References

- 1.Rossner M, Held MJ, Bozuwa GP, et al. Managing editors and digital images: shutter diplomacy. CBE Views. 1998;21:187–192. [Google Scholar]

- 2.Rossner M, Yamada KM. What’s in a picture? The temptation of image manipulation. J Cell Biol. 2004;166:11–15. doi: 10.1083/jcb.200406019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Rossner M. How to guard against image fraud. Scientist. 2006;20:24. [Google Scholar]

- 4.Rossner M. A false sense of security. J Cell Biol. 2008;183:573–574. doi: 10.1083/jcb.200810172. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Marris E. Should journals police scientific fraud? Nature. 2006;439:520–521. doi: 10.1038/439520a. [DOI] [PubMed] [Google Scholar]

- 6.Marcus A, Oransky I. Stop the picture doctors. Lab Times. 2011;4-2011:35. [Google Scholar]

- 7.Editorial Nature Cell Biology Combating scientific misconduct. Nat Cell Biol. 2011;13:1. doi: 10.1038/ncb0111-1. [DOI] [PubMed] [Google Scholar]

- 8.Anderson C. Easy-to-alter digital images raise fears of tampering. Science. 1994;263:317–318. doi: 10.1126/science.8278802. [DOI] [PubMed] [Google Scholar]

- 9.Taubes G. Technology for turning seeing into believing. Science. 1994;263:318. doi: 10.1126/science.8278803. [DOI] [PubMed] [Google Scholar]

- 10.McInnes SJ. Is it real? Zool Anz. 2001;240:467–469. [Google Scholar]

- 11.Suvarna SK, Ansary MA. Histopathology and the ‘third great lie’. When is an image not a scientifically authentic image? Histopathology. 2001;39:441–446. doi: 10.1046/j.1365-2559.2001.01312.x. [DOI] [PubMed] [Google Scholar]

- 12.Krueger J. Forensic examination of questioned scientific images. Account Res Policies Qual Assur. 2002;9:105–125. [Google Scholar]

- 13.Pritt BS, Gibson PC, Cooper K. Digital imaging guidelines for pathology: a proposal for general and academic use. Adv Anat Pathol. 2003;10:96–100. doi: 10.1097/00125480-200303000-00004. [DOI] [PubMed] [Google Scholar]

- 14.Editorial Nature Cell Biology Gel slicing and dicing: a recipe for disaster. Nat Cell Biol. 2004;6:275. doi: 10.1038/ncb0404-275. [DOI] [PubMed] [Google Scholar]

- 15.Editorial Nature Cell Biology Images to reveal all? Nat Cell Biol. 2004;6:909. doi: 10.1038/ncb1004-909. [DOI] [PubMed] [Google Scholar]

- 16.Guneri P, Akdeniz BG. Fraudulent management of digital endodontic images. Int Endod J. 2004;37:214–220. doi: 10.1111/j.0143-2885.2004.00780.x. [DOI] [PubMed] [Google Scholar]

- 17.Krueger J. Confronting manipulation of digital images in science. Office Res Integr Newslett. 2005;13-3:8–9. [Google Scholar]

- 18.Pearson H. Image manipulation: CSI: cell biology. Nature. 2005;434:952–953. doi: 10.1038/434952a. [DOI] [PubMed] [Google Scholar]

- 19.Nature Cell Biology Editorial Beautification and fraud. Nat Cell Biol. 2006;8:101–102. doi: 10.1038/ncb0206-101. [DOI] [PubMed] [Google Scholar]

- 20.Nature Cell Biology Editorial Appreciating data: warts, wrinkles and all. Nat Cell Biol. 2006;8:203. [Google Scholar]

- 21.Abraham E. Update on the AJRCCM—2007. Am J Respir Crit Care Med. 2007;175:207–208. [Google Scholar]

- 22.Nature Cell Biology Editorial Imagine. Nat Cell Biol. 2007;9:355. [Google Scholar]

- 23.Krueger J. Incidences of ORI cases involving falsified images. Office Res Integr Newslett. 2009;17-4:2–3. [Google Scholar]

- 24.Cromey DW. Avoiding twisted pixels: ethical guidelines for the appropriate use and manipulation of scientific digital images. Sci Eng Ethics. 2010;16:639–667. doi: 10.1007/s11948-010-9201-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Hwang WS, Ryu YJ, Park JH, et al. Evidence of a pluripotent human embryonic stem cell line derived from a cloned blastocyst. Science. 2004;303:1669–1674. doi: 10.1126/science.1094515. [DOI] [PubMed] [Google Scholar]

- 26.Department of Health and Human Services [Accessed 16 Sept 2011];Public Health Service Policies on Research Misconduct; Final Rule, (Public Health Service, Ed.) 42 CFR Parts 50 and 93. 2005 http://www.ori.dhhs.gov/documents/42_cfr_parts_50_and_93_2005.pdf.

- 27. Office of Research Integrity. Definition of Research Misconduct. http://ori.hhs.gov/misconduct/definition_misconduct.shtml [Accessed 16 Sept 2011]

- 28. National Academies (U.S.) Committee on Science, Engineering, and Public Policy. On being a scientist: a guide to responsible conduct in research. 3rd edn. National Academies Press; Washington, DC: 2009.

- 29. Young JR. Journals find fakery in many images submitted to support research. Chron Higher Ed; Washington, DC: 2008. http://chronicle.com/article/Journals-Find-Fakery-in-Man/846/ [Accessed 16 Sept 2011]

- 30. Couzin J. Scientific publishing. Don’t pretty up that picture just yet. Science. 2006;314:1866–1868. doi: 10.1126/science.314.5807.1866.

- 31. Abraham E, Adler KB, Shapiro SD, et al. The ATS journals’ policy on image manipulation. Am J Respir Cell Mol Biol. 2008;39:499. doi: 10.1165/rcmb.2008-0010ED.

- 32. Shattil SJ. A digital exam for hematologists. Blood. 2007;109:2275.

- 33. Caelleigh AS, Miles KD. Biomedical Journals’ Standards for Digital Images in Biomedical Articles (presented at the Council of Science Editors annual meeting). 2011. http://scienceimageintegrity.org/wp-content/uploads/2010/04/CSEPoster-April20-FINAL-AC-Edit1.ppt [Accessed 16 Sept 2011]

- 34. Farid H. Photo tampering throughout history. Fourandsix Technologies; 2011. http://www.fourandsix.com/photo-tampering-history/ [Accessed 16 Sept 2011]

- 35. King D. The commissar vanishes. The Newseum; Washington, DC: 2011. http://www.newseum.org/berlinwall/commissar_vanishes/ [Accessed 16 Sept 2011]

- 36. Oberg J. Soviet Space Propaganda: Doctored Cosmonaut Photos. Wired.com. Condé Nast Digital; 2011. http://www.wired.com/wiredscience/2011/04/soviet-space-propaganda/?pid=1177 [Accessed 16 Sept 2011]

- 37. Mackey R. Newspaper ‘Regrets’ Erasing Hillary Clinton. The Lede. New York Times; New York: 2011. http://thelede.blogs.nytimes.com/2011/05/10/newspaper-regrets-erasing-hillary-clinton/ [Accessed 16 Sept 2011]

- 38. Orwell G. Nineteen eighty-four, a novel. Secker & Warburg; London: 1949.

- 39. Wikipedia. Franklin D. Roosevelt. 2011. http://en.wikipedia.org/wiki/Fdr [Accessed 16 Sept 2011]

- 40. Meltzer B. Digital photography: a question of ethics. Lead Learn Technol. 1996;23-4:18–21.

- 41. Stanleigh S. Where do we re-draw the line? Ryerson Review of Journalism. Ryerson University School of Journalism; Toronto, Canada: 1995. http://www.rrj.ca/m3693/ [Accessed 16 Sept 2011]

- 42. Carmody D. Time responds to criticism over Simpson cover. New York Times Company; New York: 1994. http://www.nytimes.com/1994/06/25/us/time-responds-to-criticism-over-simpson-cover.html [Accessed 16 Sept 2011]

- 43. Glater JD. Martha Stewart Gets New Body in Newsweek. New York Times. New York Times Company; New York: 2005. http://www.nytimes.com/2005/03/03/business/media/03mag.html [Accessed 16 Sept 2011]

- 44. National Press Photographers Association. NPPA Calls Newsweek’s Martha Stewart Cover “A Major Ethical Breach”. 2005. http://www.nppa.org/news_and_events/news/2005/03/newsweek.html [Accessed 16 Sept 2011]

- 45. Nizza M, Lyon PJ. In an Iranian Image, a Missile Too Many. New York Times. New York Times Company; New York: 2008. http://thelede.blogs.nytimes.com/2008/07/10/in-an-iranian-image-a-missile-too-many/ [Accessed 16 Sept 2011]

- 46. Nature Cover Image. Nature. 2007;448(7153).

- 47. Sincich L. Cover story may obscure the plane truth. Nature. 2007;449:139. doi: 10.1038/449139b.

- 48. Russ JC. Seeing the scientific image (parts 1–3). Proc R Microsc Soc. 2004;39(2):97–114; (113):179–194; (114):267–281.

- 49. Ioannidis JP. Why most published research findings are false. PLoS Med. 2005;2:e124. doi: 10.1371/journal.pmed.0020124.

- 50. Hyde DM, Tyler NK, Plopper CG. Morphometry of the respiratory tract: avoiding the sampling, size, orientation, and reference traps. Toxicol Pathol. 2007;35:41–48. doi: 10.1080/01926230601059977.

- 51. McNamara G. Crusade for Publishing Better Light Micrographs—Light Microscope Publication Guidelines. 2006. http://home.earthlink.net/~geomcnamara/CrusadeBetterMicrographs.htm [Accessed 16 Sept 2011]

- 52. North AJ. Seeing is believing? A beginners’ guide to practical pitfalls in image acquisition. J Cell Biol. 2006;172:9–18. doi: 10.1083/jcb.200507103.

- 53. Stack RF, Bayles CJ, Girard AM, et al. Quality assurance testing for modern optical imaging systems. Microsc Microanal. 2011;17:598–606. doi: 10.1017/S1431927611000237.

- 54. Supported Formats. Bio-Formats. Laboratory for Optical and Computational Instrumentation (LOCI), University of Wisconsin-Madison; 2011. http://loci.wisc.edu/bio-formats/formats [Accessed 16 Sept 2011]

- 55. Pawley JB. Points, pixels, and gray levels: digitizing image data. In: Pawley JB, editor. Handbook of biological confocal microscopy. 3rd edn. Springer Science + Business Media LLC; New York, NY: 2006. pp. 59–79.

- 56. Spring KR, Parry-Hill MJ, Long JC, et al. Spatial resolution in digital images. 2006. http://micro.magnet.fsu.edu/primer/java/digitalimaging/processing/spatialresolution/ [Accessed 16 Sept 2011]

- 57. Spring KR, Fellers TJ, Davidson MW. Resolution and contrast in confocal microscopy. 2006. http://www.olympusconfocal.com/theory/resolutionintro.html [Accessed 16 Sept 2011]

- 58. New York Times. Why movie wheels turn backward; an explanation of the illusion and a suggested method for correcting it. New York Times; New York: 1918. http://query.nytimes.com/gst/abstract.html?res=9A05E6DA143EE433A25752C2A9619C946996D6CF [Accessed 16 Sept 2011]

- 59. Purves D, Paydarfar JA, Andrews TJ. The wagon wheel illusion in movies and reality. Proc Natl Acad Sci U S A. 1996;93:3693–3697. doi: 10.1073/pnas.93.8.3693.

- 60. Pawley JB. Fundamental limits in confocal microscopy. In: Pawley JB, editor. Handbook of biological confocal microscopy. 3rd edn. Springer Science + Business Media LLC; New York, NY: 2006. pp. 20–42.

- 61. Microsoft Corporation. The Microsoft Press® computer dictionary. 3rd edn. Microsoft Press®; Redmond, WA: 1997.

- 62. Burger W, Burge MJ. Histograms. In: Digital image processing. Texts in Computer Science. Springer; London: 2008. pp. 37–52.

- 63. National Science Foundation. Chapter II—Proposal Preparation Instructions: Data Management Plan. 2011. http://www.nsf.gov/pubs/policydocs/pappguide/nsf11001/gpg_2.jsp#dmp [Accessed 16 Sept 2011]

- 64. National Science Foundation. Dissemination and sharing of research results. 2011. http://www.nsf.gov/bfa/dias/policy/dmp.jsp [Accessed 16 Sept 2011]

- 65. Center for Bio-Image Informatics, University of California—Santa Barbara. Bisque Database. 2011. http://www.bioimage.ucsb.edu/bisque [Accessed 16 Sept 2011]

- 66. Open Microscopy Environment. 2011. http://www.openmicroscopy.org/site [Accessed 16 Sept 2011]

- 67. Blau J. Do burned CDs have a short life span? PC Magazine; 2006. http://www.pcworld.com/article/124312/do_burned_cds_have_a_short_life_span.html [Accessed 16 Sept 2011]

- 68. Searls DB. Data integration: challenges for drug discovery. Nat Rev Drug Discov. 2005;4:45–58. doi: 10.1038/nrd1608.

- 69. Alberts B. Promoting scientific standards. Science. 2010;327:12. doi: 10.1126/science.1185983.

- 70. Melino G. Policy and procedures on scientific misconduct. Cell Death Differ. 2010;17:1805–1806. doi: 10.1038/cdd.2010.122.

- 71. Committee on Ensuring the Utility and Integrity of Research Data in a Digital Age, National Academy of Sciences. Ensuring the integrity, accessibility, and stewardship of research data in the digital age. National Academies Press; Washington, DC: 2009.

- 72. Tengowski MW. Image compression in morphometry studies requiring 21 CFR Part 11 compliance: procedure is key with TIFFs and various JPEG compression strengths. Toxicol Pathol. 2004;32:258–263. doi: 10.1080/01926230490274399.

- 73. Food and Drug Administration. CFR—Code of Federal Regulations Title 21. Food and Drug Administration; 2010. http://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfcfr/cfrsearch.cfm?cfrpart=11 [Accessed 16 Sept 2011]

- 74. Wikipedia. Health Insurance Portability and Accountability Act. 2011. http://en.wikipedia.org/wiki/Health_Insurance_Portability_and_Accountability_Act [Accessed 16 Sept 2011]

- 75. Scientific Working Group Imaging Technology. Best Practices for Documenting Image Enhancement, section 11 (Version 1.2 2004.03.04). 2004.

- 76. Scientific Working Group Imaging Technology. Best Practices for Documenting Image Enhancement, section 11 (Version 1.3 2010.01.15). 2010. http://www.theiai.org/guidelines/swgit/guidelines/section_11_v1-3.pdf [Accessed 16 Sept 2011]

- 77. Open Microscopy Environment. OME-TIFF Overview and Rationale. 2011. http://www.ome-xml.org/wiki/OmeTiff [Accessed 16 Sept 2011]

- 78. MacKenzie JM, Burke MG, Carvalho T, Eades A. Ethics and digital imaging. Microsc Today. 2006;14-1:40–41.

- 79. Wikipedia. Tagged Image File Format. 2011. http://en.wikipedia.org/wiki/Tagged_Image_File_Format [Accessed 16 Sept 2011]

- 80. Guo H. Retraction: Guo H. Complication of central venous catheterization. N Engl J Med. 2007;356:e2. doi: 10.1056/NEJMc076064. N Engl J Med. 2007;356:1075.

- 81. Le HQ, Chua SJ, Koh YW, et al. Growth of single crystal ZnO nanorods on GaN using an aqueous solution method (Retraction of vol 87, 101908, 2005). Appl Phys Lett. 2010;97:239903.

- 82. Jiang S, Alberich-Jorda M, Zagozdzon R, Parmar K, Fu Y, Mauch P, Banu N, Makriyannis A, Tenen DG, Avraham S, Groopman JE, Avraham HK. Cannabinoid receptor 2 and its agonists mediate hematopoiesis and hematopoietic stem and progenitor cell mobilization. Blood. 2011;117(3):827–838. doi: 10.1182/blood-2010-01-265082. Retraction: Blood. 2011. doi: 10.1182/blood-2011-06-363325.

- 83. Miano JM. What is truth? Standards of scientific integrity in American Heart Association Journals. Arterioscler Thromb Vasc Biol. 2010;30:1–4. doi: 10.1161/ATVBAHA.109.200204.

- 84. Nature Editorial. Not picture-perfect. Nature. 2006;439:891–892. doi: 10.1038/439891b.

- 85. Kaiser J. Data integrity report sends journals back to the drawing board. Science. 2009;325:381. doi: 10.1126/science.325_381.

- 86. Cromey DW. Digital Imaging: Ethics. 2001. http://swehsc.pharmacy.arizona.edu/exp-path/micro/digimage_ethics.php [Accessed 16 Sept 2011]

- 87. Vollmer SH. Online Learning Tool for Research Integrity and Image Processing. 2008. http://www.uab.edu/researchintegrityandimages/ [Accessed 16 Sept 2011]

- 88. Miles KD. Integrity of Science Image Data Issues and emerging standards—Audit Tutorial, PI Outcomes. 2011. http://scienceimageintegrity.org/wp-content/uploads/2010/04/Audit_Tutorial.pptx [Accessed 16 Sept 2011]

- 89. Microscopy Society of America. Position on ethical digital imaging. Microsc Today. 2003;11-6:61.

- 90. Journal of Cell Biology. Instructions for Authors. 2011. http://jcb.rupress.org/site/misc/ifora.xhtml [Accessed 16 Sept 2011]

- 91. Nature. Editorial Policy: Image integrity and standards. 2009. http://www.nature.com/authors/policies/image.html [Accessed 16 Sept 2011]

- 92. Hall PA, Wixon J, Poulsom R. The Journal of Pathology’s approach to publication ethics and misconduct. J Pathol. 2011;223:447–449. doi: 10.1002/path.2843.

- 93. Cromey DW. Potentially the most dangerous dialog box in Adobe Photoshop™. 2002. http://swehsc.pharmacy.arizona.edu/exp-path/resources/pdf/Photoshop_Image_Size_dialog_box.pdf [Accessed 16 Sept 2011]

- 94. Bell AA, Brauers J, Kaftan JN, et al. High dynamic range microscopy for cytopathological cancer diagnosis. IEEE J Sel Top Signal Process. 2009;3:170–184.

- 95. Valdecasas AG, Marshall D, Becerra JM, Terrero JJ. On the extended depth of focus algorithms for bright field microscopy. Micron. 2001;32:559–569. doi: 10.1016/s0968-4328(00)00061-5.

- 96. Russ JC. The image processing handbook. 3rd edn. CRC Press; Boca Raton, FL: 1998.

- 97. Zwier JM, Van Rooij GJ, Hofstraat JW, Brakenhoff GJ. Image calibration in fluorescence microscopy. J Microsc. 2004;216:15–24. doi: 10.1111/j.0022-2720.2004.01390.x.

- 98. Pawley JB. The 39 steps: a cautionary tale of quantitative 3-D fluorescence microscopy. Biotechniques. 2000;28:884–886, 888. doi: 10.2144/00285bt01.

- 99. Bolte S, Cordelières FP. A guided tour into subcellular colocalization analysis in light microscopy. J Microsc. 2006;224:213–232. doi: 10.1111/j.1365-2818.2006.01706.x.

- 100. Taylor CR, Levenson RM. Quantification of immunohistochemistry—issues concerning methods, utility and semiquantitative assessment II. Histopathology. 2006;49:411–424. doi: 10.1111/j.1365-2559.2006.02513.x.

- 101. Bernardo V, Lourenco SQ, Cruz R, et al. Reproducibility of immunostaining quantification and description of a new digital image processing procedure for quantitative evaluation of immunohistochemistry in pathology. Microsc Microanal. 2009;15:353–365. doi: 10.1017/S1431927609090710.

- 102. PNG (Portable Network Graphics) specification, Version 1.2-12. Appendix: Rationale. 2011. http://www.libpng.org/pub/png/spec/1.2/PNG-Credits.html [Accessed 16 Sept 2011]