Abstract

Objective

To examine the long-term impact of Medicare payment reductions on patient outcomes for Medicare acute myocardial infarction (AMI) patients.

Data Sources

Analysis of secondary data compiled from the 100 percent Medicare Provider Analysis and Review (MedPAR) files for 1995–2005, Medicare hospital cost reports, Inpatient Prospective Payment System Payment Impact Files, American Hospital Association annual surveys, InterStudy, Area Resource Files, and County Business Patterns.

Study Design

We used a natural experiment, the Balanced Budget Act (BBA) of 1997, as an instrument to predict the cumulative Medicare revenue loss due solely to the BBA. Based on the predicted loss, we categorized hospitals into small, moderate, or large payment-cut groups and followed Medicare AMI patient outcomes in these hospitals over an 11-year panel between 1995 and 2005.

Principal Findings

We found that while Medicare AMI mortality trends remained similar across hospitals between the pre-BBA and initial-BBA periods, hospitals facing large payment cuts saw smaller improvements in mortality rates than hospitals facing small cuts in the post-BBA period. Part of the relatively higher AMI mortality among large-cut hospitals might be related to reductions in staffing levels and operating costs, and a small part might be due to patient selection.

Conclusions

We found evidence that hospitals facing large Medicare payment cuts as a result of the BBA of 1997 experienced deteriorating patient outcomes in the long run. Medicare payment reductions may have the unintended consequence of widening the gap in quality across hospitals.

Keywords: Medicare, mortality, payment reduction, BBA, hospital

Health policy researchers and decision makers have long been concerned about the relationship between provider payment generosity and quality of care for many reasons. One view holds that the Medicare system contains so much inefficiency that provider payments could be reduced without hurting quality. Studies have shown that Medicare often operates on the “flat of the curve,” where areas with additional care or spending do not have better outcomes (Fisher et al. 2003a,b; Baicker and Chandra 2004; Skinner, Staiger, and Fisher 2006). By contrast, several recent studies indicate that higher spending may be valuable, especially in the hospital setting. Greater hospital inpatient spending has been shown to be associated with lower mortality rates in teaching hospitals (Ong et al. 2009); in selected states such as California (Romley, Jena, and Goldman 2011), Florida (Doyle 2011; Doyle et al. 2014), and Pennsylvania (Barnato et al. 2010); in a set of nationally representative hospitals (Romley et al. 2013); and for several medical (Kaestner and Silber 2010) or surgical (Chandra and Staiger 2007; Silber et al. 2010) conditions.

This important topic has sparked a long stream of research examining the effect of payment reductions on patient outcomes. The literature generally finds that past payment reductions led to cost-cutting responses in the management and provision of care (Feder, Hadley, and Zuckerman 1987; Newhouse and Byrne 1988; Hodgkin and McGuire 1994; Cutler 1995; Bazzoli et al. 2005, 2007, 2008; Lindrooth, Clement, and Bazzoli 2007; Zhao et al. 2008) and that, although more patients were discharged in unstable condition (Kosecoff et al. 1990), there was limited or no adverse impact on patient outcomes (Kahn et al. 1990a,b; Rogers et al. 1990; Staiger and Gaumer unpublished data; Cutler 1995; Shen 2003; Volpp et al. 2005; Seshamani, Schwartz, and Volpp 2006; Seshamani, Zhu, and Volpp 2006). However, this literature has focused primarily on short-term impacts. Our study fills the gap by examining the long-term effect of provider payment cuts, using a plausibly exogenous shock to hospital revenue: the Balanced Budget Act of 1997 (BBA). Understanding the long-term impact of the BBA on quality of care is especially timely in light of the recent Affordable Care Act (ACA) of 2010, which includes permanent Medicare payment cuts to providers that began in 2012.

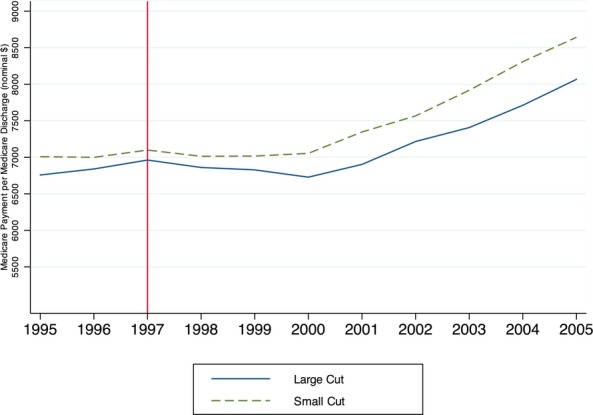

Several facts highlight the importance of the BBA. First, the BBA contained the most significant Medicare payment reductions in decades. With the exception of the Prospective Payment System (PPS), the BBA is the only legislation that reduced Medicare inpatient payments in nominal terms rather than merely slowing their growth. Second, BBA payment cuts could have a long-lasting effect on hospitals because the legislation not only reduced diagnosis-related group (DRG) payment levels between 1998 and 2002 but also permanently altered the formula for special add-on payments.1 As illustrated in Figure 1, even though hospital payments grew at the full “market basket” update after the 1998–2002 period, the payment gap across hospitals was permanent (Figure 1 is explained in more detail in the Results section). Third, the BBA reductions occurred after a sustained period of declining inpatient admissions and lengths of stay, as well as aggressive payment negotiations by managed care plans (Wu 2009) that limited hospitals' ability to shift costs to private payers (Wu 2010). As a result, hospital actions to produce further savings in this environment were more likely to have direct consequences for patient outcomes than in previous decades.

Figure 1.

Trends in Medicare Payment per Medicare Discharge by BBA Cuts, 1995–2005

To capture the long-term effect of the BBA, we follow the literature's methodology for simulating policy impacts (Staiger and Gaumer unpublished data; Gruber 1994; Cutler 1998; Shen 2003; Wu 2010), but instead of measuring yearly impacts, we simulate the total cumulative payment cuts over the BBA implementation period and use them as an instrumental variable (IV) to extract the portion of the Medicare payment change that is due to the BBA alone. We then use a difference-in-difference-in-differences (DDD) empirical design to follow hospitals facing large versus small cuts (top and bottom quartiles of predicted loss) over an 11-year panel and to identify changes over time in survival outcomes for Medicare acute myocardial infarction (AMI) patients. We provide details about the data and methodology in the following sections.

Methods

Data Sources

Patient data were obtained from the 100 percent Medicare Provider Analysis and Review files for 1995 through 2005. Data on Medicare revenues and discharges by payer were obtained from Medicare hospital cost reports. Information on proposed and actual changes in DRG payment updates and payment formulas came from a series of Medicare Payment Advisory Commission reports and the Federal Register (1998–2001). PPS Payment Impact Files provided the data needed to simulate Medicare DRG pricing. Additional hospital characteristics were obtained from American Hospital Association annual surveys between 1995 and 2005. Other area characteristics were obtained from InterStudy (Health Maintenance Organization [HMO] enrollment), Area Resource Files, and County Business Patterns.

Our study sample comprised urban hospitals that operated continuously between 1996 and 2000 and for which we had complete information to instrument the impact of Medicare payment cuts due to the BBA. We focused on urban hospitals because HMO data were available only for metropolitan statistical areas (MSAs). The sample was further restricted to hospitals with at least 20 AMI admissions in a year because of Centers for Medicare and Medicaid Services (CMS) regulations and confidentiality concerns. Because reported revenue data are highly skewed, we excluded outlier hospitals whose change in Medicare revenue per discharge between 1996 and 2000 fell in the top or bottom 1 percent of the distribution. Our final sample consisted of approximately 1,400 urban hospitals observed from 1995 to 2005, for a total of 14,021 hospital-year observations.
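A minimal Python sketch of the two sample restrictions just described (the 1 percent trimming of the 1996–2000 revenue change and the 20-admission threshold). This is an illustration, not the authors' code: the function names and the record keys `hospital` and `ami_admissions` are hypothetical, and `statistics.quantiles` applies its own default interpolation rule, which may differ from the percentile definition the authors used.

```python
from statistics import quantiles

def trim_outliers(changes_by_hospital, pct=1):
    """Drop hospitals whose 1996-2000 change in Medicare revenue per
    discharge falls in the bottom or top `pct` percent of the distribution.

    changes_by_hospital: dict mapping a hospital id to its revenue change.
    """
    cuts = quantiles(changes_by_hospital.values(), n=100)  # 99 percentile cut points
    lo, hi = cuts[pct - 1], cuts[99 - pct]                  # e.g., 1st and 99th percentiles
    return {h: d for h, d in changes_by_hospital.items() if lo <= d <= hi}

def keep_hospital_years(rows, kept_hospitals, min_ami=20):
    """Keep hospital-year rows (dicts with hypothetical keys) for retained
    hospitals with at least `min_ami` Medicare AMI admissions that year."""
    return [
        r for r in rows
        if r["hospital"] in kept_hospitals and r["ami_admissions"] >= min_ami
    ]
```

Trimming is done once per hospital on the 1996–2000 change, while the admission threshold is applied year by year, which is why the two steps are kept separate.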

Methodology Overview

An empirical concern in estimating the effect of Medicare payment on patient outcomes is that observed Medicare revenue is endogenous: hospitals may respond to payment changes by selecting patients, altering DRG coding, or inflating charges to obtain higher payments, and such behavior is likely correlated with hospital quality. In addition, unobserved hospital characteristics may be correlated with both Medicare revenue and patient outcomes. Our empirical strategy combined IVs with a DDD model to address these endogeneity and selection concerns. The IV approach sought to break the endogenous relationship between actual Medicare revenue and patient outcomes by isolating the portion of the Medicare revenue change that is due solely to exogenous BBA provisions. This requires instruments that are correlated with BBA-driven changes in Medicare revenue but that are unlikely to affect patient outcomes directly other than through their impact on Medicare revenue.

The BBA serves as a suitable instrument because the legislation was drafted out of concern that rapidly rising Medicare spending was placing enormous fiscal pressure on the federal government and that Medicare may have been overpaying providers in the early to mid-1990s (Newhouse 2002), not out of any concern related to hospital quality. The BBA therefore generated unexpected Medicare revenue shocks to hospitals that are unrelated to quality or patient outcomes other than through the BBA's impact on Medicare payment. The BBA resulted in the largest Medicare payment reduction since the introduction of the Inpatient PPS in 1983, about $40 billion between 1998 and 2002 (Guterman 2000). The BBA reduced inpatient payments primarily by cutting the annual DRG payment update between 1998 and 2002 across all hospitals.2 In addition, the BBA reduced or altered supplementary add-ons in the DRG payment formula, which caused larger cuts among teaching hospitals and hospitals serving a large share of low-income and costly patients. Subsequent legislation relieved some of the BBA cuts, including the BBRA of 1999, BIPA of 2000, and the Medicare Modernization Act of 2003. As a result, hospitals experienced their largest payment reduction between 1998 and 2000 but received some financial relief in 2001 and 2002.
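Because a cut to the annual update compounds, a reduction in any one year lowers the price level in every later year. The sketch below illustrates this using the update reductions listed in footnote 2. It rests on two assumptions that are not from the paper: a constant 2.5 percent market basket rate chosen only for illustration, and a simple additive rule (reduced update = market basket minus the reduction). The function name is hypothetical.

```python
# Annual update reductions (percentage points below the full market basket)
# as listed in footnote 2; 2001 and 2002 are net of BBRA/BIPA relief.
REDUCTIONS_PP = {1998: 2.7, 1999: 1.9, 2000: 1.8, 2001: 0.0, 2002: 0.55}

def cumulative_price_ratio(reductions_pp, market_basket=0.025):
    """Price level after the reduced updates divided by the price level
    after full market-basket updates over the same years.

    `market_basket` is a hypothetical constant annual rate used only for
    illustration; it is not a figure reported in the paper.
    """
    full = reduced = 1.0
    for year in sorted(reductions_pp):
        full *= 1 + market_basket
        reduced *= 1 + market_basket - reductions_pp[year] / 100
    return reduced / full

ratio = cumulative_price_ratio(REDUCTIONS_PP)
print(f"2002 price level relative to full updates: {ratio:.3f}")
```

In this stylized setting, once full updates resume after 2002 they multiply both price levels by the same factor, so the proportional shortfall stays fixed, which is the sense in which the update cuts are permanent.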

Empirically, the IV estimation was conducted in two steps. In the first step, we used the exogenous BBA policy change to assign hospitals to treatment and control groups, using the BBA instruments to predict which hospitals would experience unexpectedly large versus small BBA cuts. In the second step, we examined the pre-post change in patient outcomes between the treatment and control groups in a difference-in-differences (DD) estimation. In essence, this is a DDD design: we compared the relative change in mortality trends between large- and small-cut hospitals before and after the BBA. The identifying assumption is that, once hospital-specific effects are controlled for, treatment assignment is exogenous. We analyzed 11 years of data spanning three periods (1995–1997, pre-BBA; 1998–2000, initial-BBA; and 2001–2005, post-BBA) so that we could better control for any systematic differences in pre-BBA trends that might explain differences in the observed post-BBA trends.
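To make the comparison concrete, here is an unadjusted version of the contrast, computed from group-by-period means. It is a simplified sketch, not the paper's estimator: it ignores the covariates, hospital fixed effects, and year dummies of the second-stage model, and the data layout and function names are hypothetical. A positive value means mortality fell less (or rose more) at large-cut hospitals than at small-cut hospitals.

```python
from statistics import mean

def period(year):
    """Map a calendar year to the study's three periods."""
    if 1995 <= year <= 1997:
        return "pre"
    if 1998 <= year <= 2000:
        return "initial"
    if 2001 <= year <= 2005:
        return "post"
    raise ValueError(f"year {year} is outside 1995-2005")

def trend_contrast(rows, later="post"):
    """Unadjusted difference-in-differences of group-period means:
    (large-cut change from pre to `later`) minus (small-cut change).

    rows: iterable of (group, year, mortality) with group "large" or "small".
    """
    cells = {}
    for group, year, mortality in rows:
        cells.setdefault((group, period(year)), []).append(mortality)
    m = {key: mean(values) for key, values in cells.items()}
    large_change = m[("large", later)] - m[("large", "pre")]
    small_change = m[("small", later)] - m[("small", "pre")]
    return large_change - small_change
```

Passing `later="initial"` gives the analogous pre versus initial-BBA comparison.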

First Stage Estimation

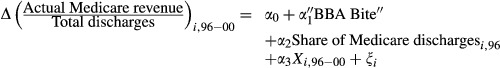

The first stage regression exploited the exogenous variation in changes in Medicare reimbursement as a result of BBA policy change during the BBA implementation period. The regression specification is as follows:

|

(1) |

where i indexes hospitals.

The dependent variable, the actual change in Medicare revenue per discharge,3 was calculated as the difference between actual and hypothetical Medicare revenue per discharge in 2000. The hypothetical value was computed by updating the 1996 actual Medicare revenue per discharge to 2000, assuming that, in the counterfactual world, Medicare revenue would have grown by the full market basket update between 1996 and 2000 (Cutler 1998).
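A sketch of how the dependent variable is built, assuming the user supplies the full market basket update rate for each year between 1996 and 2000 (the rates are not reported in this section). The function and argument names are hypothetical.

```python
def actual_revenue_change(rev_1996, rev_2000, market_basket_updates):
    """Actual 2000 Medicare revenue per discharge minus a counterfactual
    that inflates 1996 revenue per discharge by the full market basket.

    market_basket_updates: one annual update rate per year between 1996
    and 2000 (e.g., 0.02 for 2 percent), supplied by the user.
    """
    counterfactual = rev_1996
    for rate in market_basket_updates:
        counterfactual *= 1 + rate  # compound each year's full update
    return rev_2000 - counterfactual

# Hypothetical example: a hospital whose revenue per discharge was flat.
print(actual_revenue_change(5000.0, 5000.0, [0.02, 0.025, 0.02, 0.025]))
```

A negative result means the hospital's 2000 revenue per discharge fell short of what full updates would have produced.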

The key independent variables are the BBA instruments: (1) a simulated DRG price reduction (“BBA bite” in equation (1)); and (2) the share of Medicare discharges in 1996. The BBA bite variable was constructed to capture the cumulative impact on the unit DRG price of all the payment components affected by the BBA between 1996 and 2000.4 To derive the BBA bite, we first simulated a Laspeyres DRG price index under the BBA provisions while holding hospital behavioral inputs (including case mix, resident-to-bed ratio, and the percentage add-ons for disproportionate share hospital [DSH] and outlier [OUT] payments) at their pre-BBA (1996) levels. We then generated the hypothetical DRG price that would have prevailed without the BBA by updating the 1996 DRG price by the full market basket to 2000. The BBA bite is the difference between the two prices.
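The logic of the BBA bite (apply the new rules to fixed 1996 inputs, then compare with the 1996 price inflated by the full market basket) can be sketched as below. The actual Medicare payment formula has more components than this; the sketch assumes a stylized price equal to a base rate times the case mix index times one plus the IME, DSH, and outlier add-on shares. That formula, the scale parameters standing in for BBA changes to the add-ons, and all names are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class HospitalInputs:
    """Pre-BBA (1996) inputs held fixed in the simulation."""
    case_mix: float
    ime_addon: float      # IME add-on share implied by the 1996 resident-to-bed ratio
    dsh_addon: float      # disproportionate share hospital add-on share
    outlier_addon: float  # outlier add-on share

def drg_price(base_rate, inputs, ime_scale=1.0, dsh_scale=1.0, outlier_scale=1.0):
    """Stylized per-case price: base rate x case mix x (1 + add-ons).
    The scale arguments stand in for BBA changes to the add-on formulas."""
    addons = (inputs.ime_addon * ime_scale
              + inputs.dsh_addon * dsh_scale
              + inputs.outlier_addon * outlier_scale)
    return base_rate * inputs.case_mix * (1 + addons)

def bba_bite(inputs, base_1996, base_2000_bba, full_mb_factor, **bba_scales):
    """Simulated 2000 price under BBA rules (1996 inputs held fixed) minus
    the 1996 price inflated by the cumulative full market basket factor."""
    simulated = drg_price(base_2000_bba, inputs, **bba_scales)
    counterfactual = drg_price(base_1996, inputs) * full_mb_factor
    return simulated - counterfactual
```

Holding the inputs at 1996 values is what makes the bite reflect the legislation rather than hospitals' subsequent behavior; a teaching hospital with a large IME add-on gets a more negative bite when `ime_scale` is below 1.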

The second instrument was the Medicare share of discharges, fixed at the 1996 level. Hospitals with a larger share of Medicare patients would face a greater loss in revenue per discharge. In addition, theoretical models such as that of Glazer and McGuire (2002) suggest that a hospital's response to a Medicare price change will depend on the Medicare share of its patients because care is jointly produced across payers. This approach is similar to several previous papers that weighted the “bite” variable by Medicare share directly (Hadley, Zuckerman, and Feder 1989; Staiger and Gaumer unpublished data; Gruber 1994; Cutler 1998; Shen 2003; Wu 2010) and to studies that allowed the effect of the Medicare price to vary by Medicare share (Yip 1998; Dafny 2005; Acemoglu and Finkelstein 2008; Kaestner and Guardado 2008).

Lastly, the control variables in the model were the change in Medicare case mix, hospital bed size and occupancy rate, hospital ownership (public, teaching, or for-profit), whether a hospital was part of a hospital system, the hospital Herfindahl-Hirschman Index (HHI), and the level and change in HMO penetration. Because many of these variables changed little between 1996 and 2000, we used their 1996 values in the regression.5 We also included dummies for each hospital referral region, as defined by the Dartmouth Atlas.
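A bare-bones sketch of the first-stage regression, restricted to an intercept and the two instruments; the controls and hospital referral region dummies are omitted for brevity, and the function names are hypothetical. It solves the ordinary least squares normal equations in plain Python so the example needs no third-party packages; in practice a statistics package would be used. The fitted values are what the second step uses to rank hospitals by predicted BBA loss.

```python
def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting.
    X: list of rows, each starting with 1.0 for the intercept."""
    k = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(k)]
    a = [row[:] + [v] for row, v in zip(xtx, xty)]  # augmented matrix
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]  # scale pivot row to 1
        for r in range(k):
            if r != col:
                f = a[r][col]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return [a[i][k] for i in range(k)]

def first_stage_fit(bite, share, d_rev):
    """Regress the actual revenue change on the two instruments and
    return (coefficients, fitted values)."""
    X = [[1.0, b, s] for b, s in zip(bite, share)]
    coef = ols(X, d_rev)
    fitted = [sum(c * x for c, x in zip(coef, row)) for row in X]
    return coef, fitted
```

With two instruments and one endogenous variable, the model is overidentified by one, which is what makes the Sargan test reported in the Results possible.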

Second Stage Estimation

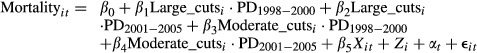

To estimate the effect of BBA payment cuts on mortality, we implemented a hospital fixed-effects model with the following specifications in the second stage:

|

(2) |

where i indexes hospitals and t indexes year.

Dependent Variables

Our main dependent variables were risk-adjusted AMI mortality rates among Medicare patients, measured as death in the hospital and within 7 days, 30 days, 90 days, and 1 year of the admission date. These hospital-specific outcome measures were derived from patient-level regressions that included hospital indicators, fully interacted patient demographic covariates (five age groups, gender, race, and urban or rural residence), and 17 comorbidity measures to control for illness severity, following the methodology of prior work (Shen 2003; Skinner and Staiger 2009). In other words, instead of using the actual percentage of patients who died in each hospital as the outcome measure, we used these risk-adjusted hospital intercepts, which represent each hospital's mean outcome holding patient characteristics constant.
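The idea of a risk-adjusted hospital intercept can be illustrated with a deliberately small additive model: each patient's outcome is a hospital effect plus a risk-cell effect, where a risk cell stands for one combination of the demographic and comorbidity categories. This is a sketch under stated assumptions, not the authors' estimator: it uses a linear probability model, swaps in backfitting (alternating group-mean updates) for a regression package, and all names are hypothetical. Hospital effects are centered at zero across hospitals, loosely mirroring the paper's normalization of the measure to average zero.

```python
from collections import defaultdict

def hospital_intercepts(records, n_iter=200):
    """Fit outcome = a[hospital] + b[risk_cell] + error by backfitting.

    Alternating the two group-mean updates converges to the least-squares
    fit of this additive model. Hospital effects are returned centered at
    zero, since the overall level is not separately identified from b.

    records: list of (hospital, risk_cell, outcome) tuples.
    """
    a = defaultdict(float)
    b = defaultdict(float)
    for _ in range(n_iter):
        # Hospital effects: mean outcome net of current risk-cell effects.
        sums, counts = defaultdict(float), defaultdict(int)
        for hosp, cell, y in records:
            sums[hosp] += y - b[cell]
            counts[hosp] += 1
        a = defaultdict(float, {h: sums[h] / counts[h] for h in sums})
        # Risk-cell effects: mean outcome net of current hospital effects.
        sums, counts = defaultdict(float), defaultdict(int)
        for hosp, cell, y in records:
            sums[cell] += y - a[hosp]
            counts[cell] += 1
        b = defaultdict(float, {c: sums[c] / counts[c] for c in sums})
    center = sum(a.values()) / len(a)
    return {h: v - center for h, v in a.items()}

# Hospital H2 treats mostly older (higher-risk) patients.
recs = ([("H1", "young", 0.1)] * 3 + [("H1", "old", 0.4)]
        + [("H2", "young", 0.2)] + [("H2", "old", 0.5)] * 3)
print(hospital_intercepts(recs))
```

In this toy example, the two hospitals' raw mean outcomes differ by 0.25, mostly because of case mix, while the adjusted intercepts differ by only 0.1.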

Key Identifying Variables

Based on the predicted Medicare revenue loss due to the BBA from the first-stage estimation (losses defined as negative values), we classified hospitals into three payment-cut categories: small (the quartile with the smallest predicted loss), moderate (the middle two quartiles, between the 25th and 75th percentiles), and large (the quartile with the largest predicted loss). This specification allowed us to better illustrate diverging trends in mortality outcomes by payment-cut category. We also estimated an alternative specification that used the continuous predicted Medicare revenue loss from the first stage directly.
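Assigning the three categories from the first-stage predictions might look like the sketch below. Because losses are negative, the quartile with the most negative predicted change is the large-cut group. The function name is hypothetical, and both the treatment of hospitals exactly on a cut point and the default (“exclusive”) interpolation in `statistics.quantiles` are choices of this sketch, not details reported in the paper.

```python
from statistics import quantiles

def classify_cuts(predicted_change):
    """Map hospital id -> 'large', 'moderate', or 'small' payment cut.

    predicted_change: dict of hospital id -> predicted BBA revenue change
    per discharge (losses negative).
    """
    q1, _, q3 = quantiles(predicted_change.values(), n=4)
    categories = {}
    for hosp, change in predicted_change.items():
        if change <= q1:
            categories[hosp] = "large"      # most negative quartile
        elif change > q3:
            categories[hosp] = "small"      # least negative (or positive) quartile
        else:
            categories[hosp] = "moderate"   # interquartile range
    return categories
```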

The coefficients of interest are β1–β4. The coefficient on the interaction between “large cut” and the 1998–2000 period dummy (β1) identifies the change in patient mortality trends between the pre- and initial-BBA periods for hospitals that experienced large payment cuts, relative to hospitals that experienced only small cuts (the reference group). Similarly, β2 captures the difference in mortality trends between the pre- and post-BBA periods.6 We estimated bootstrapped standard errors in the second-stage regressions to account for the fact that our payment-cut categories were based on predicted values from the first-stage regression.
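The section does not spell out the resampling scheme. One common choice, assumed here, is a hospital-level (cluster) bootstrap in which each replicate resamples whole hospitals with replacement and re-runs both stages, so that variation in the predicted payment-cut categories feeds into the standard errors. The `estimate` callback is a hypothetical placeholder for that full two-stage procedure.

```python
import random
from statistics import stdev

def hospital_bootstrap_se(hospital_ids, estimate, n_reps=200, seed=0):
    """Bootstrap standard error from resampling whole hospitals.

    estimate: callable that takes a list of hospital ids (duplicates
        allowed) and returns the statistic of interest. For the design
        described above, it would re-run the first stage (which assigns
        payment-cut categories) and then the second stage on the resample.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    ids = list(hospital_ids)
    replicates = [estimate(rng.choices(ids, k=len(ids))) for _ in range(n_reps)]
    return stdev(replicates)
```

Resampling hospitals rather than hospital-years keeps each hospital's 11-year history together, which preserves within-hospital correlation over time.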

Control Variables

We included a set of variables to control for hospital and market characteristics that may affect patient outcomes (Xit in equation (2)). The Medicare case mix controlled for the general severity of Medicare patients' conditions in a hospital. Hospital characteristics included the log of hospital beds, occupancy rate, hospital ownership (public, teaching, or for-profit), and whether a hospital belonged to a hospital system. HMO penetration and hospital-specific HHI captured the competitiveness of the insurance and hospital markets that hospitals face. HMO penetration is the percentage of the MSA population enrolled in an HMO plan. Hospital HHI was derived following Melnick et al. (1992). Hospital fixed effects (Zi in equation (2)) controlled for idiosyncratic time-invariant hospital characteristics, including differences in initial hospital status such as pre-BBA financial condition, that may systematically affect the change in mortality. Lastly, year dummies (αt in equation (2)) controlled for secular time trends.
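The basic index is simple to compute. Melnick et al. (1992) construct a hospital-specific HHI that is more involved than a single market-level index, so the sketch below (with a hypothetical function name) shows only the underlying definition, the sum of squared market shares on the 0-to-1 scale used in Table 1, for one market.

```python
def hhi(volumes):
    """Herfindahl-Hirschman Index on a 0-1 scale: the sum of squared
    market shares. `volumes` maps each hospital in one market to a volume
    measure such as admissions."""
    total = sum(volumes.values())
    return sum((v / total) ** 2 for v in volumes.values())

# Hypothetical three-hospital market.
print(hhi({"A": 50, "B": 30, "C": 20}))
```

Higher values indicate a more concentrated, less competitive hospital market; a monopoly scores 1.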

Outcomes in Exploratory Analysis

To explore potential mechanisms through which patient mortality may be affected, we examined several hospital and treatment inputs that are correlated with patient outcomes, including operating costs, staffing levels, and AMI technology use. To capture the overall resources used at a hospital, we examined changes in total operating cost per 1996 bed.7 Nurse staffing levels have been shown to be directly associated with patient outcomes (Needleman et al. 2011), so we included both full-time equivalent (FTE) registered nurses and licensed practical nurses. We also examined total staffing levels (FTEs). Because a patient's mortality at discharge depends on how long the patient stays in the hospital, we paired our analysis of in-hospital mortality with an analysis of length of stay.

Results

Figure 1 shows the trends in DRG payments by the degree of instrumented BBA cuts. The graph illustrates the key argument of our study: BBA payment reductions had a lasting effect that extended beyond the initial BBA period. Medicare payment per Medicare discharge differed little between the largest-cut and smallest-cut hospitals in the pre-BBA period. The BBA created a payment gap, and this gap persisted in the post-BBA period even though Medicare payments grew at similar rates in both groups.

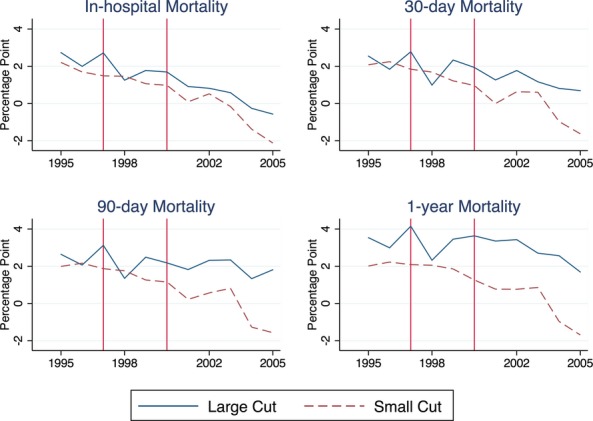

Figure 2 shows Medicare AMI mortality trends at discharge and at 30 days, 90 days, and 1 year from hospital admission between 1995 and 2006.8 These are average mortality rates at a hospital after adjusting for differences in patient demographics and comorbidities across hospitals.9 In general, mortality rates declined during the study period. The two categories of hospitals did not appear to have systematically different trends in the pre-BBA period, but their trends diverged over time, particularly after 1998. Taking 90-day mortality as an example, hospitals with the smallest and largest cuts started with similar mortality rates in 1995. However, while mortality at small-cut hospitals declined over time, the trend was flat among hospitals with large payment cuts.

Figure 2.

Trends in Medicare AMI Patients’ Mortality Rates: Difference between Hospitals Facing Large and Small Medicare BBA Cuts

Table 1 presents summary statistics of key variables for the entire study sample and separately for the large-cut and small-cut subsamples by pre-BBA and post-BBA periods. The mean simulated BBA bite (DRG price cut) was −$603, with a standard deviation of $409. Based on the IVs, large-cut hospitals on average experienced a loss of $616 in Medicare revenue per total discharge due to the BBA, while small-cut hospitals saw a $260 increase. In the pre-BBA period, large-cut hospitals were more likely to be major teaching or not-for-profit hospitals. This is consistent with the BBA payment changes affecting hospitals with large Medicare shares and major teaching hospitals the most. Despite some differences, both groups of hospitals had similar bed sizes, occupancy rates, and mortality outcomes.

Table 1.

Hospital Characteristics by Degree of BBA Medicare Revenue Cut

| | All Hospitals, 1995–2005 | Largest Cuts, 1995–1997 | Moderate Cuts, 1995–1997 | Smallest Cuts, 1995–1997 |
|---|---|---|---|---|
| Simulated DRG price cut, BBA bite ($) (SD) | −607 (403) | −645 (172) | −602 (383) | −535 (263) |
| Instrumented loss in Medicare revenue due to BBA ($ per total discharge) | −166 | −616 | −170 | 260 |
| Raw AMI 90-day mortality | 0.23 | 0.24 | 0.23 | 0.23 |
| Operating cost per 1996 bed ($) | 505,442 | 358,976 | 385,300 | 388,999 |
| % For-profit hospital | 15 | 8 | 14 | 15 |
| % Public hospital | 12 | 8 | 15 | 14 |
| % Teaching hospital | 9 | 13 | 8 | 4 |
| Medicare case mix index (CMI) | 1.44 | 1.39 | 1.41 | 1.42 |
| Hospital beds | 285 | 275 | 281 | 278 |
| Occupancy rate (%) | 59 | 56 | 53 | 53 |
| % Hospital system | 65 | 51 | 63 | 67 |
| HMO penetration (%) | 23 | 24 | 22 | 18 |
| Hospital HHI | 0.35 | 0.33 | 0.33 | 0.38 |
| Total discharges | 13,679 | 10,084 | 11,009 | 12,079 |
| % Discharges Medicare | 38 | 44 | 38 | 36 |
| % Discharges Medicaid | 13 | 13 | 14 | 15 |
| % Discharges private | 49 | 43 | 49 | 50 |
| Medicare AMI cases | 94 | 99 | 89 | 91 |
| Observations (hospital-years) | 14,021 | 982 | 2,088 | 1,028 |

The first-stage regression results are shown in the appendix table. The coefficients on both instruments are significant and have the expected signs: a larger simulated BBA loss predicted a greater drop in actual Medicare revenue per discharge between 1996 and 2000, and a greater Medicare share in 1996 led to larger actual Medicare revenue losses. Regarding instrument validity, the Sargan test of overidentifying restrictions cannot reject the null hypothesis that the instruments are exogenous (valid) in any model (bottom panel of the appendix table). Regarding instrument strength, the first-stage F-statistic of 26 passes the tests for weak instruments (Staiger and Stock 1997; Stock and Yogo 2005).
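For reference, the textbook Sargan statistic is N times the R² from regressing the second-stage residuals on all exogenous variables, compared against a chi-square distribution whose degrees of freedom equal the number of instruments minus the number of endogenous regressors (here 2 − 1 = 1). The sketch below, with hypothetical function and argument names, computes the one-degree-of-freedom p-value using the identity P(χ²₁ > x) = erfc(√(x/2)); it is a generic illustration and does not reproduce the appendix computation.

```python
import math

def sargan_test(n_obs, r_squared, n_instruments=2, n_endogenous=1):
    """Return (statistic, p-value) for the Sargan overidentification test.

    r_squared: R^2 from regressing second-stage residuals on all
    exogenous variables (instruments and controls).
    """
    df = n_instruments - n_endogenous
    if df != 1:
        raise NotImplementedError("closed form below covers df = 1 only")
    stat = n_obs * r_squared
    # Chi-square(1) survival function: P(X > stat) = erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value
```

A large p-value means the data give no evidence against the overidentifying restriction, which is how the test result above is interpreted.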

Table 2 shows the second-stage results, where the dependent variables are AMI mortality rates. The first two rows of estimates show no statistically significant difference in mortality trends for either moderate- or large-cut hospitals during the initial BBA implementation period (1998–2000) compared with the 1995–1997 pre-BBA period. However, hospitals facing large Medicare cuts saw increased mortality in the post-BBA period (2001–2005) relative to hospitals with small cuts. The difference was statistically significant for every post-admission mortality window, ranging from a 0.7 percentage point (PP) increase in 7-day mortality to a 1.6 PP increase in 1-year mortality (p < .01). Hospitals with moderate cuts showed similar increases in mortality in the post-BBA period, although the magnitude was smaller and significant only for 30-day or longer mortality (about 0.8 PP, p < .05).

Table 2.

Regressions of Risk-Adjusted Mortality Rates on Instrumented BBA Cuts

| Risk-adjusted mortality‡ | In-Hospital | 7 days | 30 days | 90 days | 1 year |
|---|---|---|---|---|---|
| Mean of raw mortality (%) (SD) | 12.67 (4.67) | 10.40 (4.11) | 17.56 (5.31) | 22.90 (6.08) | 29.52 (7.10) |
| Effect of BBA | | | | | |
| Initial-BBA period, 1998–2000 | | | | | |
| Hospitals with moderate cuts × 1998–2000 | −0.30 (0.25) | −0.22 (0.21) | −0.03 (0.26) | −0.11 (0.29) | 0.02 (0.31) |
| Hospitals with large cuts × 1998–2000 | −0.26 (0.30) | 0.24 (0.26) | 0.09 (0.30) | −0.06 (0.37) | 0.12 (0.40) |
| Post-BBA period, 2001–2005 | | | | | |
| Hospitals with moderate cuts × 2001–2005 | 0.16 (0.25) | 0.26 (0.23) | 0.78** (0.28) | 0.84** (0.31) | 0.77* (0.32) |
| Hospitals with large cuts × 2001–2005 | 0.32 (0.30) | 0.73** (0.28) | 1.08** (0.33) | 1.36** (0.36) | 1.57** (0.42) |
| Control variables | | | | | |
| For profit | −0.07 (0.35) | −0.13 (0.29) | −0.12 (0.40) | −0.09 (0.43) | −0.49 (0.49) |
| Government | −0.09 (0.55) | 0.16 (0.44) | −0.25 (0.54) | 0.13 (0.64) | −0.12 (0.72) |
| Teaching | 0.08 (0.30) | 0.13 (0.24) | 0.08 (0.35) | −0.02 (0.34) | 0.06 (0.38) |
| Medicare case mix | −1.75* (0.73) | −2.03** (0.65) | −3.20** (0.83) | −4.57** (0.86) | −3.79** (1.03) |
| Log (hospital beds) | −0.08 (0.28) | −0.14 (0.24) | 0.03 (0.30) | −0.10 (0.35) | −0.46 (0.40) |
| Occupancy rate | 1.02† (0.54) | 0.07 (0.67) | 0.20 (0.74) | 0.11 (0.75) | 0.12 (0.67) |
| Member of hospital system | 0.21 (0.21) | 0.14 (0.20) | 0.32 (0.27) | 0.29 (0.26) | 0.77* (0.32) |
| HMO penetration | 3.21* (1.27) | 1.82 (1.15) | 4.02** (1.51) | 3.78* (1.78) | 3.32† (1.76) |
| Hospital HHI | 3.48* (1.61) | 3.12* (1.57) | 1.98 (2.01) | 1.11 (2.26) | 3.10 (2.15) |
| Constant | 2.18 (1.82) | 3.15† (1.70) | 3.70† (2.12) | 6.59** (2.45) | 6.03* (2.76) |
| Observations | 14,021 | 14,021 | 14,021 | 14,021 | 14,021 |
| R-squared | 0.36 | 0.29 | 0.32 | 0.34 | 0.38 |
| Sargan test for first stage | | | | | |
| NR² | 2e-12 | 2e-11 | 1e-11 | 2e-11 | 9e-12 |
| p-value | .82 | .82 | .82 | .82 | .82 |

Notes. Hospital fixed effects and year dummies are included in all models.

Bootstrapped standard errors in parentheses.

†significant at 10%; *significant at 5%; **significant at 1%.

‡Risk-adjusted mortality rate used as the dependent variable was normalized so that its average was 0 over the study period.

To assess the elasticity, we estimated an alternative linear specification of the second-stage regressions using the predicted change in Medicare revenue from the first stage directly. The estimates, presented in Table 3, show similar findings. As the 2001–2005 row of Table 3 shows, every $1,000 of predicted loss in Medicare revenue per discharge was associated with 0.9, 1.33, 1.67, and 2.07 PP increases in 7-day, 30-day, 90-day, and 1-year mortality rates, respectively (p < .01 for all). In the bottom row of Table 3, we converted the coefficients into elasticities. Every $1,000 of instrumented Medicare revenue loss (about −33 percent) was associated with a 6 to 8 percent increase in mortality rates.10 Taken together, the elasticity was approximately −0.2 for 7-day through 1-year mortality, implying that a 10 percent reduction in payments would translate into a 2 percent increase in mortality rates. Note that while the percentage point change in mortality grows with the length of the follow-up window (Table 2), the elasticity of the effect is similar from 7-day to 1-year mortality (Table 3).
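The conversion can be sketched as follows: a $1,000 predicted loss changes mortality by the coefficient (in percentage points), which is divided by mean mortality to obtain a percent change and then by the roughly −33 percent revenue change. This is a simplified calculation with a hypothetical function name; because it uses the rounded −33 percent figure and the raw means, it does not exactly reproduce the Table 3 elasticities, whose construction is described in footnote 10.

```python
def mortality_elasticity(coef_pp, mean_mortality_pct, pct_change_revenue=-0.33):
    """Convert a per-$1,000 coefficient into an approximate elasticity.

    coef_pp: coefficient on instrumented BBA cuts, in percentage points of
        mortality per $1,000 of predicted revenue change (losses negative).
    mean_mortality_pct: mean mortality rate, in percent.
    pct_change_revenue: proportional revenue change that a $1,000 loss
        represents (the text's approximate -33 percent).
    """
    delta_mortality_pp = coef_pp * -1.0  # effect of a $1,000 loss (change = -1)
    pct_change_mortality = delta_mortality_pp / mean_mortality_pct
    return pct_change_mortality / pct_change_revenue

# 1-year mortality, using the Table 3 coefficient and mean.
print(round(mortality_elasticity(-2.07, 29.52), 3))
```

The negative sign reflects that mortality moves in the opposite direction from revenue: a revenue loss is associated with higher mortality.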

Table 3.

Regressions of Risk-Adjusted Mortality Rates on Linear Instrumented BBA Cuts and Elasticity

| Risk-adjusted mortality‡ | In-Hospital | 7 days | 30 days | 90 days | 1 year |
|---|---|---|---|---|---|
| Mean of raw mortality (%) (SD) | 12.67 (4.67) | 10.40 (4.11) | 17.56 (5.31) | 22.90 (6.08) | 29.52 (7.10) |
| Regression estimates§ | | | | | |
| Instrumented BBA cuts × 1998–2000 | 0.13 (0.29) | −0.40 (0.26) | −0.30 (0.32) | −0.26 (0.35) | −0.42 (0.38) |
| Instrumented BBA cuts × 2001–2005 | −0.49† (0.25) | −0.90** (0.24) | −1.33** (0.29) | −1.67** (0.33) | −2.07** (0.35) |
| Elasticity | | | | | |
| Elasticity for instrumented BBA cuts in 2001–2005 | −0.11 | −0.23 | −0.21 | −0.21 | −0.19 |

Notes. Control variables are identical to the models reported in Table 2.

Bootstrapped standard errors in parentheses.

‡Risk-adjusted mortality rate used as the dependent variable was normalized so that its average was 0 over the study period.

§Regressions using linear instrumented BBA loss based on first-stage model in the Appendix.

†significant at 10%; *significant at 5%; **significant at 1%.

We ran a battery of tests to compare our estimates with those from simpler ordinary least squares (OLS) fixed-effects models and to explore whether the increased mortality we observed could be explained by potential confounding factors unrelated to the BBA. Our results were consistent across all of these tests (details are provided in the appendix).

Discussion

We found evidence that the BBA generated long-term financial pressure on hospitals that extended beyond the BBA implementation period. Although AMI mortality rates generally declined, Medicare patients treated at hospitals facing a large degree of such financial pressure experienced smaller improvements in mortality than patients treated at small-cut hospitals, not in the short run but in the longer-run post-BBA period. The elasticity of the effect, while small (−0.2), is stable and consistently observed from 7-day to 1-year mortality. Our findings are consistent with a growing number of recent studies finding that higher spending may be valuable, especially in the hospital setting (Ong et al. 2009; Barnato et al. 2010; Kaestner and Silber 2010; Silber et al. 2010; Doyle 2011; Romley, Jena, and Goldman 2011; Doyle et al. 2014; Romley et al. 2013). From a clinical perspective, it is fairly rare for a patient to die immediately unless he or she would have died from a serious disease anyway. For this reason, 30-day to 1-year mortality has commonly been used as a measure of hospital quality of care in other AMI studies (Stenestrand and Wallentin 2001). Because most first-time AMIs are not fatal, our results were somewhat expected: differences in quality of care in the acute phase may not immediately lead to higher death rates. Suboptimal care in the acute phase, however, most likely does lead to poorer long-term outcomes by failing to optimize downstream consequences.

Our exploratory analyses of staffing and operating costs showed that hospitals responded to BBA cuts by reducing operating costs per bed immediately after the BBA took effect, and this effort involved reductions in staffing, particularly of registered nurses. However, there was a delay between the payment reductions and their ultimate impact on patient outcomes. Hospitals may have had some financial slack in their operations in the late 1990s, slowing their responses to the initial payment cuts and delaying effects on patient outcomes. As the financial pressure persisted in the 2001–2005 period, further reductions began to affect treatment capability, and effects on patient outcomes consequently emerged. The delay in detecting a statistically significant difference may also reflect attenuation bias, because mortality rates are prone to measurement error (McClellan and Staiger 2000). As the gap widened over time, the difference became statistically significant.

Results are remarkably stable across the sensitivity analyses we performed. However, the study has some limitations. First, our IV model assumed that the BBA cuts were exogenous once fixed hospital characteristics were controlled for in the second stage. The model also assumed that no time-varying unobservables produced differential quality improvement trends correlated with the degree of BBA cuts. If either assumption were violated, our results would be biased and could not be interpreted as causal. Second, although we explored some aspects of hospital operations, we did not identify the exact mechanism(s) that led to slower improvement in mortality outcomes at large-cut hospitals. Finally, the results do not apply to rural hospitals, which operate in very different market environments and are often subject to different Medicare payment rules. Our results also may not apply to very small hospitals (the excluded hospitals represented less than 25 percent of urban hospitals).

The study findings have important policy implications. Our study shows that changes in Medicare payment levels can affect quality. This suggests that strategies such as pay-for-performance and value-based purchasing can be powerful tools for influencing provider quality. However, our study also indicates that major Medicare payment reductions may have the unintended consequence of creating a quality gap between hospitals. It is therefore important to evaluate pay-for-performance and value-based purchasing initiatives that are combined with payment cuts, such as those contained in the ACA, for both intended and unintended consequences for provider quality.

Conclusion

In this study, we used the BBA of 1997 as a natural experiment to analyze whether large payment cuts, such as those being proposed under the ACA, would have long-term effects on patient outcomes. We found evidence consistent with the possibility that Medicare payment cuts resulting from the BBA of 1997 had a long-term impact on patient outcomes. Furthermore, payment reductions may have the unintended consequence of widening the gap in quality across hospitals. These results warrant careful examination of the total costs and benefits of future Medicare payment reductions, such as those contained in the ACA and similar policies, as well as exploration of payment incentives and policies that could narrow the gap.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: We are grateful for comments from seminar and conference participants at UCLA, Tulane, RAND, USC, AcademyHealth Annual Conference 2011, ASSA Annual Meeting 2012, and NBER Health Care Program meeting 2012. All errors are our own.

Disclosures: None.

Disclaimer: None.

Footnotes

For example, a teaching hospital’s indirect medical education (IME) subsidy is capped at its 1996 resident-to-bed ratio, and the marginal payment for each increment in the resident-to-bed ratio is permanently reduced.

Specifically, the annual update was reduced by 2.7 percentage points (PP) in 1998, 1.9 PP in 1999, 1.8 PP in 2000, 0 PP in 2001 (net of Balanced Budget Refinement Act [BBRA] relief), and 0.55 PP in 2002 (net of BBRA and Benefits Improvement and Protection Act [BIPA] relief).
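
The following worked illustration shows how these annual reductions accumulate. Let $u_t$ be the update that would have applied without the BBA, expressed as a fraction, and let $r_t$ be the reduction listed above in PP. Both symbols are notation introduced here. Because updates compound, to first order the payment rate in year $T$ relative to the pre-BBA path falls by roughly the sum of the reductions:

```latex
\frac{P^{\mathrm{BBA}}_{T}}{P^{\mathrm{pre}}_{T}}
  = \prod_{t=1998}^{T} \left( 1 - \frac{r_t/100}{1+u_t} \right)
  \approx 1 - \frac{1}{100} \sum_{t=1998}^{T} r_t ,
\qquad
\sum_{t=1998}^{2000} r_t = 2.7 + 1.9 + 1.8 = 6.4 \ \mathrm{PP},
\qquad
\sum_{t=1998}^{2002} r_t = 6.4 + 0 + 0.55 = 6.95 \ \mathrm{PP}.
```

On this approximation, by 2000 the rate was about 6.4 percent below its pre-BBA path. The 2001–2002 reductions, net of BBRA and BIPA relief, add only about half a percentage point.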

Medicare inpatient revenue is the sum of the Medicare primary payer amount and payments from beneficiaries.

The BBA was enacted in 1997, with its provisions taking effect between 1998 and 2002. We chose 1996 as the base year for the simulation to avoid behavioral changes that had already begun in 1997. We used 2000 as the last year in which the BBA was effective, because much of the BBA reductions scheduled for 2001 and 2002 were reversed by the BBRA of 1999 and the BIPA of 2000. In a sensitivity analysis, we considered an alternative BBA bite specification that simulated the BBA effect through 2002.
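
The following is a minimal sketch of how a simulated BBA bite might be computed under the two end-year choices described above. It rests on several hypothetical assumptions. The only provision modeled is the annual update reduction listed in the earlier footnote. The cut accumulates to first order. Revenue is held at its 1996 level. The function name `bba_bite` and the $10 million base are illustrative, and the sketch does not reproduce the authors' simulation.

```python
# Hypothetical sketch: models only the annual update reduction, not other BBA
# provisions such as the IME changes, so it understates a full simulated bite.
REDUCTIONS_PP = {1998: 2.7, 1999: 1.9, 2000: 1.8, 2001: 0.0, 2002: 0.55}

def bba_bite(base_revenue_1996: float, end_year: int = 2000) -> float:
    """Cumulative Medicare revenue loss relative to a no-BBA path.

    Volume and case mix are held at 1996 levels, so the loss in each year is the
    1996 revenue times the cumulative (first-order) rate reduction in that year.
    """
    if end_year not in REDUCTIONS_PP:
        raise ValueError("end_year must be between 1998 and 2002")
    cumulative_cut = 0.0
    loss = 0.0
    for year in range(1998, end_year + 1):
        cumulative_cut += REDUCTIONS_PP[year] / 100
        loss += base_revenue_1996 * cumulative_cut
    return loss

main_spec = bba_bite(10_000_000)           # BBA treated as effective through 2000
sensitivity = bba_bite(10_000_000, 2002)   # alternative bite through 2002
print(f"Through 2000: ${main_spec:,.0f}; through 2002: ${sensitivity:,.0f}")
```

Extending the window to 2002 roughly doubles this illustrative bite. Most of the extra loss comes from carrying the 1998–2000 cuts forward, not from the small 2001–2002 reductions.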

Because of concern about endogeneity between changes in Medicare revenue and HMO penetration, we followed prior literature (Baker 1997; Dranove, Simon, and White 2002) and predicted HMO penetration between 1996 and 2000 using the percentage of workers employed in large firms and the percentage of white-collar workers in a county.
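
The following sketch shows the idea of replacing observed HMO penetration with values predicted from the two county labor-market measures. It assumes a linear OLS prediction on a single cross-section of simulated counties. The functional form, coefficients, and data are hypothetical and do not reproduce the study's model.

```python
import numpy as np

def predict_hmo_penetration(hmo, large_firm_share, white_collar_share):
    """Fit HMO penetration on two county measures by OLS and return coefficients and fitted values."""
    X = np.column_stack([np.ones_like(large_firm_share), large_firm_share, white_collar_share])
    coef, *_ = np.linalg.lstsq(X, hmo, rcond=None)
    fitted = np.clip(X @ coef, 0.0, 1.0)  # keep predicted shares within [0, 1]
    return coef, fitted

# Simulated counties with hypothetical coefficients 0.4 and 0.3.
rng = np.random.default_rng(1)
n_counties = 500
large_firm = rng.uniform(0.2, 0.6, n_counties)
white_collar = rng.uniform(0.3, 0.7, n_counties)
hmo = 0.05 + 0.4 * large_firm + 0.3 * white_collar + rng.normal(0, 0.02, n_counties)

coef, hmo_hat = predict_hmo_penetration(hmo, large_firm, white_collar)
print("intercept, large-firm, white-collar:", np.round(coef, 3))
```

The fitted `hmo_hat` depends only on labor-market composition. This is what is meant to break the feedback from hospital-level Medicare revenue changes to the HMO measure.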

Hospital beds are held fixed at the 1996 level to avoid potential endogeneity between hospital size (beds) and Medicare payment.

We omitted 7-day mortality from the graphical presentation for simplicity, because its trend was similar to that of 30-day mortality.

In the risk-adjustment process, average mortality over the entire period is normalized to zero, so the average value is zero in all plots.
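
One simple way to perform this normalization is to take observed minus expected mortality and subtract its mean over the whole period. The sketch below does this with an unweighted mean and hypothetical yearly rates. A patient-weighted mean is a natural alternative, and the study's exact procedure is not shown here.

```python
import numpy as np

def normalized_risk_adjusted(observed, expected):
    """Observed minus expected mortality, re-centered so the full-period mean is zero."""
    residual = np.asarray(observed, dtype=float) - np.asarray(expected, dtype=float)
    return residual - residual.mean()

observed = [0.20, 0.19, 0.185, 0.18]   # hypothetical yearly mortality rates
expected = [0.19, 0.19, 0.19, 0.19]    # hypothetical model-predicted rates
adjusted = normalized_risk_adjusted(observed, expected)
print(np.round(adjusted, 5))
```

Re-centering shifts every year by the same amount. The plotted trend, and differences between hospital groups normalized the same way, are unchanged.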

Take the 90-day mortality rate, for example. The baseline 90-day mortality rate is 24 percent, so the 1.67 PP increase we observed in the post-BBA period translates into a 7 percent relative increase (1.67/24 ≈ 0.07). A $1,000 instrumented Medicare loss corresponded to a cut of about 33 percent of Medicare revenue per discharge in 1997.
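
The conversion from an absolute effect in percentage points to a relative effect is a single division. The helper below makes it explicit using the footnote's figures. The function name is illustrative.

```python
def relative_change(effect_pp: float, baseline_pct: float) -> float:
    """Convert an absolute effect in percentage points into a relative change."""
    if baseline_pct <= 0:
        raise ValueError("baseline rate must be positive")
    return effect_pp / baseline_pct

# Figures from the footnote: a 1.67 PP increase on a 24 percent baseline 90-day mortality rate.
print(f"{relative_change(1.67, 24):.1%}")  # prints 7.0%
```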

For example, when staff shortages delay care for patients who need cardiac catheterization, their hearts have more time to infarct. As a result, their ejection fraction may later be lower than it would otherwise have been, leading to more severe congestive heart failure than if they had been treated earlier. Aspirin given in the acute phase, for example, decreases longer-term mortality, not just initial mortality. Similar logic applies to other treatments described in the literature (Stenestrand and Wallentin 2001).

Supporting Information

Additional supporting information may be found in the online version of this article:

First Stage Regression.

Sensitivity Tests.

References

- Acemoglu D, Finkelstein A. Input and Technology Choices in Regulated Industries: Evidence from the Health Care Sector. Journal of Political Economy. 2008;116(5):837–80.

- Baicker K, Chandra A. Medicare Spending, the Physician Workforce, and Beneficiaries’ Quality of Care. Health Affairs. 2004;23(3):W4-184–97. doi: 10.1377/hlthaff.w4.184.

- Barnato AE, Chang C-CH, Farrell MH, Lave JR, Roberts MS, Angus DC. Is Survival Better at Hospitals with Higher “End-of-Life” Treatment Intensity? Medical Care. 2010;48(2):125–32. doi: 10.1097/MLR.0b013e3181c161e4.

- Bazzoli G, Lindrooth R, Hasnain-Wynia R, Needleman J. The Balanced Budget Act of 1997 and U.S. Hospital Operations. Inquiry. 2005;41(4):401–17. doi: 10.5034/inquiryjrnl_41.4.401.

- Bazzoli G, Clement J, Lindrooth R, Chen H, Aydede S, Braun B, Loeb J. Hospital Financial Condition and Operational Decisions Related to the Quality of Hospital Care. Medical Care Research and Review. 2007;64(2):148–68. doi: 10.1177/1077558706298289.

- Bazzoli G, Chen H, Zhao M, Lindrooth R. Hospital Financial Condition and Quality of Patient Care. Health Economics. 2008;17(8):977–95. doi: 10.1002/hec.1311.

- Chandra A, Staiger DO. Productivity Spillovers in Health Care: Evidence from the Treatment of Heart Attacks. Journal of Political Economy. 2007;115(1):103–40. doi: 10.1086/512249.

- Cutler DM. The Incidence of Adverse Medical Outcomes under Prospective Payment. Econometrica. 1995;63(1):29–50.

- Cutler DM. Cost Shifting or Cost Cutting? The Incidence of Reductions in Medicare Payments. Tax Policy and the Economy. 1998;12:1–27.

- Dafny L. How Do Hospitals Respond to Price Changes? American Economic Review. 2005;95:1525–47. doi: 10.1257/000282805775014236.

- Doyle JJ. Returns to Local-Area Health Care Spending: Evidence from Health Shocks to Patients Far from Home. American Economic Journal: Applied Economics. 2011;3(3):221–43. doi: 10.1257/app.3.3.221.

- Doyle J, Graves J, Gruber J, Kleiner S. Measuring Returns to Hospital Care: Evidence from Ambulance Referral Patterns. Journal of Political Economy. 2014. doi: 10.1086/677756.

- Feder J, Hadley J, Zuckerman S. How Did Medicare’s Prospective Payment System Affect Hospitals? New England Journal of Medicine. 1987;317(14):867–73. doi: 10.1056/NEJM198710013171405.

- Fisher ES, Wennberg DE, Stukel TA, Gottlieb DJ, Lucas FL, Pinder ÉL. The Implications of Regional Variations in Medicare Spending. Part 1: The Content, Quality, and Accessibility of Care. Annals of Internal Medicine. 2003a;138(4):273–87. doi: 10.7326/0003-4819-138-4-200302180-00006.

- Fisher ES, Wennberg DE, Stukel TA, Gottlieb DJ, Lucas FL, Pinder ÉL. The Implications of Regional Variations in Medicare Spending. Part 2: Health Outcomes and Satisfaction with Care. Annals of Internal Medicine. 2003b;138(4):288–98. doi: 10.7326/0003-4819-138-4-200302180-00007.

- Glazer J, McGuire TG. Multiple Payers, Commonality and Free-Riding in Health Care: Medicare and Private Payers. Journal of Health Economics. 2002;21(6):1049–69. doi: 10.1016/s0167-6296(02)00078-4.

- Gruber J. The Effect of Competitive Pressure on Charity: Hospital Responses to Price Shopping in California. Journal of Health Economics. 1994;13(2):183–211. doi: 10.1016/0167-6296(94)90023-x.

- Guterman S. Putting Medicare in Context: How Does the Balanced Budget Act Affect Hospitals? Washington, DC: Urban Institute; 2000.

- Hadley J, Zuckerman S, Feder J. Profits and Fiscal Pressure in the Prospective Payment System: Their Impacts on Hospitals. Inquiry. 1989;26(3):354.

- Hodgkin D, McGuire TG. Payment Levels and Hospital Response to Prospective Payment. Journal of Health Economics. 1994;13(1):1–29. doi: 10.1016/0167-6296(94)90002-7.

- Kaestner R, Guardado J. Medicare Reimbursement, Nurse Staffing, and Patient Outcomes. Journal of Health Economics. 2008;27(2):339–61. doi: 10.1016/j.jhealeco.2007.04.003.

- Kaestner R, Silber JH. Evidence on the Efficacy of Inpatient Spending on Medicare Patients. Milbank Quarterly. 2010;88(4):560–94. doi: 10.1111/j.1468-0009.2010.00612.x.

- Kahn KL, Keeler EB, Sherwood MJ, Rogers WH, Draper D, Bentow SS, Reinisch EJ, Rubenstein LV, Kosecoff J, Brook RH. Comparing Outcomes of Care before and after Implementation of the DRG-Based Prospective Payment System. Journal of the American Medical Association. 1990a;264(15):1984–8.

- Kahn KL, Rubenstein LV, Draper D, Kosecoff J, Rogers WH, Keeler EB, Brook RH. The Effects of the DRG-Based Prospective Payment System on Quality of Care for Hospitalized Medicare Patients. Journal of the American Medical Association. 1990b;264(15):1953–5.

- Kosecoff J, Kahn KL, Rogers WH, Reinisch EJ, Sherwood MJ, Rubenstein LV, Draper D, Roth CP, Chew C, Brook RH. Prospective Payment System and Impairment at Discharge. Journal of the American Medical Association. 1990;264(15):1980–3.

- Lindrooth RC, Clement J, Bazzoli GJ. The Effect of Reimbursement on the Intensity of Hospital Services. Southern Economic Journal. 2007;73(3):575–87.

- McClellan M, Staiger D. Comparing Hospital Quality at For-Profit and Not-For-Profit Hospitals. In: Cutler DM, editor. The Changing Hospital Industry: Comparing Not-For-Profit and For-Profit Institutions. Chicago, IL: University of Chicago Press; 2000.

- Melnick G, Zwanziger J, Bamezai A, Pattison R. The Effects of Market Structure and Bargaining Position on Hospital Prices. Journal of Health Economics. 1992;11(3):217–33. doi: 10.1016/0167-6296(92)90001-h.

- Needleman J, Buerhaus P, Pankratz VS, Leibson CL, Stevens SR, Harris M. Nurse Staffing and Inpatient Hospital Mortality. New England Journal of Medicine. 2011;364(11):1037–45. doi: 10.1056/NEJMsa1001025.

- Newhouse JP. Pricing the Priceless: A Health Care Conundrum. Cambridge, MA: MIT Press; 2002.

- Newhouse JP, Byrne DJ. Did Medicare’s Prospective Payment System Cause Length of Stay to Fall? Journal of Health Economics. 1988;7(4):413–6. doi: 10.1016/0167-6296(88)90023-9.

- Ong MK, Mangione CM, Romano PS, Zhou Q, Auerbach AD, Chun A, Davidson B, Ganiats TG, Greenfield S, Gropper MA, Malik S, Rosenthal JT, Escarce JJ. Looking Forward, Looking Back: Assessing Variations in Hospital Resource Use and Outcomes for Elderly Patients with Heart Failure. Circulation: Cardiovascular Quality and Outcomes. 2009;2(6):548–57. doi: 10.1161/CIRCOUTCOMES.108.825612.

- Rogers WH, Draper D, Kahn KL, Keeler EB, Rubenstein LV, Kosecoff J, Brook RH. Quality of Care Before and After Implementation of the DRG-Based Prospective Payment System. Journal of the American Medical Association. 1990;264(15):1989–94.

- Romley JA, Jena AB, Goldman DP. Hospital Spending and Inpatient Mortality: Evidence from California: An Observational Study. Annals of Internal Medicine. 2011;154(3):160–7. doi: 10.7326/0003-4819-154-3-201102010-00005.

- Romley JA, Jena AB, O’Leary JF, Goldman DP. Spending and Mortality in US Acute Care Hospitals. American Journal of Managed Care. 2013;19(2):e46–54.

- Seshamani M, Schwartz J, Volpp K. The Effect of Cuts in Medicare Reimbursement on Hospital Mortality. Health Services Research. 2006;41(3 Pt 1):683–700. doi: 10.1111/j.1475-6773.2006.00507.x.

- Seshamani M, Zhu JS, Volpp KG. Did Postoperative Mortality Increase after the Implementation of the Medicare Balanced Budget Act? Medical Care. 2006;44(6):527–33. doi: 10.1097/01.mlr.0000215886.49343.c6.

- Shen Y-C. The Effect of Financial Pressure on the Quality of Care in Hospitals. Journal of Health Economics. 2003;22(2):243–69. doi: 10.1016/S0167-6296(02)00124-8.

- Silber JH, Kaestner R, Even-Shoshan O, Wang Y, Bressler LJ. Aggressive Treatment Style and Surgical Outcomes. Health Services Research. 2010;45(6 Pt 2):1872–92. doi: 10.1111/j.1475-6773.2010.01180.x.

- Skinner J, Staiger D. Technology Diffusion and Productivity Growth in Health Care. Cambridge, MA: National Bureau of Economic Research; 2009. NBER Working Paper Series No. w14865.

- Skinner JS, Staiger DO, Fisher ES. Is Technological Change in Medicine Always Worth It? The Case of Acute Myocardial Infarction. Health Affairs. 2006;25(2):w34–47. doi: 10.1377/hlthaff.25.w34.

- Staiger D, Stock JH. Instrumental Variables Regression with Weak Instruments. Econometrica. 1997;65(3):557–86.

- Stenestrand U, Wallentin L. Early Statin Treatment Following Acute Myocardial Infarction and 1-Year Survival. Journal of the American Medical Association. 2001;285(4):430–6. doi: 10.1001/jama.285.4.430.

- Stock J, Yogo M. Testing for Weak Instruments in Linear IV Regression. In: Stock JH, Andrews DW, editors. Identification and Inference for Econometric Models: Essays in Honor of Thomas Rothenberg. Cambridge, UK: Cambridge University Press; 2005. pp. 80–108.

- Volpp KG, Konetzka R, Zhu J, Parsons L, Peterson E. Effect of Cuts in Medicare Reimbursement on Process and Outcome of Care for Acute Myocardial Infarction Patients. Circulation. 2005;112(15):2268–75. doi: 10.1161/CIRCULATIONAHA.105.534164.

- Wu VY. Managed Care’s Price Bargaining with Hospitals. Journal of Health Economics. 2009;28(2):350–60. doi: 10.1016/j.jhealeco.2008.11.001.

- Wu V. Hospital Cost Shifting Revisited: New Evidence from the Balanced Budget Act of 1997. International Journal of Health Care Finance and Economics. 2010;10(1):61–83. doi: 10.1007/s10754-009-9071-5.

- Yip WC. Physician Response to Medicare Fee Reductions: Changes in the Volume of Coronary Artery Bypass Graft (CABG) Surgeries in the Medicare and Private Sectors. Journal of Health Economics. 1998;17(6):675–99. doi: 10.1016/s0167-6296(98)00024-1.

- Zhao M, Bazzoli G, Clement J, Lindrooth R, Nolin J, Chukmaitov A. Hospital Staffing Decisions: Does Financial Performance Matter? Inquiry. 2008;45(3):293–307. doi: 10.5034/inquiryjrnl_45.03.293.
